GraphTrace: A Modular Retrieval Framework Combining Knowledge Graphs and Large Language Models for Multi-Hop Question Answering

Abstract

1. Introduction

2. Related Work

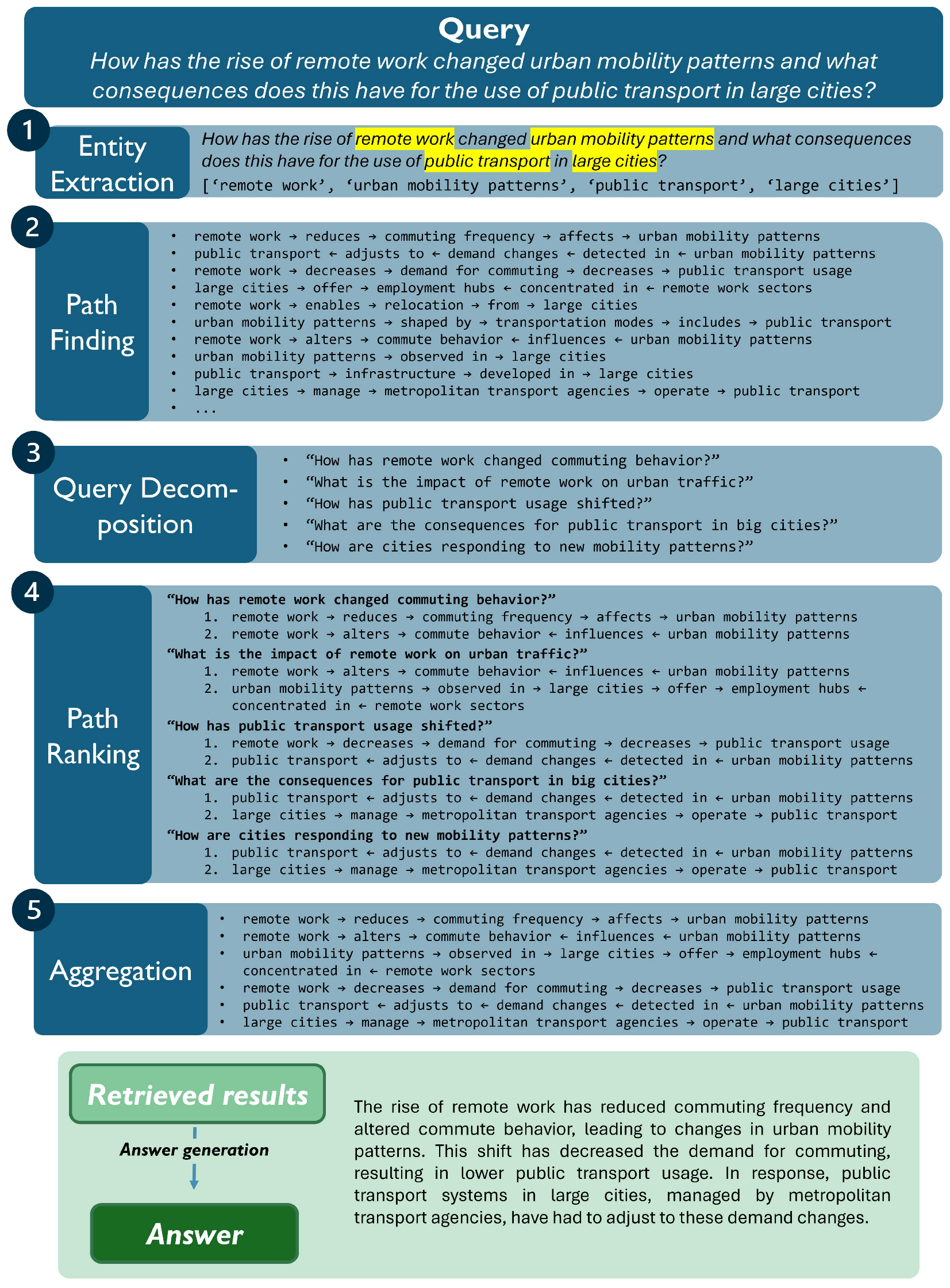

3. Methodology Overview

3.1. Entity Extraction

3.2. Path Finding

3.3. Query Decomposition

3.4. Path Ranking

3.5. Aggregation

4. Evaluation

4.1. Evaluation Setup

- Linear Paths (Chain Reasoning): Linear paths represent a direct sequence of connected entities and relationships. Each step in the path depends on the preceding one, forming a straight-line inference structure. Structure: A → B → C → D

- Converging Paths (Directed Acyclic Graphs, DAGs): Converging paths involve multiple reasoning branches that lead to a common node. These structures are used when synthesizing multiple sources of information to reach a unified conclusion. Structure: (A → B → C), (D → E → C)

- Divergent Paths (Polytrees): Divergent paths originate from a single entity that connects to multiple downstream branches, each representing an independent line of inference. Structure: A → B, A → C, A → D
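The three path types above can be told apart from node degrees alone. The following is a minimal sketch, not part of the evaluated system: the function name `classify_structure` and the extra `"mixed"` label are assumptions introduced here for illustration. A node with more than one incoming edge marks a converging structure, and a node with more than one outgoing edge marks a divergent one.

```python
from collections import defaultdict

Edge = tuple[str, str]  # (source entity, target entity)


def classify_structure(edges: list[Edge]) -> str:
    """Label a small directed reasoning graph by its branching pattern.

    Hypothetical helper: returns "linear", "converging", "divergent",
    or "mixed" (the last label is not one of the paper's categories).
    """
    in_degree: dict[str, int] = defaultdict(int)
    out_degree: dict[str, int] = defaultdict(int)
    nodes: set[str] = set()
    for source, target in edges:
        out_degree[source] += 1
        in_degree[target] += 1
        nodes.update((source, target))

    has_merge = any(in_degree[n] > 1 for n in nodes)  # branches meet in one node
    has_fork = any(out_degree[n] > 1 for n in nodes)  # one node feeds several branches

    if has_merge and has_fork:
        return "mixed"
    if has_merge:
        return "converging"
    if has_fork:
        return "divergent"
    return "linear"


# The three structures listed above, written as edge lists.
linear = [("A", "B"), ("B", "C"), ("C", "D")]
converging = [("A", "B"), ("B", "C"), ("D", "E"), ("E", "C")]
divergent = [("A", "B"), ("A", "C"), ("A", "D")]
```

Applied to the example structures, the sketch labels A → B → C → D as linear, the two chains ending in C as converging, and the three edges leaving A as divergent.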

- Naive RAG: This is a basic retrieval-augmented generation approach that performs a semantic search over a CSV-based knowledge graph. It uses SentenceTransformer (all-MiniLM-L6-v2) with FAISS [24] to embed and index KG triples. For each query, the top six most relevant triples are retrieved, combined with the query, and passed to OpenAI’s gpt-4o-mini to generate an answer.

- Naive RAG with Subquery: This extends the basic Naive RAG approach by decomposing complex queries into simpler subqueries using an LLM (gpt-4o-mini). Each subquery is processed individually to improve the retrieval effectiveness through query simplification.

- Hybrid RAG: This combines dense (vector-based) and sparse (BM25) retrieval. Results are merged and used as context for generation.

- Rerank RAG: This retrieves top triples using dense embeddings and then reranks them with a CrossEncoder to improve the result quality before answer generation.

- Naive GraphRAG: This applies BFS and DFS over the KG starting from entities extracted via KeyBERT [25]. Aggregated paths are used for generation.

- KG RAG: Based on [26], this is a graph-based retrieval method that uses the LLM gpt-4o-mini to guide the step-by-step exploration of a knowledge graph. Based on the input query, the LLM creates a plan to decide whether to explore nodes or relationships. For node exploration, it finds the top five candidates using vector search, and the LLM selects the most relevant ones. For relationships, it identifies paths between important nodes and verifies their relevance. This process continues until enough information is gathered to answer the query. If the plan fails after three revisions, the system stops and does not return an answer.

- Think on Graphs (ToG): This is a graph-based retrieval method from [10] that explores the knowledge graph iteratively. Since the original version was designed for Freebase [27] or Wikidata [28], this study adapts it to work with the Economic_KG by integrating KeyBERT for entity recognition. The system starts from identified key entities and uses beam search to explore surrounding nodes and relations. Irrelevant results are filtered through pruning, and reasoning steps (guided by gpt-4o-mini) determine whether enough context has been retrieved or more exploration is needed. This process continues until the system decides that an answer can be generated.
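To make the graph-traversal baselines more concrete, the sketch below illustrates only the BFS part of the Naive GraphRAG baseline: enumerating paths from a seed entity over KG triples and rendering them as text for the generation prompt. It is a simplified illustration under stated assumptions, not the evaluated implementation. The seed entity is assumed to have been extracted already (for example by KeyBERT), the hop limit `max_hops` is a hypothetical parameter, and the toy triples are invented for the example.

```python
from collections import defaultdict, deque

Triple = tuple[str, str, str]  # (head, relation, tail)


def bfs_paths(triples: list[Triple], start: str, max_hops: int = 2) -> list[list[Triple]]:
    """Enumerate all paths of 1..max_hops edges that begin at `start`."""
    adjacency: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for head, relation, tail in triples:
        adjacency[head].append((relation, tail))

    paths: list[list[Triple]] = []
    queue = deque([(start, [])])  # (current node, triples walked so far)
    while queue:
        node, path = queue.popleft()
        if len(path) == max_hops:
            continue  # hop budget used up; do not expand further
        visited = {start} | {triple[2] for triple in path}
        for relation, tail in adjacency.get(node, []):
            if tail in visited:
                continue  # skip cycles
            new_path = path + [(node, relation, tail)]
            paths.append(new_path)
            queue.append((tail, new_path))
    return paths


def verbalize(path: list[Triple]) -> str:
    """Render one path as a single line of context text."""
    return " ; ".join(f"{h} -[{r}]-> {t}" for h, r, t in path)


# Hypothetical toy triples, for illustration only.
toy_kg = [
    ("inflation", "increases", "interest rates"),
    ("interest rates", "reduce", "investment"),
    ("investment", "drives", "growth"),
]
context_lines = [verbalize(p) for p in bfs_paths(toy_kg, "inflation", max_hops=2)]
```

The resulting `context_lines` would be concatenated and passed to the LLM together with the question. A DFS variant differs only in replacing the queue with a stack.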

4.2. Retrieval Evaluation
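The retrieval tables in this paper report MRR, MAP, and Hit@10. The sketch below shows how such metrics are commonly computed from a ranked list of retrieved triples and a set of gold triples. It uses the standard textbook definitions, which are assumed here and not confirmed to match the authors' exact implementation (in particular, average precision is normalized by the number of gold items). Function names and the toy triple IDs are hypothetical.

```python
def reciprocal_rank(ranked: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant item, or 0.0 if none is retrieved."""
    for rank, item in enumerate(ranked, start=1):
        if item in relevant:
            return 1.0 / rank
    return 0.0


def average_precision(ranked: list[str], relevant: set[str]) -> float:
    """Mean of precision@rank over the ranks where a relevant item appears.

    Assumes `ranked` contains no duplicates; normalizes by len(relevant).
    """
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant)


def hit_at_k(ranked: list[str], relevant: set[str], k: int = 10) -> float:
    """1.0 if any relevant item is among the top k results, else 0.0."""
    return 1.0 if any(item in relevant for item in ranked[:k]) else 0.0


def evaluate(runs: list[tuple[list[str], set[str]]], k: int = 10) -> dict[str, float]:
    """Average the three metrics over (ranked list, gold set) pairs."""
    n = len(runs)
    return {
        "MRR": sum(reciprocal_rank(r, g) for r, g in runs) / n,
        "MAP": sum(average_precision(r, g) for r, g in runs) / n,
        f"Hit@{k}": sum(hit_at_k(r, g, k) for r, g in runs) / n,
    }


# Two toy queries: the first finds one of two gold triples at rank 2,
# the second retrieves nothing relevant.
toy_runs = [
    (["t3", "t1", "t9"], {"t1", "t2"}),
    (["t5", "t6"], {"t7"}),
]
scores = evaluate(toy_runs, k=10)
```

Because MRR only rewards the first relevant hit while MAP rewards every relevant item and its position, a method can rank well on Hit@10 and still score low on MAP if it retrieves few of the gold triples.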

4.3. Generation Evaluation

- Comprehensiveness measures the level of detail and completeness in the answer. A comprehensive response is consistent, covers all relevant aspects of the query, and incorporates contextual and adjacent information aligned with the question’s complexity.

- Diversity evaluates the variety and richness of content. This includes the presentation of different perspectives, insights, or arguments and ensures that the answer offers novel information rather than paraphrasing existing responses.

- Empowerment assesses the extent to which an answer enables the reader to understand the topic and make informed decisions. This metric emphasizes critical thinking, user autonomy, and the capacity to promote reflective understanding.

- Directness captures how precisely the answer addresses the core of the question. A direct response avoids digressions and unnecessary elaboration, while remaining clear, structured, and specific. Excessive detail is only acceptable if it supports comprehension.
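In the generation results table, the percentages for each criterion sum to roughly 100% across methods, which is consistent with a judge selecting one winning answer per comparison. Assuming that protocol (an assumption made here, not a confirmed description of the evaluation), the sketch below tallies per-criterion win rates from a list of judge verdicts. The verdict format, the function name `win_rates`, and the sample verdicts are hypothetical.

```python
from collections import Counter

CRITERIA = ("Comprehensiveness", "Diversity", "Empowerment", "Directness", "Overall")


def win_rates(verdicts: list[dict[str, str]], methods: list[str]) -> dict[str, dict[str, float]]:
    """Convert judge verdicts into win percentages per criterion and method.

    Each verdict maps a criterion name to the method judged best on it
    (assumed format). Criteria without any verdict yield 0.0 everywhere.
    """
    table: dict[str, dict[str, float]] = {}
    for criterion in CRITERIA:
        wins = Counter(v[criterion] for v in verdicts if criterion in v)
        total = sum(wins.values())
        table[criterion] = {
            method: (100.0 * wins[method] / total if total else 0.0)
            for method in methods
        }
    return table


# Hypothetical verdicts for two questions.
sample_verdicts = [
    {"Directness": "GraphTrace", "Overall": "GraphTrace"},
    {"Directness": "KG RAG", "Overall": "GraphTrace"},
]
rates = win_rates(sample_verdicts, ["KG RAG", "GraphTrace"])
```

Reporting win shares per criterion, instead of a single aggregate score, makes trade-offs visible, such as a method that wins on Directness but not on Diversity.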

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Zhang, Y.; Li, Y.; Cui, L.; Cai, D.; Liu, L.; Fu, T.; Huang, X.; Zhao, E.; Zhang, Y.; Chen, Y.; et al. Siren’s song in the AI ocean: A survey on hallucination in large language models. arXiv 2023, arXiv:2309.01219.

2. Adlakha, V.; BehnamGhader, P.; Lu, X.H.; Meade, N.; Reddy, S. Evaluating correctness and faithfulness of instruction-following models for question answering. Trans. Assoc. Comput. Linguist. 2024, 12, 681–699.

3. Liu, T.; Zhang, Y.; Brockett, C.; Mao, Y.; Sui, Z.; Chen, W.; Dolan, B. A token-level reference-free hallucination detection benchmark for free-form text generation. arXiv 2021, arXiv:2104.08704.

4. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

5. Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997.

6. Tang, Y.; Yang, Y. Multihop-rag: Benchmarking retrieval-augmented generation for multi-hop queries. arXiv 2024, arXiv:2401.15391.

7. Peng, B.; Zhu, Y.; Liu, Y.; Bo, X.; Shi, H.; Hong, C.; Zhang, Y.; Tang, S. Graph retrieval-augmented generation: A survey. arXiv 2024, arXiv:2408.08921.

8. Edge, D.; Trinh, H.; Larson, J. LazyGraphRAG: Setting a New Standard for Quality and Cost. 2024. Available online: https://www.microsoft.com/en-us/research/blog/lazygraphrag-setting-a-new-standard-for-quality-and-cost/ (accessed on 1 July 2025).

9. Edge, D.; Trinh, H.; Cheng, N.; Bradley, J.; Chao, A.; Mody, A.; Truitt, S.; Metropolitansky, D.; Ness, R.O.; Larson, J. From Local to Global: A Graph RAG Approach to Query-Focused Summarization. arXiv 2025, arXiv:2404.16130.

10. Sun, J.; Xu, C.; Tang, L.; Wang, S.; Lin, C.; Gong, Y.; Ni, L.M.; Shum, H.Y.; Guo, J. Think-on-graph: Deep and responsible reasoning of large language model on knowledge graph. arXiv 2023, arXiv:2307.07697.

11. Cao, Y.; Gao, Z.; Li, Z.; Xie, X.; Zhou, K.; Xu, J. LEGO-GraphRAG: Modularizing Graph-based Retrieval-Augmented Generation for Design Space Exploration. arXiv 2025, arXiv:2411.05844.

12. Liu, R.; Jiang, H.; Yan, X.; Tang, B.; Li, J. PolyG: Effective and Efficient GraphRAG with Adaptive Graph Traversal. arXiv 2025, arXiv:2504.02112.

13. Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744.

14. Yu, H.; Gan, A.; Zhang, K.; Tong, S.; Liu, Q.; Liu, Z. Evaluation of Retrieval-Augmented Generation: A Survey. In Proceedings of the Big Data; Zhu, W., Xiong, H., Cheng, X., Cui, L., Dou, Z., Dong, J., Pang, S., Wang, L., Kong, L., Chen, Z., Eds.; Springer: Singapore, 2025; pp. 102–120.

15. Gao, Y.; Xiong, Y.; Wang, M.; Wang, H. Modular RAG: Transforming RAG Systems into LEGO-like Reconfigurable Frameworks. arXiv 2024, arXiv:2407.21059.

16. Hu, Y.; Lei, Z.; Zhang, Z.; Pan, B.; Ling, C.; Zhao, L. GRAG: Graph Retrieval-Augmented Generation. arXiv 2024, arXiv:2405.16506.

17. Shi, Y.; Tan, Q.; Wu, X.; Zhong, S.; Zhou, K.; Liu, N. Retrieval-enhanced Knowledge Editing in Language Models for Multi-Hop Question Answering. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, New York, NY, USA, 21–25 October 2024; CIKM ’24. pp. 2056–2066.

18. Mavi, V.; Jangra, A.; Jatowt, A. Multi-hop Question Answering. Found. Trends Inf. Retr. 2024, 17, 457–586.

19. Gruenwald, L.; Jain, S.; Groppe, S. (Eds.) Leveraging Artificial Intelligence in Global Epidemics; Elsevier: Amsterdam, The Netherlands, 2021.

20. Khorashadizadeh, H.; Mihindukulasooriya, N.; Ranji, N.; Ezzabady, M.; Ieng, F.; Groppe, J.; Benamara, F.; Groppe, S. Construction and Canonicalization of Economic Knowledge Graphs with LLMs. In Proceedings of the International Knowledge Graph and Semantic Web Conference; Springer: Cham, Switzerland, 2024; pp. 334–343.

21. Hurst, A.; Lerer, A.; Goucher, A.P.; Perelman, A.; Ramesh, A.; Clark, A.; Ostrow, A.; Welihinda, A.; Hayes, A.; Radford, A.; et al. Gpt-4o system card. arXiv 2024, arXiv:2410.21276.

22. Sentence Transformers Team. msmarco-MiniLM-L6-en-de-v1. 2021. Available online: https://huggingface.co/cross-encoder/msmarco-MiniLM-L6-en-de-v1 (accessed on 30 June 2025).

23. Khorashadizadeh, H.; Tiwari, S.; Benamara, F.; Mihindukulasooriya, N.; Groppe, J.; Sahri, S.; Ezzabady, M.; Ieng, F.; Groppe, S. EcoRAG: A Multi-hop Economic QA Benchmark for Retrieval Augmented Generation Using Knowledge Graphs. In Proceedings of the Natural Language Processing and Information Systems (NLDB), Kanazawa, Japan, 4–6 July 2025.

24. Cross-Encoder Team. all-MiniLM-L6-v2. 2021. Available online: https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2 (accessed on 30 June 2025).

25. Grootendorst, M. KeyBERT: Minimal Keyword Extraction with BERT. 2020. Available online: https://www.maartengrootendorst.com/blog/keybert/ (accessed on 1 July 2025).

26. Sanmartin, D. Kg-rag: Bridging the gap between knowledge and creativity. arXiv 2024, arXiv:2405.12035.

27. Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 9–12 June 2008; pp. 1247–1250.

28. Vrandečić, D.; Krötzsch, M. Wikidata: A free collaborative knowledgebase. Commun. ACM 2014, 57, 78–85.

29. Wang, J.; Liang, Y.; Meng, F.; Sun, Z.; Shi, H.; Li, Z.; Xu, J.; Qu, J.; Zhou, J. Is chatgpt a good nlg evaluator? a preliminary study. arXiv 2023, arXiv:2303.04048.

30. Zheng, L.; Chiang, W.L.; Sheng, Y.; Zhuang, S.; Wu, Z.; Zhuang, Y.; Lin, Z.; Li, Z.; Li, D.; Xing, E.; et al. Judging llm-as-a-judge with mt-bench and chatbot arena. Adv. Neural Inf. Process. Syst. 2023, 36, 46595–46623.

| Approach | Uses KG | Entity Extraction | Path Finding | Query Decomposition | Semantic Path Ranking | Context Aggregation | LLM-Aware Retrieval | Modular Architecture | Latency/Quality Tradeoff | Answer Uses KG Context | Query-to-Path Alignment | Adaptive Multi-Hop Control | Subgraph Diversity Handling | Path Canonicalization | LLM-Based Evaluation Strategy | Hop-Wise Breakdown |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Naive RAG [4] | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Advanced RAG [14] | ✗ | ✓ | ✗ | ✓ | ✗ | ✓ | ✓ | (✗) 1 | (✗) 2 | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Modular RAG [15] | ✗ | ✓ | ✗ | ✓ | (✗) 3 | ✓ | ✓ | ✓ | (✗) 2 | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| GraphRAG [9] | ✓ | ✓ | ✓ | (✗) 4 | ✓ | ✓ | (✗) 5 | ✗ | ✗ | ✓ | ✗ | (✗) 6 | (✗) 7 | ✗ | ✗ | ✗ |
| LazyGraphRAG [8] | ✓ | ✓ | (✓) 8 | (✗) 9 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | (✗) 10 | (✓) 11 | (✗) 12 | ✗ | ✗ | ✗ |
| LEGO-GraphRAG [11] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | (✗) 13 | (✓) 14 | (✓) 15 | ✗ | ✗ | ✗ |
| GRAG [16] | ✓ | ✓ | ✓ | ✗ | (✓) 16 | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ | (✓) 17 | (✗) 18 | ✗ | ✗ | ✗ |
| GraphTrace (our approach) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | (✓) 2 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Metric | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|
| MRR | 0.3745 | 0.3597 | 0.3543 | 0.3318 | 0.0546 | 0.0808 | 0.4477 |
| MAP | 0.0873 | 0.1136 | 0.0870 | 0.0873 | 0.0137 | 0.0143 | 0.1906 |
| Hit@10 | 0.7940 | 0.8202 | 0.7940 | 0.8427 | 0.1573 | 0.1723 | 0.8127 |

| Metric | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|
| MRR | 0.3502 | 0.368 | 0.3431 | 0.3102 | 0.0581 | 0.1013 | 0.484 |
| MAP | 0.0814 | 0.1332 | 0.0826 | 0.086 | 0.0161 | 0.0179 | 0.2314 |
| Hit@10 | 0.7889 | 0.8778 | 0.7667 | 0.8333 | 0.2667 | 0.2556 | 0.8444 |

| Metric | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|
| MRR | 0.4261 | 0.3766 | 0.3925 | 0.3551 | 0.017 | 0.0863 | 0.4149 |
| MAP | 0.1038 | 0.1164 | 0.0995 | 0.0926 | 0.0049 | 0.0144 | 0.175 |
| Hit@10 | 0.8 | 0.8333 | 0.8111 | 0.8444 | 0.0556 | 0.1667 | 0.8 |

| Metric | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|
| MRR | 0.3464 | 0.3336 | 0.3262 | 0.33 | 0.0897 | 0.054 | 0.4442 |
| MAP | 0.0763 | 0.0905 | 0.0785 | 0.0831 | 0.0203 | 0.0103 | 0.1644 |
| Hit@10 | 0.7931 | 0.7471 | 0.8046 | 0.8506 | 0.1494 | 0.092 | 0.7931 |

| Hop Count | Q/A Count | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|---|
| MRR | | | | | | | | |
| 4 | 1 | 0.5000 | 0.5000 | 0.3333 | 0.5000 | 0.0000 | 0.0000 | 0.2000 |
| 5 | 2 | 0.7500 | 0.2667 | 0.3750 | 0.5000 | 0.0000 | 0.0000 | 0.7500 |
| 6 | 23 | 0.4094 | 0.4650 | 0.5345 | 0.4301 | 0.0605 | 0.0336 | 0.5765 |
| 7 | 47 | 0.3482 | 0.3501 | 0.3055 | 0.2889 | 0.0607 | 0.1606 | 0.5377 |
| 8 | 17 | 0.2196 | 0.2908 | 0.1849 | 0.1737 | 0.0580 | 0.0470 | 0.1960 |
| MAP | | | | | | | | |
| 4 | 1 | 0.1250 | 0.2500 | 0.0833 | 0.2083 | 0.0000 | 0.0000 | 0.1056 |
| 5 | 2 | 0.1900 | 0.1517 | 0.1083 | 0.2429 | 0.0000 | 0.0000 | 0.3100 |
| 6 | 23 | 0.1250 | 0.2073 | 0.1597 | 0.1366 | 0.0187 | 0.0072 | 0.3276 |
| 7 | 47 | 0.0677 | 0.1078 | 0.0591 | 0.0714 | 0.0145 | 0.0285 | 0.2433 |
| 8 | 17 | 0.0452 | 0.0940 | 0.0402 | 0.0322 | 0.0196 | 0.0064 | 0.0667 |
| Hit@10 | | | | | | | | |
| 4 | 1 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.0000 | 0.0000 | 1.0000 |
| 5 | 2 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.0000 | 0.0000 | 1.0000 |
| 6 | 23 | 0.9565 | 0.9130 | 0.9565 | 0.9565 | 0.3913 | 0.1739 | 1.0000 |
| 7 | 47 | 0.7660 | 0.8936 | 0.7447 | 0.8511 | 0.2128 | 0.3617 | 0.8511 |
| 8 | 17 | 0.5882 | 0.7647 | 0.5294 | 0.5882 | 0.2941 | 0.1176 | 0.5882 |

| Hop Count | Q/A Count | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|---|
| MRR | | | | | | | | |
| 4 | 1 | 0.2500 | 0.5000 | 0.2500 | 0.2500 | 0.0000 | 0.0000 | 0.2000 |
| 5 | 10 | 0.2917 | 0.2010 | 0.3617 | 0.3017 | 0.0324 | 0.1091 | 0.3169 |
| 6 | 70 | 0.4433 | 0.4037 | 0.3993 | 0.3689 | 0.0148 | 0.0798 | 0.4356 |
| 7 | 8 | 0.3937 | 0.2661 | 0.3762 | 0.2958 | 0.0104 | 0.0114 | 0.3721 |
| 8 | 1 | 1.0000 | 1.0000 | 0.5000 | 0.5000 | 0.0909 | 1.0000 | 0.5000 |
| MAP | | | | | | | | |
| 4 | 1 | 0.0625 | 0.1250 | 0.0625 | 0.0625 | 0.0000 | 0.0000 | 0.2339 |
| 5 | 10 | 0.1010 | 0.0813 | 0.1310 | 0.0913 | 0.0090 | 0.0218 | 0.1377 |
| 6 | 70 | 0.1060 | 0.1233 | 0.0985 | 0.0943 | 0.0035 | 0.0133 | 0.1797 |
| 7 | 8 | 0.0836 | 0.0704 | 0.0716 | 0.0703 | 0.0015 | 0.0031 | 0.1650 |
| 8 | 1 | 0.1750 | 0.3438 | 0.1125 | 0.1994 | 0.0968 | 0.1250 | 0.2396 |
| Hit@10 | | | | | | | | |
| 4 | 1 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.0000 | 0.0000 | 1.0000 |
| 5 | 10 | 0.7000 | 0.8000 | 0.7000 | 0.9000 | 0.1000 | 0.1000 | 0.8000 |
| 6 | 70 | 0.8000 | 0.8143 | 0.8143 | 0.8143 | 0.0571 | 0.1857 | 0.7857 |
| 7 | 8 | 0.8750 | 1.0000 | 0.8750 | 1.0000 | 0.0000 | 0.0000 | 0.8750 |
| 8 | 1 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.0000 | 1.0000 | 1.0000 |

| Hop Count | Q/A Count | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|---|
| MRR | | | | | | | | |
| 4 | 4 | 0.2500 | 0.3542 | 0.1542 | 0.3208 | 0.0625 | 0.0000 | 0.4750 |
| 5 | 6 | 0.3667 | 0.2750 | 0.3111 | 0.3472 | 0.0833 | 0.1667 | 0.3806 |
| 6 | 77 | 0.3498 | 0.3371 | 0.3364 | 0.3292 | 0.0916 | 0.0480 | 0.4475 |
| MAP | | | | | | | | |
| 4 | 4 | 0.0625 | 0.1522 | 0.0385 | 0.1576 | 0.0156 | 0.0000 | 0.3552 |
| 5 | 6 | 0.0978 | 0.0645 | 0.0717 | 0.0885 | 0.0167 | 0.0333 | 0.1083 |
| 6 | 77 | 0.0754 | 0.0893 | 0.0811 | 0.0788 | 0.0208 | 0.0090 | 0.1589 |
| Hit@10 | | | | | | | | |
| 4 | 4 | 1.0000 | 1.0000 | 0.7500 | 1.0000 | 0.2500 | 0.0000 | 1.0000 |
| 5 | 6 | 0.6667 | 0.6667 | 0.6667 | 0.8333 | 0.1667 | 0.1667 | 0.8333 |
| 6 | 77 | 0.7922 | 0.7403 | 0.8182 | 0.8442 | 0.1429 | 0.0909 | 0.7792 |

| Criterion | Naive RAG | Naive RAG + Subquery | Hybrid RAG | Rerank RAG | Naive GraphRAG | KG RAG | GraphTrace |
|---|---|---|---|---|---|---|---|
| Converging | | | | | | | |
| Comprehensiveness | 3.70% | 16.30% | 0.37% | 15.93% | 30.74% | 12.96% | 20.00% |
| Diversity | 6.30% | 26.67% | 3.33% | 16.30% | 15.93% | 20.37% | 11.11% |
| Empowerment | 2.59% | 17.04% | 2.22% | 13.33% | 26.30% | 20.74% | 17.78% |
| Directness | 6.30% | 19.26% | 4.07% | 12.22% | 3.70% | 23.70% | 30.74% |
| Overall | 3.70% | 17.04% | 0.37% | 15.93% | 30.37% | 12.59% | 20.00% |
| Divergent | | | | | | | |
| Comprehensiveness | 13.33% | 10.74% | 5.56% | 14.44% | 20.37% | 15.93% | 19.63% |
| Diversity | 11.11% | 24.81% | 5.56% | 13.33% | 10.00% | 16.67% | 18.52% |
| Empowerment | 13.33% | 10.74% | 4.07% | 13.33% | 18.89% | 21.85% | 17.78% |
| Directness | 19.26% | 11.11% | 8.89% | 14.07% | 0.37% | 17.04% | 29.26% |
| Overall | 13.70% | 10.74% | 5.56% | 14.81% | 19.63% | 15.56% | 20.00% |
| Linear | | | | | | | |
| Comprehensiveness | 19.54% | 11.88% | 2.68% | 17.62% | 17.62% | 14.94% | 15.71% |
| Diversity | 15.71% | 22.99% | 7.28% | 12.26% | 15.33% | 9.58% | 16.86% |
| Empowerment | 18.39% | 11.88% | 2.68% | 14.94% | 18.01% | 18.77% | 15.33% |
| Directness | 21.07% | 8.81% | 2.30% | 15.71% | 5.75% | 22.22% | 24.14% |
| Overall | 18.39% | 13.41% | 2.30% | 17.24% | 17.24% | 15.71% | 15.71% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Osipjan, A.; Khorashadizadeh, H.; Kessel, A.-L.; Groppe, S.; Groppe, J. GraphTrace: A Modular Retrieval Framework Combining Knowledge Graphs and Large Language Models for Multi-Hop Question Answering. Computers 2025, 14, 382. https://doi.org/10.3390/computers14090382
