1. Introduction

In the modern digital era, usability is not merely a desirable feature but a critical determinant of an application’s success or failure. Empirical data indicate that 88% of users are less likely to return after a poor user experience, underscoring the high stakes of usability failure [1]. Additionally, approximately 70% of app uninstallations occur due to performance issues [2].

These figures highlight the growing need for developers to prioritize usability at every stage of the design and deployment lifecycle.

The 2018 revision of ISO 9241-11 [3] preserves and elaborates upon the concepts established in the 1998 edition of the standard. It extends the original definition of usability to encompass systems and services as well as products: “the extent to which a system, product, or service can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use.” The content has also been expanded to incorporate contemporary approaches to usability and user experience [3].

Traditional usability evaluation methods, such as heuristic evaluation, user testing, and survey-based feedback, are effective but often resource-intensive, time-consuming, and difficult to scale in rapidly evolving software environments. In contrast, user-generated content, particularly negative app reviews, offers a continuous, real-time stream of usability insights directly from end-users. However, manually analyzing such content at scale is impractical, and existing automated sentiment analysis tools often lack the semantic depth and contextual awareness needed to translate feedback into actionable design improvements. This gap matters because usability is a key determinant of software quality: it directly influences user satisfaction, reduces errors and frustration, and enhances effectiveness, efficiency, adoption, and retention. The author of [4] further emphasizes that customer satisfaction arises from meeting or exceeding expectations, a principle particularly relevant in contexts such as logistics, where delays or errors often generate negative emotions and dissatisfaction. App reviews therefore serve as a valuable resource for eliciting user requirements, shaping design decisions, and guiding future releases. By systematically incorporating insights from app reviews into the development process, organizations can better align applications with user expectations, strengthen engagement, and improve long-term retention, making reviews one of the most important sources of feedback during the maintenance phase [5].

Recent advancements in large language models (LLMs), such as OpenAI’s GPT-4 and Google’s Gemini, offer a new way to bridge this gap. These models possess powerful natural language understanding and generation capabilities, enabling them not only to analyze unstructured text but also to generate human-like recommendations grounded in real user feedback.

This paper proposes a novel, intelligent framework that leverages LLMs to extract and generate usability enhancement recommendations from negative app reviews. By integrating prompt engineering and fine-tuning strategies, the proposed system addresses critical usability dimensions including correctness, completeness, and satisfaction.

Through the construction of a manually labeled dataset of 5000 Instagram app reviews, this study provides a benchmark to evaluate the comparative performance of GPT, Gemini, and BLOOM. Evaluation is conducted using BLEU, ROUGE, and METEOR scores, which are widely recognized metrics in natural language generation research.

The key contributions of this study are as follows:

A novel and scalable framework that leverages large language models (LLMs) to transform negative user feedback into precise, usability-driven recommendations across core usability dimensions: correctness, completeness, and satisfaction.

A manually annotated dataset of negative app reviews, curated by software engineering experts, which serves as a benchmark resource for future research in automated usability analysis and AI-assisted quality assurance.

A comparative evaluation of three prominent LLMs—GPT-4, Gemini, and BLOOM—under prompting and fine-tuning settings, highlighting performance trade-offs, model-specific strengths, and practical implications for usability recommendation tasks.

A fully integrated, web-based tool that operationalizes the framework, enabling practitioners to analyze user reviews and receive real-time, AI-generated usability recommendations—facilitating faster decision-making in the software development lifecycle.

A practical contribution to user-centered software engineering, demonstrating how generative AI can augment traditional usability engineering by enabling scalable, context-aware analysis of user sentiment throughout the design, testing, and maintenance phases.

The remainder of this paper is structured as follows:

Section 2 reviews related work on usability analysis and the application of large language models (LLMs) in software engineering.

Section 3 outlines the methodology for developing the proposed LLM-based framework, including dataset collection, manual annotation, prompting, and fine-tuning strategies.

Section 4 introduces the Review Analyzer tool, which operationalizes the framework in a web-based environment.

Section 5 presents the experimental results, comparing the performance of GPT-4, Gemini, and BLOOM, and discusses the technical and methodological challenges encountered.

Finally, Section 6 concludes the paper and highlights future research directions for advancing AI-driven usability engineering.

2. Related Work

The analysis of user reviews for usability insights has evolved into two primary paradigms: non-tuning approaches and tuning-based approaches. Non-tuning approaches rely on leveraging pre-trained models without further adaptation to specific datasets, while tuning-based approaches involve the fine-tuning of such models to improve performance in context-specific tasks [6]. This section reviews both paradigms, highlighting their strengths, limitations, and relevance to our proposed framework.

2.1. Non-Tuning Paradigm

LLMs have demonstrated remarkable zero-shot and few-shot capabilities across a wide range of previously unseen tasks [7]. As a result, recent studies suggest that LLMs possess inherent recommendation capabilities that can be activated through carefully crafted prompts. These studies employ contemporary paradigms such as instruction learning and in-context learning to adapt LLMs for recommendation tasks without the need for parameter tuning. Depending on whether the prompt includes demonstration examples, research within this paradigm can be broadly classified into two main categories: prompting and in-context learning.

The following subsection provides a detailed review of the prompting-based approaches under this paradigm.

Prompting

This line of research explores how carefully designed instructions and prompts can improve LLMs’ ability to perform recommendation tasks. Liu et al. [8] evaluated ChatGPT on five common tasks: rating prediction, sequential recommendation, direct recommendation, explanation generation, and review summarization. Similarly, Dai et al. [9] examined ChatGPT’s performance on three information retrieval tasks (point-wise, pair-wise, and list-wise ranking) using task-specific prompts and role instructions (e.g., “You are a news recommendation system”).

To test prompt effectiveness, Sanner et al. [10] created three template types: one based on item attributes, one on user preferences, and one combining both. Their results showed that zero-shot and few-shot prompting can perform competitively with traditional collaborative filtering, especially in cold-start cases. Other researchers have developed task-specific strategies. For example, Sileo et al. [11] built movie recommendation prompts from GPT-2’s pretraining corpus, while Hou et al. [12] proposed recency-focused sequential prompting to capture temporal patterns and bootstrapping to reduce ranking bias.

Because LLMs have limited context windows that make long candidate lists difficult to handle, Sun et al. [13] introduced a sliding window technique that iteratively ranks smaller segments of the list. Yang et al. [14] developed a sequence-residual prompt that improves the interpretability of sequential recommenders.
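The sliding-window idea can be made concrete with a small sketch. Here `rank_window` is a hypothetical stand-in for an LLM call that reorders a short list; in the usage example it simply sorts by a made-up relevance score so the code runs offline. The window size and step are illustrative values, not those used by Sun et al. [13].

```python
from typing import Callable, List, Sequence


def sliding_window_rerank(
    candidates: Sequence[str],
    rank_window: Callable[[List[str]], List[str]],
    window_size: int = 4,
    step: int = 2,
) -> List[str]:
    """Re-rank a long list by ranking overlapping windows from back to front.

    Only `window_size` candidates are passed to `rank_window` at a time, which
    keeps each call within a model's context limit.
    """
    if not 0 < step < window_size:
        raise ValueError("step must satisfy 0 < step < window_size")
    items = list(candidates)
    end = len(items)
    while True:
        start = max(0, end - window_size)
        items[start:end] = rank_window(items[start:end])
        if start == 0:
            break
        end -= step  # windows overlap, so strong candidates keep moving forward
    return items


if __name__ == "__main__":
    # Hypothetical relevance scores standing in for the LLM's judgement.
    relevance = {f"item{i}": i for i in range(10)}

    def mock_rank(window: List[str]) -> List[str]:
        return sorted(window, key=relevance.__getitem__, reverse=True)

    print(sliding_window_rerank(list(relevance), mock_rank))
```

In this example a single back-to-front pass moves the two most relevant items (`item9`, `item8`) to the front while the tail remains only partially ordered, which is acceptable when only the top of the ranking is shown to users.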

Beyond using LLMs as recommenders, several works explored their use for enriching model features in traditional systems. Yang et al. [15] and Lyu et al. [16] used prompting for content augmentation, improving item-side representations. On the user side, Wang and Lim [17] generated user preference descriptions, while Wang et al. [18] built reasoning graphs with ChatGPT and a knowledge base to enrich user representations.

Wei et al. [19] and Ren et al. [20] created textual features for users and items using ChatGPT, enhancing ID-based learning. Likewise, Liu et al. [21] proposed GENRE, which uses three prompting techniques to improve news recommendations: refining titles, extracting keywords from user reading histories, and generating synthetic articles to simulate user interactions.

Recommendation systems typically comprise ranking models, content repositories, and candidate retrieval modules. Some studies position LLMs as controllers across this pipeline. Frameworks such as Gao et al.’s ChatREC [22], Shu et al.’s RAH [23], Shi et al.’s BiLLP [24], and Huang et al.’s InteRecAgent [25] facilitate interactive recommendations through multi-turn conversations, enabling dynamic tool integration and user support, as further discussed by Huang et al. [26] in the context of trust and satisfaction.

Personalized recommendation services are also advancing through LLMs. Zhang et al. [27] explored agent–user–item collaboration, while Petrov et al. [28] developed GeneRec to determine whether to recommend existing or newly generated items. Simulation-based recommender environments have also emerged, such as RecAgent [29] and Agent4Rec [30], which model real-world user behaviors and enable reinforcement learning and feedback tracking.

As an alternative to using LLMs as system controllers, Zhang et al. [31] proposed UniLLMRec, an end-to-end recommendation framework that connects recall, ranking, and re-ranking using natural language prompts. This method exploits the zero-shot capabilities of LLMs, offering a practical and low-cost solution for real-world recommendation problems.

In summary, this line of research emphasizes the design of instructional prompts that allow LLMs to perform recommendation tasks effectively. Studies such as those by Wang and Lim [17] and Dai et al. [9] introduced tailored prompt structures and role instructions to improve task alignment and domain adaptation. Others, like Sanner et al. [10], focused on template evaluation and cold-start performance. Innovative strategies, such as sliding window prompts and prompt-based feature generation, demonstrate the expanding role of LLMs in both standalone and hybrid recommender architectures. Frameworks like ChatREC and GeneRec showcase how interactive and generative paradigms are shaping the next generation of LLM-driven recommendation systems.

As evidenced by Table 1, the majority of prompting-based studies succeed in generating basic recommendations but fall short in delivering complete, correct, and satisfying outputs for end users. Most models achieve only partial effectiveness, particularly lacking in handling negative app reviews and meeting usability standards such as completeness and user satisfaction. While frameworks like BiLLP and Rec4Agentverse demonstrate isolated strengths (e.g., in satisfaction), they still do not provide holistic usability support. In contrast, our proposed approach, leveraging GPT, Gemini, and BLOOM, demonstrates a consistent and comprehensive usability advantage across all evaluated dimensions. This suggests that the design of our prompting strategy more effectively aligns with real-world user needs and highlights the importance of usability-centric evaluation in recommendation research.

2.2. Tuning Paradigm

As previously noted, LLMs demonstrate strong zero-shot and few-shot capabilities, enabling them to generate recommendations that significantly surpass random guessing when guided by well-designed prompts. However, it is not surprising that such recommendation systems may still fall short of the performance achieved by models explicitly trained on task-specific datasets. Consequently, a growing body of research focuses on enhancing the recommendation performance of LLMs through tuning techniques. These tuning approaches can be broadly categorized into two main types: fine-tuning and prompt tuning.

In fine-tuning, both discriminative and generative LLMs are typically used as encoders to extract user or item representations. The model parameters are then updated using task-specific loss functions to optimize performance for downstream recommendation tasks.

Fine-Tuning

Fine-tuning refers to the additional training of a pre-trained model on a task-specific dataset to tailor its capabilities to a particular domain. This process adjusts the model’s internal parameters, enhancing its performance on new data, especially in cases where pre-trained knowledge alone is insufficient. The “pre-train, fine-tune” paradigm has gained traction in recommendation systems for several reasons: it improves initialization for better generalization, accelerates convergence, and introduces universal knowledge that benefits downstream tasks. Additionally, fine-tuning helps mitigate overfitting in low-resource environments by serving as a form of regularization [32].

The holistic model approach pre-trains an LLM on large datasets and fine-tunes all parameters using varied downstream data sources. Kang et al. [33], for example, pre-trained a GPT model on segmented API code and fine-tuned it using code from another library to support cross-library recommendations. Similarly, Wang et al. [34] fine-tuned DialoGPT on domain-specific conversations for recommendation dialogues, while Xiao et al. [35] adapted a transformer model to learn user-news embeddings. Their results showed that tuning the entire model yielded better performance, underscoring the trade-off between accuracy and training cost.

In contrast, the partial model approach aims to fine-tune only a subset of the model’s parameters, offering a balanced solution between efficiency and effectiveness. Many Language Model Recommendation Systems (LMRSs) adopt this strategy. For instance, Hou et al. [30] applied a linear transformation layer to correct item embeddings across multiple domains, addressing domain-specific biases. They also proposed contrastive multi-task objectives to align domain patterns. Their findings suggest that fine-tuning a small fraction of parameters enables rapid adaptation, including in cold-start scenarios.
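The partial-model idea can be illustrated with a toy, dependency-free sketch: pre-trained item embeddings are kept frozen, and only a small linear transformation is trained to map them into a target domain. The embeddings, targets, squared-error loss, and learning rate below are invented for illustration; the sketch does not reproduce the contrastive multi-task objectives of Hou et al.

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix `w` by vector `x`."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def train_adapter(
    frozen: Dict[str, Vector],
    targets: Dict[str, Vector],
    lr: float = 0.1,
    epochs: int = 500,
) -> Matrix:
    """Fit a linear adapter W so that W @ e approximates the target of each item.

    Only W is trained; the frozen embeddings are read but never modified.
    """
    dim = len(next(iter(frozen.values())))
    w = [[1.0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]  # identity init
    n = len(frozen)
    for _ in range(epochs):
        grad = [[0.0] * dim for _ in range(dim)]
        for item, e in frozen.items():
            err = [p - t for p, t in zip(matvec(w, e), targets[item])]
            # Gradient of mean(0.5 * ||W e - t||^2) with respect to W.
            for i in range(dim):
                for j in range(dim):
                    grad[i][j] += err[i] * e[j] / n
        for i in range(dim):
            for j in range(dim):
                w[i][j] -= lr * grad[i][j]
    return w


if __name__ == "__main__":
    frozen = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}   # pre-trained, fixed
    targets = {"a": [2.0, 0.0], "b": [0.0, 0.5], "c": [2.0, 0.5]}  # hypothetical new domain
    w = train_adapter(frozen, targets)
    print("trainable:", len(w) * len(w[0]), "frozen:", sum(map(len, frozen.values())))
    print([[round(x, 3) for x in row] for row in w])
```

Only the four adapter weights change here; in a real recommender the frozen part would be the full language model, which is where the savings in training cost come from.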

Further contributions include GPTRec [28], a generative sequential recommender built on GPT-2 that employs SVD tokenization for memory efficiency and supports flexible next-K predictions, distinguishing it from the discriminative BERT4Rec. Another study [36] formatted user–item interactions as prompts for rating prediction and found that FLAN-T5-XXL, when fine-tuned, outperformed other models. Moreover, [37] investigated GPT-3’s collaborative filtering performance, while [21] leveraged ChatGPT to enhance item information before transitioning to LLaMA for feature embedding. Lastly, [21] explored embedding-based fine-tuning for recommendation, finding that while LLMs can improve accuracy, traditional ID-based collaborative filtering still performs strongly in warm-start settings.

In summary, the “pre-train, fine-tune” paradigm is becoming increasingly influential in recommendation systems due to its strengths in generalization, convergence, and adaptability. Holistic models optimize the full parameter set for cross-domain recommendation, while partial models selectively update parameters to reduce computational cost and enhance domain robustness. Cutting-edge systems like GPTRec exemplify the use of generative strategies, while broader comparative analyses highlight the relative strengths of both LLM-based and traditional methods.

Table 2 summarizes the usability evaluation of these approaches in recommendation tasks.

As shown in Table 2, most fine-tuned language models demonstrate limited usability performance, typically excelling in recommendation generation while failing to meet criteria such as completeness, correctness, and user satisfaction. Notably, our proposed approach using GPT, Gemini, and BLOOM outperforms all prior works by achieving full usability across all evaluation metrics. This highlights the effectiveness of our fine-tuning strategy in addressing the usability gaps commonly found in existing LLM-based recommendation models.

3. Methodology

This study evaluates how effectively LLMs generate actionable usability recommendations from negative app reviews. The proposed methodology consists of five key stages. First, negative user reviews are collected and annotated based on usability issues related to three core factors: correctness, completeness, and satisfaction. Second, the annotated reviews are analyzed to identify the causes of usability issues using both prompt-based and fine-tuned LLM approaches. Third, the system generates usability improvement recommendations to assist developers in addressing the identified issues across the specified factors. Fourth, the quality and relevance of the generated recommendations are assessed using standard automatic evaluation metrics alongside expert human validation. Finally, an integrated tool is developed to streamline the entire process within a unified interface.

Figure 1 presents the overall framework of the methodology.

3.1. Negative Reviews Collection and Annotation

In the authors’ previous study [38], the Instagram App Reviews dataset from Kaggle was utilized to develop an intelligent aspect-based sentiment analysis model capable of classifying user reviews by sentiment polarity (positive or negative) across three core usability factors: correctness, completeness, and satisfaction. Building on that work, the present study utilizes the same model to extract negative user reviews specifically linked to these usability factors, resulting in a dataset of 309 negative reviews.

Annotation of Negative Reviews by Identifying Usability Issue Causes and Corresponding Improvement Recommendations

To ensure a reliable evaluation of the usability issue causes identified by LLMs and the corresponding recommendations they generate, the negative app reviews were manually annotated by software engineering experts. These experts identified the root causes of usability problems and mapped them to appropriate, actionable recommendations. The resulting expert annotations serve as ground truth references against which the outputs of both prompt-based and fine-tuned LLMs can be systematically compared.

Three software engineering experts systematically analyzed each negative review to identify the root causes of user dissatisfaction and map them to the relevant usability factor (correctness, completeness, or satisfaction). Based on these identified causes, the experts formulated actionable recommendations aimed at effectively resolving the underlying usability issues. To ensure a consistent and systematic annotation of negative reviews, the following guidelines were provided to the experts. First, the experts were given the definitions and examples of the usability factors presented in Table 3.

Second, each expert was instructed to follow these steps independently:

Carefully read the entire review to understand the user’s complaint in context.

Identify the root cause of the issue: whether it reflects a lack of functionality (negative completeness), a functional failure (negative correctness), or a poor user experience (negative satisfaction).

Formulate a clear, actionable recommendation that a developer or designer could feasibly implement to resolve the issue.

Avoid vague, non-specific suggestions and ensure all recommendations are user-centered and aligned with usability best practices.

Third, to ensure annotation reliability:

All reviews were independently analyzed by the three experts.

In cases of disagreement, annotations were discussed and resolved through consensus.

Recommendations were reviewed to ensure that they were aligned with user-centered design principles and actionable from a development perspective.
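The text does not describe any tooling for this reliability step; the sketch below is a hypothetical illustration of how independently collected annotations could be screened before the consensus discussion. It compares only the usability factor each expert assigned, since free-text causes and recommendations need human judgement to compare. The class and function names are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List

FACTORS = {"correctness", "completeness", "satisfaction"}


@dataclass
class ExpertAnnotation:
    expert: str
    factor: str          # negative usability factor assigned to the review
    cause: str           # root cause, in the expert's words
    recommendation: str  # actionable fix proposed by the expert

    def __post_init__(self) -> None:
        if self.factor not in FACTORS:
            raise ValueError(f"unknown usability factor: {self.factor!r}")


def reviews_needing_consensus(
    annotations: Dict[str, List[ExpertAnnotation]],
) -> List[str]:
    """Return IDs of reviews whose experts assigned different usability factors."""
    return [
        review_id
        for review_id, labels in annotations.items()
        if len({a.factor for a in labels}) > 1
    ]
```

Reviews returned by `reviews_needing_consensus` would go to the discussion round; unanimous reviews still need their free-text causes and recommendations checked by the experts.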

Table 4 presents annotation examples for each negative usability factor, along with the identified cause of the issue and the corresponding recommendation to address it.

3.2. Prompting and Fine-Tuning LLMs to Identify the Causes of Usability-Related Issues

Three distinct LLMs (GPT-4, Gemini, and BLOOM) were deployed to identify the underlying causes of usability issues from negative reviews. Each model offers unique technical advantages. GPT-4, developed by OpenAI, is recognized for its advanced language understanding and ability to generate coherent, contextually relevant responses. Gemini, introduced by Google DeepMind, excels in long-context reasoning and multimodal integration, enabling more nuanced interpretation of user feedback. BLOOM, developed by the BigScience collaboration coordinated by Hugging Face, stands out for its open-source nature and strong multilingual capabilities, making it well suited to research and diverse language support.

3.2.1. Prompting Approach

Two prompting strategies were employed: Zero-Shot Prompting and Few-Shot Prompting. As shown in Table 5, both approaches aim to guide the LLMs in identifying the causes of usability issues from negative reviews [7].
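Table 5 holds the actual prompts; as a rough illustration of how the two strategies differ structurally, the sketch below assembles a zero-shot prompt (instruction only) and a few-shot prompt (instruction plus worked examples). The instruction wording, the `Factor:`/`Cause:` output format, and the demonstration cause are assumptions, not the prompts used in this study.

```python
from typing import List, Optional, Tuple

# (review, usability factor, cause) triples used as demonstrations.
Example = Tuple[str, str, str]

INSTRUCTION = (
    "You are a software usability analyst. Read the negative app review and "
    "state the affected usability factor (correctness, completeness, or "
    "satisfaction) and the root cause of the usability issue."
)


def build_cause_prompt(review: str, examples: Optional[List[Example]] = None) -> str:
    """Build a zero-shot prompt when `examples` is empty, otherwise a few-shot prompt."""
    parts = [INSTRUCTION]
    for ex_review, factor, cause in examples or []:
        parts.append(f"Review: {ex_review}\nFactor: {factor}\nCause: {cause}")
    # The target review ends with an open "Factor:" slot for the model to complete.
    parts.append(f"Review: {review.strip()}\nFactor:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    demo = [(
        "The app crashes frequently and takes too long to load.",
        "correctness",
        "Unstable build causes crashes and slow start-up.",  # illustrative cause
    )]
    print(build_cause_prompt("I can't save drafts of my posts.", demo))
```

The only difference between the two strategies in this sketch is whether demonstration blocks precede the target review, which is what lets the same model switch between them.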

3.2.2. Fine-Tuning Approach

The three LLMs (GPT-4, Gemini, and BLOOM) were fine-tuned to identify the root causes of usability issues in negative app reviews. The fine-tuning process involved retraining each model on the dataset of negative reviews annotated by software engineering experts, in which each review was labeled with the underlying cause of its usability issue.

The fine-tuning process begins by installing essential libraries and importing dependencies for data preprocessing, model training, and evaluation [30]. A CSV file containing negative reviews and expert-annotated causes of usability issues is then uploaded, cleaned, and prepared for training. Next, appropriate hyperparameters are configured, including 10 training epochs, a batch size of 32, a learning rate of 1 × 10⁻⁵, and the AdamW optimizer [34]. The model’s tokenizer is then used to encode the input–output pairs for training. The LLM is fine-tuned using the Hugging Face Transformers framework on the annotated dataset, with model weights saved at each epoch for checkpointing [33]. Once fine-tuning is complete, the trained model is employed to identify the causes of usability issues from negative reviews. Finally, the model’s outputs are evaluated using standard metrics and validated by domain experts to assess accuracy and reliability.
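To make the reported configuration concrete, the sketch below encodes the stated hyperparameters and implements the AdamW update in plain Python. The beta, epsilon, and weight-decay values are not reported in the text and are assumed here to be the defaults of PyTorch's `torch.optim.AdamW`; the order of the decay and gradient steps also follows PyTorch. In practice, a training framework such as Transformers performs this update internally.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FineTuneConfig:
    epochs: int = 10                           # reported
    batch_size: int = 32                       # reported
    learning_rate: float = 1e-5                # reported
    betas: Tuple[float, float] = (0.9, 0.999)  # assumed (PyTorch AdamW default)
    eps: float = 1e-8                          # assumed (PyTorch AdamW default)
    weight_decay: float = 0.01                 # assumed (PyTorch AdamW default)


def total_steps(num_examples: int, cfg: FineTuneConfig) -> int:
    """Optimizer steps for the whole run when the last partial batch is kept."""
    return cfg.epochs * math.ceil(num_examples / cfg.batch_size)


class AdamW:
    """Element-wise AdamW with decoupled weight decay."""

    def __init__(self, params: List[float], cfg: FineTuneConfig) -> None:
        self.params, self.cfg = params, cfg
        self.m = [0.0] * len(params)  # first-moment estimates
        self.v = [0.0] * len(params)  # second-moment estimates
        self.t = 0

    def step(self, grads: List[float]) -> None:
        b1, b2 = self.cfg.betas
        lr = self.cfg.learning_rate
        self.t += 1
        for i, g in enumerate(grads):
            # Decoupled decay: shrink the weight directly instead of adding it to g.
            self.params[i] -= lr * self.cfg.weight_decay * self.params[i]
            self.m[i] = b1 * self.m[i] + (1 - b1) * g
            self.v[i] = b2 * self.v[i] + (1 - b2) * g * g
            m_hat = self.m[i] / (1 - b1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - b2 ** self.t)
            self.params[i] -= lr * m_hat / (math.sqrt(v_hat) + self.cfg.eps)
```

For a hypothetical training split of 300 examples, `total_steps(300, FineTuneConfig())` gives 10 × 10 = 100 optimizer steps. On the first step each weight moves by roughly the learning rate, because the bias-corrected ratio of the first and second moments is close to ±1.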

3.3. Prompting and Fine-Tuning LLMs to Generate Actionable Recommendations

The same three LLMs—GPT-4, Gemini, and BLOOM—used to identify the causes of usability issues were also employed to generate actionable recommendations aimed at addressing the identified causes.

3.3.1. Prompting Approach

Similarly, two prompting strategies, Zero-Shot and Few-Shot Prompting, were employed with the three LLMs: GPT-4, Gemini, and BLOOM. As shown in Table 6, these prompts were carefully designed to guide the models in generating clear, developer-relevant recommendations based on the identified causes of usability issues related to the core factors of correctness, completeness, and satisfaction.
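Because recommendations are generated from the identified causes, the two prompting steps can be chained. The hypothetical sketch below parses a cause-identification reply, assumed to follow a `Factor:` / `Cause:` line format, and feeds it into a recommendation prompt; the instruction wording and demonstration format are illustrative and do not reproduce Table 6.

```python
import re
from typing import List, Optional, Tuple

FACTORS = ("correctness", "completeness", "satisfaction")

# (review, factor, cause, recommendation) demonstrations for few-shot prompting.
RecExample = Tuple[str, str, str, str]


def parse_cause_reply(reply: str) -> Tuple[str, str]:
    """Extract (factor, cause) from a reply with 'Factor: ...' and 'Cause: ...' lines."""
    factor = re.search(r"^\s*Factor:\s*(\w+)", reply, re.IGNORECASE | re.MULTILINE)
    cause = re.search(r"^\s*Cause:\s*(.+)$", reply, re.IGNORECASE | re.MULTILINE)
    if factor is None or cause is None or factor.group(1).lower() not in FACTORS:
        raise ValueError("reply does not follow the expected Factor/Cause format")
    return factor.group(1).lower(), cause.group(1).strip()


def build_recommendation_prompt(
    review: str, factor: str, cause: str, examples: Optional[List[RecExample]] = None
) -> str:
    """Zero-shot without `examples`, few-shot with them; ends with an open slot."""
    parts = [
        "You are a usability engineer. Write one clear, actionable recommendation "
        "that a developer could implement to resolve the usability issue."
    ]
    for ex in examples or []:
        parts.append("Review: {}\nFactor: {}\nCause: {}\nRecommendation: {}".format(*ex))
    parts.append(f"Review: {review}\nFactor: {factor}\nCause: {cause}\nRecommendation:")
    return "\n\n".join(parts)
```

Rejecting replies that do not match the expected format, rather than passing them on, keeps a malformed first-step answer from silently producing an unrelated recommendation.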

3.3.2. Fine-Tuning Approach

The fine-tuning process begins by installing the necessary libraries and importing dependencies required for data preprocessing, model training, and evaluation [26]. A CSV file containing negative app reviews and corresponding expert-authored recommendations is uploaded, cleaned, and formatted for training. The models are then fine-tuned using a standard supervised learning approach, with hyperparameters set to 10 training epochs, a batch size of 32, a learning rate of 1 × 10⁻⁵, and the AdamW optimizer. Each model’s native tokenizer is used to encode the data, ensuring compatibility and preserving semantic integrity. Once fine-tuned, the LLMs are employed to generate usability improvement recommendations for new, previously unseen reviews. These outputs are evaluated through a combination of automated metrics and expert human validation to assess their clarity, practicality, and relevance to the identified usability issues.
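The data-preparation step can be sketched with Python's standard `csv` module. The column names `review` and `recommendation`, the cleaning rules (whitespace normalization, dropping empty or duplicate rows), the prompt template, and the 80/20 split used to hold out unseen reviews are all assumptions for illustration; the text does not specify them.

```python
import csv
import io
import random
from typing import Dict, List, Tuple

Pair = Dict[str, str]


def load_pairs(csv_text: str, input_col: str = "review",
               target_col: str = "recommendation") -> List[Pair]:
    """Read review/recommendation rows and turn them into input-target pairs."""
    pairs: List[Pair] = []
    seen = set()
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Collapse runs of whitespace and strip leading/trailing spaces.
        review = " ".join((row.get(input_col) or "").split())
        target = " ".join((row.get(target_col) or "").split())
        if not review or not target or review in seen:
            continue  # drop incomplete rows and duplicate reviews
        seen.add(review)
        pairs.append({"input": f"Review: {review}\nRecommendation:", "target": target})
    return pairs


def split_pairs(pairs: List[Pair], test_fraction: float = 0.2,
                seed: int = 42) -> Tuple[List[Pair], List[Pair]]:
    """Shuffle reproducibly and hold out a fraction as unseen test reviews."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]
```

With a file on disk, the text can be read with `open(path, newline="", encoding="utf-8").read()`; the resulting `input`/`target` strings would then be passed to each model's tokenizer.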

3.4. Results Evaluation Strategy

The recommendations generated by LLMs were evaluated using a combination of automated metrics and expert human validation to assess their quality, clarity, and relevance.

3.4.1. Automated Evaluation Using Standard Metrics

To evaluate the quality of the outputs generated by the LLMs, three widely adopted metrics were used: BLEU, ROUGE, and METEOR. Together, these metrics assess the lexical similarity, content overlap, and semantic adequacy of the generated usability recommendations relative to the expert references.

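1. BLEU Score (Bilingual Evaluation Understudy): Measures the modified n-gram precision of the generated text against the reference, with a brevity penalty for outputs shorter than the reference. It is computed as follows:

$$\mathrm{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{\,1 - r/c} & \text{if } c \le r \end{cases}, \qquad \mathrm{BLEU} = \mathrm{BP} \cdot \exp\!\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

where $p_n$ is the modified n-gram precision, $w_n$ are positive weights summing to one (typically $w_n = 1/N$ with $N = 4$), $c$ is the length of the generated text, and $r$ is the reference length.

2. ROUGE Score (Recall-Oriented Understudy for Gisting Evaluation): Evaluates the overlap of n-grams and sequences between the generated and reference texts and is particularly useful for summarization-style outputs. The formula is as follows:

$$\mathrm{ROUGE\text{-}N} = \frac{\sum_{S \in \mathrm{Refs}} \sum_{g_n \in S} \mathrm{Count}_{\mathrm{match}}(g_n)}{\sum_{S \in \mathrm{Refs}} \sum_{g_n \in S} \mathrm{Count}(g_n)}$$

where $g_n$ ranges over the n-grams of each reference $S$ and $\mathrm{Count}_{\mathrm{match}}(g_n)$ is the maximum number of times $g_n$ co-occurs in the generated text and the reference.

3. METEOR Score (Metric for Evaluation of Translation with Explicit Ordering): Considers both precision and recall while incorporating synonymy and stemming to evaluate semantic similarity. The METEOR score is computed as follows:

$$P = \frac{m}{w_t}, \quad R = \frac{m}{w_r}, \quad F_{\mathrm{mean}} = \frac{10PR}{R + 9P}, \quad \mathrm{Penalty} = 0.5\left(\frac{ch}{m}\right)^{3}, \quad \mathrm{METEOR} = F_{\mathrm{mean}}\,(1 - \mathrm{Penalty})$$

where $m$ is the number of matched unigrams, $w_t$ and $w_r$ are the numbers of unigrams in the generated and reference texts, and $ch$ is the number of contiguous chunks of matched unigrams.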

3.4.2. Expert Human Validation

In parallel with automated metrics, a panel of software engineering experts independently reviewed a sample of outputs. Each output was evaluated based on the following qualitative criteria:

Clarity: Is the cause or recommendation clearly articulated and easy to understand?

Usefulness: Does the output offer actionable insight that would be meaningful to a developer?

Relevance: Is the output accurately aligned with the underlying usability factor (correctness, completeness, or satisfaction)?

4. Tool Implementation: The Review Analyzer System

A web-based tool named Review Analyzer was developed using Streamlit to automate the end-to-end process of usability feedback analysis. Designed with a clean and intuitive interface, the tool allows users to input negative app reviews, securely enter their OpenAI API key, and select their preferred language for analysis. A dedicated text box supports direct review entry, with a built-in example to guide formatting, while an “Analyze Review” button initiates real-time processing. The tool then detects negative sentiment, identifies the root causes of usability issues, and generates structured, AI-driven recommendations aligned with the core usability factors. By bridging raw user sentiment with expert-like structured feedback, Review Analyzer provides actionable insights that enable software teams to prioritize fixes and enhancements more effectively.

Figure 2 illustrates the tool interface and highlights these primary functionalities.

Figure 3 presents an example of a negative user review entered through the interface, demonstrating the format and nature of typical input data.

Figure 4 displays the tool’s output: detecting negative sentiment, extracting root causes of usability issues, and generating structured, actionable recommendations.

This example highlights the tool’s ability to bridge raw user sentiment and structured usability feedback, effectively mimicking expert analysis in real time. The generated suggestions align with core usability dimensions (e.g., completeness and satisfaction), demonstrating the model’s contextual understanding and practical value. Such automated triage enables software teams to prioritize design fixes and enhancements based on actual user pain points, significantly improving the development feedback loop.
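The source code of Review Analyzer is not reproduced here, so the following is only a hypothetical sketch of how an interface like the one described could be wired together with Streamlit and the OpenAI Python SDK (v1 client). The system prompt, the model name `gpt-4`, and the widget labels other than those quoted in the text are assumptions. The prompt-building logic is kept in a plain function so that it can be tested without a browser.

```python
"""Hypothetical sketch of a Review Analyzer-style app.

Run with:  streamlit run review_analyzer_sketch.py
"""
from typing import Dict, List

SYSTEM_PROMPT = (
    "You analyze app reviews for usability problems. For the review given, say "
    "whether its sentiment is negative, identify the root cause of the usability "
    "issue, name the affected factor (correctness, completeness, or satisfaction), "
    "and give one actionable recommendation."
)


def build_messages(review: str, language: str) -> List[Dict[str, str]]:
    """Assemble chat messages; `language` sets the language of the answer."""
    return [
        {"role": "system", "content": f"{SYSTEM_PROMPT} Answer in {language}."},
        {"role": "user", "content": review.strip()},
    ]


def main() -> None:
    # Imported lazily so build_messages() works without these packages installed.
    import streamlit as st
    from openai import OpenAI

    st.title("Review Analyzer")
    # Only English is confirmed by the interface description; other options are unspecified.
    language = st.sidebar.selectbox("Language", ["English"])
    api_key = st.sidebar.text_input("Enter your OpenAI API key", type="password")
    review = st.text_area(
        "Enter a review",
        placeholder="The app crashes frequently and takes too long to load.",
    )

    if st.button("Analyze Review"):
        if not api_key or not review.strip():
            st.warning("Please enter both an API key and a review.")
            return
        client = OpenAI(api_key=api_key)
        response = client.chat.completions.create(
            model="gpt-4",  # assumed model name
            messages=build_messages(review, language),
        )
        st.markdown(response.choices[0].message.content)


if __name__ == "__main__":
    try:
        main()
    except ModuleNotFoundError as exc:
        print(f"Missing dependency: {exc.name}. Install streamlit and openai.")
```

Masking the key field with `type="password"` and creating the client only after the button is pressed keeps the key out of the page until it is actually needed.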

Review Analyzer Interface:

This figure displays the user interface of a web-based application called Review Analyzer, developed by Nahed Al-Saleh and deployed using the Streamlit framework. The purpose of this tool is to analyze user-submitted app reviews and identify potential usability issues.

Step-by-Step Description:

1. Interface Title and Author:

The application title, Review Analyzer, is prominently displayed at the top of the interface.

The name of the developer, Nahed Al-Saleh, is listed below the title, indicating authorship.

2. Language and API Key Inputs:

On the left sidebar:

There is a language selection dropdown, defaulted to English.

Below it is a field labeled “Enter your OpenAI API key”, indicating that the application relies on OpenAI’s services for review analysis and requires the user to input a valid API key for functionality.

3. Review Input Field:

In the center of the interface, there is a large text input box where users are prompted to enter a review.

A sample review is provided as a placeholder example:

“The app crashes frequently and takes too long to load.”

4. Action Button:

An “Analyze Review” button initiates real-time processing of the entered review.

5. Footer and Interface Elements:

A red heart icon is shown in the bottom-right corner, likely indicating an appreciation or support feature (typical in Streamlit apps).

Standard browser interface elements, tabs, and file path indicators are visible, suggesting that the app is accessed locally or through a deployed Streamlit link.

5. Experimental Results and Discussion

5.1. Experimental Setup

This section outlines the experimental design and implementation details used to evaluate the effectiveness of large language models (LLMs) in identifying the causes of usability issues and generating actionable recommendations from negative app reviews. The experiment was structured around five key stages: dataset preparation and annotation, LLM-based usability issue identification, recommendation generation, evaluation using automated and expert methods, and tool integration.

This section presents the experimental results assessing the usability metrics of three advanced LLMs—GPT, Gemini, and BLOOM—in generating actionable recommendations aligned with the identified causes of usability issues across the core usability factors. The analysis emphasizes key usability dimensions and employs established evaluation metrics—BLEU, ROUGE, and METEOR—to assess the clarity, relevance, and quality of the generated outputs.

5.2. Results of Prompting LLMs

The performance of three LLMs—GPT, Gemini, and BLOOM—in generating usability improvement recommendations using a prompt-based approach was evaluated across three types of usability issues: completeness, correctness, and satisfaction. The results were evaluated using standard metrics: BLEU, ROUGE, and METEOR. These metrics assess lexical similarity (BLEU), content overlap (ROUGE), and semantic alignment and fluency (METEOR) against expert-labeled references.
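To illustrate what the lexical metrics reward, the sketch below implements sentence-level BLEU (uniform weights, brevity penalty, no smoothing) and ROUGE-N recall with simple lowercase whitespace tokenization, following the formulas given in Section 3.4.1. It is a minimal, dependency-free illustration; published toolkits apply their own tokenization and smoothing, so their scores will not match this sketch exactly. METEOR is omitted because it needs stemming and synonym resources.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Sentence-level BLEU with uniform weights, a brevity penalty, and no smoothing."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    if not cand or not ref:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        total = sum(cand_counts.values())
        # Modified precision: clip each candidate n-gram count by its reference count.
        clipped = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if clipped == 0:
            return 0.0  # without smoothing, any zero precision makes BLEU zero
        log_precision += math.log(clipped / total) / max_n
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(log_precision)


def rouge_n(candidate: str, reference: str, n: int = 1) -> float:
    """ROUGE-N recall: matched reference n-grams divided by all reference n-grams."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    return sum(min(c, cand[g]) for g, c in ref.items()) / total
```

For example, the short output "add offline mode" against the reference "add an offline mode" recovers three of four reference unigrams (ROUGE-1 = 0.75) but is penalized by BLEU for its missing bigrams and its shorter length, which is one reason BLEU values in this study are much lower than ROUGE and METEOR values.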

The experimental results show that GPT consistently outperforms Gemini and BLOOM in generating usability recommendations across all three factors: completeness, correctness, and satisfaction. For completeness-related issues such as missing features (Figure 5A), GPT achieves the highest scores (BLEU 0.62, ROUGE 0.80, METEOR 0.90), indicating strong linguistic and semantic alignment with expert references, while Gemini (0.06, 0.38, 0.37) and BLOOM (0.01, 0.27, 0.22) perform substantially worse. A similar pattern holds for correctness-related issues (Figure 5B), where GPT again records high scores (BLEU 0.60, ROUGE 0.79, METEOR 0.90), reflecting stable performance across usability contexts; Gemini performs slightly better in this category (0.07, 0.39, 0.38) than on completeness, whereas BLOOM remains weak (0.01, 0.28, 0.23). For satisfaction-related issues such as interface intuitiveness and performance (Figure 5C), GPT maintains its lead (0.61, 0.80, 0.90), highlighting its ability to interpret user sentiment and address experiential concerns, while Gemini (0.05, 0.37, 0.36) and BLOOM (0.01, 0.20, 0.16) again lag behind. Overall, these results confirm GPT’s consistent superiority in producing contextually appropriate and actionable recommendations, with Gemini showing moderate capability and BLOOM demonstrating limited effectiveness across all usability dimensions.

As detailed in Table 7, GPT performed consistently well across all usability factors, handling missing functionality (completeness), functional errors (correctness), and suboptimal user experience (satisfaction). In contrast, Gemini and BLOOM exhibited minimal variation across these categories, suggesting a limited capacity to differentiate between nuanced usability factors in a prompt-based setting. These findings indicate that, while prompt-based LLMs can produce actionable usability recommendations, the choice of model strongly affects output quality. GPT emerges as the most reliable model across all metrics and usability factors, whereas Gemini and BLOOM may require further optimization, such as advanced prompting techniques or fine-tuning, to achieve comparable performance.
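As a quick arithmetic check of GPT’s consistent lead under prompting, the per-factor scores reported above for Figure 5A–C can be macro-averaged per model and ranked per metric. The sketch uses only numbers stated in this section; the dictionary layout and function names are illustrative choices, not part of the study’s tooling.

```python
from statistics import mean

# Prompting scores reported in Section 5.2: (BLEU, ROUGE, METEOR) per usability factor.
PROMPTING = {
    "GPT": {"completeness": (0.62, 0.80, 0.90),
            "correctness": (0.60, 0.79, 0.90),
            "satisfaction": (0.61, 0.80, 0.90)},
    "Gemini": {"completeness": (0.06, 0.38, 0.37),
               "correctness": (0.07, 0.39, 0.38),
               "satisfaction": (0.05, 0.37, 0.36)},
    "BLOOM": {"completeness": (0.01, 0.27, 0.22),
              "correctness": (0.01, 0.28, 0.23),
              "satisfaction": (0.01, 0.20, 0.16)},
}
METRICS = ("BLEU", "ROUGE", "METEOR")


def macro_average(scores):
    """Average each metric over the three usability factors (rounded to 3 places)."""
    return {m: round(mean(s[i] for s in scores.values()), 3) for i, m in enumerate(METRICS)}


averages = {model: macro_average(scores) for model, scores in PROMPTING.items()}
# Rank models separately for each metric, best first.
rankings = {m: sorted(averages, key=lambda k: averages[k][m], reverse=True) for m in METRICS}

for model, avg in averages.items():
    print(model, avg)
print(rankings)
```

The ranking is the same for every metric (GPT, then Gemini, then BLOOM), which is what the prose claims; the averages themselves (for example, GPT’s mean ROUGE of about 0.797) are simple means of the reported values.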

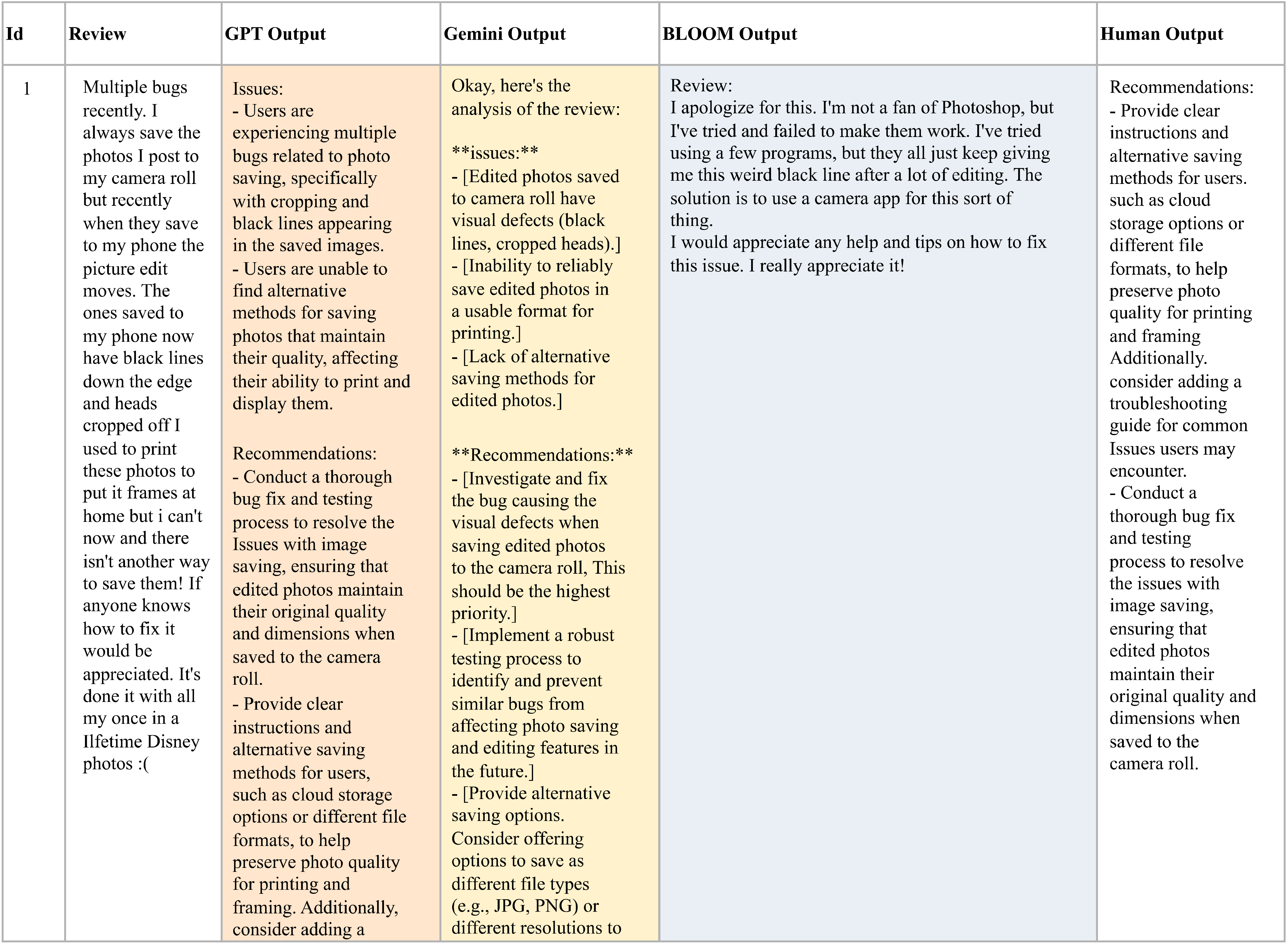

Figure 6 shows sample usability recommendations generated by prompting the three LLMs (GPT, Gemini, and BLOOM) with a negative user review, alongside the corresponding expert-provided recommendation.
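The exact prompts used in the study are not reproduced in this section. The following is a minimal sketch of how zero-shot and few-shot prompts for this task could be assembled, assuming a hypothetical annotation record with `review`, `factor`, `cause`, and `recommendation` fields; the instruction wording and the example review are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class AnnotatedReview:
    """Hypothetical annotation record; the study's actual schema is not given."""
    review: str
    factor: str          # "completeness", "correctness", or "satisfaction"
    cause: str
    recommendation: str


INSTRUCTION = (
    "You are a usability expert. Classify the negative app review under one "
    "usability factor (completeness, correctness, or satisfaction), state the "
    "cause of the usability issue, and give one actionable recommendation "
    "for developers."
)


def build_prompt(review, examples=()):
    """Zero-shot prompt when `examples` is empty, few-shot otherwise."""
    lines = [INSTRUCTION, ""]
    for ex in examples:
        # Each worked example demonstrates the expected output format.
        lines += [
            f"Review: {ex.review}",
            f"Factor: {ex.factor}",
            f"Cause: {ex.cause}",
            f"Recommendation: {ex.recommendation}",
            "",
        ]
    # The model continues from "Factor:" for the new review.
    lines += [f"Review: {review}", "Factor:"]
    return "\n".join(lines)


shot = AnnotatedReview(
    review="I can't find any way to download my own photos.",
    factor="completeness",
    cause="There is no built-in option to save one's own posts.",
    recommendation="Add a 'Save to device' action to the post menu.",
)
print(build_prompt("The app freezes every time I upload a story.", [shot]))
```

Ending the prompt at `Factor:` nudges the model to answer in the same structured format as the examples, which also makes the output easier to parse and compare with expert references.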

5.3. Results of Fine-Tuning LLMs

This section analyzes the performance of the three LLMs (GPT, Gemini, and BLOOM) in generating actionable usability improvement recommendations from negative app reviews using a fine-tuning approach. As in the prompting experiments, the models were evaluated across three core usability factors (completeness, correctness, and satisfaction) using BLEU, ROUGE, and METEOR.
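The training-data format used for fine-tuning is not specified here. For GPT chat models fine-tuned through OpenAI’s API, training data is supplied as a JSON Lines file in which each line holds a `messages` array of system, user, and assistant turns; the sketch below assumes that layout. The record keys and the system prompt are hypothetical, and Gemini and BLOOM would require their own platform-specific formats.

```python
import json

SYSTEM_PROMPT = (
    "You are a usability expert who identifies the cause of a usability issue "
    "in a negative app review and recommends an actionable fix."
)


def to_chat_example(record):
    """Convert one (hypothetical) annotated review record into a chat-format example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Review: {record['review']}"},
            {
                # The expert annotation becomes the target the model learns to produce.
                "role": "assistant",
                "content": (
                    f"Factor: {record['factor']}\n"
                    f"Cause: {record['cause']}\n"
                    f"Recommendation: {record['recommendation']}"
                ),
            },
        ]
    }


def write_jsonl(records, path):
    """Write one JSON object per line (the JSON Lines convention)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(to_chat_example(record), ensure_ascii=False) + "\n")
```

Keeping the assistant turn in the same `Factor / Cause / Recommendation` structure as the prompts makes prompted and fine-tuned outputs directly comparable under the same metrics.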

The results are reported in Table 5 and visualized in Figure 7A–C, which show model-specific performance for each usability factor.

When fine-tuned, GPT again outperforms Gemini and BLOOM across all three usability factors, demonstrating a strong ability to generate accurate and contextually appropriate recommendations. For completeness-related issues (Figure 7A), GPT achieved the highest scores (BLEU 0.64, ROUGE 0.45, METEOR 0.89), reflecting its effectiveness in addressing gaps in application functionality. Gemini (0.04, 0.15, 0.35) did not improve on its prompting scores, whereas BLOOM (0.12, 0.28, 0.26) showed moderate gains from fine-tuning. For correctness-related concerns (Figure 7B), GPT again led with scores of 0.61, 0.44, and 0.88, indicating that it can interpret technical problems and propose reliable solutions, whereas Gemini (0.04, 0.17, 0.36) and BLOOM (0.12, 0.28, 0.25) remained comparatively weak. Similarly, for satisfaction-related issues (Figure 7C), GPT maintained top performance (BLEU 0.64, ROUGE 0.45, METEOR 0.88), effectively capturing user-experience and emotional aspects, while Gemini and BLOOM showed performance patterns similar to those in the other categories. Overall, these results confirm GPT’s robustness and adaptability when fine-tuned and underscore the need for further optimization of Gemini and BLOOM to address the full complexity of usability-related feedback.

The results in Table 8 indicate that the fine-tuned GPT model clearly outperforms both Gemini and BLOOM in generating high-quality, actionable usability recommendations across all core usability factors. GPT’s consistent BLEU, ROUGE, and METEOR scores suggest that it captures and responds effectively to the causes of user dissatisfaction. Even after fine-tuning, Gemini and BLOOM would require further optimization or larger, domain-specific datasets to reach performance comparable to GPT.

Figure 8 presents sample recommendations generated by the three fine-tuned LLMs (GPT, Gemini, and BLOOM) in response to a given negative user review, alongside the corresponding recommendation provided by a human expert to enhance usability.

This sample illustrates several points. GPT-4’s output closely mirrors the expert recommendation, showing high contextual alignment and completeness. In contrast, Gemini’s recommendation lacks specificity, while BLOOM’s output is partially relevant but omits key usability aspects. The side-by-side comparison highlights the benefit of fine-tuning for model accuracy while also underscoring the continued value of human oversight. Such comparisons support the feasibility of integrating LLMs as assistive agents in usability review workflows, particularly when the models are adapted to domain-specific tasks.

5.4. Comparison Between Prompting and Fine-Tuning Approaches

GPT-4 delivered strong results under both approaches. With prompting, it achieved METEOR scores of 0.90 and ROUGE scores of up to 0.80. Fine-tuning slightly improved BLEU (from 0.62 to 0.64 for completeness) but reduced ROUGE (from 0.80 to 0.45), suggesting a trade-off between n-gram precision (BLEU) and content coverage (ROUGE). Gemini performed weakly in both settings and did not benefit from fine-tuning: its BLEU and ROUGE scores for completeness and correctness in fact declined, ROUGE by more than 0.20, indicating limited sensitivity to usability context. BLOOM, while weak under prompting (BLEU 0.01), benefited from fine-tuning, reaching BLEU scores of 0.12 with modest gains in METEOR and ROUGE.
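The comparison above can be checked directly against the per-factor scores reported in Sections 5.2 and 5.3. The sketch computes fine-tuned minus prompted scores for every model and factor where both values are stated in the text; fine-tuned satisfaction scores for Gemini and BLOOM are not given numerically, so they are left out.

```python
# (BLEU, ROUGE, METEOR) as reported in Sections 5.2 (prompting) and 5.3 (fine-tuning).
PROMPTING = {
    ("GPT", "completeness"): (0.62, 0.80, 0.90),
    ("GPT", "correctness"): (0.60, 0.79, 0.90),
    ("GPT", "satisfaction"): (0.61, 0.80, 0.90),
    ("Gemini", "completeness"): (0.06, 0.38, 0.37),
    ("Gemini", "correctness"): (0.07, 0.39, 0.38),
    ("BLOOM", "completeness"): (0.01, 0.27, 0.22),
    ("BLOOM", "correctness"): (0.01, 0.28, 0.23),
}
FINE_TUNED = {
    ("GPT", "completeness"): (0.64, 0.45, 0.89),
    ("GPT", "correctness"): (0.61, 0.44, 0.88),
    ("GPT", "satisfaction"): (0.64, 0.45, 0.88),
    ("Gemini", "completeness"): (0.04, 0.15, 0.35),
    ("Gemini", "correctness"): (0.04, 0.17, 0.36),
    ("BLOOM", "completeness"): (0.12, 0.28, 0.26),
    ("BLOOM", "correctness"): (0.12, 0.28, 0.25),
}
METRICS = ("BLEU", "ROUGE", "METEOR")


def deltas(before, after):
    """Fine-tuned minus prompted score per metric, rounded to 2 decimals."""
    return {
        key: {m: round(after[key][i] - before[key][i], 2) for i, m in enumerate(METRICS)}
        for key in before
    }


changes = deltas(PROMPTING, FINE_TUNED)
for (model, factor), d in changes.items():
    print(f"{model:<7}{factor:<14}{d}")
```

The output shows a small BLEU gain and a ROUGE drop of 0.35 for GPT, declines on every metric for Gemini, and a BLEU gain of 0.11 for BLOOM with small or zero METEOR and ROUGE changes.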

In summary, GPT-4 excels under both prompting and fine-tuning, while BLOOM improves notably with fine-tuning. Prompting is efficient for high-performing models, whereas fine-tuning offers better alignment for some less capable LLMs, such as BLOOM.

To further clarify the trade-offs between prompting and fine-tuning, Table 9 summarizes their key strengths, limitations, and recommended usage scenarios based on the experimental results and practical implications of this study.

This comparative view suggests that prompting is ideal for high-performing models and scenarios requiring rapid deployment, while fine-tuning is more effective when working with lower-tier or open-source models and where task-specific adaptation is essential. Software teams can leverage this insight to balance accuracy, cost, and speed when integrating LLMs into usability engineering workflows.
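The guidance in this paragraph can be written down as a simple rule of thumb. The sketch below is a hypothetical encoding, not a procedure from the study: the `Scenario` fields are invented, and the first check reflects the annotation and compute requirements of fine-tuning that the study notes elsewhere.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical description of a team's situation."""
    model_is_high_performing: bool       # e.g. strong prompted results on a pilot set
    needs_rapid_deployment: bool
    model_is_open_source: bool
    needs_task_specific_adaptation: bool
    has_annotated_data_and_gpus: bool


def choose_adaptation(s: Scenario) -> str:
    """Return "prompting" or "fine-tuning" following the comparative view above."""
    if not s.has_annotated_data_and_gpus:
        return "prompting"      # fine-tuning is infeasible without labeled data and compute
    if s.model_is_high_performing and s.needs_rapid_deployment:
        return "prompting"      # strong models already perform well when prompted
    if s.model_is_open_source or not s.model_is_high_performing or s.needs_task_specific_adaptation:
        return "fine-tuning"    # weaker or open models benefit most from adaptation
    return "prompting"
```

The ordering of the checks is a design choice: feasibility is tested first, so the function never recommends fine-tuning to a team that lacks the data or hardware for it.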

5.5. Discussion

While prior studies such as Dai et al. [3], Sanner et al. [4], and Wang and Lim [11] have demonstrated the potential of large language models (LLMs) such as ChatGPT in recommendation tasks using zero-shot or few-shot prompting, they have focused primarily on traditional domains such as product and content recommendation. Dai et al. [3] assessed ChatGPT across ranking-based recommendation paradigms using prompt engineering and role-based context, while Sanner et al. [4] showed that LLMs can compete with cold-start recommenders through template-based prompting for item and language preferences. Similarly, Wang and Lim [11] explored zero-shot next-item recommendation by repurposing pre-trained LLMs to predict future user interactions without fine-tuning. In contrast, our study targets the software usability domain and goes beyond prompting by incorporating expert-annotated datasets and fine-tuning three distinct LLMs (GPT-4, Gemini, and BLOOM) to identify the causes of usability issues in negative app reviews and generate actionable, developer-oriented recommendations. Furthermore, our work contributes a deployable system and validates output quality through both automatic metrics and expert human evaluation, offering a more application-driven and domain-specific approach than the exploratory or generalized methodologies of prior work.

The results of this study provide evidence that LLMs, particularly GPT-4, can play a substantial role in usability engineering. Their ability to interpret negative app reviews and generate responses to them points to a new paradigm in AI-assisted software quality assurance. GPT-4’s strong performance across completeness, correctness, and satisfaction demonstrates its capacity to generate actionable, contextually sound recommendations that align with developer expectations and user-centered design. These findings also add to a growing body of research supporting the integration of LLMs into human-in-the-loop software engineering processes. Notably, the success of prompting with a high-performing model such as GPT-4 suggests that lightweight integration of LLMs can provide value without resource-intensive fine-tuning. For BLOOM, by contrast, fine-tuning considerably improved output quality, emphasizing its importance for lower-tier or general-purpose models, although Gemini did not show comparable gains.

From a software engineering perspective, this framework aligns with the principles of continuous feedback, agile development, and usability testing. LLMs can augment traditional usability evaluation by providing scalable, automated interpretation of user sentiment, which is particularly valuable in early prototyping or post-deployment stages. Furthermore, integrating the framework into a web-based tool bridges the gap between research and practice, giving software teams immediate insight into usability bottlenecks. However, LLMs are not yet replacements for human usability experts: their outputs can be inconsistent without domain adaptation and expert verification. Their best use is therefore as intelligent assistants that augment, rather than replace, human judgment in the software development lifecycle.

While LLMs substantially streamline the analysis of negative user reviews and the generation of usability recommendations, their integration should follow a human-in-the-loop (HITL) approach. In this paradigm, the AI system serves as a first-pass analyzer, transforming large volumes of unstructured feedback into structured, actionable suggestions. These suggestions are then reviewed, validated, and refined by usability experts, product managers, or designers before implementation. This process ensures the following:

Contextual Relevance: Human experts can assess whether a recommendation fits the app’s goals, user base, and design constraints.

Feasibility Check: Not all AI-generated suggestions are technically or practically feasible; expert review ensures realistic decision-making.

Bias Mitigation: Human oversight helps identify and correct possible biases or misinterpretations produced by the model.

Iterative Improvement: Feedback from experts can be used to fine-tune the model or refine prompting strategies over time.

Therefore, LLMs should not be seen as autonomous decision-makers but rather as collaborative partners that augment human judgment. This hybrid workflow balances efficiency and accuracy, supporting more robust, ethical, and user-centered software development practices.
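The HITL workflow described above can be sketched as a small review queue. This is an illustrative design, not the study’s Review Analyzer Tool: class and method names are hypothetical. LLM suggestions enter as pending; an expert approves, revises, or rejects each one (covering the relevance, feasibility, and bias checks); and every decision is logged so it can later inform prompt refinement or fine-tuning.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REVISED = "revised"
    REJECTED = "rejected"


@dataclass
class Suggestion:
    review: str
    recommendation: str          # first-pass output from the LLM
    status: Status = Status.PENDING
    final_text: str = ""         # what will actually be implemented
    reviewer_note: str = ""      # e.g. feasibility or bias concerns


@dataclass
class ReviewQueue:
    """Holds LLM suggestions until a human expert approves, revises, or rejects them."""
    items: list = field(default_factory=list)
    feedback_log: list = field(default_factory=list)  # reusable for prompt refinement or fine-tuning

    def submit(self, review: str, recommendation: str) -> int:
        self.items.append(Suggestion(review, recommendation))
        return len(self.items) - 1

    def decide(self, index: int, status: Status, final_text: str = "", note: str = "") -> None:
        item = self.items[index]
        if item.status is not Status.PENDING:
            raise ValueError("suggestion has already been reviewed")
        if status is Status.PENDING:
            raise ValueError("a decision must approve, revise, or reject")
        if status is Status.REVISED and not final_text:
            raise ValueError("a revision needs the expert's rewritten text")
        item.status, item.reviewer_note = status, note
        if status is Status.APPROVED:
            item.final_text = item.recommendation
        elif status is Status.REVISED:
            item.final_text = final_text
        # Every expert decision is logged so it can feed later model improvement.
        self.feedback_log.append((item.review, item.recommendation, status.value, item.final_text, note))

    def ready_for_implementation(self) -> list:
        return [s.final_text for s in self.items if s.status in (Status.APPROVED, Status.REVISED)]


queue = ReviewQueue()
a = queue.submit("Search never finds my friends.", "Add fuzzy matching to user search.")
b = queue.submit("Too many ads.", "Remove all ads from the app.")
queue.decide(a, Status.APPROVED)
queue.decide(b, Status.REVISED, "Cap ad frequency in the feed.", note="removing ads is not feasible")
print(queue.ready_for_implementation())
```

Only expert-approved or expert-revised text reaches the implementation list, so the model never acts as an autonomous decision-maker.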

These insights pave the way for future research into hybrid AI–human usability workflows and highlight the importance of maintaining expert involvement in AI-assisted design processes.

5.6. Limitations

This study presents several limitations that should be acknowledged. First, the reliance on automated evaluation metrics such as BLEU, ROUGE, and METEOR, while useful for assessing lexical and semantic alignment, may not fully capture the contextual relevance and practical feasibility of the generated recommendations. Human validation is essential for ensuring the quality of outputs. Additionally, the manual annotation process for creating the dataset demanded significant time and expertise from domain specialists, which may pose challenges for scalability and rapid adoption in different contexts.

Moreover, while GPT-4 demonstrated consistent performance, the sensitivity of models like BLOOM and Gemini to prompt formulation and training data quality limits their reproducibility and generalization across varied usability contexts. Fine-tuning these large-scale language models requires substantial computational resources, which may not be accessible to smaller research teams or software developers, further complicating implementation.

These limitations underscore the need for further research and development to enhance model robustness, expand the applicability of the framework, and incorporate more comprehensive evaluation methods to ensure practical usability in diverse scenarios.
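The first limitation noted above, that lexical metrics can miss contextual relevance, is easy to demonstrate. In the sketch below, the sentences are invented for illustration: a valid paraphrase of a reference recommendation scores zero on ROUGE-1, while a recommendation with the opposite intent scores 0.7 because it reuses most of the reference’s words.

```python
from collections import Counter


def rouge1_f1(candidate, reference):
    """ROUGE-1 F1: unigram overlap with lowercase whitespace tokenization."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "add an offline mode so users can view saved posts"
# Same intent, different wording: a valid recommendation.
paraphrase = "let people browse bookmarked content without internet access"
# Nearly the same words, opposite intent: an invalid recommendation.
contradiction = "remove the offline mode so users cannot view saved posts"

print(rouge1_f1(paraphrase, reference))               # 0.0
print(round(rouge1_f1(contradiction, reference), 2))  # 0.7
```

Cases like these are why human validation remains necessary alongside BLEU, ROUGE, and METEOR.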

6. Challenges

Despite the strong performance of GPT-4 and the feasibility of the proposed framework, several technical, practical, and methodological challenges remain. These challenges encompass data-related limitations, model usability concerns, and broader issues of scalability and generalization, as follows:

Manual Annotation Burden: Creating a high-quality annotated dataset demanded considerable time and expertise from domain specialists, posing challenges to scalability and rapid adoption.

Model Sensitivity: While GPT-4 delivered consistently strong results, BLOOM and Gemini exhibited greater sensitivity to prompt formulation and training data quality, limiting reproducibility and generalizability.

Computational Demands: Fine-tuning large-scale language models required substantial computational resources, including high-end GPUs, which may not be accessible to smaller research groups or software development teams.

Prompt Engineering Expertise: Designing effective prompts—especially in zero-shot and few-shot settings—requires in-depth understanding of model behavior and natural language generation, making it challenging for non-expert users to achieve reliable results.

Evaluation Limitations: While automated metrics such as BLEU, ROUGE, and METEOR provide valuable insights into lexical and semantic alignment, they fall short in capturing contextual relevance and implementation feasibility. Human validation remains indispensable.

Limited Tool Generalization: The current tool implementation focuses solely on mobile app reviews. Adapting it to other domains or platforms would require retraining models and redesigning certain interface and workflow components.

7. Conclusions

This study proposed a large language model (LLM)-based framework that uses GPT-4, Gemini, and BLOOM to automatically extract actionable usability recommendations from negative app reviews. Using both prompting (zero-shot and few-shot) and fine-tuning strategies, the framework systematically maps unstructured user feedback to three key usability dimensions: correctness, completeness, and satisfaction. On a manually annotated dataset of 309 Instagram reviews, fine-tuning substantially improved the weaker open-source model (BLOOM), while GPT-4 performed strongly under both strategies. Among the models evaluated, GPT-4 achieved the highest scores on every metric, with BLEU up to 0.64 (fine-tuned) and ROUGE up to 0.80 and METEOR up to 0.90 (prompting), confirming its strong capacity for contextual understanding and recommendation generation. In addition to the quantitative evaluation, expert human assessments validated the clarity and usefulness of the outputs, particularly for developer-oriented usability improvements. As a practical contribution, the study introduced a web-based Review Analyzer Tool that integrates the fine-tuned models to support real-time analysis of negative reviews. Overall, the findings underscore the potential of LLMs, especially GPT-4, as intelligent, scalable tools for enhancing usability evaluation and supporting user-centered software design.

Building on the promising outcomes of this study, several directions can be pursued to further enhance the proposed framework:

Expand the framework to more diverse applications beyond mobile apps to provide broader insights into user experience across digital platforms.

Explore more advanced and hybrid LLM architectures.

Enhance fine-tuning strategies by incorporating advanced techniques such as Reinforcement Learning from Human Feedback (RLHF).