1. Introduction

Equipping first responders with the training needed to perform in dangerous and dynamic situations is an important aspect of public safety. The unpredictable nature of contemporary emergencies, such as terrorist attacks and large-scale public disorder, requires personnel to possess not only procedural knowledge but also context-aware decision-making abilities. Traditional training methods do not consistently provide stable, scalable, and secure environments. This is largely due to the constraints of physical training environments, which include logistical costs, safety risks, and limited adaptability.

These constraints, together with recent technological advances, have created a growing need for virtual simulation technologies. This need can be met through immersive technologies such as Virtual Reality (VR), Augmented Reality (AR), and Digital Twins (DTs), which open new possibilities for bridging theoretical education and practical readiness and for enhancing the accessibility and variability of training. These technologies now make it feasible to establish interactive, context-sensitive learning environments that realistically simulate the conditions and problems of actual operating environments. Despite the increased availability of such technologies, there is still a shortage of methodologies to guide their use in the specialized context of first responder training. Current solutions tend to be domain-specific, scenario-bound, or technologically fragmented.

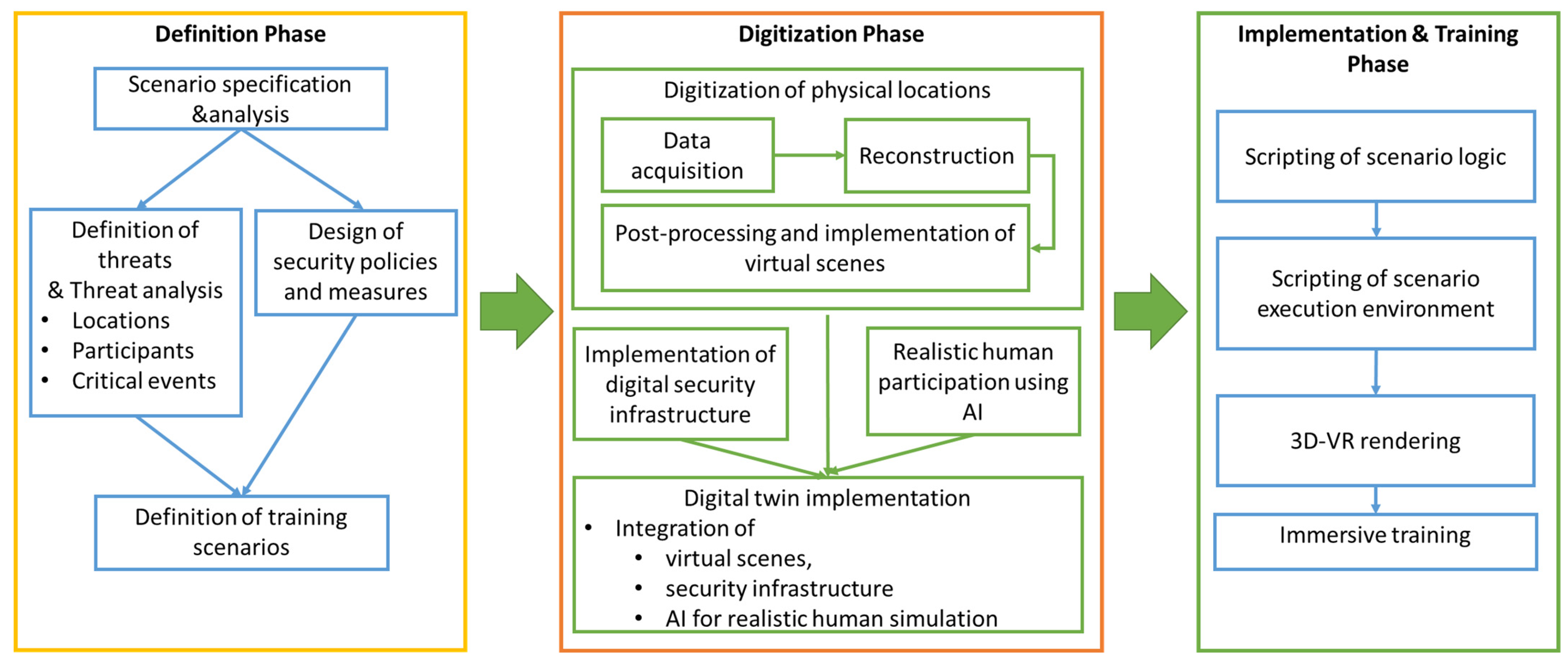

In this paper, we propose an overarching, adaptable, and reproducible process for designing and deploying VR-based DTs. The process is divided into three distinct phases: (1) Definition, in which threats are defined, security policy is established, and training objectives are identified; (2) Digitization, in which the physical space is digitally reconstructed and integrated with digital infrastructure; and (3) Implementation & Training, in which interactive simulations are authored, rendered, and executed to facilitate immersive learning.

The relevance of the approach is illustrated using a detailed case study of a simulated terrorist attack at a major public gathering in an urban European setting.

2. Background and Related Work

The training of first responders is a critical component of public safety, requiring the development of skills that are both complex and context-dependent. Traditional training methods, such as classroom instruction and live drills, often fall short of providing realistic, scalable, and repeatable scenarios [1]. Recent advancements in VR, AR, and DT technology offer promising solutions to these challenges, enabling immersive, situated learning environments that closely mimic real-world conditions.

2.1. Digital Twins in Training and Simulation

DTs, defined as virtual replicas of physical systems or environments, have gained traction across various industries, including manufacturing, healthcare, and urban planning [2]. According to [3], “A DT is a system-of-systems which goes far beyond the traditional computer-based simulations and analysis. It is a replication of all the elements, processes, dynamics, and firmware of a physical system into a digital counterpart”.

The concept of a DT originated in the aerospace industry, where DTs were used to replicate and monitor the behavior of aircraft components [4]. The technology has since expanded to a wide variety of applications, from predictive maintenance in industry [5] to personalized medicine [6]. In training, DTs provide a dynamic and interactive space for simulating real-world scenarios. For example, in industrial settings, DTs have been used to train operators in equipment maintenance and emergency response, with significant improvements in skill acquisition and retention [7]. Their ability to create highly accurate and dynamic virtual environments makes DTs well suited to training first responders, for whom situational awareness and decision-making under pressure are paramount [8].

2.2. VR/AR-Based Training for First Responders

VR has emerged as a powerful tool for immersive training, offering a platform for the safe and controlled rehearsal of dangerous scenarios. VR-based training applications have existed since the 1990s, and the technology has matured considerably since then, with high-end VR systems offering high-fidelity graphics, realistic haptic feedback, and seamless interactivity [9,10]. Studies have shown that VR-based training enhances spatial awareness, process understanding, and stress management among first responders [11]. For instance, VR simulations have been successfully employed to train firefighters in building navigation and hazard recognition, yielding better performance than traditional methods [12,13]. Similarly, VR has been used to teach paramedics emergency medical procedures, with trainees demonstrating greater confidence and proficiency [14]. The multimodal nature of VR, which combines visual, auditory, and haptic stimulation, markedly increases the credibility and effectiveness of training sessions [15].

AR has also attracted increasing attention as a complementary technology to VR for first responder training. Unlike VR, which fully immerses users in a virtual world, AR superimposes digital information on the real world, allowing trainees to interact with virtual and physical objects simultaneously [16]. This makes AR especially useful for hands-on training setups and real-time decision-making procedures. For example, AR has been used to guide firefighters through smoke-filled conditions by overlaying directional information on their helmets [17]. Similarly, AR systems have been developed to assist paramedics in performing complex medical procedures by providing step-by-step visual instructions [18]. Its portability and integration with the real world make AR a valuable tool for first responders who need to train in diverse and unpredictable environments [19].

2.3. Integration of VR, AR, and Digital Twins

The integration of VR, AR, and DT technologies represents a significant advancement for practical training. By integrating VR and AR interfaces into DT spaces, trainees can interact with highly realistic and context-rich scenarios. This approach has been applied in healthcare, where hospital settings have been digitally twinned in VR to train medical staff for emergency response [20]. Similarly, VR-powered DTs have been applied in disaster management to simulate natural disasters, giving first responders experience in coordinating mass rescues [21]. The convergence of VR, AR, and DTs also supports the integration of real-time data, allowing trainees to respond to dynamic changes in the environment [22,23,24]. For example, in factory training, DTs can simulate equipment failures while AR provides real-time guidance for troubleshooting and repair [25].

2.4. AI-Driven Simulations and Adaptive Training

Artificial intelligence (AI) is increasingly being incorporated into VR and AR training platforms to build highly individualized and adaptive learning experiences. AI algorithms can measure trainee performance in real time and tailor the difficulty and complexity of scenarios to each individual’s skills [26]. For example, AI-powered VR simulations have been designed to train police officers in de-escalation techniques, with the system automatically adapting the virtual characters’ behavior to the trainee’s behavior [27]. Similarly, AI-powered AR systems have been used to provide real-time feedback to paramedics during training exercises, helping them refine their skills and improve decision-making [28]. Applying AI in training programs not only improves the quality of simulations but also generates valuable data for assessing trainee progress and identifying areas requiring improvement [29].

2.5. Haptic Feedback and Multimodal Interaction

Haptic feedback systems, which simulate tactile sensations for the user, are important for some forms of immersive training. By simulating touch, haptic devices enable trainees to interact with virtual objects more realistically and naturally [30]. One example is the use of haptic gloves in VR firefighter training to simulate equipment handling and movement through rubble [31]. Likewise, haptic feedback has been incorporated into AR systems to provide tactile assistance in procedures such as intravenous (IV) line insertion and cardiopulmonary resuscitation (CPR) [32]. Combining haptic feedback with visual and auditory stimuli creates a fully multimodal training experience, increasing both participant engagement and learning outcomes [33].

2.6. Collaborative VR/AR Environments

Collaborative VR and AR environments, in which multiple users work together in the same virtual or augmented space, are also finding new applications in first responder training. These systems enable groups of individuals to practice coordination and communication in complex scenarios, e.g., responding to a large disaster or negotiating a hostage situation [34]. For example, collaborative VR-based platforms have been developed to train emergency response units in coordinating search and rescue operations [35]. Similarly, AR technology has been used to facilitate real-time communication between command centers and field responders, improving decision-making and situational awareness. The ability to train collaboratively is particularly critical for first responders, who typically operate in teams and depend on effective communication to accomplish their tasks.

2.7. Contribution

Despite growing interest in the application of immersive technologies for training, this study identifies significant gaps in the existing literature. A key gap is the absence of generalizable approaches for implementing VR-based situated Digital Twins for first responder training. The approach proposed in this work combines scenario definition, 3D digitization, locomotion-driven Virtual Human (VH) behavior, and immersive VR-based rendering into an innovative end-to-end training pipeline. Although numerous studies have examined these elements separately, e.g., the digitization of spaces to facilitate virtual simulation [36] or the simulation of virtual agents’ movement [37], little current research illustrates how to integrate these elements into an adaptive methodology applicable to a broad variety of scenarios.

This paper addresses this gap by proposing and demonstrating an integrated approach that enables end-to-end scenario generation, from threat and policy identification to physical environment digitization and the incorporation of simulated human behavior into fully rendered VR training environments. The approach is modular and flexible, enabling potential reuse in a broad spectrum of training contexts beyond the illustrative use case presented here.

Nevertheless, validation and assessment techniques for immersive training environments remain insufficiently standardized. While this project presents a structured validation process to help ensure that all environments operate as planned, the field still lacks unified metrics and validation procedures. To address this gap, we plan a user-based evaluation, in addition to the performed validation, to assess not the methodology itself but the resulting training scenarios. The evaluation framework and its results will be reported in a separate publication. In this work, we present the validation process and outline our evaluation plans.

In summary, this study makes important contributions to the methodological and technical state of the art of DT-based immersive training systems. The remainder of this paper focuses on establishing the methodology and providing guidance for its replication across various contexts, with the aim of streamlining the design and implementation of future training scenarios.

3. Proposed Methodology

The methodology proposed by this work is a structured framework for developing immersive security training solutions through three phases: Definition, Digitization, and Implementation & Training. This methodology is designed to be generic enough to be broadly applicable across various security scenarios, enabling the creation of realistic virtual training environments.

In the Definition Phase, the security context of the training scenario is established. This involves analyzing potential threats and identifying key locations, relevant participants, and critical events that may unfold. Additionally, appropriate security policies and measures are formulated to address these risks. The outcome of this initial phase is a structured training scenario definition, which ensures that the virtual environment to be implemented reflects realistic security challenges.

Next, the Digitization Phase transforms physical spaces into detailed digital counterparts. The process begins with data acquisition, using technologies such as 3D scanning or photogrammetry to capture spatial and structural data. The acquired data are then used for 3D reconstruction, which yields an accurate virtual replica of the physical space. Post-processing techniques further refine the environment, increasing its visual fidelity and interactivity. This phase also covers the digital security infrastructure, in which virtualized monitoring systems, access controls, and other security features are integrated. To enhance realism, human behavior is simulated through virtual human locomotion, producing realistic interactions and responses within the environment. Together, these features form a DT that accurately mirrors both the physical environment and its security dynamics.

The Implementation & Training Phase uses the resulting virtual environment to build interactive training scenarios. This involves scripting scenario logic to define event sequences and expected security responses. A scenario execution environment is established to support interaction and system responsiveness. The approach leverages real-time 3D rendering and VR to provide an immersive experience, allowing users to interact with the simulation dynamically. Trainees can move around the virtual world, interact with security measures, and react to changing situations, thereby developing their situational awareness and decision-making capabilities in a risk-free and controlled setting.
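As an illustration of what scripting scenario logic to define event sequences and expected security responses can involve, the following minimal Python sketch models a scenario as an ordered list of events, each with a trigger condition and an expected response. It is not the authors’ Unity implementation; the class names, the time-based `t_min` state key (minutes relative to the opening ceremony), and the response strings are hypothetical, loosely modeled on Events #2 and #3 of the use case.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical world state, e.g. {"t_min": minutes relative to the opening ceremony}.
State = Dict[str, float]


@dataclass
class ScenarioEvent:
    name: str
    trigger: Callable[[State], bool]  # condition that must hold for the event to fire
    expected_response: str            # security response the trainee is expected to perform


@dataclass
class ScenarioScript:
    events: List[ScenarioEvent]
    next_index: int = 0               # position of the next event in the sequence
    log: List[str] = field(default_factory=list)

    def step(self, state: State) -> List[str]:
        """Fire events strictly in order; an event fires only after all earlier ones have."""
        fired = []
        while self.next_index < len(self.events):
            event = self.events[self.next_index]
            if not event.trigger(state):
                break  # later events wait until this one has fired
            fired.append(event.name)
            self.log.append(f"{event.name}: expect '{event.expected_response}'")
            self.next_index += 1
        return fired

    @property
    def finished(self) -> bool:
        return self.next_index == len(self.events)


script = ScenarioScript([
    ScenarioEvent("suspect_vehicle", lambda s: s["t_min"] >= -300, "intercept and stop the vehicle"),
    ScenarioEvent("suspect_arrest", lambda s: s["t_min"] >= -60, "dispatch ground special forces"),
])
```

Calling `script.step({"t_min": -300})` fires the vehicle event, and a later call with `t_min` set to -30 fires the arrest event. Enforcing strict order prevents a later event from firing before its predecessors; a production scenario engine might additionally support parallel or branching events.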

An overview of the proposed methodology is graphically illustrated in Figure 1. This methodology can be applied across a variety of security training domains, such as emergency response, infrastructure protection, law enforcement training, and corporate security training. Through the application of digital twins, virtual human interactions, and immersive VR technologies, the methodology supports adaptable and scalable training solutions configured to meet the unique security requirements in a variety of domains.

Of course, the applicability of the methodology cannot be validated without use cases demonstrating its application. The following section presents a use case implemented on top of the proposed methodology. To foster replicability, technical details are also provided, allowing readers, to a certain extent, to follow the implementation steps when producing custom training scenarios for use cases beyond the context of this research work.

4. Use Case

In this section, we present a use case that implements a training scenario based on a hypothetical event. The use case considers the physical spaces that will host the “Street Food Festival”, an annual event that attracts thousands of visitors and takes place in a different European capital every year. Local and international food exhibitors, artists, music bands, and other entertainers take part. In 2025, the festival will take place in Athens, which in this scenario holds the title of European Capital of Culture, giving the event additional significance. The event will cover a large open area of the capital, including squares, streets, and parks. The opening ceremony will be held concurrently in the amphitheater of a local university and in all the urban spaces allocated for the festival; high attendance is expected, including local officials, Members of Parliament, and Members of the European Parliament.

4.1. Definition Phase

4.1.1. Scenario Specification

In this use case, the scenario concerns intelligence received by the local anti-terrorism authorities that an international terrorist organization, which has carried out attacks in several European capitals over the last decade, is planning a large-scale attack during the opening of the event. The threat is expected to take the form of simultaneous attacks at different points in the event area.

4.1.2. Definition of Threats

According to the same sources, the terrorists (perpetrators) will launch the attack when all the official guests are on the stage set up in the central square of the event area. The attack will involve firearms and the detonation of a booby-trapped vehicle. The police have obtained information that the vehicle will be prepared in a warehouse located in a deserted area near Athens.

4.1.3. Design of Security Policies and Measures

The police adopt enhanced security measures and inspect the event venue to identify vulnerabilities that the terrorists could exploit. Based on the analysis of the event space, the following measures are identified for inclusion in the security policies:

Surveillance of two abandoned buildings near the event venue that could be used by the terrorist organization; both buildings should be thoroughly searched and secured within a perimeter;

Agreement with the prosecutorial authorities to use drones to monitor the rural area where the warehouse is located, and establishment of an operational center for the real-time coordination of the police forces and of the data provided by the drones and cameras;

Installation of fixed cameras at critical points to monitor the entrances to the event area, as well as the outdoor space around the abandoned buildings;

Closure of the roads around the square to traffic;

Prohibition of parking near the event location.

At the same time, the modus operandi of the terrorist organization is analyzed using data from its previous attacks, as well as from terrorist attacks at similar events in general.

4.1.4. Definition of Training Scenarios

Based on the above information, the planned security measures, and the analysis of the organization’s modus operandi, the police formulated the following training scenarios, which should ideally be rehearsed under virtual training conditions:

Event #1. Scan the building where the ceremony will be held for malicious material;

Event #2. Suspect vehicle detection (five hours before the opening ceremony). A vehicle leaves the warehouse and is spotted by the drone. A few kilometers further on, it is chased by police vehicles and stopped. The vehicle is found to be rigged with explosives and intended to be placed near the event area. The explosive mechanism is located and defused. The driver is arrested, and further investigations take place at the warehouse;

Event #3. Suspect arrest (an hour before the event starts). An hour before the opening of the event, the cameras detect human presence at one of the abandoned buildings cordoned off by the police. The person has entered the restricted perimeter and is heading towards the building carrying a large backpack, and the operations center estimates that they may be carrying weapons. The person is arrested by the ground special forces;

Event #4. Fight and panic detection (a few minutes before the end of the event). The fixed cameras detect a fight near the west entrance of the event, immediately followed by mass panic and the rapid departure of people through that entrance. The operational center is notified, and the ground police forces arrest a person who has stabbed two of the attendees.

4.2. Digitization Phase

The digitization phase concerns the implementation of the DTs used in the above-mentioned training scenarios.

4.2.1. Digitization of Physical Locations

Data Acquisition

Data acquisition took place at the site where police training is conducted. This site serves as a parametric installation in which various training setups can be simulated. The training area was selected because it allows both virtual and physical training exercises to be performed in the same space, in its physical and digital versions.

Several flights were needed to capture the outdoor 3D models. The maximum depth of the scanned scene in our use case was approximately 80–100 m from the UAV, resulting from the altitude selected for most flights (30 m) based on the UAV specifications. For more challenging locations within the scene, we also flew at a lower altitude of 10–15 m under manual control of the UAV. The spatial precision achieved using a mixture of medium-altitude flights and manual low-altitude flights ranges from 2 to 5 cm, depending on the flight height and the overlap between images (minimum 70% frontal and side overlap). Most flights were conducted autonomously via the Pix4Dcapture [38] app in double-grid mode. We selected Pix4Dcapture and Pix4Dmapper [39] due to their robust integration with drone photogrammetry workflows and their capacity to deliver high-resolution 3D reconstructions optimized for VR experiences. Pix4Dcapture provides automated mission planning using double-grid paths, ensuring uniform coverage and minimizing data gaps. Pix4Dmapper offers efficient reconstruction, dense point cloud generation, and mesh creation with texture mapping, which are essential for creating immersive and realistic virtual environments [40]. Compared to other photogrammetry tools such as Agisoft Metashape [41] or RealityCapture [42], Pix4D provides streamlined UAV compatibility, real-time processing previews, and cloud deployment options, which are advantageous in time-sensitive public safety contexts [8].
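The dependence of spatial precision on flight height can be made concrete with the standard ground sampling distance (GSD) relation for a nadir-pointing pinhole camera, GSD = (sensor width × altitude) / (focal length × image width). The sketch below assumes hypothetical camera parameters (a 13.2 mm-wide sensor, 8.8 mm focal length, 5472 × 3648 px images), since the UAV camera is not specified here. GSD is only a lower bound on resolvable detail; the reported 2–5 cm precision additionally depends on image overlap and processing.

```python
def ground_sampling_distance_cm(sensor_width_mm: float, focal_length_mm: float,
                                image_width_px: int, altitude_m: float) -> float:
    """GSD in cm/pixel for a nadir image: ground size of one pixel at the given altitude."""
    # Altitude is converted from m to cm; sensor width and focal length share mm units.
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


def image_footprint_m(gsd_cm: float, pixels: int) -> float:
    """Ground distance (m) covered by `pixels` pixels along one image axis."""
    return gsd_cm * pixels / 100.0


# Hypothetical camera parameters (not taken from the text).
SENSOR_W_MM, FOCAL_MM, IMG_W_PX, IMG_H_PX = 13.2, 8.8, 5472, 3648

if __name__ == "__main__":
    for altitude in (10.0, 15.0, 30.0):
        gsd = ground_sampling_distance_cm(SENSOR_W_MM, FOCAL_MM, IMG_W_PX, altitude)
        width = image_footprint_m(gsd, IMG_W_PX)
        print(f"{altitude:>5.1f} m: GSD {gsd:.2f} cm/px, footprint width {width:.1f} m")
```

Because GSD scales linearly with altitude, descending from 30 m to 10–15 m reduces both the GSD and the image footprint by a factor of two to three, which is why the low-altitude manual flights can recover finer detail in difficult areas, at the cost of more images per unit area.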

In addition, some manually piloted flights were needed to avoid obstacles, so a lower-altitude flight was conducted with the same application. Low-altitude flights are intended to cover parts of the space with physical occlusions that require a special photographic setup. After the data were collected, they were loaded into the photogrammetry software: Pix4Dmapper [39] was used for datasets with a small number of images, and Pix4Dmatic [43] for datasets with a large number of images. The flight path followed a grid pattern, while a second grid, perpendicular to the first, was used for increased reconstruction robustness (see Figure 2).
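A double-grid mission of this kind can be sketched as two perpendicular lawnmower passes whose line spacing follows from the image footprint and the side overlap, spacing = footprint × (1 − overlap). This is a simplified planar illustration of the idea, not Pix4Dcapture’s actual planning algorithm; the function names are hypothetical, and the area is assumed to be an axis-aligned rectangle in local metric coordinates.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def line_spacing_m(footprint_m: float, overlap: float) -> float:
    """Distance between adjacent flight lines for a given overlap fraction (0.7 = 70%)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


def serpentine(width_m: float, height_m: float, spacing_m: float) -> List[Point]:
    """Lawnmower pass over [0, width] x [0, height]: lines parallel to the y-axis,
    flown in alternating directions, with the last line clamped to the boundary."""
    n_lines = math.ceil(width_m / spacing_m - 1e-9) + 1  # tolerance avoids a duplicate edge line
    waypoints: List[Point] = []
    for i in range(n_lines):
        x = min(i * spacing_m, width_m)
        start, end = (0.0, height_m) if i % 2 == 0 else (height_m, 0.0)
        waypoints.extend([(x, start), (x, end)])
    return waypoints


def double_grid(width_m: float, height_m: float, spacing_m: float) -> List[Point]:
    """First grid along y, then a perpendicular grid along x over the same area."""
    first = serpentine(width_m, height_m, spacing_m)
    # Plan on the transposed rectangle, then swap the coordinates back.
    second = [(x, y) for (y, x) in serpentine(height_m, width_m, spacing_m)]
    return first + second
```

For instance, a hypothetical 45 m image footprint with the 70% minimum side overlap gives a line spacing of 13.5 m. The second, perpendicular grid photographs every surface from an additional heading, which is the source of the extra robustness.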

A limitation of this approach is that some segments of interest in a scene, such as locations below the eaves of buildings, may not be visible from aerial views. This is partially compensated for by low-altitude manual scans. Moreover, owing to the inherent limitations of the method, the achieved resolution may be sufficient for display purposes but requires further enhancement through post-processing to yield an environment that can be experienced in VR and 3D.

A total of 1692 photos were taken: 1000 from high altitude and 692 from the low-altitude and manual flights. Three high-altitude flights, one low-altitude flight, and one manual flight were conducted, with roughly 340 photos per flight on average. Indicative sequential photos of the site are presented in Figure 3.

Reconstruction

Processing the photogrammetry outputs yields a 3D model with a fairly good structure and very good textures. Several views of the reconstruction are presented in Figure 4. As shown in this figure, the reconstruction has enough detail to present the scene from a distance.

Moving closer and enabling shading reveals several flaws in the reconstruction, which are also visible when rendering the reconstructed mesh without textures. Examples of such flaws can be seen in Figure 5. Thus, a critical step in preparing the virtual scene for VR-based simulation is the refinement of the model through the removal or correction of problematic elements. Raw photogrammetric outputs often contain visual noise, redundant geometry, or incomplete surfaces, particularly in areas with occlusions, reflective materials, or complex architectural features such as overhangs and narrow gaps. These artifacts can impair the realism, usability, and navigability of the virtual environment and may hinder the effective execution of training scenarios.

The simplest way to enhance the quality of the reconstruction is to segment and clean the reconstruction and, where possible, replace low-quality reconstructed artifacts with higher-quality 3D models. This method works exceptionally well for artifacts of no historical, archaeological, or scientific value, such as the containers in our case. Cutting out all the containers and replacing them with 3D models is very straightforward and requires fewer resources than manually remodeling the environment. The same holds for the basketball court, where numerous errors exist on the fence and the court itself.

Figure 6 presents the various segments created from the scene and how these were cleaned using a simple height extraction operator, i.e., cutting off all content over a specific height in the model. The resulting segments are merged again to proceed with the post-processing operation.
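The height extraction operator can be sketched on an indexed triangle mesh: every triangle with a vertex above a threshold is discarded, and vertices that are no longer referenced are removed and reindexed. The text does not specify whether triangles crossing the cut height are clipped or dropped; this hypothetical Python sketch drops them, and it assumes a Z-up coordinate system by default (pass `up_axis=1` for Y-up data such as Unity scenes).

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def crop_above_height(vertices: Sequence[Vertex], faces: Sequence[Face],
                      max_height: float, up_axis: int = 2) -> Tuple[List[Vertex], List[Face]]:
    """Remove all triangles with any vertex above max_height, then compact the vertex list."""
    # Keep a face only if all three of its vertices lie at or below the cut height.
    kept_faces = [f for f in faces if all(vertices[i][up_axis] <= max_height for i in f)]
    # Vertices still referenced by a kept face, in their original order.
    used = sorted({i for f in kept_faces for i in f})
    remap = {old: new for new, old in enumerate(used)}
    new_vertices = [vertices[i] for i in used]
    new_faces = [tuple(remap[i] for i in f) for f in kept_faces]
    return new_vertices, new_faces
```

Applied to each segment separately, the operator strips floating noise and tall, poorly reconstructed structures while keeping the ground surface intact, after which the cleaned segments can be merged again.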

The main terrain of the DT is created by remerging the cut segments and then enhancing it with 3D objects. In this use case, a shipping container model was edited to include custom windows and a door and used to replace the poorly reconstructed original structures. Furthermore, the basketball court was replaced with 3D models, and the location of the food festival, as indicated by the scenario, was modeled. The resulting scene is presented in Figure 7.

For the indoor part of the event, an amphitheater DT was employed in this scenario, as it also illustrates the option of exploiting ready-to-use components when executing the proposed methodology. An overview of the amphitheater used in the scenario is presented in Figure 8.

Up to this point, the methodology is replicable but must be applied anew in each use case to generate the virtual environment. From this point onward, the proposed methodology systematizes the implementation by providing reusable components.

Justification for the Selection of Digitization Technology

The selection of photogrammetry over alternative reconstruction methods such as LiDAR or 360° panoramic imaging was driven by a trade-off between spatial accuracy, scene coverage, operational efficiency, and cost. UAV-based photogrammetry provides sufficient accuracy (2–5 cm per pixel) for immersive VR applications and allows for rapid acquisition of large-scale environments in a single day. Multiple flights at varying altitudes and angles ensure robust reconstruction, even in occluded or structurally complex areas.

Although LiDAR scanning can achieve sub-centimeter accuracy and better geometric fidelity in occluded or vegetated areas, its deployment in the field is often constrained by high equipment and processing costs, logistical overhead (especially when scanning from multiple positions), and the need for additional post-processing to enhance poor-quality textures. As LiDAR outputs are typically low in color fidelity, the preparation of photorealistic training environments requires the overlay of supplementary texture data or significant manual editing.

On the other end of the spectrum, 360° video and panoramic imaging techniques, while lightweight and efficient for generating navigable environments, lack depth information and do not support metrically accurate 3D reconstructions. These approaches are suitable for passive exploration or training that does not require object interaction or spatial measurement, but are suboptimal for dynamic and interactive VR-based simulations. Furthermore, they rely on specialized cameras and proprietary software platforms, limiting their flexibility and generalizability in custom simulation pipelines.

In this use case, UAV-based photogrammetry offered the best balance between fidelity, scalability, and practicality. This choice, however, is not a constraint imposed by our methodology, which applies to any digitization technology. Careful planning and selection of the digitization technology is nonetheless a prerequisite for acquiring the best possible reconstruction.

4.2.2. Implementation of Digital Security Infrastructure

This section details the integration of digital surveillance infrastructure, which plays a pivotal role in enabling situational awareness training. The virtual CCTV and drone systems replicate real-world monitoring workflows and allow users to interactively review feeds, identify security breaches, and make operational decisions.

Virtual surveillance cameras replicate real-world closed-circuit television (CCTV) systems within the virtual environment. These cameras provide real-time monitoring of specific locations by capturing the virtual space and rendering the feed onto in-world screens. Virtual drones follow a similar approach, replicating real-world drone-based surveillance of a location of interest and rendering the results into the virtual space. In both cases, a Unity 3D camera component is used that renders its output in screen space coordinates and can be placed anywhere in the scene.

A virtual surveillance camera in Unity 3D is implemented by creating a Camera object positioned at a specific location within the virtual environment. Instead of rendering its output directly to the user’s view, the camera’s feed is redirected to a Render Texture, which acts as a dynamic image source. This texture is then applied to a UI Panel or a 3D mesh, allowing the captured scene to be displayed as a live video feed on in-game monitors or interfaces. To ensure smooth performance, optimizations such as reducing the Render Texture resolution, adjusting the camera update frequency, and implementing occlusion culling techniques can help manage the computational load.
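The effect of two of the optimizations mentioned above, a lower Render Texture resolution and a reduced camera update frequency, can be estimated with simple arithmetic. The sketch below computes the color-buffer memory of a Render Texture, assuming an 8-bit RGBA format with no depth buffer or mipmaps, and schedules N surveillance cameras so that each refreshes only every `interval` frames, staggered to spread the rendering cost. In Unity, such a schedule would typically be implemented by disabling the Camera component and calling `Camera.Render()` on the scheduled frames; the Python function names here are hypothetical.

```python
from typing import List


def render_texture_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Color-buffer size of a render texture (assumes RGBA8, no depth buffer, no mipmaps)."""
    return width * height * bytes_per_pixel


def cameras_to_render(frame: int, n_cameras: int, interval: int) -> List[int]:
    """Indices of cameras whose feed is refreshed on this frame.

    Each camera renders once every `interval` frames; offsetting by the camera
    index staggers the cameras so that about n_cameras / interval render per frame.
    """
    if interval < 1:
        raise ValueError("interval must be >= 1")
    return [cam for cam in range(n_cameras) if (frame + cam) % interval == 0]


if __name__ == "__main__":
    full = render_texture_bytes(1024, 1024)
    reduced = render_texture_bytes(512, 512)
    print(f"1024x1024: {full / 2**20:.1f} MiB, 512x512: {reduced / 2**20:.2f} MiB")
    for frame in range(3):
        print(frame, cameras_to_render(frame, n_cameras=6, interval=3))
```

Halving both texture dimensions cuts memory by a factor of four (4 MiB to 1 MiB here), and with six cameras refreshed every third frame only two render per frame, at the cost of each feed updating at one third of the application frame rate.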

The drone is a similar component to which a flight path is attached; the flight path is a path component that applies a movement animation to the camera. A virtual drone camera in Unity 3D differs from a fixed surveillance camera in that it follows a predefined or dynamically generated path to capture an aerial view of the scene. This is achieved by attaching a Camera object to a moving GameObject that follows a scripted trajectory. The path can be created using animation curves, waypoints, or procedural movement algorithms to simulate realistic drone flight behavior. The camera feed is rendered to a Render Texture, allowing it to be displayed on in-game monitors or interfaces. Unlike static surveillance cameras, drone cameras provide dynamic perspectives, covering larger areas and adjusting their view based on mission requirements. Optimizations such as adjusting movement smoothing, frame rate adaptation, and culling strategies help maintain performance while ensuring a fluid and realistic simulation.
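Of the trajectory options listed above, the simplest is a waypoint path flown at constant speed. The sketch below (Python for illustration; the function name and parameters are hypothetical) returns the camera position at time t by walking the polyline by arc length, so the `speed` parameter plays the role of the editable animation pace. Curved paths (animation curves, splines) and camera orientation are not covered.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def position_on_path(waypoints: Sequence[Vec3], speed: float, t: float,
                     loop: bool = True) -> Vec3:
    """Position after t seconds moving at constant speed along straight segments.

    With loop=True the path closes back to the first waypoint and repeats;
    otherwise the camera stops at the last waypoint.
    """
    pts: List[Vec3] = list(waypoints)
    if not pts:
        raise ValueError("at least one waypoint is required")
    if loop:
        pts.append(pts[0])
    lengths = [math.dist(a, b) for a, b in zip(pts, pts[1:])]
    total = sum(lengths)
    if total == 0.0:
        return tuple(pts[0])
    d = speed * t  # distance travelled along the path
    d = d % total if loop else min(d, total)
    for (a, b), seg in zip(zip(pts, pts[1:]), lengths):
        if seg > 0.0 and d <= seg:
            f = d / seg  # fraction of this segment already covered
            return tuple(a[i] + f * (b[i] - a[i]) for i in range(3))
        d -= seg
    return tuple(pts[-1])  # guards against floating-point overshoot
```

Parameterizing by distance rather than by segment index keeps the apparent drone speed constant even when waypoints are unevenly spaced, which avoids visible speed jumps in the rendered feed.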

Aspects of these components, such as the speed of the movement animation, can be edited in the Unity 3D editor.

4.2.3. Realistic Virtual Human Participation

To further increase realism and contextual richness in the virtual training space, the simulation features dynamically generated VH entities that mimic the movement of civilians at a live public event. This additional simulation layer replicates the background motion and crowds commonly encountered at large gatherings, so that trainees engage in scenarios with realistic environmental factors and human activity. The VHs are driven by a locomotion system that lets them move freely through the environment along precomputed or random paths. This waypoint-based locomotion system is built on Unity’s NavMesh framework, which provides tools for defining navigable areas in a scene so that AI-controlled characters can navigate and avoid obstacles [44]. Movement paths are further shaped by steering behaviors derived from Reynolds’ Boids algorithm, namely cohesion, alignment, and separation [45]. Points of interest (POIs), such as kiosks or stages, act as attractors with weighted probabilities that influence agent path selection. This configuration yields realistic crowd dynamics and emergent spatial clustering [37]. Agent movement is also regulated by specific parameters: the predefined POIs (vendor booths, performance stages, or signage) attract the attention of nearby agents, so that clusters and crowded areas form organically, reflecting the crowd behavior generally expected at a lively urban gathering.
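The local part of this crowd model, weighted POI selection combined with Reynolds-style steering, can be sketched in a few lines. This is an illustrative engine-agnostic Python sketch, not the authors' implementation: it covers only local steering on a flat 2D ground plane, whereas global routing around obstacles would be handled by Unity's NavMesh (for example via `NavMeshAgent.SetDestination`), which is not modelled here. All weights, radii, speeds, and POI names are hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Agent:
    pos: tuple                  # (x, z) on the ground plane
    vel: tuple = (0.0, 0.0)


def choose_poi(pois, rng):
    """Pick a point of interest with probability proportional to its weight."""
    names = list(pois)
    weights = [pois[name]["weight"] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def _unit(x, z):
    length = math.hypot(x, z)
    return (x / length, z / length) if length > 0 else (0.0, 0.0)


def steer(agent, others, goal, radius=3.0, sep_dist=1.0,
          w_coh=0.5, w_ali=0.3, w_sep=1.5, w_goal=1.0):
    """Cohesion, alignment and separation plus a pull toward the chosen POI."""
    px, pz = agent.pos
    near = [o for o in others
            if o is not agent and math.dist(o.pos, agent.pos) < radius]
    coh = ali = sep = (0.0, 0.0)
    if near:
        k = len(near)
        # Cohesion: steer toward the local centre of mass.
        coh = (sum(o.pos[0] for o in near) / k - px,
               sum(o.pos[1] for o in near) / k - pz)
        # Alignment: match the average velocity of neighbours.
        ali = (sum(o.vel[0] for o in near) / k - agent.vel[0],
               sum(o.vel[1] for o in near) / k - agent.vel[1])
        # Separation: push away from close neighbours, more strongly when closer.
        sx = sz = 0.0
        for o in near:
            d = math.dist(o.pos, agent.pos)
            if 0 < d < sep_dist:
                sx += (px - o.pos[0]) / d ** 2
                sz += (pz - o.pos[1]) / d ** 2
        sep = (sx, sz)
    gx, gz = _unit(goal[0] - px, goal[1] - pz)
    return (w_coh * coh[0] + w_ali * ali[0] + w_sep * sep[0] + w_goal * gx,
            w_coh * coh[1] + w_ali * ali[1] + w_sep * sep[1] + w_goal * gz)


def step(agents, goals, dt=0.1, max_speed=1.4):
    """Advance every agent one tick; speeds are clamped to walking pace."""
    forces = [steer(a, agents, goals[i]) for i, a in enumerate(agents)]
    for a, (fx, fz) in zip(agents, forces):
        vx, vz = a.vel[0] + fx * dt, a.vel[1] + fz * dt
        speed = math.hypot(vx, vz)
        if speed > max_speed:
            vx, vz = vx / speed * max_speed, vz / speed * max_speed
        a.vel = (vx, vz)
        a.pos = (a.pos[0] + vx * dt, a.pos[1] + vz * dt)


# Usage: 30 agents pick a POI (the stage is three times as attractive) and walk.
rng = random.Random(7)
pois = {"stage": {"pos": (20.0, 0.0), "weight": 3.0},
        "kiosk": {"pos": (0.0, 15.0), "weight": 1.0}}
crowd = [Agent((rng.uniform(-5, 5), rng.uniform(-5, 5))) for _ in range(30)]
goals = [pois[choose_poi(pois, rng)]["pos"] for _ in crowd]
for _ in range(100):
    step(crowd, goals)
```

Forces are computed for all agents before any agent moves, so the update order within a tick does not bias the result; clustering around popular POIs emerges from the weighted choice plus cohesion rather than being scripted.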

Beyond these general crowd patterns, the system also supports predefined or choreographed behavior for particular agents, which allows more complex, event-dependent behaviors to be simulated. In these cases, individual agents are animated with motion capture (MoCap) data, yielding realistic and natural motion in critical interactions within high-risk scenarios. This approach enables nuanced, lifelike behaviors, for example in suspect apprehension (Event Scene #3) and crowd panic simulations (Event Scene #4), enhancing the immersive quality and behavioral realism of the training exercises.

This capability is especially important in training exercises involving security threats or societal disruptions, such as conflict, panic responses, or aberrant behavior. By combining large-scale ambient human presence with carefully detailed, behavior-driven agents, the system delivers a nuanced simulation of crowd behavior that supports a wide range of operational and behavioral training scenarios. This hybrid approach balances computational efficiency against behavioral complexity, yielding an effective model for immersive situational awareness and response training.

4.2.4. Digital Twin Implementation

The final step of the digitization process combines all the virtual components into a DT that accurately depicts the physical characteristics and operational behavior of the real-world environment. This integration unifies the 3D reconstruction of the environment with the digital security system and the simulation of human movement and activity, producing an interactive and immersive virtual representation of the training environment.

Central to the DT concept is the spatial model reconstructed through photogrammetry and subsequently refined through post-processing. This model is the structural basis on which the further functional components are built. To simulate real-world surveillance configurations, virtual surveillance systems are integrated according to the characteristics of the actual space and the scenarios under investigation. They are also linked to in situ virtual displays, allowing users to examine surveillance data in real time.

VHs significantly improve the behavioral realism of the DT. These agents populate the world, move autonomously, and follow attention-driven movement patterns defined by specific points of interest, thereby producing realistic crowds and areas of congestion. At the same time, scenario-dependent human activities (e.g., disruptions or threats) are introduced through MoCap-driven characters whose actions are precisely scripted to fit the training goals. Integrated into a single simulation platform, these elements together constitute the DT.
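The composition described in this subsection can be pictured as a single container that holds the reconstructed model, the camera feeds and their in-world displays, the ambient crowd, and the MoCap-driven characters, plus a check that these parts are wired together consistently. The Python sketch below is a hypothetical data model for illustration only: the class names, file names, and the wiring checks are assumptions and do not describe the actual Unity scene structure.

```python
from dataclasses import dataclass, field


@dataclass
class CameraFeed:
    name: str
    kind: str         # "cctv" (fixed) or "drone" (path-following)
    display: str      # in-world screen on which the feed is shown


@dataclass
class ScriptedCharacter:
    name: str
    mocap_clip: str   # motion-capture recording that drives the character
    event_scene: int  # event scene in which the character acts


@dataclass
class DigitalTwin:
    environment_model: str                       # photogrammetric reconstruction
    displays: set = field(default_factory=set)
    cameras: list = field(default_factory=list)
    ambient_agents: int = 0                      # size of the NavMesh/Boids crowd
    scripted: list = field(default_factory=list)

    def problems(self, event_scenes):
        """List wiring errors that would break the assembled twin."""
        issues = []
        for cam in self.cameras:
            if cam.display not in self.displays:
                issues.append(f"feed '{cam.name}' targets missing display '{cam.display}'")
        for ch in self.scripted:
            if ch.event_scene not in event_scenes:
                issues.append(f"character '{ch.name}' references unknown scene {ch.event_scene}")
        return issues


twin = DigitalTwin(
    environment_model="venue_reconstruction.obj",   # hypothetical file name
    displays={"control_room_main"},
    cameras=[CameraFeed("entrance", "cctv", "control_room_main"),
             CameraFeed("drone_1", "drone", "control_room_aux")],
    ambient_agents=300,
    scripted=[ScriptedCharacter("suspect", "suspect_flee.bvh", 3)],
)
print(twin.problems(event_scenes={1, 2, 3, 4}))
# ["feed 'drone_1' targets missing display 'control_room_aux'"]
```

Keeping the layers as separate fields mirrors the separation between static structure (model, displays) and behavior (crowd, scripted characters), which is what allows the same twin to host different event scenes.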

4.3. Implementation and Training Phase

At the start of this phase, the DT is available and all static behaviors of the system have been set up. In practice, this means that only the behavior of the system under specific scenario conditions remains to be defined in order to provide event-based training.

4.3.1. Setting Up Event Scenes

Four event scenes were created by integrating the appropriate components and actions into the implemented DTs.

Event scene #1. Scanning the building where the ceremony will be held for malicious material. Suspicious people and objects were placed in the scene for the security personnel to scan and neutralize.

Event scene #2. Suspect vehicle detection (five hours before the opening ceremony). Patrolling police cars must locate a vehicle at a distant location and an abandoned warehouse.

Event scene #3. Suspect arrest (an hour before the event starts). Detecting a threat and arresting a suspicious person.

Event scene #4. Fight and panic detection (a few minutes before the end of the event). Locating the area where the fight occurs, stopping the fight, and calming the people attending the event.

4.3.2. Implementing Training Scenarios

The training scenarios orchestrate the event logic that binds the event scenes to the actions and activities performed by the participants. Figures 9 to 12 present instances from the studied scenarios: Figure 9 presents the amphitheater incidents, Figure 10 the chase incident, Figure 11 the car-chase incident, and Figure 12 the fight incident.
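One way to picture this event logic is a small state machine: each event scene has a set of objectives, trainee actions are reported as they happen, and the scenario advances to the next scene once every objective of the active scene is met. The Python sketch below is hypothetical; the objective labels paraphrase the four event scenes described earlier, and the actual platform's handling of branching, timing, or parallel objectives is not described here and is not modelled.

```python
from dataclasses import dataclass, field


@dataclass
class EventScene:
    number: int
    title: str
    objectives: frozenset


@dataclass
class Scenario:
    scenes: list
    current: int = 0
    completed: set = field(default_factory=set)
    log: list = field(default_factory=list)

    @property
    def active(self):
        """The event scene currently being played, or None when finished."""
        return self.scenes[self.current] if self.current < len(self.scenes) else None

    def report(self, objective):
        """Record a trainee action; advance when the active scene's objectives are met."""
        scene = self.active
        if scene is None or objective not in scene.objectives:
            self.log.append(f"ignored: {objective}")
            return
        self.completed.add(objective)
        self.log.append(f"scene {scene.number}: {objective}")
        if scene.objectives <= self.completed:
            self.log.append(f"scene {scene.number} complete")
            self.current += 1
            self.completed = set()


def case_study_scenario():
    """Hypothetical objective labels for the four event scenes."""
    return Scenario([
        EventScene(1, "Building scan", frozenset({"scan_building", "neutralize_object"})),
        EventScene(2, "Suspect vehicle", frozenset({"locate_vehicle", "locate_warehouse"})),
        EventScene(3, "Suspect arrest", frozenset({"detect_threat", "arrest_suspect"})),
        EventScene(4, "Fight and panic", frozenset({"locate_fight", "stop_fight", "calm_crowd"})),
    ])


s = case_study_scenario()
for action in ["locate_vehicle", "scan_building", "neutralize_object", "locate_vehicle"]:
    s.report(action)
print(s.active.title)  # Suspect vehicle
```

The first `locate_vehicle` is ignored because it belongs to a later scene; the log therefore also records out-of-order actions, which could feed the post-session review.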

4.3.3. User Interface and Interaction

Participants interact with the training environment either in 3D or in VR. In the 3D version of the prototype, navigation follows the standard key layout of first-person shooter games (W, A, S, D, and space keys; mouse for camera control; left click to interact with objects). Virtual control panels are accessed through shortcut keys (1 to 5). In the VR version, navigation within the scene is handled by joystick movement or teleportation prompts. CCTV and drone feeds are accessed via virtual control panels that allow scene switching and changes of monitoring perspective. Unity’s XR Interaction Toolkit was used to implement Head-Mounted Display (HMD) overlays and in-world menus, which allow seamless transitions between training phases and scenario setups.
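The desktop control scheme above amounts to a small binding table from keys to movement and interaction events. The engine-agnostic Python sketch below is hypothetical: the key names, action strings, and panel identifiers are assumptions, and the meaning of the space key (jump, as in typical first-person games) is assumed because the text does not state it.

```python
import math

# Hypothetical binding table mirroring the desktop controls described above.
MOVE_KEYS = {"w": (0, 1), "s": (0, -1), "a": (-1, 0), "d": (1, 0)}
PANEL_KEYS = {str(i): f"control_panel_{i}" for i in range(1, 6)}


def movement_vector(pressed):
    """Combine held W/A/S/D keys into a unit (strafe, forward) direction."""
    x = sum(MOVE_KEYS[k][0] for k in pressed if k in MOVE_KEYS)
    z = sum(MOVE_KEYS[k][1] for k in pressed if k in MOVE_KEYS)
    length = math.hypot(x, z)
    # Normalizing keeps diagonal movement from being faster than straight movement.
    return (x / length, z / length) if length else (0.0, 0.0)


def discrete_actions(pressed, left_click=False):
    """Translate non-movement input into interaction events."""
    actions = [f"open:{PANEL_KEYS[k]}" for k in sorted(pressed) if k in PANEL_KEYS]
    if "space" in pressed:
        actions.append("jump")          # assumed: the usual FPS meaning of space
    if left_click:
        actions.append("interact")      # left click interacts with objects
    return actions


print(movement_vector({"w", "d"}))      # diagonal direction of unit length
print(discrete_actions({"3", "space"}, left_click=True))
# ['open:control_panel_3', 'jump', 'interact']
```

Keeping bindings in a table rather than in scattered conditionals makes it straightforward to expose the same actions to the VR variant, where joystick input or teleportation would replace the key lookups.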

4.4. Validation

To ensure the stability and effectiveness of the training environment, a validation process was carried out. The process focused on verifying the correct setup and functionality of all event scenes and training scenarios defined in the design and implementation phases. Every scenario was thoroughly analyzed, and its interactions, stimuli, and consequences were carefully checked against the expected behavior.

For the operational components, validation covered the surveillance elements, the AI-based behavior of virtual humans, the adaptive reactions of MoCap-driven agents, and the correct progression of the environment through the various phases of the training procedure. Particular emphasis was placed on aligning the scenario logic with visual and behavioral cues, so that users are presented with a coherent chain of events throughout their training exercises. Failure modes and edge cases were also investigated to ensure the system’s robustness under less-than-ideal conditions. Iterative revisions were made wherever discrepancies or unforeseen behavior were observed, further improving the overall stability and fidelity of the virtual experience. Throughout this validation process, a “Scenario Validation Checklist” was used to record scenario quality and support further improvement. This checklist is presented in Appendix A.
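The record-and-revise loop described above can be kept in a simple checklist structure in which every validation aspect starts untested, failures keep their notes as revision history, and open issues are listed until each aspect passes. In the Python sketch below, the aspect names paraphrase the validation aspects listed in this section; they are not the items of the Appendix A checklist, and all function names and notes are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

# Aspects paraphrased from the validation description; NOT the Appendix A items.
ASPECTS = (
    "surveillance elements",
    "virtual human behavior",
    "MoCap agent reactions",
    "phase progression",
    "logic matches visual/behavioral cues",
    "failure modes and edge cases",
)


@dataclass
class CheckItem:
    aspect: str
    passed: Optional[bool] = None   # None means not yet tested
    notes: list = field(default_factory=list)


def new_checklist(scenario):
    return {"scenario": scenario, "items": {a: CheckItem(a) for a in ASPECTS}}


def record(checklist, aspect, passed, note=""):
    """Store the latest outcome; earlier notes are kept as revision history."""
    item = checklist["items"][aspect]
    item.passed = passed
    if note:
        item.notes.append(note)


def open_issues(checklist):
    """Aspects that failed or were never tested."""
    return [a for a, item in checklist["items"].items() if item.passed is not True]


# Usage with illustrative notes only.
cl = new_checklist("Event scene #3")
for aspect in ASPECTS[:-1]:
    record(cl, aspect, True)
record(cl, "failure modes and edge cases", False, "illustrative: unexpected behavior")
print(open_issues(cl))          # ['failure modes and edge cases']
record(cl, "failure modes and edge cases", True, "illustrative: revised and re-tested")
print(open_issues(cl))          # []
```

Treating "not yet tested" as an open issue, rather than as a pass, prevents an aspect from being silently skipped during an iteration.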

4.5. Evaluation

Within the SAFEGUARD project, an extensive user test will be conducted at the pilot stage to determine the effectiveness, usability, and learning value of the developed training scenarios. This test will validate the scenarios implemented with the proposed methodology, not the methodology itself, which was validated in depth during the implementation and validation phase. In this setup, we will assess the training goals and how they are achieved through the implemented virtual training platform. The evaluation will also assess the usability of the interactive VR training system and its effectiveness in achieving the predefined training objectives. Specifically, it will analyze how intuitive and engaging the system is, whether users find the training simulations realistic and instructive, and the degree to which users believe the experience contributes to their knowledge of security protocols and threat response methods.

The testing process will involve a range of end-users, such as trainees, trainers, and security officers, who will use the system in controlled pilot environments. Users will be guided through a carefully chosen set of training scenarios covering a variety of threats. Data will be gathered through systematic observation, recording of user behavior during scenarios, and post-session questionnaires. Feedback will focus on scenario clarity, ease of interaction, system responsiveness, perceived realism, and users’ perceived learning outcomes.

The assessment will use a mixed-methods approach, combining quantitative metrics (e.g., task completion rates, error rates, and Likert-scale ratings) with qualitative input (e.g., open-ended survey questions and interviews). This allows both quantifiable performance measurement and an in-depth examination of user experiences and expectations. The results of this pilot assessment will inform the further development of the training framework, in terms of both technical refinement and content enrichment. They will also help define best practices for implementing equivalent training frameworks in other operational contexts, thereby increasing the overall transferability and scalability of the SAFEGUARD methodology.
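The quantitative side of the planned assessment reduces to a few aggregate formulas over session records. The Python sketch below shows one way to compute them; the record fields are hypothetical, "error rate" is taken as errors per attempted task (one of several possible definitions), and the sample records are made up for illustration and are not pilot results.

```python
from statistics import mean, median


def summarize(sessions):
    """Aggregate pilot-session records into simple quantitative metrics.

    Each record is a dict with `tasks_total`, `tasks_completed`, `errors`
    and `likert` (a list of 1-5 ratings).
    """
    total = sum(s["tasks_total"] for s in sessions)
    completed = sum(s["tasks_completed"] for s in sessions)
    errors = sum(s["errors"] for s in sessions)
    ratings = [r for s in sessions for r in s["likert"]]
    if total == 0 or not ratings:
        raise ValueError("need at least one task and one rating")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("Likert ratings must lie in 1..5")
    return {
        "completion_rate": completed / total,
        "errors_per_task": errors / total,
        # Likert data are ordinal, so the median is reported alongside the mean.
        "likert_median": median(ratings),
        "likert_mean": mean(ratings),
    }


# Made-up records for illustration only; they are not pilot results.
demo = [
    {"tasks_total": 4, "tasks_completed": 3, "errors": 1, "likert": [4, 5, 3]},
    {"tasks_total": 4, "tasks_completed": 4, "errors": 0, "likert": [5, 4, 4]},
]
print(summarize(demo))
```

Rates are pooled over all tasks rather than averaged per participant; with unequal numbers of tasks per session the two choices differ, so whichever is used should be reported explicitly.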

5. Discussion and Conclusions

This study presents a systematic and modular approach to designing and implementing immersive training environments for preparing individuals to handle complex security situations. Within the SAFEGUARD project, a DT-based virtual training environment has been created, integrating authentic scene digitization, virtual surveillance techniques, human behavior modeling, and scenario scripting to facilitate immersive VR experiences.

Relative to previous work, this effort focuses on integrated scenario definition, digital modeling, and immersive training, building on and extending the foundations established in the field of virtual training for emergency response and threat mitigation. For instance, previous efforts explored VR-based emergency training systems for disaster and fire preparedness and stressed the importance of immersive realism for improved learning outcomes [46,47,48,49,50]. These works demonstrated the educational benefits of dynamic virtual environments for security training, focusing on user engagement and retention.

The presented approach exemplifies experiential learning theory [51], immersing first responder trainees in realistic scenarios through the use of VR and DT technologies [52]. At its core, experiential learning follows the “learning by doing” paradigm, in which individuals actively engage in experiences and then reflect on them to develop knowledge, skills, and attitudes. The VR approach embodies this paradigm: rather than only conveying information about procedures or potential threats, as traditional training methods do, it immerses trainees in high-fidelity, interactive digital replicas of real-world environments.

The presented approach aligns well with the stages of experiential learning: Direct Experience, Reflective Observation, Conceptual Understanding, and Active Experimentation. During training, trainees engage with virtual environments mirroring real-world physical spaces, such as public venues and sites, and directly experience the scenarios described in the use case (Direct Experience), such as scanning buildings, detecting suspicious vehicles, or responding to dangerous crowd dynamics. Trainees must navigate the scenarios, make decisions, respond to emerging threats, and witness the immediate consequences of their actions in a safe environment. The evaluation, through the validation checklist, involves observation, feedback, and analysis of the trainee’s performance, supporting the Reflective Observation phase. This allows trainees to understand the practical application of security policies and the importance of responding appropriately to threats in a dynamic context. Based on these outcomes, trainees can form abstract conceptualizations (Conceptual Understanding) and draw lessons about effective strategies and critical thinking under pressure. The ability to replay scenarios, potentially with variations, supports the Active Experimentation phase, reinforcing learned concepts and behaviors and improving readiness. Because the methodology emphasizes realism, AI-driven human behavior, and interactive digital infrastructure, these experiences are intended to promote greater immersion, deeper learning, and better skill retention than passive methods.

Furthermore, the presented approach places trainees at the center of complex, realistic situations that require problem-solving, critical thinking, and decision-making under pressure. As a problem-based learning approach [53], it lets trainees gain knowledge and skills by resolving open-ended problems that simulate real-world challenges, facilitated by VR and DT technologies. Trainees must actively analyze the simulated environment, identify critical information in the scenario’s context (e.g., AI-driven crowd behavior, scan feeds), formulate hypotheses about potential threats, and decide on courses of action. In this process, trainees recall prior knowledge, identify learning gaps, and formulate solutions.

The current methodology extends prior work by presenting an adaptable framework that can be customized to many different contexts and threat models, rather than a single-purpose simulation. VH interaction adds a further layer of realism, enabling dynamic and emergent behavior that better replicates crowd action and individual human reactions. The modular structure also supports scalability, allowing the simulation to be extended or reconfigured for new scenarios or updated threat models.

Whereas previous systems tended to rely on rigid, predetermined training paths, our system promotes variation in events and user interaction, enabling adaptive training sessions. This is consistent with research [54] identifying dynamic training conditions as a means of enhancing user readiness and situational awareness.

The use of real-time VR rendering further distinguishes this work by delivering a fully immersive experience that is interactive and measurable in terms of learning outcomes. As previously reported, embodied cognition and sense of presence play a significant role in the effectiveness of virtual training [10]. Our implementation supports this immersion through accurate 3D reconstructions and choreographed training exercises derived from real-world examples.

Another topic for discussion is the use of VR headsets and the associated human factors and ergonomic issues [55]. In this respect, we note that alternative display technologies offer promising potential, specifically LED walls and laser projection systems used in room-scale simulators such as CAVEs (Cave Automatic Virtual Environments). These systems provide immersive visualization through large-scale projections onto surrounding walls or curved screens, offering a more natural field of view and reducing the physical and cognitive burden associated with wearing headsets. Studies have shown that while HMDs offer higher immersion, CAVE systems provide equivalent performance in tasks such as distance perception and can reduce physical fatigue (e.g., from the weight of an HMD) [56]. These display setups are broadly applicable and especially useful in training contexts where multiple trainees need to experience the same environment simultaneously, and where HMDs are impractical for medical, ergonomic, or accessibility reasons. For first-responder training, immersive CAVE-based simulations have been used effectively in scenarios such as mass-casualty incidents, enabling trainees to interact safely with virtual patients and environments while being observed and assessed in real time [57]. Comparisons between CAVE and HMD setups indicate that, while both are effective, CAVEs may better support collaboration and accessibility, whereas HMDs excel in portability and individualized interaction [58]. While our present implementation focuses on VR headsets for their portability and interactivity, the underlying simulation platform is compatible with CAVE systems and dome-based projection environments. The Unity-based environment, with its modular rendering pipeline, can be easily reconfigured to output to multiple external displays or projection surfaces.

In conclusion, this study presents a robust and extensible approach to immersive VR-based training for security and emergency response applications. It advances current research by increasing realism, scalability, and flexibility, and sets the stage for follow-on validation through pilot user trials. The insights gained from this implementation could serve as a template for developing comparable training solutions for a broad range of applications involving high-stakes decision-making and coordinated human response.