1. Introduction

Emotion can be defined as any psychological phenomenon caused by various positive or negative events for the individual [1]. These phenomena comprise a set of physiological reactions, changes in the normal course of thought, and behavioural responses. Therefore, an emotion is a well-structured, dynamic, and multi-component phenomenon activated by a specific event. It is a reaction to an external or internal antecedent that causes changes at the level of the nervous and endocrine systems, the musculoskeletal system, and the facial configuration. To confirm that we are dealing with an emotion, James in 1980 suggested that these three kinds of change must occur simultaneously, as they are considered essential components [2].

Concerning emotions, in 1979, Ekman and Oster emphasized the crucial role that recognition of emotional facial expressions plays in our daily lives. In fact, according to these authors, facial expressions of emotion are the most critical component of nonverbal language, contributing significantly to the communication of our mental and emotional states, as well as those of individuals in our proximity [3]. Given the pivotal role played by accurate recognition of emotional facial expressions, the importance of developing assessment tools for individuals who are facing difficulties becomes clear. Therefore, research in this area is essential to deepen our understanding of this skill.

The assessment tools employed to measure such processes are mostly laboratory tests following a classical experimental methodology. According to González-Quevedo et al., this approach is deemed inappropriate and irrelevant as it fails to reflect how expressions are perceived in natural contexts [4]. Furthermore, it presents numerous disadvantages in terms of ecological validity. In addition to these issues, there are also challenges related to individual motivation during the execution of the classical laboratory tests. This is often due to the tasks being presented in a highly repetitive and systematic fashion over prolonged periods. Thus, the constant state of attention and activation can lead to fatigue, which, in turn, can have an impact on participants’ cognitive functions and consequently their task performance [5]. It is noteworthy that the sense of fatigue involves the participant’s ability to construct a mental framework resulting from the integration of multiple factors such as performance expectations (a prediction based on acquired memories about the muscular strength or power that one possesses and could demonstrate in a specific circumstance), the level of activation and arousal, motivation, and mood. These elements imply that, even at the same level of objective fatigue, individuals may experience diverse sensations [6].

At the same time, however, basic experimentation is crucial for the formulation of hypotheses and theories, as stated also by González-Quevedo et al. [4]. Similarly, Smith [7] asserts that laboratory-based experimentation allows for a certain degree of control over highly complex phenomena. Researchers can manage exposure to independent variables, and randomize and control the range in which these independent variables vary, significantly increasing internal validity. Therefore, it is necessary to develop assessment tools that are realistic and both sensitive and motivating for the population concerned. These tools should function similarly regardless of the individual’s cultural environment, but with the same degree of rigour and experimental control as a classical laboratory task [5,7].

The use of Immersive Virtual Environments (IVEs) can help alleviate the issue of low psychological realism observed in experimental contexts by enabling the presentation of various stimuli and environments through a computer in a more realistic manner [8,9]. This methodology has grown in recent years as a tool that allows us to assess behaviours, obtaining increasingly precise measurements [10].

In line with this idea, we have seen an expansion of the use of video games beyond mere entertainment to include educational, therapeutic, or research purposes [11]. Technological development has a potent effect on phenomena such as attention, memory, and esthetics. Tasks and applications in game format enhance the user experience and facilitate the task, as they are strongly associated with entertainment and enjoyment, and can even stimulate thinking and affectivity [1]. However, these formats need to be diverse and varied enough to avoid player boredom [12].

In this regard, it is known that the use of computerized games improves performance in various cognitive tasks while enhancing the affective and motivational states of users, as shown in a systematic review [13]. Thus, contemporary research shows a growing trend towards using video game or application formats, as opposed to traditional laboratory tasks, for the measurement of psychological constructs. For instance, in a study conducted by Tong et al. [14], a Go/No-Go task was implemented in a 3D environment or serious game format to assess response inhibition. This study successfully demonstrated that the 3D environment task measures response inhibition capabilities in a manner very similar to the traditional format of the test. After completing the task, participants were asked to evaluate its utility, and it received a rating of 8.13 out of 10. Furthermore, 91% of participants indicated that they would recommend engaging in the task. The authors concluded by highlighting the potential benefits of analyzing the combined effect of a serious game with other traditional methods of intervention in psychology to assess its utility and effectiveness [14]. Another study used the Unreal Engine game development engine to measure cognitive functions that play a pivotal role in the diagnosis and treatment of mental disorders such as schizophrenia, namely spatial learning, mental flexibility, and working memory. Using a test suitable for clinical research, the authors created a virtual counterpart of the spatial animal task known as the Morris water maze (MWM). Analysis of data from 30 schizophrenia patients revealed cognitive deficits in the newly devised virtual task when compared to a control group of healthy volunteers, suggesting its potential utility as a diagnostic or therapeutic tool for cognitive function [15].

Regarding the use of video games for evaluation and treatment purposes in the field of social cognition, previous studies also suggest their potential. For example, for evaluation reasons, the study by Banos [16] introduced EmoAnim, a serious game designed to screen emotion recognition abilities in children, showing promising results in distinguishing between typically developing children and those with autism. The game successfully highlighted key differences in emotional understanding, demonstrating its effectiveness in identifying areas where children with autism might struggle, thus validating its potential as both an assessment and intervention tool for social cognition [16]. Another study aimed to investigate the effects of computerized interventions on emotional understanding in children with autism spectrum disorder (ASD), highlighting that, following the application of the programme, the studied abilities improved, and these improvements were attributed to the use of the computerized intervention. Additionally, statistically significant differences were observed between the two groups in the locomotor, personal–social, language, performance, and practical reasoning subscales [17]. Similarly, a computerized pilot training programme was designed for children with ASD exhibiting low scores in social perception. The intervention included a combination of computer-based social perception training exercises and a three-week trial using Remote Microphone Hearing Systems (RMHS) to provide an enhanced auditory experience. The results showed significant improvement in the children’s ability to perceive social cues, suggesting that this integrated approach can effectively enhance social perception in children with ASD, particularly in environments with auditory challenges [18].

Objectives and Hypotheses

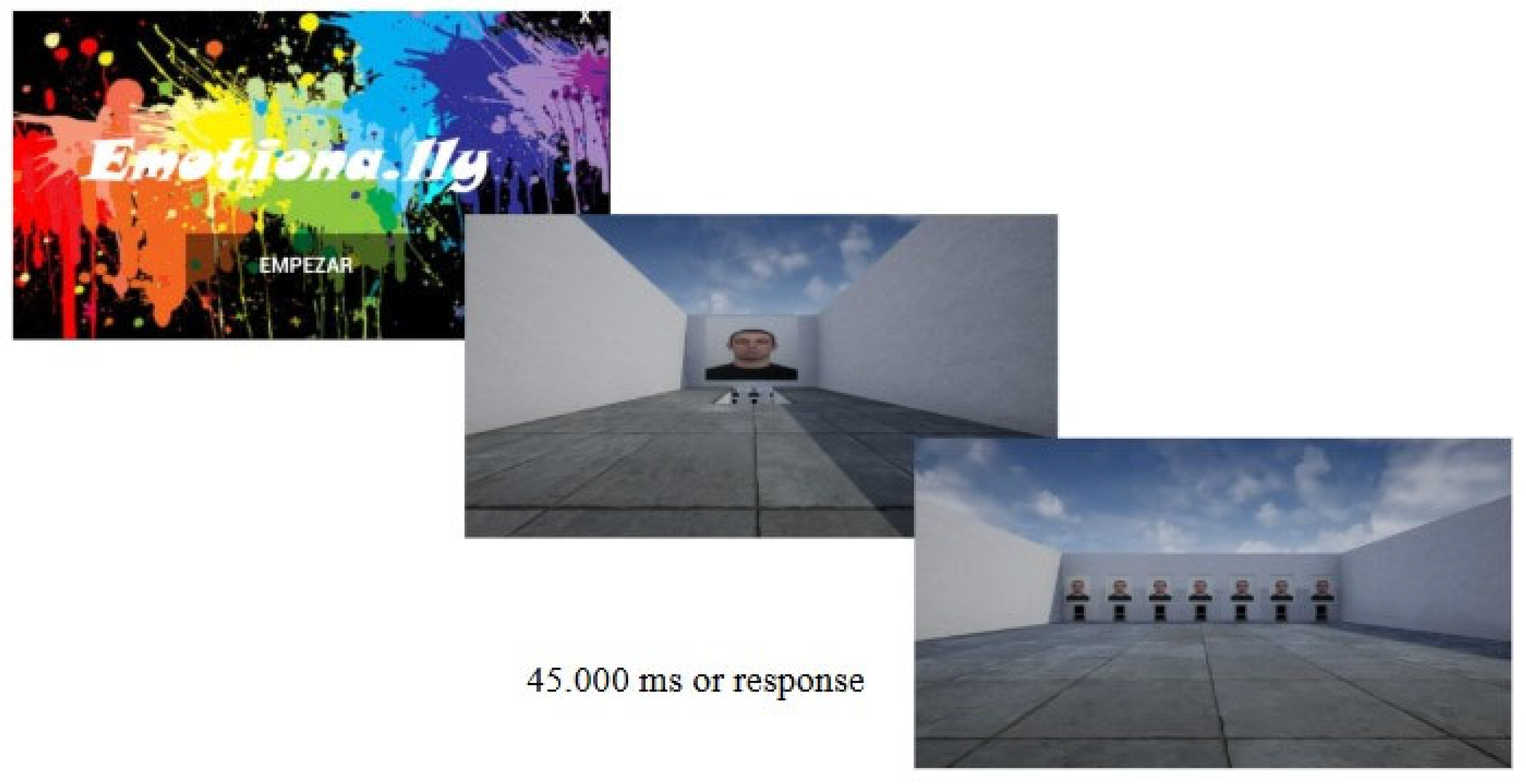

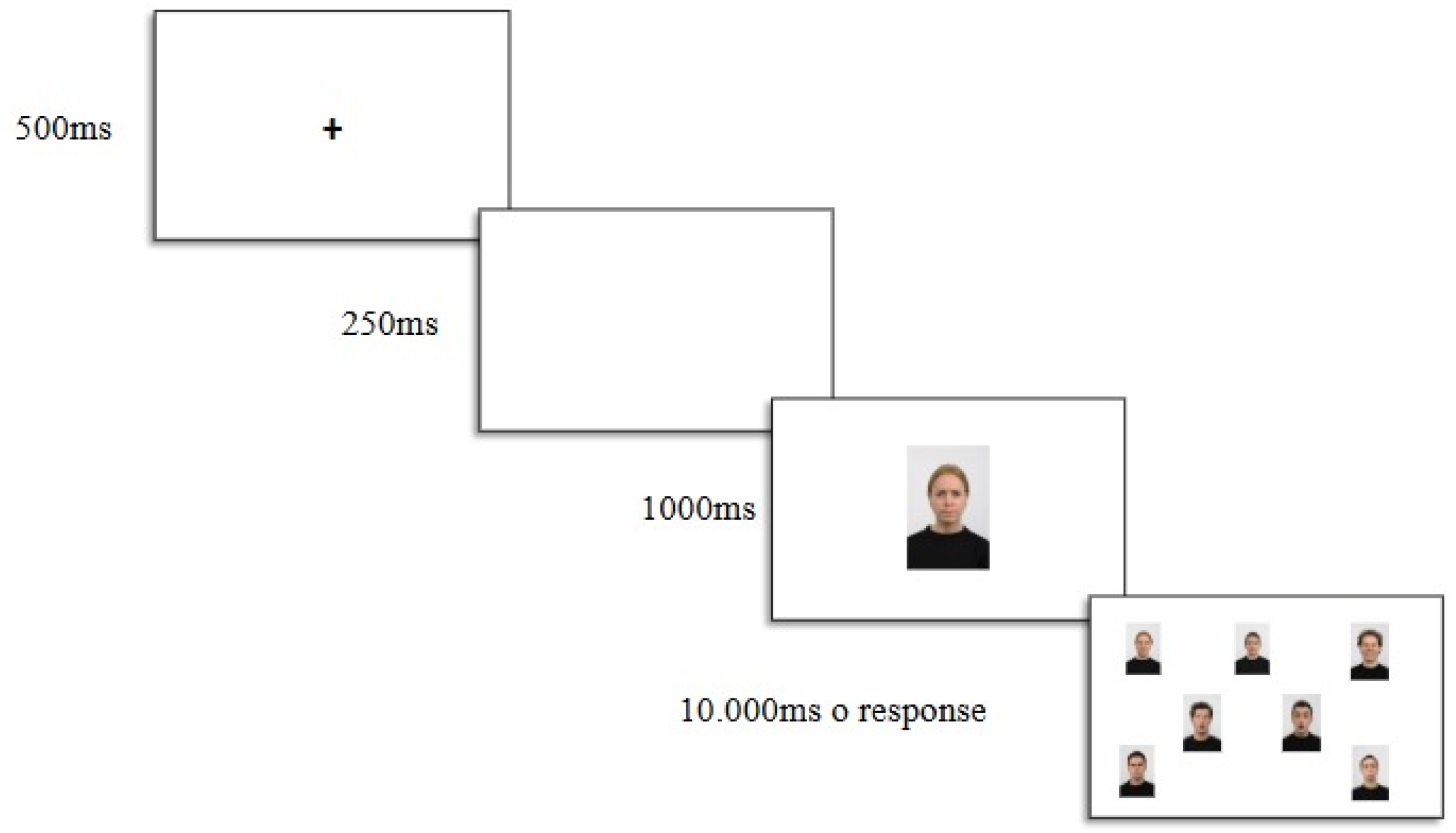

Given that tasks in a 3D serious game format appear to be useful at both applied and research levels, the main goal of the current study is to design a test in a serious game or 3D environment format to assess the recognition of emotional facial expressions. To achieve this, we based it on tasks traditionally used to study this ability, such as the Matching to Sample (MTS) task.

As a second objective, we aim to conduct a small pilot study to verify whether this task measures the recognition of emotional facial expressions similarly to a traditional laboratory task, such as the one used by González-Rodríguez et al. [19]. We anticipate that this new video game format task will create a more engaging context for participants, preventing fatigue and being more enjoyable than a conventional task. We also expect it to measure emotional facial expression recognition in a similar manner to the traditional task [19]. Therefore, we anticipate that performance in the 3D video game task will yield very similar results to performing the same task in a traditional format (Hypothesis 1). Additionally, we expect participants to express higher levels of psychological well-being, lower psychological distress or lack of activation, and a reduction in perceived fatigue compared to the conventional task (Hypothesis 2).

3. Results

3.1. Experimental Tasks

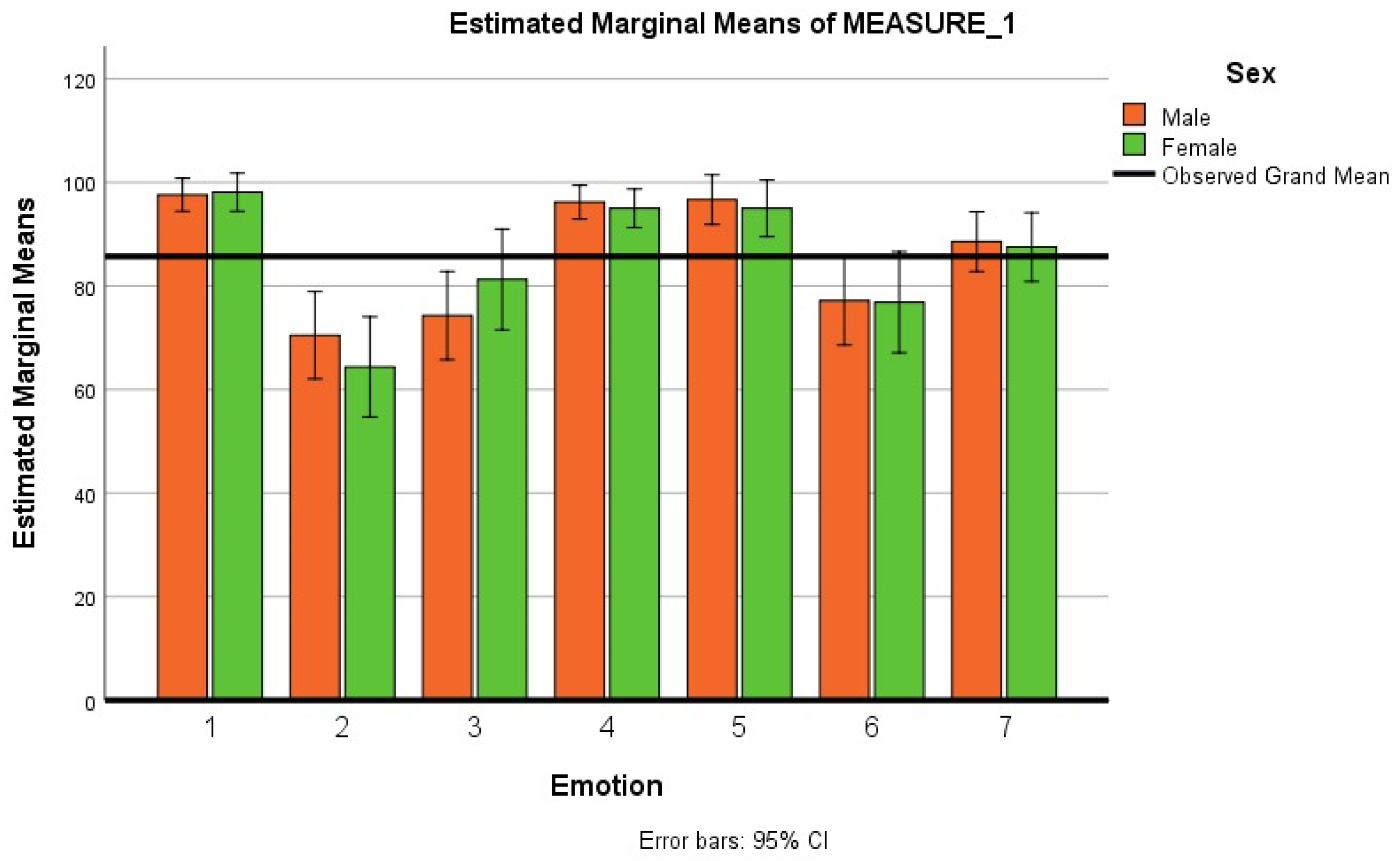

First of all, to rule out the possibility that gender-related effects might bias the results, a gender-by-emotion ANOVA was conducted on the data from both tasks (classic and 3D). As shown in Figure 3, no gender differences were observed for any emotion [F(6, 35) = 0.82, p = 0.55]. Therefore, the data from men and women were pooled for the subsequent analyses.

Figure 3. Estimated marginal means for the Emotion × Sex interaction.

To compare the performance of the groups that participated in the classic task and the 3D Environment task (see Table 1 for descriptive statistics), the percentage of correct responses obtained by each participant for each emotion was analyzed using a mixed ANOVA with Emotion (Happiness, Sadness, Anger, Disgust, Surprise, Fear, and Neutral) as the within-participants factor and Type of task (Classic Task and 3D Environment Task) as the between-participants factor.

To check the assumption of homogeneity of variances in performance, we used Levene’s test. The assumption was not met for Happiness [F(1, 35) = 7.47, p = 0.01], Disgust [F(1, 35) = 8.26, p < 0.05], and Surprise [F(1, 35) = 9.83, p = 0.03]; that is, the variances of the two task groups differed for these emotions. The assumption was met (p > 0.05) for Sadness, Anger, Fear, and Neutral. For this reason, we used Welch’s ANOVA. Since the sphericity assumption was not met [Mauchly’s W = 0.24, df = 20, p < 0.05], the Greenhouse–Geisser correction was applied. A general effect of emotional recognition between tasks was found [Wilks’ Lambda (Λ) = 0.310, F(11, 135) = 6.000, p < 0.05, ηp² = 0.77]. This general effect of task indicated superiority in the 3D task and was modulated by an Emotion × Task interaction [Type III Sum of Squares = 2.337, df = 1, F = 0.008, p = 0.93]. The results of Welch’s ANOVA for the emotions that violated the homogeneity of variance assumption were: Happiness [F(1, 34.42) = 372.991, p < 0.05], Disgust [F(1, 25.24) = 35.752, p < 0.01], and Surprise [F(1, 22.37) = 1.038, p = 0.32]. For the emotions that did not violate the assumption, the results were: Anger [F(1, 33.64) = 4.72, p = 0.04], Sadness [F(1, 23.85) = 69.972, p < 0.01], Fear [F(1, 19.01) = 25.13, p < 0.05], and Neutral [F(1, 31.87) = 2.14, p = 0.15] (see Figure 4).
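The non-integer denominator degrees of freedom in the Welch results, such as F(1, 34.42), come from Welch’s correction for unequal variances. As a minimal sketch, and not the analysis script used in this study, the Python function below computes Welch’s statistic for two independent groups, where it equals the squared Welch t statistic, together with the Welch–Satterthwaite degrees of freedom. The function name and the example scores are hypothetical. The p-value is omitted because it requires the F distribution, which is not in the Python standard library.

```python
from statistics import mean, variance


def welch_f_two_groups(a, b):
    """Welch's test for two independent groups.

    Returns (F, df1, df2). With two groups, F is the squared Welch t
    statistic and df2 is the Welch-Satterthwaite approximation, which is
    usually not an integer.
    """
    n_a, n_b = len(a), len(b)
    # Squared standard error of each group mean (sample variance / n).
    se2_a = variance(a) / n_a
    se2_b = variance(b) / n_b
    t = (mean(a) - mean(b)) / (se2_a + se2_b) ** 0.5
    df2 = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t ** 2, 1, df2


# Hypothetical percent-correct scores for one emotion (illustration only).
classic = [71.4, 85.7, 57.1, 100.0, 85.7, 71.4]
env_3d = [85.7, 100.0, 100.0, 85.7, 100.0, 100.0, 85.7]

f_stat, df1, df2 = welch_f_two_groups(classic, env_3d)
print(f"F({df1}, {df2:.2f}) = {f_stat:.3f}")
```

When the two groups have equal sizes and equal variances, df2 reduces to the usual pooled value (n1 + n2 − 2). The more the variances differ, the further df2 falls below that value.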

Post hoc comparisons were conducted using the Games–Howell correction. In the classic task, the emotion that was best recognized was Disgust, being significantly different (p < 0.05) from four emotions (Happiness, Anger, Surprise, and Fear). This was followed by Fear, which significantly differed from Sadness, Disgust, and Neutral (p < 0.05), and Neutral, which differed from Fear, Anger, and Happiness. Significant differences (p < 0.05) were found in Happiness and Anger compared to Disgust and Neutral. Finally, for Surprise, we found statistically significant differences (p < 0.05) only with Disgust, and for Sadness, only with Fear.

In the 3D Environment task, the emotion that achieved the best level of recognition was Sadness, where statistically significant differences (p < 0.05) were found compared to Happiness, Anger, Disgust, Surprise, Fear, and Neutral. This was followed by Fear, in which statistically significant differences (p < 0.05) were found compared to Happiness, Disgust, and Surprise. In third place, we found Happiness, Anger, and Surprise, all of which differed significantly (p < 0.05) from Sadness and Fear. Finally, Neutral and Anger significantly differed (p < 0.05) from Sadness. The remaining differences were not statistically significant (p > 0.05).

3.2. SEES Scale (McAuley and Courneya, 1994)

The tests of normality and homogeneity of variance showed that these assumptions were not met only in the case of Lack of Activation [U = 35= −2.30; p = 0.21], so the data for this factor were analyzed using a non-parametric Mann–Whitney U test for two independent samples. For the other two factors, Psychological Well-being and Fatigue, an independent-samples t-test was used. There were no statistically significant differences in Psychological Well-being between the scores of participants who performed the Classic Task [M = 17.28, SE = 4.54] and those who did the 3D Environment Task [M = 19.47, SE = 4.79] [t = −1.43 = −1.517, p = 0.16], nor were there statistically significant differences in Fatigue between the scores of participants who did the Classic Task [M = 10.47, SE = 4.78] and the 3D Environment Task [M = 13.72, SE = 5.12] [t = 1.98, df = 35, p = 0.06]. However, statistically significant differences were observed when comparing both groups on the Lack of Activation factor [Z = 2.302= 35, p = 0.02], with a very large effect size (d = 2.59). Specifically, the group that performed the task in the classic format scored higher (M = 23.17) than the group that performed the task in the 3D environment format (M = 15.05).
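For readers less familiar with the Mann–Whitney U test applied to Lack of Activation, the following Python sketch shows how the U statistic and its normal approximation are obtained from two independent samples. It is an illustration under stated assumptions and not the analysis code of this study: the function names and the example scores are hypothetical, and the normal approximation omits the tie correction to the variance.

```python
from statistics import NormalDist


def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two independent samples, using midranks for ties."""
    pooled = sorted(
        (value, group) for group, sample in ((0, x), (1, y)) for value in sample
    )
    rank_sum_x = 0.0
    i = 0
    while i < len(pooled):
        # Find the run of tied values starting at position i.
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        midrank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j
        rank_sum_x += midrank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    n_x, n_y = len(x), len(y)
    u_x = rank_sum_x - n_x * (n_x + 1) / 2
    return u_x, n_x * n_y - u_x


def normal_approximation(u, n_x, n_y):
    """Return (z, two-sided p) for U, without a tie correction (assumption)."""
    mu = n_x * n_y / 2
    sigma = (n_x * n_y * (n_x + n_y + 1) / 12) ** 0.5
    z = (u - mu) / sigma
    return z, 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical Lack of Activation scores (illustration only).
classic = [25, 22, 27, 21, 24, 20]
env_3d = [15, 18, 12, 16, 14, 19]

u_classic, u_3d = mann_whitney_u(classic, env_3d)
z, p = normal_approximation(u_classic, len(classic), len(env_3d))
print(u_classic, u_3d, round(z, 3), round(p, 4))
```

For small samples or many tied scores, an exact or tie-corrected p-value from a statistics package would be preferable to this approximation.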

3.3. Ad Hoc Questionnaire Results

Regarding the ad hoc questionnaire, no statistically significant differences were found in any of the questions (see Table 2).

4. Discussion

The aims of this study were, firstly, to design a task in a 3D environment format to assess the recognition of emotional facial expressions and, secondly, to conduct a pilot study to compare the performance and subjective experience (psychological well-being, psychological distress or lack of activation, and fatigue) in this task with that obtained in a similar task developed in a classic format. After achieving the first objective and finalizing the task design, we conducted a pilot study. The results indicated that, although there were overall differences in performance between the two tasks in favour of the 3D task, these differences became even more evident when analyzing each emotion individually. In the case of Sadness, the scores were notably lower in the 3D task compared to the classic task, which is consistent with the literature. Previous studies suggest that negative emotions, such as sadness, tend to be more difficult to recognize than the positive ones [28,29,30,31]. This difficulty may be explained by the tendency of people to focus more on positive stimuli, leading to reduced sensitivity to negative emotions [32]. Moreover, cognitive biases may further distort emotional perception, causing a stronger emphasis on positive emotions while diminishing attention to negative ones [33]. However, it is important to note that not all negative emotions behave similarly; for example, fear is often processed more rapidly due to its evolutionary significance in threat detection [31,32].

This pattern of negative emotion recognition suggests that this new task better captures the phenomenon by getting closer to the person’s real capacity in terms of emotional facial recognition. The results of this study seem to indicate that our first hypothesis is fulfilled; the 3D task could be used, like classical matching tasks, to explore and evaluate the recognition of emotional facial expressions. These results, together with other previous studies [2,14,17], could suggest that tasks in 3D or Serious game environments are as effective as conventional tasks.

On the other hand, it is worth noting that of the three factors measured by the SEES scale, there were statistically significant differences in participants’ Lack of Activation in favour of the 3D Environment task. This improvement in activation occurs even though participants who completed the 3D Environment task had to perform a series of preliminary tasks that could have resulted in a lack of activation, tasks that participants who completed the classic task did not have to perform (e.g., the entire process related to downloading and installing the 3D task). Even considering the need to expand this pilot study, the data obtained suggest that our second hypothesis is partially fulfilled. This hypothesis referred to the idea that the 3D Environment task might be more attractive and dynamic, leading participants to manifest higher levels of psychological well-being, lower psychological distress or lack of activation, and a decrease in perceived fatigue compared to the conventional task. As we have observed, this hypothesis has been fulfilled regarding the lack of activation [5,6,34].

Regarding the questions formulated to explore other aspects of the task, we observed that participants who completed the task in the 3D environment liked it on average 1.03 points more out of 7 than those who performed the task in the classic format. All the participants who completed the task in a 3D environment would be willing to do a similar task again, while 88.88% of those who completed the task in classic format expressed the same intention. Furthermore, the task was considered easy by 100% of those who completed it in its classic version, while 94.73% of those who completed it in the 3D Environment format found it easy. Additionally, responses to open-ended questions revealed that participants who completed the task in the 3D environment encountered difficulties in downloading and executing the task file. This suggests that addressing this issue, for example, by conducting the task in a laboratory setting where participants do not have to learn how to execute the file, or by programming an online version like various software packages for psychology experiment generation, could be beneficial.

Although these results could mean that the 3D Environment task designed in this study could be used, like more traditional matching tasks, to explore and assess the recognition of emotional facial expressions, we believe that further studies are needed to extract more conclusive data. Several reasons lead us to be cautious. Firstly, although no gender differences were found, we believe that the observed variability when analyzing each emotion separately could be due to this study being conducted with a small sample size and without controlling for the proportion of men and women in each group, a factor that can influence the recognition of emotional facial expressions. This gender imbalance is largely due to the fact that most participants were psychology students, a field with a higher proportion of female students. Furthermore, this study was conducted during the COVID-19 pandemic, which significantly limited access to participants and made it difficult to achieve a more balanced sample. Additionally, due to the limitations imposed by the pandemic, the experiments were administered online, which likely influenced the results obtained as environmental variables that can be minimized (e.g., the presence of distractors) during the experiment in a laboratory setting could not be controlled. In fact, by being conducted online, the 3D Environment task proved to be more complex than the classic task, requiring prior installation on participants’ computers, which, on more than one occasion, led to issues. Therefore, it would be advisable to repeat this study with a larger sample and preferably in a laboratory setting.