1. Introduction

Digital Twin technology has emerged across many fields, especially smart cities and industrial control systems (ICSs). In an ICS, physical assets such as Programmable Logic Controllers (PLCs) rarely receive firmware and software updates, which exposes them to security issues, since any malicious update could cause catastrophic damage depending on the industrial sector. Updates are infrequent because maintenance in industrial systems is costly: the system must be stopped, its firmware updated, and then restarted [1]. Digital Twin offers a solution by controlling physical assets through their replica, thereby decreasing maintenance costs. However, Digital Twin increases the attack surface, since organizations must protect both the physical assets and their replicas.

Generally, after each security incident, an investigation takes place to determine the incident's causes, the missing controls, and the possible prosecution of the attackers. Digital forensics is the field that deals with the collection, preservation, analysis, and reporting of evidence to preserve the organization's rights. Digital Twin supports forensic readiness: before any incident, forensic techniques are implemented to ensure that relevant data trails are recorded in a forensically sound manner [2]. Digital Twin also makes it possible to conduct an investigation without shutting down industrial systems, which reduces financial losses and downtime [3].

To the best of our knowledge, no existing approach provides a forensics-aware Digital Twin architecture and an ontological knowledge data model that store forensics requirements in a forensics-aware manner before an incident occurs in a Digital Twin ecosystem. The only similar work, [3], focuses solely on the fidelity of the digital replica. It does not explicitly ensure other forensic requirements during data collection, preservation, analysis, and reporting. Building such an architecture, however, raises several challenges for forensic readiness.

Firstly, a Digital Twin generates a large volume of data to maintain a real-time replica, and some of these data may be damaged or incomplete [4]. This makes preserving data integrity and authenticity very difficult [5]. Damaged or incomplete data could affect the decision-making process and violate forensic requirements.

Secondly, a Digital Twin may be compromised, leading to unverifiable digital records, timestamps, and logs. This increases the need for a suitable technique to ensure accurate and verifiable data provenance [6].

Thirdly, a Digital Twin requires real-time forensics mechanisms that work with low-resource devices such as IoT sensors and actuators [7].

Fourthly, consistency and real-time synchronization are important to ensure that a DT reflects the real physical system and to avoid wrong forensic conclusions [8].

Lastly, privacy must be preserved during forensic data collection so that evidence is not rejected because of privacy infringements [9].

In this paper, we propose a new Digital Twin architecture that considers forensics requirements from the design phase. It adopts an ontological data representation to enable three things: flexibility in defining forensics rules, extensibility to cover organizational and national regulations, and automated detection of forensics requirement breaches through Semantic Web Rule Language (SWRL) rules. We define a Digital Twin forensics adversary model to formally analyze the architecture's ability to deal with multiple kinds of security and forensics violations. More specifically, we present the following contributions:

We present the forensics challenges in shifting to a Digital Twin environment and the limitations of existing studies and frameworks.

We propose a forensics-aware Digital Twin architectural model that incorporates forensics requirements.

We create a new ForensicTwin ontology-based knowledge model that comprehensively includes forensics admissibility requirements and the representation of Digital Twin features.

We validate the architecture and the ForensicTwin knowledge model through the proposition of a new forensics twin adversary model and formally demonstrate the ability of the proposed architecture and data model to preserve forensics requirements in a Digital Twin environment.

We present a comprehensive set of techniques and methods to cover all forensics requirements. Existing works such as [3] only address the challenge of consistency and real-time synchronization. They introduce the term “fidelity” to ensure the correct synchronization of the Digital Twin replica. However, digital forensics requirements are not limited to fidelity (time integrity); they also encompass authenticity, comprehensiveness, and relevance. Our proposed architecture includes the required techniques, rules, and queries to ensure the forensic soundness of the gathered data. The effort in [10] only uses Digital Twin replicas to track drone accidents, focusing on time synchronization and orderliness. It does not consider authenticity, security attacks targeting the transmitted data, or the forensic verification of evidence admissibility. Our proposed approach uses ontologies to store the gathered data, SWRL rules to detect forensic violations, and SPARQL queries to extract relevant data. We deploy multiple techniques to ensure data and transaction integrity.

The rest of this paper is structured as follows.

In Section 2, we present and discuss relevant existing works that treat forensics and security using a Digital Twin solution.

Section 3 presents the definitions of Digital Twin and forensics requirements. It also defines a new ForensicTwin adversary model and the corresponding notation.

In Section 4, we present and describe the design of our new forensics-aware Digital Twin architecture.

Section 5 presents the new ForensicTwin ontology-based knowledge model. This model stores Digital Twin data while preserving forensics requirements and automates forensics breach detection through SWRL rules.

In Section 6, we provide a forensics analysis based on the adversary model of the ability of the architecture and data model to preserve forensics requirements.

In Section 7, we provide a simulation implementation to validate our architecture, followed by a performance study.

The approach is discussed and concluded in Section 8.

2. Related Works

Existing research studies that employ Digital Twin for forensics purposes are very rare. In this section, we review the relevant efforts that use Digital Twin for investigative purposes and for the analysis of intrusions.

In [3], the authors propose using replication-based Digital Twins for forensic investigations of industrial control systems (ICSs). Their approach allows the analysis to be performed without taking the real system offline. It also enables the selection of appropriate tools for evidence acquisition, and it is evaluated through a prototype implementation.

Similarly, [11] discusses a Digital Twin replication model that enhances security in ICSs by allowing secure data sharing and control of security-critical processes. This model can improve forensic analysis by providing synchronized and protected software states for investigation.

The authors of [12] discuss using Digital Twins to simulate diverse threat scenarios in Cyber-Physical Systems. This enhances forensics by generating rich datasets for training deep learning models, thus improving threat detection and classification in industrial control systems.

Another range of research studies focuses on using Digital Twins to detect cyberattacks in ICSs. Some deploy a virtual model of both the physical and control layers [13]. Others identify critical cyberattacks through an Augmented Digital Twin methodology that emphasizes the impact on system operation via Key Performance Indicators [14].

In [15], the authors present a methodology that combines Digital Twins and process mining to enhance anomaly detection in ICSs. It identifies cyber-physical attacks by analyzing event logs derived from raw device logs, thus improving forensic capabilities.

Digital Twin forensics is also used to investigate drone operations. In [10], the authors use Digital Twin technology to create virtual replicas of drones, which facilitates the forensic analysis of their operational parameters during accidents.

In Table 1, we summarize the existing studies and their limitations. In the last row, we present our proposed approach and show how it overcomes the limitations of current studies.

3. Digital Twin and Forensics Concepts and Requirements

3.1. Digital Twin Concepts

Digital Twin (DT) is a virtual representation, or digital replica, of physical assets. An asset could be a hardware device, a system, or a process. The DT model is used to monitor, simulate, and provide real-time responses. It enables a better understanding of the physical assets, allowing stakeholders to optimize the performance of the physical twin [16]. We distinguish four key enablers for building a Digital Twin system [16]:

Virtual replica: the Digital Twin representation of the physical objects, systems, or processes.

Data integration: the basis of the Digital Twin, through the collection of all the data required to create a digital replica. It includes data collection through IoT devices and cameras (e.g., 3D scanning). The data are then mapped into a digital representation, such as XML or JSON. A data processing phase follows to correlate, fuse, or clean them. Finally, the data are transmitted to the Digital Twin layer.

Real-time synchronization: an important aspect to enable the Digital Twin’s consistency and fidelity.

Simulation and analysis: using the collected data, the Digital Twin simulates the real physical twin and provides information about possible optimizations before they are implemented in the physical twin.

Another recommended and important aspect is predictive capability. This means analyzing the collected data and estimating suitable decisions to improve the performance and income of the system.

3.2. Forensics Concepts and Requirements: Background

The science of digital forensics has received increasing attention. This is due to the growing range of cyberattacks and the need to reconstruct an admissible set of evidence to condemn or anticipate unauthorized events. Digital forensics, as defined by [17], is

“The use of scientifically derived and proven methods toward the preservation, collection, validation, identification, analysis, interpretation, documentation and presentation of digital evidence derived from digital sources for the purpose of facilitating or furthering the reconstruction of events found to be criminal or helping to anticipate unauthorized actions shown to be disruptive to planned operations”.

Thus, digital investigation passes through multiple phases, starting with data collection, followed by examination, analysis, and finally, reporting the results. Evidence collection, examination, analysis, and reporting must consider a set of forensic requirements to preserve their admissibility. Any non-documented data modification, unauthorized access or alteration, or non-comprehensive and irrelevant reporting may lead to evidence rejection by the court.

We distinguish five main admissibility attributes [18]:

Authenticity, through the preservation of the record's identity and integrity [18,19];

Privacy, by preventing any form of private information breach during the data acquisition process [18];

Comprehensiveness, by guaranteeing that no information is absent from the conclusive report [20];

Relevance, by concentrating on the presentation of only the evidence that is pertinent to the case [18];

Not being classified as hearsay, as “electronic documents generated and created in the normal and ordinary course of business are not considered hearsay” [19].

More specifically, authenticity and privacy rely on access control, cryptographic, and hashing techniques to preserve the requirements. In contrast, comprehensiveness and relevance rely on analytical techniques to create a relevant and complete investigation report.

3.3. Forensics Concepts and Requirements: The Digital Twin Forensics Adversary Model

Aside from the forensic requirements described in Section 3.2, we need to avoid any risk that may violate the admissibility of the collected evidence. To this end, we searched the literature for a forensic adversary model.

Multiple adversary models for security purposes have been proposed in the literature. However, almost all of them address intrusion detection or security analysis using Digital Twin states. One example is the adversary model for Industrial Automation and Control Systems (IACSs) in [11], which considers a Cloud sharing solution and does not consider any attack at the physical layer.

Another range of research papers [21,22] treat security evaluation via penetration testing methods, and other research efforts [23,24,25,26] focus on monitoring the physical layer using Digital Twin states and the possible detection of intrusions.

To the best of our knowledge, there are no adversary models proposed for forensic purposes. More specifically, we did not identify an adversary model that violates forensic requirements. In this section, we develop a new Digital Twin adversary model that threatens forensic requirements. We assume that the forensic requirements could be threatened via internal system failures or external attacks on all system aspects. More specifically, in this paper, we treat the following adversary scenarios:

The adversary can intercept the synchronization messages between the DT and PT, modify the state they carry, and replay them. This can also take the form of silent tampering, where forensic logs appear normal despite an attack.

The adversary can tamper with the forensics database and modify logs to cover traces of past attacks and delete evidence of malicious state transitions.

The attacker can launch an impersonation attack. More specifically, the attacker could inject fake updates into the system to corrupt state consistency between the digital and physical twins. This raises the challenge of differentiating between legitimate and forged messages. As a result, the system fails to maintain the fidelity of the Digital Twin representation, which undermines the accuracy of time-based evidence chaining.

Failures in generating comprehensive investigation reports and extracting relevant evidence pose the risk of evidence rejection by a court of law.

In this paper, we analyze the aforementioned adversary model attacks; however, the model could be extended with any threat that could violate forensic and security requirements. We do not consider DoS attacks, and we assume that the synchronization messages arrive in each time slot.

3.4. Formal ForensicTwin Language Notation

We define a formal forensics language notation to ensure the satisfaction of forensics and Digital Twin requirements (e.g., authenticity, integrity, and time-orderliness). It defines the interaction between the physical twin and the Digital Twin. We reuse some notations and definitions from [3,11].

3.4.1. Definitions

Physical Twin (PT) represents the real-world system or device being monitored (e.g., a machine, sensor, or industrial control system). We define $u \in U$, where $U$ is the set of physical twins in the system.

Digital Twin (DT) is the virtual representation of the physical twin, used for monitoring, simulation, and analysis. We define $u' \in U'$, where $U'$ is the set of Digital Twins in the system.

States: both the PT and DT have states that change over time. For example, the state of a machine could be “on”, “off”, or “malfunctioning”.

Forensic Authenticity ensures that all state transitions and synchronization messages come from legitimate sources. Let $\mathcal{A}$ be the forensic authenticity function.

Adversarial State Space refers to the set of all possible states or configurations that an adversary (e.g., an attacker) can induce in the system. Let $S_{adv}$ be the adversarial state space.

3.4.2. State Transitions

Physical Twin States (S): the set of all possible states of the physical system. Let $S$ be the finite set of states of the physical twin system $U$. Thus, $S = \{s_1, s_2, \ldots, s_n\}$, where each $s_i$ represents a corresponding state in the physical system.

Digital Twin States (S'): the set of all possible states of the Digital Twin. Let $S'$ be the finite set of states of the Digital Twin system $U'$. Thus, $S' = \{s'_1, s'_2, \ldots, s'_m\}$, where each $s'_j$ represents a corresponding state in the Digital Twin.

We define $\delta$ and $\delta'$ as the state transition functions of the physical and Digital Twins, respectively.

3.4.3. Inputs and Outputs

Inputs to PT (I): let $I$ be the finite set of inputs to the physical system $U$. Thus, $I = \{i_1, i_2, \ldots, i_k\}$, where each $i_j$ is an input (e.g., a command to turn on a machine).

Inputs to DT (I'): let $I'$ be the finite set of inputs to the Digital Twin system $U'$. Thus, $I' = \{i'_1, i'_2, \ldots, i'_l\}$. These inputs can cause the Digital Twin to change states, and they are typically synchronized with the physical system.

3.4.4. Synchronization Messages

Synchronization messages (m): messages exchanged between the PT and DT to keep their states synchronized. Let $m$ denote a synchronization message between the physical and Digital Twin systems.

Encrypted messages (e): to ensure security, synchronization messages are encrypted. Let $e$ denote an encrypted synchronization message between the physical and Digital Twin systems.

3.4.5. Time and Order

Time (t, t'): both the physical and Digital Twins operate in real time. The time $t$ represents the time in the physical system, and $t'$ represents the time in the Digital Twin. For forensic purposes, it is crucial that $t$ and $t'$ are synchronized.

Time-Orderliness: the states of the Digital Twin must reflect the states of the physical twin in the correct chronological order. Thus, if the physical system transitions from state $s_i$ to $s_{i+1}$ at time $t$, the Digital Twin should also transition from $s'_i$ to $s'_{i+1}$ at the same time.

We define $\delta$ and $\delta'$ as the transition functions of the physical and Digital Twins, where $\delta: S \times I \rightarrow S$ and $\delta': S' \times I' \rightarrow S'$, respectively.

3.4.6. Cryptographic Techniques

Hashing (H): a cryptographic hash function is used to ensure the integrity of data. Let $H(s_i \rightarrow s_{i+1})$ denote the hash of a state transition. This hash supports forensic integrity and can be used to detect tampering.

Digital signature ($\sigma$): messages are signed using a private key to ensure authenticity. Let $\sigma = \mathrm{Sign}_k(M)$ be the digital signature of message $M$ using key $k$.

Signature verification ($\mathrm{Verify}$): the recipient verifies the signature using a public key. Let $\mathrm{Verify}_k(M, \sigma)$ be the signature verification function, which checks whether the signature $\sigma$ is valid for message $M$ under key $k$.

Nonce: let $n$ be a nonce used for freshness verification.

4. Forensics-Aware Digital Twin Architecture Design

We propose a multi-layered architecture for a forensics-aware Digital Twin system, as depicted in Figure 1. It enhances data reliability, security, and interoperability across physical and digital environments. It mainly contains two layers: the physical layer and its twin layer. The proposed architecture handles the heterogeneity of assets and data while preserving forensic requirements, which makes it scalable and adaptable for managing complex cyber-physical systems. Details about the architecture's components and features are explained in the subsequent sections.

4.1. Architecture Ingredients

In this section, we detail the architecture's components.

4.1.1. Physical Layer

The physical layer represents the real physical assets such as machinery, vehicles, or any other equipment. Physical assets have sensors (IoT devices) to continuously capture real-time data about their current state, such as temperature, pressure, video, vibration, and location, depending on the asset type. Sensors are the means to reflect the state of physical assets to their Digital Twin; thus, sensor accuracy and quality play a vital role in producing a high-fidelity Digital Twin replica.

Fidelity requires preserving data from alteration or loss so that the replica reflects the real physical system. Thus, we add a tagging/labeling feature during onsite data collection from sensors, before transmission, to ensure data integrity. To the best of our knowledge, this paper is the first to incorporate the required forensic features so that they also preserve fidelity during the data collection phase. We enrich the data captured by sensors with metadata to ensure authenticity, integrity, and time-stamping. As explained in Section 3.2, these attributes ensure that data remain valid, trustworthy, and traceable, which are essential features in forensic analysis. Tagging authenticity, integrity, and timestamps immediately at capture ensures that the data are original, untampered, and aligned in time with the physical environment.

We generate a unique identifier (UID) for each piece of data, composed of the assetID, sensorID, and Timestamp. We also generate a hash of each piece of data at the point of capture, using a cryptographic hash function, to represent its contents. Additionally, we generate metadata that contain the values of the captured data.

The hash is formed from the metadata and the generated UID. If the data are altered at any point, the hash value will no longer match, enabling the detection of tampering.
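The tagging step can be sketched as follows. This is a minimal illustration, not the authors' implementation. It assumes that the metadata are serialized as canonical JSON and that SHA-256 is the hash function. The field names (`asset_id`, `sensor_id`, `value`), the colon separator in the UID, and the helper `tag_reading` are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def make_uid(asset_id: str, sensor_id: str, timestamp: str) -> str:
    """UID = assetID + sensorID + Timestamp (the colon separator is an assumption)."""
    return f"{asset_id}:{sensor_id}:{timestamp}"


def tag_reading(asset_id: str, sensor_id: str, value: float,
                timestamp: Optional[str] = None) -> dict:
    """Hypothetical helper: attach UID, metadata, and an integrity hash at capture."""
    if timestamp is None:
        # Capture time in UTC, taken on site before transmission.
        timestamp = datetime.now(timezone.utc).isoformat()
    uid = make_uid(asset_id, sensor_id, timestamp)
    metadata = {"asset_id": asset_id, "sensor_id": sensor_id,
                "timestamp": timestamp, "value": value}
    # Canonical JSON (sorted keys, fixed separators) so identical content always
    # serializes, and therefore hashes, identically.
    payload = json.dumps({"uid": uid, "metadata": metadata},
                         sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return {"uid": uid, "metadata": metadata, "hash": digest}


record = tag_reading("PLC-01", "TEMP-03", 72.4, "2024-01-01T00:00:00+00:00")
print(record["uid"])  # PLC-01:TEMP-03:2024-01-01T00:00:00+00:00
```

Canonical serialization matters here. Without it, two semantically identical records could produce different hashes and trigger false tampering alerts.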

4.1.2. Digital Twin Layer

The Digital Twin layer is the digital representation of physical assets. We divide the Digital Twin layer into two sublayers, named data and application layers.

Data layer: data collected from sensors and tagged in the physical layer are preprocessed and filtered before being stored in the knowledge base. Data transfer relies on a secure, connection-oriented protocol to ensure data integrity. The preprocessing also checks the integrity of the received data: it computes a hash value for each piece of data and compares it to the received hash value. The data are then stored in the knowledge base.

We adopt an ontology-based representation for the knowledge base. This choice is motivated by the features that ontologies provide: they are a structured format that defines concepts, attributes, and relationships. The ontology defines entities (such as assets, events, and access permissions) and their interactions, allowing for real-world representation and data coherence. Since our data are timestamped in the physical layer, the ontology supports temporal modeling. Each data point in the Digital Twin has a corresponding timestamp that aligns it with the real-world timeline. Thus, we ensure data replica consistency.

Since our architecture aims to preserve scene evidence and ensure high fidelity, we feed our knowledge base with external enterprise contextual information, including user information, access rights, and roles. This information is important for understanding the different entities that interact with the stored data. External data include every piece of data that is not issued by sensors but is essential to represent the Digital Twin replica and to preserve security and forensics requirements.
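The integrity check performed during preprocessing can be sketched as follows. The sketch assumes that each record carries a UID, its metadata, and a SHA-256 hex digest computed over canonical JSON. This layout is an assumption chosen for illustration. The `ingest` helper and its accept/reject split are also hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict, List, Tuple


def compute_hash(uid: str, metadata: Dict) -> str:
    """Recompute the SHA-256 digest over the canonical JSON form of UID + metadata."""
    payload = json.dumps({"uid": uid, "metadata": metadata},
                         sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def verify_record(record: Dict) -> bool:
    """True only if the received hash matches the recomputed one."""
    expected = compute_hash(record["uid"], record["metadata"])
    # Constant-time comparison; avoids leaking how many characters matched.
    return hmac.compare_digest(expected, record["hash"])


def ingest(records: List[Dict]) -> Tuple[List[Dict], List[Dict]]:
    """Split incoming records into accepted (to store) and rejected (to flag)."""
    accepted: List[Dict] = []
    rejected: List[Dict] = []
    for rec in records:
        (accepted if verify_record(rec) else rejected).append(rec)
    return accepted, rejected


meta = {"asset_id": "PLC-01", "sensor_id": "TEMP-03",
        "timestamp": "2024-01-01T00:00:00+00:00", "value": 72.4}
good = {"uid": "PLC-01:TEMP-03:2024-01-01T00:00:00+00:00", "metadata": meta}
good["hash"] = compute_hash(good["uid"], meta)
# Same UID and hash, but the value was altered in transit.
bad = {"uid": good["uid"], "metadata": dict(meta, value=99.9), "hash": good["hash"]}
accepted, rejected = ingest([good, bad])
print(len(accepted), len(rejected))  # 1 1
```

A bare hash detects accidental corruption and naive edits. However, an attacker who can alter a record in transit can also recompute its hash. This is why the check is paired with a secure transport protocol here and with signatures in Section 4.3.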

Application layer: this layer contains the components that the end user interacts with. It provides the ability to monitor, control, and analyze events generated by physical assets. This layer contains the following components:

Visualization component: it provides a visual representation of physical assets and data analysis. It enables users to inspect and interpret data in multiple formats and at different timestamps. Furthermore, it allows users to verify that the Digital Twin accurately reflects the current state of physical assets and to detect any anomalies.

Control component: it differentiates Digital Twins from simulations. It actively controls the data flow between the physical and digital layers. It enforces access policies and ensures that data flow according to predefined rules. Control mechanisms range from access control and user authentication to physical asset adjustments or interventions as necessary.

Forensics analysis component: it is designed specifically to conduct forensic analysis. It checks the integrity, accuracy, and authenticity of data from the lower layers. These checks help identify any potential inconsistencies or errors, ensuring that the Digital Twin remains a trustworthy source of information. It uses ontology rules and queries to detect violations of forensic requirements and alerts the user. It also provides an analysis interface to search for evidence, process it forensically, and generate reports.

4.2. Architecture’s Features

Our proposed architecture, by design, provides multiple features and advantages, detailed below.

4.2.1. High Fidelity

As per the definition by [27], “A Digital Twin is a virtual double of a system during its lifecycle, providing sufficient fidelity for security measures via the consumption of real-time data when required”. The fidelity of a Digital Twin is therefore of paramount importance to effectively represent physical assets. Fidelity requires the careful preservation of two parameters: state and time orderliness. An ideal Digital Twin requires $S' = S$, whereas a high-fidelity Digital Twin replicates a subset of the real system, corresponding to $S' \subseteq S$ [3]. Fidelity also requires time orderliness to replicate a real system accurately. Let $s(t)$ represent the state of the real system at time $t$, where the initial state is $s(t_0) = s_0$. The Digital Twin replicates each state $s_i$ in chronological order, so that $s'(t) = s(t)$ for all $t \geq t_0$.

In [3], the authors did not address the time delay issue, since their architectural design does not include authenticity or time-stamping features. Our proposed architecture transmits data to the application layer with real-time timestamps, which improves the fidelity of the Digital Twin representation. Thus, the timestamp $t$ associated with each state in the Digital Twin is the same as the one captured in the real physical system.
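The fidelity condition can be checked on recorded traces as sketched below. A trace is assumed to be a list of (timestamp, state) pairs. The state names come from the “on/off/malfunctioning” example in Section 3.4.1. The sketch assumes a one-to-one replica ($S' = S$), so the subset case would first require projecting the physical trace onto the replicated states. The function names are hypothetical.

```python
from typing import List, Tuple

# (timestamp in seconds, state) pairs, in recorded order
Trace = List[Tuple[float, str]]


def is_chronological(trace: Trace) -> bool:
    """True if timestamps strictly increase along the trace."""
    return all(t1 < t2 for (t1, _), (t2, _) in zip(trace, trace[1:]))


def fidelity_violations(pt_trace: Trace, dt_trace: Trace) -> List[str]:
    """Compare a DT trace step by step against the PT trace it should replicate."""
    violations: List[str] = []
    if not is_chronological(dt_trace):
        violations.append("DT trace is not in chronological order")
    if len(dt_trace) != len(pt_trace):
        violations.append(f"length mismatch: PT={len(pt_trace)}, DT={len(dt_trace)}")
    for k, ((t, s), (t_dt, s_dt)) in enumerate(zip(pt_trace, dt_trace)):
        if s_dt != s:
            violations.append(f"step {k}: DT state {s_dt!r} != PT state {s!r}")
        if t_dt != t:
            # The DT reuses the timestamp captured at the physical layer,
            # so exact equality (t' = t) is expected rather than a tolerance.
            violations.append(f"step {k}: DT time {t_dt} != PT time {t}")
    return violations


pt = [(0.0, "off"), (1.0, "on"), (2.0, "malfunctioning")]
dt_reordered = [(0.0, "off"), (2.0, "malfunctioning"), (1.0, "on")]
print(fidelity_violations(pt, pt))                 # []
print(len(fidelity_violations(pt, dt_reordered)))  # 5
```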

4.2.2. Easy Integration and Adaptability

The proposed architecture facilitates the integration and adaptation of enterprise assets into a Digital Twin by reusing existing standard ontologies where possible. One example is the SSN/SOSA ontology-based knowledge representation, which unifies diverse data sources and systems and avoids major changes to the enterprise's database. The ontology acts as a semantic model to unify the representation of assets, sensors, and processes. It maps physical and cyber elements into structured relationships, ensuring that assets can be integrated with minimal reconfiguration. We integrate multiple entities from the Unified Cyber Ontology (UCO) [28] and the Cyber Investigation Analysis Standard Expression (CASE) [29] to represent forensic attributes and relationships.

4.2.3. Built-In Forensics Requirements

The main contribution of our proposed architecture is the integration of forensics requirements during the creation of the Digital Twin. We tag the data collected from physical assets to ensure data integrity, authenticity, and time accuracy. These features are essential to preserve forensic requirements and maintain chain-of-custody integrity [19,30]. Because data are tagged directly at the physical layer, each data point visualized in the Digital Twin layer is uniquely identifiable, traceable, and ordered in time as it originally occurred.

4.2.4. Chain of Custody

The metadata added during data collection at the physical layer record the entity collecting the data, the exact event timestamp, and the data's integrity. These elements are essential to validate the chain-of-custody attributes required in forensic analysis [19]. Additionally, the chain of custody is maintained through a forensics-aware knowledge base that integrates forensic attributes. More specifically, the ontology helps enforce the chain of custody by defining roles, events, and dependencies according to forensic requirements. Thus, we can easily verify the validity of each recorded datum through ontology queries [31].

4.3. Forensics Requirements Formalism

In this section, we provide the formal representation of the forensics requirements that our architecture supports.

4.3.1. Forensics Authenticity

A Digital Twin system must ensure that all state transitions and synchronization messages are cryptographically authenticated. Unauthorized modifications, replayed messages, or impersonated messages must be detectable and preventable. More specifically, we need to ensure the authenticity of the synchronization messages and state transitions as follows.

Authenticity of Synchronization Messages

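We deploy cryptographic techniques to ensure the authenticity of the synchronization messages, so that every message is signed by an authorized entity. Let $(\mathrm{Sign}, \mathrm{Verify})$ be a cryptographic signature scheme with a signing function $\mathrm{Sign}$ and a verification function $\mathrm{Verify}$.

The Digital Twin signs its message $m_{DT}$ with its private key $sk_{DT}$ before sending:

$$\sigma_{DT} = \mathrm{Sign}_{sk_{DT}}(m_{DT}) \tag{1}$$

The physical twin signs its response message $m_{PT}$ with its private key $sk_{PT}$:

$$\sigma_{PT} = \mathrm{Sign}_{sk_{PT}}(m_{PT}) \tag{2}$$

The recipient verifies the signature with the sender's public key before processing the message:

$$\mathrm{Verify}_{pk_{DT}}(m_{DT}, \sigma_{DT}) \in \{\mathrm{true}, \mathrm{false}\}, \qquad \mathrm{Verify}_{pk_{PT}}(m_{PT}, \sigma_{PT}) \in \{\mathrm{true}, \mathrm{false}\}$$

If $\mathrm{Verify}_{pk_{DT}}(m_{DT}, \sigma_{DT}) = \mathrm{true}$, the physical twin accepts the state update.

If $\mathrm{Verify}_{pk_{PT}}(m_{PT}, \sigma_{PT}) = \mathrm{true}$, the Digital Twin accepts the response update.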
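The sign-then-verify exchange can be sketched as follows. The Python standard library has no public-key signatures, so this sketch substitutes HMAC-SHA256 with per-entity demo keys for the asymmetric $\mathrm{Sign}/\mathrm{Verify}$ pair. That substitution is an assumption made only to keep the example self-contained. The key values and the message fields are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict

# Assumption: HMAC-SHA256 with per-entity secret keys stands in for the
# asymmetric Sign/Verify scheme (a deployment would use e.g. Ed25519).
KEYS: Dict[str, bytes] = {"DT": b"dt-demo-key", "PT": b"pt-demo-key"}


def sign(sender: str, message: Dict) -> str:
    """sigma = Sign_k(m): tag the canonical JSON encoding of the message."""
    data = json.dumps(message, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(KEYS[sender], data, hashlib.sha256).hexdigest()


def verify(sender: str, message: Dict, signature: str) -> bool:
    """Verify_k(m, sigma): recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign(sender, message), signature)


def receive(sender: str, message: Dict, signature: str) -> str:
    """Accept the update only if the signature verifies for the claimed sender."""
    return "accepted" if verify(sender, message, signature) else "rejected"


update = {"target": "PLC-01", "state": "on", "t": 1.0}
sig = sign("DT", update)
print(receive("DT", update, sig))                      # accepted
print(receive("DT", {**update, "state": "off"}, sig))  # rejected (message altered)
print(receive("PT", update, sig))                      # rejected (wrong claimed sender)
```

With HMAC, both parties hold the same key. This provides integrity and origin authentication between the two twins but not non-repudiation, which forensic attribution normally needs. The private/public key pairs in Equations (1) and (2) provide that property.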

Authenticity of State Transitions

We also need to ensure the authenticity of the state transitions of digital and physical twins. The goal is to allow the investigator to attribute state changes to legitimate actions. Only verifiable origins are allowed.

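Let us define a state authenticity function $\mathcal{A}_{DT}$ that maps each Digital Twin state transition to a verifiable origin $o$. The origin is the entity whose signed message $m$ triggered the transition:

$$\mathcal{A}_{DT}(s'_i \rightarrow s'_{i+1}) = o \iff \mathrm{Verify}_{pk_o}(m, \sigma_o) = \mathrm{true} \tag{3}$$

The same applies to the physical twin:

$$\mathcal{A}_{PT}(s_i \rightarrow s_{i+1}) = o \iff \mathrm{Verify}_{pk_o}(m, \sigma_o) = \mathrm{true} \tag{4}$$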

Thus, we ensure that only cryptographically verified state updates are accepted, preventing unauthorized state manipulation or spoofing attacks.
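A state authenticity function can be sketched as a lookup that returns the verified origin of a transition, or nothing. A transition without a verifiable origin is simply not applied. The sketch makes several assumptions. HMAC-SHA256 again stands in for public-key verification. A transition is encoded as a (from_state, to_state, timestamp) triple. The entity registry (`DT-01`, `PT-01`, `ENG-WS`) is a hypothetical example.

```python
import hashlib
import hmac
from typing import Dict, Optional, Tuple

# Hypothetical registry of legitimate entities and their demo keys;
# HMAC-SHA256 stands in for public-key signature verification.
REGISTRY: Dict[str, bytes] = {"DT-01": b"dt-key", "PT-01": b"pt-key", "ENG-WS": b"eng-key"}

Transition = Tuple[str, str, float]  # (from_state, to_state, timestamp)


def encode(tr: Transition) -> bytes:
    return f"{tr[0]}->{tr[1]}@{tr[2]}".encode("utf-8")


def sign_transition(entity: str, tr: Transition) -> str:
    return hmac.new(REGISTRY[entity], encode(tr), hashlib.sha256).hexdigest()


def origin_of(tr: Transition, claimed: str, tag: str) -> Optional[str]:
    """A(tr): the verified origin of the transition, or None if unattributable."""
    key = REGISTRY.get(claimed)
    if key is None:
        return None  # unknown entity: no verifiable origin
    expected = hmac.new(key, encode(tr), hashlib.sha256).hexdigest()
    return claimed if hmac.compare_digest(expected, tag) else None


def apply_transition(state: str, tr: Transition, claimed: str, tag: str) -> str:
    """Apply tr only if it starts from the current state and has a verified origin."""
    if tr[0] != state or origin_of(tr, claimed, tag) is None:
        return state  # rejected: the state is left unchanged
    return tr[1]


tr = ("off", "on", 1.0)
tag = sign_transition("ENG-WS", tr)
print(origin_of(tr, "ENG-WS", tag))                     # ENG-WS
print(origin_of(tr, "ATTACKER", tag))                   # None
print(apply_transition("off", tr, "ENG-WS", tag))       # on
forged = ("off", "malfunctioning", 1.0)                 # reuses the tag of tr
print(apply_transition("off", forged, "ENG-WS", tag))   # off
```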

4.3.2. Forensics Integrity

Forensic integrity applies both to messages in transit and to data at rest, so all messages and database files must preserve their integrity. We therefore need to ensure that the database and messages are modified only by authorized entities, and we need to detect any illegal modification. In our architecture, we use a log hashing technique for database files, and we enforce cryptographic signatures on all messages to protect the integrity of message exchanges.

The cryptographic signatures are defined earlier in Equations (1) and (2). The log hashing technique is defined as follows:

$$h_t = H(h_{t-1} \,\|\, L_t) \tag{5}$$

where

$h_t$ is the hash of the log entry at time $t$;

$h_{t-1}$ is the hash of the previous log entry (at time $t-1$);

$L_t$ represents the actual log data at time $t$;

$\|$ denotes concatenation;

$H$ is a cryptographic hash function (e.g., SHA-256) that maps its input to a fixed-size digest. Because $H$ is collision-resistant, the digest effectively identifies the input data.

Following Equation (5), each log entry is hashed by concatenating the hash of the previous log entry $h_{t-1}$ with the current log entry $L_t$ and then hashing the combined data. This creates a “chain” of hashes. With a cryptographic hash function, even a tiny change in any part of a log entry results in a completely different hash. Since each hash is an input to the next one, the change also propagates to every subsequent hash in the chain, which makes tampering easy to detect.
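Equation (5) can be sketched as follows, with $H$ = SHA-256 and $\|$ as string concatenation. The all-zero genesis value $h_0$ and the log strings are assumptions for illustration. Because $h_{t-1}$ always has a fixed length of 64 hex characters, concatenating it with $L_t$ is unambiguous.

```python
import hashlib
from typing import List

GENESIS = "0" * 64  # assumed initial value h_0 preceding the first entry


def chain_hash(prev_hash: str, entry: str) -> str:
    """h_t = H(h_{t-1} || L_t) with H = SHA-256 and || as string concatenation."""
    return hashlib.sha256((prev_hash + entry).encode("utf-8")).hexdigest()


def build_chain(entries: List[str]) -> List[str]:
    """Return [h_1, ..., h_n] for log entries [L_1, ..., L_n]."""
    hashes: List[str] = []
    prev = GENESIS
    for entry in entries:
        prev = chain_hash(prev, entry)
        hashes.append(prev)
    return hashes


def first_tampered(entries: List[str], hashes: List[str]) -> int:
    """Index of the first entry whose stored hash no longer matches, or -1."""
    prev = GENESIS
    for i, (entry, stored) in enumerate(zip(entries, hashes)):
        if chain_hash(prev, entry) != stored:
            return i
        prev = stored
    return -1


logs = ["t=1 PLC-01 off->on", "t=2 firmware update v1.2", "t=3 PLC-01 on->off"]
hashes = build_chain(logs)
print(first_tampered(logs, hashes))  # -1
logs[1] = "t=2 firmware update v1.3"  # silent edit of a past entry
print(first_tampered(logs, hashes))  # 1
```

The chain alone cannot reveal an attacker who deletes trailing entries together with their hashes, or one who recomputes the entire chain. Detecting those cases requires protecting the latest hash separately, for instance by signing it.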

4.3.3. Forensics Time Orderliness

As mentioned in Section 4.2.1, we add a timestamp to each piece of data collected from the physical assets before transmitting and storing it in the knowledge base. The goal is to preserve the actual time at which the data were issued. In this way, even if the system faces failures in the transmission or storage phases, the recorded occurrence time remains accurate. Time consistency preserves the time orderliness of events (i.e., their correct sequence). Thus, investigators are able to reconstruct incidents, identify anomalies, and attribute malicious activities. The consistency condition in a Digital Twin system maintains chronological integrity during an investigation.

Consistency is defined by the function

$$\phi: S \rightarrow S'$$

where $\phi$ maps physical twin states ($S$) to Digital Twin states ($S'$), ensuring that the Digital Twin model correctly represents the physical system. So,

$$\phi(\delta(s, i)) = \delta'(\phi(s), i') \quad \forall s \in S,\ i \in I$$

where $i' \in I'$ is the Digital Twin input synchronized with $i$. Thus, if a state transition occurs in the physical twin under its state transition function $\delta$, the corresponding state in the Digital Twin should transition in the same way under $\delta'$. Consequently, the mapping is preserved across transitions, ensuring that both systems evolve synchronously.
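Because $S$ and $I$ are finite, the consistency condition can be checked exhaustively, as in the sketch below. It assumes the “off/on/malfunctioning” machine from Section 3.4.1. The DT uses its own state and input names, so that $\phi$ is non-trivial. The input mapping `psi` (from $i$ to $i'$), the transition tables, and the faulty DT are hypothetical examples.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Delta = Callable[[str, str], str]


def make_delta(table: Dict[Tuple[str, str], str]) -> Delta:
    """Build a transition function; undefined (state, input) pairs keep the state."""
    return lambda s, i: table.get((s, i), s)


def consistency_violations(states: Iterable[str], inputs: Iterable[str],
                           delta: Delta, delta_dt: Delta,
                           phi: Dict[str, str], psi: Dict[str, str]) -> List[Tuple[str, str]]:
    """Every (s, i) for which phi(delta(s, i)) != delta'(phi(s), psi(i))."""
    inputs = list(inputs)  # iterated once per state
    return [(s, i) for s in states for i in inputs
            if phi[delta(s, i)] != delta_dt(phi[s], psi[i])]


S = ["off", "on", "malfunctioning"]
I = ["start", "stop", "fault"]
phi = {"off": "OFF", "on": "RUNNING", "malfunctioning": "FAULT"}             # phi: S -> S'
psi = {"start": "cmd_start", "stop": "cmd_stop", "fault": "cmd_fault"}      # i -> i'
delta = make_delta({("off", "start"): "on", ("on", "stop"): "off",
                    ("on", "fault"): "malfunctioning"})
delta_dt = make_delta({("OFF", "cmd_start"): "RUNNING", ("RUNNING", "cmd_stop"): "OFF",
                       ("RUNNING", "cmd_fault"): "FAULT"})
# A faulty replica that maps a fault to "OFF" instead of "FAULT".
delta_dt_bad = make_delta({("OFF", "cmd_start"): "RUNNING", ("RUNNING", "cmd_stop"): "OFF",
                           ("RUNNING", "cmd_fault"): "OFF"})
print(consistency_violations(S, I, delta, delta_dt, phi, psi))      # []
print(consistency_violations(S, I, delta, delta_dt_bad, phi, psi))  # [('on', 'fault')]
```

Exhaustive enumeration is only practical because the state and input sets are small. Larger systems would need to check the condition on the transitions actually observed at runtime.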

4.4. A Walkthrough Case Study

To better describe the model’s main components and their roles, we present a brief case study that will be used in the validation section. We consider an ICS performing a firmware update, where an attacker attempts to inject malicious firmware into a Programmable Logic Controller (PLC) by intercepting and modifying legitimate update messages and then replaying outdated updates to evade detection. The proposed ForensicTwin model counters these attacks through multiple layers of defense. First, in the physical layer, during the labeling and tagging phase, each firmware update is cryptographically signed to ensure its authenticity and to allow verification that updates originate from trusted sources. Second, also in the physical layer, we embed nonces in each message to detect replay attacks, so that an alert is triggered whenever a duplicate nonce is observed. Third, we use hash chaining to link each synchronization message to its predecessor to ensure data integrity and chronological order. Consequently, any tampering invalidates the hash chain and allows integrity violations to be detected. In the Digital Twin layer, the ForensicTwin ontology (knowledge base) automates the detection process using SWRL rules. For instance, we define one SWRL rule that flags duplicate cryptographic signatures within a short timeframe as replay attacks, and another that compares expected and observed state hashes to flag integrity violations. The ontology is also fed with organizational policies and requirements to control access to data and to take country regulations into account. In the application layer, the forensic analysis component uses SPARQL queries to extract forensic evidence, such as unauthorized updates or tampered logs, and to produce comprehensive forensic reports. By integrating these mechanisms, the architecture supports secure, forensically sound Digital Twin operations while minimizing disruption to physical assets.

5. ForensicTwin Ontology-Based Knowledge Data Model

The unified data model integrated within the proposed architecture enables smooth interoperability among heterogeneous physical and software assets. The modular and extensible ontology-based data model ensures high scalability and adaptability and provides context-rich representations across digital environments. Ontologies allow for semantic enrichment and structured relationships, which enable the twin replica to reflect the real physical assets precisely. The ontology data model integrates security, forensics, and organizational policies and enables the definition of relations between them. This facilitates fast and intelligent analysis of the gathered data and the automatic detection of policy violations based on predefined ontology rules, namely SWRL rules.

The proposed ontology, which we named the ForensicTwin ontology, is a structured knowledge framework designed to model and analyze cybersecurity incidents in cyber-physical systems (e.g., industrial IoT) by integrating Digital Twins with forensic investigation principles. It combines existing standards and novel components to enable automated attack detection, integrity validation, and compliance with forensic best practices. The ontology is available; details on how to access it are given in the Supplementary Section.

In the following, we detail the different components and attributes of the data model.

5.1. Ontology Modules

The proposed ontology combines modules reused from existing ontologies, standards, and research papers with newly defined attributes. We favor reusing well-established ontologies, or parts of them, whenever possible to ease integration and enhance adaptability.

During the scoping process, we identified two ontologies from which we reuse parts. We mainly adopt entities from the Unified Cyber Ontology (UCO) [28] and the Cyber-investigation Analysis Standard Expression (CASE) [29] to represent forensic attributes and relationships, detect cyberattacks, and investigate anomalies.

Another important reference [27] is used to define new ontology attributes that represent the fidelity requirements. We use the Semantic Sensor Network (SSN) and IoT-lite ontologies to represent Internet of Things (IoT) resources, entities, and services, which correspond to the physical twin assets. More specifically, IoT-lite represents IoT devices and can model DT sensors, actuators, and data streams, while SSN is useful for defining sensor data flows in DTs.

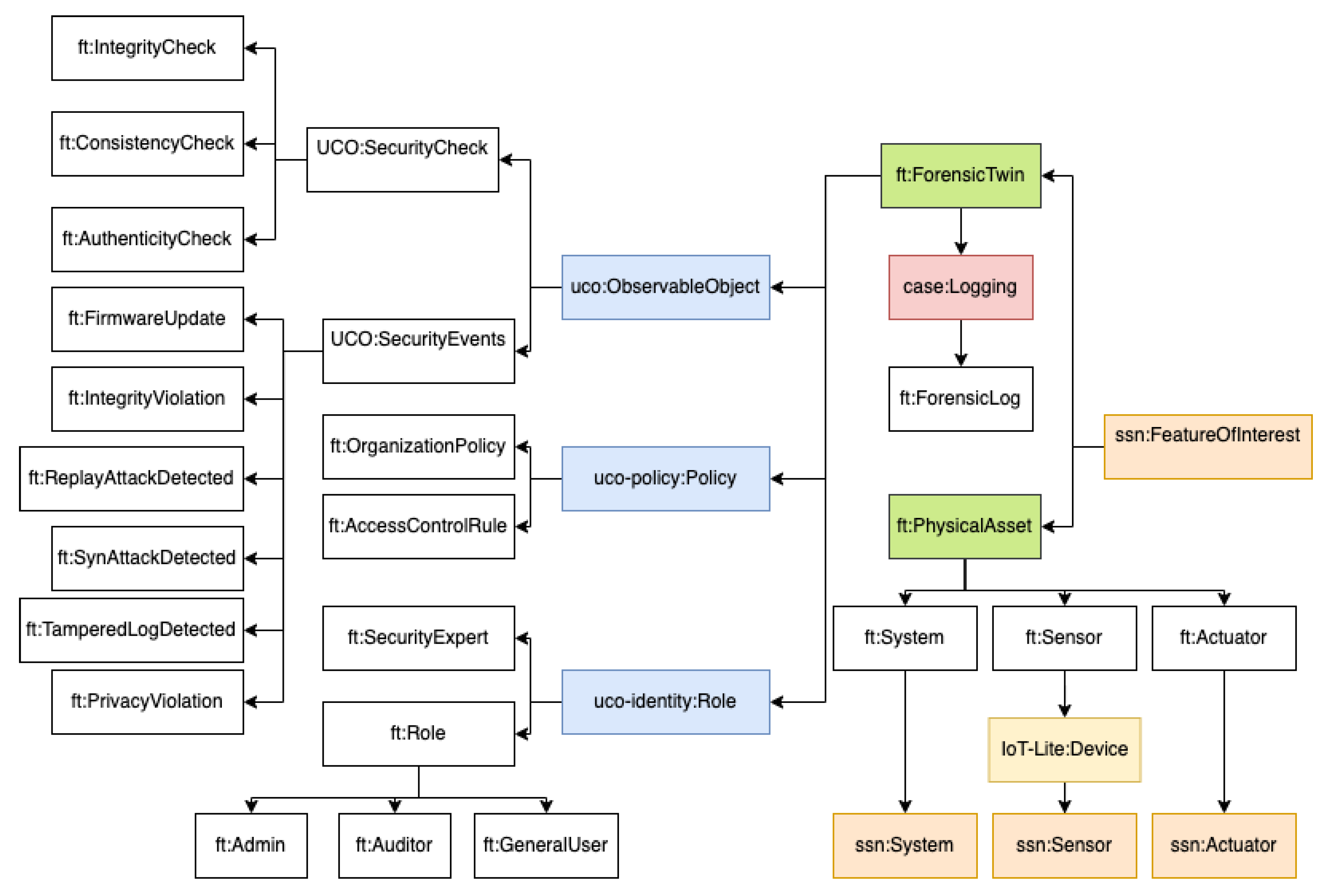

Figure 2 depicts the main proposed and imported classes of the ForensicTwin ontology.

Table 2 lists the selected standards and research efforts and how each was tailored. It also shows the newly proposed modules and the extensions to existing standards.

5.2. ForensicTwin Ontology: New Classes and Properties

Existing ontologies (CASE/UCO) focus on cybercrime investigation but lack Digital Twin modeling. Likewise, SSN and IoT-lite focus on sensors but do not enforce authenticity, integrity, or forensic security. To support the ForensicTwin, new classes and properties are needed for real-time monitoring, forensic integrity, and governance policies.

Mainly, we added the following classes:

ForensicTwin represents the virtual counterpart of a physical asset. More specifically, we map ForensicTwin as a subclass of because represents any entity or phenomenon that can be observed, measured, or analyzed by a sensor system.

PhysicalAsset represents the real-world system or device. We use ssn:FeatureOfInterest (SSN) to represent the real-world system being monitored.

SecurityCheck is used for security verifications.

AuthenticityCheck is a subclass of that verifies the authenticity of data/events.

IntegrityCheck is a subclass of that ensures state consistency between physical and Digital Twins.

ConsistencyCheck is a subclass of which checks if real-world changes are reflected in the Digital Twin.

SecurityEvent is used to capture security incidents in the system. We use the uco-observable:ObservableObject (UCO) to map general event tracking.

ForensicLog is used to store immutable forensic records with hash chaining. We use the case:Logging (CASE) to map logging mechanisms for forensic investigations.

OrganizationPolicy is used to define external policies for governance. We use uco-policy:Policy (UCO) to map policy definitions in UCO.

SecurityExpert is defined to represent authorized personnel responsible for updates. We use uco-identity:Role (UCO) to represent personnel authorized to make security decisions.

The newly proposed properties are

hasTimestamp (SecurityEvent → float): ensures event records have timestamps.

hasSignature (SecurityEvent → string): stores cryptographic signatures for authenticity.

isEncrypted (SecurityEvent → boolean): tracks the encryption status of an event.

hasStateHash (DigitalTwin → string): stores the hashed state of the Digital Twin.

expectedStateHash (DigitalTwin → string): represents the expected correct hash.

observedStateHash (PhysicalAsset → string): captures the actual observed state hash.

triggers (PhysicalAsset → SecurityEvent): links a physical asset to a triggered security event.

hasPreviousHash (ForensicLog → string): supports hash chaining for forensic logs.

hasLogData (ForensicLog → string): stores data recorded in forensic logs.

allowsUpdate (OrganizationPolicy → SecurityExpert): specifies who is authorized to update devices.

updatesDevice (SecurityExpert → PhysicalAsset): defines the relationship between security experts and the assets they can update.
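As a reading aid only, the domains and ranges listed above can be mirrored as Python dataclasses, where each field stands for one property and its type for the property's range. This is a hypothetical illustration: the ontology itself is an OWL model, and the snake_case field names are our own spellings of the property names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SecurityEvent:
    has_timestamp: float   # hasTimestamp (SecurityEvent -> float)
    has_signature: str     # hasSignature (SecurityEvent -> string)
    is_encrypted: bool     # isEncrypted (SecurityEvent -> boolean)


@dataclass
class PhysicalAsset:
    name: str
    observed_state_hash: str  # observedStateHash (PhysicalAsset -> string)
    triggers: List[SecurityEvent] = field(default_factory=list)  # triggers (PhysicalAsset -> SecurityEvent)


@dataclass
class DigitalTwin:
    has_state_hash: str       # hasStateHash (DigitalTwin -> string)
    expected_state_hash: str  # expectedStateHash (DigitalTwin -> string)


@dataclass
class ForensicLog:
    has_log_data: str       # hasLogData (ForensicLog -> string)
    has_previous_hash: str  # hasPreviousHash (ForensicLog -> string)


@dataclass
class SecurityExpert:
    name: str
    updates_device: List[PhysicalAsset] = field(default_factory=list)  # updatesDevice


@dataclass
class OrganizationPolicy:
    allows_update: List[SecurityExpert] = field(default_factory=list)  # allowsUpdate


# Example instance graph: a policy authorizes an expert who may update one PLC.
plc = PhysicalAsset("PLC-1", observed_state_hash="9f2c")
plc.triggers.append(SecurityEvent(has_timestamp=1.0, has_signature="sig", is_encrypted=True))
expert = SecurityExpert("alice", updates_device=[plc])
policy = OrganizationPolicy(allows_update=[expert])
```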

The ForensicTwin ontology combines UCO, CASE, SSN, and IoT-lite to provide a forensics-aware Digital Twin for multiple domains, such as industrial control systems (e.g., a PLC). SSN and IoT-lite define physical assets and IoT devices, which allows us to model physical assets with timestamps, encryption, and signatures for data authenticity. UCO and CASE enable forensic analysis by structuring security events, forensic logs, and evidence collection, which ensures that every event is logged, hashed, and encrypted to prevent tampering. For example, when a PLC firmware update occurs, SSN and IoT-lite record its state change, while UCO and CASE log the event’s timestamp, digital signature, and execution trace. If an attacker attempts a replay attack (reusing an old firmware update), the defined SWRL rules automatically detect duplicate hashes and timestamps within a short time window. Similarly, we detect a synchronization attack, in which an attacker delays updates to the Digital Twin, by using SWRL rules to compare the PLC’s real-time state (SSN) with the expected state in the Digital Twin. This ontology ensures a trustworthy, verifiable, and tamper-evident representation of a Digital Twin system, enabling robust forensic investigation and attack detection.

7. Validation and Performance Study

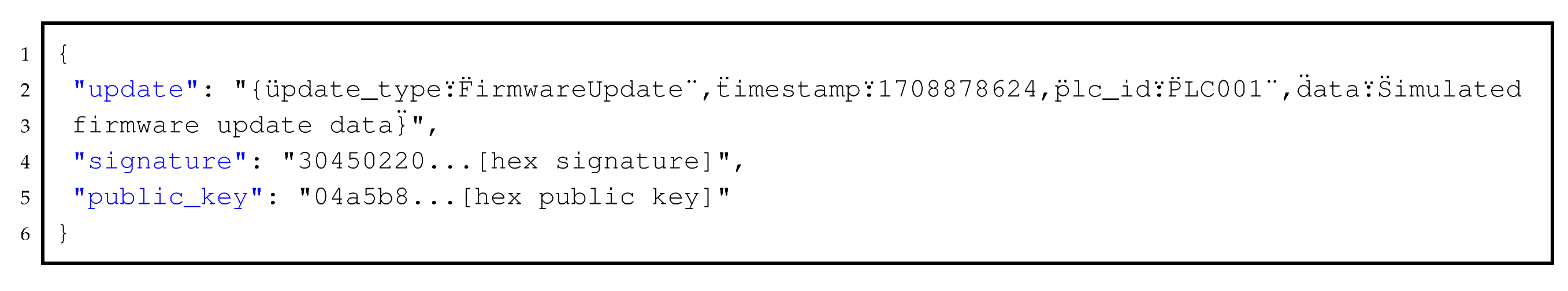

In this section, we implement the proposed architecture and test its resistance against the attacks defined in the adversary model. Our validation has two parts: first, a simulated industrial use case validates resistance against attacks on the authenticity forensic requirement; second, SWRL rules and SPARQL queries are run to detect forensic violations and extract relevant evidence.

7.1. Used Tools

We integrate multiple tools to ensure secure execution, anomaly detection, real-time monitoring, and forensic log integrity during the simulation of the Digital Twin system. We use ECDSA (the Python ecdsa package) for firmware signing and verification, which blocks unauthorized updates. We use SHA-256 hashing (hashlib) to build hash chains that make replayed messages and log tampering detectable. We use Docker for containerized deployment to enhance portability and secure execution across different environments. We implement the ontology using owlready2 (a Python library), which supports SWRL rules and SPARQL queries.

Table 3 summarizes the different tools and techniques used and their purpose.

For clarity and ease of understanding, we present pseudocode rather than Python code snippets.

7.2. Use Case: Detecting a Firmware Injection Attack

We simulate a firmware injection attack by injecting malicious firmware into a Programmable Logic Controller (PLC). This can result in a system compromise and production disruption.

We assume that the attacker can monitor the firmware update process between the Digital Twin and the PLC (physical twin) and identifies a weakness in integrity verification. Through a phishing email, the attacker gains administrative access. Next, they inject malicious firmware by modifying a legitimate update and exploiting state synchronization vulnerabilities. To evade detection, they replay old update messages. Once deployed, the malicious firmware can disable security mechanisms, alter control logic, and send false operational data to the Digital Twin, which may cause industrial disruptions.

7.2.1. Secure Firmware Signing

We prevent unauthorized firmware updates, which threaten message authenticity, by deploying digital signatures. Signatures ensure that a firmware update comes from a trusted source, while nonces and hash chaining prevent the reuse of old messages.

We therefore implement a forensic security mechanism that uses cryptographic authentication to secure firmware signing, through a simple Python script that can sign any message. Python offers a wide range of security libraries that are useful for signing and securing messages.

The pseudocode in Algorithm 1 prevents unauthorized firmware modifications by verifying the authenticity of updates before execution.

Algorithm 1 Firmware signing and verification using Python ECDSA

    Generate key pair
    sk ← SigningKey.generate(curve=NIST256p)           ▹ Generate private key
    vk ← sk.get_verifying_key()                         ▹ Extract public key
    function sign_firmware(firmware)
        Input: Firmware code as a string
        Output: Digital signature
        return sk.sign(encode(firmware))
    end function
    function verify_firmware(firmware, signature)
        Input: Firmware code and its digital signature
        Output: Boolean (True if verification succeeds, False otherwise)
        if vk.verify(signature, encode(firmware)) succeeds then return True
        else return False                               ▹ ecdsa raises BadSignatureError on failure
    end function
    Example usage
    firmware ← firmware code string                     ▹ Define firmware code
    signature ← sign_firmware(firmware)                 ▹ Sign the firmware
    assert verify_firmware(firmware, signature)         ▹ Verify the signature

7.2.2. Preventing Replay Attacks Using Nonces

We use nonces to detect replay attacks in a firmware update system. Briefly, if the new nonce matches the previous one, this indicates a replay attack and an alert is triggered; otherwise, the new nonce is stored as the previous nonce for future verification.

The pseudocode in Algorithm 2 rejects any message whose nonce equals that of the previously accepted message, which flags an immediately replayed update.

Algorithm 2 Replay attack detection using nonces

    Global variable: previous_nonce ← None              ▹ Stores the last used nonce
    function generate_nonce()
        Output: A unique nonce
        return a fresh random value
    end function
    function verify_nonce(new_nonce)
        Input: A new nonce to verify
        Output: Boolean (True if valid, False if replay attack detected)
        if new_nonce = previous_nonce then
            print "[ALERT] Replay Attack Detected!"
            return False
        end if
        previous_nonce ← new_nonce                      ▹ Update the previous nonce
        return True
    end function
    Simulated firmware update
    nonce ← generate_nonce()                            ▹ Generate a valid nonce
    replayed_nonce ← nonce                              ▹ Simulate a replayed nonce
    print verify_nonce(nonce)                           ▹ Should return True
    print verify_nonce(replayed_nonce)                  ▹ Should trigger replay alert
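Comparing a nonce only with the immediately preceding one misses a replay of any older message once a newer one has been accepted. A minimal Python sketch of a variant that remembers every accepted nonce is shown below; the class name `NonceRegistry` is hypothetical, and `secrets.token_hex` stands in for whatever nonce generator the implementation uses.

```python
import secrets


class NonceRegistry:
    """Rejects any nonce seen before, not only the most recent one."""

    def __init__(self) -> None:
        self._seen = set()  # every nonce accepted so far

    @staticmethod
    def generate() -> str:
        # 128 bits from the OS CSPRNG; accidental collisions are negligible at this size.
        return secrets.token_hex(16)

    def verify(self, nonce: str) -> bool:
        if nonce in self._seen:
            print("[ALERT] Replay Attack Detected!")
            return False
        self._seen.add(nonce)
        return True


registry = NonceRegistry()
first, second = registry.generate(), registry.generate()
print(registry.verify(first))   # True
print(registry.verify(second))  # True
print(registry.verify(first))   # prints the alert, then False
```

The last call is accepted by a last-nonce-only check, because the previous nonce is by then `second`. The trade-off is memory: the set grows without bound, so a deployment would typically pair each nonce with a timestamp and forget nonces older than an acceptance window.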

7.2.3. Hash Chaining for Secure Synchronization

Hash chaining ensures that each synchronization message is linked to the previous one, preventing attackers from reusing old messages (replay attacks).

The pseudocode in Algorithm 3 makes any modification of chained messages and logs detectable.

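Algorithm 3 SHA-256 hash chaining for replay attack prevention

    Initialize: previous_hash ← initial (genesis) hash value
    function compute_hash(message, prev_hash)
        message_str ← serialize(message) with sorted keys   ▹ Ensure consistency
        data ← prev_hash ∥ message_str
        h ← SHA-256(data)
        return hex(h)
    end function
    function send_message(message)
        Global previous_hash
        h ← compute_hash(message, previous_hash)
        packet ← (message, previous_hash, h)
        previous_hash ← h
        return packet
    end function
    function verify_message(packet)
        expected ← compute_hash(packet.message, packet.prev_hash)
        return expected = packet.hash
    end function
    Example usage:
    packet ← send_message(message)
    tampered ← packet with a modified message
    verify_message(packet)                              ▹ Should be True
    verify_message(tampered)                            ▹ Should be False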
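Checking a message's hash alone confirms integrity; to reject a replayed message, the receiver must also check that the message links to the last hash it accepted. A minimal Python sketch of both sides, assuming JSON-serializable messages and an all-zero genesis value (the `Sender`/`Receiver` classes and packet fields are hypothetical):

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed initial chain value


def compute_hash(message: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys, fixed separators) so both sides hash identical bytes.
    payload = json.dumps(message, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class Sender:
    def __init__(self) -> None:
        self.head = GENESIS  # hash of the last message sent

    def send(self, message: dict) -> dict:
        packet = {"message": message, "prev_hash": self.head,
                  "hash": compute_hash(message, self.head)}
        self.head = packet["hash"]
        return packet


class Receiver:
    def __init__(self) -> None:
        self.head = GENESIS  # hash of the last message accepted

    def accept(self, packet: dict) -> bool:
        # A replayed or out-of-order packet does not link to the current head.
        if packet["prev_hash"] != self.head:
            return False
        # A modified message no longer matches its hash.
        if compute_hash(packet["message"], packet["prev_hash"]) != packet["hash"]:
            return False
        self.head = packet["hash"]
        return True


tx, rx = Sender(), Receiver()
p1 = tx.send({"plc": "PLC-1", "state": "RUN"})
p2 = tx.send({"plc": "PLC-1", "state": "UPDATE"})
print(rx.accept(p1), rx.accept(p2), rx.accept(p1))  # True True False
```

Because plain SHA-256 is unkeyed, an attacker positioned on the channel could compute a valid link for a forged message; hash chaining therefore complements, rather than replaces, the ECDSA signature of Algorithm 1.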

7.3. Detecting ForensicTwin Attacks Using SWRL Rules

SWRL rules enhance the forensic capability of Digital Twins by automatically detecting forensic and security violations in real time. In our ontology, SWRL rules identify authenticity attacks by verifying whether firmware updates originate from authorized security experts, replay attacks by detecting repeated update hashes within a short interval, synchronization attacks by comparing timestamps between the physical twin and the Digital Twin, and integrity violations by checking for mismatched state hashes. In addition, forensic logs are secured using hash chaining, which exposes tampering attempts when a log’s previous hash does not match the recorded state. These rules allow security incidents within the Digital Twin to be detected automatically, enabling timely alerts and the collection of admissible evidence for investigations.

7.3.1. Rule 1: AuthenticityCheck

The AuthenticityCheck rule checks if a firmware update event is triggered by an unauthorized user (not a security expert). If the user is not listed in the organization’s policy as allowed to perform updates, the event is flagged as requiring an authenticity check.

    ft:FirmwareUpdate(?update), ft:triggers(?update, ?plc), uco-identity:hasRole(?user, ?role),
    FILTER NOT EXISTS { ?policy ft:hasAccess ft:SecurityExpert }
    -> ft:AuthenticityCheck(?update).

The authenticity check rule is triggered when a firmware update event is launched (ft:FirmwareUpdate(?update)) and the firmware is related to a PLC device (ft:triggers(?update, ?plc)). The atom uco-identity:hasRole(?user, ?role) defines the role of the user, and the FILTER NOT EXISTS { ?policy ft:hasAccess ft:SecurityExpert } condition excludes any scenario where a specific policy (?policy) gives access to a user with the role SecurityExpert; if such a policy exists, the rule does not apply. Finally, if these conditions are met, the rule asserts ft:AuthenticityCheck(?update), stating that an authenticity check should be performed on the firmware update (?update).

7.3.2. Rule 2: ReplayAttackDetected

The ReplayAttackDetected rule detects replay attacks by identifying two firmware update events with the same cryptographic signature within a short timeframe. If the timestamps of the two events are very close, it indicates a potential replay attack.

    ft:FirmwareUpdate(?update1), ft:triggers(?update1, ?plc), ft:hasSignature(?update1, ?hash),
    ft:FirmwareUpdate(?update2), ft:triggers(?update2, ?plc), ft:hasSignature(?update2, ?hash),
    ft:hasTimestamp(?update1, ?t1), ft:hasTimestamp(?update2, ?t2),
    FILTER (?update1 != ?update2 && abs(?t1 - ?t2) < 10)
    -> ft:ReplayAttackDetected(?plc).
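To make the semantics of this rule concrete, its condition can be restated in plain Python. This is a sketch under stated assumptions: timestamps are taken to be in seconds (the rule's threshold of 10 carries no unit in the text), and the `FirmwareUpdate` record and `replay_pairs` function are hypothetical names, not part of the ontology.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class FirmwareUpdate:
    event_id: str
    plc: str          # asset linked by ft:triggers
    signature: str    # ft:hasSignature
    timestamp: float  # ft:hasTimestamp, assumed to be in seconds


def replay_pairs(updates: List[FirmwareUpdate], window: float = 10.0) -> List[Tuple[str, str, str]]:
    """(plc, first_id, second_id) for distinct updates to the same PLC that carry
    the same signature and whose timestamps differ by less than `window`."""
    groups: Dict[Tuple[str, str], List[FirmwareUpdate]] = defaultdict(list)
    for u in updates:
        groups[(u.plc, u.signature)].append(u)  # only same-PLC, same-signature pairs can match
    hits = []
    for (plc, _signature), group in groups.items():
        for a, b in combinations(group, 2):  # each unordered pair exactly once
            if abs(a.timestamp - b.timestamp) < window:
                hits.append((plc, a.event_id, b.event_id))
    return hits


updates = [
    FirmwareUpdate("u1", "PLC-1", "sig-A", 100.0),
    FirmwareUpdate("u2", "PLC-1", "sig-A", 104.0),  # same signature 4 s later
    FirmwareUpdate("u3", "PLC-1", "sig-A", 300.0),  # same signature, outside the window
    FirmwareUpdate("u4", "PLC-2", "sig-A", 101.0),  # different PLC
]
print(replay_pairs(updates))  # [('PLC-1', 'u1', 'u2')]
```

Grouping by (PLC, signature) first means only candidate pairs are compared, and `combinations` reports each pair once, whereas a symmetric pattern such as Query 2 returns every match twice, as (?update1, ?update2) and (?update2, ?update1).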

7.3.3. Rule 3: SyncAttackDetected

The SyncAttackDetected rule identifies synchronization attacks by comparing the last update timestamps of a PLC and its corresponding ForensicTwin. If the ForensicTwin’s timestamp lags behind the PLC’s, it signals a synchronization attack.

    ft:PLC(?plc), ft:hasTimestamp(?plc, ?lastPhysicalUpdate),
    ft:ForensicTwin(?twin), ft:hasTimestamp(?twin, ?lastTwinUpdate),
    FILTER (?lastTwinUpdate < ?lastPhysicalUpdate)
    -> ft:SyncAttackDetected(?plc).

7.3.4. Rule 4: IntegrityViolation

The IntegrityViolation rule detects integrity violations by comparing the expected and observed state hashes of a PLC. If the hashes do not match, it indicates that the PLC’s state has been tampered with.

    ft:PLC(?plc), ft:expectedStateHash(?plc, ?expectedHash),
    ft:observedStateHash(?plc, ?observedHash),
    FILTER (?expectedHash != ?observedHash)
    -> ft:IntegrityViolation(?plc).

7.3.5. Rule 5: TamperedLogDetected

The TamperedLogDetected rule ensures the integrity of forensic logs by verifying hash chaining. If the hash of a log entry does not match the previous hash in the chain, it indicates tampering or missing logs.

    ft:ForensicLog(?log1), ft:hasStateHash(?log1, ?hash1),
    ft:ForensicLog(?log2), ft:hasPreviousHash(?log2, ?prevHash),
    FILTER (?hash1 != ?prevHash)
    -> ft:TamperedLogDetected(?log1).

7.3.6. Rule 6: PrivacyViolation

The PrivacyViolation rule detects privacy violations by checking if a forensic log is accessed by a user who is not authorized by any policy. If unauthorized access is detected, it flags a privacy violation.

    ft:ForensicLog(?log),
    ft:hasLogData(?log, ?logData),
    ft:accessedBy(?log, ?user),
    uco-identity:hasRole(?user, ?role),
    FILTER NOT EXISTS { ?policy ft:grantsAccess ?user }
    -> ft:PrivacyViolation(?log).
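Rules 1 and 6 hinge on a negated condition (FILTER NOT EXISTS). SWRL, like OWL, is monotonic and has no negation as failure, so such a condition has to be evaluated under a closed-world assumption, for example by a SPARQL query or in application code. The plain-Python sketch below shows that closed-world reading for Rule 6; the `Access` record, the representation of each policy as a set of user names, and the function name are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class Access:
    log_id: str  # the forensic log that was read
    user: str    # who read it (ft:accessedBy)


def privacy_violations(accesses: Iterable[Access], policy_grants: List[Set[str]]) -> List[str]:
    """Log ids read by a user whom no policy grants access (Rule 6's NOT EXISTS).

    Closed-world reading: a user absent from every policy is treated as
    unauthorized, rather than as unknown, which is how an OWL reasoner would see it.
    """
    granted = set().union(*policy_grants)  # users granted by at least one policy
    return sorted({a.log_id for a in accesses if a.user not in granted})


policies = [{"alice"}, {"bob", "carol"}]
accesses = [Access("log-1", "alice"), Access("log-2", "mallory"), Access("log-3", "mallory")]
print(privacy_violations(accesses, policies))  # ['log-2', 'log-3']
```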

7.4. Forensic Analysis Using SPARQL Queries

SPARQL queries are used to retrieve forensic evidence from the ForensicTwin ontology, enabling investigators to trace security events, validate data integrity, and identify attack patterns. Queries can filter unauthorized firmware updates by checking whether a security expert issued the update, detect replay attacks by extracting multiple firmware updates with identical signatures within a short period, and identify tampered logs by comparing stored and recalculated hash values. Furthermore, investigators can correlate timestamps between physical assets and their digital representations to uncover synchronization attacks. By structuring forensic investigations through precise, rule-based queries, SPARQL provides an efficient and sound method for verifying authenticity, integrity, and security compliance within the Digital Twin framework.

7.4.1. Query 1: Detect Unauthorized PLC Firmware Updates

The Detect Unauthorized PLC Firmware Updates query identifies firmware update attempts made by unauthorized users. It retrieves the PLC, the user who attempted the update, and the timestamp, keeping only users whom no organization policy allows to perform updates.

    PREFIX ft: <http://github.com/amakremi/forensictwin#>
    PREFIX uco: <https://ontology.unifiedcyberontology.org/uco/>
    PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

    SELECT ?plc ?attemptedBy ?timestamp
    WHERE {
      ?plc rdf:type ft:PLC .
      ?updateEvent rdf:type ft:FirmwareUpdate .
      ?updateEvent ft:triggers ?plc .
      ?updateEvent ft:hasTimestamp ?timestamp .
      ?updateEvent ft:hasSignature ?signature .
      ?attemptedBy rdf:type ?role .
      FILTER NOT EXISTS {
        ?policy rdf:type ft:OrganizationPolicy .
        ?policy ft:allowsUpdate ?attemptedBy .
      }
    }

7.4.2. Query 2: Identify Replay Attacks on PLC Firmware

The Identify Replay Attacks on PLC Firmware query detects replay attacks by finding two firmware update events with the same cryptographic signature and timestamps within a short timeframe. It returns the events, timestamps, and the affected PLC.

    PREFIX ft: <http://github.com/amakremi/forensictwin#>
    PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

    SELECT ?update1 ?update2 ?timestamp1 ?timestamp2 ?plc
    WHERE {
      ?update1 rdf:type ft:FirmwareUpdate .
      ?update2 rdf:type ft:FirmwareUpdate .
      ?update1 ft:triggers ?plc .
      ?update2 ft:triggers ?plc .
      ?update1 ft:hasSignature ?hash .
      ?update2 ft:hasSignature ?hash .
      ?update1 ft:hasTimestamp ?timestamp1 .
      ?update2 ft:hasTimestamp ?timestamp2 .
      FILTER (?update1 != ?update2 && ABS(?timestamp1 - ?timestamp2) < 10)
    }

7.4.3. Query 3: Detect PLC Synchronization Attacks

The Detect PLC Synchronization Attacks query checks for synchronization attacks by comparing the last update timestamps of a PLC and its ForensicTwin. If the ForensicTwin’s timestamp is older than the PLC’s, it indicates a synchronization attack.

    PREFIX ft: <http://github.com/amakremi/forensictwin#>
    PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

    SELECT ?plc ?forensicTwin ?lastPhysicalUpdate ?lastTwinUpdate
    WHERE {
      ?plc rdf:type ft:PLC .
      ?plc ft:hasTimestamp ?lastPhysicalUpdate .
      ?forensicTwin rdf:type ft:ForensicTwin .
      ?forensicTwin ft:hasTimestamp ?lastTwinUpdate .
      FILTER (?lastTwinUpdate < ?lastPhysicalUpdate)
    }

7.4.4. Query 4: Verify PLC State Integrity After an Attack

The Verify PLC State Integrity After an Attack query verifies the integrity of a PLC’s state by comparing its expected and observed state hashes. If the hashes do not match, it indicates tampering or an integrity violation.

    PREFIX ft: <http://github.com/amakremi/forensictwin#>
    PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

    SELECT ?plc ?expectedHash ?observedHash
    WHERE {
      ?plc rdf:type ft:PLC .
      ?plc ft:expectedStateHash ?expectedHash .
      ?plc ft:observedStateHash ?observedHash .
      FILTER (?expectedHash != ?observedHash)
    }

7.4.5. Query 5: Detect Tampered Forensic Logs (PLC Security Events)

The Detect Tampered Forensic Logs query ensures the integrity of forensic logs by checking if the hash of a log entry matches the previous hash in the chain. If a mismatch is found, it indicates logs that were tampered with or missing.

    PREFIX ft: <http://github.com/amakremi/forensictwin#>
    PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

    SELECT ?logEntry1 ?logEntry2 ?hash1 ?prevHash
    WHERE {
      ?logEntry1 rdf:type ft:ForensicLog .
      ?logEntry2 rdf:type ft:ForensicLog .
      ?logEntry1 ft:hasStateHash ?hash1 .
      ?logEntry2 ft:hasPreviousHash ?prevHash .
      FILTER (?hash1 != ?prevHash)
    }

7.5. Performance Study

Due to limited resources and a lack of permission to test the approach in a real setting, we programmatically simulated the scenario described in Section 7.2, using one Docker image to simulate a PLC device and another to simulate the Digital Twin, which manipulates the ontology and verifies transactions. A third Docker image measures network performance. We mainly focus on simulating a PLC that sends signed updates through an MQTT broker, with verification and monitoring components to analyze the system’s performance. The architecture consists of the following components:

MQTT Broker—Using Eclipse Mosquitto (2.0.15) for message communication.

PLC Simulator—Simulates a PLC device sending secure, signed updates.

Ontology Verifier—Verifies the signed messages and analyzes system performance.

Network Latency Monitor—Measures communication latency.

The simulation focuses on testing and measuring several key features:

Cryptographic Verification—Using ECDSA (Elliptic Curve Digital Signature Algorithm) with NIST P-256 curve for signing and verifying messages.

Performance Metrics: CPU usage during verification operations, memory usage, end-to-end latency, and cryptographic overhead (time spent on signature verification).

Message Authentication—Ensuring messages from the PLC are authentic and unmodified.

Network Communication—Testing message delivery through the MQTT broker.
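The cryptographic-overhead and processing-latency metrics amount to timing one operation many times. A minimal harness is sketched below, assuming a zero-argument callable `op` that performs one verification. In the study this would wrap the ECDSA signature verification; a SHA-256 digest stands in here so that the sketch needs only the standard library. The function name `profile` and the reported fields are hypothetical.

```python
import hashlib
import statistics
import time
from typing import Callable, Dict


def profile(op: Callable[[], object], runs: int = 200) -> Dict[str, float]:
    """Time `op` `runs` times; report the cold first call apart from the warm samples."""
    if runs < 3:
        raise ValueError("need at least 3 runs: one cold call and two warm samples")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        op()
        samples.append(time.perf_counter() - start)
    warm = samples[1:]
    return {
        "first_s": samples[0],
        "median_s": statistics.median(warm),
        # quantiles(n=20) yields 19 cut points; the last is the 95th percentile.
        # "inclusive" interpolates within the observed range instead of extrapolating.
        "p95_s": statistics.quantiles(warm, n=20, method="inclusive")[-1],
    }


# Stand-in workload (hypothetical): hash a 1 KiB payload.
payload = b"\x00" * 1024
stats = profile(lambda: hashlib.sha256(payload).digest())
print({name: f"{value * 1e3:.3f} ms" for name, value in stats.items()})
```

Reporting the first sample separately keeps a one-off warm-up cost, such as the higher initial verification time reported in Section 7.5.2, from inflating the steady-state figure.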

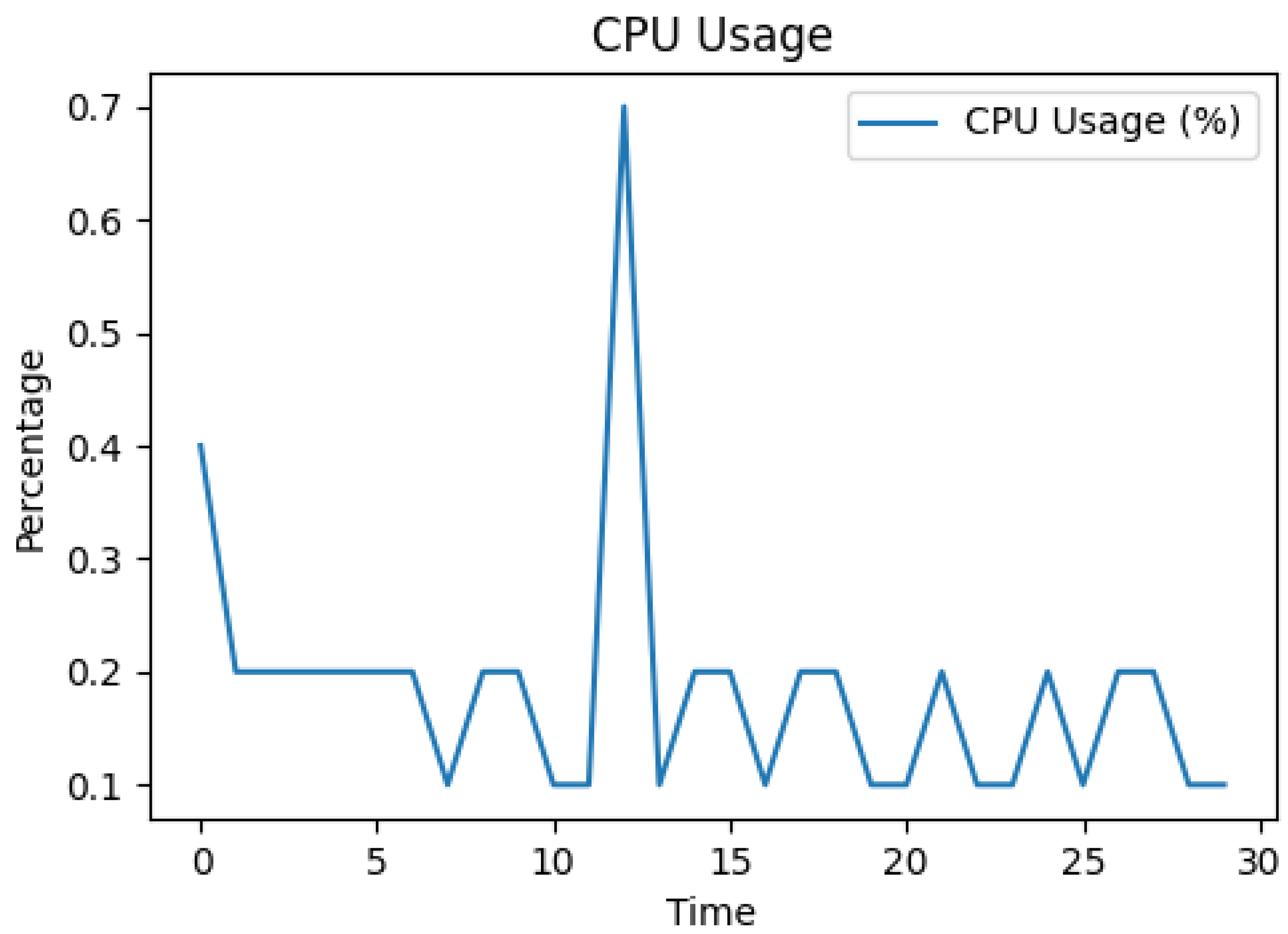

7.5.1. CPU Usage Analysis

Figure 3 depicts the CPU usage, which remains stable between 0.1% and 0.2% for most of the run. The minor fluctuations indicate low, stable consumption, with small spikes caused by received events such as firmware updates. Although the ontology Docker container is isolated, it is still affected by host events. The low and stable CPU usage suggests that the ForensicTwin approach would be feasible even on resource-constrained industrial systems.

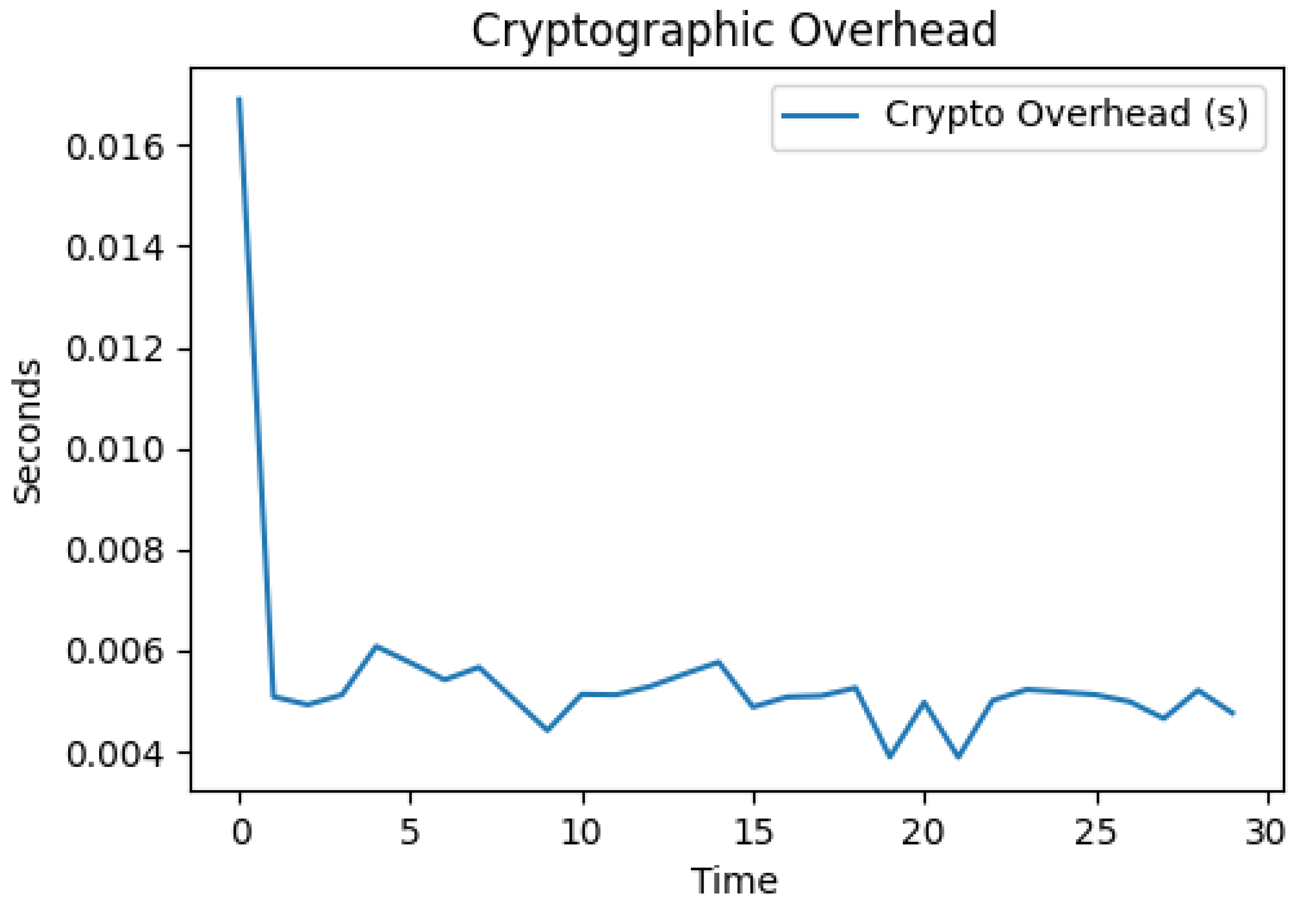

7.5.2. Cryptographic Overhead Analysis

As shown in Figure 4, the initial verification incurs a slightly higher overhead (0.017 s), which then stabilizes at approximately 0.005 s (5 ms) per verification. This low overhead for cryptographic operations is negligible in the context of industrial control systems, which operate on second or minute timescales [32]. The consistent timing suggests that the signature verification process is predictable and reliable.

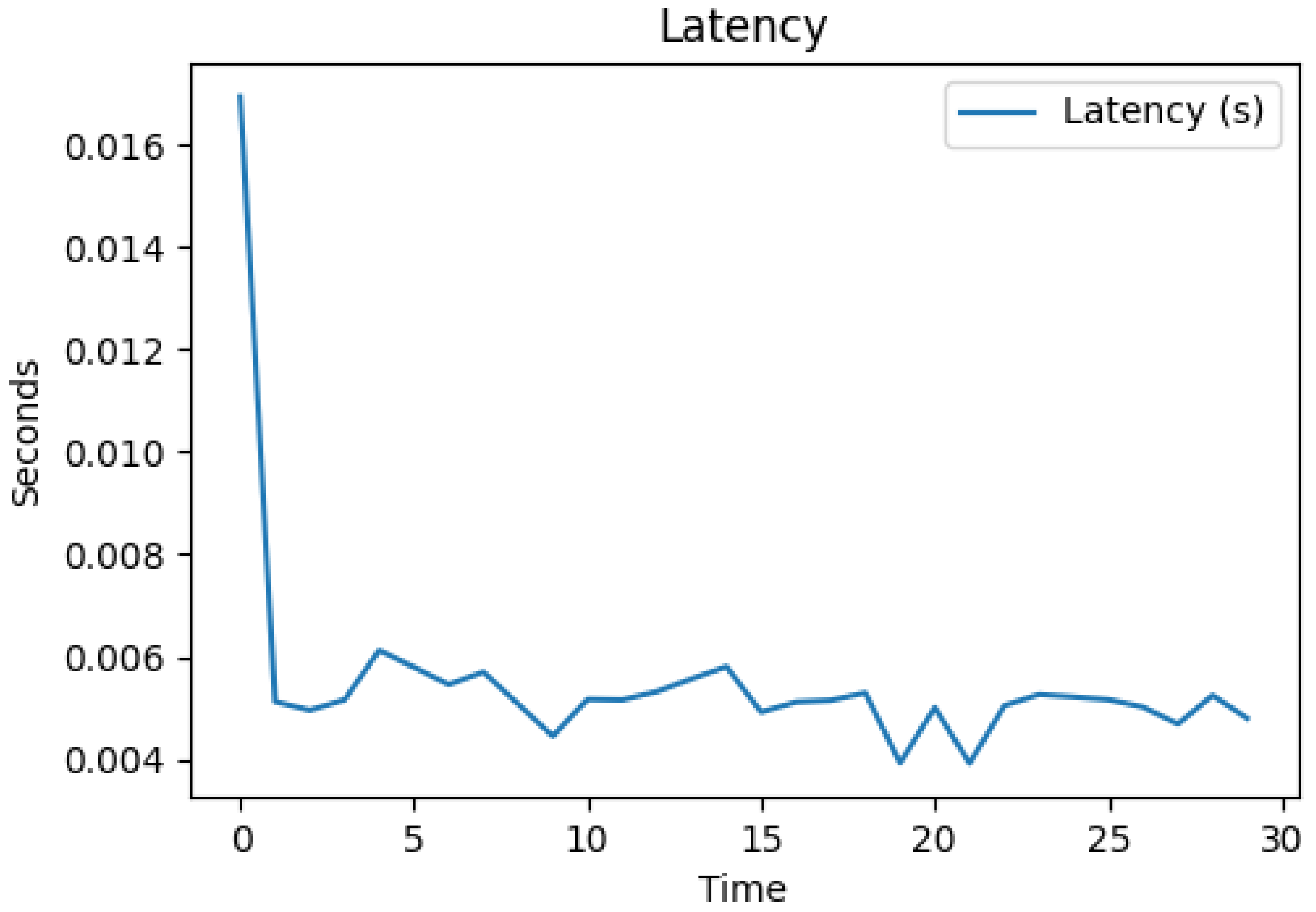

7.5.3. Processing Latency Analysis

The latency starts high at approximately 0.017 s and rapidly decreases in the first few seconds. It then fluctuates between approximately 0.004 and 0.006 s for most of the run, with minor variations but no significant spikes or drops after the initial decrease, as depicted in Figure 5. Notably, the processing latency pattern mirrors the cryptographic overhead, which indicates that cryptographic verification is the dominant factor in overall processing time.

7.5.4. Memory Usage Analysis

The memory usage depicted in Figure 6 starts at about 43.1% and remains stable for the first 10 min. It then drops suddenly to 42.4% around the 12-min mark, which coincides with the CPU spike seen in Figure 3. Memory usage then stabilizes at this lower level for the remainder of the test. The drop might indicate garbage collection or an optimization in the Python runtime.

7.5.5. Network Latency Analysis

In Figure 7, the network latency is initially very high, at around 0.95 s (950 ms). At about the 10-min mark, it drops dramatically to near zero (around 0.02 s), after which it gradually increases, reaching about 0.1 s by the end of the test. This pattern suggests an initial network configuration or connection establishment overhead, followed by optimization and then a gradual increase, likely due to queue buildup.

8. Discussion and Conclusions

The ForensicTwin approach shows high computational efficiency, with minimal CPU and memory overhead. Cryptographic verification adds only about 5 ms of overhead, which is negligible for most industrial applications [32]. Given the minimal resource utilization (both CPU and memory), the ForensicTwin approach appears to be highly scalable: the system could likely handle many more messages per second or support multiple PLCs without significant performance degradation, although this was not measured in our single-PLC simulation. These results show that implementing ECDSA digital signatures for message authentication adds minimal overhead, suggesting little performance penalty for the added security benefit. The dramatic improvement in network latency after the 10-min mark suggests opportunities for further optimization in how MQTT connections are established and maintained.

In conclusion, the performance metrics validate that the ForensicTwin approach is viable from a performance standpoint, with minimal CPU, memory, and processing overhead. The most significant performance consideration would be network optimization, especially during the initial setup phase. The spikes and drops observed might be related to specific events or processes occurring within the system during the measurement period. The system demonstrates stable, predictable performance after initialization, which is essential for industrial applications where reliability is paramount.

Overall, our proposed architecture addresses all forensic requirements while maintaining high performance and low resource usage. The use of ontologies that can be extended to cover different device and software types enhances usability, adaptability, and support for each organization’s specifications. The architecture also considers organizational and national regulations to ensure the admissibility of the collected evidence. The ForensicTwin ontology enables the automatic detection of forensic requirement breaches through SWRL rules, which can be extended and refined according to the organization’s business needs and specifications.

However, the main limitation is that the approach has not yet been applied to a real scenario with multiple devices, due to resource limitations and a lack of permission from organizations to test our architecture (even the most similar work does not provide a real application of its approach [3]). In the future, we aim to secure funding to build a large, real-world Digital Twin system based on the proposed approach, which would allow a more extensive experimental industrial deployment of the model and more precise measurements. We also aim to explore AI techniques that reconstitute missing metadata during data preprocessing in the data layer, to complete the full set of information required for proper record creation. Our architecture uses ontology features to verify missing information; however, it would be beneficial if AI techniques could regenerate missing information and help automatically store DT archives. Finally, we will enrich the ontology with new forensic metrics [33] that help train AI models on quantitative values reflecting evidence admissibility according to country regulations.