1. Introduction

Sinusitis refers to inflammation or swelling of the tissues lining the paranasal sinuses. It is a common medical condition that affects a significant proportion of the population worldwide [1], contributing to lost productivity and to a substantial socio-economic burden through healthcare consumption. According to a 2018 estimate by EUFOREA, 10% of the population in Europe suffered from chronic rhinosinusitis (CRS) [2]. In a survey conducted in the USA, around 14.7% of the participants were reported to have suffered from sinusitis [3]. A recent study [4] of 3602 participants from different regions of Saudi Arabia showed that 26.3% of individuals (75.1% being female) had been diagnosed with CRS. Common risk factors for sinusitis include upper respiratory infections, nasal blockage, allergies, asthma, a deviated septum, and a weakened immune system.

The term maxillary sinusitis refers to inflammation of the maxillary sinus, one of the paranasal sinuses. Located within the maxilla and adjacent to the nasal cavity, the maxillary sinus is the largest of the paranasal sinuses, and its anatomy is central both to maintaining sinus health and to understanding sinus-related pathologies [5]. Maxillary sinusitis can be classified as acute or chronic [6] depending on the clinical symptoms. Acute sinusitis lasts up to four weeks, with symptoms such as nasal congestion, purulent discharge, and facial pain. Chronic sinusitis, which can be caused by viruses, bacteria, or fungi, persists for more than 12 weeks and is marked by prolonged inflammation, nasal polyps, and recurrent infections.

For an accurate diagnosis of the maxillary sinusitis, physicians often rely on different imaging modalities, which include conventional radiography (X-rays) [

7], Magnetic Resonance Imaging (MRI) [

8], Computed Tomography (CT) [

9], ultrasound imaging [

10], and endoscopy [

11]. CT is the gold standard for diagnosing sinus diseases due to its high sensitivity and ability to detect soft and bone tissues, enabling early detection and prevention of serious maxillary sinusitis complications [

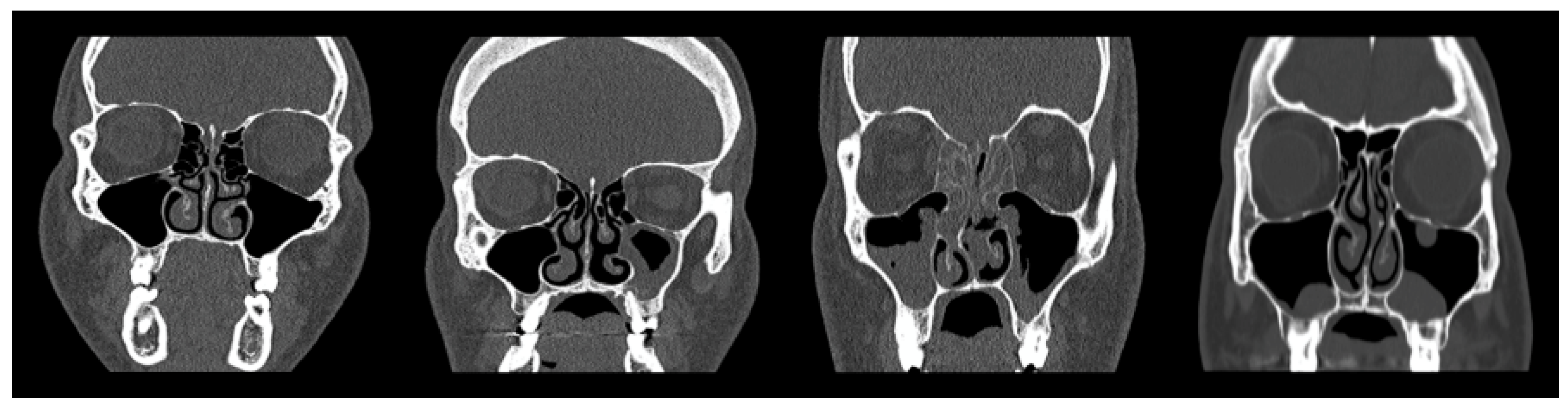

12]. Polyposis, retention cysts, mucosal thickening and air fluid levels are the types of CT findings on maxillary sinus (MS) images [

13]. However, the anatomical structure of the maxillary sinus area makes it challenging to distinguish these conditions. The similar appearance of retention cysts and opacified MS, or the minor mucosal thickening, can make it very difficult to accurately differentiate these conditions unless highly advanced image analysis is performed.

Diagnosis of maxillary sinusitis involves not only detecting the disease but also assessing its severity to guide proper treatment [5]. This is normally conducted manually by a skilled radiologist, which is time-consuming, laborious, and requires considerable expertise. Traditional machine learning (ML) models have therefore been deployed to automate this process and make it more efficient [14], assisting radiologists in diagnosing maxillary sinusitis accurately. Unfortunately, existing ML models can only use explicitly provided (hand-crafted) features and are incapable of extracting the implicit features present in raw imaging data [9,15,16]. Existing approaches have also been applied to different modalities, such as X-ray [17,18], MRI [8], ultrasound [10], and endoscopy [11]; however, CT imaging [8,9,19] is generally preferred owing to its better accuracy, sensitivity, and suitability for complex cases.

Deep learning (DL) approaches have recently gained popularity because they perform well at learning meaningful information and radiomic features [20]. In addition, image segmentation techniques have high potential to enhance sinusitis diagnosis by supporting effective detection [21,22]. Several deep learning-based approaches have been proposed for the diagnosis and analysis of sinusitis. A number of works have demonstrated the potential of deep learning for diagnosing maxillary sinusitis from radiographs and X-ray images [17,23,24]. Similarly, a number of methods have been proposed for the CT modality, using standard convolutional neural network (CNN) models [25] or adaptations of CNNs, e.g., 3D-CNN [26], Aux-MVNet [18], and SinusC-Net [27]. Recent years have witnessed increasing interest in vision transformers (ViT) for various medical imaging applications, with highly encouraging performance [28,29]; however, the use of ViT remains largely unexplored for the diagnosis and analysis of maxillary sinusitis.

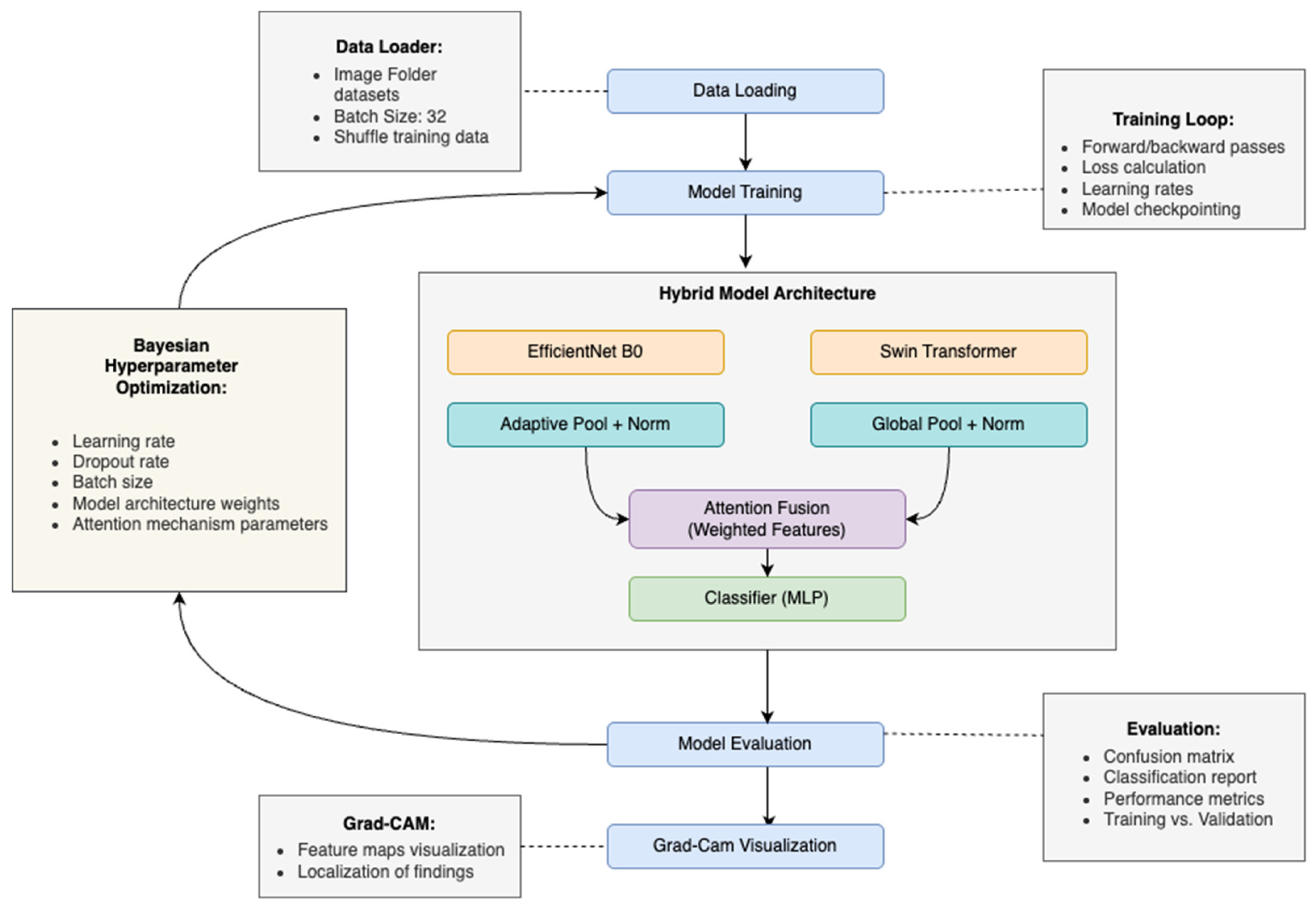

Hence, this paper proposes a hybrid deep learning framework combining convolutional neural networks (CNNs) and Swin Transformers, a type of vision transformer (ViT), to precisely classify maxillary sinus abnormalities from CT images. Although a few CNN-based models for sinus detection have been reported in recent years, the majority of these works employ a single model for classification. Additionally, the lack of explainability in many deep learning models poses a significant barrier to their clinical adoption, as radiologists often require transparent reasoning for model predictions. Accordingly, the main contributions of this study are as follows:

- i. Proposes a hybrid deep learning model combining CNN and Swin Transformer to improve the classification of maxillary sinus abnormalities from CT images.
- ii. Utilizes the strengths of CNNs for capturing local features and Swin Transformers for modeling long-range dependencies in sinus imaging.
- iii. Integrates an Explainable AI (XAI) technique, specifically Gradient-weighted Class Activation Mapping (Grad-CAM), to enhance transparency and interpretability of the model’s decisions.

The structure of this paper is organized as follows:

Section 2 presents the related work, highlighting existing approaches for maxillary sinus detection and classification using machine learning and deep learning techniques.

Section 3 describes the proposed methodology, including data collection, preprocessing, model architecture, training procedures, and hyperparameter tuning.

Section 4 details the experimental results and performance evaluation of the model.

Section 5 discusses the application of Grad-CAM to provide visual interpretability of model predictions.

Finally, Section 6 concludes the paper and outlines potential directions for future research.

2. Related Works

Several methods have been reported in the literature for automated detection of maxillary sinusitis, the majority relying on convolutional neural networks (CNNs). Jeon et al. [23] presented a CNN-based approach for the detection and classification of frontal, ethmoid, and maxillary sinusitis using Waters’ and Caldwell’s radiographs. Laura et al. [19] proposed an ensemble approach that combined the Darknet-19 deep neural network with YOLO for the detection of nasal sinuses and cavities in CT images. Kim et al. [17] developed an ensemble method built on multiple CNN models (VGG-16, VGG-19, ResNet-101) for the detection of sinusitis in X-ray images using majority voting, achieving encouraging results. Ozbay and Tunc [25] proposed a method that relied on thresholding-based CT image segmentation using Otsu’s method [30], followed by classification of sinus abnormalities with a CNN-based model, reporting promising performance; however, the small dataset led to generalization issues on diverse images.
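Ozbay and Tunc’s full pipeline is not reproduced here, but the thresholding step named above is simple to illustrate. The following is a minimal NumPy sketch of Otsu’s method, which picks the grey level that maximizes the between-class variance of the image histogram; the function name and the default bin count are illustrative assumptions. In practice, scikit-image provides an equivalent `skimage.filters.threshold_otsu`.

```python
import numpy as np

def otsu_threshold(image: np.ndarray, bins: int = 256) -> float:
    """Return an Otsu threshold t; foreground is then `image >= t`."""
    hist, edges = np.histogram(image.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    p = hist.astype(float) / hist.sum()   # normalized histogram
    omega = np.cumsum(p)                  # probability of the lower class for each cut
    mu = np.cumsum(p * centers)           # cumulative mean up to each cut
    mu_total = mu[-1]
    # Between-class variance for every candidate cut; cuts that leave one
    # class empty (denominator 0) get variance 0.
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    k = int(np.argmax(sigma_b))
    # Bins 0..k form the lower class, so the cut sits at the upper edge of bin k.
    return float(edges[k + 1])
```

A binary mask of the brighter region would then be `ct_slice >= otsu_threshold(ct_slice)`, where `ct_slice` is a hypothetical 2D array of CT intensities.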

Likewise, more sophisticated methods have employed advanced architectures for diagnosing and screening maxillary sinusitis. Lim et al. [18] presented an auxiliary classifier-based multi-view CNN model, called Aux-MVNet, aimed at localizing maxillary sinusitis and classifying its severity levels in X-ray images. Çelebi et al. [28] developed a Swin Transformer-based architecture for maxillary sinus detection in CBCT images that utilizes the window multi-head self-attention mechanism. Kuwana et al. [24] focused on the detection and classification of sinus lesions into healthy and inflamed categories using the DetectNet model with panoramic radiographs. Murata et al. [31] employed AlexNet for the diagnosis of maxillary sinusitis on a panoramic radiographic image dataset created using various data augmentation techniques. In addition, some studies have explored deep learning approaches for sinusitis screening using 3D volumetric datasets. Hwang et al. [27] introduced the SinusC-Net model, a 3D distance-guided network, for classifying surgical plans for maxillary sinus augmentation on CBCT images. Likewise, Bhattacharya et al. [26] demonstrated robust classification performance using a 3D CNN model with supervised contrastive loss to classify sinus volumes into normal and abnormal categories. These sophisticated methods improved performance across different imaging modalities; however, they failed to generalize in constrained environments.
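To make the window multi-head self-attention idea concrete, the sketch below shows, under simplifying assumptions, how a Swin-style block splits a feature map into non-overlapping windows, how the shifted-window variant cyclically rolls the map first, and how attention is then computed independently inside each window. This is an illustrative NumPy sketch, not the architecture of [28] or of the model proposed here: learned Q/K/V projections, multiple heads, relative position bias, and the attention mask used for shifted windows are all omitted.

```python
import numpy as np

def window_partition(x: np.ndarray, ws: int) -> np.ndarray:
    """Split an (H, W, C) feature map into non-overlapping ws x ws windows.

    Returns shape (num_windows, ws * ws, C): one token sequence per window.
    """
    h, w, c = x.shape
    assert h % ws == 0 and w % ws == 0, "H and W must be divisible by ws"
    x = x.reshape(h // ws, ws, w // ws, ws, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (rows of windows, cols of windows, ws, ws, C)
    return x.reshape(-1, ws * ws, c)

def shifted_window_partition(x: np.ndarray, ws: int) -> np.ndarray:
    """Cyclically shift by ws // 2 before partitioning, so the new windows
    straddle the borders of the previous ones (the 'shifted window' step)."""
    shift = ws // 2
    return window_partition(np.roll(x, shift=(-shift, -shift), axis=(0, 1)), ws)

def window_self_attention(tokens: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product self-attention, applied per window.

    Identity projections are used for Q, K and V to keep the sketch short.
    """
    d = tokens.shape[-1]
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ tokens  # same shape as the input tokens
```

Because attention is restricted to each window, cost grows linearly with image size; alternating regular and shifted windows across layers is what lets information propagate beyond a single window.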

Similarly, some approaches employ transfer learning, i.e., customized architectures built on top of pretrained models, to perform sinusitis diagnosis effectively and efficiently. Mori et al. [32] proposed one such method for robust detection and diagnosis of maxillary sinusitis on panoramic radiographs. Kotaki et al. [33] also investigated transfer learning to enhance the diagnosis of maxillary sinusitis with radiography. However, fine-tuning a pretrained model for maxillary sinusitis diagnosis still requires a significant volume of image data. Moreover, Altun et al. [34] developed a modified YOLOv5x architecture with transfer learning for automated segmentation of maxillary sinuses and associated pathologies in CBCT images, achieving high accuracy and precision, although the study’s small dataset of 307 images limits generalizability. Similarly, Bayrakdar et al. [35] employed the nnU-Net v2 model for automatic segmentation of maxillary sinuses in CBCT volumes, showing strong performance with a limited dataset and manual annotations. While the use of nnU-Net v2 is a strength, the small dataset and lack of external validation limit broader clinical applicability. An interesting work [36] demonstrated the use of Swin Transformers for maxillary sinus detection, but it employed cone beam computed tomography (CBCT), which differs from conventional CT, particularly in resolution and the types of images it produces. This limits its generalizability to standard CT-based sinusitis detection and highlights the need for further exploration of transformer-based models on conventional CT data to assess their viability in real-world clinical settings. Additionally, the study lacked explainability mechanisms, limiting its clinical transparency, and did not assess performance across different stages of the disease, which is important for reliable diagnostic support.

As reviewed above, significant advances have been made in automated medical image analysis [16], including sinusitis diagnosis and severity identification. Several deep learning-based architectures have been investigated for automated diagnosis and classification of maxillary sinusitis [26,27,31]. However, the potential of ViT architectures for maxillary sinus classification still needs to be explored, as these architectures are being extensively used for medical image analysis [28,29].

Table 1 presents a summary of related works, highlighting the need for hybrid architectures that leverage the strengths of both convolutional neural networks and transformer-based models to improve diagnostic accuracy, interpretability, and generalizability across diverse imaging modalities.

4. Results and Discussion

The performance of the model on the test dataset is evaluated using standard metrics, namely accuracy, loss, precision, recall, and F1-score, each of which is presented in this section. Accuracy is a general measure of a model’s performance, calculated as the proportion of correctly classified instances in the whole dataset. Precision reflects the ability of the model to minimize false positives; it is the ratio of correctly identified positives to the total number of predicted positives. Recall is the sensitivity of the model, i.e., the ratio of correctly identified positive cases to the total number of actual positive cases. The F1-score is the harmonic mean of precision and recall and gives a balanced view of whether the model can avoid both false positives and false negatives. In medical imaging, where incorrect diagnosis can be detrimental, the F1-score is especially useful. The formal definitions of these metrics are as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP = true positive, FP = false positive, TN = true negative, and FN = false negative.
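For a multi-class problem, these definitions are applied one class at a time (that class versus all others) and can then be averaged. The sketch below shows one way to compute per-class and macro-averaged metrics from a confusion matrix with NumPy; the function name is hypothetical, and the convention that rows are true classes and columns are predicted classes is an assumption.

```python
import numpy as np

def per_class_metrics(cm) -> dict:
    """One-vs-rest precision, recall and F1 from a square confusion matrix.

    cm[i, j] counts samples whose true class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but belonging to another class
    fn = cm.sum(axis=1) - tp  # belonging to class k but predicted as another class
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": tp.sum() / cm.sum(),
        # Macro averaging weights every class equally, regardless of its size.
        "macro_precision": precision.mean(),
        "macro_recall": recall.mean(),
        "macro_f1": f1.mean(),
    }
```

Passing the 4 × 4 matrix from the confusion-matrix analysis would yield one value per class (Normal MS, Opacified MS, Polyposis, Retention Cysts) together with the macro averages.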

4.1. Confusion Matrix Analysis

The confusion matrix (Figure 4) provides a detailed breakdown of the model’s class-wise prediction outcomes. It shows that the model performed exceptionally well in identifying Normal Maxillary Sinus (MS) and Retention Cysts, correctly classifying 59 of 60 and 58 of 60 instances, respectively. However, minor misclassifications occurred between the Opacified MS and Polyposis classes, which can be attributed to the overlapping radiological characteristics of fluid retention and polypoidal soft-tissue densities. In addition, four Polyposis cases were incorrectly labeled as Retention Cysts.

This pattern of misclassification reflects real-world diagnostic challenges faced by radiologists, where CT findings of certain pathological categories may present similarly. Hence, the model’s errors are clinically plausible and support its potential for assisting diagnostic workflows rather than contradicting clinical expertise.
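An error analysis of this kind amounts to building the confusion matrix from true and predicted labels and ranking its off-diagonal cells. A minimal NumPy sketch follows; the class-index ordering and the helper names are illustrative assumptions, and the toy labels in the check are invented for demonstration, not taken from this study.

```python
import numpy as np

# Assumed index order of the four classes.
CLASSES = ["Normal MS", "Opacified MS", "Polyposis", "Retention Cysts"]

def confusion_matrix(y_true, y_pred, n_classes=len(CLASSES)):
    """cm[i, j] = number of samples with true class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def top_confusions(cm, k=3):
    """Return up to k largest off-diagonal cells as (true, predicted, count)."""
    off = np.array(cm, copy=True)
    np.fill_diagonal(off, 0)  # ignore correct predictions
    pairs = []
    for idx in np.argsort(off, axis=None)[::-1][:k]:
        i, j = np.unravel_index(idx, off.shape)
        if off[i, j] > 0:
            pairs.append((CLASSES[i], CLASSES[j], int(off[i, j])))
    return pairs
```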

4.2. Classification Performance Metrics

Standard metrics, namely precision, recall, F1-score, and accuracy, were used to evaluate classification performance. These metrics were calculated per class and also macro-averaged to obtain an aggregate measure of model performance. Overall accuracy across all categories was 95.83%, indicating strong generalization. Precision values ranged from 0.92 (Opacified MS) to 0.98 (Normal MS), implying a high capacity to minimize false positives. Recall values were similar, from a minimum of 0.92 for Opacified MS to 0.98 for Normal MS, suggesting that the model reliably detects true positives. F1-scores likewise ranged from 0.92 to 0.98, indicating consistent and reliable results across all four classes.

Figure 5 summarizes the detailed metrics.

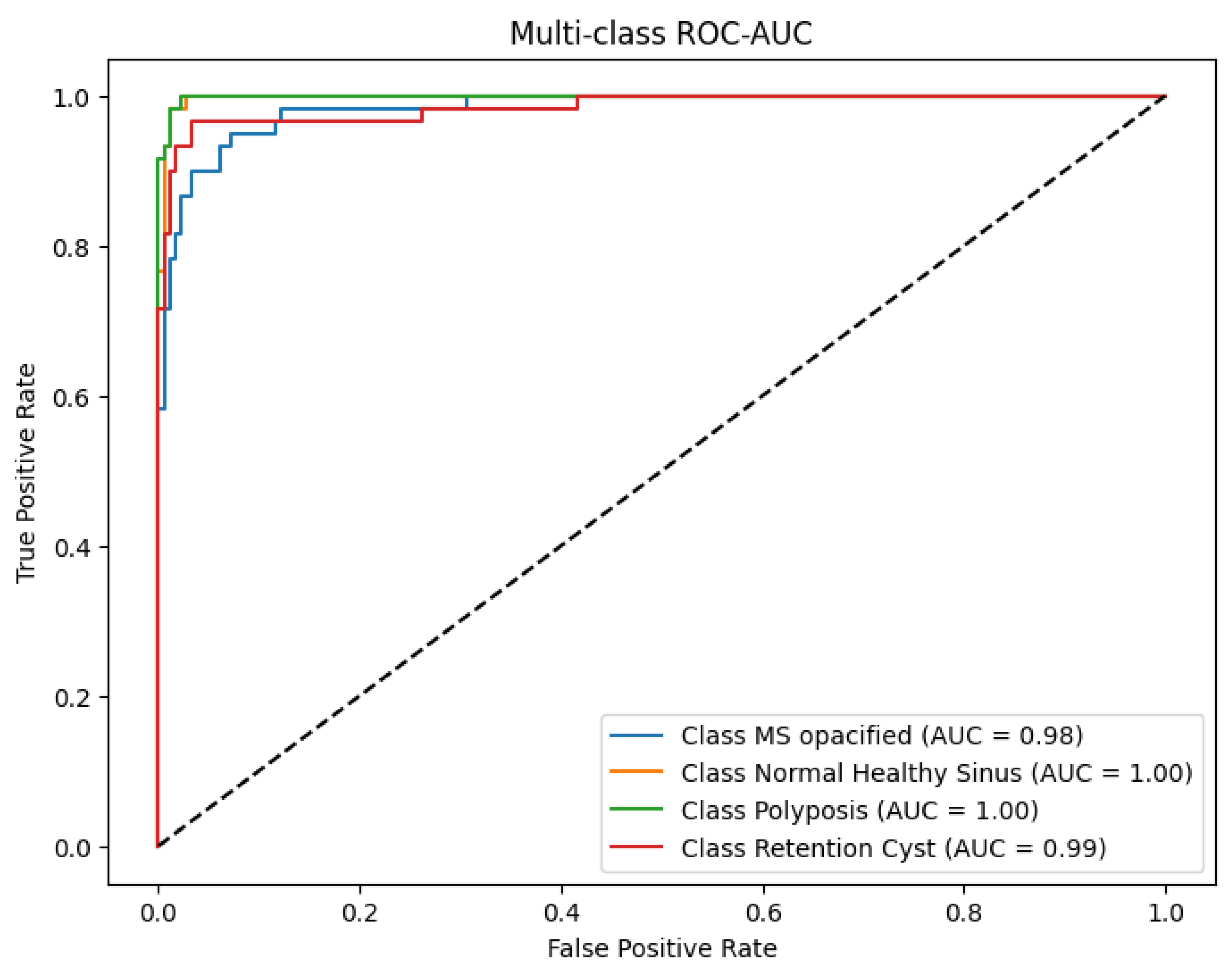

4.3. ROC-AUC Analysis

The ROC-AUC was computed to further evaluate the discriminative ability of the model, as shown in Figure 6. The model achieved an overall ROC-AUC score of 0.982, indicating excellent class separation. The per-class AUC values were Normal MS = 1.00, Opacified MS = 0.98, Polyposis = 1.00, and Retention Cysts = 0.99. These values confirm the model’s ability to reliably differentiate healthy from diseased sinuses, as well as subtler pathological cases.

The ROC-AUC performance also reinforces the effectiveness of the hybrid architecture in combining local and global features to optimize classification, especially for classes with overlapping imaging patterns.
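Per-class AUC values of this kind are usually computed one-vs-rest: each class’s predicted probability is scored against a binary “this class versus all others” label. The NumPy sketch below uses the rank interpretation of AUC (the probability that a random positive is scored above a random negative, with ties counting one half); the function names are hypothetical. The unweighted mean it returns is one common aggregation choice, matching scikit-learn’s `roc_auc_score(y_true, y_score, multi_class="ovr", average="macro")`; the overall figure reported above may have been aggregated differently.

```python
import numpy as np

def binary_auc(scores, labels):
    """AUC = P(random positive scores above random negative); ties count 1/2."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    # Exact pairwise comparison: O(P * N), fine for test sets of a few hundred images.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def one_vs_rest_auc(probs, y_true):
    """Per-class AUC (class k vs. all others) and their unweighted mean."""
    probs = np.asarray(probs, dtype=float)
    y_true = np.asarray(y_true)
    per_class = np.array([binary_auc(probs[:, k], y_true == k)
                          for k in range(probs.shape[1])])
    return per_class, per_class.mean()
```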

4.4. Training Dynamics and Model Convergence

Training dynamics were monitored closely to assess convergence behavior and detect potential overfitting. The training and validation loss curves (Figure 7) exhibited a stable, monotonic decline, with the training loss reaching a minimum of 0.2 and the validation loss stabilizing around 0.4. Correspondingly, the model achieved a peak training accuracy of 98.6% and a validation accuracy of 96.4%.

To prevent overfitting, early stopping was employed, terminating training once the validation loss plateaued. Model checkpoints ensured that the best-performing model was preserved for evaluation. The consistent trend across training and validation curves indicates a well-regularized model with strong generalization capability.
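The early-stopping and checkpointing logic described above can be sketched in a framework-agnostic way as follows. The `patience` and `min_delta` values are illustrative assumptions, since they are not stated here, and the class is hypothetical. In Keras, the same behavior is available through `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)` combined with `tf.keras.callbacks.ModelCheckpoint(..., monitor="val_loss", save_best_only=True)`.

```python
import copy

class EarlyStopping:
    """Stop when validation loss has not improved by more than `min_delta`
    for `patience` consecutive epochs, keeping an in-memory copy of the
    best weights seen so far (a simple checkpoint)."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_state = None
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss, model_state):
        """Record one epoch; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_state = copy.deepcopy(model_state)  # checkpoint the best model
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1  # no sufficient improvement this epoch
        return self.bad_epochs >= self.patience
```

After the loop ends, `best_state` holds the weights to restore for evaluation, which is what preserving the best-performing checkpoint amounts to.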

4.5. Comparison with Related Studies

To the best of our knowledge, no prior work has directly addressed automated four-class classification of maxillary sinus abnormalities from CT images using a hybrid deep learning framework. However, some studies within the broader domain of sinus disease detection have utilized MRI, X-ray, and panoramic radiographs. For example, Bhattacharya et al. [26] employed MRI for binary classification of sinusitis, Lim et al. [18] analyzed sinus opacification using X-ray images, and Murata et al. [31] investigated panoramic radiographs for detecting maxillary sinus lesions. While these studies provide useful insights, they differ significantly in imaging modality, classification scope, and explainability. Our study differs by combining CNN and Transformer architectures with an attention-based fusion mechanism and Grad-CAM visualization, achieving high diagnostic accuracy while maintaining transparency for clinical use. A detailed comparison is summarized in Table 9.

4.6. Interpretation and Clinical Relevance

The hybrid model’s success can be attributed to the complementary strengths of its two backbones. EfficientNetB0 contributed robust spatial and texture-level feature extraction, while the Swin Transformer provided the capacity to model long-range dependencies across the input image. Integrating them via attention-based fusion enabled adaptive weighting of both feature types, enhancing the model’s discriminative power.
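The exact fusion layer is not specified in this section, so the following is only a hypothetical sketch of what attention-weighted fusion of the two backbones could look like. Each pooled embedding is projected to a shared dimension, a learned vector scores each branch, and a softmax over the two scores sets how much each branch contributes to the fused vector. The input sizes 1280 (EfficientNetB0 global-pooled output) and 768 (Swin-T final stage) are assumptions, since the Swin variant is not stated here; the weights are random and untrained.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

class AttentionFusion:
    """Hypothetical attention-weighted fusion of CNN and transformer embeddings."""

    def __init__(self, cnn_dim=1280, vit_dim=768, d=256, n_classes=4, seed=0):
        rng = np.random.default_rng(seed)
        # Random (untrained) parameters, scaled to keep activations moderate.
        self.w_cnn = rng.normal(0.0, cnn_dim ** -0.5, (cnn_dim, d))
        self.w_vit = rng.normal(0.0, vit_dim ** -0.5, (vit_dim, d))
        self.score = rng.normal(0.0, d ** -0.5, d)
        self.w_out = rng.normal(0.0, d ** -0.5, (d, n_classes))

    def __call__(self, f_cnn, f_vit):
        # Project both branches into a shared d-dimensional space: (B, 2, d).
        h = np.stack([np.tanh(f_cnn @ self.w_cnn),
                      np.tanh(f_vit @ self.w_vit)], axis=1)
        alpha = softmax(h @ self.score, axis=1)     # (B, 2) per-sample branch weights
        fused = (alpha[..., None] * h).sum(axis=1)  # (B, d) weighted combination
        probs = softmax(fused @ self.w_out, axis=-1)  # (B, n_classes)
        return probs, alpha
```

Returning `alpha` alongside the class probabilities makes it possible to inspect, per image, how strongly the model leaned on local CNN features versus global transformer features.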

Grad-CAM visualizations confirmed that the model’s attention was appropriately localized to anatomically meaningful regions, such as the sinus walls, opacified cavities, and areas of mucosal thickening. These visual explanations, which are key for clinical adoption, further strengthen the model’s interpretability. Moreover, the model showed resilience to class imbalance as a result of the augmentation strategies and class rebalancing techniques used during preprocessing. Consequently, it exhibited minimal degradation in precision and recall for the minority classes (Opacified MS and Polyposis).
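The specific rebalancing techniques are not detailed in this section. One common option, shown here purely as an illustrative assumption, is inverse-frequency class weighting in the loss, using the same “balanced” formula as scikit-learn’s `compute_class_weight` (`n_samples / (n_classes * count_k)`). The function names are hypothetical.

```python
import numpy as np

def balanced_class_weights(labels, n_classes):
    """Inverse-frequency weights: n_samples / (n_classes * count_k)."""
    labels = np.asarray(labels)
    counts = np.bincount(labels, minlength=n_classes).astype(float)
    if np.any(counts == 0):
        raise ValueError("every class needs at least one sample")
    return len(labels) / (n_classes * counts)

def weighted_cross_entropy(probs, labels, weights, eps=1e-12):
    """Cross-entropy where each sample is scaled by its class weight,
    normalized by the total weight of the batch."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    w = weights[labels]
    nll = -np.log(probs[np.arange(len(labels)), labels] + eps)
    return float((w * nll).sum() / w.sum())
```

With these weights, errors on a minority class cost proportionally more, which counteracts the tendency of an unweighted loss to favor the majority classes.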

5. Grad-CAM Visualization

Interpretability is an important aspect of building trust in artificial intelligence systems for medical imaging. To address this, Gradient-weighted Class Activation Mapping (Grad-CAM) [42] was used to visualize and interpret the predictions made by the proposed hybrid deep learning model. Grad-CAM provides visual explanations by highlighting the image regions that contributed most to the model’s predictions. The resulting saliency maps bridge the gap between algorithmic output and clinical understanding of maxillary sinus conditions, and can be used to validate that the model classifies maxillary sinus conditions on the basis of anatomically relevant structures.

5.1. Methodological Framework of Grad-CAM

The Grad-CAM technique was implemented on the EfficientNetB0 backbone of the hybrid architecture. The process begins by calculating the gradients of the output class score with respect to the feature maps of the last convolutional layer. These gradients are globally average-pooled to obtain the relative importance of each feature-map channel for the predicted class. The feature maps are then summed, weighted by these importance values, and a ReLU function is applied to retain only positively contributing activations. This produces a coarse localization map, or heatmap, which is subsequently upsampled to the input image resolution (224 × 224 pixels) and normalized.
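Once the target layer’s activations and the gradients of the class score with respect to them are available (in TensorFlow, typically obtained with `tf.GradientTape`), the remaining steps are plain array arithmetic. The NumPy sketch below assumes channels-last arrays of shape (H, W, K); for EfficientNetB0 at 224 × 224 input, the final convolutional map is 7 × 7 with 1280 channels. Nearest-neighbour upsampling is a simplification chosen for brevity; bilinear interpolation is more common in practice. The function name is hypothetical.

```python
import numpy as np

def grad_cam(activations, gradients, out_size=(224, 224)):
    """Grad-CAM heatmap for one image from last-conv activations and gradients.

    activations, gradients: arrays of shape (H, W, K), channels last, where
    gradients = d(class score) / d(activations).
    """
    # Channel importance alpha_k: global average of the gradients.
    weights = gradients.mean(axis=(0, 1))
    # ReLU of the weighted sum of feature maps: ReLU(sum_k alpha_k * A^k).
    cam = np.maximum((activations * weights).sum(axis=-1), 0.0)
    # Nearest-neighbour upsampling to the input resolution.
    rows = (np.arange(out_size[0]) * cam.shape[0]) // out_size[0]
    cols = (np.arange(out_size[1]) * cam.shape[1]) // out_size[1]
    cam = cam[rows][:, cols]
    # Min-max normalization to [0, 1]; a constant map becomes all zeros.
    span = cam.max() - cam.min()
    return (cam - cam.min()) / span if span > 0 else np.zeros_like(cam)
```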

Finally, the heatmap is superimposed on the original CT image using a color gradient, typically ranging from blue (low importance) to red (high importance). This overlay visually indicates where the model is attending when making its prediction, thereby enhancing interpretability.
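The overlay step can be sketched as an alpha blend between the grayscale CT slice and a colorized heatmap. Colormaps such as matplotlib’s `jet` are common; here a simple linear blue-to-red ramp stands in for it, and the blending factor `alpha = 0.4` is an assumed value. Both function names are hypothetical.

```python
import numpy as np

def blue_to_red(heatmap):
    """Map values in [0, 1] to RGB on a linear ramp: 0 -> blue, 1 -> red."""
    h = np.clip(heatmap, 0.0, 1.0)[..., None]
    blue = np.array([0.0, 0.0, 1.0])
    red = np.array([1.0, 0.0, 0.0])
    return (1.0 - h) * blue + h * red

def overlay(ct_slice, heatmap, alpha=0.4):
    """Alpha-blend a colored heatmap over a grayscale CT slice (both H x W).

    Returns an (H, W, 3) RGB image with values in [0, 1].
    """
    gray = ct_slice.astype(float)
    gray = (gray - gray.min()) / (np.ptp(gray) or 1.0)  # rescale to [0, 1]
    rgb = np.repeat(gray[..., None], 3, axis=-1)
    return (1.0 - alpha) * rgb + alpha * blue_to_red(heatmap)
```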

5.2. Implementation and Representative Sampling

For consistency and diagnostic relevance, the final convolutional layer of the EfficientNetB0 network was selected as the target layer due to its high semantic content and retained spatial resolution. Representative CT images from all four classes—Normal Maxillary Sinus (MS), Opacified MS, Polyposis, and Retention Cysts—were selected for Grad-CAM analysis. Post-processing techniques, including heatmap thresholding, were applied to reduce visual noise and to sharpen the focus on medically relevant anatomical structures.
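The threshold used for heatmap post-processing is not reported here. As an illustration only, two simple variants are sketched below on a heatmap normalized to [0, 1]: suppressing activations below a fixed value (0.5 is an assumed default), or keeping only a top fraction of pixels. Both function names are hypothetical.

```python
import numpy as np

def threshold_heatmap(heatmap, t=0.5):
    """Zero out activations below a fixed threshold t."""
    return np.where(heatmap >= t, heatmap, 0.0)

def keep_top_fraction(heatmap, fraction=0.25):
    """Keep roughly the top `fraction` of activations and zero out the rest."""
    cutoff = np.quantile(heatmap, 1.0 - fraction)
    return np.where(heatmap >= cutoff, heatmap, 0.0)
```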

In Retention Cyst cases, the heatmaps displayed sharply localized activations along the cyst margins, while Normal MS scans exhibited diffuse, low-intensity activations across the sinus region, reflecting the absence of pathology.

Figure 8 presents the Grad-CAM heatmaps for Retention Cyst cases.

Figure 9 shows the resulting heatmaps for pathological cases, capturing variations in activation intensity and localization patterns.

5.3. Interpretation

Analysis of the Grad-CAM outputs revealed strong alignment between the model’s focus areas and clinically significant regions. In pathological cases such as Opacified MS and Polyposis, the model demonstrated high-intensity activations within the sinus cavities, particularly around regions exhibiting mucosal thickening or fluid accumulation. These activations corresponded closely with radiological markers typically used for diagnosis.

Grad-CAM was also utilized for error analysis. Misclassifications, particularly between Opacified MS and Polyposis, were associated with overlapping activation patterns in the heatmaps.

6. Conclusions and Future Work

In conclusion, this study presented a robust hybrid deep learning framework combining EfficientNetB0 and Swin Transformer architectures for the automated classification of maxillary sinus abnormalities from CT images. The model achieved strong performance, with 95.83% test accuracy and high discriminative capability across all classes, as evidenced by ROC-AUC scores of 0.98 or higher. Importantly, we demonstrated the efficacy of Grad-CAM visualizations in elucidating the model’s decision-making process, revealing its alignment with clinically relevant anatomical features. These explainability insights not only support the model’s reliability but can also enhance confidence among medical practitioners, a critical factor for clinical adoption. The proposed framework offers a promising tool for accurate, interpretable, and clinically relevant sinusitis diagnosis, paving the way for broader integration of AI-assisted imaging into routine medical practice.

Despite these promising results, the study’s limitations highlight key areas for future improvement. The primary constraint remains the relatively small size of the clinical dataset, which, despite augmentation techniques, may limit the model’s generalization to rare or complex cases. To address this, future work should focus on expanding the dataset through multi-institutional collaborations, incorporating diverse demographic and pathological variations. Additionally, integrating multi-view CT data (e.g., sagittal and axial planes) could enhance the model’s spatial understanding of sinus structures, potentially improving diagnostic precision for conditions with subtle radiographic differences.