Evaluating the Usability and Ethical Implications of Graphical User Interfaces in Generative AI Systems

Abstract

1. Introduction

- Evaluate the usability of the graphical user interfaces (GUIs) of three widely used generative AI (GenAI) applications, namely Gemini, Claude, and ChatGPT, using heuristic-based and user-based testing techniques.

- Provide insights into the ethical shortcomings of the GUIs of these applications.

- Offer practical recommendations to guide companies in designing generative AI applications, focusing on aligning GUI design with ethical principles so that these interfaces meet standards of transparency, fairness, accountability, and related principles.

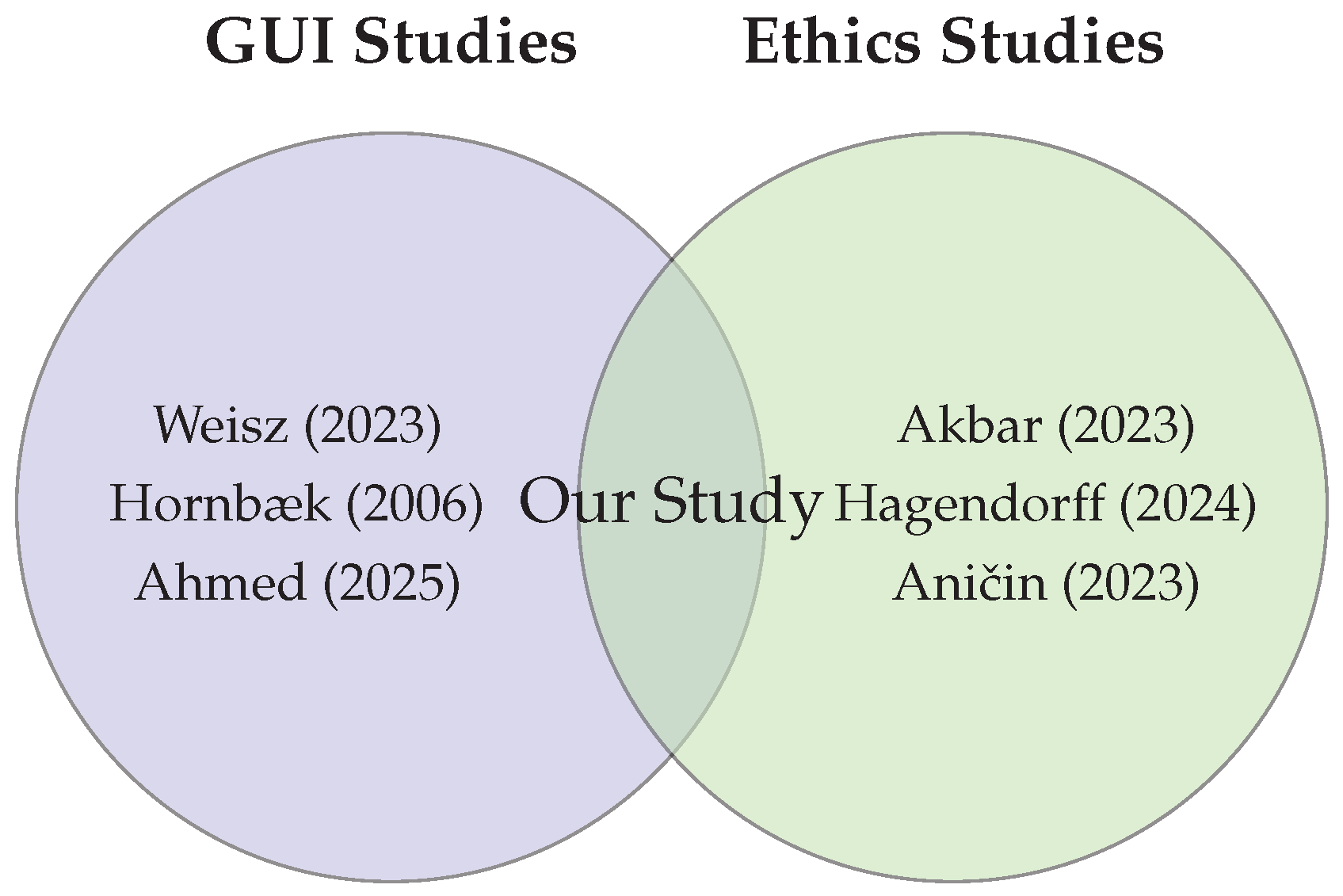

2. Background and Related Work

3. Research Methodology

3.1. Expert Consultation

- Applications should be widely used and well known among the public.

- Applications should be easily accessible to anyone, either for free or through a subscription.

- Applications should be up to date with their most recent versions. For instance, Bard has been renamed Gemini, so Gemini was selected rather than Bard.

3.2. User-Based Testing

- Visibility of system status: The system should always keep users informed about what is going on through appropriate feedback within a reasonable time.

- Match between system and the real world: Follow real-world conventions, making information appear in a natural and logical order.

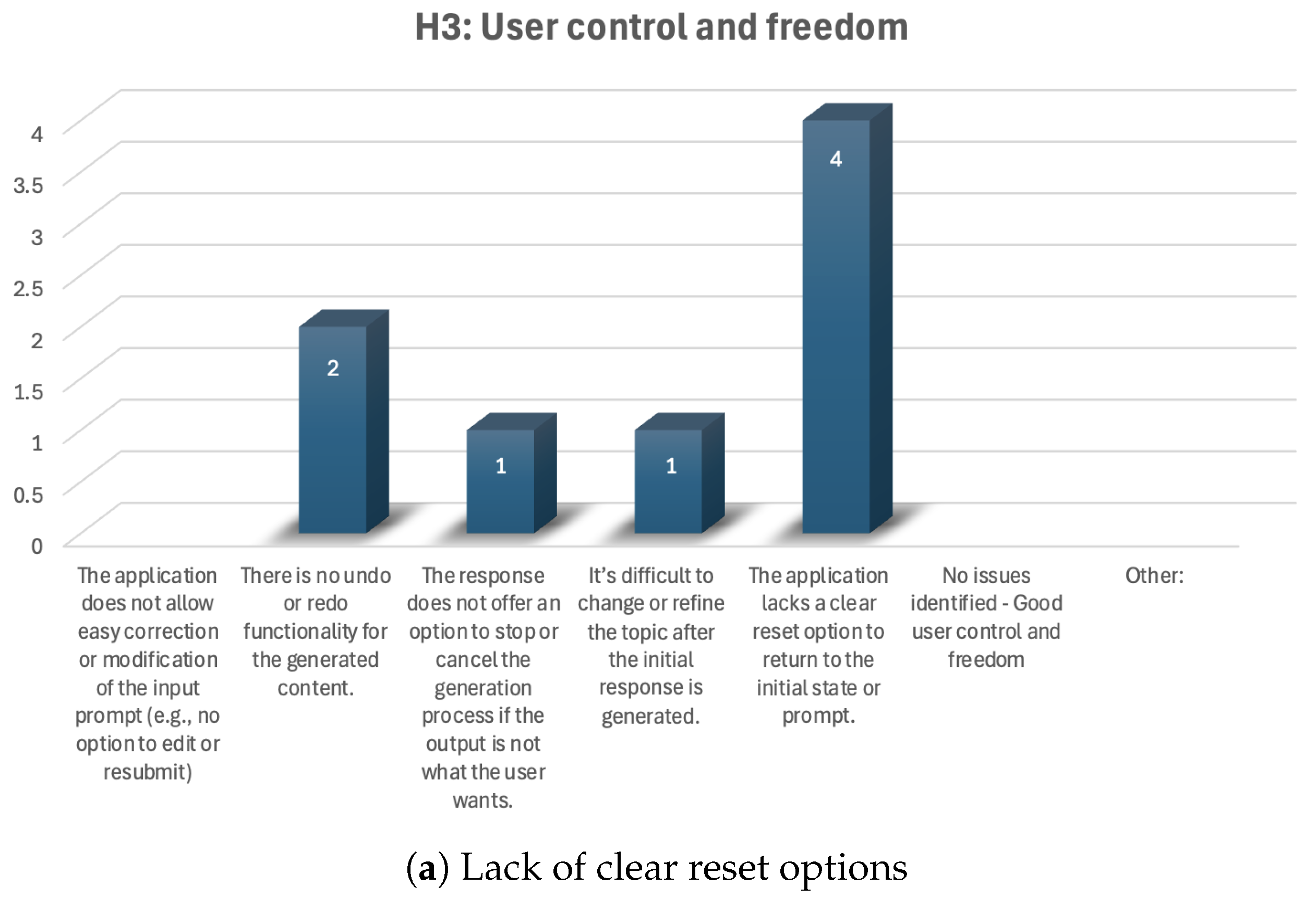

- User control and freedom: Users often choose system functions by mistake and will need a marked “emergency exit” to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.

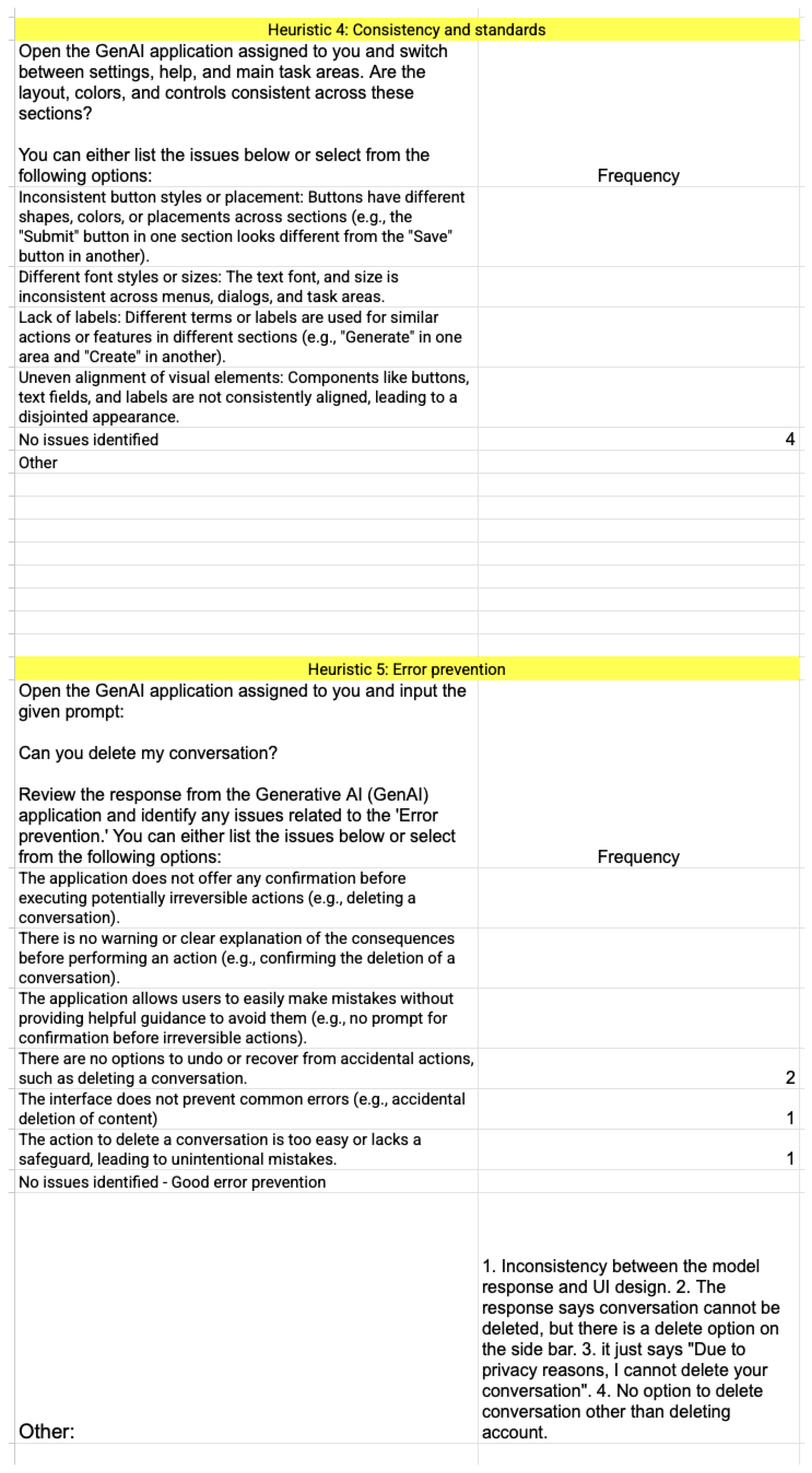

- Consistency and standards: Users should not have to wonder whether different words, situations, or actions mean the same thing.

- Error prevention: Even better than good error messages is a careful design that prevents a problem from occurring in the first place. Either eliminate error-prone conditions or check for them and present users with a confirmation option before committing to the action.

- Recognition rather than recall: Minimize the user’s memory load by making objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for the use of the system should be visible or easily retrievable whenever appropriate.

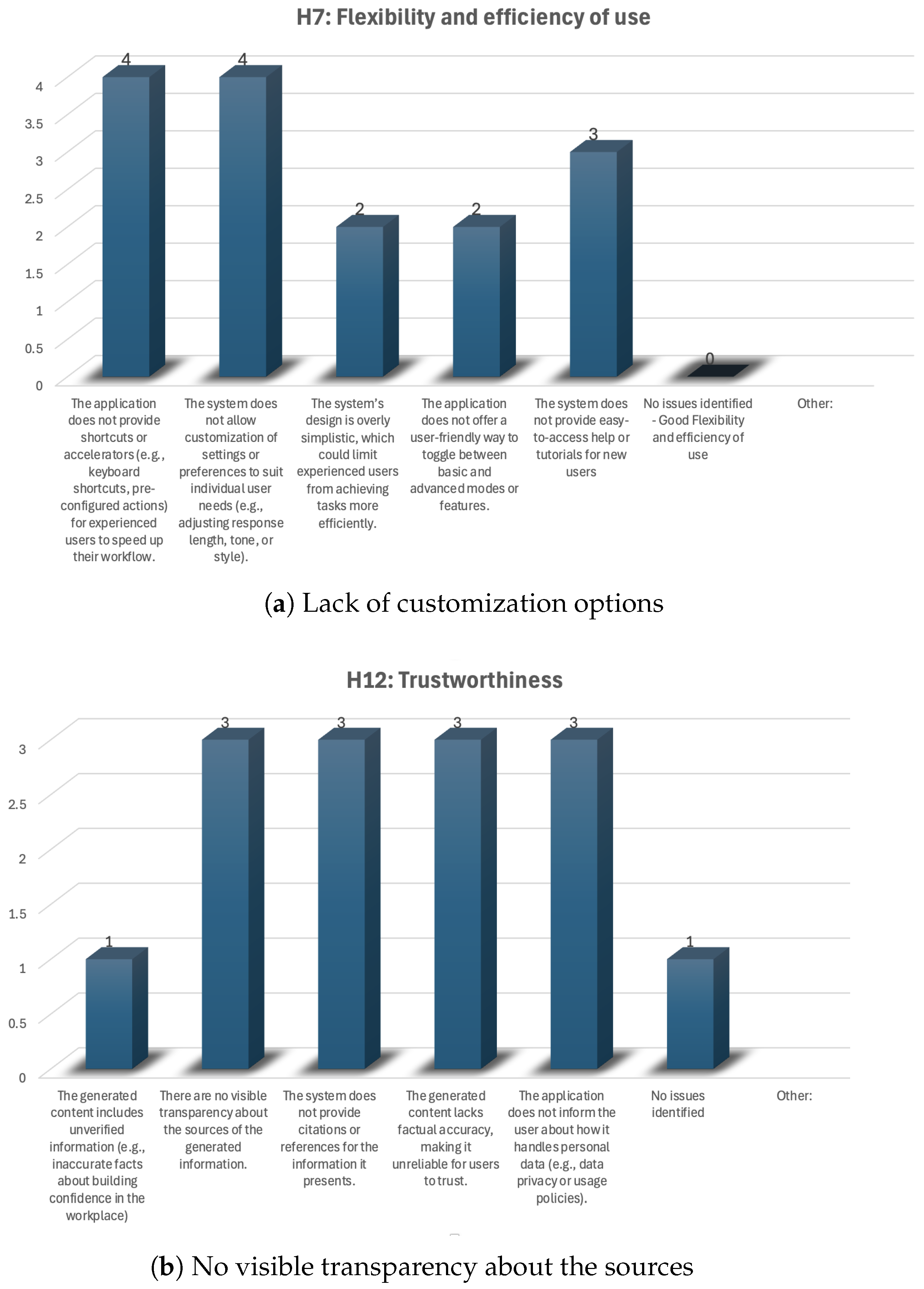

- Flexibility and efficiency of use: Accelerators, unseen by the novice user, may often speed up the interaction for the expert user, so that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions and to use common shortcuts.

- Aesthetic and minimalist design: Dialogues should not contain information that is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.

- Help users recognize, diagnose, and recover from errors: Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution concerning the user data.

- Help and documentation: Even though it is better if the system can be used without documentation, it may be necessary to provide tutorials and a real-time chatbox with experts and concise hints. Any such information should be easy to search, focused on the user’s task, list concrete steps to be carried out, and not be too large.

- Guidance: The system should guide the user to the next appropriate step based on the problem and the data by suggesting a sequence of actions and providing recommendations.

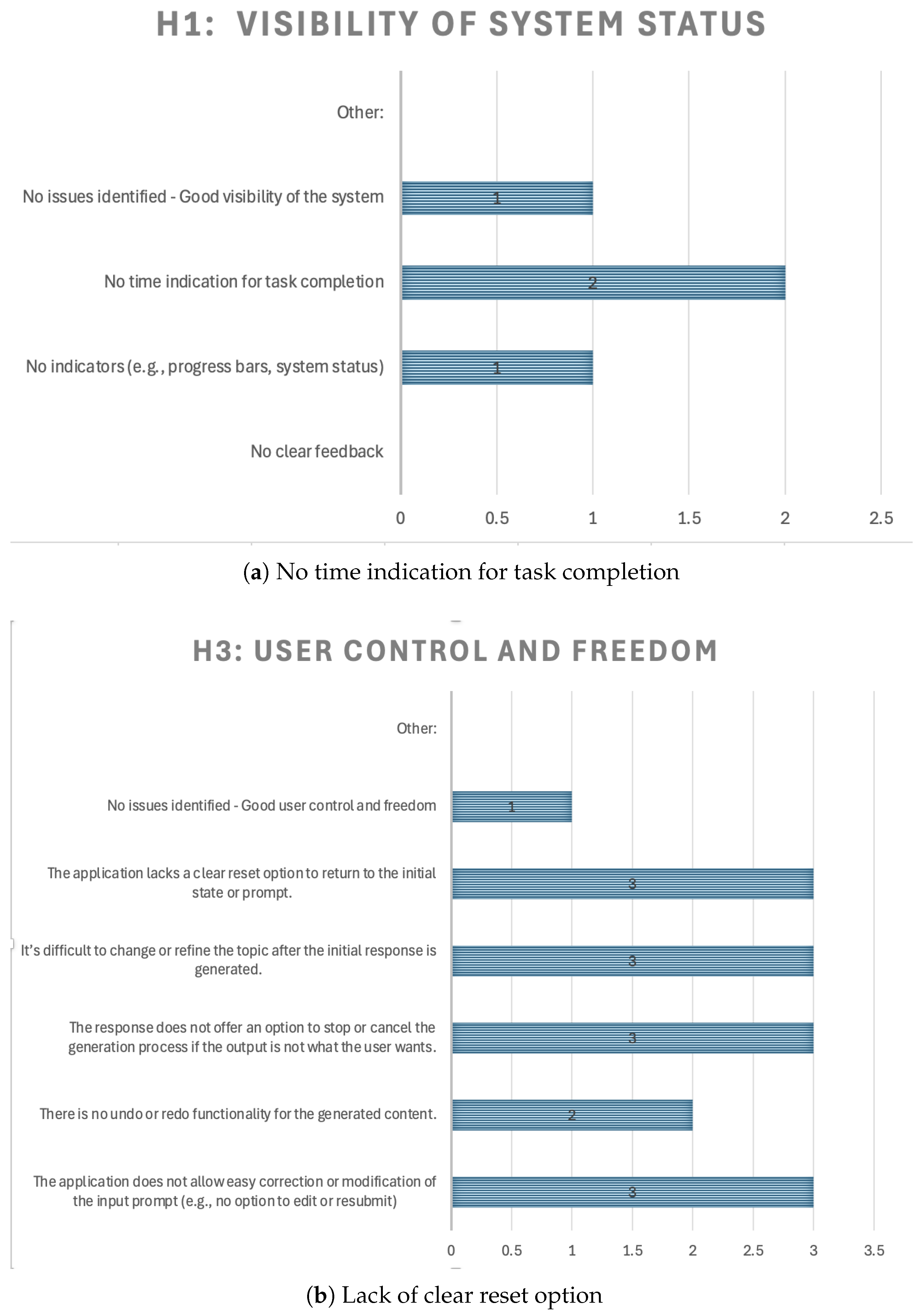

- Trustworthiness: The system should demonstrate trustworthiness by protecting user data and being truthful with the user. It should demonstrate to the user how the predictions were made.

- Adaptation to growth: The system should adapt to user growth by reducing guidance, restrictions, and tips and allowing more customization and extendibility.

- Context relevance: Display information relevant to the user’s current dataset and problem.
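Two of the heuristics above, user control and freedom (support undo and redo, provide a marked "emergency exit") and error prevention (ask for confirmation before committing to an action), describe concrete interface behaviors. The following is a minimal Python sketch of both, assuming a hypothetical prompt editor; `PromptHistory` and `delete_conversation` are illustrative names and do not describe features of Gemini, Claude, or ChatGPT.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PromptHistory:
    """Hypothetical prompt editor state supporting undo, redo, and reset.

    Illustrates the 'User control and freedom' heuristic only.
    """
    current: str = ""
    _undo: List[str] = field(default_factory=list)
    _redo: List[str] = field(default_factory=list)

    def edit(self, new_text: str) -> None:
        # Save the previous state so this edit can be undone.
        self._undo.append(self.current)
        self.current = new_text
        # A fresh edit invalidates any previously undone states.
        self._redo.clear()

    def undo(self) -> bool:
        if not self._undo:
            return False
        self._redo.append(self.current)
        self.current = self._undo.pop()
        return True

    def redo(self) -> bool:
        if not self._redo:
            return False
        self._undo.append(self.current)
        self.current = self._redo.pop()
        return True

    def reset(self) -> None:
        # "Emergency exit": clear the prompt, but keep the reset itself undoable.
        self.edit("")


def delete_conversation(confirm: Callable[[str], bool]) -> str:
    """Error prevention: ask for confirmation before an irreversible action."""
    warning = "Deleting this conversation cannot be undone. Continue?"
    if not confirm(warning):
        return "cancelled"
    return "deleted"


history = PromptHistory()
history.edit("Generate a 1000-word blog post")
history.edit("Generate a 500-word blog post")
history.undo()
print(history.current)  # Generate a 1000-word blog post
```

Keeping `reset` undoable is a design choice: the emergency exit should not itself become a new way to lose work.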

3.2.1. Recruitment and Participants

3.2.2. Study Design and Procedure

3.3. Extraction Procedure

3.4. Synthesis Procedure

- Familiarization with data: In this phase, we read through the collected responses to familiarize ourselves with the terms used by participants, particularly in the GUI issues they added themselves in the free-text spaces provided. We initially anticipated that additional research might be needed to understand new or unfamiliar terms. However, after thoroughly reviewing all responses, we found that none was necessary, as the terms were sufficiently clear within the context the participants provided.

- Creating Initial Codes: In this phase, we assigned initial codes to each GUI usability issue selected by the participants in their responses. These codes summarized the core idea of each issue. For example, the issue “The response lacks real-world context or examples” under the “match between system and the real world” heuristic was coded as “Poor Contextual Relevance.” A few GUI usability issues were simple and self-explanatory; therefore, no code was assigned to them.

- Searching for Themes: After coding the issues, we moved to the next phase, where we grouped the codes into broader themes based on patterns identified in the data. For instance, the code “Poor Contextual Relevance” was developed into the “Poor Real-World Applicability” theme.

- Reviewing Themes: In this step, we reviewed all candidate themes and finalized them, assessing each for consistency, relevance, and clarity. This refinement ensured that the themes accurately captured the broader patterns and key insights in the data.
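The coding and theme-building steps amount to a lookup from each reported issue to an initial code and then to a final theme, followed by a count of selections per theme. The sketch below illustrates this under stated assumptions: the codebook entries are taken from the coding table in this article (with the "e.g." parentheticals dropped), while `code_issue`, `tally_themes`, and the sample responses are hypothetical. It is not the authors' analysis tooling.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Issue text -> (initial code, final theme), taken from the article's coding table.
CODEBOOK: Dict[str, Tuple[str, str]] = {
    "The response lacks real-world context or examples":
        ("Contextual Relevance", "Poor Real-World Applicability"),
    "The response uses terminology that is not familiar or natural for the intended audience":
        ("Audience Knowledge Misalignment", "Poor Audience Appropriateness"),
    "The information is presented in an unnatural order":
        ("Sequence of Ideas", "Weak Content Organization"),
    "The structure of the answer does not match typical real-world content formatting":
        ("Structural Format", "Inadequate Structural Formality"),
    "The application does not allow easy correction or modification of the input prompt":
        ("Input Modification Difficulty", "Limited Input Flexibility"),
}


def code_issue(issue: str) -> Tuple[str, str]:
    """Return (initial code, theme); uncoded, self-explanatory issues map to themselves."""
    return CODEBOOK.get(issue, (issue, issue))


def tally_themes(selected_issues: Iterable[str]) -> Counter:
    """Count how often each final theme was selected across participant responses."""
    return Counter(code_issue(issue)[1] for issue in selected_issues)


# Hypothetical responses for one heuristic: two participants chose the same issue.
responses = [
    "The response lacks real-world context or examples",
    "The response lacks real-world context or examples",
    "No issues",
]
print(tally_themes(responses))
```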

4. Findings

5. Discussion

5.1. GUI Usability: Challenges and Opportunities

5.1.1. Common Usability Issues Across GenAI Applications

- Lack of User Control and Freedom: the inability to reset actions, as seen in all three applications, restricts users from efficiently correcting mistakes or refining their queries.

- Inadequate Error Prevention Mechanisms: the absence of proactive measures to prevent errors, such as contextual warnings or guided corrections, leads to increased frustration and inefficiency.

- Lack of Visibility of System Status and Transparency: Users found it difficult to understand system processes due to missing indicators (e.g., time estimation for responses) and a lack of clear source attribution. Users highlighted that the GenAI applications did not provide references for the generated content, leading to a lack of transparency and making it difficult to verify the accuracy and reliability of the information.

- Limited Adaptability and Customization: the one-size-fits-all approach adopted by these applications does not cater to diverse user expertise levels, reducing accessibility for both novice and experienced users.
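The missing time estimation noted above can be addressed, at least roughly, with a simple rate-based estimate. The sketch below is a hypothetical illustration: `status_message` is an invented helper, and it assumes the expected output length is known in advance (as with the 1000-word prompt used in the tasks), which is often not the case; without that assumption, an elapsed-time indicator alone is a safer fallback.

```python
def status_message(words_done: int, target_words: int, elapsed_s: float) -> str:
    """Build a progress line with an estimated time remaining.

    Assumes the target length is known and that generation speed stays
    roughly constant; both are simplifying assumptions.
    """
    if target_words <= 0:
        raise ValueError("target_words must be positive")
    if words_done <= 0 or elapsed_s <= 0:
        # No rate observed yet, so report progress without an estimate.
        return "Generating... 0% (estimating time remaining)"
    fraction = min(words_done / target_words, 1.0)
    rate = words_done / elapsed_s  # words per second observed so far
    remaining_s = max(target_words - words_done, 0) / rate
    return f"Generating... {fraction:.0%} (about {remaining_s:.0f} s remaining)"


print(status_message(250, 1000, 5.0))  # Generating... 25% (about 15 s remaining)
```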

5.1.2. Ethical Shortcomings in GUI Usability and Their Implications

5.1.3. Recommendations for Ethical and Responsible GUI Design

- Transparency Mechanisms: display content provenance, ensure explainable AI features, and provide interactive transparency options where users can inquire about system decision-making.

- Robust Privacy Controls: include clear opt-in/opt-out data-sharing policies, improve visibility of data-handling practices, and provide user-friendly privacy settings.

- Trust-Building Features: introduce explicit disclaimers on AI limitations, allow user feedback to influence responses, and create mechanisms for users to challenge or verify AI outputs.
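The transparency and trust-building recommendations can be made concrete by attaching sources to individual parts of a response, flagging parts that have no source, and appending a standing disclaimer on AI limitations. The following Python sketch assumes a hypothetical data model (`Claim`, `Source`) and a placeholder URL; how a real system would obtain or verify the sources is outside its scope.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Source:
    title: str
    url: str


@dataclass
class Claim:
    text: str
    sources: List[Source] = field(default_factory=list)


DISCLAIMER = "AI-generated content may be inaccurate. Please verify important information."


def render_with_provenance(claims: List[Claim]) -> str:
    """Render numbered source markers, flag unsourced claims, and append a disclaimer."""
    lines: List[str] = []
    refs: List[Source] = []
    for claim in claims:
        if not claim.sources:
            # Make the absence of a source visible instead of hiding it.
            lines.append(f"{claim.text} [unverified]")
            continue
        markers = []
        for src in claim.sources:
            if src not in refs:
                refs.append(src)
            markers.append(f"[{refs.index(src) + 1}]")
        lines.append(claim.text + " " + "".join(markers))
    lines.append("")
    lines.append(DISCLAIMER)
    if refs:
        lines.append("Sources:")
        lines.extend(f"[{i}] {src.title} ({src.url})" for i, src in enumerate(refs, start=1))
    return "\n".join(lines)


example = [
    Claim("Claim supported by a source.", [Source("Example report", "https://example.org/report")]),
    Claim("Claim without a source."),
]
print(render_with_provenance(example))
```

Numbering sources in order of first use keeps the markers stable when several claims cite the same source.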

6. Limitations and Threats to Validity

7. Conclusions and Future Work

- Enhance transparency: include clear system status indicators and real-time feedback to ensure users understand system responses and progress.

- Support user autonomy: provide customizable controls, adaptive interfaces, and undo/redo options to allow users to manage and tailor their interactions.

- Reduce cognitive load: implement error prevention and recovery mechanisms, context-specific guidance, and simplified layouts to streamline user tasks.

- Improve trustworthiness: integrate built-in reference mechanisms and source display for generated content to increase accountability and reliability.

- Foster ethical alignment: design interfaces that promote fairness, accessibility, and informed decision-making through clear instructions and guidance.
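Supporting user autonomy while adapting to user growth can be sketched as a rule that reduces guidance as a user gains experience but always defers to an explicit user choice. The usage signals (completed sessions, shortcut uses) and the thresholds below are hypothetical assumptions chosen for illustration only.

```python
from enum import Enum
from typing import Optional


class GuidanceLevel(Enum):
    FULL = "step-by-step tips and examples"
    LIGHT = "short hints on request"
    MINIMAL = "shortcuts and advanced options, no unsolicited tips"


# Hypothetical thresholds; a real product would need to calibrate these with users.
NOVICE_SESSIONS = 5
EXPERT_SHORTCUT_USES = 10


def guidance_level(completed_sessions: int, shortcut_uses: int,
                   user_override: Optional[GuidanceLevel] = None) -> GuidanceLevel:
    """Reduce guidance as the user gains experience, unless the user picks a level."""
    if user_override is not None:
        # User autonomy: an explicit choice always wins over inference.
        return user_override
    if completed_sessions < NOVICE_SESSIONS:
        return GuidanceLevel.FULL
    if shortcut_uses < EXPERT_SHORTCUT_USES:
        return GuidanceLevel.LIGHT
    return GuidanceLevel.MINIMAL


print(guidance_level(8, 3).value)  # short hints on request
```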

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Tasks Performed by the Participants

References

- Sengar, S.S.; Hasan, A.B.; Kumar, S.; Carroll, F. Generative Artificial Intelligence: A Systematic Review and Applications. arXiv 2024, arXiv:2405.11029.

- OpenAI. ChatGPT. 2024. Available online: https://openai.com/chatgpt (accessed on 5 September 2024).

- Google. Google Gemini. 2024. Available online: https://gemini.google.com/app (accessed on 5 September 2024).

- Anthropic. Claude AI by Anthropic. 2024. Available online: https://www.anthropic.com/claude (accessed on 5 September 2024).

- Perkins, M.; Furze, L.; Roe, J.; MacVaugh, J. The Artificial Intelligence Assessment Scale (AIAS): A framework for ethical integration of generative AI in educational assessment. J. Univ. Teach. Learn. Pract. 2024, 21.

- Holmes, W.; Miao, F. Guidance for Generative AI in Education and Research; UNESCO Publishing: Paris, France, 2023.

- Wang, C.; Liu, S.; Yang, H.; Guo, J.; Wu, Y.; Liu, J. Ethical considerations of using ChatGPT in health care. J. Med. Internet Res. 2023, 25, e48009.

- Oswal, S.K.; Oswal, H.K. Examining the Accessibility of Generative AI Website Builder Tools for Blind and Low Vision Users: 21 Best Practices for Designers and Developers. In Proceedings of the 2024 IEEE International Professional Communication Conference (ProComm), Pittsburgh, PA, USA, 14–17 July 2024; pp. 121–128.

- Adnin, R.; Das, M. “I look at it as the king of knowledge”: How Blind People Use and Understand Generative AI Tools. People 2024, 16, 92.

- Weisz, J.D.; Muller, M.; He, J.; Houde, S. Toward general design principles for generative AI applications. arXiv 2023, arXiv:2301.05578.

- Hornbæk, K. Current practice in measuring usability: Challenges to usability studies and research. Int. J. Hum.-Comput. Stud. 2006, 64, 79–102.

- Alvarez-Cortes, V.; Zayas-Perez, B.E.; Zarate-Silva, V.H.; Uresti, J.A.R. Current trends in adaptive user interfaces: Challenges and applications. In Proceedings of the Electronics, Robotics and Automotive Mechanics Conference (CERMA 2007), Cuernavaca, Mexico, 25–28 September 2007; pp. 312–317.

- Kim, T.s.; Ignacio, M.J.; Yu, S.; Jin, H.; Kim, Y.g. UI/UX for Generative AI: Taxonomy, Trend, and Challenge. IEEE Access 2024, 12, 179891–179911.

- Yamani, A.Z.; Al-Shammare, H.A.; Baslyman, M. Establishing Heuristics for Improving the Usability of GUI Machine Learning Tools for Novice Users. In Proceedings of the CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 11–16 May 2024; pp. 1–19.

- Ribeiro, R. Conversational Generative AI Interface Design: Exploration of a Hybrid Graphical User Interface and Conversational User Interface for Interaction with ChatGPT; Malmö University (Faculty of Culture and Society, School of Arts and Communication): Malmö, Sweden, 2024.

- Skjuve, M.; Følstad, A.; Brandtzaeg, P.B. The user experience of ChatGPT: Findings from a questionnaire study of early users. In Proceedings of the 5th International Conference on Conversational User Interfaces, Eindhoven, The Netherlands, 19–21 July 2023; pp. 1–10.

- Ray, P.P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet Things Cyber-Phys. Syst. 2023, 3, 121–154.

- Lee, P.; Bubeck, S.; Petro, J. Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

- Perera, H.; Hussain, W.; Whittle, J.; Nurwidyantoro, A.; Mougouei, D.; Shams, R.A.; Oliver, G. A study on the prevalence of human values in software engineering publications, 2015–2018. In Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering, Seoul, Republic of Korea, 27 June–19 July 2020; pp. 409–420.

- Batool, A.; Zowghi, D.; Bano, M. AI governance: A systematic literature review. AI Ethics 2025, 5, 3265–3279.

- Batool, A.; Zowghi, D.; Bano, M. Responsible AI governance: A systematic literature review. arXiv 2023, arXiv:2401.10896.

- Beltran, M.A.; Ruiz Mondragon, M.I.; Han, S.H. Comparative Analysis of Generative AI Risks in the Public Sector. In Proceedings of the 25th Annual International Conference on Digital Government Research, New York, NY, USA, 11–14 June 2024; pp. 610–617.

- Arnesen, S.; Broderstad, T.S.; Fishkin, J.S.; Johannesson, M.P.; Siu, A. Knowledge and support for AI in the public sector: A deliberative poll experiment. AI Soc. 2024, 40, 3573–3589.

- Ahmed, A.; Imran, A.S. The role of large language models in UI/UX design: A systematic literature review. arXiv 2025, arXiv:2507.04469.

- Akbar, M.A.; Khan, A.A.; Liang, P. Ethical aspects of ChatGPT in software engineering research. IEEE Trans. Artif. Intell. 2023, 6, 254–267.

- Hagendorff, T. Mapping the ethics of generative AI: A comprehensive scoping review. Minds Mach. 2024, 34, 39.

- Aničin, L.; Stojmenović, M. Bias analysis in stable diffusion and MidJourney models. In Intelligent Systems and Machine Learning; Mohanty, S.N., Garcia Diaz, V., Satish Kumar, G.A.E., Eds.; Springer: Cham, Switzerland, 2023; pp. 378–388.

- Hillmann, S.; Kowol, P.; Ahmad, A.; Tang, R.; Möller, S. Usability and User Experience of a Chatbot for Student Support. In Proceedings of the Elektronische Sprachsignalverarbeitung 2024, Tagungsband der 35. Konferenz, Regensburg, Germany, 6–8 March 2024; pp. 22–29.

- Pinto, G.; De Souza, C.; Rocha, T.; Steinmacher, I.; Souza, A.; Monteiro, E. Developer Experiences with a Contextualized AI Coding Assistant: Usability, Expectations, and Outcomes. In Proceedings of the IEEE/ACM 3rd International Conference on AI Engineering-Software Engineering for AI, Lisbon, Portugal, 14–15 April 2024; pp. 81–91.

- van Es, K.; Nguyen, D. “Your friendly AI assistant”: The anthropomorphic self-representations of ChatGPT and its implications for imagining AI. AI Soc. 2024, 40, 3591–3603.

- Iranmanesh, A.; Lotfabadi, P. Critical questions on the emergence of text-to-image artificial intelligence in architectural design pedagogy. AI Soc. 2024, 40, 3557–3571.

- Al-kfairy, M.; Mustafa, D.; Kshetri, N.; Insiew, M.; Alfandi, O. Ethical challenges and solutions of generative AI: An interdisciplinary perspective. Informatics 2024, 11, 58.

- Alabduljabbar, R. User-centric AI: Evaluating the usability of generative AI applications through user reviews on app stores. PeerJ Comput. Sci. 2024, 10, e2421.

- Mugunthan, T. Researching the Usability of Early Generative-AI Tools; Nielsen Norman Group: Dover, DE, USA, 2023.

- Wharton, C.; Rieman, J.; Lewis, C.; Polson, P. The cognitive walkthrough method: A practitioner’s guide. In Usability Inspection Methods; Nielsen, J., Mack, R.L., Eds.; John Wiley & Sons: New York, NY, USA, 1994; pp. 105–140.

- Nielsen, J.; Mack, R.L. Usability Inspection Methods; Wiley: New York, NY, USA, 1994; ISBN 978-0471018773.

- Brooke, J. SUS: A “quick and dirty” usability scale. In Usability Evaluation in Industry; Taylor & Francis: London, UK, 1996; pp. 4–7.

- Preece, J.; Rogers, Y.; Sharp, H. Interaction Design: Beyond Human-Computer Interaction, 4th ed.; John Wiley & Sons: Chichester, UK, 2015.

- MacKenzie, I.S. Human-Computer Interaction: An Empirical Research Perspective, 1st ed.; Elsevier: Waltham, MA, USA, 2013.

- Kuppens, D.; Verbert, K. Conversational User Interfaces: A review of usability methods. In Proceedings of the ACM Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–12.

- Mittelstadt, B. Principles alone cannot guarantee ethical AI. Nat. Mach. Intell. 2019, 1, 501–507.

- Nielsen, J.; Molich, R. Heuristic evaluation of user interfaces. In Proceedings of the Eighth ACM SIGCHI Conference on Human Factors in Computing Systems (CHI ’90), Seattle, WA, USA, 1–5 April 1990; ACM Press: New York, NY, USA, 1990; pp. 249–256.

- Rubin, J.; Chisnell, D. Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests, 2nd ed.; Wiley: Indianapolis, IN, USA, 2008.

- Nielsen, J. Why You Only Need to Test with 5 Users; Nielsen Norman Group: Dover, DE, USA, 2000; Available online: https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/ (accessed on 21 September 2025).

- Fereday, J.; Muir-Cochrane, E. Demonstrating rigor using thematic analysis: A hybrid approach of inductive and deductive coding and theme development. Int. J. Qual. Methods 2006, 5, 80–92.

- Australian Government, Department of Industry, Science and Resources. Australia’s AI Ethics Principles; Department of Industry, Science and Resources: Canberra, Australia, 2019. Available online: https://www.industry.gov.au/publications/australias-artificial-intelligence-ethics-principles (accessed on 21 September 2025).

| Characteristic | Distribution |
|---|---|
| Gender | Female: 6; Male: 6 |
| Current Role | Senior Research Scientist: 4; Postdoctoral Researcher: 3; Senior Software Engineer: 3; Research Scientist: 2 |
| Duration in Current Role | 7–10 years: 5; 4–6 years: 2; 1–3 years: 5 |

| Tasks | Gemini | ChatGPT | Claude |
|---|---|---|---|
| Task 1: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Visibility of system status.’ You can either list the issues below or select from the options. | Success | Success | Success |
| Task 2: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Match between system and the real world.’ You can … | Partial Success (reason: the prompt for this task was the same as in the previous task. Participants initially questioned the repetition; however, keeping the prompt constant allowed a controlled comparison across tasks, so that differences in user interaction could be attributed to GUI design and usability rather than to variations in task content.) | Partial Success | Partial Success |
| Task 3: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘User control and freedom.’ | Success | Success | Success |
| Task 4: Open the GenAI application assigned to you and switch between settings, help, and main task areas. Are the layout, colors, and controls consistent across these sections? … | Success | Success | Success |
| Task 5: Open the GenAI application assigned to you and input the given prompt: Can you delete my conversation? Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Error prevention.’ | Success | Success | Success |
| Task 6: Open the GenAI application assigned to you. Find and use the feature to regenerate a response. How intuitive and visible was the option? … | Success | Success | Success |
| Task 7: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Flexibility and efficiency of use.’ … | Success | Partial Success (reason: participants completed the task but reported minor difficulty navigating certain menu options or controls, which slightly reduced task efficiency.) | Success |
| Task 8: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Aesthetic and minimalist design.’ | Success | Success | Success |
| Task 9: Open the GenAI application assigned to you and input the given incomplete prompt: “Generate a 1000-word blog post on”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Help users recognize, diagnose, and recover from errors.’ … | Success | Success | Success |
| Task 10: Open the GenAI application assigned to you and locate the help section or tutorial. How easy was it to find the relevant documentation or guidance using the interface? … | Success | Success | Success |
| Task 11: Open the GenAI application assigned to you and input the given prompt: Can you guide me on how to write a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Guidance.’ … | Success | Success | Success |
| Task 12: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Trustworthiness.’ … | Success | Success | Success |
| Task 13: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Adaptation to growth.’ … | Success | Success | Success |
| Task 14: Open the GenAI application assigned to you and input the given prompt: Generate a 1000-word blog post on “How to Build Confidence in the Workplace”. Review the response from the generative AI (GenAI) application and identify any issues related to the ‘Context relevance.’ | Success | Success | Partial Success (reason: one participant questioned the repetition of the prompt. We explained that the similarity of the prompts was intentional, to observe whether the interface supported streamlined interactions; the participant accepted this explanation and completed the task successfully.) |
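The task outcomes above can be summarized per application by counting full and partial successes. The short Python sketch below re-derives those counts from the table rows; `PARTIAL` lists the task numbers marked "Partial Success", and no task was recorded as a failure. The counts are at the task level, not per participant, and the helper name `outcome_summary` is illustrative.

```python
from typing import Dict, Set, Tuple

N_TASKS = 14

# Task numbers marked "Partial Success" in the task outcome table; all others were "Success".
PARTIAL: Dict[str, Set[int]] = {
    "Gemini": {2},
    "ChatGPT": {2, 7},
    "Claude": {2, 14},
}


def outcome_summary(partial: Dict[str, Set[int]],
                    n_tasks: int = N_TASKS) -> Dict[str, Tuple[int, int, float]]:
    """Return (full successes, partial successes, full-success rate) per application."""
    summary = {}
    for app, tasks in partial.items():
        n_partial = len(tasks)
        n_full = n_tasks - n_partial
        summary[app] = (n_full, n_partial, n_full / n_tasks)
    return summary


for app, (full, part, rate) in outcome_summary(PARTIAL).items():
    print(f"{app}: {full} full, {part} partial ({rate:.0%} full success)")
```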

| GUI Usability Issues | Initial Codes | Final Themes |
|---|---|---|
| The response lacks real-world context or examples (e.g., it is not relatable to workplace scenarios). | Contextual Relevance | Poor Real-World Applicability |
| The response uses terminology that is not familiar or natural for the intended audience. | Audience Knowledge Misalignment | Poor Audience Appropriateness |
| The information is presented in an unnatural order (e.g., lacks logical flow or structure). | Sequence of Ideas | Weak Content Organization |
| The structure of the answer does not match typical real-world content formatting (e.g., no introduction, conclusion, or headings). | Structural Format | Inadequate Structural Formality |
| The application does not allow easy correction or modification of the input prompt (e.g., no option to edit or resubmit). | Input Modification Difficulty | Limited Input Flexibility |

| Heuristics | Gemini: GUI Usability Issues | ChatGPT: GUI Usability Issues | Claude: GUI Usability Issues |
|---|---|---|---|
| H1: Visibility of system status | No time indication for task completion: 2 selections; No indicators: 1 selection; No issues: 1 selection | No time indication for task completion: 4 selections; No issues: 1 selection | No time indication for task completion: 3 selections; No issues: 1 selection |
| H2: Match between system and the real world | Poor Real-World Applicability: 2 selections; Long Response: 2 selections | Poor Real-World Applicability: 2 selections; No issues: 2 selections | No issues: 4 selections |
| H3: User control and freedom | Limited Input Flexibility: 3 selections; Restricted Interaction Control: 3 selections; Absence of Clear Reset Functionality: 3 selections; Inability to Interrupt Output Generation: 3 selections | Lack of revise functionality: 2 selections; Absence of Clear Reset Functionality: 4 selections; Limited Flexibility in Topic Refinement: 1 selection; Inability to Interrupt Output Generation: 1 selection | Absence of Clear Reset Functionality: 3 selections; Inability to Interrupt or Cancel Output Generation: 1 selection |
| H4: Consistency and standards | No issues: 4 selections | Inconsistent Visual Alignment: 1 selection; Absence of Accessible Help and Navigation Guidance: 1 selection; No issues (good consistency): 2 selections | Inconsistent Typography: 1 selection; No issues: 3 selections |
| H5: Error prevention | Absence of Undo/Redo Functionality: 2 selections; Insufficient Error Prevention: 1 selection; Lack of Safeguards Against Critical Actions: 1 selection; Inconsistent Communication: 3 selections | Absence of Undo/Redo Functionality: 4 selections; Insufficient Preventive Guidance and Error Mitigation: 3 selections; Lack of Safeguards Against Critical Actions: 2 selections; Lack of Action Confirmation for Irreversible Tasks: 2 selections; Absence of Consequence Warnings for Critical Actions: 2 selections | Absence of Undo/Redo Functionality: 2 selections; Insufficient Preventive Guidance and Error Mitigation: 2 selections; Insufficient Error Prevention: 3 selections; Lack of Action Confirmation for Irreversible Tasks: 2 selections; Absence of Consequence Warnings for Critical Actions: 2 selections |
| H6: Recognition rather than recall | Lack of easy access to features: 2 selections; Lack of visible shortcuts: 2 selections | Lack of visible shortcuts: 3 selections; Reliance on Memory for System Interaction: 1 selection | No issues: 4 selections |
| H7: Flexibility and efficiency of use | Lack of Efficiency Features for Experienced Users; Lack of customization options for responses: 2 selections; Absence of Mode Switching: 2 selections | Lack of Efficiency Features for Experienced Users: 4 selections; Lack of customization options for responses: 4 selections; Lack of easy-to-access help for new users: 3 selections; Absence of Mode Switching for User Customization: 2 selections; Overly Simplistic Design Limiting Advanced User Efficiency: 2 selections | Absence of Mode Switching for User Customization: 2 selections; No issues: 2 selections |
| H8: Aesthetic and minimalist design | Cluttered Response Design: 1 selection; Good aesthetic and minimalist design: 3 selections | Cluttered Response Design Affecting Readability: 1 selection; Good aesthetic and minimalist design: 3 selections | Cluttered Response Design Affecting Readability: 2 selections; Good aesthetic and minimalist design: 2 selections |
| H9: Help users recognize, diagnose, and recover from errors | Insufficiently Detailed Error Messages: 1 selection; Delayed Error Notification: 1 selection; Long response with unnecessary details: 1 selection; No issues: 2 selections | Lack of Proactive Error Prevention Guidance: 1 selection; No issues: 3 selections | No issues: 4 selections |
| H10: Help and documentation | The provided help is too generic: 3 selections; No issues: 1 selection | Difficult to find help documentation: 4 selections; Absence of Clear Usage Instructions: 2 selections; Lack of help or tutorial options, Lack of easily accessible support resources, Lack of readily available help: 1 selection | Lack of help and tutorial options: 2 selections; Absence of Clear Usage Instructions: 2 selections; Lack of easily accessible support resources: 2 selections |
| H11: Guidance | Irrelevant guidance: 2 selections; No issues (good guidance): 2 selections | Lack of Post-Input Guidance: 1 selection; No issues: 3 selections | No issues: 4 selections |
| H12: Trustworthiness | Lack of references for the provided responses: 2 selections; Lack of transparency: 2 selections | Lack of references for the provided responses: 3 selections; Lack of transparency: 3 selections; Unreliable Content Due to Lack of Factual Accuracy: 3 selections; Generates unverified information: 1 selection | Lack of transparency: 3 selections; Lack of references for the provided responses: 3 selections; Unreliable Content Due to Lack of Factual Accuracy: 1 selection; Lack of Transparency in Data Handling: 1 selection |
| H13: Adaptation to growth | Lack of Adaptive Interface for User Proficiency: 3 selections; One-Size-Fits-All Guidance Approach: 2 selections; Lack of Adaptive Interface Design: 2 selections; Absence of Dynamic User Feedback Mechanism: 2 selections | One-Size-Fits-All Guidance Approach: 4 selections; Lack of Adaptive Interface for User Proficiency: 4 selections; Lack of Adaptive Interface Design: 3 selections; Absence of Dynamic User Feedback Mechanism: 3 selections; Inflexibility in Evolving User Permissions: 2 selections | One-Size-Fits-All Guidance Approach: 4 selections; Inflexibility in Evolving User Permissions: 2 selections; Lack of Adaptive Interface for User Proficiency: 2 selections; Lack of Adaptive Interface Design: 2 selections; Absence of Dynamic User Feedback Mechanism: 1 selection |
| H14: Context relevance | Generic Content Output Lacking Specificity: 2 selections; Shallow or Superficial Responses: 2 selections | Generic Content Output Lacking Specificity: 4 selections; Shallow or Superficial Responses: 3 selections | Generic Content Output Lacking Specificity: 3 selections; Shallow or Superficial Responses: 3 selections; No issues: 1 selection |
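Several issues in the table were selected for all three applications, consistent with the common usability issues discussed in Section 5.1.1. The Python sketch below sums selections for a subset of issues copied from the table and keeps only those selected for every application. The choice of subset is ours, the helper name `recurring_issues` is illustrative, and summing across applications assumes that selections are comparable between applications.

```python
from typing import Dict, List, Sequence, Tuple

APPS = ("Gemini", "ChatGPT", "Claude")

# (heuristic, issue) -> selections per application, copied from the findings table.
SELECTIONS: Dict[Tuple[str, str], Dict[str, int]] = {
    ("H1", "No time indication for task completion"): {"Gemini": 2, "ChatGPT": 4, "Claude": 3},
    ("H3", "Absence of Clear Reset Functionality"): {"Gemini": 3, "ChatGPT": 4, "Claude": 3},
    ("H5", "Absence of Undo/Redo Functionality"): {"Gemini": 2, "ChatGPT": 4, "Claude": 2},
    ("H9", "Insufficiently Detailed Error Messages"): {"Gemini": 1},
    ("H12", "Lack of references for the provided responses"): {"Gemini": 2, "ChatGPT": 3, "Claude": 3},
    ("H13", "One-Size-Fits-All Guidance Approach"): {"Gemini": 2, "ChatGPT": 4, "Claude": 4},
    ("H14", "Generic Content Output Lacking Specificity"): {"Gemini": 2, "ChatGPT": 4, "Claude": 3},
}


def recurring_issues(selections: Dict[Tuple[str, str], Dict[str, int]],
                     apps: Sequence[str] = APPS) -> List[Tuple[str, str, int]]:
    """Keep issues selected for every application, ranked by total selections."""
    rows = [
        (heuristic, issue, sum(counts.values()))
        for (heuristic, issue), counts in selections.items()
        if all(counts.get(app, 0) > 0 for app in apps)
    ]
    # sorted() is stable even with reverse=True, so ties keep the table's heuristic order.
    return sorted(rows, key=lambda row: row[2], reverse=True)


for heuristic, issue, total in recurring_issues(SELECTIONS):
    print(f"{heuristic}: {issue} ({total} selections)")
```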

| Heuristics | Mapped Australia’s AI Ethics Principles | Explanation |
|---|---|---|
| Visibility of system status | Transparency and explainability. | Systems that provide clear feedback about their processes support transparency by informing users about the state and actions of the system, fostering explainability. |
| Match between system and the real world | Human-centered values. Fairness. | Ensuring the system uses language and concepts familiar to users supports inclusiveness and respects user diversity, aligning with fairness and human-centered design. |
| User control and freedom | Human-centered values. Contestability. | Providing users control over system actions aligns with contestability, allowing users to challenge or modify outcomes. |
| Consistency and standards | Reliability. | Adherence to design standards enhances reliability by ensuring predictable and dependable system behavior. |
| Error prevention | Reliability and safety. Privacy protection and security. | Preventing errors minimizes the risk of harmful or unintended outcomes, contributing to system reliability and safeguarding user privacy. |
| Recognition rather than recall | Human-centered values. Fairness. | Reducing cognitive load promotes inclusiveness and accessibility, which align with fairness and human-centered values. |
| Flexibility and efficiency of use | Fairness. Human-centered values. | Providing flexibility accommodates diverse user needs, ensuring fairness and inclusivity. |
| Aesthetic and minimalist design | Transparency and explainability. Human-centered values. | Simplified designs improve understanding and accessibility, fostering transparency and supporting user-centered values. |
| Help users recognize, diagnose, and recover from errors | Reliability and safety. Contestability. | Assisting users in managing errors ensures system safety and aligns with contestability by empowering users to address issues. |
| Help and documentation | Transparency and explainability. Human-centered values. Fairness. | Comprehensive help resources support transparency and fairness by equipping users with the knowledge needed for effective interaction. |
| Guidance | Human, societal and environmental well-being. Transparency and explainability. Accountability. | Offering clear guidance ensures the system promotes well-being, maintains transparency, and upholds accountability. |
| Trustworthiness | Privacy protection and security. Transparency and explainability. Accountability. | Ensuring trustworthiness addresses critical ethical concerns, including data privacy, transparency, and responsibility. |
| Adaptation to growth | Human-centered values. | Designing systems to adapt to user growth supports inclusivity and ongoing relevance. |
| Context relevance | Human, societal and environmental well-being. Fairness. Transparency. | Aligning system functionality with context ensures fairness and promotes societal and environmental well-being. |
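Reading the mapping table in the other direction shows which heuristics inform each ethical principle. The Python sketch below inverts the table; the principle labels follow the table, except that the shortened labels "Reliability" (consistency and standards) and "Transparency" (context relevance) are normalized to "Reliability and safety" and "Transparency and explainability", which is our reading rather than the authors' wording. The helper name `principles_to_heuristics` is illustrative.

```python
from collections import defaultdict
from typing import Dict, List

TE = "Transparency and explainability"
HCV = "Human-centered values"
FAIR = "Fairness"
CONTEST = "Contestability"
RS = "Reliability and safety"
PRIV = "Privacy protection and security"
WELL = "Human, societal and environmental well-being"
ACC = "Accountability"

# Heuristic -> mapped principles, following the mapping table.
HEURISTIC_TO_PRINCIPLES: Dict[str, List[str]] = {
    "Visibility of system status": [TE],
    "Match between system and the real world": [HCV, FAIR],
    "User control and freedom": [HCV, CONTEST],
    "Consistency and standards": [RS],  # table label: "Reliability" (normalized)
    "Error prevention": [RS, PRIV],
    "Recognition rather than recall": [HCV, FAIR],
    "Flexibility and efficiency of use": [FAIR, HCV],
    "Aesthetic and minimalist design": [TE, HCV],
    "Help users recognize, diagnose, and recover from errors": [RS, CONTEST],
    "Help and documentation": [TE, HCV, FAIR],
    "Guidance": [WELL, TE, ACC],
    "Trustworthiness": [PRIV, TE, ACC],
    "Adaptation to growth": [HCV],
    "Context relevance": [WELL, FAIR, TE],  # table label: "Transparency" (normalized)
}


def principles_to_heuristics(mapping: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Invert the mapping so each principle lists the heuristics that inform it."""
    inverted: Dict[str, List[str]] = defaultdict(list)
    for heuristic, principles in mapping.items():
        for principle in principles:
            inverted[principle].append(heuristic)
    return dict(inverted)


for principle, heuristics in principles_to_heuristics(HEURISTIC_TO_PRINCIPLES).items():
    print(f"{principle}: {len(heuristics)} heuristic(s)")
```

For example, contestability is informed only by user control and freedom and by error recovery, which suggests where an evaluation would need to look for evidence about that principle.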

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Batool, A.; Hussain, W. Evaluating the Usability and Ethical Implications of Graphical User Interfaces in Generative AI Systems. Computers 2025, 14, 418. https://doi.org/10.3390/computers14100418
