Preserving Songket Heritage Through Intelligent Image Retrieval: A PCA and QGD-Rotational-Based Model

Abstract

1. Introduction

2. Literature Review

2.1. Image Retrieval Challenges

2.2. Domain-Specific Context: Malaysia’s Cultural Heritage

2.2.1. Songket Motifs

2.2.2. Research on Songket Motif Retrieval Systems

2.3. Image Retrieval Model Comparison

2.3.1. Bag of Visual Words Model

2.3.2. Min-Hash Model

2.3.3. SVD-SIFT Model

2.3.4. Visually Salient Riemannian Space Model

2.3.5. Fourier–Mellin Transformation Model

2.3.6. Shape-Based Image Retrieval Model

2.3.7. Palembang Motif Image Retrieval Using Canny Edge Detection, PCA, GLCM, and KNN Techniques

2.4. Discussion

3. Methodology

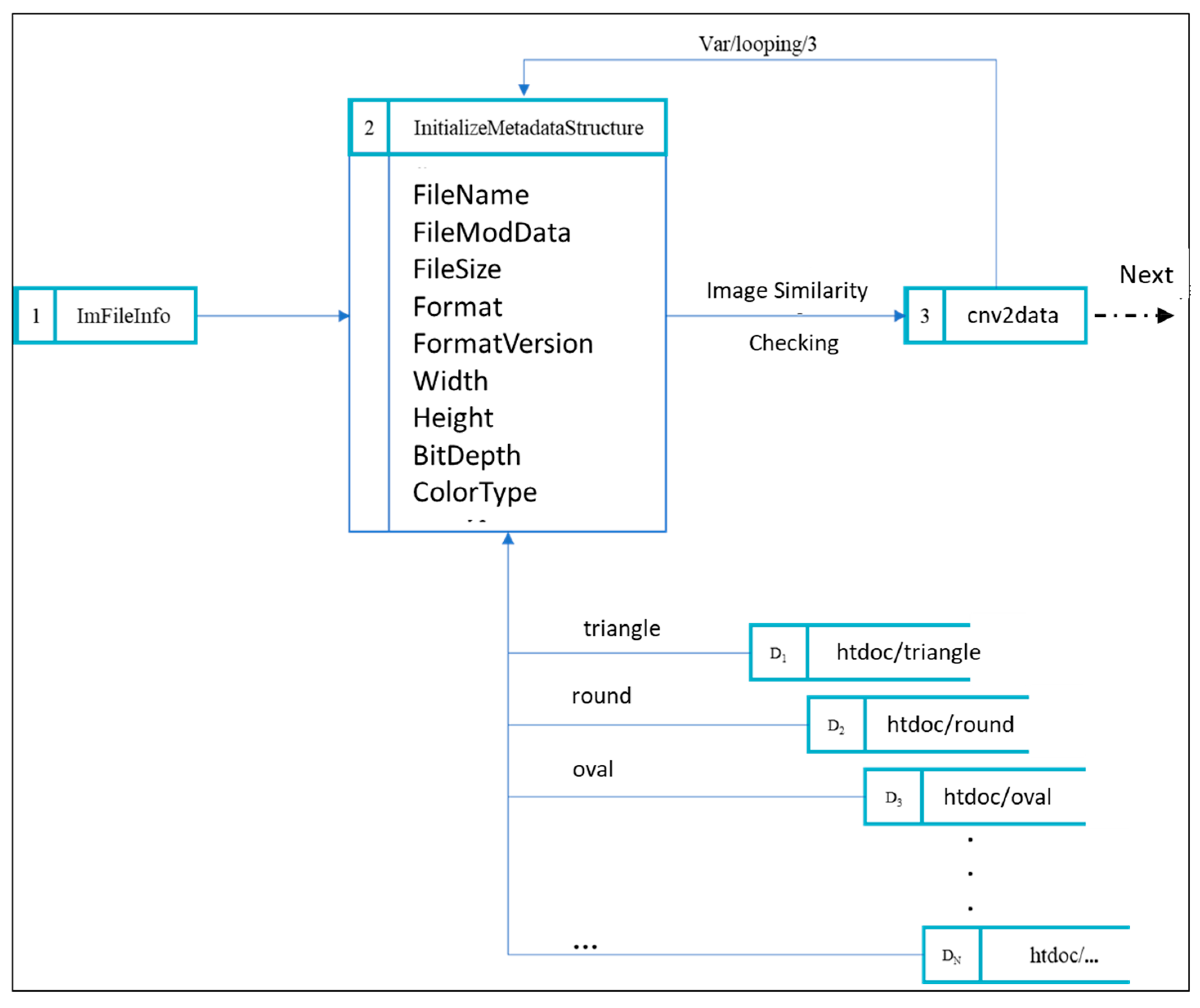

3.1. Data (Pre-Processing)

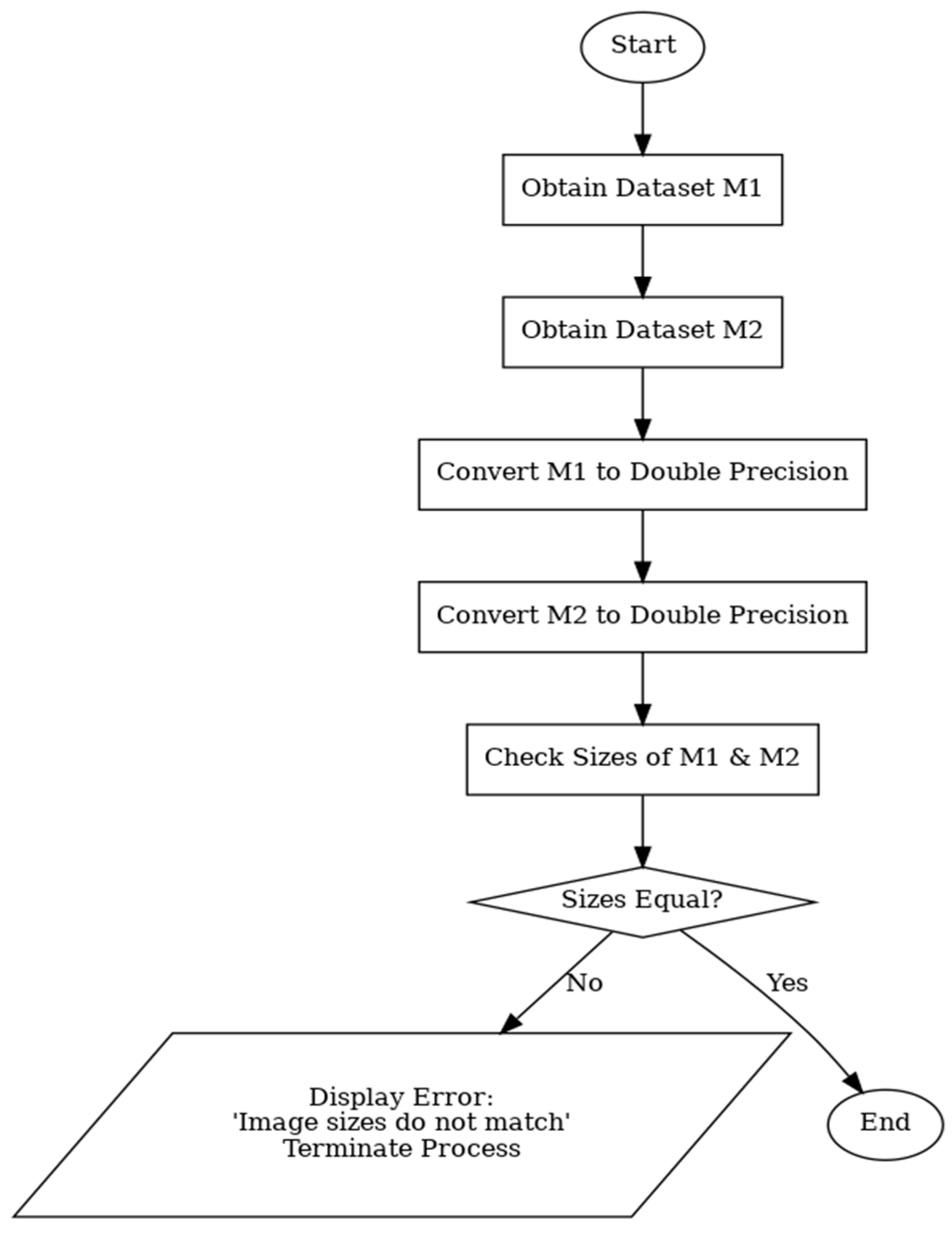

3.2. Model Development Process

- Computing the covariance matrix from the scaled image data.

- Calculating eigenvectors and eigenvalues. The first eigenvector, which passes through the data centroid, defines the best-fit line (the direction of greatest variance), while the second captures the smaller, remaining variance.

- Reducing dimensionality by ranking the eigenvectors by their eigenvalues, from highest to lowest, and retaining the eigenvector with the highest eigenvalue as the principal component.

- Deriving PCA scores using Equation (1).

- Classifying the PCA scores.

- The eigenvectors and eigenvalues, denoted X, are passed to Stage II, Part II, for similarity matching.
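The PCA steps above can be illustrated for the two-dimensional case, for example on the (x, y) coordinates of a motif's foreground pixels. This is a minimal pure-Python sketch under stated assumptions, not the authors' implementation: it uses the sample covariance (n − 1 denominator, so at least two points are needed), the closed-form eigen-decomposition of a symmetric 2 × 2 matrix, and assumes that PCA scores are the projections of the centred data onto the ranked eigenvectors, because Equation (1) is not reproduced here. The names `covariance_2d`, `principal_axes`, and `pca_scores` are hypothetical.

```python
import math

# Hypothetical helpers: a 2-D PCA sketch, not the paper's code.

def covariance_2d(points):
    """Return the centroid and the 2x2 sample covariance (n - 1 denominator)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    return (mx, my), [[sxx, sxy], [sxy, syy]]

def principal_axes(cov):
    """Eigenpairs of a symmetric 2x2 matrix, ranked by eigenvalue (largest first)."""
    a, b, d = cov[0][0], cov[0][1], cov[1][1]
    mid = (a + d) / 2
    r = math.hypot((a - d) / 2, b)
    lam1, lam2 = mid + r, mid - r              # lam1 >= lam2 always
    if abs(b) > 1e-12:
        vx, vy = b, lam1 - a                   # solves (cov - lam1 * I) v = 0
    else:
        vx, vy = (1.0, 0.0) if a >= d else (0.0, 1.0)  # matrix already diagonal
    norm = math.hypot(vx, vy)
    v1 = (vx / norm, vy / norm)                # best-fit line direction through the centroid
    v2 = (-v1[1], v1[0])                       # orthogonal axis: the less significant variance
    return [(lam1, v1), (lam2, v2)]

def pca_scores(points, k=1):
    """Project centred points onto the top-k eigenvectors (assumed score definition)."""
    (mx, my), cov = covariance_2d(points)
    axes = principal_axes(cov)[:k]             # dimensionality reduction: keep k components
    return [[(x - mx) * v[0] + (y - my) * v[1] for _, v in axes] for x, y in points]
```

For the collinear points (0, 0), (1, 1) and (2, 2), `principal_axes` returns eigenvalues 2 and 0, and `pca_scores` with k = 1 gives approximately −1.414, 0 and 1.414: all of the variance lies along the first eigenvector, so a single component suffices.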

- A rotated object derived from the original image.

- Alignment of rotated objects of differing sizes for consistency.

- When `a` and `b` are vectors of equal length, the output is the 2 × 2 covariance matrix of the two variables.

- When `a` and `b` are matrices of observations, the call is equivalent to `cov(a(:), b(:))`: both matrices are flattened into column vectors, so they must have identical dimensions.

- When `a` and `b` are scalars, the function returns a 2 × 2 matrix of zeros; when they are empty arrays, it returns a 2 × 2 matrix of NaN values.
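The three cases above match the documented behaviour of MATLAB's two-input `cov`. A hedged pure-Python sketch of the same rules follows. The names `cov_pair` and `_flatten` are hypothetical, and for brevity the sketch only checks that both inputs have the same number of elements rather than identical dimensions. It flattens row by row instead of column by column; because both inputs are flattened the same way, the element pairs, and therefore the covariance, are unchanged.

```python
import math

def _flatten(m):
    """Turn a scalar, a vector (list) or a matrix (list of rows) into one flat list."""
    if isinstance(m, (int, float)):
        return [float(m)]
    if m and isinstance(m[0], (list, tuple)):
        return [x for row in m for x in row]   # row-major; pairing matches a(:) for equal shapes
    return list(m)

def cov_pair(a, b):
    """2x2 covariance of a and b treated as two variables (hypothetical sketch)."""
    fa, fb = _flatten(a), _flatten(b)
    if len(fa) != len(fb):
        raise ValueError("a and b must have the same number of elements")
    n = len(fa)
    if n == 0:                                 # empty inputs -> 2x2 block of NaN
        return [[math.nan, math.nan], [math.nan, math.nan]]
    denom = n - 1 if n > 1 else 1              # one observation -> normalise by 1 (zeros)
    ma, mb = sum(fa) / n, sum(fb) / n
    da = [x - ma for x in fa]
    db = [y - mb for y in fb]
    saa = sum(x * x for x in da) / denom
    sbb = sum(y * y for y in db) / denom
    sab = sum(x * y for x, y in zip(da, db)) / denom
    return [[saa, sab], [sab, sbb]]
```

For example, `cov_pair(5, 7)` returns `[[0.0, 0.0], [0.0, 0.0]]` and `cov_pair([1, 2, 3], [1, 2, 3])` returns `[[1.0, 1.0], [1.0, 1.0]]`.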

- Image format conversion;

- Evaluation of image features extracted;

- Declaration of arrays;

- Declaration of image objects;

- Geometric feature calculation through symmetrical analysis;

- Matching the closest points based on feature similarity;

- Estimating binary points for precision matching;

- Storing the estimated matches for image classification.
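The closest-point matching steps can be sketched as a brute-force nearest-neighbour search over feature vectors. The paper's QGD formula is not reproduced in this section, so the sketch assumes a generic quadratic-form distance, sqrt((x − y)ᵀ W (x − y)), which reduces to the Euclidean distance when W is the identity. `quadratic_distance`, `match_closest`, and the `max_dist` cut-off are hypothetical.

```python
import math

def quadratic_distance(x, y, weights=None):
    """sqrt((x - y)^T W (x - y)); W defaults to the identity (plain Euclidean).
    W is assumed to be positive semi-definite so the value under the root is >= 0."""
    d = [xi - yi for xi, yi in zip(x, y)]
    if weights is None:
        return math.sqrt(sum(v * v for v in d))
    q = sum(d[i] * weights[i][j] * d[j] for i in range(len(d)) for j in range(len(d)))
    return math.sqrt(q)

def match_closest(query_feats, stored_feats, max_dist=math.inf, weights=None):
    """Pair each query feature with its nearest stored feature; keep pairs within max_dist.
    Returns (query_index, stored_index, distance) tuples, i.e. the stored matches."""
    matches = []
    for qi, q in enumerate(query_feats):
        best_j, best_d = None, math.inf
        for j, s in enumerate(stored_feats):
            dist = quadratic_distance(q, s, weights)
            if dist < best_d:
                best_j, best_d = j, dist
        if best_j is not None and best_d <= max_dist:
            matches.append((qi, best_j, best_d))
    return matches
```

With W set to the identity, `match_closest([(0, 0), (5, 5)], [(1, 0), (5, 6), (10, 10)])` pairs each query point with the stored point one unit away and returns `[(0, 0, 1.0), (1, 1, 1.0)]`.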

3.3. Image Rotation

- Declaration of the `rot90` function.

- Identification of features from both the query and stored images.

- The query image is flipped and matched against stored images to detect rotational differences through alignment.

- A loop is executed based on the number of stored images.

- If no rotational match is found, the process terminates.

- If a match is found, a re-alignment process is initiated.

- Matching the most similar points based on feature similarity or proximity.

- Estimation of corresponding points to achieve accurate alignment.

- Results are finalized and forwarded to the image indexing phase.
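The rotation-matching loop above can be sketched as follows. The description mentions flipping the query; this sketch instead tries the four 90° orientations of the query (a 90° rotation is itself a flip followed by a transpose) against every stored image and keeps the images whose best score clears a threshold. It assumes binary images stored as equally sized 2-D lists and uses the fraction of agreeing pixels as a stand-in similarity; `rotate_ccw`, `pixel_agreement`, `find_rotational_matches`, and the 0.9 threshold are all hypothetical.

```python
def rotate_ccw(img):
    """Rotate a 2-D list 90 degrees counter-clockwise (transpose, then reverse rows)."""
    return [list(row) for row in zip(*img)][::-1]

def pixel_agreement(a, b):
    """Stand-in similarity: fraction of identical pixels; 0.0 if the shapes differ."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        return 0.0
    total = sum(len(r) for r in a)
    same = sum(x == y for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return same / total if total else 0.0

def find_rotational_matches(query, stored_images, threshold=0.9):
    """Loop over the stored images, trying 0/90/180/270-degree versions of the query.
    Returns (image_index, quarter_turns, score) for every image that matches."""
    results = []
    for idx, stored in enumerate(stored_images):
        candidate, best_k, best_score = query, 0, -1.0
        for k in range(4):
            score = pixel_agreement(candidate, stored)
            if score > best_score:
                best_k, best_score = k, score
            candidate = rotate_ccw(candidate)
        if best_score >= threshold:            # rotational match: pass on for re-alignment
            results.append((idx, best_k, best_score))
    return results                             # empty list: no rotational match, stop here
```

An empty result corresponds to the "no rotational match" branch; otherwise the recorded number of quarter turns tells the re-alignment step how to orient the query.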

- If k == 1: Matrix A is flipped along dimension 2 and then permuted to switch the first and second dimensions.

- If k == 2: Matrix A is flipped twice, first along dimension 1 and then dimension 2.

- If k == 3: The matrix is first permuted and then flipped along dimension 2.

- If k == 0: No rotation is applied and A is returned unchanged.

- If k is not an integer, an error is raised.
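The case analysis above can be transcribed directly into a small pure-Python function for 2-D lists. The names `flip`, `permute`, and `rot90` are chosen to mirror the description and are not a real library API. Reducing k modulo 4 is an assumption here (it is how MATLAB's `rot90` reaches the k == 0 case for multiples of 4). Each nonzero case rotates the matrix counter-clockwise by k × 90°.

```python
def flip(a, dim):
    """Hypothetical helper: dim 1 reverses the row order, dim 2 reverses each row."""
    if dim == 1:
        return [list(r) for r in a[::-1]]
    if dim == 2:
        return [list(r)[::-1] for r in a]
    raise ValueError("dim must be 1 or 2 for a 2-D list")

def permute(a):
    """Hypothetical helper: swap dimensions 1 and 2 (transpose) of a 2-D list."""
    return [list(r) for r in zip(*a)]

def rot90(a, k=1):
    """Rotate a 2-D list counter-clockwise by k * 90 degrees, following the cases above."""
    if not float(k).is_integer():
        raise ValueError("k must be an integer")
    k = int(k) % 4                        # assumed: 4, 8, -4, ... fall into the k == 0 case
    if k == 1:
        return permute(flip(a, 2))        # flip along dimension 2, then permute
    if k == 2:
        return flip(flip(a, 1), 2)        # flip along dimension 1, then dimension 2
    if k == 3:
        return flip(permute(a), 2)        # permute, then flip along dimension 2
    return [list(r) for r in a]           # k == 0: unchanged (returned as a copy)
```

For `A = [[1, 2], [3, 4]]`, `rot90(A)` gives `[[2, 4], [1, 3]]` and `rot90(A, 3)` gives `[[3, 1], [4, 2]]`, that is, a clockwise quarter turn.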

- Extraction of feature vector similarity results.

- Sorting of similarity values.

- Re-plotting the sorted indices.

- Ranking indices from highest to lowest similarity percentage.

- Final classification output is generated.

- The retrieval results are displayed on the songket motif image retrieval system interface.
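The sorting and ranking steps can be sketched as below, assuming each stored image already has a similarity score in [0, 1]; `rank_results` and its `top_n` parameter are hypothetical. Python's `sorted` is stable even with `reverse=True`, so images with equal scores keep their original order.

```python
def rank_results(similarities, image_ids=None, top_n=None):
    """Sort similarity scores from highest to lowest and return (rank, id, percent) rows."""
    if image_ids is None:
        image_ids = list(range(len(similarities)))
    # Sort the indices, not the scores, so each score stays linked to its image.
    order = sorted(range(len(similarities)), key=lambda i: similarities[i], reverse=True)
    if top_n is not None:
        order = order[:top_n]
    return [(rank, image_ids[i], round(100 * similarities[i], 2))
            for rank, i in enumerate(order, start=1)]
```

For example, `rank_results([0.42, 0.91, 0.77], ["a", "b", "c"])` returns `[(1, "b", 91.0), (2, "c", 77.0), (3, "a", 42.0)]`, ready to be displayed in rank order.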

3.4. Definition of Technique

1. PCA Technique

2. RPCA Technique

3. QGD Technique

4. PCAQGD Technique

5. PCAQGD + Rotation Technique (Proposed Method)

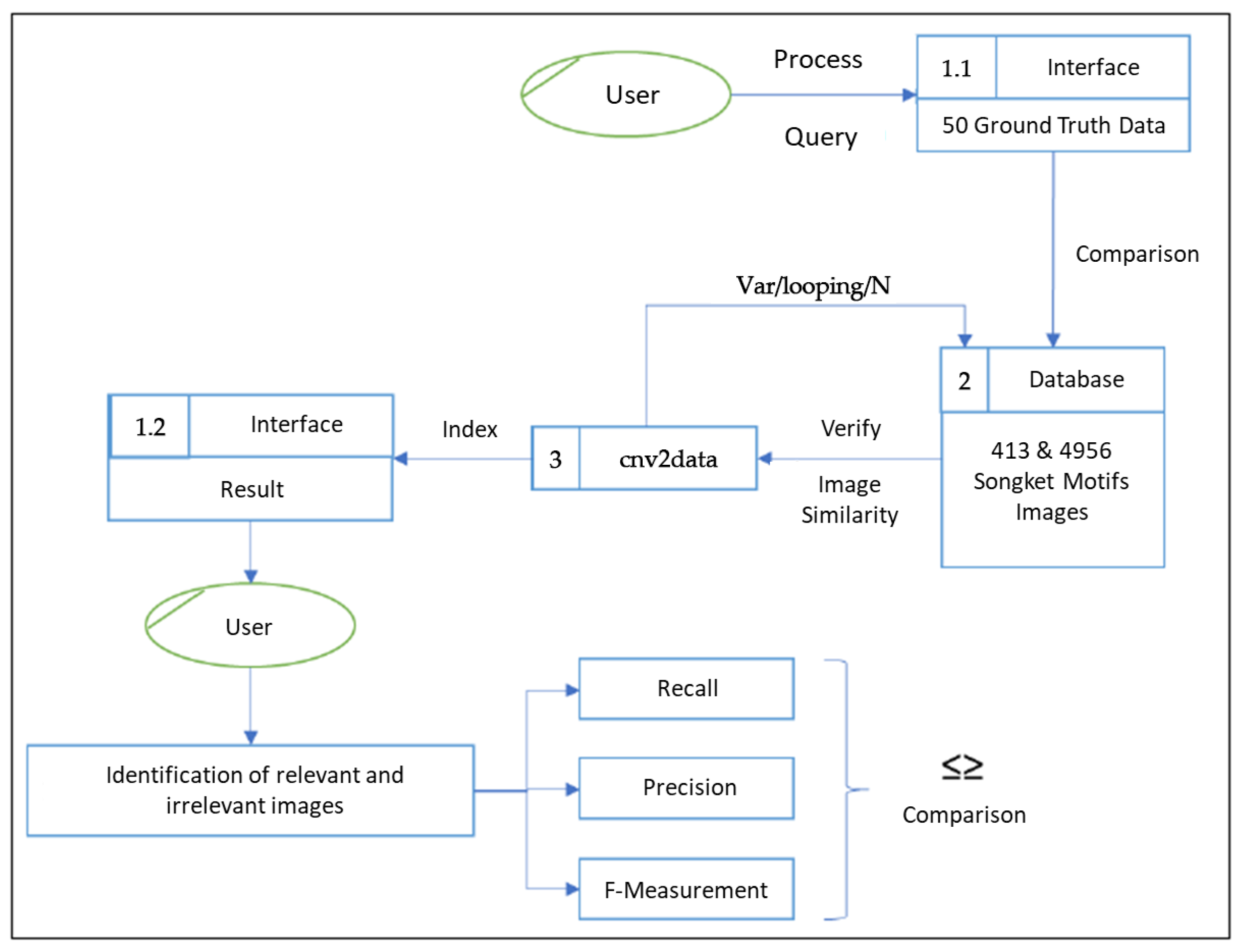

4. Testing and Evaluation

4.1. Testing

4.2. Result

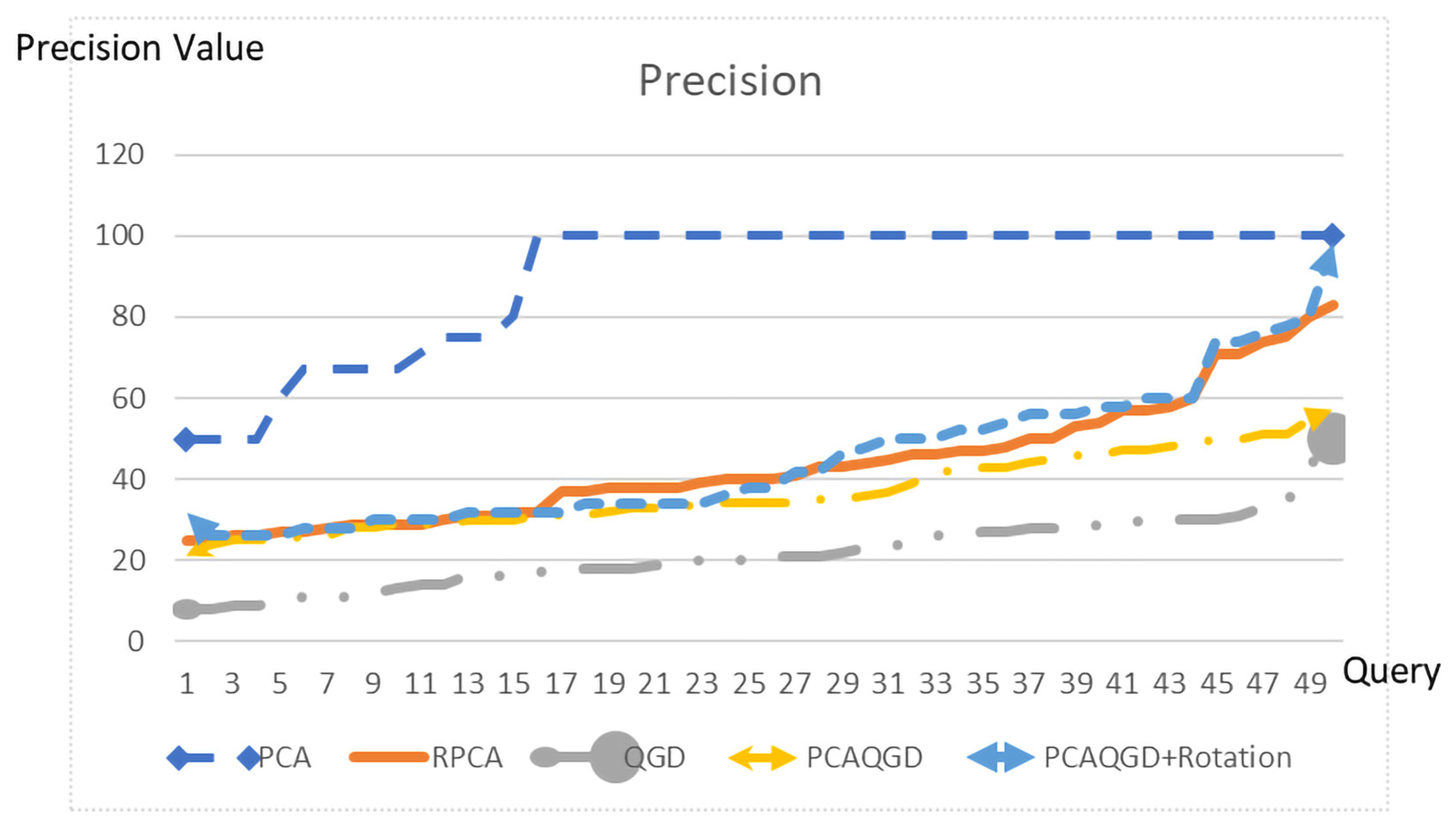

4.2.1. Precision Evaluation

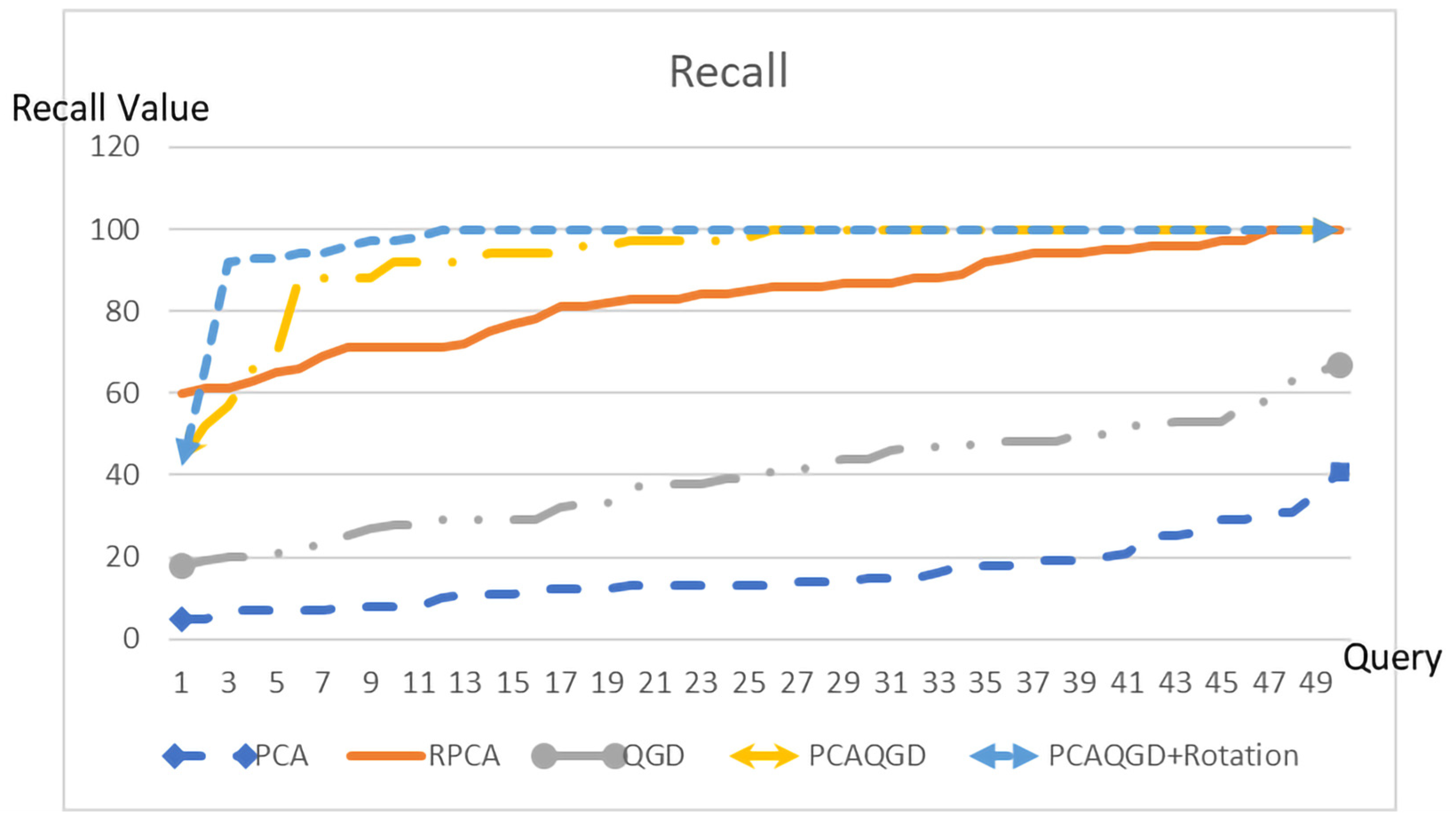

4.2.2. Recall Evaluation

4.2.3. F-Measurement
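Sections 4.2.1 to 4.2.3 evaluate precision, recall, and the F-measure. As a reference point, the sketch below computes the standard set-based definitions: P = |relevant ∩ retrieved| / |retrieved|, R = |relevant ∩ retrieved| / |relevant|, and F_β = (1 + β²)PR / (β²P + R), with β = 1 giving the usual F1 score. It assumes binary relevance judgements for a single query, and the function name is hypothetical; it does not reproduce the paper's test data or results.

```python
def precision_recall_f(retrieved, relevant, beta=1.0):
    """Standard precision, recall and F-beta for one query (binary relevance)."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)                  # relevant images actually retrieved
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision == 0 and recall == 0:
        return precision, recall, 0.0                 # avoid 0 / 0 in the F formula
    b2 = beta * beta
    f = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f
```

For instance, retrieving images {1, 2, 3, 4} when {2, 4, 5} are relevant gives P = 0.5, R = 2/3 and F1 = 4/7 ≈ 0.571.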

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Songket Cloth Section | Songket Motif | Motif Image | Philosophy |
|---|---|---|---|
| Head of the cloth | Bunga ketola/Petola |  | Symbolizes the loyalty between the people and their king. |
| Body of the cloth | Bunga Anggerik/Orkid (orchid) |  | Represents the character of a woman, who is delicate and must be nurtured with noble values and cultural refinement, because women, as the mothers of households, shape the future generations of the ummah. |
| Cloth edge/side panel/foot of the cloth | Bunga corong |  | Symbolizes the harmony between human beings and the natural world created by the Divine. |
| Scattered arrangement | Bunga Bebaling |  | Reflects the axis of the human life cycle. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yusof, N.; Abd. Majid, N.A.; Ismail, A.; Hussain, N.H. Preserving Songket Heritage Through Intelligent Image Retrieval: A PCA and QGD-Rotational-Based Model. Computers 2025, 14, 416. https://doi.org/10.3390/computers14100416

Yusof N, Abd. Majid NA, Ismail A, Hussain NH. Preserving Songket Heritage Through Intelligent Image Retrieval: A PCA and QGD-Rotational-Based Model. Computers. 2025; 14(10):416. https://doi.org/10.3390/computers14100416

Chicago/Turabian Style: Yusof, Nadiah, Nazatul Aini Abd. Majid, Amirah Ismail, and Nor Hidayah Hussain. 2025. "Preserving Songket Heritage Through Intelligent Image Retrieval: A PCA and QGD-Rotational-Based Model" Computers 14, no. 10: 416. https://doi.org/10.3390/computers14100416

APA Style: Yusof, N., Abd. Majid, N. A., Ismail, A., & Hussain, N. H. (2025). Preserving Songket Heritage Through Intelligent Image Retrieval: A PCA and QGD-Rotational-Based Model. Computers, 14(10), 416. https://doi.org/10.3390/computers14100416