1. Introduction

Due to extensive research in this field, biometric recognition has become an important part of everyday applications in many countries, such as forensics, surveillance systems, attendance systems, smartphone unlocking, Automated Teller Machines (ATMs) and border control systems [1]. The widespread use of biometric data has made end users concerned about the privacy of their biometric data. In a password-protected system, if the password is compromised or hacked, its owner can simply reset it. In contrast to passwords and Personal Identification Numbers (PINs), human biometrics are so intrinsically tied to their owner that they cannot be changed: if someone's facial data are compromised, the owner cannot change his/her face. Biometric data such as the face, fingerprint, hand geometry, voice, ear, iris and gait remain unchanged for a user. Therefore, it is crucial to take all possible measures to protect users' biometric data. Several measures that ensure the privacy of end users are already in practice [2,3].

One way to ensure the end user's privacy is to combine features from more than one biometric trait [4]. Multimodal biometrics not only improve reliability and accuracy but also help secure the end user's data [5]. Because the data are stored in fused form, they are more difficult to reuse even if compromised, since a template fused from several traits does not pertain to any single biometric modality. Although fusing several biometric traits produces a more effective biometric system, fusion at the feature level is complex because features from different modalities may be incompatible and the fused feature set has a larger number of dimensions. Once feature level fusion is achieved, however, it yields more effective information than other levels of fusion, such as sensor level and score level fusion [1].

Biometrics may be described as the measurement of physiological or behavioral characteristics used to identify a person. Biometrics are mainly classified into two types: physiological and behavioral. Physiological biometrics measure physical characteristics of the human body such as the fingerprint, face, iris and hand geometry, whereas behavioral biometrics measure the behavior of a person while performing some task, for example, voice, signature, gait and keystroke dynamics [6]. Physiological biometrics are comparatively more accurate and reliable. However, their security is challenged by the fact that they are more public and open in nature [6]. A person's face and fingerprints are exposed to the public and can easily be captured by anyone; once a high-resolution photograph is obtained, anyone can re-create and reuse the face or fingerprint [6]. Although behavioral biometrics are not as accurate and reliable as physiological ones, they are more personal to the owner and cannot be copied easily.

Multimodal biometrics can also enhance the security of biometric data. In a multimodal biometric system, two or more biometric traits (modalities) are stored in fused form, which provides more security because regenerating or replicating a single trait from a fused template is very difficult. Fusion of face with fingerprint, face with iris, fingerprint with iris and fingerprint with finger vein are among the common combinations for multimodal biometric systems [7,8,9]. All of these combinations involve physiological traits only, which, as noted above, are relatively open to the public. Therefore, it is essential to combine a physiological trait with a behavioral trait to ensure both accuracy and privacy [10]. Furthermore, combining physiological and behavioral traits can increase the accuracy of a biometric system and reduce spoofing attacks.

Biometric data stored in unencrypted form are always vulnerable to security attacks. One example of such a breach is the “BioStar 2” database [11]. The database stored biometric data, including fingerprints, facial recognition records and user passwords, in unencrypted form. The breach was discovered in 2019 by a team of security researchers. The database's services were used by thousands of institutions in developed and developing countries, including the United Kingdom, the United States of America, the United Arab Emirates, Finland, Japan and Germany, among many others. The security researchers obtained the data simply through a web browser by applying a few operations. This shows that users' biometric data can be compromised; therefore, they should be stored in a fused and encrypted form so that they cannot be reused even if compromised.

With the privacy of users' biometric data in mind, this research proposes and develops a feature level fusion scheme based on a Convolutional Neural Network (CNN) for fingerprints and online signatures. Two CNN-based feature level fusion schemes are implemented: early fusion and late fusion. The main contributions of this study are as follows.

A multimodal biometric dataset of 1400 fingerprints and 1400 online signatures has been collected from 280 subjects. Because only a few databases contain samples of both fingerprints and online signatures, there was a strong need to collect such data at a large scale from real users. In addition, an Android application was developed to collect the online signatures.

A CNN model is developed that fuses features from fingerprints and online signatures into a single biometric template, in two variants: early fusion and late fusion.

Several experiments are performed by tuning the CNN hyperparameters for both early and late fusion on the new multimodal dataset; the best results, obtained with early fusion, are compared with those of related multimodal biometric systems.

The rest of the paper is organized as follows. Section 2 presents an overview of research in the field of biometrics, especially on feature level fusion. Section 3 describes the database of fingerprints and online signatures collected for this research. Section 4 explains in detail the steps of the proposed system based on both the early and the late feature fusion schemes, along with the experimental setup. Section 5 discusses the experimental results and compares them with related multimodal biometric systems, while Section 6 concludes the paper and suggests future directions for this research.

2. Related Work

The effectiveness of feature level biometric fusion is evident from the work of many researchers [4,7,8,9]. Biometric systems based on fusing features from multiple biometric traits offer higher accuracy than systems based on a single trait. For example, Ahmad et al. [12] fused features from the palmprint and face to achieve higher accuracy than either the palmprint or the face alone. In their research, the features were extracted using Gabor filters. Because the resulting feature vector is high-dimensional, its dimensions were reduced using Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA). The features were then combined serially, and a Euclidean distance classifier was used to classify the fused template. The authors experimented on 400 images from the ORL face database and 400 images from the PolyU palmprint database, and the Euclidean distance classifier achieved 99.5% accuracy on the fused data. Similarly, Chanukya et al. [13] fused features from fingerprint and ear biometrics. They extracted the features using a modified region growing algorithm and Gabor filters, achieving an accuracy of 98% with a conventional neural network classifier.

Selecting a good fusion technique has always been important for feature level biometric fusion, and the research community has adopted various fusion schemes. Thepade et al. [14] fused features from the human iris and palmprint. First, the palmprint and iris images were fused; the features were then reduced using the energy distributions of different transforms, and the various energy transforms were compared. The highest Genuine Acceptance Rate (GAR), 58.40%, was achieved with the Hartley transform. Another method for fusing iris and fingerprint was proposed by Guesmi et al. [15]. They extracted iris and fingerprint features using the curvelet transform and selected the relevant features, which were matched against the database using possibility matching, achieving a GAR of 98.3%. Similarly, Xing et al. [16] proposed a new method for feature level fusion of gait and face, especially suited to images obtained from a closed-circuit television (CCTV) camera. They extracted eigenvalues from the input images and minimized the difference between the values from the same person. The features were fused by projecting the features of the same person into a coupled subspace. To demonstrate their approach, they constructed a chimeric database of 40 subjects from two publicly available databases, CASIA gait and ORL face, and obtained an accuracy of 98.7% with a nearest neighbor classifier.

The research community has also reported different methods for extracting features from biometric modalities. For example, Sheikh et al. [17] used the Dual-Tree Discrete Wavelet Transform (DT-DWT) to extract features from the palmprint and finger knuckle print and achieved an accuracy of 99.5% with an AdaBoost classifier. Likewise, Haghighat et al. [18] used Discriminant Correlation Analysis (DCA) for feature extraction from different sets of biometric modalities. Their work showed experimentally that DCA maximizes the differences between classes while minimizing the differences within each class. They performed experiments on fusing face with ear and fingerprint with iris, and achieved accuracies above 99% with a k-Nearest Neighbors (kNN) classifier. In 2016, Jagadiswary et al. [19] presented their research on fusing features from the fingerprint, finger vein and retina. Fingerprint features were obtained by extracting minutiae points. Retina features were obtained by segmenting the blood vessels with threshold techniques based on Kirsch's templates. The blood vessel pattern of the finger vein was obtained using the maximum curvature method and the repeated line tracking method. The features were fused by concatenation, and the fused template was also encrypted for further security. They achieved a maximum GAR of 95% and reduced the False Acceptance Rate (FAR) to as low as 0.01%. Some researchers have even combined different feature extraction techniques to achieve better results. To fuse features from the fingerprint, finger vein and face, Xin et al. [8] calculated Fisher vectors of the extracted features and then trained three classifiers, a Support Vector Machine (SVM), a Bayes classifier and kNN, for recognition. The authors collected a dataset from 50 students with 5 samples per student and also tested the system with fake data; the maximum accuracy achieved by the system was 93%. Their experimental results show that the kNN classifier outperformed the other two classifiers.

Some research has also demonstrated the power of biometric fusion by using several levels of fusion simultaneously. Azome et al. [20] combined feature level and score level fusion in a multimodal biometric system based on iris and face. They applied a combination of feature extraction methods to face images from the ORL face database and iris images from the CASIA iris database, and after combining the features they applied weighted score fusion. Using a nearest neighbor classifier, they achieved up to 98.7% accuracy. Similarly, Toygar et al. [21] combined features from the ear and the face profile of identical twins, using both feature level and score level fusion to achieve the best recognition results. They used five feature extraction methods: PCA, Local Binary Patterns (LBP), Scale-Invariant Feature Transform (SIFT), Local Phase Quantization (LPQ) and Binarized Statistical Image Features (BSIF). Their best recognition rate was 76%, obtained with a kNN classifier. Another notable work was published by Sharifi et al. [22], who fused the face and iris modalities at the feature, score and decision levels in a novel multimodal fusion scheme. Features were extracted using the Log-Gabor transform, and LDA was used to reduce the size of the feature set. The best GAR achieved was 98.9%. In another study, Meena et al. [23] fused features from the face, fingerprints and iris. The authors used a dataset of 280 subjects, each contributing 4 samples of each of the 3 biometric traits. A dataset of 2400 samples was used. Features were extracted using the Local Derivative Ternary Pattern (LDTP). Two classifiers, kNN and SVM, were trained to assess accuracy, and the proposed system achieved a maximum accuracy of 99.5%.

Recently, deep learning techniques have shown significant improvements over traditional methods in biometric recognition [24,25,26]. Deep feature level fusion has been applied in several studies to fuse features from different biometric traits in multimodal biometric systems. In recent years, Xin et al. [8] proposed a multimodal biometric recognition system based on feature level fusion that combined features from fingerprints, finger veins and facial images for person recognition. Zhang et al. [27] fused periocular and iris features using a deep CNN model for mobile biometric recognition. To generate a specific and concise representation of each modality, they applied maxout units in the CNN and then merged the distinct features of both modalities through weighted concatenation. Similarly, Umer et al. [28] combined periocular and iris features for person recognition. They deployed different deep CNN frameworks, such as ResNet-50, VGG-16 and Inception-v3, for feature extraction and classification, and demonstrated that combining features from several traits improved the performance of the system.

Similarly, Surendra et al. [29] developed a biometrics-based attendance system using a deep learning based feature fusion technique on iris features. Jomaa et al. [30] fused features from fingerprints and electrocardiograms (ECG) to detect presentation attacks. They deployed three architectures, a fully connected network, a 1-D CNN and a 2-D CNN, for ECG feature extraction and an EfficientNet for fingerprint feature extraction. Recently, Tiong et al. [31] combined periocular and facial features in a multimodal deep learning network for facial recognition, and further improved recognition accuracy by combining textural and multimodal features. The recent research discussed in this section is summarized in Table 1.

As seen in Table 1, the literature on multimodal biometric fusion covers a diverse range of trait combinations. However, most multimodal systems fuse physiological traits only. Because most of a person's physiological traits are open to the public, it is beneficial to fuse physiological trait(s) with behavioral trait(s). To the best of our knowledge, there has been no previous research on feature level fusion of fingerprints and online signatures using deep learning techniques. Feature level fusion of fingerprint and online signature was proposed in [32]; however, only the fusion scheme was proposed and no experimental results were presented. Imran et al. [33] also fused fingerprints and online signatures at the feature level, but they did not apply deep learning, using conventional machine learning algorithms such as kNN and SVM instead. Their system achieved an accuracy of 98.5% on a chimeric dataset synthesized from the Fingerprint Verification Competition (FVC) 2006 fingerprint dataset and the MCYT-100 signature corpus. This research presents feature level fusion of the fingerprint, the most common physiological biometric, with the signature, the most common behavioral biometric, for biometric identification using a deep learning approach.

3. Dataset

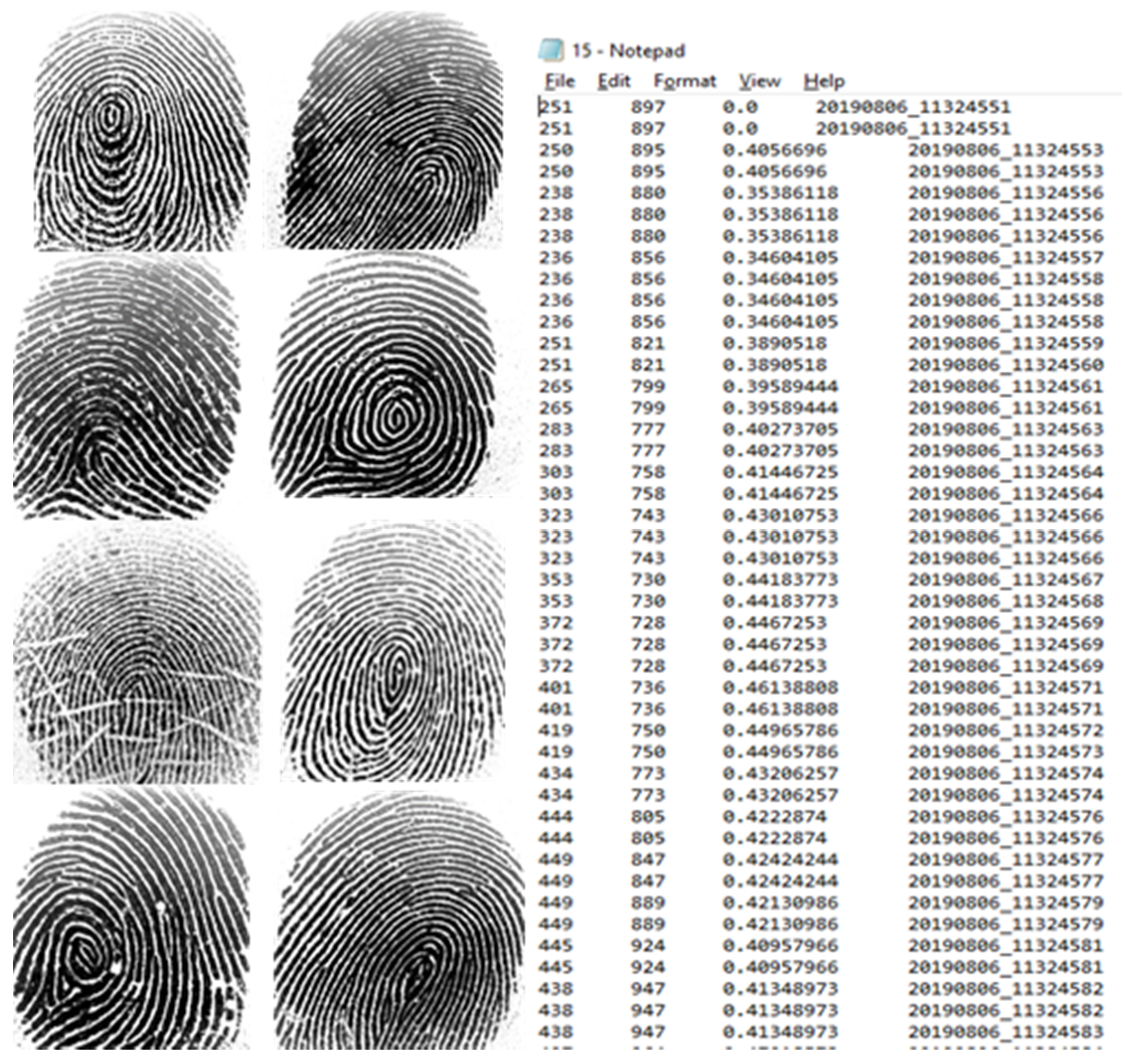

A novel multimodal biometric dataset of fingerprints and online signatures was collected for this paper. Most of the data were collected from volunteer undergraduate students at four universities and campuses: University of Sindh Dadu Campus, University of Sindh Mirpurkhas Campus, Quaid-e-Awam University of Engineering, Science & Technology Nawabshah and Sindh Agriculture University Tandojam, Pakistan. The subjects were between 19 and 23 years old. The dataset consists of 1400 fingerprints and 1400 online signatures collected from 280 subjects. Each subject contributed five fingerprint samples of the same finger (the ring finger of the left hand) and five samples of their online signature. Fingerprint data were collected using a SecuGen Hamster Plus fingerprint reader, which uses a Light Emitting Diode (LED) light source to capture fingerprint images and saves them in .bmp format. The captured images have a resolution of 500 Dots Per Inch (DPI) and a size of 260 × 300 pixels, and were stored in gray-scale format. A few fingerprint samples from the collected dataset are illustrated in Figure 1.

The other part of the dataset consists of online signatures. To collect the online signature samples, an Android application was developed, installed and used on a Samsung Galaxy Note 3 smartphone with the S-Pen. The signatures were drawn on the phone's screen with the pen, and the application captured the data and stored them in a text file with four columns: x coordinate, y coordinate, applied pressure and time, for each sampled point of the signature. Each subject provided 5 signature samples. Figure 1 (right) presents part of an online signature template.

For training the classifier, the data were separated into three sets: a training set with 60% of the data, a validation set with 20% and a testing set with 20%. Dividing the five samples of each subject in this way gives 3, 1 and 1 samples for training, validation and testing, respectively. This small number of samples was not sufficient to train a deep learning model.
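The per-class 60/20/20 split described above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the function name `split_per_class`, the fixed random seed and the use of integer percentages (to avoid floating-point rounding) are assumptions; the paper reports only the ratios.

```python
import random


def split_per_class(samples_by_class, percents=(60, 20, 20), seed=0):
    """Split every class into train/validation/test lists of (label, sample).

    Hypothetical helper: the split is done per class so that each subject
    appears in all three sets.
    """
    if sum(percents) != 100:
        raise ValueError("percentages must sum to 100")
    rng = random.Random(seed)
    train, val, test = [], [], []
    for label, samples in samples_by_class.items():
        items = list(samples)
        rng.shuffle(items)                   # random order within the class
        n = len(items)
        n_train = n * percents[0] // 100     # 5 * 60 // 100 = 3
        n_val = n * percents[1] // 100       # 5 * 20 // 100 = 1
        train += [(label, s) for s in items[:n_train]]
        val += [(label, s) for s in items[n_train:n_train + n_val]]
        test += [(label, s) for s in items[n_train + n_val:]]  # remainder: 1
    return train, val, test
```

Applied to 280 classes of five samples each, this yields 840 training, 280 validation and 280 test samples, that is, 3, 1 and 1 per class.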

One of the problems encountered in this work was the small number of samples per class: only 5 samples were collected for each subject (class). The small number of samples was chosen deliberately to represent real-life conditions, where a person is unlikely to provide 20 to 30 fingerprints or signatures when registering with a biometric system. Therefore, data augmentation methods were applied to expand the data threefold. For fingerprint images, augmentation techniques such as rotation (by no more than 10 degrees), flipping, zooming and changes of width, height, brightness or contrast were applied to enlarge the set of training samples. Augmented online signature files were created by slightly changing the x and y coordinate values, which has the effect of rotating the signature, changing its height and width and shifting its position. The time data were also changed slightly, whereas the pressure data were left unchanged. After data augmentation, the dataset expanded to 4200 fingerprints and 4200 online signatures for 280 classes, with 15 samples of each modality per class.
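The online signature augmentation can be sketched as a small random rotation, per-axis scaling and translation of the x and y coordinates, plus a slight global stretch of the time axis, with pressure left untouched. The function names `augment_signature` and `expand_class` and all perturbation limits (±5 degrees, ±5% scale, ±5 coordinate units of shift, ±2% time stretch) are hypothetical choices for illustration; the paper does not report the exact values.

```python
import math
import random


def augment_signature(rows, rng, max_angle_deg=5.0, max_scale=0.05,
                      max_shift=5.0, max_time_scale=0.02):
    """Return a perturbed copy of a signature given as (x, y, pressure, time) rows.

    All limits are hypothetical; only x, y and time are changed.
    """
    angle = math.radians(rng.uniform(-max_angle_deg, max_angle_deg))
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    sx = 1.0 + rng.uniform(-max_scale, max_scale)            # width change
    sy = 1.0 + rng.uniform(-max_scale, max_scale)            # height change
    dx = rng.uniform(-max_shift, max_shift)                  # horizontal shift
    dy = rng.uniform(-max_shift, max_shift)                  # vertical shift
    ts = 1.0 + rng.uniform(-max_time_scale, max_time_scale)  # global time stretch
    out = []
    for x, y, pressure, t in rows:
        xr = cos_a * x - sin_a * y                           # rotate about the origin
        yr = sin_a * x + cos_a * y
        out.append((xr * sx + dx, yr * sy + dy, pressure, t * ts))  # pressure kept
    return out


def expand_class(samples, copies_per_sample=2, seed=0):
    """Keep each original sample and add augmented copies (5 -> 15 for 2 copies)."""
    rng = random.Random(seed)
    expanded = []
    for s in samples:
        expanded.append(list(s))
        expanded.extend(augment_signature(s, rng) for _ in range(copies_per_sample))
    return expanded
```

Keeping each original and adding two augmented copies turns five samples per class into fifteen, matching the threefold expansion. Scaling all timestamps by a single factor, rather than jittering each point independently, keeps the time column increasing.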

4. Proposed Multimodal Biometric System Based on CNN

In the proposed multimodal biometric system, the fingerprint images and online signature files are first given as input to the system and preprocessed for feature extraction. Fingerprint preprocessing ranges from general image preprocessing techniques, such as image enhancement, to more specialized techniques, such as binarization and thinning. First, each fingerprint image was enhanced to improve its quality so that the ridges and valleys became clearly visible. Next, because all images in the dataset were gray-scale (0-255), each image was binarized, that is, each pixel was set to either 0 or 1. Some of the fingerprints at the preprocessing stage are illustrated in Figure 2.
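The paper does not state how the binarization threshold was chosen. The sketch below substitutes Otsu's method, a standard global thresholding technique that picks the gray level maximizing the between-class variance, and assumes that ridges are the darker pixels and should map to 1. Both choices, and the function names, are assumptions.

```python
def otsu_threshold(pixels):
    """Return the gray level t (0-255) maximizing between-class variance (Otsu)."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_bg += hist[t]                 # pixels with value <= t
        if w_bg == 0:
            continue
        w_fg = total - w_bg             # pixels with value > t
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(image):
    """Map dark (ridge) pixels to 1 and light (valley) pixels to 0."""
    t = otsu_threshold([p for row in image for p in row])
    return [[1 if p <= t else 0 for p in row] for row in image]
```

For example, `binarize([[10, 20, 200], [220, 15, 210]])` separates the three dark pixels from the three light ones and returns `[[1, 1, 0], [0, 1, 0]]`.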

In the next step, each ridge was thinned (skeletonized) to exactly one pixel in width. Without prior enhancement, the ridges would be distorted during thinning and a continuous ridge could appear broken. Similarly, the online signature files were preprocessed by removing duplicate values, that is, entries whose values were identical in all four columns of the file. After the duplicates were removed, zeros were appended to the end of each file so that all files had the same number of rows.
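The online signature preprocessing can be sketched as follows. It assumes that each line of the text file holds one whitespace-separated row of x, y, pressure and time, and it interprets a duplicate as a row identical in all four columns to an earlier row, keeping the first occurrence. The names `parse_signature`, `remove_duplicate_rows` and `pad_rows` are hypothetical.

```python
def parse_signature(text):
    """Parse rows of 'x y pressure time' (assumed whitespace-separated format)."""
    return [tuple(float(v) for v in line.split())
            for line in text.strip().splitlines() if line.strip()]


def remove_duplicate_rows(rows):
    """Drop rows identical in all four columns, keeping the first occurrence."""
    seen = set()
    unique = []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique


def pad_rows(rows, target_len, n_cols=4):
    """Append all-zero rows so that every file has target_len rows."""
    if len(rows) > target_len:
        raise ValueError("signature has more rows than target_len")
    return rows + [(0.0,) * n_cols] * (target_len - len(rows))
```

A file whose first two lines are identical therefore loses one of them before zero rows are appended up to the common length.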

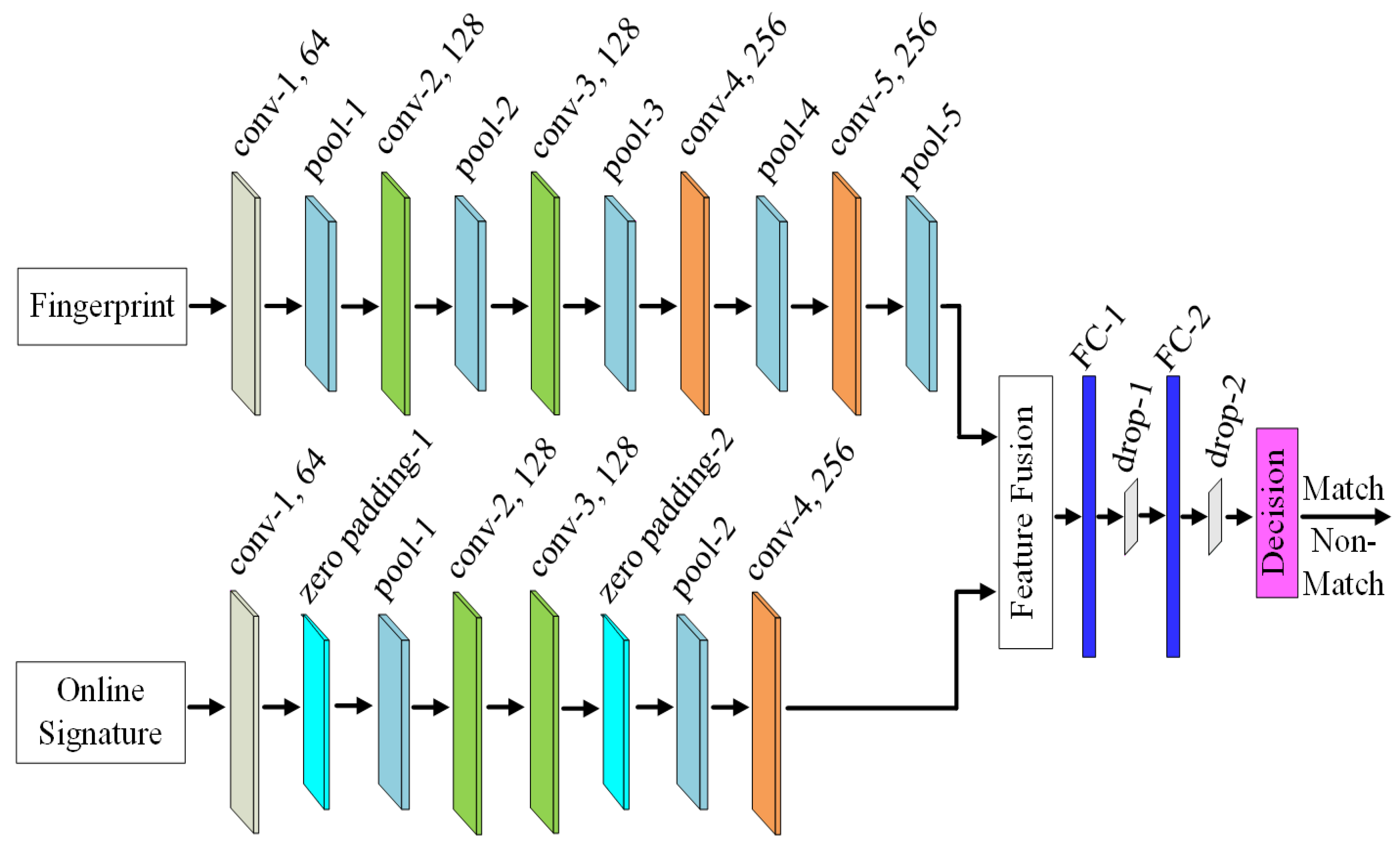

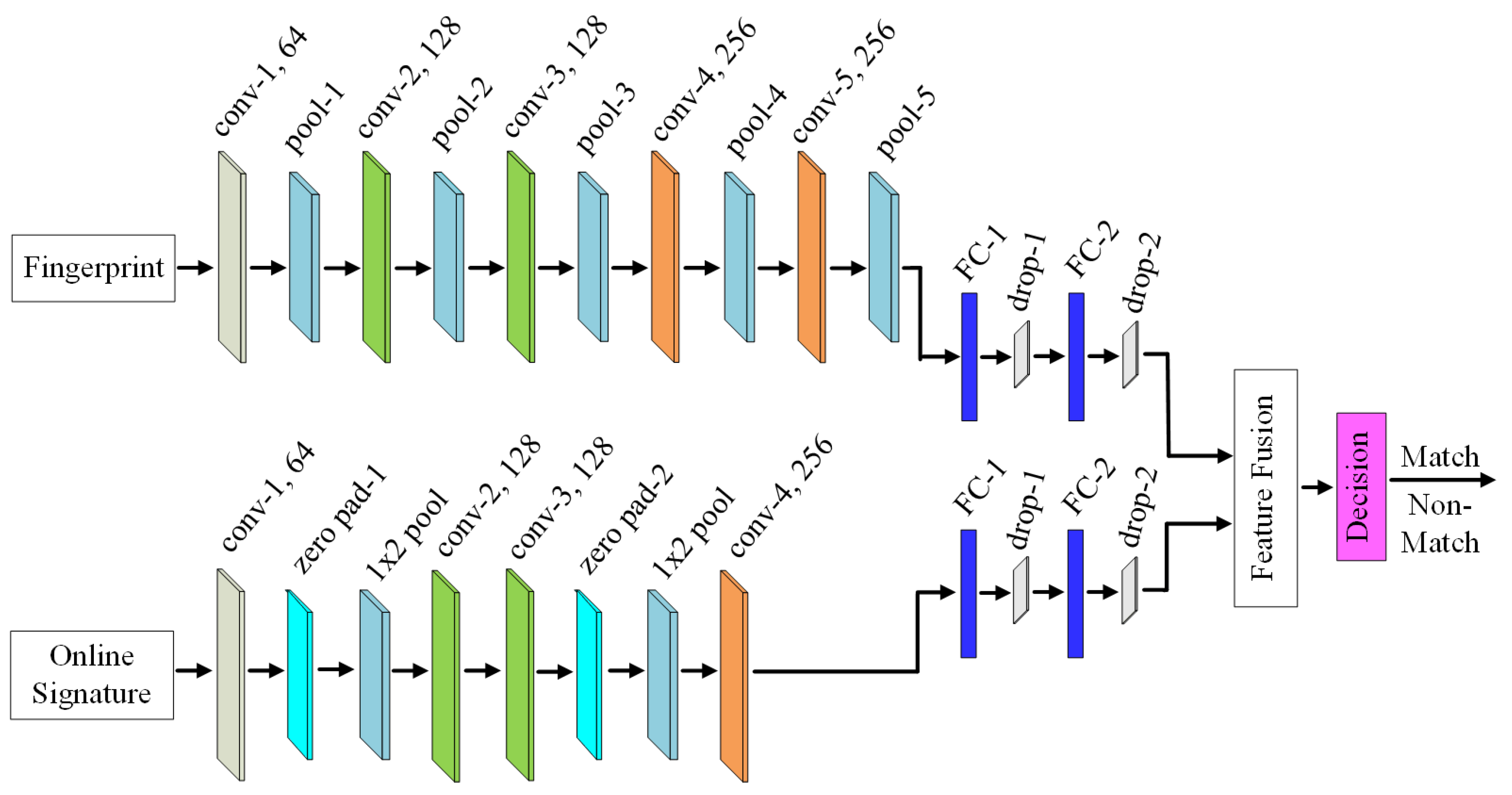

After preprocessing, the proposed CNN model performs feature extraction and classification. Once the convolutional layers have extracted features from the online signature and the fingerprint, the extracted features are fused. Two feature fusion schemes, early fusion and late fusion, are proposed and developed in this paper; the early fusion scheme achieved better accuracy than the late fusion scheme. Both schemes use the same number of convolutional and fully connected layers. However, in early fusion the features are fused before the fully connected layers, whereas in late fusion they are fused after the fully connected layers, as illustrated in Figure 3 and Figure 4. Each network is divided into two parts, a fingerprint part and an online signature part, which extract features from the fingerprint images and the online signature files, respectively. The fingerprint part consists of five convolutional layers, five max pooling layers and two fully connected layers. The convolutional layers extract features from the fingerprint images: the initial convolutional layers extract low-level features, the later ones extract mid- and high-level features, and the fully connected layers extract more specific features. The online signature part consists of four convolutional layers, of which the first and third are each followed by a zero-padding layer and a pooling layer; these pooling layers also use max pooling. In the feature fusion layer, the features obtained by the fingerprint part and the online signature part of the network are concatenated as follows:

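$$\mathit{FF} = \mathit{fp} \oplus \mathit{os} \tag{1}$$

where FF represents the feature vector containing the fused features from the fingerprint and the online signature, ⊕ denotes concatenation, and fp and os are the feature vectors of the fingerprint and the online signature, respectively. The fingerprint feature vector fp was obtained by:

$$\mathit{fp} = W^{T}K + b \tag{2}$$

In Equation (2), K represents the filter matrix of the fingerprint, b denotes the network bias and $W^{T}$ stands for the weight matrix of the fingerprint. The online signature feature vector os was obtained with the same equation.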
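Equation (1) amounts to flattening each branch's final feature maps and joining them end to end. The sketch below assumes nested-list feature maps of shape height × width × channels; the function names are hypothetical, and the 4 × 4 × 8 shapes mentioned afterwards are illustrative only.

```python
def flatten(feature_map):
    """Flatten a height x width x channels nested list into a 1-D list."""
    return [v for row in feature_map for pixel in row for v in pixel]


def fuse(fp_map, os_map):
    """Equation (1): FF is the concatenation of the flattened fp and os features."""
    return flatten(fp_map) + flatten(os_map)
```

With two hypothetical 4 × 4 × 8 maps, FF has 128 + 128 = 256 elements, with the fingerprint features first and the online signature features second. Concatenation only requires each branch to be flattened, so the order of the two branches must simply be kept fixed between training and testing.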

The dimensions of the online signature were fixed to 1 × 17, where 1 and 17 are the width and height of the signature, while the fingerprint image dimensions were fixed to 150 × 150 × 1, where 150, 150 and 1 are the width, height and depth of the image. Because the CNN takes a fixed-size three-dimensional input and the proposed techniques combine the features of online signatures and fingerprint images, the online signature was reshaped to 1 × 17 × 1 and passed to the input layer of the online signature network; the trailing 1 is the depth of the online signature. Although reshaping the online signature from 1-D to 3-D increased the number of network parameters, the two feature vectors can only be fused when they have matching dimensions, and the reshaping made it possible to fuse the fingerprint and online signature feature vectors. The max pooling layers applied to the online signature reduce the spatial dimensions of its feature maps. For the online signature, the max pooling kernel size was set to 1 × 2, which reduced only the height of the signature without changing its width. However, down-sampling the signature to 1 × 8 by max pooling reduced the accuracy of the overall system. The reason was the small size of the signature data: the convolutional layers extracted only a small number of features from the signature, and the pooling layer reduced them further. For this reason, two zero-padding layers were applied to the signature before down-sampling by the pooling layers. Each zero-padding layer added an extra row and column of zeros to the signature data. The configuration of the online signature network is shown in Table 2. After the zero-padding layers were added, the accuracy of the system improved significantly.
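The effect of zero padding on the signature's spatial size can be checked with a small pure-Python sketch. Following the text's convention, the 1 × 17 signature is treated as a grid of height 17 and width 1, so the 1 × 2 pooling kernel spans 2 rows and 1 column. Here `zero_pad` adds one ring of zeros on every side, which is an assumption about the padding amount (it corresponds to a Keras-style `ZeroPadding2D(padding=1)`); `max_pool` and `zero_pad` are hypothetical helper names.

```python
def zero_pad(grid, p=1):
    """Surround a 2-D grid with p rows/columns of zeros on every side."""
    width = len(grid[0]) + 2 * p
    top = [[0.0] * width for _ in range(p)]
    middle = [[0.0] * p + list(row) + [0.0] * p for row in grid]
    bottom = [[0.0] * width for _ in range(p)]
    return top + middle + bottom


def max_pool(grid, kh, kw):
    """'Valid' max pooling with a kh x kw kernel and stride equal to the kernel."""
    h, w = len(grid), len(grid[0])
    return [[max(grid[i + a][j + b] for a in range(kh) for b in range(kw))
             for j in range(0, w - kw + 1, kw)]
            for i in range(0, h - kh + 1, kh)]


# Signature as 17 rows (height) x 1 column (width), values 0..16.
signature = [[float(i)] for i in range(17)]
pooled = max_pool(signature, kh=2, kw=1)                 # 8 x 1
pooled_padded = max_pool(zero_pad(signature), kh=2, kw=1)  # 9 x 3
```

Without padding, pooling the 17 × 1 grid gives 8 × 1 (the "1 × 8" above) and the last sample is discarded; with one ring of zeros the same pooling gives 9 × 3 and every sample reaches the output. This only illustrates the shape arithmetic and is not a reproduction of the network in Table 2.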

4.1. Early Feature Fusion Scheme

As discussed, two feature fusion schemes are proposed in this paper. The first is the early fusion scheme, in which the features extracted by the convolutional layers are concatenated before the fully connected layers. The concatenated feature vector is passed to two fully connected layers, each followed by a ReLU layer. Because the fully connected layers contain many parameters and are prone to overfitting, a dropout layer with a rate of 0.3 was added after each of them; during training, it randomly deactivates 30% of the layer's outputs. Finally, a Softmax layer assigns the results to the classes using the probability distribution obtained for each class.
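Two pieces of the early fusion head, dropout at a rate of 0.3 and the softmax output, are sketched below in plain Python. The dropout shown is the common "inverted" formulation, in which surviving units are scaled by 1/(1 − rate) during training so that nothing changes at inference time; this is how Keras's `Dropout` layer behaves, but whether the authors' framework used it is an assumption, as are the function names.

```python
import math
import random


def relu(values):
    """Element-wise ReLU, as applied after each fully connected layer."""
    return [max(0.0, v) for v in values]


def dropout(values, rate=0.3, training=True, rng=None):
    """Inverted dropout: zero each unit with probability `rate` during training
    and scale survivors by 1 / (1 - rate); return the input unchanged otherwise."""
    if not training or rate == 0.0:
        return list(values)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


def softmax(logits):
    """Numerically stable softmax: subtract the largest logit before exp()."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

Subtracting the maximum logit does not change the softmax result but prevents `math.exp` from overflowing on large activations; for instance, `softmax([1000.0, 1000.0])` returns `[0.5, 0.5]`.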

4.2. Late Feature Fusion Scheme

The second scheme proposed for fusing the fingerprint and online signature is the late fusion scheme. In this scheme, the features extracted by the convolutional layers are further passed through two fully connected layers, each followed by a dropout layer that, as in the early fusion scheme, randomly deactivates outputs with a rate of 0.3 during training. The resulting features are then concatenated and passed to a Softmax layer, which performs classification based on the probability distribution obtained for each class.
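The only structural difference between the two schemes is where the concatenation sits relative to the fully connected layers. The toy forward passes below make that explicit with dense layers on plain Python lists. Layer sizes, the random initialization, the names `early_fusion`, `late_fusion`, `build_early` and `build_late`, and the dense layer placed before the final softmax are all hypothetical; dropout is omitted because it is inactive at inference time.

```python
import math
import random


def dense(x, weights, bias):
    """Fully connected layer: y_j = sum_i x_i * W[i][j] + b_j."""
    return [sum(xi * w_row[j] for xi, w_row in zip(x, weights)) + bias[j]
            for j in range(len(bias))]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(z):
    m = max(z)
    e = [math.exp(a - m) for a in z]
    s = sum(e)
    return [a / s for a in e]


def init_layer(n_in, n_out, rng):
    """Hypothetical small random weights and zero biases."""
    return ([[rng.uniform(-0.1, 0.1) for _ in range(n_out)] for _ in range(n_in)],
            [0.0] * n_out)


def build_early(n_fp, n_os, hidden, n_classes, seed=0):
    rng = random.Random(seed)
    return {"fc": [init_layer(n_fp + n_os, hidden, rng), init_layer(hidden, hidden, rng)],
            "out": init_layer(hidden, n_classes, rng)}


def build_late(n_fp, n_os, hidden, n_classes, seed=0):
    rng = random.Random(seed)
    return {"fc_fp": [init_layer(n_fp, hidden, rng), init_layer(hidden, hidden, rng)],
            "fc_os": [init_layer(n_os, hidden, rng), init_layer(hidden, hidden, rng)],
            "out": init_layer(2 * hidden, n_classes, rng)}


def early_fusion(fp, os_feats, layers):
    """Concatenate the conv features first, then apply the shared FC layers."""
    h = fp + os_feats
    for w, b in layers["fc"]:
        h = relu(dense(h, w, b))
    return softmax(dense(h, *layers["out"]))


def late_fusion(fp, os_feats, layers):
    """Each modality passes through its own FC layers; outputs are then concatenated."""
    h_fp, h_os = fp, os_feats
    for w, b in layers["fc_fp"]:
        h_fp = relu(dense(h_fp, w, b))
    for w, b in layers["fc_os"]:
        h_os = relu(dense(h_os, w, b))
    return softmax(dense(h_fp + h_os, *layers["out"]))
```

In the early variant, the first fully connected layer sees both modalities at once and can learn cross-modal interactions; in the late variant, each branch is summarized independently and only the final layer combines them.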

6. Conclusions and Future Work

In this paper, deep learning models based on the CNN architecture have been proposed for feature level fusion of online signatures and fingerprints. Two feature fusion techniques, early and late fusion, have been developed, in which the features extracted from both biometric modalities are fused at the convolutional and fully connected layers, respectively. The input size is fixed to 150 × 150 × 1 for the fingerprint image and 1 × 17 for the online signature file. To fuse the features of the fingerprint and online signature, the signature was reshaped to 1 × 17 × 1 before being passed to the online signature network. Different approaches were tried to fuse the features of the fingerprint image and the online signature. However, the accuracy and other evaluation metrics of the proposed system did not improve because the width of the online signature's feature vector was equal to 1. This problem was addressed, and the accuracy and other evaluation metrics increased, by adding two zero-padding layers to the signature network. With this zero-padding technique, extra zeros were added on all four sides of the feature vector (top, bottom, left and right), so that the final signature feature vector became 4 × 4, the same size as the final fingerprint feature vector. These features were fused by concatenation and passed to the fully connected layers for more abstract feature extraction and classification. The model was trained and tested on the newly collected dataset, and the overall system achieved an accuracy of 99.10% with the early fusion scheme and 98.35% with the late fusion scheme.

In future work, fine-grained level 3 features of the fingerprint, such as ridge contours and active sweat pores, may also be used in the fusion to improve accuracy and to verify the liveness of a user. In addition, state-of-the-art cryptographic techniques for biometrics may be applied to the proposed system to further secure the fused biometric template.