Simple Summary

This is the first study to use 3D Gaussian representations and a Gaussian-prompted diffusion model for accurate and interpretable multimodal medical image segmentation. Our proposed 3D Gaussian-prompted diffusion model addresses two long-standing challenges in this area: (1) limited accuracy caused by heavy information redundancy and (2) poor interpretability caused by unreliable extraction and integration of information from multimodal inputs. Extensive experiments demonstrate that the proposed method not only substantially improves multimodal segmentation of the gross tumor volume of nasopharyngeal carcinoma but also performs segmentation through an interpretable step-wise diffusion process in which the contribution of prior guidance from each imaging modality can be traced.

Abstract

Background: Gross tumor volume (GTV) segmentation of nasopharyngeal carcinoma (NPC) critically determines the precision of image-guided radiation therapy (IGRT) for NPC. Compared with other cancers, clinical delineation of NPC is especially challenging because the tumor irregularly infiltrates the adjacent soft tissues and bones, and identifying its ambiguous boundary routinely requires multimodal information from CT and MRI series. However, conventional deep learning-based multimodal segmentation methods suffer from limited prediction accuracy and frequently perform no better than, or worse than, single-modality segmentation models. This limited multimodal performance indicates defective extraction and integration of information from the input channels. This study aims to develop a 3D Gaussian-prompted Diffusion Model (3DG-PDM) for more clinically targeted information extraction and more effective multimodal information integration, thereby enabling more accurate and clinically interpretable GTV segmentation for NPC. Methods: The proposed 3DG-PDM performs NPC tumor contouring through a step-wise process guided by multimodal clinical priors. The model contains two modules: a Gaussian Initialization Module that uses a 3D Gaussian splatting technique to distill 3D Gaussian representations, based on clinical priors, from CT, MRI-t2 and MRI-t1-contrast-enhanced-fat-suppression (MRI-t1-cefs) images, respectively; and a Diffusion Segmentation Module that generates the tumor segmentation step by step from the fused 3D Gaussian prompts. We retrospectively collected data from 600 NPC patients at four hospitals, with paired CT and MRI series and clinical GTV annotations, and divided the dataset into 480 training volumes and 120 testing volumes.
Results: The proposed method achieved a mean Dice similarity coefficient (DSC) of 84.29 ± 7.33, a mean average symmetric surface distance (ASSD) of 1.31 ± 0.63 and a 95th percentile Hausdorff distance (HD95) of 4.76 ± 1.98 for primary NPC tumor (GTVp) segmentation, and a DSC of 79.25 ± 10.01, an ASSD of 1.19 ± 0.72 and an HD95 of 4.76 ± 1.71 for metastatic nodal NPC tumor (GTVnd) segmentation. Comparative experiments further show that our method substantially improves multimodal segmentation performance on NPC tumors, outperforming five other state-of-the-art methods. Visual evaluation of the segmentation prediction process and a three-step ablation study on input channels further demonstrate the interpretability of our proposed method. Conclusions: This study proposes an accurate and interpretable multimodal segmentation method for the GTV of NPC, which may contribute to more precise NPC radiotherapy.

1. Introduction

Nasopharyngeal carcinoma (NPC) is a malignant tumor arising in the roof and lateral walls of the nasopharyngeal cavity [1]. Most NPC tumors are treated with radiation therapy, and image-guided radiation therapy (IGRT) is the standard technique for NPC [2]. During IGRT treatment planning, accurate delineation of the NPC gross tumor volume (GTV) is crucial because of the proximity of the tumor to critical brain tissues. However, GTV contouring for NPC is particularly error-prone: NPC can infiltrate diverse areas of the adjacent bones and soft tissues of the head and neck region, and the extent of this involvement is often reflected only by subtle changes with varying contrast across CT, MRI-t1, MRI-t2 and MRI-t1-contrast-enhanced (MRI-t1-ce) imaging. As a result, NPC tumors are often intermixed with the surrounding anatomy, and their boundaries are more visually ambiguous and harder to recognize than those of tumors in other regions.

In clinical practice, to delineate the NPC tumor region accurately, radiologists need to register multimodal images (CT, MRI-t1, MRI-t2 and MRI-t1-ce) into a common coordinate system, identify anatomical structures by comparing different modalities and adjacent slices, and contour the tumor based on empirical evidence such as bone infiltration in MRI-t1, lymph node enlargement in MRI-t2 and meningeal thickening in MRI-t1-cefs [3]. This clinical delineation process is extremely labor-intensive and highly reliant on the radiologists' expertise.

To reduce this workload, fast and accurate deep learning-based NPC GTV segmentation methods have gained wide popularity in recent years. Conventional deep learning-based models follow an annotation-targeting paradigm, learning a deterministic mapping from the source image to the predicted segmentation mask. Segmentation models based on a single source modality, such as CT or MRI-t1-ce, have achieved moderate segmentation accuracy [4,5]; these methods improve accuracy by increasing network complexity and integrating clinical priors. However, because NPC presents differently on CT and on different MRI series, the incomplete anatomical information available from a single modality has raised serious clinical concerns and can hamper segmentation performance.

Great efforts have been devoted to developing multimodal segmentation models to further improve accuracy, such as the multi-scale sensitive U-Net [6], intuition-inspired hypergraph modeling [7], an automatic weighted dilated convolutional network [8], enhancement learning [9], mask-guided self-knowledge distillation [10], uncertainty- and relation-based semi-supervised learning [11] and uncertainty-based end-to-end learning [12]. However, these attempts have achieved limited improvement or even performance degradation. Information redundancy may be the fundamental reason: although supplementary tumor-related information is integrated from multimodal inputs, a larger proportion of irrelevant information is introduced at the same time [13]. This high proportion of irrelevant information greatly increases training difficulty [14], and the models fail to efficiently extract tumor-focused information from multimodal inputs or to integrate the pertinent information comprehensively for more accurate tumor contouring. Most importantly, because the high-dimensional convolutional feature space and the end-to-end prediction process are opaque, there remains an urgent need for methods that perform clinically oriented information extraction and distribute contributions across multimodal inputs in a clinically reasonable way.

To address these limitations, we propose a 3D Gaussian-prompted Diffusion Model (3DG-PDM) for accurate and interpretable multimodal NPC segmentation. The 3DG-PDM contains two modules: a Gaussian initialization module that uses a 3D Gaussian splatting technique to distill 3D Gaussian representations, based on clinical priors, for clinically oriented information extraction from CT, MRI-t1-contrast-enhanced (MRI-t1-ce) and MRI-t2 images, respectively; and a diffusion segmentation module that generates the tumor segmentation step by step, guided by step-wise 3D Gaussian prompts, so that the segmentation process is interpretable. Extensive experiments are conducted on 600 NPC patients from four hospitals to evaluate the segmentation performance of our model. The Dice similarity coefficient (DSC), average symmetric surface distance (ASSD) and 95th percentile Hausdorff distance (HD95) are used for quantitative comparison with other state-of-the-art multimodal segmentation models. A visual evaluation and an ablation study are also performed to demonstrate the performance and interpretability of our method.

2. Materials and Methods

2.1. Method Overview

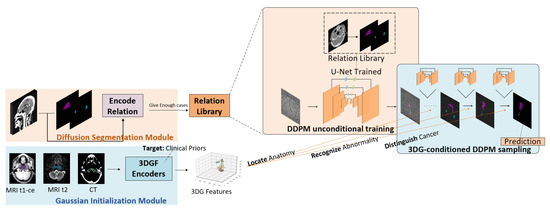

Figure 1 shows the overall workflow of the proposed 3DG-PDM model. A diffusion segmentation model is first pretrained to build a relation library that encodes the segmentation mapping between source CT images and the paired clinical GTV annotations. Then, a Gaussian initialization module is pretrained on CT, MRI-t1-ce and MRI-t2 images to extract 3D Gaussian features (3DGF) that capture the key information in each modality, according to the clinical focus for that modality. During segmentation, the multimodal images (MRI-t1-ce, MRI-t2 and CT) are fed into the 3DGF encoders to extract clinically focused Gaussian features, which are then used as prompts to guide the conditional sampling process of the diffusion segmentation module. This generates step-wise, coarse-to-fine segmentation predictions with precise anatomical localization, abnormality recognition and cancer discrimination.

Figure 1.

The overall workflow of the proposed method.

2.2. Gaussian Initialization Module

The Gaussian initialization module distills key information from multimodal imaging inputs under the guidance of clinical priors, filtering out unwanted information and enabling effective multimodal information extraction.

Inspired by the success of the 3D Gaussian splatting technique in 3D scene reconstruction [15], many studies have leveraged 3D Gaussian features for segmentation [16,17,18]. Compared with conventional high-dimensional feature spaces encoded by convolutional neural network (CNN) layers or transformer tokens, features encoded in 3D Gaussian space have unique advantages: (1) 3D Gaussian features are highly refined representations of the input image, owing to the pixel-to-Gaussian training process; (2) 3D Gaussian features can be extracted individually from each imaging modality and then integrated across modalities without information loss, through mathematical addition of 3D Gaussian distributions; and (3) 3D Gaussian features are extracted and applied in a clinically interpretable process, because the information they contain is observable in a structured, low-dimensional Gaussian space. This explicitness enables effective information extraction guided by clinical priors.
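Property (2) can be illustrated with a minimal sketch. Assuming, for simplicity, isotropic Gaussians with one scalar weight per point, and treating "addition" as concatenating the per-modality Gaussian sets, the field evaluated from the fused set equals the sum of the fields evaluated from each modality's set, so fusing loses nothing. The function names `gaussian_field` and `merge` and the random point sets are hypothetical and not taken from the authors' implementation.

```python
import numpy as np

# Hypothetical minimal representation: each modality contributes isotropic
# Gaussians given by means (N, 3), one std-dev per point (N,) and one weight per point (N,).

def gaussian_field(means, sigmas, weights, points):
    """Evaluate sum_k w_k * exp(-||p - mu_k||^2 / (2 sigma_k^2)) at each query point p."""
    diff = points[:, None, :] - means[None, :, :]            # (P, N, 3)
    sq_dist = np.sum(diff ** 2, axis=-1)                       # (P, N)
    return np.exp(-sq_dist / (2.0 * sigmas[None, :] ** 2)) @ weights  # (P,)

def merge(*gaussian_sets):
    """Fuse per-modality Gaussian sets by concatenating their parameters."""
    return tuple(np.concatenate(parts, axis=0) for parts in zip(*gaussian_sets))

rng = np.random.default_rng(0)

def random_set(n):
    return rng.uniform(0, 10, (n, 3)), rng.uniform(0.5, 2.0, n), rng.uniform(0, 1, n)

ct_set, t1ce_set, t2_set = random_set(5), random_set(7), random_set(4)
query = rng.uniform(0, 10, (20, 3))

fused = gaussian_field(*merge(ct_set, t1ce_set, t2_set), query)
separate_sum = sum(gaussian_field(*s, query) for s in (ct_set, t1ce_set, t2_set))
print(np.allclose(fused, separate_sum))  # True: the field is linear in the Gaussian set
```

The equality holds because the field is a sum over points; the actual fusion operator used in the proposed module may differ.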

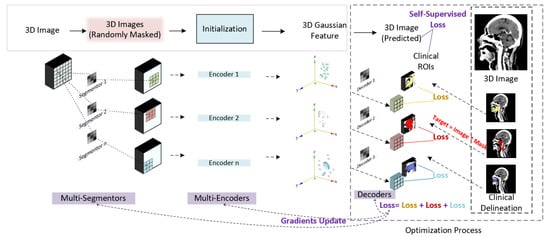

Based on these properties, we use 3D Gaussian feature extraction to refine clinically focused information from multimodal inputs. To adapt 3D Gaussian feature extraction to medical imaging, we designed a Gaussian initialization module, whose architecture is shown in Figure 2. Regional 3D points are first located in a 3D image input by multiple CNN layers, referred to as multi-segmentors. An initialization block containing multiple 3D Gaussian encoders (3DGE) then transforms the pixel points into 3D Gaussian feature points. These 3D Gaussian feature points are decoded into regional anatomy images by multiple CNN layers, referred to as decoders. Finally, a similarity loss is computed between the decoded regional anatomy images and the real regional anatomy images obtained from clinical delineations, and the network weights are updated by minimizing this loss.
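The train-by-reconstruction loop described above can be sketched in a heavily simplified form. This is a stand-in, not the authors' network: the multi-segmentors are replaced by placing one Gaussian at every delineated voxel, the 3DGE block and CNN decoders are replaced by a fixed linear splatting of isotropic Gaussians, only the per-Gaussian intensities are learned, and mean-squared error is assumed as the similarity loss, which the text does not specify. All names are hypothetical.

```python
import numpy as np

def kernel_matrix(means, shape, sigma=1.0):
    """(num_voxels, num_gaussians) matrix of isotropic Gaussian weights on the voxel grid."""
    axes = [np.arange(s, dtype=float) for s in shape]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).reshape(-1, 3)
    sq_dist = np.sum((grid[:, None, :] - means[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def fit_intensities(image, delineation, steps=200, lr=0.2):
    # "Real regional anatomy image": intensities inside the clinical delineation only.
    target = np.where(delineation, image, 0.0).ravel()
    # Stand-in for the multi-segmentors: one Gaussian per delineated voxel.
    means = np.argwhere(delineation).astype(float)
    A = kernel_matrix(means, image.shape)  # stand-in for encoder + decoder (linear splatting)
    w = np.zeros(len(means))               # learnable per-Gaussian intensities
    losses = []
    for _ in range(steps):
        residual = A @ w - target          # decoded image minus regional target
        losses.append(float(np.mean(residual ** 2)))
        w -= lr * (2.0 / len(target)) * (A.T @ residual)  # gradient step on the MSE
    return w, losses

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, (8, 8, 8))
delineation = np.zeros(image.shape, dtype=bool)
delineation[2:6, 2:6, 2:6] = True
w, losses = fit_intensities(image, delineation)
print(losses[0] > losses[-1])  # True: gradient descent reduces the similarity loss
```

Because the stand-in decoder is linear, the loss is a convex quadratic and the small learning rate guarantees a monotone decrease; the real module optimizes CNN and encoder weights, where no such guarantee holds.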

Figure 2.

Gaussian initialization module.

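Specifically, the initialization block initializes 3D Gaussian feature points from pixel points, following the definitions of the original 3D Gaussian splatting technique [15]. Each 3D Gaussian feature point can be formulated as

$$G(\mathbf{x}) = \exp\!\left(-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^{\top}\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})\right), \qquad \mathcal{G} = \{\boldsymbol{\mu}, \boldsymbol{\Sigma}, \mathbf{c}\}$$

where $\mathbf{x}$ represents a pixel point in 3D space and $\mathcal{G}$ represents a 3D Gaussian point in 3D Gaussian space. $\boldsymbol{\mu}$ represents the mean position of the Gaussian feature point in 3D Gaussian feature space, $\boldsymbol{\Sigma}$ is its 3D covariance matrix, and $\mathbf{c}$ refers to the spherical harmonic (SH) coefficients, which represent color information.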
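In the original 3D Gaussian splatting formulation [15], each point's density is $\exp(-\tfrac12(\mathbf{x}-\boldsymbol\mu)^\top\boldsymbol\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu))$, and the covariance is kept positive semi-definite during optimization by factoring it as $\boldsymbol\Sigma = \mathbf{R}\mathbf{S}\mathbf{S}^\top\mathbf{R}^\top$, with a diagonal scaling matrix $\mathbf{S}$ and a rotation $\mathbf{R}$ stored as a quaternion. The sketch below evaluates one such Gaussian. It illustrates the general technique from [15] and omits the color (SH) term; whether the present module uses exactly this parameterization is an assumption.

```python
import numpy as np

def quat_to_rotation(q):
    """Unit quaternion (w, x, y, z) -> 3x3 rotation matrix."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def covariance(scale, quat):
    """Sigma = R S S^T R^T, positive semi-definite by construction."""
    R = quat_to_rotation(quat)
    S = np.diag(np.asarray(scale, dtype=float))
    return R @ S @ S.T @ R.T

def gaussian_density(x, mu, sigma):
    """Unnormalized 3D Gaussian exp(-0.5 (x - mu)^T Sigma^{-1} (x - mu))."""
    d = np.asarray(x, dtype=float) - np.asarray(mu, dtype=float)
    return float(np.exp(-0.5 * d @ np.linalg.solve(sigma, d)))

mu = np.array([10.0, 12.0, 5.0])
sigma = covariance(scale=[2.0, 1.0, 0.5], quat=[0.9, 0.1, 0.3, 0.2])
print(gaussian_density(mu, mu, sigma))  # 1.0 at the mean
```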

The 3D Gaussian features are conventionally rendered to 2D images by a "splatting" technique, in which they are projected to 2D Gaussian features by transforming the covariance matrix $\boldsymbol{\Sigma}$ as follows [19]:

$$\boldsymbol{\Sigma}' = \mathbf{J}\mathbf{W}\boldsymbol{\Sigma}\mathbf{W}^{\top}\mathbf{J}^{\top}$$

where $\mathbf{J}$ represents the Jacobian of the affine approximation of the projective transformation, and $\mathbf{W}$ is the viewing transformation.
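The projection can be made concrete with a small NumPy sketch, assuming a pinhole camera with focal lengths `fx` and `fy`. For this camera, the Jacobian of the locally affine approximation at a camera-space mean $(t_x, t_y, t_z)$ is the 2×3 matrix below; using it yields the 2×2 image-plane covariance directly, which is equivalent to computing a 3×3 result and dropping its third row and column. The camera model and function names are assumptions for illustration, and the proposed module ultimately decodes to 3D images rather than splatting to 2D.

```python
import numpy as np

def projection_jacobian(t_cam, fx, fy):
    """Jacobian of (x, y, z) -> (fx * x / z, fy * y / z) evaluated at the camera-space mean."""
    tx, ty, tz = t_cam
    return np.array([
        [fx / tz, 0.0, -fx * tx / tz ** 2],
        [0.0, fy / tz, -fy * ty / tz ** 2],
    ])

def project_covariance(sigma3d, W, t_cam, fx, fy):
    """EWA-style projection Sigma' = J W Sigma W^T J^T, giving a 2x2 covariance."""
    J = projection_jacobian(t_cam, fx, fy)
    return J @ W @ sigma3d @ W.T @ J.T

# An isotropic Gaussian (std 2) on the optical axis, 10 units from the camera.
sigma3d = 4.0 * np.eye(3)
W = np.eye(3)  # rotation part of the viewing transformation (identity here)
cov2d = project_covariance(sigma3d, W, t_cam=(0.0, 0.0, 10.0), fx=500.0, fy=500.0)
print(cov2d)  # (500 / 10)^2 * 4 = 10000 on the diagonal, 0 elsewhere
```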

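Since medical images are defined by pixel intensity rather than RGB color, we modify the 3D Gaussian feature formulation following [20]:

$$\mathcal{G} = \{\boldsymbol{\mu}, \boldsymbol{\Sigma}, i\}$$

where $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$ are the mean and covariance matrix of the Gaussian point, and $i$ refers to the pixel intensity value, which replaces the SH color coefficients.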
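To show what an intensity-parameterized Gaussian point encodes, the sketch below voxelizes a set of such points onto a 3D grid, assuming each Gaussian kernel is simply scaled by its intensity $i$ and the contributions are summed. This intensity-weighted summation is an assumption about how [20] replaces color with intensity, and `voxelize` is a hypothetical name; in the proposed module, this decoding step is learned by CNN decoders instead.

```python
import numpy as np

def voxelize(means, covs, intensities, shape):
    """f(x) = sum_k i_k * exp(-0.5 (x - mu_k)^T Sigma_k^{-1} (x - mu_k)) on a voxel grid.

    means: (N, 3) voxel coordinates, covs: (N, 3, 3), intensities: (N,).
    """
    axes = [np.arange(n, dtype=float) for n in shape]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).reshape(-1, 3)
    inv_covs = np.linalg.inv(covs)                        # (N, 3, 3)
    d = grid[:, None, :] - means[None, :, :]              # (P, N, 3)
    mahal = np.einsum("pni,nij,pnj->pn", d, inv_covs, d)  # squared Mahalanobis distances
    return (np.exp(-0.5 * mahal) @ intensities).reshape(shape)

means = np.array([[4.0, 4.0, 4.0], [10.0, 6.0, 3.0]])
covs = np.stack([np.eye(3), np.diag([4.0, 1.0, 1.0])])
intensities = np.array([0.7, 0.3])
volume = voxelize(means, covs, intensities, shape=(16, 12, 8))
print(volume.shape)  # (16, 12, 8)
```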

Additionally, instead of rendering 3D Gaussian feature points into 2D images, we use CNN decoders to transform the 3D Gaussian feature points directly into 3D images.

2.3. Diffusion Segmentation Module

The diffusion segmentation module integrates multimodal information through a Gaussian-prompted, step-wise sampling process. Integrating Gaussian features in Gaussian space enables more effective information fusion, and combining Gaussian prompts with the diffusion sampling process makes the segmentation interpretable: the influence of each Gaussian prompt is explicitly visible as an alteration of the sampling trajectory. This alteration further indicates that 3D Gaussian features can effectively capture clinical prior information, and that prior information from different modalities can steer the segmentation process toward different targets.

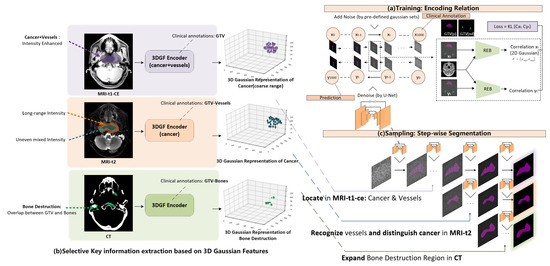

Figure 3 shows the architecture of the proposed diffusion segmentation module. Figure 3a shows the unconditional pre-training process that encodes the image-GTV relation library. A standard U-Net is used for denoising, and dedicated relation-encoding blocks (REB) generate correlations between CT images and GTV mask predictions, following conventional designs of diffusion models for segmentation [21].
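For readers less familiar with diffusion-based segmentation, the pre-training stage can be sketched with the standard DDPM forward process $q(\mathbf{x}_t\mid\mathbf{x}_0) = \mathcal{N}(\sqrt{\bar\alpha_t}\,\mathbf{x}_0, (1-\bar\alpha_t)\mathbf{I})$ applied to the GTV mask. The linear β schedule (1e-4 to 0.02 over T = 1000 steps, as in the original DDPM work), the scaling of the mask to [−1, 1], and feeding the CT volume as an extra input channel are assumptions in the style of common diffusion-segmentation designs, not details confirmed by the text. The denoising U-Net with relation-encoding blocks is omitted.

```python
import numpy as np

T = 1000                              # number of diffusion steps (assumed)
betas = np.linspace(1e-4, 0.02, T)    # linear noise schedule (assumed)
alpha_bars = np.cumprod(1.0 - betas)  # cumulative products alpha_bar_t, t = 0..T-1

def q_sample(x0, t, noise):
    """Draw x_t ~ q(x_t | x_0) using a given noise sample (t is 0-based)."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise

def make_training_example(ct, gtv_mask, rng):
    """One denoising training example: (network input, timestep, noise target)."""
    t = int(rng.integers(0, T))
    noise = rng.standard_normal(gtv_mask.shape)
    x_t = q_sample(2.0 * gtv_mask - 1.0, t, noise)  # mask mapped from {0, 1} to {-1, 1}
    net_input = np.stack([ct, x_t], axis=0)         # CT as a conditioning channel (assumed)
    return net_input, t, noise                       # a U-Net would learn to predict `noise`

rng = np.random.default_rng(0)
ct = rng.uniform(0.0, 1.0, (64, 64, 20))
gtv = (rng.uniform(size=(64, 64, 20)) > 0.9).astype(float)
net_input, t, noise = make_training_example(ct, gtv, rng)
print(net_input.shape)  # (2, 64, 64, 20)
```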

Figure 3.

Diffusion segmentation module.

Figure 3b shows the key information selection process during 3D Gaussian feature extraction. According to an influential clinical guideline for NPC delineation [22], clinicians rely on strong empirical cues from each imaging modality. In NPC patients, because of mucosal inflammation, water appears dark in MRI-t1-ce images, so the cancer region and vessels appear intensity-enhanced. A combined region containing cancer and vessels can therefore be extracted from MRI-t1-ce by thresholding, and this automatically obtained enhanced region is used to guide 3D Gaussian feature extraction from MRI-t1-ce, encoding a coarse cancer range that includes both cancer and vessels.

Within this intensity-enhanced region, vessels remain persistently hyperintense in MRI-t2 images, whereas the intensity distribution of the cancer region is uneven and mixed. This difference can be used to distinguish cancer from vessels and thus refine the coarse cancer range into a fine cancer contour. To guide the MRI-t2 3D Gaussian feature encoder to recognize vessel regions, we set its training target as the difference between the intensity-enhanced region (obtained by threshold adjustment) and the clinically annotated GTV.

Finally, because NPC involves bone destruction and CT provides high-contrast bone information, a 3D Gaussian feature encoder on CT images was developed to locate regions of bone destruction, with the overlap between the GTV annotations and bone (obtained by threshold adjustment) as its training target.
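The three training targets described above reduce to voxel-wise set operations. The sketch assumes intensity-normalized volumes already registered to CT; the threshold values are hypothetical placeholders for the "threshold adjustment" mentioned in the text, which does not specify how they were chosen, and `encoder_targets` is an illustrative name.

```python
import numpy as np

def encoder_targets(t1ce, ct, gtv, t1ce_threshold, bone_threshold):
    """Build the regional targets that guide each modality's 3D Gaussian encoder.

    All inputs are co-registered arrays of the same shape; gtv is the clinical GTV mask.
    """
    gtv = gtv.astype(bool)
    enhanced = t1ce > t1ce_threshold  # MRI-t1-ce target: coarse range (cancer + vessels)
    vessels = enhanced & ~gtv         # MRI-t2 target: enhanced region minus GTV
    bone = ct > bone_threshold        # high-density bone on CT
    bone_destruction = gtv & bone     # CT target: overlap of GTV and bone
    return {"MRI-t1-ce": enhanced, "MRI-t2": vessels, "CT": bone_destruction}

t1ce = np.array([0.9, 0.9, 0.2, 0.8, 0.1])
ct = np.array([0.1, 0.9, 0.9, 0.2, 0.9])
gtv = np.array([1, 1, 0, 0, 0])
targets = encoder_targets(t1ce, ct, gtv, t1ce_threshold=0.5, bone_threshold=0.5)
print(targets["MRI-t2"].tolist())  # [False, False, False, True, False]
```

The same function works unchanged on 3D volumes, since every operation is element-wise.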

After pre-training the diffusion segmentation module, which encodes the CT-GTV relation, and the Gaussian initialization module, which encodes multimodal clinically focused priors, Gaussian-prompted step-wise segmentation is performed during the sampling process of the diffusion model. As shown in Figure 3c, as the GTV contour is generated step by step through the denoising sampling process, 3D Gaussian features extracted from the multimodal inputs are used as prompts to perturb the step-wise segmentation. During this conditional sampling process, a coarse cancer range is first located using Gaussian features from MRI-t1-ce images, a finer cancer contour is then recognized using Gaussian features from MRI-t2 images, and finally the generated GTV contour is expanded to include bone destruction regions using Gaussian features from CT images. By combining the Gaussian initialization module and the diffusion segmentation module, the proposed 3DG-PDM performs interpretable and accurate multimodal NPC GTV segmentation in a step-wise auto-contouring process guided by clinical priors encoded as 3D Gaussian features.

Owing to its mathematical properties, a 3D Gaussian distribution can be projected along one axis into a 2D Gaussian distribution. During conditional sampling, we projected the extracted 3D Gaussian feature points along the z-axis into 2D Gaussian distributions and added them to the step-wise Gaussian noise. Compared with the conventional approach of adding the image as a condition, directly adding 2D Gaussian distributions better preserves information. To let prompts from multiple input modalities guide the sampling process, we added the conditions step-wise from the three modalities: 3D Gaussian features from MRI-t1-ce in steps 1–350, from MRI-t2 in steps 351–700 and from CT in steps 701–1000.
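A minimal sketch of this prompting step follows, under several assumptions the text does not confirm: "projecting along the z-axis" is taken to mean marginalizing each Gaussian over z (for a Gaussian, this keeps the in-plane part of the mean and the upper-left 2×2 block of the covariance, up to a normalization constant), the projected Gaussians are rendered into a 2D prompt map on the slice grid, and the prompt is added to the intermediate sample with a hypothetical weight `scale`. Step numbers follow the text's 1-based sampling order; all function names are illustrative.

```python
import numpy as np

def prompt_map(means3d, covs3d, weights, hw):
    """Marginalize 3D Gaussians over z (last coordinate) and render them on an (H, W) grid."""
    means2d = means3d[:, :2]    # keep the in-plane mean
    covs2d = covs3d[:, :2, :2]  # keep the in-plane covariance block
    rows, cols = np.meshgrid(np.arange(hw[0], dtype=float),
                             np.arange(hw[1], dtype=float), indexing="ij")
    pts = np.stack([rows, cols], axis=-1).reshape(-1, 2)
    d = pts[:, None, :] - means2d[None, :, :]
    mahal = np.einsum("pni,nij,pnj->pn", d, np.linalg.inv(covs2d), d)
    return (np.exp(-0.5 * mahal) @ weights).reshape(hw)

def prompt_source(step):
    """Modality whose prompt is active at a 1-based sampling step (1..1000)."""
    if not 1 <= step <= 1000:
        raise ValueError(f"step {step} outside 1..1000")
    if step <= 350:
        return "MRI-t1-ce"
    if step <= 700:
        return "MRI-t2"
    return "CT"

def add_prompt(x_t, prompts, step, scale=0.1):
    """Hypothetical injection: add the active modality's prompt map to the intermediate sample."""
    return x_t + scale * prompts[prompt_source(step)]

hw = (32, 32)
centers = {"MRI-t1-ce": (10, 10), "MRI-t2": (16, 16), "CT": (20, 12)}
prompts = {
    name: prompt_map(np.array([[r, c, 5.0]]), np.eye(3)[None], np.array([1.0]), hw)
    for name, (r, c) in centers.items()
}
x = add_prompt(np.zeros(hw), prompts, step=1)
print(prompt_source(1), prompt_source(400), prompt_source(900))  # MRI-t1-ce MRI-t2 CT
```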

Because the correspondences between input features and predictions are visible during generation, the contribution of each imaging modality can be observed directly, and the more refined use of information contributes to improved segmentation performance.

2.4. Dataset

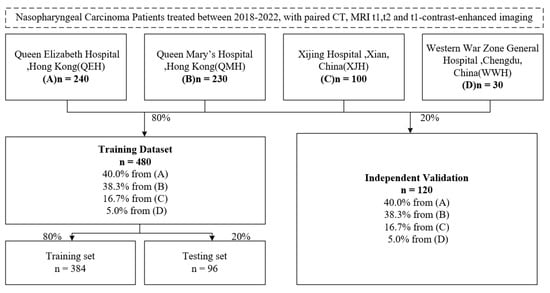

As shown in Figure 4, data from 600 NPC patients, including CT, MRI series (t1, t1-contrast-enhanced and t2) and clinical annotations, were retrospectively collected from four hospitals: Queen Mary Hospital, Hong Kong (QMH); Queen Elizabeth Hospital, Hong Kong (QEH); Xijing Hospital, Xi'an, China (XJH); and Western War Zone General Hospital, Chengdu, China (WWH). Of these, 480 cases were selected as the training set and 120 cases as the independent testing set, and the training set was further split randomly into 384 cases for training and 96 cases for validation. For each patient, to align the MRI series and CT images in the same spatial coordinate system, 3D registration was performed with the Elastix registration toolbox, using the MRI series as moving images and the CT image as the fixed image [23]. Both CT and MRI volumes were resampled to isotropic voxel spacing (1:1:1 along the x, y and z axes). Image intensities were min-max scaled to [0, 1], using the minimum and maximum intensity computed over all CT images for CT and over each MRI series for MRI. For CT images, Hounsfield Unit (HU) values below −1000 or above 1000 were clipped to −1000 and 1000, respectively.
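The intensity preprocessing and data split can be sketched as follows. Registration (Elastix) and isotropic resampling are omitted. The helper names, the fixed random seed, clipping CT before computing its min-max statistics, and pooling the MRI minimum and maximum over all volumes of the same sequence are assumptions, since the text does not state the order of operations or whether MRI statistics are computed per sequence type or per individual series. The train/test selection is also shown as random for illustration, although the text states only that the train/validation split was random.

```python
import numpy as np

def clip_ct(ct_hu, lo=-1000.0, hi=1000.0):
    """Clip CT Hounsfield Units to [lo, hi]."""
    return np.clip(ct_hu, lo, hi)

def minmax_scale(volume, vmin, vmax):
    """Linearly map [vmin, vmax] to [0, 1]."""
    return (volume - vmin) / (vmax - vmin)

def normalize_dataset(ct_volumes, mri_by_sequence):
    """CT: clip, then scale with min/max over all CT volumes.
    MRI: scale with min/max pooled over all volumes of each sequence (assumed)."""
    cts = [clip_ct(v) for v in ct_volumes]
    lo, hi = min(v.min() for v in cts), max(v.max() for v in cts)
    cts = [minmax_scale(v, lo, hi) for v in cts]
    mris = {}
    for seq, vols in mri_by_sequence.items():
        lo_s, hi_s = min(v.min() for v in vols), max(v.max() for v in vols)
        mris[seq] = [minmax_scale(v, lo_s, hi_s) for v in vols]
    return cts, mris

def split_cases(case_ids, seed=0):
    """600 cases -> 384 train / 96 validation / 120 independent test."""
    ids = np.random.default_rng(seed).permutation(case_ids)
    return ids[:384], ids[384:480], ids[480:]

rng = np.random.default_rng(1)
cts = [rng.uniform(-1500, 2000, (8, 8, 4)) for _ in range(3)]
mri = {"t2": [rng.uniform(0, 900, (8, 8, 4)) for _ in range(3)]}
cts_n, mri_n = normalize_dataset(cts, mri)
train, val, test = split_cases(np.arange(600))
print(len(train), len(val), len(test))  # 384 96 120
```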

Figure 4.

Dataset description flow.

2.5. Implementation Details

All experiments were conducted on a 48 GB A6000 GPU. The number of 3D Gaussian feature points was initialized to 50,000 for each input imaging modality, similar to [20]; Gaussian feature initialization occupied around 20 GB of GPU memory during training and 1 GB during inference. The diffusion segmentation model was trained unconditionally on 3D volumes of size (256, 256, 20) along the x, y and z axes, occupying 16 GB of GPU memory per batch.
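The implementation details can be collected into a single configuration object, sketched below with the values stated in the text. The field names are hypothetical, and the per-point parameter count in the rough storage estimate (3 for the mean, 3 for the scale, 4 for the rotation quaternion and 1 for the intensity, in 32-bit floats) is an assumption. Under that assumption, the raw Gaussian parameters take only a few megabytes, which suggests that most of the reported ~20 GB training footprint is used by the encoders, decoders and their activations rather than by the Gaussian points themselves.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ExperimentConfig:
    gaussians_per_modality: int = 50_000
    modalities: tuple = ("MRI-t1-ce", "MRI-t2", "CT")
    diffusion_volume_shape: tuple = (256, 256, 20)  # (x, y, z)
    sampling_steps: int = 1000
    # (first step, last step, prompting modality), 1-based and inclusive
    prompt_schedule: tuple = ((1, 350, "MRI-t1-ce"), (351, 700, "MRI-t2"), (701, 1000, "CT"))
    gpu_memory_gb: int = 48
    floats_per_gaussian: int = 11  # assumed: 3 mean + 3 scale + 4 rotation + 1 intensity

    def __post_init__(self):
        # The prompt schedule must cover steps 1..sampling_steps with no gaps or overlaps.
        expected_start = 1
        for start, end, _ in self.prompt_schedule:
            if start != expected_start or end < start:
                raise ValueError("prompt schedule has a gap or overlap")
            expected_start = end + 1
        if expected_start != self.sampling_steps + 1:
            raise ValueError("prompt schedule does not end at the last sampling step")

    def gaussian_parameter_bytes(self, bytes_per_float=4):
        """Raw storage for all Gaussian parameters across modalities."""
        return (self.gaussians_per_modality * len(self.modalities)
                * self.floats_per_gaussian * bytes_per_float)

cfg = ExperimentConfig()
print(cfg.gaussian_parameter_bytes() / 1e6, "MB")  # 6.6 MB for 3 x 50,000 points
```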

3. Results

3.1. Quantitative Evaluation

To demonstrate the superiority of our method, we compared it with five state-of-the-art multimodal segmentation methods: MsU-Net [6], AD-Net [8], Multi-resU-Net [24], nnformer [9] and nnU-Net [25]. The Dice similarity coefficient (DSC) [26], average symmetric surface distance (ASSD) and 95th percentile Hausdorff distance (HD95) were calculated between the predicted GTV segmentations and the clinical annotations. The quantitative comparison results are listed in Table 1.
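For reference, the three metrics can be computed from binary masks as sketched below. Surface voxels are taken as mask voxels with at least one 6-connected background neighbor, ASSD is the mean of all nearest surface-to-surface distances in both directions, and HD95 is taken as the 95th percentile of the pooled bidirectional distances. Other HD95 conventions exist (for example, the maximum of the two directed 95th percentiles), and the text does not state which one was used. The brute-force pairwise distance suits only small, non-empty masks; the `spacing` argument (voxel size, for example in mm) and the function names are illustrative.

```python
import numpy as np

def dice(a, b):
    a, b = a.astype(bool), b.astype(bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(a, b).sum() / denom

def surface_voxels(mask):
    """Mask voxels with at least one 6-connected neighbour outside the mask."""
    m = mask.astype(bool)
    padded = np.pad(m, 1, constant_values=False)
    interior = m.copy()
    for axis in range(3):
        for shift in (-1, 1):
            interior &= np.roll(padded, shift, axis=axis)[1:-1, 1:-1, 1:-1]
    return m & ~interior

def surface_distances(a, b, spacing=(1.0, 1.0, 1.0)):
    """Nearest-surface distances from a's surface to b's, and from b's to a's."""
    pa = np.argwhere(surface_voxels(a)) * np.asarray(spacing)
    pb = np.argwhere(surface_voxels(b)) * np.asarray(spacing)
    d = np.sqrt(((pa[:, None, :] - pb[None, :, :]) ** 2).sum(axis=-1))
    return d.min(axis=1), d.min(axis=0)

def assd(a, b, spacing=(1.0, 1.0, 1.0)):
    d_ab, d_ba = surface_distances(a, b, spacing)
    return float(np.concatenate([d_ab, d_ba]).mean())

def hd95(a, b, spacing=(1.0, 1.0, 1.0)):
    d_ab, d_ba = surface_distances(a, b, spacing)
    return float(np.percentile(np.concatenate([d_ab, d_ba]), 95))

pred = np.zeros((10, 10, 10), dtype=bool)
gt = np.zeros_like(pred)
pred[2:6, 2:6, 2:6] = True
gt[3:7, 2:6, 2:6] = True  # ground truth shifted by one voxel along the first axis
print(dice(pred, gt))  # 0.75
```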

Table 1.

Comparison between our method and other multimodal segmentation methods.

As Table 1 shows, our method achieved the highest segmentation accuracy among all compared multimodal segmentation methods, with a mean DSC of 84.29 ± 7.33 for GTVp segmentation, a mean DSC of 79.25 ± 10.01 for GTVnd segmentation and an average DSC of 81.77 ± 8.67. For GTVp segmentation, the mean DSC of comparative methods such as AD-Net, Multi-resU-Net, nnformer and nnU-Net plateaus at around 80. Our method raises it to about 84, suggesting that it overcomes this performance bottleneck through more efficient multimodal information extraction and integration. For GTVnd segmentation, our method also achieved a clear accuracy improvement. ASSD and HD95 follow the same trend as DSC, further supporting the superiority of our method.

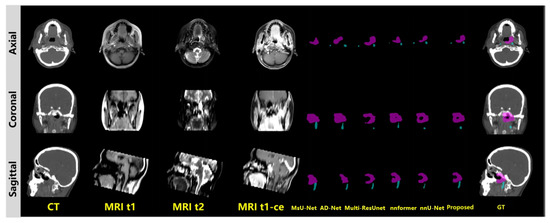

3.2. Qualitative Evaluation

For the qualitative evaluation, a representative case was selected to assess the visual performance of our model. Figure 5 compares the GTVp and GTVnd segmentation results of all methods, with GTVp marked in pink and GTVnd in blue. The GTVp and GTVnd contours generated by our method are the most similar to the ground-truth clinical annotations. Notably, our method recognizes subtle details within the cancer region and maintains stable accuracy on both GTVp and GTVnd. By contrast, the comparative methods have great difficulty distinguishing subtle healthy regions from the cancer range, and most of them fail to perform consistently on both GTVp and GTVnd.

Figure 5.

Visual evaluation. GTVp is marked in pink and GTVnd is marked in blue.

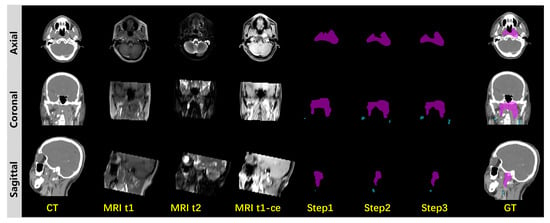

3.3. Ablation Study

To further demonstrate the effectiveness of multimodal information extraction and integration, we conducted an ablation study. As shown in Figure 6, the multimodal input channels are fed to the diffusion model progressively in three steps. Step 1 uses only Gaussian feature prompts from MRI-t1-ce images during the diffusion segmentation sampling process. Step 2 uses Gaussian features from both MRI-t1-ce and MRI-t2 images to prompt the sampling process. Step 3 adds Gaussian features from CT, giving a complete three-step prompt from MRI-t1-ce, MRI-t2 and CT. Across the three steps, the segmentation is progressively refined from a coarse cancer range to a fine and then finer cancer contour. The quantitative results of the ablation study, together with a comparison between our method and other multimodal segmentation methods by DSC, ASSD and HD95, are summarized in Table 2; they demonstrate the effectiveness of information extraction and integration from multimodal imaging inputs.

Figure 6.

Ablation study. GTVp is marked in pink and GTVnd is marked in blue.

Table 2.

Quantitative ablation study with comparison between the proposed method and other multimodal segmentation methods.

4. Discussion

In this study, we proposed a 3D Gaussian-prompted Diffusion Model for accurate and interpretable multimodal NPC segmentation. We designed a 3D Gaussian feature extraction strategy, namely the Gaussian initialization module, to selectively extract clinically focused information from multimodal images. Additionally, we designed a Gaussian-prompted, segmentation-oriented denoising diffusion probabilistic model, namely the diffusion segmentation module, to efficiently integrate multimodal information and perform segmentation through an interpretable step-wise generation process.

Extensive experiments on a multi-institutional testing dataset from four hospitals showed that, compared with other methods, our method improved DSC on both GTVp and GTVnd segmentation. While the other methods plateaued at an average DSC of about 77, our method raised the average DSC to 81.77. ASSD and HD95 follow the same trend as DSC. As noted in many works on multimodal generation [13,27,28], information redundancy may be the main factor limiting model performance. We addressed this limitation by proposing a Gaussian initialization module for more refined, clinically biased information extraction from the multimodal inputs. The proposed module fully leverages the unique advantages of 3D Gaussian representations: refinement of key clinically focused information owing to the highly refined nature of 3D Gaussian feature points, efficient multimodal information integration through flexible mathematical addition in Gaussian space, and an interpretable coarse-to-fine segmentation process owing to the explicitness of low-dimensional Gaussian features and the compatibility between Gaussian prompts and the conditional diffusion model. Additionally, the quantitative analysis summarized in Table 1 showed that, compared with other methods, our method achieved consistent performance across the four hospitals with the smallest center bias. This multi-institutional consistency further indicates the generalization ability and potential clinical utility of the proposed method. Another advantage of the proposed method is that information is extracted separately from each input modality, which may reduce the impact of registration errors and improve generalization across centers.

Beyond more refined and clinically reliable information extraction, our Gaussian-prompted diffusion segmentation module also makes the whole multimodal segmentation process interpretable. Related works commonly point out that the contributions of multimodal input channels tend to become uneven during training [29], so current deep learning-based methods may fail to integrate multimodal information according to real clinical focus. This ineffective integration can greatly limit model performance, and adjusting the contribution of each modality is difficult under the implicit end-to-end training paradigm. Adding multichannel weighting factors [30] or designing channel-specific loss functions [31] are common ways to adjust per-channel contributions, yet these methods have limited effectiveness and do not use clinical priors to adjust the contributions of multimodal inputs. Combining Gaussian prompts with diffusion models enables interpretable and effective multimodal information integration. On the one hand, clinical bias can be applied during Gaussian feature extraction, for example, by guiding the Gaussian encoder to extract bone-destruction-related information from CT images. This clinically biased information can then be conveyed to the segmentation process by adding the Gaussian features to the intermediate images during diffusion sampling, without information loss, owing to the flexible mathematical operations that Gaussian distributions support. On the other hand, clinically biased information from different imaging modalities can be used as separate prompts during sampling, and the effect of each prompt on the segmentation trajectory can be observed.
Compared with a conventional end-to-end segmentation model, diffusion model-based segmentation proceeds along an observable step-wise denoising trajectory [32], and the alteration of this trajectory by Gaussian features from multimodal inputs illustrates both the effectiveness of multimodal information integration and the interpretability of the proposed method. The ablation study reveals this effectiveness and interpretability.

Although our method has achieved considerable accuracy improvement and demonstrated interpretability for multimodal segmentation, it still suffers from slow generation speed because of its diffusion-based design. Additionally, there is a wealth of professional knowledge about the clinical NPC GTV delineation process that could be leveraged in the Gaussian feature extraction stage for a more clinically focused design. The multi-institutional robustness of the proposed method also requires further inter-observer assessment. In the future, we plan to adopt more advanced diffusion sampling approaches, such as denoising diffusion implicit models (DDIM), to accelerate segmentation generation. We also plan to further explore clinical knowledge for recognizing NPC GTV in different imaging modalities to design a more comprehensive Gaussian initialization module, thereby further improving model performance.

5. Conclusions

This study introduces a 3D Gaussian-prompted diffusion model for multimodal gross tumor volume (GTV) segmentation of nasopharyngeal carcinoma (NPC). The model is designed to enhance the clinical relevance of feature extraction and improve the efficiency of multimodal information fusion. The proposed method addresses two long-standing challenges in multimodal segmentation: limited accuracy due to information redundancy, and poor interpretability caused by unreliable data fusion. Extensive experiments demonstrate that the proposed model achieves substantial improvements in both accuracy and interpretability over state-of-the-art methods. These gains are attributed to the use of 3D Gaussian representations for more clinically targeted feature extraction and a Gaussian-prompted diffusion mechanism for more effective multimodal integration. Overall, this work offers a robust and interpretable tool for multimodal NPC GTV segmentation, which is critical to the precision of NPC radiation therapy. The proposed interpretable and robust multimodal segmentation paradigm holds potential clinical utility and may facilitate a more effective image-guided radiation therapy process.

Author Contributions

Conceptualization, methodology, writing—original draft preparation, investigation: J.Z.; validation, resources, Z.M.; writing—review and editing, supervision: G.R. and J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly supported by the Health and Medical Research Fund (11222456) of the Health Bureau, the Pneumoconiosis Compensation Fund Board in HKSAR, and Shenzhen Science and Technology Program (JCYJ20230807140403007), Guangdong Basic and Applied Basic Research Foundation (2025A1515012926).

Institutional Review Board Statement

This study complied with the Declaration of Helsinki and was approved by the Research Ethics Committee (Kowloon Central/Kowloon East), reference number KC/KE-18-0085/ER-1, 14 September 2018.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are confidential.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wong, K.C.; Hui, E.P.; Lo, K.W.; Lam, W.K.J.; Johnson, D.; Li, L.; Tao, Q.; Chan, K.C.A.; To, K.F.; King, A.D.; et al. Nasopharyngeal carcinoma: An evolving paradigm. Nat. Rev. Clin. Oncol. 2021, 18, 679–695.

- Lin, L.; Dou, Q.; Jin, Y.M.; Zhou, G.Q.; Tang, Y.Q.; Chen, W.L.; Su, B.A.; Liu, F.; Tao, C.J.; Jiang, N.; et al. Deep learning for automated contouring of primary tumor volumes by MRI for nasopharyngeal carcinoma. Radiology 2019, 291, 677–686.

- Bossi, P.; Chan, A.T.; Licitra, L.; Trama, A.; Orlandi, E.; Hui, E.P.; Halámková, J.; Mattheis, S.; Baujat, B.; Hardillo, J.; et al. Nasopharyngeal carcinoma: ESMO-EURACAN Clinical Practice Guidelines for diagnosis, treatment and follow-up. Ann. Oncol. 2021, 32, 452–465.

- Tang, P.; Zu, C.; Hong, M.; Yan, R.; Peng, X.; Xiao, J.; Wu, X.; Zhou, J.; Zhou, L.; Wang, Y. DA-DSUnet: Dual attention-based dense SU-net for automatic head-and-neck tumor segmentation in MRI images. Neurocomputing 2021, 435, 103–113.

- Guo, Z.; Li, X.; Huang, H.; Guo, N.; Li, Q. Deep learning-based image segmentation on multimodal medical imaging. IEEE Trans. Radiat. Plasma Med. Sci. 2019, 3, 162–169.

- Hao, Y.; Jiang, H.; Diao, Z.; Shi, T.; Liu, L.; Li, H.; Zhang, W. MSU-Net: Multi-scale Sensitive U-Net based on pixel-edge-region level collaborative loss for nasopharyngeal MRI segmentation. Comput. Biol. Med. 2023, 159, 106956.

- He, Q.; Sun, X.; Diao, W.; Yan, Z.; Yao, F.; Fu, K. Multimodal remote sensing image segmentation with intuition-inspired hypergraph modeling. IEEE Trans. Image Process. 2023, 32, 1474–1487.

- Peng, Y.; Sun, J. The multimodal MRI brain tumor segmentation based on AD-Net. Biomed. Signal Process. Control 2023, 80, 104336.

- Tao, G.; Li, H.; Huang, J.; Han, C.; Chen, J.; Ruan, G.; Huang, W.; Hu, Y.; Dan, T.; Zhang, B.; et al. SeqSeg: A sequential method to achieve nasopharyngeal carcinoma segmentation free from background dominance. Med. Image Anal. 2022, 78, 102381.

- Zhang, J.; Li, B.; Qiu, Q.; Mo, H.; Tian, L. SICNet: Learning selective inter-slice context via Mask-Guided Self-knowledge distillation for NPC segmentation. J. Vis. Commun. Image Represent. 2024, 98, 104053.

- Shi, Y.; Zu, C.; Yang, P.; Tan, S.; Ren, H.; Wu, X.; Zhou, J.; Wang, Y. Uncertainty-weighted and relation-driven consistency training for semi-supervised head-and-neck tumor segmentation. Knowl.-Based Syst. 2023, 272, 110598.

- Tang, P.; Yang, P.; Nie, D.; Wu, X.; Zhou, J.; Wang, Y. Unified medical image segmentation by learning from uncertainty in an end-to-end manner. Knowl.-Based Syst. 2022, 241, 108215.

- Zhang, Y.; Sidibé, D.; Morel, O.; Mériaudeau, F. Deep multimodal fusion for semantic image segmentation: A survey. Image Vis. Comput. 2021, 105, 104042.

- Pandey, S.; Chen, K.F.; Dam, E.B. Comprehensive multimodal segmentation in medical imaging: Combining YOLOv8 with SAM and HQ-SAM models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 2592–2598.

- Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 2023, 42, 1–14.

- Jain, U.; Mirzaei, A.; Gilitschenski, I. GaussianCut: Interactive segmentation via graph cut for 3D Gaussian splatting. Adv. Neural Inf. Process. Syst. 2024, 37, 89184–89212.

- Cen, J.; Fang, J.; Yang, C.; Xie, L.; Zhang, X.; Shen, W.; Tian, Q. Segment any 3D Gaussians. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 1971–1979.

- Kim, C.M.; Wu, M.; Kerr, J.; Goldberg, K.; Tancik, M.; Kanazawa, A. GARField: Group anything with radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 21530–21539.

- Zwicker, M.; Pfister, H.; Van Baar, J.; Gross, M. EWA volume splatting. In Proceedings of IEEE Visualization (VIS’01), San Diego, CA, USA, 21–26 October 2001; IEEE: New York, NY, USA, 2001; pp. 29–538.

- Li, Y.; Fu, X.; Li, H.; Zhao, S.; Jin, R.; Zhou, S.K. 3DGR-CT: Sparse-view CT reconstruction with a 3D Gaussian representation. Med. Image Anal. 2025, 103, 103585.

- Rahman, A.; Valanarasu, J.M.J.; Hacihaliloglu, I.; Patel, V.M. Ambiguous medical image segmentation using diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 11536–11546.

- Lee, A.W.; Ng, W.T.; Pan, J.J.; Poh, S.S.; Ahn, Y.C.; AlHussain, H.; Corry, J.; Grau, C.; Grégoire, V.; Harrington, K.J.; et al. International guideline for the delineation of the clinical target volumes (CTV) for nasopharyngeal carcinoma. Radiother. Oncol. 2018, 126, 25–36.

- Klein, S.; Staring, M.; Murphy, K.; Viergever, M.A.; Pluim, J.P. Elastix: A toolbox for intensity-based medical image registration. IEEE Trans. Med. Imaging 2009, 29, 196–205.

- Ibtehaz, N.; Rahman, M.S. Rethinking the U-Net architecture for multimodal biomedical image segmentation. arXiv 2019, arXiv:1902.04049.

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

- Thada, V.; Jaglan, V. Comparison of Jaccard, Dice, cosine similarity coefficient to find best fitness value for web retrieved documents using genetic algorithm. Int. J. Innov. Eng. Technol. 2013, 2, 202–205.

- Zhan, F.; Yu, Y.; Wu, R.; Zhang, J.; Lu, S.; Liu, L.; Kortylewski, A.; Theobalt, C.; Xing, E. Multimodal image synthesis and editing: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 15098–15119.

- Zhou, T.; Fu, H.; Chen, G.; Shen, J.; Shao, L. Hi-Net: Hybrid-fusion network for multi-modal MR image synthesis. IEEE Trans. Med. Imaging 2020, 39, 2772–2781.

- Yuan, X.; Lin, Z.; Kuen, J.; Zhang, J.; Wang, Y.; Maire, M.; Kale, A.; Faieta, B. Multimodal contrastive training for visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6995–7004.

- Vora, A.; Paunwala, C.N.; Paunwala, M. Improved weight assignment approach for multimodal fusion. In Proceedings of the 2014 International Conference on Circuits, Systems, Communication and Information Technology Applications (CSCITA), Mumbai, India, 4–5 April 2014; IEEE: New York, NY, USA, 2014; pp. 70–74.

- He, B.; Wang, J.; Qiu, J.; Bui, T.; Shrivastava, A.; Wang, Z. Align and attend: Multimodal summarization with dual contrastive losses. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14867–14878.

- Wu, J.; Fu, R.; Fang, H.; Zhang, Y.; Yang, Y.; Xiong, H.; Liu, H.; Xu, Y. MedSegDiff: Medical image segmentation with diffusion probabilistic model. In Proceedings of the Medical Imaging with Deep Learning, PMLR, Paris, France, 3–5 July 2024; pp. 1623–1639.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).