Tumor Response Evaluation Using iRECIST: Feasibility and Reliability of Manual Versus Software-Assisted Assessments

Abstract

Simple Summary


1. Introduction

2. Material and Methods

2.1. Study Population

2.2. Imaging

2.3. Data Analysis

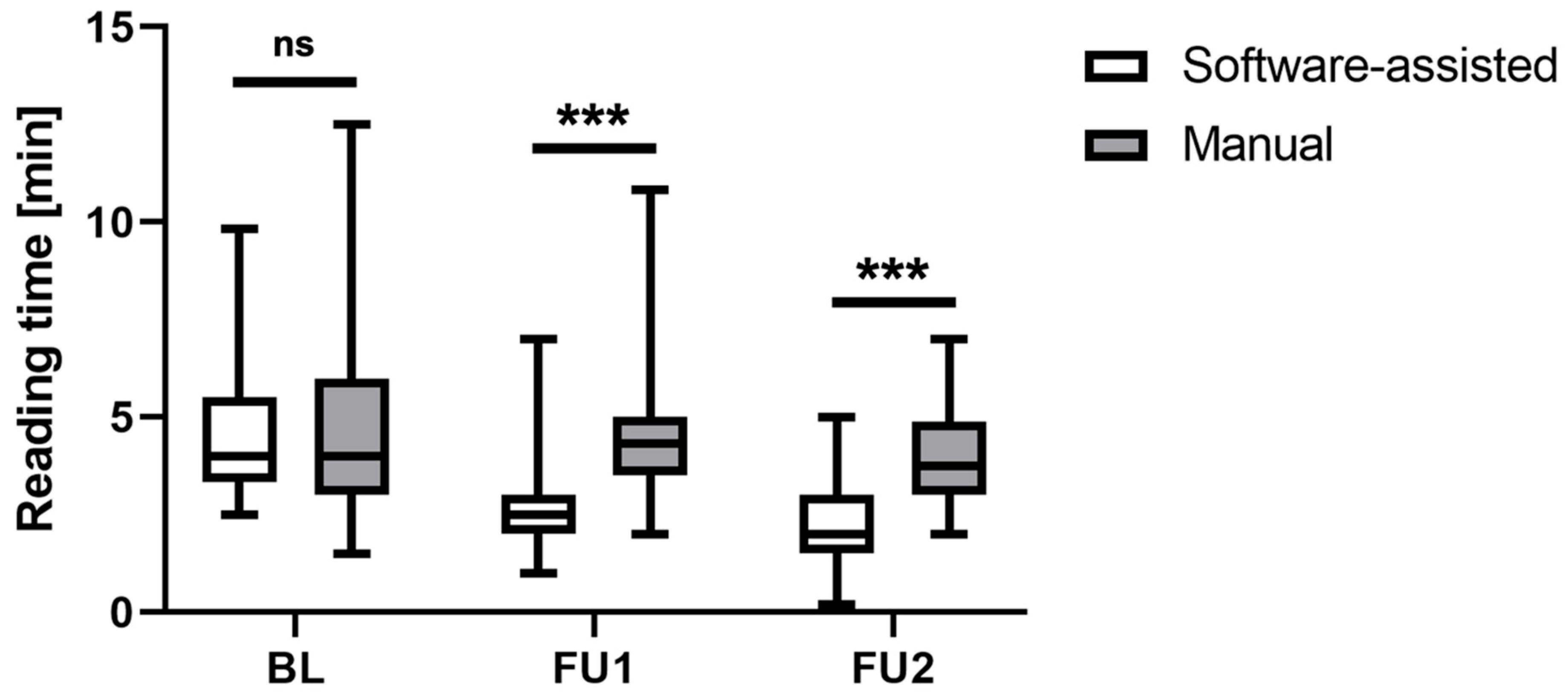

2.4. Reading Time Assessment

2.4.1. Manual iRECIST Assessment

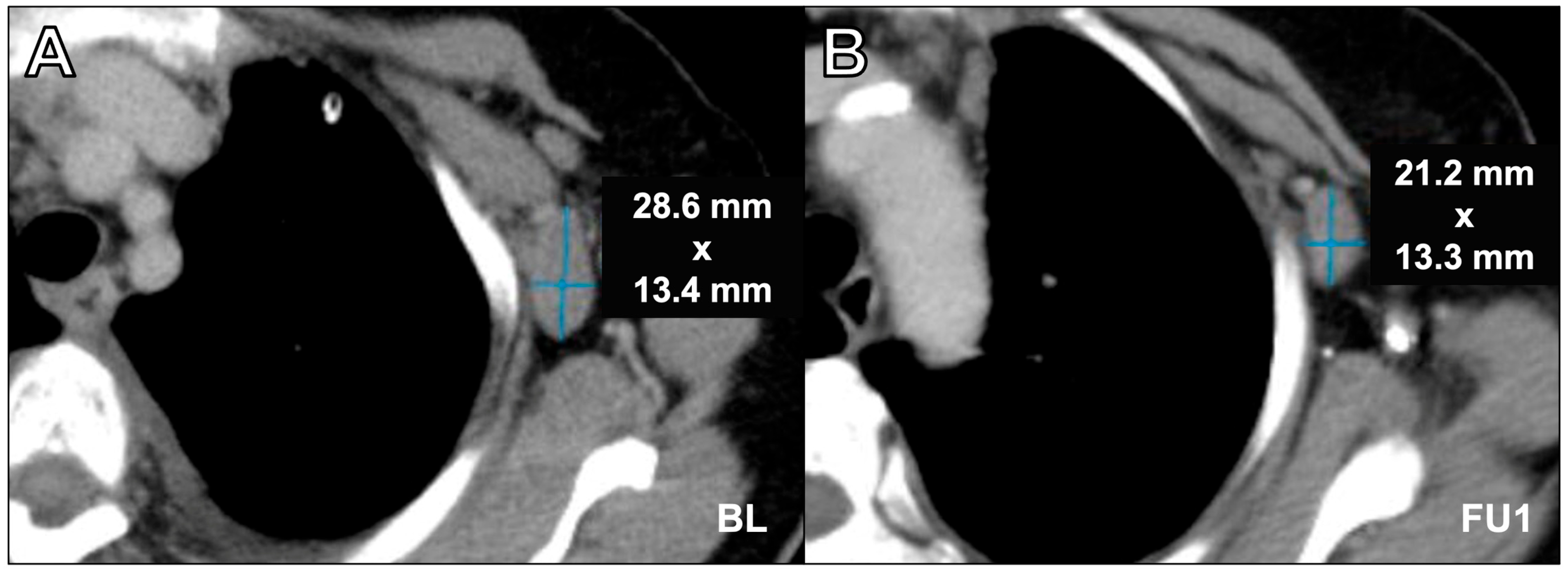

2.4.2. Software-Assisted iRECIST Assessment

2.5. Analysis of Response Assessments

2.6. Quantitative Inter-Reader Agreement

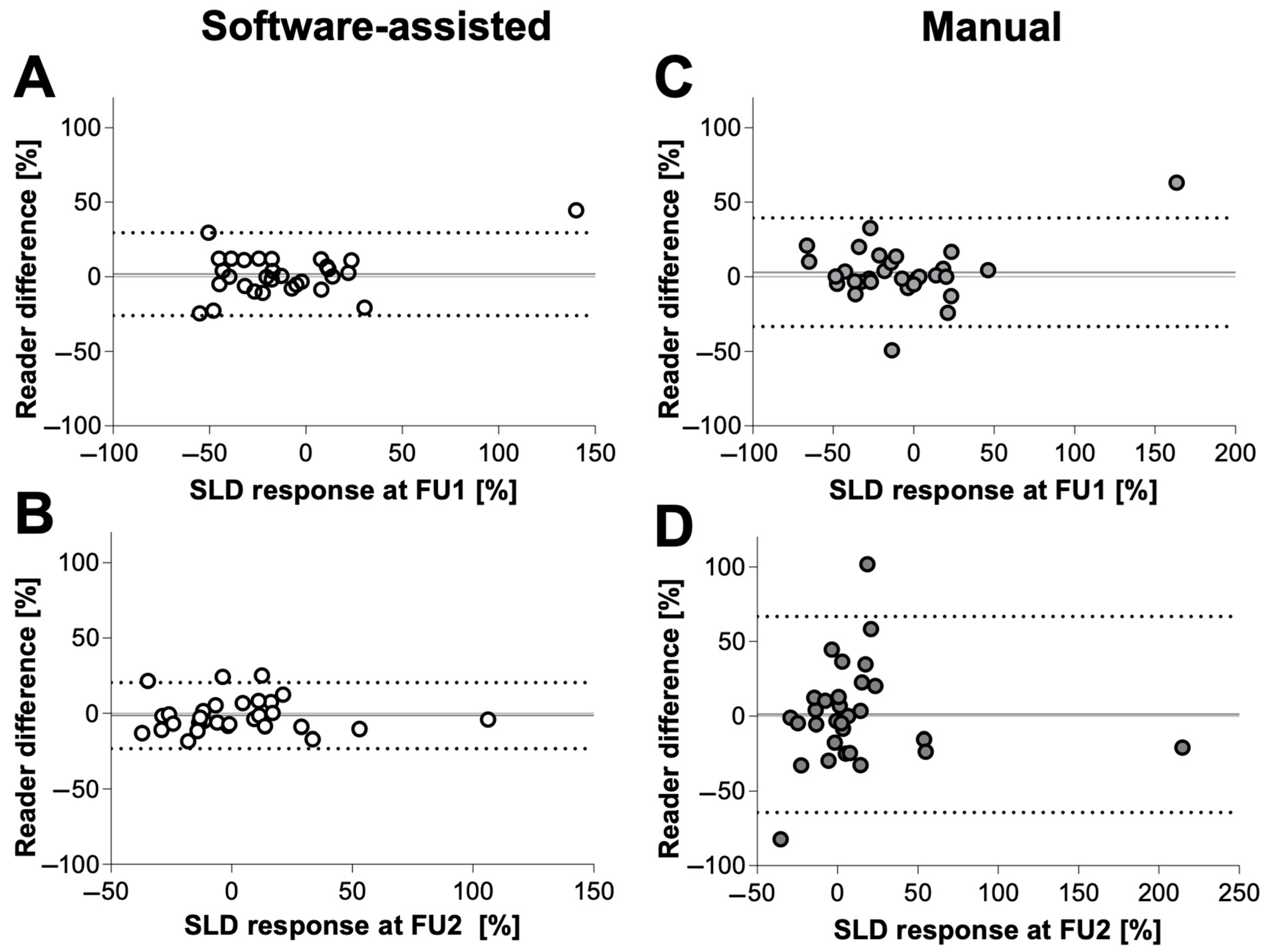

2.7. Statistical Analysis

3. Results

3.1. Reading Time Assessment

3.2. Analysis of Response Assessments

3.3. Interobserver Correlation

3.4. Quantitative Inter-Reader Agreement

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

References


| Examination Timepoint | Software-Assisted | Manual |
|---|---|---|
| FU1 | 0/60 (0%) | 2/60 (3.3%) |
| FU2 | 1/60 (1.7%) | 6/60 (10%) |

| Metric | Timepoint | Software-Assisted | Manual | p-Value |
|---|---|---|---|---|
| Mean difference (%) ± SD | FU1 | 10.2 ± 0.9 | 11.6 ± 2.1 | 0.56 |
| | FU2 | 8.9 ± 6.5 | 23.4 ± 23.2 | 0.001 |
| Bias ± SD | FU1 | 1.8 ± 14.2 | 3.0 ± 18.6 | |
| | FU2 | −1.4 ± 11.1 | 1.20 ± 33.4 | |
| 95% limits of agreement | FU1 | −26.1 to 29.6 | −33.41 to 39.4 | |
| | FU2 | −23.2 to 20.4 | −64.3 to 66.7 | |
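The 95% limits of agreement in the table are consistent with the conventional Bland-Altman definition, bias ± 1.96 × SD of the paired inter-reader differences. The sketch below assumes that definition (the table itself does not state it) and recomputes the limits from the reported bias and SD. The helpers `limits_of_agreement` and `bland_altman` are hypothetical illustrations, not the authors' analysis code; `bland_altman` shows how bias and SD would be derived from raw paired measurements, assuming differences are taken as reader A minus reader B and the sample SD (n − 1) is used.

```python
import statistics
from typing import Sequence, Tuple

# Two-sided 95% quantile of the standard normal distribution (rounded).
Z_95 = 1.96


def limits_of_agreement(bias: float, sd: float, z: float = Z_95) -> Tuple[float, float]:
    """Return (lower, upper) limits of agreement: bias -/+ z * sd."""
    half_width = z * sd
    return bias - half_width, bias + half_width


def bland_altman(reader_a: Sequence[float], reader_b: Sequence[float]) -> Tuple[float, float, float, float]:
    """Return (bias, sd, lower, upper) from paired measurements.

    Assumption: differences are reader_a - reader_b, and sd is the
    sample standard deviation (n - 1 denominator).
    """
    if len(reader_a) != len(reader_b) or len(reader_a) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    diffs = [a - b for a, b in zip(reader_a, reader_b)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    lower, upper = limits_of_agreement(bias, sd)
    return bias, sd, lower, upper


# Bias and SD as reported in the table above; only the limits are recomputed.
reported = {
    ("Software-Assisted", "FU1"): (1.8, 14.2),
    ("Manual", "FU1"): (3.0, 18.6),
    ("Software-Assisted", "FU2"): (-1.4, 11.1),
    ("Manual", "FU2"): (1.20, 33.4),
}

for (method, timepoint), (bias, sd) in reported.items():
    lower, upper = limits_of_agreement(bias, sd)
    print(f"{method:17s} {timepoint}: {lower:6.1f} to {upper:5.1f}")
```

The recomputed limits agree with the tabulated values to within about 0.1, which is the discrepancy expected from rounding the reported bias and SD to one decimal place.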

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ristow, I.; Well, L.; Wiese, N.J.; Warncke, M.; Tintelnot, J.; Karimzadeh, A.; Koehler, D.; Adam, G.; Bannas, P.; Sauer, M. Tumor Response Evaluation Using iRECIST: Feasibility and Reliability of Manual Versus Software-Assisted Assessments. Cancers 2024, 16, 993. https://doi.org/10.3390/cancers16050993