Annotation-Efficient Deep Learning Model for Pancreatic Cancer Diagnosis and Classification Using CT Images: A Retrospective Diagnostic Study

Abstract

Simple Summary

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Ethics

3.2. Patient and Data Collection

3.3. Development of PS Self-Supervised Learning

3.4. Training of DL Models

3.5. Statistical Analysis

4. Results

4.1. Clinicopathological Data

4.2. PC Classification

4.2.1. State-of-the-Art DL Models for PC Classification

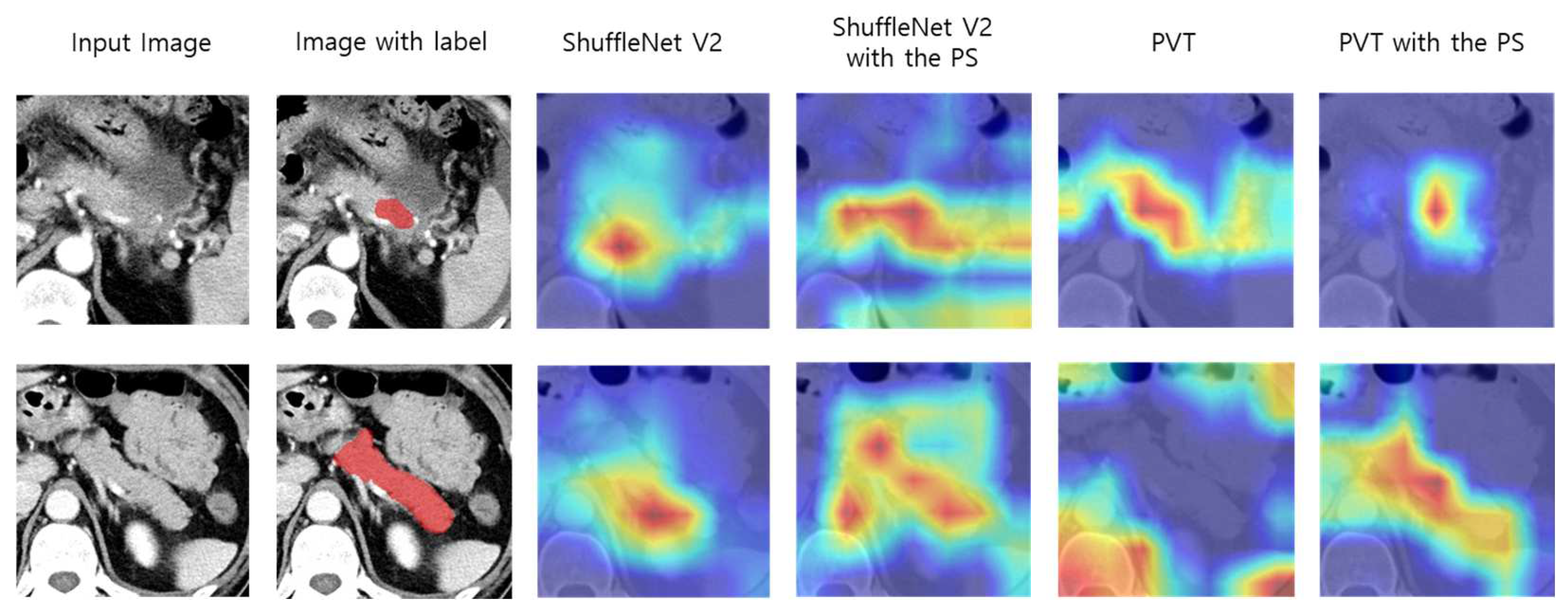

4.2.2. Impact of the PS on PC Classification on the Internal Validation Dataset

4.3. External Validation Set Classification

4.4. Early Stage PC Detection

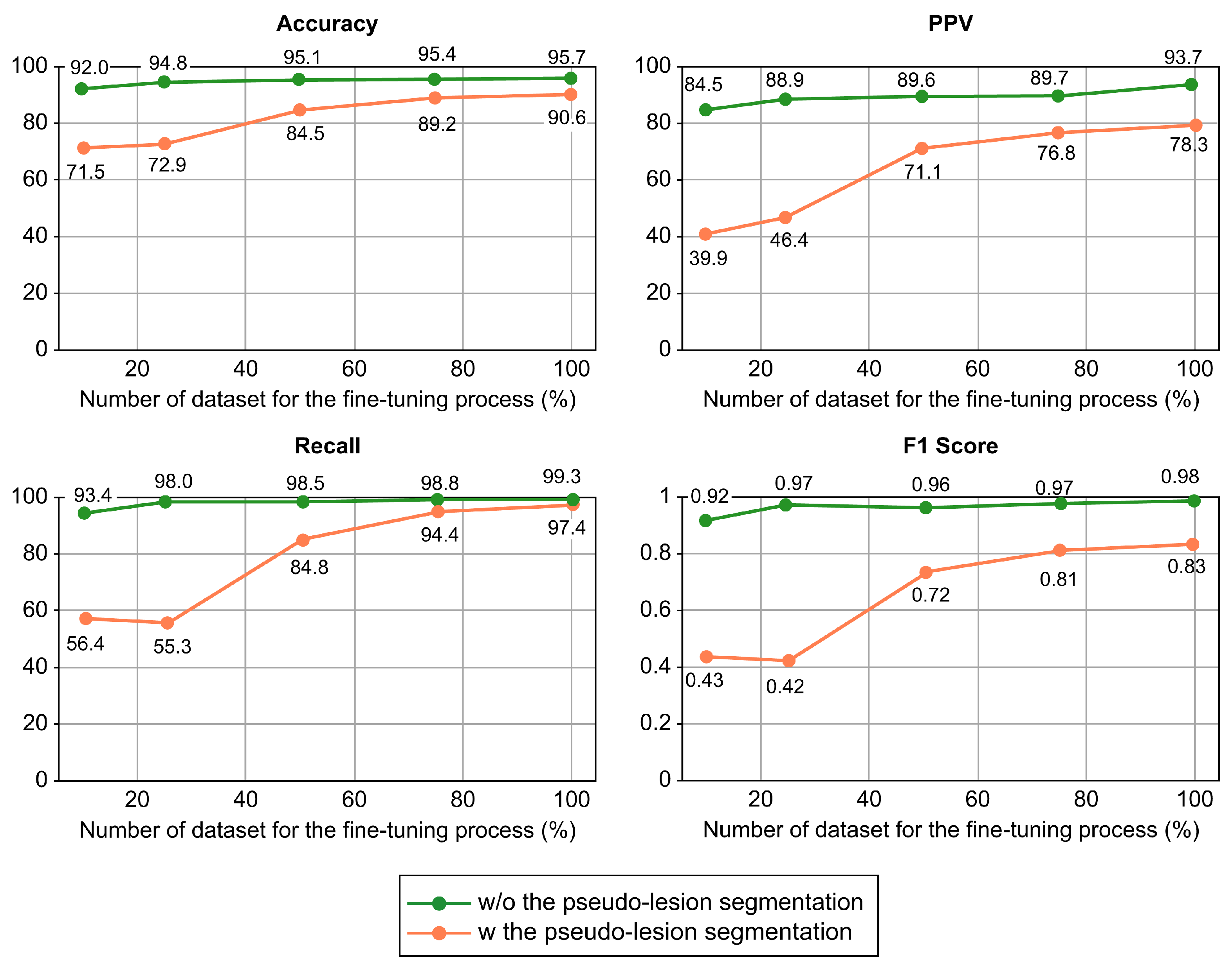

4.5. Performance Changes Depending on the Size of the Annotated Dataset

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Argalia, G.; Tarantino, G.; Ventura, C.; Campioni, D.; Tagliati, C.; Guardati, P.; Kostandini, A.; Marzioni, M.; Giuseppetti, G.M.; Giovagnoni, A. Shear wave elastography and transient elastography in HCV patients after direct-acting antivirals. Radiol. Med. 2021, 126, 894–899.
2. Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global cancer statistics 2020: Globocan estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2021, 71, 209–249.
3. Wood, L.D.; Canto, M.I.; Jaffee, E.M.; Simeone, D.M. Pancreatic cancer: Pathogenesis, screening, diagnosis, and treatment. Gastroenterology 2022, 163, 386–402.e1.
4. Cicero, G.; Mazziotti, S.; Silipigni, S.; Blandino, A.; Cantisani, V.; Pergolizzi, S.; D’Angelo, T.; Stagno, A.; Maimone, S.; Squadrito, G.; et al. Dual-energy CT quantification of fractional extracellular space in cirrhotic patients: Comparison between early and delayed equilibrium phases and correlation with oesophageal varices. Radiol. Med. 2021, 126, 761–767.
5. Granata, V.; Fusco, R.; Setola, S.V.; Avallone, A.; Palaia, R.; Grassi, R.; Izzo, F.; Petrillo, A. Radiological assessment of secondary biliary tree lesions: An update. J. Int. Med. Res. 2020, 48, 300060519850398.
6. Granata, V.; Grassi, R.; Fusco, R.; Setola, S.V.; Palaia, R.; Belli, A.; Miele, V.; Brunese, L.; Grassi, R.; Petrillo, A.; et al. Assessment of Ablation Therapy in Pancreatic Cancer: The Radiologist’s Challenge. Front. Oncol. 2020, 10, 560952.
7. Bronstein, Y.L.; Loyer, E.M.; Kaur, H.; Choi, H.; David, C.; DuBrow, R.A.; Broemeling, L.D.; Cleary, K.R.; Charnsangavej, C. Detection of small pancreatic tumors with multiphasic helical CT. AJR Am. J. Roentgenol. 2004, 182, 619–623.
8. Chu, L.C.; Goggins, M.G.; Fishman, E.K. Diagnosis and Detection of Pancreatic Cancer. Cancer J. 2017, 23, 333–342.
9. Tamm, E.P.; Loyer, E.M.; Faria, S.C.; Evans, D.B.; Wolff, R.A.; Charnsangavej, C. Retrospective analysis of dual-phase MDCT and follow-up EUS/EUS-FNA in the diagnosis of pancreatic cancer. Abdom. Imaging 2007, 32, 660–667.
10. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Corrigendum: Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 546, 686.
11. Hagerty, J.R.; Stanley, R.J.; Almubarak, H.A.; Lama, N.; Kasmi, R.; Guo, P.; Drugge, R.J.; Rabinovitz, H.S.; Oliviero, M.; Stoecker, W.V. Deep Learning and Handcrafted Method Fusion: Higher Diagnostic Accuracy for Melanoma Dermoscopy Images. IEEE J. Biomed. Health Inform. 2019, 23, 1385–1391.
12. Lehman, C.D.; Yala, A.; Schuster, T.; Dontchos, B.; Bahl, M.; Swanson, K.; Barzilay, R. Mammographic Breast Density Assessment Using Deep Learning: Clinical Implementation. Radiology 2019, 290, 52–58.
13. Ma, N.N.; Zhang, X.Y.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. Lect. Notes Comp. Sci. 2018, 11218, 122–138.
14. Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Lian, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 568–578.
15. Kuwahara, T.; Hara, K.; Mizuno, N.; Okuno, N.; Matsumoto, S.; Obata, M.; Kurita, Y.; Koda, H.; Toriyama, K.; Onishi, S.; et al. Usefulness of Deep Learning Analysis for the Diagnosis of Malignancy in Intraductal Papillary Mucinous Neoplasms of the Pancreas. Clin. Transl. Gastroenterol. 2019, 10, 1–8.
16. Liu, K.L.; Wu, T.; Chen, P.T.; Tsai, Y.M.; Roth, H.; Wu, M.S.; Liao, W.C.; Wang, W. Deep learning to distinguish pancreatic cancer tissue from non-cancerous pancreatic tissue: A retrospective study with cross-racial external validation. Lancet Digit. Health 2020, 2, e303–e313.
17. Sekaran, K.; Chandana, P.; Krishna, N.M.; Kadry, S. Deep learning convolutional neural network (CNN) with Gaussian mixture model for predicting pancreatic cancer. Multimed. Tools Appl. 2020, 79, 10233–10247.
18. Thanaporn, V.; Woo, S.M.; Choi, J.H. Unsupervised visual representation learning based on segmentation of geometric pseudo-shapes for transformer-based medical tasks. IEEE J. Biomed. Health Inform. 2023, 27, 2003–2014.
19. Alves, N.; Schuurmans, M.; Litjens, G.; Bosma, J.S.; Hermans, J.; Huisman, H. Fully automatic deep learning framework for pancreatic ductal adenocarcinoma detection on computed tomography. Cancers 2022, 14, 376.
20. Frampas, E.; David, A.; Regenet, N.; Touchefeu, Y.; Meyer, J.; Morla, O. Pancreatic carcinoma: Key-points from diagnosis to treatment. Diagn. Interv. Imaging 2016, 97, 1207–1223.
21. Pourvaziri, A. Potential CT Findings to Improve Early Detection of Pancreatic Cancer. Radiol. Imaging Cancer 2022, 4, e229001.
22. Chu, L.C.; Park, S.; Kawamoto, S.; Wang, Y.; Zhou, Y.; Shen, W.; Zhu, Z.; Xia, Y.; Xie, L.; Liu, F.; et al. Application of Deep Learning to Pancreatic Cancer Detection: Lessons Learned from Our Initial Experience. J. Am. Coll. Radiol. 2019, 16, 1338–1342.
23. Si, K.; Xue, Y.; Yu, X.; Zhu, X.; Li, Q.; Gong, W.; Liang, T.; Duan, S. Fully End-to-End Deep-Learning-Based Diagnosis of Pancreatic Tumors. Theranostics 2021, 11, 1982–1990.
24. Mu, W.; Liu, C.; Gao, F.; Qi, Y.; Lu, H.; Liu, Z.; Zhang, X.; Cai, X.; Ji, R.Y.; Hou, Y.; et al. Prediction of Clinically Relevant Pancreatico-Enteric Anastomotic Fistulas after Pancreatoduodenectomy Using Deep Learning of Preoperative Computed Tomography. Theranostics 2020, 10, 9779–9788.
25. Liu, Y.; Jain, A.; Eng, C.; Way, D.H.; Lee, K.; Bui, P.; Kanada, K.; de Oliveira Marinho, G.; Gallegos, J.; Gabriele, S.; et al. A deep learning system for differential diagnosis of skin diseases. Nat. Med. 2020, 26, 900–908.
26. Noroozi, M.; Favaro, P. Unsupervised learning of visual representations by solving jigsaw puzzles. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016; Part VI; pp. 69–84.
27. Larsson, G.; Maire, M.; Shakhnarovich, G. Colorization as a proxy task for visual understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 840–849.
28. Komodakis, N.; Gidaris, S. Unsupervised representation learning by predicting image rotations. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.
29. Azizi, S.; Mustafa, B.; Ryan, F.; Beaver, Z.; Freyberg, J.; Deaton, J.; Loh, A.; Karthikesalingam, A.; Kornblith, S.; Chen, T.; et al. Big self-supervised models advance medical image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3458–3468.
30. Li, Z.; Wang, Y.; Yu, J.; Guo, Y.; Cao, W. Deep learning based radiomics (DLR) and its usage in noninvasive IDH1 prediction for low grade glioma. Sci. Rep. 2017, 7, 5467.
31. Park, C.M. Can artificial intelligence fix the reproducibility problem of radiomics? Radiology 2019, 292, 374–375.
32. Kuhl, C.K.; Alparslan, Y.; Schmoee, J.; Sequeira, B.; Keulers, A.; Brümmendorf, T.H.; Keil, S. Validity of RECIST Version 1.1 for Response Assessment in Metastatic Cancer: A Prospective, Multireader Study. Radiology 2019, 290, 349–356.
33. Gong, H.; Fletcher, J.G.; Heiken, J.P.; Wells, M.L.; Leng, S.; McCollough, C.H.; Yu, L. Deep-learning model observer for a low-contrast hepatic metastases localization task in computed tomography. Med. Phys. 2022, 49, 70–83.
34. Han, M.; Kim, B.; Baek, J. Human and model observer performance for lesion detection in breast cone beam CT images with the FDK reconstruction. PLoS ONE 2018, 13, e0194408.
35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
36. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.
37. Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Zhang, Z.; Lin, H.; Sun, Y.; He, T.; Mueller, J.W.; Manmatha, R.; et al. ResNeSt: Split-Attention Networks. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 18–23 June 2022; pp. 2735–2745.
38. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Álvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. arXiv 2021, arXiv:2105.15203.
39. Heo, B.; Yun, S.; Han, D.; Chun, S.; Choe, J.; Oh, S. Rethinking Spatial Dimensions of Vision Transformers. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 11916–11925.
40. Simpson, A.L.; Antonelli, M.; Bakas, S.; Bilello, M.; Farahani, K.; van Ginneken, B.; Kopp-Schneider, A.; Landman, B.A.; Litjens, G.; Menze, B.; et al. A large annotated medical image dataset for the development and evaluation of segmentation algorithms. arXiv 2019, arXiv:1902.09063.
41. Roth, H.; Farag, A.; Turkbey, E.B.; Lu, L.; Liu, J.; Summers, R.M. Data from Pancreas-CT (Version 2) [Data set]. The Cancer Imaging Archive. 2016. Available online: https://wiki.cancerimagingarchive.net/display/public/pancreas-ct#225140405a525c7710d147e8bfc6611f18577bb7 (accessed on 25 May 2023).
42. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.
43. Ahn, S.S.; Kim, M.J.; Choi, J.Y.; Hong, H.S.; Chung, Y.E.; Lim, J.S. Indicative findings of pancreatic cancer in prediagnostic CT. Eur. Radiol. 2009, 19, 2448–2455.
44. Walters, D.M.; LaPar, D.J.; de Lange, E.E.; Sarti, M.; Stokes, J.B.; Adams, R.B.; Bauer, T.W. Pancreas-protocol imaging at a high-volume center leads to improved preoperative staging of pancreatic ductal adenocarcinoma. Ann. Surg. Oncol. 2011, 18, 2764–2771.
45. Han, S.H.; Heo, J.S.; Choi, S.H.; Choi, D.W.; Han, I.W.; Han, S.; You, Y.H. Actual long-term outcome of T1 and T2 pancreatic ductal adenocarcinoma after surgical resection. Int. J. Surg. 2017, 40, 68–72.
46. Kang, J.D.; Clarke, S.E.; Costa, A.F. Factors associated with missed and misinterpreted cases of pancreatic ductal adenocarcinoma. Eur. Radiol. 2021, 31, 2422–2432.
47. Elbanna, K.Y.; Jang, H.J.; Kim, T.K. Imaging diagnosis and staging of pancreatic ductal adenocarcinoma: A comprehensive review. Insights Imaging 2020, 11, 58.
48. Lê, K.A.; Ventura, E.E.; Fisher, J.Q.; Davis, J.N.; Weigensberg, M.J.; Punyanitya, M.; Hu, H.H.; Nayak, K.S.; Goran, M.I. Ethnic differences in pancreatic fat accumulation and its relationship with other fat depots and inflammatory markers. Diabetes Care 2011, 34, 485–490.
49. Permuth, J.B.; Vyas, S.; Li, J.; Chen, D.T.; Jeong, D.; Choi, J.W. Comparison of Radiomic Features in a Diverse Cohort of Patients with Pancreatic Ductal Adenocarcinomas. Front. Oncol. 2021, 11, 712950.
50. Yu, A.C.; Mohajer, B.; Eng, J. External Validation of Deep Learning Algorithms for Radiologic Diagnosis: A Systematic Review. Radiol. Artif. Intell. 2022, 4, e210064.
51. Amin, M.B.; Greene, F.L.; Edge, S.B.; Compton, C.C.; Gershenwald, J.E.; Brookland, R.K.; Meyer, L.; Gress, D.M.; Byrd, D.R.; Winchester, D.P. The Eighth Edition AJCC Cancer Staging Manual: Continuing to build a bridge from a population-based to a more “personalized” approach to cancer staging. CA Cancer J. Clin. 2017, 67, 93–99.

| DL Model | Accuracy (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) | Precision (95% CI) | F1 Score |
|---|---|---|---|---|---|
| CNN-based architecture | | | | | |
| ResNet-101 [35] | 90.2% (89.9–90.6%) | 78.6% (77.6–79.5%) | 91.1% (90.7–91.6%) | 80.4% (79.5–81.4%) | 0.79 |
| ResNeXt-101 [36] | 83.5% (83.0–83.9%) | 62.7% (61.7–63.7%) | 84.9% (84.3–85.4%) | 64.3% (63.0–65.5%) | 0.62 |
| ResNeSt [37] | 84.3% (83.9–84.7%) | 63.2% (62.2–64.1%) | 84.5% (84.0–85.0%) | 67.9% (66.7–69.2%) | 0.64 |
| ShuffleNet V2 [13] | 93.6% (92.1–94.8%) | 90.6% (87.9–92.9%) | 95.5% (93.8–96.8%) | 93.9% (91.5–95.6%) | 0.92 |
| Transformer-based architecture | | | | | |
| MiT [38] | 85.4% (85.0–85.8%) | 64.5% (63.5–65.5%) | 84.7% (84.2–85.2%) | 71.8% (70.5–73.0%) | 0.66 |
| PiT [39] | 82.8% (82.3–83.2%) | 56.3% (55.5–57.2%) | 80.2% (79.7–80.7%) | 68.8% (67.2–70.4%) | 0.56 |
| PVT [14] | 90.6% (88.8–92.1%) | 97.4% (95.3–98.6%) | 87.5% (85.1–89.6%) | 78.3% (74.4–81.7%) | 0.83 |
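The metric columns above follow the usual definitions for binary classification with PC as the positive class: sensitivity is the true-positive rate, specificity the true-negative rate, precision the positive predictive value, and the F1 score the harmonic mean of precision and sensitivity. The Python sketch below computes them from confusion-matrix counts; the class and function names and the example counts are illustrative assumptions, not the study's code. Recomputing F1 from the rounded ShuffleNet V2 entries (precision 93.9%, sensitivity 90.6%) reproduces the reported 0.92, but this section does not say how F1 was aggregated, so recomputation from rounded entries need not match every row exactly.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Binary confusion-matrix counts, with PC as the positive class (illustrative)."""
    tp: int  # PC scans classified as PC
    fp: int  # non-PC scans classified as PC
    tn: int  # non-PC scans classified as non-PC
    fn: int  # PC scans classified as non-PC


def f1_score(precision: float, sensitivity: float) -> float:
    """Harmonic mean of precision and sensitivity (recall)."""
    if precision + sensitivity == 0:
        return 0.0
    return 2 * precision * sensitivity / (precision + sensitivity)


def classification_metrics(c: ConfusionCounts) -> dict:
    """Accuracy, sensitivity, specificity, precision and F1 from raw counts.

    Assumes every denominator is non-zero (at least one PC and one non-PC scan,
    and at least one PC prediction).
    """
    total = c.tp + c.fp + c.tn + c.fn
    sensitivity = c.tp / (c.tp + c.fn)  # true-positive rate
    specificity = c.tn / (c.tn + c.fp)  # true-negative rate
    precision = c.tp / (c.tp + c.fp)    # positive predictive value
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1_score(precision, sensitivity),
    }


# Cross-check against the ShuffleNet V2 row (precision 93.9%, sensitivity 90.6%).
print(round(f1_score(0.939, 0.906), 2))  # 0.92

# Hypothetical counts, only to exercise the function.
print(classification_metrics(ConfusionCounts(tp=8, fp=2, tn=9, fn=1)))
```

Because F1 depends only on precision and sensitivity, it ignores true negatives; this is why a model can show high specificity and accuracy while its F1 stays comparatively low, as with several rows above.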

| DL Model | Accuracy (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) | Precision (95% CI) | F1 Score | AUC |
|---|---|---|---|---|---|---|
| CNN-based architecture | | | | | | |
| ShuffleNet V2 | 93.6% (92.1–94.8%) | 90.6% (87.9–92.9%) | 95.5% (93.8–96.8%) | 93.9% (91.5–95.6%) | 0.92 | 0.93 |
| ShuffleNet V2 + PS | 94.3% (92.8–95.4%) | 92.5% (90.0–94.4%) | 95.8% (94.2–97.1%) | 94.4% (92.2–97.1%) | 0.93 | 0.94 |
| Transformer-based architecture | | | | | | |
| PVT | 90.6% (88.8–92.1%) | 97.4% (95.3–98.6%) | 87.5% (85.1–89.6%) | 78.3% (74.4–81.7%) | 0.83 | 0.88 |
| PVT + PS | 95.7% (94.5–96.7%) | 99.3% (98.4–99.7%) | 90.7% (88.0–92.9%) | 93.7% (91.8–95.2%) | 0.98 | 0.95 |
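The AUC column summarizes how well each model ranks PC scans above non-PC scans across all decision thresholds, whereas accuracy, sensitivity, specificity and precision describe a single operating point. This section does not state how AUC was estimated; one standard estimator is the Mann-Whitney statistic, the fraction of (PC, non-PC) pairs in which the PC scan receives the higher score, with ties counted as one half. A minimal sketch with hypothetical model scores (the function name is illustrative):

```python
from typing import Sequence


def auc_mann_whitney(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Rank-based AUC: share of (positive, negative) pairs ordered correctly.

    A tie between a positive and a negative score counts as half a correct pair.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical PC probabilities for three PC scans and three non-PC scans.
print(auc_mann_whitney([0.9, 0.8, 0.4], [0.3, 0.5, 0.1]))  # 8 of 9 pairs correct
```

The double loop keeps the pairwise definition visible but is quadratic in the number of scans; a sort-based rank computation yields the same value more efficiently on large test sets.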

| DL Model | Accuracy (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) | Precision (95% CI) | F1 Score | AUC |
|---|---|---|---|---|---|---|
| CNN-based architecture | | | | | | |
| ShuffleNet V2 | 80.9% (76.5–84.6%) | 80.3% (75.8–84.1%) | 100.0% (74.1–100.0%) | 100.0% (98.6–100.0%) | 0.89 | 0.57 |
| ShuffleNet V2 + PS | 82.5% (78.3–86.1%) | 81.7% (77.3–85.4%) | 100.0% (81.7–100.0%) | 100.0% (98.6–100.0%) | 0.90 | 0.61 |
| Transformer-based architecture | | | | | | |
| PVT | 83.1% (78.9–86.6%) | 82.3% (77.9–86.6%) | 95.2% (77.3–99.1%) | 99.6% (98.0–99.9%) | 0.90 | 0.62 |
| PVT + PS | 87.8% (84.0–90.8%) | 86.5% (82.3–89.8%) | 100.0% (90.4–100.0%) | 100.0% (98.6–100.0%) | 0.93 | 0.80 |
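Several specificity and precision entries above are 100.0% yet carry lower bounds well below 100% (for example, 74.1% for ShuffleNet V2). Intervals of this shape are what score-type methods for binomial proportions produce when the denominator is small. This section does not name the interval method, so the sketch below uses the Wilson score interval as one common choice; treat it as an assumption, not the authors' documented procedure. For a hypothetical 11 correct out of 11, the Wilson lower bound is about 74.1%; the counts behind each table cell are not reported here.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.959964) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (default z gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    # Clamp to [0, 1] to absorb floating-point round-off at the boundaries.
    return max(0.0, center - half), min(1.0, center + half)


# A perfect score in a small hypothetical group still has a wide interval.
lo, hi = wilson_interval(11, 11)
print(f"{lo:.1%}-{hi:.1%}")  # 74.1%-100.0%
```

Unlike the simple normal-approximation (Wald) interval, which collapses to zero width at 0% or 100%, the Wilson interval stays informative at the boundaries, which is why a perfect point estimate can still be reported with a wide range.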

| DL Model | Stage T1 Accuracy (95% CI) | Stage T2 Accuracy (95% CI) | All Stages Accuracy (95% CI) |
|---|---|---|---|
| CNN-based architecture | | | |
| ShuffleNet V2 | 51.3% (44.8–57.8%) | 68.4% (65.9–70.8%) | 93.6% (92.1–94.8%) |
| ShuffleNet V2 + PS | 54.0% (47.5–60.4%) | 76.9% (74.6–79.0%) | 94.3% (92.8–95.4%) |
| Transformer-based architecture | | | |
| PVT | 50.4% (47.0–56.9%) | 67.1% (64.6–69.9%) | 90.6% (88.8–92.1%) |
| PVT + PS | 55.3% (48.8–61.8%) | 75.2% (72.7–77.6%) | 95.7% (94.5–96.7%) |
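The stage-stratified results above report accuracy separately for T1 and T2 tumors alongside the pooled figure. This section does not specify how each stage subset was assembled (for instance, whether non-PC scans were included), so the sketch below simply tags each prediction with a hypothetical subgroup label and computes accuracy within each subgroup and over all records. The record layout and function name are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (subgroup, true_label, predicted_label); 1 = PC, 0 = non-PC.
Record = Tuple[str, int, int]


def stratified_accuracy(records: Iterable[Record]) -> Dict[str, float]:
    """Accuracy within each subgroup (e.g. T stage) and pooled under the key 'All'."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        hit = int(y_true == y_pred)
        for key in (group, "All"):  # every record also counts toward the pooled value
            total[key] += 1
            correct[key] += hit
    return {key: correct[key] / total[key] for key in total}


# Hypothetical predictions: two T1 tumors, two T2 tumors, one non-PC scan.
demo = [("T1", 1, 1), ("T1", 1, 0), ("T2", 1, 1), ("T2", 1, 1), ("non-PC", 0, 0)]
print(stratified_accuracy(demo))
```

Small subgroups such as T1 tumors yield noisier estimates than the pooled value, which is consistent with the wider confidence intervals in the Stage T1 column.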

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

MDPI and ACS Style: Viriyasaranon, T.; Chun, J.W.; Koh, Y.H.; Cho, J.H.; Jung, M.K.; Kim, S.-H.; Kim, H.J.; Lee, W.J.; Choi, J.-H.; Woo, S.M. Annotation-Efficient Deep Learning Model for Pancreatic Cancer Diagnosis and Classification Using CT Images: A Retrospective Diagnostic Study. Cancers 2023, 15, 3392. https://doi.org/10.3390/cancers15133392

AMA Style: Viriyasaranon T, Chun JW, Koh YH, Cho JH, Jung MK, Kim S-H, Kim HJ, Lee WJ, Choi J-H, Woo SM. Annotation-Efficient Deep Learning Model for Pancreatic Cancer Diagnosis and Classification Using CT Images: A Retrospective Diagnostic Study. Cancers. 2023; 15(13):3392. https://doi.org/10.3390/cancers15133392

Chicago/Turabian Style: Viriyasaranon, Thanaporn, Jung Won Chun, Young Hwan Koh, Jae Hee Cho, Min Kyu Jung, Seong-Hun Kim, Hyo Jung Kim, Woo Jin Lee, Jang-Hwan Choi, and Sang Myung Woo. 2023. "Annotation-Efficient Deep Learning Model for Pancreatic Cancer Diagnosis and Classification Using CT Images: A Retrospective Diagnostic Study" Cancers 15, no. 13: 3392. https://doi.org/10.3390/cancers15133392

APA Style: Viriyasaranon, T., Chun, J. W., Koh, Y. H., Cho, J. H., Jung, M. K., Kim, S.-H., Kim, H. J., Lee, W. J., Choi, J.-H., & Woo, S. M. (2023). Annotation-Efficient Deep Learning Model for Pancreatic Cancer Diagnosis and Classification Using CT Images: A Retrospective Diagnostic Study. Cancers, 15(13), 3392. https://doi.org/10.3390/cancers15133392