How AI Can Help in the Diagnostic Dilemma of Pulmonary Nodules

Abstract

:Simple Summary

Abstract

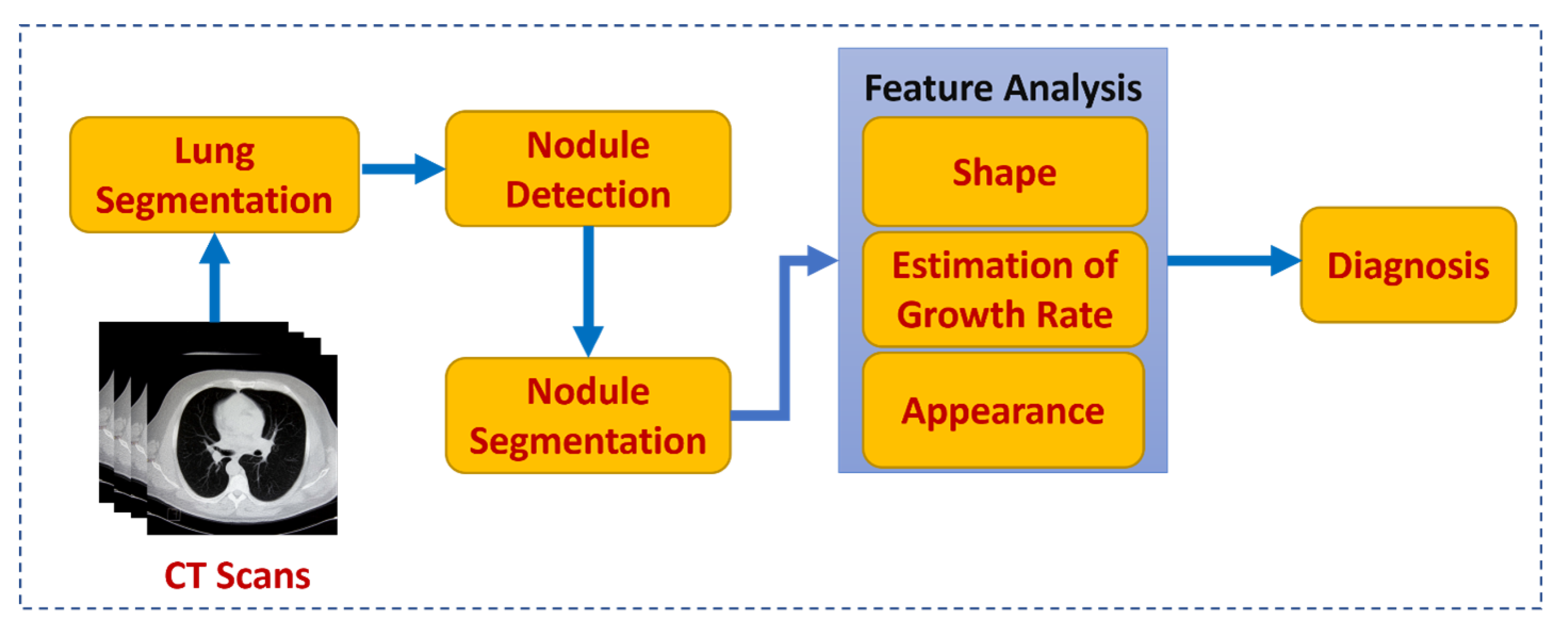

1. Introduction

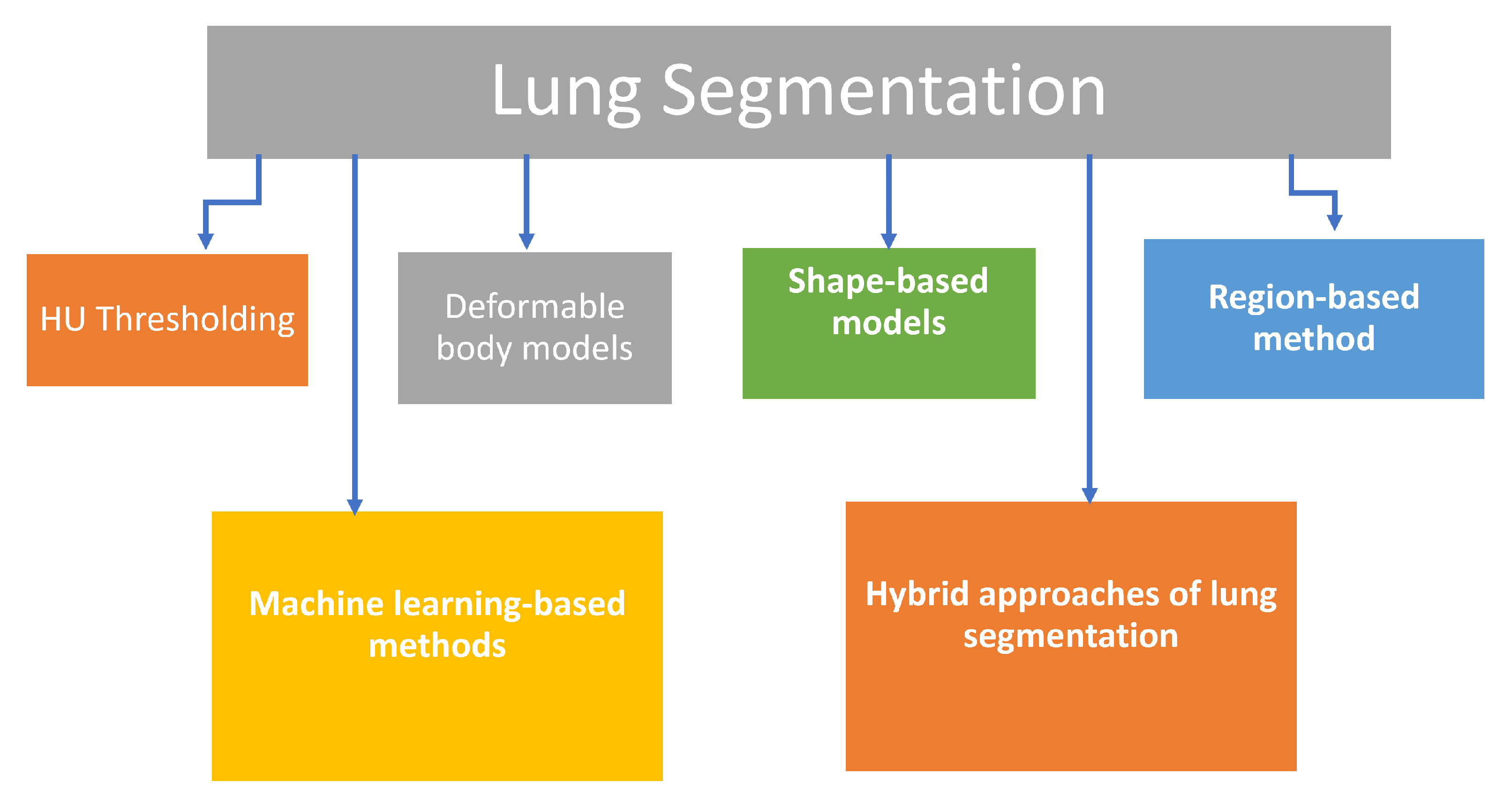

2. Lung Segmentation

3. Pulmonary Nodule Detection and Segmentation

4. Nodule Classification

5. Limitations and Future Prospects

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| HU | Hounsfield Unit |

| ABM | Adaptive Border Marching |

| A-CNN | Amalgamated Convolutional Neural Network |

| ASM | Active Shape Model |

| CAD | Computer-Aided Diagnosis |

| CADe | Computer-Aided Detection System |

| CADx | Computer-Aided Diagnosis System |

| DL | Deep Learning |

| CNN | Convolutional Neural Network |

| MV-CNN | Multi-view CNN |

| ML-CNN | Multi-level CNN |

| AHSN | Angular Histograms of Surface Normals |

| CPM | Competition Performance Metrics |

| CT | Computed Tomography |

| CV | Chan Vese |

| DBN | Deep Belief Network |

| DCNN | Deep Convolutional Neural Network |

| DNN | Deep Neural Network |

| ELM | Extreme Learning Machines |

| FLD | Fisher Linear Discriminant |

| FPN | False Positive Nodule |

| GAN | Generative Adversarial Network |

| GGO | Ground Glass Opacity |

| GGN | Ground Glass Nodule |

| ICLR | InferRead CT Lung Research |

| KB | Knowledge Bank |

| k-NN | K-nearest Neighbor |

| LDA | Linear Discriminate Analysis |

| LDCT | Low Dose Computed Tomography |

| LIDC-IDRI | Lung Image Database Consortium and Image Database Resource Initiative |

| MGRF | Markov Gibbs Random Field |

| ML | Machine Learning |

| MPP | Multi Player Perception |

| NNE | Neural Network Ensemble |

| PNN | Probabilistic Neural Network |

| RASM | Robust Active Shape Model |

| ROI | Region of Interest |

| RPCA | Robust Principal Component Analysis |

| SAE | Stacked Autoencoder |

| SS-ELM | Semi-Supervised Extreme Learning Machines |

| SVM | Support Vector Machine |

| TPN | True Positive Nodule |

| AUC | Area Under the Curve |

| IA | Invasive Adenocarcinoma |

| MTANN | Massive training artificial neural networks |

| NCI | National Cancer Institute |

| SVHN | Street View House Numbers Dataset |

| LASSO | Least Absolute Shrinkage and Selection Operator |

| AAH | Atyoical Adenomatous Hyperplasia |

| MIA | minimally invasive adenocarcinoma |

| AIS | Adenocarcinoma in Situ |

| GLCM | Gray-Level Co-occurrence Matrix |

| EM | Expectation–maximization method |

| DSC | Dice Similarity Coefficient |

| Inf-Net | COVID-19-infected lung segmentation convolution neural network |

| Semi-Inf-Net | semi-supervised Inf-Net |

| ALVD | absolute lung volume difference |

| BHD | bidirectional Hausdorff distance |

| HCRF | Hidden conditional random field |

| SCPM-Net | sphere center-points matching detection network |

| SD-U-Net | Squeeze and attention, and dense atrous spatial pyramid pooling U-Net |

References

- American Cancer Society: Cancer Facts and Figures 2017. Available online: https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/annual-cancer-facts-and-figures/2017/cancer-facts-and-figures-2017.pdf (accessed on 13 November 2021).

- Centers for Disease Control and Prevention (CDC): Smoking and Tobacco Use: Secondhand Smoke (SHS) Facts. Available online: https://www.cdc.gov/tobacco/data_statistics/fact_sheets/secondhand_smoke/general_facts/index.htm (accessed on 11 November 2021).

- Madsen, L.R.; Krarup, N.H.V.; Bergmann, T.K.; Bærentzen, S.; Neghabat, S.; Duval, L.; Knudsen, S.T. A cancer that went up in smoke: Pulmonary reaction to e-cigarettes imitating metastatic cancer. Chest 2016, 149, e65–e67. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jenks, S. Is Lung Cancer Incidence Increasing Among Never-Smokers? Jnci J. Natl. Cancer Inst. 2016, 108, djv418. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Coté, M.L.; Liu, M.; Bonassi, S.; Neri, M.; Schwartz, A.G.; Christiani, D.C.; Spitz, M.R.; Muscat, J.E.; Rennert, G.; Aben, K.K.; et al. Increased risk of lung cancer in individuals with a family history of the disease: A pooled analysis from the International Lung Cancer Consortium. Eur. J. Cancer 2012, 48, 1957–1968. [Google Scholar] [CrossRef] [PubMed]

- de Torres, J.P.; Wilson, D.O.; Sanchez-Salcedo, P.; Weissfeld, J.L.; Berto, J.; Campo, A.; Alcaide, A.B.; García-Granero, M.; Celli, B.R.; Zulueta, J.J. Lung cancer in patients with chronic obstructive pulmonary disease. Development and validation of the COPD Lung Cancer Screening Score. Am. J. Respir. Crit. Care Med. 2015, 191, 285–291. [Google Scholar] [CrossRef] [Green Version]

- Zhai, K.; Ding, J.; Shi, H.Z. Author’s Reply to “Comments on HPV and Lung Cancer Risk: A Meta-Analysis” [J. Clin. Virol. (In Press)]. J. Clin. Virol. Off. Publ. Pan Am. Soc. Clin. Virol. 2015, 63, 92–93. [Google Scholar] [CrossRef]

- Team, N.L.S.T.R. The national lung screening trial: Overview and study design. Radiology 2011, 258, 243–253. [Google Scholar]

- Global Resource for Advancing Cancer Education: Lung Cancer Screening, Part I: The Arguments for CT Screening. Available online: http://cancergrace.org/lung/2007/01/23/ct-screening-for-lung-ca-advantages/ (accessed on 14 November 2021).

- Ather, S.; Kadir, T.; Gleeson, F. Artificial intelligence and radiomics in pulmonary nodule management: Current status and future applications. Clin. Radiol. 2020, 75, 13–19. [Google Scholar] [CrossRef] [Green Version]

- Prabhakar, B.; Shende, P.; Augustine, S. Current trends and emerging diagnostic techniques for lung cancer. Biomed. Pharmacother. 2018, 106, 1586–1599. [Google Scholar] [CrossRef] [PubMed]

- Firmino, M.; Morais, A.H.; Mendoça, R.M.; Dantas, M.R.; Hekis, H.R.; Valentim, R. Computer-aided detection system for lung cancer in computed tomography scans: Review and future prospects. Biomed. Eng. Online 2014, 13, 1–16. [Google Scholar] [CrossRef] [Green Version]

- Hu, S.; Hoffman, E.A.; Reinhardt, J.M. Automatic lung segmentation for accurate quantitation of volumetric X-ray CT images. IEEE Trans. Med. Imaging 2001, 20, 490–498. [Google Scholar] [CrossRef] [PubMed]

- Ukil, S.; Reinhardt, J.M. Anatomy-guided lung lobe segmentation in X-ray CT images. IEEE Trans. Med. Imaging 2008, 28, 202–214. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Van Rikxoort, E.M.; De Hoop, B.; Van De Vorst, S.; Prokop, M.; Van Ginneken, B. Automatic segmentation of pulmonary segments from volumetric chest CT scans. IEEE Trans. Med. Imaging 2009, 28, 621–630. [Google Scholar] [CrossRef] [PubMed]

- Armato, S.G.; Giger, M.L.; Moran, C.J.; Blackburn, J.T.; Doi, K.; MacMahon, H. Computerized detection of pulmonary nodules on CT scans. Radiographics 1999, 19, 1303–1311. [Google Scholar] [CrossRef] [PubMed]

- Armato III, S.G.; Sensakovic, W.F. Automated lung segmentation for thoracic CT: Impact on computer-aided diagnosis1. Acad. Radiol. 2004, 11, 1011–1021. [Google Scholar] [CrossRef] [PubMed]

- Pu, J.; Roos, J.; Chin, A.Y.; Napel, S.; Rubin, G.D.; Paik, D.S. Adaptive border marching algorithm: Automatic lung segmentation on chest CT images. Comput. Med. Imaging Graph. 2008, 32, 452–462. [Google Scholar] [CrossRef] [Green Version]

- Gao, Q.; Wang, S.; Zhao, D.; Liu, J. Accurate lung segmentation for X-ray CT images. In Proceedings of the Third International Conference on Natural Computation (ICNC 2007), Haikou, China, 24–27 August 2007; Volume 2, pp. 275–279. [Google Scholar]

- Wei, Q.; Hu, Y.; Gelfand, G.; MacGregor, J.H. Segmentation of lung lobes in high-resolution isotropic CT images. IEEE Trans. Biomed. Eng. 2009, 56, 1383–1393. [Google Scholar] [PubMed]

- Ye, X.; Lin, X.; Dehmeshki, J.; Slabaugh, G.; Beddoe, G. Shape-based computer-aided detection of lung nodules in thoracic CT images. IEEE Trans. Biomed. Eng. 2009, 56, 1810–1820. [Google Scholar] [PubMed] [Green Version]

- Itai, Y.; Kim, H.; Ishikawa, S.; Katsuragawa, S.; Ishida, T.; Nakamura, K.; Yamamoto, A. Automatic segmentation of lung areas based on SNAKES and extraction of abnormal areas. In Proceedings of the 17th IEEE International Conference on Tools with Artificial Intelligence (ICTAI’05), Hong Kong, China, 14–16 November 2005; p. 5. [Google Scholar]

- Silveira, M.; Marques, J. Automatic segmentation of the lungs using multiple active contours and outlier model. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 3122–3125. [Google Scholar]

- Silveira, M.; Nascimento, J.; Marques, J. Automatic segmentation of the lungs using robust level sets. In Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 4414–4417. [Google Scholar]

- Rani, K.V.; Jawhar, S. Emerging trends in lung cancer detection scheme—A review. Int. J. Res. Anal. Rev. 2018, 5, 530–542. [Google Scholar]

- Mansoor, A.; Bagci, U.; Foster, B.; Xu, Z.; Papadakis, G.Z.; Folio, L.R.; Udupa, J.K.; Mollura, D.J. Segmentation and image analysis of abnormal lungs at CT: Current approaches, challenges, and future trends. Radiographics 2015, 35, 1056–1076. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Sun, S.; Bauer, C.; Beichel, R. Automated 3-D segmentation of lungs with lung cancer in CT data using a novel robust active shape model approach. IEEE Trans. Med. Imaging 2011, 31, 449–460. [Google Scholar] [PubMed] [Green Version]

- Li, K.; Wu, X.; Chen, D.Z.; Sonka, M. Optimal surface segmentation in volumetric images-a graph-theoretic approach. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 28, 119–134. [Google Scholar]

- Sofka, M.; Wetzl, J.; Birkbeck, N.; Zhang, J.; Kohlberger, T.; Kaftan, J.; Declerck, J.; Zhou, S.K. Multi-stage learning for robust lung segmentation in challenging CT volumes. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Toronto, ON, Canada, 18–22 September 2011; pp. 667–674. [Google Scholar]

- Hua, P.; Song, Q.; Sonka, M.; Hoffman, E.A.; Reinhardt, J.M. Segmentation of pathological and diseased lung tissue in CT images using a graph-search algorithm. In Proceedings of the 2011 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Chicago, IL, USA, 30 March–2 April 2011; pp. 2072–2075. [Google Scholar]

- Kockelkorn, T.T.; van Rikxoort, E.M.; Grutters, J.C.; van Ginneken, B. Interactive lung segmentation in CT scans with severe abnormalities. In Proceedings of the 2010 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Rotterdam, The Netherlands, 14–17 April 2010; pp. 564–567. [Google Scholar]

- El-Baz, A.; Gimel’farb, G.; Falk, R.; El-Ghar, M.A. A novel three-dimensional framework for automatic lung segmentation from low dose computed tompgraphy images. In Lung Imaging and Computer Aided Diagnosis; El-Baz, A., Suri, J., Eds.; CRC Press: Boca Raton, FL, USA, 2011; pp. 1–15. [Google Scholar]

- El-Ba, A.; Gimel’farb, G.; Falk, R.; Holland, T.; Shaffer, T. A new stochastic framework for accurate lung segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, New York, NY, USA, 6–10 September 2008; pp. 322–330. [Google Scholar]

- El-Baz, A.; Gimel’farb, G.L.; Falk, R.; Holland, T.; Shaffer, T. A Framework for Unsupervised Segmentation of Lung Tissues from Low Dose Computed Tomography Images. In Proceedings of the BMVC, Aberystwyth, UK, 31 August–3 September 2008; pp. 1–10. [Google Scholar]

- Chung, H.; Ko, H.; Jeon, S.J.; Yoon, K.H.; Lee, J. Automatic lung segmentation with juxta-pleural nodule identification using active contour model and bayesian approach. IEEE J. Transl. Eng. Health Med. 2018, 6, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Chan, T.F.; Vese, L.A. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Sun, S.; Ren, H.; Dan, T.; Wei, W. 3D segmentation of lungs with juxta-pleural tumor using the improved active shape model approach. Technol. Health Care 2021, 29, 385–398. [Google Scholar] [CrossRef]

- Adams, R.; Bischof, L. Seeded region growing. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 641–647. [Google Scholar] [CrossRef] [Green Version]

- Hojjatoleslami, S.; Kittler, J. Region growing: A new approach. IEEE Trans. Image Process. 1998, 7, 1079–1084. [Google Scholar] [CrossRef] [Green Version]

- Pavlidis, T.; Liow, Y.T. Integrating region growing and edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 225–233. [Google Scholar] [CrossRef]

- Tremeau, A.; Borel, N. A region growing and merging algorithm to color segmentation. Pattern Recognit. 1997, 30, 1191–1203. [Google Scholar] [CrossRef]

- Zhu, S.C.; Yuille, A. Region competition: Unifying snakes, region growing, and Bayes/MDL for multiband image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 884–900. [Google Scholar]

- Mangan, A.P.; Whitaker, R.T. Partitioning 3D surface meshes using watershed segmentation. IEEE Trans. Vis. Comput. Graph. 1999, 5, 308–321. [Google Scholar] [CrossRef] [Green Version]

- Grady, L. Random walks for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1768–1783. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Boykov, Y.; Jolly, M.P. Interactive organ segmentation using graph cuts. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Pittsburgh, PA, USA, 11–14 October 2000; pp. 276–286. [Google Scholar]

- Udupa, J. Fuzzy connectedness and object definition: Theory, algorithms, and applications in image segmentation. Graph. Model. Image Process. 1999, 9, 85–90. [Google Scholar]

- Song, Y.; Cai, W.; Zhou, Y.; Feng, D.D. Feature-based image patch approximation for lung tissue classification. IEEE Trans. Med. Imaging 2013, 32, 797–808. [Google Scholar] [CrossRef] [PubMed]

- Xu, Y.; Sonka, M.; McLennan, G.; Guo, J.; Hoffman, E.A. MDCT-based 3-D texture classification of emphysema and early smoking related lung pathologies. IEEE Trans. Med. Imaging 2006, 25, 464–475. [Google Scholar] [CrossRef] [PubMed]

- Yao, J.; Dwyer, A.; Summers, R.M.; Mollura, D.J. Computer-aided diagnosis of pulmonary infections using texture analysis and support vector machine classification. Acad. Radiol. 2011, 18, 306–314. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Korfiatis, P.D.; Karahaliou, A.N.; Kazantzi, A.D.; Kalogeropoulou, C.; Costaridou, L.I. Texture-based identification and characterization of interstitial pneumonia patterns in lung multidetector CT. IEEE Trans. Inf. Technol. Biomed. 2009, 14, 675–680. [Google Scholar] [CrossRef]

- Bagci, U.; Yao, J.; Wu, A.; Caban, J.; Palmore, T.N.; Suffredini, A.F.; Aras, O.; Mollura, D.J. Automatic detection and quantification of tree-in-bud (TIB) opacities from CT scans. IEEE Trans. Biomed. Eng. 2012, 59, 1620–1632. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Mansoor, A.; Bagci, U.; Xu, Z.; Foster, B.; Olivier, K.N.; Elinoff, J.M.; Suffredini, A.F.; Udupa, J.K.; Mollura, D.J. A generic approach to pathological lung segmentation. IEEE Trans. Med. Imaging 2014, 33, 2293–2310. [Google Scholar] [CrossRef] [Green Version]

- Van Rikxoort, E.M.; Van Ginneken, B. Automated segmentation of pulmonary structures in thoracic computed tomography scans: A review. Phys. Med. Biol. 2013, 58, R187. [Google Scholar] [CrossRef] [PubMed]

- Bağci, U.; Yao, J.; Caban, J.; Palmore, T.N.; Suffredini, A.F.; Mollura, D.J. Automatic detection of tree-in-bud patterns for computer assisted diagnosis of respiratory tract infections. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 5096–5099. [Google Scholar]

- Bagci, U.; Yao, J.; Caban, J.; Suffredini, A.F.; Palmore, T.N.; Mollura, D.J. Learning shape and texture characteristics of CT tree-in-bud opacities for CAD systems. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Toronto, ON, Canada, 18–22 September 2011; pp. 215–222. [Google Scholar]

- Caban, J.J.; Yao, J.; Bagci, U.; Mollura, D.J. Monitoring pulmonary fibrosis by fusing clinical, physiological, and computed tomography features. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 6216–6219. [Google Scholar]

- Korfiatis, P.; Kalogeropoulou, C.; Karahaliou, A.; Kazantzi, A.; Skiadopoulos, S.; Costaridou, L. Texture classification-based segmentation of lung affected by interstitial pneumonia in high-resolution CT. Med. Phys. 2008, 35, 5290–5302. [Google Scholar] [CrossRef]

- Wang, J.; Li, F.; Li, Q. Automated segmentation of lungs with severe interstitial lung disease in CT. Med. Phys. 2009, 36, 4592–4599. [Google Scholar] [CrossRef] [Green Version]

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621. [Google Scholar] [CrossRef] [Green Version]

- Sharafeldeen, A.; Elsharkawy, M.; Khalifa, F.; Soliman, A.; Ghazal, M.; AlHalabi, M.; Yaghi, M.; Alrahmawy, M.; Elmougy, S.; Sandhu, H.S.; et al. Precise higher-order reflectivity and morphology models for early diagnosis of diabetic retinopathy using OCT images. Sci. Rep. 2021, 11. [Google Scholar] [CrossRef] [PubMed]

- Nakagomi, K.; Shimizu, A.; Kobatake, H.; Yakami, M.; Fujimoto, K.; Togashi, K. Multi-shape graph cuts with neighbor prior constraints and its application to lung segmentation from a chest CT volume. Med. Image Anal. 2013, 17, 62–77. [Google Scholar] [CrossRef]

- Yan, Q.; Wang, B.; Gong, D.; Luo, C.; Zhao, W.; Shen, J.; Shi, Q.; Jin, S.; Zhang, L.; You, Z. COVID-19 Chest CT Image Segmentation—A Deep Convolutional Neural Network Solution. arXiv 2020, arXiv:2004.10987. [Google Scholar] [CrossRef]

- Fan, D.P.; Zhou, T.; Ji, G.P.; Zhou, Y.; Chen, G.; Fu, H.; Shen, J.; Shao, L. Inf-Net: Automatic COVID-19 Lung Infection Segmentation From CT Images. IEEE Trans. Med. Imaging 2020, 39, 2626–2637. [Google Scholar] [CrossRef]

- Oulefki, A.; Agaian, S.; Trongtirakul, T.; Laouar, A.K. Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 2021, 114, 107747. [Google Scholar] [CrossRef] [PubMed]

- Sharafeldeen, A.; Elsharkawy, M.; Alghamdi, N.S.; Soliman, A.; El-Baz, A. Precise Segmentation of COVID-19 Infected Lung from CT Images Based on Adaptive First-Order Appearance Model with Morphological/Anatomical Constraints. Sensors 2021, 21, 5482. [Google Scholar] [CrossRef] [PubMed]

- Zhao, C.; Xu, Y.; He, Z.; Tang, J.; Zhang, Y.; Han, J.; Shi, Y.; Zhou, W. Lung segmentation and automatic detection of COVID-19 using radiomic features from chest CT images. Pattern Recognit. 2021, 119, 108071. [Google Scholar] [CrossRef] [PubMed]

- Sousa, J.; Pereira, T.; Silva, F.; Silva, M.C.; Vilares, A.T.; Cunha, A.; Oliveira, H.P. Lung Segmentation in CT Images: A Residual U-Net Approach on a Cross-Cohort Dataset. Appl. Sci. 2022, 12, 1959. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Lecture Notes in Computer Science; Springer: Berlin, Germany, 2015; pp. 234–241. [Google Scholar] [CrossRef] [Green Version]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Kim, H.M.; Ko, T.; Choi, I.Y.; Myong, J.P. Asbestosis diagnosis algorithm combining the lung segmentation method and deep learning model in computed tomography image. Int. J. Med. Inform. 2022, 158, 104667. [Google Scholar] [CrossRef] [PubMed]

- Miettinen, O.S.; Henschke, C.I. CT screening for lung cancer: Coping with nihilistic recommendations. Radiology 2001, 221, 592–596. [Google Scholar] [CrossRef] [PubMed]

- Henschke, C.I.; Naidich, D.P.; Yankelevitz, D.F.; McGuinness, G.; McCauley, D.I.; Smith, J.P.; Libby, D.; Pasmantier, M.; Vazquez, M.; Koizumi, J.; et al. Early Lung Cancer Action Project: Initial findings on repeat screening. Cancer 2001, 92, 153–159. [Google Scholar] [CrossRef]

- Swensen, S.J.; Jett, J.R.; Hartman, T.E.; Midthun, D.E.; Sloan, J.A.; Sykes, A.M.; Aughenbaugh, G.L.; Clemens, M.A. Lung cancer screening with CT: Mayo Clinic experience. Radiology 2003, 226, 756–761. [Google Scholar] [CrossRef]

- Rusinek, H.; Naidich, D.P.; McGuinness, G.; Leitman, B.S.; McCauley, D.I.; Krinsky, G.A.; Clayton, K.; Cohen, H. Pulmonary nodule detection: Low-dose versus conventional CT. Radiology 1998, 209, 243–249. [Google Scholar] [CrossRef]

- Garg, K.; Keith, R.L.; Byers, T.; Kelly, K.; Kerzner, A.L.; Lynch, D.A.; Miller, Y.E. Randomized controlled trial with low-dose spiral CT for lung cancer screening: Feasibility study and preliminary results. Radiology 2002, 225, 506–510. [Google Scholar] [CrossRef]

- Nawa, T.; Nakagawa, T.; Kusano, S.; Kawasaki, Y.; Sugawara, Y.; Nakata, H. Lung cancer screening using low-dose spiral CT: Results of baseline and 1-year follow-up studies. Chest 2002, 122, 15–20. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Sone, S.; Li, F.; Yang, Z.; Honda, T.; Maruyama, Y.; Takashima, S.; Hasegawa, M.; Kawakami, S.; Kubo, K.; Haniuda, M.; et al. Results of three-year mass screening programme for lung cancer using mobile low-dose spiral computed tomography scanner. Br. J. Cancer 2001, 84, 25–32. [Google Scholar] [CrossRef] [PubMed]

- Way, T.W.; Hadjiiski, L.M.; Sahiner, B.; Chan, H.P.; Cascade, P.N.; Kazerooni, E.A.; Bogot, N.; Zhou, C. Computer-aided diagnosis of pulmonary nodules on CT scans: Segmentation and classification using 3D active contours. Med. Phys. 2006, 33, 2323–2337. [Google Scholar] [CrossRef] [Green Version]

- Tandon, Y.K.; Bartholmai, B.J.; Koo, C.W. Putting artificial intelligence (AI) on the spot: Machine learning evaluation of pulmonary nodules. J. Thorac. Dis. 2020, 12, 6954. [Google Scholar] [CrossRef]

- Armato, S.G., III; McLennan, G.; Bidaut, L.; McNitt-Gray, M.F.; Meyer, C.R.; Reeves, A.P.; Zhao, B.; Aberle, D.R.; Henschke, C.I.; Hoffman, E.A.; et al. The lung image database consortium (LIDC) and image database resource initiative (IDRI): A completed reference database of lung nodules on CT scans. Med. Phys. 2011, 38, 915–931. [Google Scholar] [CrossRef] [PubMed]

- Gu, Y.; Chi, J.; Liu, J.; Yang, L.; Zhang, B.; Yu, D.; Zhao, Y.; Lu, X. A survey of computer-aided diagnosis of lung nodules from CT scans using deep learning. Comput. Biol. Med. 2021, 137, 104806. [Google Scholar] [CrossRef]

- Chang, S.; Emoto, H.; Metaxas, D.N.; Axel, L. Pulmonary micronodule detection from 3D chest CT. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Saint-Malo, France, 26–29 September 2004; pp. 821–828. [Google Scholar]

- Takizawa, H.; Shigemoto, K.; Yamamoto, S.; Matsumoto, T.; Tateno, Y.; Iinuma, T.; Matsumoto, M. A recognition method of lung nodule shadows in X-Ray CT images using 3D object models. Int. J. Image Graph. 2003, 3, 533–545. [Google Scholar] [CrossRef]

- Li, Q.; Doi, K. New selective nodule enhancement filter and its application for significant improvement of nodule detection on computed tomography. In Proceedings of the Medical Imaging 2004: Image Processing. International Society for Optics and Photonics, San Diego, CA, USA, 16–19 February 2004; Volume 5370, pp. 1–9. [Google Scholar]

- Paik, D.S.; Beaulieu, C.F.; Rubin, G.D.; Acar, B.; Jeffrey, R.B.; Yee, J.; Dey, J.; Napel, S. Surface normal overlap: A computer-aided detection algorithm with application to colonic polyps and lung nodules in helical CT. IEEE Trans. Med. Imaging 2004, 23, 661–675. [Google Scholar] [CrossRef]

- Mendonça, P.R.; Bhotika, R.; Sirohey, S.A.; Turner, W.D.; Miller, J.V.; Avila, R.S. Model-based analysis of local shape for lesion detection in CT scans. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Palm Springs, CA, USA, 26–29 October 2005; pp. 688–695. [Google Scholar]

- Lee, Y.; Hara, T.; Fujita, H.; Itoh, S.; Ishigaki, T. Automated detection of pulmonary nodules in helical CT images based on an improved template-matching technique. IEEE Trans. Med. Imaging 2001, 20, 595–604. [Google Scholar] [PubMed]

- Wiemker, R.; Rogalla, P.; Zwartkruis, A.; Blaffert, T. Computer-aided lung nodule detection on high-resolution CT data. In Proceedings of the Medical Imaging 2002: Image Processing. International Society for Optics and Photonics, San Diego, CA, USA, 23–28 February 2002; Volume 4684, pp. 677–688. [Google Scholar]

- Kostis, W.J.; Reeves, A.P.; Yankelevitz, D.F.; Henschke, C.I. Three-dimensional segmentation and growth-rate estimation of small pulmonary nodules in helical CT images. IEEE Trans. Med. Imaging 2003, 22, 1259–1274. [Google Scholar] [CrossRef] [PubMed]

- Gurcan, M.N.; Sahiner, B.; Petrick, N.; Chan, H.P.; Kazerooni, E.A.; Cascade, P.N.; Hadjiiski, L. Lung nodule detection on thoracic computed tomography images: Preliminary evaluation of a computer-aided diagnosis system. Med. Phys. 2002, 29, 2552–2558. [Google Scholar] [CrossRef]

- Kanazawa, K.; Kawata, Y.; Niki, N.; Satoh, H.; Ohmatsu, H.; Kakinuma, R.; Kaneko, M.; Moriyama, N.; Eguchi, K. Computer-aided diagnosis for pulmonary nodules based on helical CT images. Comput. Med. Imaging Graph. 1998, 22, 157–167. [Google Scholar] [CrossRef]

- Kawata, Y.; Niki, N.; Ohmatsu, H.; Kusumoto, M.; Kakinuma, R.; Mori, K.; Nishiyama, H.; Eguchi, K.; Kaneko, M.; Moriyama, N. Computer-aided diagnosis of pulmonary nodules using three-dimensional thoracic CT images. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Utrecht, The Netherlands, 27 September–1 October 2001; pp. 1393–1394. [Google Scholar]

- Betke, M.; Ko, J.P. Detection of pulmonary nodules on CT and volumetric assessment of change over time. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Cambridge, UK, 19–22 September 1999; pp. 245–252. [Google Scholar]

- Kubo, M.; Kubota, K.; Yamada, N.; Kawata, Y.; Niki, N.; Eguchi, K.; Ohmatsu, H.; Kakinuma, R.; Kaneko, M.; Kusumoto, M.; et al. CAD system for lung cancer based on low-dose single-slice CT image. In Proceedings of the Medical Imaging 2002: Image Processing. International Society for Optics and Photonics, San Diego, CA, USA, 19–25 January 2002; Volume 4684, pp. 1262–1269. [Google Scholar]

- Oda, T.; Kubo, M.; Kawata, Y.; Niki, N.; Eguchi, K.; Ohmatsu, H.; Kakinuma, R.; Kaneko, M.; Kusumoto, M.; Moriyama, N.; et al. Detection algorithm of lung cancer candidate nodules on multislice CT images. In Proceedings of the Medical Imaging 2002: Image Processing. International Society for Optics and Photonics, San Diego, CA, USA, 19–25 January 2002; Volume 4684, pp. 1354–1361. [Google Scholar]

- Saita, S.; Oda, T.; Kubo, M.; Kawata, Y.; Niki, N.; Sasagawa, M.; Ohmatsu, H.; Kakinuma, R.; Kaneko, M.; Kusumoto, M.; et al. Nodule detection algorithm based on multislice CT images for lung cancer screening. In Proceedings of the Medical Imaging 2004: Image Processing. International Society for Optics and Photonics, San Diego, CA, USA, 16–19 February 2004; Volume 5370, pp. 1083–1090. [Google Scholar]

- Brown, M.S.; McNitt-Gray, M.F.; Goldin, J.G.; Suh, R.D.; Sayre, J.W.; Aberle, D.R. Patient-specific models for lung nodule detection and surveillance in CT images. IEEE Trans. Med. Imaging 2001, 20, 1242–1250. [Google Scholar] [CrossRef]

- Messay, T.; Hardie, R.C.; Rogers, S.K. A new computationally efficient CAD system for pulmonary nodule detection in CT imagery. Med. Image Anal. 2010, 14, 390–406. [Google Scholar] [CrossRef] [PubMed]

- Setio, A.A.; Jacobs, C.; Gelderblom, J.; van Ginneken, B. Automatic detection of large pulmonary solid nodules in thoracic CT images. Med. Phys. 2015, 42, 5642–5653. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Xin, J.; Sun, P.; Lin, Z.; Yao, Y.; Gao, X. Improved lung nodule diagnosis accuracy using lung CT images with uncertain class. Comput. Methods Programs Biomed. 2018, 162, 197–209. [Google Scholar] [CrossRef]

- Baralis, E.; Chiusano, S.; Garza, P. A lazy approach to associative classification. IEEE Trans. Knowl. Data Eng. 2007, 20, 156–171. [Google Scholar] [CrossRef]

- Pehrson, L.M.; Nielsen, M.B.; Ammitzbøl Lauridsen, C. Automatic pulmonary nodule detection applying deep learning or machine learning algorithms to the LIDC-IDRI database: A systematic review. Diagnostics 2019, 9, 29. [Google Scholar] [CrossRef] [Green Version]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef] [Green Version]

- Kadir, T.; Gleeson, F. Lung cancer prediction using machine learning and advanced imaging techniques. Transl. Lung Cancer Res. 2018, 7, 304. [Google Scholar] [CrossRef] [PubMed]

- Lee, S.M.; Seo, J.B.; Yun, J.; Cho, Y.H.; Vogel-Claussen, J.; Schiebler, M.L.; Gefter, W.B.; Van Beek, E.J.; Goo, J.M.; Lee, K.S.; et al. Deep learning applications in chest radiography and computed tomography. J. Thorac. Imaging 2019, 34, 75–85. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Akram, S.; Javed, M.Y.; Qamar, U.; Khanum, A.; Hassan, A. Artificial neural network based classification of lungs nodule using hybrid features from computerized tomographic images. Appl. Math. Inf. Sci 2015, 9, 183–195. [Google Scholar] [CrossRef]

- Choi, W.J.; Choi, T.S. Automated pulmonary nodule detection based on three-dimensional shape-based feature descriptor. Comput. Methods Programs Biomed. 2014, 113, 37–54. [Google Scholar] [CrossRef] [PubMed]

- Alilou, M.; Kovalev, V.; Snezhko, E.; Taimouri, V. A comprehensive framework for automatic detection of pulmonary nodules in lung CT images. Image Anal. Stereol. 2014, 33, 13–27. [Google Scholar] [CrossRef] [Green Version]

- Bai, J.; Huang, X.; Liu, S.; Song, Q.; Bhagalia, R. Learning orientation invariant contextual features for nodule detection in lung CT scans. In Proceedings of the 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), Brooklyn, NY, USA, 16–19 April 2015; pp. 1135–1138. [Google Scholar]

- El-Regaily, S.A.; Salem, M.A.M.; Aziz, M.H.A.; Roushdy, M.I. Lung nodule segmentation and detection in computed tomography. In Proceedings of the 2017 Eighth International Conference on Intelligent Computing and Information Systems (ICICIS), Cairo, Egyp, 5–7 December 2017; pp. 72–78. [Google Scholar]

- Golan, R.; Jacob, C.; Denzinger, J. Lung nodule detection in CT images using deep convolutional neural networks. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 243–250. [Google Scholar]

- Bergtholdt, M.; Wiemker, R.; Klinder, T. Pulmonary nodule detection using a cascaded SVM classifier. In Proceedings of the Medical Imaging 2016: Computer-Aided Diagnosis. International Society for Optics and Photonics, San Diego, CA, USA, 27 February–3 March 2016; Volume 9785, p. 978513. [Google Scholar]

- Zhang, T.; Zhao, J.; Luo, J.; Qiang, Y. Deep belief network for lung nodules diagnosed in CT imaging. Int. J. Perform. Eng. 2017, 13, 1358. [Google Scholar] [CrossRef]

- Jacobs, C.; van Rikxoort, E.M.; Murphy, K.; Prokop, M.; Schaefer-Prokop, C.M.; van Ginneken, B. Computer-aided detection of pulmonary nodules: A comparative study using the public LIDC/IDRI database. Eur. Radiol. 2016, 26, 2139–2147. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Wu, B.; Zhang, N.; Liu, J.; Ren, F.; Zhao, L. Research progress of computer aided diagnosis system for pulmonary nodules in CT images. J. X-ray Sci. Technol. 2020, 28, 1–16. [Google Scholar] [CrossRef] [PubMed]

- McWilliams, A.; Tammemagi, M.C.; Mayo, J.R.; Roberts, H.; Liu, G.; Soghrati, K.; Yasufuku, K.; Martel, S.; Laberge, F.; Gingras, M.; et al. Probability of cancer in pulmonary nodules detected on first screening CT. N. Engl. J. Med. 2013, 369, 910–919. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Horeweg, N.; Scholten, E.T.; de Jong, P.A.; van der Aalst, C.M.; Weenink, C.; Lammers, J.W.J.; Nackaerts, K.; Vliegenthart, R.; ten Haaf, K.; Yousaf-Khan, U.A.; et al. Detection of lung cancer through low-dose CT screening (NELSON): A prespecified analysis of screening test performance and interval cancers. Lancet Oncol. 2014, 15, 1342–1350. [Google Scholar] [CrossRef]

- Revel, M.P.; Bissery, A.; Bienvenu, M.; Aycard, L.; Lefort, C.; Frija, G. Are two-dimensional CT measurements of small noncalcified pulmonary nodules reliable? Radiology 2004, 231, 453–458. [Google Scholar] [CrossRef] [PubMed]

- Korst, R.J.; Lee, B.E.; Krinsky, G.A.; Rutledge, J.R. The utility of automated volumetric growth analysis in a dedicated pulmonary nodule clinic. J. Thorac. Cardiovasc. Surg. 2011, 142, 372–377. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bianconi, F.; Fravolini, M.L.; Pizzoli, S.; Palumbo, I.; Minestrini, M.; Rondini, M.; Nuvoli, S.; Spanu, A.; Palumbo, B. Comparative evaluation of conventional and deep learning methods for semi-automated segmentation of pulmonary nodules on CT. Quant. Imaging Med. Surg. 2021, 11, 3286. [Google Scholar] [CrossRef] [PubMed]

- Kuhnigk, J.M.; Dicken, V.; Bornemann, L.; Bakai, A.; Wormanns, D.; Krass, S.; Peitgen, H.O. Morphological segmentation and partial volume analysis for volumetry of solid pulmonary lesions in thoracic CT scans. IEEE Trans. Med. Imaging 2006, 25, 417–434. [Google Scholar] [CrossRef]

- Jamshid, D.; Hamdan, A.; Manlio, V.; Ye, X. Segmentation of pulmonary nodules in thoracic CT scans: A region growing approach. IEEE Trans. Med. Imaging 2008, 27, 467–480. [Google Scholar]

- Tao, Y.; Lu, L.; Dewan, M.; Chen, A.Y.; Corso, J.; Xuan, J.; Salganicoff, M.; Krishnan, A. Multi-level ground glass nodule detection and segmentation in CT lung images. In Proceedings of the International Conference on Medical Image Computing and Computer—Assisted Intervention, London, UK, 20–24 September 2009; pp. 715–723. [Google Scholar]

- Zhou, J.; Chang, S.; Metaxas, D.N.; Zhao, B.; Ginsberg, M.S.; Schwartz, L.H. An automatic method for ground glass opacity nodule detection and segmentation from CT studies. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, Virtual, 1–5 November 2006; pp. 3062–3065. [Google Scholar]

- Charbonnier, J.P.; Chung, K.; Scholten, E.T.; Van Rikxoort, E.M.; Jacobs, C.; Sverzellati, N.; Silva, M.; Pastorino, U.; Van Ginneken, B.; Ciompi, F. Automatic segmentation of the solid core and enclosed vessels in subsolid pulmonary nodules. Sci. Rep. 2018, 8, 646. [Google Scholar] [CrossRef] [Green Version]

- Kubota, T.; Jerebko, A.K.; Dewan, M.; Salganicoff, M.; Krishnan, A. Segmentation of pulmonary nodules of various densities with morphological approaches and convexity models. Med. Image Anal. 2011, 15, 133–154. [Google Scholar] [CrossRef]

- Mukhopadhyay, S. A segmentation framework of pulmonary nodules in lung CT images. J. Digit. Imaging 2016, 29, 86–103. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Liu, Y.; Wang, Z.; Guo, M.; Li, P. Hidden conditional random field for lung nodule detection. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014. [Google Scholar] [CrossRef]

- Li, Q.; Sone, S.; Doi, K. Selective enhancement filters for nodules, vessels, and airway walls in two- and three-dimensional CT scans. Med. Phys. 2003, 30, 2040–2051. [Google Scholar] [CrossRef]

- Quattoni, A.; Wang, S.; Morency, L.P.; Collins, M.; Darrell, T. Hidden Conditional Random Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1848–1852. [Google Scholar] [CrossRef] [PubMed]

- Zhao, C.; Han, J.; Jia, Y.; Gou, F. Lung Nodule Detection via 3D U-Net and Contextual Convolutional Neural Network. In Proceedings of the 2018 International Conference on Networking and Network Applications (NaNA), Xi’an, China, 12–15 October 2018. [Google Scholar] [CrossRef]

- Özgün, Ç.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016; Springer: Berlin, Germany, 2016; pp. 424–432. [Google Scholar] [CrossRef] [Green Version]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8 December 2014; Volume 2, pp. 2672–2680. [Google Scholar]

- Luo, X.; Song, T.; Wang, G.; Chen, J.; Chen, Y.; Li, K.; Metaxas, D.N.; Zhang, S. SCPM-Net: An anchor-free 3D lung nodule detection network using sphere representation and center points matching. Med. Image Anal. 2022, 75, 102287. [Google Scholar] [CrossRef] [PubMed]

- Yin, S.; Deng, H.; Xu, Z.; Zhu, Q.; Cheng, J. SD-UNet: A Novel Segmentation Framework for CT Images of Lung Infections. Electronics 2022, 11, 130. [Google Scholar] [CrossRef]

- Gong, J.; Liu, J.; Hao, W.; Nie, S.; Zheng, B.; Wang, S.; Peng, W. A deep residual learning network for predicting lung adenocarcinoma manifesting as ground-glass nodule on CT images. Eur. Radiol. 2020, 30, 1847–1855. [Google Scholar] [CrossRef] [PubMed]

- Sim, Y.; Chung, M.J.; Kotter, E.; Yune, S.; Kim, M.; Do, S.; Han, K.; Kim, H.; Yang, S.; Lee, D.J.; et al. Deep convolutional neural network–based software improves radiologist detection of malignant lung nodules on chest radiographs. Radiology 2020, 294, 199–209. [Google Scholar] [CrossRef] [PubMed]

- Tajbakhsh, N.; Suzuki, K. Comparing two classes of end-to-end machine-learning models in lung nodule detection and classification: MTANNs vs. CNNs. Pattern Recognit. 2017, 63, 476–486. [Google Scholar] [CrossRef]

- Hu, X.; Gong, J.; Zhou, W.; Li, H.; Wang, S.; Wei, M.; Peng, W.; Gu, Y. Computer-aided diagnosis of ground glass pulmonary nodule by fusing deep learning and radiomics features. Phys. Med. Biol. 2021, 66, 065015. [Google Scholar] [CrossRef] [PubMed]

- Zwanenburg, A.; Leger, S.; Vallières, M.; Löck, S. Image biomarker standardisation initiative. arXiv 2016, arXiv:1612.07003. [Google Scholar] [CrossRef] [Green Version]

- Sharafeldeen, A.; Elsharkawy, M.; Khaled, R.; Shaffie, A.; Khalifa, F.; Soliman, A.; khalek Abdel Razek, A.A.; Hussein, M.M.; Taman, S.; Naglah, A.; et al. Texture and shape analysis of diffusion-weighted imaging for thyroid nodules classification using machine learning. Med. Phys. 2021, 49, 988–999. [Google Scholar] [CrossRef] [PubMed]

- Lambin, P.; Rios-Velazquez, E.; Leijenaar, R.; Carvalho, S.; Van Stiphout, R.G.; Granton, P.; Zegers, C.M.; Gillies, R.; Boellard, R.; Dekker, A.; et al. Radiomics: Extracting more information from medical images using advanced feature analysis. Eur. J. Cancer 2012, 48, 441–446. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Foley, F.; Rajagopalan, S.; Raghunath, S.M.; Boland, J.M.; Karwoski, R.A.; Maldonado, F.; Bartholmai, B.J.; Peikert, T. Computer-aided nodule assessment and risk yield risk management of adenocarcinoma: The future of imaging? In Seminars in Thoracic and Cardiovascular Surgery; Elsevier: Amsterdam, The Netherlands, 2016; Volume 28, pp. 120–126. [Google Scholar]

- Wang, X.; Mao, K.; Wang, L.; Yang, P.; Lu, D.; He, P. An appraisal of lung nodules automatic classification algorithms for CT images. Sensors 2019, 19, 194. [Google Scholar] [CrossRef] [Green Version]

- Li, M.; Narayan, V.; Gill, R.R.; Jagannathan, J.P.; Barile, M.F.; Gao, F.; Bueno, R.; Jayender, J. Computer-aided diagnosis of ground-glass opacity nodules using open-source software for quantifying tumor heterogeneity. Am. J. Roentgenol. 2017, 209, 1216. [Google Scholar] [CrossRef]

- Fan, L.; Fang, M.; Li, Z.; Tu, W.; Wang, S.; Chen, W.; Tian, J.; Dong, D.; Liu, S. Radiomics signature: A biomarker for the preoperative discrimination of lung invasive adenocarcinoma manifesting as a ground-glass nodule. Eur. Radiol. 2019, 29, 889–897. [Google Scholar] [CrossRef] [PubMed]

- Madero Orozco, H.; Vergara Villegas, O.O.; Cruz Sánchez, V.G.; Ochoa Domínguez, H.D.J.; Nandayapa Alfaro, M.D.J. Automated system for lung nodules classification based on wavelet feature descriptor and support vector machine. Biomed. Eng. Online 2015, 14, 9. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dehmeshki, J.; Ye, X.; Costello, J. Shape based region growing using derivatives of 3D medical images: Application to semiautomated detection of pulmonary nodules. In Proceedings of the 2003 International Conference on Image Processing, Barcelona, Spain, 14–17 September 2003; Volume 1, pp. I-1085–I-1088. [Google Scholar]

- Kumar, D.; Wong, A.; Clausi, D.A. Lung nodule classification using deep features in CT images. In Proceedings of the 2015 12th Conference on Computer and Robot Vision, Halifax, NS, Canada, 3–5 June 2015; pp. 133–138. [Google Scholar]

- Li, Q.; Balagurunathan, Y.; Liu, Y.; Qi, J.; Schabath, M.B.; Ye, Z.; Gillies, R.J. Comparison between radiological semantic features and lung-RADS in predicting malignancy of screen-detected lung nodules in the National Lung Screening Trial. Clin. Lung Cancer 2018, 19, 148–156. [Google Scholar] [CrossRef] [Green Version]

- Liu, A.; Wang, Z.; Yang, Y.; Wang, J.; Dai, X.; Wang, L.; Lu, Y.; Xue, F. Preoperative diagnosis of malignant pulmonary nodules in lung cancer screening with a radiomics nomogram. Cancer Commun. 2020, 40, 16–24. [Google Scholar] [CrossRef] [Green Version]

- Zhao, W.; Yang, J.; Sun, Y.; Li, C.; Wu, W.; Jin, L.; Yang, Z.; Ni, B.; Gao, P.; Wang, P.; et al. 3D deep learning from CT scans predicts tumor invasiveness of subcentimeter pulmonary adenocarcinomas. Cancer Res. 2018, 78, 6881–6889. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wang, J.; Chen, X.; Lu, H.; Zhang, L.; Pan, J.; Bao, Y.; Su, J.; Qian, D. Feature-shared adaptive-boost deep learning for invasiveness classification of pulmonary subsolid nodules in CT images. Med. Phys. 2020, 47, 1738–1749. [Google Scholar] [CrossRef] [PubMed]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Xia, X.; Gong, J.; Hao, W.; Yang, T.; Lin, Y.; Wang, S.; Peng, W. Comparison and fusion of deep learning and radiomics features of ground-glass nodules to predict the invasiveness risk of stage-I lung adenocarcinomas in CT scan. Front. Oncol. 2020, 10, 418. [Google Scholar] [CrossRef]

- Uthoff, J.; Stephens, M.J.; Newell, J.D., Jr.; Hoffman, E.A.; Larson, J.; Koehn, N.; De Stefano, F.A.; Lusk, C.M.; Wenzlaff, A.S.; Watza, D.; et al. Machine learning approach for distinguishing malignant and benign lung nodules utilizing standardized perinodular parenchymal features from CT. Med. Phys. 2019, 46, 3207–3216. [Google Scholar] [CrossRef] [PubMed]

- Shen, W.; Zhou, M.; Yang, F.; Yang, C.; Tian, J. Multi-scale convolutional neural networks for lung nodule classification. In Proceedings of the International Conference on Information Processing in Medical Imaging; 2015; pp. 588–599. [Google Scholar]

- Nibali, A.; He, Z.; Wollersheim, D. Pulmonary nodule classification with deep residual networks. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1799–1808. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Hou, F.; Qin, H.; Hao, A. Multi-view multi-scale CNNs for lung nodule type classification from CT images. Pattern Recognit. 2018, 77, 262–275. [Google Scholar] [CrossRef]

- Chen, H.; Wu, W.; Xia, H.; Du, J.; Yang, M.; Ma, B. Classification of pulmonary nodules using neural network ensemble. In Proceedings of the International Symposium on Neural Networks, Guilin, China, 29 May–1 June 2011; pp. 460–466. [Google Scholar]

- Kuruvilla, J.; Gunavathi, K. Lung cancer classification using neural networks for CT images. Comput. Methods Programs Biomed. 2014, 113, 202–209. [Google Scholar] [CrossRef]

- Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019, 25, 954–961. [Google Scholar] [CrossRef] [PubMed]

- Li, K.; Liu, K.; Zhong, Y.; Liang, M.; Qin, P.; Li, H.; Zhang, R.; Li, S.; Liu, X. Assessing the predictive accuracy of lung cancer, metastases, and benign lesions using an artificial intelligence-driven computer aided diagnosis system. Quant. Imaging Med. Surg. 2021, 11, 3629. [Google Scholar] [CrossRef]

- Zhou, M.; Leung, A.; Echegaray, S.; Gentles, A.; Shrager, J.B.; Jensen, K.C.; Berry, G.J.; Plevritis, S.K.; Rubin, D.L.; Napel, S.; et al. Non–small cell lung cancer radiogenomics map identifies relationships between molecular and imaging phenotypes with prognostic implications. Radiology 2018, 286, 307–315. [Google Scholar] [CrossRef]

- Yamamoto, S.; Korn, R.L.; Oklu, R.; Migdal, C.; Gotway, M.B.; Weiss, G.J.; Iafrate, A.J.; Kim, D.W.; Kuo, M.D. ALK molecular phenotype in non–small cell lung cancer: CT radiogenomic characterization. Radiology 2014, 272, 568–576. [Google Scholar] [CrossRef] [PubMed]

- Aerts, H.J.; Grossmann, P.; Tan, Y.; Oxnard, G.R.; Rizvi, N.; Schwartz, L.H.; Zhao, B. Defining a radiomic response phenotype: A pilot study using targeted therapy in NSCLC. Sci. Rep. 2016, 6, 33860. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Rizzo, S.; Petrella, F.; Buscarino, V.; De Maria, F.; Raimondi, S.; Barberis, M.; Fumagalli, C.; Spitaleri, G.; Rampinelli, C.; De Marinis, F.; et al. CT radiogenomic characterization of EGFR, K-RAS, and ALK mutations in non-small cell lung cancer. Eur. Radiol. 2016, 26, 32–42. [Google Scholar] [CrossRef] [PubMed]

- Velazquez, E.R.; Parmar, C.; Liu, Y.; Coroller, T.P.; Cruz, G.; Stringfield, O.; Ye, Z.; Makrigiorgos, M.; Fennessy, F.; Mak, R.H.; et al. Somatic mutations drive distinct imaging phenotypes in lung cancer. Cancer Res. 2017, 77, 3922–3930. [Google Scholar] [CrossRef] [Green Version]

- Lee, K.H.; Goo, J.M.; Park, C.M.; Lee, H.J.; Jin, K.N. Computer-aided detection of malignant lung nodules on chest radiographs: Effect on observers’ performance. Korean J. Radiol. 2012, 13, 564–571. [Google Scholar] [CrossRef]

- Liu, S.; Xie, Y.; Jirapatnakul, A.; Reeves, A.P. Pulmonary nodule classification in lung cancer screening with three-dimensional convolutional neural networks. J. Med. Imaging 2017, 4, 041308. [Google Scholar] [CrossRef]

- Kang, G.; Liu, K.; Hou, B.; Zhang, N. 3D multi-view convolutional neural networks for lung nodule classification. PLoS ONE 2017, 12, e0188290. [Google Scholar] [CrossRef] [Green Version]

- Lyu, J.; Ling, S.H. Using multi-level convolutional neural network for classification of lung nodules on CT images. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 686–689. [Google Scholar]

- Ciompi, F.; Chung, K.; Van Riel, S.J.; Setio, A.A.A.; Gerke, P.K.; Jacobs, C.; Scholten, E.T.; Schaefer-Prokop, C.; Wille, M.M.; Marchiano, A.; et al. Towards automatic pulmonary nodule management in lung cancer screening with deep learning. Sci. Rep. 2017, 7, 46479. [Google Scholar] [CrossRef]

- Shaffie, A.; Soliman, A.; Fraiwan, L.; Ghazal, M.; Taher, F.; Dunlap, N.; Wang, B.; van Berkel, V.; Keynton, R.; Elmaghraby, A.; et al. A generalized deep learning-based diagnostic system for early diagnosis of various types of pulmonary nodules. Technol. Cancer Res. Treat. 2018, 17, 1533033818798800. [Google Scholar] [CrossRef]

- Hua, K.L.; Hsu, C.H.; Hidayati, S.C.; Cheng, W.H.; Chen, Y.J. Computer-aided classification of lung nodules on computed tomography images via deep learning technique. OncoTarg. Ther. 2015, 8, 2015–2022. [Google Scholar]

- Song, Q.; Zhao, L.; Luo, X.; Dou, X. Using deep learning for classification of lung nodules on computed tomography images. J. Healthc. Eng. 2017, 2017, 8314740. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Causey, J.L.; Zhang, J.; Ma, S.; Jiang, B.; Qualls, J.A.; Politte, D.G.; Prior, F.; Zhang, S.; Huang, X. Highly accurate model for prediction of lung nodule malignancy with CT scans. Sci. Rep. 2018, 8, 9286. [Google Scholar] [CrossRef] [PubMed]

- El-Baz, A.S.; Gimel’farb, G.L.; Suri, J.S. Stochastic Modeling for Medical Image Analysis; CRC Press: Boca Raton, FL, USA, 2016. [Google Scholar]

- Elsharkawy, M.; Sharafeldeen, A.; Soliman, A.; Khalifa, F.; Ghazal, M.; El-Daydamony, E.; Atwan, A.; Sandhu, H.S.; El-Baz, A. A Novel Computer-Aided Diagnostic System for Early Detection of Diabetic Retinopathy Using 3D-OCT Higher-Order Spatial Appearance Model. Diagnostics 2022, 12, 461. [Google Scholar] [CrossRef]

- Elsharkawy, M.; Sharafeldeen, A.; Taher, F.; Shalaby, A.; Soliman, A.; Mahmoud, A.; Ghazal, M.; Khalil, A.; Alghamdi, N.S.; Razek, A.A.K.A.; et al. Early assessment of lung function in coronavirus patients using invariant markers from chest X-rays images. Sci. Rep. 2021, 11, 12095. [Google Scholar] [CrossRef] [PubMed]

- Farahat, I.S.; Sharafeldeen, A.; Elsharkawy, M.; Soliman, A.; Mahmoud, A.; Ghazal, M.; Taher, F.; Bilal, M.; Razek, A.A.K.A.; Aladrousy, W.; et al. The Role of 3D CT Imaging in the Accurate Diagnosis of Lung Function in Coronavirus Patients. Diagnostics 2022, 12, 696. [Google Scholar] [CrossRef] [PubMed]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105. [Google Scholar] [CrossRef]

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Zhang, S.; Sun, F.; Wang, N.; Zhang, C.; Yu, Q.; Zhang, M.; Babyn, P.; Zhong, H. Computer-aided diagnosis (CAD) of pulmonary nodule of thoracic CT image using transfer learning. J. Digit. Imaging 2019, 32, 995–1007. [Google Scholar] [CrossRef]

- Suk, H.I.; Shen, D. Deep learning-based feature representation for AD/MCI classification. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Nagoya, Japan, 22–26 September 2013; pp. 583–590. [Google Scholar]

| Study | Method | # Subjects | System Evaluation |

|---|---|---|---|

| Amato et al. [16,17] | 1. Grey scale thresholding 2. Rolling ball algorithm. | 17 CT patients. | The area under the ROC curve (AUC) of the system was . |

| Hu et al. [13] | 1.

Grey scale thresholding. 2. Dynamic programming. 3. Morphological operations. | eight normal CT patients. | The average intrasubject change was . |

| Itai et al. [22] | 1. Grey scale thresholding. 2. Active contour model. | 9 CT Patients. | Qualitative evaluation only. |

| Silveria et al. [23,24] | 1. Grey scale thresholding. 2. Geometric active contour. 3. Level sets. 4. Expectation-maximization (EM) algorithm. | Stack of chest CT slices. | Qualitative evaluation only. |

| Gao et al. [19] | 1. Grey scale thresholding. 2. Anisotropic diffusion. 3. 3D region growing. 4. Dynamic programming. 5. Rolling ball algorithm. | eight CT scans. | The average overlap coefficient of the system was . |

| Pu et al. [18] | 1. Grey scale thresholding. 2. Geometric border marching. | 20 CT patients. | Average over-segmentation and under-segmentation ratio were and , respectively. |

| Korfiatis et al. [57] | 1. k-means clustering 2. Support vector machine (SVM) | 22 CT patients. | The mean overlap coefficient of the system was higher than . |

| Wang et al. [58] | 1. Gray scale thresholding. 2. 3D gray-level co-occurrence matrix (GLCM) [59,60]. | 76 CT patients. | The mean overlap coefficient of the system was . |

| Van Rikxoort et al. [15] | 1. Region growing. 2. Grey scale thresholding. 3. Dynamic programming. 4. 3D hole filling. 5. Morphological closing. | 100 CT Patients. | The accuracy of the system was . |

| Wei et al. [20] | 1. Histogram analysis and connected-component labeling. 2. Wavelet transform. 3. Otsu’s algorithm. | nine CT patients. | The accuracy range of the system was . |

| Ye et al. [21] | 1. 3D fuzzy adaptive thresholding. 2. Expectation–maximization (EM) algorithm. 3. Antigeometric diffusion. 4. Volumetric shape index map. 5. Gaussian filter. 6. Dot map. 7. Weighted support vector machine (SVM) classification. | 108 CT patients. | The average detection rate of the system was . |

| Sun et al. [27] | 1. Active shape model matching method. 2. Rib cage detection method. 3. Surface finding approach. | 60 CT patients. | The Dice similarity coefficient (DSC) and mean absolute surface distance of the system were and , respectively. |

| Sofka et al. [29] | 1. Shape model. 2. Boundary detection. | 260 CT patients. | The errors in segmenting left and right lung were and , respectively. |

| Hua et al. [30] | Graph-based search algorithm. | 19 pathological lung CT patients. | The sensitivity, specificity, and Hausdorff distance of the system were , , and , respectively. |

| Nakagomi et al. [61] | Min-cut graph algorithm. | 97 CT patients | The sensitivity and Jaccard index of the system were , and , respectively. |

| Mansoor et al. [52] | 1. Fuzzy connectedness segmentation algorithm. 2. Texture-based random forest classification. 3. Region-based and neighboring anatomy guided correction segmentation. | more than 400 CT patients. | The DSC, Hausdorff distance, sensitivity, and specificity of the system were , , , and , respectively. |

| Yan et al. [62] | Convolution neural network (CNN). | 861 CT COVID-19 patients. | The system achieved DSC of and , sensitivity of and , and specificity of and for normal and COVID-19-infected lung, respectively. |

| Fan et al. [63] | 1. COVID-19-infected lung segmentation convolution neural network (Inf-Net). 2. Semi-supervised Inf-Net (Semi-Inf-Net). | 100 CT images. | The DSC (sensitivity, specificity) of Inf-Net and Semi-Inf-Net were (, ) and (, ), respectively. |

| Oulefki et al. [64] | Multi-level entropy-based threshold approach. | 297 CT COVID-19 patients. | The DSC, sensitivity, specificity, and precision of the system were , , , and , respectively. |

| Sharafeldeen et al. [65] | 1. Linear combination of Gaussian. 2. Expectation-maximization (EM) algorithm. 3. Modified k-means clustering approach. 4. 3D MGRF-based morphological constraints. | 32 CT COVID-19 patients. | The Overlap coefficient, DSC, absolute lung volume difference (ALVD), and 95th-percentile bidirectional Hausdorff distance (BHD) were , , , and , respectively. |

| Zhao et al. [66] | 1. Grey scale thresholding. 2. 3D V-Net. 3. Deformation module. | 112 CT patients. | DSC, sensitivity, specificity, and mean surface distance error of the system were , , , and , respectively. |

| Sousa et al. [67] | Hybrid deep learning model, consisted of U-Net [68] and ResNet-34 [69] architectures. | 385 CT patients, collected from five different datasets. | The mean DSC of the system was higher than , and the average Hausdorff distance was less than . |

| Kim et al. [70] | Otsu’s algorithm. | 447 CT patients. | Sensitivity, specificity, accuracy, AUC, and F1-score of the system were , , , , and , respectively. |

| Study | Method | # Subjects | System Evaluation |

|---|---|---|---|

| Brown et al. [97] | 1. Priori model. 2. Region growing. 3. Mathematical morphology. | 31 CT patients. | The accuracy of the system was . |

| Oda et al. [95] | 1. 3D filter by orientation map of gradient vectors. 2. 3D distance transformation. | 33 CT patients. | The accuracy of the system was . |

| Chang et al. [82] | 1. Cylinder filter. 2. Spherical filter. 3. Sphericity test. | eight CT patients. | The detection rate of the system was . |

| Way et al. [78] | 1. k-means clustering. 2. 3D active contour model | 96 CT patients. | Qualitative evaluation only. |

| Kuhnigk et al. [121] | Automatic morphological and partial volume analysis based method. | Low-dose data from 8 clinical metastasis patients. | Results of proposed method outperformed conventional methods both systematic and absolute errors were substantially reduced. Method could successfully account for slice thickness and variations of kernel reconstruction compared to conventional methods. |

| Zhou et al. [124] | 1. Detection: boosted KNN with Euclidean distance measure between the non-parametric density estimates of two regions. 2. Segmentation: analysis of 3-D texture likelihood map of nodule region. | 10 ground Glass Opacity nodules. | All 10 nodules detected with only 1 false positive nodule. |

| Dehmeshki et al. [122] | Adaptive sphericity oriented contrast region growing on the fuzzy connectivity map of the object of interest. | 1. Database 1: 608 pulmonary nodules from 343 scans, 2. Database 2: 207 pulmonary nodules from 80 CT scans. | Visual inspection found that of the segmented nodules were correct, while the other nodules required other segmentation solutions. |

| Tao et al. [123] | A multi-level statistical learning-based approach for segmentation and detection of ground glass nodule. | Database: 1100 subvolumes (100 contains ground glass nodule) acquired from 200 subjects. | Classification accuracy: (overall), and (ground glass nodule). |

| Messay et al. [98] | 1. Thresholding. 2. Morphological operations. 3. Fisher Linear Discriminant (FLD) classifier. | 84 CT patients. | The sensitivity of the system was . |

| Kubota et al. [126] | Region Growing. | 1. LIDC 1: 23 nodule, 2. LIDC 2: 82 nodule, 3. A dataset of 820 nodules with manual diameter measurements. | 1. LIDC 1: average overlap, 2. LIDC 2: average overlap. |

| Liu et al. [128] | 1. Selective enhancement filter [129]. 2. Hidden conditional random field (HCRF) [130]. | 24 CT patients. | The sensitivity of the system was with false positive/scan. |

| Choi et al. [107] | 1. Dot enhancement filter. 2. Angular histograms of surface normals (AHSN). 3. Iterative wall elimination method. 4. Support vector machine (SVM) classifier. | 84 CT patients. | The sensitivity of the system was with false positive/scan. |

| Alilou et al. [108] | 1. Thresholding. 2. Morphological opening. 3. 3D region growing. | 60 CT patients. | The sensitivity of the system was with false positive/scan. |

| Bai et al. [109] | 1. Local shape analysis. 2. Data-driven local contextual feature learning. 3. Principal component analysis (PCA). | 99 CT patients | The number of false positive were reduced by more than . |

| Setio et al. [99] | 1. Thresholding. 2. Morphological operations. 3. Vector supporting machine (VSM) classifier. | 888 CT patients. | The sensitivity of the system was and with an average of 1 and 4 false positive/scan, respectively. |

| Bai et al. [109] | 1. Local shape analysis. 2. Data-driven local contextual feature learning. 3. Principal component analysis (PCA). | 99 CT patients | The number of false positive were reduced by more than . |

| Setio et al. [99] | 1. Thresholding. 2. Morphological operations. 3. Vector supporting machine (VSM) classifier. | 888 CT patients. | The sensitivity of the system was and with an average of 1 and 4 false positive/scan, respectively. |

| Akram et al. [106] | 1. Artificial neural network (ANN). 2. Geometric and intensity-based features. | 84 CT patients. | The accuracy and sensitivity of the system were and , respectively. |

| Golan et al. [111] | Deep convolutional neural network (CNN). | 1018 CT patients | The sensitivity of the system was with 20 false positive/scan. |

| Bergtholdt et al. [112] | 1. Geometric features. 2. Grayscale features. 3. Location features. 4. Support vector machine (SVM) classifier. | 1018 CT patients. | The sensitivity of the system was with false positive/scan. |

| Sudipta Mukhopadhyay [127] | Thresholding approach based on internal texture (solid/part-solid and non-solid), and external attachment (juxta-plural and juxta-vascular). | 891 nodules from (LIDC/IDRI). | Average segmentation accuracy: (for soild/part-solid), (for non-solid). |

| El-Regaily et al. [110] | 1. Canny edge detector. 2. Thresholding. 3. Region growing. 4. Rule-based classifier. | 400 CT patients. | The accuracy, sensitivity, and specificity of the system were , , and , respectively with an average of false positive/scan. |

| Zhang et al. [113] | Deep believe network (DBN). | 1018 CT patients. | The accuracy of system was . |

| Wang et al. [100] | Semi-supervised extreme learning machines (SS-ELM) | 1018 CT patients. | The accuracy of the system was . |

| Zhao et al. [131] | 1. 3D U-Net [132]. 2. Generative adversarial network (GAN) [133]. | 800 CT scans. | Qualitative evaluation only. |

| Charbonnier et al. [125] | Subsolid nodule segmentation using voxel classification that eliminated blood vessels. | 170 subsolid nodules from the Multicentric Italian Lung Disease trial. | of segmented vessels, and of segmented solid core were accepted observers. |

| Luo et al. [134] | 3D sphere center-points matching detection network (SCPM-Net). | 888 CT scans. | The sensitivity of the system was . |

| Yin et al. [135] | Squeeze and attention, and dense atrous spatial pyramid pooling U-Net (SD-U-Net). | 2236 CT slices. | The Dice similarity coefficient (DSC), sensitivity, specificity, and accuracy of the system were , , , and , respectively. |

| Bianconi et al. [120] | 1. 12 conventional semi-automated methods (Active contours (MorphACWE, MprphGAC), cluserting (K-means, SlIC), graph-based (Felzenszwalb), region-growing (flood fill), thresholding (Kapur, Kittler, Otsu, MultiOtsu, others (MSER, Watershed)), and 2. 12 deep learning semi-automated methods (12 CNNS designed using 4 standard segmentation models (FPN, LinkNet, PSPNet, U-Net) and 3 well-known encoders (InceptionV3, MobileNet, ResNet34)). | 1. Dataset 1: 383 images from a cohort of 111 patients. 2. Dataset 2: 259 images from a cohort of 100. | Semi-automated deep learning methods outperformed the conventional methods. DSCs of the deep learning based methods recorded and for dataset 1, and dataset 2 respectively. Conventional methods recorded DSCs of and . |

| Study | Method | # Subjects | System Evaluation |

|---|---|---|---|

| Dehmeshki et al. [148] | Shape-based region growing. | 3D lung CT data where nodules are attached to blood vessels or lung wall. | Qualitative evaluation only. |

| Lee et al. [169] | Commercial CAD system (IQQA-Chest, EDDA Technology, Princeton Junction, NJ, USA). | 200 chest radiographs (100 normal, 100 with malignant solitary nodules. | Sensitivity of , false positive rate of . |

| Kuruvilla et al. [161] | Feed forward and feed forward back propagation neural networks. | 155 patients from LIDC | Classification accuracy of . |

| Yamamoto et al. [165] | Random forest. | 172 patients with NSCLC. | Sensitivity of , specificity of , accuracy of in independent testing. |

| Orozco et al. [147] | 1. Wavelet feature descriptor, 2. SVM. | 45 CT scans from ELCAP and LIDC. | Total preciseness in classifying cancerous from non-cancerous nodules was ; sensitivity of , and specificity of . |

| Kumar et al. [149] | Deep Features using autoencoder. | 4323 nodules from NCI-LIDC dataset. | overall accuracy, sensitivity, and false positive of 0.39/patient (10-fold cross validation). |

| Hua et al. [175] | 1. A deep belief network (DBN), 2. CNN. | LIDC | Sensitivity (DBN: , CNN: ), Specificity (DBN: , CNN: ). |

| Kang et al. [171] | 3D multi-view CNN (MV-CNN). | LIDC-IDRI | Error rate of for binary classification (benign and malignant) and for ternary classification(benign, primary malignant and metastatic malignant). |

| Ciompi et al. [173] | Multi-stream multi-scale convolutional networks. | 1. Italian MILD screening trial, 2. Danish DLCST screening trial. | Best accuracy of . |

| Song et al. [176] | 1. CNN, 2. Deep neural network (DNN), 3. Stacked autoencoder (SAE). | LIDC-IDRI | Accuracy of , sensitivity of , and specificity of . |

| Tajbakhsh et al. [138] | 1. Massive training artificial neural networks (MTANN), 2. CNN. | LDCT acquired from 31 patients. | AUC = ( confidence interval (CI): ). |

| Li et al. [145] | Support vector machine (SVM). | 248 GGNs. | Accuracy of classifying GGNs into atypical adenomatous hyperplasia (AAH), adenocarcinoma in situ (AIS), minimally invasive adenocarcinoma (MIA), and invasive adenocarcinoma (IA) was . Accuracy of classification between AIS and MIA nodules is , and between indolent versus invasive lesions is . |

| Huang et al. [154] | Dense convolutional network (DenseNet). | 1. CIFAR, 2. SVHN, 3. ImageNet. | Error rates for CIFAR (C10: , C10+: , C100: , C100+: ), SVHN (), ImageNet (error rates with single-crop (10-crop) are: top-1 (25.02 (23.61), 23.80 (22.08), 22.58 (21.46), 22.33 (20.85)), top-5 (7.71 (6.66), 6.85 (5.92), 6.34 (5.54), 6.15 (5.30))). |

| Nibali et al. [158] | ResNet | LIDC/IDRI | Sensitivity of , specificity of , precision of , AUC of , and accuracy of . |

| Liu et al. [159] | Multi-view multi-scale CNNs | LIDC-IDRI and ELCAP | Classification rate as . |

| Zhao et al. [152] | A deep learning system based on 3D CNNs and multitask learning | 651 nodules with labels of AAH, AIS, MIA, IA. | Classification accuracy using 3 class weighted average F1 score is: compared to radiologists who achieved , , , and . |

| Li et al. [150] | Multivariable linear predictor model built on semantic features. | 100 patients from NLST-LDCT. | AUC at baseline screening: , at first followup: , and at second followup: . |

| Lyu et al. [172] | Multi-level CNN (ML-CNN). | LIDC, IDRI (1018 cases from 1010 patients) | Accuracy: . |

| Shaffie et al. [174] | 1. Seventh-order Markov Gibbs random field (MGRF) model [178,179,180], 2. Geometric features, 3. Deep autoencoder classifier. | 727 nodules from 467 patients (LIDC). | Classification accuracy of . |

| Causey et al. [177] | Deep learning CNN. | LIDC-IDRI | Accuracy of malignancy classification with AUC of approximately of . |

| Uthoff et al. [156] | k-medoids clustering and information theory. | Training: (74 malignant, 289 benign), Validation (50 malignant, 50 benign). | AUC = , sensitivity and specificity. |

| Ardila et al. [162] | A deep learning CNN. | 6716 National Lung Cancer Screening Trial cases, independent clinical validation set of 1139 cases. | AUC = . |

| Liu et al. [151] | 1. Multivariate logistic regression analysis, 2. Least absolute shrinkage and selection operator (LASSO). | Benign and malignant nodules from 875 patients. | Training: AUC = ; CI: 0.793–0.879) and validation (AUC = ; CI: 0.745–0.872). |

| Gong et al. [136] | A deep learning–based artificial intelligence system for classifying ground-glass nodule(GGN) into invasive adenocarcinoma (IA) or non-invasive IA. | 828 GGNs of 644 patients (209 are IA and 619 non-IA, including 409 adenocarcinomas in situ and 210 minimally invasive adenocarcinomas). | AUC = . |

| Sim et al. [137] | Radiologists assisted by deep learning–based CNN. | 600 lung cancer–containing chest radiographs and 200 normal chest radiographs. | Average sensitivity improved from to , and number of false positives per radiograph declined from to . |

| Wang et al. [153] | A two-stage deep learning strategy: prior-feature learning followed by adaptive-boost deep learning. | 1357 nodules (765 noninvasive (AAH and AIS) and 592 invasive nodules (MIA and IA)). | Classification accuracy of compared to specialists who achieved , , and . AUC= . |

| Xia et al. [155] | 1. Recurrent residual CNN based on U-Net, 2. Information fusion method. | 373 GGNs from 323 patients. | AUC= , accuracy: . |

| Li et al. [163] | CLR software based on 3D CNN with DenseNet architecture as a backbone. | 486 consecutive resected lung lesions(320 adenocarcinomas, 40 other malignancies, 55 metastases, and 71 benign lesions). | Classification accuracy for adenocarcinomas, other malignancies, metastases, and benign lesions was , , , and , respectively. |

| Hu et al. [139] | 1. 3D U-NET, 2. Deep neural network. | 513 GGNs (100 benign, 413 malignant). | Accuracy of , F1 score of , weighted average F1 score of , and Matthews correlation coefficient of . |

| Farahat et al. [181] | 1. Three MGRF energies, extracted from three different grades of COVID-19 patients, 2. Artificial neural network. | 76 CT COVID-19 patients. | accuracy, and Cohen kappa. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fahmy, D.; Kandil, H.; Khelifi, A.; Yaghi, M.; Ghazal, M.; Sharafeldeen, A.; Mahmoud, A.; El-Baz, A. How AI Can Help in the Diagnostic Dilemma of Pulmonary Nodules. Cancers 2022, 14, 1840. https://doi.org/10.3390/cancers14071840

Fahmy D, Kandil H, Khelifi A, Yaghi M, Ghazal M, Sharafeldeen A, Mahmoud A, El-Baz A. How AI Can Help in the Diagnostic Dilemma of Pulmonary Nodules. Cancers. 2022; 14(7):1840. https://doi.org/10.3390/cancers14071840

Chicago/Turabian StyleFahmy, Dalia, Heba Kandil, Adel Khelifi, Maha Yaghi, Mohammed Ghazal, Ahmed Sharafeldeen, Ali Mahmoud, and Ayman El-Baz. 2022. "How AI Can Help in the Diagnostic Dilemma of Pulmonary Nodules" Cancers 14, no. 7: 1840. https://doi.org/10.3390/cancers14071840

APA StyleFahmy, D., Kandil, H., Khelifi, A., Yaghi, M., Ghazal, M., Sharafeldeen, A., Mahmoud, A., & El-Baz, A. (2022). How AI Can Help in the Diagnostic Dilemma of Pulmonary Nodules. Cancers, 14(7), 1840. https://doi.org/10.3390/cancers14071840