Design Strategies of 40 nm Split-Gate NOR Flash Memory Device for Low-Power Compute-in-Memory Applications

Abstract

1. Introduction

2. Design Methodology

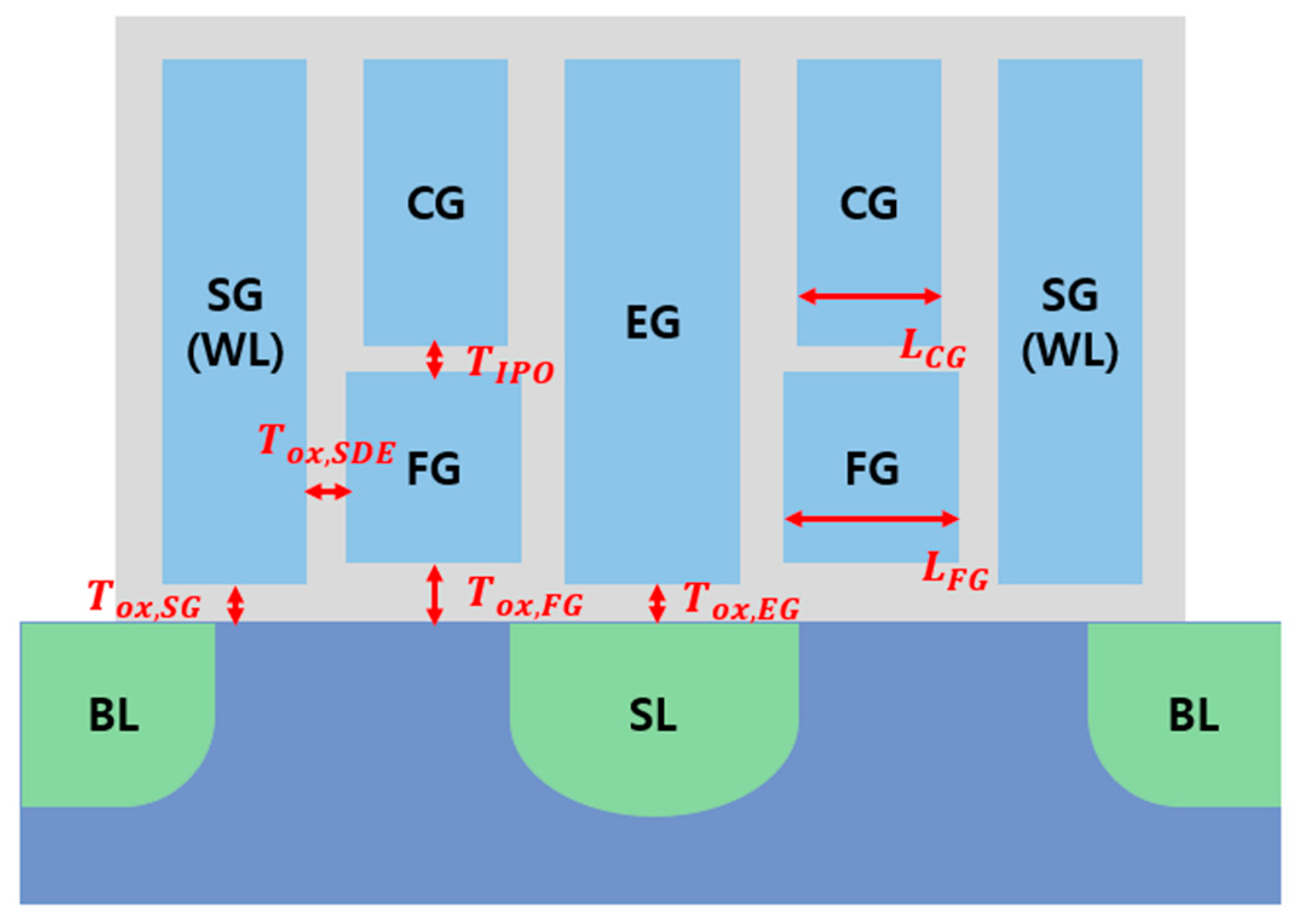

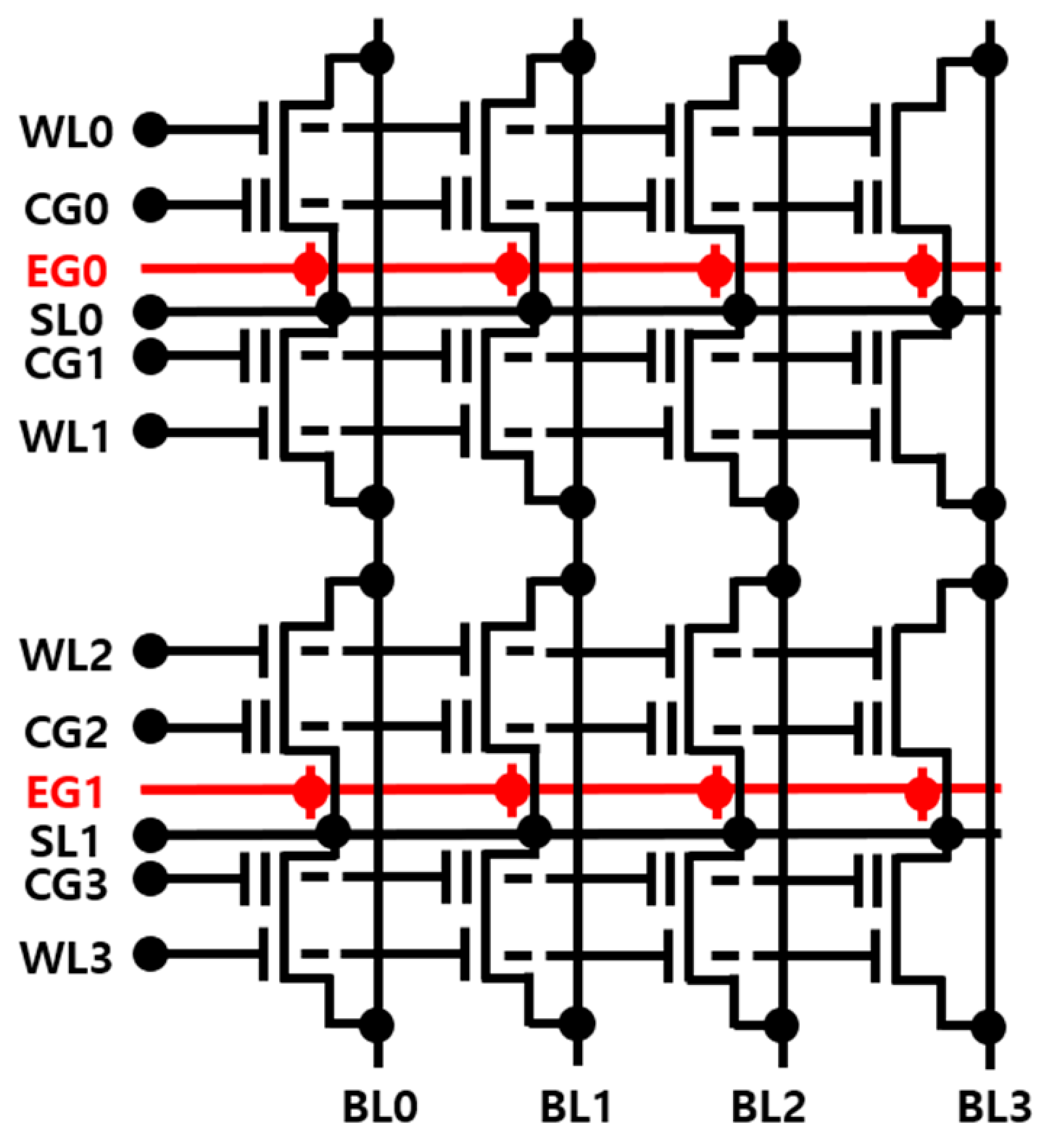

2.1. Cell Structure

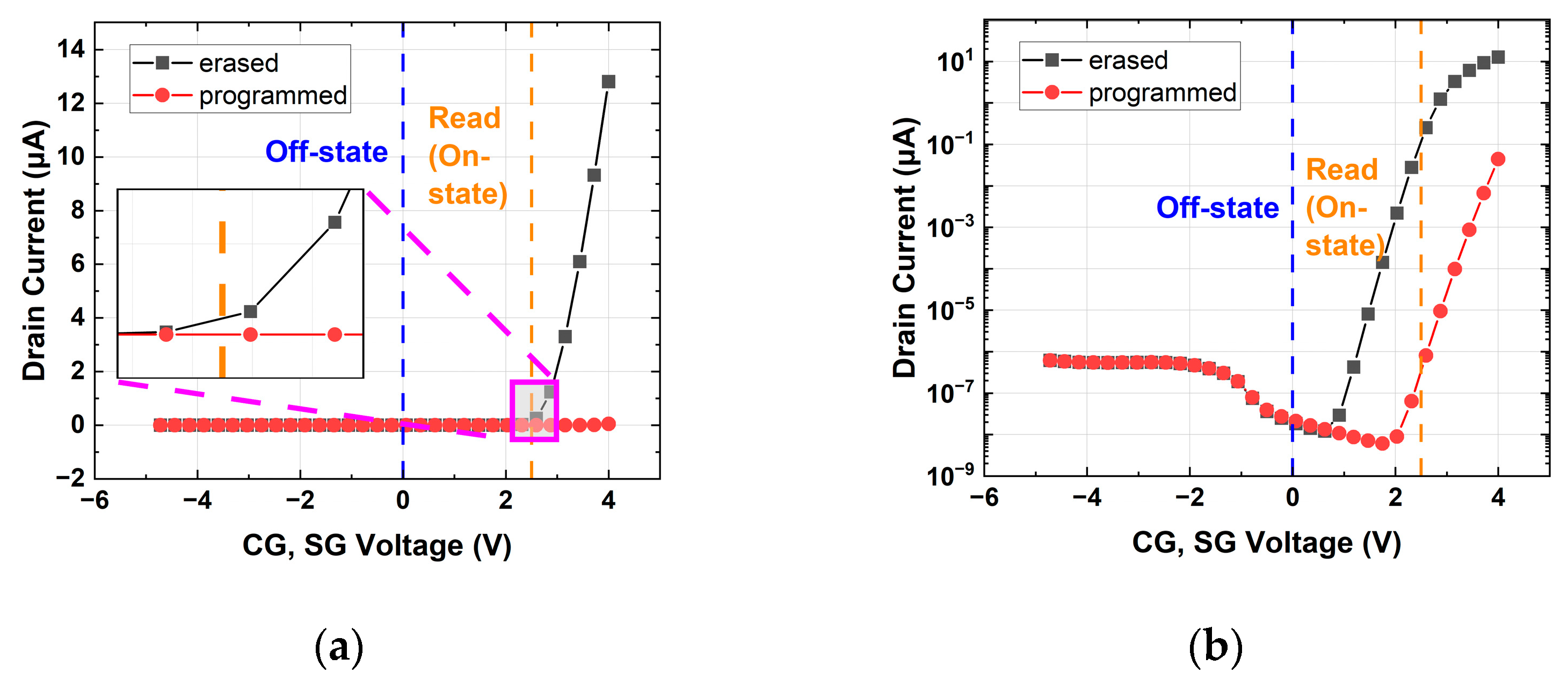

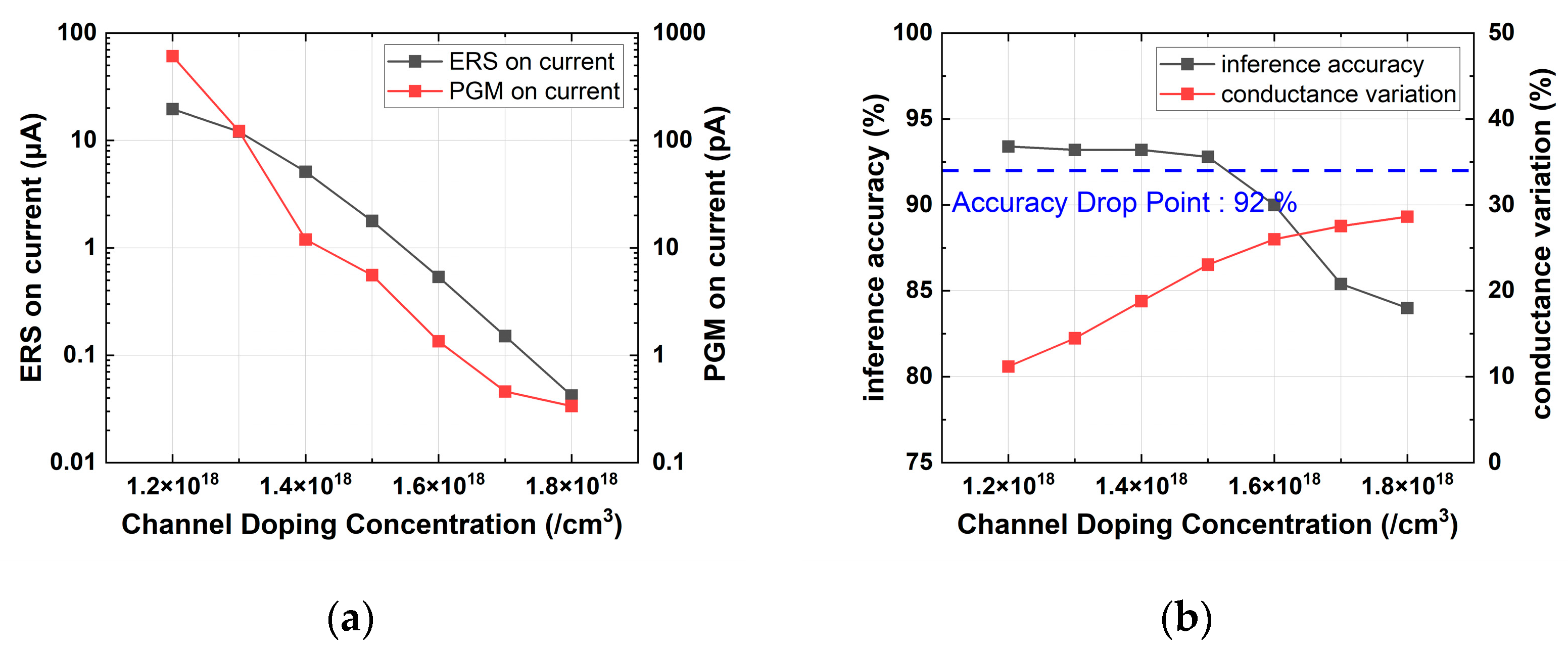

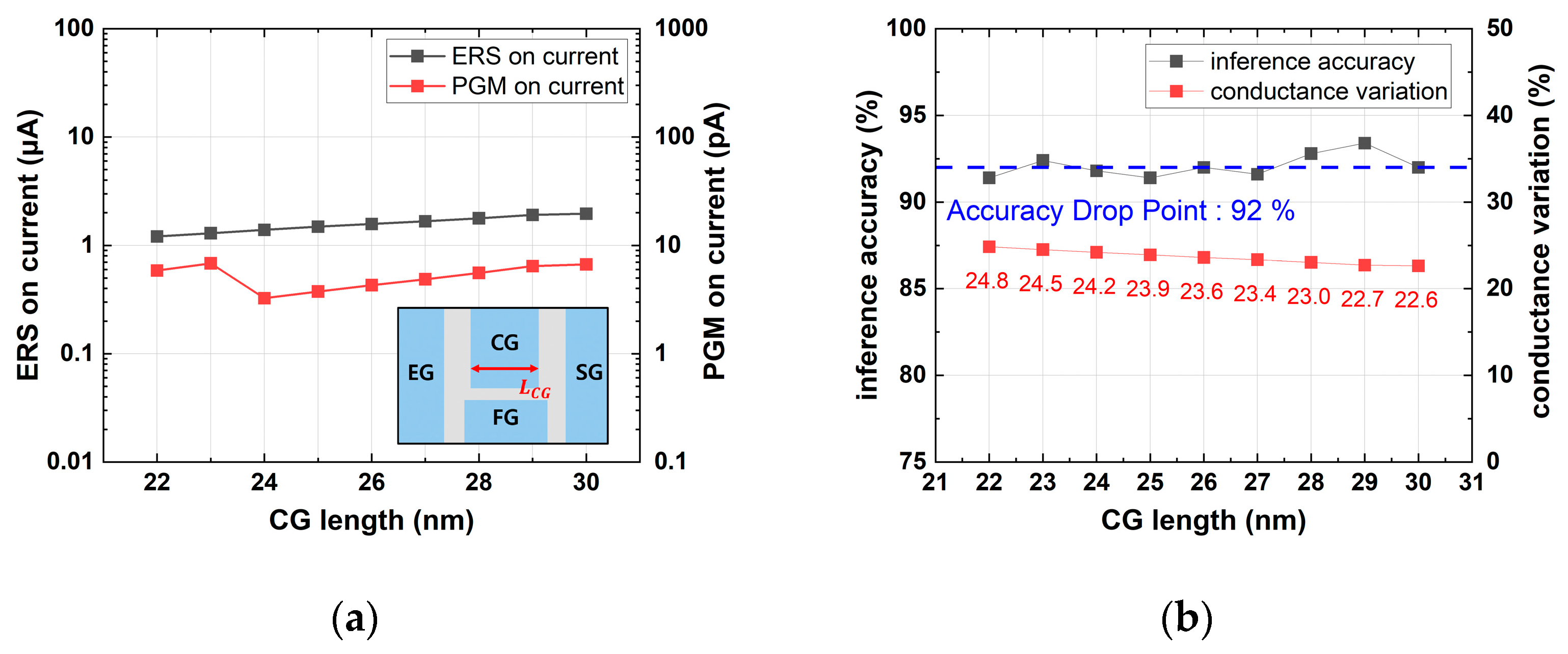

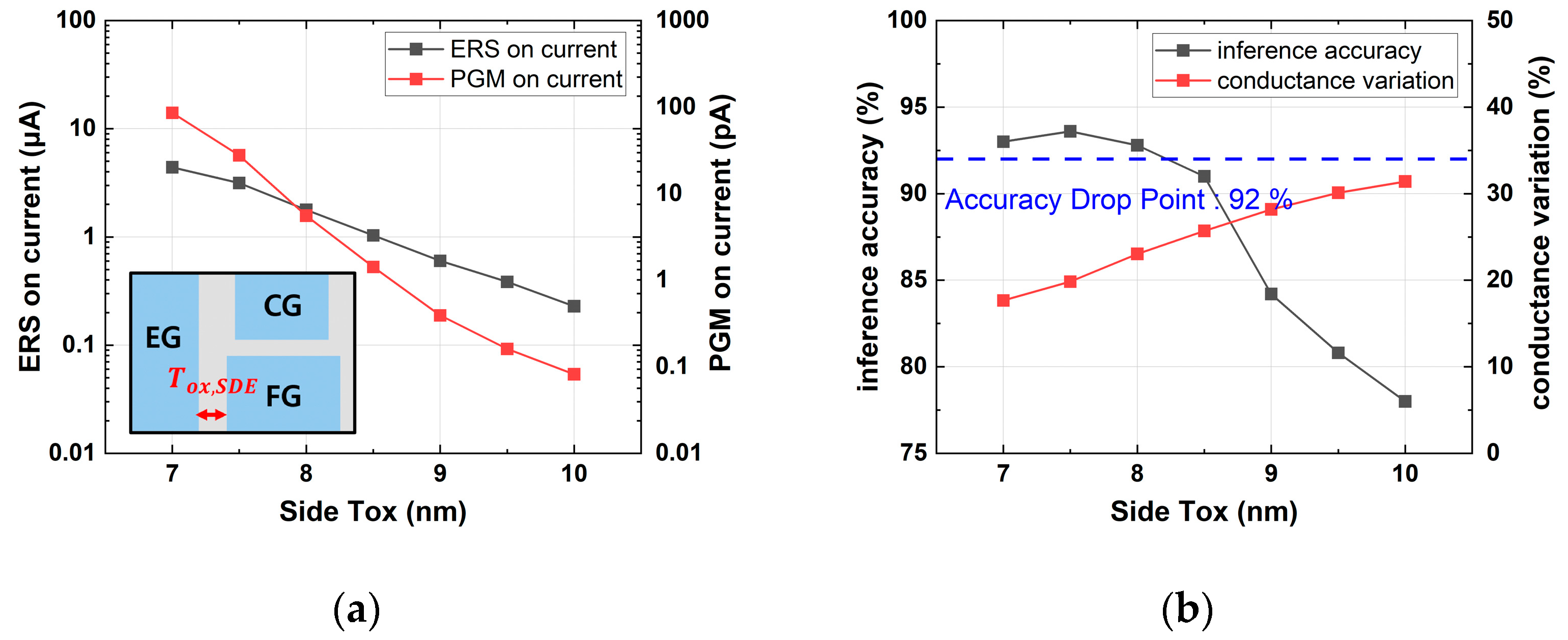

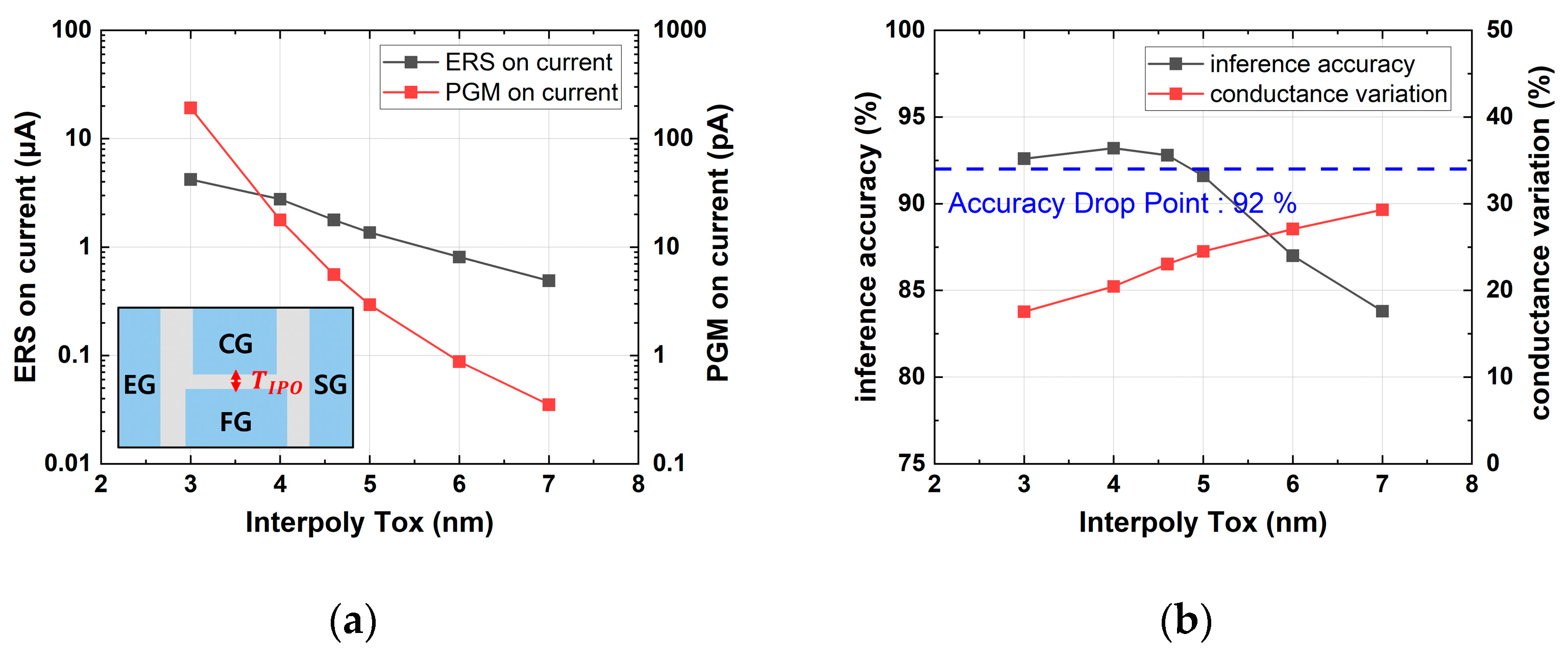

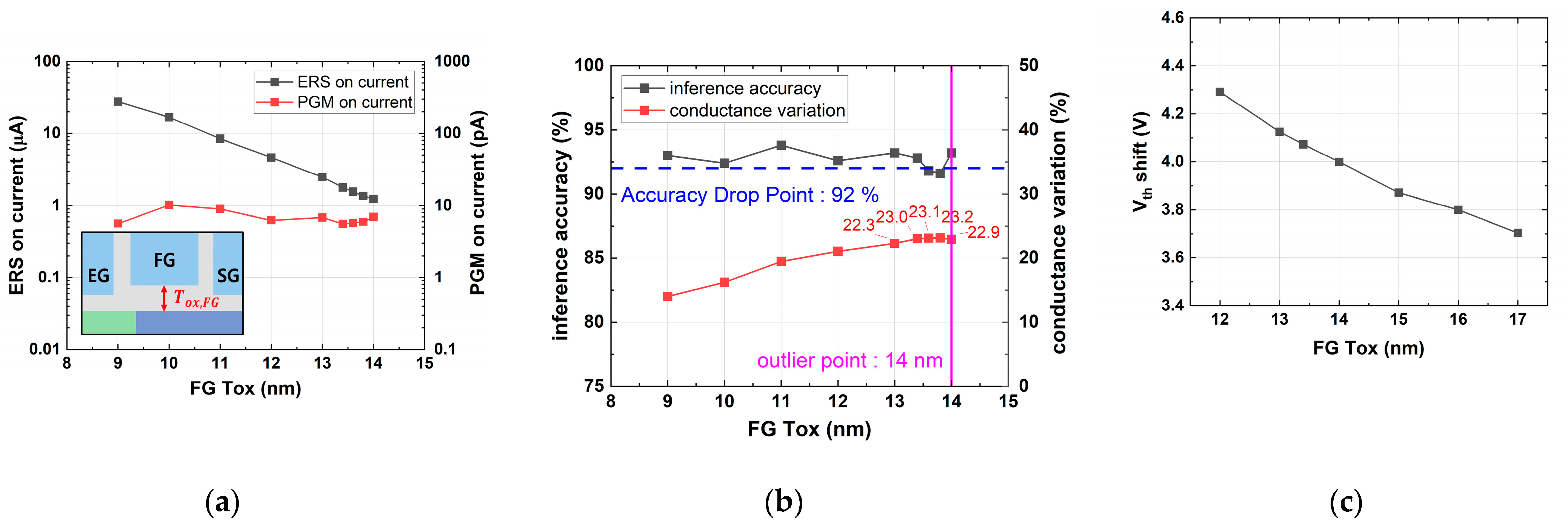

2.2. Cell Optimization

2.3. Design Constraints

3. Experimental Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Electrode | Erase (V) | Program (V) | Read (V) |
|---|---|---|---|
| WL (SG) | 0 | 1.2 | |
| BL (drain) | 0 | 0.3 | 0.8 |
| CG | 0 | 10 | |
| EG | 11.75 | 6.5 | 0 |
| SL (source) | 0 | 6.5 | 0 |
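As a reading aid for the bias table, the sketch below stores it as a lookup structure and computes voltage differences between electrodes. It is a minimal illustration under stated assumptions, not the authors' simulation setup: the names `BIAS_V` and `bias_difference` are hypothetical, and the Read cells that are blank in the table are stored as `None` rather than guessed. The script only does arithmetic on the tabulated values and does not model any device physics.

```python
from typing import Dict, Optional

# Operating biases (V) transcribed from the table above.
# None marks a Read cell that is blank in the source table (value not given).
BIAS_V: Dict[str, Dict[str, Optional[float]]] = {
    "WL": {"erase": 0.0, "program": 1.2, "read": None},
    "BL": {"erase": 0.0, "program": 0.3, "read": 0.8},
    "CG": {"erase": 0.0, "program": 10.0, "read": None},
    "EG": {"erase": 11.75, "program": 6.5, "read": 0.0},
    "SL": {"erase": 0.0, "program": 6.5, "read": 0.0},
}


def bias_difference(op: str, a: str, b: str) -> Optional[float]:
    """Return V(a) - V(b) in volts for operation `op` ("erase", "program", "read").

    Returns None when either bias is not specified in the table.
    """
    va = BIAS_V[a][op]
    vb = BIAS_V[b][op]
    if va is None or vb is None:
        return None
    # Round to suppress any floating-point noise from the subtraction.
    return round(va - vb, 3)


if __name__ == "__main__":
    print("program, SL - BL:", bias_difference("program", "SL", "BL"))
    print("read, BL - SL:", bias_difference("read", "BL", "SL"))
    print("erase, EG - CG:", bias_difference("erase", "EG", "CG"))
```

For example, during programming the SL sits 6.2 V above the BL, the read condition applies 0.8 V between BL and SL, and the 11.75 V erase bias appears only on the EG.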

| Parameter | Charge Density (C) |
|---|---|
| Erased state | −1 × 10⁻¹⁶ |
| Programmed state | −1 × 10⁻¹⁵ |
| Amount of charge variation | ±2 × 10⁻¹⁷ |
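The charge values are easier to interpret as electron counts. The sketch below assumes the tabulated values are net stored charges in coulombs (negative meaning excess electrons) and simply divides by the elementary charge; the names `electron_count` and `relative_variation` are hypothetical helpers, not part of the source.

```python
# Exact SI value of the elementary charge, in coulombs.
ELEMENTARY_CHARGE_C = 1.602176634e-19

# Values transcribed from the table above (coulombs).
STATE_CHARGE_C = {"erased": -1e-16, "programmed": -1e-15}
CHARGE_VARIATION_C = 2e-17  # tabulated as +/- this amount


def electron_count(charge_c: float) -> float:
    """Number of elementary charges corresponding to |charge_c|."""
    return abs(charge_c) / ELEMENTARY_CHARGE_C


def relative_variation(state: str) -> float:
    """Charge variation as a fraction of the stored charge in `state`."""
    return CHARGE_VARIATION_C / abs(STATE_CHARGE_C[state])


if __name__ == "__main__":
    for state, q in STATE_CHARGE_C.items():
        print(f"{state}: ~{electron_count(q):.0f} electrons, "
              f"variation {relative_variation(state):.0%} of stored charge")
    window = STATE_CHARGE_C["programmed"] - STATE_CHARGE_C["erased"]
    print(f"state separation: ~{electron_count(window):.0f} electrons")
    print(f"variation: ~{electron_count(CHARGE_VARIATION_C):.0f} electrons")
```

With these numbers the erased and programmed states correspond to roughly 624 and 6,242 electrons, and the ±2 × 10⁻¹⁷ C variation to about 125 electrons, which is 20% of the erased-state charge but only 2% of the programmed-state charge.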

| NeuroSim Simulation Option | Value |
|---|---|
| Dataset | CIFAR-10 |
| Network | VGG-8 |
| Input precision (bit) | 8 |
| Weight precision (bit) | 8 |
| Activation precision (bit) | 8 |
| Memory array size | 256 × 256 |
| ADC precision (bit) | 5 |
| Bits per cell | 1 |
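The option table implies some simple mapping arithmetic. The sketch below works it out under explicit assumptions: weights are split across adjacent columns at the listed bits per cell, inputs are applied bit-serially (one input bit per read cycle), and all 256 rows of a column are summed at once. These are assumptions about the mapping, not a reproduction of NeuroSim's internal code, and the class name `CimConfig` is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CimConfig:
    """Hypothetical container for the NeuroSim options listed above."""
    input_bits: int = 8
    weight_bits: int = 8
    rows: int = 256
    cols: int = 256
    adc_bits: int = 5
    bits_per_cell: int = 1

    def cells_per_weight(self) -> int:
        # A weight is split across ceil(weight_bits / bits_per_cell) cells.
        return math.ceil(self.weight_bits / self.bits_per_cell)

    def weights_per_row(self) -> int:
        # Number of complete weights that fit across one array row.
        return self.cols // self.cells_per_weight()

    def input_cycles(self) -> int:
        # Assumed bit-serial input: one input bit is applied per read cycle.
        return self.input_bits

    def adc_levels(self) -> int:
        return 2 ** self.adc_bits

    def exact_psum_bits(self) -> int:
        # Bits needed to resolve a column sum exactly when all rows are read at
        # once; each row contributes 0..(2**bits_per_cell - 1) per input bit.
        max_sum = self.rows * (2 ** self.bits_per_cell - 1)
        return math.ceil(math.log2(max_sum + 1))


if __name__ == "__main__":
    cfg = CimConfig()
    print("cells per weight:", cfg.cells_per_weight())
    print("weights per row:", cfg.weights_per_row())
    print("read cycles per input vector:", cfg.input_cycles())
    print("ADC levels:", cfg.adc_levels())
    print("bits for exact column sum:", cfg.exact_psum_bits())
```

Under these assumptions each 8-bit weight occupies 8 one-bit cells, so a 256-column row holds 32 weights, and a fully activated column can produce 257 distinct sums (9 bits), more than the 32 levels of a 5-bit ADC; partial sums are therefore quantized. How this plays out in practice depends on the actual NeuroSim configuration, such as how many rows are read in parallel.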

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yook, C.-G.; Kim, J.N.; Kim, Y.; Shim, W. Design Strategies of 40 nm Split-Gate NOR Flash Memory Device for Low-Power Compute-in-Memory Applications. Micromachines 2023, 14, 1753. https://doi.org/10.3390/mi14091753
