Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Test Site

2.2. UAV System and Flight Missions

2.3. Ground Truthing

2.4. Image Pre-Processing

2.5. Photogrammetric Processing

2.6. Extraction of Image Variables

2.7. Statistical Analysis

3. Results

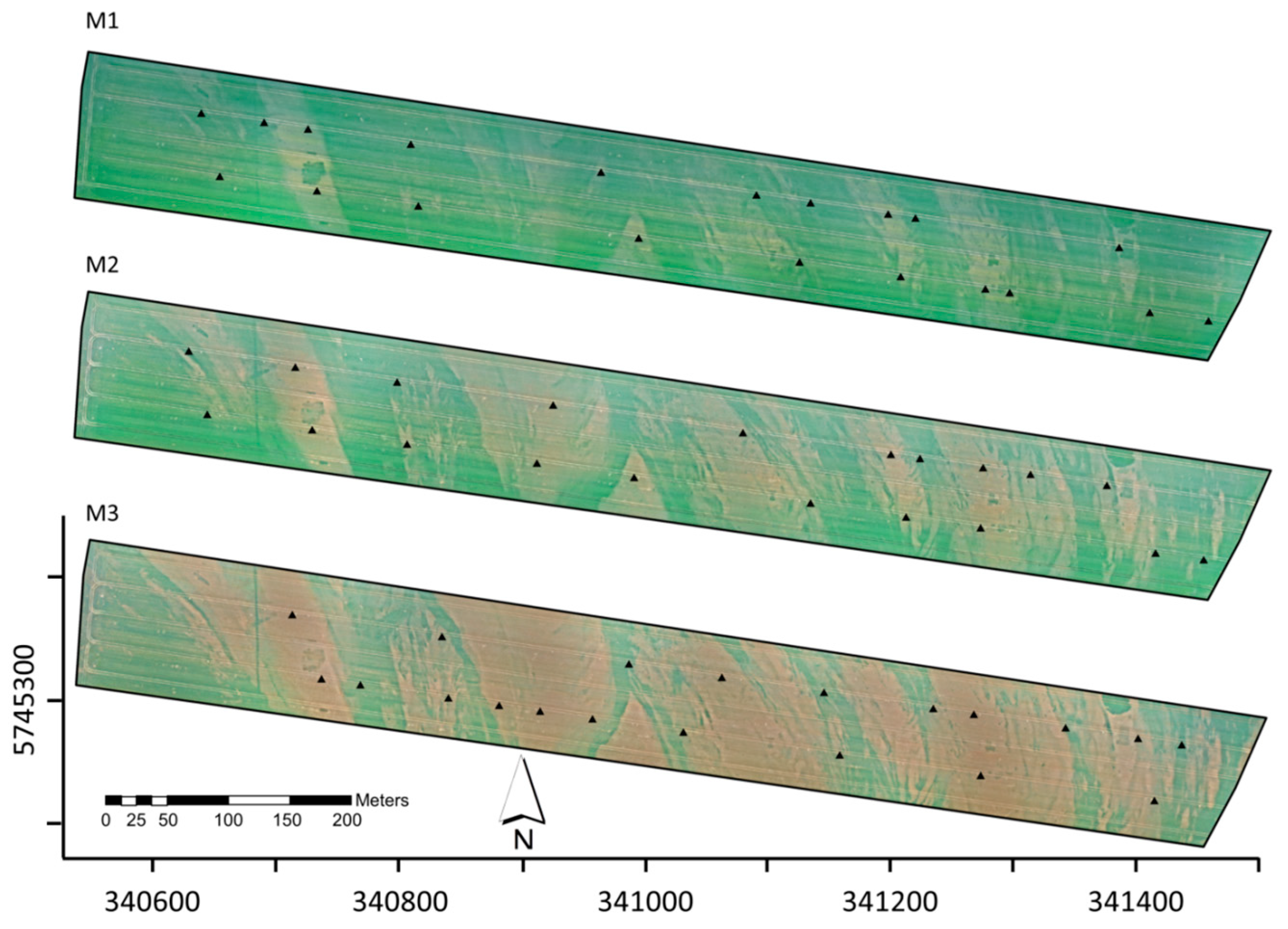

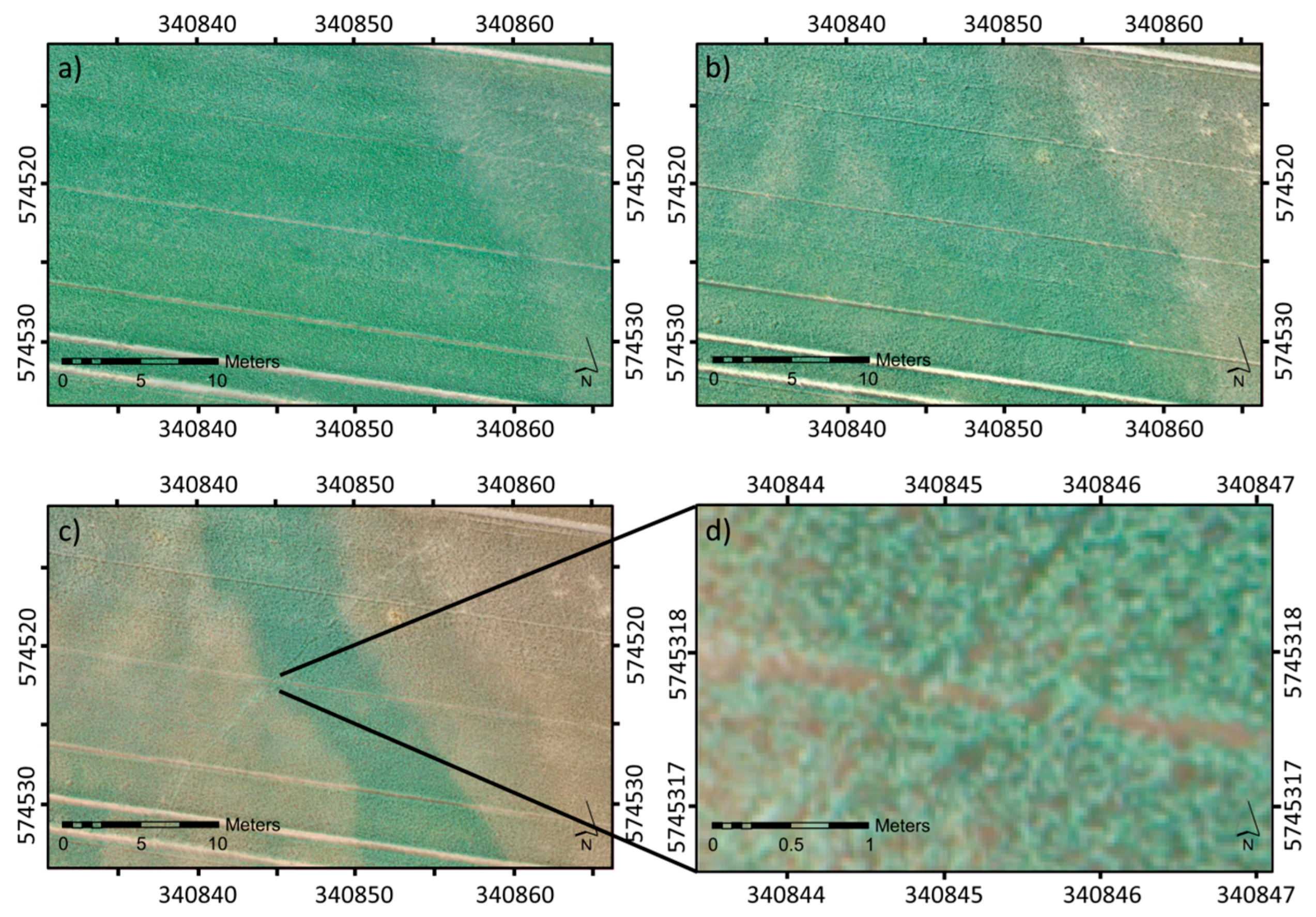

3.1. Qualitative Assessment of the Acquired UAV Imagery

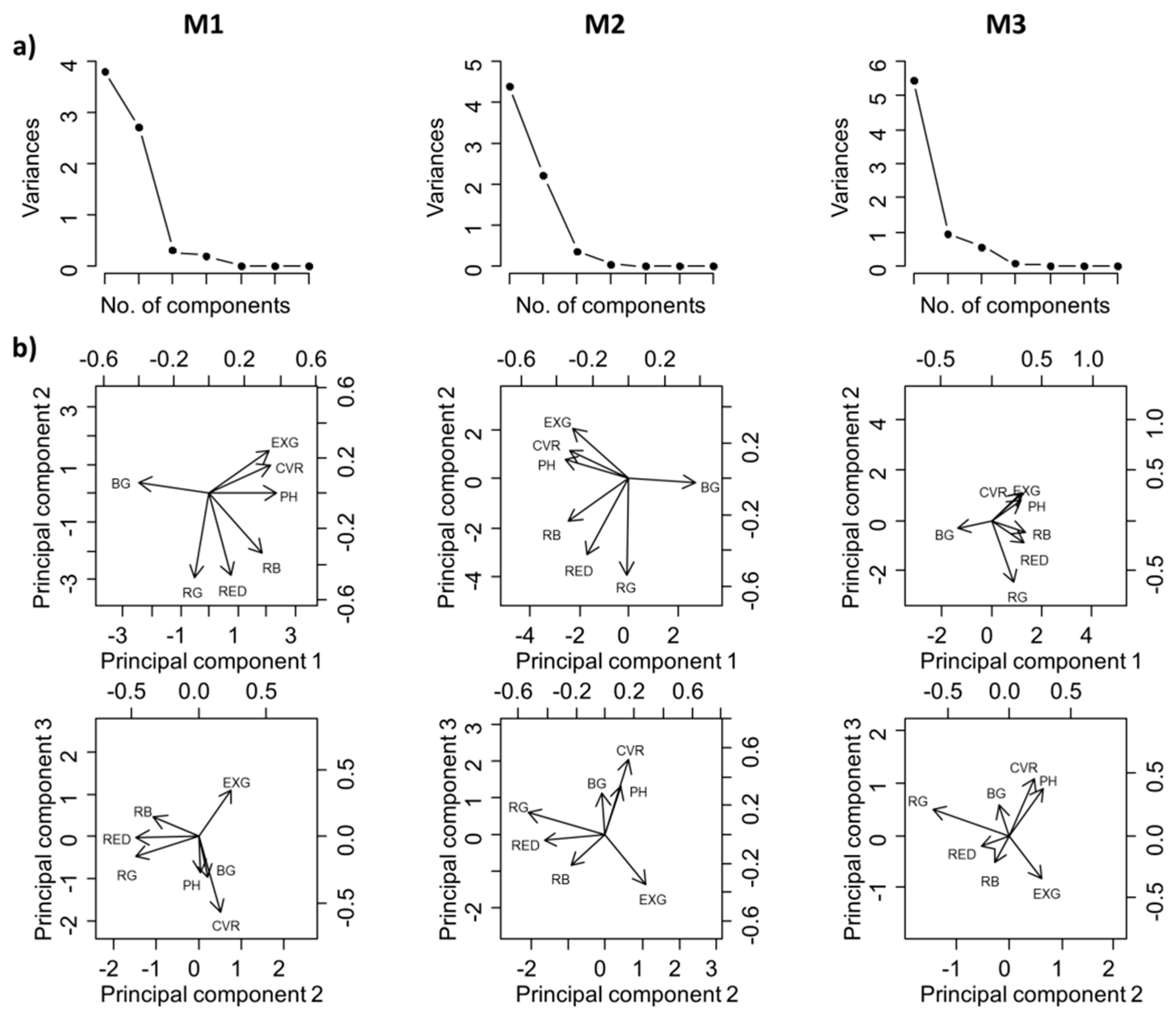

3.2. Relationship between Agronomic Parameters and Image Variables

3.3. Modeling the Biophysical Wheat Parameters

3.4. Modeling the Nitrogen Status

4. Discussion

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

References


| Mission Objective | Processed Item | ID | Date | Growth Stage | Cloud Cover [okta] | Wind Speed [m·s⁻¹] |
|---|---|---|---|---|---|---|
| Crop canopy | Orthoimage/Surface model | M1 | 05-18-2015 | BBCH 41–47 (Booting) | 8 | 2–3 |
| Crop canopy | Orthoimage/Surface model | M2 | 06-04-2015 | BBCH 61–71 (Flowering) | 5 | 1–2 |
| Crop canopy | Orthoimage/Surface model | M3 | 06-16-2015 | BBCH 73–83 (Grain filling) | 7 | 4 |
| Ground model | Surface model | M4 | 07-31-2015 | After tillage | 8 | 4 |

| Variable | Abbreviation | Unit | Min | Mean | Max | Standard Deviation | Median |
|---|---|---|---|---|---|---|---|
| Mission 1 | | | | | | | |
| Fresh biomass | FBM | kg·m⁻² | 1.64 | 3.07 | 4.72 | 0.959 | 2.66 |
| Dry biomass | DBM | kg·m⁻² | 0.63 | 0.87 | 1.07 | 0.128 | 0.88 |
| Leaf area index | LAI | m²·m⁻² | 1.77 | 3.08 | 4.81 | 0.956 | 2.59 |
| Nitrogen | Nt | % | 1.44 | 1.95 | 2.75 | 0.298 | 1.94 |
| Plant height | PHT | m | 0.44 | 0.58 | 0.70 | 0.076 | 0.58 |
| Mission 2 | | | | | | | |
| Fresh biomass | FBM | kg·m⁻² | 1.56 | 2.88 | 5.02 | 1.099 | 2.43 |
| Dry biomass | DBM | kg·m⁻² | 0.66 | 1.02 | 1.46 | 0.051 | 0.96 |
| Leaf area index | LAI | m²·m⁻² | 2.15 | 3.66 | 6.29 | 1.140 | 3.52 |
| Nitrogen | Nt | % | 1.47 | 1.77 | 2.06 | 0.205 | 1.78 |
| Plant height | PHT | m | 0.45 | 0.61 | 0.82 | 0.111 | 0.58 |
| Mission 3 | | | | | | | |
| Fresh biomass | FBM | kg·m⁻² | 0.90 | 3.04 | 5.64 | 1.340 | 2.99 |
| Dry biomass | DBM | kg·m⁻² | 0.47 | 1.23 | 1.76 | 0.375 | 1.27 |
| Leaf area index | LAI | m²·m⁻² | 1.07 | 2.77 | 5.42 | 1.069 | 2.57 |
| Nitrogen | Nt | % | 1.02 | 1.36 | 1.70 | 0.192 | 1.36 |
| Plant height | PHT | m | 0.30 | 0.62 | 0.80 | 0.154 | 0.67 |

| Image Variable | Description/Computation |
|---|---|
| CVR | Percentage of crop pixels within the plot area |
| EXG | Excess green index, EXG = 2G − R − B, where R, G and B are the red, green and blue channel values |
| RED | Red channel |
| BG | Ratio of the blue to the green channel (B/G) |
| RG | Ratio of the red to the green channel (R/G) |
| RB | Ratio of the red to the blue channel (R/B) |
| PHTUAV | Plant height from UAV images, computed by subtracting the M4 surface model (ground surface model) from the M1–M3 surface models |
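
The image variables above are defined only at the level of their formulas. A minimal Python sketch of how they could be computed for one plot follows. It assumes plot-clipped RGB pixel tuples, ratios taken between plot-mean channel values, and crop pixels separated from soil by a per-pixel EXG threshold; the threshold value (`exg_threshold`) and all function and class names are hypothetical, since the source does not state how crop pixels were delineated or how pixel values were aggregated per plot.

```python
from dataclasses import dataclass
from statistics import mean

Pixel = tuple[float, float, float]  # (R, G, B) values of one orthoimage pixel


@dataclass
class PlotVariables:
    cvr: float  # percentage of crop pixels in the plot
    exg: float  # excess green, 2G - R - B, from plot-mean channels
    red: float  # plot-mean red channel
    bg: float   # blue / green
    rg: float   # red / green
    rb: float   # red / blue


def image_variables(pixels: list[Pixel], exg_threshold: float = 20.0) -> PlotVariables:
    """Plot-level spectral variables; exg_threshold is a hypothetical crop/soil cut-off."""
    if not pixels:
        raise ValueError("plot contains no pixels")
    r = mean(p[0] for p in pixels)
    g = mean(p[1] for p in pixels)
    b = mean(p[2] for p in pixels)
    # Assumed classification: a pixel counts as crop if its own EXG exceeds the threshold.
    crop = sum(1 for pr, pg, pb in pixels if 2 * pg - pr - pb > exg_threshold)
    return PlotVariables(
        cvr=100.0 * crop / len(pixels),
        exg=2 * g - r - b,
        red=r,
        bg=b / g,
        rg=r / g,
        rb=r / b,
    )


def plant_height_uav(canopy_surface: list[float], ground_surface: list[float]) -> float:
    """Mean per-cell difference between a canopy surface model (M1-M3) and the
    ground surface model (M4); both grids are assumed co-registered and flattened."""
    if len(canopy_surface) != len(ground_surface) or not canopy_surface:
        raise ValueError("surface models must be non-empty and cover the same cells")
    return mean(c - g for c, g in zip(canopy_surface, ground_surface))
```

Because EXG is linear in the channels, the EXG of the plot means equals the plot mean of per-pixel EXG; the band ratios do not share this property, so whether ratios are formed before or after averaging is a genuine design choice.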

| Mission | Variable | FBM | DBM | LAI | PHT | Nt | CVR | EXG | RED | BG | RG | RB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| M1 | DBM | 0.87 | | | | | | | | | | |
| | LAI | 0.98 | 0.82 | | | | | | | | | |
| | PHT | 0.94 | 0.84 | 0.90 | | | | | | | | |
| | Nt | 0.44 ns | 0.23 ns | 0.45 | 0.27 ns | | | | | | | |
| | CVR | 0.71 | 0.63 | 0.69 | 0.77 | −0.01 ns | | | | | | |
| | EXG | 0.69 | 0.52 | 0.73 | 0.73 | −0.05 ns | 0.83 | | | | | |
| | RED | 0.54 | 0.56 | 0.51 | 0.41 ns | 0.53 | −0.02 ns | −0.16 ns | | | | |
| | BG | −0.95 | −0.82 | −0.97 | −0.93 | −0.26 ns | −0.78 | −0.82 | −0.42 ns | | | |
| | RG | 0.02 ns | 0.11 ns | −0.01 ns | −0.09 ns | 0.43 ns | −0.47 | −0.68 | 0.82 | 0.14 ns | | |
| | RB | 0.88 | 0.80 | 0.87 | 0.78 | 0.52 | 0.43 ns | 0.35 ns | 0.85 | −0.82 | 0.44 ns | |
| | PHTUAV | 0.83 | 0.68 | 0.83 | 0.88 | 0.17 ns | 0.76 | 0.74 | 0.26 ns | −0.85 | −0.18 ns | 0.65 |
| M2 | DBM | 0.97 | | | | | | | | | | |
| | LAI | 0.94 | 0.93 | | | | | | | | | |
| | PHT | 0.94 | 0.90 | 0.90 | | | | | | | | |
| | Nt | −0.06 ns | −0.04 ns | −0.16 ns | −0.19 ns | | | | | | | |
| | CVR | 0.81 | 0.78 | 0.82 | 0.86 | −0.53 | | | | | | |
| | EXG | 0.74 | 0.65 | 0.75 | 0.81 | −0.48 | 0.89 | | | | | |
| | RED | 0.78 | 0.82 | 0.70 | 0.71 | 0.29 ns | 0.47 | 0.26 ns | | | | |
| | BG | −0.92 | −0.86 | −0.89 | −0.95 | 0.30 ns | −0.92 | −0.93 | −0.59 | | | |
| | RG | 0.08 ns | 0.20 ns | 0.01 ns | −0.04 ns | 0.68 | −0.31 ns | −0.56 | 0.63 | 0.23 ns | | |
| | RB | 0.97 | 0.95 | 0.91 | 0.93 | 0.05 ns | 0.75 | 0.70 | 0.86 | −0.90 | 0.19 ns | |
| | PHTUAV | 0.86 | 0.81 | 0.84 | 0.92 | −0.38 ns | 0.94 | 0.88 | 0.55 | −0.94 | −0.24 ns | 0.82 |
| M3 | DBM | 0.98 | | | | | | | | | | |
| | LAI | 0.96 | 0.92 | | | | | | | | | |
| | PHT | 0.92 | 0.94 | 0.85 | | | | | | | | |
| | Nt | −0.29 ns | −0.32 ns | −0.19 ns | −0.49 | | | | | | | |
| | CVR | 0.88 | 0.92 | 0.77 | 0.96 | −0.50 | | | | | | |
| | EXG | 0.94 | 0.90 | 0.94 | 0.83 | −0.35 ns | 0.80 | | | | | |
| | RED | 0.87 | 0.80 | 0.85 | 0.74 | −0.03 ns | 0.71 | 0.78 | | | | |
| | BG | −0.97 | −0.92 | −0.95 | −0.86 | 0.28 ns | −0.84 | −0.98 | −0.88 | | | |
| | RG | 0.41 ns | 0.36 ns | 0.38 ns | 0.32 ns | 0.31 ns | 0.31 ns | 0.19 ns | 0.74 | −0.39 ns | | |
| | RB | 0.94 | 0.87 | 0.94 | 0.80 | −0.14 ns | 0.75 | 0.90 | 0.96 | −0.96 | 0.58 | |
| | PHTUAV | 0.92 | 0.95 | 0.85 | 0.98 | −0.56 | 0.94 | 0.84 | 0.68 | −0.85 | 0.23 ns | 0.77 |

ns: not significant.
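
The correlation table flags non-significant coefficients with "ns" but does not name the test. A common choice, assumed here, is Pearson's r with a two-sided t-test, t = r·√((n − 2)/(1 − r²)) on ν = n − 2 degrees of freedom, at α = 0.05. The sketch below uses only the Python standard library and obtains the p-value from the regularized incomplete beta function, p = I_{ν/(ν+t²)}(ν/2, 1/2), evaluated by a continued fraction. All function names are illustrative.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation coefficient of two equally long samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length with n >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction of the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betai(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    bt = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                  + a * math.log(x) + b * math.log1p(-x))
    # Use the form of the continued fraction that converges quickly for this x.
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def t_test_p_value(t: float, df: int) -> float:
    """Two-sided p-value of Student's t with df degrees of freedom."""
    return _betai(df / 2.0, 0.5, df / (df + t * t))


def correlation_test(x: list[float], y: list[float], alpha: float = 0.05) -> tuple[float, float, bool]:
    """Return (r, p, significant) for a two-sided test of H0: rho = 0."""
    r = pearson_r(x, y)
    n = len(x)
    if abs(r) >= 1.0:
        return r, 0.0, True  # perfect linear relation; t is unbounded
    t = r * math.sqrt((n - 2) / (1.0 - r * r))
    p = t_test_p_value(t, n - 2)
    return r, p, p < alpha
```

Under this convention a coefficient would carry "ns" whenever `significant` is False. The number of plots n that enters the test is not given in this section, so the threshold |r| at which coefficients become significant cannot be recovered from the table alone.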

| Variable | Mission | R² | Significance | PC | R²val (n = 10) | RMSE (n = 10) | ME (n = 10) |
|---|---|---|---|---|---|---|---|
| FBM | M1 | 0.92 | *** | 1***, 2*** | 0.93 | 0.46 | −0.38 |
| FBM | M2 | 0.93 | *** | 1***, 2***, 3 | 0.87 | 0.53 | −0.33 |
| FBM | M3 | 0.97 | *** | 1***, 2** | 0.99 | 0.48 | −0.40 |
| DBM | M1 | 0.70 | *** | 1***, 2 | 0.73 | 0.10 | −0.06 |
| DBM | M2 | 0.89 | *** | 1***, 2*** | 0.83 | 0.14 | −0.08 |
| DBM | M3 | 0.94 | *** | 1***, 2**, 3** | 0.94 | 0.21 | −0.15 |
| LAI | M1 | 0.94 | *** | 1***, 2***, 3* | 0.96 | 0.46 | −0.41 |
| LAI | M2 | 0.83 | *** | 1***, 2 | 0.90 | 0.48 | −0.15 |
| LAI | M3 | 0.90 | *** | 1***, 3* | 0.93 | 0.48 | −0.36 |
| PHT | M1 | 0.87 | *** | 1*** | 0.87 | 0.06 | −0.05 |
| PHT | M2 | 0.93 | *** | 1***, 2 | 0.90 | 0.06 | −0.05 |
| PHT | M3 | 0.96 | *** | 1***, 2***, 3*** | 0.88 | 0.15 | −0.11 |
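
The validation columns (R²val, RMSE and ME on n = 10 plots) are not defined in this section. The sketch below assumes that R²val is the squared Pearson correlation between observed and predicted values and that ME is the mean of predicted minus observed; both definitions and the function name are assumptions, and the model-fitting step itself is not reproduced.

```python
import math


def validation_metrics(observed: list[float], predicted: list[float]) -> tuple[float, float, float]:
    """Return (R2val, RMSE, ME) for an independent validation set.

    Assumed definitions: R2val = squared Pearson r of observed vs. predicted,
    ME = mean(predicted - observed), RMSE = sqrt(mean((predicted - observed)^2)).
    """
    n = len(observed)
    if n != len(predicted) or n < 2:
        raise ValueError("need equally long observed/predicted lists with n >= 2")
    errors = [p - o for o, p in zip(observed, predicted)]
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    me = sum(errors) / n
    mo, mp = sum(observed) / n, sum(predicted) / n
    sop = sum((o - mo) * (p - mp) for o, p in zip(observed, predicted))
    soo = sum((o - mo) ** 2 for o in observed)
    spp = sum((p - mp) ** 2 for p in predicted)
    # R2val is undefined when either series is constant.
    r2 = sop * sop / (soo * spp) if soo > 0 and spp > 0 else math.nan
    return r2, rmse, me
```

With a constant offset between predictions and observations, this R²val stays at 1 while RMSE and ME expose the bias. Under the assumed definitions, that could explain rows such as FBM in M3, where an R²val of 0.99 coexists with an ME of −0.40.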

| Variable | Mission | R² | Significance | PC | R²val (n = 10) | RMSE (n = 10) | ME (n = 10) |
|---|---|---|---|---|---|---|---|
| Nt | M1 | 0.22 | * | 2* | 0.43 | 0.20 | −0.13 |
| Nt | M2 | 0.65 | *** | 1*, 2***, 3** | 0.64 | 0.14 | −0.08 |
| Nt | M3 | 0.40 | ** | 2**, 3* | 0.24 | 0.17 | 0.05 |

| Variable | Mission | R²val (n = 10) | RMSE (n = 10) | ME (n = 10) |
|---|---|---|---|---|
| NNI | M1 | 0.73 | 0.11 | −0.09 |
| NNI | M2 | 0.58 | 0.11 | −0.08 |
| NNI | M3 | 0.37 | 0.10 | −0.03 |
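
The nitrogen nutrition index relates the measured plant N concentration to the critical N concentration, i.e. the minimum concentration needed for maximal growth at the current biomass: NNI = Nt/Nc, so values below 1 indicate N deficiency. This section does not state which critical curve was used. The sketch assumes the winter wheat dilution curve of Justes et al. (1994), Nc = 5.35·W^−0.442 for W ≥ 1.55 t·ha⁻¹ and Nc = 4.4 % below that, with W the shoot dry biomass; DBM from the descriptive table (kg·m⁻²) is converted to t·ha⁻¹ by a factor of 10. The function names are hypothetical.

```python
# Assumed critical N dilution curve for winter wheat (Justes et al., 1994):
#   Nc = 5.35 * W ** -0.442 (% N) for W >= 1.55 t DM/ha, and Nc = 4.4 % below that.
# Whether the study used exactly this curve is not stated in this section.


def critical_n_percent(dbm_kg_m2: float) -> float:
    """Critical N concentration (%) for a given dry biomass in kg/m^2."""
    w_t_ha = dbm_kg_m2 * 10.0  # 1 kg/m^2 = 10 t/ha
    if w_t_ha < 1.55:
        return 4.4
    return 5.35 * w_t_ha ** -0.442


def nitrogen_nutrition_index(nt_percent: float, dbm_kg_m2: float) -> float:
    """NNI = measured N concentration / critical N concentration."""
    return nt_percent / critical_n_percent(dbm_kg_m2)
```

For illustration only, the Mission 1 plot means (Nt = 1.95 %, DBM = 0.87 kg·m⁻²) give Nc ≈ 2.06 % and NNI ≈ 0.95 with this sketch; an NNI computed from means is not a value reported by the study.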

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K.-H. Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery. Remote Sens. 2016, 8, 706. https://doi.org/10.3390/rs8090706