Object-Based Canopy Gap Segmentation and Classification: Quantifying the Pros and Cons of Integrating Optical and LiDAR Data

Abstract

1. Introduction

2. Methodology

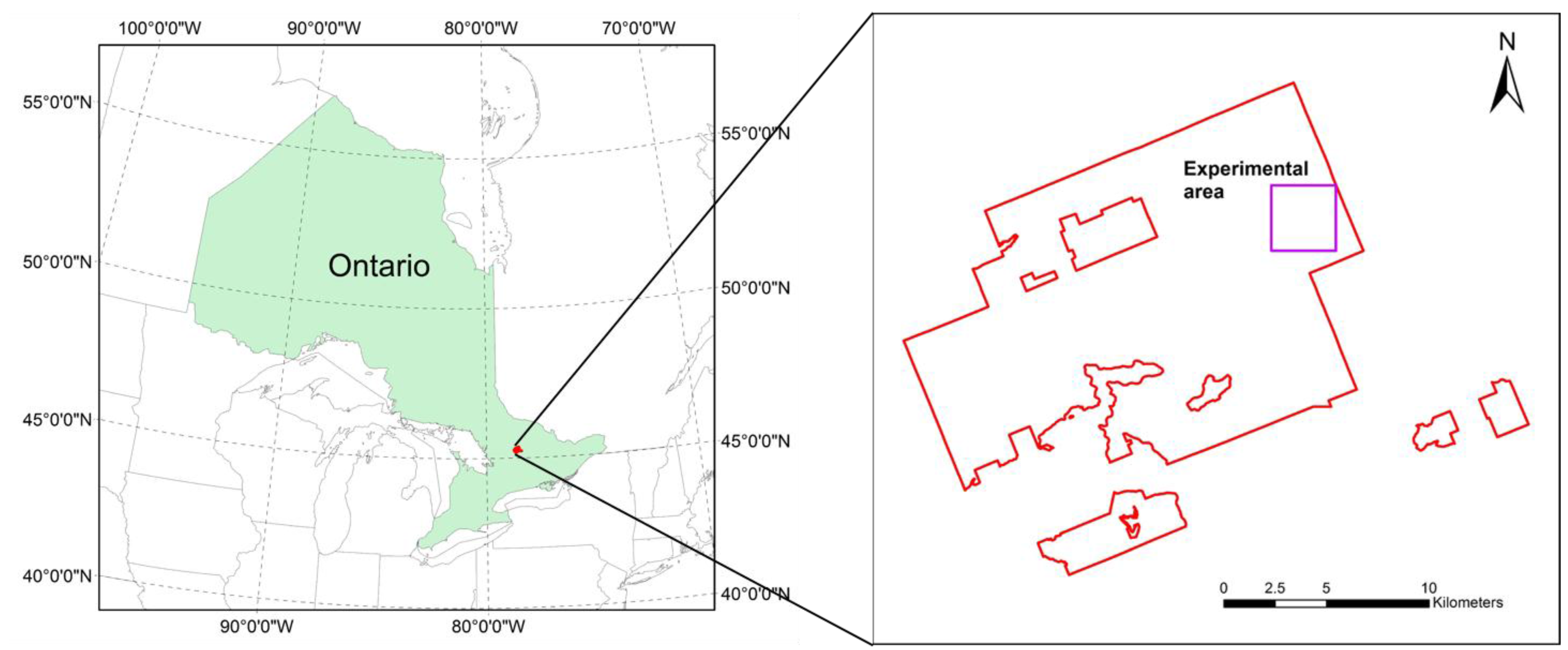

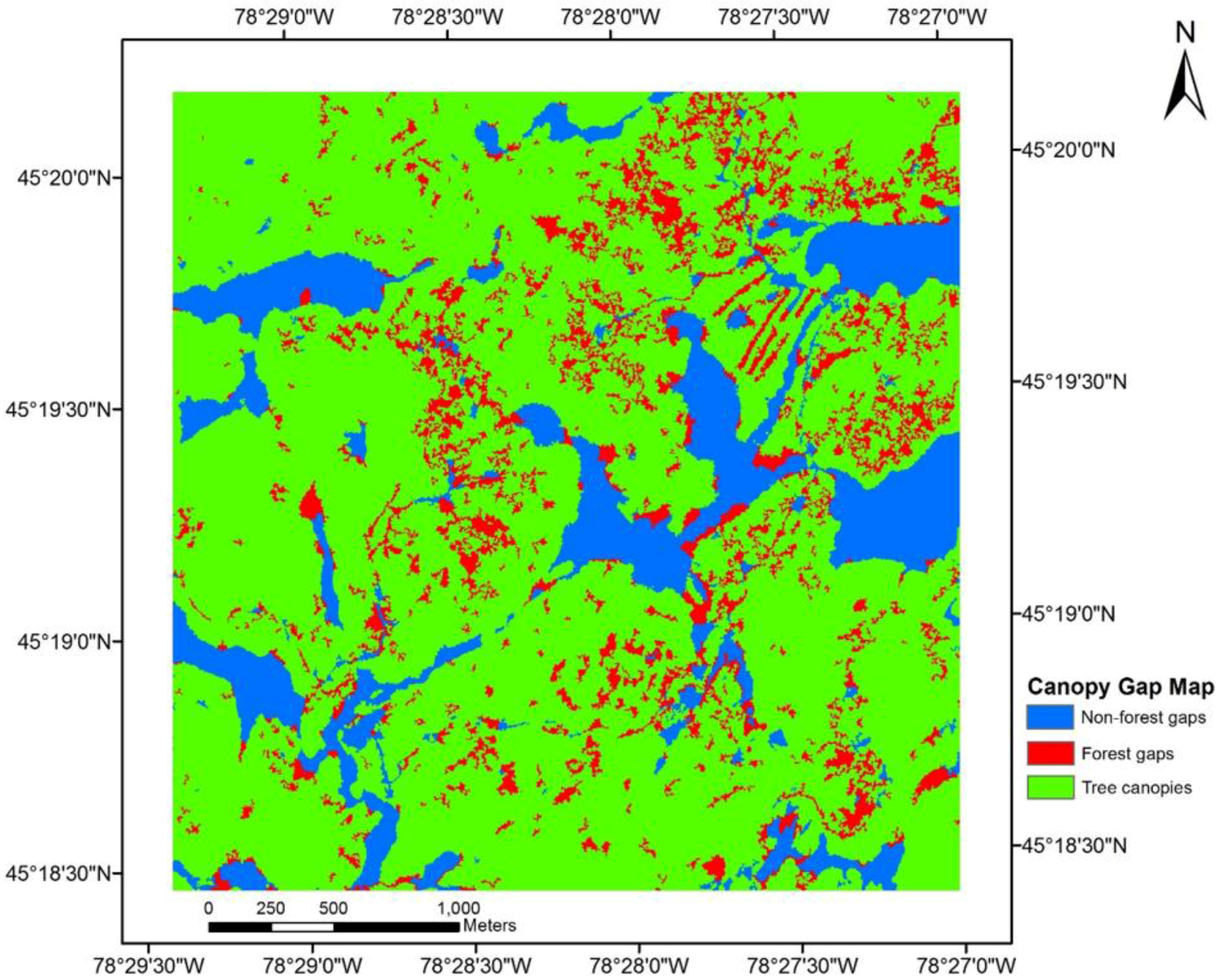

2.1. Study Area and Experimental Site Selection

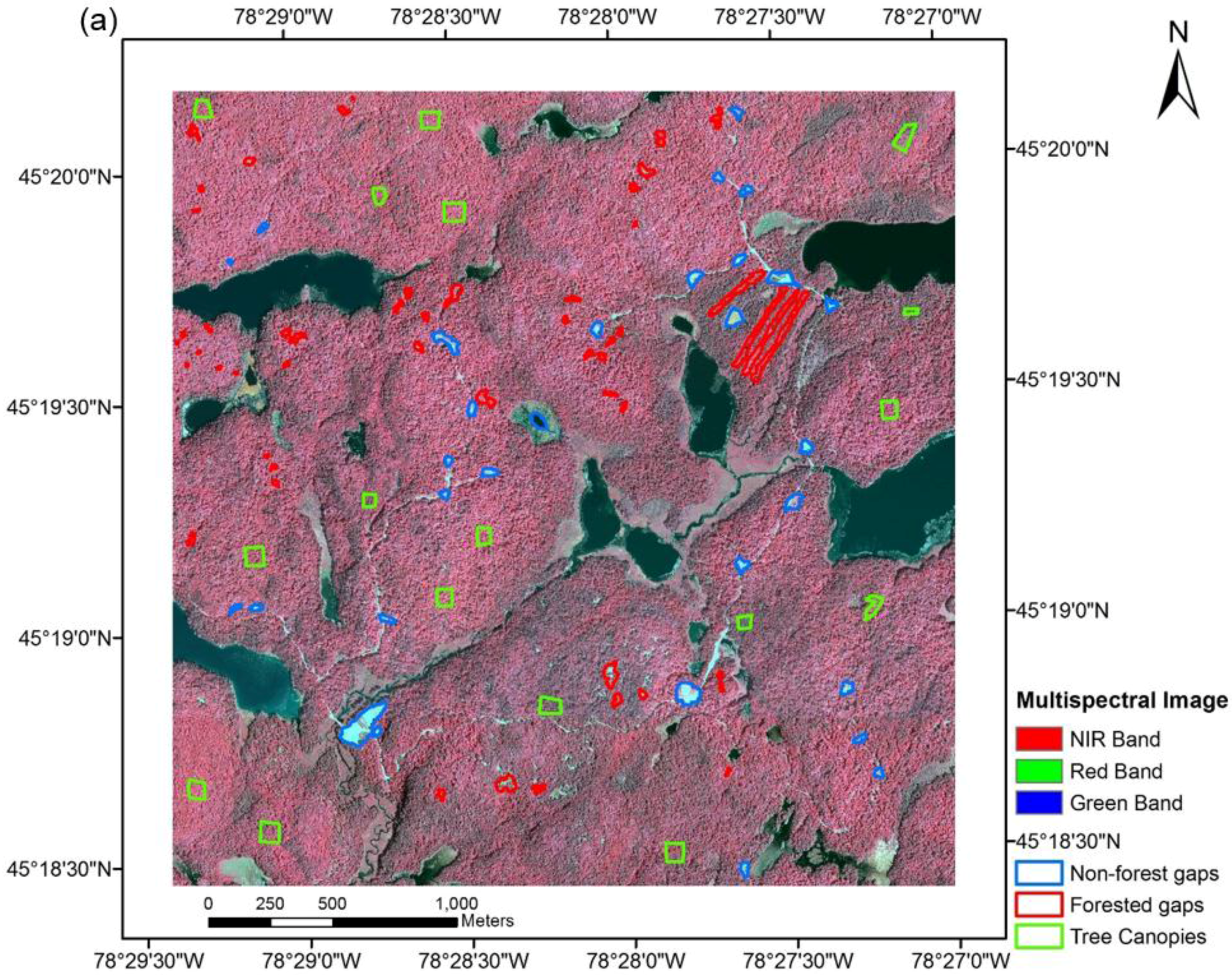

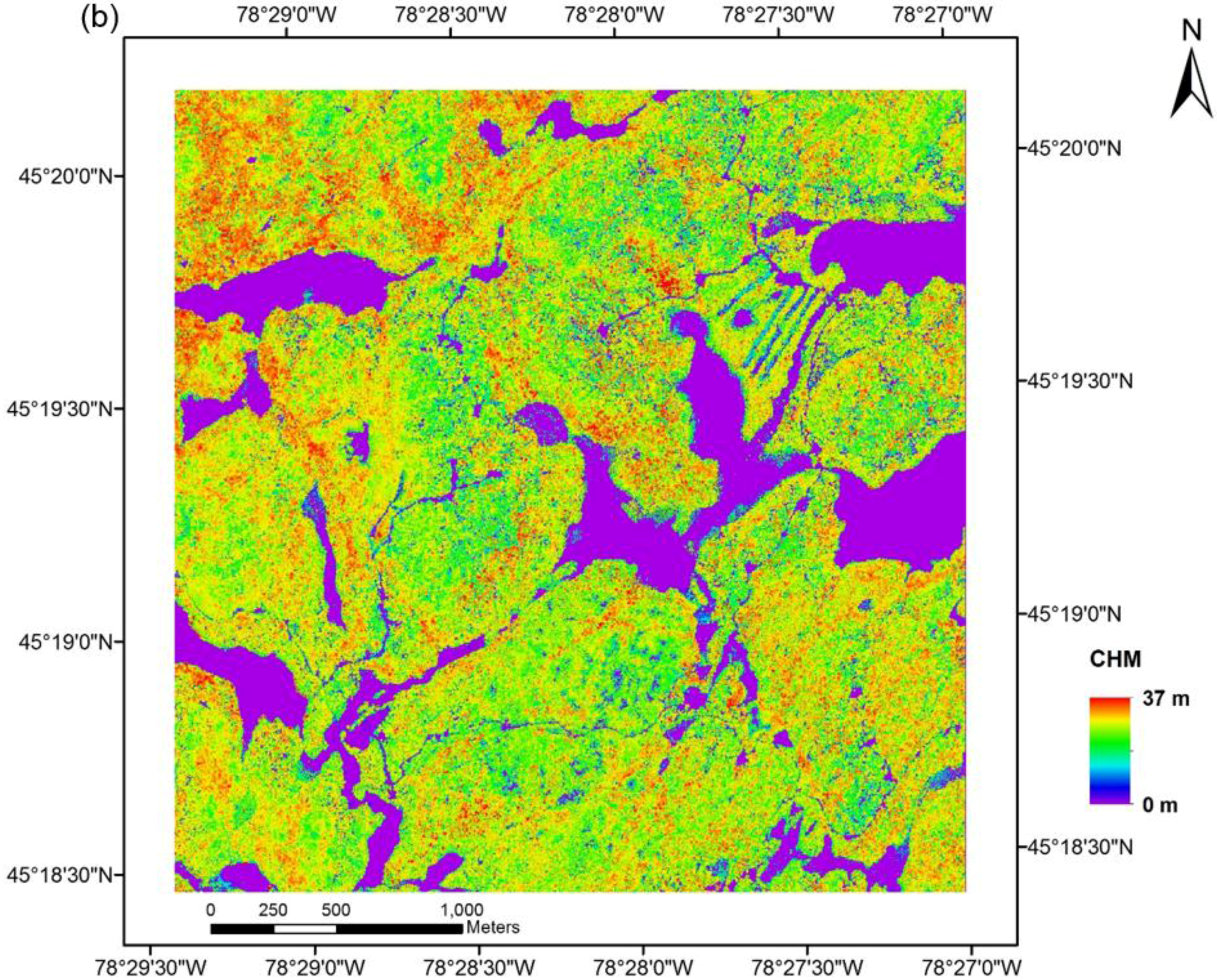

2.2. Multi-Source Remote Sensing Data

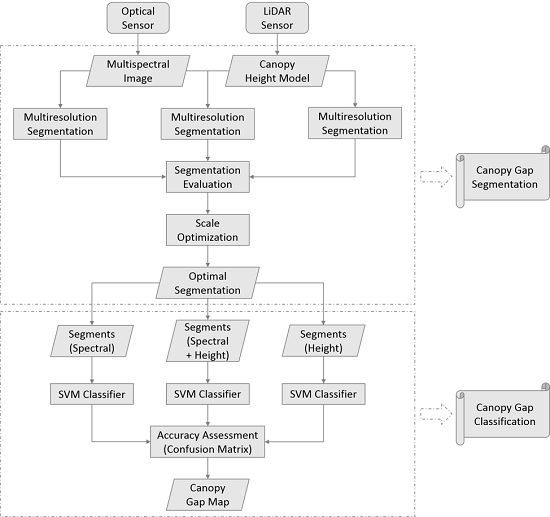

2.3. Methods

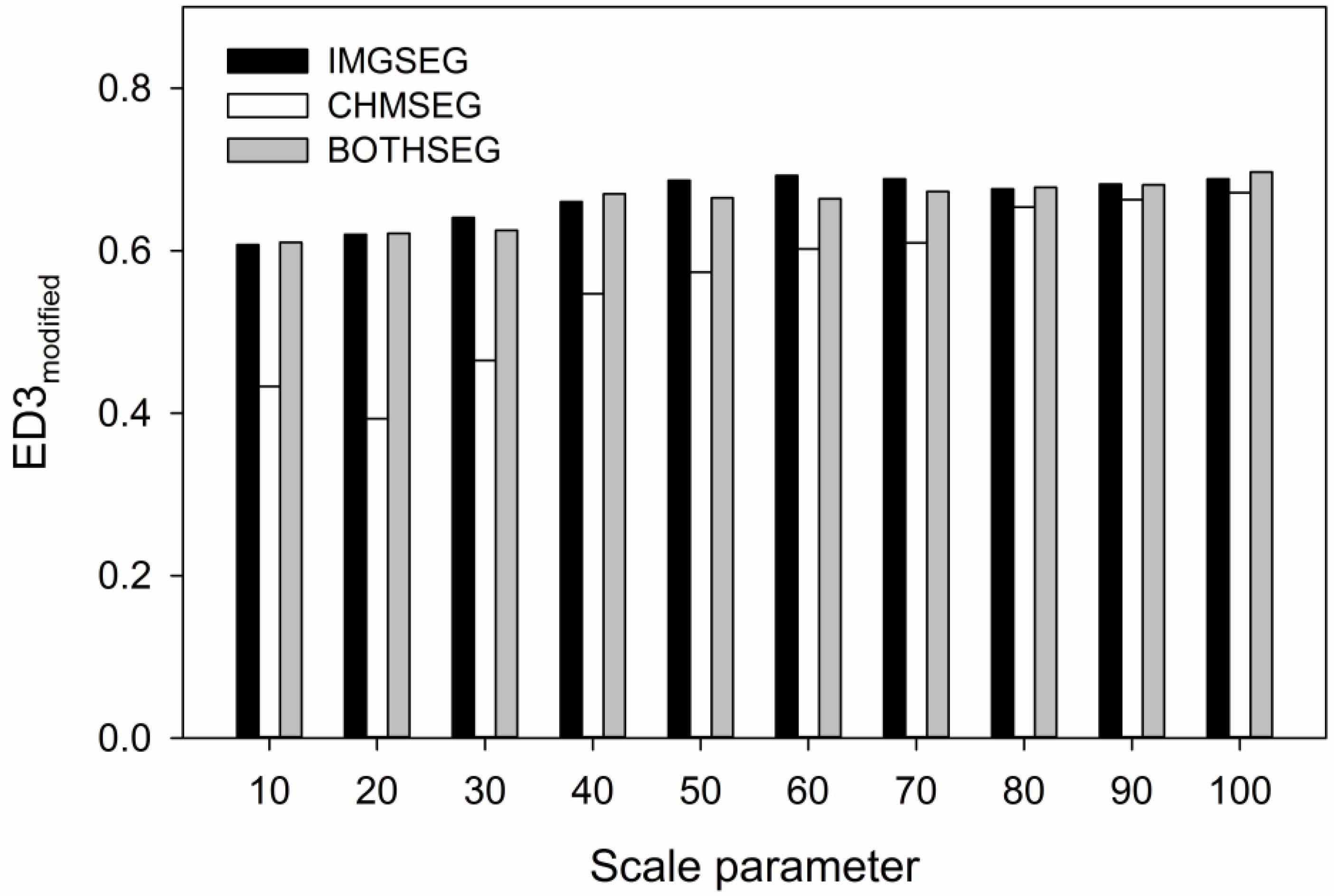

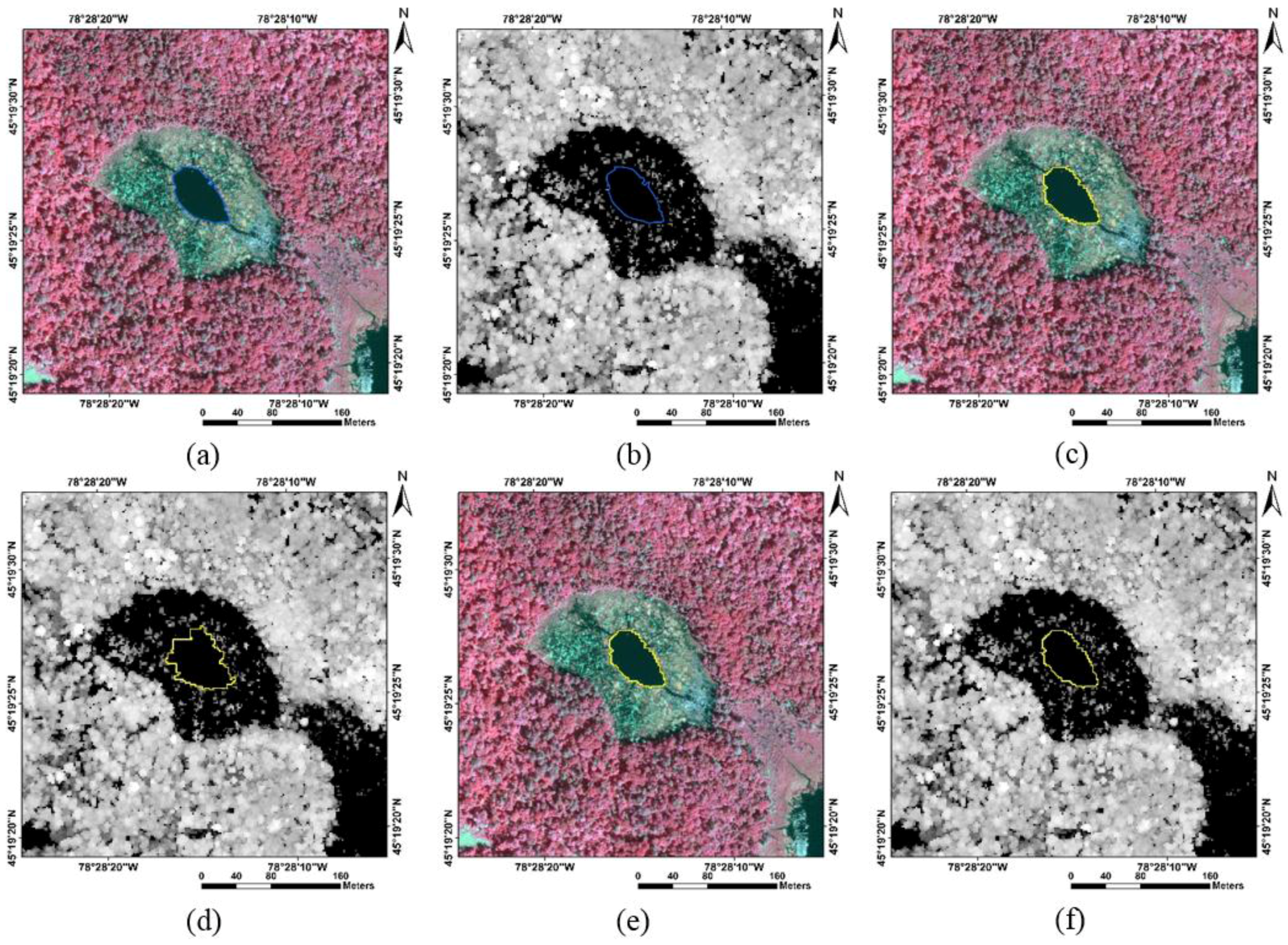

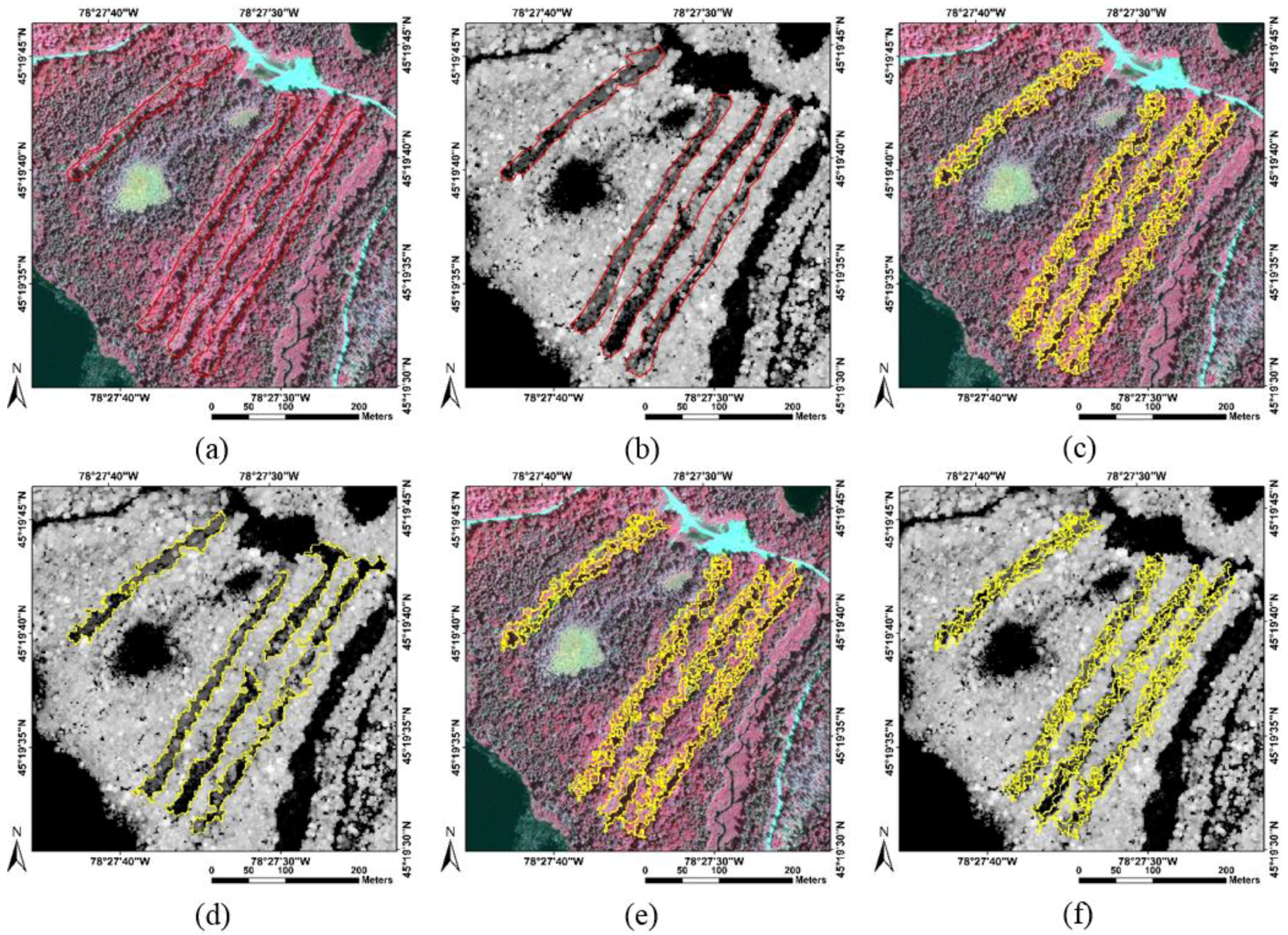

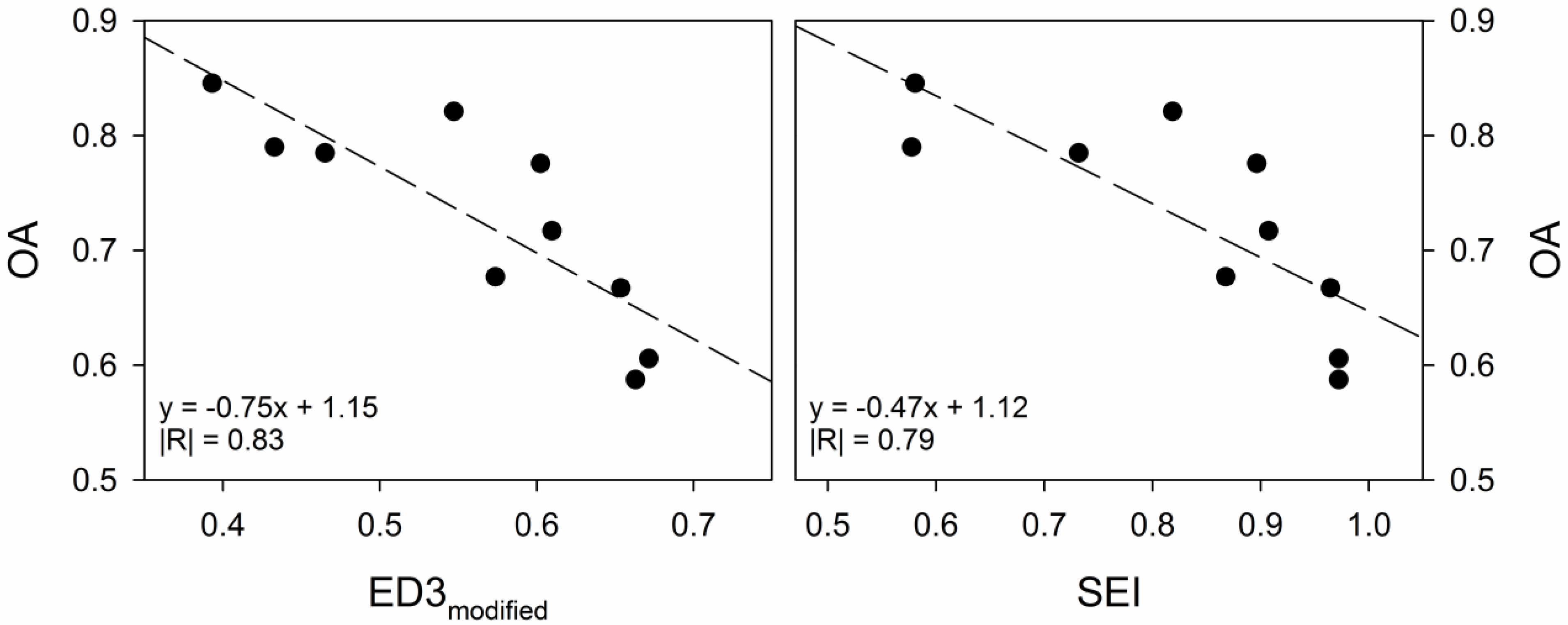

2.3.1. Canopy Gap Delineation

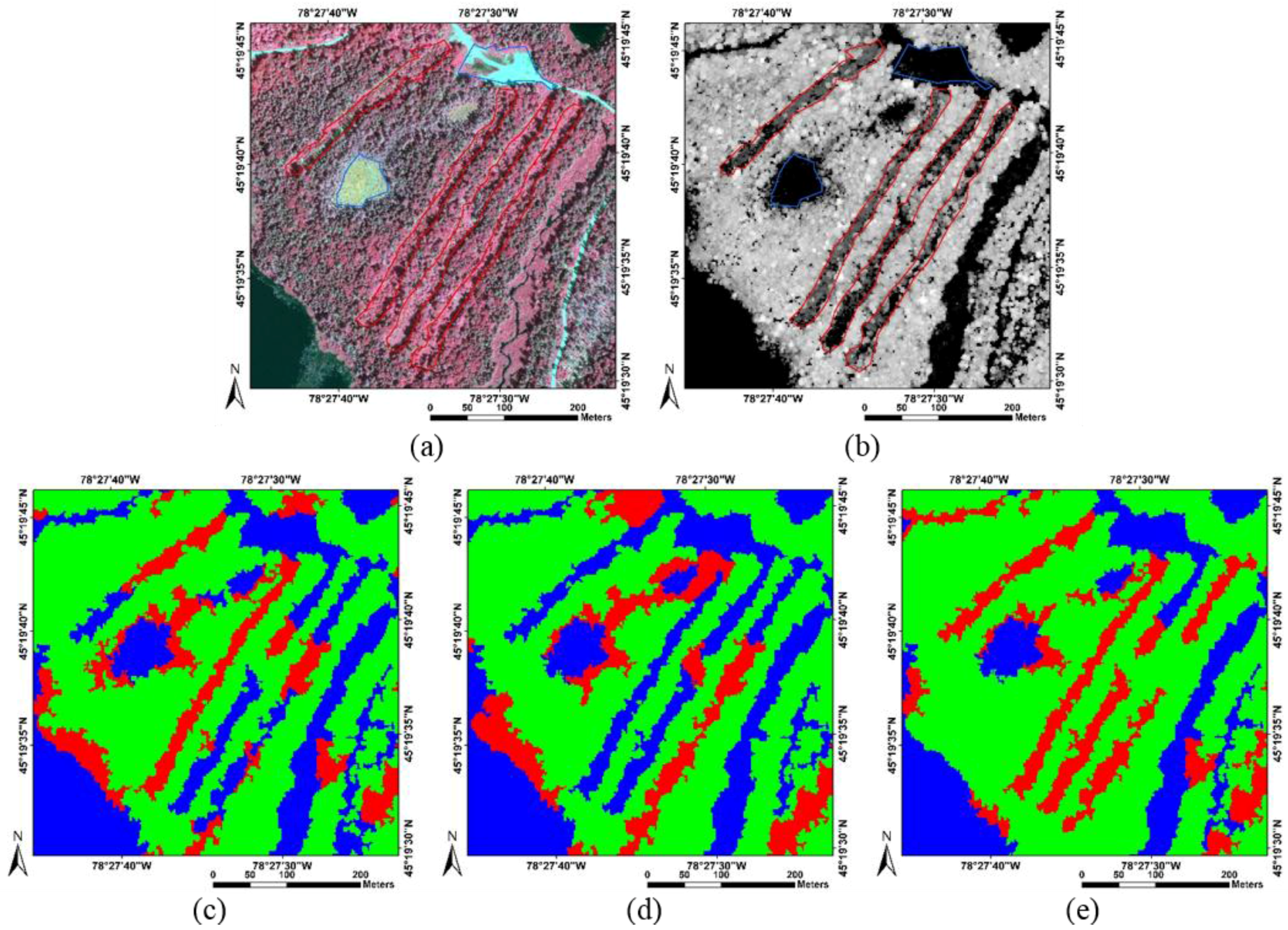

2.3.2. Object-Based Canopy Gap Classification

3. Results

| Data Source | Classification | Reference: Non-Forest Gaps | Reference: Forest Gaps | Reference: Tree Canopies |
|---|---|---|---|---|
| Spectral | Non-forest gaps | 14,079 | 618 | 0 |
| Spectral | Forest gaps | 2069 | 12,109 | 1900 |
| Spectral | Tree canopies | 1167 | 3614 | 15,073 |
| Height | Non-forest gaps | 14,390 | 856 | 18 |
| Height | Forest gaps | 1975 | 11,158 | 1003 |
| Height | Tree canopies | 950 | 4327 | 15,952 |
| Spectral + Height | Non-forest gaps | 14,059 | 498 | 0 |
| Spectral + Height | Forest gaps | 2501 | 13,628 | 1492 |
| Spectral + Height | Tree canopies | 755 | 2215 | 15,481 |

| Gap Class | Accuracy | Spectral | Height | Spectral + Height |
|---|---|---|---|---|
| Non-forest gaps | PA | 81.31% | 83.11% | 81.20% |
| Non-forest gaps | UA | 95.80% | 94.27% | 96.58% |
| Forest gaps | PA | 74.10% | 68.28% | 83.40% |
| Forest gaps | UA | 75.31% | 78.93% | 77.34% |
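The two tables above are linked by simple arithmetic, which the following minimal sketch makes explicit. It assumes that PA and UA stand for producer's and user's accuracy in the usual remote-sensing sense (the tables do not expand the abbreviations), that rows of the confusion matrices are classified classes, and that columns are reference classes. The function and variable names are illustrative only and do not come from the article.

```python
# Confusion matrices copied from the table above.
# Assumed layout: rows = classified class, columns = reference class.
# Class order: non-forest gaps, forest gaps, tree canopies.
CLASSES = ["Non-forest gaps", "Forest gaps", "Tree canopies"]

MATRICES = {
    "Spectral": [
        [14079, 618, 0],
        [2069, 12109, 1900],
        [1167, 3614, 15073],
    ],
    "Height": [
        [14390, 856, 18],
        [1975, 11158, 1003],
        [950, 4327, 15952],
    ],
    "Spectral + Height": [
        [14059, 498, 0],
        [2501, 13628, 1492],
        [755, 2215, 15481],
    ],
}


def producers_accuracy(matrix, k):
    """Correct count for class k divided by the reference (column) total."""
    column_total = sum(row[k] for row in matrix)
    return matrix[k][k] / column_total


def users_accuracy(matrix, k):
    """Correct count for class k divided by the classified (row) total."""
    return matrix[k][k] / sum(matrix[k])


if __name__ == "__main__":
    for source, matrix in MATRICES.items():
        # The accuracy table reports the two gap classes only.
        for k, name in enumerate(CLASSES[:2]):
            pa = 100 * producers_accuracy(matrix, k)
            ua = 100 * users_accuracy(matrix, k)
            print(f"{source:18s} {name:16s} PA={pa:.2f}%  UA={ua:.2f}%")
```

Under these assumptions, the computed values agree with the accuracy table to two decimal places. For example, the forest-gap PA for the combined data is 13,628 / (498 + 13,628 + 2215), which is about 83.40%.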

4. Discussion

4.1. Remote Sensing Data Processing

4.2. Forest Ecosystem Management

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, J.; Jones, T.; Caspersen, J.; He, Y. Object-Based Canopy Gap Segmentation and Classification: Quantifying the Pros and Cons of Integrating Optical and LiDAR Data. Remote Sens. 2015, 7, 15917-15932. https://doi.org/10.3390/rs71215811