Collective Sensing: Integrating Geospatial Technologies to Understand Urban Systems—An Overview

Abstract

1. Introduction

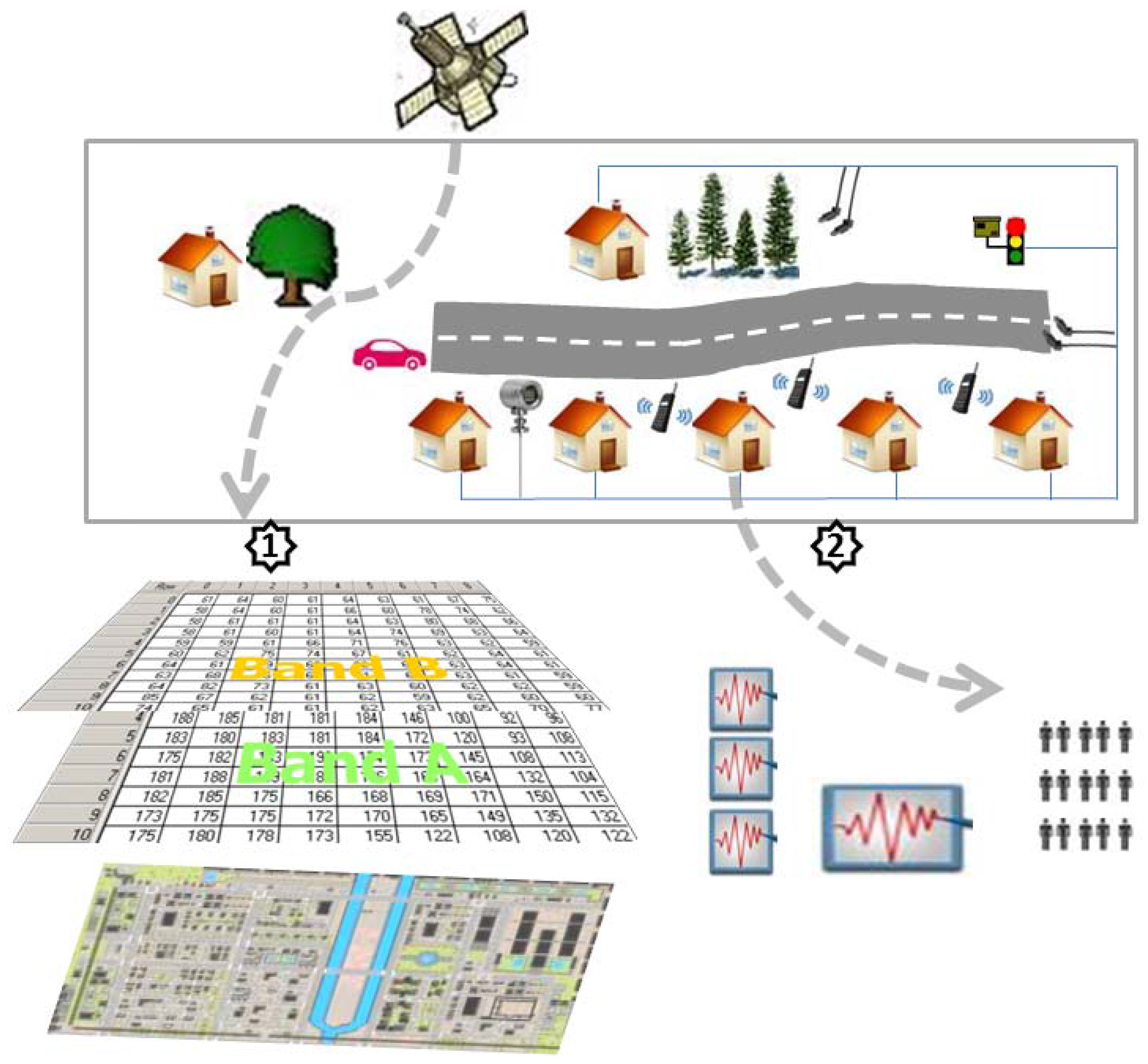

2. Remote Sensing and the Urban Environment

2.1. Progress in Technology

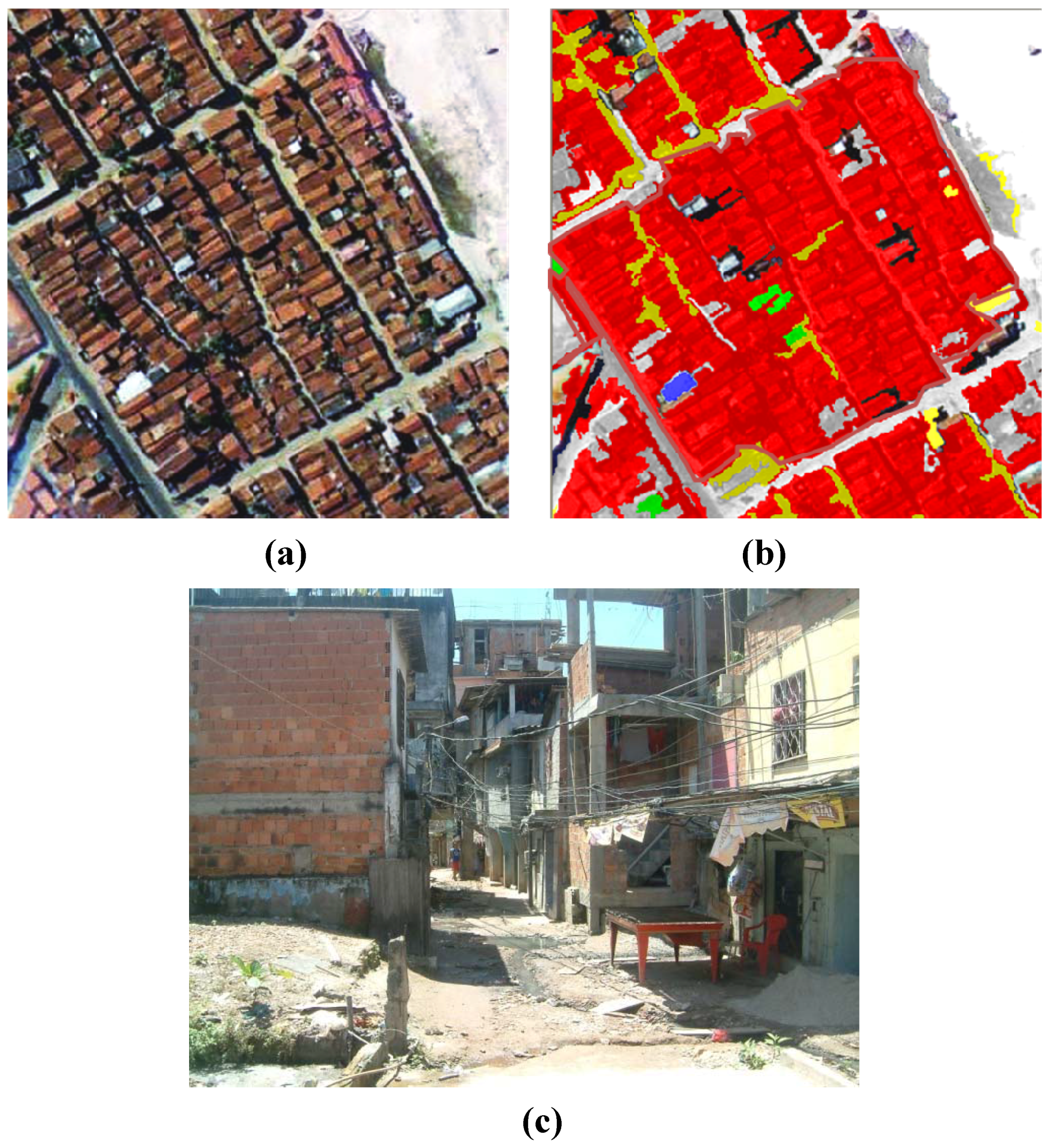

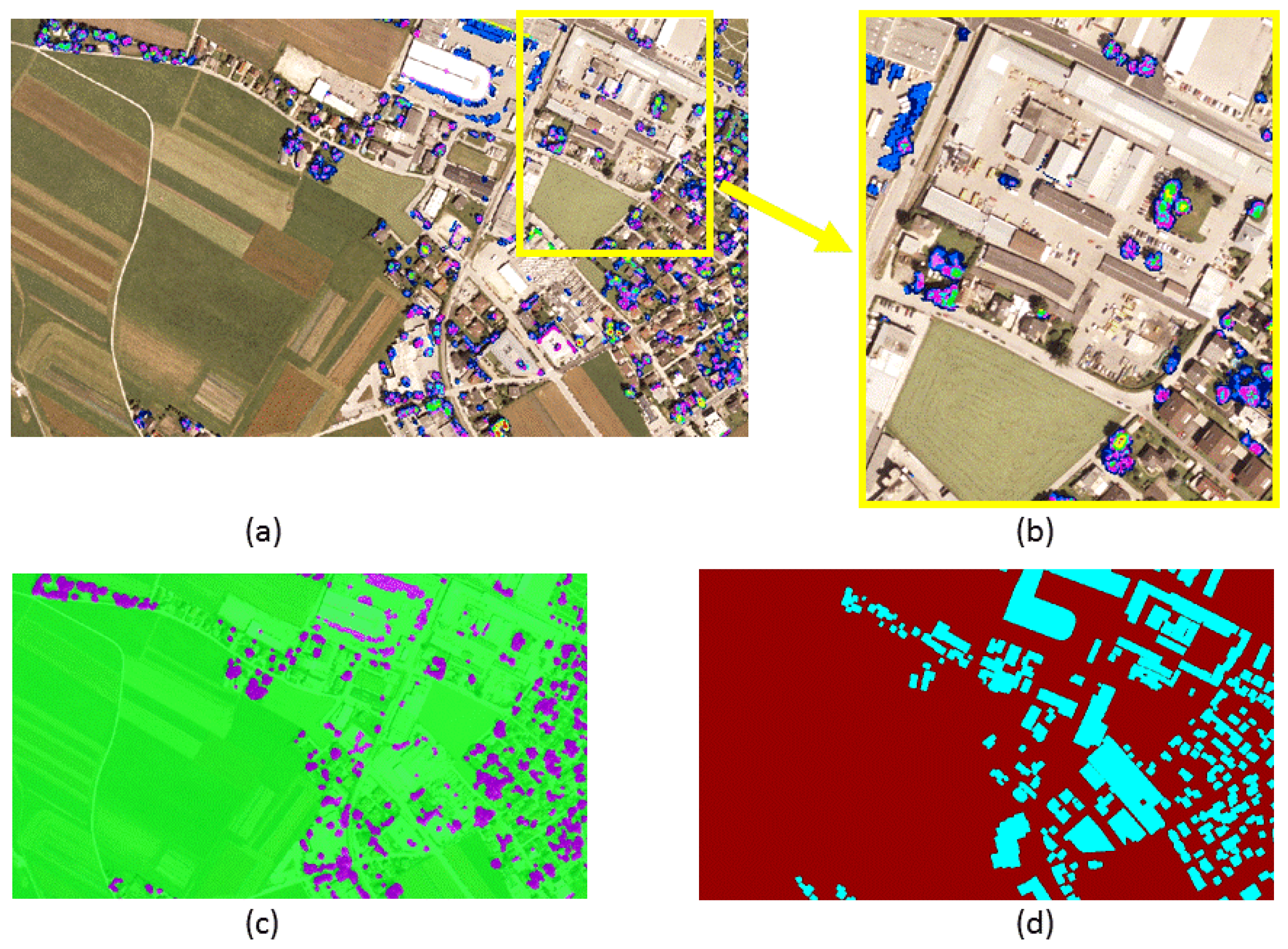

2.2. Progress in Image Analysis

2.3. Integrating Remote Sensing and GIS for Urban Analysis

| Area of surrounding vegetation | Distance rings from the building under consideration (m) | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | <10 | 10–20 | 20–30 | 30–40 | 40–50 | 50–60 | 60–70 | 70–80 | 80–90 | 90–100 |
| <25% | 1,232 | 646 | 621 | 589 | 607 | 527 | 528 | 517 | 554 | 551 |
| <50% | 485 | 596 | 742 | 772 | 858 | 947 | 948 | 962 | 983 | 979 |
| <75% | 136 | 482 | 441 | 465 | 374 | 377 | 379 | 375 | 321 | 329 |
| >75% | 10 | 139 | 59 | 37 | 24 | 12 | 8 | 9 | 5 | 4 |
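The ring totals and class shares in the table above can be checked with a short script. This is a minimal sketch: it assumes that each cell is a count of buildings falling into a vegetation-cover class (the table itself does not say so), and the names `rings`, `counts`, `ring_totals` and `ring_shares` are illustrative, not taken from the source.

```python
# Counts transcribed from the table above; rows are vegetation-cover classes,
# columns are distance rings (m) from the building under consideration.
# Assumption: each cell is a number of buildings (the table does not say so).
rings = ["<10", "10-20", "20-30", "30-40", "40-50",
         "50-60", "60-70", "70-80", "80-90", "90-100"]
counts = {
    "<25%": [1232, 646, 621, 589, 607, 527, 528, 517, 554, 551],
    "<50%": [485, 596, 742, 772, 858, 947, 948, 962, 983, 979],
    "<75%": [136, 482, 441, 465, 374, 377, 379, 375, 321, 329],
    ">75%": [10, 139, 59, 37, 24, 12, 8, 9, 5, 4],
}


def ring_totals(table):
    """Sum the counts of all classes for each distance ring."""
    return [sum(column) for column in zip(*table.values())]


def ring_shares(table):
    """Return, per class, its share of each ring's total in percent."""
    totals = ring_totals(table)
    return {
        label: [round(100.0 * value / total, 1)
                for value, total in zip(row, totals)]
        for label, row in table.items()
    }


if __name__ == "__main__":
    totals = ring_totals(counts)
    shares = ring_shares(counts)
    for i, ring in enumerate(rings):
        row = ", ".join(f"{label}: {shares[label][i]}%" for label in counts)
        print(f"{ring:>6} m (n={totals[i]}): {row}")
```

Every ring sums to 1,863, which is consistent with the same set of buildings being evaluated in each ring.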

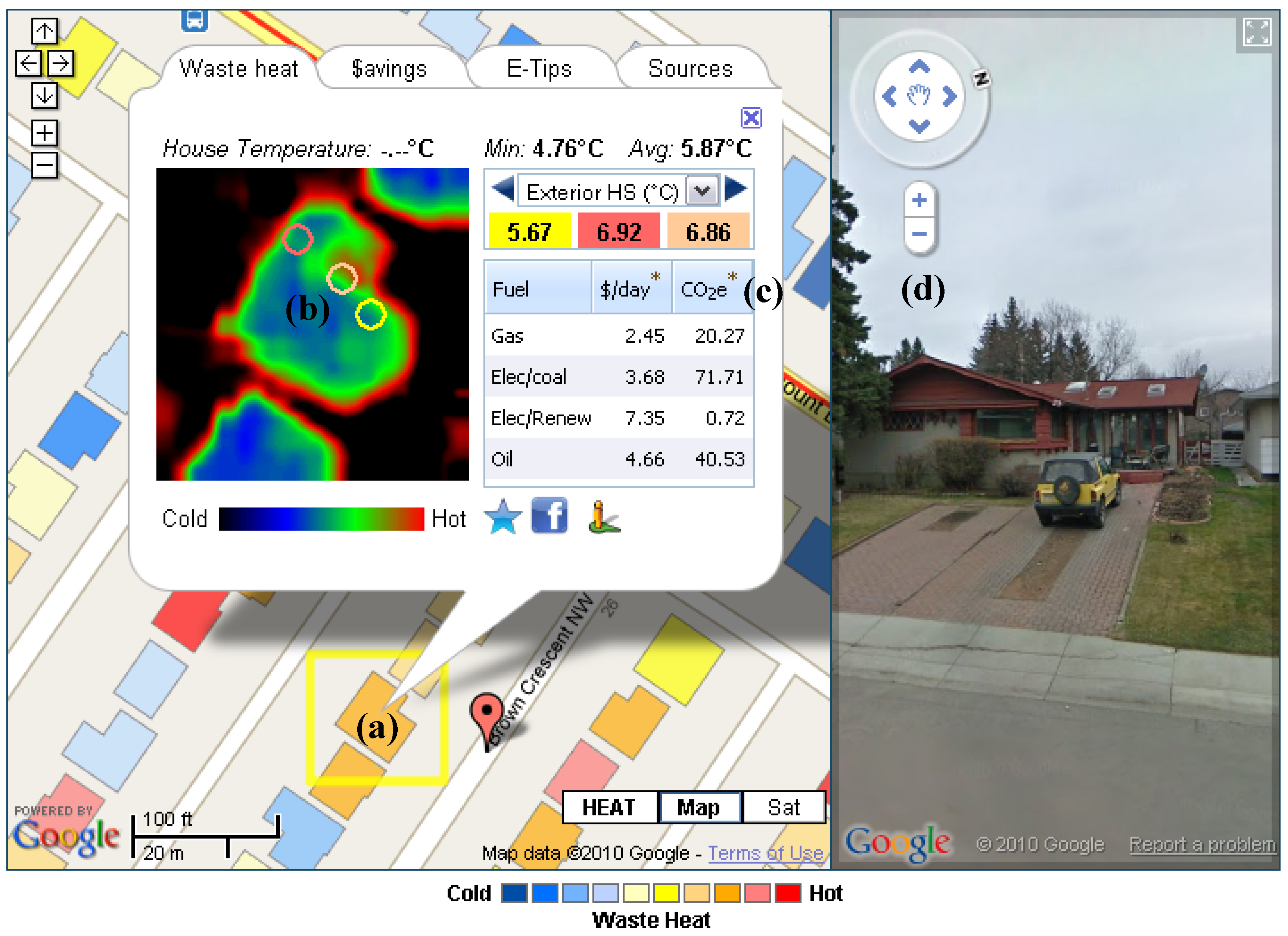

2.4. In-Depth Example of GIS-RS Integration: Thermal Urban Analysis

3. In situ Measurement Systems, Sensor Webs and Mobile Sensing

3.1. Towards a Digital Skin for Planet Earth

“In the next century, planet earth will don an electronic skin. It will use the Internet as a scaffold to support and transmit its sensations. This skin is already being stitched together. It consists of millions of embedded electronic measuring devices: thermostats, pressure gauges, pollution detectors, cameras, microphones, glucose sensors, EKGs, electroencephalographs. These will probe and monitor cities and endangered species, the atmosphere, our ships, highways and fleets of trucks, our conversations, our bodies–even our dreams” [107].

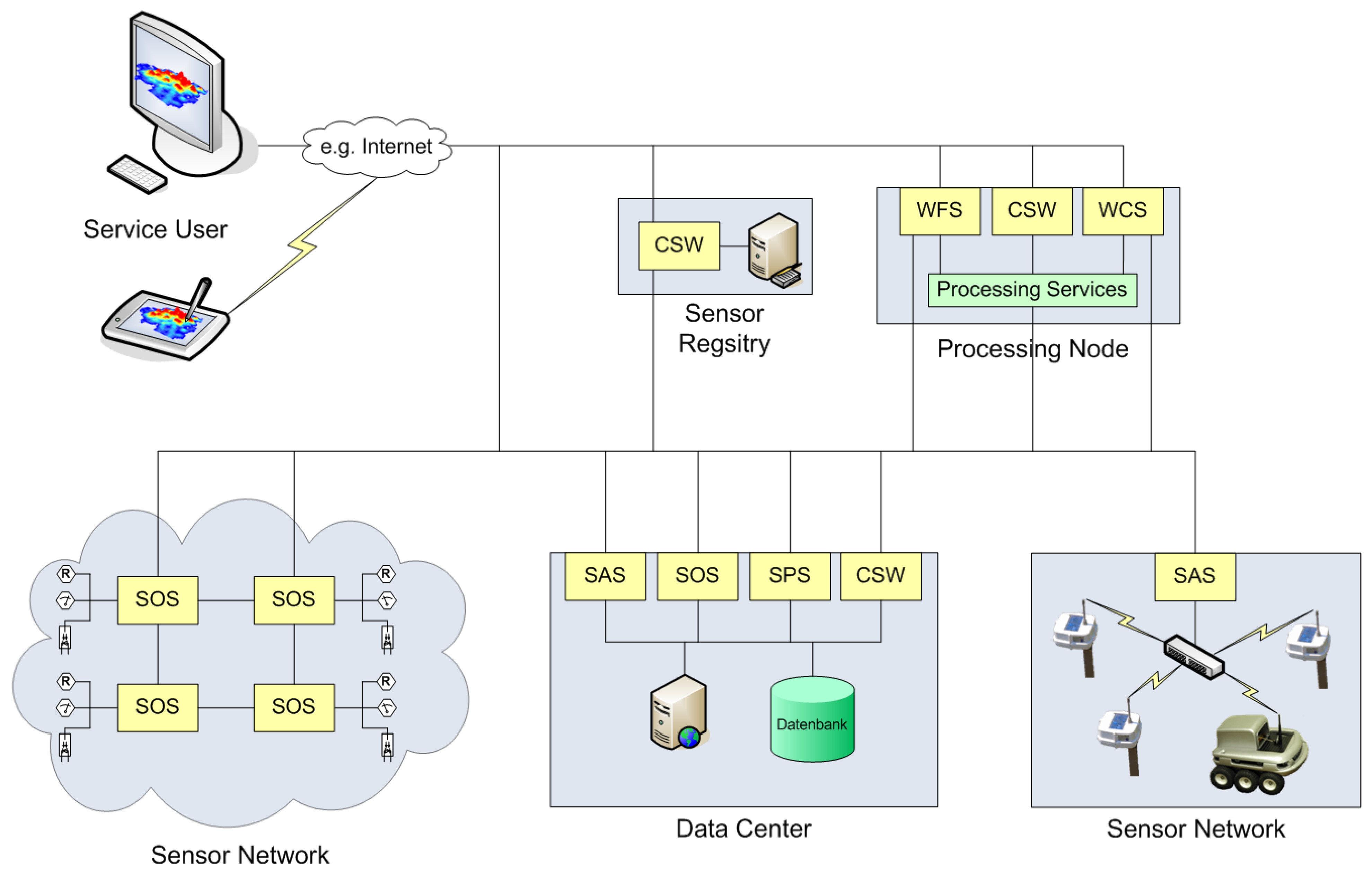

3.2. Technology Integration—Sensor Web Enablement

- Sensor Model Language (SensorML): This standard provides an XML schema for defining the geometric, dynamic and observational characteristics of a sensor. SensorML thus assists in the discovery of different types of sensors and supports the processing and analysis of the retrieved data, as well as the geo-location and tasking of sensors.

- Observations & Measurements (O&M): O&M describes sensor observations in the form of general models and XML encodings. The framework defines terms for the measurements themselves and for the relationships between them. Measurement results are expressed as quantities, categories, temporal or geometrical values, or as arrays or composites of these.

- Transducer Model Language (TML): Generally speaking, TML can be understood as O&M’s counterpart for streaming data; it provides a method and message format describing how to interpret raw transducer data.

- Sensor Observation Service (SOS): SOS provides a standardized web service interface allowing access to sensor observations and platform descriptions.

- Sensor Planning Service (SPS): SPS offers an interface for planning an observation query. In effect, the service performs a feasibility check during the set-up of a request for data from several sensors.

- Sensor Alert Service (SAS): SAS can be seen as an event-processing engine whose purpose is to identify pre-defined events, such as particular patterns in sensor measurements, and then to generate and send alerts in a standardized protocol format.

- Web Notification Service (WNS): The Web Notification Service is responsible for delivering generated alerts to end-users by e-mail, over HTTP, or via SMS. Moreover, the standard provides an open interface for services, through which a client may exchange asynchronous messages with one or more other services.

- Sensor Web Registry: The registry maintains metadata about sensors and their observations. In short, it records where sensors are located, which phenomena they measure, and whether they are static or mobile. Currently, the OGC is pursuing a harmonization approach to integrate the existing CS-W (Web Catalogue Service) into SWE by building profiles in ebRIM/ebXML (e-business Registry Information Model).
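To make the role of the Sensor Observation Service more concrete, the sketch below assembles a key-value-pair GetObservation request of the kind defined in the KVP binding of SOS 2.0, a later version of the standard than the one this overview describes. The endpoint URL, offering name and observed-property URI are hypothetical placeholders, and the helper `build_get_observation_url` is illustrative and not part of any SWE library. A real service advertises its valid identifiers in its GetCapabilities response.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def build_get_observation_url(endpoint, offering, observed_property, start, end):
    """Assemble a GetObservation URL using SOS 2.0 key-value-pair parameters.

    All values are supplied by the caller; nothing is sent over the network.
    """
    params = {
        "service": "SOS",
        "version": "2.0.0",
        "request": "GetObservation",
        "offering": offering,
        "observedProperty": observed_property,
        # Temporal filter: value reference followed by an ISO 8601 interval.
        "temporalFilter": f"om:phenomenonTime,{start}/{end}",
    }
    return f"{endpoint}?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical endpoint and identifiers, for illustration only.
    url = build_get_observation_url(
        endpoint="https://sensors.example.org/sos",
        offering="urban_air_quality",
        observed_property="http://example.org/def/property/NO2",
        start="2011-03-01T00:00:00Z",
        end="2011-03-02T00:00:00Z",
    )
    print(url)
    # Round-trip check: the query string decodes back to the same parameters.
    print(parse_qs(urlsplit(url).query)["request"])
```

Because the request is built as a plain URL, the same function can be reused for any service that supports this binding; only the endpoint and identifiers change.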

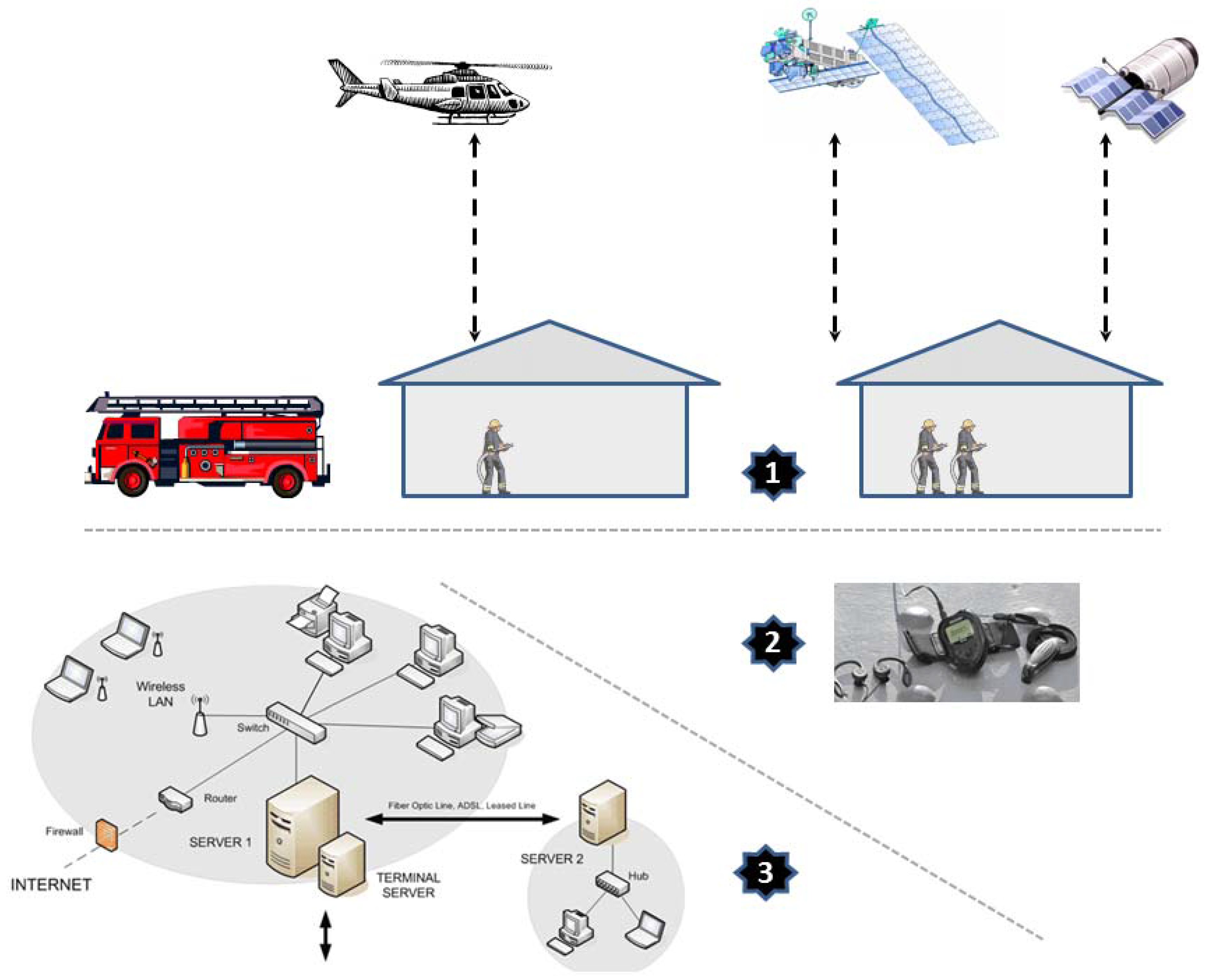

3.3. Fine-Grained Urban Sensing Reveals Unseen Information Layers

4. Collective Sensing: Beyond Monitoring of Physical Infrastructure

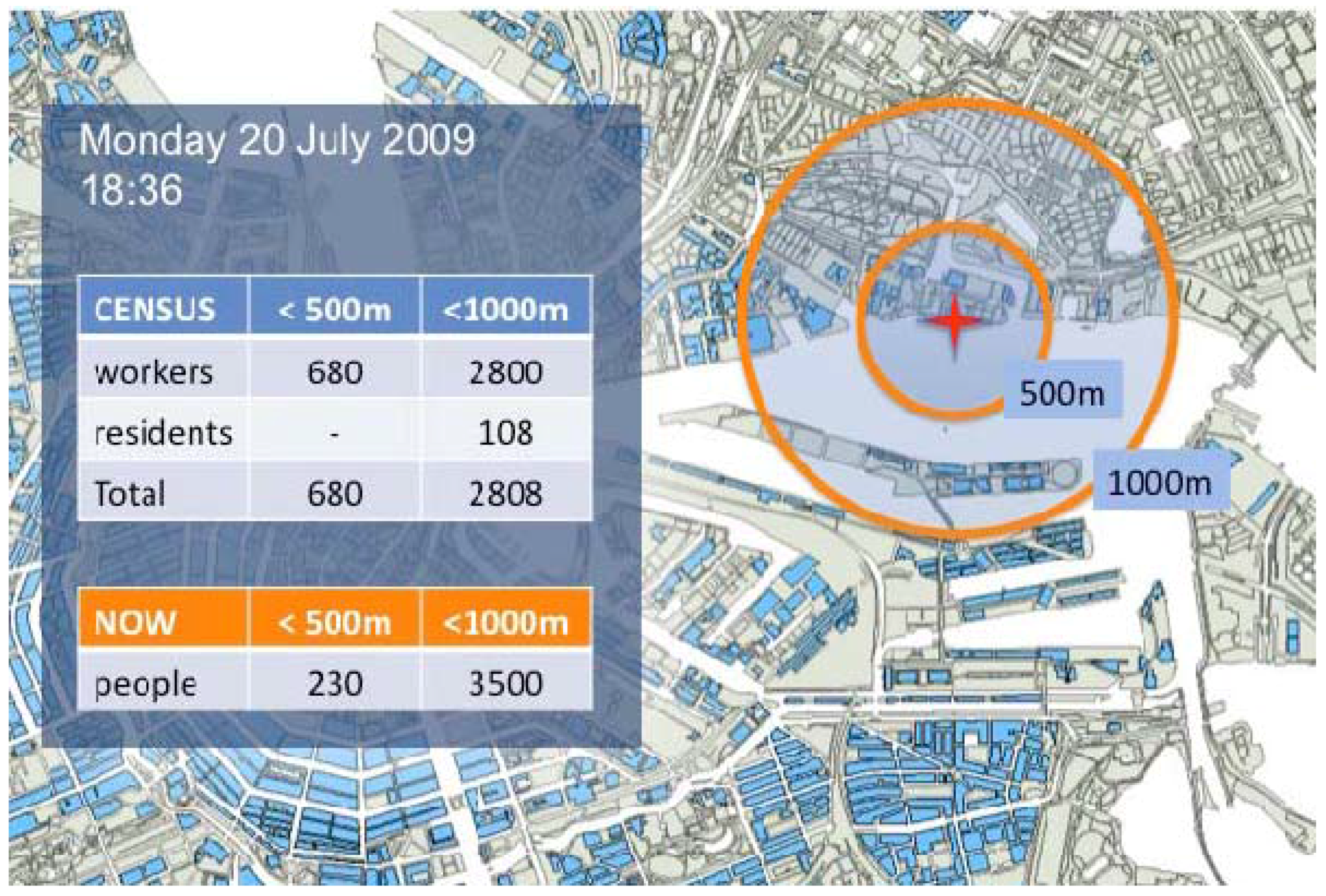

4.1. Demand for Recent and Holistic Urban Information

4.2. GIS as a Processing Platform

4.3. Thoughts on Urban Morphology and Function

4.4. Collective Sensing in the “Digital City” and “Smart City” Contexts

| Characteristics of a smart city | Role of remote sensing | | Role of sensor webs | |
|---|---|---|---|---|
| | Today | Potential | Today | Potential |
| Smart economy | * | ** | * | ** |
| Smart people | (*) | * | - | *** |
| Smart governance | (*) | * | - | ** |
| Smart mobility | * | ** | * | ***** |
| Smart environment | **** | ***** | (*) | ***** |
| Smart living | * | ** | (*) | ***** |

4.5. Thoughts on the Human-Environmental Processes

4.6. Beyond Remote Sensing

4.7. Towards a New Terminology for Collective Sensing

- “Collective sensing” returns 170 hits in Google Scholar (50% of them published since 2007), with the five most cited articles accounting for 305 citations.

- “Ambient sensing” returns 403 hits in Google Scholar (38% of them published since 2007), with the five most cited articles accounting for 138 citations.

- “Context sensing” returns 1,568 hits in Google Scholar (39% of them published since 2007), with the five most cited articles accounting for 3,400 citations.

- “Ubiquitous sensing” returns 1,359 hits in Google Scholar (48% of them published since 2007), with the five most cited articles accounting for 1,395 citations.
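The figures in the list above can be put on a comparable footing with a few lines of arithmetic. The sketch below is illustrative only: the derived counts are approximate because the published percentages are rounded, and the names `terms` and `summarize` are not from the source.

```python
# Figures transcribed from the list above: (hits, share published since 2007,
# citations of the five most cited articles).
terms = {
    "collective sensing": (170, 0.50, 305),
    "ambient sensing": (403, 0.38, 138),
    "context sensing": (1568, 0.39, 3400),
    "ubiquitous sensing": (1359, 0.48, 1395),
}


def summarize(stats):
    """Derive approximate recent-hit counts and mean citations of the top five."""
    summary = {}
    for term, (hits, recent_share, top5_citations) in stats.items():
        summary[term] = {
            # Approximate, because the source percentages are rounded.
            "recent_hits": round(hits * recent_share),
            "mean_top5_citations": top5_citations / 5,
        }
    return summary


if __name__ == "__main__":
    for term, row in summarize(terms).items():
        print(f"{term}: ~{row['recent_hits']} hits since 2007, "
              f"{row['mean_top5_citations']:.1f} citations per top-five article")
```

On these numbers, “collective sensing” has the fewest hits overall but the largest share of recent publications among the four terms.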

5. Conclusions: Towards Collective Urban Sensing

Acknowledgements

References

- Crutzen, P.J. Geology of mankind. Nature 2002, 415, 23.

- World Population Data Sheet 2009; PRB (Population Reference Bureau): Washington, DC, USA, 2009.

- United Nations Department of Economic and Social Affairs. Population Division; No. 2; UN: New York, NY, USA, 2010.

- Energy Mapping Study; Canadian Urban Institute for the City of Calgary: Toronto, ON, Canada, 2008; Available online: http://www.calgary.ca/docgallery/BU/planning/pdf/plan_it/energy_mapping_study.pdf (accessed on 13 March 2011).

- Bailie, A.; Beckstead, C. Canada’s Coolest Cities; Sustainable Energy Solutions Technical Report; The Pembina Institute: Drayton Valley, AB, Canada, 2010; Available online: http://pubs.pembina.org/reports/coolest-cities-technical-report.pdf (accessed on 13 March 2011).

- Zardini, M. Sense of the City: An Alternate Approach to Urbanism; Lars Müller Publishers: Baden, Switzerland, 2006.

- Hall, P. The World Cities, 3rd ed.; Weidenfeld and Nicolson: London, UK, 1966.

- Hall, P.; Pfeiffer, U. URBAN 21–Expertenbericht Zur Zukunft der Städte; Deutsche Verlagsanstalt: Stuttgart, Germany, 2000.

- Castells, M. The rise of network society. In The Information Age: Economy, Society and Culture; Castells, M., Ed.; Blackwell: Oxford, UK, 1996; Volume 1.

- Friedmann, J. The world city hypothesis. Dev. Change 1986, 17, 69–83.

- Droege, P. The Renewable City: A Comprehensive Guide to an Urban Revolution; John Wiley & Sons, Ltd.: Chichester, UK, 2006.

- Mitchell, W.J. City of Bits: Space, Place, and the Infobahn; MIT Press: Cambridge, MA, USA, 1996.

- Marceau, D.; Benenson, I. Advanced Geospatial Simulation Models; Bentham Science Publishers: Hilversum, The Netherlands, 2011; in press.

- Jensen, J.R. Remote Sensing of the Environment: An Earth Resource Perspective, 2nd ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2007.

- ITRES. CASI-1500 Hyperspectral Imager. 2011. Available online: http://www.itres.com/products/imagers/casi1500 (accessed on 13 March 2011).

- Thomas, N.; Hendrix, C.; Congalton, R.G. A comparison of urban mapping methods using high-resolution digital imagery. Photogramm. Eng. Remote Sensing 2003, 69, 963–972.

- Weng, Q.; Quattrochi, D.A. Urban Remote Sensing; CRC Press/Taylor and Francis: Boca Raton, FL, USA, 2006.

- Ehlers, M. New developments and trends for urban remote sensing. In Urban Remote Sensing; Weng, Q., Quattrochi, D.A., Eds.; CRC Press: Boca Raton, FL, USA, 2006; pp. 357–375.

- Herold, M.; Rogers, D.A. Remote sensing of urban and suburban areas. In Remote Sensing of Urban and Suburban Areas; Rashed, T., Jürgens, C., Eds.; Springer: Berlin, Germany, 2010; pp. 47–65.

- Rashed, T.; Jürgens, C. Remote Sensing of Urban and Suburban Areas; Springer: Berlin, Germany, 2010.

- Lu, D.; Mausel, P.; Brondízio, E.; Moran, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2407.

- Jensen, J.R. Introductory Digital Image Processing: A Remote Sensing Perspective, 3rd ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2005.

- Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870.

- Weng, Q. Thermal infrared remote sensing for urban climate and environmental studies: Methods, applications, and trends. ISPRS J. Photogramm. 2009, 64, 335–344.

- Weng, Q. Advances in Environmental Remote Sensing: Sensors, Algorithms and Applications; CRC Press/Taylor and Francis: Boca Raton, FL, USA, 2011.

- Maktav, D.; Erbek, F.S.; Jürgens, C. Remote sensing of urban areas. Int. J. Appl. Earth Obs. Geoinf. 2005, 26, 655–659.

- Andersson, E. Urban landscapes and sustainable cities. Ecol. Soc. 2006, 11, 34.

- Hardin, P.J.; Jackson, M.W.; Otterstrom, S.M. Mapping, measuring, and modeling urban growth. In Geo-Spatial Technologies in Urban Environments: Policy, Practice, and Pixels, 2nd ed.; Jensen, R.R., Gatrell, J.D., McLean, D.D., Eds.; Springer-Verlag: Berlin, Germany, 2007; pp. 141–176.

- Longley, P.A. Geographical Information Systems: Will developments in urban remote sensing and GIS lead to ‘better’ urban geography? Progr. Human Geogr. 2002, 26, 231–239.

- Lu, D.; Weng, Q. Extraction of urban impervious surfaces from IKONOS imagery. Int. J. Remote Sens. 2009, 30, 1297–1311.

- Hu, X.; Weng, Q. Impervious surface area extraction from IKONOS imagery using an object-based fuzzy method. Geocarto Int. 2011, 26, 3–20.

- Bhaskaran, S.; Paramananda, S.; Ramnarayan, M. Per-pixel and object-oriented classification methods for mapping urban features using Ikonos satellite data. Appl. Geogr. 2010, 30, 650–665.

- Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Weng, Q. Per-pixel versus object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1161.

- Roessner, S.; Segl, K.; Heiden, U.; Kaufmann, H. Automated differentiation of urban surfaces based on airborne hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1525–1532.

- Gamba, P.; Dell’Acqua, F. Spectral resolution in the context of very high resolution urban remote sensing. In Urban Remote Sensing; Weng, Q., Quattrochi, D.A., Eds.; CRC Press: Boca Raton, FL, USA, 2006; pp. 377–391.

- Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high resolution remote sensing imagery. Photogramm. Eng. Remote Sensing 2006, 72, 799–811.

- Herold, M.; Liu, X.H.; Clarke, K.C. Spatial metrics and image texture for mapping urban land-use. Photogramm. Eng. Remote Sensing 2003, 69, 991–1001.

- Jensen, J.R.; Cowen, D.C. Remote sensing of urban/suburban infrastructure and socioeconomic attributes. Photogramm. Eng. Remote Sensing 1999, 65, 611–622.

- Hinks, T.; Carr, H.; Laefer, D.F. Flight optimization algorithms for aerial LiDAR capture for urban infrastructure model generation. J. Comput. Civil Eng. 2009, 23, 330–339.

- Goodwin, N.R.; Coops, N.C.; Tooke, T.R.; Christen, A.; Voogt, J.A. Characterizing urban surface cover and structure with airborne LiDAR technology. Can. J. Remote Sens. 2009, 35, 297–309.

- Weng, Q.; Lu, D. Landscape as a continuum: An examination of the urban landscape structures and dynamics of Indianapolis city 1991–2000. Int. J. Remote Sens. 2009, 30, 2547–2577.

- Mather, P.M. Land cover classification revisited. In Advances in Remote Sensing and GIS; Atkinson, P.M., Tate, N.J., Eds.; Wiley: New York, NY, USA, 1999; pp. 7–16.

- Hay, G.J.; Niemann, K.O.; McLean, G. An object-specific image-texture analysis of H-resolution forest imagery. Remote Sens. Environ. 1996, 55, 108–122.

- Wang, F. Fuzzy supervised classification of remote sensing images. IEEE Trans. Geosci. Remote Sens. 1990, 28, 194–201.

- Asner, G.P.; Hicke, J.A.; Lobell, D.B. Per-pixel analysis of forest structure. Vegetation indices, spectral mixture analysis and canopy reflectance modeling. In Remote Sensing of Forest Environments. Concepts and Case Studies; Wulder, M.A., Franklin, S.E., Eds.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2003; pp. 209–254.

- Blaschke, T.; Lang, S.; Hay, G. Object Based Image Analysis; Springer: Berlin, Germany, 2008.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. 2010, 65, 2–16.

- Lang, S.; Blaschke, T. Bridging remote sensing and GIS—What are the main supporting pillars? In International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences; ISPRS: Vienna, Austria, 2006; Vol. XXXVI-4/C42.

- Walker, J.; Blaschke, T. Object-based landcover classification for the Phoenix metropolitan area: Optimization vs. transportability. Int. J. Remote Sens. 2008, 29, 2021–2040.

- Hay, G.J.; Castilla, G. Geographic object-based image analysis (GEOBIA): A new name for a new discipline? In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 75–89.

- Castilla, G.; Hay, G.J. Image-objects and geo-objects. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 92–110.

- Liu, D.; Xia, F. Assessing object-based classification: Advantages and limitations. Remote Sens. Lett. 2010, 1, 187–194.

- Jin, M.S.; Kessomkiat, W.; Pereira, G. Satellite-observed urbanization characters in Shanghai, China: Aerosols, Urban Heat Island effect, and land–atmosphere interactions. Remote Sens. 2011, 3, 83–99.

- Aubrecht, C.; Steinnocher, K.; Hollaus, M.; Wagner, W. Integrating earth observation and GIScience for high resolution spatial and functional modeling of urban land use. Comput. Environ. Urban Syst. 2009, 33, 15–25.

- Zhou, W.; Troy, A.; Grove, M. Object-based land cover classification and change analysis in the Baltimore metropolitan area using multitemporal high resolution remote sensing data. Sensors 2008, 8, 1613–1636.

- Kux, H.J.; Araujo, E.H.G. Object-based image analysis using QuickBird satellite images and GIS data, case study Belo Horizonte (Brazil). In Object Based Image Analysis; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 571–588.

- Kressler, F.; Steinnocher, K. Object-oriented analysis of image and LiDAR data and its potential for dasymetric mapping applications. In Object Based Image Analysis; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 611–624.

- Ehlers, M.; Jadkowski, M.A.; Howard, R.R.; Brostuen, D.E. Application of SPOT data for regional growth analysis and local planning. Photogramm. Eng. Remote Sensing 1990, 56, 175–180.

- Treitz, P.M.; Howard, P.J.; Gong, P. Application of satellite and GIS technologies for land-cover and land-use mapping at the rural-urban fringe: A case study. Photogramm. Eng. Remote Sensing 1992, 58, 439–448.

- Harris, P.M.; Ventura, S.J. The integration of geographic data with remotely sensed imagery to improve classification in an urban area. Photogramm. Eng. Remote Sensing 1995, 61, 993–998.

- Weng, Q. Land use change analysis in the Zhujiang Delta of China using satellite remote sensing, GIS, and stochastic modeling. J. Environ. Manage. 2002, 64, 273–284.

- Wilkinson, G.G. A review of current issues in the integration of GIS and remote sensing data. Int. J. Geogr. Inf. Syst. 1996, 10, 85–101.

- Harvey, W.; McGlone, J.C.; McKeown, D.M.; Irvine, J.M. User-centric evaluation of semi-automated road network extraction. Photogramm. Eng. Remote Sensing 2004, 70, 1353–1364.

- Song, M.; Civco, D. Road extraction using SVM and image segmentation. Photogramm. Eng. Remote Sensing 2004, 70, 1365–1372.

- Doucette, P.; Agouris, P.; Stefanidis, A. Automated road extraction from high resolution multispectral imagery. Photogramm. Eng. Remote Sensing 2004, 70, 1405–1416.

- Kim, T.; Park, S.; Kim, M.; Jeong, S.; Kim, K. Tracking road centerlines from high resolution remote sensing images by least squares correlation matching. Photogramm. Eng. Remote Sensing 2004, 70, 1417–1422.

- Mayer, H. Automatic object extraction from aerial imagery—A survey focusing on buildings. Comput. Vis. Image Understand. 1999, 74, 138–139.

- Lee, D.S.; Shan, J.; Bethel, J.S. Class-guided building extraction from IKONOS imagery. Photogramm. Eng. Remote Sensing 2003, 69, 143–150.

- Lee, D.H.; Lee, K.M.; Lee, S.U. Fusion of lidar and imagery for reliable building extraction. Photogramm. Eng. Remote Sensing 2008, 74, 215–225.

- Miliaresis, G.; Kokkas, N. Segmentation and object-based classification for the extraction of the building class from LiDAR DEMs. Comput. Geosci. 2007, 33, 1076–1087.

- Haack, B.; Bryant, N.; Adams, S. Assessment of Landsat MSS and TM data for urban and near-urban land cover digital classification. Remote Sens. Environ. 1987, 21, 201–213.

- Lu, D.; Weng, Q. Spectral mixture analysis of the urban landscape in Indianapolis with Landsat ETM+ imagery. Photogramm. Eng. Remote Sensing 2004, 70, 1053–1062.

- Weng, Q.; Hu, X. Medium spatial resolution satellite imagery for estimating and mapping urban impervious surfaces using LSMA and ANN. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2397–2406.

- Yeh, A.G.O.; Li, X. An integrated remote sensing and GIS approach in the monitoring and evaluation of rapid urban growth for sustainable development in the Pearl River Delta, China. Int. Plan. Studies 1997, 2, 193–210.

- Cheng, J.; Masser, I. Urban growth pattern modeling: A case study of Wuhan City, PR, China. Landscape Urban Plan. 2003, 62, 199–217.

- Mesev, V. The use of census data in urban image classification. Photogramm. Eng. Remote Sensing 1998, 64, 431–438.

- Weng, Q. Remote Sensing and GIS Integration: Theories, Methods, and Applications; McGraw-Hill: New York, NY, USA, 2009.

- Langford, M.; Maguire, D.J.; Unwin, D.J. The areal interpolation problem: Estimating population using remote sensing in a GIS framework. In Handling Geographical Information: Methodology and Potential Applications; Masser, I., Blakemore, M., Eds.; John Wiley & Sons, Inc.: New York, NY, USA, 1991.

- Lo, C.P. Automated population and dwelling unit estimation from high resolution satellite images: A GIS approach. Int. J. Remote Sens. 1995, 16, 17–34.

- Sutton, P. Modeling population density with nighttime satellite imagery and GIS. Comput. Environ. Urban Syst. 1997, 21, 227–244.

- Yuan, Y.; Smith, R.M.; Limp, W.F. Remodeling census population with spatial information from Landsat imagery. Comput. Environ. Urban Syst. 1997, 21, 245–258.

- Harris, R.J.; Longley, P.A. New data and approaches for urban analysis: Modeling residential densities. Trans. GIS 2000, 4, 217–234.

- Martin, D.; Tate, N.J.; Langford, M. Refining population surface models: Experiments with Northern Ireland census data. Trans. GIS 2000, 4, 343–360.

- Harvey, J. Small area population estimation using satellite imagery. Trans. GIS 2000, 4, 611–633.

- Harvey, J.T. Estimating census district population from satellite imagery: Some approaches and limitations. Int. J. Remote Sens. 2002, 23, 2071–2095.

- Qiu, F.; Woller, K.L.; Briggs, R. Modeling urban population growth from remotely sensed imagery and TIGER GIS road data. Photogramm. Eng. Remote Sensing 2003, 69, 1031–1042.

- Li, G.; Weng, Q. Using Landsat ETM+ imagery to measure population density in Indianapolis, Indiana, USA. Photogramm. Eng. Remote Sensing 2005, 71, 947–958.

- Li, G.; Weng, Q. Fine-scale population estimation: How Landsat ETM+ imagery can improve population distribution mapping? Can. J. Remote Sens. 2010, 36, 155–165.

- Thomson, C.N. Remote sensing/GIS integration to identify potential low-income housing sites. Cities 2000, 17, 97–109.

- Hall, G.B.; Malcolm, N.W.; Piwowar, J.M. Integration of remote sensing and GIS to detect pockets of urban poverty: The case of Rosario, Argentina. Trans. GIS 2001, 5, 235–253.

- Weber, C.; Hirsch, J. Some urban measurements from SPOT data: Urban life quality indices. Int. J. Remote Sens. 1992, 13, 3251–3261.

- Lo, C.P.; Faber, B.J. Integration of Landsat Thematic Mapper and census data for quality of life assessment. Remote Sens. Environ. 1997, 62, 143–157.

- Li, G.; Weng, Q. Measuring the quality of life in city of Indianapolis by integration of remote sensing and census data. Int. J. Remote Sens. 2007, 28, 249–267.

- Liang, B.; Weng, Q. Assessing urban environmental quality change of Indianapolis, United States, by the remote sensing and GIS integration. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 43–55.

- Möller, M.; Blaschke, T. GIS-gestützte Bildanalyse der städtischen Vegetation als Indikator urbaner Lebensqualität. Photogramm. Fernerkundung Geoinformation 2006, 10, 19–30.

- Hinz, S.; Lenhart, D.; Leitloff, J. Traffic extraction and characterisation from optical remote sensing data. Photogramm. Rec. 2008, 23, 424–440.

- Heiple, S.; Sailor, D.J. Using building energy simulation and geospatial modeling techniques to determine high resolution building sector energy consumption profiles. Energy Build. 2008, 40, 1426–1436.

- Roth, M.; Oke, T.R.; Emery, W.J. Satellite derived urban heat islands from three coastal cities and the utilisation of such data in urban climatology. Int. J. Remote Sens. 1989, 10, 1699–1720.

- Weng, Q.; Liu, H.; Lu, D. Assessing the effects of land use and land cover patterns on thermal conditions using landscape metrics in city of Indianapolis, United States. Urban Ecosyst. 2007, 10, 203–219.

- Liu, H.; Weng, Q. An examination of the effect of landscape pattern, land surface temperature, and socioeconomic conditions on WNV dissemination in Chicago. Environ. Monit. Assess. 2009, 159, 143–161.

- Hay, G.J.; Hemachandran, B.; Kyle, C.D. HEAT (Home Energy Assessment Technologies): Residential waste heat monitoring, Google Maps and airborne thermal imagery. Alberta, Canada. GIM Int. 2010, 24, 13–15.

- Hay, G.J.; Kyle, C.D.; Hemachandran, B.; Chen, G.; Rahman, M.; Fung, T.S.; Arvai, J.L. Geospatial technologies to improve urban energy efficiency. Remote Sens. 2011, 3, 1380–1405.

- Lu, D.; Weng, Q. Spectral mixture analysis of ASTER imagery for examining the relationship between thermal features and biophysical descriptors in Indianapolis, Indiana. Remote Sens. Environ. 2006, 104, 157–167.

- Lu, D.; Weng, Q. Use of impervious surface in urban land use classification. Remote Sens. Environ. 2006, 102, 146–160.

- Weng, Q.; Hu, X.; Liu, H. Estimating impervious surfaces using linear spectral mixture analysis with multi-temporal ASTER images. Int. J. Remote Sens. 2009, 30, 4807–4830.

- Resch, B.; Lippautz, M.; Mittlboeck, M. Pervasive monitoring-a standardised sensor web approach for intelligent sensing infrastructures. Sensors 2010, 10, 11440–11467.

- Gross, N. The Earth will don an electronic skin. BusinessWeek Online. 30 August 1999. Available online: http://www.businessweek.com/1999/99_35/b3644024.htm (accessed on 20 February 2011).

- Paulsen, H.; Riegger, U. SensorGIS-Geodaten in Echtzeit. GIS-Bus. 2006, 8, 17–19.

- Resch, B.; Britter, R.; Ratti, C. Live urbanism-towards the senseable city and beyond. In Sustainable Architectural Design: Impacts on Health; Pardalos, P., Rassia, S., Eds.; 2011; in press.

- Chong, C.; Kumar, S. Sensor networks: Evolution, opportunities, and challenges. Proc. IEEE 2003, 91, 1247–1256.

- Lesser, V.; Ortiz, C.; Tambe, M. Distributed Sensor Networks: A Multiagent Perspective; Springer: Berlin, Germany, 2003.

- De Wolf, T.; Holvoet, T. Towards Autonomic Computing: Agent-Based Modelling, Dynamical Systems Analysis, and Decentralised Control. In Proceedings of the IEEE International Conference on Industrial Informatics, 2003, INDIN 2003, Banff, AB, Canada, 21–24 August 2003; pp. 470–479.

- Botts, M.; Robin, A.; Davidson, J.; Simonis, I. OpenGIS Sensor Web Enablement Architecture; OpenGIS Discussion Paper OGC 06-021r1; Version 1.0; Open Geospatial Consortium Inc.: Wayland, MA, USA, 4 March 2006.

- Goodchild, M.F. Communicating geographic information in a digital age. Ann. Assoc. Am. Geogr. 2000, 90, 344–355.

- Gore, A. The digital earth: Understanding our planet in the 21st Century. Photogramm. Eng. Remote Sensing 1999, 65, 528–530.

- Craglia, M.; Goodchild, M.F.; Annoni, A.; Camara, G.; Gould, M.; Kuhn, W.; Mark, D.M.; Masser, I.; Maguire, D.J.; Liang, S.; et al. Next-generation digital earth. A position paper from the Vespucci initiative for the advancement of geographic information science. Int. J. Spat. Data Infrastruct. Res. 2008, 3, 146–167.

- Goodchild, M.F. Citizens as voluntary sensors: Spatial data infrastructure in the world of Web 2.0. Int. J. Spat. Data Infrastruct. Res. 2007, 2, 24–32.

- Mesev, V. Identification and characterisation of urban building patterns using IKONOS imagery and point-based postal data. Comput. Environ. Urban Syst. 2005, 29, 541–557.

- Faust, N.L.; Anderson, W.H.; Star, J.L. Geographic information systems and remote sensing future computing environment. Photogramm. Eng. Remote Sensing 1991, 57, 655–668.

- Kimes, D.S.; Harrison, P.R.; Ratcliffe, P.A. A knowledge-based expert system for inferring vegetation characteristics. Int. J. Remote Sens. 1991, 12, 1987–2020.

- Steiniger, S.; Hay, G.J. Free and open source geographic information tools for landscape ecology: A review. Ecol. Inf. 2009, 4, 183–195.

- Gao, J. Integration of GPS with remote sensing and GIS: Reality and prospect. Photogramm. Eng. Remote Sensing 2002, 68, 447–453.

- Karimi, H.A.; Khattak, A.H.; Hummer, J.E. Evaluation of mobile mapping systems for roadway data collection. J. Comput. Civil Eng. 2000, 14, 168–173.

- Xue, Y.; Cracknell, A.P.; Guo, H.D. Telegeoprocessing: The integration of remote sensing, geographic information system (GIS), global positioning system (GPS) and telecommunication. Int. J. Remote Sens. 2002, 23, 1851–1893.

- Sabins, F.F. Remote Sensing: Principles and Interpretation; W.H. Freeman: New York, NY, USA, 1987.

- Torrens, P.M. Wi-Fi geographies. Ann. Assoc. Am. Geogr. 2008, 98, 59–84.

- Goodchild, M.F. Citizens as sensors: The world of volunteered geography. GeoJournal 2007, 69, 211–221.

- Resch, B.; Mittlboeck, M.; Kranzer, S.; Sagl, G.; Heistracher, T.; Blaschke, T. “People as sensors” mittels personalisiertem geo-tracking. In Angewandte Geoinformatik; Strobl, J., Blaschke, T., Griesebner, G., Eds.; Wichmann Verlag: Heidelberg, Germany, 2011; pp. 682–687.

- Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high resolution remote sensing imagery. Photogramm. Eng. Remote Sensing 2006, 72, 799–811.

- Liu, D.; Kelly, M.; Gong, P. A spatial–temporal approach to monitoring forest disease spread using multi-temporal high spatial resolution imagery. Remote Sens. Environ. 2006, 101, 167–180.

- Chen, K. Correlations between Census Dwelling Data and Remotely Sensed Data. In Proceedings of the SIRC 98: 10th Annual Colloquium of the Spatial Information Research Centre, Dunedin, New Zealand, 16–19 November 1998.

- Banzhaf, E.; Kindler, A.; Haase, D. Monitoring and Modelling Indicators for Urban Shrinkage—The City of Leipzig, Germany. In Proceedings of the Second Workshop of the EARSeL SIG on Remote Sensing of Land Use and Land Cover, Bonn, Germany, 28–30 September 2006.

- Hollands, R.G. Will the real smart city please stand up? Intelligent, progressive or entrepreneurial? City 2008, 12, 303–320.

- Giffinger, R.; Fertner, C.; Kramar, H.; Meijers, E.; Pichler-Milanović, N. Smart Cities: Ranking of European Medium-Sized Cities; Final Report; Centre of Regional Science, Vienna UT: Vienna, Austria, October 2007; Available online: www.smart-cities.eu/download/smart_cities_final_report.pdf (accessed on 9 August 2011).

- Partridge, H. Developing a Human Perspective to the Digital Divide in the Smart City. In Presented at ALIA 2004, Brisbane, QLD, Australia, 21–24 September 2004; Available online: http://eprints.qut.edu.au/1299/1/partridge.h.2.paper.pdf (accessed on 9 August 2011).

- Calabrese, F.; Ratti, C. Real time Rome. Netw. Commun. Stud. 2006, 20, 247–258.

- Reades, J.; Calabrese, F.; Sevtsuk, A.; Ratti, C. Cellular census: Explorations in urban data collection. Pervasive Comput. 2007, 6, 30–38.

- Dierig, S.; Lachmund, J.; Mendelsohn, A. Science and the City; Workshop, Max Planck Institute for the History of Science: Berlin, Germany, 1–3 December 2000; Available online: http://vlp.mpiwg-berlin.mpg.de/exp/dierig/science_city.html (accessed on 10 September 2010).

- Netherlands Organization for Scientific Research. Urban Sciences. Interdisciplinary Research Programme on Urbanization & Urban Culture in The Netherlands. 2007. Available online: http://www.urbansciences.eu (accessed on 26 August 2010).

- Resch, B. Live Geography—Standardised Geo-sensor Networks for Real-Time Monitoring in Urban Environments. Ph.D. Thesis, University of Salzburg, Salzburg, Austria, December 2009.

- Resch, B.; Mittlboeck, M.; Girardin, F.; Britter, R.; Ratti, C. Live geography-embedded sensing for standardised urban environmental monitoring. Int. J. Adv. Syst. Meas. 2009, 2, 156–167.

- Resch, B.; Lippautz, M.; Mittlboeck, M. Pervasive monitoring-a standardised sensor web approach for intelligent sensing infrastructures. Sensors 2010, 10, 11440–11467.

- Nittel, S. A survey of geosensor networks: Advances in dynamic environmental monitoring. Sensors 2009, 9, 5664–5678.

- Ferscha, A.; Vogl, S.; Beer, W. Context sensing, aggregation, representation and exploitation in wireless networks. Sci. Int. J. Parallel Distrib. Comput. 2005, 6, 77–81.

- Goodchild, M.F. Citizens as sensors: The world of volunteered geography. GeoJournal 2007, 69, 211–221.

- Campbell, A.T.; Eisenman, S.B.; Lane, N.D.; Miluzzo, E.; Peterson, R. People-Centric Urban Sensing. In Proceedings of the 2nd ACM/IEEE Annual International Wireless Internet Conference, Boston, MA, USA, 2–5 August 2006.

- Oke, T.R. Initial Guidance to Obtain Representative Meteorological Observations at Urban Sites; IOM Report No.81, WMO/TD. No. 1250; World Meteorological Organization: Geneva, Switzerland, 2006.

- Anonymous. Reviewer B, comments to the second submission of Manuscript ID: remotesensing-8447-Blaschke-at. 15 June 2011.

© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Blaschke, T.; Hay, G.J.; Weng, Q.; Resch, B. Collective Sensing: Integrating Geospatial Technologies to Understand Urban Systems—An Overview. Remote Sens. 2011, 3, 1743-1776. https://doi.org/10.3390/rs3081743
