A Practical Framework for Estimating Façade Opening Rates of Rural Buildings Using Real-Scene 3D Models Derived from Unmanned Aerial Vehicle Photogrammetry

Abstract

1. Introduction

- We propose a practical workflow for estimating FORs using real-scene 3D models derived from UAV photogrammetry, effectively avoiding the projection distortions inherent in image-based FOR estimation.

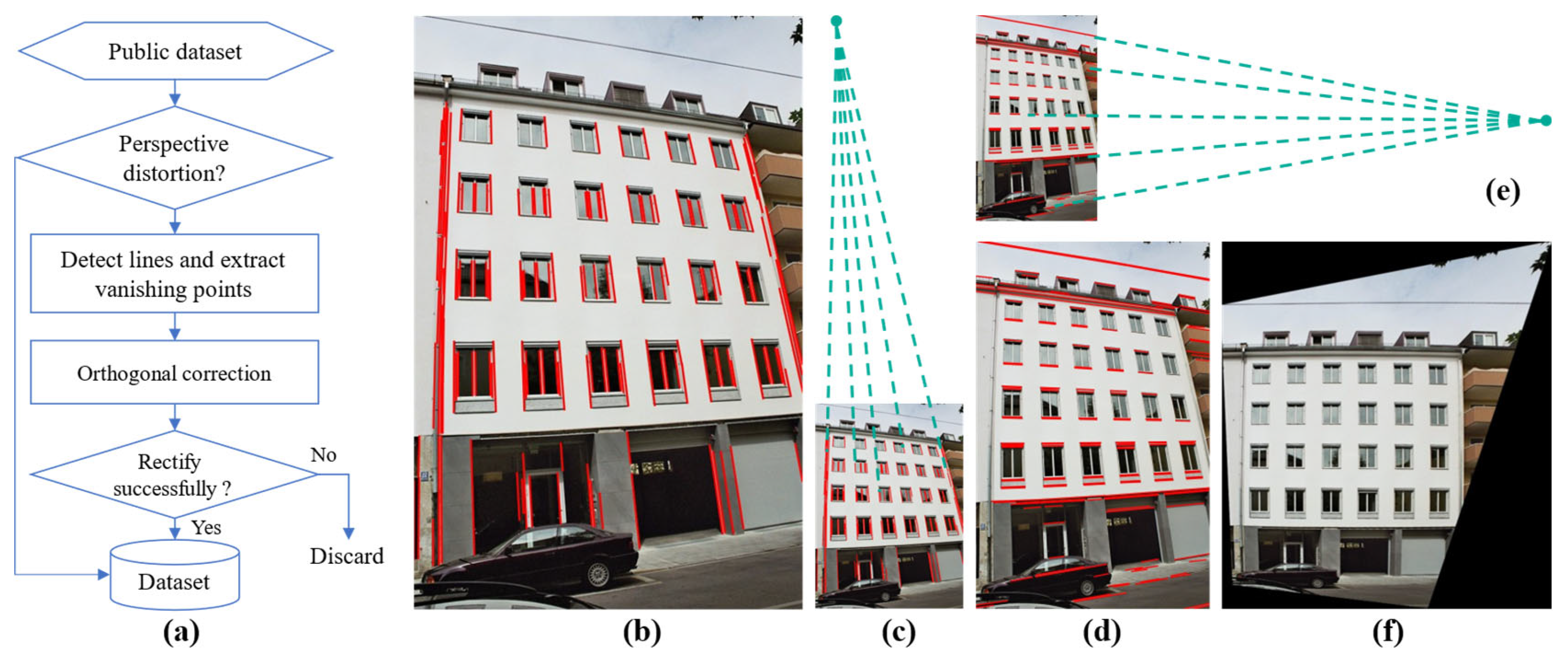

- By applying vanishing point correction to open-source street-view images so that their style matches that of front-view images, we make street-view samples more effective for pre-training the extraction of opening areas from rural building façades.

- We introduce an attention module within a CNN learning framework to improve the accuracy of door and window extraction from façade images.

2. Related Work

2.1. Façade Safety Risk Assessment

2.2. Façade Opening Extraction

3. Materials and Methods

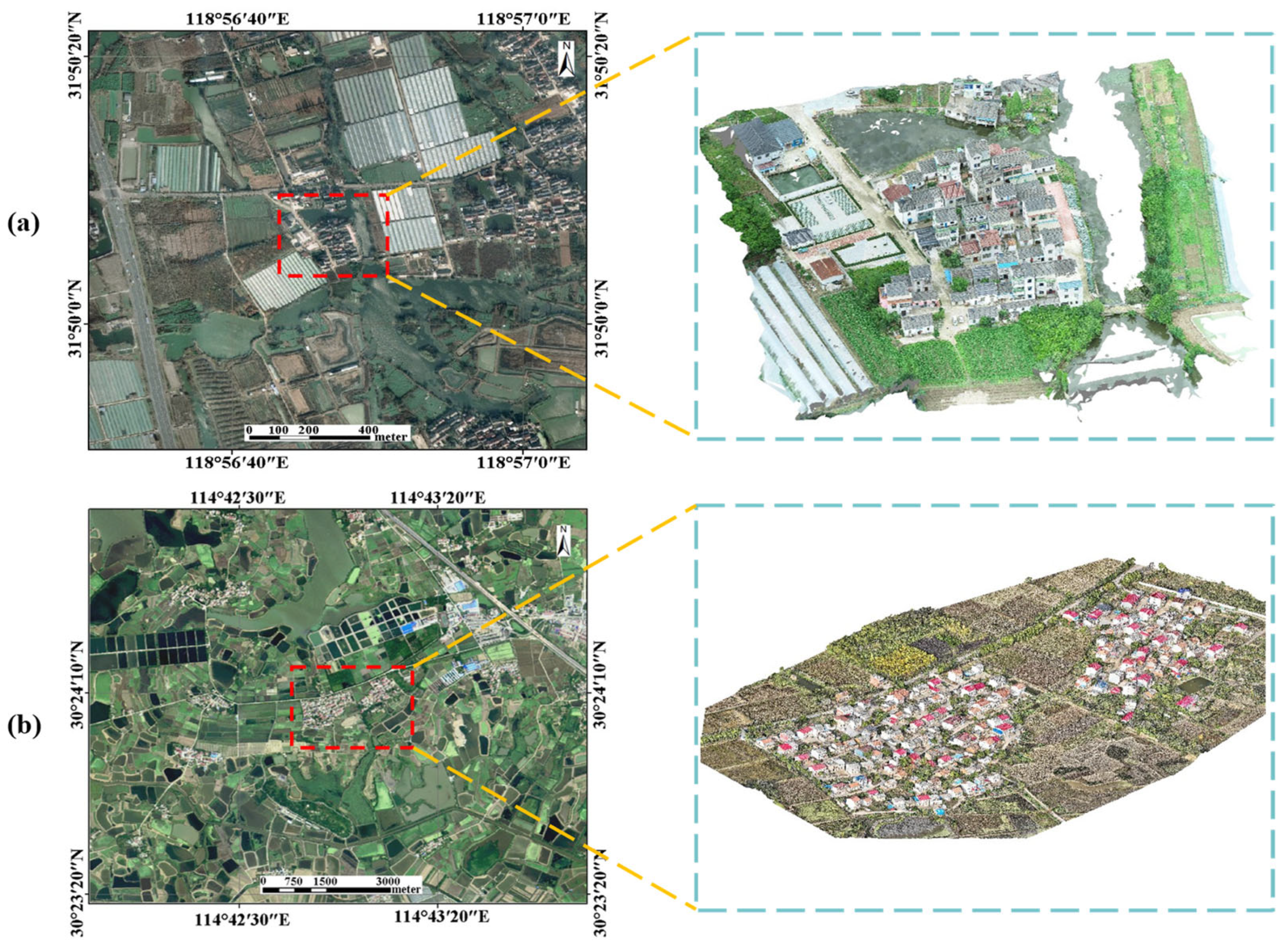

3.1. Datasets

3.2. Methods

3.2.1. Front-View Façade Image Generation

3.2.2. Alignment Between Street-View and Front-View Façade Images

3.2.3. Improved Deep Learning Network for Façade Opening Extraction

3.2.4. Wall Area Extraction and FOR Estimation
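
As a rough illustration of the quantity estimated in this step, the sketch below computes a FOR from a per-pixel label grid of a front-view façade image. It assumes that the FOR is the opening area (doors plus windows) divided by the total façade area (wall plus openings), and that pixel counts are proportional to area because the front view is free of perspective distortion. The label codes and the function name `facade_opening_rate` are hypothetical and are not taken from the paper.

```python
# Hypothetical label codes for a segmented front-view facade image.
BACKGROUND, WALL, WINDOW, DOOR = 0, 1, 2, 3


def facade_opening_rate(labels):
    """Return opening pixels / (wall + opening pixels) for a 2D label grid.

    Assumes a front-view (orthographic) image, so pixel counts are
    proportional to real-world area.
    """
    opening = 0
    wall = 0
    for row in labels:
        for value in row:
            if value in (WINDOW, DOOR):
                opening += 1
            elif value == WALL:
                wall += 1
    facade = opening + wall
    if facade == 0:
        raise ValueError("label grid contains no facade pixels")
    return opening / facade


# Toy 4 x 5 facade: 9 wall pixels, 1 window pixel, 2 door pixels.
example = [
    [0, 1, 1, 1, 0],
    [0, 1, 2, 1, 0],
    [0, 1, 3, 1, 0],
    [0, 1, 3, 1, 0],
]
rate = facade_opening_rate(example)  # 3 opening pixels out of 12 facade pixels
```

If the denominator is instead defined as the wall area excluding openings, only the `facade` line needs to change.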

4. Results

4.1. Baselines and Evaluation Metrics

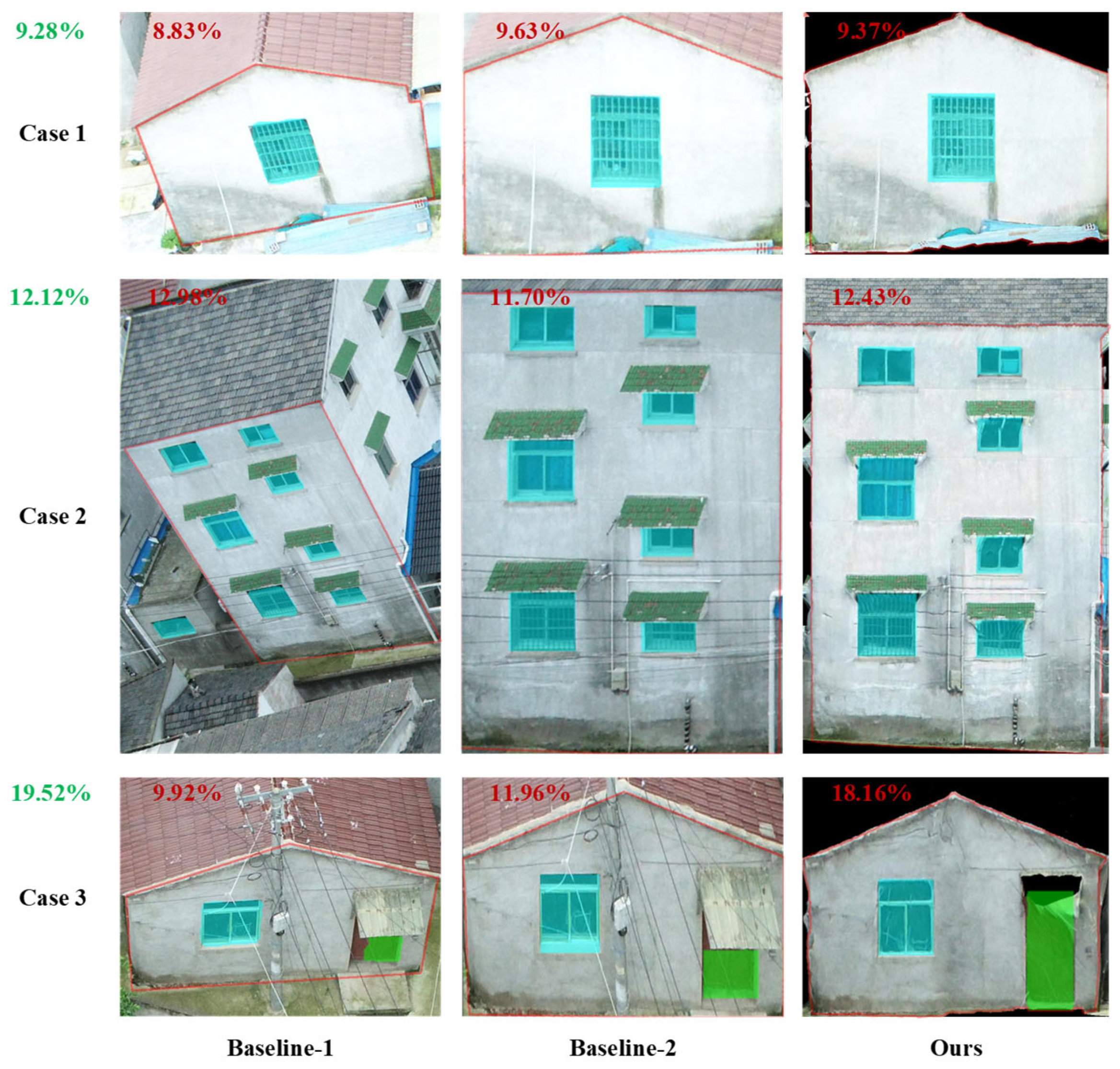

- Baseline 1: Raw Image Extraction.

- Baseline 2: Homography Correction.
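
Baseline 2 relies on homography correction. As an illustrative sketch only (not the authors' implementation), a planar homography can be estimated from four point correspondences, for example the four corners of a façade in an oblique image mapped onto a rectangle. The function names and sample coordinates below are hypothetical, and the sketch fixes h33 = 1, which assumes the image origin is not mapped to infinity.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_points(src, dst):
    """Estimate a 3x3 homography (h33 fixed to 1) from four point pairs."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences are required")
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map a 2D point through h and divide by the homogeneous coordinate."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


# Hypothetical facade corners in an oblique image and their rectified targets.
corners_oblique = [(10.0, 20.0), (90.0, 5.0), (95.0, 110.0), (5.0, 100.0)]
corners_front = [(0.0, 0.0), (100.0, 0.0), (100.0, 120.0), (0.0, 120.0)]
H = homography_from_points(corners_oblique, corners_front)
```

In practice a library routine would normally replace the hand-written solver; it is spelled out here only to keep the example self-contained.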

4.2. Implementation Details

4.3. Overall Results

4.3.1. FOR Estimation Evaluation

4.3.2. Façade Opening Extraction Evaluation

5. Discussion

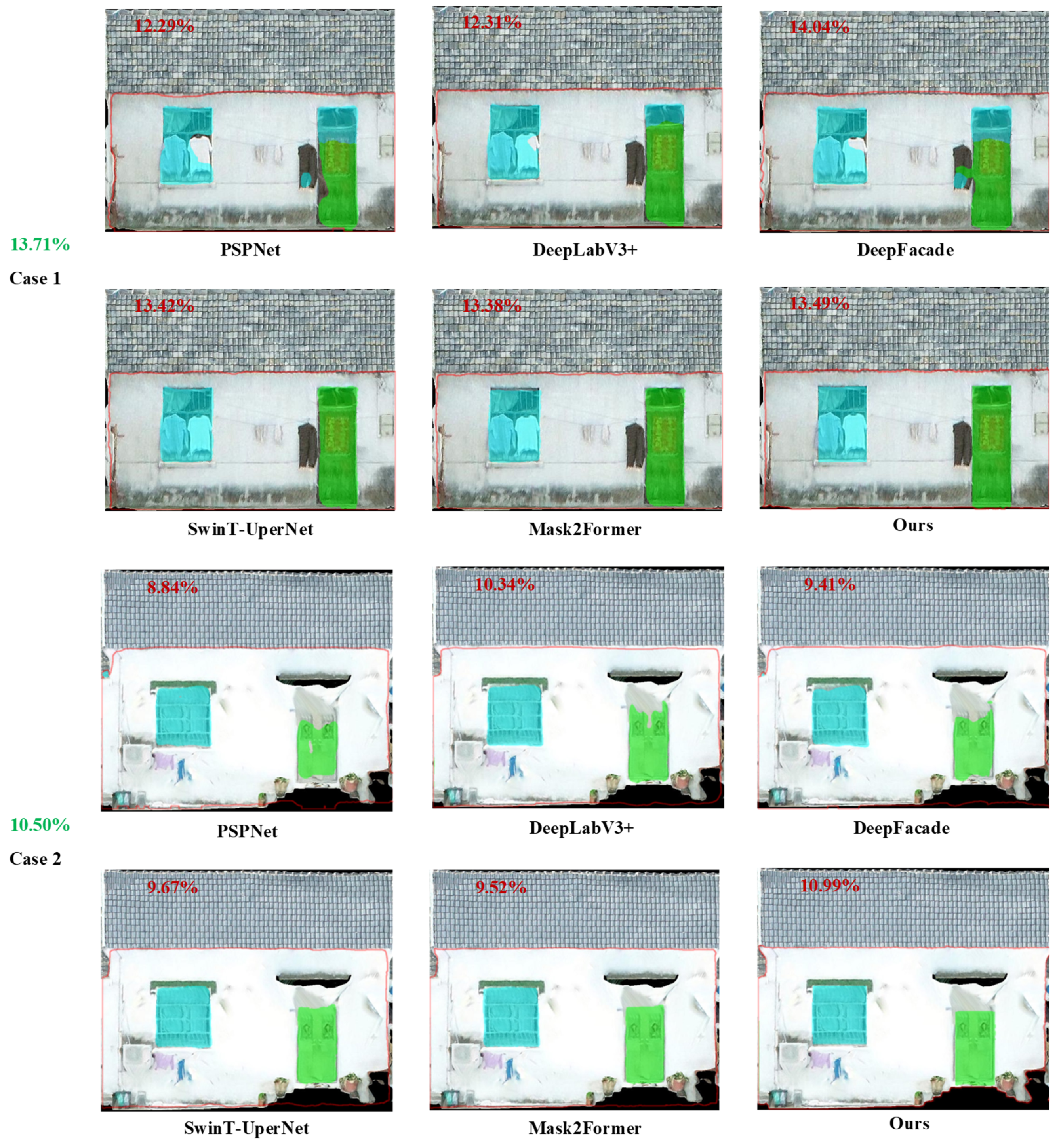

5.1. Comparison with Other Deep Learning Networks

5.2. Effectiveness of the Pretraining with Style-Adapted Publicly Available Datasets

5.3. Applicability

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Input | Nanjing MAE | Nanjing MRE | Ezhou MAE | Ezhou MRE |
|---|---|---|---|---|
| Baseline-1 | 0.062 | 34% | 0.058 | 35% |
| Baseline-2 | 0.029 | 17% | 0.032 | 16% |
| Ours | 0.020 | 12% | 0.019 | 11% |
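
The MAE and MRE columns can be read as follows, under the usual definitions of mean absolute error and mean relative error over a set of façades; the exact formulas used in the paper are an assumption here. The function names and sample values are hypothetical and are not data from the study.

```python
def mean_absolute_error(estimates, references):
    """Average of |estimate - reference| over all facades."""
    if not references or len(estimates) != len(references):
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(e - r) for e, r in zip(estimates, references))
    return total / len(references)


def mean_relative_error(estimates, references):
    """Average of |estimate - reference| / reference over all facades."""
    if not references or len(estimates) != len(references):
        raise ValueError("inputs must be non-empty and of equal length")
    if any(r <= 0 for r in references):
        raise ValueError("reference FOR values must be positive")
    total = sum(abs(e - r) / r for e, r in zip(estimates, references))
    return total / len(references)


# Made-up FOR values for two facades, for illustration only.
estimated_for = [0.20, 0.30]
reference_for = [0.25, 0.30]
mae = mean_absolute_error(estimated_for, reference_for)
mre = mean_relative_error(estimated_for, reference_for)
```

Because the MRE divides by the reference value, façades with small FORs weigh more heavily in it than in the MAE.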

| Study Area | Input | Window PRE | Window REC | Window IOU | Door PRE | Door REC | Door IOU |
|---|---|---|---|---|---|---|---|
| Nanjing | Baseline-1 | 0.88 | 0.86 | 0.77 | 0.70 | 0.16 | 0.15 |
| Nanjing | Baseline-2 | 0.84 | 0.93 | 0.79 | 0.92 | 0.39 | 0.38 |
| Nanjing | Ours | 0.95 | 0.93 | 0.89 | 0.86 | 0.71 | 0.64 |
| Ezhou | Baseline-1 | 0.78 | 0.84 | 0.68 | 0.72 | 0.15 | 0.16 |
| Ezhou | Baseline-2 | 0.91 | 0.89 | 0.82 | 0.89 | 0.38 | 0.36 |
| Ezhou | Ours | 0.94 | 0.92 | 0.88 | 0.85 | 0.71 | 0.63 |
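
For reference, the PRE, REC, and IOU columns can be computed per class from a predicted and a ground-truth binary mask. The sketch below assumes the standard pixel-wise definitions of precision, recall, and intersection over union; whether the paper evaluates per pixel or per object is not stated in this section. The function name and toy masks are hypothetical.

```python
def mask_metrics(predicted, truth):
    """Return (precision, recall, iou) for two equally sized 0/1 masks."""
    tp = fp = fn = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1  # predicted opening, truly opening
            elif p and not t:
                fp += 1  # predicted opening, actually not
            elif t and not p:
                fn += 1  # missed opening pixel
    # Ratios with an empty denominator are reported as 0.0 by convention here.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return precision, recall, iou


# Toy masks: 2 true positives, 1 false positive, 1 false negative.
predicted_mask = [[1, 1, 0], [0, 1, 0]]
truth_mask = [[1, 0, 0], [0, 1, 1]]
precision, recall, iou = mask_metrics(predicted_mask, truth_mask)
```

IOU penalizes both false positives and false negatives, so it can never exceed either precision or recall for the same masks, which matches the pattern in the table.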

| Study Area | Method | Window PRE | Window REC | Window IOU | Door PRE | Door REC | Door IOU | Wall PRE | Wall REC | Wall IOU | FOR MAE | FOR MRE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Nanjing | PSPNet | 0.94 | 0.92 | 0.87 | 0.88 | 0.53 | 0.49 | 0.97 | 0.96 | 0.93 | 0.033 | 14% |
| Nanjing | DeepLabV3+ | 0.95 | 0.91 | 0.87 | 0.84 | 0.70 | 0.62 | 0.94 | 0.95 | 0.92 | 0.021 | 13% |
| Nanjing | DeepFacade | 0.92 | 0.93 | 0.86 | 0.85 | 0.59 | 0.54 | 0.97 | 0.96 | 0.94 | 0.031 | 14% |
| Nanjing | SwinT-UperNet | 0.93 | 0.93 | 0.87 | 0.88 | 0.64 | 0.62 | 0.97 | 0.96 | 0.93 | 0.023 | 13% |
| Nanjing | Mask2Former | 0.95 | 0.92 | 0.88 | 0.86 | 0.69 | 0.63 | 0.96 | 0.96 | 0.92 | 0.021 | 13% |
| Nanjing | Ours | 0.95 | 0.93 | 0.89 | 0.86 | 0.71 | 0.64 | 0.98 | 0.96 | 0.94 | 0.020 | 12% |
| Ezhou | PSPNet | 0.92 | 0.94 | 0.87 | 0.86 | 0.58 | 0.51 | 0.95 | 0.95 | 0.92 | 0.032 | 13% |
| Ezhou | DeepLabV3+ | 0.95 | 0.92 | 0.87 | 0.85 | 0.69 | 0.61 | 0.96 | 0.96 | 0.92 | 0.022 | 13% |
| Ezhou | DeepFacade | 0.93 | 0.92 | 0.86 | 0.87 | 0.68 | 0.60 | 0.96 | 0.97 | 0.93 | 0.029 | 14% |
| Ezhou | SwinT-UperNet | 0.95 | 0.93 | 0.87 | 0.85 | 0.70 | 0.62 | 0.97 | 0.97 | 0.94 | 0.022 | 13% |
| Ezhou | Mask2Former | 0.94 | 0.92 | 0.87 | 0.86 | 0.68 | 0.61 | 0.97 | 0.95 | 0.92 | 0.024 | 13% |
| Ezhou | Ours | 0.94 | 0.92 | 0.88 | 0.85 | 0.71 | 0.63 | 0.96 | 0.96 | 0.93 | 0.019 | 11% |

| Study Area | Method | Window PRE | Window REC | Window IOU | Door PRE | Door REC | Door IOU | Wall PRE | Wall REC | Wall IOU | FOR MAE | FOR MRE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Nanjing | w/o P | 0.93 | 0.84 | 0.80 | 0.64 | 0.64 | 0.47 | 0.98 | 0.96 | 0.94 | 0.039 | 22% |
| Nanjing | P | 0.92 | 0.91 | 0.85 | 0.83 | 0.67 | 0.59 | 0.98 | 0.96 | 0.94 | 0.032 | 14% |
| Nanjing | P + R | 0.95 | 0.93 | 0.89 | 0.86 | 0.71 | 0.64 | 0.98 | 0.96 | 0.94 | 0.020 | 12% |
| Ezhou | w/o P | 0.91 | 0.83 | 0.78 | 0.67 | 0.65 | 0.49 | 0.94 | 0.94 | 0.90 | 0.041 | 23% |
| Ezhou | P | 0.94 | 0.89 | 0.84 | 0.84 | 0.66 | 0.59 | 0.94 | 0.94 | 0.90 | 0.028 | 13% |
| Ezhou | P + R | 0.94 | 0.92 | 0.87 | 0.85 | 0.71 | 0.63 | 0.94 | 0.94 | 0.90 | 0.019 | 11% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Niu, Z.; Xi, K.; Liao, Y.; Tao, P.; Ke, T. A Practical Framework for Estimating Façade Opening Rates of Rural Buildings Using Real-Scene 3D Models Derived from Unmanned Aerial Vehicle Photogrammetry. Remote Sens. 2025, 17, 1596. https://doi.org/10.3390/rs17091596
