Rule-Based Multi-Task Deep Learning for Highly Efficient Rice Lodging Segmentation

Abstract

1. Introduction

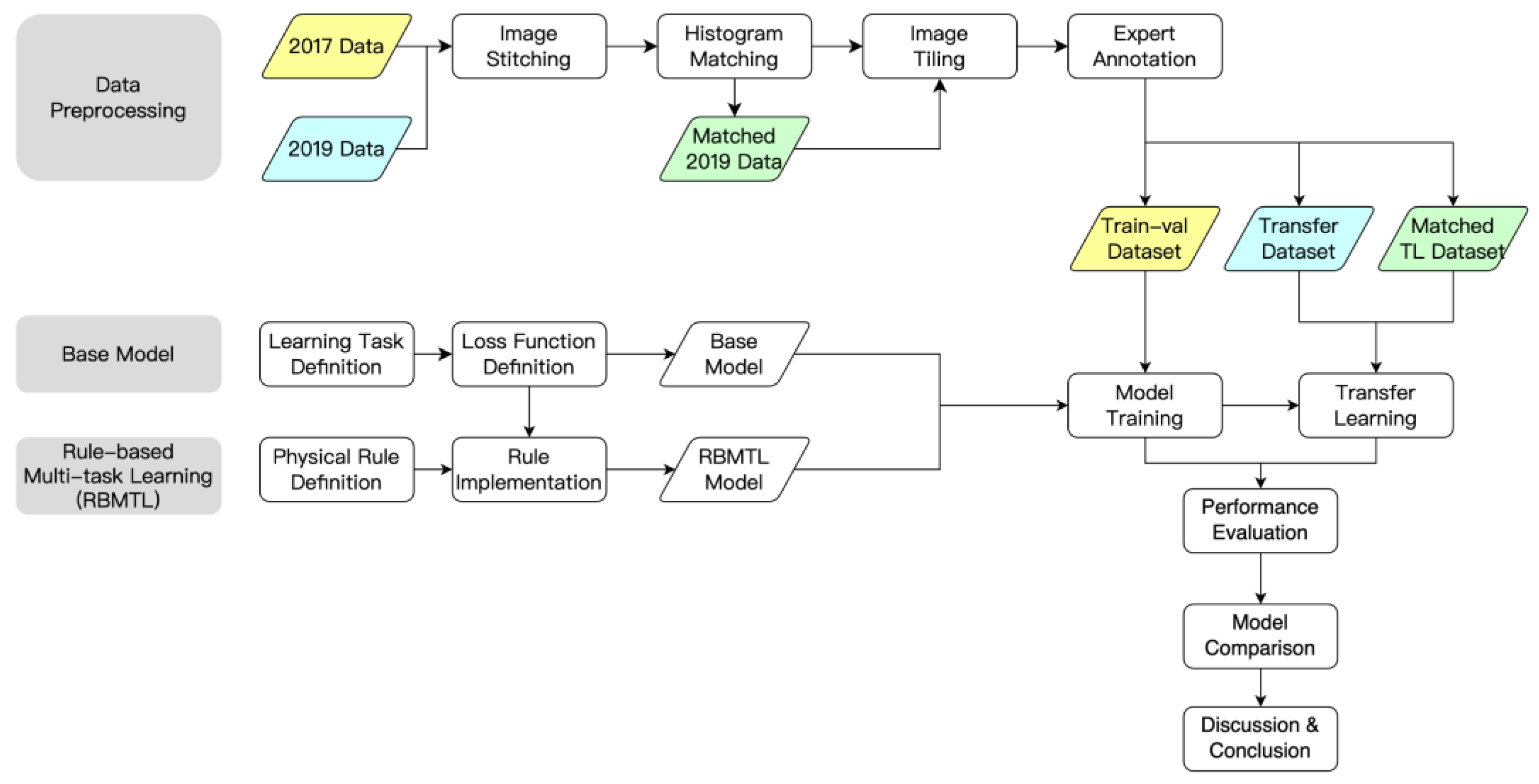

2. Materials and Methods

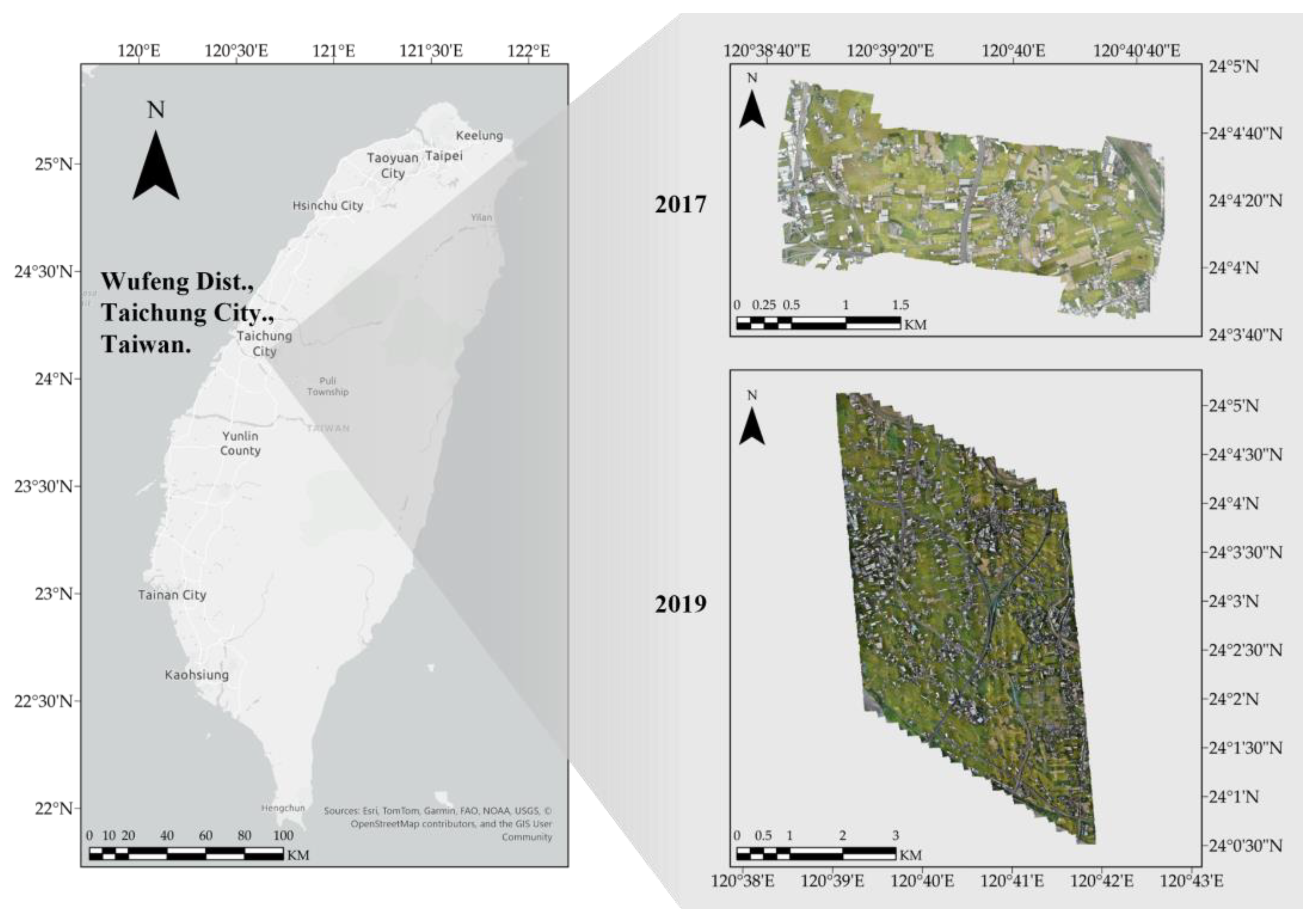

2.1. Study Site and Dataset

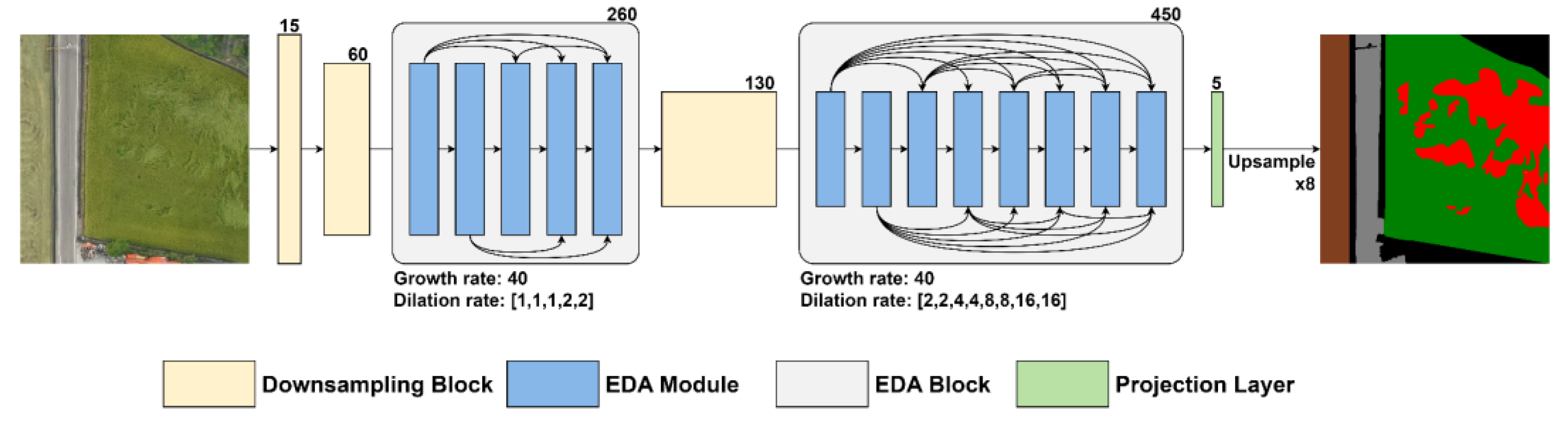

2.2. Semantic Segmentation Model

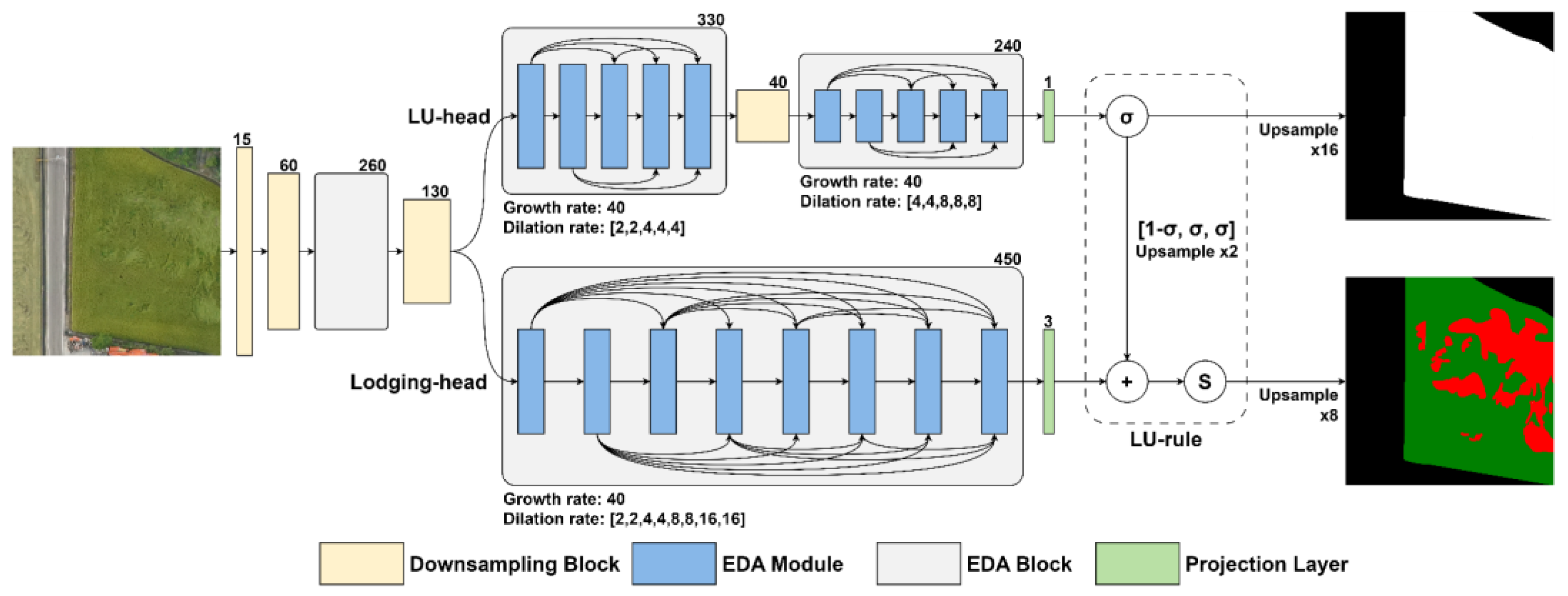

2.3. Rule-Based Learning

2.3.1. Land-Use Branch (LU-Head)

2.3.2. Lodging Branch (Lodging-Head)

2.4. Multi-Task Learning

2.4.1. Binary Cross Entropy (BCE) Loss

2.4.2. Categorical Cross Entropy (CCE) Loss

2.4.3. Categorical Focal Loss
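
As a point of reference for this subsection, the following is a minimal per-pixel sketch of a categorical focal loss in the commonly used form of Lin et al. It is not confirmed to be the exact formulation or parameterization used in this work: the function name `categorical_focal_loss`, the defaults `gamma=2.0` and `alpha=0.25`, and the clipping constant `eps` are illustrative assumptions. Setting `gamma=0` and `alpha=1` reduces the expression to the categorical cross entropy of Section 2.4.2.

```python
import math

def categorical_focal_loss(y_true, y_prob, gamma=2.0, alpha=0.25, eps=1e-7):
    """Focal loss for one pixel.

    y_true: one-hot list of class indicators.
    y_prob: predicted class probabilities (same length, summing to 1).
    gamma, alpha, eps: assumed illustrative values, not taken from the paper.
    """
    loss = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        # (1 - p) ** gamma down-weights classes that are already well predicted.
        loss += -alpha * y * (1.0 - p) ** gamma * math.log(p)
    return loss

# With gamma=0 and alpha=1 the loss is the categorical cross entropy.
cce = categorical_focal_loss([0, 1, 0], [0.2, 0.7, 0.1], gamma=0.0, alpha=1.0)

# A confidently correct pixel contributes far less than a misclassified one.
easy = categorical_focal_loss([0, 1, 0], [0.05, 0.9, 0.05])
hard = categorical_focal_loss([0, 1, 0], [0.6, 0.3, 0.1])
print(cce, easy, hard)
```

The modulating factor is what makes this loss attractive for imbalanced segmentation classes: abundant, easily classified pixels are suppressed so that rarer, harder pixels dominate the gradient.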

2.4.4. Total Loss

2.5. Evaluation Metrics

3. Results and Discussions

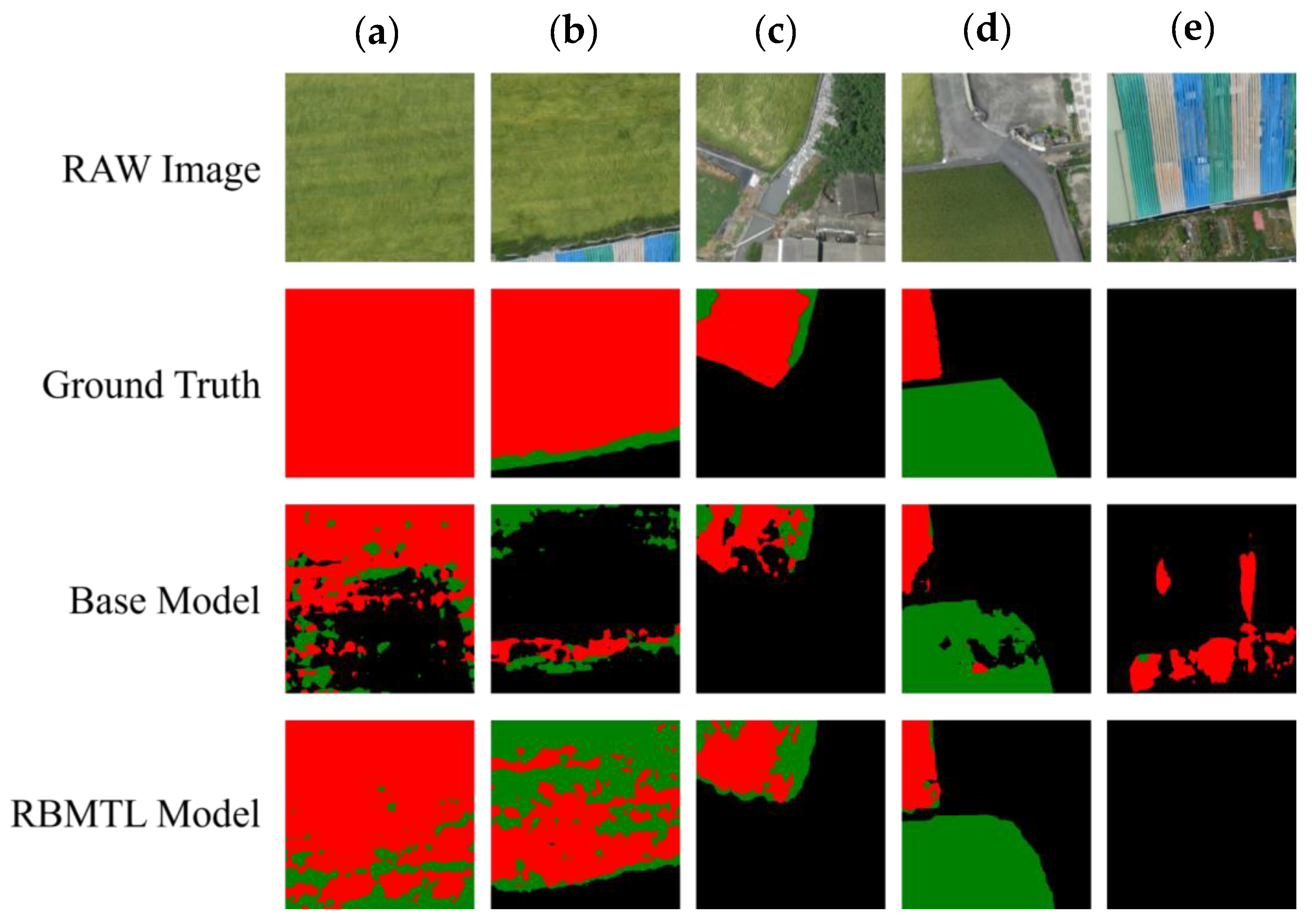

3.1. Training and Evaluation on the 2017 Dataset

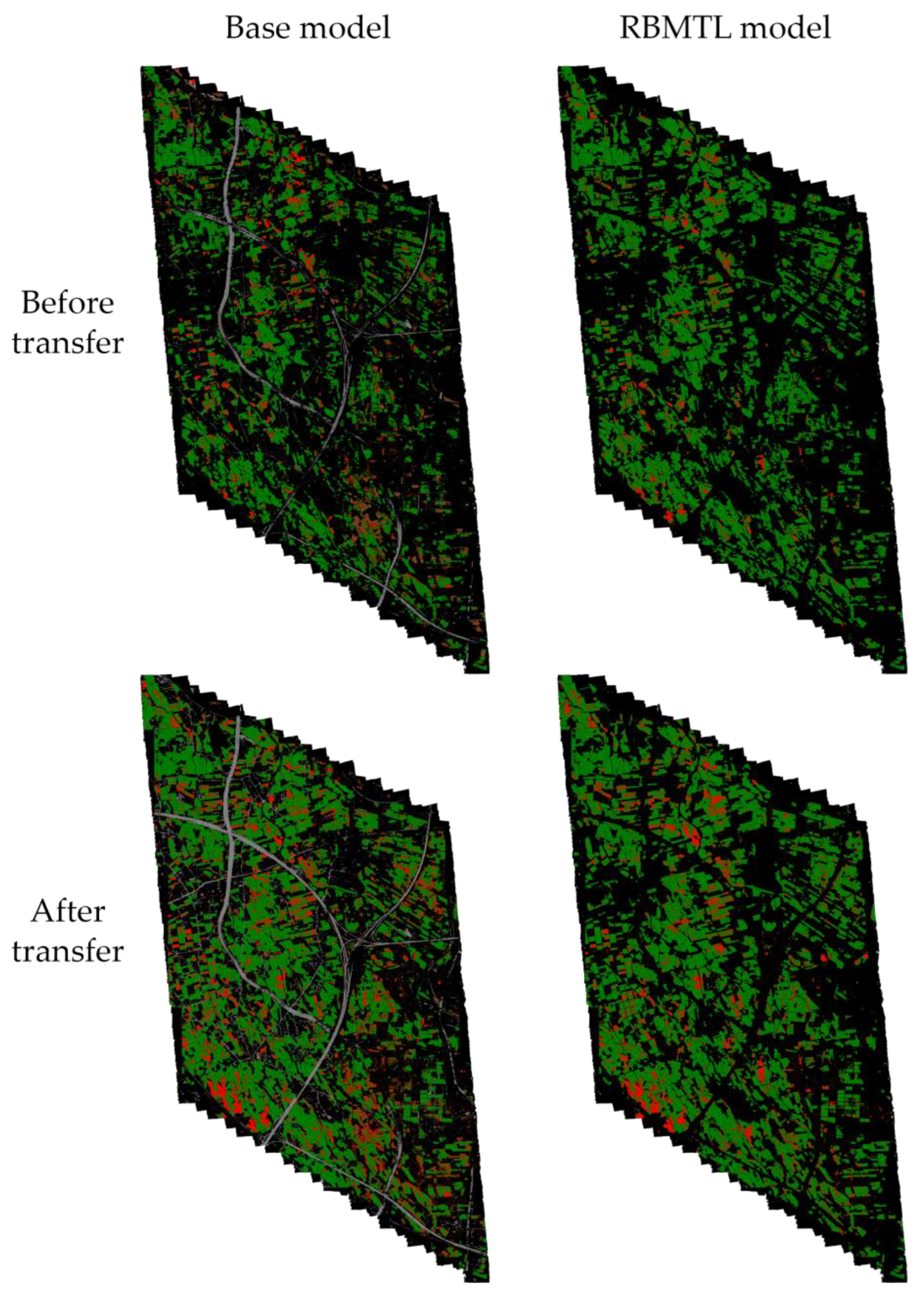

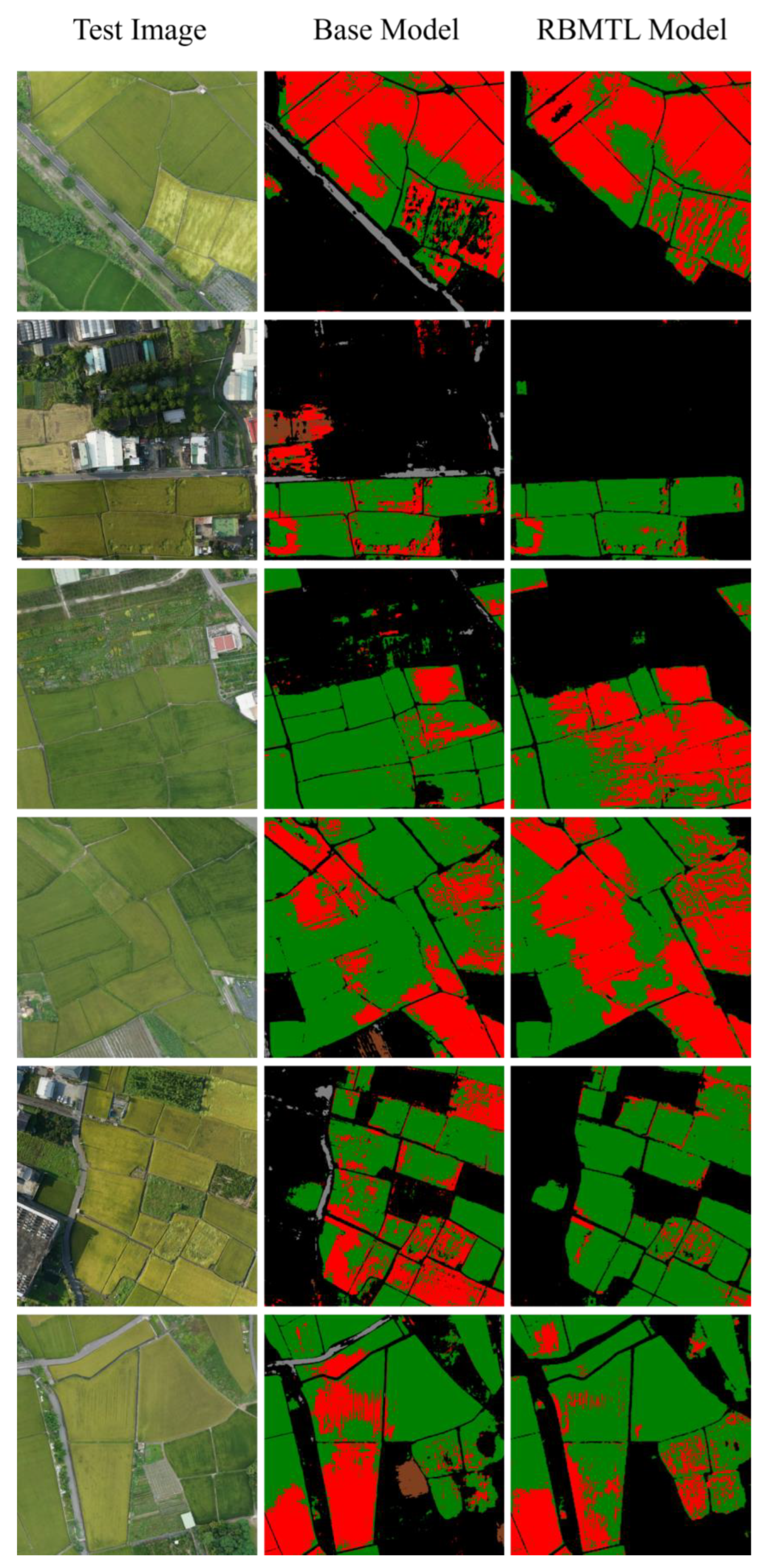

3.2. Evaluation of the 2019 Dataset

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Acquisition Date | 8 June 2017 | 8 June 2017 | 8 June 2017 | 31 May 2019 |
|---|---|---|---|---|
| Camera model | Sony QX100 | Sony QX100 | Sony QX100 | Sony α7RII |
| Orthomosaic size (width × height) (pixels) | 46,343 × 25,658 | 46,343 × 25,658 | 46,343 × 25,658 | 103,258 × 179,684 |
| Flight height (m) | 230 | 230 | 230 | 215 |
| Coverage area (ha) | 430 | 430 | 430 | 2300 |
| Spatial resolution (cm/pixel) | 5.3 | 5.3 | 5.3 | 4.7 |
| Tile size (width × height) (pixels) | 480 × 480 | 480 × 480 | 1440 × 1440 | 4096 × 4096 |
| Number of valid tiles | 2082 | 694 | 72 | 666 |
| Dataset type | train | val | test | test |
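
The tile and batch figures in this table can be related with simple integer arithmetic. The sketch below assumes that an orthomosaic is cut into a regular, non-overlapping grid and that partial tiles at the right and bottom edges are discarded; the criterion that separates valid tiles from the rest is not stated here, so only the grid size is computed. The helper names `full_tiles` and `tile_grid` are hypothetical.

```python
def full_tiles(extent_px: int, tile_px: int) -> int:
    """Number of whole tiles that fit along one image axis (edge remainder dropped)."""
    return extent_px // tile_px

def tile_grid(width_px: int, height_px: int, tile_px: int):
    """Return (columns, rows, total) for a non-overlapping square tiling."""
    cols = full_tiles(width_px, tile_px)
    rows = full_tiles(height_px, tile_px)
    return cols, rows, cols * rows

# 2017 orthomosaic (46,343 x 25,658 pixels) cut into 480 x 480 tiles.
cols, rows, total = tile_grid(46_343, 25_658, 480)
print(cols, rows, total)  # 96 53 5088

# 2082 training tiles with a batch size of 32 give 65 full batches per epoch.
steps_per_epoch = 2082 // 32
print(steps_per_epoch)  # 65
```

Under these assumptions, the 2776 valid training and validation tiles are a subset of a 5088-tile grid, and the 65 full batches agree with the epoch steps listed among the training hyperparameters.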

| Camera Model | Sony QX100 | Sony α7RII |
|---|---|---|
| Pixel size (µm) | 2.4 | 4.5 |
| Focal length (mm) | 10.4 | 20.0 |
| Image resolution (width × height) (pixels) | 5472 × 3648 | 7952 × 5304 |
| Dynamic range (bits) | 8 | |
| Spatial resolution (200 m above ground) (cm/pixel) | 4.64 | 4.53 |
| Sensor size (mm) | 13.2 × 8.8 | 36.0 × 24.0 |
| Field of view (horizontal, vertical) (degrees) | 64.8, 45.9 | 83.9, 61.9 |
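
The spatial resolution and field-of-view rows follow from the sensor size, image width, and focal length in the same table. The sketch below reproduces them under the assumption of an ideal, nadir-pointing pinhole camera; the function names are hypothetical. In each field-of-view pair, the larger angle corresponds to the longer sensor side.

```python
import math

def ground_sample_distance_cm(sensor_width_mm, image_width_px, focal_length_mm, height_m):
    """Ground footprint of one pixel (cm) for a nadir-pointing pinhole camera."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return pixel_pitch_mm / focal_length_mm * height_m * 100.0

def field_of_view_deg(sensor_side_mm, focal_length_mm):
    """Full angle of view (degrees) subtended by one sensor side."""
    return math.degrees(2.0 * math.atan(sensor_side_mm / (2.0 * focal_length_mm)))

# Values copied from the camera table above.
cameras = {
    "Sony QX100": {"sensor_mm": (13.2, 8.8), "image_px": (5472, 3648), "focal_mm": 10.4},
    "Sony α7RII": {"sensor_mm": (36.0, 24.0), "image_px": (7952, 5304), "focal_mm": 20.0},
}

results = {}
for name, cam in cameras.items():
    gsd = ground_sample_distance_cm(
        cam["sensor_mm"][0], cam["image_px"][0], cam["focal_mm"], height_m=200.0
    )
    fov_long = field_of_view_deg(cam["sensor_mm"][0], cam["focal_mm"])
    fov_short = field_of_view_deg(cam["sensor_mm"][1], cam["focal_mm"])
    results[name] = (gsd, fov_long, fov_short)
    print(f"{name}: GSD {gsd:.2f} cm/pixel at 200 m")
```

At 200 m this gives 4.64 cm/pixel for the QX100 and 4.53 cm/pixel for the α7RII, matching the table; the computed angles agree with the tabulated field-of-view values to within about 0.1 degrees.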

| Class name | Background | Normal rice | Road | Ridge | Lodging rice |
|---|---|---|---|---|---|
| HEX code | #000000 | #008000 | #808080 | #804020 | #FF0000 |
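
The five annotation colors can be regrouped into the targets of the two branches named in Section 2.3. The sketch below decodes the HEX codes from the class table and derives one label per branch, using the class names that the results tables report for the LU-head (rice paddy, background) and the Lodging-head (background, normal rice, lodging rice). The grouping itself is an assumption made for illustration: rice paddy is taken as the union of normal and lodging rice, and road and ridge are folded into background. All function names are hypothetical.

```python
# HEX codes copied from the class table.
CLASS_HEX = {
    "background": "#000000",
    "normal rice": "#008000",
    "road": "#808080",
    "ridge": "#804020",
    "lodging rice": "#FF0000",
}

def hex_to_rgb(code: str) -> tuple:
    """Convert '#RRGGBB' to an (R, G, B) tuple of integers."""
    digits = code.lstrip("#")
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))

RGB_TO_CLASS = {hex_to_rgb(code): name for name, code in CLASS_HEX.items()}
RICE_CLASSES = {"normal rice", "lodging rice"}

def lu_label(name: str) -> str:
    """Assumed land-use rule: any rice class becomes 'rice paddy'."""
    return "rice paddy" if name in RICE_CLASSES else "background"

def lodging_label(name: str) -> str:
    """Assumed lodging rule: non-rice classes collapse to 'background'."""
    return name if name in RICE_CLASSES else "background"

def split_targets(rgb_mask):
    """Map a 2D list of RGB tuples to (LU-head, Lodging-head) label masks."""
    names = [[RGB_TO_CLASS[tuple(px)] for px in row] for row in rgb_mask]
    lu = [[lu_label(n) for n in row] for row in names]
    lodging = [[lodging_label(n) for n in row] for row in names]
    return lu, lodging

# One-row mask containing each annotation color once.
mask = [[(0, 0, 0), (0, 128, 0), (128, 128, 128), (128, 64, 32), (255, 0, 0)]]
lu, lodging = split_targets(mask)
print(lu[0])
print(lodging[0])
```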

| Indices | Formula |
|---|---|
| Precision | $\mathrm{TP} / (\mathrm{TP} + \mathrm{FP})$ |
| Recall | $\mathrm{TP} / (\mathrm{TP} + \mathrm{FN})$ |
| -score | |
| -score | |
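
For readers who want to recompute the indices in this table, the sketch below uses the standard definitions based on true positives (TP), false positives (FP), and false negatives (FN), with the F-score written in its general weighted form so that `beta=1` yields the F1-score reported in the results tables. These are textbook definitions offered as an assumption about the missing formulas, and the function names are hypothetical.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that are recovered."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def f_beta(p: float, r: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall; beta=1 gives F1."""
    b2 = beta * beta
    denom = b2 * p + r
    return (1.0 + b2) * p * r / denom if denom else 0.0

# Consistency check against a reported row: lodging rice in the five-class
# results has precision 76.95 % and recall 73.42 %.
f1_lodging = f_beta(76.95, 73.42)
print(round(f1_lodging, 2))  # 75.14
```

Because the F-score is scale invariant, percentages can be passed directly, which makes it easy to check any row of the results tables.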

| Hyperparameter | Value |
|---|---|
| Training epochs $E_t$ | 50 |
| Batch size | 32 |
| Epoch steps $S_e$ | 65 |
| Optimizer | SGD |
| Scheduled learning curve | warmup + polynomial |
| Warmup epochs $E_w$ | 5 |
| Warmup learning rate $lr_{init}$ | 0.0001 |
| Target learning rate $lr_{tgt}$ | 0.4 |
| Warmup curve exponent $\alpha$ | 1.0 |
| End learning rate $lr_{end}$ | 0.0001 |
| Learning curve exponent $\beta$ | 0.8 |
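
The hyperparameters above describe a warmup followed by a polynomial decay, but the closed form of the curve is not given here. The sketch below is one plausible reading, stated as an assumption: the rate rises from the warmup learning rate to the target learning rate over the warmup epochs with exponent α, then decays to the end learning rate over the remaining epochs with exponent β. The function `learning_rate` and its per-step formulation are hypothetical.

```python
def learning_rate(step, *, steps_per_epoch=65, total_epochs=50, warmup_epochs=5,
                  lr_init=1e-4, lr_target=0.4, lr_end=1e-4, alpha=1.0, beta=0.8):
    """Assumed warmup + polynomial schedule, evaluated at a global step index."""
    warmup_steps = warmup_epochs * steps_per_epoch
    total_steps = total_epochs * steps_per_epoch
    if step < warmup_steps:
        # Warmup: polynomial ramp from lr_init to lr_target (linear when alpha=1).
        frac = step / warmup_steps
        return lr_init + (lr_target - lr_init) * frac ** alpha
    # Decay: polynomial fall from lr_target to lr_end over the remaining steps.
    decay_steps = total_steps - warmup_steps
    frac = min(step - warmup_steps, decay_steps) / decay_steps
    return lr_end + (lr_target - lr_end) * (1.0 - frac) ** beta

# Sample the schedule at the start of selected epochs.
for epoch in (0, 1, 5, 25, 50):
    print(epoch, learning_rate(epoch * 65))
```

With these values the schedule starts at 0.0001, peaks at 0.4 after 325 steps (5 epochs of 65 steps), and returns to 0.0001 at step 3250.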

| Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| Background | 93.16 | 95.91 | 94.52 |
| Normal rice | 83.99 | 88.20 | 86.04 |
| Road | 91.20 | 71.01 | 79.85 |
| Ridge | 87.85 | 82.97 | 85.34 |
| Lodging rice | 76.95 | 73.42 | 75.14 |

| Multi-Task Branch | Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| LU-head | rice paddy | 98.30 | 99.02 | 98.66 |
| | background | 99.24 | 98.69 | 98.97 |
| Lodging-head | background | 99.60 | 98.67 | 99.13 |
| | normal rice | 90.06 | 88.22 | 89.13 |
| | lodging rice | 80.10 | 86.01 | 82.95 |

| Model Variation | Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Base | background | 71.53 | 94.92 | 81.58 |
| | normal rice | 94.43 | 67.36 | 78.63 |
| | road | 81.34 | 32.59 | 46.54 |
| | ridge | 17.22 | 44.23 | 23.79 |
| | lodging rice | 56.89 | 34.08 | 42.62 |
| Base + transfer | background | 89.38 | 93.36 | 85.20 |
| | normal rice | 91.97 | 88.97 | 90.45 |
| | road | 75.79 | 62.93 | 68.76 |
| | ridge | 22.67 | 21.12 | 21.87 |
| | lodging rice | 48.81 | 54.67 | 51.57 |
| Base + hist + transfer | background | 88.11 | 94.24 | 91.07 |
| | normal rice | 92.66 | 87.51 | 90.01 |
| | road | 75.57 | 52.90 | 62.23 |
| | ridge | 18.18 | 21.61 | 19.75 |
| | lodging rice | 46.58 | 58.18 | 51.74 |

| Model Variation | Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| RBMTL | background | 89.75 | 98.77 | 94.05 |
| | normal rice | 92.10 | 81.77 | 86.83 |
| | lodging rice | 71.64 | 36.78 | 48.61 |
| RBMTL + transfer | background | 98.12 | 97.21 | 97.66 |
| | normal rice | 91.17 | 91.44 | 91.30 |
| | lodging rice | 50.83 | 55.78 | 53.19 |
| RBMTL + hist + transfer | background | 98.26 | 97.10 | 97.68 |
| | normal rice | 91.21 | 92.28 | 91.74 |
| | lodging rice | 54.38 | 57.95 | 56.11 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, M.-D.; Tseng, H.-H. Rule-Based Multi-Task Deep Learning for Highly Efficient Rice Lodging Segmentation. Remote Sens. 2025, 17, 1505. https://doi.org/10.3390/rs17091505