Abstract

Lossless image compression is vital for missions with limited data transmission bandwidth. Reducing file sizes enables faster transmission and increased scientific gains from transient events. This study compares two wavelet-based image compression algorithms, CCSDS 122.0 and JPEG 2000, used in the European Space Agency Comet Interceptor and Hera missions, respectively, in varying scenarios. The JPEG 2000 implementation is sourced from the JasPer library, whereas a custom implementation was written for CCSDS 122.0. The performance analysis for both algorithms consists of compressing simulated asteroid images in the visible and near-infrared spectral ranges. In addition, all test images were noise-filtered to study the effect of the amount of noise on both compression ratio and speed. The study finds that JPEG 2000 achieves consistently higher compression ratios and benefits from decreased noise more than CCSDS 122.0. However, CCSDS 122.0 produces comparable results faster than JPEG 2000 and is substantially less computationally complex. In contrast, JPEG 2000 allows dynamic (entropy-permitting) reduction in the bit depth of internal data structures to 8 bits, halving the memory allocation, while CCSDS 122.0 always works in 16-bit mode. These results contribute valuable knowledge about the behavioral characteristics of both algorithms and provide insight for entities planning on using either algorithm on board planetary missions.

Keywords:

image compression; CCSDS 122.0; JPEG 2000; hyperspectral; noise filtering; Didymos; Dimorphos; comet

1. Introduction

We present a performance comparison of two wavelet-based image compression algorithms applied in two forthcoming European Space Agency (ESA) space missions. The CCSDS 122.0 standard is implemented in the Comet Interceptor (CI) mission to compress Optical Periscopic Imager for Comets (OPIC) and Entire Visible Sky (EnVisS) images, and the JPEG 2000 standard is implemented in the Hera mission to compress Asteroid Spectral Imager (ASPECT) hyperspectral datacubes. In both missions, the sub-spacecraft carrying these optical payloads do not have a direct data link to the ground. Instead, the data are first relayed over an inter-satellite link to the main spacecraft and then sent to the ground during a later communication window. This approach results in reduced data bandwidth and intermittent link availability, creating a requirement for data volume reduction.

1.1. Comet Interceptor and Hera Missions

CI is an F1 (fast) science mission within the ESA Science Programme (SP) [1]. Its main goal is to characterize a pristine, ideally dynamically new comet, its activity and the surrounding environment. CI consists of a main spacecraft A and two sub-spacecraft B1 and B2, enabling multi-point proximity observations during the fly-by of its target comet. B2 carries, among other payloads, two cameras, OPIC and EnVisS. OPIC is a monochromatic camera with a px sensor and an approximately 18.2° × 18.2° field of view (FoV). EnVisS is an all-sky polarimetric camera with 550–800 nm broadband wavelength coverage, one non-polarizing filter and two polarimetric filters. Its sensor has a resolution of px and an FoV of 180° × 45°, scanning the whole sky in a push-broom mode utilizing spacecraft spin stabilization; see Figure 1. OPIC and EnVisS share a common data handling unit (DHU). The DHU contains a Cortex-M7-based SAMRHV71 microcontroller unit (MCU) with a clock speed of 100 MHz and 256 MB of SDRAM and flash memory. Its purpose is basic data handling and image compression according to the ESA CCSDS 122.0-B-2 standard.

Figure 1.

The OPIC (left) and EnVisS (right) cameras of the Comet Interceptor mission, modified [1].

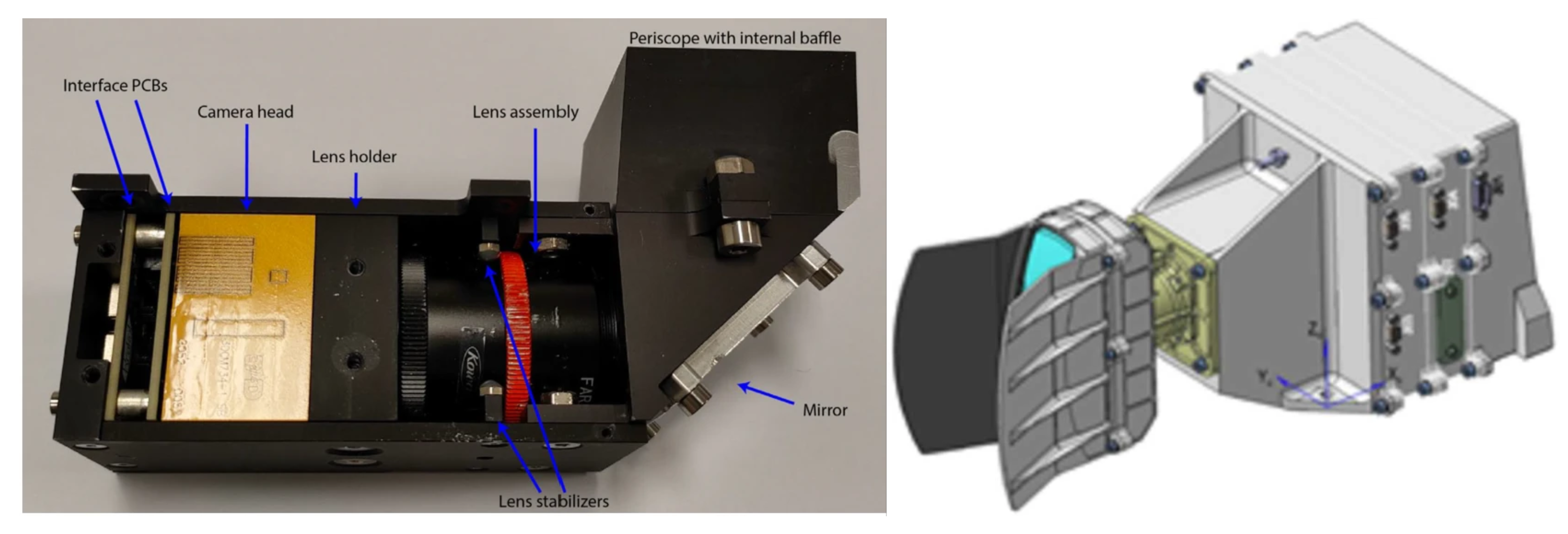

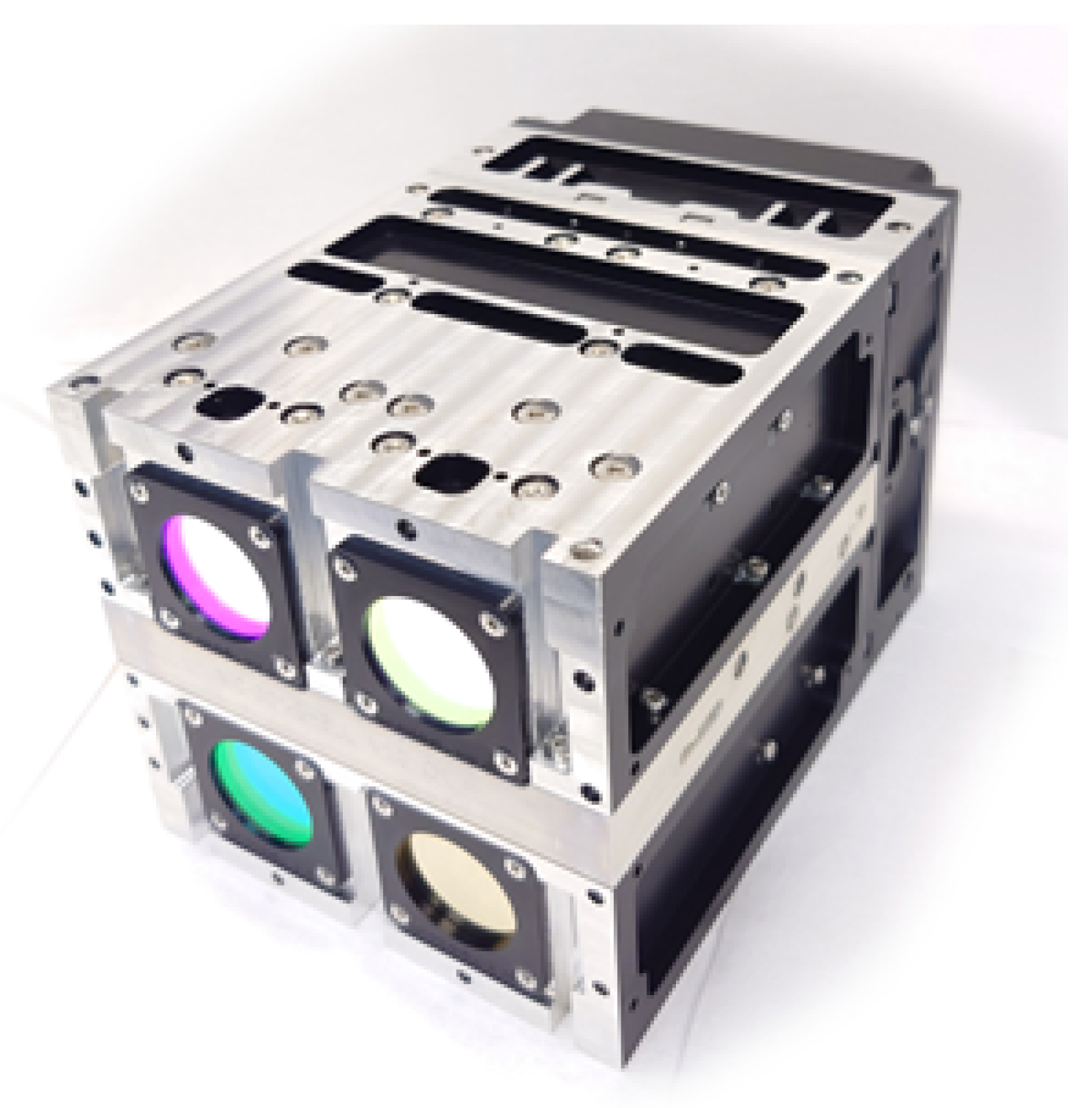

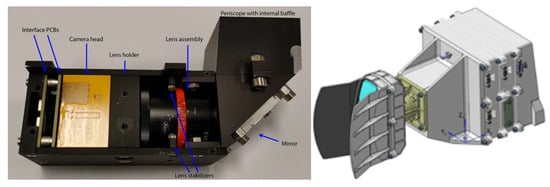

Hera is a planetary defense mission demonstrator within the ESA Space Safety and Security Programme (S2P) [2]. Its main goals are (1) to characterize the physical properties of the target binary asteroid (65803) Didymos–Dimorphos and (2) to document the consequences of the 2022 NASA Double Asteroid Redirection Test (DART) spacecraft’s impact on Dimorphos [3]. Hera also carries two 6-unit CubeSats, Juventas and Milani, which will be deployed upon arrival in the vicinity of the two asteroids and will carry out specialized proximity observations. The primary payload of the Milani CubeSat is the ASPECT hyperspectral camera [4], tasked with mapping the target mineral composition and surface maturity. ASPECT (Figure 2) will capture a 2D image for each recorded wavelength in its imaging range, forming a hyperspectral datacube containing a full spectrum for each pixel. ASPECT consists of three imaging channels, visible (Vis) and two near-infrared (NIR1 and NIR2), one additional single-point short-wave infrared (SWIR) spectrometer (basic parameters in Table 1), and a dedicated Data Processing Unit (DPU) based on the Xiphos Q7S system-on-chip. The DPU runs the Linux operating system on an ARM dual-core Cortex A9 MPCore CPU. Its purpose is to support basic instrument operation and data handling, as well as to execute advanced image processing functions such as coverage and sharpness detection and JPEG 2000 compression using the JasPer library, v4.2.4. See https://jasper-software.github.io/jasper/, https://www.ece.uvic.ca/~frodo/jasper/ (accessed on 26 February 2025). One complete uncompressed ASPECT hyperspectral datacube with 72 wavelengths is 364.8 Mbits and the total estimated data volume to transmit during the whole mission is approximately 10 Gbits.

Figure 2.

ASPECT camera of the Hera mission.

Table 1.

The ASPECT camera parameters.

1.2. Legacy Image Compression Techniques

Before the wide adoption of wavelet-based compression techniques, differential pulse-code modulation (DPCM) was common due to its simplicity [5]. The DPCM algorithm calculates the difference between two consecutive values for each sample. The lower entropy of this differential signal allows increased compression compared to the original [6]. DPCM was successfully used onboard the French Satellite Pour l’Observation de la Terre (SPOT) line of satellites from 1986 to 1998. Subsequent SPOT satellites generated data at much higher rates, requiring increasingly sophisticated algorithms [7].
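The entropy argument for DPCM can be illustrated with a minimal Python sketch. The scan line below is a hypothetical smooth ramp chosen only for illustration, and the function names are not taken from any flight implementation.

```python
import numpy as np

def shannon_entropy(values: np.ndarray) -> float:
    """Zeroth-order Shannon entropy in bits per sample."""
    _, counts = np.unique(values, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def dpcm_encode(samples: np.ndarray) -> np.ndarray:
    """Keep the first sample, then store consecutive differences."""
    samples = np.asarray(samples, dtype=np.int64)
    return np.concatenate(([samples[0]], np.diff(samples)))

def dpcm_decode(residuals: np.ndarray) -> np.ndarray:
    """Invert dpcm_encode by accumulating the differences."""
    return np.cumsum(residuals)

# Hypothetical smooth scan line: 256 gray levels, each repeated 4 times.
line = np.arange(1024) // 4
residuals = dpcm_encode(line)

print("entropy of samples   :", shannon_entropy(line))
print("entropy of residuals :", shannon_entropy(residuals))
print("lossless round trip  :", np.array_equal(dpcm_decode(residuals), line))
```

For smooth signals the residuals cluster around zero, so a subsequent entropy coder needs fewer bits per sample than for the raw values.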

Alongside DPCM, the discrete cosine transform (DCT), used by the original Joint Photographic Experts Group (JPEG) standard, gained popularity during the same period. DCT is based on decomposing a signal into cosine coefficients similar to those from a Fourier transform [8]. This decomposition allows granular control over visual fidelity as coefficients are grouped by frequency. The algorithm is significantly more complex and requires increased processing power [7,8]. However, the ability to prioritize transmitted data and effortless data filtering counteracts implementation difficulties. Numerous satellites have implemented DCT, such as SPOT5, Clementine by NASA and Proba-2 by ESA [7,9,10].

Although DCT performs notably better than DPCM, it lacks utilization of the spatial correlation between pixels. The discrete wavelet transform (DWT) does not suffer from this lack of utilization [11]. Instead of continuous cosine signals, DWT uses wavelets, which have a non-zero amplitude only temporarily. Wavelets decompose the input signal into frequency and spatial data with only a minimal increase in algorithm complexity [12]. Satellites have increasingly often implemented DWT-based compression methods since JPEG 2000 was introduced at the beginning of the century [13]. The Consultative Committee for Space Data Systems (CCSDS) published their recommended standard for image compression shortly after in 2005. The CCSDS 122.0-B-2 standard [14] focuses on DWT use onboard satellites by sacrificing some compression performance for reduced implementation complexity.

1.3. CCSDS 122.0

CCSDS 122.0 is a recommended wavelet-based mono-channel image compression standard from CCSDS. The algorithm consists of two distinct steps: decorrelation and encoding. First, a 2D DWT using a 9–7 Cohen–Daubechies–Feauveau (CDF) wavelet operates on the data [14,15]. This transformation decorrelates neighboring pixels by transforming data to the frequency domain. The resulting coefficients are transformed repeatedly for a total of three iterations. Since each level of DWT is sequentially calculated from the previous one, the produced data have a tree-like hierarchy containing significant redundancy. Finally, this redundancy is exploited by a bitplane encoder, called a Rice entropy encoder by CCSDS. The resulting file has a reduced size and an increased entropy [6,14].

Files generated by CCSDS 122.0 are ordered so that coefficients with higher priority are transmitted first. The priority is inversely related to frequency: coefficients with lower frequency have higher priority [14]. This default behavior is optimal for visual data, where lower frequency components produce significant perceptual changes in the image [16]. For alternative data types, a user-defined priority heuristic is achievable by altering sub-band scaling factors. Moreover, the sub-band scaling factors are adjustable between different segments of an image [14].

CCSDS 122.0 supports both lossy and lossless compression methods. Lossless compression offers fewer configuration options as the compressed data include all of the information [6]. However, the user can affect compression directly when lossy compression is applied. Compression can stop prematurely when reaching a specific stage or a user-defined rate limit. Lossy compression always discards some bitplanes or coefficients [14].

1.4. JPEG 2000

The JPEG 2000 Part-1 standard [13] describes the codec specification for 2D image data. It was designed to supersede the original JPEG standard based on DCT with a new method based on DWT, offering mainly increased flexibility for decoding. The JasPer [17] library implementation of this standard, used for on-board hyperspectral image compression in the ASPECT DPU, was included in the JPEG 2000 Part-5 standard [18] as an official reference implementation. While it has design similarities to CCSDS 122.0, both being DWT based, JPEG 2000 offers the use of two different DWTs [19]:

- Reversible Component Transform (RCT), used for both lossy and lossless compression modes. Implemented with the Le Gall–Tabatabai (LGT) 5/3 wavelet transform utilizing only integer coefficients, mitigating the effect of quantization noise (rounding).

- Irreversible Component Transform (ICT), used for the lossy compression mode. Implemented with the CDF 9–7 wavelet transform with quantization noise depending on the precision of the decoder.

For CCSDS 122.0, only the CDF 9–7 is utilized, with float and integer versions for lossy and lossless modes, respectively. Once the input data are transformed into the frequency domain, the two algorithms differ in their further processing of the coefficients; these differences are described in [20]. JPEG 2000 uses the embedded block coding with optimal truncation points (EBCOT) algorithm to overcome redundancy between local neighboring DWT coefficients.
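To illustrate why an integer-only 5/3 wavelet transform is exactly reversible, the following minimal Python sketch applies one level of 5/3 lifting to a one-dimensional, even-length signal with mirrored borders. It is an illustrative sketch only; the function names are hypothetical and it is not the JasPer code or the transform used in the CCSDS 122.0 implementation.

```python
import numpy as np

def lgt53_forward(x):
    """One level of reversible 5/3 lifting on an even-length 1D integer signal."""
    x = np.asarray(x, dtype=np.int64)
    assert x.size >= 2 and x.size % 2 == 0, "sketch handles even lengths only"
    even, odd = x[0::2], x[1::2]
    # Predict step: odd samples minus the floor-average of their even neighbors.
    even_right = np.append(even[1:], even[-1])      # mirror at the right border
    high = odd - ((even + even_right) >> 1)
    # Update step: even samples plus a rounded quarter of neighboring details.
    high_left = np.insert(high[:-1], 0, high[0])    # mirror at the left border
    low = even + ((high_left + high + 2) >> 2)
    return low, high

def lgt53_inverse(low, high):
    """Undo lgt53_forward by running the lifting steps in reverse order."""
    low = np.asarray(low, dtype=np.int64)
    high = np.asarray(high, dtype=np.int64)
    high_left = np.insert(high[:-1], 0, high[0])
    even = low - ((high_left + high + 2) >> 2)
    even_right = np.append(even[1:], even[-1])
    odd = high + ((even + even_right) >> 1)
    x = np.empty(low.size * 2, dtype=np.int64)
    x[0::2], x[1::2] = even, odd
    return x

rng = np.random.default_rng(0)
row = rng.integers(0, 4096, size=16)   # hypothetical 12-bit image row
low, high = lgt53_forward(row)
print("exact reconstruction:", np.array_equal(lgt53_inverse(low, high), row))
```

Because each lifting step adds or subtracts an integer computed from samples that the inverse also has available, the rounding cancels exactly and no information is lost.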

2. Materials and Methods

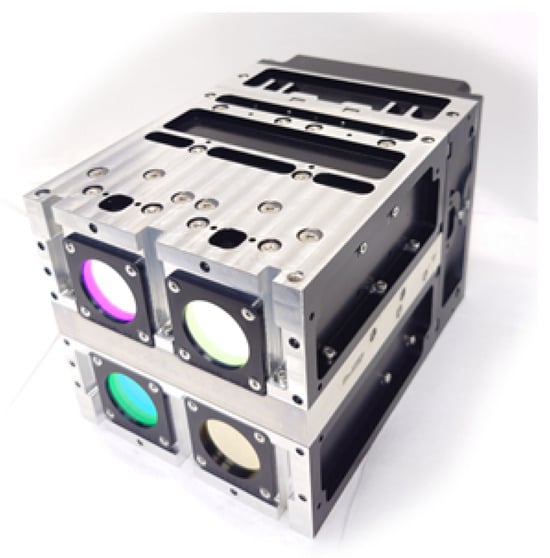

2.1. Simulated Hyperspectral Test Data

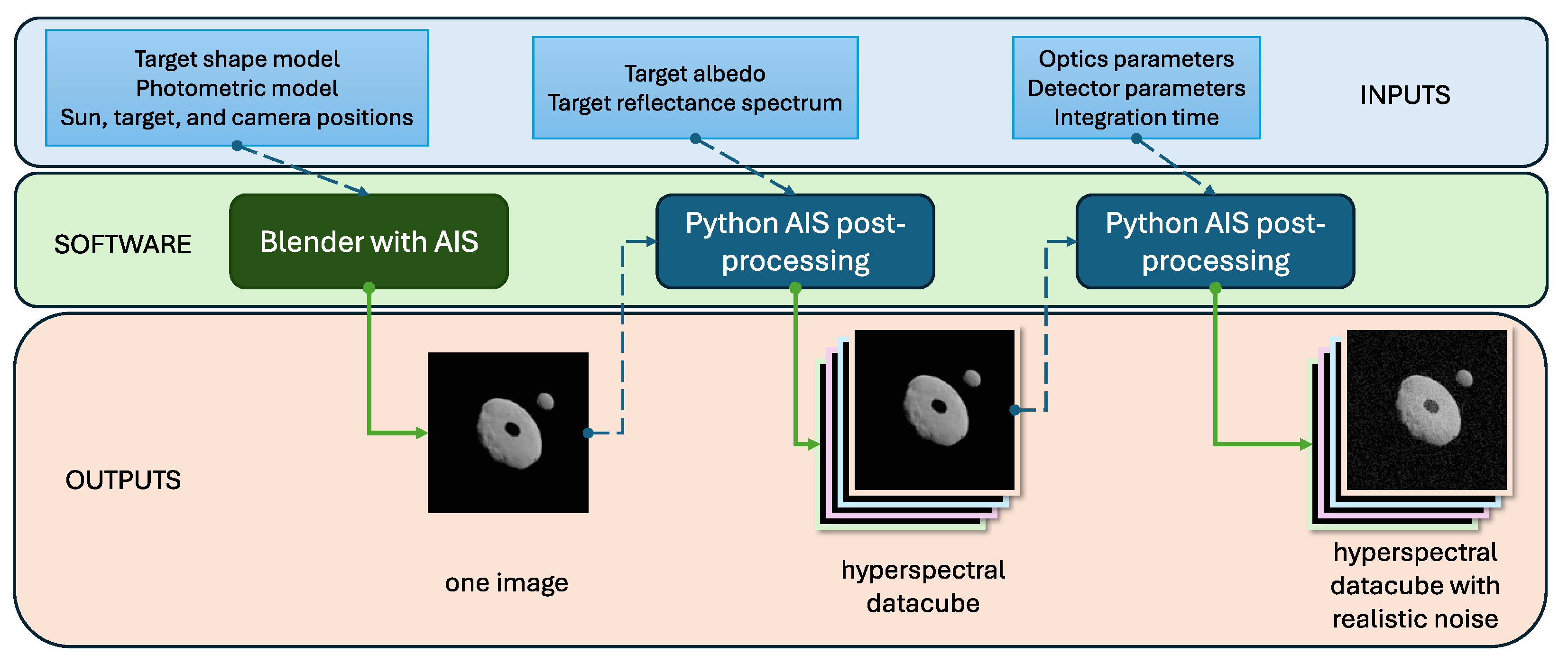

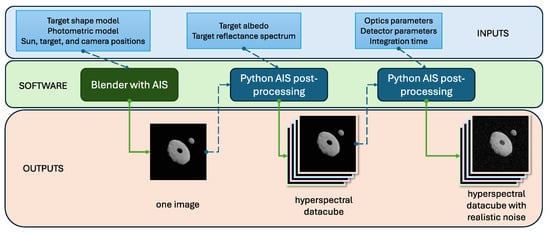

Testing the compression and noise filtering algorithms before mission launch requires synthetic test data, i.e., hyperspectral datacubes presenting realistic scenes for the mission. For this purpose, an end-to-end Asteroid Image Simulator (AIS), see https://bitbucket.org/planetarysystemresearch/asteroid-image-simulator (accessed on 26 February 2025) for the current development version, was developed and used to simulate ASPECT observations of the Didymos binary asteroid with realistic instrument performance. The test data creation is a three-step process. First, the Blender 3D modeling software, v3.6, https://www.blender.org/ (accessed on 26 February 2025), with added functionalities from the AIS package, is used to create simulated images. These images are of high resolution and free of any imaging noise coming from the detector. Second, the simulated images are expanded into different wavelengths for the hyperspectral datacube by our AIS, v0.9, Python post-processing scripts. Finally, the grayscale units of an image are transformed into digital numbers (DNs) of the detector and the detector noises are simulated on the datacube frames. Figure 3 shows the flow of inputs and outputs in the process.

Figure 3.

A flowchart of the simulated data set creation. The software consists of three parts and the second and third part take the output of the previous part as input, together with additional parameters. Python version used is 3.10, Blender 3.6, and AIS 0.9.

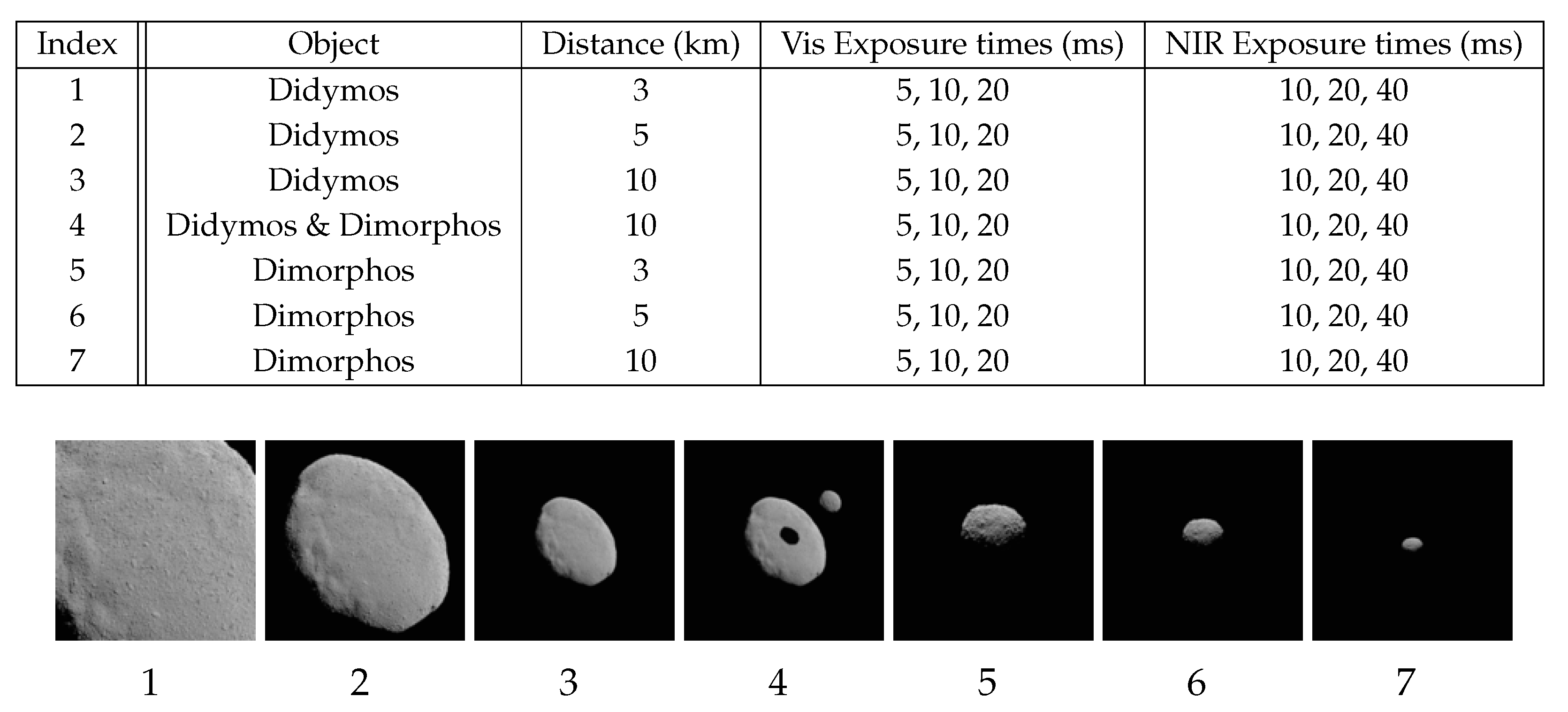

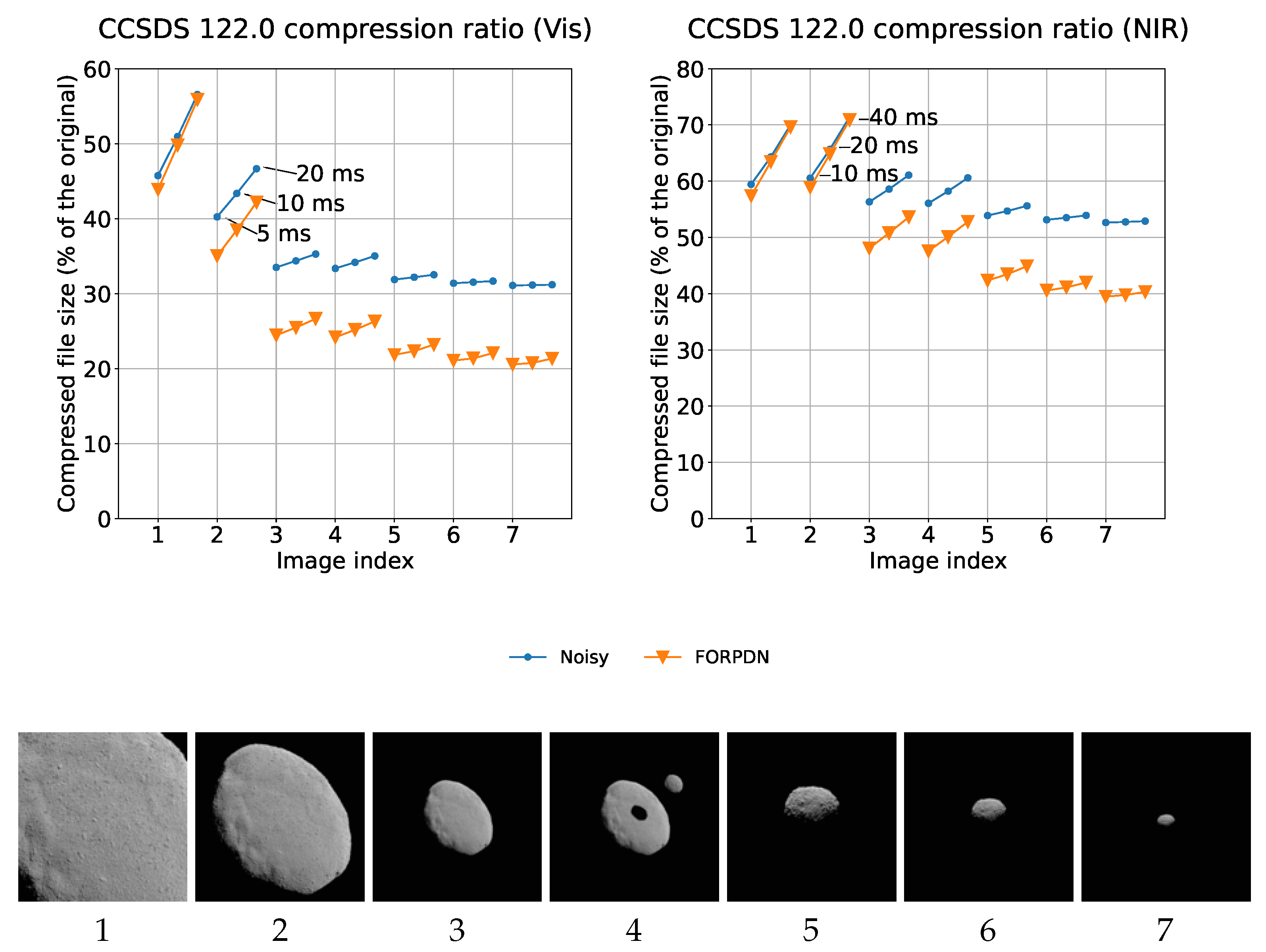

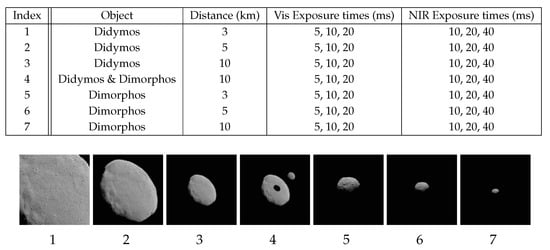

Seven scenes are selected for Blender image creation. We used the 3D mesh models of both Didymos and Dimorphos [21] as the target shapes in Blender. Both Didymos and Dimorphos are selected as targets alone, imaged from three different distances of 10, 5 and 3 km. In addition, one scene where both bodies are visible in the same frame is imaged from 10 km. Both Vis and NIR channels are simulated and since their FoVs and detector resolutions are different (see Table 1), they need separate simulations. Figure 4 shows the imaged test scenes.

Figure 4.

Simulated test images indexed with their corresponding simulation parameters.

Both the intensity of illumination (i.e., sunlight) and the plane albedo of the asteroids’ surface material can be controlled in Blender, which means the image grayscale values are convertible into reflectance. Multiplying this reflectance with the known incident flux spectra from the Sun at a given distance and the known reflectance spectra of Didymos [22] produces the reflected spectral radiance of the target surface. By taking into account the angular size of the ASPECT camera aperture at the selected distances and the pixel resolution of the detector, the incident spectral radiance per detector pixel can be solved. The throughput of the camera optics is not perfect but estimated to be 90% and the throughput of the Fabry–Pérot spectral filter in the ASPECT camera is estimated to be 30%. These factors reduce the incident flux to a pixel. Finally, the detectors have specific quantum efficiency (QE) functions for converting the incident photons into elementary electric charges (e⁻) on the detector. Both the Vis and NIR detectors have their QE functions evaluated by the manufacturer. These functions are used in the conversion chain from spectral flux to photons of a particular wavelength and finally to e⁻ per wavelength window (with a width of 25 nm in ASPECT) read by the detector pixel. The final DN reading from the detector, per time unit, is multiplied by the possible detector gain.

There are sources of noise in all detectors and we simulate these noises into the hyperspectral frames. Furthermore, these noises are expressed in e⁻ units or in amperes that convert into e⁻ per second, so simulating noises at the end of our conversion chain in e⁻ units is beneficial. We take into account fixed dark background (100 for Vis, 5900 for NIR), dark current while integrating (0.02 fA for Vis, 0.5 fA for NIR) and read noise (8 for Vis, 85 for NIR). Finally, there is a photon shot noise with a standard deviation given by the square root of the signal.

Once the integration (exposure) time of the detector is decided, simulation of the hyperspectral datacubes with random noise is possible. The dark current and photon shot noise are taken as Poisson variables while the read noise is Gaussian. The final signal consists of these together with the dark background signal. Finally, the resulting DN is limited above by the full-well capacity of the detector (10,000 for Vis, for NIR) and sampled between zero and full well with the bit depth of the detector (12 for Vis, 14 for NIR).
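The noise model described above can be sketched in Python as follows. This is a minimal illustration and not the AIS code: the function name, the assumption that the dark background and read noise values are given in electrons, and the linear mapping of the full well onto the full DN range are all assumptions made for this example. The Vis detector values are taken from the text; the scene signal is hypothetical.

```python
import numpy as np

ELECTRON_CHARGE_C = 1.602176634e-19  # coulombs per electron

def simulate_frame(signal_e, t_int_s, dark_background_e, dark_current_fA,
                   read_noise_e, full_well_e, bit_depth, rng):
    """Add detector noise to a noiseless frame given in electrons per pixel."""
    signal_e = np.asarray(signal_e, dtype=np.float64)
    # Dark current in fA converted to electrons per second.
    dark_rate_e_s = dark_current_fA * 1e-15 / ELECTRON_CHARGE_C
    shot = rng.poisson(signal_e)                                   # photon shot noise
    dark = rng.poisson(dark_rate_e_s * t_int_s, size=signal_e.shape)
    read = rng.normal(0.0, read_noise_e, size=signal_e.shape)      # Gaussian read noise
    total_e = dark_background_e + shot + dark + read
    total_e = np.clip(total_e, 0.0, full_well_e)                   # full-well limit
    # Assumed linear gain: the full well maps to the largest digital number.
    max_dn = 2 ** bit_depth - 1
    return np.round(total_e / full_well_e * max_dn).astype(np.uint16)

rng = np.random.default_rng(42)
noiseless = np.full((32, 32), 3000.0)  # hypothetical signal in electrons
frame = simulate_frame(noiseless, t_int_s=0.020, dark_background_e=100.0,
                       dark_current_fA=0.02, read_noise_e=8.0,
                       full_well_e=10_000.0, bit_depth=12, rng=rng)
print(frame.dtype, frame.min(), frame.max())
```

Passing a seeded generator keeps the simulated noise reproducible between runs, which is convenient when the same noisy frames must be fed to several compressors.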

Simulated datacubes from the seven simulated target frames were built using integration times of 5, 10 and 20 ms for Vis and 10, 20 and 40 ms for NIR. Datacubes were recorded both with and without simulated noise. These datacubes are used in testing the compression and the noise filtering algorithms.

2.2. CCSDS Implementation on EnVisS/OPIC DHU

The tested implementation of CCSDS 122.0 targets the CI mission and was written specifically for the B2 spacecraft DHU. Due to known mission constraints, some functionality described in the standard, such as lossy compression, was discarded. Moreover, decompression functionality was not included as it is performed on the ground. Any decompressor compliant with the CCSDS 122.0 standard is sufficient for this task.

The source code, written in the C programming language, does not use any external libraries or floating-point operations. These restrictions reduce memory and power requirements. Additionally, the application is single-threaded, as the DHU MCU does not support multithreading. The CI mission data were determined to require a maximum bit depth of 12 bits per pixel. The implementation reflects this constraint and thus higher bit depths are not officially supported. These choices keep the source code to a total of 1513 lines, which compile to a 35 KB binary.

Implementation development was performed on a SAMV71 Xplained Ultra evaluation kit, which contains a prototyping board with a non-radiation-hardened version of the SAMV71 MCU. Although otherwise comparable, the development kit contains significantly less random access memory (RAM) than the DHU (384 KB versus 256 MB). This memory amount limits the maximum size of compressible images as the implementation does not support image data streaming. However, the compression results are directly comparable between the two platforms since the compression algorithm is deterministic. Thus, verifying correct functionality on smaller images is sufficient and only compression speed differs when comparing the platforms.

The configuration parameter choices were mostly based on the constraints set by lossless compression. Neither a byte limit nor any early stopping flags were enabled, to guarantee the encoding of all data. Notably, the heuristic methods for the bitplane encoder were used. This choice did not negatively affect the compression performance of the algorithm but decreased both memory usage and compression time significantly. Moreover, the sub-band weights described in the CCSDS 122.0 standard were used. No alterations to these parameters were made during testing or validation.

2.3. JPEG 2000 Implementation on ASPECT DPU

The baseline data compression algorithm used in ASPECT is a lossless JPEG 2000 codec based on the JasPer library. The main reason behind its selection is its previous heritage from the Aalto-1 CubeSat mission. Individual single-wavelength images of the hyperspectral datacube are encoded/compressed individually, resulting in moderate data savings. This approach is straightforward but does not exploit dependencies in the wavelength domain within the datacube.

The JasPer library allows for both lossless and lossy JPEG 2000 modes. However, the effect of lossy compression on hyperspectral data quality is not presented in this manuscript. Furthermore, simple data preprocessing techniques based on differential encoding were tested. This preprocessing is an optional step before the JPEG 2000 compression of single images, allowing utilization of the tested implementation while reaching better compression ratios. The JasPer library contains 34,413 lines of code that produce a 548 KB binary.

2.4. Differential Encoding

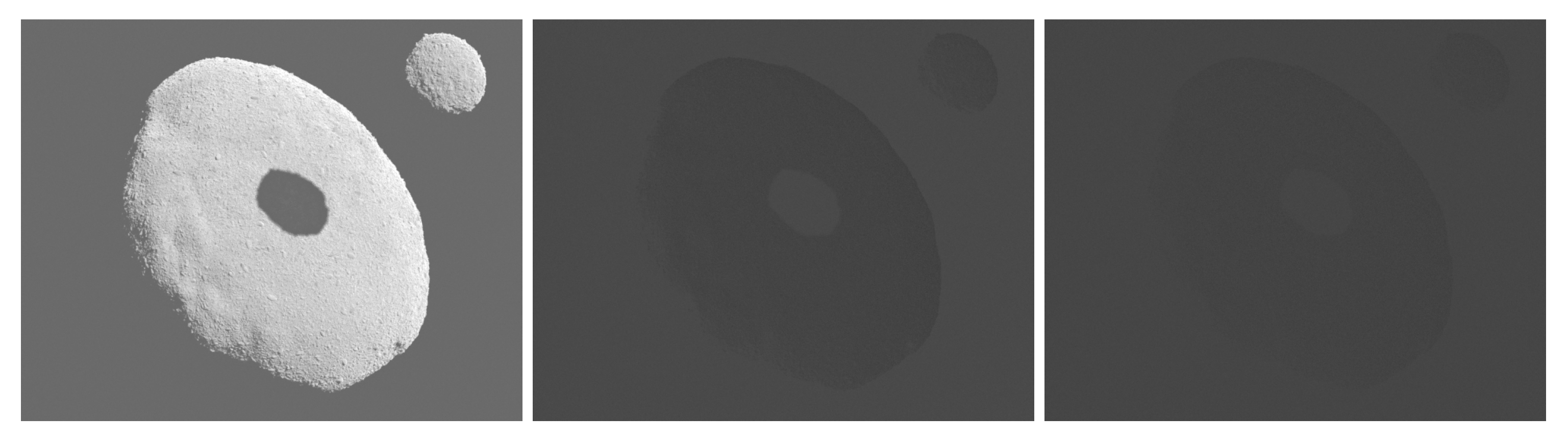

Differential encoding is based on the fact that the spectral difference (change) between spatially identical pixels (i.e., the identical point on the imaged asteroid) at adjacent wavelengths is expected to be small and monotonic due to the continuous nature of spectral signals.

There are two ways such dependence can be utilized. The first method is choosing a smaller pixel bit depth for all but the first wavelength image. For example, if the original single-pixel precision is 16 bits and all but the first datacube layer are encoded as an 8-bit difference between adjacent layers, the resulting data savings approach 50%.
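The size of this saving depends on the number of layers, since the first layer keeps its full precision. A short Python sketch of the arithmetic, with illustrative layer counts and a hypothetical function name:

```python
def differential_bit_savings(n_layers: int, full_bits: int = 16, diff_bits: int = 8) -> float:
    """Fraction of bits saved when only the first layer keeps full precision."""
    original = n_layers * full_bits
    encoded = full_bits + (n_layers - 1) * diff_bits
    return 1.0 - encoded / original

# Illustrative layer counts; the saving approaches 50% as the count grows.
for n in (2, 10, 72):
    print(n, f"{differential_bit_savings(n):.1%}")
```

With two layers the saving is 25%, and it climbs toward the 50% limit as more differentially encoded layers follow the first one.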

The second method is based on the fact that the differential image suppresses to some extent the spatial variation related to object surface changes (i.e., the spatial variations are insignificant in case their spectrum is consistent) and is spatially simpler (Figure 5). Thus, the simple 2D compression of differential images should always be more efficient compared to original 2D images independently of the bit depth. Keeping the original resolution of 16 bits per pixel for all pictures (first, original and subsequently, differences) in combination with the baseline JPEG 2000 compression delivered promising results. For lossless JPEG 2000 compression, the addition of differential encoding as a preprocessing step does not result in any information loss.

Figure 5.

Differential encoding of a hyperspectral datacube example. First wavelength compressed normally (left) and subsequent differentially encoded wavelengths (middle) and (right).

For the implementation, the difference for 2nd and later images inside one ASPECT channel datacube is computed as:

This difference can be positive or negative in general. As the data type used to store a single pixel of a datacube is an unsigned 16-bit integer, it is necessary to introduce an offset for each image to avoid negative values. The offset is equal to the minimum difference in the image. The stored value in the resulting datacube is then

To decode differentially encoded data, we need to decode the JPEG 2000 stream first and then apply the inverse procedure:

The list of offsets for all images g is stored in the datacube metadata with the value for the first image set to 0.
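The encoding and decoding procedure described in this section can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the ASPECT flight code: the function names and the array layout (wavelength, row, column) are hypothetical, and each difference is assumed to be taken against the previous original layer.

```python
import numpy as np

def diff_encode(cube):
    """Differentially encode a (wavelength, row, col) uint16 datacube."""
    cube = np.asarray(cube, dtype=np.uint16)
    wide = cube.astype(np.int32)                 # room for negative differences
    encoded = np.empty_like(cube)
    encoded[0] = cube[0]                         # first layer is stored unchanged
    offsets = [0]                                # offset of the first layer is 0
    for k in range(1, cube.shape[0]):
        diff = wide[k] - wide[k - 1]
        offset = int(diff.min())                 # minimum difference in this layer
        shifted = diff - offset                  # now non-negative
        if shifted.max() > np.iinfo(np.uint16).max:
            raise ValueError("shifted difference does not fit in 16 bits")
        encoded[k] = shifted.astype(np.uint16)
        offsets.append(offset)
    return encoded, offsets

def diff_decode(encoded, offsets):
    """Invert diff_encode using the per-layer offsets from the metadata."""
    wide = np.asarray(encoded).astype(np.int32)
    decoded = np.empty_like(wide)
    decoded[0] = wide[0]
    for k in range(1, wide.shape[0]):
        decoded[k] = wide[k] + offsets[k] + decoded[k - 1]
    return decoded.astype(np.uint16)

rng = np.random.default_rng(7)
cube = rng.integers(0, 4096, size=(4, 8, 8)).astype(np.uint16)  # hypothetical 12-bit cube
encoded, offsets = diff_encode(cube)
print("offsets:", offsets)
print("lossless:", np.array_equal(diff_decode(encoded, offsets), cube))
```

For 12-bit and 14-bit detector data the shifted differences always fit in 16 bits, so the overflow check only guards against inputs that use the full 16-bit range.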

2.5. Noise Filtering

The test data were noise-filtered. The focus was on noise filters acting on the entire spatial–spectral datacube simultaneously. Three-dimensional noise filtering methods are more efficient in processing the hyperspectral image data because they exploit the internal dependencies between the wave bands [23].

Four different noise filters were studied in this work: three wavelet-based methods and one using solely low-rank modeling. The wavelet-based approaches, Wavelet3D [24], First Order Spectral Roughness Penalty Denoising (FORPDN) [25,26] and Hyperspectral Restoration (HyRes) [27], performed well in comparison to the Low-Rank Matrix Recovery (LRMR) method [28], which uses low-rank modeling but no wavelets [23]. The benefits of LRMR are that it has low implementation complexity and is easily controllable regarding its parameters. FORPDN and HyRes, the latter of which is the most computationally efficient, use Stein’s unbiased risk estimator (SURE) for parameter selection, which is an unbiased mean square error estimator [25,27]. Both methods achieved efficient results combined with image compression. Further recent work on hyperspectral imaging denoising methods can be found, e.g., in [29,30,31].

2.6. Test Procedure

The CCSDS 122.0 implementation was first functionally tested by compressing and decompressing randomly generated black-and-white images, i.e., pure noise. These images had varying sizes up to px and bit depths between 1 and 12. Images of pure noise were chosen to represent the worst-case scenario as their entropy is high and no correlation between neighboring pixels exists.

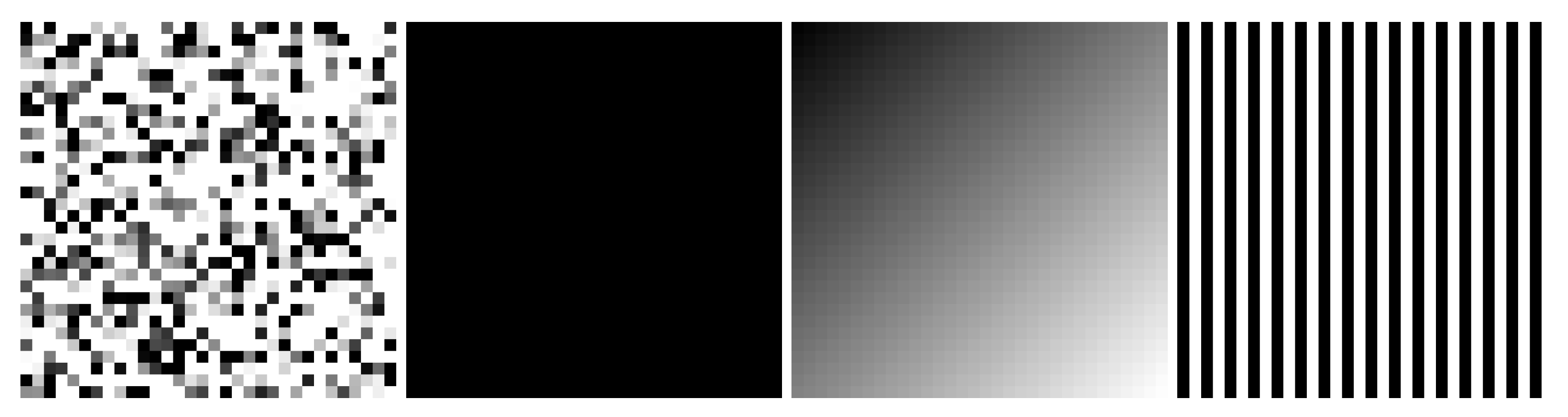

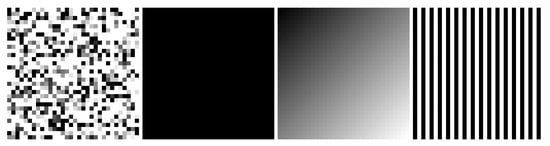

After verifying lossless compression, known edge cases presented in Figure 6 were tested to reach all branches of logic. Finally, file format compliance was verified by cross-checking the output with reference implementations from ESA and the University of Nebraska.

Figure 6.

Images used to find edge cases in the CCSDS 122.0 image compression algorithm. From left to right: white noise, pure black, smooth gradient and vertical stripes.

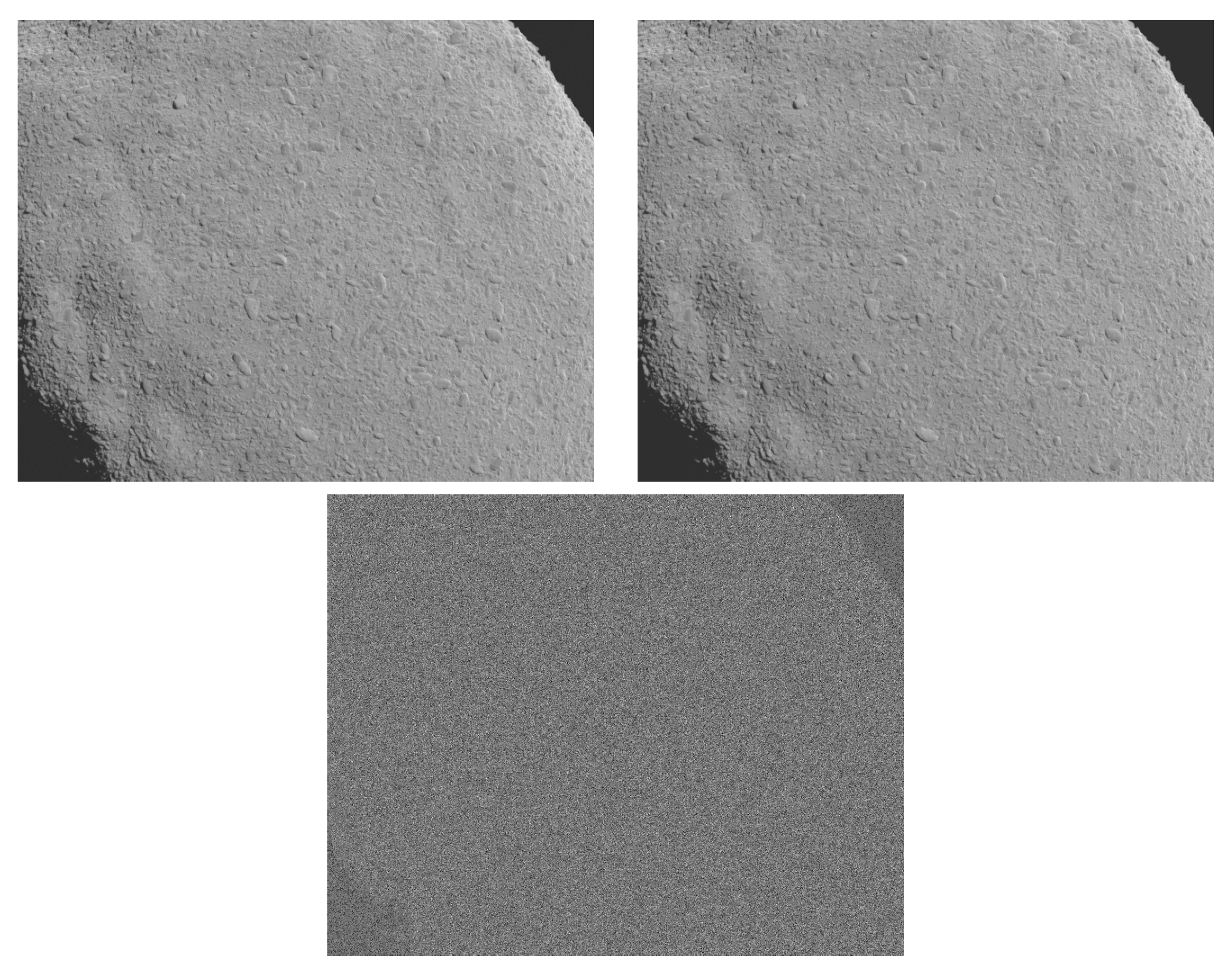

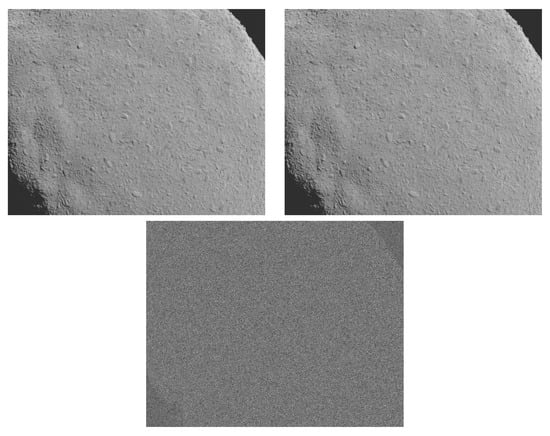

The compression performance tests and benchmarking against the JPEG 2000 JasPer codec followed the successful verification of the CCSDS 122.0 implementation. Each data point was calculated as the average compression ratio of all wavelengths corresponding to an image, 10 for Vis and 20 for NIR. The whole datacube was divided into two parts: ASPECT Vis and combined NIR (corresponding to the ASPECT NIR1 and NIR2 ranges). Next, all wavelengths were compressed as single 2D images by both the JPEG 2000 and CCSDS 122.0 algorithms. Differentially encoded images were first processed with the script described in Section 2.4 before compression. Additionally, the noise filters described in Section 2.5 were used to reduce noise by varying amounts. These filtered images provide data comparable to real-world scenarios and increase the coverage of noise scenarios. Finally, noiseless versions of each image (see Figure 7) were compressed to act as a sanity check and provide a reference level for the maximum achievable compression ratio.

Figure 7.

NIR Image 1 with 40 ms exposure time (top left), NIR image 1 noiseless (top right) and the difference between noisy and noiseless (bottom).

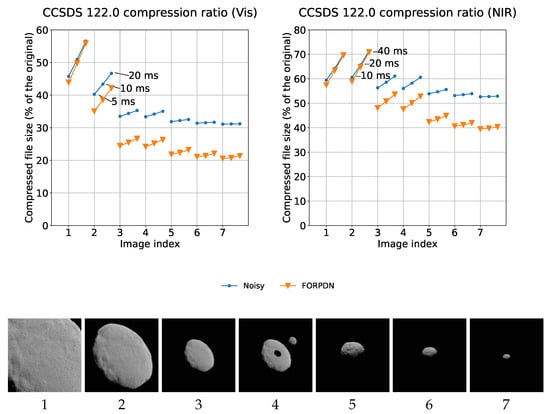

3. Results

All plots presented in the results analysis are composed as shown in Figure 8. For each tested image, three exposure times are paired with corresponding compression ratios. In addition, different noise filters are represented by a unique color and symbol. Compression performance is presented as the percentage ratio of the compressed file to the uncompressed original. This ratio facilitates the comparison of different-sized images and is a more intuitive metric than bitrate per pixel.

Figure 8.

Example of the compression ratio plots for indexed Vis and NIR images (bottom) with three exposure times per image: 5 ms, 10 ms and 20 ms for Vis (top left) and 10 ms, 20 ms and 40 ms for NIR (top right). All images are shown with and without FORPDN filtering.

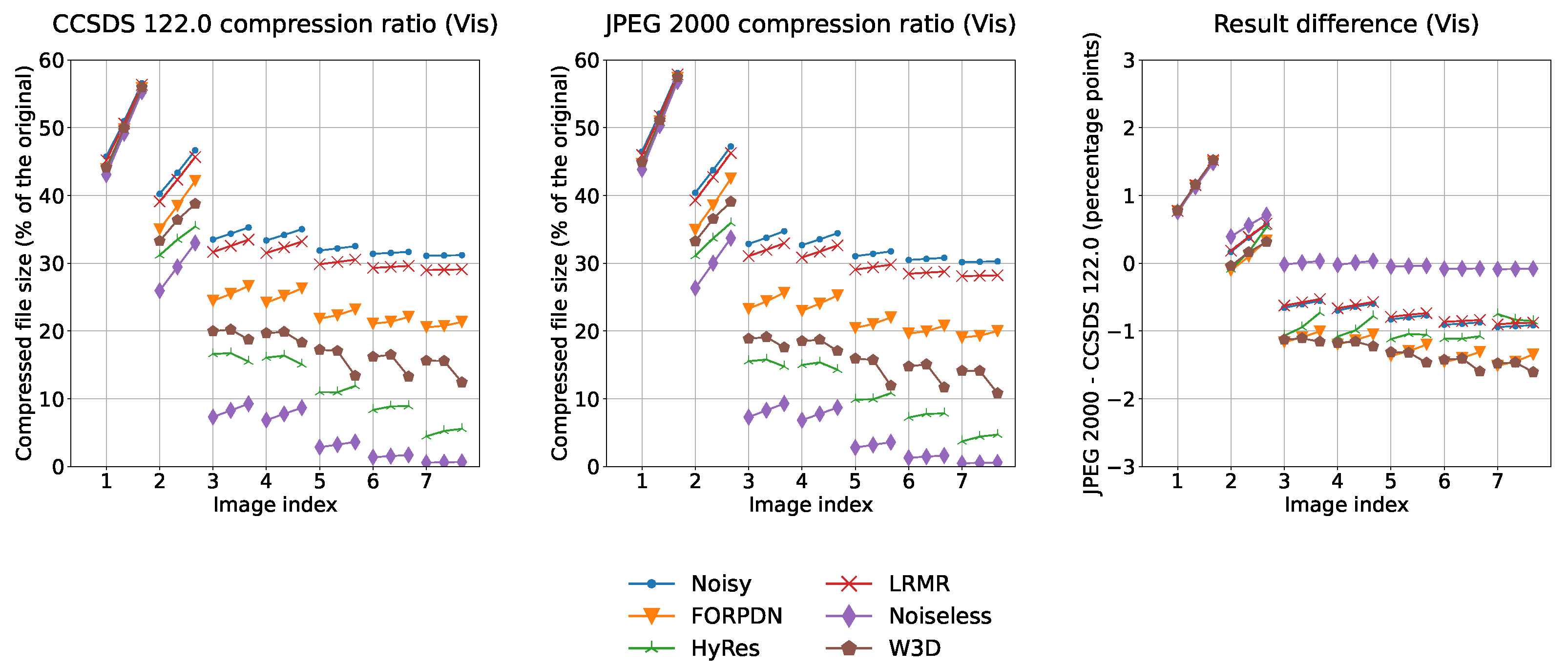

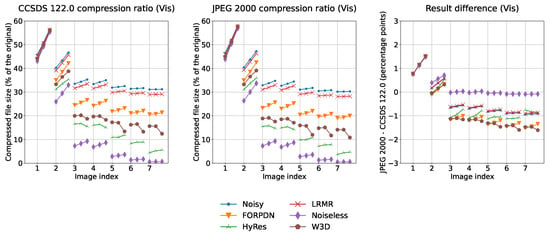

Figure 9 shows that both CCSDS 122.0 and JPEG 2000 compress asteroid-dominated Vis images to under 60% of the original size. For space-dominated images, the compressed size drops further to slightly over 30%. Moreover, the space-dominated images benefit more from noise filtering. Notably, a subset of these images compress to under 10% when the HyRes filter is applied. The compressed size roughly decreases with increasing simulated imaging distance (space-dominated images compress better than asteroid-dominated ones) regardless of the noise reduction method used.

Figure 9.

Performance of the CCSDS 122.0 and JPEG 2000 compression algorithms on three exposure levels of the noiseless, noisy and filtered visible spectrum images. Filtering is performed with FORPDN, HyRes, LRMR and W3D.

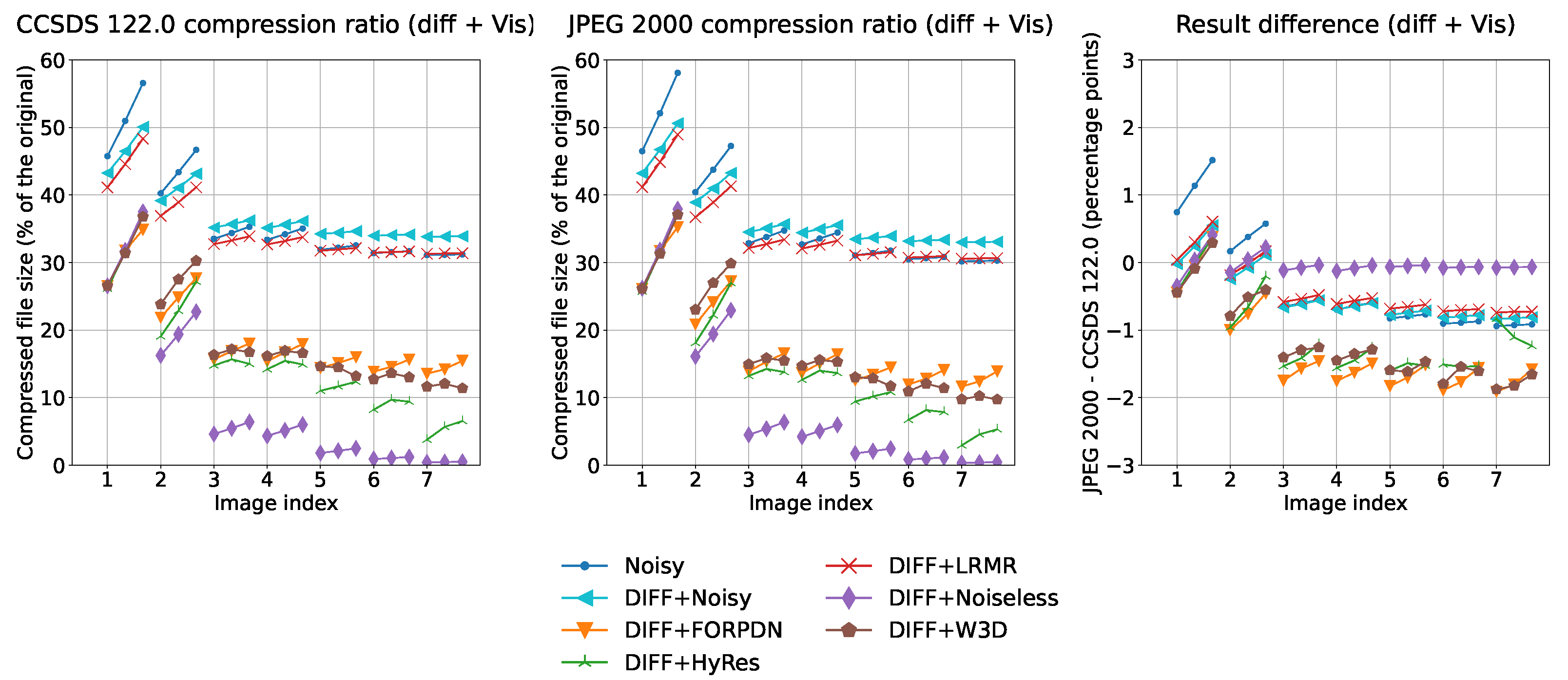

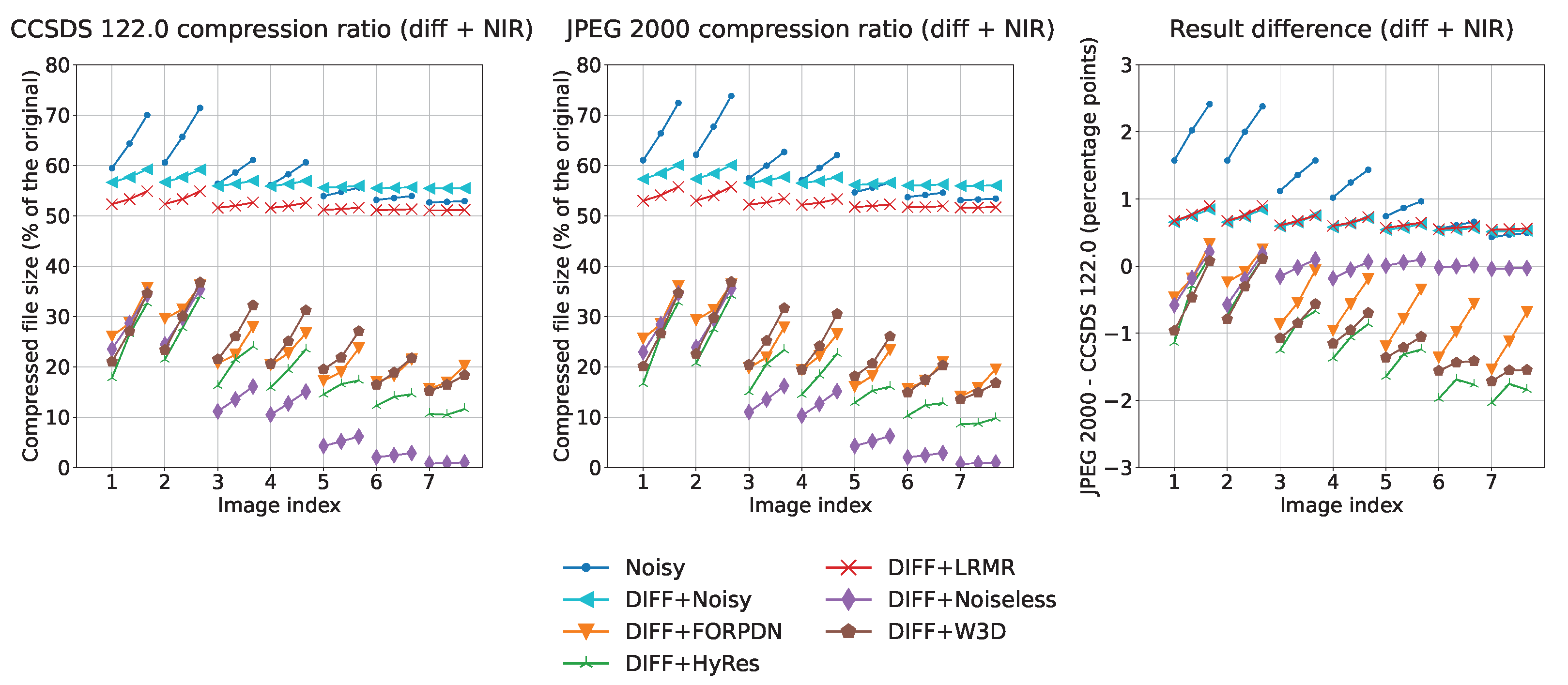

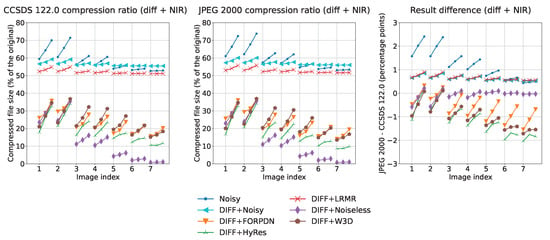

An improvement of up to 10 percentage points (pp) in compression ratio is achieved by differentially encoding asteroid-dominated images, as seen in Figure 10. In contrast, slightly worse results are produced when non-filtered space-dominated images are differentially encoded. Finally, applying each noise filter, except LRMR, improves the overall compression ratio further by 15 to 20 pp.

Figure 10.

Performance of the CCSDS 122.0 and JPEG 2000 compression algorithms on three exposure levels of the noiseless, noisy and filtered differentially encoded visible spectrum images. Filtering is performed with FORPDN, HyRes, LRMR and W3D.

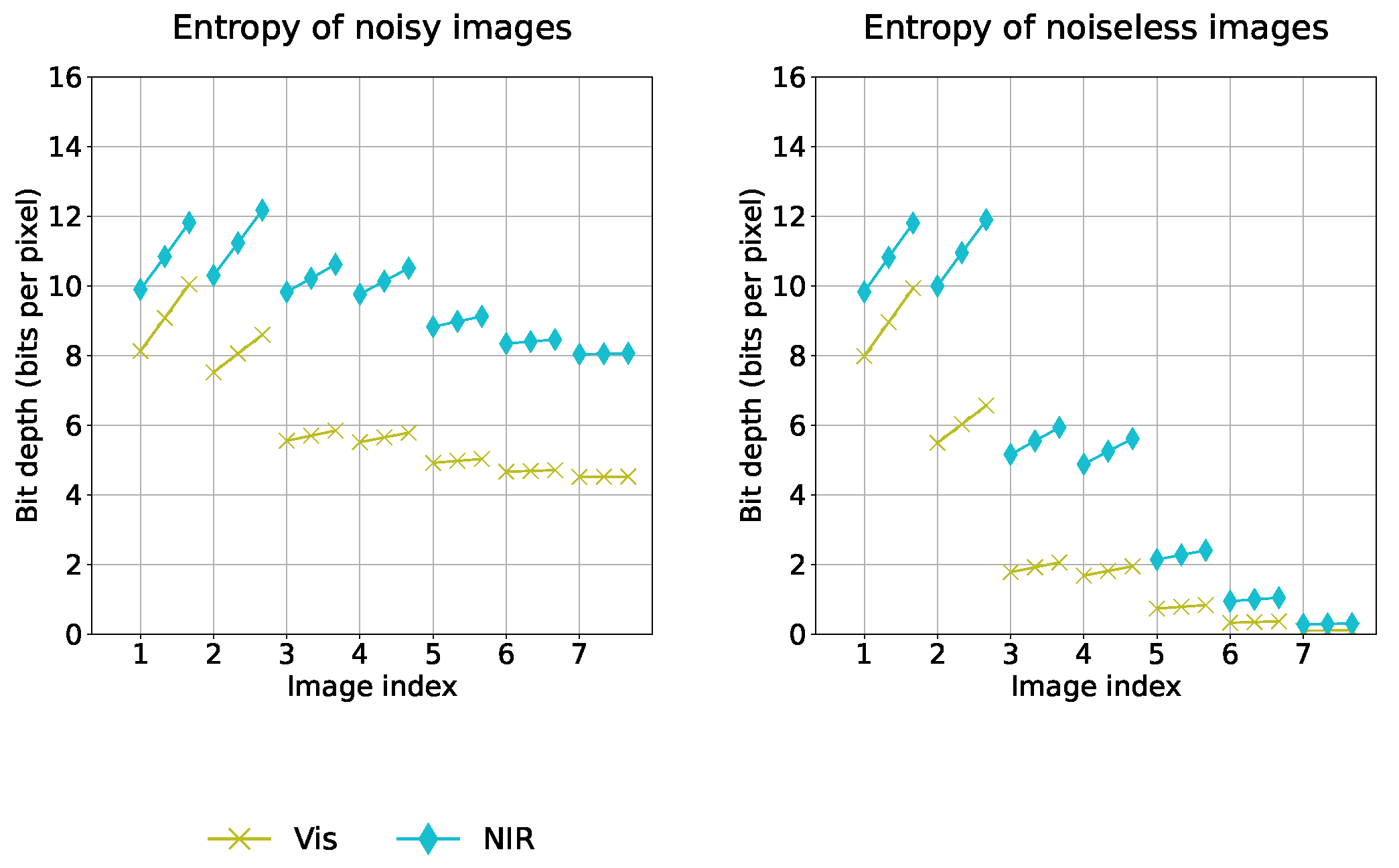

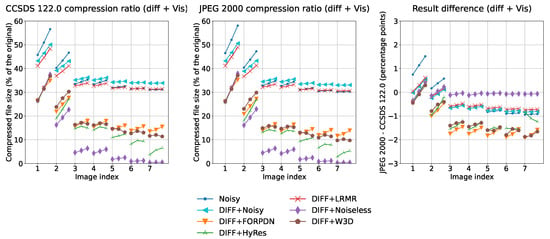

Vis images exhibit significantly lower entropy than NIR images. Entropy increases when introducing noise or other high-frequency details to the images. This effect on entropy is visible in Figure 11 when increasing the exposure time or decreasing the distance to the asteroid. All image entropies decrease drastically in the absence of noise. Additionally, the previous compression performances resemble Figure 11, as both algorithms achieve lossless compression ratios near the theoretical limit.
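The relation between image entropy and the reported size ratio can be sketched in Python as below. The sketch uses the zeroth-order (per-pixel histogram) entropy, which is an assumption about how the entropy in Figure 11 was computed; the function names and the `stored_bits` parameter are hypothetical. A wavelet coder exploits spatial correlation, so this figure is a reference level rather than a strict lower bound.

```python
import numpy as np

def entropy_bits_per_pixel(image) -> float:
    """Zeroth-order Shannon entropy of the pixel value histogram."""
    _, counts = np.unique(np.asarray(image), return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def size_ratio_percent(compressed_bytes: int, original_bytes: int) -> float:
    """Compressed size as a percentage of the uncompressed size."""
    return 100.0 * compressed_bytes / original_bytes

def entropy_bound_percent(image, stored_bits: int) -> float:
    """Size ratio a memoryless entropy coder could reach for this image."""
    return 100.0 * entropy_bits_per_pixel(image) / stored_bits

# Hypothetical image with four equally likely gray levels stored in 16 bits.
image = np.tile(np.array([0, 1, 2, 3], dtype=np.uint16), (4, 4))
print(entropy_bits_per_pixel(image))        # bits per pixel
print(entropy_bound_percent(image, 16))     # percent of the original size
```

Comparing `size_ratio_percent` of an actual compressed file with `entropy_bound_percent` of the same image gives a quick indication of how close a codec comes to this reference level.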

Figure 11.

The entropy of three exposure levels of noisy Vis and NIR images (left) and their noiseless variants (right).

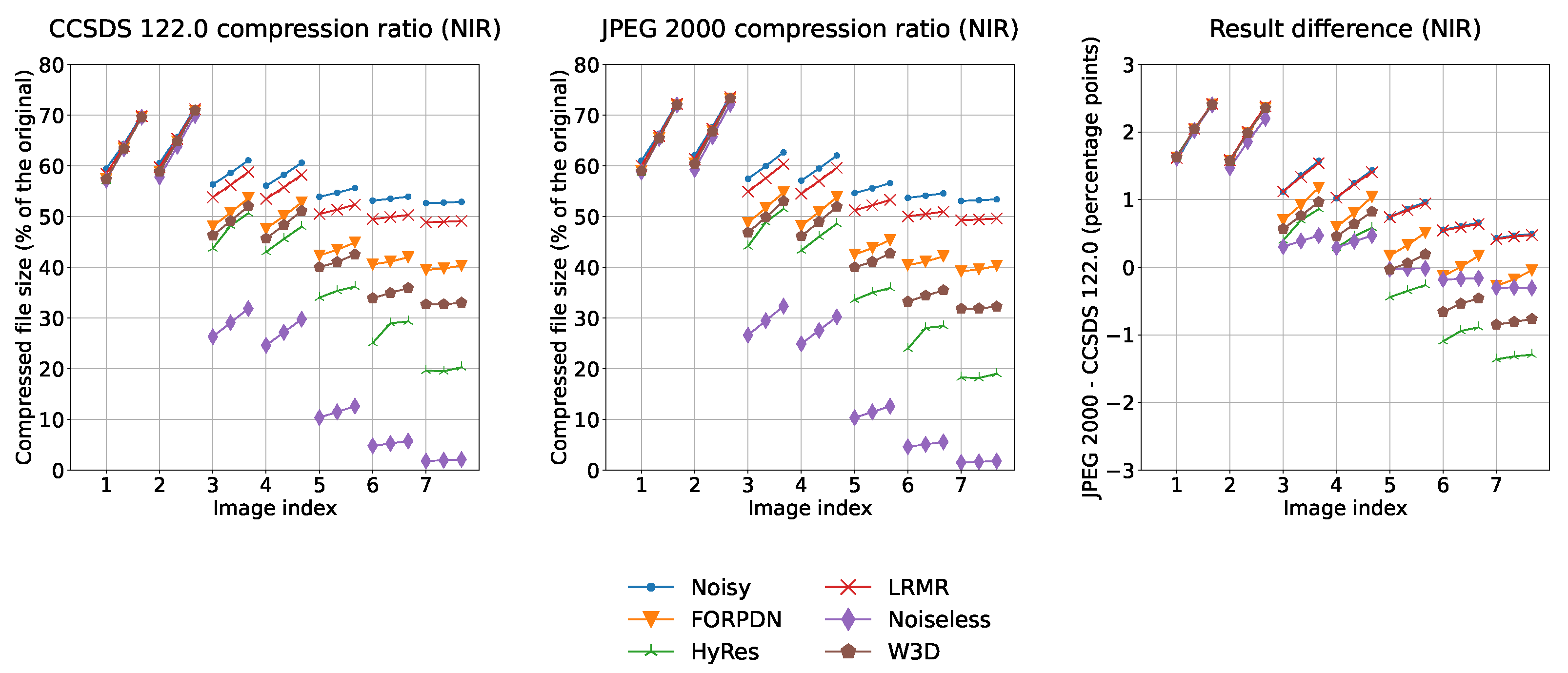

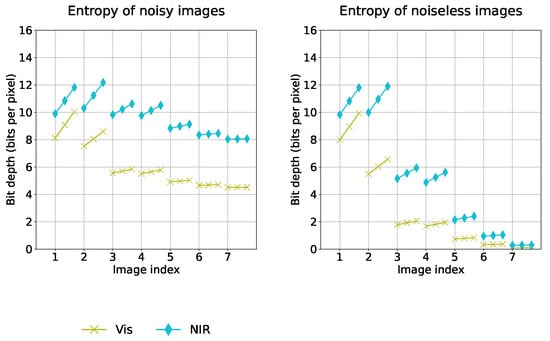

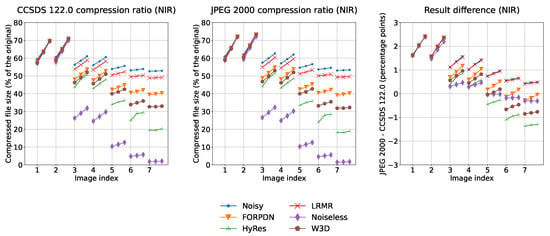

Results for the NIR images follow a similar trend. However, Figure 12 shows that the overall compression performance is reduced by approximately 20 pp for all NIR images relative to Vis. Additionally, relative differences in the compression ratio between asteroid and space-dominated images are reduced. This effect is intensified by differential encoding resulting in compression ratios of slightly under 60% independent of the scene. Figure 13 shows that, unlike with differential Vis images, noise filtering has a noticeable positive effect on asteroid-dominated differential NIR images. Moreover, these differential NIR images are increasingly affected by exposure time resulting in a compression ratio variation of up to 15 pp between different exposure times.

Figure 12.

Performance of the CCSDS 122.0 and JPEG 2000 compression algorithms on three exposure levels of filtered and noisy near-infrared images. Filtering is performed with FORPDN, HyRes, LRMR and W3D.

Figure 13.

The performance of the CCSDS 122.0 and JPEG 2000 compression algorithms on three exposure levels of filtered and noisy differentially encoded near-infrared images. Filtering is performed with FORPDN, HyRes, LRMR and W3D.

Table 2 shows that while both algorithms compress images in a comparable amount of time, CCSDS 122.0 is faster than JPEG 2000 in the majority of cases. The slowest Vis compression takes JPEG 2000 442 ms, whereas the slowest CCSDS 122.0 compression takes less than 327 ms. Both CCSDS 122.0 and JPEG 2000 exhibit a significant difference in compression time between Vis and NIR images, whereas differential encoding only slightly decreases compression time.

Table 2.

The minimum, maximum and mean compression times of the CCSDS 122.0 and JPEG 2000 compression algorithms for the Vis, differentially encoded Vis, NIR and differentially encoded NIR image sets.

A significant difference in memory usage between the algorithms is visible in Table 3. CCSDS 122.0 compression uses 2124 KB of memory for all NIR images, while JPEG 2000 uses between 1966 KB and 2264 KB. However, compression of Vis images with CCSDS 122.0 requires 6765 KB. This allocation is almost twice the memory usage of JPEG 2000, which varies between 3409 KB and 3695 KB. Differential encoding has no effect on CCSDS 122.0 memory usage and only slightly reduces the memory usage of JPEG 2000.

Table 3.

The minimum, maximum and mean memory usage of the CCSDS 122.0 and JPEG 2000 compression algorithms for the Vis, differentially encoded Vis, NIR and differentially encoded NIR image sets.

4. Discussion

4.1. Interpretation of Results

The performance analysis shows that both algorithms, JPEG 2000 and CCSDS 122.0, produce results within pp of each other when compressing hyperspectral image data. CCSDS 122.0 performed up to pp better with noisy images, whereas JPEG 2000 is superior with noise-filtered images. In the case of original noiseless images, both algorithms behave similarly. These differences are more apparent in the compression of simulated NIR images, as they exhibit higher entropy than Vis images, and are exacerbated by noise filtering and differential encoding. Our findings are consistent with those of [32,33], which focused on the compression of Earth observation images. Their research shows that lossless JPEG 2000 performs up to better than CCSDS 122.0 but compresses up to two times slower.

Every tested filter reduces the compressed data volume compared to the original noisy counterparts. However, no filter completely removed the noise or achieved compression ratios comparable to those of noiseless images. This discrepancy is probably due to the filters not removing all the noise or the filters themselves introducing some artifacts that negatively affect compression. Interestingly, although CCSDS 122.0 and JPEG 2000 produced nearly identical compression ratios for noiseless images, JPEG 2000 benefited from noise filtering more than CCSDS 122.0 and performed up to 2 pp better in some cases.

The most significant benefit was reached by differentially encoding space-dominated images filtered with HyRes. These operations resulted in JPEG 2000 compressing image index no. 7 approximately 2.0 pp better than CCSDS 122.0. However, CCSDS 122.0 performed pp better when compressing asteroid-dominated images without differential encoding. In all other test cases, the difference between compression ratios remained less than 2.5 pp. Timing these operations showed that, on average, NIR images compress three times as fast as Vis images. This is expected, as NIR images are a third of the size of Vis images. CCSDS 122.0 was approximately 50 ms faster than JPEG 2000 when compressing Vis images and approximately 25 ms faster with NIR images. Furthermore, differential encoding shortened the compression time by around 20 ms. On average, these absolute time differences give CCSDS 122.0 a compression-time advantage of roughly a factor of 1.3 over JPEG 2000.
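The idea behind differential encoding can be sketched as follows, assuming that differences are taken between consecutive spectral bands of a datacube. This is a simplified illustration with hypothetical function names and data; the differential encoding code used in the study may be organized differently.

```python
def diff_encode(bands):
    """Keep the first band; replace each later band by its difference from the previous one."""
    encoded = [list(bands[0])]
    for prev, cur in zip(bands, bands[1:]):
        encoded.append([c - p for c, p in zip(cur, prev)])
    return encoded


def diff_decode(encoded):
    """Invert diff_encode by cumulatively adding the stored differences."""
    bands = [list(encoded[0])]
    for delta in encoded[1:]:
        bands.append([p + d for p, d in zip(bands[-1], delta)])
    return bands


# Hypothetical 3-band cube with 4 pixels per band (flattened), in digital numbers.
cube = [
    [100, 102, 98, 101],
    [103, 105, 100, 104],
    [104, 107, 101, 106],
]

encoded = diff_encode(cube)
assert diff_decode(encoded) == cube  # the transform is lossless
print(encoded[1:])  # → [[3, 3, 2, 3], [1, 2, 1, 2]]
```

Because neighboring bands are strongly correlated, the residual bands contain small values that are cheaper to compress. The differences can be negative, so a practical implementation needs a signed representation or an offset.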

Memory consumption between algorithms differs by a factor of two when the DWT coefficients can be stored as 8-bit integers. Compressing Vis images with JPEG 2000 uses 3580 KB, whereas CCSDS 122.0 uses 6765 KB. This is likely due to the implementation of CCSDS 122.0, which allocates memory based purely on the size of the image regardless of its entropy. Every pixel is internally treated as a 16-bit integer, even when the data entropy would require fewer bits. Additionally, all allocations are made at the start of the program and reused throughout to simplify porting the algorithm to embedded platforms where dynamic allocation is not available. CCSDS 122.0 always assumes the worst-case scenario, in which the maximum amount of memory is required. This enables accurate prediction of the algorithm's behavior at the expense of an increased memory footprint. JPEG 2000 manages memory allocations dynamically based on the size and content of the images. This reduces memory consumption but hinders the predictability of the algorithm and increases code complexity.
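The factor-of-two difference can be illustrated with a simplified sketch that compares a fixed worst-case buffer with a content-dependent one. The function names, the frame dimensions and the 8-bit test on unsigned values are assumptions made for illustration. The sketch does not reproduce the allocator of either tested implementation, and it ignores the fact that DWT coefficients are signed and can exceed the input range.

```python
def fixed_buffer_bytes(width, height, bytes_per_sample=2):
    """Worst-case allocation: every sample is stored at a fixed width (16 bits here)."""
    return width * height * bytes_per_sample


def adaptive_buffer_bytes(samples, width, height):
    """Content-dependent allocation: 1 byte per sample if all values fit in 8 bits, else 2."""
    fits_in_8_bits = all(0 <= s <= 255 for s in samples)
    return width * height * (1 if fits_in_8_bits else 2)


# Hypothetical 512x512 frame; the dimensions are placeholders, not the test-image sizes.
width, height = 512, 512
low_range = [17] * (width * height)     # values fit in 8 bits
high_range = [4000] * (width * height)  # values need 16 bits

print(fixed_buffer_bytes(width, height) // 1024, "KB fixed")
print(adaptive_buffer_bytes(low_range, width, height) // 1024, "KB adaptive, 8-bit content")
print(adaptive_buffer_bytes(high_range, width, height) // 1024, "KB adaptive, 16-bit content")
```

The fixed scheme always reports the same figure, which makes the footprint predictable before any data arrive. The adaptive scheme halves the buffer only when the content allows it, so its footprint cannot be known without inspecting the image.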

The use of simulated data and noise reduction methods provides a more detailed view of the effect of noise on both algorithms. Notably, even though both algorithms performed comparably well, the tested implementation of CCSDS 122.0 is only 1513 lines of code compared to the 34,413 lines of the JPEG 2000 implementation. This makes the CCSDS 122.0 implementation a strong candidate for on-board processing in hardware-limited environments.

4.2. Limitations and Future Prospects

The comparison of JPEG 2000 and CCSDS 122.0 offers numerous avenues for future research. First, a comparison of the throughput of both algorithms is needed. Throughput is an exceedingly important aspect in time-limited missions, and such tests would provide further insight for entities seeking to implement image compression on resource-limited hardware. Second, an analysis should be performed on whether the computational complexity of JPEG 2000 is worth the gains in compression ratio. This would aid in considering the feasibility of hardware-accelerating parts of both compression algorithms even in situations where time and processing power are abundant. Finally, additional development of the CCSDS 122.0 implementation should include input data streaming and the validation of lossy compression.

Alternative options for preprocessing methods should also be considered in the future. One potential method consists of spectral unmixing autoencoders (SUAs). The SUAs can be modeled using neural networks performing differential encoding and noise reduction. This reduces computational complexity as neural networks do not require matrix decompositions or other advanced matrix operations. However, training these networks requires a significant amount of tailored data, which are not easily modeled or readily available [34,35].

5. Conclusions

The JPEG 2000 and CCSDS 122.0 algorithms were implemented and tested on synthetic hyperspectral images of the Didymos–Dimorphos asteroids. Both algorithms show a consistent reduction in hyperspectral image data volume. A few trends were observed. While CCSDS 122.0 performed on average 1–2 pp better in compressing the noise-containing images, JPEG 2000 offered 1–2 pp greater compression performance with noise-filtered images. The original noiseless images were compressed similarly with both algorithms. Compared to JPEG 2000, CCSDS 122.0 has the advantages of a binary code size more than 15 times smaller and approximately 1.3 times faster image compression. Regarding memory usage, JPEG 2000 has the advantage of dynamic bit depth reduction of its internal data structures from 16 to 8 bits when the image entropy allows this. For this reason, JPEG 2000 Vis image compression used half the memory of the CCSDS 122.0 case, where 16-bit mode was kept. With NIR images, both algorithms worked in full 16-bit mode, resulting in similar memory demands. Additional noise pre-filtering, especially with 3D wavelet-based algorithms such as FORPDN or HyRes, reduced the image size by half. The benefit of differential encoding is that it reduces the dependence of compression performance on asteroid coverage, resulting in more consistent and predictable data volume savings. When choosing an image compression algorithm, the advantages and disadvantages exhibited by both algorithms need to be weighed on a case-by-case basis, and further tests should be performed on mission-specific hardware.

Author Contributions

Conceptualization, K.S., T.K. (Tomáš Kohout) and J.P.; methodology, K.S.; software, K.S., T.K. (Tomáš Kašpárek), A.P. and M.W.; validation, K.S. and T.K. (Tomáš Kohout); formal analysis, K.S., T.K. (Tomáš Kohout), T.K. (Tomáš Kašpárek), A.P. and M.W.; investigation, K.S., T.K. (Tomáš Kohout), T.K. (Tomáš Kašpárek), A.P. and M.W.; resources, T.K. (Tomáš Kohout), A.P. and J.P.; data curation, K.S.; writing—original draft preparation, K.S., T.K. (Tomáš Kohout), T.K. (Tomáš Kašpárek), A.P. and M.W.; writing—review and editing, K.S., T.K. (Tomáš Kašpárek), A.P., M.W. and J.P.; visualization, K.S. and A.P.; supervision, T.K. (Tomáš Kohout) and J.P.; project administration, T.K. (Tomáš Kohout) and J.P.; funding acquisition, T.K. (Tomáš Kohout), A.P., M.W. and J.P. All authors have read and agreed to the published version of the manuscript.

Funding

We acknowledge the funding provided by the European Union’s Renewing and Competent Finland 2021–2027 Just Transition Fund under the Finnish Future Farm project R-00791, ESA EXPRO project No. 4000139060, Academy of Finland project No. 335595 and institutional support RVO 67985831 of the Institute of Geology of the Czech Academy of Sciences. The presented work was performed as part of and financed by the Finnish Centre of Excellence in Research of Sustainable Space (FORESAIL), which is a project under the Research Council of Finland decision numbers 336805 to 336809.

Data Availability Statement

The CCSDS 122.0 implementation used can be found at https://github.com/Skooogi/ccsds-122.0-B-2.git (accessed on 26 February 2025) and the JasPer library at https://github.com/jasper-software/jasper.git (accessed on 26 February 2025). The synthetic asteroid images used in the tests are available at https://doi.org/10.5281/zenodo.13944328 (accessed on 26 February 2025). The code for differential encoding is available at https://github.com/tkas/diff_enc (accessed on 26 February 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ESA | European Space Agency |
| CI | Comet Interceptor |
| OPIC | Optical Periscopic Imager for Comets |
| EnVisS | Entire Visible Sky |
| ASPECT | Asteroid Spectral Imager |
| SP | Science Programme |
| FoV | Field of View |
| DHU | Data Handling Unit |
| MCU | Microcontroller Unit |
| S2P | Space Safety and Security Programme |
| DART | Double Asteroid Redirection Test |
| Vis | Visible |
| SWIR | Short-Wave Infrared |
| DPU | Data Processing Unit |
| DPCM | Differential Pulse-Code Modulation |
| SPOT | Satellite Pour l’Observation de la Terre |
| DCT | Discrete Cosine Transform |
| JPEG | Joint Photographic Experts Group |
| DWT | Discrete Wavelet Transform |
| CCSDS | Consultative Committee for Space Data Systems |
| CDF | Cohen–Daubechies–Feauveau |
| RCT | Reversible Component Transform |
| LGT | Le Gall–Tabatabai |
| ICT | Irreversible Component Transform |
| EBCOT | Embedded Block Coding with Optimal Truncation Points |
| AIS | Asteroid Image Simulator |
| DN | Digital Numbers |
| RAM | Random Access Memory |
| FORPDN | First Order Spectral Roughness Penalty Denoising |
| HyRes | Hyperspectral Restoration |
| LRMR | Low-Rank Matrix Recovery |
| SURE | Stein’s Unbiased Risk Estimator |

References

1. Jones, G.H.; Snodgrass, C.; Tubiana, C.; Küppers, M.; Kawakita, H.; Lara, L.M.; Agarwal, J.; André, N.; Attree, N.; Auster, U.; et al. The Comet Interceptor mission. Space Sci. Rev. 2024, 220, 9.
2. Michel, P.; Küppers, M.; Bagatin, A.C.; Carry, B.; Charnoz, S.; De Leon, J.; Fitzsimmons, A.; Gordo, P.; Green, S.F.; Hérique, A.; et al. The ESA Hera mission: Detailed characterization of the DART impact outcome and of the binary asteroid (65803) Didymos. Planet. Sci. J. 2022, 3, 160.
3. Daly, R.; Ernst, C.; Barnouin, O.; Chabot, N.; Rivkin, A.; Cheng, A.; Adams, E.; Agrusa, H.; Abel, E.; Alford, A.; et al. Successful kinetic impact into an asteroid for planetary defence. Nature 2023, 616, 443–447.
4. Kohout, T.; Näsilä, A.; Tikka, T.; Granvik, M.; Kestilä, A.; Penttilä, A.; Kuhno, J.; Muinonen, K.; Viherkanto, K.; Kallio, E. Feasibility of asteroid exploration using CubeSats—ASPECT case study. Adv. Space Res. 2018, 62, 2239–2244.
5. Kumar, A.; Kumaran, R.; Paul, S.; Mehta, S. ADPCM Image Compression Techniques for Remote Sensing Applications. Int. J. Inf. Eng. Electron. Bus. 2015, 7, 26.
6. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.
7. Lier, P.; Moury, G.A.; Latry, C.; Cabot, F. Selection of the SPOT5 image compression algorithm. In Earth Observing Systems III; SPIE: Bellingham, WA, USA, 1998; Volume 3439, pp. 541–552.
8. Wallace, G.K. The JPEG still picture compression standard. IEEE Trans. Consum. Electron. 1992, 38, xviii–xxxiv.
9. McEwen, A.; Robinson, M. Mapping of the Moon by Clementine. Adv. Space Res. 1997, 19, 1523–1533.
10. Berghmans, D.; Hochedez, J.; Defise, J.; Lecat, J.; Nicula, B.; Slemzin, V.; Lawrence, G.; Katsyiannis, A.; Van der Linden, R.; Zhukov, A.; et al. SWAP onboard PROBA 2, a new EUV imager for solar monitoring. Adv. Space Res. 2006, 38, 1807–1811.
11. Kumar, G.; Brar, E.S.S.; Kumar, R.; Kumar, A. A review: DWT-DCT technique and arithmetic-Huffman coding based image compression. Int. J. Eng. Manuf. 2015, 5, 20.
12. Zhang, D.; Zhang, D. Wavelet transform. In Fundamentals of Image Data Mining: Analysis, Features, Classification and Retrieval; Springer Nature: Berlin/Heidelberg, Germany, 2019; pp. 35–44.
13. Boliek, M. JPEG2000 Part I Final Draft International Standard; (ISO/IEC FDIS15444-1), ISO/IEC JTC1/SC29/WG1 N1855; International Organization for Standardization: Geneva, Switzerland, 2000.
14. Image Data Compression; Image Data Compression Blue Book; CCSDS Secretariat National Aeronautics and Space Administration: Washington, DC, USA, 2005.
15. Vonesch, C.; Blu, T.; Unser, M. Generalized Daubechies wavelet families. IEEE Trans. Signal Process. 2007, 55, 4415–4429.
16. Perfetto, S.; Wilder, J.; Walther, D.B. Effects of spatial frequency filtering choices on the perception of filtered images. Vision 2020, 4, 29.
17. Adams, M.D.; Ward, R.K. JasPer: A portable flexible open-source software tool kit for image coding/processing. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, ICASSP 2004, Montreal, QC, Canada, 17–21 May 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 241–244.
18. ISO/IEC 15444-5:2003; Information Technology — JPEG 2000 Image Coding System: Reference Software Part 5. International Organization for Standardization: Geneva, Switzerland, 2003.
19. Unser, M.; Blu, T. Mathematical properties of the JPEG2000 wavelet filters. IEEE Trans. Image Process. 2003, 12, 1080–1090.
20. Delaunay, X.; Chabert, M.; Charvillat, V.; Morin, G. Satellite image compression by post-transforms in the wavelet domain. Signal Process. 2010, 90, 599–610.
21. Daly, R.; Ernst, C.; Barnouin, O.; Gaskell, R.; Nair, H.; Agrusa, H.; Chabot, N.; Cheng, A.; Dotto, E.; Mazzotta Epifani, E.; et al. An Updated Shape Model of Dimorphos from DART Data. Planet. Sci. J. 2024, 5, 24.
22. de León, J.; Licandro, J.; Serra-Ricart, M.; Pinilla-Alonso, N.; Campins, H. Observations, compositional, and physical characterization of near-Earth and Mars-crosser asteroids from a spectroscopic survey. Astron. Astrophys. 2010, 517, A23.
23. Rasti, B.; Scheunders, P.; Ghamisi, P.; Licciardi, G.; Chanussot, J. Noise reduction in hyperspectral imagery: Overview and application. Remote Sens. 2018, 10, 482.
24. Basuhail, A.A.; Kozaitis, S.P. Wavelet-based noise reduction in multispectral imagery. In Algorithms for Multispectral and Hyperspectral Imagery IV; SPIE: Bellingham, WA, USA, 1998; Volume 3372, pp. 234–240.
25. Rasti, B.; Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. Hyperspectral image denoising using first order spectral roughness penalty in wavelet domain. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 7, 2458–2467.
26. Rasti, B.; Sveinsson, J.R.; Ulfarsson, M.O.; Sigurdsson, J. First order roughness penalty for hyperspectral image denoising. In Proceedings of the 2013 5th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Gainesville, FL, USA, 26–28 June 2013; pp. 1–4.
27. Rasti, B.; Ulfarsson, M.O.; Ghamisi, P. Automatic Hyperspectral Image Restoration Using Sparse and Low-Rank Modeling. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2335–2339.
28. Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4729–4743.
29. Rasti, B.; Koirala, B.; Scheunders, P.; Ghamisi, P. How hyperspectral image unmixing and denoising can boost each other. Remote Sens. 2020, 12, 1728.
30. Nguyen, H.V.; Ulfarsson, M.O.; Sveinsson, J.R. Sure based convolutional neural networks for hyperspectral image denoising. In Proceedings of the IGARSS 2020–2020 IEEE International Geoscience and Remote Sensing Symposium, IEEE, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 1784–1787.
31. Coquelin, D.; Rasti, B.; Götz, M.; Ghamisi, P.; Gloaguen, R.; Streit, A. Hyde: The first open-source, python-based, gpu-accelerated hyperspectral denoising package. In Proceedings of the 2022 12th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), IEEE, Rome, Italy, 13–16 September 2022; pp. 1–5.
32. Zabala, A.; Vitulli, R.; Pons, X. Impact of CCSDS-IDC and JPEG 2000 Compression on Image Quality and Classification. J. Electr. Comput. Eng. 2012, 2012, 761067.
33. Indradjad, A.; Nasution, A.S.; Gunawan, H.; Widipaminto, A. A comparison of Satellite Image Compression methods in the Wavelet Domain. IOP Conf. Ser. Earth Environ. Sci. 2019, 280, 012031.
34. Palsson, B.; Sigurdsson, J.; Sveinsson, J.R.; Ulfarsson, M.O. Hyperspectral Unmixing Using a Neural Network Autoencoder. IEEE Access 2018, 6, 25646–25656.
35. Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A. Sparse Unmixing of Hyperspectral Data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).