WMFA-AT: Adaptive Teacher with Weighted Multi-Layer Feature Alignment for Cross-Domain UAV Object Detection

Highlights

- The proposed method effectively mitigates domain discrepancies in UAV object detection by integrating teacher–student mutual learning, domain adversarial learning, and weighted multi-layer feature alignment.

- Experimental results on four challenging UAV cross-domain benchmarks—covering cross-time, cross-camera, cross-view, and cross-weather scenarios—demonstrate that WMFA-AT consistently improves detection accuracy and robustness under severe domain shifts.

- The proposed method enables accurate UAV object detection in unseen domains without requiring additional bounding box annotations, substantially reducing the cost of manual labeling for new environments.

- The effectiveness of the weighted multi-layer feature alignment strategy highlights a new direction for designing domain-adaptive detection frameworks capable of generalizing across complex aerial imaging conditions.

Abstract

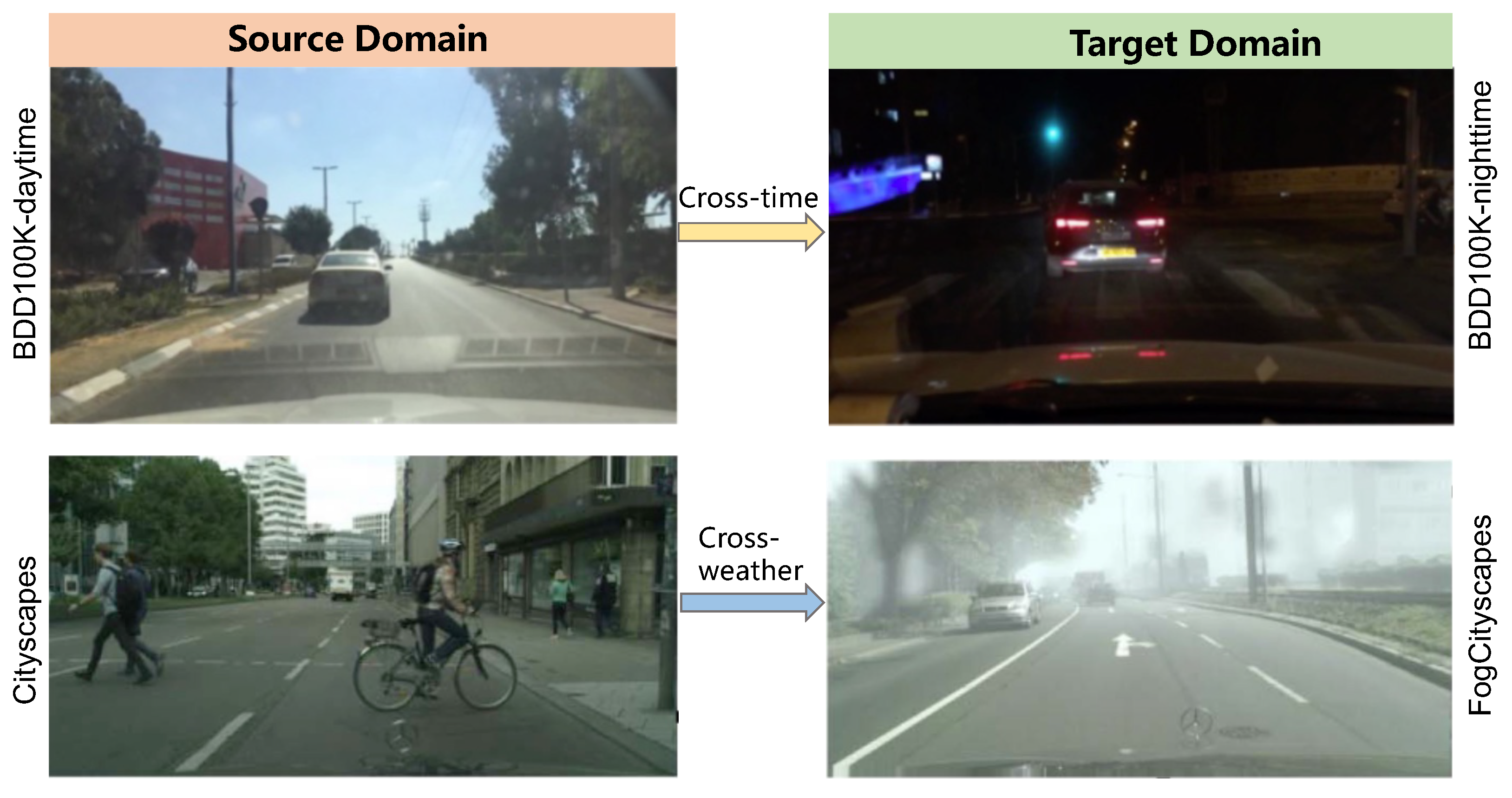

1. Introduction

- 1.

- We propose a novel teacher–student framework (WMFA-AT) for cross-domain UAV object detection that requires no bounding box annotations in the target domain, thereby significantly reducing annotation costs while maintaining high detection accuracy.

- 2.

- A weighted multi-layer feature adaptive alignment mechanism is introduced, enabling layer-wise domain adaptation based on estimated transferability. This approach effectively enhances pseudo-label quality and improves model generalization under complex domain shifts.

- 3.

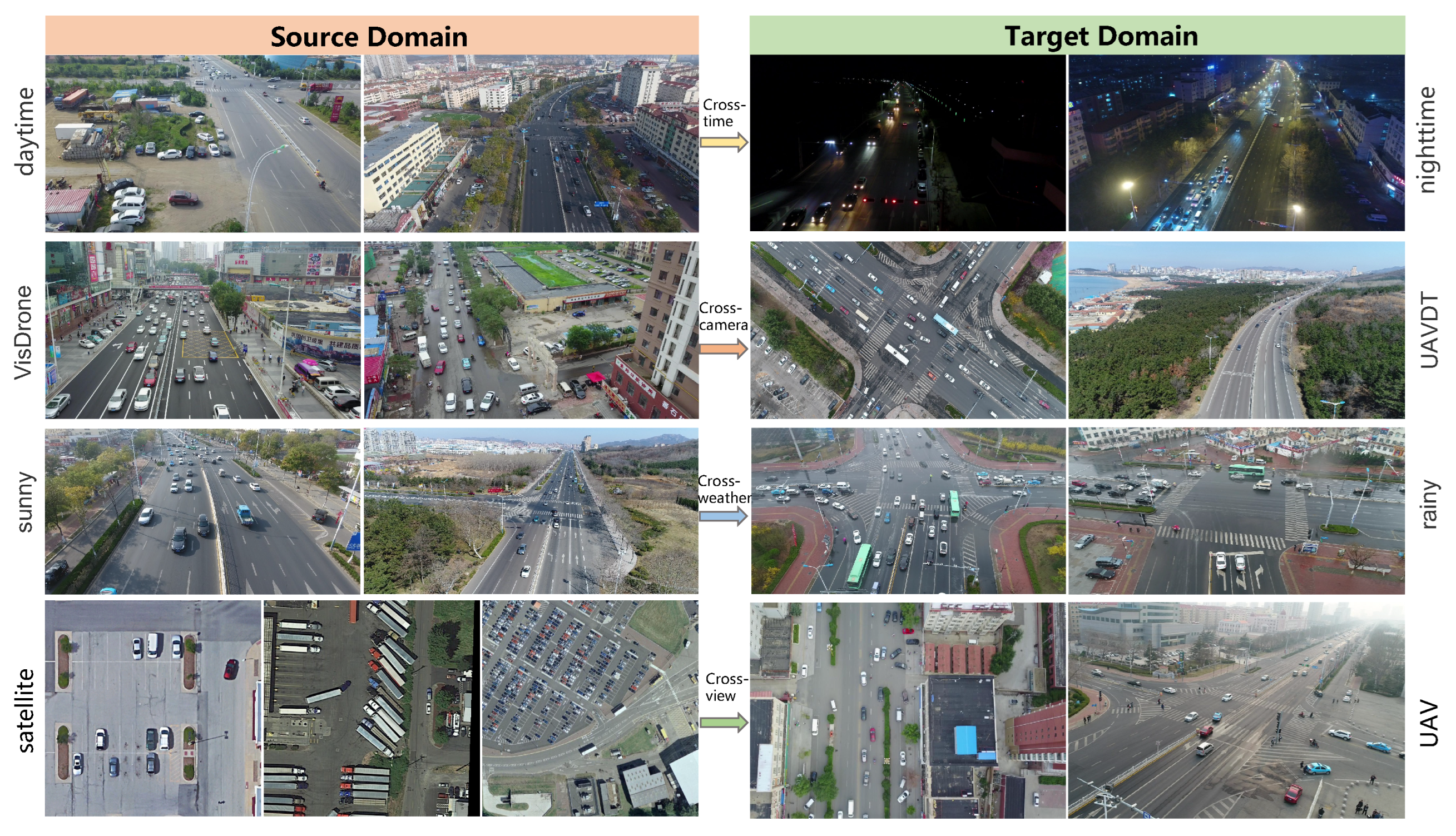

- We construct four challenging UAV cross-domain datasets spanning cross-time, cross-camera, cross-view, and cross-weather scenarios to comprehensively validate our approach. Extensive experiments demonstrate that WMFA-AT consistently outperforms state-of-the-art methods across all scenarios, highlighting its robustness and versatility.
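The idea of layer-wise alignment weighted by estimated transferability (contribution 2) can be illustrated with a minimal sketch. It assumes that each aligned backbone layer has its own domain discriminator that outputs per-sample source probabilities, that each layer's adversarial loss is binary cross-entropy (as in gradient-reversal domain adversarial training), and that transferability is estimated from the entropy of each discriminator's outputs. The entropy-based weighting rule and all function names (`layer_domain_loss`, `transferability_weights`, `weighted_alignment_loss`) are hypothetical illustrations, not the authors' formulation.

```python
import math
from typing import List

EPS = 1e-7  # keeps log() finite when a probability is exactly 0 or 1


def _clip(p: float) -> float:
    return min(max(p, EPS), 1.0 - EPS)


def layer_domain_loss(p_src: List[float], labels: List[int]) -> float:
    """Binary cross-entropy of one layer's domain discriminator.

    p_src[i]: predicted probability that sample i comes from the source domain.
    labels[i]: 1 for source, 0 for target.
    """
    total = 0.0
    for p, y in zip(p_src, labels):
        p = _clip(p)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(p_src)


def mean_binary_entropy(p_src: List[float]) -> float:
    """Average binary entropy (in nats) of a discriminator's outputs."""
    h = 0.0
    for p in p_src:
        p = _clip(p)
        h -= p * math.log(p) + (1.0 - p) * math.log(1.0 - p)
    return h / len(p_src)


def transferability_weights(per_layer_probs: List[List[float]],
                            temperature: float = 1.0) -> List[float]:
    """HYPOTHETICAL rule: a layer whose discriminator outputs have low entropy
    (domains still easy to separate, i.e. low transferability) receives a
    larger alignment weight. Weights are a softmax over (ln 2 - entropy)."""
    max_entropy = math.log(2.0)
    scores = [(max_entropy - mean_binary_entropy(p)) / temperature
              for p in per_layer_probs]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    z = sum(exps)
    return [e / z for e in exps]


def weighted_alignment_loss(per_layer_probs: List[List[float]],
                            labels: List[int]) -> float:
    """L_adv = sum over layers of w_l * L_dis^l, with w_l treated as constants."""
    weights = transferability_weights(per_layer_probs)
    return sum(w * layer_domain_loss(p, labels)
               for w, p in zip(weights, per_layer_probs))


labels = [1, 1, 0, 0]  # two source samples, two target samples
per_layer_probs = [
    [0.55, 0.50, 0.45, 0.50],  # shallow layer: domains nearly indistinguishable
    [0.95, 0.90, 0.10, 0.05],  # deep layer: domains easy to separate
]
print("layer weights:", transferability_weights(per_layer_probs))
print("weighted loss:", weighted_alignment_loss(per_layer_probs, labels))
```

In actual adversarial training this scalar would be back-propagated through a gradient reversal layer, so the discriminators minimize it while the backbone maximizes it; the sketch only computes the objective and the weights.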

2. Materials and Methods

2.1. Datasets

2.1.1. Cross-Time UAV Object Detection Dataset

2.1.2. Cross-Camera UAV Object Detection Dataset

2.1.3. Cross-View UAV Object Detection Dataset

2.1.4. Cross-Weather UAV Object Detection Dataset

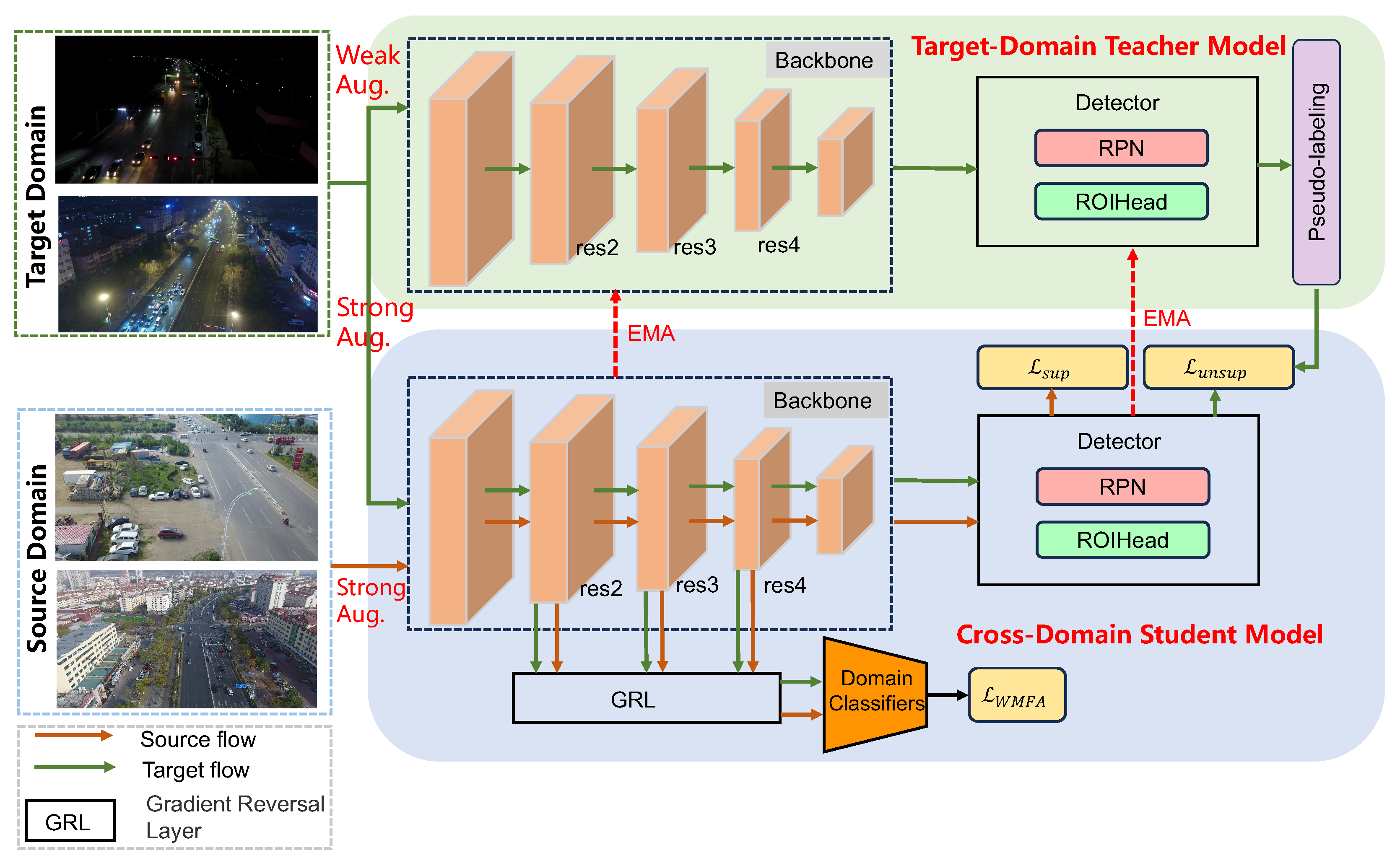

2.2. Methods

2.2.1. Basic Network Architecture

2.2.2. Teacher–Student Mutual Learning Strategy

(1) Model Initialization

(2) Optimizing the Student Model Using Target Pseudo-Labels

(3) Gradually Updating the Teacher Model from the Student Model
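Steps (1) to (3) follow the mean-teacher pattern used by Adaptive Teacher-style detectors. The minimal sketch below assumes that parameters are stored as a dictionary of flat float lists, that teacher detections on target images are kept as pseudo-labels when their confidence exceeds a threshold, and that the teacher is updated as an exponential moving average (EMA) of the student. The threshold 0.8, the EMA rate 0.9996, and all function names are illustrative assumptions, not values reported for WMFA-AT.

```python
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]           # parameter name -> flat values
Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2)
Detection = Tuple[Box, int, float]        # (box, class_id, confidence)


def init_teacher(student: Params) -> Params:
    """Step (1): the teacher starts as a copy of the source-pretrained student."""
    return {name: list(values) for name, values in student.items()}


def filter_pseudo_labels(teacher_dets: List[Detection],
                         threshold: float = 0.8) -> List[Detection]:
    """Step (2): keep confident teacher detections on a target image as
    pseudo-labels for training the student (threshold is an assumption)."""
    return [det for det in teacher_dets if det[2] >= threshold]


def ema_update(teacher: Params, student: Params, alpha: float = 0.9996) -> None:
    """Step (3): theta_t <- alpha * theta_t + (1 - alpha) * theta_s, in place.
    The teacher receives no gradients; it only tracks the student slowly."""
    for name, t_vals in teacher.items():
        s_vals = student[name]
        for i, (t, s) in enumerate(zip(t_vals, s_vals)):
            t_vals[i] = alpha * t + (1.0 - alpha) * s


student = {"backbone.w": [1.0, 2.0]}
teacher = init_teacher(student)
dets = [((0.0, 0.0, 10.0, 10.0), 0, 0.93), ((5.0, 5.0, 8.0, 9.0), 1, 0.41)]
pseudo = filter_pseudo_labels(dets)       # keeps only the 0.93 detection
student["backbone.w"] = [0.0, 0.0]        # stand-in for a student gradient step
ema_update(teacher, student, alpha=0.9)
print(pseudo, teacher["backbone.w"])
```

A small `alpha` is used in the usage lines only so the effect of one update is visible; with a rate close to 1 the teacher changes very slowly, which is what makes its pseudo-labels more stable than the student's own predictions.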

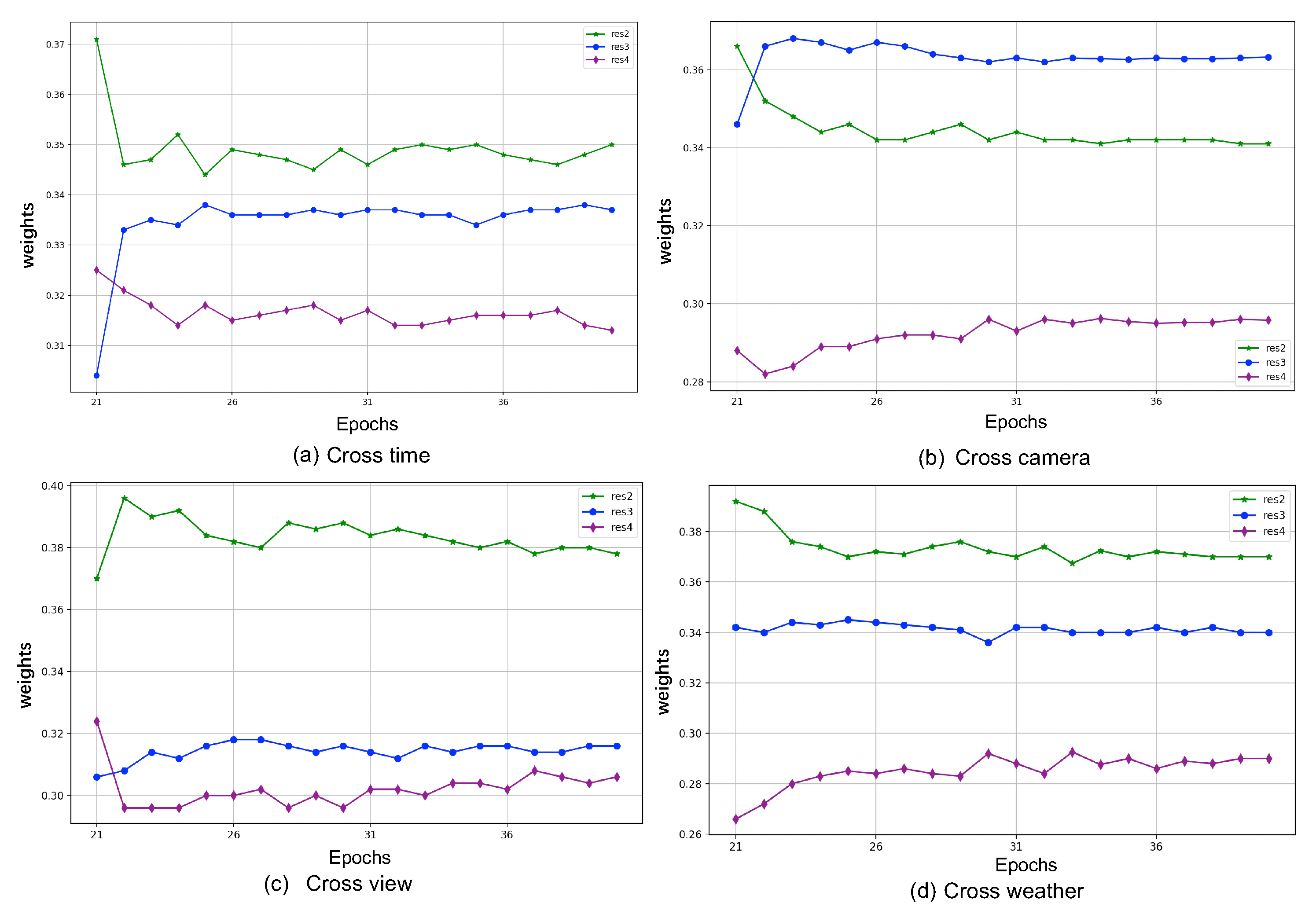

2.3. Weighted Multi-Layer Feature Alignment Strategy for Adversarial Learning

2.4. Loss Function

3. Results

3.1. Comparison Methods

3.2. Implementation Details

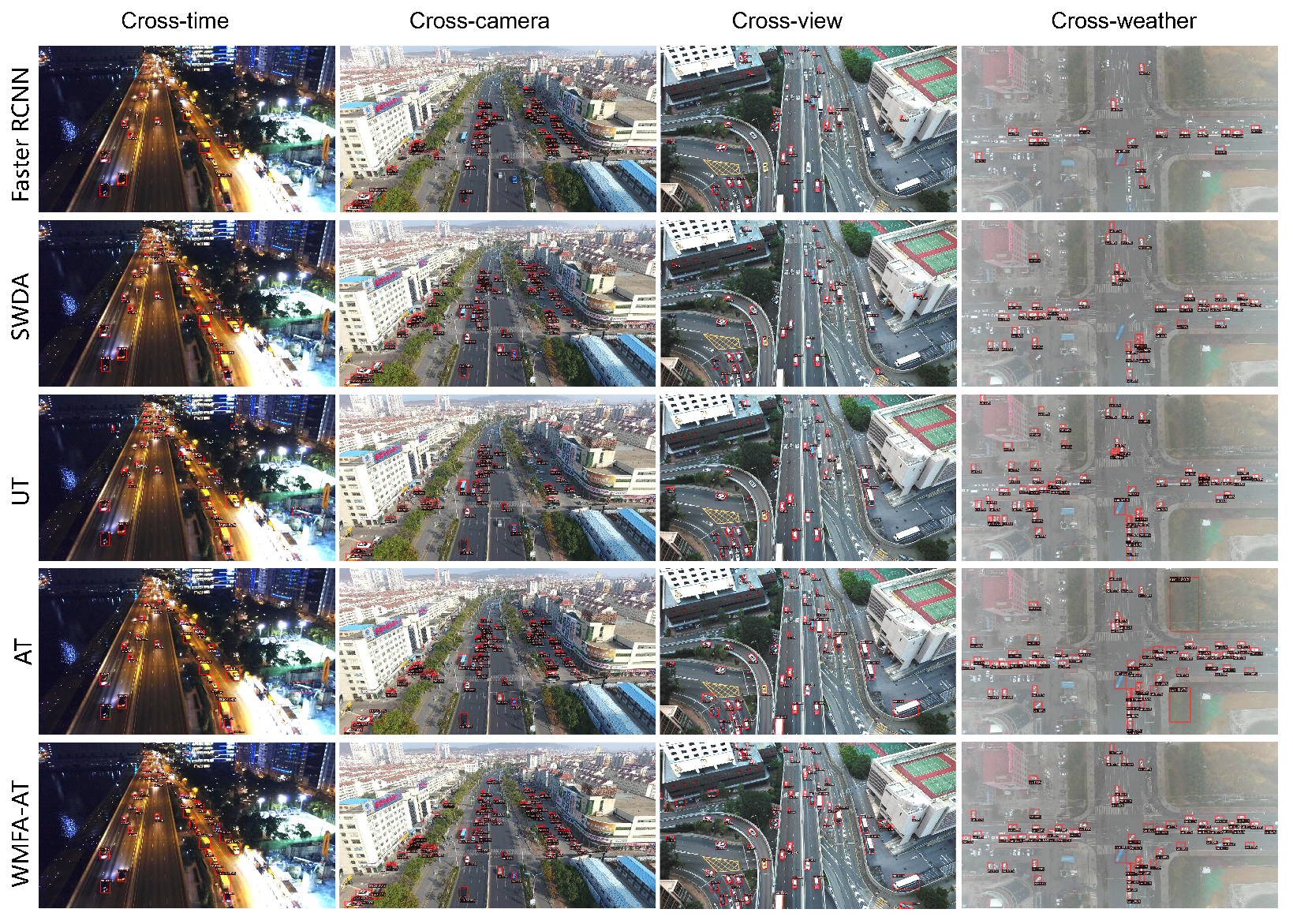

3.3. Quantitative Evaluation

3.3.1. Cross-Time Domain Adaptive Object Detection

3.3.2. Cross-Camera Domain Adaptive Object Detection

3.3.3. Cross-View Domain Adaptive Object Detection

3.3.4. Cross-Weather Domain Adaptive Object Detection

3.4. Qualitative Evaluation

4. Discussion

4.1. Impact of Weighted Multi-Layer Feature Alignment Loss

4.2. Impact of Data Augmentation Strategy

4.3. Impact of and EMA

4.4. Limitations of the Proposed Method

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Weber, I.; Bongartz, J.; Roscher, R. Artificial and Beneficial–Exploiting Artificial Images for Aerial Vehicle Detection. ISPRS J. Photogramm. Remote Sens. 2021, 175, 158–170.

- Lu, Y.; Xue, Z.; Xia, G.S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

- Shao, Z.; Cheng, G.; Li, D.; Huang, X.; Lu, Z.; Liu, J. Spatio-temporal-spectral-angular observation model that integrates observations from UAV and mobile mapping vehicle for better urban mapping. Geo-Spat. Inf. Sci. 2021, 24, 615–629.

- Cheng, G.; Feng, X.; Tian, Y.; Xie, M.; Dang, C.; Ding, Q.; Shao, Z. ASCDet: Cross-space UAV object detection method guided by adaptive sparse convolution. Int. J. Digit. Earth 2025, 18, 2528648.

- Zhao, X.; Yang, Z.; Zhao, H. DCS-YOLOv8: A Lightweight Context-Aware Network for Small Object Detection in UAV Remote Sensing Imagery. Remote Sens. 2025, 17, 2989.

- Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object Detection in Aerial Images: A Large-Scale Benchmark and Challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7778–7796.

- Shao, Z.; Cheng, G.; Ma, J.; Wang, Z.; Wang, J.; Li, D. Real-time and accurate UAV pedestrian detection for social distancing monitoring in COVID-19 pandemic. IEEE Trans. Multimed. 2021, 24, 2069–2083.

- Yu, H.; Wang, J.; Bai, Y.; Yang, W.; Xia, G.S. Analysis of large-scale UAV images using a multi-scale hierarchical representation. Geo-Spat. Inf. Sci. 2018, 21, 33–44.

- Zhang, S.; Shao, Z.; Huang, X.; Bai, L.; Wang, J. An internal-external optimized convolutional neural network for arbitrary orientated object detection from optical remote sensing images. Geo-Spat. Inf. Sci. 2021, 24, 654–665.

- Zhang, Y.; Xie, Y.; Shi, C.; Li, Q.; Yang, B.; Hao, W. Identifying vehicle types from trajectory data based on spatial-semantic information. Geo-Spat. Inf. Sci. 2024, 28, 1757–1773.

- Xu, Z.; Zhao, H.; Liu, P.; Wang, L.; Zhang, G.; Chai, Y. SRTSOD-YOLO: Stronger Real-Time Small Object Detection Algorithm Based on Improved YOLO11 for UAV Imageries. Remote Sens. 2025, 17, 3414.

- Qu, S.; Dang, C.; Chen, W.; Liu, Y. SMA-YOLO: An Improved YOLOv8 Algorithm Based on Parameter-Free Attention Mechanism and Multi-Scale Feature Fusion for Small Object Detection in UAV Images. Remote Sens. 2025, 17, 2421.

- Li, Y.J.; Dai, X.; Ma, C.Y.; Liu, Y.C.; Chen, K.; Wu, B.; He, Z.; Kitani, K.; Vajda, P. Cross-Domain Adaptive Teacher for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7581–7590.

- Fang, F.; Kang, J.; Li, S.; Tian, P.; Liu, Y.; Luo, C.; Zhou, S. Multi-Granularity Domain-Adaptive Teacher for Unsupervised Remote Sensing Object Detection. Remote Sens. 2025, 17, 1743.

- Ben-David, S.; Blitzer, J.; Crammer, K.; Kulesza, A.; Pereira, F.; Vaughan, J.W. A Theory of Learning from Different Domains. Mach. Learn. 2010, 79, 151–175.

- Liu, W.; Liu, J.; Su, X.; Nie, H.; Luo, B. Multi-level domain perturbation for source-free object detection in remote sensing images. Geo-Spat. Inf. Sci. 2024, 28, 1034–1050.

- Zhang, G.; Wang, L.; Chen, Z. A Step-Wise Domain Adaptation Detection Transformer for Object Detection under Poor Visibility Conditions. Remote Sens. 2024, 16, 2722.

- Ma, Y.; Chai, L.; Jin, L.; Yan, J. Hierarchical alignment network for domain adaptive object detection in aerial images. ISPRS J. Photogramm. Remote Sens. 2024, 208, 39–52.

- Chen, Y.; Li, W.; Sakaridis, C.; Dai, D.; Van Gool, L. Domain Adaptive Faster R-CNN for Object Detection in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3339–3348.

- Saito, K.; Ushiku, Y.; Harada, T.; Saenko, K. Strong-Weak Distribution Alignment for Adaptive Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6956–6965.

- Zhao, L.; Wang, L. Task-Specific Inconsistency Alignment for Domain Adaptive Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14217–14226.

- Wang, W.; Zhang, J.; Zhai, W.; Cao, Y.; Tao, D. Robust Object Detection via Adversarial Novel Style Exploration. IEEE Trans. Image Process. 2022, 31, 1949–1962.

- Arruda, V.F.; Berriel, R.F.; Paixão, T.M.; Badue, C.; De Souza, A.F.; Sebe, N.; Oliveira-Santos, T. Cross-Domain Object Detection Using Unsupervised Image Translation. Expert Syst. Appl. 2022, 192, 116334.

- Deng, J.; Li, W.; Chen, Y.; Duan, L. Unbiased Mean Teacher for Cross-Domain Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4091–4101.

- Deng, J.; Xu, D.; Li, W.; Duan, L. Harmonious Teacher for Cross-Domain Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 23829–23838.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Yu, F.; Xian, W.; Chen, Y.; Liu, F.; Liao, M.; Madhavan, V.; Darrell, T. BDD100K: A Diverse Driving Video Database with Scalable Annotation Tooling. arXiv 2018, arXiv:1805.04687.

- He, Z.; Zhang, L. Multi-Adversarial Faster-RCNN for Unrestricted Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6668–6677.

- Hsu, C.C.; Tsai, Y.H.; Lin, Y.Y.; Yang, M.H. Every Pixel Matters: Center-Aware Feature Alignment for Domain Adaptive Object Detector. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12354, pp. 733–748.

- Chen, C.; Zheng, Z.; Ding, X.; Huang, Y.; Dou, Q. Harmonizing Transferability and Discriminability for Adapting Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8869–8878.

- Zheng, Y.; Huang, D.; Liu, S.; Wang, Y. Cross-Domain Object Detection through Coarse-to-Fine Feature Adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13766–13775.

- He, Z.; Zhang, L. Domain Adaptive Object Detection via Asymmetric Tri-Way Faster-RCNN. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12369, pp. 309–324.

- Xu, C.D.; Zhao, X.R.; Jin, X.; Wei, X.S. Exploring Categorical Regularization for Domain Adaptive Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11724–11733.

- Zhao, Z.; Guo, Y.; Shen, H.; Ye, J. Adaptive Object Detection with Dual Multi-label Prediction. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12373, pp. 54–69.

- Lee, D.H. Pseudo-Label: The Simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks. In Proceedings of the Workshop on Challenges in Representation Learning, ICML, Atlanta, GA, USA, 20–21 June 2013; Volume 3, p. 896.

- Laine, S.; Aila, T. Temporal Ensembling for Semi-Supervised Learning. arXiv 2017, arXiv:1610.02242.

- Tarvainen, A.; Valpola, H. Mean Teachers Are Better Role Models: Weight-averaged Consistency Targets Improve Semi-Supervised Deep Learning Results. Adv. Neural Inf. Process. Syst. 2017, 30.

- Zhang, Y.; Xiang, T.; Hospedales, T.M.; Lu, H. Deep Mutual Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4320–4328.

- Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

- Inoue, N.; Furuta, R.; Yamasaki, T.; Aizawa, K. Cross-Domain Weakly-Supervised Object Detection through Progressive Domain Adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5001–5009.

- Khodabandeh, M.; Vahdat, A.; Ranjbar, M.; Macready, W.G. A Robust Learning Approach to Domain Adaptive Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 480–490.

- Cai, Q.; Pan, Y.; Ngo, C.W.; Tian, X.; Duan, L.; Yao, T. Exploring Object Relation in Mean Teacher for Cross-Domain Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11457–11466.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Chen, M.; Chen, W.; Yang, S.; Song, J.; Wang, X.; Zhang, L.; Yan, Y.; Qi, D.; Zhuang, Y.; Xie, D.; et al. Learning Domain Adaptive Object Detection with Probabilistic Teacher. arXiv 2022, arXiv:2206.06293.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object Detection in Optical Remote Sensing Images: A Survey and a New Benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386.

- Liu, Y.C.; Ma, C.Y.; He, Z.; Kuo, C.W.; Chen, K.; Zhang, P.; Wu, B.; Kira, Z.; Vajda, P. Unbiased Teacher for Semi-Supervised Object Detection. arXiv 2021, arXiv:2102.09480.

- Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 1180–1189.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Adv. Neural Inf. Process. Syst. 2015, 28.

- Xu, Y.; Sun, Y.; Yang, Z.; Miao, J.; Yang, Y. H2FA R-CNN: Holistic and Hierarchical Feature Alignment for Cross-Domain Weakly Supervised Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14329–14339.

- Cao, S.; Joshi, D.; Gui, L.Y.; Wang, Y.X. Contrastive Mean Teacher for Domain Adaptive Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 23839–23848.

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 1 April 2024).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

| Dataset | Image Num | People | Car | Van | Truck | Bus | Motor | Instance Num |
|---|---|---|---|---|---|---|---|---|
| VisDrone_daytime_to_night_source | 6000 | 109,422 | 133,589 | 24,235 | 12,198 | 6565 | 28,947 | 314,956 |
| VisDrone_daytime_to_night_target | 1200 | 8706 | 21,065 | 3060 | 1695 | 1497 | 2359 | 38,382 |

| Dataset | Image Num | Car | Truck | Bus | Instance Num |
|---|---|---|---|---|---|
| UAVDT_daytime_to_night_source | 8000 | 157,901 | 6298 | 3815 | 168,014 |
| UAVDT_daytime_to_night_target | 2000 | 22,968 | 264 | 624 | 23,856 |

| Dataset | Image Num | Car | Truck | Bus | Instance Num |
|---|---|---|---|---|---|
| VisDrone_to_UAVDT_source_training | 6000 | 134,500 | 12,044 | 5528 | 152,072 |
| VisDrone_to_UAVDT_target_training | 6000 | 97,879 | 4313 | 2622 | 104,814 |
| VisDrone_to_UAVDT_target_testing | 2000 | 44,195 | 918 | 913 | 46,026 |
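Each "Instance Num" entry in the cross-time and cross-camera dataset tables equals the sum of the per-class counts in its row. The short check below reproduces that arithmetic; the counts are copied from the tables, and the dictionary layout and the `check_totals` helper are only illustrative.

```python
# split name -> (per-class instance counts, reported "Instance Num"), copied from the tables
SPLITS = {
    "VisDrone_daytime_to_night_source": ([109_422, 133_589, 24_235, 12_198, 6_565, 28_947], 314_956),
    "VisDrone_daytime_to_night_target": ([8_706, 21_065, 3_060, 1_695, 1_497, 2_359], 38_382),
    "UAVDT_daytime_to_night_source": ([157_901, 6_298, 3_815], 168_014),
    "UAVDT_daytime_to_night_target": ([22_968, 264, 624], 23_856),
    "VisDrone_to_UAVDT_source_training": ([134_500, 12_044, 5_528], 152_072),
    "VisDrone_to_UAVDT_target_training": ([97_879, 4_313, 2_622], 104_814),
    "VisDrone_to_UAVDT_target_testing": ([44_195, 918, 913], 46_026),
}


def check_totals(table):
    """Return the names of splits whose per-class counts do not sum to the total."""
    return [name for name, (counts, total) in table.items() if sum(counts) != total]


mismatches = check_totals(SPLITS)
print("all totals consistent" if not mismatches else mismatches)
```

Running it reports that all seven totals are consistent.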

| Cross-View | Dataset | Image Num | Car |
|---|---|---|---|
| DIOR → VisDrone | DIOR_source_training | 6000 | 37,814 |
| | VisDrone_target_training | 6000 | 151,813 |
| | VisDrone_target_testing | 548 | 15,065 |
| DIOR → UAVDT | DIOR_source_training | 6000 | 37,814 |
| | UAVDT_target_training | 6000 | 104,814 |
| | UAVDT_target_testing | 2000 | 46,026 |
| DOTA → VisDrone | DOTA_source_training | 4357 | 103,283 |
| | VisDrone_target_training | 6000 | 151,813 |
| | VisDrone_target_testing | 548 | 15,065 |
| DOTA → UAVDT | DOTA_source_training | 4357 | 103,283 |
| | UAVDT_target_training | 6000 | 104,814 |
| | UAVDT_target_testing | 2000 | 46,026 |

| Dataset | Image Num | Car |
|---|---|---|
| UAVDT_sunny_to_rainy_training | 6000 | 106,180 |
| UAVDT_sunny_to_rainy_testing | 1500 | 69,270 |

| Method | mAP | People | Car | Van | Truck | Bus | Motor |
|---|---|---|---|---|---|---|---|
| Faster RCNN (source-only) | 23.0 | 14.3 | 54.0 | 14.9 | 16.0 | 31.2 | 7.6 |
| DA-Faster RCNN | 30.5 | 25.2 | 57.9 | 25.8 | 26.8 | 33.6 | 14.0 |
| SWDA | 31.1 | 29.2 | 63.3 | 26.6 | 31.8 | 34.3 | 16.6 |
| UT | 31.3 | 22.4 | 63.0 | 26.1 | 23.3 | 37.9 | 15.1 |
| PT | 26.7 | 11.4 | 58.5 | 28.6 | 20.3 | 33.3 | 7.8 |
| H2FA | 28.4 | 17.8 | 57.8 | 23.8 | 27.0 | 34.4 | 9.9 |
| AT | 31.6 | 20.5 | 61.8 | 27.1 | 32.0 | 37.7 | 10.4 |
| CMT | 32.1 | 21.3 | 62.2 | 26.0 | 31.7 | 39.1 | 12.4 |
| WMFA-AT | 33.4 | 21.6 | 64.5 | 27.7 | 34.1 | 38.9 | 13.8 |

| Method | mAP | Car | Truck | Bus |
|---|---|---|---|---|
| Faster RCNN (source-only) | 42.7 | 65.8 | 29.5 | 32.9 |
| DA-Faster RCNN | 48.7 | 76.4 | 35.5 | 34.1 |
| SWDA | 57.5 | 69.7 | 57.4 | 45.4 |
| UT | 62.1 | 66.1 | 74.8 | 45.3 |
| PT | 55.8 | 81.0 | 40.2 | 46.3 |
| H2FA | 62.7 | 75.8 | 50.2 | 62.2 |
| AT | 63.0 | 81.7 | 53.8 | 53.5 |
| CMT | 63.4 | 83.9 | 58.1 | 48.2 |
| WMFA-AT | 64.6 | 81.0 | 60.7 | 52.0 |

| Method | mAP | Car | Truck | Bus |
|---|---|---|---|---|
| Faster RCNN (source-only) | 22.6 | 40.9 | 3.5 | 23.4 |
| DA-Faster RCNN | 23.6 | 42.2 | 4.7 | 23.8 |
| SWDA | 25.7 | 45.4 | 8.6 | 23.2 |
| UT | 26.8 | 43.9 | 5.1 | 31.3 |
| PT | 27.1 | 42.7 | 9.1 | 29.6 |
| H2FA | 27.3 | 47.6 | 4.5 | 29.8 |
| AT | 26.5 | 44.6 | 6.5 | 28.4 |
| CMT | 26.8 | 46.0 | 7.9 | 26.5 |
| WMFA-AT | 27.5 | 45.6 | 5.0 | 32.0 |
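In the three multi-class result tables, the first numeric value in each row equals the unweighted mean of the remaining per-class values, i.e., it is the mean AP over classes. For example, for WMFA-AT on the VisDrone day-to-night split, (21.6 + 64.5 + 27.7 + 34.1 + 38.9 + 13.8) / 6 ≈ 33.4. The snippet below recomputes this for a few rows copied from the tables; `mean_ap` is an illustrative helper, not part of the paper's code.

```python
# row label -> (reported mean value, per-class values), copied from the result tables
ROWS = {
    "VisDrone day-to-night, Faster RCNN (source-only)": (23.0, [14.3, 54.0, 14.9, 16.0, 31.2, 7.6]),
    "VisDrone day-to-night, WMFA-AT": (33.4, [21.6, 64.5, 27.7, 34.1, 38.9, 13.8]),
    "UAVDT day-to-night, WMFA-AT": (64.6, [81.0, 60.7, 52.0]),
    "VisDrone to UAVDT, WMFA-AT": (27.5, [45.6, 5.0, 32.0]),
}


def mean_ap(per_class):
    """Unweighted mean of per-class AP values."""
    return sum(per_class) / len(per_class)


for name, (reported, per_class) in ROWS.items():
    value = mean_ap(per_class)
    # Table values carry one decimal, so agreement within 0.05 is expected.
    print(f"{name}: recomputed {value:.2f}, reported {reported:.1f}")
```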

| Method | DIOR → VisDrone Car | DIOR → UAVDT Car | DOTA → VisDrone Car | DOTA → UAVDT Car |
|---|---|---|---|---|
| Faster RCNN (source-only) | 7.5 | 9.8 | 5.5 | 8.3 |
| DA-Faster RCNN | 16.0 | 16.4 | 15.5 | 19.4 |
| SWDA | 7.6 | 17.6 | 8.8 | 15.4 |
| UT | 16.2 | 22.6 | 10.5 | 18.9 |
| PT | 10.3 | 19.5 | 5.2 | 15.7 |
| H2FA | 9.9 | 13.5 | 15.4 | 20.1 |
| AT | 35.8 | 36.3 | 35.6 | 44.1 |
| CMT | 33.4 | 35.7 | 26.3 | 43.0 |
| WMFA-AT | 40.3 | 41.5 | 38.7 | 49.1 |

| Method | Car |
|---|---|
| Faster RCNN (source-only) | 21.4 |
| DA-Faster RCNN | 33.2 |
| SWDA | 25.3 |
| UT | 38.4 |
| PT | 38.8 |
| H2FA | 37.4 |
| AT | 60.6 |
| CMT | 61.4 |
| WMFA-AT | 62.5 |
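To make the comparison concrete, the snippet below computes, for each benchmark, the margin between WMFA-AT and the strongest competing method. The scores are copied from the result tables (mAP for the multi-class benchmarks, car AP for the cross-view and cross-weather benchmarks); the `margin_over_best` helper is illustrative.

```python
BASELINES = ["Faster RCNN (source-only)", "DA-Faster RCNN", "SWDA", "UT",
             "PT", "H2FA", "AT", "CMT"]

# benchmark -> (baseline scores in BASELINES order, WMFA-AT score), copied from the tables
RESULTS = {
    "Cross-time, VisDrone (mAP)": ([23.0, 30.5, 31.1, 31.3, 26.7, 28.4, 31.6, 32.1], 33.4),
    "Cross-time, UAVDT (mAP)": ([42.7, 48.7, 57.5, 62.1, 55.8, 62.7, 63.0, 63.4], 64.6),
    "Cross-camera, VisDrone to UAVDT (mAP)": ([22.6, 23.6, 25.7, 26.8, 27.1, 27.3, 26.5, 26.8], 27.5),
    "Cross-view, DIOR to VisDrone (Car)": ([7.5, 16.0, 7.6, 16.2, 10.3, 9.9, 35.8, 33.4], 40.3),
    "Cross-view, DIOR to UAVDT (Car)": ([9.8, 16.4, 17.6, 22.6, 19.5, 13.5, 36.3, 35.7], 41.5),
    "Cross-view, DOTA to VisDrone (Car)": ([5.5, 15.5, 8.8, 10.5, 5.2, 15.4, 35.6, 26.3], 38.7),
    "Cross-view, DOTA to UAVDT (Car)": ([8.3, 19.4, 15.4, 18.9, 15.7, 20.1, 44.1, 43.0], 49.1),
    "Cross-weather, UAVDT sunny to rainy (Car)": ([21.4, 33.2, 25.3, 38.4, 38.8, 37.4, 60.6, 61.4], 62.5),
}


def margin_over_best(scores, ours):
    """Return (strongest baseline, WMFA-AT score minus its score, one decimal)."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return BASELINES[best], round(ours - scores[best], 1)


for bench, (scores, ours) in RESULTS.items():
    name, margin = margin_over_best(scores, ours)
    print(f"{bench}: +{margin} over {name}")
```

The margin is positive on every benchmark: largest in the cross-view settings (3.1 to 5.2 points over AT) and smallest in the cross-camera setting (0.2 points over H2FA).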

| Method | Cross-Time (UAVDT) | Cross-Camera | Cross-View (DOTA → UAVDT) | Cross-Weather |
|---|---|---|---|---|
| WMFA-AT | 64.6 | 27.5 | 49.1 | 62.5 |
| WMFA-AT w/o | 57.9 | 24.3 | 39.3 | 55.2 |
| WMFA-AT w/o WS Aug | 61.4 | 25.0 | 43.1 | 57.0 |
| WMFA-AT w/o &EMA | 52.5 | 23.4 | 29.5 | 41.8 |

| Method | Cross-Time (UAVDT) | Cross-Camera | Cross-View (DOTA → UAVDT) | Cross-Weather |
|---|---|---|---|---|
| w/SFA | 61.0 | 25.4 | 44.1 | 56.6 |
| w/MFA | 62.9 | 26.1 | 47.1 | 60.0 |
| w/WMFA | 64.6 | 27.5 | 49.1 | 62.5 |
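The two ablation tables can be read as differences from the full model. The snippet below computes how many points each ablated variant loses relative to WMFA-AT on each benchmark, how much unweighted multi-layer alignment (MFA) adds over single-layer alignment (SFA), and how much weighting (WMFA) adds over MFA. Scores and variant labels are copied as printed in the tables; the `diff` helper is illustrative.

```python
BENCHMARKS = ["Cross-Time (UAVDT)", "Cross-Camera",
              "Cross-View (DOTA to UAVDT)", "Cross-Weather"]
FULL = [64.6, 27.5, 49.1, 62.5]  # WMFA-AT, identical to the w/WMFA row

# Variant labels copied as printed in the ablation table.
ABLATIONS = {
    "WMFA-AT w/o": [57.9, 24.3, 39.3, 55.2],
    "WMFA-AT w/o WS Aug": [61.4, 25.0, 43.1, 57.0],
    "WMFA-AT w/o &EMA": [52.5, 23.4, 29.5, 41.8],
}
SFA = [61.0, 25.4, 44.1, 56.6]  # single-layer feature alignment
MFA = [62.9, 26.1, 47.1, 60.0]  # unweighted multi-layer feature alignment


def diff(a, b):
    """Element-wise a - b, rounded to the tables' one-decimal precision."""
    return [round(x - y, 1) for x, y in zip(a, b)]


for label, scores in ABLATIONS.items():
    print(f"{label}: drop vs. full model", dict(zip(BENCHMARKS, diff(FULL, scores))))
print("MFA gain over SFA:", dict(zip(BENCHMARKS, diff(MFA, SFA))))
print("WMFA gain over MFA:", dict(zip(BENCHMARKS, diff(FULL, MFA))))
```

Weighting the multi-layer alignment adds 1.4 to 2.5 points over unweighted MFA on the four benchmarks, and the variant labeled "w/o &EMA" shows the largest drops (up to 20.7 points in the cross-weather setting).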

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cheng, G.; Yang, H.; Tian, Y.; Xie, M.; Dang, C.; Ding, Q.; Feng, X. WMFA-AT: Adaptive Teacher with Weighted Multi-Layer Feature Alignment for Cross-Domain UAV Object Detection. Remote Sens. 2025, 17, 3854. https://doi.org/10.3390/rs17233854
