Individual Tree-Level Biomass Mapping in Chinese Coniferous Plantation Forests Using Multimodal UAV Remote Sensing Approach Integrating Deep Learning and Machine Learning

Highlights

- NB-YOLOv8, an attention-enhanced, multi-scale detector that adds the Normalization-based Attention Module (NAM) and a Bidirectional Feature Pyramid Network (BiFPN) to YOLOv8, markedly improves individual-tree detection in dense conifer plantations (precision 92.3%, recall 90.6%), with the largest gains over the YOLOv8 and watershed baselines for small-diameter crowns.

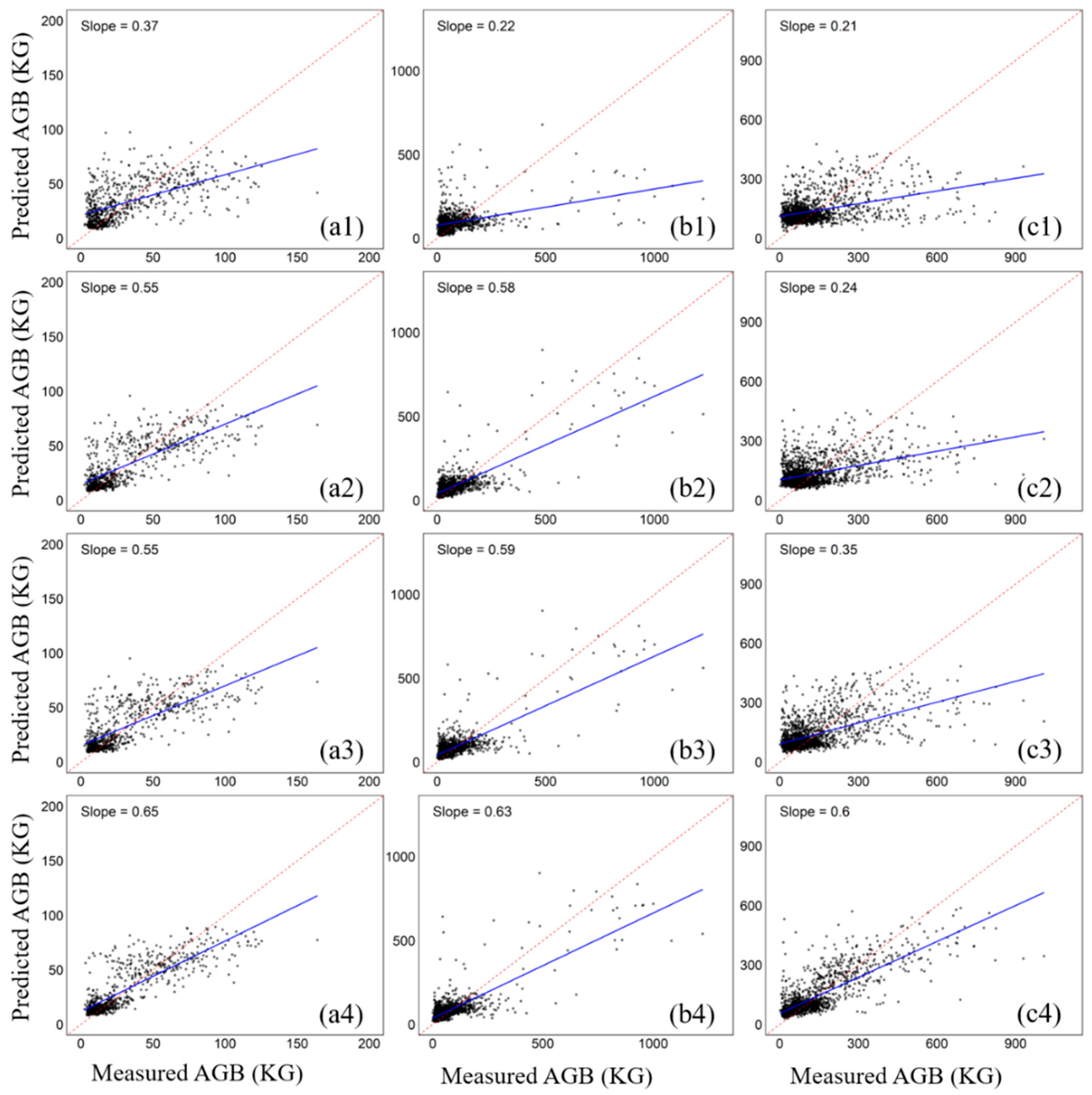

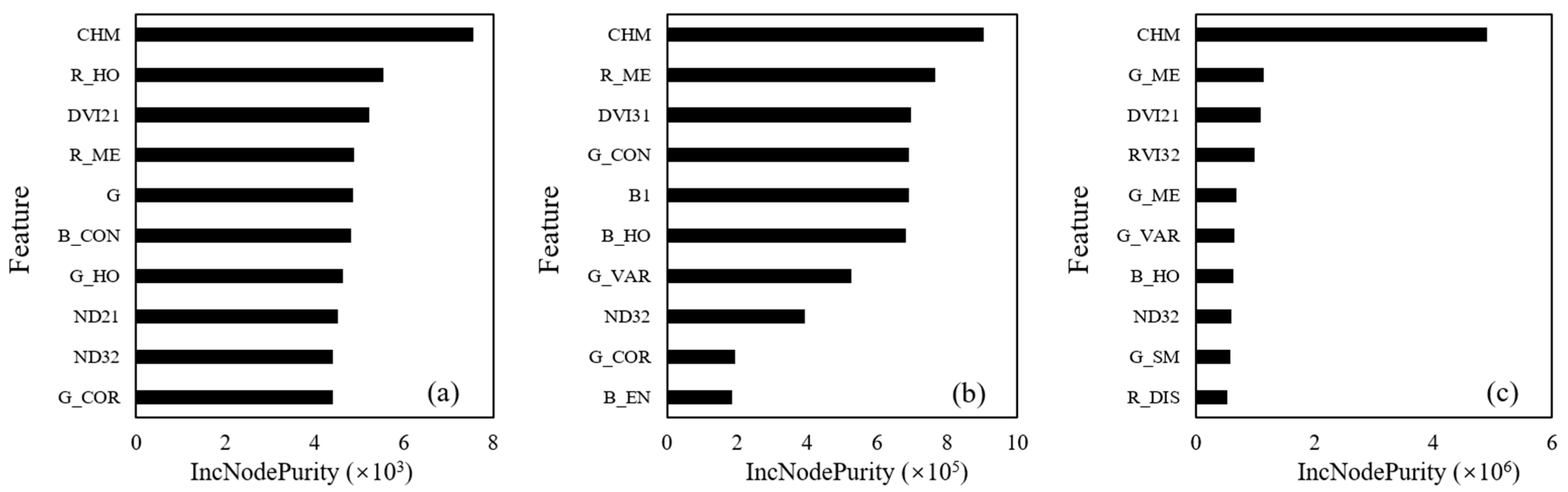

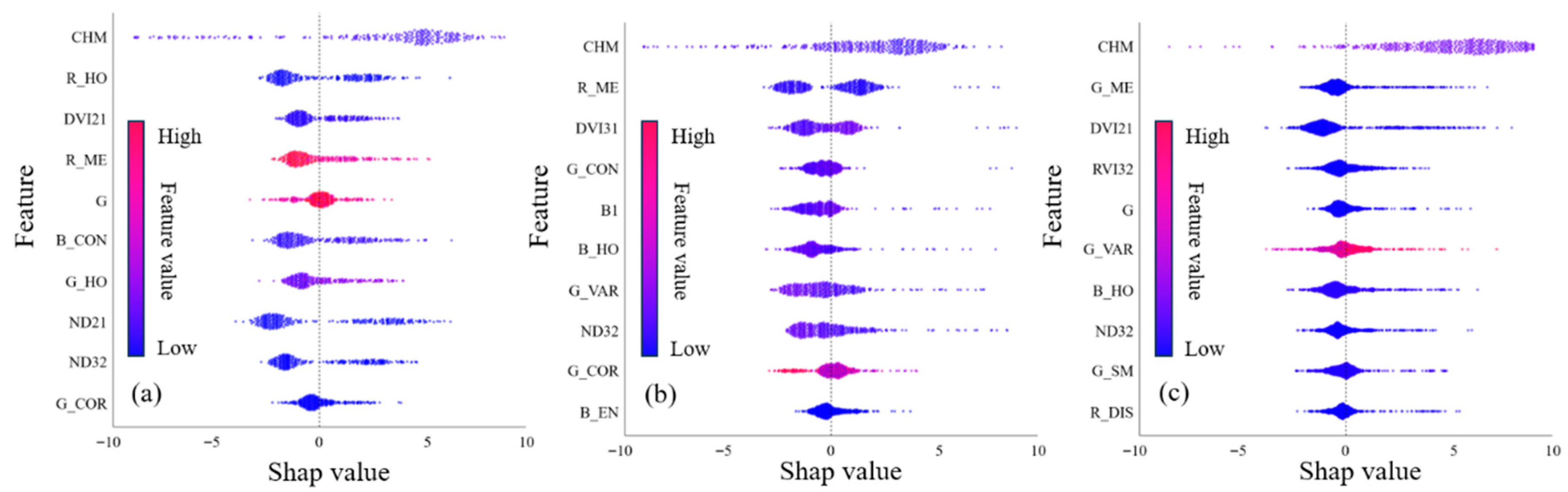

- Fusing UAV RGB texture features with the LiDAR-derived canopy height model (CHM) and modeling with Random Forest (RF) yields robust tree-level aboveground biomass (AGB) estimates (R² = 0.65–0.76 across the three species); SHAP (SHapley Additive exPlanations) analysis reveals locally varying feature effects that often diverge from global importance rankings.

- The hybrid deep-learning + machine-learning workflow enables effective, fine-resolution carbon mapping and stand diagnostics (e.g., early detection of suppressed trees, prioritization of thinning zones) from multi-source UAV data.

- Model interpretability via SHAP supports defensible management decisions by exposing species- and site-specific drivers of AGB, guiding transferable feature selection and reducing black-box risk for real-world deployment.

Abstract

1. Introduction

2. Data and Method

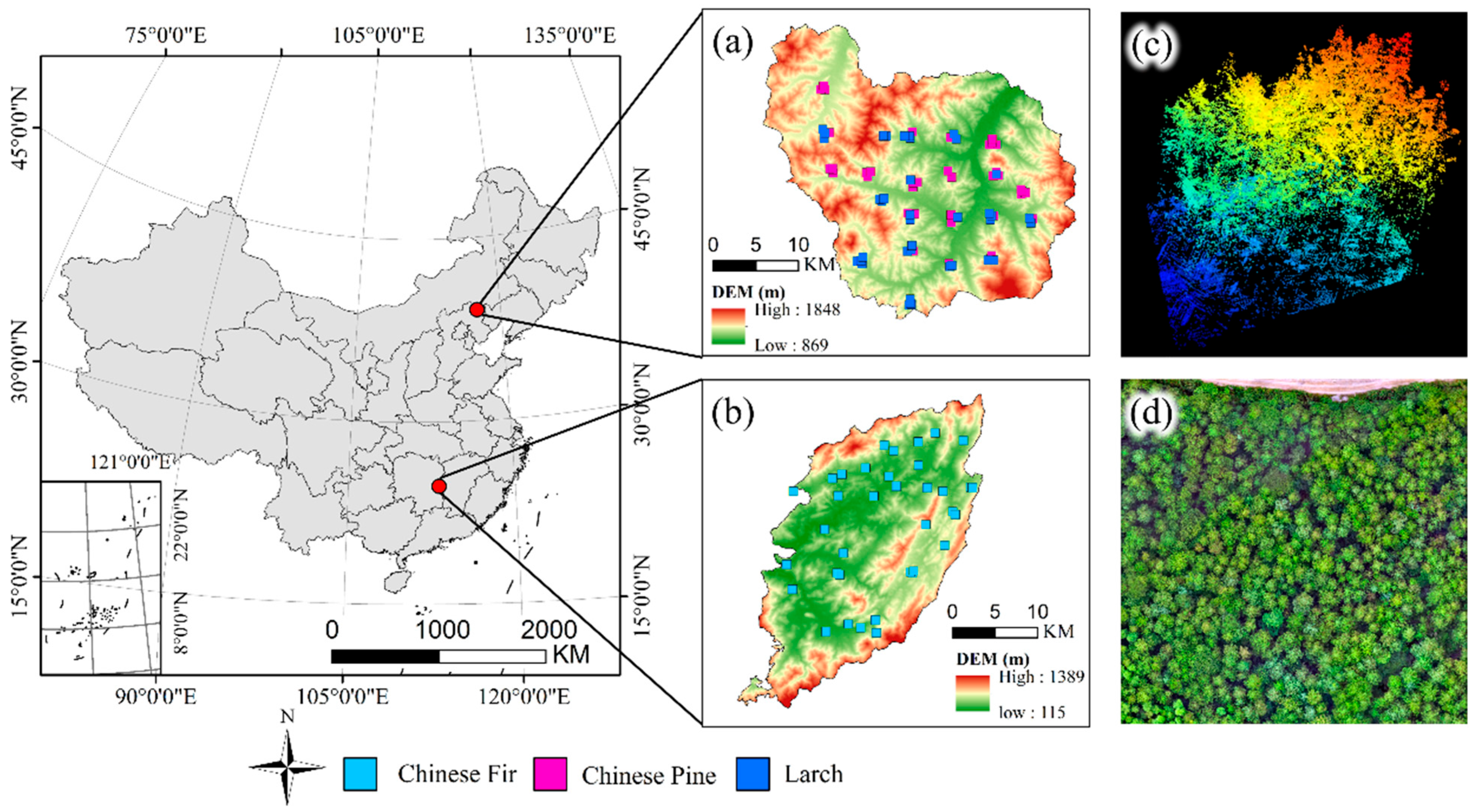

2.1. Study Area

2.2. Ground Data

2.3. UAV Data

2.3.1. Orthophoto Processing

2.3.2. LiDAR Point Cloud Data Processing

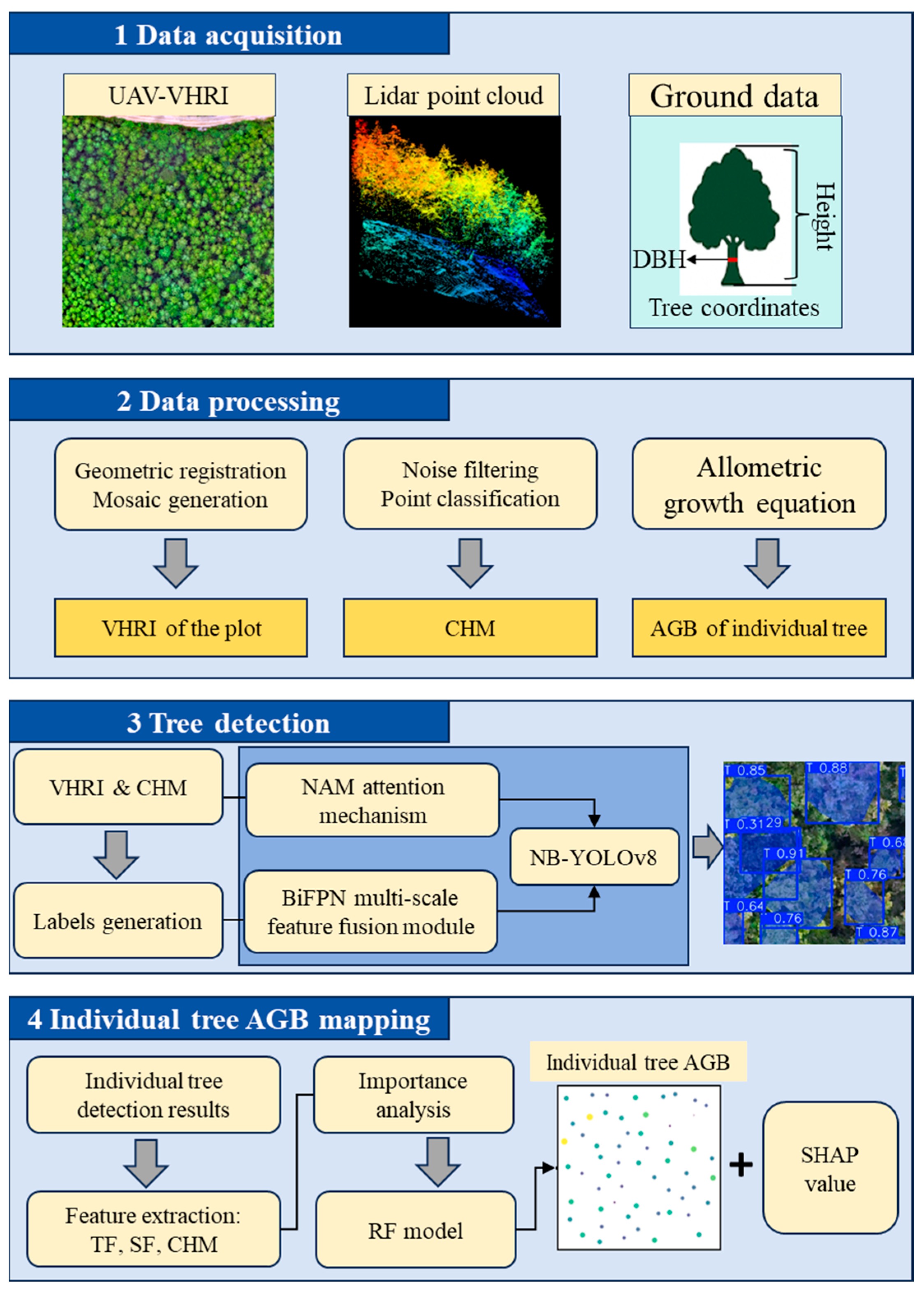

2.4. Process of This Study

2.5. Individual Tree Detection Algorithm

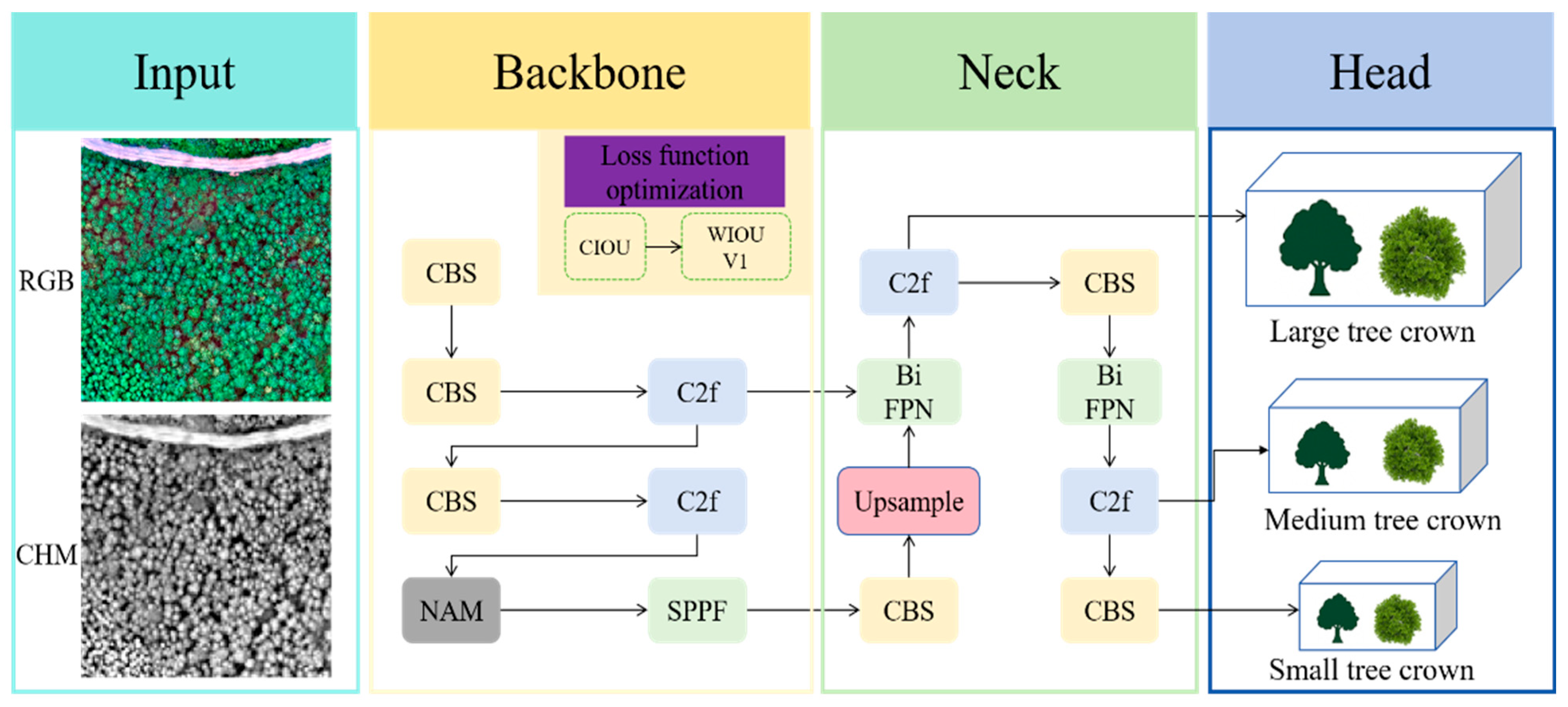

2.5.1. YOLOv8 Architecture

2.5.2. NB-YOLOv8 Algorithm

2.5.3. Watershed Algorithm

2.6. Extracting Variables Related to Individual Tree Biomass

2.6.1. Spectral Features

2.6.2. Texture Features

2.7. Establishment of the Individual Tree AGB Model

2.7.1. Feature Selection of Variables of Interest

2.7.2. RF Model

2.7.3. Calculating SHAP Values to Explain Feature Contributions

2.8. Accuracy Verification

2.8.1. Verification of Individual Tree Detection Accuracy

2.8.2. Validation of Individual Tree AGB Estimation

3. Results

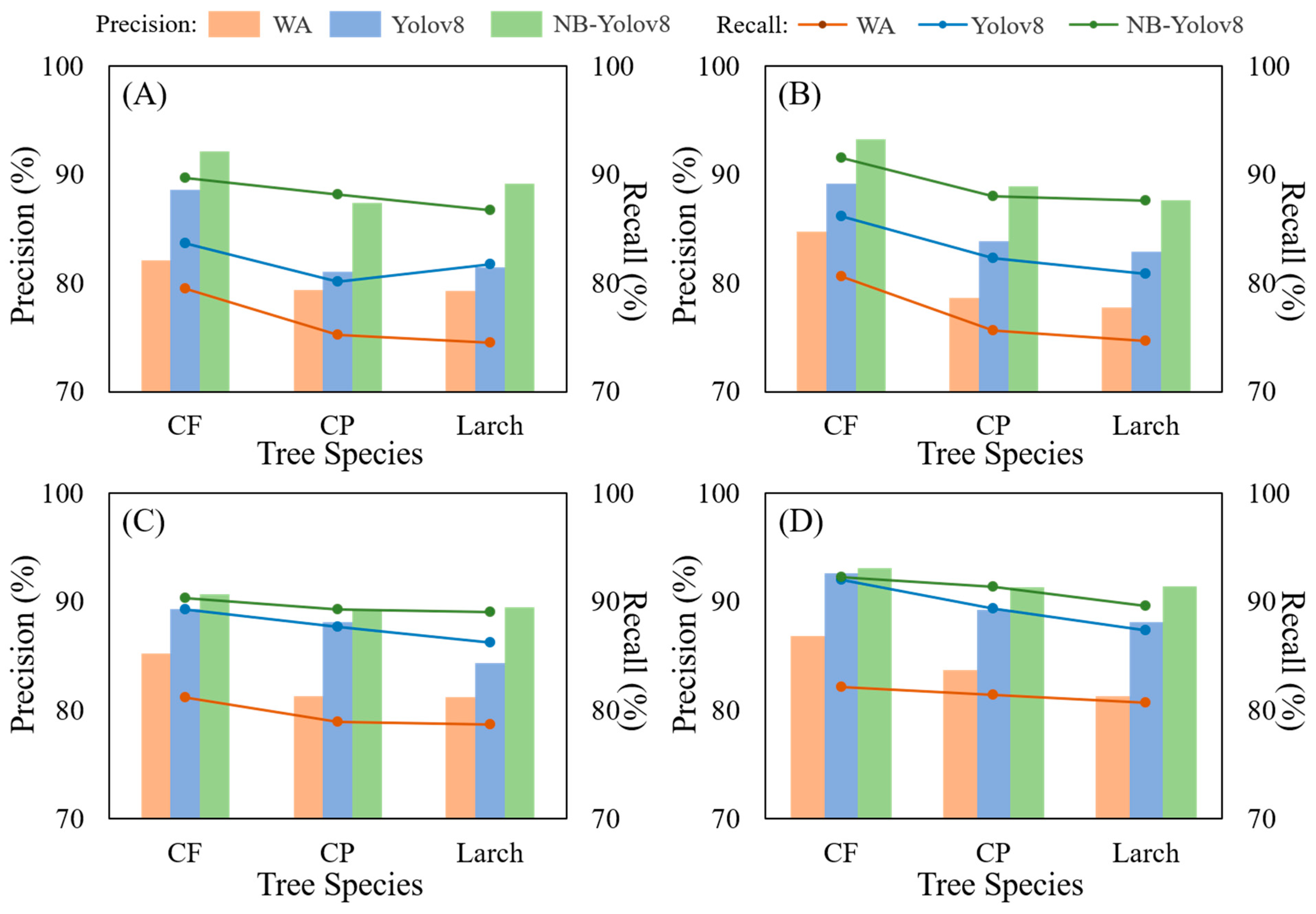

3.1. Individual Tree Detection Results

3.2. Estimation Results of Individual Tree AGB

3.3. Importance and SHAP Values of Variables

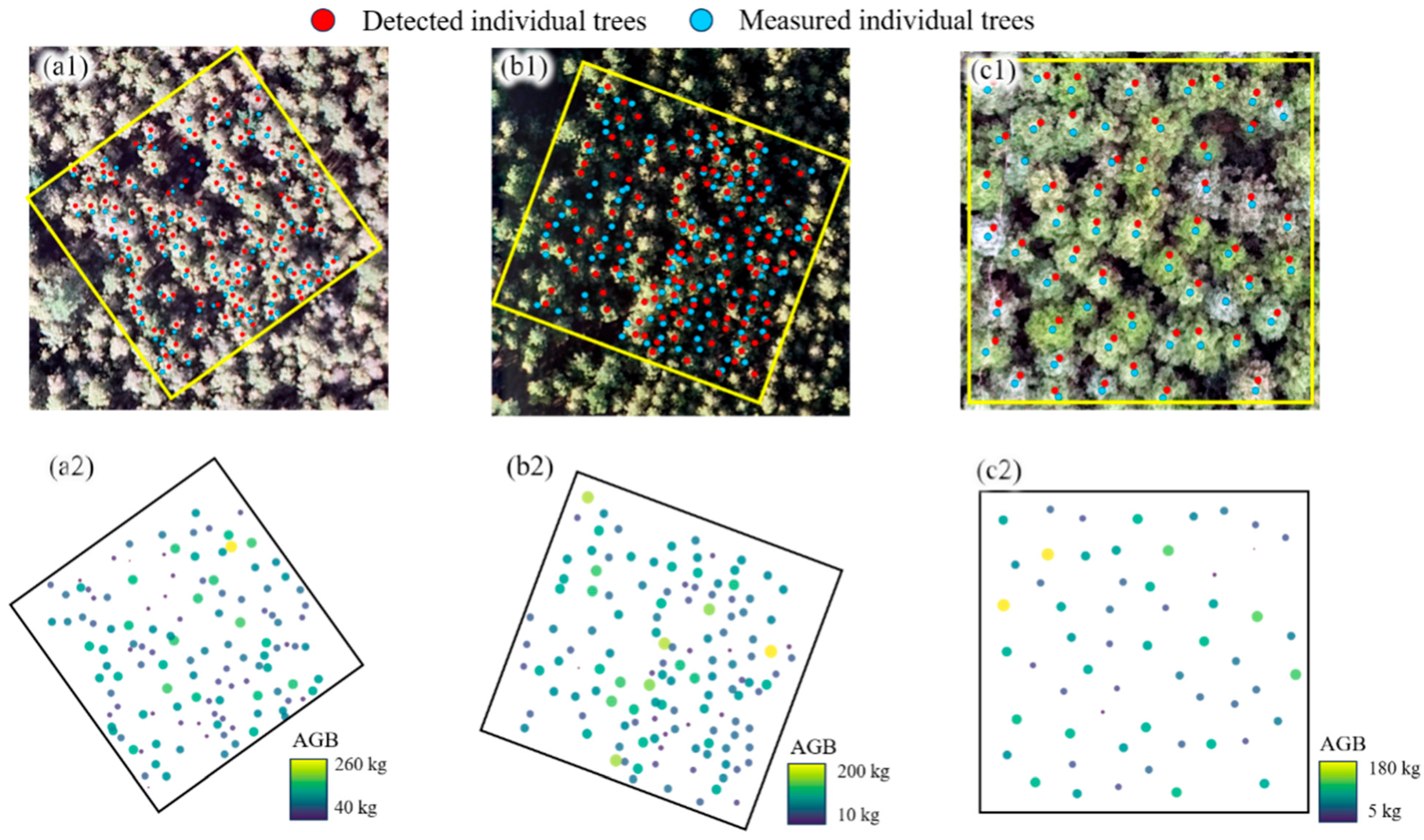

3.4. Individual Tree AGB Mapping in Sample Site

4. Discussion

4.1. Attentional Multi-Scale Fusion Boosts Tree Detection in Dense Plantations

4.2. Global Versus Local Feature Contributions in Biomass Estimation Using SHAP Analysis

4.3. Individual Tree Biomass Mapping for Precision Silviculture and Carbon Management

4.4. Strengths, Limitations, and Future Prospects

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Tree Species | Mean (kg) | Range (kg) | Std. Dev. (kg) | Number |
|---|---|---|---|---|
| CP | 94.87 | 1.76–1225.38 | 134.63 | 957 |
| Larch | 32.83 | 2.49–164.08 | 28.51 | 664 |
| CF | 135.64 | 1.25–1008.02 | 129.21 | 1658 |

| Tree Species | Allometric Equation |
|---|---|
| CP | $0.027639(D^{2}H)^{0.9905} + 0.0091313(D^{2}H)^{0.982} + 0.0045755(D^{2}H)^{0.9894}$ |
| Larch | $0.046238(D^{2}H)^{0.905002}$ |
| CF | $0.045D^{2.48}H^{0.86}$ |
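The allometric equations above convert field-measured tree dimensions into reference AGB for each species. The Python sketch below simply transcribes them. It assumes that D denotes diameter at breast height and H tree height, in the units the equations were originally fitted with (commonly cm and m, yielding kg to match the summary statistics); this excerpt does not state any of these. The function and dictionary names are hypothetical.

```python
"""Sketch: species-specific allometric AGB equations transcribed from the table.

Assumption (not stated in the excerpt): d = DBH and h = tree height, in the
units the equations were fitted with (commonly cm and m); output in kg.
"""


def agb_cp(d: float, h: float) -> float:
    """CP: sum of three power-law terms in D^2*H."""
    x = d * d * h
    return (0.027639 * x ** 0.9905
            + 0.0091313 * x ** 0.982
            + 0.0045755 * x ** 0.9894)


def agb_larch(d: float, h: float) -> float:
    """Larch: a single power-law term in D^2*H."""
    return 0.046238 * (d * d * h) ** 0.905002


def agb_cf(d: float, h: float) -> float:
    """CF: separate exponents for D and H."""
    return 0.045 * d ** 2.48 * h ** 0.86


# Hypothetical lookup keyed by the species codes used in the tables.
ALLOMETRY = {"CP": agb_cp, "Larch": agb_larch, "CF": agb_cf}


def tree_agb(species: str, d: float, h: float) -> float:
    """Reference AGB of one field tree; rejects non-positive dimensions."""
    if d <= 0 or h <= 0:
        raise ValueError("d and h must be positive")
    return ALLOMETRY[species](d, h)


if __name__ == "__main__":
    # Hypothetical tree with D = 20 and H = 12 (units as assumed above).
    for sp in ALLOMETRY:
        print(sp, round(tree_agb(sp, 20.0, 12.0), 1))
```

Applied to every measured tree, these values would serve as the response variable for the RF model; whether the authors applied any correction (e.g., for log-transformation bias) is not stated here.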

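| Variable Name | Formula |
|---|---|
| B, G, R | Band1, Band2, and Band3 |
| $\mathrm{DVI}_{ij}$ | $\mathrm{Band}_i - \mathrm{Band}_j$, $i, j = 1, 2, 3$, $i \neq j$ |
| $\mathrm{RVI}_{ij}$ | $\mathrm{Band}_i / \mathrm{Band}_j$, $i, j = 1, 2, 3$, $i \neq j$ |
| $\mathrm{ND}_{ij}$ | $(\mathrm{Band}_i - \mathrm{Band}_j)/(\mathrm{Band}_i + \mathrm{Band}_j)$, $i, j = 1, 2, 3$, $i \neq j$ |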
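The spectral variables defined above can be generated programmatically. The sketch below assumes that each crown is first summarized by its mean Band1–Band3 values (B, G, R) and the indices are computed from those means; the excerpt does not say whether indices are instead computed per pixel and then averaged, which generally gives different ratio values. The function name is hypothetical.

```python
from itertools import permutations


def spectral_features(b: float, g: float, r: float) -> dict:
    """Band values plus DVI, RVI and ND for every ordered band pair (i != j)."""
    bands = {1: b, 2: g, 3: r}  # Band1 = B, Band2 = G, Band3 = R
    feats = {"B": b, "G": g, "R": r}
    for i, j in permutations(bands, 2):
        bi, bj = bands[i], bands[j]
        feats[f"DVI{i}{j}"] = bi - bj
        # Guard against division by zero for very dark crowns.
        feats[f"RVI{i}{j}"] = bi / bj if bj != 0 else float("nan")
        total = bi + bj
        feats[f"ND{i}{j}"] = (bi - bj) / total if total != 0 else float("nan")
    return feats


if __name__ == "__main__":
    f = spectral_features(40.0, 80.0, 60.0)  # hypothetical crown means
    print(len(f), f["RVI31"], round(f["ND23"], 4))
```

Because $\mathrm{DVI}_{ji} = -\mathrm{DVI}_{ij}$, $\mathrm{ND}_{ji} = -\mathrm{ND}_{ij}$ and $\mathrm{RVI}_{ji} = 1/\mathrm{RVI}_{ij}$, the six ordered pairs carry only three independent quantities per index. A tree-based model can represent the same splits from either member of a pair, although importance may be divided between them, so a feature-selection step would typically keep only one of each.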

| Model | Recall CF (%) | Recall CP (%) | Recall Larch (%) | Precision CF (%) | Precision CP (%) | Precision Larch (%) | F1-Score CF (%) | F1-Score CP (%) | F1-Score Larch (%) |
|---|---|---|---|---|---|---|---|---|---|
| WA | 80.84 | 76.81 | 77.16 | 84.74 | 80.75 | 79.89 | 82.74 | 78.73 | 78.50 |
| YOLOv8 | 87.77 | 84.86 | 84.07 | 89.94 | 85.56 | 84.21 | 88.84 | 85.21 | 84.14 |
| NB-YOLOv8 | 90.96 | 89.21 | 88.24 | 92.31 | 89.25 | 89.43 | 91.63 | 89.23 | 88.83 |
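The detection metrics above presumably follow the standard definitions P = TP/(TP + FP), R = TP/(TP + FN) and F1 = 2PR/(P + R). The sketch below implements them and checks that the reported F1-scores are consistent with the reported precision and recall. How detected crowns were matched to reference trees to obtain TP, FP and FN (e.g., an IoU or distance threshold) is not given in this excerpt, so that counting step is left to the caller. The function names are hypothetical.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


def detection_scores(tp: int, fp: int, fn: int) -> tuple:
    """Precision, recall and F1, all in %, from matched detection counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 100 * precision, 100 * recall, 100 * f1_score(precision, recall)


# Reported (recall, precision, F1) in %, transcribed from the table above.
REPORTED = {
    ("WA", "CF"): (80.84, 84.74, 82.74),
    ("WA", "CP"): (76.81, 80.75, 78.73),
    ("WA", "Larch"): (77.16, 79.89, 78.50),
    ("YOLOv8", "CF"): (87.77, 89.94, 88.84),
    ("YOLOv8", "CP"): (84.86, 85.56, 85.21),
    ("YOLOv8", "Larch"): (84.07, 84.21, 84.14),
    ("NB-YOLOv8", "CF"): (90.96, 92.31, 91.63),
    ("NB-YOLOv8", "CP"): (89.21, 89.25, 89.23),
    ("NB-YOLOv8", "Larch"): (88.24, 89.43, 88.83),
}


def max_f1_discrepancy(table: dict) -> float:
    """Largest |reported F1 - F1 recomputed from reported P and R|."""
    return max(abs(f1 - f1_score(p, r)) for r, p, f1 in table.values())


if __name__ == "__main__":
    print(round(max_f1_discrepancy(REPORTED), 4))
```

All nine reported F1-scores agree with the reported precision and recall to within two-decimal rounding, which supports reading F1 as the plain harmonic mean.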

| Variable Combinations | Larch RMSE (kg) | Larch R² | Larch MAE (kg) | CP RMSE (kg) | CP R² | CP MAE (kg) | CF RMSE (kg) | CF R² | CF MAE (kg) |
|---|---|---|---|---|---|---|---|---|---|
| SF | 68.17 | 0.37 | 16.48 | 141.86 | 0.14 | 77.23 | 86.75 | 0.19 | 66.93 |
| TF | 57.34 | 0.58 | 13.09 | 106.9 | 0.65 | 74.61 | 84.42 | 0.25 | 49.58 |
| SF + TF | 56.72 | 0.6 | 12.98 | 103.55 | 0.66 | 68.76 | 81.82 | 0.29 | 48.95 |
| SF + TF + CHM | 44.98 | 0.76 | 11.15 | 105.03 | 0.67 | 47.70 | 56.91 | 0.65 | 45.68 |
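The accuracy measures in the table above can be computed from the observed (allometric) and predicted AGB of validation trees. The excerpt does not state which form of R² was used, so this sketch returns both the coefficient of determination, 1 − SS_res/SS_tot, and the squared Pearson correlation. The two coincide only for an ordinary least-squares fit with an intercept evaluated on its own training data. The function name is hypothetical.

```python
import math


def regression_scores(observed, predicted) -> dict:
    """RMSE, MAE, R^2 (1 - SS_res/SS_tot) and squared Pearson r."""
    n = len(observed)
    if n != len(predicted) or n < 2:
        raise ValueError("need two equal-length sequences with n >= 2")
    residuals = [o - p for o, p in zip(observed, predicted)]
    ss_res = sum(e * e for e in residuals)
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in residuals) / n
    mean_o = sum(observed) / n
    mean_p = sum(predicted) / n
    dev_o = [o - mean_o for o in observed]
    dev_p = [p - mean_p for p in predicted]
    ss_tot = sum(d * d for d in dev_o)
    r2 = 1.0 - ss_res / ss_tot
    cov = sum(a * b for a, b in zip(dev_o, dev_p))
    r2_pearson = cov * cov / (ss_tot * sum(d * d for d in dev_p))
    return {"RMSE": rmse, "MAE": mae, "R2": r2, "r2_pearson": r2_pearson}


if __name__ == "__main__":
    print(regression_scores([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]))
```

On a fixed validation set, RMSE and 1 − SS_res/SS_tot are monotone in each other, so they cannot both increase. For CP, adding CHM to SF + TF raises RMSE (103.55 → 105.03 kg) while R² also rises (0.66 → 0.67), which suggests either the squared-correlation form or different validation samples per combination. The accompanying drop in MAE (68.76 → 47.70 kg) alongside a slightly higher RMSE suggests the remaining error is concentrated in fewer, larger residuals.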

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Liu, Z.; Li, J.; Lin, H.; Long, J.; Mu, G.; Li, S.; Lv, Y. Individual Tree-Level Biomass Mapping in Chinese Coniferous Plantation Forests Using Multimodal UAV Remote Sensing Approach Integrating Deep Learning and Machine Learning. Remote Sens. 2025, 17, 3830. https://doi.org/10.3390/rs17233830


Chicago/Turabian style: Wang, Yiru, Zhaohua Liu, Jiping Li, Hui Lin, Jiangping Long, Guangyi Mu, Sijia Li, and Yong Lv. 2025. "Individual Tree-Level Biomass Mapping in Chinese Coniferous Plantation Forests Using Multimodal UAV Remote Sensing Approach Integrating Deep Learning and Machine Learning" Remote Sensing 17, no. 23: 3830. https://doi.org/10.3390/rs17233830
