Self-Supervised Deep Hyperspectral Inpainting with Plug-and-Play and Deep Image Prior Models

Abstract

1. Introduction

1.1. Hyperspectral Image Inpainting

1.2. Sparsity and Low Rankness in HSIs

1.3. Contributions

- This paper explores the LRS-PnP and LRS-PnP-DIP algorithms in more depth by showing that (1) the sparsity and low-rank constraints are both important to the success of the methods in [20], and (2) DIP can explore the low-rank subspace better than conventional subspace models such as singular value thresholding (SVT).

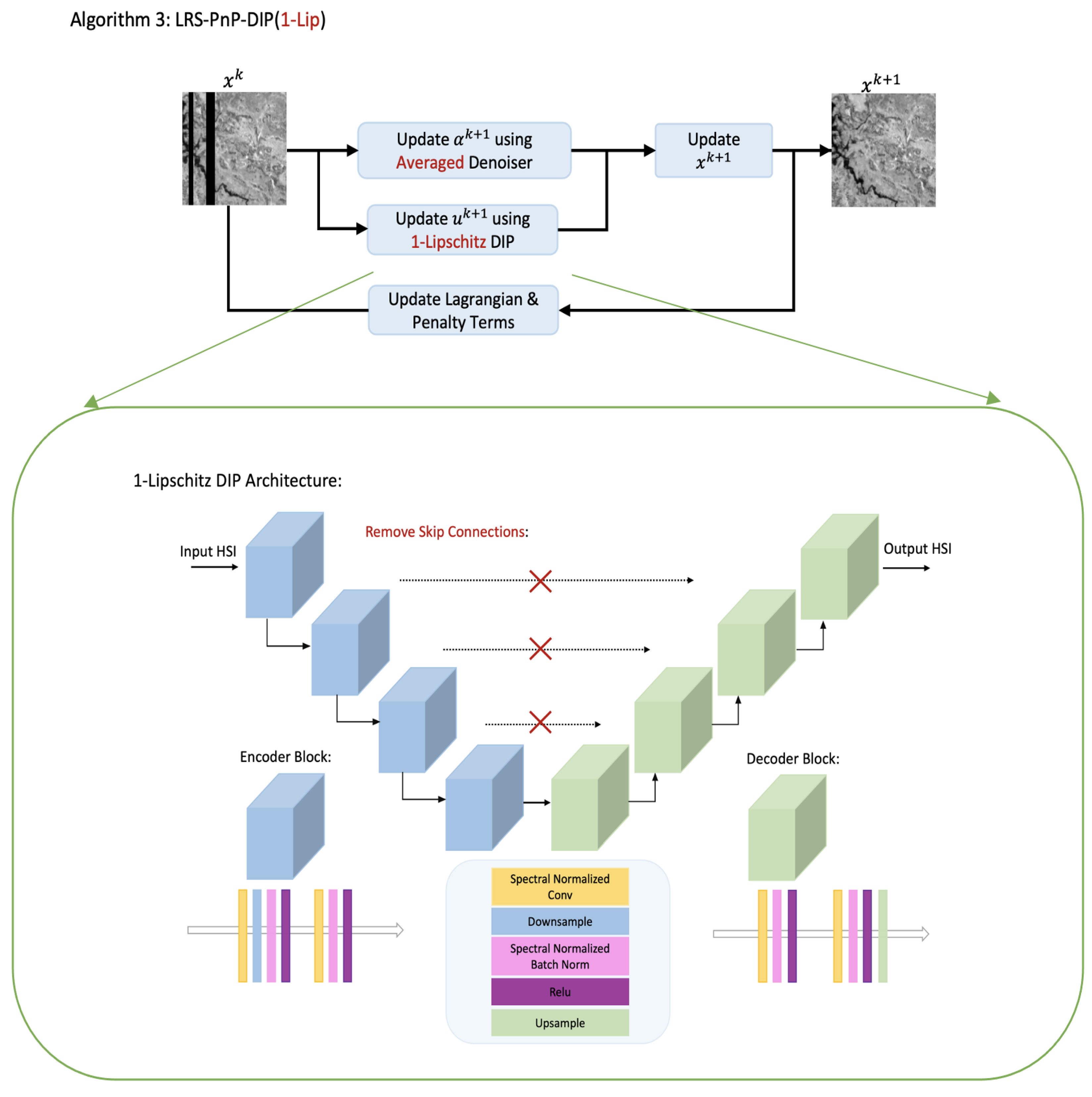

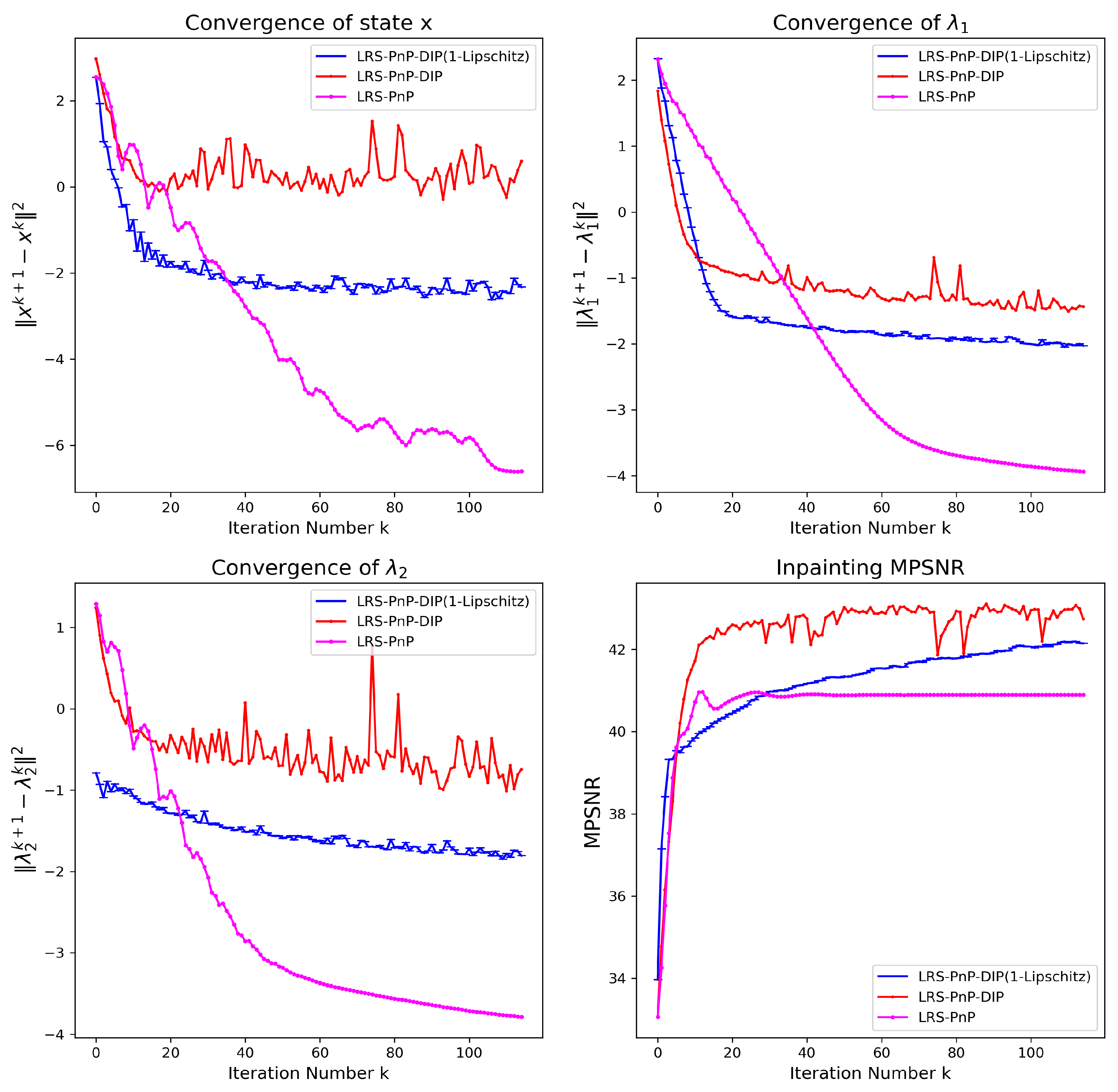

- Under some mild assumptions, a fixed-point convergence proof is provided for the LRS-PnP-DIP algorithm (see Theorem 1). We introduce a variant of LRS-PnP-DIP, called LRS-PnP-DIP(1-Lip), which effectively resolves the instability issue of the algorithm by slightly modifying both the DIP and the PnP denoiser.

- To the best of our knowledge, this is the first time that the theoretical convergence of PnP with DIP replacing the low-rank prior has been analyzed within an iterative framework.

- Extensive experiments were conducted on real-world data to validate the effectiveness of the enhanced LRS-PnP-DIP(1-Lip) algorithm with a convergence certificate. The results demonstrate the superiority of the proposed solution over existing learning-based methods in both stability and performance.
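The classical sparse and low-rank regularizers mentioned in the contributions rely on two proximal operators: elementwise soft thresholding for ℓ1 sparsity (as in iterative thresholding [37]) and singular value thresholding (SVT) for the nuclear norm [38]. The following is a minimal NumPy sketch, not the authors' implementation; the synthetic rank-3 matrix (standing in for an unfolded bands × pixels HSI), the noise level and the threshold `tau` are illustrative assumptions.

```python
import numpy as np


def soft_threshold(x, tau):
    """Proximal operator of tau * ||x||_1: elementwise shrinkage towards zero."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def svt(X, tau):
    """Singular value thresholding: proximal operator of tau * ||X||_* (nuclear norm)."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


# Synthetic stand-in for an unfolded HSI: 30 bands x 100 pixels, exact rank 3, plus noise.
rng = np.random.default_rng(0)
X_clean = rng.standard_normal((30, 3)) @ rng.standard_normal((3, 100))
X_noisy = X_clean + 0.1 * rng.standard_normal((30, 100))

X_svt = svt(X_noisy, tau=2.0)  # threshold chosen above the noise singular values
print("rank after SVT:", np.linalg.matrix_rank(X_svt, tol=1e-8))
print("relative error:", np.linalg.norm(X_svt - X_clean) / np.linalg.norm(X_clean))
```

SVT acts on the whole matrix through its singular values, whereas soft thresholding acts on each coefficient independently; this is the distinction between the low-rank and sparsity constraints discussed above.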

2. Proposed Methods

Mathematical Formulations

Algorithm 1. Pseudocode of ADMM for solving problem (6).

- Initialization.
- while not converged do
  - …
- end while

Algorithm 2. LRS-PnP-DIP algorithm.

- Require: masking matrix, noisy and incomplete HSI, learned dictionary, denoiser, maximum number of iterations, DIP.
- Output: inpainted HSI image.
- Initialization: DIP parameters.
- while not converged do
  - for … do: update the DIP parameters, using the target and the intermediate results as input.
  - update … by (15).
  - update the Lagrangian parameters and penalty terms.
- end while
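Algorithms 1 and 2 follow the plug-and-play ADMM pattern [36]: a data-fidelity step, a step in which a denoiser replaces a proximal operator, and a dual update. The sketch below shows only this generic pattern for a masked observation y = mask ⊙ x. It assumes a hypothetical smoothing filter `smooth_denoiser` as the plugged-in denoiser and omits the dictionary-based sparse coding and DIP updates of LRS-PnP-DIP, so it should be read as an illustration of the loop structure, not as the authors' algorithm.

```python
import numpy as np


def smooth_denoiser(z):
    """Hypothetical plug-in denoiser: separable [1/4, 1/2, 1/4] filter with periodic
    boundaries. Its matrix is symmetric, doubly stochastic and positive semidefinite."""
    z = 0.25 * np.roll(z, 1, axis=0) + 0.5 * z + 0.25 * np.roll(z, -1, axis=0)
    return 0.25 * np.roll(z, 1, axis=1) + 0.5 * z + 0.25 * np.roll(z, -1, axis=1)


def pnp_admm_inpaint(y, mask, denoiser, rho=1.0, n_iter=300):
    """Generic PnP-ADMM for y = mask * x, with the prior's proximal step replaced by `denoiser`."""
    x, v, u = y.copy(), y.copy(), np.zeros_like(y)
    for _ in range(n_iter):
        # Data step: closed-form minimiser of 0.5*||mask*x - y||^2 + rho/2*||x - (v - u)||^2
        x = (mask * y + rho * (v - u)) / (mask + rho)
        # Prior step: the plug-and-play denoiser replaces the proximal operator
        v = denoiser(x + u)
        # Dual (scaled Lagrange multiplier) update
        u = u + x - v
    return x


rng = np.random.default_rng(0)
i, j = np.meshgrid(np.arange(64), np.arange(64), indexing="ij")
x_true = np.sin(2 * np.pi * i / 64) * np.cos(2 * np.pi * j / 64)  # smooth toy band
mask = (rng.random(x_true.shape) < 0.5).astype(float)             # keep ~50% of pixels
y = mask * x_true

x_hat = pnp_admm_inpaint(y, mask, smooth_denoiser)
missing = mask == 0
rmse_zero_fill = np.sqrt(np.mean(x_true[missing] ** 2))
rmse_pnp = np.sqrt(np.mean((x_hat[missing] - x_true[missing]) ** 2))
print(f"RMSE on missing pixels: zero-fill {rmse_zero_fill:.3f}, PnP-ADMM {rmse_pnp:.3f}")
```

Because the data term only touches observed pixels, the missing pixels are filled entirely by the denoiser step, which is why the choice of plugged-in operator matters for both quality and convergence.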

- Remarks. In contrast to its original form, we propose here to replace the conventional PnP denoiser in (8) with the averaged NLM denoiser introduced in [39]. Additionally, we modify the DIP in (13) so that it is Lipschitz continuous with Lipschitz constant 1. The averaged NLM denoiser is a variant of the NLM denoiser whose weight matrix is designed to be symmetric and doubly stochastic, so that both its columns and its rows sum to 1. With this property, the spectral norm of the weight matrix is bounded by 1, meaning that the averaged NLM denoiser is, by design, non-expansive. (We refer the reader to [39] for the detailed structure of the averaged NLM denoiser.)
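One way to turn NLM-style affinities into a symmetric, doubly stochastic weight matrix is symmetric Sinkhorn-Knopp scaling, W = diag(d) K diag(d). This sketch assumes that normalization (see [39] for the construction used there); the random patch features, the kernel width `h` and the helper names are hypothetical. It contrasts the usual row-normalized NLM matrix, whose spectral norm is at least 1 and generally larger, with the symmetrized one, whose spectral norm is 1.

```python
import numpy as np


def gaussian_affinity(features, h):
    """Symmetric, entrywise-positive affinity matrix K_ij = exp(-||f_i - f_j||^2 / h^2)."""
    d2 = np.sum((features[:, None, :] - features[None, :, :]) ** 2, axis=-1)
    return np.exp(-d2 / h**2)


def symmetric_sinkhorn(K, tol=1e-12, max_iter=10_000):
    """Find d > 0 such that W = diag(d) K diag(d) is symmetric and doubly stochastic."""
    d = np.ones(K.shape[0])
    for _ in range(max_iter):
        d_new = np.sqrt(d / (K @ d))  # damped (square-root) symmetric Sinkhorn-Knopp update
        done = np.max(np.abs(d_new - d)) < tol
        d = d_new
        if done:
            break
    return d[:, None] * K * d[None, :]


rng = np.random.default_rng(0)
patches = rng.random((50, 9))  # hypothetical: 50 pixels, each described by a flattened 3x3 patch
K = gaussian_affinity(patches, h=1.0)

W_nlm = K / K.sum(axis=1, keepdims=True)  # standard NLM weights: rows sum to 1, not symmetric
W_avg = symmetric_sinkhorn(K)              # symmetric, rows and columns sum to 1

print("spectral norm, row-normalized NLM:", np.linalg.norm(W_nlm, 2))
print("spectral norm, symmetrized NLM:   ", np.linalg.norm(W_avg, 2))
```

Row normalization alone guarantees W1 = 1, which forces a spectral norm of at least 1; only when the columns also sum to 1 does the norm drop to exactly 1.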

Algorithm 3. LRS-PnP-DIP(1-Lip) algorithm.

- Require: masking matrix, noisy and incomplete HSI, learned dictionary, averaged NLM denoiser, maximum number of iterations, 1-Lipschitz DIP.
- Output: inpainted HSI image.
- Initialization: DIP parameters.
- while not converged do
  - for … do: update the DIP parameters, using the target and the intermediate results as input.
  - update … by (15).
  - update the Lagrangian parameters and penalty terms.
- end while

3. Convergence Analysis

- We made the following assumptions:

- Remarks. Averaged operators form a subset of the non-expansive operators that most existing PnP frameworks have worked with [39,42]. In practice, the non-expansiveness assumption is easily violated by denoisers such as BM3D and NLM. Nevertheless, the convergence of PnP methods using such denoisers can still be verified empirically. In the experiments, we adopted the modified NLM denoiser, whose spectral norm is bounded by 1; hence, it satisfies the averaged property by design [39].
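For a symmetric linear denoiser W, both properties can be read off its eigenvalues: W is non-expansive when all eigenvalues lie in [-1, 1], and W = (1 - α)I + αN with ‖N‖₂ ≤ 1 holds exactly when α ≥ (1 - λ_min)/2. Hence W is averaged (α < 1) whenever λ_min > -1, for example when W is also positive semidefinite, in which case α ≤ 1/2. The helper `averaged_constant` and the two hand-built matrices below are hypothetical illustrations of this check, not part of the paper's method.

```python
import numpy as np


def averaged_constant(W, tol=1e-10):
    """Smallest alpha with W = (1 - alpha) I + alpha N and ||N||_2 <= 1, for symmetric W.

    Since N has eigenvalues (lam - 1 + alpha) / alpha, the condition reduces to
    alpha >= (1 - lam_min) / 2; W is averaged if the result is < 1."""
    lam = np.linalg.eigvalsh(W)
    if np.max(np.abs(lam)) > 1.0 + tol:
        raise ValueError("W is not non-expansive (spectral norm > 1)")
    return max((1.0 - lam.min()) / 2.0, 0.0)


n = 8
S = np.roll(np.eye(n), 1, axis=1)                     # cyclic shift (a permutation matrix)
W_smooth = 0.25 * S + 0.5 * np.eye(n) + 0.25 * S.T    # symmetric, doubly stochastic, PSD
W_swap = np.array([[0.0, 1.0], [1.0, 0.0]])           # doubly stochastic, but eigenvalue -1

print(averaged_constant(W_smooth))  # about 0.5: firmly non-expansive
print(averaged_constant(W_swap))    # about 1.0: non-expansive but not averaged
```

The second matrix shows why a spectral norm of 1 by itself only yields non-expansiveness; the averaged property additionally needs the spectrum to stay away from -1.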

- Remarks. In the above assumption, the smallest constant L for which the inequality holds is called the Lipschitz constant. It is the maximum ratio between the change in the output and the change in the input, and therefore quantifies how much the function output can change in response to input perturbations or, roughly speaking, how robust the function is. Assumption 2 guarantees that the trained DIP has a bounded Lipschitz constant, a desirable feature because the network then does not vary drastically in response to minuscule changes in its input. In the experiments, this is achieved by constraining the spectral norm of each layer of the neural network during training.
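Constraining each layer's spectral norm bounds the Lipschitz constant of the whole network by the product of the per-layer norms, because 1-Lipschitz activations such as ReLU cannot increase it. The sketch below estimates each weight matrix's spectral norm by power iteration, as in spectral normalization [49], rescales it to at most 1 (similar to the projection of [46]), and checks the bound empirically. The small fully connected ReLU network is an assumption for illustration; for the convolutional layers of a DIP, it is the operator norm of the convolution, not of the reshaped kernel, that must be bounded.

```python
import numpy as np


def spectral_norm(W, n_iter=500, seed=0):
    """Estimate ||W||_2 by power iteration (the estimator used in spectral normalization)."""
    v = np.random.default_rng(seed).standard_normal(W.shape[1])
    for _ in range(n_iter):
        u = W @ v
        u /= np.linalg.norm(u)
        v = W.T @ u
        v /= np.linalg.norm(v)
    return float(u @ W @ v)


def project_to_unit_norm(weights):
    """Rescale each layer so that its spectral norm is at most 1 (norms below 1 are kept)."""
    return [W / max(spectral_norm(W), 1.0) for W in weights]


def relu_mlp(x, weights):
    for W in weights:
        x = np.maximum(W @ x, 0.0)  # ReLU is 1-Lipschitz, so each layer is ||W||_2-Lipschitz
    return x


rng = np.random.default_rng(1)
raw = [rng.standard_normal((32, 16)), rng.standard_normal((32, 32)), rng.standard_normal((8, 32))]
weights = project_to_unit_norm(raw)

# Empirical check: ||f(a) - f(b)|| / ||a - b|| should never exceed the product of layer norms (= 1).
ratios = []
for _ in range(1000):
    a, b = rng.standard_normal(16), rng.standard_normal(16)
    ratios.append(np.linalg.norm(relu_mlp(a, weights) - relu_mlp(b, weights)) / np.linalg.norm(a - b))
print("largest observed ratio:", max(ratios))
```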

- We placed the detailed proof of Theorem 1 in Appendix A.2.

4. Experimental Results

4.1. Implementation Details

- The Chikusei airborne hyperspectral dataset [43] (available at https://naotoyokoya.com/Download.html, accessed on 4 January 2025); the test HSI consists of 192 spectral bands and was cropped to 36 × 36 pixels.

- The Indian Pines dataset acquired by the AVIRIS sensor [44]; the test HSI consists of 200 spectral bands and was cropped to 36 × 36 pixels.

4.2. Low-Rank vs. Sparsity

4.3. Low Rankness Due to DIP

4.4. Convergence

4.5. Comparison with State of the Art

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. On the Design of 1-Lipschitz DIP

Appendix A.1.1. Convolutional Layer

Appendix A.1.2. Skip Connection Layer

- The skip connections take the following form:

Appendix A.1.3. Pooling Layer

Appendix A.1.4. Activation Layer

Appendix A.1.5. Batch Normalization Layer

Appendix A.1.6. Lipschitz Constant of the Full Network

Appendix A.1.7. Enforcing Lipschitz Constraint

Appendix A.2. Proof of Theorem 1

- Let , for a non-zero .

- Remarks. This design follows a structure similar to that of the original convergence proof of the ADMM algorithm [34] and of a recent work [57]. Here, the candidate function depends on the change in the system's state and is, by design, non-negative, so the first two assumptions of Theorem 1.2 in [55] hold automatically. Thus, to establish that the proposed candidate is a valid Lyapunov function, we only need to show that it is non-increasing. More specifically, we will show that it is a decreasing function satisfying the following:
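As a simplified illustration of this argument, consider the candidate V_k = ‖x_{k+1} - x_k‖², which likewise depends only on the change of state and is non-negative. For a fixed-point iteration x_{k+1} = T(x_k) with a non-expansive T, V_{k+1} = ‖T(x_{k+1}) - T(x_k)‖² ≤ V_k, so V is non-increasing. The affine map T(x) = Wx + c and all names below are hypothetical; this is not the ADMM-based proof of Theorem 1.

```python
import numpy as np

# Hypothetical non-expansive affine map T(x) = W x + c, with W symmetric and doubly stochastic.
n = 16
S = np.roll(np.eye(n), 1, axis=1)
W = 0.25 * S + 0.5 * np.eye(n) + 0.25 * S.T
rng = np.random.default_rng(0)
c = rng.standard_normal(n)
c -= c.mean()  # c orthogonal to the all-ones eigenvector of W, so a fixed point exists


def T(x):
    return W @ x + c


x = rng.standard_normal(n)
V = []  # Lyapunov candidate V_k = ||x_{k+1} - x_k||^2
for _ in range(200):
    x_next = T(x)
    V.append(float(np.sum((x_next - x) ** 2)))
    x = x_next

print("V_0 =", V[0], " V_199 =", V[-1])
```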

Appendix A.3. Parameters Tuning and Ablation Tests

Appendix A.3.1. Sensitivity Analysis and Hyperparameters Selections

| Parameters | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| 200 | 1/2 | 1 | 1 | 1/2 | 1/2 | 0 | 0 | 0.12 | |

| Parameters | |||||||||

| 0.1 | 0 | 1 | 50 | 0.1 |

| | 0 | 0.1 | 0.25 | 0.5 | 0.75 | 1 |
|---|---|---|---|---|---|---|
| 0 | 30.692 | 37.479(±0.63) | 38.121(±0.46) | 38.475(±0.48) | 38.686(±0.52) | 38.884(±0.31) |
| 0.1 | 37.004 | 40.849(±0.31) | 39.107(±0.35) | 39.321(±0.37) | 39.443(±0.30) | 39.812(±0.34) |
| 0.25 | 37.211 | 40.355(±0.29) | 40.798(±0.36) | 40.266(±0.29) | 39.770(±0.23) | 39.906(±0.31) |
| 0.5 | 37.592 | 39.766(±0.27) | 40.807(±0.24) | 40.831(±0.26) | 40.532(±0.25) | 40.245(±0.32) |
| 0.75 | 37.663 | 39.897(±0.37) | 40.373(±0.33) | 40.565(±0.27) | 40.807(±0.30) | 40.780(±0.26) |
| 1 | 37.957 | 38.591(±0.46) | 39.248(±0.31) | 39.848(±0.25) | 39.902(±0.25) | 40.835(±0.28) |

Appendix A.3.2. Computational Efficiency

| Dataset | Cost | GLON [4] | DHP [11] | R-DLRHyIn [16] | DeepHyIn [50] | DDS2M [19] | DeepRED [22] | PnP-DIP [24] | LRS-PnP-DIP(1-Lip) |
|---|---|---|---|---|---|---|---|---|---|
| Chikusei | per-iteration | 0.138 | 0.083 | 0.089 | 0.102 | 0.114 | 2.412 | 2.542 | 3.622 |
| | all iterations | 69.302 | 89.596 | 106.855 | 95.351 | 382.027 | 482.985 | 473.064 | 394.601 |
| Indian Pines | per-iteration | 0.104 | 0.109 | 0.115 | 0.136 | 0.149 | 2.732 | 2.967 | 3.905 |
| | all iterations | 74.741 | 97.215 | 115.541 | 103.344 | 401.654 | 494.088 | 474.156 | 425.601 |
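The table reports per-iteration and total costs separately. Assuming that the total is simply the per-iteration cost multiplied by the number of iterations (no fixed setup cost; the table states neither this nor the time unit), their ratio gives an implied iteration count. Under that assumption, on Chikusei LRS-PnP-DIP(1-Lip) has the highest per-iteration cost but needs fewer iterations than DeepRED or PnP-DIP, which is consistent with its lower total cost. The dictionary and helper below are a hypothetical arithmetic aid.

```python
# Chikusei timings copied from the table above; the table does not state the time unit.
chikusei = {
    "GLON": (0.138, 69.302),
    "DHP": (0.083, 89.596),
    "R-DLRHyIn": (0.089, 106.855),
    "DeepHyIn": (0.102, 95.351),
    "DDS2M": (0.114, 382.027),
    "DeepRED": (2.412, 482.985),
    "PnP-DIP": (2.542, 473.064),
    "LRS-PnP-DIP(1-Lip)": (3.622, 394.601),
}


def implied_iterations(per_iteration, total):
    """Assumes total = per_iteration * iterations, i.e. no fixed setup cost."""
    return total / per_iteration


for name, (per_iteration, total) in chikusei.items():
    print(f"{name:>18}: about {implied_iterations(per_iteration, total):.0f} iterations")
```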

Appendix A.3.3. Effect of the Lipschitz Constraint DIP

Appendix A.3.4. Effect of the Averaged Denoiser

| Method | Metric | Input | BM3D Denoiser | NLM Denoiser | Averaged NLM Denoiser |
|---|---|---|---|---|---|
| | MPSNR ↑ | 31.76 | 41.23(±0.25) | 41.25(±0.30) | 41.22(±0.13) |
| | MSSIM ↑ | 0.304 | 0.920(±0.01) | 0.918(±0.01) | 0.920(±0.01) |
| | MPSNR ↑ | 30.38 | 39.55(±0.29) | 39.60(±0.22) | 39.55(±0.22) |
| | MSSIM ↑ | 0.247 | 0.913(±0.01) | 0.915(±0.01) | 0.914(±0.01) |
| | MPSNR ↑ | 28.75 | 37.68(±0.32) | 37.65(±0.35) | 37.67(±0.24) |
| | MSSIM ↑ | 0.229 | 0.904(±0.01) | 0.903(±0.01) | 0.903(±0.01) |

Appendix A.3.5. Effect of the DIP Network Architectures

| Methods | MPSNR↑ | MSSIM↑ |
|---|---|---|
| Input | 22.582 | 0.178 |
| ResNet 2D | 30.975(±0.62) | 0.610(±0.02) |
| ResNet 3D | 29.661(±1.20) | 0.589(±0.03) |
| UNet 2D | 35.963(±0.42) | 0.882(±0.02) |
| UNet 3D | 35.438(±0.56) | 0.868(±0.01) |
| Skip-Net 2D | | |
| Skip-Net 3D | 37.050(±0.32) | 0.890(±0.01) |

References

- [1] Ortega, S.; Guerra, R.; Diaz, M.; Fabelo, H.; López, S.; Callico, G.M.; Sarmiento, R. Hyperspectral push-broom microscope development and characterization. IEEE Access 2019, 7, 122473–122491.

- [2] EMIT L1B At-Sensor Calibrated Radiance and Geolocation Data 60 m V001. Available online: https://search.earthdata.nasa.gov/search/granules?p=C2408009906-LPCLOUD&pg[0][v]=f&pg[0][gsk]=-start_date&q=%22EMIT%22&tl=1711560236!3!! (accessed on 27 August 2024).

- [3] Zhuang, L.; Bioucas-Dias, J.M. Fast hyperspectral image denoising and inpainting based on low-rank and sparse representations. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 730–742.

- [4] Zhao, X.L.; Yang, J.H.; Ma, T.H.; Jiang, T.X.; Ng, M.K.; Huang, T.Z. Tensor completion via complementary global, local, and nonlocal priors. IEEE Trans. Image Process. 2021, 31, 984–999.

- [5] Li, B.Z.; Zhao, X.L.; Wang, J.L.; Chen, Y.; Jiang, T.X.; Liu, J. Tensor completion via collaborative sparse and low-rank transforms. IEEE Trans. Comput. Imaging 2021, 7, 1289–1303.

- [6] Luo, Y.S.; Zhao, X.L.; Jiang, T.X.; Chang, Y.; Ng, M.K.; Li, C. Self-supervised nonlinear transform-based tensor nuclear norm for multi-dimensional image recovery. IEEE Trans. Image Process. 2022, 31, 3793–3808.

- [7] Wu, Y.; Liu, J.; Gong, M.; Liu, Z.; Miao, Q.; Ma, W. MPCT: Multiscale point cloud transformer with a residual network. IEEE Trans. Multimed. 2023, 26, 3505–3516.

- [8] Yuan, Y.; Wu, Y.; Fan, X.; Gong, M.; Ma, W.; Miao, Q. EGST: Enhanced geometric structure transformer for point cloud registration. IEEE Trans. Vis. Comput. Graph. 2023, 30, 6222–6234.

- [9] Wong, R.; Zhang, Z.; Wang, Y.; Chen, F.; Zeng, D. HSI-IPNet: Hyperspectral imagery inpainting by deep learning with adaptive spectral extraction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4369–4380.

- [10] Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep image prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9446–9454.

- [11] Sidorov, O.; Yngve Hardeberg, J. Deep hyperspectral prior: Single-image denoising, inpainting, super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

- [12] Meng, Z.; Yu, Z.; Xu, K.; Yuan, X. Self-supervised neural networks for spectral snapshot compressive imaging. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2622–2631.

- [13] Lai, Z.; Wei, K.; Fu, Y. Deep Plug-and-Play Prior for Hyperspectral Image Restoration. Neurocomputing 2022, 481, 281–293.

- [14] Wu, Z.; Yang, C.; Su, X.; Yuan, X. Adaptive Deep PnP Algorithm for Video Snapshot Compressive Imaging. arXiv 2022, arXiv:2201.05483.

- [15] Gan, W.; Eldeniz, C.; Liu, J.; Chen, S.; An, H.; Kamilov, U.S. Image reconstruction for MRI using deep CNN priors trained without groundtruth. In Proceedings of the 2020 54th Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 1–4 November 2020; pp. 475–479.

- [16] Niresi, K.F.; Chi, C.Y. Robust Hyperspectral Inpainting via Low-Rank Regularized Untrained Convolutional Neural Network. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- [17] Hong, D.; Yao, J.; Li, C.; Meng, D.; Yokoya, N.; Chanussot, J. Decoupled-and-coupled networks: Self-supervised hyperspectral image super-resolution with subpixel fusion. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5527812.

- [18] Yao, J.; Hong, D.; Chanussot, J.; Meng, D.; Zhu, X.; Xu, Z. Cross-attention in coupled unmixing nets for unsupervised hyperspectral super-resolution. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Part XXIX 16. pp. 208–224.

- [19] Miao, Y.; Zhang, L.; Zhang, L.; Tao, D. DDS2M: Self-supervised denoising diffusion spatio-spectral model for hyperspectral image restoration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 12086–12096.

- [20] Li, S.; Yaghoobi, M. Self-Supervised Hyperspectral Inpainting with the Optimisation inspired Deep Neural Network Prior. In Proceedings of the 2023 31st European Signal Processing Conference (EUSIPCO), Helsinki, Finland, 4–8 September 2023; pp. 471–475.

- [21] Heckel, R.; Hand, P. Deep decoder: Concise image representations from untrained non-convolutional networks. arXiv 2018, arXiv:1810.03982.

- [22] Mataev, G.; Milanfar, P.; Elad, M. DeepRED: Deep image prior powered by RED. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

- [23] Liu, J.; Sun, Y.; Xu, X.; Kamilov, U.S. Image restoration using total variation regularized deep image prior. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 7715–7719.

- [24] Sun, Z.; Latorre, F.; Sanchez, T.; Cevher, V. A plug-and-play deep image prior. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, Canada, 6–11 June 2021; pp. 8103–8107.

- [25] Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral image classification using dictionary-based sparse representation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3973–3985.

- [26] Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4729–4743.

- [27] Li, C.; Zhang, B.; Hong, D.; Yao, J.; Chanussot, J. LRR-Net: An Interpretable Deep Unfolding Network for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

- [28] Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G. Online dictionary learning for sparse coding. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 689–696.

- [29] Peng, J.; Sun, W.; Du, Q. Self-paced joint sparse representation for the classification of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2018, 57, 1183–1194.

- [30] Zhao, Y.Q.; Yang, J. Hyperspectral image denoising via sparse representation and low-rank constraint. IEEE Trans. Geosci. Remote Sens. 2014, 53, 296–308.

- [31] Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A. Sparse unmixing of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

- [32] Xue, J.; Zhao, Y.; Liao, W.; Kong, S.G. Joint spatial and spectral low-rank regularization for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2017, 56, 1940–1958.

- [33] Ma, G.; Huang, T.Z.; Huang, J.; Zheng, C.C. Local low-rank and sparse representation for hyperspectral image denoising. IEEE Access 2019, 7, 79850–79865.

- [34] Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends® Mach. Learn. 2011, 3, 1–122.

- [35] Parikh, N.; Boyd, S. Proximal algorithms. Found. Trends® Optim. 2014, 1, 127–239.

- [36] Venkatakrishnan, S.V.; Bouman, C.A.; Wohlberg, B. Plug-and-play priors for model based reconstruction. In Proceedings of the 2013 IEEE Global Conference on Signal and Information Processing, Austin, TX, USA, 3–5 December 2013; pp. 945–948.

- [37] Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. J. Issued Courant Inst. Math. Sci. 2004, 57, 1413–1457.

- [38] Cai, J.F.; Candès, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- [39] Sreehari, S.; Venkatakrishnan, S.V.; Wohlberg, B.; Buzzard, G.T.; Drummy, L.F.; Simmons, J.P.; Bouman, C.A. Plug-and-play priors for bright field electron tomography and sparse interpolation. IEEE Trans. Comput. Imaging 2016, 2, 408–423.

- [40] Nair, P.; Gavaskar, R.G.; Chaudhury, K.N. Fixed-point and objective convergence of plug-and-play algorithms. IEEE Trans. Comput. Imaging 2021, 7, 337–348.

- [41] Ekeland, I.; Temam, R. Convex Analysis and Variational Problems; SIAM: Philadelphia, PA, USA, 1999.

- [42] Ryu, E.; Liu, J.; Wang, S.; Chen, X.; Wang, Z.; Yin, W. Plug-and-play methods provably converge with properly trained denoisers. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 5546–5557.

- [43] Yokoya, N.; Iwasaki, A. Airborne Hyperspectral Data over Chikusei; SAL-2016-05-27; University of Tokyo: Tokyo, Japan, 2016.

- [44] Baumgardner, M.F.; Biehl, L.L.; Landgrebe, D.A. 220 band AVIRIS hyperspectral image data set: June 12, 1992 Indian Pine test site 3. Purdue Univ. Res. Repos. 2015, 10, 991.

- [45] Denby, B.; Lucia, B. Orbital edge computing: Nanosatellite constellations as a new class of computer system. In Proceedings of the Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems, Lausanne, Switzerland, 16–20 March 2020; pp. 939–954.

- [46] Gouk, H.; Frank, E.; Pfahringer, B.; Cree, M.J. Regularisation of neural networks by enforcing Lipschitz continuity. Mach. Learn. 2021, 110, 393–416.

- [47] Wang, H.; Li, T.; Zhuang, Z.; Chen, T.; Liang, H.; Sun, J. Early Stopping for Deep Image Prior. arXiv 2021, arXiv:2112.06074.

- [48] Nguyen, H.V.; Ulfarsson, M.O.; Sigurdsson, J.; Sveinsson, J.R. Deep sparse and low-rank prior for hyperspectral image denoising. In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 1217–1220.

- [49] Miyato, T.; Kataoka, T.; Koyama, M.; Yoshida, Y. Spectral normalization for generative adversarial networks. arXiv 2018, arXiv:1802.05957.

- [50] Rasti, B.; Ghamisi, P.; Gloaguen, R. Unsupervised deep hyperspectral inpainting using a new mixing model. In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 1221–1224.

- [51] Maffei, A.; Haut, J.M.; Paoletti, M.E.; Plaza, J.; Bruzzone, L.; Plaza, A. A single model CNN for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2516–2529.

- [52] Cascarano, P.; Sebastiani, A.; Comes, M.C. ADMM DIP-TV: Combining Total Variation and Deep Image Prior for image restoration. arXiv 2020, arXiv:2009.11380.

- [53] Jiao, L.; Zhang, X.; Liu, X.; Liu, F.; Yang, S.; Ma, W.; Li, L.; Chen, P.; Feng, Z.; Guo, Y.; et al. Transformer meets remote sensing video detection and tracking: A comprehensive survey. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 1–45.

- [54] Shevitz, D.; Paden, B. Lyapunov stability theory of nonsmooth systems. IEEE Trans. Autom. Control 1994, 39, 1910–1914.

- [55] Bof, N.; Carli, R.; Schenato, L. Lyapunov theory for discrete time systems. arXiv 2018, arXiv:1809.05289.

- [56] Hill, D.; Moylan, P. The stability of nonlinear dissipative systems. IEEE Trans. Autom. Control 1976, 21, 708–711.

- [57] Zhang, T.; Shen, Z. A fundamental proof of convergence of alternating direction method of multipliers for weakly convex optimization. J. Inequal. Appl. 2019, 2019, 128.

| Mask Type | Metric | Input | GLON [4] | DHP [11] | R-DLRHyIn [16] | DeepRED [22] | DeepHyIn [50] | PnP-DIP [24] | DDS2M [19] | LRS-PnP-DIP(1-Lip) |
|---|---|---|---|---|---|---|---|---|---|---|
| Mask Type 1 | MPSNR ↑ | 33.0740 | 41.3956(±0.52) | 41.5714(±0.31) | 41.5765(±0.28) | 41.6902(±0.57) | 41.7595(±0.25) | | | |
| | MSSIM ↑ | 0.2441 | 0.9102(±0.03) | 0.9135(±0.01) | 0.9121(±0.02) | 0.9180(±0.02) | 0.9250(±0.02) | | | |
| | MSAM ↓ | 0.7341 | 0.1342 | 0.1296(±0.01) | 0.1250(±0.01) | 0.1237(±0.01) | 0.1221(±0.01) | | | |
| Mask Type 2 | MPSNR ↑ | 31.7151 | 38.4103 | 39.7510(±0.65) | 40.1586(±0.84) | 40.4119(±0.33) | 40.6260(±0.40) | 40.6661(±0.52) | 40.7904(±0.74) | |
| | MSSIM ↑ | 0.2338 | 0.8768 | 0.8961(±0.01) | 0.9041(±0.01) | 0.9049(±0.01) | 0.9100(±0.01) | 0.9121(±0.01) | 0.9130(±0.01) | |
| | MSAM ↓ | 0.8296 | 0.1518 | 0.1436(±0.02) | 0.1373(±0.01) | 0.1320(±0.01) | 0.1315(±0.01) | 0.1298(±0.01) | | |
| Mask Type 3 | MPSNR ↑ | 30.3258 | 35.6927 | 38.1440(±0.90) | 38.9580(±0.79) | 39.6952(±0.99) | 39.8608(±0.68) | 39.8150(±0.40) | 40.0779(±0.82) | |
| | MSSIM ↑ | 0.2018 | 0.8558 | 0.8805(±0.01) | 0.8871(±0.01) | 0.8902(±0.01) | 0.8950(±0.01) | 0.8958(±0.01) | 0.9003(±0.01) | |
| | MSAM ↓ | 0.8441 | 0.1670 | 0.1563(±0.01) | 0.1507(±0.01) | 0.1495(±0.01) | 0.1480(±0.01) | 0.1476(±0.01) | | |
| Mask Type 4 | MPSNR ↑ | 27.9802 | 34.5011 | 36.6018(±1.15) | 37.6504(±0.89) | 37.9080(±0.59) | 38.1961(±0.77) | 38.3442(±0.73) | 38.7529(±0.88) | |
| | MSSIM ↑ | 0.1700 | 0.8267 | 0.8693(±0.01) | 0.8740(±0.01) | 0.8772(±0.01) | 0.8769(±0.01) | 0.8820(±0.01) | 0.8849(±0.01) | |
| | MSAM ↓ | 0.8847 | 0.1803 | 0.1714(±0.03) | 0.1636(±0.02) | 0.1602(±0.01) | 0.1580(±0.01) | 0.1557(±0.01) | | |

| Mask Type | Metric | Input | GLON [4] | DHP [11] | R-DLRHyIn [16] | DeepRED [22] | DeepHyIn [50] | PnP-DIP [24] | DDS2M [19] | LRS-PnP-DIP(1-Lip) |
|---|---|---|---|---|---|---|---|---|---|---|
| Mask Type 1 | MPSNR ↑ | 31.6903 | 40.1917(±0.33) | 40.5490(±0.41) | 40.7982(±0.32) | 40.9309(±0.60) | 40.8897(±0.34) | | | |
| | MSSIM ↑ | 0.2268 | 0.8917(±0.02) | 0.8930(±0.02) | 0.8932(±0.01) | 0.9028(±0.01) | 0.9050(±0.01) | | | |
| | MSAM ↓ | 0.7723 | 0.1594 | 0.1580(±0.01) | 0.1369(±0.01) | 0.1368(±0.01) | 0.1320(±0.01) | | | |
| Mask Type 2 | MPSNR ↑ | 29.6815 | 36.9092 | 38.1331(±0.22) | 38.7072(±0.25) | 38.5630(±0.19) | 38.7976(±0.40) | 39.0021(±0.32) | 39.9861(±0.40) | |
| | MSSIM ↑ | 0.2098 | 0.8849(±0.01) | 0.8902(±0.01) | 0.8864(±0.01) | 0.8919(±0.01) | 0.8972(±0.01) | | | |
| | MSAM ↓ | 0.8723 | 0.1514 | 0.1509(±0.01) | 0.1469(±0.01) | 0.1450(±0.01) | 0.1444(±0.01) | | | |
| Mask Type 3 | MPSNR ↑ | 28.6195 | 36.7447 | 37.0714(±0.28) | 37.4790(±0.20) | 37.3976(±0.39) | 37.4555(±0.39) | 37.7821(±0.22) | 37.8070(±0.52) | |
| | MSSIM ↑ | 0.1864 | 0.8787(±0.01) | 0.8870(±0.01) | 0.8869(±0.01) | 0.8893(±0.01) | 0.8921(±0.01) | | | |
| | MSAM ↓ | 0.8823 | 0.1584 | 0.1579(±0.01) | 0.1539(±0.01) | 0.1521(±0.01) | 0.1490(±0.01) | | | |
| Mask Type 4 | MPSNR ↑ | 24.6998 | 34.5630 | 35.8009(±0.30) | 35.8292(±0.22) | 35.8145(±0.32) | 35.8905(±0.23) | 36.1022(±0.18) | 36.4938(±0.34) | |
| | MSSIM ↑ | 0.1672 | 0.8592(±0.01) | 0.8651(±0.01) | 0.8678(±0.01) | 0.8698(±0.01) | 0.8733(±0.01) | | | |
| | MSAM ↓ | 0.8977 | 0.1644 | 0.1610(±0.02) | 0.1611(±0.01) | 0.1602(±0.01) | 0.1588(±0.01) | | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, S.; Yaghoobi, M. Self-Supervised Deep Hyperspectral Inpainting with Plug-and-Play and Deep Image Prior Models. Remote Sens. 2025, 17, 288. https://doi.org/10.3390/rs17020288
