Robust and Transferable Elevation-Aware Multi-Resolution Network for Semantic Segmentation of LiDAR Point Clouds in Powerline Corridors

Abstract

Highlights

- We propose EMPower-Net, a novel LiDAR point cloud segmentation network tailored for powerline corridors, which integrates an elevation-aware embedding and multi-resolution contextual learning.

- EMPower-Net achieves state-of-the-art performance on powerline corridor datasets and generalizes well across different geographic regions.

- The elevation-aware design substantially improves the recognition of critical vertical structures such as power lines and towers, supporting more reliable safety analysis of transmission corridors.

- The demonstrated transferability highlights EMPower-Net’s potential for large-scale deployment in real-world corridor inspection.


1. Introduction

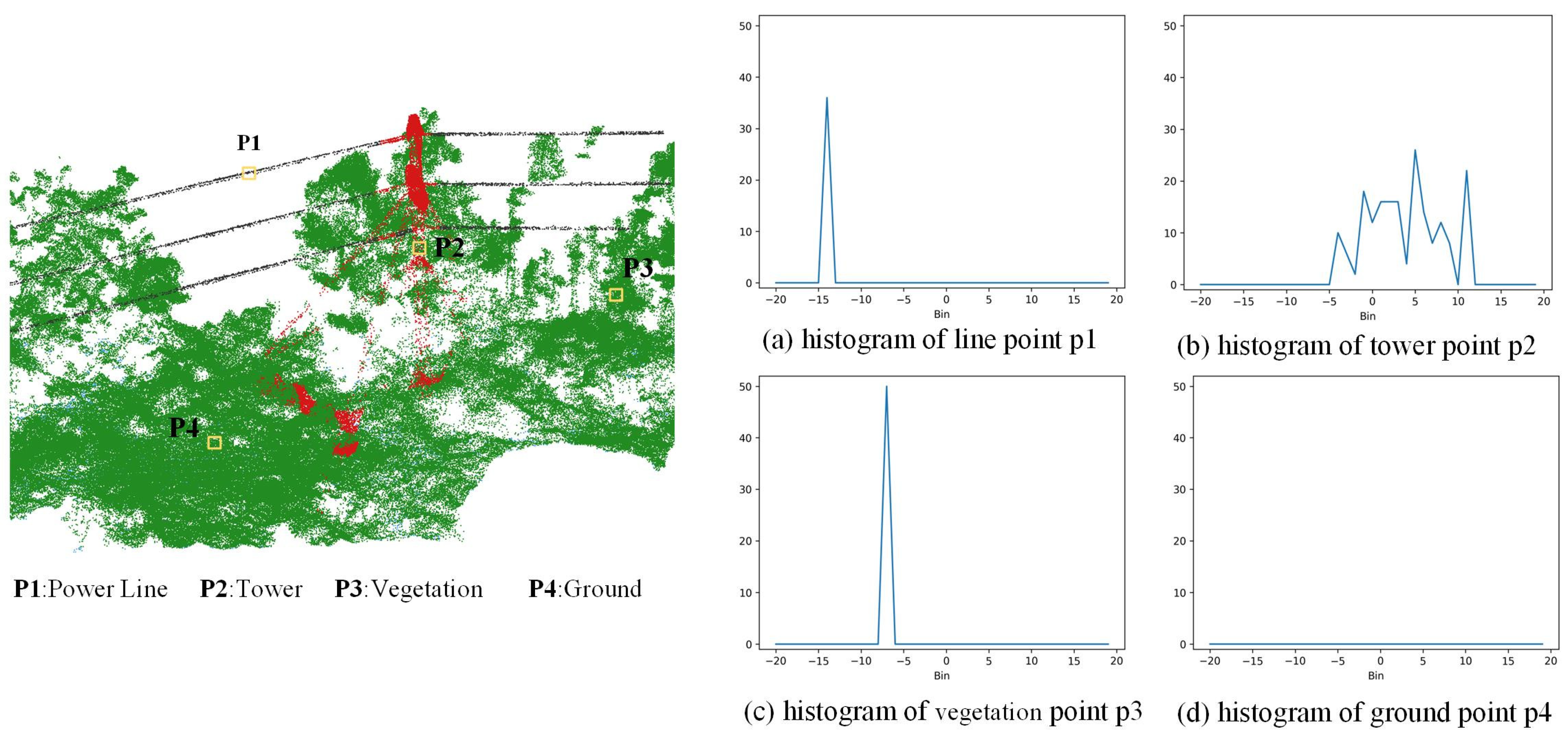

- Elevation-Aware Embedding: We design a histogram-based elevation distribution module that directly models vertical structural differences. This targeted embedding substantially improves the discrimination of towers and suspended power lines from ground and vegetation.

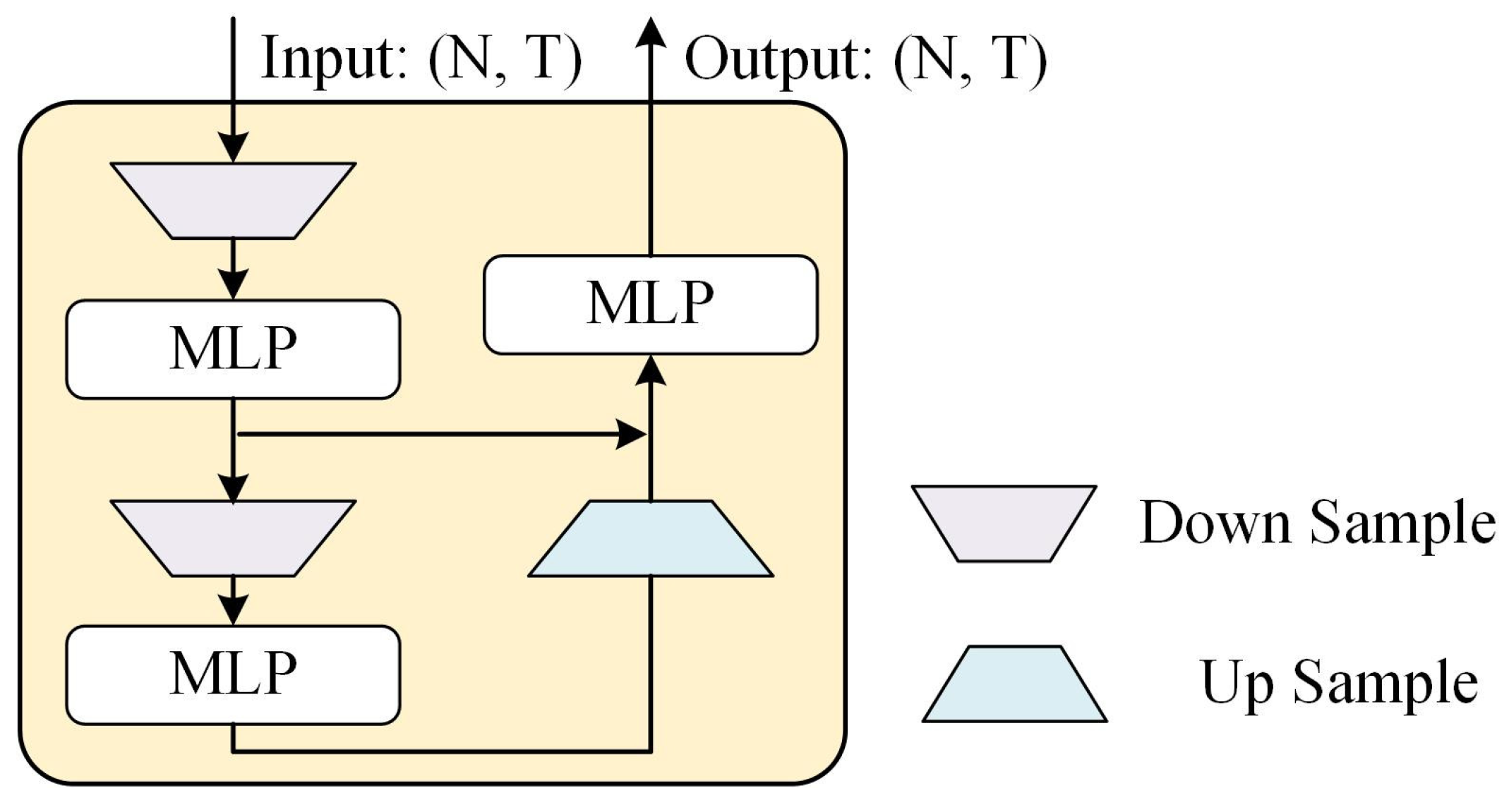

- Multi-Resolution Contextual Learning: We introduce a multi-scale strategy that simultaneously captures fine-grained local details and long-range sparse structures. This design effectively enhances segmentation accuracy for complex transmission corridor structures with varying object scales, addressing challenges that standard single-scale approaches struggle to handle.

- Transferable Feature Learning: We demonstrate that the proposed method not only achieves state-of-the-art performance on the training region (Yunnan dataset) but also exhibits strong generalization when transferred to a different region (Guangdong dataset) with diverse vegetation and occlusion conditions.

- Urban Generalization Validation: Beyond powerline corridors, we validate the elevation-aware features on the WHU3D and Paris-Lille-3D urban datasets, showing improved segmentation for buildings and other vertical structures.
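To make the idea of a histogram-based elevation descriptor concrete, the following is a minimal sketch, not the authors' module. It assumes that points are grouped into horizontal grid cells and that each cell is summarized by a normalized histogram of heights above its lowest point. The function names, `cell_size`, and `num_bins` are illustrative assumptions that do not come from the paper.

```python
from collections import defaultdict
from math import floor


def elevation_histogram(z_values, num_bins=8, z_min=None, z_max=None):
    """Return a normalized height histogram (fractions summing to 1).

    By default the bins span the observed height range, so the descriptor
    is relative to the lowest point in the group (an assumption).
    """
    if not z_values:
        return [0.0] * num_bins
    lo = min(z_values) if z_min is None else z_min
    hi = max(z_values) if z_max is None else z_max
    span = hi - lo
    counts = [0] * num_bins
    for z in z_values:
        if span <= 0:
            idx = 0  # all points at the same height fall in the first bin
        else:
            idx = int((z - lo) / span * num_bins)
        idx = max(0, min(idx, num_bins - 1))  # clamp to a valid bin
        counts[idx] += 1
    total = len(z_values)
    return [c / total for c in counts]


def column_histograms(points, cell_size=5.0, num_bins=8):
    """Group (x, y, z) points into horizontal cells and describe each cell
    by the elevation histogram of the points it contains."""
    cells = defaultdict(list)
    for x, y, z in points:
        key = (floor(x / cell_size), floor(y / cell_size))
        cells[key].append(z)
    return {key: elevation_histogram(zs, num_bins) for key, zs in cells.items()}
```

In such a descriptor, a cell containing only ground returns concentrates its mass in one bin, whereas a cell with ground plus a suspended conductor shows mass in the lowest and highest bins with an empty gap between them. This is the kind of vertical structural difference the contribution above refers to.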

2. Related Work

2.1. Multi-View-Based Methods

2.2. Voxel-Based Methods

2.3. Point-Based Methods

3. Methodology

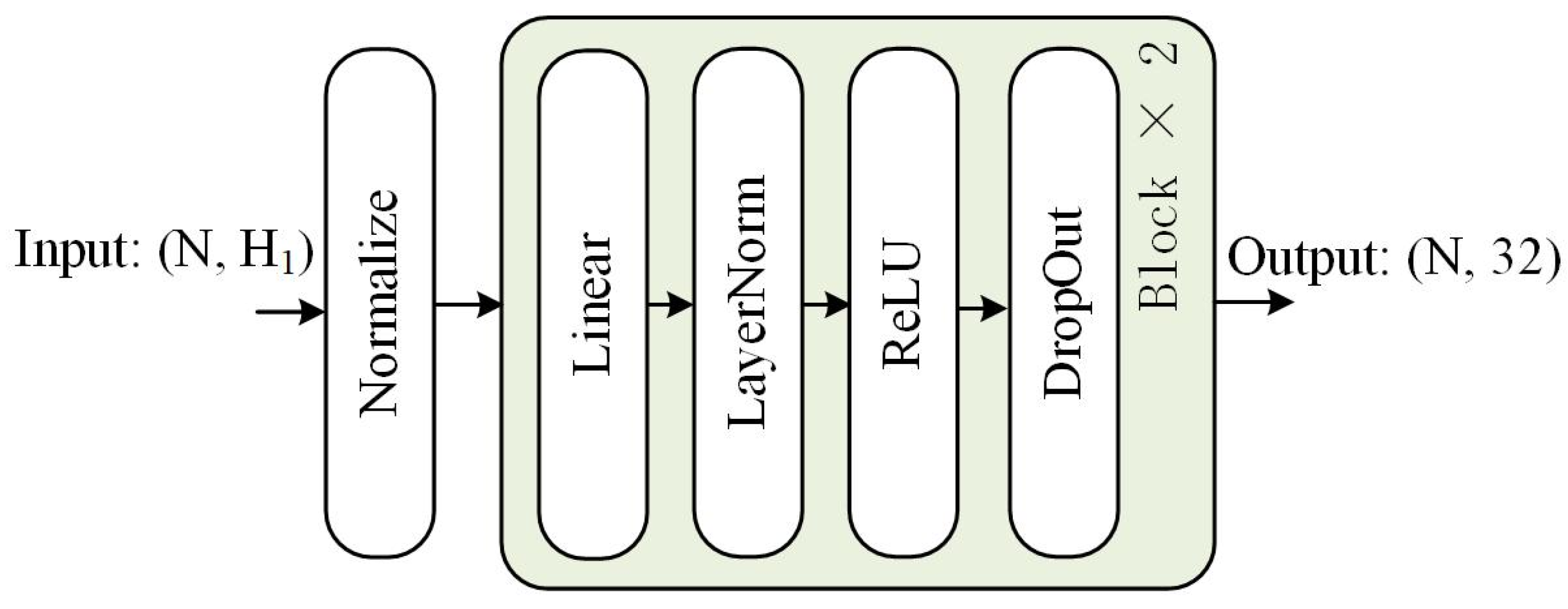

3.1. Backbone

3.2. Elevation Distribution Module

3.3. Multiple Resolutions Module
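As a rough illustration of what observing a scene at multiple resolutions can mean for a point cloud, the sketch below builds a small pyramid by voxel-grid downsampling at several voxel sizes. It is an assumption-laden stand-in rather than the module proposed in this paper: the function names and the voxel sizes `(0.5, 2.0, 8.0)` are hypothetical, and centroid pooling is only one common choice.

```python
from collections import defaultdict
from math import floor


def voxel_downsample(points, voxel_size):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [
        tuple(sum(axis) / len(group) for axis in zip(*group))
        for group in buckets.values()
    ]


def multi_resolution_pyramid(points, voxel_sizes=(0.5, 2.0, 8.0)):
    """Return one downsampled copy of the cloud per voxel size.

    Small voxels keep fine local detail; large voxels give a sparse view
    in which long, thin structures span only a few samples.
    """
    return {size: voxel_downsample(points, size) for size in voxel_sizes}
```

A network could then extract features at each level and fuse them. How the fusion is done is specific to the method and is not shown here.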

4. Experiments

4.1. Experimental Dataset

4.2. Implementation Details

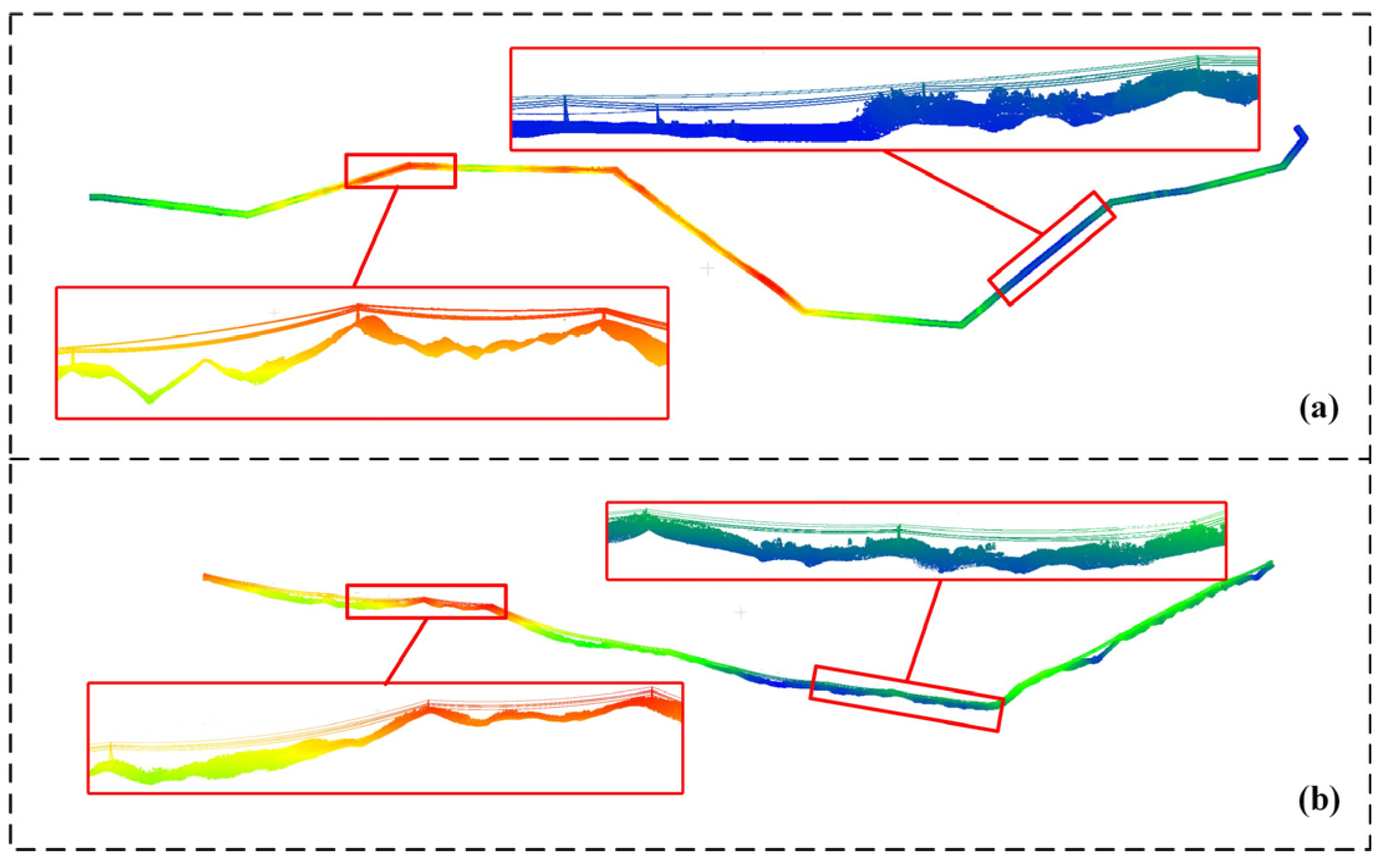

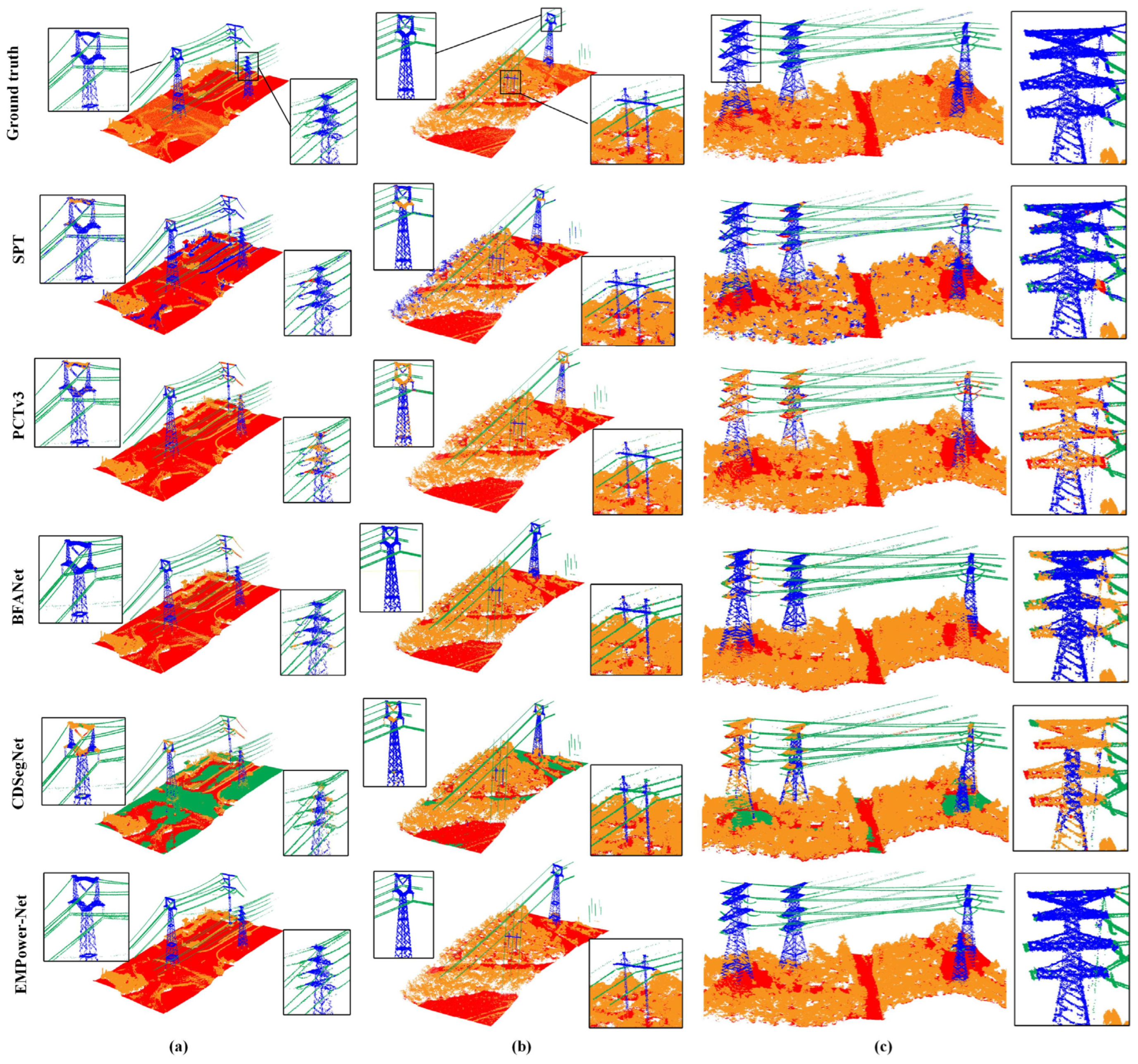

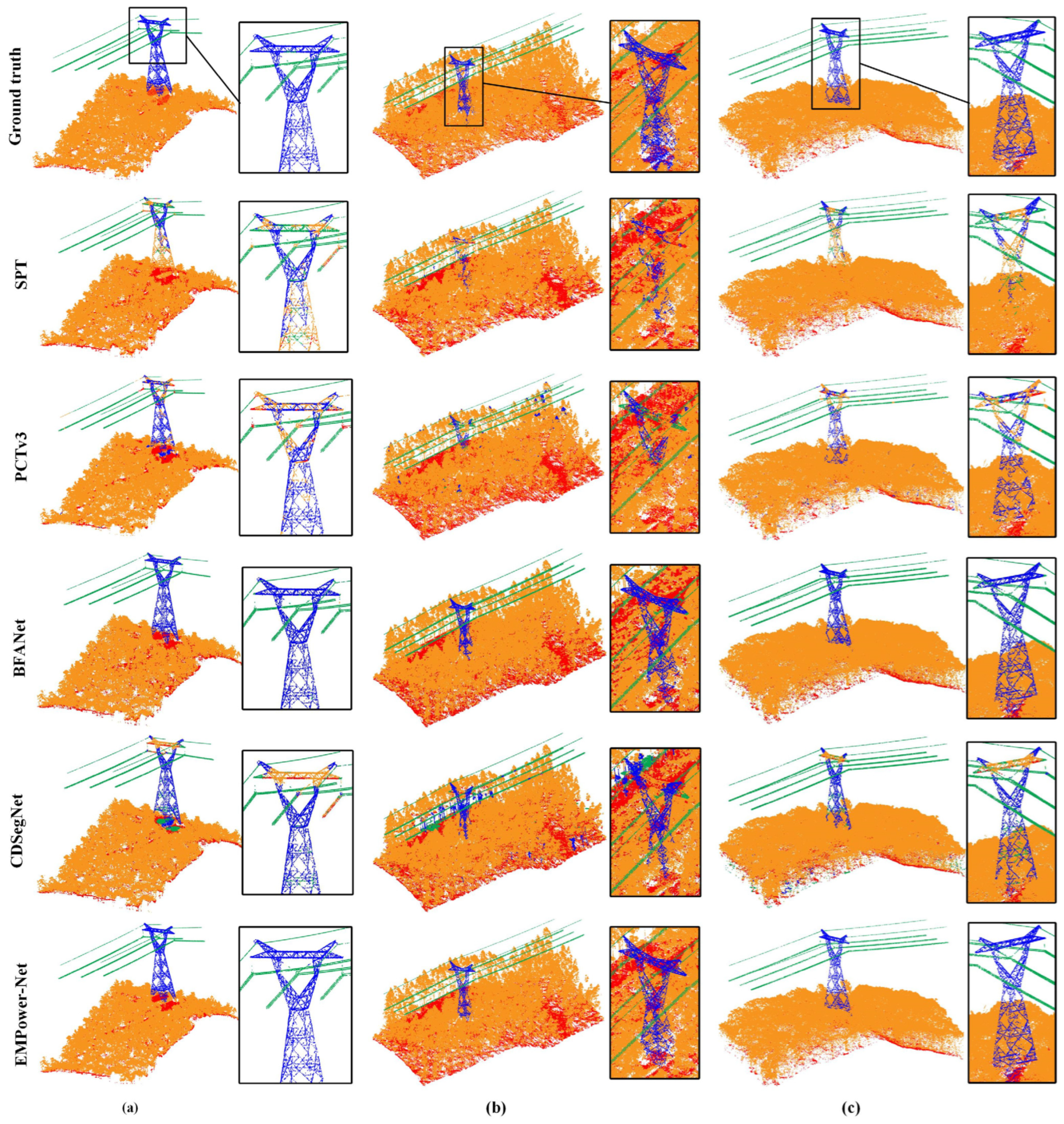

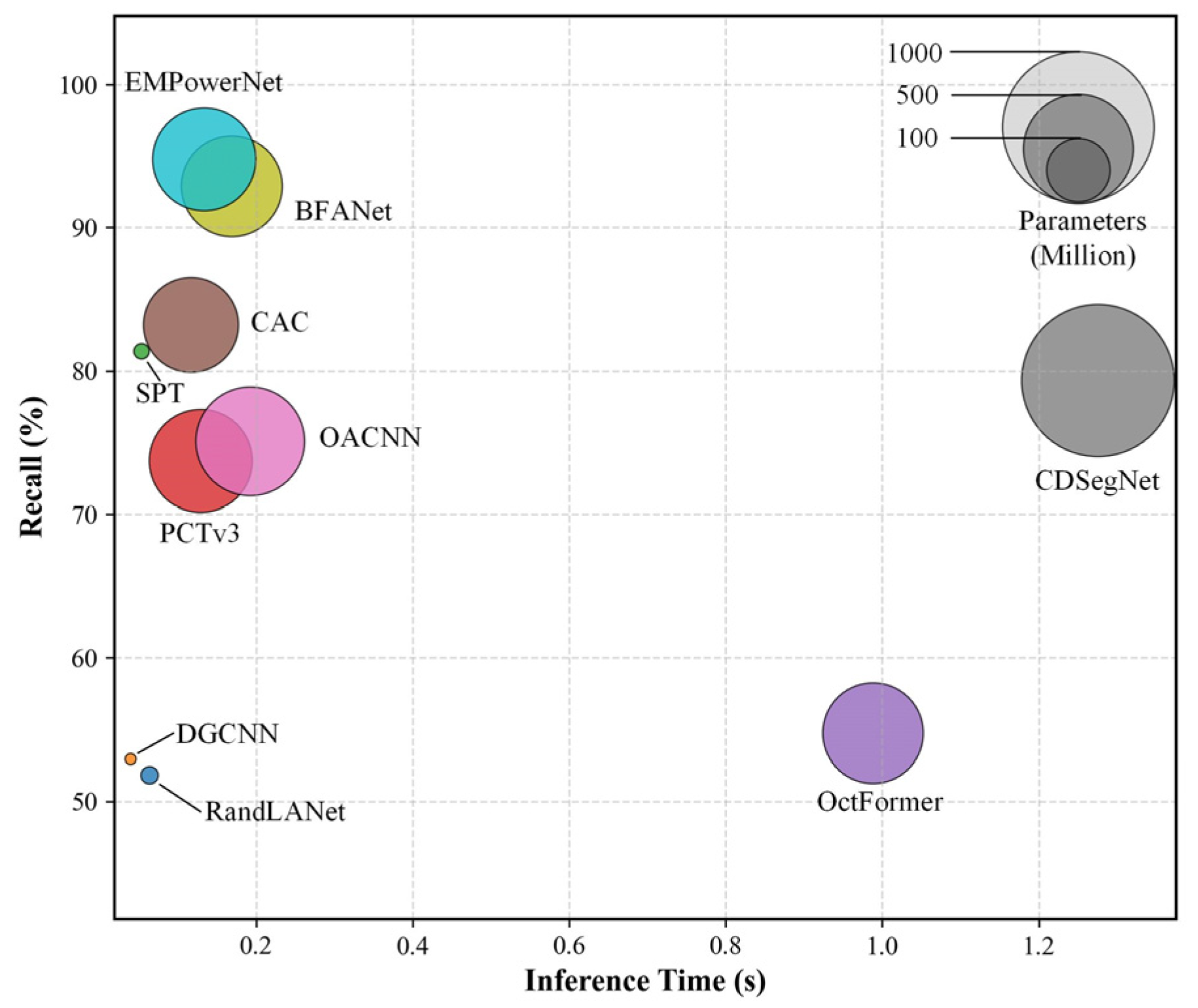

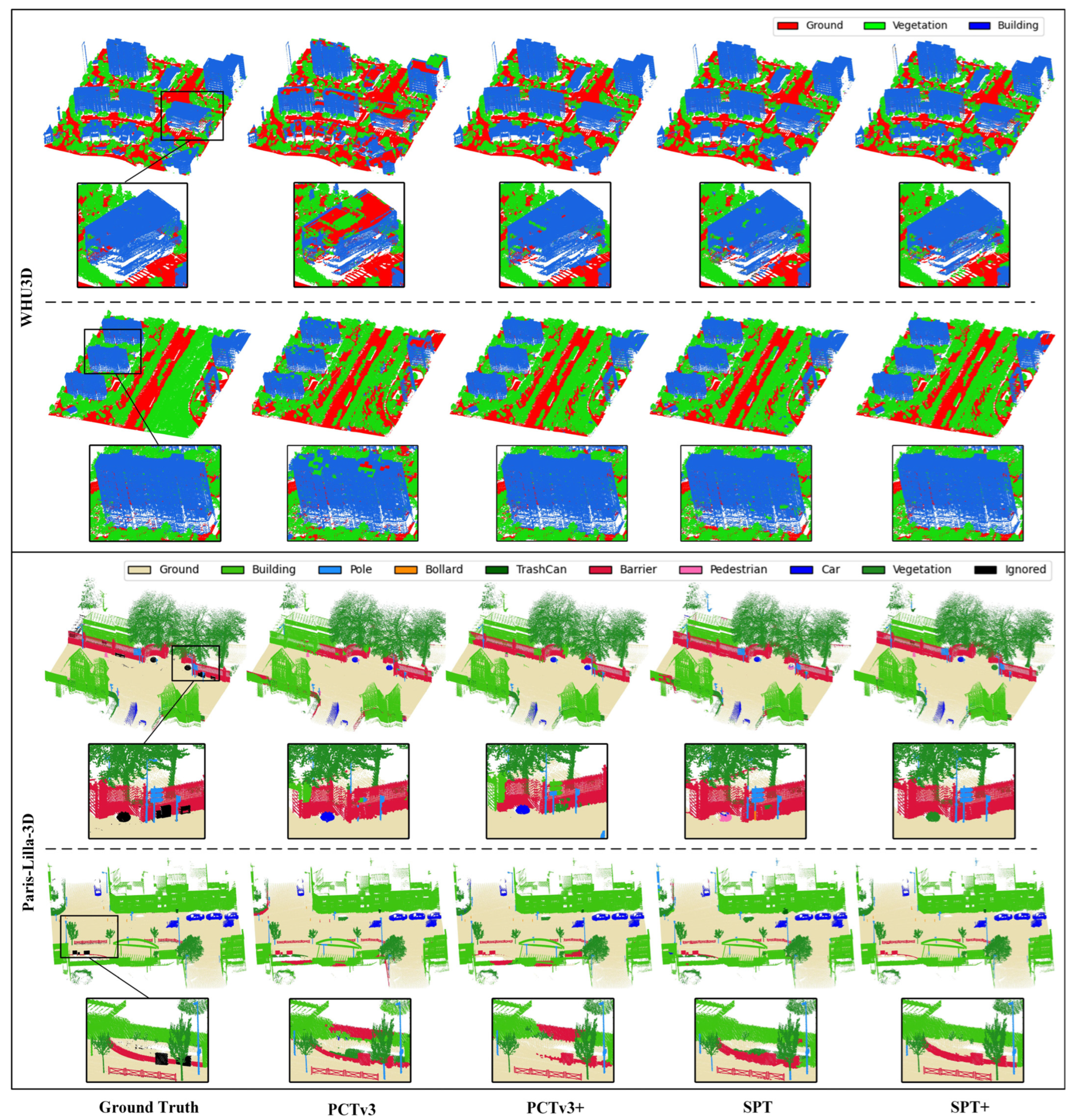

4.3. Qualitative Comparison

4.4. Quantitative Comparison

4.5. Ablation Study

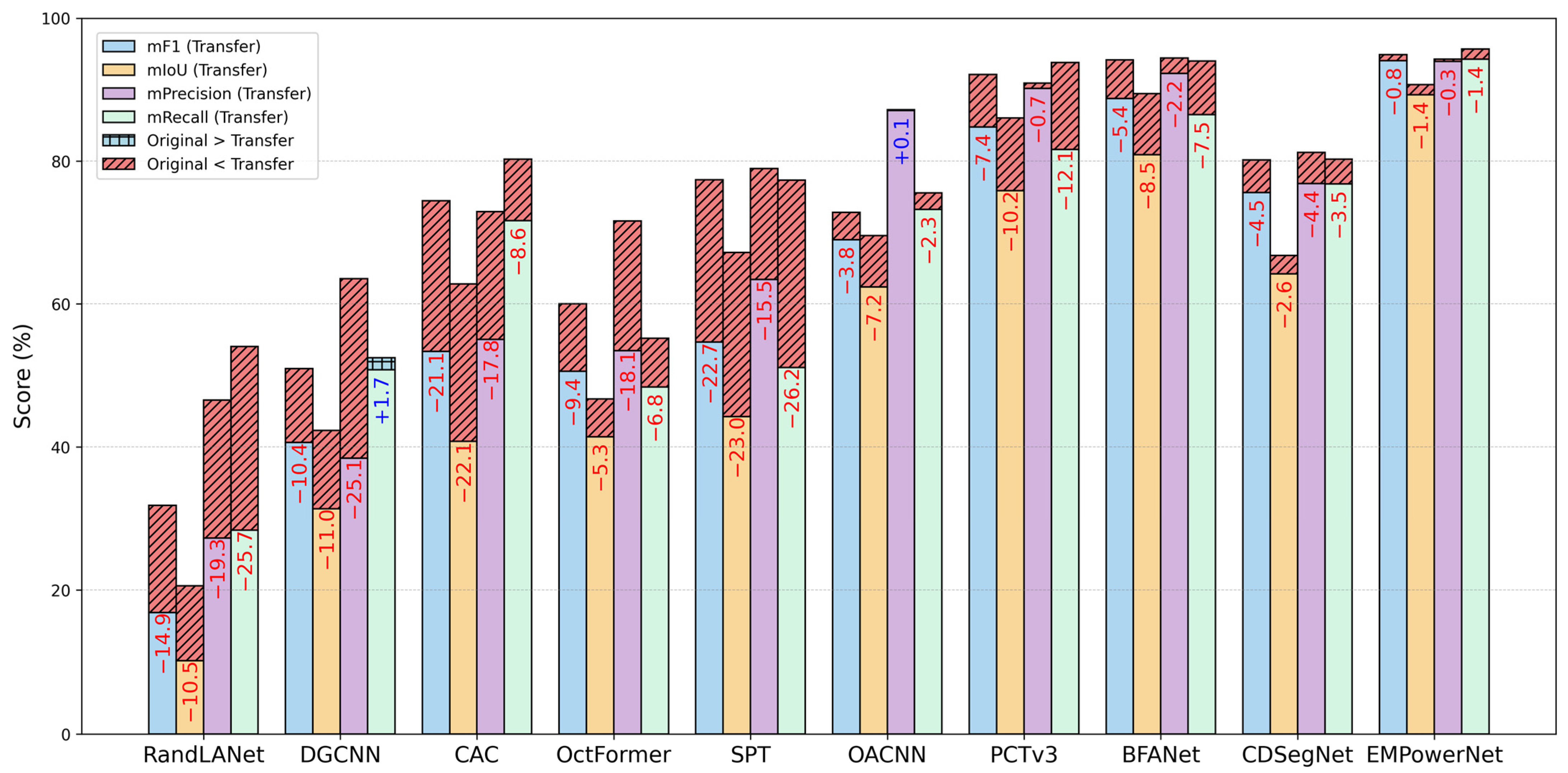

4.6. Transferability Evaluation on Different Powerline Regions

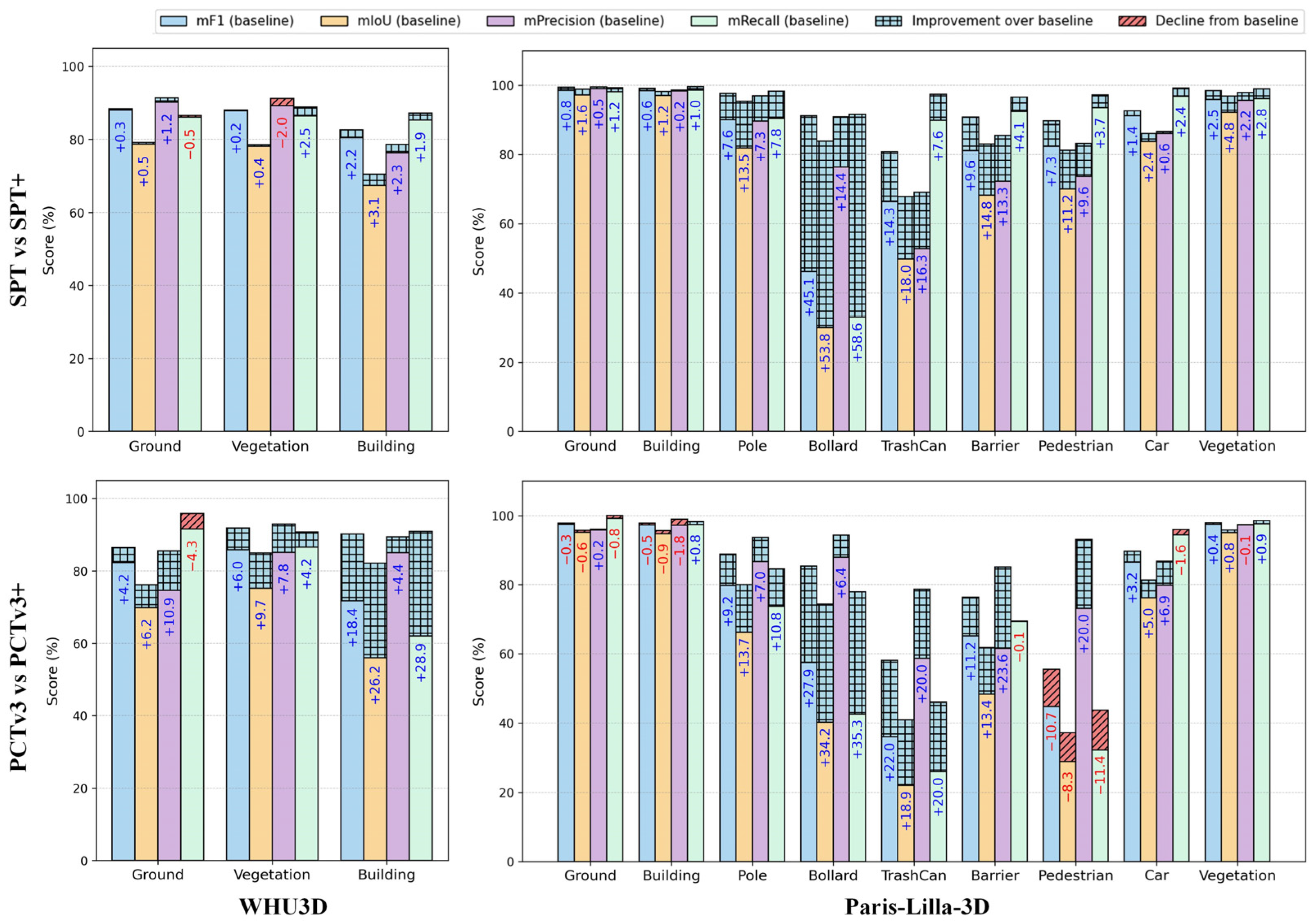

4.7. Validating the Generalization of Elevation Features in Urban Scenes

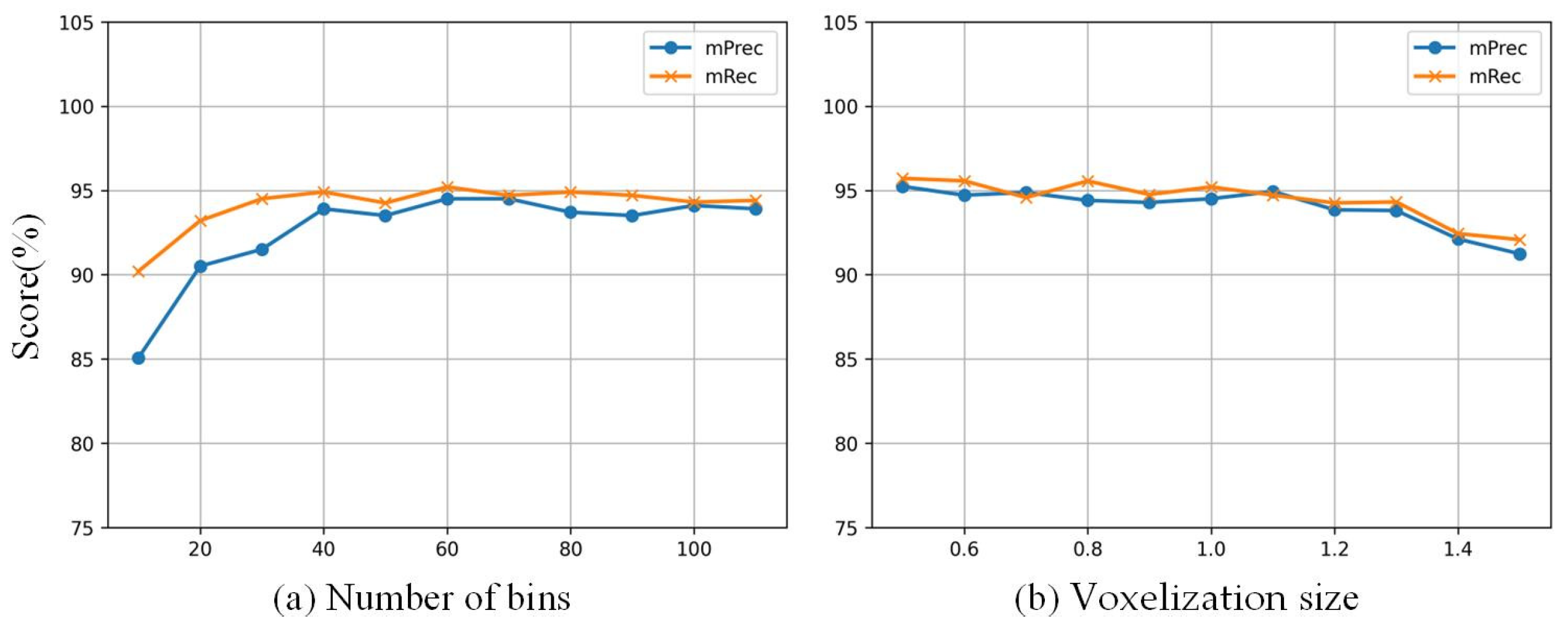

4.8. Parameter Sensitivity Analysis

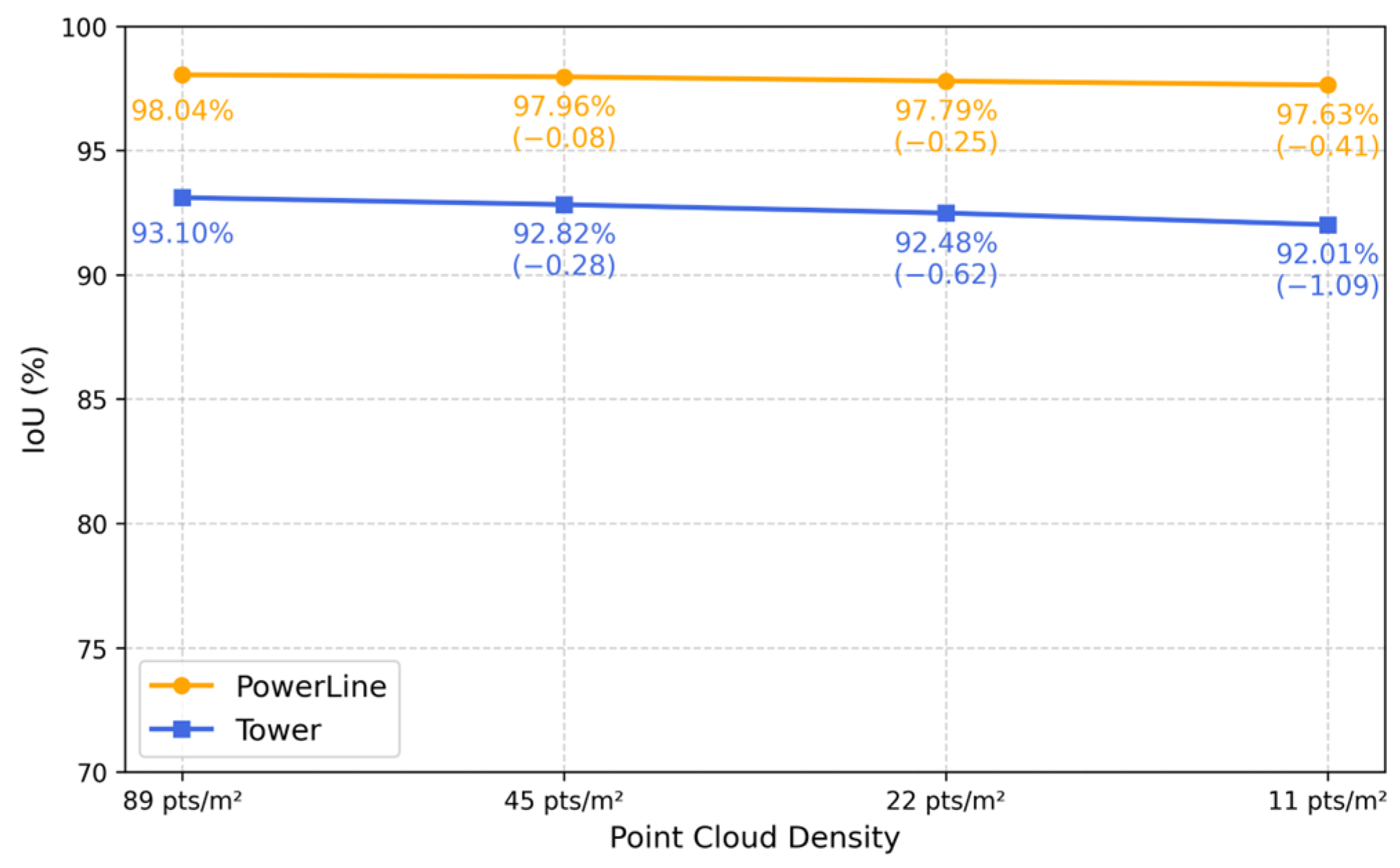

4.9. Evaluation of Model Robustness Under Varying Density

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Hyperparameter | RandLA | DGCNN | CAC | OctFormer | SPT | OACNN | PCTv3 | BFA | CDSeg | EMPower |
|---|---|---|---|---|---|---|---|---|---|---|
| Epochs | 100 | 250 | 900 | 600 | 2000 | 900 | 800 | 400 | 800 | 500 |
| Learning rate | 0.01 | 0.01 | 0.001 | 0.005 | 0.0015 | 0.001 | 0.006 | 0.001 | 0.002 | 0.001 |

| Class | Metric | RandLA | DGCNN | CAC | OctFormer | SPT | OACNN | PCTv3 | BFA | CDSeg | EMPower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ground | Prec | 35.92 | 81.47 | 69.74 | 83.18 | 70.94 | 73.07 | 76.34 | 84.45 | 68.84 | 85.66 |
| | Recall | 68.02 | 83.71 | 96.09 | 54.81 | 85.26 | 95.2 | 87.66 | 87.62 | 80.00 | 88.72 |
| | F1 | 47.01 | 82.57 | 80.82 | 66.08 | 77.44 | 82.68 | 81.61 | 86.01 | 74.00 | 87.16 |
| | IoU | 30.73 | 70.32 | 67.82 | 49.34 | 63.19 | 70.47 | 68.93 | 75.45 | 58.73 | 77.25 |
| Vegetation | Prec | 88.4 | 88.68 | 98.31 | 86.73 | 93.57 | 97.83 | 95.61 | 96.37 | 97.46 | 96.82 |
| | Recall | 20.77 | 90.2 | 87.92 | 87.02 | 84.74 | 90.37 | 92.52 | 95.53 | 89.77 | 95.87 |
| | F1 | 33.63 | 89.43 | 92.82 | 86.87 | 88.94 | 93.95 | 94.04 | 95.95 | 93.46 | 96.34 |
| | IoU | 20.22 | 86.67 | 86.61 | 76.79 | 80.08 | 88.6 | 88.75 | 92.21 | 87.72 | 92.94 |
| Power Line | Prec | 5.22 | 72.4 | 82.65 | 84.33 | 86.05 | 95.51 | 94.1 | 99.14 | 50.34 | 98.67 |
| | Recall | 81.55 | 34.7 | 98.25 | 60.84 | 86.5 | 92.75 | 91.06 | 98.26 | 94.06 | 99.35 |
| | F1 | 9.81 | 46.91 | 89.78 | 70.68 | 86.27 | 94.11 | 92.56 | 98.70 | 65.58 | 99.01 |
| | IoU | 5.16 | 69.58 | 81.45 | 54.66 | 75.86 | 88.87 | 86.14 | 97.44 | 48.79 | 98.04 |
| Tower | Prec | 25.01 | 12.95 | 91.22 | 42.41 | 71.78 | 94.17 | 89.71 | 96.86 | 95.10 | 97.67 |
| | Recall | 37.09 | 3.19 | 50.74 | 16.35 | 72.33 | 22.19 | 54.34 | 90.12 | 53.54 | 95.21 |
| | F1 | 29.87 | 5.12 | 65.21 | 23.60 | 72.05 | 35.92 | 67.69 | 93.37 | 68.50 | 96.43 |
| | IoU | 11.73 | 20.64 | 48.38 | 12.14 | 36.02 | 59.88 | 51.16 | 87.56 | 52.4 | 93.10 |
| Average | Prec | 38.64 | 63.87 | 85.48 | 74.16 | 80.58 | 90.14 | 88.94 | 94.20 | 77.93 | 94.70 |
| | Recall | 51.85 | 52.95 | 83.25 | 54.76 | 82.21 | 75.12 | 81.39 | 92.88 | 79.34 | 94.79 |
| | F1 | 30.08 | 56.01 | 82.16 | 61.80 | 81.17 | 76.67 | 83.97 | 93.51 | 75.38 | 94.73 |
| | IoU | 16.96 | 61.80 | 71.06 | 48.23 | 63.79 | 76.95 | 73.74 | 88.16 | 61.91 | 90.33 |
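The four metrics reported per class in the table above are all functions of the same true-positive (TP), false-positive (FP), and false-negative (FN) counts. The sketch below shows the standard definitions computed from a confusion matrix. It is a generic illustration, not the authors' evaluation script, and the function name and the row/column convention are assumptions.

```python
def per_class_metrics(confusion):
    """Compute precision, recall, F1, and IoU for every class.

    Assumed convention: confusion[i][j] is the number of points whose
    true class is i and whose predicted class is j.
    """
    n = len(confusion)
    results = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                       # missed points of class c
        fp = sum(confusion[r][c] for r in range(n)) - tp  # points wrongly labeled c
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (
            2 * precision * recall / (precision + recall)
            if precision + recall
            else 0.0
        )
        iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
        results.append(
            {"precision": precision, "recall": recall, "f1": f1, "iou": iou}
        )
    return results
```

When F1 and IoU are computed from the same counts, they satisfy IoU = F1 / (2 − F1), which gives a quick consistency check for individual rows. For instance, the EMPower Ground entries (F1 87.16, IoU 77.25) agree with this relation up to rounding.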

| Class | Metric | RandLA | DGCNN | CAC | OctFormer | SPT | OACNN | PCTv3 | BFA | CDSeg | EMPower |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ground | Prec | 24.03 | 68.67 | 61.57 | 73.16 | 65.76 | 65.13 | 75.16 | 82.65 | 70.51 | 81.19 |
| | Recall | 76.63 | 79.15 | 97.91 | 27.07 | 82.22 | 97.09 | 91.52 | 89.01 | 80.83 | 91.80 |
| | F1 | 36.59 | 73.54 | 75.60 | 39.51 | 73.08 | 77.96 | 82.54 | 85.71 | 75.32 | 86.17 |
| | IoU | 22.39 | 58.15 | 60.77 | 24.62 | 57.57 | 63.89 | 70.27 | 75.00 | 60.41 | 75.70 |
| Vegetation | Prec | 91.58 | 94.91 | 99.49 | 88.94 | 96.17 | 98.98 | 98.64 | 98.30 | 97.90 | 98.75 |
| | Recall | 46.05 | 95.02 | 89.49 | 97.69 | 91.96 | 92.27 | 95.50 | 97.24 | 94.63 | 96.89 |
| | F1 | 61.28 | 94.96 | 94.23 | 93.11 | 94.02 | 95.50 | 97.04 | 97.77 | 96.24 | 97.81 |
| | IoU | 44.18 | 90.40 | 89.09 | 87.11 | 88.71 | 91.39 | 94.26 | 95.63 | 92.75 | 95.72 |
| Power Line | Prec | 10.11 | 78.87 | 74.90 | 68.26 | 95.36 | 90.58 | 99.11 | 99.38 | 82.67 | 99.84 |
| | Recall | 87.26 | 17.62 | 98.90 | 61.10 | 96.64 | 99.61 | 99.12 | 99.85 | 92.34 | 99.82 |
| | F1 | 18.12 | 28.80 | 85.24 | 64.48 | 95.99 | 94.88 | 99.12 | 99.61 | 87.24 | 99.83 |
| | IoU | 9.96 | 16.82 | 74.28 | 47.58 | 92.30 | 90.26 | 92.25 | 99.23 | 77.36 | 99.66 |
| Tower | Prec | 60.55 | 11.71 | 55.62 | 56.11 | 58.52 | 93.69 | 90.63 | 97.35 | 73.78 | 97.25 |
| | Recall | 6.20 | 4.52 | 34.66 | 34.92 | 38.57 | 13.11 | 88.93 | 89.93 | 53.20 | 94.19 |
| | F1 | 11.25 | 6.52 | 42.70 | 42.05 | 46.50 | 23.00 | 89.77 | 93.50 | 61.82 | 95.70 |
| | IoU | 5.96 | 3.94 | 27.15 | 27.43 | 30.29 | 32.78 | 81.44 | 87.79 | 36.51 | 91.75 |
| Average | Prec | 46.57 | 63.54 | 72.90 | 71.62 | 78.95 | 87.01 | 90.89 | 94.42 | 81.22 | 94.26 |
| | Recall | 54.04 | 49.07 | 80.24 | 55.20 | 77.35 | 75.52 | 93.77 | 94.01 | 80.25 | 95.68 |
| | F1 | 31.81 | 50.96 | 74.44 | 59.79 | 77.40 | 72.84 | 92.12 | 94.15 | 80.16 | 94.88 |
| | IoU | 20.62 | 42.32 | 62.82 | 46.69 | 67.22 | 69.58 | 84.56 | 89.41 | 66.76 | 90.71 |

| Configuration | Metric | Ground | Vegetation | Power Line | Tower | Average |
|---|---|---|---|---|---|---|
| Only with MR | Prec | 76.34 | 95.61 | 95.77 | 89.71 | 89.35 |
| | Recall | 87.66 | 92.52 | 95.36 | 54.34 | 82.47 |
| | F1 | 81.61 | 94.04 | 95.57 | 67.69 | 84.72 |
| | IoU | 68.93 | 88.75 | 91.51 | 51.16 | 75.08 |
| Only with ED | Prec | 80.87 | 93.64 | 94.10 | 92.40 | 90.25 |
| | Recall | 78.68 | 94.79 | 91.06 | 69.21 | 83.43 |
| | F1 | 79.76 | 94.21 | 92.56 | 79.14 | 86.41 |
| | IoU | 66.33 | 89.05 | 86.14 | 65.48 | 76.75 |

| Dataset | Class | Z | Z+ | Histogram |
|---|---|---|---|---|
| Yunnan | Power Line | 96.98 | 97.88 | 98.04 |
| | Tower | 88.54 | 90.03 | 93.10 |
| Guangdong | Power Line | 97.82 | 98.93 | 99.66 |
| | Tower | 85.33 | 86.26 | 91.75 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Li, S.; Wang, G.; Jiang, W.; Yan, Y.; Sun, J. Robust and Transferable Elevation-Aware Multi-Resolution Network for Semantic Segmentation of LiDAR Point Clouds in Powerline Corridors. Remote Sens. 2025, 17, 3318. https://doi.org/10.3390/rs17193318
