Line-Structured Light-Based Three-Dimensional Reconstruction Measurement System with an Improved Scanning-Direction Calibration Method

Abstract

1. Introduction

- We develop a comprehensive line-structured light-based 3D reconstruction measurement system. In the reconstruction measurement experiment on a 5 mm standard gauge block, the system achieves a mean error of 0.02 mm with a standard deviation of 0.00352 mm;

- We propose an improved scanning-direction calibration method that enhances both measurement accuracy and operational convenience.

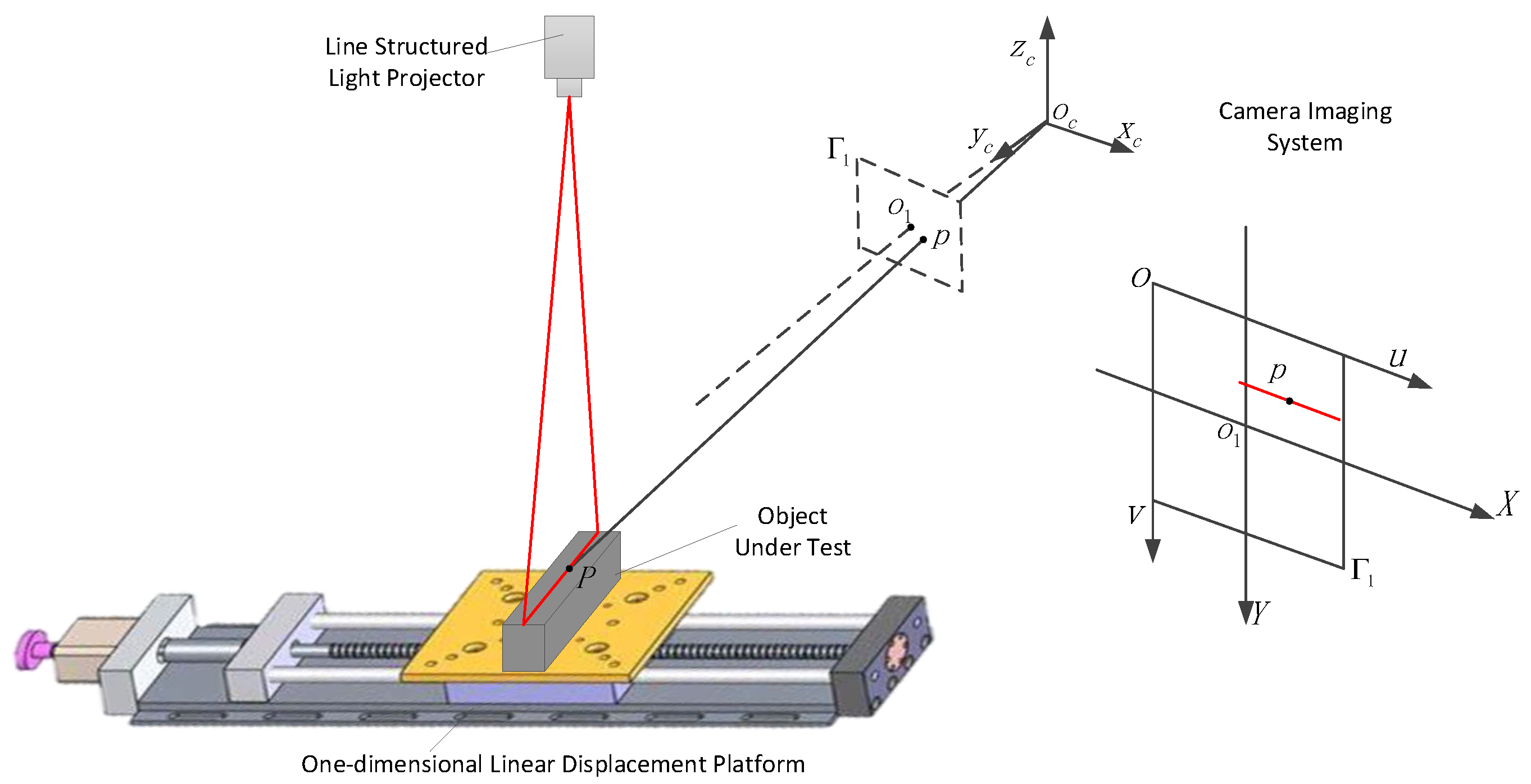

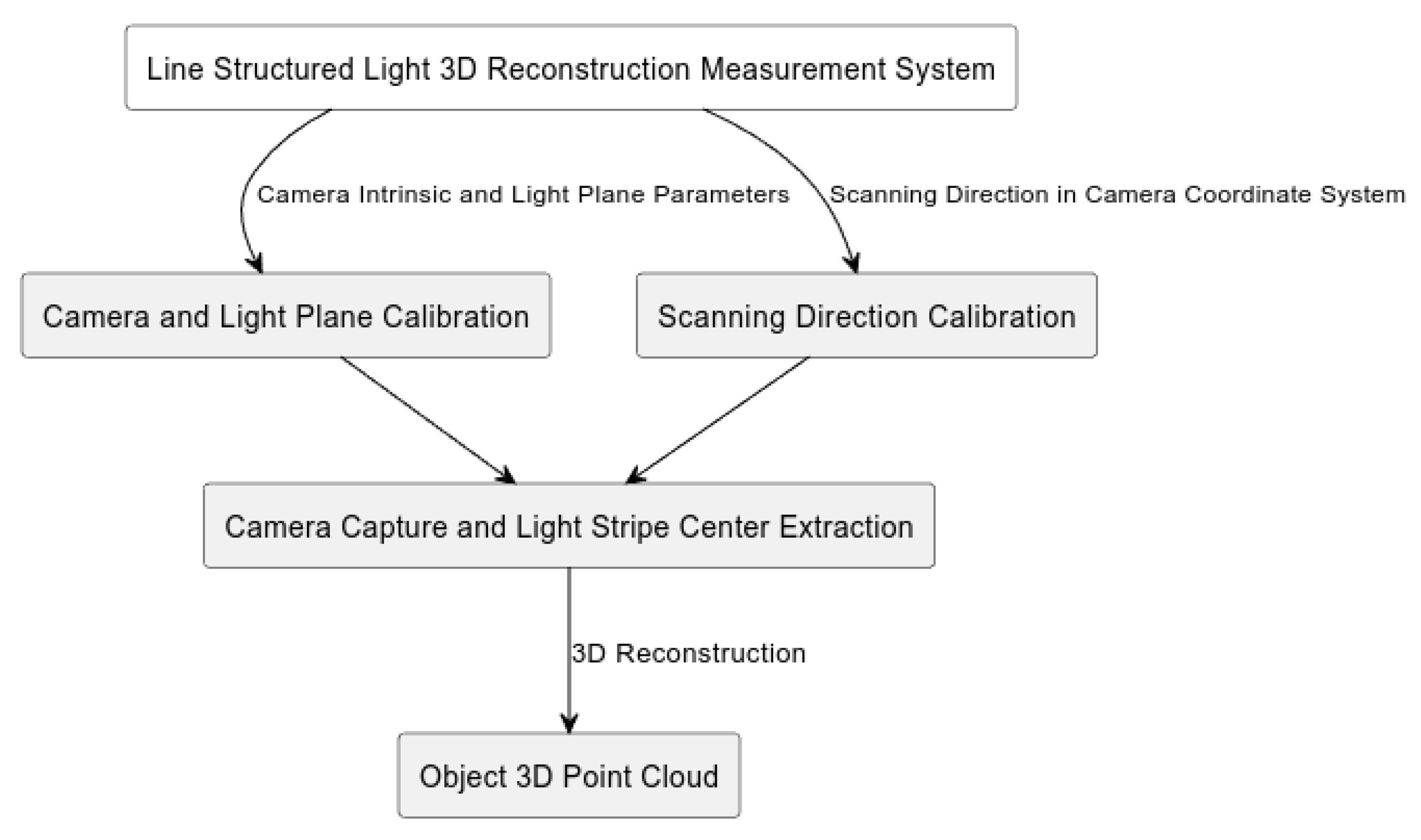

2. Key Steps and Related Work for Constructing a Line-Structured Light-Based 3D Reconstruction Measurement System

2.1. Calibration of Line-Structured Light-Based 3D Reconstruction Measurement System

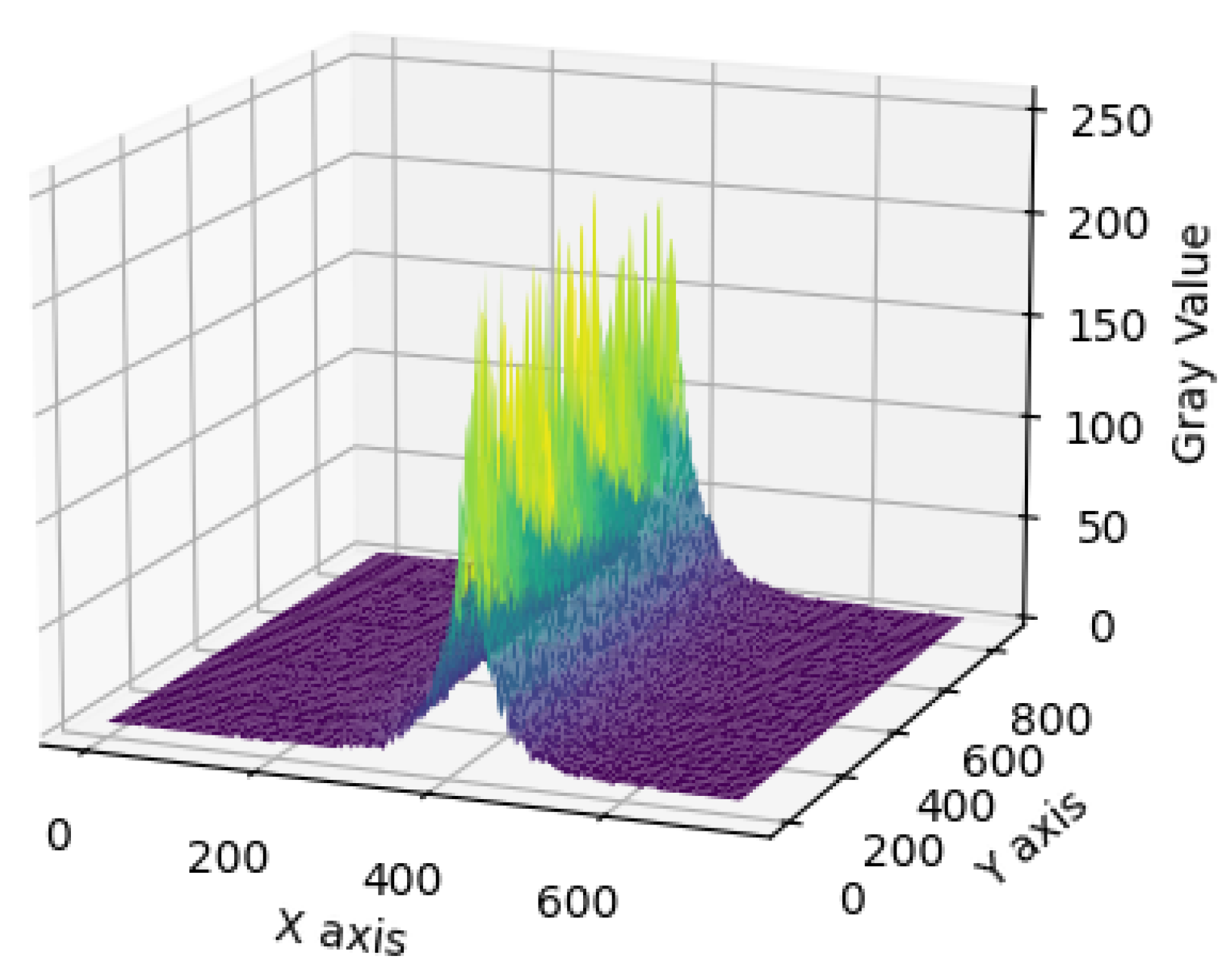

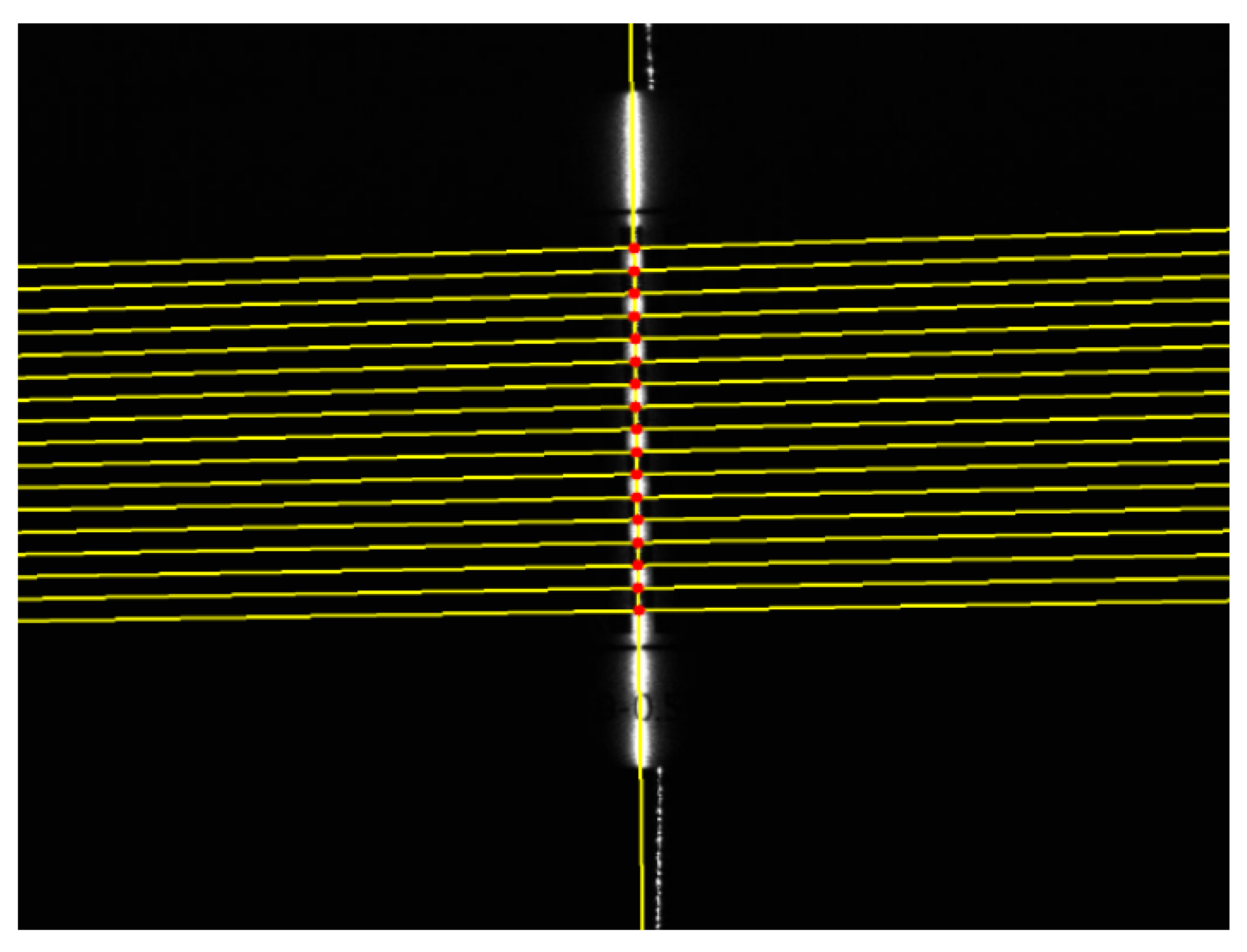

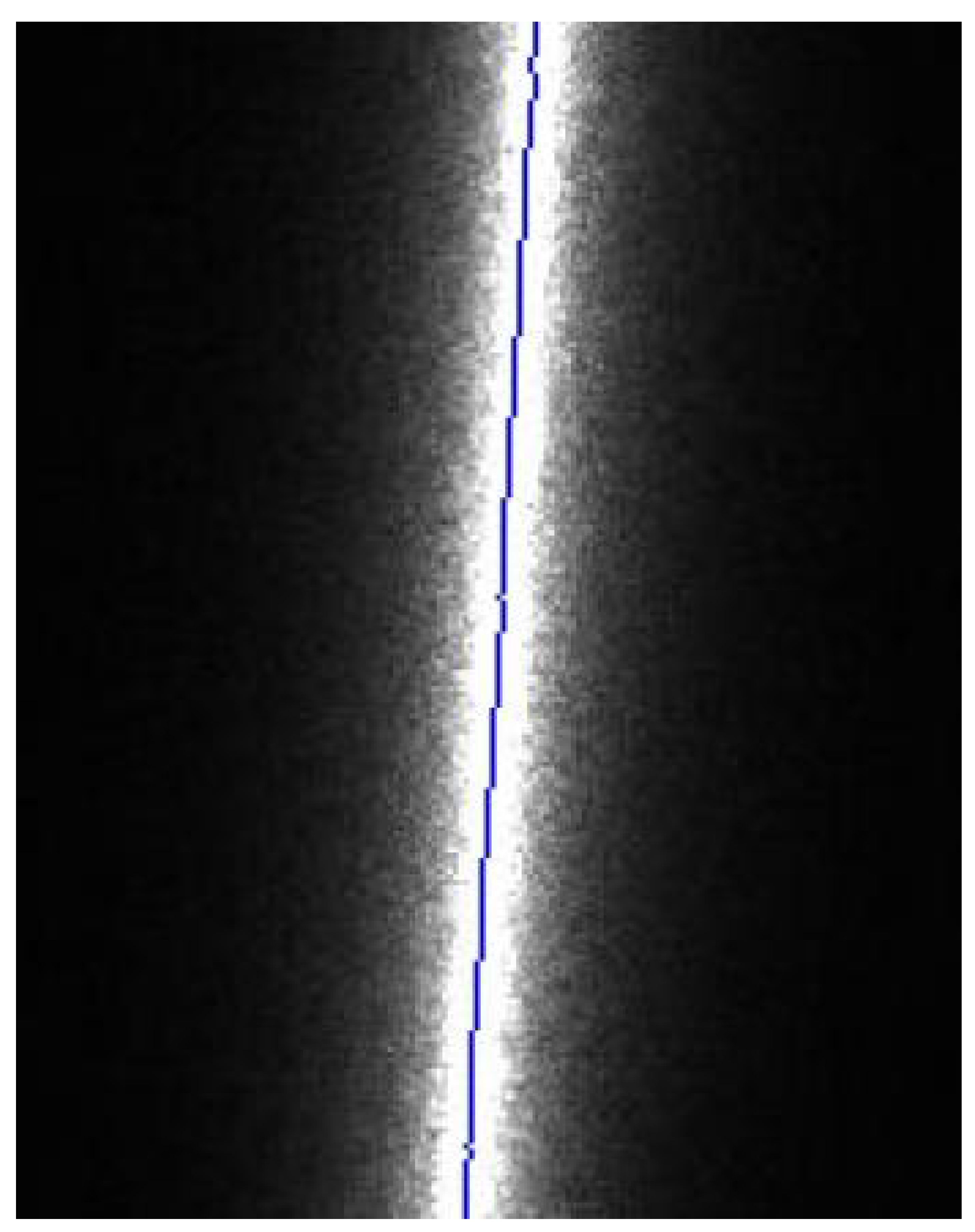

2.2. Extraction of Laser Stripe Center

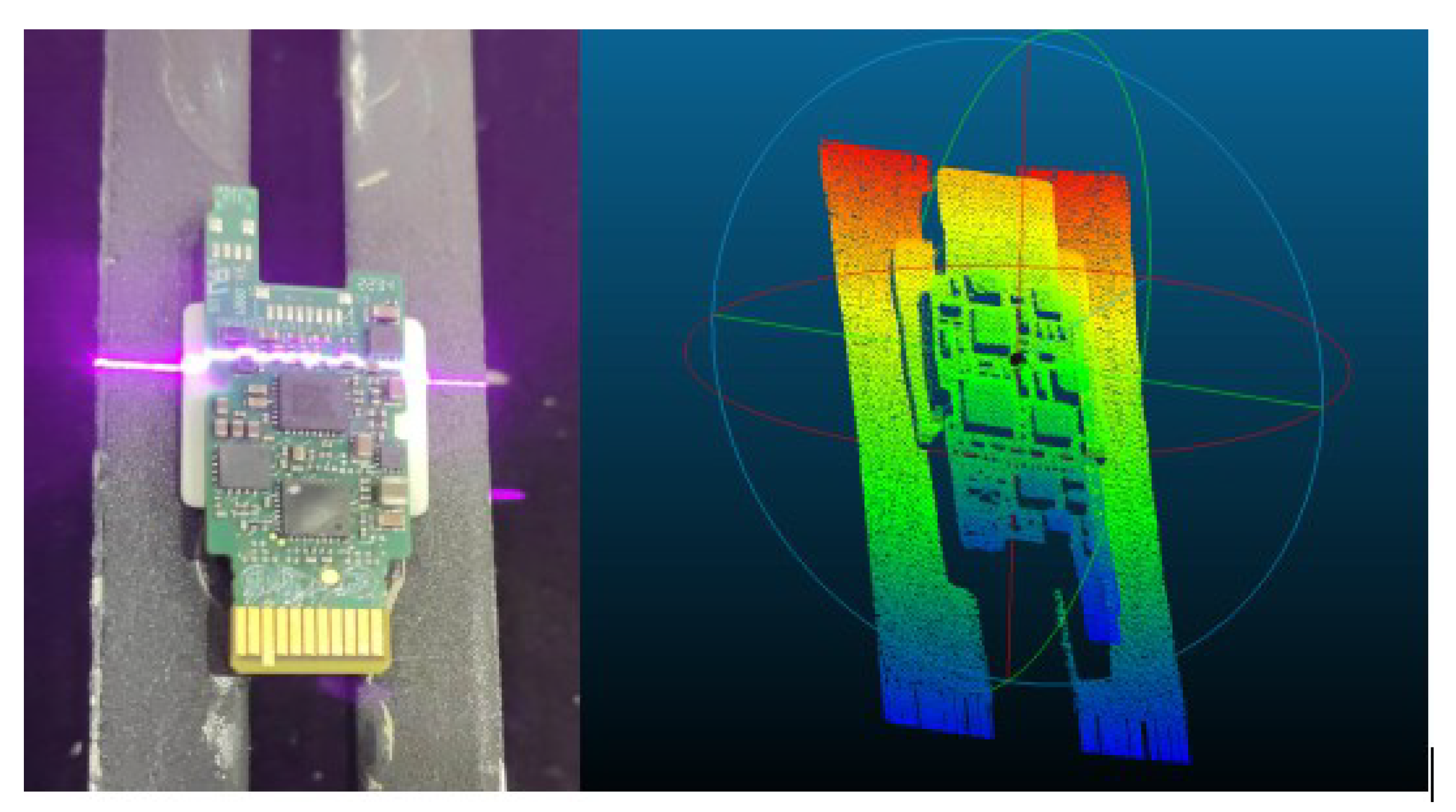

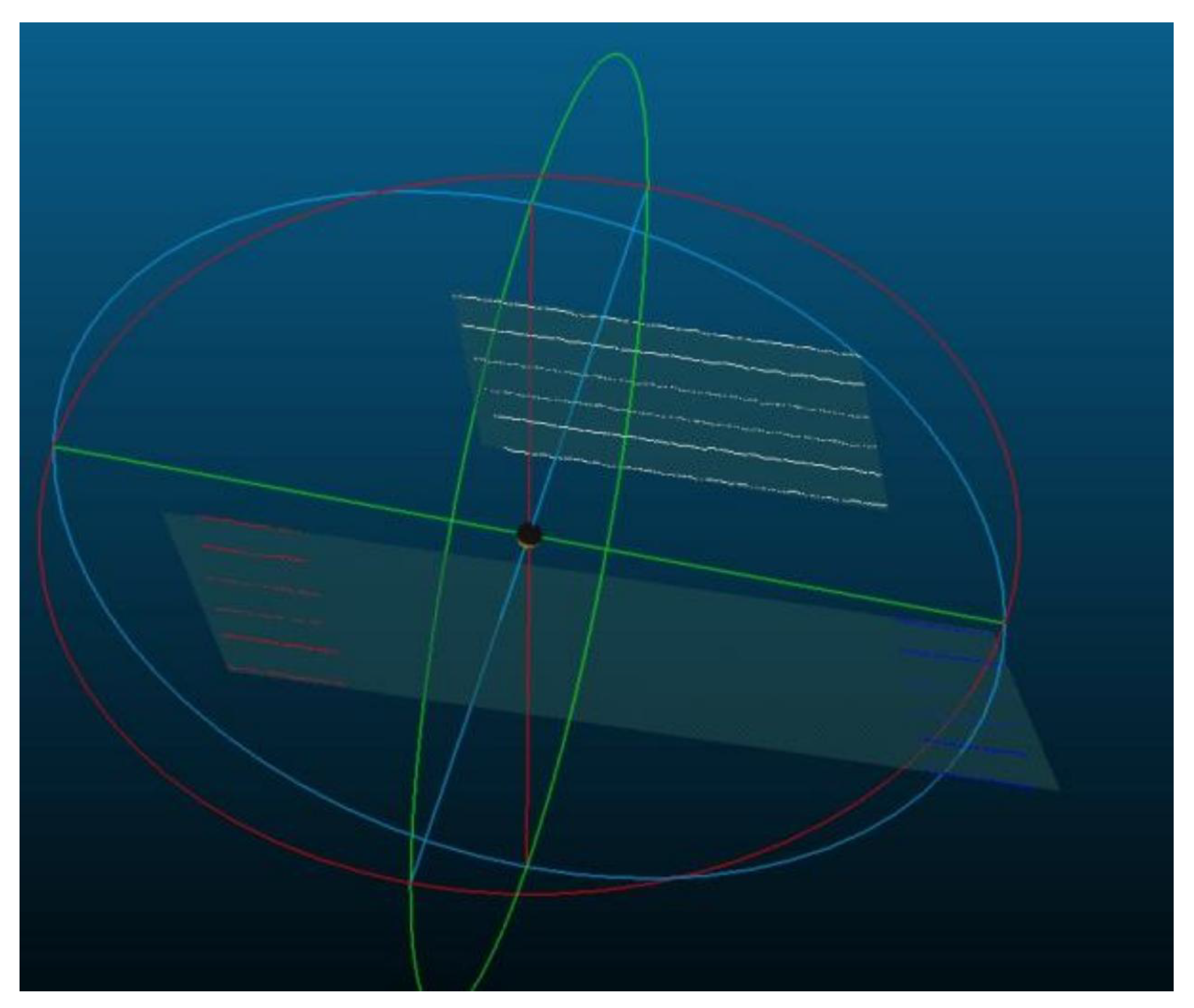

3. Construction of the Line-Structured Light-Based 3D Reconstruction Measurement System

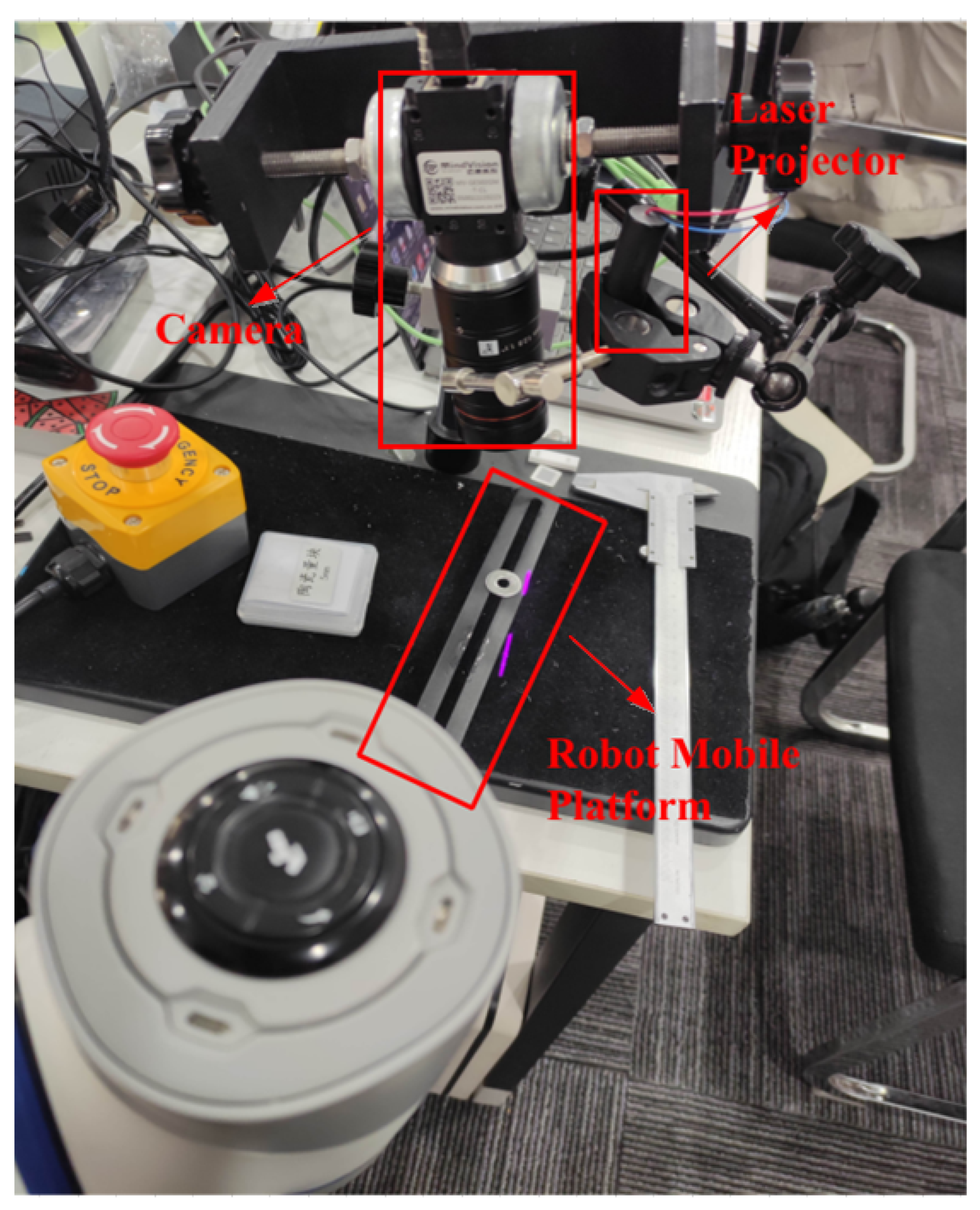

3.1. System Hardware Composition

- the camera was a MindVision MV-GE502GM with a 35 mm focal-length lens, a resolution of 1280 × 1024 pixels, and a pixel size of 4.8 μm × 4.8 μm (the camera and its accessories were all sourced from Shenzhen MindVision Technology Co., Ltd., Shenzhen, China);

- the laser was a custom-made line-structured light laser;

- the robotic mobile platform was essentially a one-dimensional linear displacement stage with an error tolerance of ±0.02 mm;

- the gauge block was Grade 0, with a nominal size of 5 mm.
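As a rough check on the optics listed above, the following Python sketch derives the sensor extent and the angular field of view from the stated resolution, pixel size, and focal length under an ideal pinhole model (lens distortion ignored). The function names are illustrative, and the 300 mm working distance is a hypothetical value, since no working distance is given here.

```python
import math

# Values stated in Section 3.1
PIXELS_X, PIXELS_Y = 1280, 1024   # image resolution (pixels)
PIXEL_SIZE_MM = 4.8e-3            # 4.8 um square pixels, in mm
FOCAL_MM = 35.0                   # lens focal length (mm)


def sensor_extent_mm(n_pixels: int, pixel_mm: float) -> float:
    """Physical length of the active sensor along one axis."""
    return n_pixels * pixel_mm


def fov_deg(extent_mm: float, focal_mm: float) -> float:
    """Full angular field of view of an ideal pinhole camera (lens distortion ignored)."""
    return math.degrees(2.0 * math.atan(extent_mm / (2.0 * focal_mm)))


def pixel_footprint_mm(distance_mm: float, pixel_mm: float, focal_mm: float) -> float:
    """Object-space size of one pixel at a given distance (pinhole magnification f / Z)."""
    return pixel_mm * distance_mm / focal_mm


width = sensor_extent_mm(PIXELS_X, PIXEL_SIZE_MM)    # about 6.144 mm
height = sensor_extent_mm(PIXELS_Y, PIXEL_SIZE_MM)   # about 4.915 mm
print(f"sensor: {width:.3f} mm x {height:.3f} mm")
print(f"FOV: {fov_deg(width, FOCAL_MM):.2f} deg x {fov_deg(height, FOCAL_MM):.2f} deg")

HYPOTHETICAL_DISTANCE_MM = 300.0  # assumption: not stated in the paper
print(f"pixel footprint: {pixel_footprint_mm(HYPOTHETICAL_DISTANCE_MM, PIXEL_SIZE_MM, FOCAL_MM):.4f} mm")
```

Under these assumptions the sensor spans about 6.14 mm × 4.92 mm, which gives a field of view of roughly 10.0° × 8.0°.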

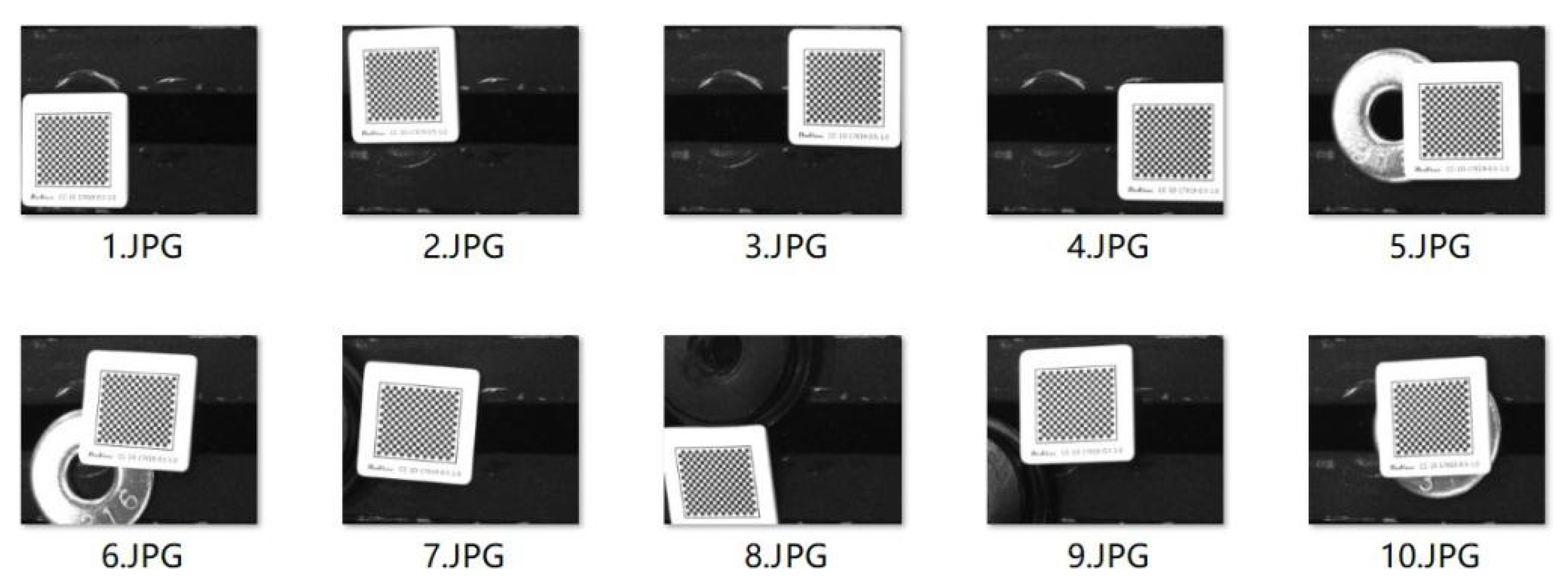

3.2. Calibration of the CCD Camera

3.3. Calibration of the Light Plane

3.4. Extraction of the Light Stripe Center and Calibration of the Scanning Direction

3.5. System Software Composition and Scanned 3D Point Cloud

4. An Improved Scanning-Direction Calibration Method

4.1. Problems with Traditional Methods

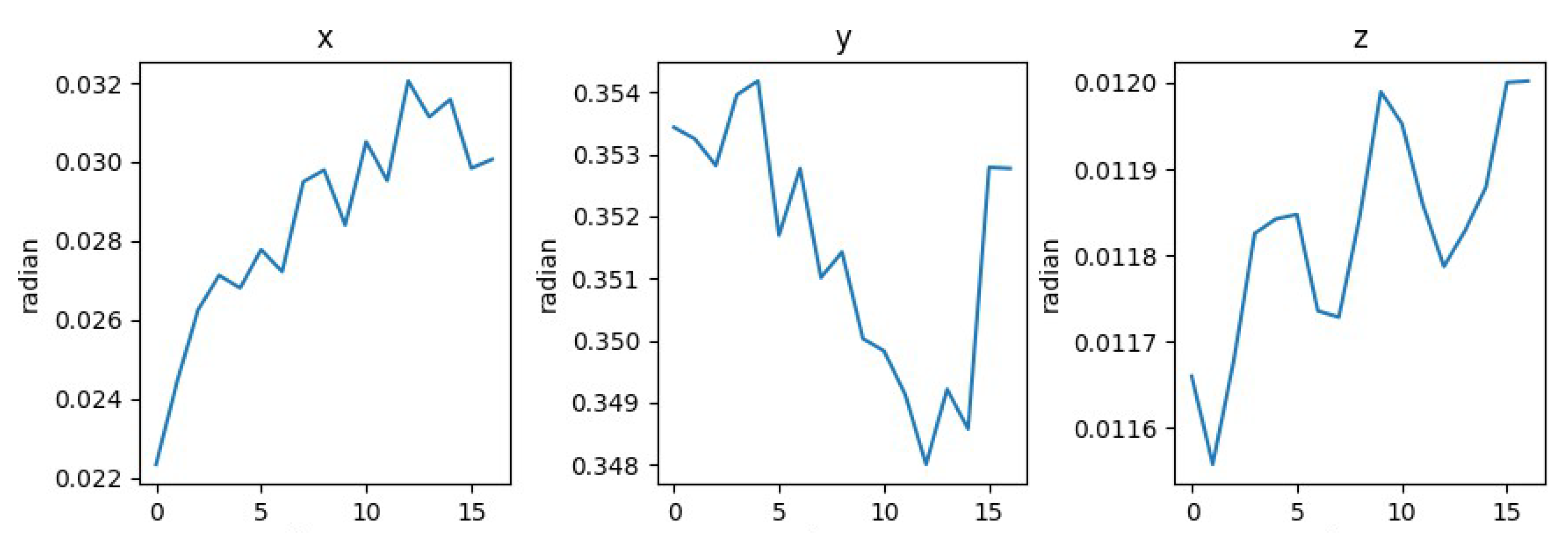

4.2. Our Proposed Method

- 1.

- To mitigate noise arising from perspective distortion, variations in image clarity, and other factors, this study introduces an iterative control point refinement technique utilizing planar transformation [31] to enhance the spatial accuracy of control points.

- 2.

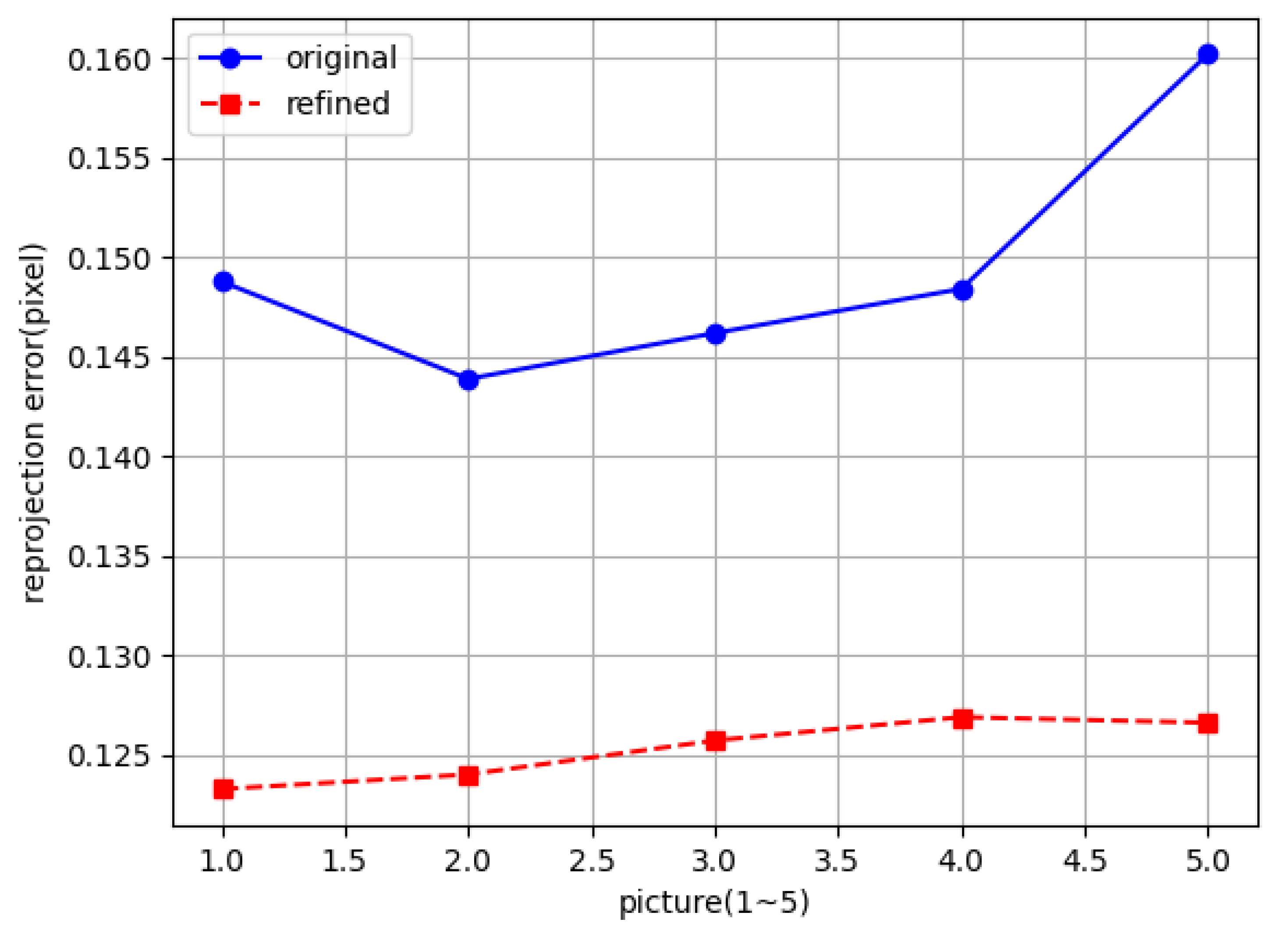

- Next, we combine all images and use the reprojection error as the evaluation criterion to select a more accurate rotation matrix.

- 3.

- Using the rotation matrix estimated in step 2, the Levenberg–Marquardt (LM) algorithm is applied to estimate the translation vector.
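Step 2 can be illustrated with a minimal NumPy sketch, assuming the control points lie on the board plane Z = 0 and the corners have already been detected and undistorted. The text does not specify the homography solver or the pose decomposition, so this sketch substitutes the standard normalized direct linear transform (DLT) and the closed-form decomposition H ∝ A[r₁ r₂ t] from Zhang's method [27]; all function names are hypothetical.

```python
import numpy as np

def _hartley(pts: np.ndarray) -> np.ndarray:
    """Similarity transform: centroid to origin, mean distance sqrt(2) (improves DLT conditioning)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - c, axis=1))
    return np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])

def _to_h(pts: np.ndarray) -> np.ndarray:
    """Append a column of ones (homogeneous coordinates)."""
    return np.column_stack([pts, np.ones(len(pts))])

def estimate_homography(world_xy: np.ndarray, image_uv: np.ndarray) -> np.ndarray:
    """Normalized DLT: H maps board coordinates (X, Y) to pixel coordinates (u, v)."""
    Tw, Ti = _hartley(world_xy), _hartley(image_uv)
    w = _to_h(world_xy) @ Tw.T
    p = _to_h(image_uv) @ Ti.T
    rows = []
    for (X, Y, _), (u, v, _) in zip(w, p):
        rows.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y, -u])
        rows.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y, -v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = np.linalg.inv(Ti) @ vt[-1].reshape(3, 3) @ Tw   # undo the normalization
    return H / H[2, 2]

def reprojection_error(H, world_xy, image_uv) -> float:
    """Mean pixel distance between H-projected control points and detected corners."""
    proj = _to_h(world_xy) @ H.T
    proj = proj[:, :2] / proj[:, 2:3]
    return float(np.mean(np.linalg.norm(proj - image_uv, axis=1)))

def pose_from_homography(H, A):
    """Zhang-style decomposition H ~ A [r1 r2 t], then projection onto the nearest rotation."""
    M = np.linalg.inv(A) @ H
    lam = 1.0 / np.linalg.norm(M[:, 0])
    r1, r2, t = lam * M[:, 0], lam * M[:, 1], lam * M[:, 2]
    if t[2] < 0:                       # the board must lie in front of the camera
        r1, r2, t = -r1, -r2, -t
    U, _, Vt = np.linalg.svd(np.column_stack([r1, r2, np.cross(r1, r2)]))
    R = U @ Vt
    if np.linalg.det(R) < 0:           # guard against a reflection
        R = U @ np.diag([1.0, 1.0, -1.0]) @ Vt
    return R, t

def select_best_pose(world_xy, corner_lists, A):
    """Fit H for every image and decompose the one with the lowest reprojection error."""
    best_err, best_H = np.inf, None
    for uv in corner_lists:
        H = estimate_homography(world_xy, uv)
        err = reprojection_error(H, world_xy, uv)
        if err < best_err:
            best_err, best_H = err, H
    return pose_from_homography(best_H, A)
```

Because the platform only translates, all images share one rotation, which is why a single best-fitting view is enough to fix R in this sketch.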

| Algorithm 1: Our Proposed Method |
|---|
| **Require:** list of images captured along the motion direction; camera intrinsic parameters A |
| **Ensure:** scanning direction vector V; list of rotation–translation matrices |
| Generate the world homogeneous coordinates of the checkerboard control points; undistort each image |
| **for each** image i in the image list **do** |
| (a) compute the corner coordinates of image i; |
| (b) optimize the corner coordinates by iterative refinement with planar transformation and save them; |
| (c) estimate the homography H from the world control points and the refined corners, and compute its reprojection error; |
| **if** the reprojection error is the smallest so far **then** keep H as the best homography **end if** |
| **end for** |
| Extract the rotation matrix R and the translation vector T from the best homography using A |
| Build the reprojection error function f, whose input is a translation vector and whose output is the error e between the reprojected points and the detected control corners |
| **for each** image i in the image list **do** |
| (a) use T as the initial estimate; |
| (b) apply the Levenberg–Marquardt algorithm to minimize f and obtain the translation vector of image i; save it, together with R, to the rotation–translation matrix list; |
| **end for** |
| Transform the control points into camera coordinates using the rotation–translation matrix list |
| Group the transformed points so that points at the same checkerboard position in all images form one direction set, and merge all sets into a direction list |
| **for each** direction i in the direction list **do** |
| (a) centralize the points of direction i; |
| (b) obtain the direction vector of direction i by SVD and save it; |
| **end for** |
| **return** V and the rotation–translation matrix list |
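Step 3 and the second loop of Algorithm 1 keep R fixed and fit only the translation of each image. The sketch below is a small hand-written Levenberg–Marquardt loop in NumPy (forward-difference Jacobian, Marquardt diagonal damping), assuming undistorted corners and a board at Z = 0. The names `project`, `refine_translation_lm`, and `refine_all` are hypothetical, and a library least-squares solver could be used instead.

```python
import numpy as np

def project(A, R, t, world_xyz):
    """Pinhole projection of N x 3 board points with pose (R, t) and intrinsics A (no distortion)."""
    cam = world_xyz @ R.T + t
    uvw = cam @ A.T
    return uvw[:, :2] / uvw[:, 2:3]

def refine_translation_lm(t0, A, R, world_xyz, corners_uv, max_iter=100, lam=1e-3, eps=1e-6):
    """Levenberg-Marquardt over t = (tx, ty, tz) only; R stays fixed (shared by all images)."""
    def residuals(t):
        return (project(A, R, t, world_xyz) - corners_uv).ravel()

    t = np.asarray(t0, dtype=float).copy()
    r = residuals(t)
    cost = r @ r
    for _ in range(max_iter):
        # forward-difference Jacobian of the 2N residuals with respect to (tx, ty, tz)
        J = np.column_stack([(residuals(t + eps * e) - r) / eps for e in np.eye(3)])
        JtJ, g = J.T @ J, J.T @ r
        while True:
            step = np.linalg.solve(JtJ + lam * np.diag(np.diag(JtJ)), -g)
            r_new = residuals(t + step)
            cost_new = r_new @ r_new
            if cost_new < cost:        # accept: behave more like Gauss-Newton
                t, r, cost = t + step, r_new, cost_new
                lam = max(lam * 0.1, 1e-12)
                break
            lam *= 10.0                # reject: behave more like gradient descent
            if lam > 1e12:             # no further decrease possible
                return t
        if np.linalg.norm(step) < 1e-12 * (1.0 + np.linalg.norm(t)):
            break
    return t

def refine_all(t_init, A, R, world_xyz, corner_lists):
    """Every image starts from the same initial T and keeps the shared R."""
    return [(R, refine_translation_lm(t_init, A, R, world_xyz, uv)) for uv in corner_lists]
```

Fixing R reduces each per-image problem to three unknowns, so the damped normal equations stay a 3 × 3 solve regardless of how many corners are on the board.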

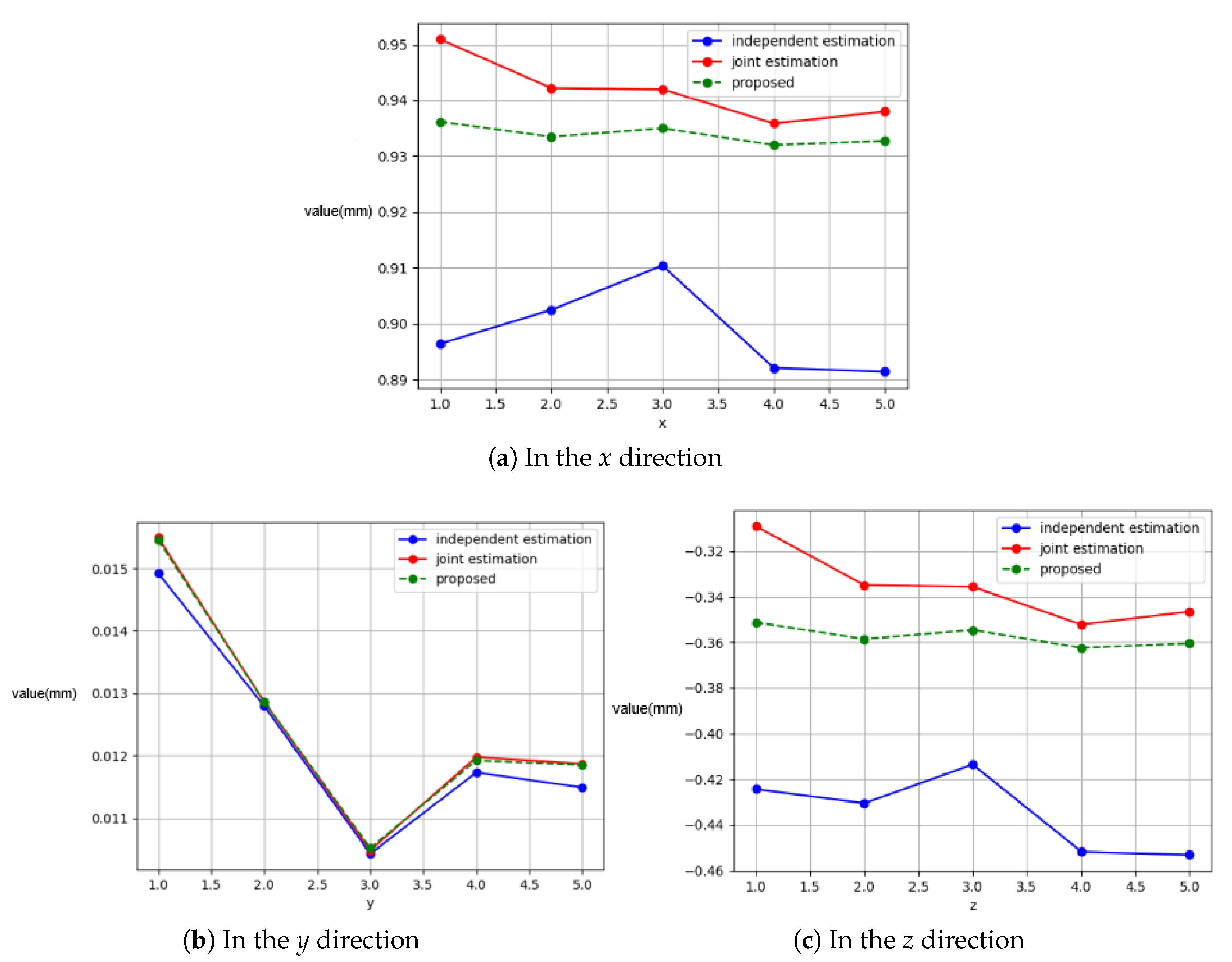
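The final loop of Algorithm 1 fits a 3D line to each group of corresponding corners. A NumPy sketch of that centralize-then-SVD step follows; the function names are hypothetical, and averaging the per-corner directions into a single V is an assumption, because the text does not say how the per-direction vectors are combined.

```python
import numpy as np

def line_direction(points: np.ndarray) -> np.ndarray:
    """Least-squares 3D line direction through K x 3 points: centralize, then take the
    right singular vector belonging to the largest singular value."""
    centered = points - points.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    d = vt[0]
    # SVD leaves the sign arbitrary; orient it along the acquisition order
    if np.dot(d, points[-1] - points[0]) < 0:
        d = -d
    return d

def scanning_direction(R, translations, world_xyz):
    """R: rotation shared by all images; translations: K x 3 per-image t_i;
    world_xyz: N x 3 board control points. Returns per-corner directions (N x 3)
    and their normalized mean (the averaging step is an assumption)."""
    board_cam = world_xyz @ R.T                                # N x 3, rotated once
    cam = translations[:, None, :] + board_cam[None, :, :]    # K x N x 3 camera coordinates
    # group by checkerboard position: corner n observed in all K images
    dirs = np.array([line_direction(cam[:, n, :]) for n in range(cam.shape[1])])
    v = dirs.mean(axis=0)
    return dirs, v / np.linalg.norm(v)
```

The first right singular vector of the centered points is the direction of maximum variance, which is the least-squares line direction; its sign is arbitrary, so the sketch orients it along the acquisition order before averaging.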

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zeng, X.J.; Huo, J.W.Q. Calibrate method for scanning direction of 3D measurement system based on linear-structure light. Chin. J. Lasers 2012, 39, 108002.

2. Liu, C.W.; Duan, F.L.J. Calibration Method for Scanning Direction of Line-Structured-Light 3D Sensor. Chin. J. Lasers 2023, 50, 504001.

3. Sha, O. Research of 3D reconstruction and its key technologies based on binocular and linear structured light. Univ. Chin. Acad. Sci. 2023.

4. Lyu, Y.; Liu, Z.; Wang, J.; Jiang, Y.; Li, Y.; Li, X.; Kong, L.; Li, J.; Xu, M. A High-Precision Binocular 3D Reconstruction System Based on Depth-of-Field Extension and Feature Point Guidance. Measurement 2025, 248, 116895.

5. Cao, C.; Ren, X.; Fu, Y. MVSFormer: Multi-view stereo by learning robust image features and temperature-based depth. arXiv 2022, arXiv:2208.02541.

6. Zhao, L.; Liu, Y.; Men, C.; Men, Y. Double propagation stereo matching for urban 3-D reconstruction from satellite imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 3058144.

7. Saovana, N.; Yabuki, N.; Fukuda, T. Automated point cloud classification using an image-based instance segmentation for structure from motion. Autom. Constr. 2021, 129, 103804.

8. Zhao, S.H. Research on Real-Time 3D Reconstruction System Based on Binocular Stereo Vision. Master’s Thesis, Xidian University, Xi’an, China, 2022.

9. Wang, F.; Galliani, S.; Vogel, C.; Speciale, P.; Pollefeys, M. PatchmatchNet: Learned multi-view patchmatch stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

10. Wang, Y.; Wang, X. On-Line three-dimensional coordinate measurement of dynamic binocular stereo vision based on rotating camera in large FOV. Opt. Express 2021, 29, 4986–5005.

11. Chen, Y.; Jiang, W.; Luo, Z.; Yang, L. A Novel 3D Reconstruction Method with a Binocular-Line Laser System. Measurement 2024, 227, 114238.

12. Ji, Y.; Zhong, J.; Li, Y.; Fu, J.; Liu, J. PCAPN: An Enhanced Feature Extraction Framework for Point Cloud. IEEE Access 2023, 11, 20786–20794.

13. Lu, Q.; Pan, Y.; Hu, L.; He, J. A method for reconstructing background from RGB-D SLAM in indoor dynamic environments. Sensors 2023, 23, 3529.

14. Liu, J.; Lu, C.; Wen, J.; Xiao, Y.; Yan, F.; Liu, Y. Three-dimensional measurement method based on binary coded fringes. Acta Opt. Sin. 2023, 43, 112004.

15. Nguyen, A.H.; Sun, B.; Li, C.Q.; Wang, Z. Different structured-light patterns in single-shot 2D-to-3D image conversion using deep learning. Appl. Opt. 2022, 61, 10105–10115.

16. Wang, F.; Wang, C.; Guan, Q. Single-shot fringe projection profilometry based on deep learning and computer graphics. Opt. Express 2021, 29, 8024–8040.

17. Huang, W.; Peng, X.; Li, L.; Li, X.Y. Review of camera calibration methods and their progress. Laser Optoelectron. Prog. 2023, 60, 1600001.

18. Xu, X.; Fei, Z.; Yang, J.; Tan, Z.; Luo, M. Line structured light calibration method and centerline extraction: A review. Results Phys. 2020, 19, 103637.

19. Pan, X.; Wu, J.; Li, Z.; Yang, J.; Zhang, C.; Deng, C.; Yi, Z.; Gao, Q.; Yu, M.; Zhang, Z.; et al. Self-Calibration for Linear Structured Light 3D Measurement System Based on Quantum Genetic Algorithm and Feature Matching. Optik 2021, 225, 165749.

20. Dai, G.; Zhang, Q.; Xu, X.; Zhao, B. A Calibration Method of Line-Structured Light System for Measuring Outer Circle Dimension during Machining. Results Eng. 2024, 23, 102525.

21. Li, T.; Liu, C.; Duan, F.; Fu, X.; Niu, G.; Liang, C.; Chen, A. A Light Plane Calibration Method of Line-Structured Light Sensors Based on Unified Extrinsic Parameters Estimation. Opt. Lasers Eng. 2025, 188, 108925.

22. Li, Y.Y.; Zhang, Z.Y.; Yuan, L. Survey on linear structured light stripe center extraction. Laser Optoelectron. Prog. 2013, 50, 100002.

23. Hu, K.; Zhou, F.; Zhang, G. Fast extrication method for sub-pixel center of structured light stripe. Chin. J. Sci. Instrum. 2006, 27, 1326–1329.

24. Zeng, C.; Wang, S.J.; Lu, H.; Kong, C. Center extraction algorithm of line structured light stripe. J. Image Graph. 2019, 24, 1772–1780.

25. Nan, F.; Li, D.; Gao, Q.; Xiao, Y. Implementation of adaptive light stripe center extraction of improved steger algorithm. Laser J. 2018, 39, 85–88.

26. Chen, X.Y.; Sun, X.; Sun, Y. Improved u2-Net for high-precision extraction of laser stripe centerline. J. Optoelectron. Laser 2025, 36, 61–68.

27. Zhang, Z. Flexible camera calibration by viewing a plane from unknown orientations. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 1, pp. 666–673.

28. Chen, J.; Wu, X.; Wang, M.Y.; Li, X. 3D shape modeling using a self-developed hand-held 3D laser scanner and an efficient HT-ICP point cloud registration algorithm. Opt. Laser Technol. 2013, 45, 414–423.

29. He, Y.; Yang, J.; Hou, X.; Pang, S.; Chen, J. ICP registration with DCA descriptor for 3D point clouds. Opt. Express 2021, 29, 20423–20439.

30. Zhu, Q.; Li, S.; Xu, Z. Study of solving nonlinear least squares under large residual based on Levenberg-Marquardt algorithm. China Meas. Test 2016, 42, 12–16.

31. Datta, A.; Kim, J.S.; Kanade, T. Accurate camera calibration using iterative refinement of control points. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 1201–1208.

| Method | 1 mm | 2 mm | 3 mm | 4 mm | 5 mm |
|---|---|---|---|---|---|
| Independent estimation method (mm) | 4.958 | 4.970 | 4.975 | 4.993 | 4.968 |
| Joint estimation method (mm) | 4.969 | 4.975 | 4.983 | 4.982 | 4.980 |
| Ours (mm) | 4.980 | 4.979 | 4.974 | 4.984 | 4.983 |
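The summary figures in the Introduction can be checked against the table by pooling the five values in each row. The Python sketch below does this with the standard `statistics` module; the `summarize` helper is hypothetical, and it reports both the population and the sample standard deviation because the text does not say which one was used.

```python
from statistics import mean, pstdev, stdev

NOMINAL_MM = 5.0  # nominal size of the Grade 0 gauge block

# Measured gauge-block sizes (mm), one list per table row
results = {
    "Independent estimation method": [4.958, 4.970, 4.975, 4.993, 4.968],
    "Joint estimation method": [4.969, 4.975, 4.983, 4.982, 4.980],
    "Ours": [4.980, 4.979, 4.974, 4.984, 4.983],
}


def summarize(values, nominal=NOMINAL_MM):
    """Mean, mean error against the nominal size, and both standard-deviation conventions."""
    m = mean(values)
    return {
        "mean": m,
        "mean_error": nominal - m,
        "pstdev": pstdev(values),   # divides by n
        "stdev": stdev(values),     # divides by n - 1
    }


for name, vals in results.items():
    s = summarize(vals)
    print(f"{name}: mean={s['mean']:.4f} mm, error={s['mean_error']:.4f} mm, "
          f"population SD={s['pstdev']:.5f} mm, sample SD={s['stdev']:.5f} mm")
```

For the "Ours" row, the mean is 4.980 mm (a mean error of 0.020 mm) and the population standard deviation is about 0.00352 mm, matching the Introduction; the sample standard deviation would be about 0.00394 mm.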

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, J.; Ping, S.; Liang, X.; Ma, X.; Pang, S.; He, Y. Line-Structured Light-Based Three-Dimensional Reconstruction Measurement System with an Improved Scanning-Direction Calibration Method. Remote Sens. 2025, 17, 2236. https://doi.org/10.3390/rs17132236


Chicago/Turabian Style: Chen, Jia, Shantao Ping, Xiaowei Liang, Xulong Ma, Shiyan Pang, and Ying He. 2025. "Line-Structured Light-Based Three-Dimensional Reconstruction Measurement System with an Improved Scanning-Direction Calibration Method." Remote Sensing 17, no. 13: 2236. https://doi.org/10.3390/rs17132236
