Abstract

Domain shifts pose significant challenges for cross-domain semantic segmentation in high-resolution remote sensing imagery. Inspired by the cognitive mechanisms of the human brain, we propose a Brain-Inspired Style Transfer and Semantic Segmentation Collaborative Adversarial Framework (SAF), which mimics neural processes such as hierarchical perception, memory retrieval, and multimodal integration to enhance cross-domain feature alignment and segmentation performance. To jointly optimize the style transfer and semantic segmentation networks, we introduce three key components: a Semantic-Aware Transformer Module (SATM) that dynamically captures and preserves essential semantic features during style transfer; a Semantic-Driven Multi-Feature Memory Module (SMM) that stores and retrieves historical style and semantic information to improve domain adaptability; and a Domain-Invariant Style-Semantic Center Space (DSCS) that aligns style and semantic features within a shared representation space, mitigating discrepancies between the style and semantic domains. Extensive experiments across multiple tasks demonstrate that SAF effectively reduces distortions and semantic inconsistencies by achieving deep style–semantic alignment. Compared with leading approaches, SAF achieves a superior balance between style adaptation and semantic preservation, significantly improving model generalization in remote sensing applications.

1. Introduction

With the continuous advancements in Earth observation and artificial intelligence technologies, the semantic segmentation of high-resolution remote sensing imagery has been widely applied in fields such as environmental protection, urban planning, disaster prediction, and damage assessment [1,2]. Semantic segmentation aims to assign a semantic label to each pixel in an image, typically under the assumption that the training samples (source domain) and test data (target domain) follow the same data distribution [3]. However, in real-world scenarios, domain shift commonly occurs because of geographic complexity and diverse imaging conditions (e.g., illumination changes, seasonal variations, imaging altitudes, and sensor differences) [4]. This phenomenon significantly hinders model generalization in target domains with limited or no annotations [5], making domain adaptation a critical research focus.

To address domain shift, Unsupervised Domain Adaptation (UDA) techniques have been developed to align the feature distributions of the source and target domains through adversarial training [6,7]. Although adversarial UDA methods effectively reduce distributional discrepancies, they often struggle with the substantial style variations induced by environmental and sensor factors in remote sensing images [7,8]. In particular, adversarial training alone is insufficient to fully bridge cross-domain gaps when style and semantic structures change simultaneously [9,10,11,12].

Style transfer techniques provide an effective approach to mitigating style discrepancies between the source and target domains [13,14]. By transforming source domain images to match the target domain’s style, style transfer significantly reduces inter-domain differences, offering more consistent inputs for subsequent domain adaptation tasks [14,15]. However, existing approaches typically separate style transfer and semantic segmentation into independent processes, neglecting the interaction between style adaptation and semantic structure preservation. As illustrated in Figure 1, without semantic constraints, style transfer may disrupt the alignment of critical objects such as buildings, roads, and vegetation, leading to significant semantic distortions. Recent methods have attempted to integrate Generative Adversarial Networks (GANs) into style transfer [16,17,18], but maintaining fine-grained semantic consistency during visual style adaptation remains a major challenge.

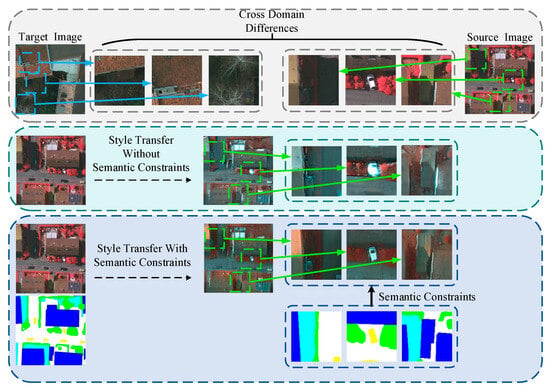

Figure 1.

Impact of semantic constraints on style transfer consistency across domains. The top row highlights cross-domain differences between the target and source images, showing substantial variations in appearance and spectral characteristics despite shared semantic content. The middle row presents style transfer without semantic constraints, where semantic structures are disrupted, leading to mismatches in object locations and shapes. The bottom row demonstrates that introducing semantic constraints preserves object consistency and spatial alignment during style adaptation, maintaining semantic integrity across domains.

Moreover, traditional artificial neural networks mainly rely on feedforward architectures and error backpropagation, lacking the dynamic feedback, memory retrieval, and multimodal integration capabilities observed in biological brains [19,20,21]. Recent advances in brain-inspired artificial intelligence have revealed that mechanisms such as hierarchical feature processing in the visual cortex, memory retrieval in the hippocampus, and cross-modal integration in the association cortex can significantly enhance generalization and adaptability [22,23,24].

To address the challenges of complex semantics and significant style variations in the cross-domain semantic segmentation of high-resolution remote sensing imagery, we propose a novel brain-inspired framework. The main contributions of this study are summarized as follows:

- We propose a brain-inspired synergistic adversarial framework (SAF) that integrates style transfer and semantic segmentation into a unified learning process. This framework enhances domain generalization by jointly optimizing style alignment and semantic consistency.

- We propose a Semantic-Aware Style Transfer Network (STGAN) equipped with a lightweight Semantic-Aware Transformer Module (SATM), which mimics the brain’s top–down and bottom–up information flow to guide style transformation while preserving semantic structure.

- We introduce a Semantic-Driven Multi-Feature Memory Module (SMM) and a Domain-Invariant Style-Semantic Center Space (DSCS), which collaboratively support the storage, retrieval, and alignment of semantic and style features across domains, enabling effective style adaptation and cross-domain feature synergy.

The remainder of this article is organized as follows: Section 2 reviews related work on cross-domain semantic segmentation and style transfer. Section 3 details our proposed Brain-Inspired Style Transfer and Semantic Segmentation Framework, focusing on its core components. Section 4 describes the experimental setup and results. Section 5 examines the interactions among the modules within our framework. Finally, Section 6 concludes the paper, summarizing our findings and suggesting directions for future research.

2. Related Work

This section presents a concise review of relevant research, focusing on cross-domain semantic segmentation methods and recent advancements in style transfer for cross-domain tasks.

2.1. Unsupervised Domain Adaptation for Cross-Domain Semantic Segmentation

Cross-domain semantic segmentation is crucial for extending the applicability of remote sensing models to diverse environments. However, domain shifts caused by differences in imaging conditions, sensor characteristics, and geographic distributions often lead to significant performance degradation when models are directly transferred across domains [5]. In recent years, UDA has mitigated this issue by using adversarial training to align feature distributions and reduce inter-domain differences [7].

Early UDA methods primarily focused on feature-level and output-level adversarial alignment. For instance, adversarial training frameworks have been employed to match the feature distributions of the source and target domains, effectively reducing inter-domain discrepancies [7,8].

Recently, vision-language models and self-supervised learning strategies have been introduced to further improve UDA performance. Lim et al. leveraged vision-language models to relabel new classes in the target domain, addressing challenges posed by inconsistent taxonomies [25]. Subsequent research has introduced consistency-based regularization to enhance domain adaptation robustness. Zhou et al. proposed uncertainty-aware consistency regularization with dynamically weighted loss to promote reliable knowledge transfer across domains [26]. Li et al. incorporated weakly supervised constraints into the training objective to mitigate sensor and geographical domain shifts in remote sensing segmentation tasks [27]. Xu et al. proposed a self-embedding GAN framework to stabilize adversarial training and reduce the dependency on labeled data [28]. He et al. developed a cross-attention decomposition transformer equipped with gradient reversal blocks to explicitly reduce feature space domain gaps [29]. Luo proposed a teacher–student framework using spatial-aware data augmentation to mitigate pseudo-label noise and class imbalance in source-free adaptation [30]. Ren designed a mutual refinement and information distillation framework to enhance domain interaction and simplify testing with curriculum learning [31]. Wang introduced semantic augmentation across classes and domains to enhance generalization to unseen target domains [32].

While significant progress has been made, most existing UDA approaches mainly focus on aligning feature distributions at the semantic output or feature level. However, they often overlook the critical influence of style-level discrepancies, especially in very-high-resolution (VHR) remote sensing imagery, where large variations in illumination, seasonal appearance, and spectral responses exist between domains. These style inconsistencies lead to semantic distortions, even when feature-level alignment is performed, ultimately limiting segmentation accuracy and cross-domain robustness. Therefore, achieving a simultaneous alignment of both style and semantic structures remains a critical open problem, which our proposed framework aims to address.

2.2. Style Transfer for Cross-Domain Adaptation

Style transfer serves as a preprocessing step in cross-domain semantic segmentation by aligning source images with the target domain’s style. It mitigates visual style discrepancies between domains while preserving semantic content, thereby providing more consistent input data for domain adaptation.

Zhao proposed a self-training-guided domain disentanglement module to extract style features from both domains, employing a cross-domain self-training mechanism to stabilize adversarial training and improve segmentation performance [33]. Ni used integer programming to transfer target domain styles to source domain images with semantic constraints for category-level alignment [34]. Li introduced a semantic-preserving GAN by incorporating representation-invariant and semantic-preserving constraints, enabling unbiased source-to-target transformations for better domain-adaptive segmentation [35]. Psychogyios combined neural style transfer with segmentation models, applying localized style transfer with a boundary smoothing loss to optimize stylized region transitions [36]. Ye developed an attention-based multi-style transfer approach for instance segmentation, integrating convolutional block attention with conditional instance normalization [37]. Zhu addressed negative transfer caused by style differences with a shape-robust domain-adaptive segmentation algorithm, combining adjustable style transfer and feature differentiation [38]. Marco proposed a continual learning framework integrating style transfer to enhance cross-domain knowledge and mitigate forgetting in autonomous driving scenarios through a robust distillation framework [39]. To bridge the domain gap between synthetic and real-world data, Li proposed a pixel and feature contrastive alignment method, combining pixel-level style transfer with feature-level semantic alignment to improve segmentation [40]. Wang introduced an unrestricted adversarial attack method, embedding adversarial perturbations into style transfer to generate natural, highly transferable adversarial images with consistent style and semantics [41].

However, style transformation may result in semantic information loss or distortion, particularly in VHR remote-sensing data with complex semantic structures. Effectively integrating style transfer with cross-domain semantic segmentation remains a critical challenge.

3. Methodology

3.1. Framework Overview

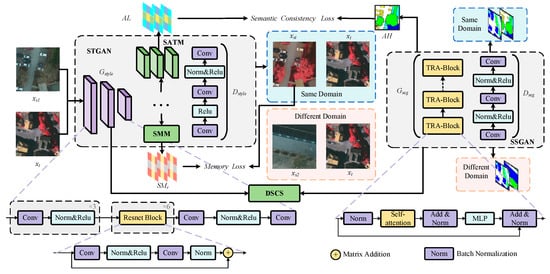

Our SAF is illustrated in Figure 2. The Semantic Segmentation Adversarial Generative Network (SSGAN) consists of two primary components: the semantic segmentation network , which is constructed using multiple TRA-Blocks, and the semantic discriminator , implemented with lightweight convolutional layers. The Style Transfer Generative Adversarial Network (STGAN) comprises the style transfer generation network , which integrates multiple ResNet blocks (RBs) with the SATM, and the style discriminator , ensuring that the generated images accurately reflect the target domain style.

Figure 2.

Overview of the proposed SAF. The source domain image and target domain image are first processed by the STGAN to generate the style-transferred image . The SATM preserves semantic consistency during style adaptation by extracting key semantic cues, while the SMM retrieves historical style and semantic information to guide the adaptation process. Subsequently, the triplet is input into the SSGAN for semantic segmentation, where high-level features from provide feedback to the SATM for generating semantic attention maps. The style discriminator evaluates whether the input image originates from the target domain. The semantic discriminator evaluates the domain similarity of . Intermediate features from and are aligned through DSCS, promoting dynamic cross-domain feature synergy and collaborative optimization.

The SATM dynamically captures key semantic attention during the style transfer process, while the SSGAN outputs high-order semantic feature maps . Inspired by the brain’s top–down and bottom–up information flow regulation, which facilitates prediction and error correction between lower and higher cortical regions, the SATM ensures semantic feature consistency in style transformation tasks. The SMM facilitates the conversion of short-term memory into long-term memory through an explicit memory network and leverages semantic guidance to dynamically store and retrieve historical style information. DSCS, inspired by the integrative function of the prefrontal cortex in processing heterogeneous visual features, projects style and semantic features from different domains into a shared domain-invariant feature space. By explicitly modeling the interrelationship between style and semantic features, the DSCS reinforces semantic consistency at the style level, enabling dynamic cross-domain feature alignment and collaborative optimization.

This work focuses on a labeled source domain dataset and an unlabeled target domain dataset , which share the same semantic categories but differ in imaging conditions, locations, and styles. Images sampled from these datasets are denoted as and , respectively. First, are fed into the STGAN to generate the style-transferred image . This process facilitates the transfer of semantic annotations from the source domain to the target domain, ensuring that not only aligns with the style distribution of the target domain image but also preserves the semantic consistency of the source domain image . Subsequently, are fed into the SSGAN. generates semantic predictions for , resulting in predicted labels . To ensure domain consistency, evaluates whether these predicted labels originate from the same domain.

To better coordinate style transfer and semantic segmentation in cross-domain scenarios, our framework design draws inspiration from several cognitive processes observed in the human brain. Specifically, SAF models the bidirectional information flow and biological feedback mechanisms between lower-order and higher-order cortical regions. In biological systems, lower-order sensory areas primarily process the basic features of sensory input, while higher-order areas, such as the parietal and prefrontal cortices, generate predictions based on contextual information and provide top-down feedback to dynamically regulate sensory processing. Motivated by this mechanism, the SATM extracts key but ambiguous semantic cues at a lower level and guides the SSGAN to progressively refine the semantic structure, thereby establishing a dynamic feedback loop that maintains semantic consistency throughout the style adaptation process.

Moreover, the memory retrieval and imaginative reconstruction capabilities of the hippocampus are reflected in the design of the SMM. In the brain, imagination typically involves integrating multiple memory features to reconstruct coherent experiences. Analogously, the SMM stores and retrieves historical semantic and style-related features, using semantic guidance to selectively retrieve the most contextually relevant style features, thereby enhancing the generation of semantically aligned style representations. Furthermore, inspired by the association cortex’s role in integrating multimodal sensory information, the DSCS is designed to project style and semantic features from different domains into a unified latent space. This integration facilitates the alignment and fusion of heterogeneous features, enabling effective cross-domain feature synergy and improving both style adaptation quality and semantic segmentation performance.

3.2. Style Transfer Generative Adversarial Network with Semantically Aware Modules

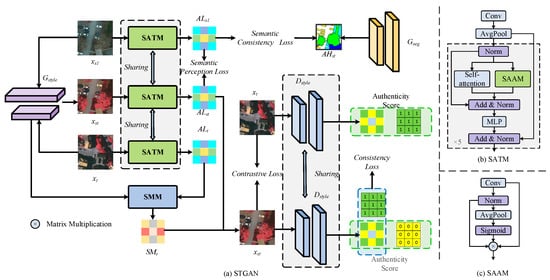

The proposed style transfer network framework STGAN, with semantic perception capabilities, is illustrated in Figure 3. First, is input into the RBs to generate the initial style-transferred image . To further enhance the semantic consistency of the generated images, are fed into the Semantic-Aware Transformer Module (SATM) for additional processing.

Figure 3.

The illustration of the style transfer process. (a) The structure of STGAN. The source domain image , target domain image , and the generated image are processed through a parameter-shared Semantic-Aware Transformer Module (SATM) to generate a semantic attention map, which is utilized to further refine . The style discriminator evaluates the credibility of and , determining whether the input images belong to the target domain. (b) The structure of SATM. (c) The structure of SAAM.

As shown in Figure 3b, SATM employs several lightweight attention mechanisms to dynamically focus on key semantic features in both the source and generated domain images, effectively guiding the style transformation process. Additionally, we integrated the Style Attention Module (SAM) into SATM, focusing on capturing fine-grained visual style features. First, local-style features are independently extracted for each channel using a convolution operation. Subsequently, pointwise convolution is applied to integrate information across different channels. Global average pooling is then used to aggregate the overall style information of the image into a unified global representation. Next, the global representation is aligned with local features through element-wise fusion, ensuring consistency between local and global style features. Finally, the style attention features and semantic features are fused to obtain the final semantic attention map .
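Because the SAM steps above are described only in prose, the following minimal sketch in plain Python traces them on a single feature map stored as nested lists of shape [C][H][W]. The 3×3 depthwise kernel size, the broadcast addition used for the local/global fusion, and the sum-plus-sigmoid fusion with the semantic features are all assumptions made for illustration; the function names are hypothetical, and the sketch is not the authors' implementation.

```python
import math

def depthwise_conv3x3(x, kernels):
    """Per-channel 3x3 convolution, zero padding, stride 1.
    x: [C][H][W] feature map; kernels: [C][3][3], one kernel per channel."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    out = [[[0.0] * W for _ in range(H)] for _ in range(C)]
    for c in range(C):
        for i in range(H):
            for j in range(W):
                s = 0.0
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        ii, jj = i + di, j + dj
                        if 0 <= ii < H and 0 <= jj < W:  # zero padding outside
                            s += kernels[c][di + 1][dj + 1] * x[c][ii][jj]
                out[c][i][j] = s
    return out

def pointwise_conv(x, weights):
    """1x1 convolution: weights is [C_out][C_in]; mixes channels at each pixel."""
    H, W = len(x[0]), len(x[0][0])
    return [[[sum(w[c] * x[c][i][j] for c in range(len(x))) for j in range(W)]
             for i in range(H)] for w in weights]

def global_avg_pool(x):
    """Average each channel over all spatial positions, giving a [C] vector."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]

def style_attention(x, dw_kernels, pw_weights, semantic):
    """Hypothetical SAM pass: local style -> channel mixing -> global context ->
    local/global fusion -> fusion with semantic features (same shape as output)."""
    local = pointwise_conv(depthwise_conv3x3(x, dw_kernels), pw_weights)
    g = global_avg_pool(local)
    # Assumed local/global fusion: add each channel's global mean at every position.
    fused = [[[v + g[c] for v in row] for row in ch] for c, ch in enumerate(local)]
    # Assumed semantic fusion: element-wise sum followed by a sigmoid, so the
    # result lies in (0, 1) and can serve as an attention map.
    return [[[1.0 / (1.0 + math.exp(-(a + b))) for a, b in zip(ra, rb)]
             for ra, rb in zip(ca, cb)] for ca, cb in zip(fused, semantic)]
```

In practice these operations would be written with a deep learning framework's grouped and 1×1 convolutions; the nested-list version only makes the data flow explicit.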

The SATM dynamically refines by extracting semantic attention features. Specifically, are fed into the SATM to obtain the low-level semantic attention maps . The differences among are computed to measure semantic-aware loss and ensure semantic consistency during style transfer:

where represents the total number of elements in the semantic feature map. denotes the semantic attention value at the i-th position in , and refers to the corresponding semantic attention value in .
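The semantic-aware loss equation did not survive in this version of the text. Assuming it is the mean absolute (L1) difference between two semantic attention maps over their N elements, it reduces to the short sketch below; a squared (L2) difference would be an equally plausible reading. The function name is hypothetical.

```python
def semantic_aware_loss(att_a, att_b):
    """Mean absolute difference between two flattened semantic attention maps.
    The L1 form is an assumption; both maps must contain N elements."""
    if len(att_a) != len(att_b):
        raise ValueError("attention maps must have the same number of elements")
    n = len(att_a)
    return sum(abs(a - b) for a, b in zip(att_a, att_b)) / n
```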

is utilized to further enhance , resulting in a style-transferred image with semantic consistency. This process can be expressed as follows:

where represents the channel mapping operation performed through convolution, and denotes the concatenation operation along dimension 1.

The design of the SATM is inspired by the brain’s top–down and bottom–up information flow regulation mechanism: lower-level brain regions process basic sensory input features, while higher-level regions generate corresponding feedback signals to dynamically adjust the activity of lower-level regions. For instance, when visual input is blurry, higher-level regions can infer possible content based on prior experience and provide feedback to lower-level regions, thereby modulating the visual cortex’s response to blurry input. Specifically, is input into the encoder layer of to obtain the high-level semantic attention map . The difference between the initial semantic attention map and the high-level semantic attention map is used to compute a semantic consistency loss, which dynamically optimizes SATM to ensure that critical semantic information is preserved during the style transfer process:

In this process, is treated as a pseudo-label, aiming for the predicted categories of the low-level semantic attention map to approximate as closely as possible. denotes the total number of pixels in each image, and represents the total number of categories. is the low-level semantic attention value at the i-th pixel in pseudo-category , while represents the low-level semantic attention value at the i-th pixel in category .
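The pseudo-label consistency loss described above can be read as a softmax cross-entropy in which the high-level map supplies the pseudo-label through an argmax over the K categories. The minimal sketch below follows that reading; treating the attention values as unnormalized logits and the [N][K] (pixel-major) layout are assumptions, and the function name is hypothetical.

```python
import math

def pseudo_label_ce(low, high):
    """low, high: per-pixel score lists of shape [N][K] (N pixels, K categories).
    The high-level map gives the pseudo-label via argmax; the low-level map is
    scored with softmax cross-entropy against that pseudo-label."""
    total = 0.0
    for low_i, high_i in zip(low, high):
        y_hat = max(range(len(high_i)), key=high_i.__getitem__)  # pseudo-category
        m = max(low_i)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(v - m) for v in low_i))
        total += log_z - low_i[y_hat]  # -log softmax(low_i)[y_hat]
    return total / len(low)
```

Only the low-level map receives the loss here, which matches the text's description of the high-level map as a fixed pseudo-label target.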

Finally, are input into to determine the authenticity of the images. adopts a three-layer convolutional structure to generate authenticity scores for each pixel, which can be expressed as follows:

where ReLU denotes the LeakyReLU activation function and Norm denotes the batch normalization layer.

3.3. Semantic-Driven Multi-Feature Memory Module for Style Transfer

Style transfer methods guided by a single image may deviate from the overall style distribution due to the incompleteness of style and semantic categories, necessitating the incorporation of a memory module for dynamic guidance. The hippocampus, as a critical memory region in the brain, can integrate and reconstruct multi-feature information from various sensory channels. Inspired by this mechanism, the proposed SMM adopts a dual-channel architecture consisting of a semantic memory bank and a style memory bank. By leveraging semantic guidance to dynamically store and retrieve historical style information, the SMM effectively mitigates the incompleteness of style and semantic categories in target domain images.

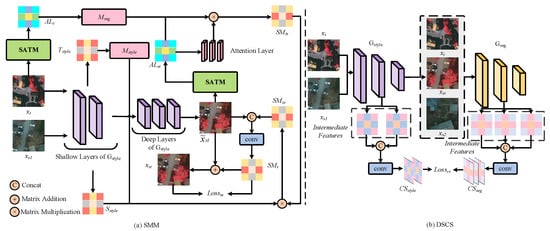

Figure 4a shows the structure of the SMM. In the experiment, we observed that the shallow layers of the style generator primarily capture style-specific features rather than performing specific style transformations. Therefore, is fed into the shallow layers of the style generator to extract the current target domain style representation , while is input into the corresponding network layers to obtain the current source domain style representation . This process can be expressed as follows:

where denotes the concatenation operation along dimension 1, and represents the output features extracted from the first to the third layers of the RBs.

Figure 4.

Illustration of the collaborative optimization of style and semantic features. (a) The structure of the SMM. is first fed into to construct a style memory bank, while SATM extracts semantic attention features to build a semantic memory bank. Through memory retrieval, the most relevant stored information is extracted and applied to refine the generated image , ensuring task-specific adaptation. (b) The structure of the DSCS. and are fed into and to construct the style memory space and the semantic memory space . By aligning cross-modal spaces, collaborative optimization of cross-domain and cross-modal features is achieved.

Additionally, we designed both short-term and long-term memory modules. The short-term memory stores the style and semantic features of the five most recent images, while the long-term memory retains the style and semantic features of up to 20 historical images. is stored in the short-term and long-term style memory , and the semantic attention map obtained by SATM is stored in the semantic short-term and long-term memory . This process can be expressed as follows:

where represents adaptive average pooling to a fixed output size of .
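A minimal sketch of the short-term and long-term banks uses `collections.deque` with the capacities given above (5 and 20 images). The text does not state how entries are evicted once a bank is full, so first-in-first-out eviction, as well as the class and method names, are assumptions for illustration.

```python
from collections import deque

class DualMemory:
    """Hypothetical short-term/long-term store for one feature type.
    Capacities follow the text: the 5 most recent images (short-term)
    and up to 20 historical images (long-term)."""

    def __init__(self, short_cap=5, long_cap=20):
        # deque(maxlen=...) silently drops the oldest entry when full,
        # i.e. first-in-first-out eviction (an assumption, not stated in the text).
        self.short = deque(maxlen=short_cap)
        self.long = deque(maxlen=long_cap)

    def store(self, feature):
        """Write one pooled feature into both the short-term and long-term bank."""
        self.short.append(feature)
        self.long.append(feature)

style_memory, semantic_memory = DualMemory(), DualMemory()
for step in range(25):
    # Stand-ins for a pooled style feature and a pooled semantic attention map.
    style_memory.store([float(step)])
    semantic_memory.store([float(step) * 0.1])
```

After 25 writes the short-term bank holds images 20 to 24 and the long-term bank holds images 5 to 24.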

The semantic attention weight is obtained by applying attention layers to . is then employed to query the semantic memory , retrieving the semantic memory feature relevant to the current task. Subsequently, is applied to query , retrieving the memory features associated with the current source domain style. This process is expressed as follows:

where denotes a convolution operation with a kernel size of .
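The retrieval equations are not reproduced here, so the following sketch shows one common way to read a memory bank: scaled dot-product attention, in which a query is compared with stored keys and the softmax-weighted stored values are returned. Using semantic entries as keys and the paired style entries as values is an assumption about how the two banks are linked; all names and values are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)  # shift by the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention_read(query, keys, values):
    """Scaled dot-product read: weight each stored value by softmax(q . k / sqrt(d))."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]

# Hypothetical usage: a semantic query selects style entries stored alongside
# the semantic entries of the same images.
semantic_bank = [[1.0, 0.0], [0.0, 1.0]]  # stored semantic entries (keys)
style_bank = [[0.2, 0.4], [0.8, 0.6]]     # paired style entries (values)
retrieved = attention_read([1.0, 0.0], semantic_bank, style_bank)
```

With identical keys every entry receives the same weight, so the read returns the plain average of the stored values.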

The short-term and long-term style memories, denoted as and , are extracted separately, and then adaptively fused to generate an integrated memory . This integrated memory is subsequently used to further optimize . The process is expressed as follows:

where denotes a convolution operation with a kernel size of , and represents concatenation along dimension 1. and are the memory fusion coefficients. The memory loss is calculated as follows:

where represents the total number of elements in the feature map.

3.4. Domain-Invariant Style-Semantic Center Space

We propose the domain-invariant style-semantic center space (DSCS), which explicitly incorporates the interrelationship between style and semantic features into the optimization objective by introducing a style-semantic central approximation loss. Inspired by the integrative function of the prefrontal cortex in the human brain for processing multimodal information, DSCS maps style and semantic features from different domains into a shared domain-invariant feature space. The workflow of DSCS is illustrated in Figure 4b. Specifically, we use the intermediate features extracted by and to construct the cross-domain style feature space and the cross-domain semantic feature space . This process is represented as follows:

where and denote the features extracted from layers 6 to 10 of and , respectively.

The DSCS dynamically refines the cross-domain style-semantic center through the style-semantic center approximation loss, defined as follows:

where is the total number of elements in the feature map.
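Since the center approximation loss itself is missing from the text, the sketch below assumes one simple form: the center is the element-wise mean of the flattened, equally sized style and semantic features, and the loss is the mean squared distance of both feature sets to that center. Equal feature sizes and the mean-based center are assumptions, and the function name is hypothetical.

```python
def center_approx_loss(style_feat, sem_feat):
    """Hypothetical style-semantic center loss over flattened features of length N.
    Assumes the center is the element-wise mean of the two feature sets and
    penalizes the squared distance of each set to that center."""
    if len(style_feat) != len(sem_feat):
        raise ValueError("feature maps must have the same number of elements")
    n = len(style_feat)
    total = 0.0
    for s, m in zip(style_feat, sem_feat):
        c = 0.5 * (s + m)  # assumed center
        total += (s - c) ** 2 + (m - c) ** 2
    return total / n
```

Under this particular assumption the loss equals half the mean squared error between the two feature sets; a center maintained across batches or domains would make it behave differently.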

The DSCS effectively mitigates visual style discrepancies between the source and target domains, reduces the interference of style differences on semantic feature extraction, and enables dynamic collaborative optimization of cross-domain features. By progressively aligning the features of the source and target domains, DSCS enhances the feature extraction process of the semantic segmentation network, ensuring that the generated semantic features align with the target domain distribution at the style level. Acting as a dynamic feature fusion region, the center space adapts in real time to the distributional differences between the source and target domains, allowing the network to incrementally adapt to the target domain feature distribution.

3.5. Overall Objective Function

From an overarching perspective, our model combines style-level and semantic-level adversarial learning, and its objective function consists of four main components: the style generator loss , the style discriminator loss , the semantic generator loss , and the semantic discriminator loss .

(1) Style Transfer GAN

The loss for the style generator is defined as a weighted combination of several sub-losses:

where represent the balance coefficients for style consistency loss, semantic perception loss, semantic alignment loss, and memory loss, respectively. In our framework, the loss balancing coefficients are set to , , , and . The model demonstrates stable performance under moderate variations in these parameters.

During the training of the style generator, the objective is for the style discriminator to classify as belonging to the target domain. The style consistency loss is computed as follows:

where represents the score given by the style discriminator for the i-th pixel of , and is an all-ones matrix representing the target domain label. The purpose of is to ensure that the style features of the generated image closely align with those of the target domain.

To reduce the distance between and while increasing the distance between and , are fed into , and the intermediate outputs from its selected layers are concatenated. These concatenated feature maps are then passed through a 2D adaptive average pooling layer for dimensionality reduction and subsequently normalized to produce . The detailed process is defined as follows:

where represents the L2 normalization operation, ensuring the feature vectors are normalized, and is an adaptive average pooling operation that outputs a fixed size of . denotes the features extracted from the 4th, 8th, and 12th layers of . The style contrastive loss is defined as follows:

where is the contrastive loss coefficient, used to balance the weight between positive and negative sample distances.
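A minimal sketch of the embedding and contrastive steps follows, assuming the per-layer features (layers 4, 8, and 12) have already been pooled to vectors. The loss form, the squared distance to the target embedding minus λ times the squared distance to the source embedding, is an assumed reading of the description, and the coefficient value 0.5 is purely illustrative because the actual value is not given here.

```python
import math

def l2_normalize(v, eps=1e-12):
    """Scale v to unit L2 norm (eps guards against division by zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, eps) for x in v]

def embed(layer_feats):
    """Concatenate pooled per-layer feature vectors and L2-normalize the result."""
    return l2_normalize([x for feat in layer_feats for x in feat])

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def style_contrastive_loss(f_gen, f_tgt, f_src, lam=0.5):
    """Assumed form: pull the generated embedding toward the target (positive)
    and push it away from the source (negative), weighted by lam."""
    return sq_dist(f_gen, f_tgt) - lam * sq_dist(f_gen, f_src)
```

Because the embeddings are L2-normalized, each squared distance lies in [0, 4], so the subtracted negative term cannot grow without bound.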

The style discriminator assigns scores to input images, with scores closer to 1 indicating that the image style is more similar to the target domain, and scores closer to 0 suggesting that the style is more aligned with the source domain. The loss function for the style discriminator is expressed as follows:

where and represent the scores assigned by the discriminator to the i-th pixel of the style-transferred image and the source domain image, respectively. represents a matrix filled with ones, denoting the target value for the target domain, while represents a matrix filled with zeros, denoting the target value for the source domain. are weighting factors balancing the contributions of the source and target domain loss terms.
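The style consistency and discriminator losses are described only in words above. The sketch below assumes the least-squares (LSGAN) form, that is, the mean squared error between per-pixel scores and an all-ones or all-zeros label map; a binary cross-entropy form would be an equally valid reading, and the hypothetical weights `w_pos` and `w_neg` stand in for the unnamed weighting factors.

```python
def lsgan_term(scores, label):
    """Mean squared error between flattened per-pixel scores and a constant label map."""
    return sum((s - label) ** 2 for s in scores) / len(scores)

def style_generator_loss(d_gen):
    # Push the discriminator's scores on the generated image toward 1 (target domain).
    return lsgan_term(d_gen, 1.0)

def style_discriminator_loss(d_pos, d_neg, w_pos=1.0, w_neg=1.0):
    # d_pos: scores on the input the text labels with the all-ones (target) map;
    # d_neg: scores on the source-domain image, labeled with the all-zeros map.
    return w_pos * lsgan_term(d_pos, 1.0) + w_neg * lsgan_term(d_neg, 0.0)
```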

(2) Semantic Segmentation GAN

The input to the semantic triplet adversarial generation network consists of , with corresponding semantic labels . Since serves as the final semantic segmentation target and lacks a label, the semantic feature generator loss is defined as a weighted combination of several partial losses:

where are balancing coefficients for the style-semantic center approximation loss , the semantic segmentation loss , and the semantic adversarial loss , respectively. The semantic segmentation loss is computed as follows:

where is the total number of pixels in each image, and is the number of categories. is the probability that the i-th pixel in is predicted to belong to class , and is the corresponding label as a binary vector.

To ensure the extracted semantic features align with the same domain and maintain domain-invariant characteristics, the semantic adversarial loss is employed. encourages the discriminator to classify as originating from the same domain. The calculation of is defined as follows:

represents the score assigned by the discriminator, indicating the probability that a given pair of images originates from the same domain. During the training of the semantic adversarial generation model, the style-transferred image is expected to align with the target domain, while is considered representative of the source domain. Therefore, the discriminator is designed to classify as belonging to the same domain and as originating from different domains. The loss function for the discriminator is defined as follows:

4. Experiments

4.1. Datasets

The ISPRS Vaihingen and Potsdam Challenge datasets are widely recognized benchmarks for the ISPRS 2D semantic labeling challenges, focusing on very-high-resolution (VHR) remote sensing imagery. Both datasets provide pixel-wise annotations for five semantic classes: impervious surfaces, buildings, low vegetation, trees, and cars. For training, images from both datasets are cropped into 512 × 512 patches with an overlap of 200 pixels in both width and height. To augment the training set, data augmentation techniques, including horizontal flipping, vertical flipping, and resizing (scaling factors of 0.5 and 1.5), are applied.
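The overlapping crop described above implies a stride of 512 - 200 = 312 pixels. The sketch below computes crop boxes along these lines; how the right and bottom borders are handled is not stated, so adding one extra border-aligned window is an assumption, and the function names are hypothetical.

```python
def patch_offsets(length, patch=512, overlap=200):
    """Start offsets of patch windows along one axis.
    Consecutive windows share `overlap` pixels (stride = patch - overlap); if the
    regular grid stops short of the border, one extra window is aligned to the
    border (an assumed rule, not stated in the text)."""
    if length <= patch:
        return [0]
    stride = patch - overlap
    offsets = list(range(0, length - patch + 1, stride))
    if offsets[-1] + patch < length:
        offsets.append(length - patch)
    return offsets

def patch_boxes(width, height, patch=512, overlap=200):
    """(x0, y0, x1, y1) crop boxes covering a width x height image."""
    return [(x, y, x + patch, y + patch)
            for y in patch_offsets(height, patch, overlap)
            for x in patch_offsets(width, patch, overlap)]

boxes = patch_boxes(2500, 2000)
```

Under this border rule, a 2500 × 2000 tile yields 8 × 6 = 48 patches.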

The Vaihingen dataset consists of 32 IRRG (Infrared, Red, and Green) images, each with a resolution of 2500 × 2000 pixels. Among these, 16 images contain pixel-level annotations. We randomly selected 10 labeled images for training, while the remaining annotated images were used for testing.

The Potsdam dataset comprises 38 images, each with a resolution of 6000 × 6000 pixels and a ground sampling distance (GSD) of 5 cm. This dataset includes two channel combinations: IRRG (Infrared, Red, and Green) and RGB (Red, Green, and Blue). Among the 38 images, 24 are annotated at the pixel level. We randomly selected 10 labeled images for training, while the remaining annotated images were used for testing. Unlike Vaihingen, Potsdam’s test patches were generated without overlap.

Vaihingen IRRG and Potsdam IRRG differ not only in scene structure but also in spectral properties, requiring models to effectively address spectral discrepancies. Furthermore, adapting from Potsdam RGB to Vaihingen IRRG introduces an additional challenge, as models must cope with domain shifts caused by the change of spectral bands while preserving semantic consistency. The diversity in urban environments, including differences in vegetation, building layouts, and road networks, further increases the complexity of cross-domain adaptation. These characteristics make the selected datasets highly suitable for evaluating the robustness and generalization of the proposed method.

4.2. Experimental Settings

(1) Evaluation Metrics

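To evaluate the performance of semantic segmentation, we adopt several widely used metrics, including Intersection over Union (IoU), mean Intersection over Union (mIoU), F1 score (F1), and mean F1 score (mF1), following the standard calculation formulas outlined in [42]. The IoU and mIoU metrics measure the overlap between the predicted pixel set $P$ and the ground truth set $G$, and are defined as follows:

$$\mathrm{IoU}_k = \frac{|P_k \cap G_k|}{|P_k \cup G_k|}, \qquad \mathrm{mIoU} = \frac{1}{K}\sum_{k=1}^{K} \mathrm{IoU}_k$$

where $K$ is the total number of semantic classes. The symbols $\cap$ and $\cup$ denote the intersection and union operations, respectively. The F1 score and mean F1 score are calculated based on precision and recall, as follows:

$$\mathrm{Precision}_k = \frac{TP_k}{TP_k + FP_k}, \quad \mathrm{Recall}_k = \frac{TP_k}{TP_k + FN_k}, \quad \mathrm{F1}_k = \frac{2\,\mathrm{Precision}_k\,\mathrm{Recall}_k}{\mathrm{Precision}_k + \mathrm{Recall}_k}, \quad \mathrm{mF1} = \frac{1}{K}\sum_{k=1}^{K} \mathrm{F1}_k$$

where $TP_k$, $FP_k$, and $FN_k$ represent the number of true positives, false positives, and false negatives for class $k$, respectively.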
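In pixel counts, the IoU of a class equals $TP/(TP + FP + FN)$ and its F1 equals $2TP/(2TP + FP + FN)$. The sketch below computes per-class IoU and F1 and their means from a $K \times K$ confusion matrix; the matrix layout (rows are ground truth, columns are predictions) and the function name are assumptions of this example, not details taken from [42].

```python
from typing import Dict, List

def segmentation_metrics(conf: List[List[int]]) -> Dict[str, object]:
    """Per-class IoU and F1 plus their means from a K x K confusion matrix.

    conf[g][p] counts pixels whose ground-truth class is g and predicted class is p.
    """
    k = len(conf)
    iou, f1 = [], []
    for c in range(k):
        tp = conf[c][c]
        fp = sum(conf[g][c] for g in range(k)) - tp   # predicted c, truly another class
        fn = sum(conf[c]) - tp                        # truly c, predicted another class
        iou.append(tp / (tp + fp + fn) if tp + fp + fn else 0.0)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return {"IoU": iou, "mIoU": sum(iou) / k, "F1": f1, "mF1": sum(f1) / k}


# Two classes with 10 ground-truth pixels each:
m = segmentation_metrics([[8, 2],
                          [1, 9]])
print(m["IoU"])    # class 0: 8/11 ≈ 0.727, class 1: 9/12 = 0.75
print(m["F1"])     # class 0: 16/19 ≈ 0.842, class 1: 18/21 ≈ 0.857
```

Here the counts are accumulated over the whole test set before dividing; averaging per-image scores instead generally gives slightly different values, so the two conventions should not be mixed when comparing methods.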

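Additionally, to evaluate the quality of the style-transferred images, we adopt the Fréchet Inception Distance (FID) [43], which compares the statistics of features extracted by a pretrained Inception network from generated and real images. Assuming these features follow multivariate Gaussian distributions, let $\mu_g, \Sigma_g$ and $\mu_r, \Sigma_r$ denote the mean and covariance of the generated and real image features, respectively. The FID is then computed as follows:

$$\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)$$

where $\mathrm{Tr}(\cdot)$ denotes the trace of a matrix, and $(\Sigma_r \Sigma_g)^{1/2}$ is the matrix square root of the product of covariances. A lower FID value indicates that the generated image distribution is closer to that of the real images, reflecting better visual fidelity and domain consistency.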
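Given the feature means and covariances of real and generated images, FID is the squared distance between the means plus the trace term $\mathrm{Tr}(\Sigma_r + \Sigma_g - 2(\Sigma_r\Sigma_g)^{1/2})$. The NumPy sketch below evaluates it; the subscripts r and g and the helper names are our own. In practice the features are the 2048-dimensional pooling activations of a pretrained Inception-v3 network, which this sketch does not compute. Reference implementations typically call `scipy.linalg.sqrtm` on the covariance product; to stay NumPy-only, the sketch uses the identity $\mathrm{Tr}\big((\Sigma_r\Sigma_g)^{1/2}\big) = \mathrm{Tr}\big((\Sigma_r^{1/2}\Sigma_g\Sigma_r^{1/2})^{1/2}\big)$, whose inner matrix is symmetric positive semi-definite.

```python
import numpy as np

def _sqrtm_psd(a: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigh."""
    w, v = np.linalg.eigh(a)
    # Clip tiny negative eigenvalues caused by round-off before taking sqrt.
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T

def fid_from_stats(mu_r, sigma_r, mu_g, sigma_g) -> float:
    """Fréchet distance between Gaussians N(mu_r, sigma_r) and N(mu_g, sigma_g)."""
    mu_r, mu_g = np.asarray(mu_r, float), np.asarray(mu_g, float)
    sigma_r, sigma_g = np.asarray(sigma_r, float), np.asarray(sigma_g, float)
    diff = mu_r - mu_g
    # Tr((S_r S_g)^{1/2}) computed as Tr((S_r^{1/2} S_g S_r^{1/2})^{1/2}).
    root_r = _sqrtm_psd(sigma_r)
    tr_covmean = np.trace(_sqrtm_psd(root_r @ sigma_g @ root_r))
    return float(diff @ diff + np.trace(sigma_r) + np.trace(sigma_g) - 2.0 * tr_covmean)

def fid_from_features(feat_real: np.ndarray, feat_gen: np.ndarray) -> float:
    """feat_*: (num_images, dim) arrays of image features (e.g. Inception pool features)."""
    return fid_from_stats(feat_real.mean(axis=0), np.cov(feat_real, rowvar=False),
                          feat_gen.mean(axis=0), np.cov(feat_gen, rowvar=False))


eye = np.eye(2)
# Equal covariances: only the mean term 3^2 + 4^2 = 25 remains (up to round-off).
print(fid_from_stats([0, 0], eye, [3, 4], eye))
```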

(2) Comparison Methods

UDA Methods: To validate the effectiveness of our proposed SAF in cross-domain semantic segmentation, we compare it with several state-of-the-art UDA models, including TriADA [44], MCD [45], ResiDualGAN [46], CIA-UDA [34], DAFormer [47], Swin-Unet [48], HRDA [49], RS3Mamba [50], CACP [51], CSI [25], CACC [52], and DGSS [53].

The baseline method refers to a standard semantic segmentation model trained solely on labeled source domain data, without applying any domain adaptation techniques or style transfer mechanisms. During inference, the trained model is directly tested on the unlabeled target domain data. This setup serves as a conventional reference point for evaluating performance degradation due to domain shift. To ensure a fair comparison, the network architecture, training configurations, and loss functions of the baseline are kept consistent with those of our proposed method, except that the style transfer module and collaborative components (i.e., SATM, SMM, and DSCS) are not included.

Style Transfer Methods: To evaluate the effectiveness of our style transfer module, we compare SAF with multiple representative style transfer models, including CycleGAN [54], CUT [55], and UNSB [56].

(3) Implementation Details

For the style transfer model, we employ the Adam optimizer for both the generator and discriminator, with a learning rate of 0.0002 and betas of (0.5, 0.999). For the semantic segmentation model, the generator is optimized using SGD with a momentum of 0.9, a weight decay of 0.0001, and an initial learning rate of 0.00025, while the discriminator is trained with Adam using betas of (0.9, 0.99) and an initial learning rate of 0.0001. All models are trained for 100,000 iterations on an NVIDIA GeForce RTX 3090 GPU, and all experiments are implemented in the PyTorch framework.
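The optimizer settings above can be collected in a small configuration object. The sketch below records only the values stated in the text; the dictionary keys are hypothetical labels, and no learning-rate schedule is included because the text gives only the initial learning rates for the segmentation model.

```python
from dataclasses import asdict, dataclass
from typing import Tuple

@dataclass(frozen=True)
class AdamCfg:
    lr: float
    betas: Tuple[float, float]

@dataclass(frozen=True)
class SGDCfg:
    lr: float
    momentum: float
    weight_decay: float

# Values as stated in the Implementation Details; the key names are hypothetical.
OPTIMIZERS = {
    "style_generator": AdamCfg(lr=2e-4, betas=(0.5, 0.999)),
    "style_discriminator": AdamCfg(lr=2e-4, betas=(0.5, 0.999)),
    "seg_generator": SGDCfg(lr=2.5e-4, momentum=0.9, weight_decay=1e-4),
    "seg_discriminator": AdamCfg(lr=1e-4, betas=(0.9, 0.99)),
}
TOTAL_ITERS = 100_000

for name, cfg in OPTIMIZERS.items():
    print(name, type(cfg).__name__, asdict(cfg))
```

Because the field names match the keyword arguments of `torch.optim.Adam` and `torch.optim.SGD`, each entry could be expanded directly, e.g. `torch.optim.SGD(model.parameters(), **asdict(OPTIMIZERS["seg_generator"]))`.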

(4) Cross-Domain Task Setup

To comprehensively evaluate the cross-domain generalization capability of the proposed framework, we design two cross-domain semantic segmentation tasks using three data configurations drawn from the two ISPRS datasets: Vaihingen IRRG, Potsdam IRRG, and Potsdam RGB.

The first task focuses on adaptation from Vaihingen IRRG to Potsdam IRRG. Although both datasets use the same near-infrared, red, and green (IRRG) band combination, significant domain discrepancies exist due to differences in urban morphology, building scale, vegetation density, road structure, season, and acquisition conditions. Specifically, Vaihingen features a small-town environment characterized by tightly clustered residential buildings and narrower streets, while Potsdam represents a larger, more systematically organized urban area with broader roads and larger residential and commercial structures. These factors introduce considerable shifts in object shapes, sizes, spatial distributions, and spectral responses, despite the apparent spectral similarity.

The second task addresses adaptation from Potsdam RGB to Vaihingen IRRG, introducing an even greater level of domain shift. In addition to variations in urban structure and scene layout, this task involves a cross-spectral modality gap: Potsdam imagery is captured in the visible RGB range, while Vaihingen imagery employs an IRRG configuration incorporating near-infrared information. This substantial difference in spectral characteristics alters the visual appearance of key semantic categories, particularly vegetation, impervious surfaces, and buildings, thereby posing additional challenges for feature alignment and semantic consistency.

By covering both spatial and spectral domain shifts, these two tasks provide a rigorous and comprehensive evaluation of the proposed framework’s ability to achieve robust cross-domain generalization. They encompass variations in spatial structure, spectral modality, object morphology, seasonal imaging conditions, and data acquisition parameters, ensuring that the model is tested under diverse and realistic domain adaptation scenarios.

4.3. Cross-Domain Semantic Segmentation Task from Vaihingen IRRG to Potsdam IRRG

Table 1 presents a detailed comparison of our proposed SAF model against advanced methods for cross-domain semantic segmentation. The results demonstrate that SAF outperforms all baseline and advanced approaches, achieving the highest mIoU of 63.1% and mF1 of 75.9%. These results highlight SAF’s superior generalization capabilities and its ability to accurately segment various semantic categories across domains.

Table 1.

Comparison of IoU (%), mIoU (%), F1 (%), and mF1 (%) for cross-domain semantic segmentation from Vaihingen IRRG to Potsdam IRRG. “Average Results” represent the mean values of IoU and F1 scores across all semantic categories, corresponding to mIoU and mF1, respectively.

Specifically, for small objects such as “Car”, SAF achieves an IoU of 61.4% and an F1-score of 72.5%, outperforming strong methods like RS3Mamba (59.5% IoU, 69.4% F1) and DGSS (58.9% IoU, 69.2% F1), demonstrating its robustness in capturing fine-grained features under domain shifts. For large and complex structures like “Building”, SAF attains an IoU of 73.5% and an F1-score of 83.9%, exceeding recent competitive methods such as DAFormer (71.2% IoU, 82.5% F1), HRDA (72.4% IoU, 83.5% F1), and even DGSS (71.6% IoU, 82.7% F1). In natural scene categories, SAF consistently outperforms existing methods. For “Tree” and “Low Vegetation”, SAF achieves IoUs of 50.5% and 58.3%, respectively, which are higher than those achieved by TriADA (48.6% and 57.9%) and RS3Mamba (48.9% and 54.9%), showcasing stronger adaptability to category-level variations and subtle appearance differences across domains. In the “Surface” category, SAF further achieves remarkable results, with an IoU of 71.9% and an F1-score of 82.5%, again surpassing DGSS (66.2% IoU, 80.0% F1) and CACC (68.1% IoU, 80.3% F1).
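As a quick consistency check of the “Average Results” definition (the unweighted mean over the five classes), the per-class IoUs quoted above (Car, Building, Tree, Low Vegetation, Surface) reproduce the reported mIoU:

$$\mathrm{mIoU} = \frac{61.4 + 73.5 + 50.5 + 58.3 + 71.9}{5} = \frac{315.6}{5} \approx 63.1\%$$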

These improvements can be attributed to the synergistic contributions of the SATM, SMM, and DSCS modules. Specifically, the SATM enhances semantic consistency during style adaptation by dynamically preserving critical object-level features, the SMM retrieves semantically aligned historical features to optimize cross-domain style transfer, and the DSCS reinforces domain-invariant feature embedding through the joint alignment of style and semantic representations. This collaborative design enables the SAF to reduce domain-induced semantic distortions and appearance shifts simultaneously, thereby achieving robust cross-domain segmentation performance. Notably, the SAF improves the mIoU and mF1 by 28.0% and 24.6% over the baseline, demonstrating its substantial advantage in cross-domain segmentation.

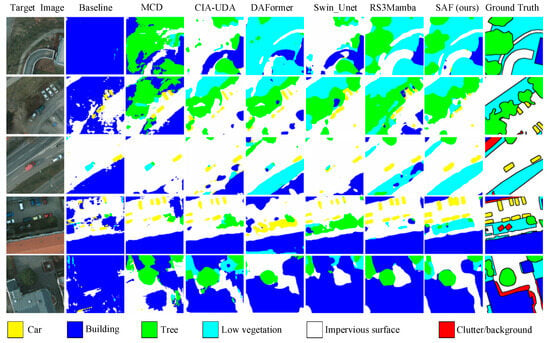

Figure 5 presents the visualization results comparing the performance of different UDA algorithms on cross-domain semantic segmentation tasks. Compared with the other methods, the SAF produces more accurate pixel-wise category predictions, capturing semantic details and preserving class consistency across domains.

Figure 5.

Visualization of different UDA algorithms applied to cross-domain semantic segmentation from Vaihingen IRRG to Potsdam IRRG.

Table 2 provides the FID scores of different methods across various domain adaptation scenarios. Lower FID scores indicate that the distribution of the generated images is closer to that of real target-domain images. Among the compared methods, the SAF consistently achieves the lowest FID scores across all scenarios, demonstrating superior style transfer and domain adaptation performance. Specifically, the SAF outperforms CycleGAN, CUT, and UNSB by clear margins, highlighting its effectiveness in preserving content fidelity while aligning style characteristics. These improvements can be attributed to the integration of the SATM and SMM: the SATM ensures that style transformation is guided by semantically critical features rather than purely visual patterns, while the SMM provides memory-assisted retrieval of semantically consistent style features, reinforcing domain-aligned generation. By jointly optimizing semantic preservation and style consistency, the SAF achieves high-quality, domain-consistent results in cross-domain adaptation tasks.

Table 2.

FID scores (lower is better) for different style transfer tasks.

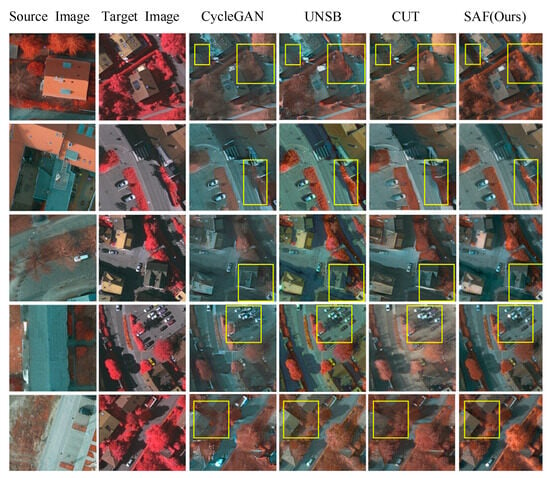

Figure 6 presents a visual comparison of target-domain images generated by different style transfer algorithms for the Vaihingen IRRG to Potsdam IRRG task. The images generated by CycleGAN exhibit noticeable global style changes but introduce significant artifacts in local details, such as blurred building edges and distorted vehicle shapes in specific regions. Although UNSB mitigates some of these artifacts, it still produces blurred details, particularly at road intersections and vegetation boundaries. CUT improves visual consistency but compromises critical textures in vegetation and buildings. Compared with these methods, the proposed SAF achieves a better balance between style adaptation and semantic structure integrity: its style-transferred images reduce both visual artifacts and blurring while maintaining fine-grained textures and accurate semantic information.

Figure 6.

Visualization of the results from different style transfer algorithms for the Vaihingen IRRG to Potsdam IRRG task.

4.4. Cross-Domain Semantic Segmentation Task from Potsdam RGB to Vaihingen IRRG

Table 3 presents the comparative results of cross-domain semantic segmentation from Potsdam RGB to Vaihingen IRRG using various state-of-the-art methods. The proposed SAF model achieves the highest mIoU of 55.2% and mF1 of 69.1%, outperforming all baseline and advanced models. Specifically, for small objects like “Car”, the SAF achieves an IoU of 31.8% and an F1-score of 46.8%, surpassing HRDA and RS3Mamba, which previously performed well in this category. For large structures such as “Building”, the SAF achieves an IoU of 75.2% and an F1-score of 83.9%, further solidifying its capability to capture intricate semantic patterns. In natural scene categories such as “Tree” and “Low Vegetation”, the SAF demonstrates balanced performance with IoUs of 57.9% and 44.5%, and F1-scores of 74.8% and 60.8%, respectively. For “Impervious Surface”, the SAF achieves an IoU of 66.6% and an F1-score of 79.4%, reflecting its ability to generalize well across varying spatial scales and feature distributions.
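Since all five per-class scores are quoted for this task, both averages can be checked directly against the reported values (order: Car, Building, Tree, Low Vegetation, Impervious Surface):

$$\mathrm{mIoU} = \frac{31.8 + 75.2 + 57.9 + 44.5 + 66.6}{5} = \frac{276.0}{5} = 55.2\%, \qquad \mathrm{mF1} = \frac{46.8 + 83.9 + 74.8 + 60.8 + 79.4}{5} = \frac{345.7}{5} \approx 69.1\%$$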

Table 3.

Comparison of IoU (%), mIoU (%), F1 (%), and mF1 (%) for cross-domain semantic segmentation from Potsdam RGB to Vaihingen IRRG. “Average Results” represent the mean values of IoU and F1 scores across all semantic categories, corresponding to mIoU and mF1, respectively.

Compared to competitive methods such as CIA-UDA and DAFormer, the SAF consistently achieves better performance across all semantic categories. Furthermore, when compared with other recent state-of-the-art methods such as CACP, CSI, CACC, and DGSS, the SAF shows clear advantages in both mIoU and mF1 metrics. While CACP and DGSS achieve competitive segmentation in certain categories, their overall mIoU and mF1 scores remain lower than the SAF, indicating limited generalization across diverse semantic categories. For instance, CACP achieves an mIoU of 52.6% and DGSS achieves 53.3%, both lower than the SAF’s 55.2%. Similarly, CSI and CACC achieve mIoUs of 51.9% and 53.2%, respectively, still inferior to the SAF. These improvements can be attributed to the collaborative operation of the SATM, SMM, and DSCS. The SATM enhances cross-domain feature alignment by emphasizing critical semantic structures during style adaptation, the SMM facilitates semantically guided retrieval of historical features to maintain feature consistency under spectral shifts, and the DSCS projects heterogeneous style and semantic representations into a unified latent space, thereby reducing domain-induced discrepancies. Through this synergistic design, the SAF effectively bridges both spatial and spectral gaps, resulting in superior adaptability to diverse feature scales and complex cross-domain variations.

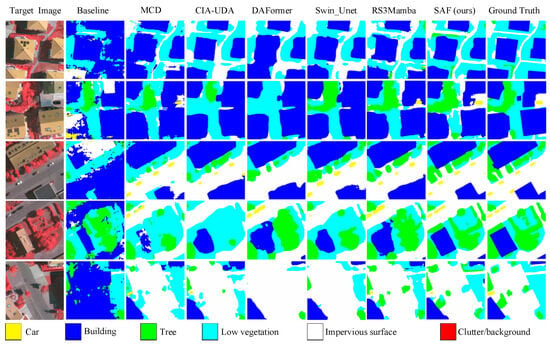

Figure 7 presents the visualization results of different UDA algorithms. The baseline and MCD exhibit significant misclassifications and poorly defined boundaries. While CIA-UDA, DAFormer, Swin-Unet, and RS3Mamba show progressive improvements in feature segmentation, they still struggle with fine-grained details and complex regions. By effectively integrating style transfer and semantic segmentation, the SAF achieves more accurate segmentation, sharper boundary delineation, and higher semantic consistency.

Figure 7.

Visualization of different UDA algorithms applied to the cross-domain semantic segmentation task from Potsdam RGB to Vaihingen IRRG.

Figure 8 presents the visualization results of different style transfer algorithms for the Potsdam RGB to Vaihingen IRRG task. CycleGAN introduces significant misalignment in style transformation, leading to incorrect style adaptation in large buildings and vegetation areas. CUT, which relies on a one-sided, patch-wise contrastive constraint, mitigates excessive style deviations in certain regions but fails to preserve the clear geometric structure of buildings. UNSB performs better in reducing artifacts but still suffers from information loss when dealing with details and small objects. In contrast, the SAF not only achieves a high degree of style alignment with the target domain but also preserves fine-grained details more faithfully. For instance, vehicles retain clearer shapes, building contours are better preserved, and vegetation textures closely resemble the real distribution of the target domain.

Figure 8.

Visualization of the results from different style transfer algorithms for the Potsdam RGB to Vaihingen IRRG task.

5. Discussion

5.1. Ablation Study

The ablation study results in Table 4 highlight the contribution of each module in enhancing cross-domain semantic segmentation. Using only STGAN and SSGAN results in low mIoU (52.1%) and mF1 (62.8%) with a high FID (115.8), indicating limitations in segmentation accuracy and image quality. Introducing SATM improves mIoU to 59.4% and mF1 to 72.3%, while reducing FID to 80.6, demonstrating that semantic-aware attention enhances style adaptation by preserving critical object-level structures. The integration of the SMM further refines feature retrieval, raising the mIoU to 61.2% and mF1 to 73.4%, and lowering the FID to 70.4, indicating that semantic-guided memory retrieval enhances cross-domain feature consistency. Incorporating the DSCS strengthens style-semantic alignment, achieving 62.7% mIoU and 74.6% mF1, and reducing FID to 65.3, reflecting the benefits of projecting heterogeneous features into a shared domain-invariant space. The full SAF achieves the best results (mIoU 63.1%, mF1 75.9%, FID 62.1), demonstrating the synergistic effect of integrating the SATM, SMM, and DSCS. This consistent trend is also observed on the Potsdam RGB to Vaihingen IRRG task, validating SAF’s robustness and generalizability across different domain adaptation scenarios.

Table 4.

Ablation study results of our proposed SAF. Higher mIoU (%) and mF1 (%) indicate better segmentation performance, while lower FID values indicate better style transfer quality. The checkmarks (√) indicate that the corresponding module is included in the configuration for each task.

To assess the efficiency and effectiveness of the SATM, we evaluated its computational overhead and impact on cross-domain segmentation and style transfer performance. Although the SATM introduces a moderate increase in inference time and memory consumption due to the use of five lightweight semantic-aware attention blocks, the additional cost remains acceptable, owing to dimension reduction strategies and compact design. The SATM effectively improves style adaptation, as evidenced by a notable reduction in the FID, by dynamically focusing on semantically critical regions during transformation. In addition, its hierarchical attention design preserves essential semantic structures, leading to consistent gains in mIoU and mF1 across different tasks.

5.2. Impact of Semantic Guidance on Style Transfer

Semantic guidance is primarily achieved through the SATM and further enhanced by the SMM and DSCS. Integrating the SATM into the STGAN significantly reduces FID scores, reflecting its ability to dynamically capture key semantic features and align them with visual styles during style transfer. As a result, the generated images maintain consistent semantic content even under substantial domain shifts, with fewer artifacts and better overall domain alignment. The inclusion of the SMM further lowers the FID scores by storing historical style features and retrieving them under memory guidance. By incorporating semantically relevant information, the SMM mitigates inconsistencies arising from incomplete or diverse domain-specific data, enhancing the model’s adaptability to complex target-domain characteristics. The DSCS reduces the impact of domain-specific variations and enhances the coherence of style and semantic features. The complete SAF achieves the lowest FID scores, demonstrating that semantic guidance not only improves the quality of generated images but also ensures domain consistency.

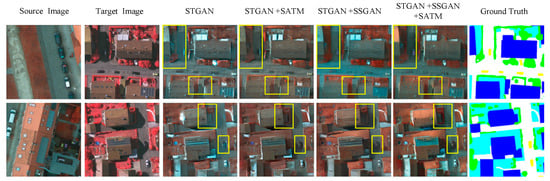

The ablation study results in Figure 9 illustrate the progressive impact of different modules on style transfer quality. Without semantic guidance, the style transfer process relying solely on the STGAN leads to the loss of semantic details in vegetation and buildings. Introducing the SATM helps preserve the semantic information and maintain color consistency in buildings and roads. The addition of the SSGAN further enhances fine-grained details, such as vehicle structures and building contours, through semantic constraints. By integrating adversarial generation at both the style and semantic levels, the SAF facilitates a more precise and context-aware style adaptation process, achieving superior semantic consistency and visual coherence.

Figure 9.

Visualization of the ablation study results for the Vaihingen IRRG to Potsdam IRRG task.

5.3. Impact of Style Transfer on Semantic Segmentation

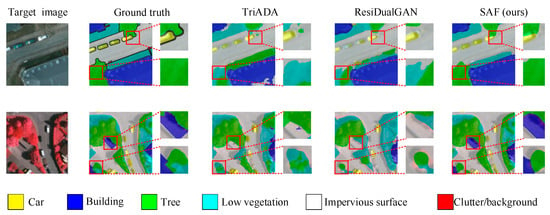

Style transfer plays a pivotal role in enhancing semantic segmentation performance in cross-domain adaptation by addressing style discrepancies between source and target domains. These discrepancies often impede effective semantic feature extraction and generalization. Integrating style transfer into the SAF, particularly through the STGAN model, ensures that source domain images are transformed to align with the target domain’s visual style while preserving semantic content, thereby enhancing the adaptability of the segmentation network. For challenging small objects like “Car”, the alignment of style features improves both IoU and F1 scores, while for complex structures like “Building”, style transfer mitigates domain inconsistencies, yielding higher accuracy. Additionally, natural scene elements such as “Tree” and “Low Vegetation” benefit from this alignment, with the model showing improved generalization under diverse target domain conditions. By addressing domain discrepancies and aligning visual styles, style transfer optimizes semantic segmentation by enhancing feature consistency, improving adaptability, and ensuring robust performance across diverse target domains.

Figure 10 presents the performance of various UDA algorithms in cross-domain semantic segmentation. Compared to TriADA and ResiDualGAN, which do not incorporate style transfer, the SAF demonstrates significantly superior results. In close-up regions such as roads, vegetation, and impervious surfaces, the SAF effectively captures boundaries and accurately predicts categories. By integrating style transfer with semantic segmentation, the SAF enhances feature alignment and achieves more precise pixel-level predictions, further demonstrating its robustness in cross-domain scenarios.

Figure 10.

Visualization of cross-domain semantic segmentation results.

5.4. Cross-Domain Task Analysis

To further validate the effectiveness of the SAF under domain shift conditions, we analyzed the cross-domain segmentation results on the two designed tasks.

On the cross-domain task from Vaihingen IRRG to Potsdam IRRG, although both datasets share near-infrared spectral bands, substantial differences in urban morphology, building scale, vegetation distribution, and seasonal conditions pose significant adaptation challenges. The proposed framework consistently improves the mIoU and mF1 compared to the baseline and other competing methods, demonstrating its capability to align semantic structures across different urban layouts and environmental settings. Notably, the SATM plays a crucial role by preserving key object-level semantics during the style transfer process, enabling more stable feature alignment across domain variations.

For the more challenging cross-domain task from Potsdam RGB to Vaihingen IRRG, which involves both scene layout differences and cross-spectral modality gaps, the SAF achieves stable improvements in segmentation metrics while simultaneously reducing style discrepancies, as reflected by the FID scores. The integration of the SMM and DSCS modules effectively supports semantic-guided retrieval and cross-domain feature alignment, thereby enhancing domain invariance across both spatial and spectral dimensions. These results demonstrate that the SAF not only adapts to variations in spatial structures and object appearances, but also effectively generalizes across different spectral modalities, validating its robustness and flexibility in realistic cross-domain remote sensing segmentation scenarios.

6. Conclusions

In this study, we propose a brain-inspired synergistic adversarial framework (SAF) to address domain shifts in cross-domain semantic segmentation for high-resolution remote sensing imagery. By integrating style transfer and semantic segmentation into a unified adversarial learning framework, the SAF effectively enhances model generalization across different domains. To achieve collaborative optimization between style and semantic features, we introduce three key modules: the SATM, inspired by the brain’s hierarchical feedback mechanism, dynamically captures key semantic features to ensure semantic consistency during style transformation; the SMM, motivated by the hippocampus’ memory retrieval and consolidation functions, stores and retrieves historical style features to enhance adaptation to the target domain; and the DSCS, which aligns style and semantic features within a domain-agnostic latent space to reduce inter-domain style discrepancies while preserving semantic integrity.

Experiments on the ISPRS Vaihingen and Potsdam datasets demonstrate that the SAF outperforms state-of-the-art UDA and style transfer methods. Notably, the SAF effectively addresses both cross-spectral and cross-domain shifts, achieving superior results in adaptation tasks involving Vaihingen IRRG, Potsdam IRRG, and Potsdam RGB. This demonstrates its robustness in mitigating spectral discrepancies and structural variations.

Future work will focus on expanding the SAF to broader cross-domain and cross-spectral scenarios. This study demonstrates the effectiveness of brain-inspired mechanisms in preserving semantic integrity during style transfer while leveraging style adaptation to refine semantic feature learning, providing a promising direction for domain adaptation in remote sensing imagery.

Author Contributions

Conceptualization, X.W. and H.W.; methodology, X.W.; software, X.W. and Y.J.; validation, X.W. and X.L.; formal analysis, X.W.; investigation, X.Y.; resources, H.W.; data curation, Y.J.; writing—original draft preparation, X.W.; writing—review and editing, X.W. and H.W.; visualization, X.L.; supervision, H.W.; project administration, H.W.; funding acquisition, H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Postgraduate Research and Practice Innovation Program of Jiangsu Province under Grant KYCX23_0363, the Interdisciplinary Innovation Fund for Doctoral Students of Nanjing University of Aeronautics and Astronautics under Grant KXKCXJJ202402, the Fundamental Research Funds for the Central Universities under Grant NJ2024015, the Project for Full-Stack Autonomous Computing Industrial Large Model Application from Edge to Cloud under Grant BHSD17 under the Special Program for New Technology Transformation in Manufacturing Pilot Cities, the Key Laboratory of Nondestructive Detection and Monitoring Technology for High-Speed Transportation Facilities, Ministry of Industry and Information Technology, and the Jiangsu Provincial Key Laboratory of Culture and Tourism for Nondestructive Testing and Safety Traceability of Cultural Relics.

Data Availability Statement

The data presented in this article are publicly available on GitHub at https://github.com/cindrew123/SAF (accessed on 23 May 2025).

Acknowledgments

The authors gratefully acknowledge the support from Nanjing University of Aeronautics and Astronautics.

Conflicts of Interest

Author Xianming Yang was employed by Phytium Information Technology Co., Ltd. All authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Jiang, H.; Peng, M.; Zhong, Y.; Xie, H.; Hao, Z.; Lin, J.; Ma, X.; Hu, X. A Survey on Deep Learning-Based Change Detection from High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 1552.

- Zhang, R.; Zhu, D. Study of land cover classification based on knowledge rules using high-resolution remote sensing images. Expert Syst. Appl. 2011, 38, 3647–3652.

- Mo, Y.; Wu, Y.; Yang, X.; Liu, F.; Liao, Y. Review the state-of-the-art technologies of semantic segmentation based on deep learning. Neurocomputing 2022, 493, 626–646.

- Zhang, H.; Jiang, Z.; Zheng, G.; Yao, X. Semantic Segmentation of High-Resolution Remote Sensing Images with Improved U-Net Based on Transfer Learning. Int. J. Comput. Intell. Syst. 2023, 16, 181.

- Luo, Y.; Zheng, L.; Guan, T.; Yu, J.; Yang, Y. Taking a Closer Look at Domain Shift: Category-Level Adversaries for Semantics Consistent Domain Adaptation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2502–2511.

- Liu, X.; Yoo, C.; Xing, F.; Oh, H.; El Fakhri, G.; Kang, J.W.; Woo, J. Deep Unsupervised Domain Adaptation: A Review of Recent Advances and Perspectives. APSIPA Trans. Signal Inf. Process. 2022, 11, e25.

- Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015.

- Huang, J.; Guan, D.; Xiao, A.; Lu, S. Model Adaptation: Historical Contrastive Learning for Unsupervised Domain Adaptation without Source Data. Adv. Neural Inf. Process. Syst. 2021, 34, 3635–3649.

- Zhu, J.; Guo, Y.; Sun, G.; Yang, L.; Deng, M.; Chen, J. Unsupervised domain adaptation semantic segmentation of high-resolution remote sensing imagery with invariant domain-level prototype memory. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5603518.

- Tsai, Y.-H.; Hung, W.-C.; Schulter, S.; Sohn, K.; Yang, M.-H.; Chandraker, M. Learning to adapt structured output space for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7472–7481.

- Wang, K.; Kim, D.; Feris, R.; Betke, M. CDAC: Cross-domain attention consistency in transformer for domain adaptive semantic segmentation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 11519–11529.

- Yang, Y.; Chen, Q.; Liu, Q. A dual-channel network for cross-domain one-shot semantic segmentation via adversarial learning. Knowl.-Based Syst. 2023, 275, 110698.

- Lin, Z.; Wang, Z.; Chen, H.; Ma, X.; Xie, C.; Xing, W.; Zhao, L.; Song, W. Image Style Transfer Algorithm Based on Semantic Segmentation. IEEE Access 2021, 9, 54518–54529.

- Ettedgui, S.; Abu-Hussein, S.; Giryes, R. ProCST: Boosting Semantic Segmentation using Progressive Cyclic Style-Transfer. arXiv 2022, arXiv:2204.11891.

- Jing, Y.; Yang, Y.; Feng, Z.; Ye, J.; Yu, Y.; Song, M. Neural style transfer: A review. IEEE Trans. Vis. Comput. Graph. 2019, 26, 3365–3385.

- Madokoro, H.; Takahashi, K.; Yamamoto, S.; Nix, S.; Chiyonobu, S.; Saruta, K.; Saito, T.K.; Nishimura, Y.; Sato, K. Semantic Segmentation of Agricultural Images Based on Style Transfer Using Conditional and Unconditional Generative Adversarial Networks. Appl. Sci. 2022, 12, 7785.

- Collins, E.; Bala, R.; Price, B.; Susstrunk, S. Editing in Style: Uncovering the Local Semantics of GANs. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5770–5779.

- Li, P.; Yang, M. Semantic GAN: Application for Cross-Domain Image Style Transfer. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 910–915.

- Ren, J.; Xia, F. Brain-inspired Artificial Intelligence: A Comprehensive Review. arXiv 2024, arXiv:2408.14811.

- Ahmad, A.S.; Sumari, A.D.W. Cognitive artificial intelligence: Brain-inspired intelligent computation in artificial intelligence. In Proceedings of the 2017 Computing Conference, London, UK, 18–20 July 2017; pp. 135–141.

- Zeng, Y.; Zhao, D.; Zhao, F.; Shen, G.; Dong, Y.; Lu, E.; Zhang, Q.; Sun, Y.; Liang, Q.; Zhao, Y.; et al. BrainCog: A spiking neural network based, brain-inspired cognitive intelligence engine for brain-inspired AI and brain simulation. Patterns 2022, 4, 100789.

- Lin, C.; Pang, X.; Hu, Y. Bio-inspired multi-level interactive contour detection network. Digit. Signal Process. 2023, 141, 104155.

- Huang, S.; Howard, C.M.; Hovhannisyan, M.; Ritchey, M.; Cabeza, R.; Davis, S.W. Hippocampal Functions Modulate Transfer-Appropriate Cortical Representations Supporting Subsequent Memory. J. Neurosci. 2023, 44, e1135232023.

- Reid, A.T.; Bzdok, D.; Langner, R.; Fox, P.T.; Laird, A.R.; Amunts, K.; Eickhoff, S.B.; Eickhoff, C.R. Multimodal connectivity mapping of the human left anterior and posterior lateral prefrontal cortex. Brain Struct. Funct. 2016, 221, 2589–2605.

- Lim, J.; Kim, Y. Cross-Domain Semantic Segmentation on Inconsistent Taxonomy using VLMs. In Computer Vision–ECCV 2024. ECCV 2024; Lecture Notes in Computer Science; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; Volume 15123, pp. 18–35.

- Zhou, Q.; Feng, Z.; Gu, Q.; Cheng, G.; Lu, X.; Shi, J.; Ma, L. Uncertainty-Aware Consistency Regularization for Cross-Domain Semantic Segmentation. Comput. Vis. Image Underst. 2020, 221, 103448.

- Li, Y.; Shi, T.; Zhang, Y.; Chen, W.; Wang, Z.; Li, H. Learning deep semantic segmentation network under multiple weakly-supervised constraints for cross-domain remote sensing image semantic segmentation. ISPRS J. Photogramm. Remote Sens. 2021, 175, 20–33.

- Xu, Y.; He, F.; Du, B.; Tao, D.; Zhang, L. Self-Ensembling GAN for Cross-Domain Semantic Segmentation. IEEE Trans. Multimed. 2023, 25, 7837–7850.

- He, L.; Todorovic, S. Attention Decomposition for Cross-Domain Semantic Segmentation. In Computer Vision–ECCV 2024. ECCV 2024; Lecture Notes in Computer Science; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; Volume 15072, pp. 414–431.

- Luo, X.; Chen, W.; Liang, Z.; Yang, L.; Wang, S.; Li, C. Crots: Cross-Domain Teacher–Student Learning for Source-Free Domain Adaptive Semantic Segmentation. Int. J. Comput. Vis. 2024, 132, 20–39.

- Ren, D.; Wang, S.; Zhang, Z.; Yang, W.; Ren, M.; Zhang, H. Unsupervised cross domain semantic segmentation with mutual refinement and information distillation. Neurocomputing 2024, 586, 127641.

- Wang, M.; Liu, Y.; Yuan, J.; Wang, S.; Wang, Z.; Wang, W. Inter-Class and Inter-Domain Semantic Augmentation for Domain Generalization. IEEE Trans. Image Process. 2024, 33, 1338–1347.

- Zhao, Q.; Lyu, S.; Zhao, H.; Liu, B.; Chen, L.; Cheng, G. Self-training guided disentangled adaptation for cross-domain remote sensing image semantic segmentation. Int. J. Appl. Earth Obs. Geoinf. 2024, 127, 103646.

- Ni, H.; Liu, Q.; Guan, H.; Tang, H.; Chanussot, J. Category-level assignment for cross-domain semantic segmentation in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5608416.

- Li, Y.; Shi, T.; Zhang, Y.; Ma, J. SPGAN-DA: Semantic-preserved generative adversarial network for domain adaptive remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5406717.

- Psychogyios, K.; Leligou, H.C.; Melissari, F.; Bourou, S.; Anastasakis, Z.; Zahariadis, T. SAMStyler: Enhancing Visual Creativity with Neural Style Transfer and Segment Anything Model (SAM). IEEE Access 2023, 11, 100256–100267.

- Ye, W.; Liu, C.; Chen, Y.; Liu, Y.; Liu, C.; Zhou, H. Multi-style transfer and fusion of image’s regions based on attention mechanism and instance segmentation. Signal Process. Image Commun. 2023, 110, 116871.

- Zhu, S.; Tian, Y. Shape robustness in style enhanced cross domain semantic segmentation. Pattern Recognit. 2023, 135, 109143.

- Toldo, M.; Michieli, U.; Zanuttigh, P. Learning with Style: Continual Semantic Segmentation Across Tasks and Domains. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 7434–7450.

- Li, T.; Roy, S.; Zhou, H.; Lu, H.; Lathuilière, S. Contrast, Stylize and Adapt: Unsupervised Contrastive Learning Framework for Domain Adaptive Semantic Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 4869–4879.

- Wang, X.; Chen, H.; Sun, P.; Li, J.; Zhang, A.; Liu, W.; Jiang, N. AdvST: Generating Unrestricted Adversarial Images via Style Transfer. IEEE Trans. Multimed. 2024, 26, 4846–4858.

- Wang, X.; Wang, H.; Jing, Y.; Yang, X.; Chu, J. A Bio-Inspired Visual Perception Transformer for Cross-Domain Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens. 2024, 16, 1514.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Klambauer, G.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Nash Equilibrium. In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4–9, 2017, Long Beach, CA, USA; Neural Information Processing Systems Foundation, Inc. (NeurIPS): La Jolla, CA, USA, 2018.

- Yan, L.; Fan, B.; Liu, H.; Huo, C.; Xiang, S.; Pan, C. Triplet Adversarial Domain Adaptation for Pixel-Level Classification of VHR Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3558–3573.

- Saito, K.; Watanabe, K.; Ushiku, Y.; Harada, T. Maximum Classifier Discrepancy for Unsupervised Domain Adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3723–3732.

- Zhao, Y.; Guo, P.; Sun, Z.; Chen, X.; Gao, H. ResiDualGAN: Resize-Residual DualGAN for Cross-Domain Remote Sensing Images Semantic Segmentation. Remote Sens. 2023, 15, 1428.

- Hoyer, L.; Dai, D.; Van Gool, L. DAFormer: Improving Network Architectures and Training Strategies for Domain-Adaptive Semantic Segmentation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 9914–9925.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2021; pp. 205–218.

- Hoyer, L.; Dai, D.; Van Gool, L. HRDA: Context-Aware High-Resolution Domain-Adaptive Semantic Segmentation. In Computer Vision–ECCV 2022. ECCV 2022; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13690.

- Ma, X.; Zhang, X.; Pun, M. RS3Mamba: Visual State Space Model for Remote Sensing Image Semantic Segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6011405.

- Xue, Y.; Tian, X.; Zhang, F.; Wen, X.; Gao, Z.; Chen, S. CACP: Covariance-Aware Cross-Domain Prototypes for Domain Adaptive Semantic Segmentation. IEEE Trans. Multimed. 2025, 1–12.

- Dong, W.; Liang, Z.; Wang, L.; Tian, G.; Long, Q. Unsupervised domain adaptation segmentation algorithm with cross-domain data augmentation and category contrast. Neurocomputing 2025, 623, 129393.

- Niu, H.; Xie, L.; Lin, J.; Zhang, S. Exploring Semantic Consistency and Style Diversity for Domain Generalized Semantic Segmentation. Proc. AAAI Conf. Artif. Intell. 2025, 39, 6245–6253.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251.

- Park, T.; Efros, A.A.; Zhang, R.; Zhu, J.-Y. Contrastive Learning for Unpaired Image-to-Image Translation. In Computer Vision–ECCV 2020. ECCV 2020; Lecture Notes in Computer Science; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; Volume 12354.

- Kim, B.; Kwon, G.; Kim, K.; Ye, J.-C. Unpaired Image-to-Image Translation via Neural Schrödinger Bridge. arXiv 2023, arXiv:2305.15086.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).