Elimination of Irregular Boundaries and Seams for UAV Image Stitching with a Diffusion Model

Abstract

1. Introduction

2. Related Work

2.1. Image Rectangling and Seam Cutting

2.2. Denoising Diffusion Probabilistic Models

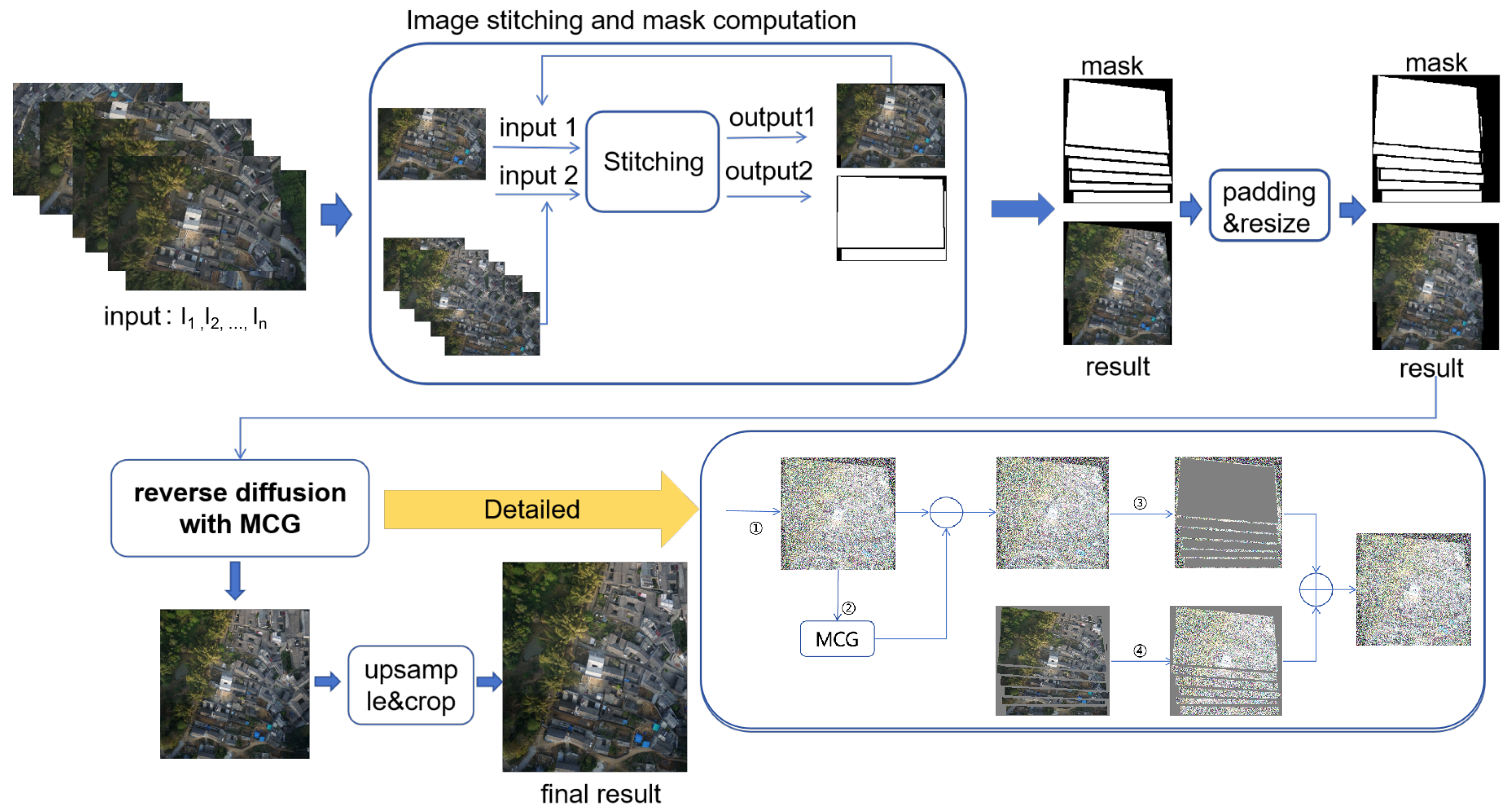

3. Methods

The method consists of three steps:

- (1) Compute masks for the irregular boundaries and stitching seams that arise during image stitching, which identifies the regions to be repaired;

- (2) Adaptively resize the input and output images to match the input and output dimensions of the diffusion model;

- (3) Run the reverse diffusion process of the diffusion model on the masked stitched image to repair the masked regions.

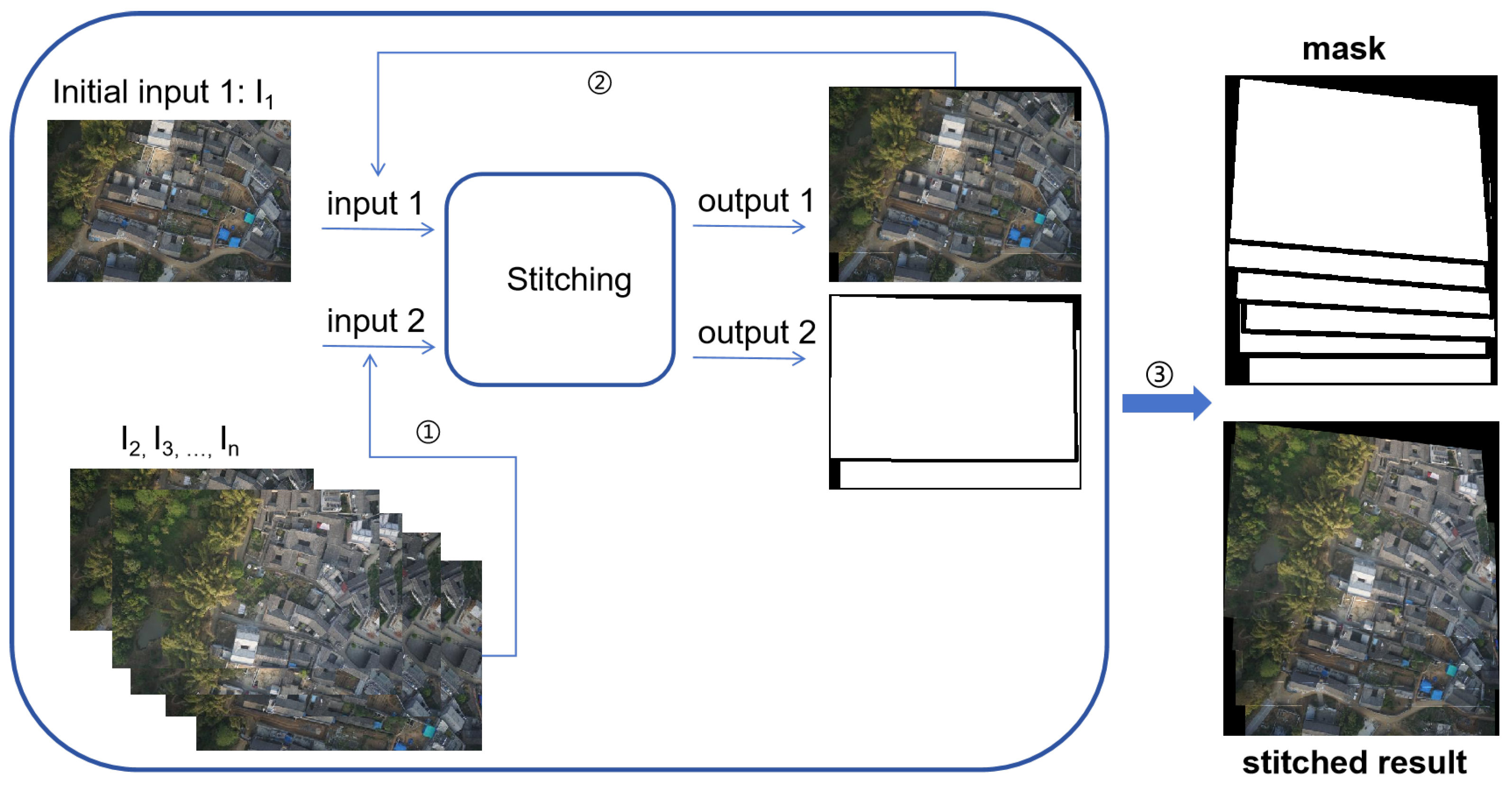

3.1. UAV Image Stitching and Mask Computation

3.1.1. UAV Image Alignment

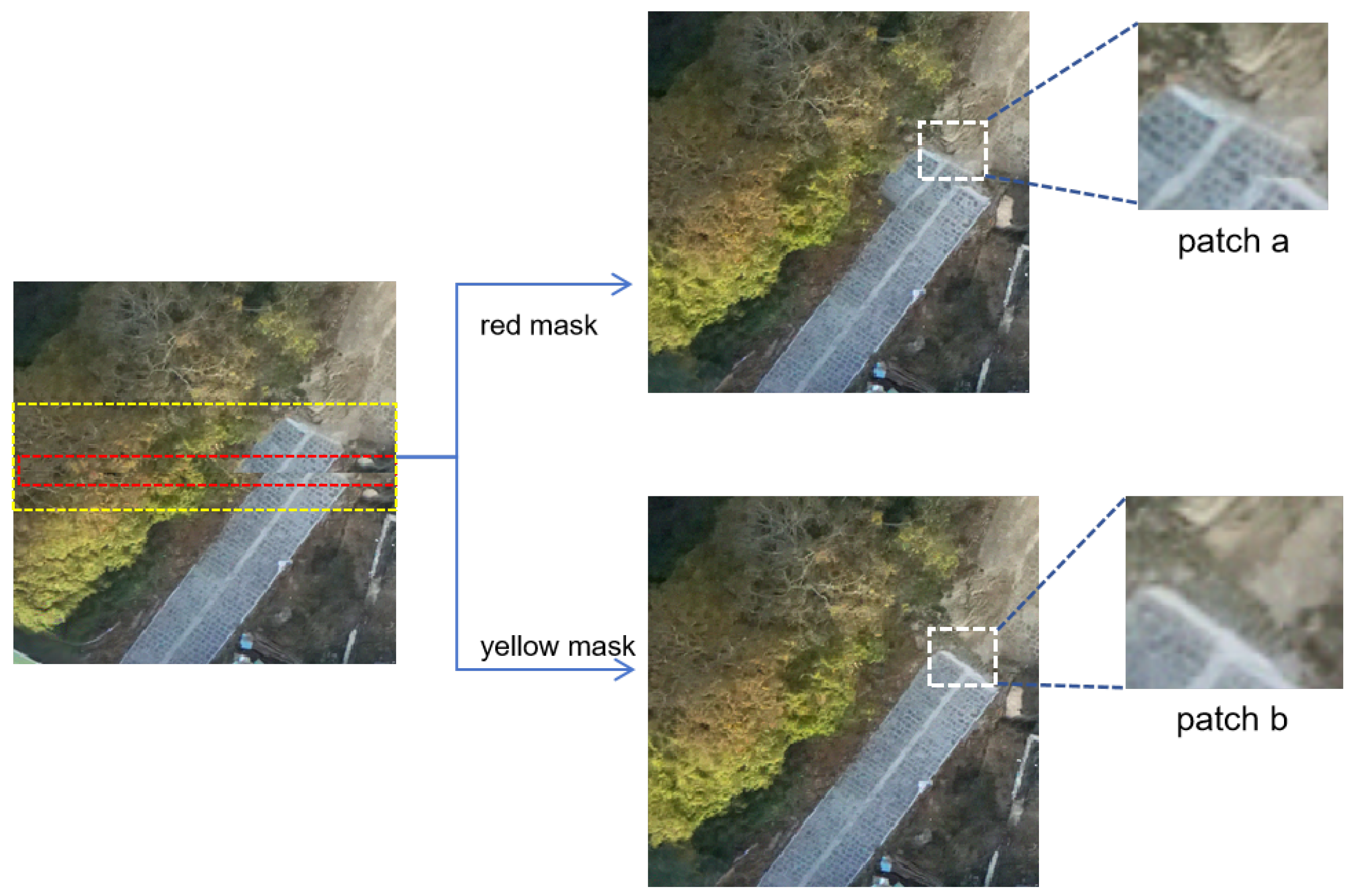
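The text of this subsection is not preserved. As a rough illustration only: the Abbreviations list names SIFT features and HNSW nearest-neighbour search, which suggests a feature-matching front end, and a common way to turn the resulting point matches into an alignment is to fit a homography with the normalized direct linear transform (DLT) inside RANSAC. The NumPy sketch below covers only that geometric step. It assumes matched point arrays `src` and `dst` are already available, and a single global homography may be simpler than the warp actually used for the UAV mosaics. The function names and the `iters`/`thresh` values are illustrative choices, not taken from the paper.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: centre the points and scale their mean distance to sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - mean, axis=1))
    t = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    return (pts - mean) * scale, t


def dlt_homography(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from >= 4 point pairs (normalized DLT)."""
    src_n, t_src = _normalize(src)
    dst_n, t_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The homography is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = np.linalg.inv(t_dst) @ vt[-1].reshape(3, 3) @ t_src  # undo the normalization
    return h / h[2, 2]


def project(h, pts):
    """Apply homography h to an (N, 2) array of points."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return pts_h[:, :2] / pts_h[:, 2:3]


def ransac_homography(src, dst, iters=500, thresh=2.0, seed=0):
    """Fit H to random 4-point samples, keep the largest inlier set, then refit on it."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=4, replace=False)
        h = dlt_homography(src[idx], dst[idx])
        err = np.linalg.norm(project(h, src) - dst, axis=1)  # reprojection error in pixels
        inliers = err < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return dlt_homography(src[best], dst[best]), best
```

The returned inlier mask doubles as a filter for mismatched feature pairs before any warping is done.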

3.1.2. Computation of Masks for Stitched Images

| Algorithm 1 Stitching and Mask Calculation |
|---|
| Input: UAV images , and its corresponding blank mask |
| Output: Stitched image, seam mask, and irregular-boundary mask |
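Only the header of Algorithm 1 survives. The following minimal NumPy sketch shows how the two masks could be derived. It assumes every image has already been warped onto a common canvas and that a seam-cutting step has assigned each covered canvas pixel to one source image, stored in a hypothetical label map `labels` with -1 for pixels that no image covers. Uncovered pixels then form the irregular-boundary mask, and pixels whose covered 4-neighbours carry a different label, widened by `band` pixels, form the seam mask. The label-map representation, the function name and the band width are assumptions rather than details from Algorithm 1.

```python
import numpy as np


def compute_masks(labels, band=2):
    """Return (boundary_mask, seam_mask) as boolean arrays.

    labels: (H, W) int array; -1 = canvas pixel covered by no image,
            k >= 0 = index of the source image chosen for that pixel.
    band:   number of 4-neighbour dilation steps used to widen the seam.
    """
    boundary = labels < 0          # irregular boundary: uncovered canvas pixels
    valid = ~boundary
    seam = np.zeros_like(boundary)
    # A seam pixel has a covered 4-neighbour with a different source label.
    diff_h = (labels[:, 1:] != labels[:, :-1]) & valid[:, 1:] & valid[:, :-1]
    seam[:, 1:] |= diff_h
    seam[:, :-1] |= diff_h
    diff_v = (labels[1:, :] != labels[:-1, :]) & valid[1:, :] & valid[:-1, :]
    seam[1:, :] |= diff_v
    seam[:-1, :] |= diff_v
    # Widen the seam into a band so the repair covers visible transition artefacts.
    for _ in range(band):
        grown = seam.copy()
        grown[1:, :] |= seam[:-1, :]
        grown[:-1, :] |= seam[1:, :]
        grown[:, 1:] |= seam[:, :-1]
        grown[:, :-1] |= seam[:, 1:]
        seam = grown
    seam &= valid                  # uncovered pixels belong to the boundary mask only
    return boundary, seam


# Usage: two images side by side on a canvas with an uncovered border.
labels = np.full((6, 8), -1)
labels[1:5, 0:4] = 0
labels[1:5, 4:7] = 1
boundary_mask, seam_mask = compute_masks(labels, band=1)
```

Keeping the two masks separate leaves room to treat boundary filling and seam smoothing differently, although they can simply be combined with `|` before repair.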

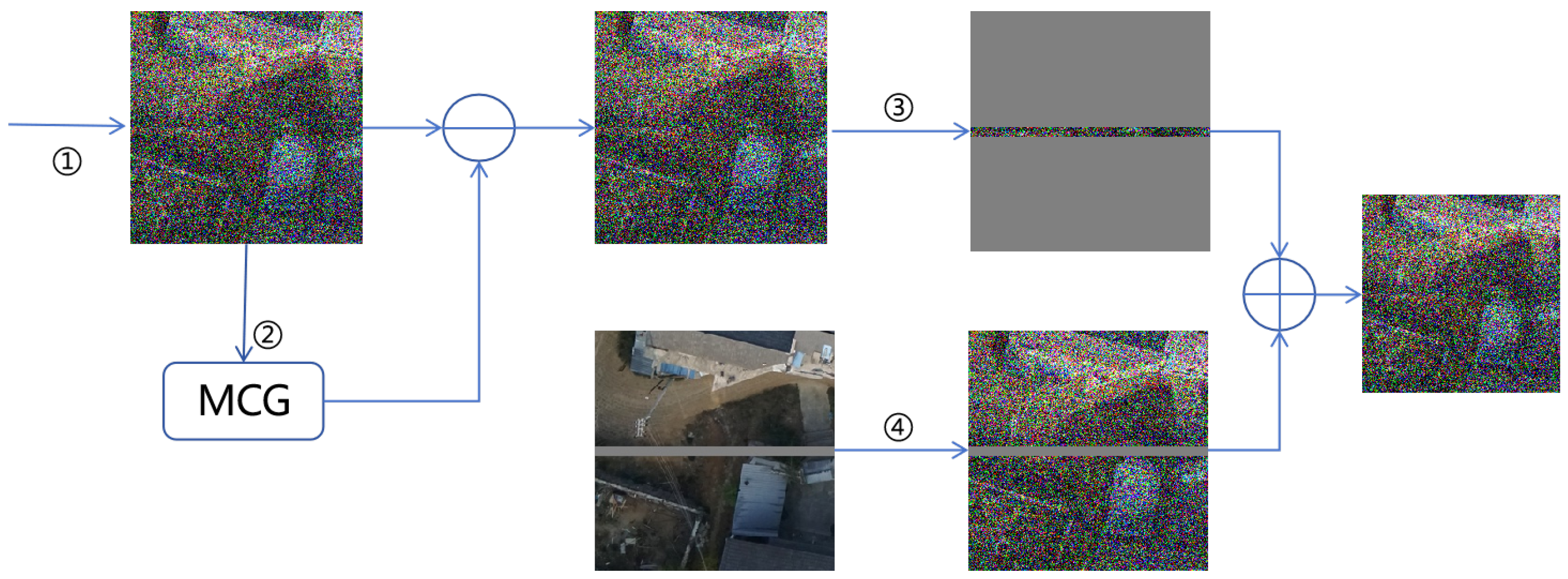

3.2. Repairing Irregular Boundaries and Stitching Seams with a Diffusion Model
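The reverse-diffusion repair in step (3) of the method overview can be sketched with replacement-based DDPM inpainting. At every reverse step the masked pixels come from the model's denoising update, while the unmasked pixels are replaced by the original image forward-noised to the same noise level, so the known content keeps steering what is generated inside the mask. This is a generic technique that follows the DDPM update of Ho et al. with the posterior variance set to β_t, and it may differ from the authors' sampler. The linear β schedule, the function names and the `eps_model(x, t)` noise-prediction interface are assumptions; `eps_model` stands in for a trained network.

```python
import numpy as np


def linear_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule and cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    betas = np.linspace(beta_start, beta_end, T)
    return betas, np.cumprod(1.0 - betas)


def masked_ddpm_inpaint(x0, mask, eps_model, T=1000, seed=0):
    """Repair pixels where mask == 1 and keep pixels where mask == 0.

    x0:   stitched image scaled to the model's range (e.g. [-1, 1]), any shape.
    mask: float array of the same shape, 1 inside the seam/boundary masks.
    eps_model(x, t): predicts the noise in x at step t (a trained network in practice).
    """
    rng = np.random.default_rng(seed)
    betas, abar = linear_schedule(T)
    alphas = 1.0 - betas
    x = rng.standard_normal(x0.shape)  # start from pure noise
    for t in range(T - 1, -1, -1):
        eps = eps_model(x, t)
        # DDPM posterior mean: (x_t - beta_t / sqrt(1 - abar_t) * eps) / sqrt(alpha_t)
        mean = (x - betas[t] / np.sqrt(1.0 - abar[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            unknown = mean + np.sqrt(betas[t]) * rng.standard_normal(x0.shape)
            # Forward-noise the known pixels to the same level as the next iterate.
            known = (np.sqrt(abar[t - 1]) * x0
                     + np.sqrt(1.0 - abar[t - 1]) * rng.standard_normal(x0.shape))
        else:
            unknown, known = mean, x0  # final step: no noise, original pixels kept
        x = mask * unknown + (1.0 - mask) * known
    return x
```

Because the last step copies `x0` into the unmasked region, pixels outside the seam and boundary masks are returned unchanged; only the masked regions are synthesized.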

3.3. Image Dimension Adaptation
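Step (2) of the method overview adapts image sizes to the diffusion model. One way to realize this, sketched below under stated assumptions, is to resample the stitched image and its mask to the model's fixed input size, repair at that size, resample the result back to the original resolution, and paste back only the masked pixels. Section 4.2.2 covers LIIF training, which suggests LIIF performs this arbitrary-scale resampling in the paper; here plain bilinear interpolation stands in for LIIF. Single-channel 2-D arrays, `model_size=256`, the `repair_fn(image, mask)` callback and the function names are all hypothetical.

```python
import numpy as np


def bilinear_resize(img, out_h, out_w):
    """Align-corners bilinear resize of a 2-D array (a simple stand-in for LIIF)."""
    in_h, in_w = img.shape
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]
    wx = (xs - x0)[None, :]
    top = img[np.ix_(y0, x0)] * (1 - wx) + img[np.ix_(y0, x1)] * wx
    bot = img[np.ix_(y1, x0)] * (1 - wx) + img[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bot * wy


def repair_at_model_size(image, mask, repair_fn, model_size=256):
    """Repair `image` inside boolean `mask` with a model that only accepts model_size x model_size."""
    h, w = image.shape
    mask = mask.astype(bool)
    small = bilinear_resize(image, model_size, model_size)
    # Grow the mask conservatively: any small-grid pixel touched by the mask is repaired.
    small_mask = bilinear_resize(mask.astype(float), model_size, model_size) > 0
    repaired = bilinear_resize(repair_fn(small, small_mask), h, w)
    # Keep original full-resolution pixels outside the mask.
    return np.where(mask, repaired, image)
```

Pasting back only masked pixels means the down- and up-sampling never degrades the parts of the mosaic that did not need repair.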

4. Experiments

4.1. Data Preparation

4.2. Model Training Details

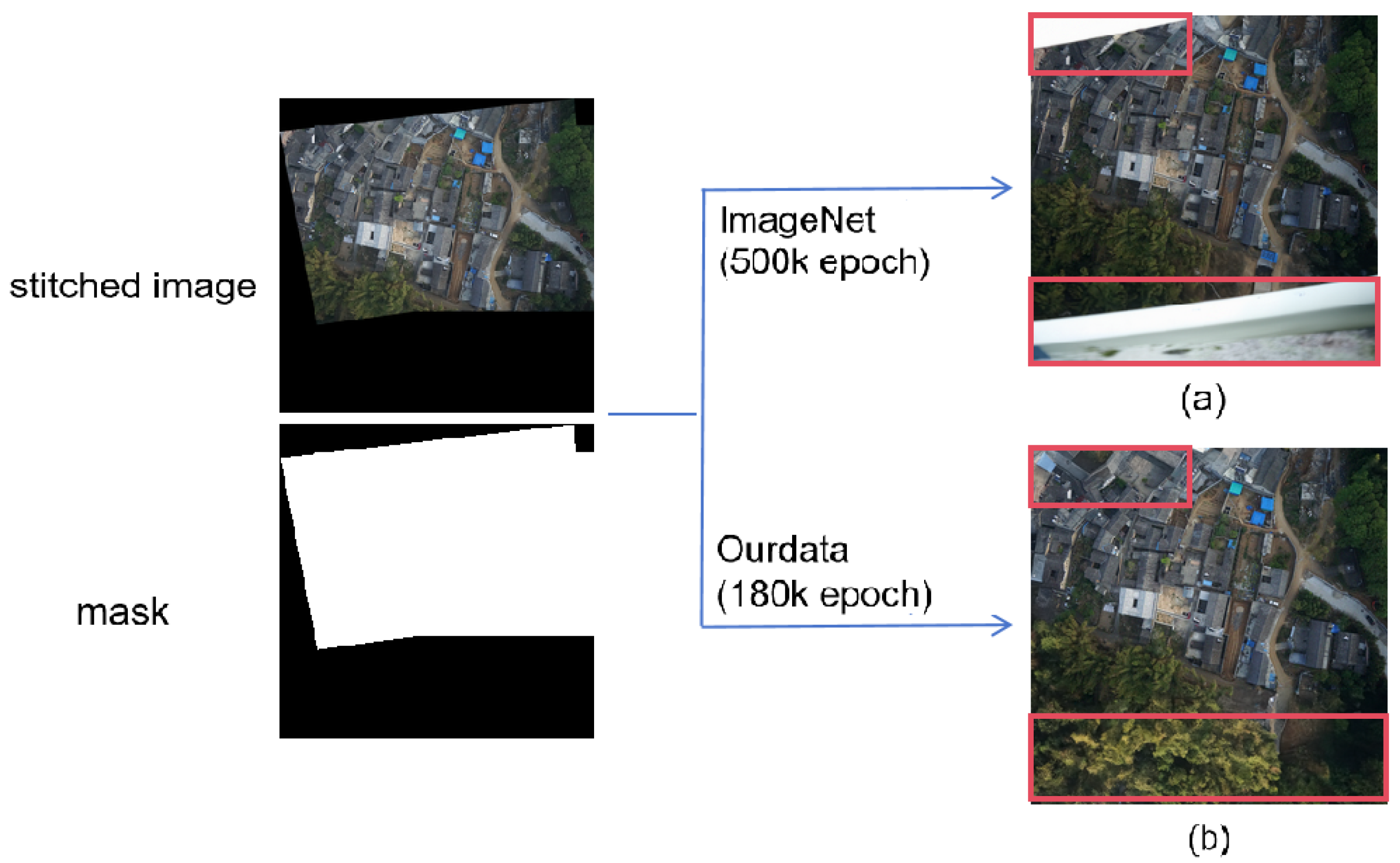

4.2.1. Diffusion Model Training

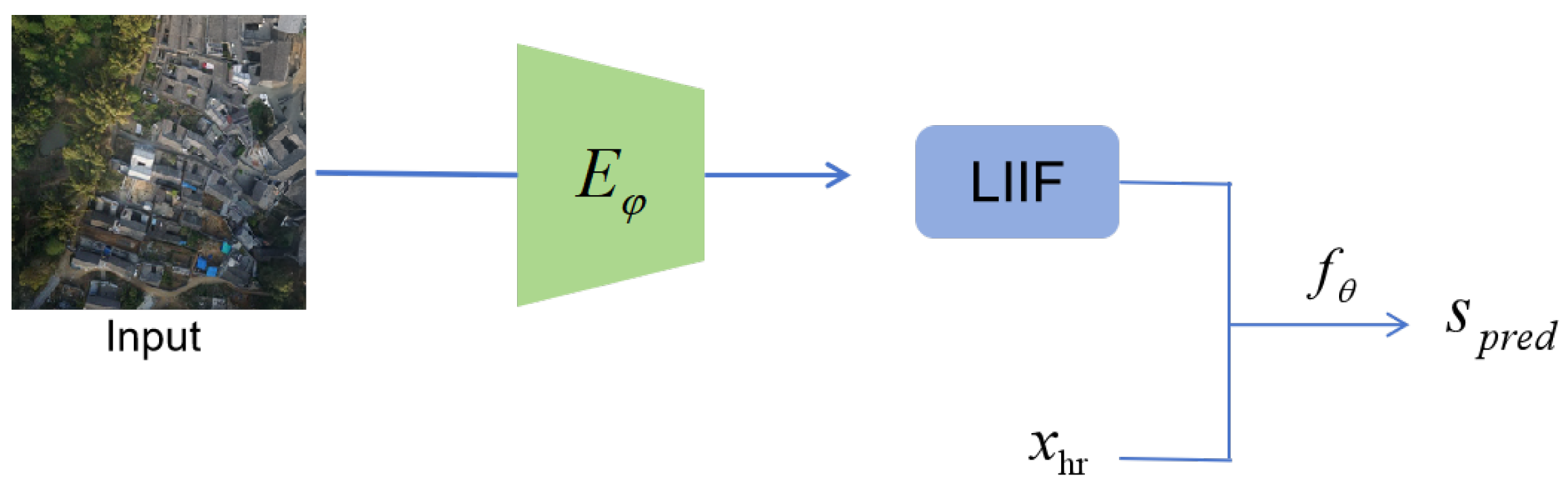
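The training details of this subsection are not preserved. If the model follows the standard DDPM formulation of Ho et al., which the Abbreviations list suggests, training minimizes the simplified noise-prediction objective below. Here $\epsilon_\theta$ is the denoising network, $\beta_t$ the noise schedule, $\bar\alpha_t=\prod_{s=1}^{t}(1-\beta_s)$, and $t$ is drawn uniformly from $\{1,\dots,T\}$. Whether the authors used exactly this objective is an assumption.

```latex
\begin{aligned}
x_t &= \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(0, I),\\
L_{\text{simple}}(\theta) &= \mathbb{E}_{t,\,x_0,\,\epsilon}\!\left[\left\lVert \epsilon - \epsilon_\theta(x_t, t) \right\rVert^2\right].
\end{aligned}
```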

4.2.2. LIIF Training

4.3. UAV Image Stitching Results and Analysis

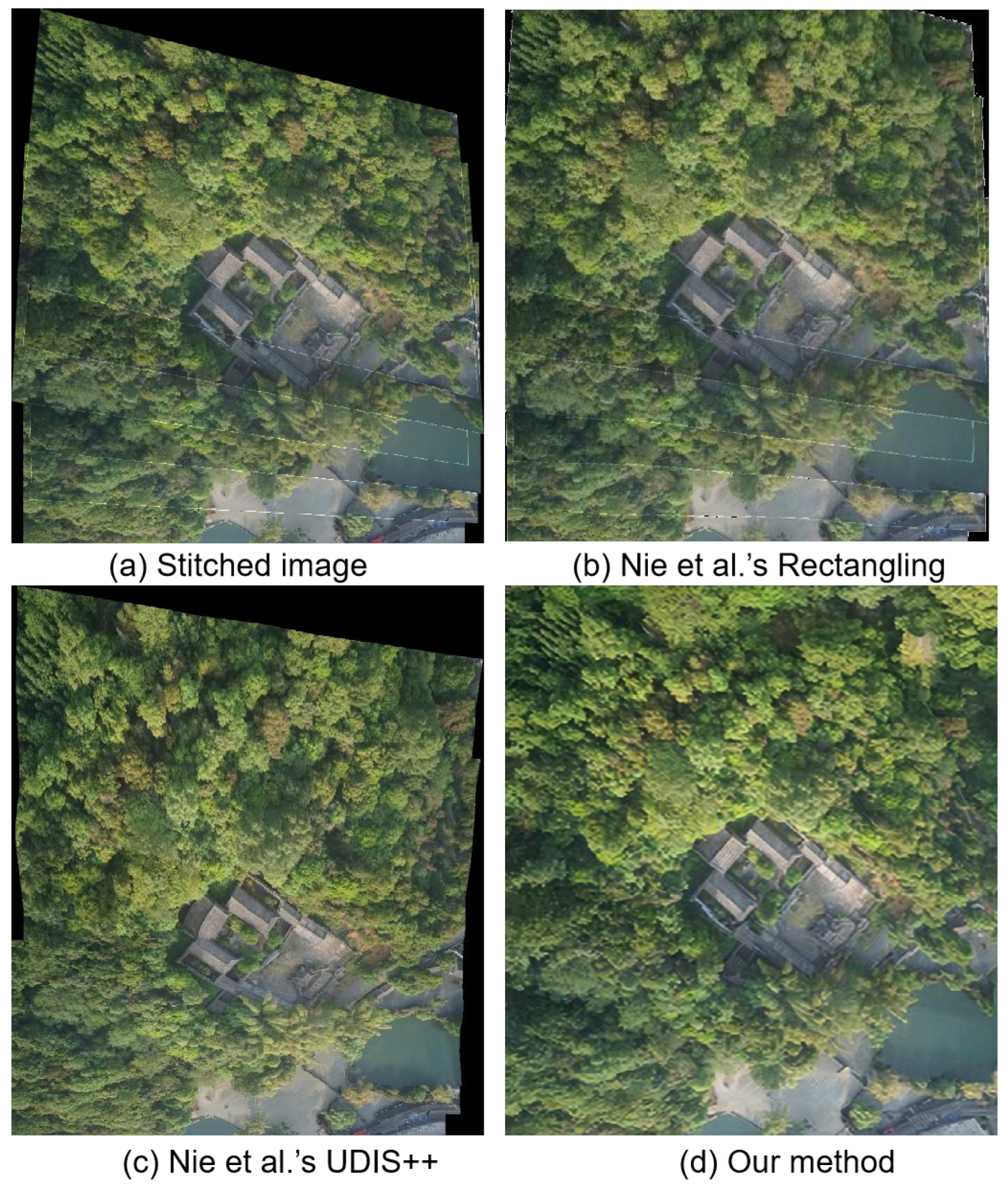

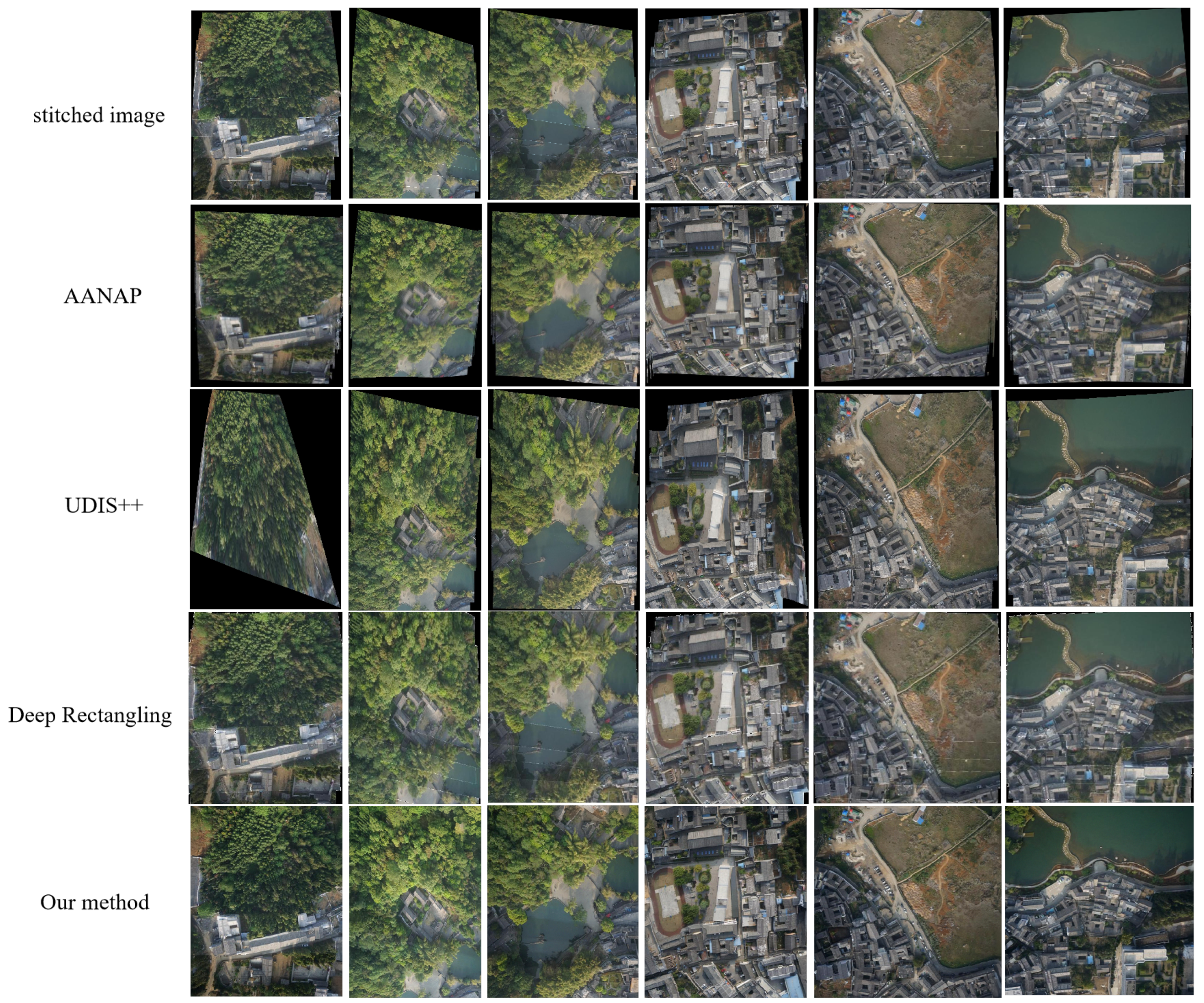

4.3.1. Overall Results Comparison

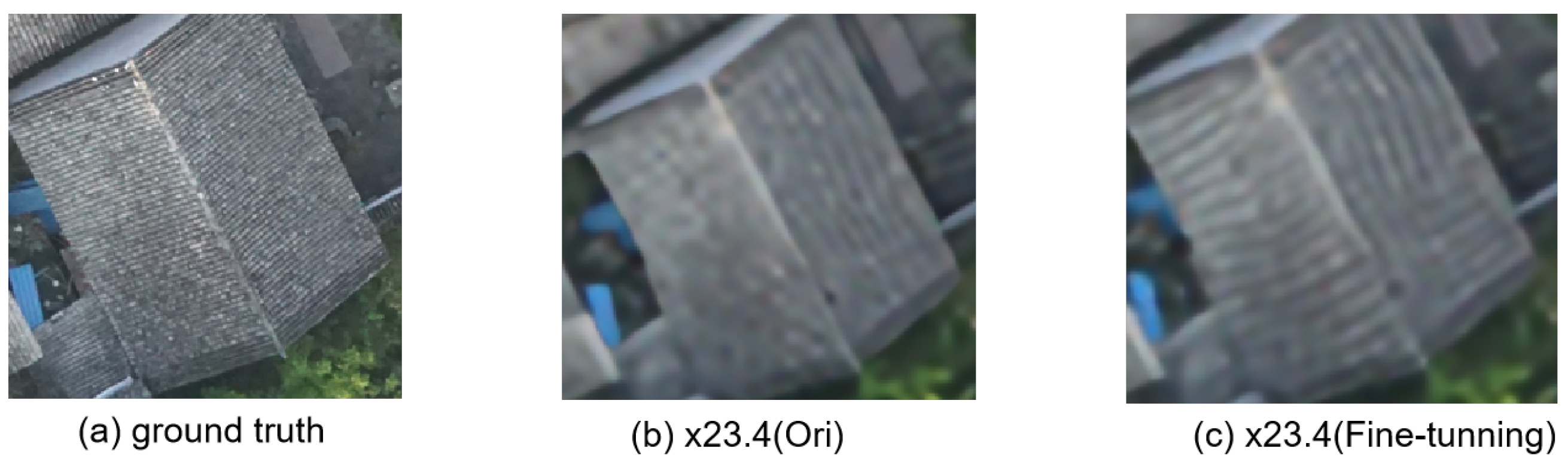

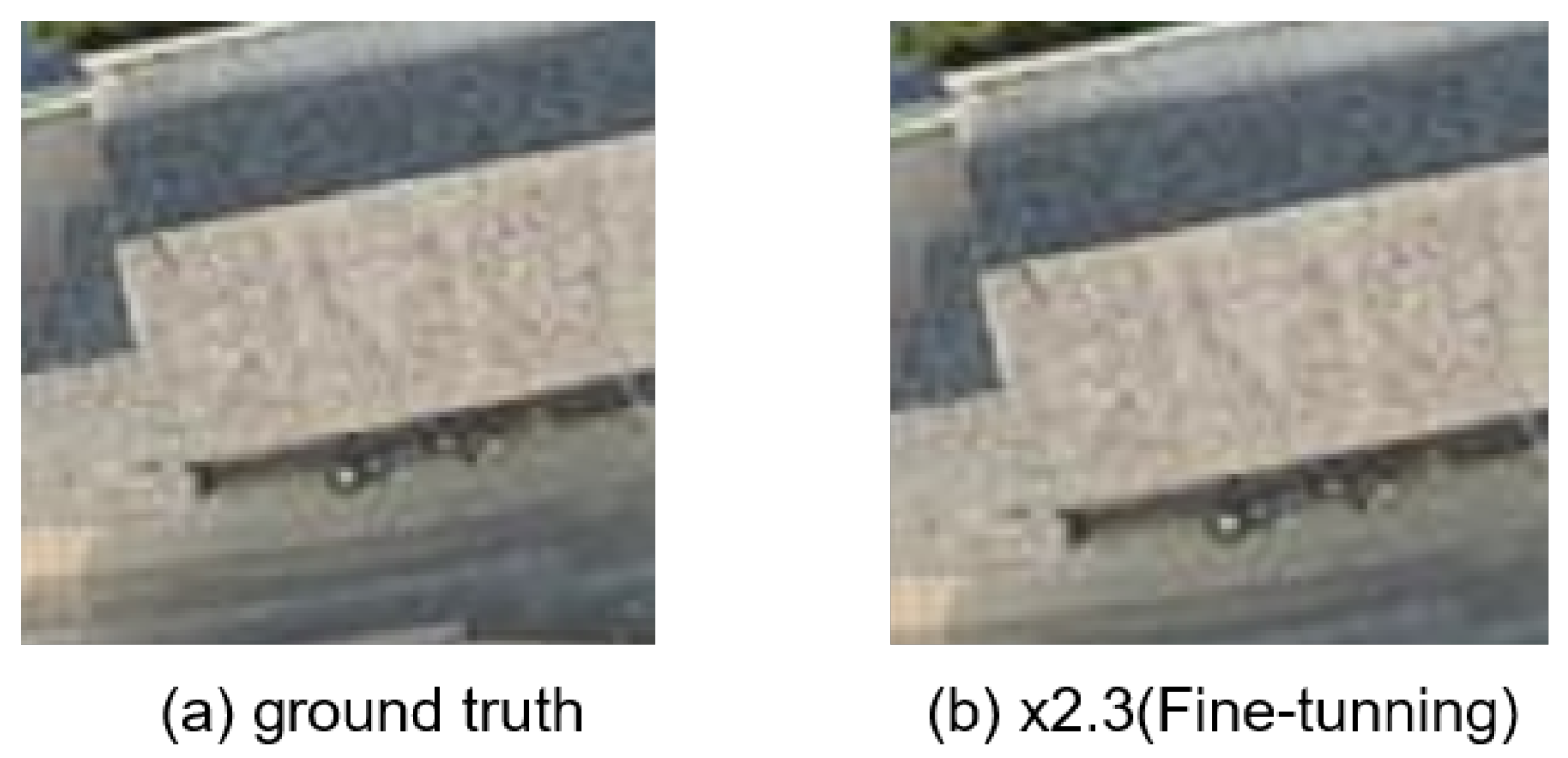

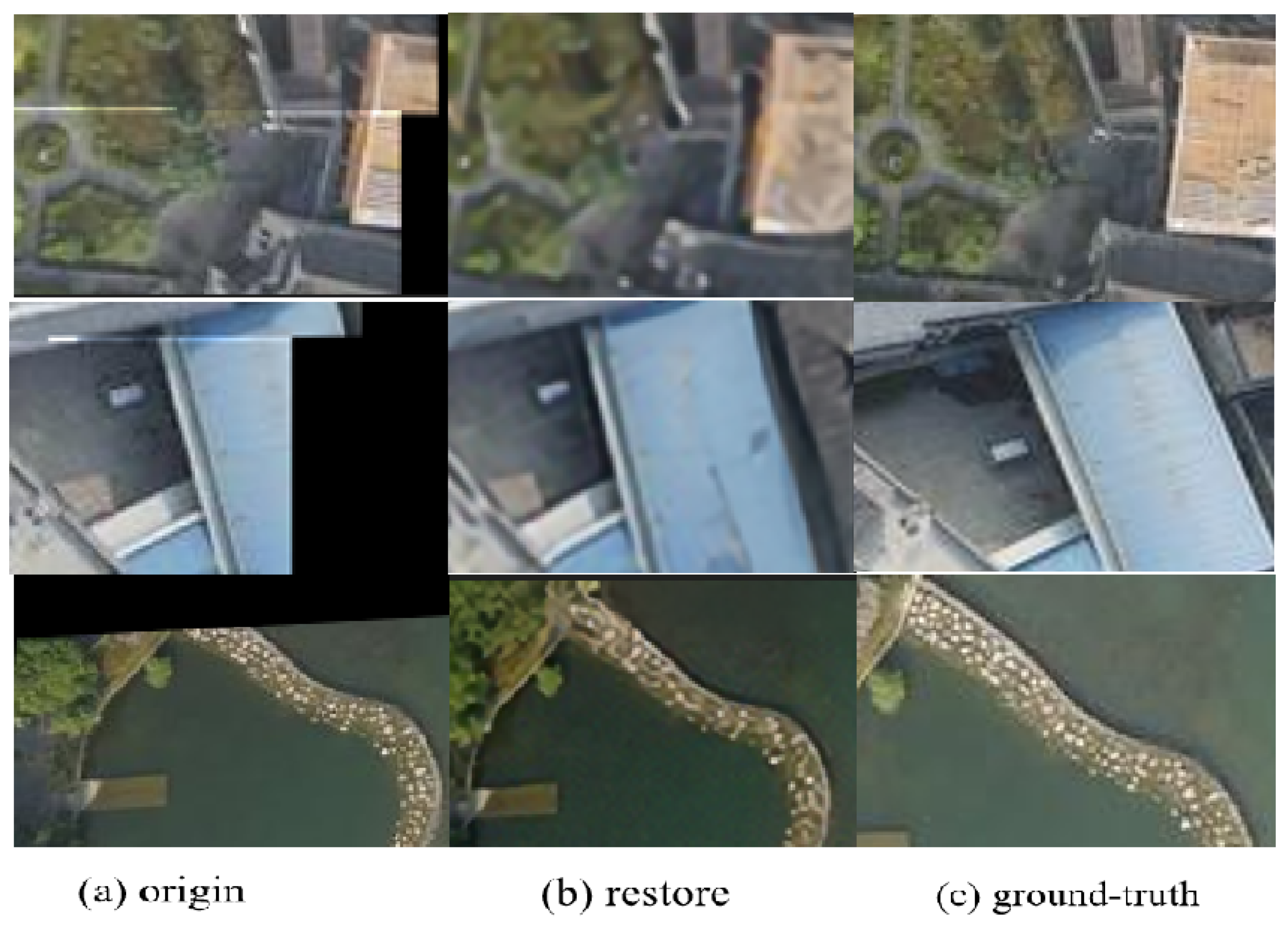

4.3.2. Seam Repair Details

4.4. Quantitative Evaluation

4.5. Ablation Studies

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LIIF | Local Implicit Image Function |
| DDPM | Denoising Diffusion Probabilistic Models |
| HNSW | Hierarchical Navigable Small World |
| SIFT | Scale-Invariant Feature Transform |
| UAV | Unmanned Aerial Vehicle |
| GAN | Generative Adversarial Network |


| Method | SSIM↑ | PSNR (dB)↑ |
|---|---|---|
| Original | 0.361 | 12.724 |
| Restored | 0.434 | 17.011 |
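For reference, the PSNR values in the table above follow the standard definition $\mathrm{PSNR} = 10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, in decibels. The evaluation settings (data range, colour handling, which pixels are compared) are not recoverable from this section, so the sketch assumes 8-bit images with `max_value=255`.

```python
import numpy as np


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

A uniform error of one grey level on 8-bit data gives an MSE of 1 and therefore 20·log10(255), about 48.13 dB.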

| Method | PaQ-2-PiQ↑ |
|---|---|
| Stitched image | 0.741 |
| AANAP | 0.684 |
| UDIS++ | 0.734 |
| Deep Rectangling | 0.743 |
| Ours | 0.766 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Luo, Y.; Wang, J.; Tang, H.; Tang, Y.; Li, J. Elimination of Irregular Boundaries and Seams for UAV Image Stitching with a Diffusion Model. Remote Sens. 2024, 16, 1483. https://doi.org/10.3390/rs16091483
