1. Introduction

Bistatic SAR is a well-established technique, both theoretically and experimentally, and excellent references are widely available, such as [1,2]. Bistatic SAR interferometry has demonstrated its potential in extracting interferograms with minimal temporal decorrelation noise and atmospheric phase screen suppression [3], which are crucial for applications like Digital Elevation Models (DEM) and ground motion measurements. In the bistatic SAR applications mentioned, there must be coherence between the illuminator (transmitter) and the bistatic receiver, and thus they are located as close as possible to each other.

Wide-angle bistatic imaging offers advantages like the removal of corner reflector reflectivity and can improve the recovery of the object characteristics because, according to diffraction tomography [4,5], the recovered wavenumbers differ from those obtained using monostatic SARs. However, as there is no coherence with the wavenumbers recovered by the monostatic SAR, multi-pass interferometry is mandatory, thus leading to temporal decorrelation issues. Finally, wide-angle bistatic SAR offers new Line of Sight (LOS) directions which, when combined with the monostatic illuminator, might enable a full 3D recovery of slow ground motions [6], including the along-track one, which is important for seismologists.

Wide-angle bistatic imaging was studied in the evaluation of a companion to the Argentinean SAOCOM L-band satellite [7]. A mission for a companion to a Sentinel-1 satellite, namely the ESA Harmony mission, was approved for launch [8]. This mission involves Sentinel-1 as illuminator and two companions in various relative locations. Among its many goals, Harmony aims to better identify the North-South (NS) motion of the terrain by positioning two companions 300 km up and down the track. It should be observed that Sentinel-1 and the two companions of the Harmony mission are large satellites, and their position along the orbit is and will be very well defined (the radius of the orbital tube is lower than 100 m and the distance of the companions is controlled to 400 m [8]).

The aim of this paper is to theoretically identify the limits of the volume in which the companion has to be positioned with respect to the illuminator, and to be able to propose much lighter and less controlled receivers. The LOS perturbations are first discussed, emphasizing that only the components of the LOS perturbations that are orthogonal to the LOS will impact the interferometric coherence, as is obvious if the diffraction tomography approach is adopted. Then, only the illumination and reception angles are involved and, together with the azimuth and range resolution, define the obtainable support of common wavenumbers. The fact that the LOS distance change has a very limited impact on interferometry is corroborated by the analyses made.

As the perturbations of the positions of the satellites are easily determined in Cartesian geometry, the paper then introduces the rotation matrix needed to move from Cartesian to LOS-aligned coordinates and simply parameterizes the geometry of the wide-angle bistatic SAR. The layover line is identified and the additional phase shifts due to the height of the scatterer are determined as a function of three baselines (the vertical ones for the receiver and the transmitter and the horizontal one for the receiver). The resolution in range and azimuth is then calculated. Then, the coherence of the distributed scatterers is evaluated. The analysis is extended to the wavenumber domain to identify the reduction of the common spectral support due to the wavenumber shifts. Only two parameters are needed as the initial geometrical analysis proves that the effects of the two vertical baselines can be combined. The bistatic wide-angle case is seen to be more tolerant of baseline changes due to their statistical combinations. In the monostatic case transmitter and receiver baselines coincide, leading to maximal sensitivity. Finally, the use of bistatic SAR for the determination of the location of interferers is analyzed.

1.1. LOS Distance Perturbations

Let us consider the LOS distance

between a target and the transmitter and its perturbations due to their position changes on the three axes. Indexes

refer to the transmitter and

to the target

We expand the expression of

d in a Taylor series of progressive powers of 1/

R to evaluate the distance changes due to the displacements of the transmitter (

) and target (

) and identify the terms dependent on

. Namely, considering only terms of second order in the perturbations, those containing products like

depend on

whereas those containing products like

depend on higher powers of the inverse distance, as indicated by the number in parentheses. Then, the second-order perturbations of interest belong only to the two planes orthogonal to the LOS. We notice here that this is consistent with the planar wave approach, where only the changes of the incidence angles matter, and thus only the components on the plane orthogonal to the LOS. Indicating with

the positions of the transmitter and target (namely in the LOS based coordinates they are

then the approximation of the double difference will be of the type:

Now, indicating with

the 3D vectors of the 2D components of the displacements of the transmitter and receiver orthogonal to their LOS and with

the target displacement on the ground (again a 2D vector in 3D) we have the following formula

not so far from the monostatic one, and also consistent with the wavenumber approach. However, now the baselines positioned in the horizontal plane may count, and they should be considered in the evaluation of the critical baseline.
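The statement that only the displacement components orthogonal to the LOS matter at second order can be checked numerically. The following is a minimal sketch under assumptions that are not taken from the paper: a single transmitter-target pair, hypothetical positions and displacements in metres, and the standard second-order Taylor cross term of a Euclidean distance, which equals minus the scalar product of the two orthogonal components divided by the range. The function names are illustrative only.

```python
import math


def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


def add(p, q):
    return tuple(a + b for a, b in zip(p, q))


def dist(p, q):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def orthogonal_part(v, u):
    """Component of v orthogonal to the unit vector u."""
    s = dot(v, u)
    return tuple(a - s * b for a, b in zip(v, u))


def double_difference(tx, tgt, d_tx, d_tgt):
    """Distance with both displacements, minus each single one, plus none."""
    return (dist(add(tx, d_tx), add(tgt, d_tgt)) - dist(add(tx, d_tx), tgt)
            - dist(tx, add(tgt, d_tgt)) + dist(tx, tgt))


def second_order_prediction(tx, tgt, d_tx, d_tgt):
    """Taylor cross term: -(d_tx_perp . d_tgt_perp) / R."""
    R = dist(tx, tgt)
    u = tuple((a - b) / R for a, b in zip(tx, tgt))  # unit LOS vector
    return -dot(orthogonal_part(d_tx, u), orthogonal_part(d_tgt, u)) / R


# Hypothetical values (metres), chosen only for illustration.
TX = (0.0, 0.0, 619e3)        # transmitter
TGT = (357e3, 0.0, 0.0)       # target on the ground
D_TX = (150.0, 80.0, 120.0)   # transmitter displacement (baseline)
D_TGT = (2.0, 3.0, 0.0)       # target displacement within the cell

if __name__ == "__main__":
    print("exact double difference [m]:", double_difference(TX, TGT, D_TX, D_TGT))
    print("second-order prediction [m]:", second_order_prediction(TX, TGT, D_TX, D_TGT))
```

With these numbers the exact double difference and the orthogonal-component prediction agree to better than one percent, whereas a transmitter displacement directed purely along the LOS leaves a residual several orders of magnitude smaller.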

I notice now and will recall later that moving along and across the resolution cell in the ground plane, changes in will be induced, and thus a linear change of the distance , and therefore a phase plane will be added to the returns. Another phase plane will be added by the changes in the receiver position and the changes in . The sum of the two planes is a third plane. The final fringe frequency will be directed along the gradient of the summed plane, and two different fringe frequencies will be created along the axes on the ground plane, namely that of the orbit direction (y) and its orthogonal (x). As separability applies, a simple calculation will check that the coherence is now the product of the two sinc’s on the two axes.

1.2. LOS Based Coordinate Systems

I now consider how to move from Cartesian to LOS-aligned coordinates and back. For that, I define the direction cosines of the two LOS’s (transmitter, receiver). I establish a Cartesian coordinate system with the origin in the nadir of the transmitter,

z the height,

y the orbit direction and finally

x as the ground range. Let

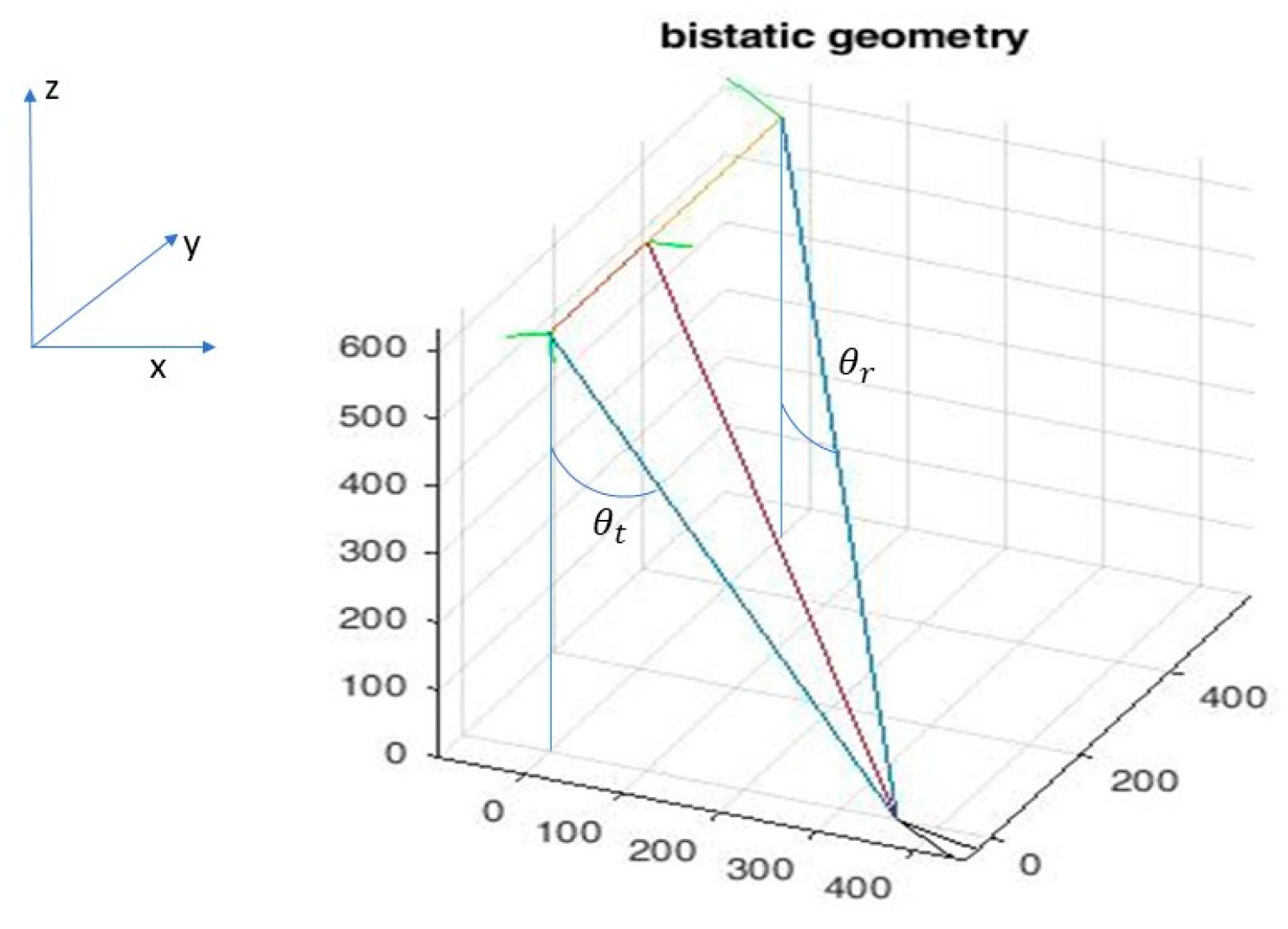

θt, θr be the incidence angles as indicated in

Figure 1.

Hence, we know that the LOS distance of the transmitter

and the LOS direction cosines are:

If the distance of the receiver from the transmitter along the orbit is D, then the LOS distance of the receiver and its direction cosines are:

For brevity, let us consider the following symbols only dependent on

The direction of the bistatic range is the bisector of the two LOS’s and therefore has the direction of their vector sum. Its direction cosines are as follows, where Q is a normalizing factor.

On the ground plane, the direction of the projection of the bisector is:

not orthogonal to the orbit. Further, the angle of the bisector with the

z axis, that is always larger than

is:

I now define the rotation matrix

from the Cartesian coordinates to those that have the LOS of the receiver as the first axis, and the second axis in the horizontal plane. Indeed,

suffice for the description of the matrix; for the transmitter

. With some algebra we reach the following result in the case of the LOS of the receiver at a distance

from the transmitter:

Its transpose allows passing from a LOS-based coordinate system, where it is easy to define the orthogonal plane, to the Cartesian one. Setting

, I find the transmitter matrix:
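The change of coordinates described in this subsection can be sketched numerically. The sketch below is not the paper's closed-form matrix: it assumes a flat-Earth geometry with the transmitter at (0, 0, H), the receiver at (0, D, H) and the target at (H tan θt, 0, 0), takes the LOS unit vectors as pointing from the target to the satellites (a sign convention chosen here), and builds the rotation from cross products. The numerical values and all function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return tuple(c / n for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def los_geometry(H, theta_t_deg, D):
    """LOS unit vectors, bisector and bisector angle with z (degrees).

    Assumed geometry: transmitter at (0, 0, H), receiver at (0, D, H),
    target at (H tan(theta_t), 0, 0) on a flat ground plane.
    """
    x0 = H * math.tan(math.radians(theta_t_deg))
    u_t = unit((-x0, 0.0, H))   # target -> transmitter
    u_r = unit((-x0, D, H))     # target -> receiver
    bis = unit(tuple(p + q for p, q in zip(u_t, u_r)))  # normalized vector sum
    psi_deg = math.degrees(math.acos(bis[2]))
    return u_t, u_r, bis, psi_deg


def los_rotation(u):
    """Rows: the LOS, a horizontal axis orthogonal to it, and a third axis
    completing the right-handed triad (undefined for a vertical LOS)."""
    e1 = u
    e2 = unit(cross((0.0, 0.0, 1.0), e1))  # no z component by construction
    e3 = cross(e1, e2)
    return (e1, e2, e3)


def rotate(M, v):
    """Cartesian -> LOS-aligned coordinates; use the transpose to go back."""
    return tuple(dot(row, v) for row in M)


if __name__ == "__main__":
    u_t, u_r, bis, psi = los_geometry(619e3, 30.0, 300e3)
    print("bisector angle with z [deg]:", psi)
    print("receiver LOS in its own frame:", rotate(los_rotation(u_r), u_r))
```

In this assumed geometry the bisector angle with the z axis exceeds θt for any nonzero D and equals θt for D = 0, and the receiver LOS maps onto the first axis of its own frame.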

2. Resolution in the Bistatic Range and Cross Range

I will now consider the impact of η on the resolution in the bistatic range and cross range. Let us consider the ellipse that has foci in the source and receiver and passes through the target. If the target moves by a distance δ along the projection of the bisector on the ground, the ellipse will inflate by

sin

where

is the angle of the bisector with the z axis. The travel path will further change by the factor

Increasing

η, and thus with the bisector moving towards a more grazing incidence, the travel path change will be larger than

obtainable for

Increasing the factor q increases as the effect of the increment of is larger than that due to the increment of .

Expanding the previous formula in series with respect to

the resolution change is approximately proportional to:

Looking at the resolution of bistatic images along the bistatic cross range, we have to evaluate the change in the deflection of the projection of the bisector in the ground plane due to the change in illumination direction from the transmitter. As found in Appendix A, the change of the angle with the x axis of the projection of the bisector, as a function of the illumination aperture ɛH, decreases with η, and thus I found:

and now the resolution loss with

η is proportional to

Hence, we see that the overall areal resolution will decrease with η. To summarize, we have seen that the bistatic fast (and slow) time resolutions are indeed slightly higher (and lower) than in the monostatic case: in the fast time case, because the shorter wavenumber modulus, due to the bistatic angle increase, is more than compensated by the larger grazing incidence; in the slow time case, because of a progressively smaller angular excursion for the same illumination slow time.

2.1. The Layover Line

After azimuth focusing, the natural coordinate system will be bistatic-range and azimuth, not orthogonal. All points that lie along the line of intersection of the following two planes will have approximately the same travel time as the target:

- A vertical plane orthogonal to the y axis (constant azimuth);

- The plane tangent to the rotational ellipsoid (with foci in the satellites), which is also orthogonal to the bisector.

After azimuth and bistatic range focusing, the targets lying on this line will layover into the ground plane. I indicate this as the layover line. The excess phase found at the focused point will depend on the height of the target.

The direction cosines of the layover line are:

We find that the slope in the vertical plane is the same as the monostatic one, orthogonal to the transmitter LOS. As the direction cosines of the transmitter LOS are

and those of the layover line are

We see that these lines are orthogonal, as in the monostatic case.

2.2. Phase Rotations along the Layover Line

I can now calculate the phase shifts along the layover line and also the altitude of ambiguity, determining the effect not of four baselines but of just three, as the horizontal baseline of the transmitter is irrelevant provided that D is calculated relative to the actual position of the illuminator. Let L be the distance of the target from the ground, along the layover line. All baselines are orthogonal to the respective LOS’s: the vertical baseline of the transmitter is indicated as Btv, and the horizontal and vertical ones of the receiver are indicated as Brh and Brv.

The rotation along the layover line is determined as follows,

I just notice that for small , the effect of Brh increases with , and it is smaller than that of Brv.

To find the altitude of ambiguity hamb, it is only necessary to define as Lamb the value of L that makes the phase shift φ equal to 2π, and then recall that the height is:

2.3. Distributed Scatterers: Coherence Losses

I now have to consider the effects of bistatic interferometry on distributed scatterers. The illuminated data will backscatter at a completely different angle, so that a change in the illumination direction due to the vertical baseline will indeed affect the scattered spectrum. We now analyze how the baseline effects combine.

2.4. Same Vertical Plane

For simplicity, let us start with the simplest case: two satellites in the same orbital plane at different heights. Their velocities will indeed be different, but the lower one will slowly overtake the upper one during the orbital cycle, and in some locations the two may be considered as having the same nadir at the same time, repeatedly in every cycle. So, let us consider the transmitter and the receiver in the same vertical plane, illuminating with different incidence and reflection angles a cell of length

containing

scatterers. In the second passage both angles are slightly changed (I reuse some of the symbols, for brevity)

The cross-correlation of the data will yield the coherence that I wish to calculate, trying to keep it as high as possible. For example, I will calculate if and how we could compensate the baseline of the transmitter with that of the receiver, compensating the angle change in transmission with another complementary change in the reception. The cross-correlation is

Expanding in a power series of

and zeroing the constant and the linear term, so that I obtain the observation angles needed for total compensation, I obtain:

and then the next term that would lower the coherence is

Just to have a number, let us suppose that the upper satellite height is 619 km and the incidence angle is 20°. The lower satellite height is 420 km. The illuminated target is at ground range 217 km and the incidence angle of the second satellite is about 27°. Imposing that the reduction of the coherence be

and with a 3 m cell and

I obtain:

so that the baselines could compensate each other as long as they are both contained in a diameter of about

They will not compensate, in general, but the fact that they could is interesting and allows us to statistically sum their effects.
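The geometry of the numerical example above can be cross-checked with a few lines of arithmetic. This is only a sketch under a flat-Earth assumption (not stated in the text), with both satellites sharing the same nadir; the function name is hypothetical.

```python
import math


def incidence_angle_deg(height_km, ground_range_km):
    """Flat-Earth incidence angle of a satellite at the given height,
    looking at a target at the given ground range from its nadir."""
    return math.degrees(math.atan2(ground_range_km, height_km))


GROUND_RANGE_KM = 217.0
upper = incidence_angle_deg(619.0, GROUND_RANGE_KM)  # upper satellite
lower = incidence_angle_deg(420.0, GROUND_RANGE_KM)  # lower satellite

if __name__ == "__main__":
    print(f"upper satellite: {upper:.1f} deg, lower satellite: {lower:.1f} deg")
```

Under this assumption the upper satellite sees the target at about 19.3°, which the text rounds to 20°, and the lower one at about 27.3°, consistent with the quoted 27°.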

2.5. Two Vertical Planes

Let us now consider the case of two satellites in the same orbit at distance

. I have seen before that there are two vertical baselines for the two LOS. How do they combine? Let us consider the two phase-planes describing the distance changes on a square of side

. The three baselines will induce three distance change planes, each with a different inclination and direction, dependent also on the bistatic angle

and the offset D. However, considering the total effect we still have a planar variation along and across the resolution cell. I indicate with

the scatterers within the cell along the

axes. The cell is

, and with

I indicate the total linear change of the distance. Then, I have the following for the correlation between

in the two passes:

and the coherence is

The

variables can be separated and thus I have, approximately

where μ and ν are the fractions of cycles made in the resolution cell by the two fringe frequencies along the two axes.
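The separability of the coherence into the product of two sinc functions can be illustrated with a short numerical sketch. It rests on assumptions made here for illustration: the scatterers are uncorrelated, of equal mean power and uniformly distributed in a unit square cell, so that the expected coherence reduces to the modulus of the mean phase factor over the cell; μ and ν are taken as the fractions of a fringe cycle spanned by the cell along the two axes, and the sinc is the normalized one, sin(πx)/(πx).

```python
import cmath
import math


def sinc(x):
    """Normalized sinc, sin(pi x) / (pi x)."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def cell_coherence(mu, nu, n=200):
    """Modulus of the mean of exp(j 2 pi (mu x + nu y)) over a unit cell,
    evaluated on an n x n midpoint grid centred on the origin."""
    acc = 0j
    for i in range(n):
        x = (i + 0.5) / n - 0.5
        for k in range(n):
            y = (k + 0.5) / n - 0.5
            acc += cmath.exp(2j * math.pi * (mu * x + nu * y))
    return abs(acc) / (n * n)


if __name__ == "__main__":
    mu, nu = 0.3, 0.5  # hypothetical fractions of a cycle along x and y
    print("2D average      :", cell_coherence(mu, nu))
    print("sinc(mu)sinc(nu):", abs(sinc(mu) * sinc(nu)))
```

The two printed values agree closely, and a full fringe cycle across the cell along either axis (μ = 1 or ν = 1) drives the coherence to zero, which is the critical baseline condition in this simplified model.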

5. Wavenumber Shifts: Bistatic

The previous analysis was carried out under the hypothesis that no interferogram flattening had been applied. Then, the additional phase plane due to the baselines acts on the data as an additional phase screen, reducing the coherence as the product of the two sinc’s, as noted above.

The situation is different if there is no significant volumetric effect and the local slope is approximately stationary. Then, the interferogram can be “demodulated” times the local fringes and the coherence re-established to 1, but the spectrum of one of the images is shifted along both directions, and thus the area of the wavenumbers that have their correspondent in the other image is reduced, as is well known from diffraction tomography [4,5]. In other words, the resolution of the interferogram is reduced, as part of the spectra will not correlate, being independent and not co-located.

In order to briefly recall the wavenumber approach, recently used for the case of the Harmony mission [9], I recall that the analysis starts with the hypothesis that the wavefront is locally planar, so its spherical character is lost. Indeed, in the previous analyses the LOS distance was found to have a minor contribution and was used only to calculate those propagation angles, which will be found again in the wavenumber approach. The incident and the reflected wavenumbers

have the direction cosines that have already been calculated, and length

The wavenumber of the reflecting object

is the sum of the incident and reflected wavenumber

Finally, the wavenumber of the target on the ground plane

is the projection of

So we have to multiply times the cosine of the grazing angle, i.e., the sine of the angle with the

z axis, namely sin

(Equation (10)).

consistently with what we have seen in the previous Section, but from another viewpoint. All these wavenumbers lie in the target, source, and receiver planes. The illuminated wavenumber domain will change with ω, as the different frequencies correspond to different values of λ and thus to a different modulus of ko. This corresponds to the bistatic range resolution. Notice that this resolution on the ground plane will also depend on η, as the incidence angle of the bisector, and thus its projected wavenumber length, will increase with η.
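The wavenumber construction just described can be sketched numerically. The sketch assumes ko = 2π/λ, a flat-Earth geometry with the transmitter at (0, 0, H), the receiver at (0, D, H) and the target at (H tan θt, 0, 0), and a sign convention in which the object wavenumber is ko times the sum of the two target-to-satellite unit vectors. The wavelength (a C-band value) and the geometry numbers are hypothetical, as are the function names.

```python
import math

WAVELENGTH_M = 0.0555  # assumed C-band wavelength, for illustration only


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return tuple(c / n for c in v)


def bistatic_wavenumber(H, theta_t_deg, D, wavelength=WAVELENGTH_M):
    """Return (k0, |k|, |k projected on the ground plane|, bistatic angle in rad)."""
    k0 = 2.0 * math.pi / wavelength
    x0 = H * math.tan(math.radians(theta_t_deg))
    u_t = unit((-x0, 0.0, H))  # target -> transmitter
    u_r = unit((-x0, D, H))    # target -> receiver
    k = tuple(k0 * (p + q) for p, q in zip(u_t, u_r))
    k_mod = math.sqrt(dot(k, k))
    k_ground = math.hypot(k[0], k[1])  # drop the z component
    beta = math.acos(max(-1.0, min(1.0, dot(u_t, u_r))))
    return k0, k_mod, k_ground, beta


if __name__ == "__main__":
    for D in (0.0, 300e3):
        k0, k_mod, k_ground, beta = bistatic_wavenumber(619e3, 30.0, D)
        print(f"D = {D / 1e3:5.0f} km: |k|/k0 = {k_mod / k0:.4f}, "
              f"ground projection/k0 = {k_ground / k0:.4f}")
```

For this particular geometry (H = 619 km, θt = 30°, D = 300 km) the modulus of the object wavenumber shrinks to 2 ko cos(β/2), with β the bistatic angle, while its ground projection becomes slightly longer than in the monostatic case.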

I observe then that the overall shape of the 2D spectrum is also dependent on η. This shape is approximately rectangular if η = 0 and if the extension of the illumination slow time is negligible. By increasing the illumination time, it becomes trapezoidal, even in the monostatic case, considering the ensuing non-orthogonality between the LOS’s and the y axis of the orbit.

In Appendix A, I calculate the angle with the x axis of the projection of the bisector on the ground plane and its change with the slow time. We see that the extent of the change, i.e., the resolution in the cross-range direction, is slightly dependent on η. As we have seen that the projection of the wavenumber on the ground plane will be longer with increasing η, we expect an approximately parallelogram shape of the spectrum for significant values of η.

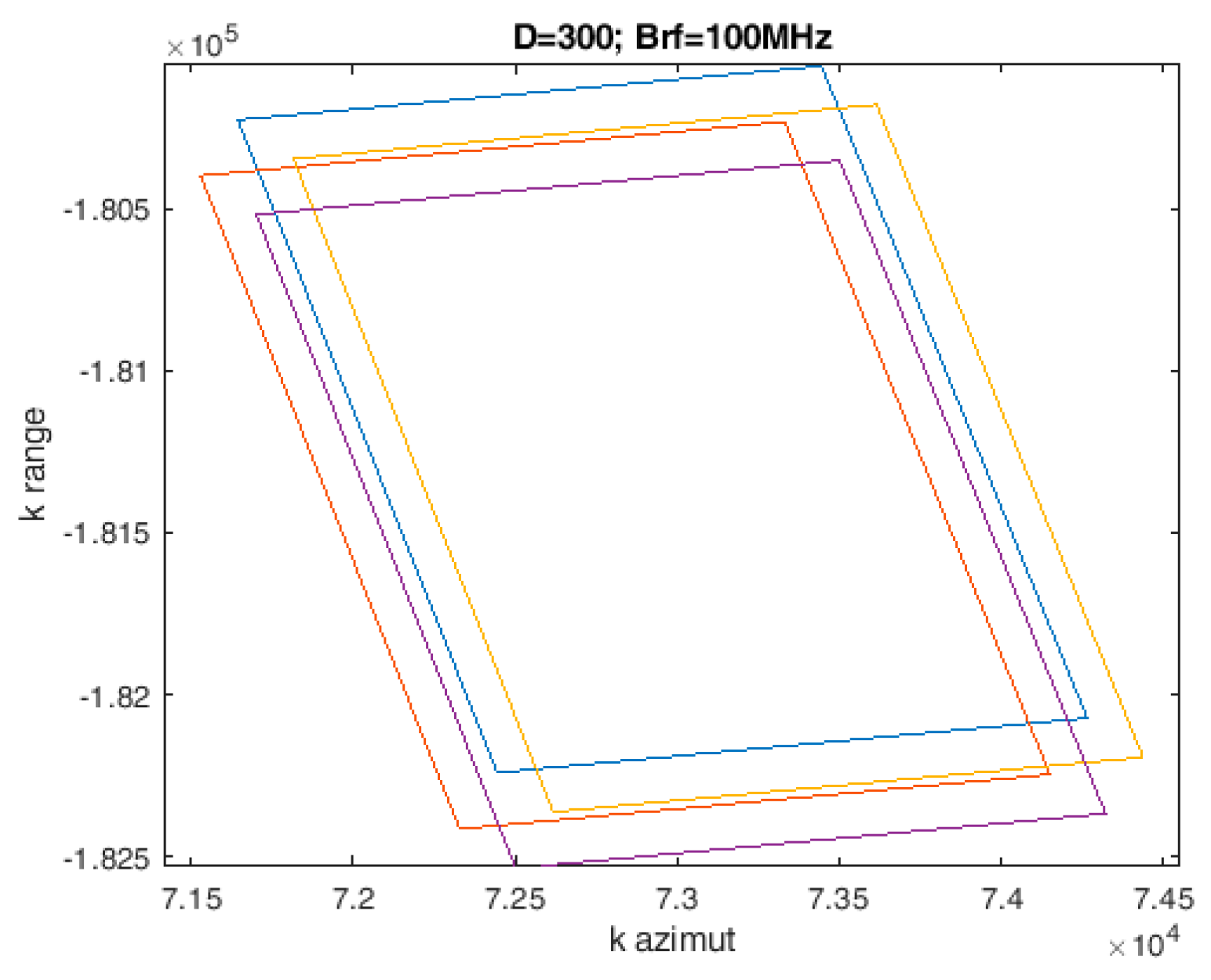

In

Figure 2, I show some spectral supports for equal radio frequency bandwidth and illumination duration and, for increasing

η, we have seen that the shape changes into that of a parallelogram.

Then, in order to appreciate the impact of the baselines, we can still resort to the values of μ and ν previously calculated. To do so, we also have to consider the rotation of the angle (Equation (9)), so that the phase shifts are oriented along the data spectrum. Then, it is possible to determine the wavenumber shifts along the directions of the spectral parallelograms, the superpositions of the parallelograms, and the ensuing interferogram resolution changes.

In

Figure 2, I show how the wavenumber domain support is changed, for

η = 0.5. The azimuthal resolution is, on purpose, made lower to be able to identify the two axes (longer along range). In

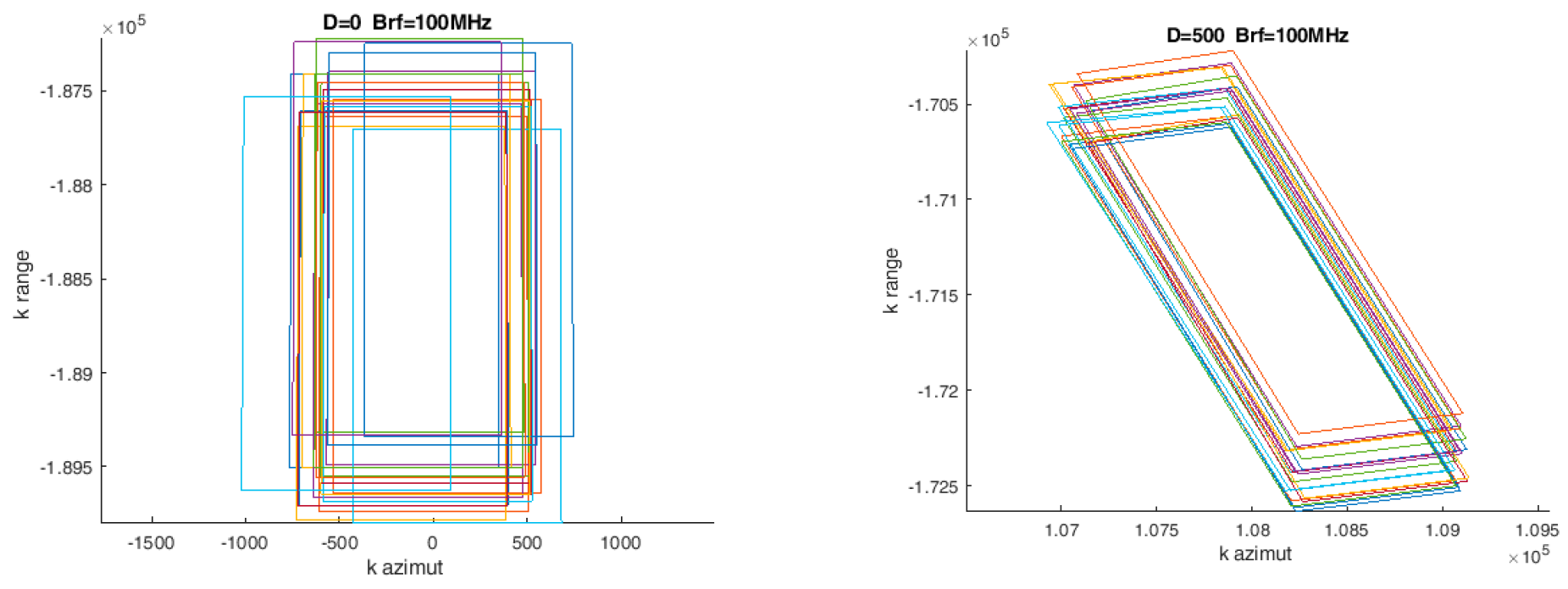

Figure 3 the superposition of the spectral supports for different baselines is shown, which are gaussian and distributed in a sphere with a radius (rms) of 1 km and an offset of 0 and 500 km: again, the azimuth resolution is made lower on purpose.

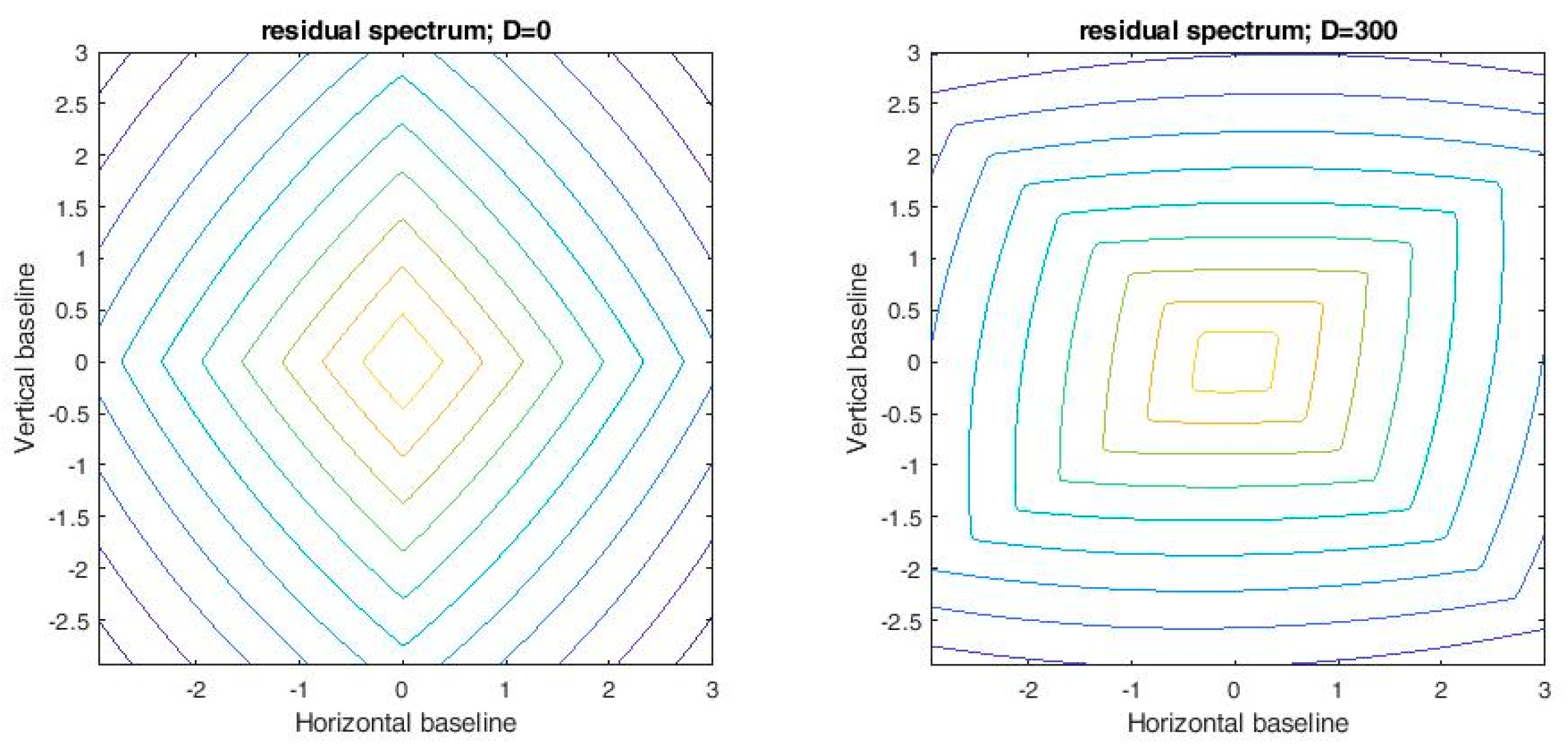

In order to represent these results compactly, we can profit from the fact that, as said at the end of Section 3, the two vertical baselines Btv and Brv, apart from scaling, combine additively in the final result, and thus we can suppose that systematically

and then refer to their combined vertical baseline. Then, we can represent the residual fraction of overlapping spectra after the wavenumber shift, as done in Figure 4 (left, right), as a function of

(km) only, for D = 0; D = 300 km and for H = 619 km.

7. The Rotational Hyperboloids

Let

be the abscissa on the orbit,

the location of the center of the hyperboloid and let the foci be in

If

is the radius of the cylinder, the equation of the hyperboloid passing through the interferer is

Further,

is the measured difference between the two distances. The interferer is supposed to be on the ground in

and

Let

now be the orbit height, and

the third coordinate. The rotational hyperboloid has the same equation, but now

The ground hyperbola is

and thus

Its slope in the interferer location is

The x direction resolution increases with the width of the angle Δξ covered by all the hyperbolas, namely

and it is likely to be much lower than that along the y direction.
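The same-orbit case can be illustrated with a small numerical sketch. It uses the standard two-sheet hyperboloid of revolution with foci on the orbit, and all names and numbers are assumptions made here: the orbit is the line x = 0, z = H, the foci (the two satellites) are at y = ±c, the interferer lies on the ground at (x, y, 0), and the measured quantity is the difference of the two distances.

```python
import math


def range_difference(x, y, H, c):
    """Difference of the distances from a ground point to the two satellites
    located at (0, -c, H) and (0, +c, H)."""
    r_far = math.sqrt(x * x + (y + c) ** 2 + H * H)
    r_near = math.sqrt(x * x + (y - c) ** 2 + H * H)
    return r_far - r_near


def hyperboloid_residual(x, y, z, H, c, delta):
    """Residual of y^2/a^2 - rho^2/b^2 - 1, with a = delta/2, b^2 = c^2 - a^2
    and rho the distance from the orbit axis (requires delta != 0)."""
    a2 = (delta / 2.0) ** 2
    b2 = c * c - a2
    rho2 = x * x + (z - H) ** 2
    return y * y / a2 - rho2 / b2 - 1.0


def ground_hyperbola_x(y, H, c, delta):
    """Positive-x branch of the intersection of the hyperboloid with z = 0,
    or None where the hyperboloid does not reach the ground."""
    a2 = (delta / 2.0) ** 2
    b2 = c * c - a2
    x2 = b2 * (y * y / a2 - 1.0) - H * H
    return math.sqrt(x2) if x2 >= 0.0 else None


def ground_slope_dx_dy(x, y, c, delta):
    """Slope dx/dy of the ground hyperbola at the point (x, y)."""
    a2 = (delta / 2.0) ** 2
    b2 = c * c - a2
    return b2 * y / (a2 * x)


# Hypothetical values (metres).
H, C = 619e3, 150e3
X_I, Y_I = 300e3, 50e3  # interferer position on the ground
DELTA = range_difference(X_I, Y_I, H, C)

if __name__ == "__main__":
    print("residual at the interferer:", hyperboloid_residual(X_I, Y_I, 0.0, H, C, DELTA))
    print("ground hyperbola x at y_i :", ground_hyperbola_x(Y_I, H, C, DELTA))
    print("slope dx/dy at interferer :", ground_slope_dx_dy(X_I, Y_I, C, DELTA))
```

With these numbers the ground hyperbola passes through the interferer with a large slope dx/dy, that is, it runs almost parallel to the x axis; this is consistent with a poorer localization across track than along track in the same-orbit configuration.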

The situation is the opposite if the two satellites are on different orbits and both receive the interferer. If they are on top of each other, then the hyperboloid has a vertical axis, and the intersection with the ground is a circle passing through the interferer and centered in the common nadir of the two satellites. Now the resolution across the x axis will be maximal, limited only by the interferer bandwidth. Similarly, the superposition of all the circles will better define the position of the interferer along track, but now the along-track resolution is much worse.