Review on Hardware Devices and Software Techniques Enabling Neural Network Inference Onboard Satellites

Abstract

1. Introduction

1.1. Motivations and Contributions

- Improved Autonomy and Responsiveness: Onboard AI enables satellites to make real-time decisions by processing data and responding autonomously without waiting for instructions from ground control. This responsiveness is crucial for tasks such as collision avoidance, given the increase in space debris. Furthermore, autonomous systems can adapt to changing conditions in space, improving the reliability and efficiency of missions [4,5,6,7,8].

- Advanced Applications: AI strengthens Earth observation by raising the quality of collected data. For example, it can enhance image resolution and detect environmental changes, both of which are essential for monitoring natural disasters or the impacts of climate change. Furthermore, for planetary exploration or lunar rover missions, onboard AI facilitates autonomous navigation and obstacle detection, making these missions more efficient and less dependent on human intervention [14,15,16,17].

- Support for Complex Operations: AI enables the coordination of multiple satellites working together as a swarm, supporting complex operations that are difficult to manage manually from Earth. Furthermore, AI systems can monitor satellite health and predict potential failures, allowing proactive maintenance actions that extend the operational life of satellites [18,19,20].

1.2. Limitations for Adopting AI Onboard Satellites

- Hardware-related limitations. Ensuring the reliability of AI models in the face of radiation-induced errors is paramount. Satellites typically use radiation-hardened (rad-hard) processors to withstand the harsh space environment [25,26,27]. However, these processors are often significantly less powerful than contemporary commercial processors, limiting their ability to handle complex AI models effectively [28]. This performance gap makes it challenging to deploy state-of-the-art AI frameworks, which require substantial computational resources. Testing and validation processes must ensure that the AI models function correctly under extreme space conditions, which often involves simulating those conditions at considerable complexity and cost [29]. Designing fault-tolerant systems capable of detecting and correcting errors in real time is a complex task and can introduce computational overhead. Radiation mitigation and fault-tolerance solutions can also increase costs and resource requirements, so cost, performance, and resilience must be balanced against the available resources [30]. Satellites’ onboard computational and hardware resources are limited. Onboard AI models may need considerable working memory to store model parameters and intermediate results during computations. Many satellite systems are not equipped with the necessary memory capacity, which restricts the complexity of the AI models that can be deployed and creates the need for optimal resource allocation techniques [31]. Moreover, the power available on satellites is limited, which restricts the use of high-performance chips that consume more energy. As a result, lighter and smaller satellites may not be able to support the power demands of advanced AI processing units. Finally, the space environment presents unique challenges such as extreme temperatures and radiation exposure, which can affect the reliability and longevity of electronic components used for AI processing. These conditions also complicate the design of AI systems that must operate autonomously without human intervention.

- Model-related limitations. Lack of Large Datasets: Effective AI models, especially those based on deep learning, require large amounts of labeled training data to perform well. In many cases, particularly for novel instruments or missions to unexplored environments, such datasets are not available. This scarcity can hinder the model’s ability to generalize and perform accurately in real-world conditions [32]. Model Drift and Validation: Continuous validation of AI models is essential, especially for mission-critical applications [33]. This involves downlinking raw data for performance assessment and potentially retraining models onboard, a process complicated by limited communication bandwidth and high latency in space. Moreover, post-launch updates to models need to be carefully managed. Limitations in communication [34] and the risks associated with system malfunctions make it challenging to perform updates during a mission. It is crucial to monitor models continuously and, if necessary, implement updates to ensure ongoing reliability.

- Other limitations. Some limitations are broader and affect more than AI applications, such as the risk of unauthorized access. The integration of AI into satellite systems increases vulnerability to hacking and unauthorized control. Ensuring cybersecurity [35,36,37] is critical but adds another layer of complexity to the deployment of AI systems in space. Cybersecurity measures such as encryption and authentication are vital to protect AI models and data from potential threats. However, implementing these mechanisms can be complex and resource-intensive, and care must be taken to ensure that they do not compromise the system’s overall performance. Continuous monitoring for potential threats is also necessary, but this requirement adds to the system’s workload.
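The trade-off between fault tolerance and computational overhead noted under the hardware-related limitations can be illustrated with triple modular redundancy (TMR), a generic mitigation technique that is not attributed here to any of the reviewed systems. The following is a minimal sketch, assuming a hypothetical `infer` callable that returns non-negative integer outputs (for example, quantized scores). It repeats the inference three times and takes a bitwise majority vote, which masks a single corrupted result at roughly three times the compute cost.

```python
from typing import Callable, List, Sequence


def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 majority: a bit is set if it is set in at least two inputs."""
    return (a & b) | (a & c) | (b & c)


def tmr_infer(infer: Callable[[Sequence[int]], List[int]],
              x: Sequence[int]) -> List[int]:
    """Run a (hypothetical) integer-output inference three times and vote element-wise.

    A single faulty run is outvoted by the two correct ones; two faulty runs
    that disagree on the same bit are not guaranteed to be corrected.
    """
    r1, r2, r3 = infer(x), infer(x), infer(x)
    return [majority_vote(a, b, c) for a, b, c in zip(r1, r2, r3)]
```

The vote itself is cheap; the overhead comes from the three inference runs, which is why such schemes have to be weighed against the limited power and compute budget described above.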

2. Hardware Solutions

2.1. ASIC

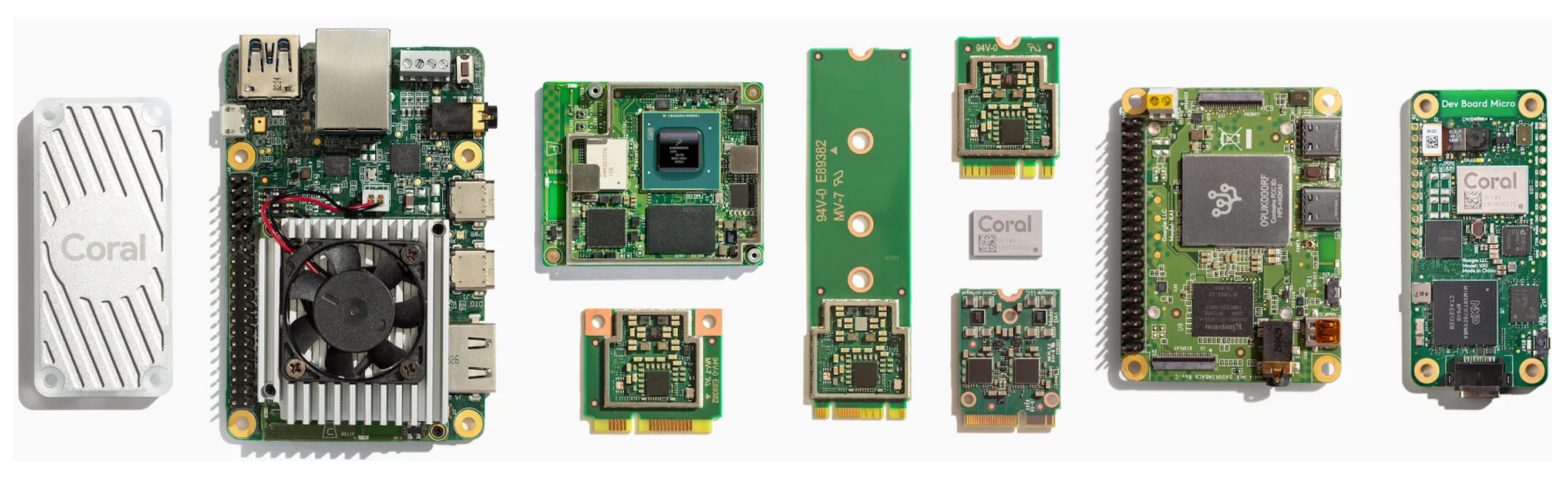

2.1.1. Google Edge TPU

- High-performance parallel processing mode for demanding computational tasks;

- Fault-tolerant mode designed for resilience against operational failures;

- Power-saving mode to conserve energy during less intensive tasks.

- Radiation beam testing to assess the performance of the SC-LEARN in space conditions;

- Flight demonstrations to validate the effectiveness of the SC-LEARN in real mission scenarios.

2.1.2. Nvidia Jetson Orin Nano

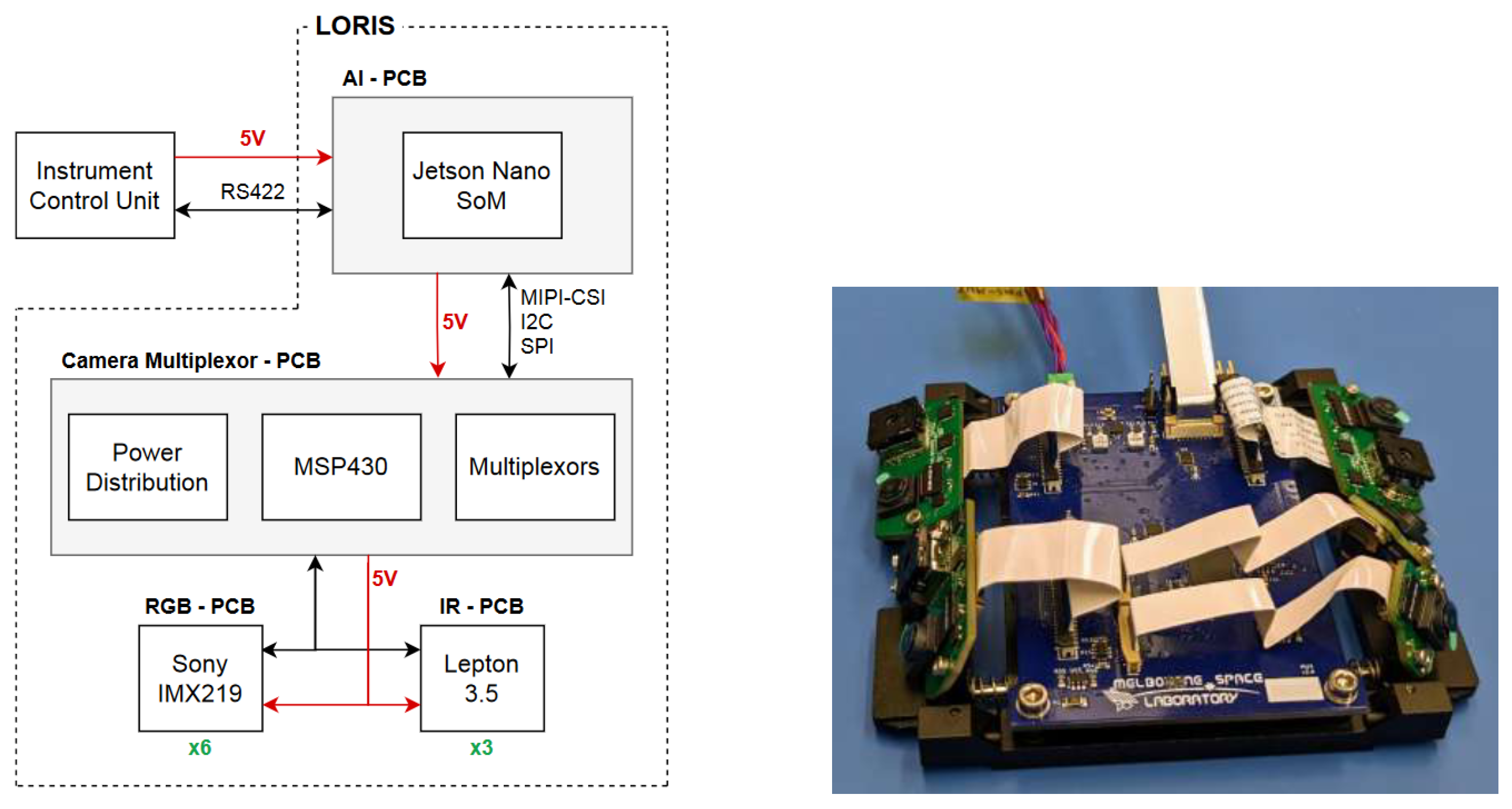

- Six visible light cameras (Sony IMX219);

- Three infrared cameras (FLIR Lepton 3.5);

- A camera control board;

- An NVIDIA Jetson Nano for processing.

- Multiplexing Strategy: this approach mitigates the risk of individual sensor failures by integrating multiple sensors into the system;

- Thermal Management: the payload is designed to operate effectively within a sinusoidal thermal profile experienced in orbit, ensuring that components like the Jetson Nano remain operational despite temperature fluctuations;

- Cost-Effectiveness: the use of commercial off-the-shelf (COTS) components aligns with budget constraints typical of nanosatellite missions, although it introduces higher risks regarding component reliability.

2.1.3. Other NVIDIA Jetson Boards

- NVIDIA Jetson TX2i. The Jetson TX2i is another notable component used in space applications:

  - Performance: it provides up to 1.3 TFLOPS of AI performance, making it suitable for demanding imaging and data processing tasks.

  - Applications: Aitech’s S-A1760 Venus system incorporates the TX2i, specifically designed for small satellite constellations operating in low Earth orbit (LEO) and near-Earth orbit (NEO). This system is characterized by its compact size and rugged design, making it ideal for harsh space environments.

- NVIDIA Jetson AGX Xavier Industrial. The Jetson AGX Xavier Industrial module offers advanced capabilities for more complex satellite missions:

  - High Performance: it delivers server-class performance with enhanced AI capabilities, making it suitable for sophisticated tasks like sensor fusion and real-time image processing.

  - Radiation Resistance: studies have indicated that the AGX Xavier can withstand radiation effects when properly enclosed, making it a viable option for satellites operating in challenging environments.

- Planet Labs’ Pelican-2 Satellite. The upcoming Pelican-2 satellite, developed by Planet Labs, will utilize the NVIDIA Jetson edge AI platform:

  - Intelligent Imaging: this integration aims to enhance imaging capabilities and provide faster insights through real-time data processing onboard.

  - AI Applications: the collaboration with NVIDIA will enable the satellite to leverage AI for improved data analytics, supporting rapid decision-making processes in various applications.

2.1.4. Intel Movidius Myriad X VPU

2.2. FPGA-Based Designs

- Xilinx Virtex-5QV [61]: it is designed specifically for space applications and is known for its high performance and re-programmability. It features enhanced radiation tolerance, making it suitable for use in satellites and other space systems where reliability is critical. This FPGA has been used in various missions, including those conducted by NASA and the European Space Agency (ESA).

- Actel/Microsemi ProASIC3 [62]: these FPGAs are flash-based devices whose non-volatile configuration cells are largely immune to radiation-induced configuration upsets, although total ionizing dose effects have been reported [63]. Unlike anti-fuse FPGAs, which are one-time programmable, they can be reprogrammed, and they are often used in applications where robustness against radiation is essential.

- Re-configurability: it can be adapted for different instruments and tasks, allowing it to support a wide range of satellite missions;

- Open Source: utilizing open-source components promotes collaboration and innovation, enabling users to modify and improve the system as needed;

- Integration with AI: the unit is designed to incorporate AI, enhancing its ability to process data and make decisions autonomously.

- Direct Regression on Full Image: a straightforward method but less accurate;

- Detection-Based Methods: utilizing heatmaps to improve accuracy;

- Combination of Detection and Cropping: involves detecting the spacecraft first and then applying landmark localization algorithms, which significantly enhances accuracy.
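The combined approach listed above (detect the spacecraft, crop, then localize landmarks from heatmaps) can be sketched in a few lines. This is only an illustration under stated assumptions: the heatmaps are plain nested lists standing in for network outputs, and `crop_origin` and `scale` are hypothetical parameters describing where the detected crop sits in the full image and how much the heatmap was downsampled relative to it.

```python
from typing import List, Tuple

Heatmap = List[List[float]]


def heatmap_peak(heatmap: Heatmap) -> Tuple[int, int]:
    """Return (row, col) of the strongest response in a 2D heatmap."""
    best, best_val = (0, 0), heatmap[0][0]
    for r, row in enumerate(heatmap):
        for c, value in enumerate(row):
            if value > best_val:
                best_val, best = value, (r, c)
    return best


def landmarks_in_full_image(heatmaps: List[Heatmap],
                            crop_origin: Tuple[float, float],
                            scale: float) -> List[Tuple[float, float]]:
    """Map each landmark's heatmap peak from crop coordinates to full-image pixels.

    crop_origin: (row, col) of the crop's top-left corner in the full image.
    scale: full-image pixels per heatmap cell (assumed equal on both axes).
    """
    r0, c0 = crop_origin
    points = []
    for hm in heatmaps:
        r, c = heatmap_peak(hm)
        points.append((r0 + r * scale, c0 + c * scale))
    return points
```

Cropping first means the heatmap resolution is spent on the spacecraft rather than on empty background, which is one plausible reason this variant is reported as the most accurate of the three.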

2.3. Neuromorphic Hardware and Memristors

2.4. Commercial Solutions

- Industry-leading radiation performance and power efficiency;

- The SEP features an integral Ethernet/MPLS switch;

- AMD Ryzen V2748 CPU+GPU;

- AMD Versal coprocessor FPGA.

3. Software Tools, Methods, and Solutions for AI Integration

3.1. Main Challenges

3.2. SW Approaches for AI Integration

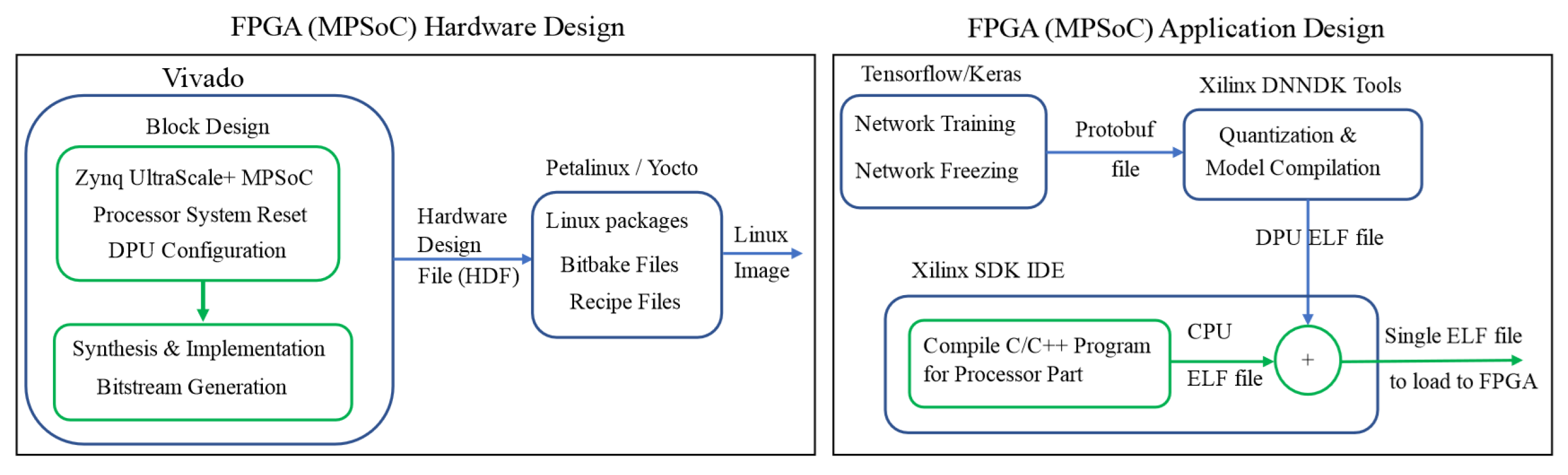

- A benchmarking pipeline that utilizes standard Xilinx tools and a deep learning deployment chain to run deep learning techniques on edge devices;

- Performance evaluation of three state-of-the-art deep learning algorithms for computer vision tasks on the Leopard DPU Evalboard, which will be deployed onboard the Intuition-1 satellite;

- The analysis focuses on the latency, throughput, and performance of the models. Quantification of deep learning algorithm performance at every step of the deployment chain allows practitioners to understand the impact of crucial deployment steps like quantization or compilation on the model’s operational abilities.
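The latency and throughput measurements mentioned above can be illustrated with a small timing harness. This is a minimal sketch, not the benchmarking pipeline from the reviewed work: it does not use the Xilinx tools, and `model` is a hypothetical Python callable standing in for a deployed network. Running the same harness before and after a deployment step such as quantization is one simple way to see that step's effect on speed.

```python
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def benchmark(model: Callable[[Any], Any],
              inputs: Sequence[Any],
              warmup: int = 2) -> Dict[str, float]:
    """Measure per-sample latency and overall throughput of a callable.

    inputs must be non-empty. The first `warmup` samples are run once
    untimed so that one-off start-up costs do not distort the result.
    """
    for x in inputs[:warmup]:
        model(x)

    latencies = []
    for x in inputs:
        start = time.perf_counter()
        model(x)
        latencies.append(time.perf_counter() - start)

    total = sum(latencies)
    return {
        "mean_latency_s": statistics.mean(latencies),
        "max_latency_s": max(latencies),
        "throughput_per_s": len(latencies) / total if total > 0 else float("inf"),
    }
```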

- Input Bands: the network was tested with varying numbers of spectral bands, revealing that using four specific bands (red, green, blue, and infrared) yielded the best results;

- Input Size: different input sizes were assessed, with a size of 128 × 128 pixels providing optimal accuracy while reducing memory usage;

- Precision: experiments with half-precision computations demonstrated significant memory savings but at the cost of performance degradation in segmentation tasks;

- Convolutional Filters: adjusting the number of filters affected both memory consumption and accuracy, suggesting that a balance is needed to keep performance acceptable while optimizing resources;

- Encoder Depth: utilizing deeper residual networks improved performance metrics significantly but increased memory usage and inference time.
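The memory effects of input bands, input size, precision, and filter count listed above follow from simple arithmetic, sketched below. The helper names and the 32-filter layer are hypothetical choices for illustration; only the four bands and the 128 × 128 input size come from the text.

```python
def tensor_bytes(channels: int, height: int, width: int,
                 bytes_per_value: int) -> int:
    """Memory needed to hold one dense tensor of shape (channels, height, width)."""
    return channels * height * width * bytes_per_value


def conv_layer_bytes(in_ch: int, out_ch: int, height: int, width: int,
                     kernel: int = 3, bytes_per_value: int = 4) -> int:
    """Weights plus output activations of one convolution layer.

    Assumes stride 1 with 'same' padding (output keeps height x width)
    and ignores biases.
    """
    weights = out_ch * in_ch * kernel * kernel * bytes_per_value
    activations = tensor_bytes(out_ch, height, width, bytes_per_value)
    return weights + activations


# Four-band 128 x 128 input in single precision (4 bytes) and half precision (2 bytes).
fp32_input = tensor_bytes(4, 128, 128, 4)   # 262144 bytes
fp16_input = tensor_bytes(4, 128, 128, 2)   # 131072 bytes

# Hypothetical first layer with 32 filters: activations dominate the weights.
first_layer = conv_layer_bytes(4, 32, 128, 128)  # 2101760 bytes
```

Halving the precision halves every term, while the filter count scales the activation memory linearly, which matches the qualitative trends reported in the list.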

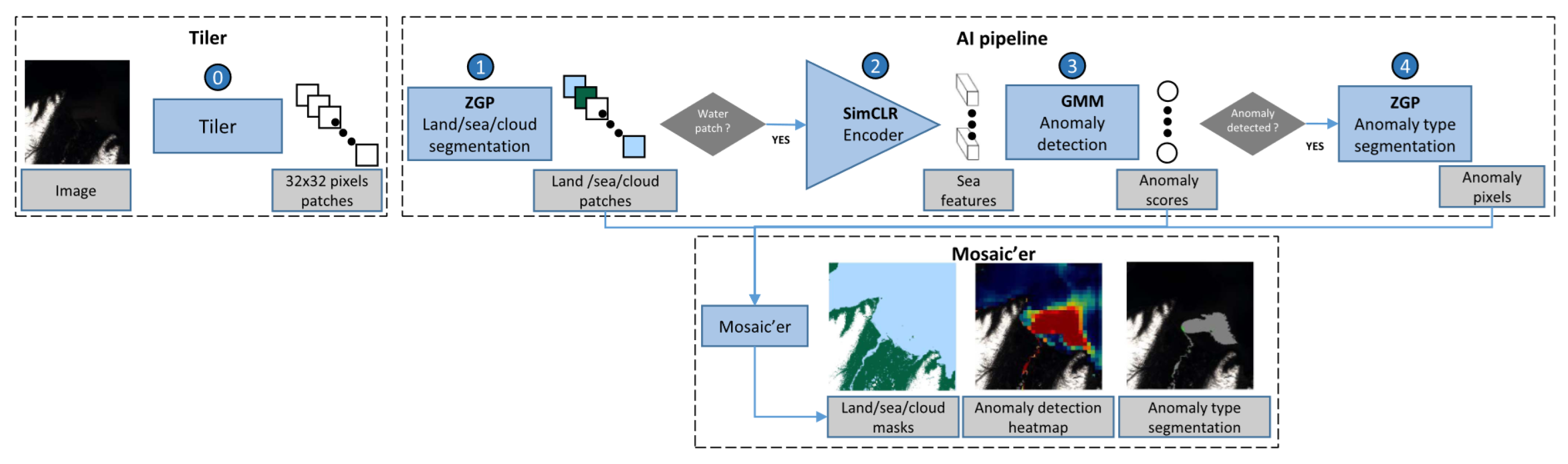

- Maritime Security: enhancing the capability to monitor illegal fishing, smuggling, and other illicit activities at sea;

- Environmental Monitoring: assisting in tracking oil spills and other environmental hazards;

- Traffic Management: improving the management of shipping routes and port operations.

4. AI Applications in Satellites Tasks and Missions

4.1. Earth Observation and Image Analysis

- AI Integration: The satellite employs a Convolutional Neural Network to perform cloud detection, filtering out unusable images before they are transmitted back to Earth. This enhances data efficiency by significantly reducing the volume of images sent down, which is crucial given that cloud cover can obscure over 30% of satellite images.

- Technological Demonstrator: as part of the Federated Satellite Systems (FSSCat) initiative, Φ-Sat-1 serves as a technological demonstrator, showcasing how AI can optimize satellite operations and data collection.

- Payload: the satellite is equipped with a hyperspectral camera and an AI processor (Intel Movidius Myriad 2), which enables it to analyze and process data onboard.

- Future Developments: following the success of Φ-Sat-1, plans are already in motion for the Φ-Sat-2 mission, which aims to expand on these capabilities by integrating more advanced AI applications for various Earth observation tasks.
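The onboard cloud filtering described above reduces to a simple decision once a per-pixel cloud mask is available. The sketch below assumes a hypothetical binary mask (1 for cloud, 0 for clear) standing in for the network output; the 0.7 threshold is an illustrative default, not a value taken from the text.

```python
from typing import List, Sequence


def cloud_fraction(mask: Sequence[Sequence[int]]) -> float:
    """Fraction of pixels flagged as cloud in a binary mask (1 = cloud)."""
    total = sum(len(row) for row in mask)
    cloudy = sum(1 for row in mask for pixel in row if pixel)
    return cloudy / total if total else 0.0


def should_downlink(mask: Sequence[Sequence[int]],
                    max_cloud_fraction: float = 0.7) -> bool:
    """Keep the image only if its cloud cover does not exceed the threshold."""
    return cloud_fraction(mask) <= max_cloud_fraction


def filter_images(masks: List[Sequence[Sequence[int]]],
                  max_cloud_fraction: float = 0.7) -> List[int]:
    """Return the indices of images worth transmitting."""
    return [i for i, m in enumerate(masks) if should_downlink(m, max_cloud_fraction)]
```

Because only the decision (or the retained images) needs to leave the satellite, the downlink saving grows with the share of heavily clouded scenes.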

- Image Acquisition: high-resolution satellites capture panchromatic images with fine spatial resolution and multispectral images with coarser sampling due to downlink constraints.

- Processing Chain: the paper outlines a next-generation processing chain that includes onboard compression, correction of compression artifacts, denoising, deconvolution, and pan-sharpening techniques.

- Compression Techniques: a fixed-quality compression method is detailed, which minimizes the impact of compression on image quality while optimizing bitrate based on scene complexity.

- Denoising Performance: the study shows that non-local denoising algorithms significantly improve image quality, outperforming previous methods by 15% in terms of root mean squared error.

- Adaptation for CMOS Sensors: the authors also discuss adapting these processing techniques for low-cost CMOS Bayer colour matrices, demonstrating the versatility of the proposed image processing chain.

- Image Prioritization: the system prioritizes downloading images with the highest anomaly scores, optimizing data transmission and operator efficiency.

- Alert Mechanism: immediate alerts are sent for significant incidents detected onboard, enhancing response times.

- AI Efficiency: the application uses minimal annotated data for training, making it suitable for satellites with limited computational power and adaptable to various environments beyond marine settings.
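The prioritization and alert behaviour listed above can be sketched as a greedy selection under a downlink budget. This is an assumption-laden illustration rather than the reviewed system's logic: the tuple layout `(image_id, anomaly_score, size_bytes)`, the byte budget, and the alert threshold are all hypothetical.

```python
import heapq
from typing import List, Tuple

Image = Tuple[str, float, int]  # (image_id, anomaly_score, size_bytes)


def select_for_downlink(images: List[Image], budget_bytes: int) -> List[str]:
    """Pick images in descending anomaly score until the byte budget is used up.

    Images that do not fit in the remaining budget are skipped, so a smaller,
    lower-scored image may still be selected afterwards.
    """
    # heapq is a min-heap, so scores are negated to pop the highest first.
    heap = [(-score, image_id, size) for image_id, score, size in images]
    heapq.heapify(heap)

    selected, used = [], 0
    while heap:
        _, image_id, size = heapq.heappop(heap)
        if used + size <= budget_bytes:
            selected.append(image_id)
            used += size
    return selected


def immediate_alerts(images: List[Image], alert_threshold: float) -> List[str]:
    """Ids of images whose score is high enough to trigger an alert right away."""
    return [image_id for image_id, score, _ in images if score >= alert_threshold]
```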

4.2. Anomaly Detection and Predictive Maintenance

4.3. Autonomous Navigation and Control

- AI Modules. The proposed GNC system incorporates two state-of-the-art AI components:

  - Deep Learning (DL)-based Pose Estimation: this algorithm estimates the pose of a target from 2D images using a pre-trained neural network, eliminating the need for prior knowledge about the target’s dynamics;

  - Trajectory Modeling and Control: this technique utilizes probabilistic modeling to manage the trajectories of robotic manipulators, allowing the system to adapt to new situations without complex on-board trajectory optimizations. This minimizes disturbances to the spacecraft’s attitude caused by manipulator movements.

- Centralized Camera Network. The system employs a centralized camera network as its primary sensor, integrating a 7 Degrees of Freedom (DoF) robotic arm into the GNC architecture.

- Autonomous Navigation. DeepNav focuses on creating systems that allow satellites to navigate without constant human intervention, which is vital for missions that operate far from Earth;

- Deep Learning Techniques. The project leverages deep learning algorithms to process and analyze data collected from asteroid surfaces, enabling better decision-making and navigation strategies.

- Optical sensors. The CubeSat will be equipped with optical sensors to gather data about Earth features such as lakes and coastlines;

- AI processing. The data collected will be processed onboard to generate positional inputs for navigation filters, enhancing the accuracy of orbit determination.

4.4. Data Management, Data Compression, and Communication Optimization

5. Conclusions and Future Works

- Optimization of AI Models: Developing lightweight and energy-efficient AI models that can operate within the constraints of satellite hardware. Techniques such as model compression, pruning, and quantization should be explored to reduce the computational requirements of AI models.

- Radiation Tolerance: Enhancing the radiation tolerance of AI hardware through the development of radiation-hardened components and fault-tolerant systems. This includes testing and validating AI hardware in simulated space environments to ensure reliability.

- Neuromorphic and Memristor-Based Systems: Further research into neuromorphic hardware and memristor-based systems for space applications. These technologies offer promising solutions for developing energy-efficient AI systems that can operate in the harsh conditions of space.

- Cybersecurity: Implementing robust cybersecurity measures to protect AI models and data from unauthorized access and manipulation. This includes encryption, authentication, and continuous monitoring for potential threats.

- Real-Time Data Processing: Developing advanced AI algorithms for real-time data processing onboard satellites. This includes applications such as Earth observation, anomaly detection, and autonomous navigation, which require low-latency responses.

- Collaboration and Standardization: Promoting collaboration between industry, academia, and government agencies to standardize AI hardware and software solutions for space applications. This will facilitate the development of interoperable systems and accelerate the adoption of AI technologies in the space sector.
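Two of the model optimization techniques named in the first item, quantization and pruning, can be illustrated on a flat list of weights. The sketch below uses symmetric per-tensor int8 quantization and magnitude-based pruning as generic examples; these specific variants are assumptions chosen for brevity and are not prescribed by the text.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to the range [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Approximate reconstruction of the original weights."""
    return [c * scale for c in codes]


def prune_by_magnitude(weights: List[float], threshold: float) -> List[float]:
    """Zero out weights whose magnitude is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]
```

Storing one byte per weight instead of four cuts parameter memory by about a factor of four, at the cost of a reconstruction error of at most half a quantization step per weight.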

Author Contributions

Funding

Conflicts of Interest

References

- Zhang, C.; Jin, J.; Kuang, L.; Yan, J. LEO constellation design methodology for observing multi-targets. Astrodynamics 2018, 2, 121–131.

- Jaffer, G.; Malik, R.A.; Aboutanios, E.; Rubab, N.; Nader, R.; Eichelberger, H.U.; Vandenbosch, G.A. Air traffic monitoring using optimized ADS-B CubeSat constellation. Astrodynamics 2024, 8, 189–208.

- Bai, S.; Zhang, Y.; Jiang, Y.; Sun, W.; Shao, W. Modified Two-Dimensional Coverage Analysis Method Considering Various Perturbations. IEEE Trans. Aerosp. Electron. Syst. 2023, 60, 2763–2777.

- ESA. Artificial Intelligence in Space. Available online: https://www.esa.int/Enabling_Support/Preparing_for_the_Future/Discovery_and_Preparation/Artificial_intelligence_in_space (accessed on 20 October 2024).

- Thangavel, K.; Sabatini, R.; Gardi, A.; Ranasinghe, K.; Hilton, S.; Servidia, P.; Spiller, D. Artificial intelligence for trusted autonomous satellite operations. Prog. Aerosp. Sci. 2024, 144, 100960.

- Thangavel, K.; Spiller, D.; Sabatini, R.; Amici, S.; Longepe, N.; Servidia, P.; Marzocca, P.; Fayek, H.; Ansalone, L. Trusted autonomous operations of distributed satellite systems using optical sensors. Sensors 2023, 23, 3344.

- Al Homssi, B.; Dakic, K.; Wang, K.; Alpcan, T.; Allen, B.; Boyce, R.; Kandeepan, S.; Al-Hourani, A.; Saad, W. Artificial Intelligence Techniques for Next-Generation Massive Satellite Networks. IEEE Commun. Mag. 2024, 62, 66–72.

- Nanjangud, A.; Blacker, P.C.; Bandyopadhyay, S.; Gao, Y. Robotics and AI-Enabled On-Orbit Operations With Future Generation of Small Satellites. Proc. IEEE 2018, 106, 429–439.

- Alves de Oliveira, V.; Chabert, M.; Oberlin, T.; Poulliat, C.; Bruno, M.; Latry, C.; Carlavan, M.; Henrot, S.; Falzon, F.; Camarero, R. Satellite Image Compression and Denoising With Neural Networks. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Guerrisi, G.; Schiavon, G.; Del Frate, F. On-Board Image Compression using Convolutional Autoencoder: Performance Analysis and Application Scenarios. In Proceedings of the IGARSS 2023—2023 IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023; pp. 1783–1786.

- Garcia, L.P.; Furano, G.; Ghiglione, M.; Zancan, V.; Imbembo, E.; Ilioudis, C.; Clemente, C.; Trucco, P. Advancements in On-Board Processing of Synthetic Aperture Radar (SAR) Data: Enhancing Efficiency and Real-Time Capabilities. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 16625–16645.

- Guerrisi, G.; Frate, F.D.; Schiavon, G. Artificial Intelligence Based On-Board Image Compression for the Φ-Sat-2 Mission. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8063–8075.

- Russo, A.; Lax, G. Using artificial intelligence for space challenges: A survey. Appl. Sci. 2022, 12, 5106.

- Ortiz, F.; Monzon Baeza, V.; Garces-Socarras, L.M.; Vasquez-Peralvo, J.A.; Gonzalez, J.L.; Fontanesi, G.; Lagunas, E.; Querol, J.; Chatzinotas, S. Onboard processing in satellite communications using ai accelerators. Aerospace 2023, 10, 101.

- Leyva-Mayorga, I.; Martinez-Gost, M.; Moretti, M.; Perez-Neira, A.; Vazquez, M.A.; Popovski, P.; Soret, B. Satellite Edge Computing for Real-Time and Very-High Resolution Earth Observation. IEEE Trans. Commun. 2023, 71, 6180–6194.

- Li, C.; Zhang, Y.; Xie, R.; Hao, X.; Huang, T. Integrating Edge Computing into Low Earth Orbit Satellite Networks: Architecture and Prototype. IEEE Access 2021, 9, 39126–39137.

- Zhang, Z.; Qu, Z.; Liu, S.; Li, D.; Cao, J.; Xie, G. Expandable on-board real-time edge computing architecture for Luojia3 intelligent remote sensing satellite. Remote Sens. 2022, 14, 3596.

- Pacini, T.; Rapuano, E.; Tuttobene, L.; Nannipieri, P.; Fanucci, L.; Moranti, S. Towards the Extension of FPG-AI Toolflow to RNN Deployment on FPGAs for On-board Satellite Applications. In Proceedings of the 2023 European Data Handling & Data Processing Conference (EDHPC), Juan Les Pins, France, 2–6 October 2023; pp. 1–5.

- Razmi, N.; Matthiesen, B.; Dekorsy, A.; Popovski, P. On-Board Federated Learning for Satellite Clusters With Inter-Satellite Links. IEEE Trans. Commun. 2024, 72, 3408–3424.

- Meoni, G.; Prete, R.D.; Serva, F.; De Beusscher, A.; Colin, O.; Longépé, N. Unlocking the Use of Raw Multispectral Earth Observation Imagery for Onboard Artificial Intelligence. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 12521–12537.

- Pelton, J.N.; Finkleman, D. Overview of Small Satellite Technology and Systems Design. In Handbook of Small Satellites: Technology, Design, Manufacture, Applications, Economics and Regulation; Springer: Cham, Switzerland, 2020; pp. 125–144.

- Manoj, S.; Kasturi, S.; Raju, C.G.; Suma, H.; Murthy, J.K. Overview of On-Board Computing Subsystem. In Proceedings of the Smart Small Satellites: Design, Modelling and Development: Proceedings of the International Conference on Small Satellites, ICSS 2022, Punjab, India, 29–30 April 2022; Springer Nature: Singapore, 2023; Volume 963, p. 23.

- Cratere, A.; Gagliardi, L.; Sanca, G.A.; Golmar, F.; Dell’Olio, F. On-Board Computer for CubeSats: State-of-the-Art and Future Trends. IEEE Access 2024, 12, 99537–99569.

- Schäfer, K.; Horch, C.; Busch, S.; Schäfer, F. A Heterogenous, reliable onboard processing system for small satellites. In Proceedings of the 2021 IEEE International Symposium on Systems Engineering (ISSE), Vienna, Austria, 13 September–13 October 2021; pp. 1–3.

- Ray, A. Radiation effects and hardening of electronic components and systems: An overview. Indian J. Phys. 2023, 97, 3011–3031.

- Bozzoli, L.; Catanese, A.; Fazzoletto, E.; Scarpa, E.; Goehringer, D.; Pertuz, S.A.; Kalms, L.; Wulf, C.; Charaf, N.; Sterpone, L.; et al. EuFRATE: European FPGA Radiation-hardened Architecture for Telecommunications. In Proceedings of the 2023 Design, Automation & Test in Europe Conference & Exhibition (DATE), Antwerp, Belgium, 17–19 April 2023; pp. 1–6.

- Pavan Kumar, M.; Lorenzo, R. A review on radiation-hardened memory cells for space and terrestrial applications. Int. J. Circuit Theory Appl. 2023, 51, 475–499.

- Ghiglione, M.; Serra, V. Opportunities and challenges of AI on satellite processing units. In Proceedings of the 19th ACM International Conference on Computing Frontiers, New York, NY, USA, 17–22 May 2022; pp. 221–224.

- Tao, C.; Gao, J.; Wang, T. Testing and Quality Validation for AI Software–Perspectives, Issues, and Practices. IEEE Access 2019, 7, 120164–120175.

- Chen, G.; Guan, N.; Huang, K.; Yi, W. Fault-tolerant real-time tasks scheduling with dynamic fault handling. J. Syst. Archit. 2020, 102, 101688.

- Valente, F.; Eramo, V.; Lavacca, F.G. Optimal bandwidth and computing resource allocation in low earth orbit satellite constellation for earth observation applications. Comput. Netw. 2023, 232, 109849.

- Estébanez-Camarena, M.; Taormina, R.; van de Giesen, N.; ten Veldhuis, M.C. The potential of deep learning for satellite rainfall detection over data-scarce regions, the west African savanna. Remote Sens. 2023, 15, 1922.

- Wang, P.; Jin, N.; Davies, D.; Woo, W.L. Model-centric transfer learning framework for concept drift detection. Knowl.-Based Syst. 2023, 275, 110705.

- Khammassi, M.; Kammoun, A.; Alouini, M.S. Precoding for High-Throughput Satellite Communication Systems: A Survey. IEEE Commun. Surv. Tutor. 2024, 26, 80–118.

- Salim, S.; Moustafa, N.; Reisslein, M. Cybersecurity of Satellite Communications Systems: A Comprehensive Survey of the Space, Ground, and Links Segments. IEEE Commun. Surv. Tutor. 2024; in press.

- Elhanashi, A.; Gasmi, K.; Begni, A.; Dini, P.; Zheng, Q.; Saponara, S. Machine learning techniques for anomaly-based detection system on CSE-CIC-IDS2018 dataset. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society, Genova, Italy, 26–27 September 2022; Springer: Cham, Switzerland, 2022; pp. 131–140.

- Elhanashi, A.; Dini, P.; Saponara, S.; Zheng, Q. Integration of deep learning into the iot: A survey of techniques and challenges for real-world applications. Electronics 2023, 12, 4925.

- Chen, X.; Xu, Z.; Shang, L. Satellite Internet of Things: Challenges, solutions, and development trends. Front. Inf. Technol. Electron. Eng. 2023, 24, 935–944.

- Rech, P. Artificial Neural Networks for Space and Safety-Critical Applications: Reliability Issues and Potential Solutions. IEEE Trans. Nucl. Sci. 2024, 71, 377–404.

- Bodmann, P.R.; Saveriano, M.; Kritikakou, A.; Rech, P. Neutrons Sensitivity of Deep Reinforcement Learning Policies on EdgeAI Accelerators. IEEE Trans. Nucl. Sci. 2024, 71, 1480–1486.

- Buckley, L.; Dunne, A.; Furano, G.; Tali, M. Radiation test and in orbit performance of mpsoc ai accelerator. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–9.

- Dunkel, E.R.; Swope, J.; Candela, A.; West, L.; Chien, S.A.; Towfic, Z.; Buckley, L.; Romero-Cañas, J.; Espinosa-Aranda, J.L.; Hervas-Martin, E.; et al. Benchmarking deep learning models on myriad and snapdragon processors for space applications. J. Aerosp. Inf. Syst. 2023, 20, 660–674.

- Ramaswami, D.P.; Hiemstra, D.M.; Yang, Z.W.; Shi, S.; Chen, L. Single Event Upset Characterization of the Intel Movidius Myriad X VPU and Google Edge TPU Accelerators Using Proton Irradiation. In Proceedings of the 2022 IEEE Radiation Effects Data Workshop (REDW) (in Conjunction with 2022 NSREC), Provo, UT, USA, 18–22 July 2022; pp. 1–3.

- Boutros, A.; Nurvitadhi, E.; Ma, R.; Gribok, S.; Zhao, Z.; Hoe, J.C.; Betz, V.; Langhammer, M. Beyond Peak Performance: Comparing the Real Performance of AI-Optimized FPGAs and GPUs. In Proceedings of the 2020 International Conference on Field-Programmable Technology (ICFPT), Maui, HI, USA, 9–11 December 2020; pp. 10–19.

- Google. Edge TPU. Available online: https://coral.ai/products/ (accessed on 20 October 2024).

- Google. TensorFlow Models on the Edge TPU. Available online: https://coral.ai/docs/edgetpu/models-intro (accessed on 20 October 2024).

- Google. Run Inference on the Edge TPU with Python. Available online: https://coral.ai/docs/edgetpu/tflite-python/ (accessed on 20 October 2024).

- Google. Models for Edge TPU. Available online: https://coral.ai/models/ (accessed on 20 October 2024).

- Lentaris, G.; Leon, V.; Sakos, C.; Soudris, D.; Tavoularis, A.; Costantino, A.; Polo, C.B. Performance and Radiation Testing of the Coral TPU Co-processor for AI Onboard Satellites. In Proceedings of the 2023 European Data Handling & Data Processing Conference (EDHPC), Juan-Les-Pins, France, 2–6 October 2023; pp. 1–4.

- Rech Junior, R.L.; Malde, S.; Cazzaniga, C.; Kastriotou, M.; Letiche, M.; Frost, C.; Rech, P. High Energy and Thermal Neutron Sensitivity of Google Tensor Processing Units. IEEE Trans. Nucl. Sci. 2022, 69, 567–575.

- Goodwill, J.; Crum, G.; MacKinnon, J.; Brewer, C.; Monaghan, M.; Wise, T.; Wilson, C. NASA spacecube edge TPU smallsat card for autonomous operations and onboard science-data analysis. In Proceedings of the Small Satellite Conference, Virtual, 7–12 August 2021. SSC21-VII-08.

- Nvidia. Jetson Orin for Next-Gen Robotics. Available online: https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-orin/ (accessed on 20 October 2024).

- Nvidia. Jetson Orin Nano Developer Kit Getting Started. Available online: https://developer.nvidia.com/embedded/learn/get-started-jetson-orin-nano-devkit (accessed on 20 October 2024).

- Nvidia. TensorRT SDK. Available online: https://developer.nvidia.com/tensorrt (accessed on 20 October 2024).

- Slater, W.S.; Tiwari, N.P.; Lovelly, T.M.; Mee, J.K. Total ionizing dose radiation testing of NVIDIA Jetson nano GPUs. In Proceedings of the 2020 IEEE High Performance Extreme Computing Conference (HPEC), Waltham, MA, USA, 21–25 September 2020; pp. 1–3. [Google Scholar]

- Rad, I.O.; Alarcia, R.M.G.; Dengler, S.; Golkar, A.; Manfletti, C. Preliminary Evaluation of Commercial Off-The-Shelf GPUs for Machine Learning Applications in Space. Master’s Thesis, Technical University of Munich, Munich, Germany, 2023. [Google Scholar]

- Del Castillo, M.O.; Morgan, J.; Mcrobbie, J.; Therakam, C.; Joukhadar, Z.; Mearns, R.; Barraclough, S.; Sinnott, R.; Woods, A.; Bayliss, C.; et al. Mitigating Challenges of the Space Environment for Onboard Artificial Intelligence: Design Overview of the Imaging Payload on SpIRIT. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 17–21 June 2024; pp. 6789–6798. [Google Scholar]

- Giuffrida, G.; Diana, L.; de Gioia, F.; Benelli, G.; Meoni, G.; Donati, M.; Fanucci, L. CloudScout: A deep neural network for on-board cloud detection on hyperspectral images. Remote Sens. 2020, 12, 2205. [Google Scholar] [CrossRef]

- Dunkel, E.; Swope, J.; Towfic, Z.; Chien, S.; Russell, D.; Sauvageau, J.; Sheldon, D.; Romero-Cañas, J.; Espinosa-Aranda, J.L.; Buckley, L.; et al. Benchmarking deep learning inference of remote sensing imagery on the Qualcomm Snapdragon and Intel Movidius Myriad X processors onboard the International Space Station. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 5301–5304. [Google Scholar]

- Furano, G.; Meoni, G.; Dunne, A.; Moloney, D.; Ferlet-Cavrois, V.; Tavoularis, A.; Byrne, J.; Buckley, L.; Psarakis, M.; Voss, K.O.; et al. Towards the Use of Artificial Intelligence on the Edge in Space Systems: Challenges and Opportunities. IEEE Aerosp. Electron. Syst. Mag. 2020, 35, 44–56. [Google Scholar] [CrossRef]

- AMD. Virtex-5QV Family Data Sheet. Available online: https://docs.amd.com/v/u/en-US/ds192_V5QV_Device_Overview (accessed on 20 October 2024).

- Microchip. ProASIC 3 FPGAs. Available online: https://www.microchip.com/en-us/products/fpgas-and-plds/fpgas/proasic-3-fpgas (accessed on 20 October 2024).

- Bosser, A.; Kohler, P.; Salles, J.; Foucher, M.; Bezine, J.; Perrot, N.; Wang, P.X. Review of TID Effects Reported in ProASIC3 and ProASIC3L FPGAs for 3D PLUS Camera Heads. In Proceedings of the 2023 IEEE Radiation Effects Data Workshop (REDW) (in conjunction with 2023 NSREC), Kansas City, MO, USA, 24–28 July 2023; pp. 1–6. [Google Scholar]

- Microchip. RTG4 Radiation-Tolerant FPGAs. Available online: https://www.microchip.com/en-us/products/fpgas-and-plds/radiation-tolerant-fpgas/rtg4-radiation-tolerant-fpgas (accessed on 20 October 2024).

- Berg, M.D.; Kim, H.; Phan, A.; Seidleck, C.; LaBel, K.; Pellish, J.; Campola, M. Microsemi RTG4 Rev C Field Programmable Gate Array Single Event Effects (SEE) Heavy-Ion Test Report; Technical Report; 2019. Available online: https://ntrs.nasa.gov/citations/20190001593 (accessed on 20 October 2024).

- Tambara, L.A.; Andersson, J.; Sturesson, F.; Jalle, J.; Sharp, R. Dynamic Heavy Ion SEE Testing of Microsemi RTG4 Flash-based FPGA Embedding a LEON4FT-based SoC. In Proceedings of the 2018 18th European Conference on Radiation and Its Effects on Components and Systems (RADECS), Gothenburg, Sweden, 16–21 September 2018; pp. 1–6. [Google Scholar]

- Kim, H.; Park, J.; Lee, H.; Won, D.; Han, M. An FPGA-Accelerated CNN with Parallelized Sum Pooling for Onboard Realtime Routing in Dynamic Low-Orbit Satellite Networks. Electronics 2024, 13, 2280. [Google Scholar] [CrossRef]

- Rapuano, E.; Meoni, G.; Pacini, T.; Dinelli, G.; Furano, G.; Giuffrida, G.; Fanucci, L. An FPGA-based hardware accelerator for CNNs inference on board satellites: Benchmarking with Myriad 2-based solution for the CloudScout case study. Remote Sens. 2021, 13, 1518. [Google Scholar] [CrossRef]

- Pitonak, R.; Mucha, J.; Dobis, L.; Javorka, M.; Marusin, M. CloudSatNet-1: FPGA-based hardware-accelerated quantized CNN for satellite on-board cloud coverage classification. Remote Sens. 2022, 14, 3180. [Google Scholar] [CrossRef]

- Nannipieri, P.; Giuffrida, G.; Diana, L.; Panicacci, S.; Zulberti, L.; Fanucci, L.; Hernandez, H.G.M.; Hubner, M. ICU4SAT: A general-purpose reconfigurable instrument control unit based on open source components. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022. [Google Scholar]

- Cosmas, K.; Kenichi, A. Utilization of FPGA for onboard inference of landmark localization in CNN-based spacecraft pose estimation. Aerospace 2020, 7, 159. [Google Scholar] [CrossRef]

- Intel. Next-Level Neuromorphic Computing: Intel Lab’s Loihi 2 Chip. Available online: https://www.intel.com/content/www/us/en/research/neuromorphic-computing-loihi-2-technology-brief.html (accessed on 20 October 2024).

- Davies, M.; Srinivasa, N.; Lin, T.H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 2018, 38, 82–99. [Google Scholar] [CrossRef]

- Ingeniars. GPU@SAT. Available online: https://www.ingeniars.com/in_product/gpusat/ (accessed on 20 October 2024).

- Benelli, G.; Todaro, G.; Monopoli, M.; Giuffrida, G.; Donati, M.; Fanucci, L. GPU@SAT DevKit: Empowering Edge Computing Development Onboard Satellites in the Space-IoT Era. Electronics 2024, 13, 3928. [Google Scholar] [CrossRef]

- Benelli, G.; Giuffrida, G.; Ciardi, R.; Davalle, D.; Todaro, G.; Fanucci, L. GPU@SAT, the AI enabling ecosystem for on-board satellite applications. In Proceedings of the 2023 European Data Handling & Data Processing Conference (EDHPC), Juan-Les-Pins, France, 2–6 October 2023; pp. 1–4. [Google Scholar]

- MHI. AIRIS. Available online: https://www.mhi.com/news/240306.html (accessed on 20 October 2024).

- Blue Marble Communications (BMC). BMC Products. Available online: https://www.bluemarblecomms.com/products/ (accessed on 20 October 2024).

- Blue Marble Communications (BMC); BruhnBruhn Innovation (BBI). Space Edge Processor and Dacreo AI Ecosystem. Available online: https://bruhnbruhn.com/wp-content/uploads/2024/03/SAT2024-SEP-Apps-Demo-Flyer.pdf (accessed on 20 October 2024).

- Blue Marble Communications (BMC); BruhnBruhn Innovation (BBI). dacreo: Space AI Cloud Computing. Available online: https://bruhnbruhn.com/dacreo-space-ai-cloud-computing/ (accessed on 20 October 2024).

- AIKO. AIKO Onboard Data Processing Suite. Available online: https://aikospace.com/projects/aiko-onboard-data-processing-suite/ (accessed on 20 October 2024).

- Dini, P.; Diana, L.; Elhanashi, A.; Saponara, S. Overview of AI-Models and Tools in Embedded IIoT Applications. Electronics 2024, 13, 2322. [Google Scholar] [CrossRef]

- Furano, G.; Tavoularis, A.; Rovatti, M. AI in space: Applications examples and challenges. In Proceedings of the 2020 IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems (DFT), Frascati, Italy, 19–21 October 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Dini, P.; Elhanashi, A.; Begni, A.; Saponara, S.; Zheng, Q.; Gasmi, K. Overview on intrusion detection systems design exploiting machine learning for networking cybersecurity. Appl. Sci. 2023, 13, 7507. [Google Scholar] [CrossRef]

- Dini, P.; Saponara, S. Analysis, design, and comparison of machine-learning techniques for networking intrusion detection. Designs 2021, 5, 9. [Google Scholar] [CrossRef]

- Wei, L.; Ma, Z.; Yang, C.; Yao, Q. Advances in the Neural Network Quantization: A Comprehensive Review. Appl. Sci. 2024, 14, 7445. [Google Scholar] [CrossRef]

- Dantas, P.V.; Sabino da Silva, W., Jr.; Cordeiro, L.C.; Carvalho, C.B. A comprehensive review of model compression techniques in machine learning. Appl. Intell. 2024, 54, 11804–11844. [Google Scholar] [CrossRef]

- Deng, L.; Li, G.; Han, S.; Shi, L.; Xie, Y. Model compression and hardware acceleration for neural networks: A comprehensive survey. Proc. IEEE 2020, 108, 485–532. [Google Scholar] [CrossRef]

- Ekelund, J.; Vinuesa, R.; Khotyaintsev, Y.; Henri, P.; Delzanno, G.L.; Markidis, S. AI in Space for Scientific Missions: Strategies for Minimizing Neural-Network Model Upload. arXiv 2024, arXiv:2406.14297. [Google Scholar]

- Olshevsky, V.; Khotyaintsev, Y.V.; Lalti, A.; Divin, A.; Delzanno, G.L.; Anderzén, S.; Herman, P.; Chien, S.W.; Avanov, L.; Dimmock, A.P.; et al. Automated classification of plasma regions using 3D particle energy distributions. J. Geophys. Res. Space Phys. 2021, 126, e2021JA029620. [Google Scholar] [CrossRef]

- Guerrisi, G.; Del Frate, F.; Schiavon, G. Satellite on-board change detection via auto-associative neural networks. Remote Sens. 2022, 14, 2735. [Google Scholar] [CrossRef]

- Ziaja, M.; Bosowski, P.; Myller, M.; Gajoch, G.; Gumiela, M.; Protich, J.; Borda, K.; Jayaraman, D.; Dividino, R.; Nalepa, J. Benchmarking deep learning for on-board space applications. Remote Sens. 2021, 13, 3981. [Google Scholar] [CrossRef]

- Ghassemi, S.; Magli, E. Convolutional neural networks for on-board cloud screening. Remote Sens. 2019, 11, 1417. [Google Scholar] [CrossRef]

- Hughes, M.J.; Hayes, D.J. Automated detection of cloud and cloud shadow in single-date Landsat imagery using neural networks and spatial post-processing. Remote Sens. 2014, 6, 4907–4926. [Google Scholar] [CrossRef]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Lagunas, E.; Ortiz, F.; Eappen, G.; Daoud, S.; Martins, W.A.; Querol, J.; Chatzinotas, S.; Skatchkovsky, N.; Rajendran, B.; Simeone, O. Performance Evaluation of Neuromorphic Hardware for Onboard Satellite Communication Applications. arXiv 2024, arXiv:2401.06911. [Google Scholar]

- Orchard, G.; Frady, E.P.; Rubin, D.B.D.; Sanborn, S.; Shrestha, S.B.; Sommer, F.T.; Davies, M. Efficient neuromorphic signal processing with Loihi 2. In Proceedings of the 2021 IEEE Workshop on Signal Processing Systems (SiPS), Coimbra, Portugal, 20–22 October 2021; pp. 254–259. [Google Scholar]

- Intel. Intel Advances Neuromorphic with Loihi 2, New Lava Software Framework and New Partners. Available online: https://www.intel.com/content/www/us/en/newsroom/news/intel-unveils-neuromorphic-loihi-2-lava-software.html#gs.ezemn0 (accessed on 20 October 2024).

- LAVA. Lava Software Framework. Available online: https://lava-nc.org/ (accessed on 20 October 2024).

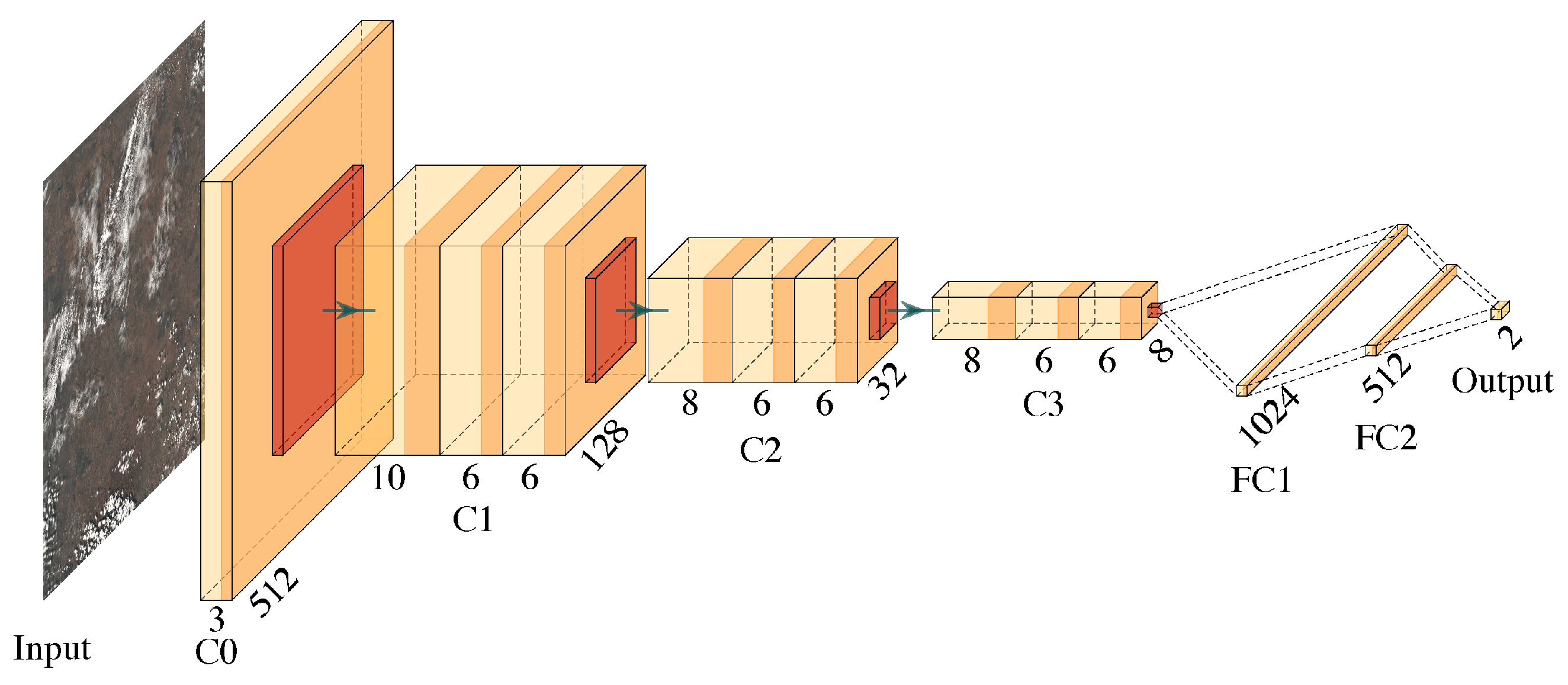

- Ieracitano, C.; Mammone, N.; Spagnolo, F.; Frustaci, F.; Perri, S.; Corsonello, P.; Morabito, F.C. An explainable embedded neural system for on-board ship detection from optical satellite imagery. Eng. Appl. Artif. Intell. 2024, 133, 108517. [Google Scholar] [CrossRef]

- Giuffrida, G.; Fanucci, L.; Meoni, G.; Batič, M.; Buckley, L.; Dunne, A.; van Dijk, C.; Esposito, M.; Hefele, J.; Vercruyssen, N.; et al. The Φ-Sat-1 Mission: The First On-Board Deep Neural Network Demonstrator for Satellite Earth Observation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14. [Google Scholar] [CrossRef]

- Cucchetti, E.; Latry, C.; Blanchet, G.; Delvit, J.M.; Bruno, M. Onboard/on-ground image processing chain for high-resolution Earth observation satellites. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 755–762. [Google Scholar] [CrossRef]

- Chintalapati, B.; Precht, A.; Hanra, S.; Laufer, R.; Liwicki, M.; Eickhoff, J. Opportunities and challenges of on-board AI-based image recognition for small satellite Earth observation missions. Adv. Space Res. 2024; in press. [Google Scholar] [CrossRef]

- de Vieilleville, F.; Lagrange, A.; Ruiloba, R.; May, S. Towards distillation of deep neural networks for satellite on-board image segmentation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1553–1559. [Google Scholar] [CrossRef]

- Goudemant, T.; Francesconi, B.; Aubrun, M.; Kervennic, E.; Grenet, I.; Bobichon, Y.; Bellizzi, M. Onboard Anomaly Detection for Marine Environmental Protection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7918–7931. [Google Scholar] [CrossRef]

- Begni, A.; Dini, P.; Saponara, S. Design and test of an LSTM-based algorithm for Li-ion batteries remaining useful life estimation. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society, Genoa, Italy, 26–27 September 2022; Springer: Cham, Switzerland, 2022; pp. 373–379. [Google Scholar]

- Dini, P.; Ariaudo, G.; Botto, G.; Greca, F.L.; Saponara, S. Real-time electro-thermal modelling and predictive control design of resonant power converter in full electric vehicle applications. IET Power Electron. 2023, 16, 2045–2064. [Google Scholar] [CrossRef]

- Murphy, J.; Ward, J.E.; Mac Namee, B. An Overview of Machine Learning Techniques for Onboard Anomaly Detection in Satellite Telemetry. In Proceedings of the 2023 European Data Handling & Data Processing Conference (EDHPC), Juan-Les-Pins, France, 2–6 October 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Muthusamy, V.; Kumar, K.D. Failure prognosis and remaining useful life prediction of control moment gyroscopes onboard satellites. Adv. Space Res. 2022, 69, 718–726. [Google Scholar] [CrossRef]

- Salazar, C.; Gonzalez-Llorente, J.; Cardenas, L.; Mendez, J.; Rincon, S.; Rodriguez-Ferreira, J.; Acero, I.F. Cloud detection autonomous system based on machine learning and COTS components on-board small satellites. Remote Sens. 2022, 14, 5597. [Google Scholar] [CrossRef]

- Murphy, J.; Ward, J.E.; Mac Namee, B. Low-power boards enabling ML-based approaches to FDIR in space-based applications. In Proceedings of the 35th Annual Small Satellite Conference, Salt Lake City, UT, USA, 6–11 August 2021. [Google Scholar]

- Pacini, F.; Dini, P.; Fanucci, L. Cooperative Driver Assistance for Electric Wheelchair. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society, Genoa, Italy, 28–29 September 2023; Springer: Cham, Switzerland, 2023; pp. 109–116. [Google Scholar]

- Pacini, F.; Dini, P.; Fanucci, L. Design of an Assisted Driving System for Obstacle Avoidance Based on Reinforcement Learning Applied to Electrified Wheelchairs. Electronics 2024, 13, 1507. [Google Scholar] [CrossRef]

- Hao, Z.; Shyam, R.A.; Rathinam, A.; Gao, Y. Intelligent spacecraft visual GNC architecture with the state-of-the-art AI components for on-orbit manipulation. Front. Robot. AI 2021, 8, 639327. [Google Scholar] [CrossRef]

- Buonagura, C.; Pugliatti, M.; Franzese, V.; Topputo, F.; Zeqaj, A.; Zannoni, M.; Varile, M.; Bloise, I.; Fontana, F.; Rossi, F.; et al. Deep Learning for Navigation of Small Satellites About Asteroids: An Introduction to the DeepNav Project. In Proceedings of the International Conference on Applied Intelligence and Informatics, Reggio Calabria, Italy, 1–3 September 2022; Springer: Cham, Switzerland, 2022; pp. 259–271. [Google Scholar]

- Buonagura, C.; Borgia, S.; Pugliatti, M.; Morselli, A.; Topputo, F.; Corradino, F.; Visconti, P.; Deva, L.; Fedele, A.; Leccese, G.; et al. The CubeSat Mission FUTURE: A Preliminary Analysis to Validate the On-Board Autonomous Orbit Determination. In Proceedings of the 12th International Conference on Guidance, Navigation & Control Systems (GNC) and 9th International Conference on Astrodynamics Tools and Techniques (ICATT), Sopot, Poland, 12–16 June 2023; pp. 1–15. [Google Scholar]

- Fourati, F.; Alouini, M.S. Artificial intelligence for satellite communication: A review. Intell. Converg. Netw. 2021, 2, 213–243. [Google Scholar] [CrossRef]

- Gómez, P.; Meoni, G. Tackling the Satellite Downlink Bottleneck with Federated Onboard Learning of Image Compression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 6809–6818. [Google Scholar]

- Guerrisi, G.; Bencivenni, G.; Schiavon, G.; Del Frate, F. On-Board Multispectral Image Compression with an Artificial Intelligence Based Algorithm. In Proceedings of the IGARSS 2024—2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 2555–2559. [Google Scholar] [CrossRef]

- Garimella, S. Onboard deep learning for efficient small satellite reflectance retrievals and downlink. In Proceedings of the Image and Signal Processing for Remote Sensing XXIX, Amsterdam, The Netherlands, 4–5 September 2023; Volume 12733, pp. 20–23. [Google Scholar]

- Navarro, T.; Dinis, D.D.C. Future Trends in AI for Satellite Communications. In Proceedings of the 2024 9th International Conference on Machine Learning Technologies, Oslo, Norway, 24–26 May 2024; pp. 64–73. [Google Scholar]

| Hardware | Computational Efficiency (TOPS/W) | Power Consumption (W) | Suitability for Space |
|---|---|---|---|
| Intel Loihi 2 | 10 | <1 | High |
| Memristors | Varies | <1 | High |
| Google Edge TPU | 4 | 2 | Tested |
| Nvidia Jetson Orin Nano | 6 | 7–15 | Tested |
| Intel Movidius Myriad X | 1.5 | 1–2 | Tested |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Diana, L.; Dini, P. Review on Hardware Devices and Software Techniques Enabling Neural Network Inference Onboard Satellites. Remote Sens. 2024, 16, 3957. https://doi.org/10.3390/rs16213957