Detecting Wheat Heads from UAV Low-Altitude Remote Sensing Images Using Deep Learning Based on Transformer

Abstract

1. Introduction

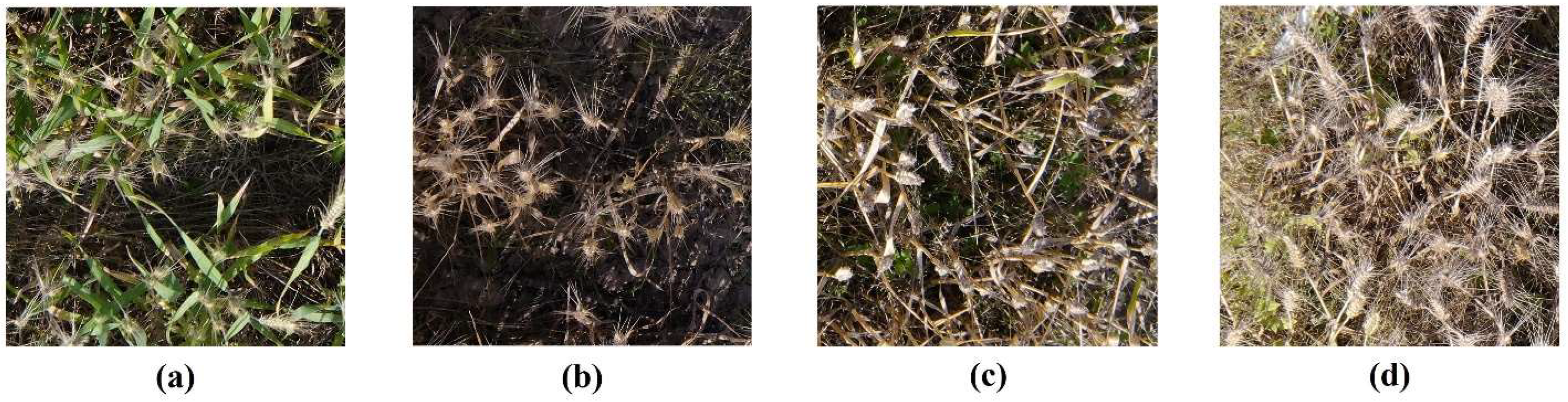

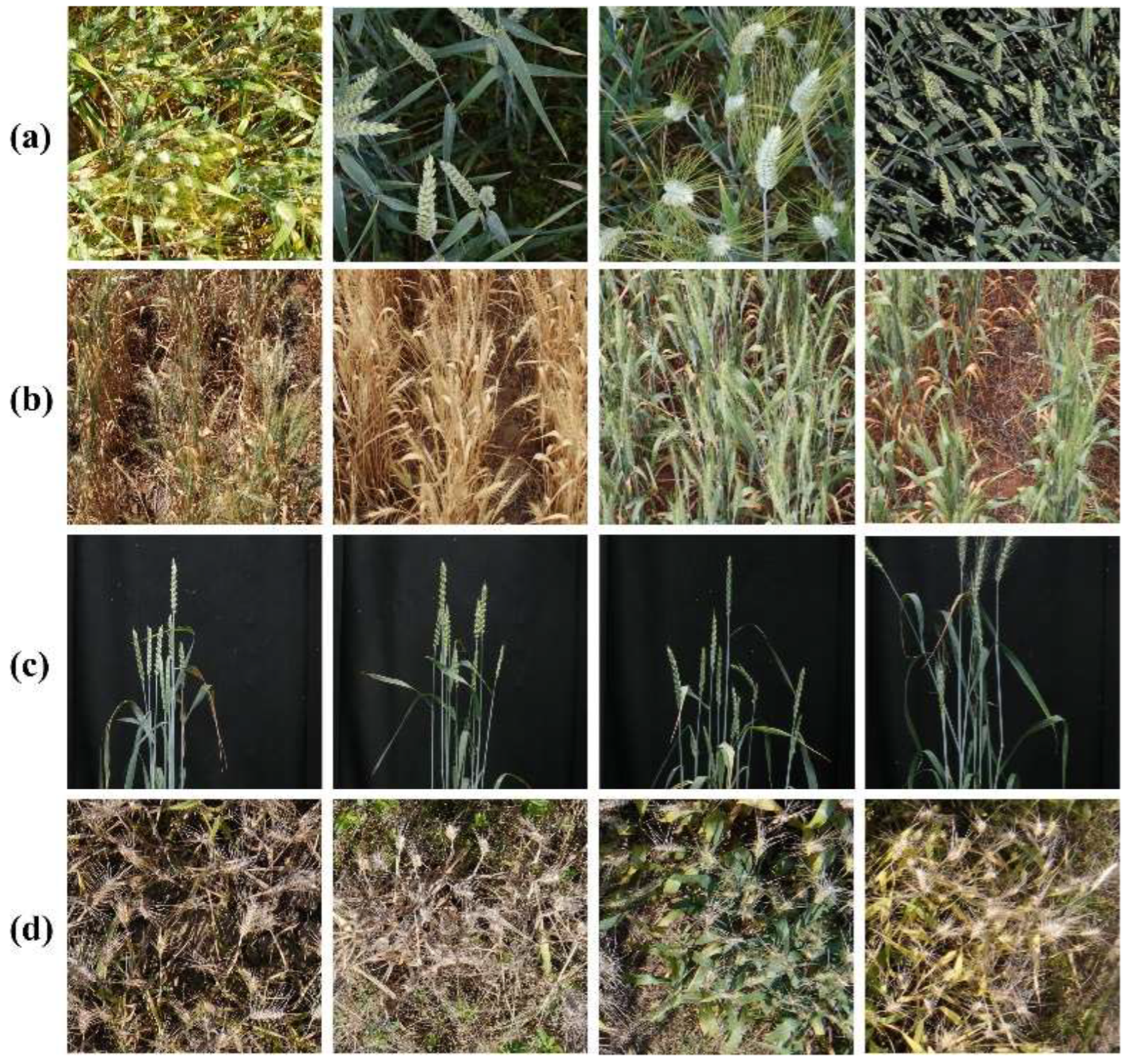

- The size, color, shape, and texture of wheat heads vary greatly depending on the variety and growth stage of wheat (Figure 1a).

- Wheat heads grow at different heights, angles, and orientations; field illumination is uneven and unstable (Figure 1b); and wind shakes the heads (Figure 1c). Together these factors cause large variation in the visual appearance of wheat heads, which hampers accurate identification.

- Dense planting produces an extremely dense distribution of wheat heads and severe occlusion, with different wheat organs blocking one another (Figure 1d).

2. Materials and Methods

2.1. Data Collection and Processing

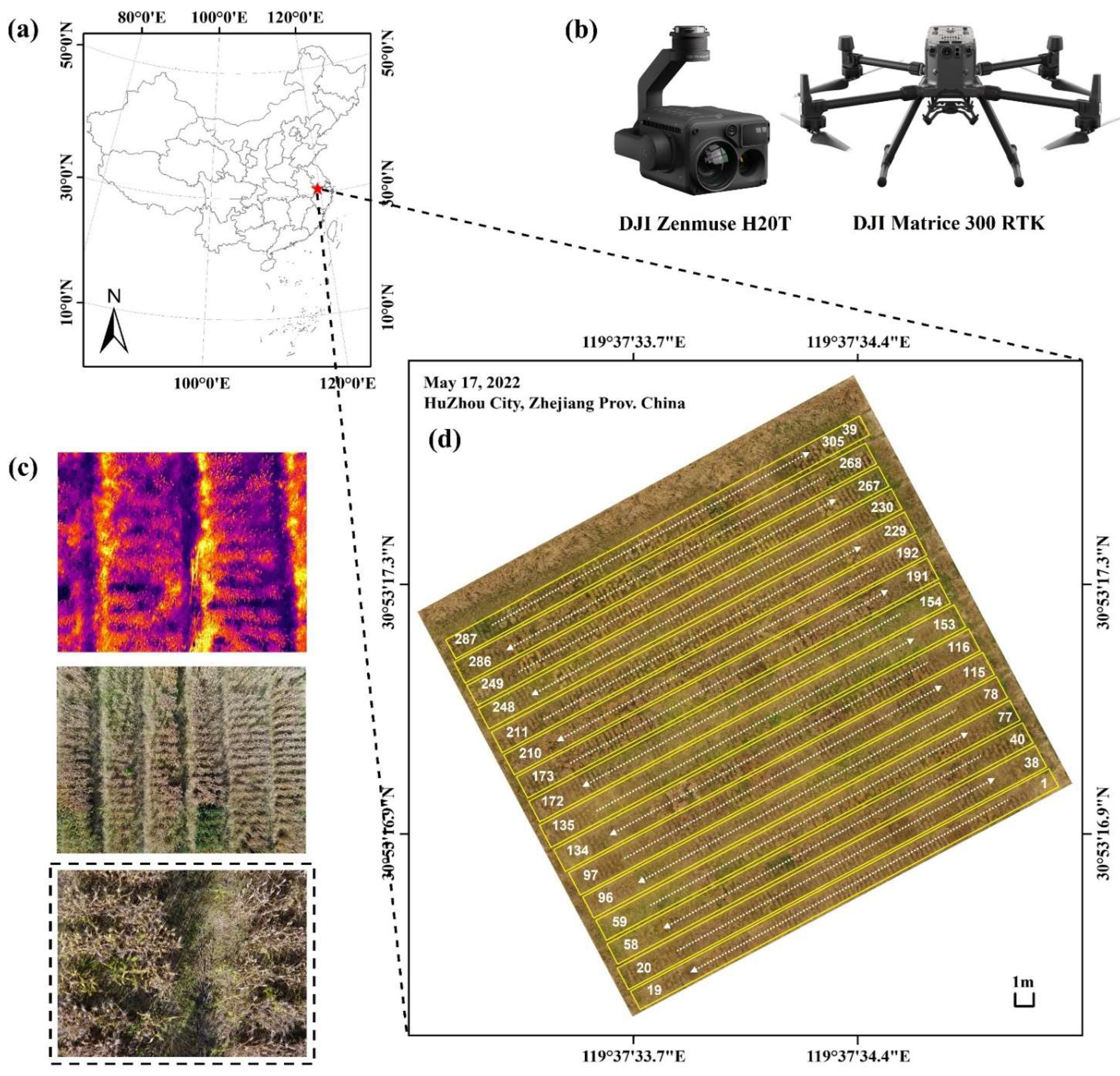

2.1.1. Experimental Site and Plant Material

2.1.2. Dataset

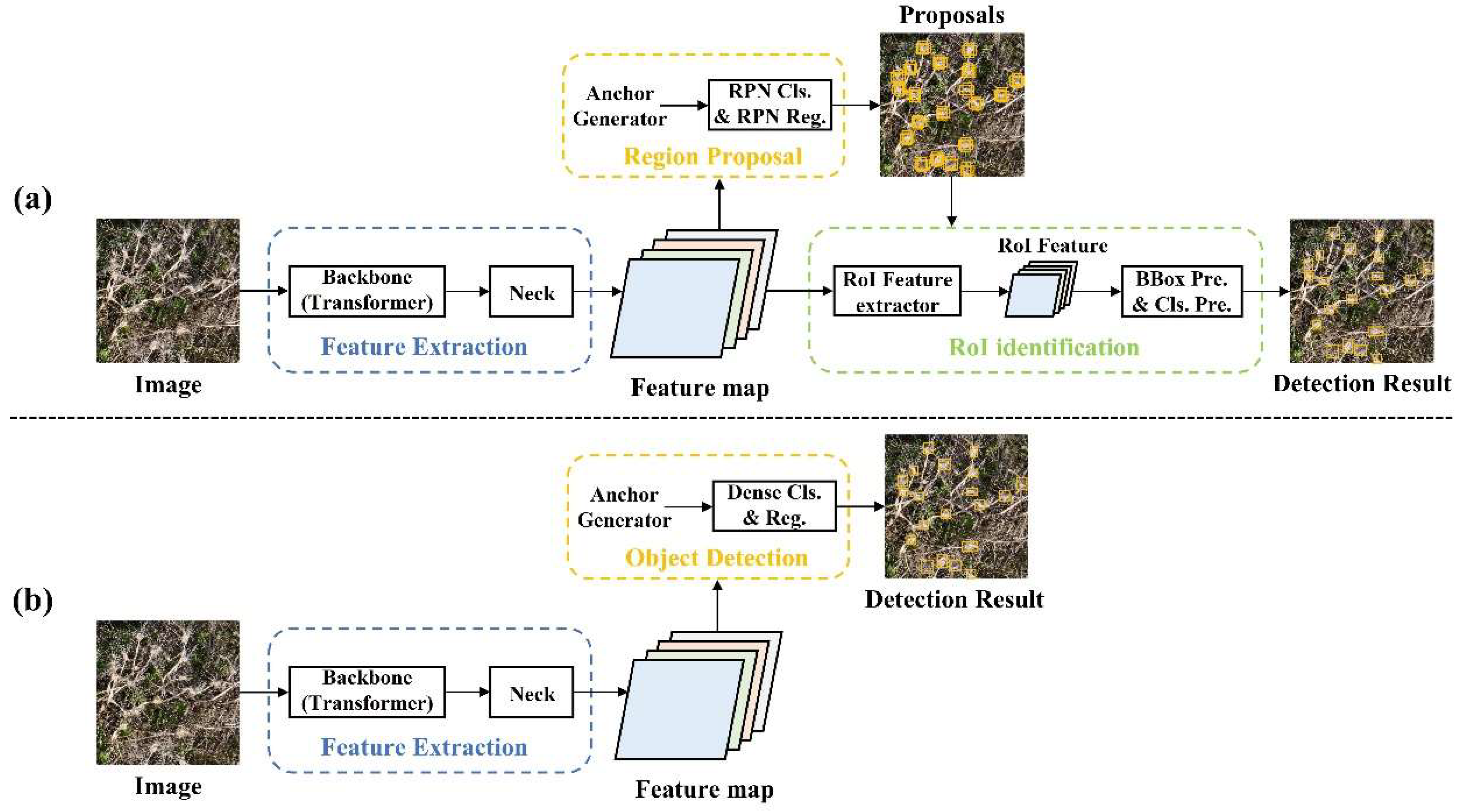

2.2. Wheat Head Detection Method

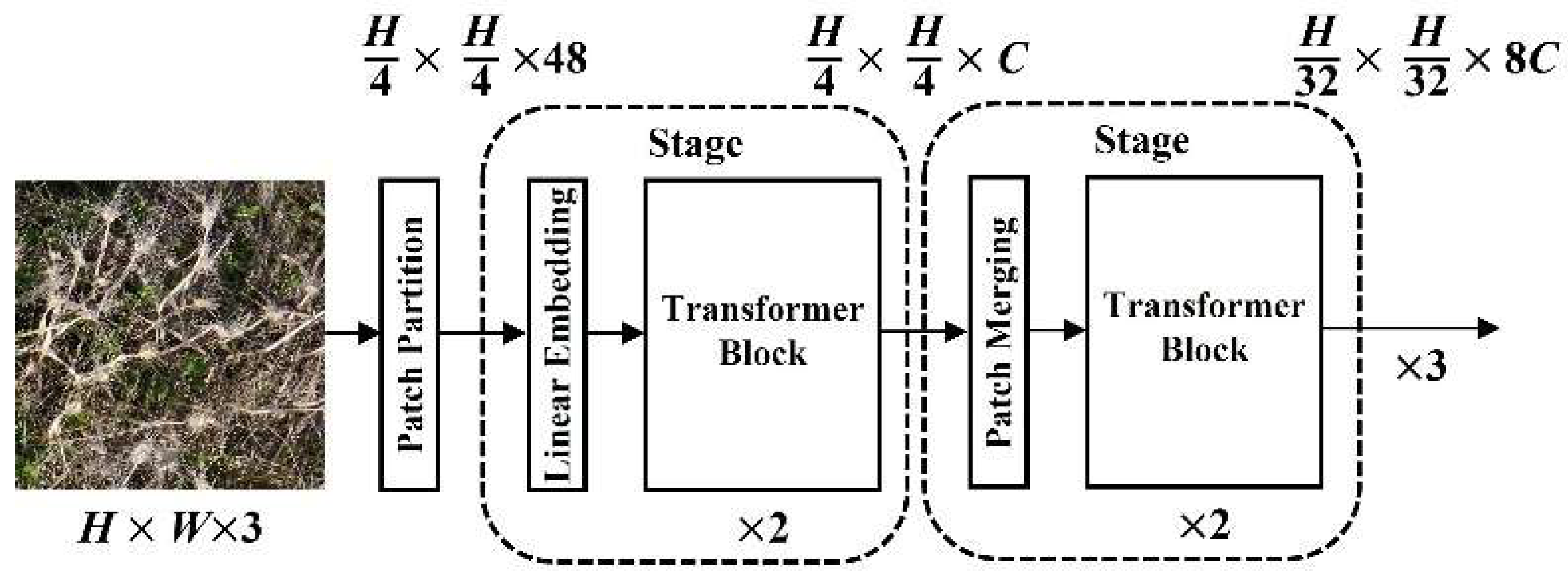

2.2.1. Transformer

2.2.2. Wheat Head Transformer Detection Method

2.2.3. Model Training and Testing

2.2.4. Evaluation Metrics

3. Results

3.1. Wheat Head Detection Results

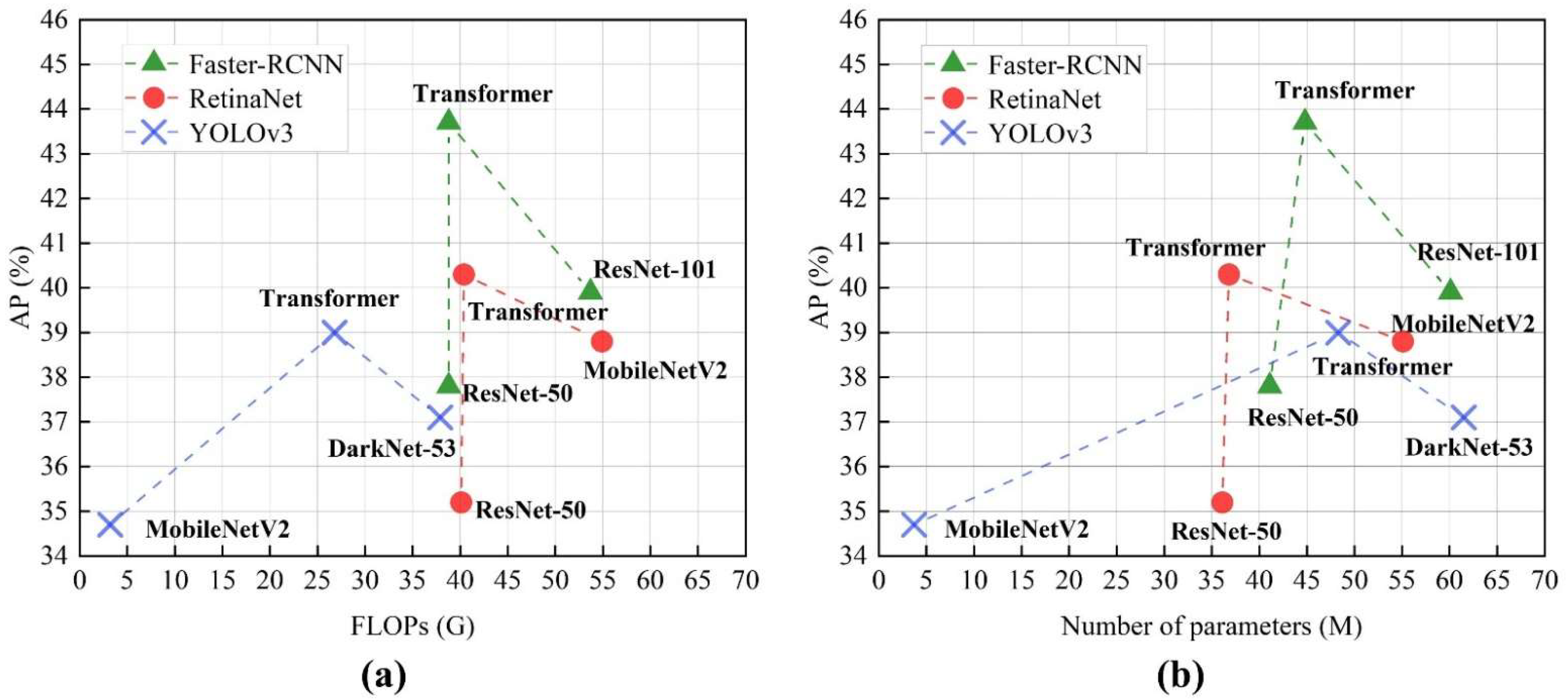

3.2. Comparison with Other Object Detection Methods

3.3. Comparing the Proposed Method on Different Common Wheat Head Datasets

4. Discussion

4.1. Robustness Interpretation of the Proposed Method and Optimization Direction of Detection Results

4.2. Comparison of Detection Metrics for Different Input Image Sizes

4.3. Detection of Wheat Head Using Different Backbones

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Method | Backbone | AP | AP50 | AP75 | APS | APM | APL | Params | FLOPs |
|---|---|---|---|---|---|---|---|---|---|
| Faster-RCNN | ResNet-50 | 37.8 | 79.4 | 32.0 | 2.5 | 38.0 | 49.6 | 41.1 M | 38.8 G |
| Faster-RCNN | ResNet-101 | 39.9 | 81.3 | 34.2 | 3.0 | 40.8 | 51.5 | 60.1 M | 53.7 G |
| Faster-RCNN | Transformer | 43.7 | 88.3 | 38.5 | 6.4 | 44.0 | 54.1 | 44.8 M | 38.8 G |
| RetinaNet | ResNet-50 | 35.2 | 75.6 | 26.8 | 2.6 | 32.5 | 42.1 | 36.1 M | 40.1 G |
| RetinaNet | ResNet-101 | 38.8 | 78.6 | 30.1 | 3.5 | 36.3 | 45.6 | 55.1 M | 54.9 G |
| RetinaNet | Transformer | 40.3 | 81.9 | 32.3 | 4.9 | 40.6 | 48.2 | 36.8 M | 40.4 G |
| YOLOv3 | DarkNet-53 | 37.1 | 78.6 | 31.8 | 3.5 | 36.7 | 42.1 | 61.5 M | 37.9 G |
| YOLOv3 | MobileNetV2 | 34.7 | 72.2 | 24.9 | 2.4 | 33.1 | 38.3 | 3.7 M | 3.2 G |
| YOLOv3 | Transformer | 39.0 | 80.9 | 33.6 | 3.8 | 39.6 | 45.3 | 48.3 M | 26.8 G |
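To make the backbone comparison in the table above easier to read, the following sketch computes how many AP points the Transformer backbone gains over a chosen baseline backbone for each detector. The AP values are copied from the table; the dictionary layout and the function name `ap_gain` are illustrative choices, not part of the original work.

```python
# AP values (in percent) copied from the backbone comparison table above.
AP = {
    "Faster-RCNN": {"ResNet-50": 37.8, "ResNet-101": 39.9, "Transformer": 43.7},
    "RetinaNet": {"ResNet-50": 35.2, "ResNet-101": 38.8, "Transformer": 40.3},
    "YOLOv3": {"DarkNet-53": 37.1, "MobileNetV2": 34.7, "Transformer": 39.0},
}


def ap_gain(detector: str, baseline: str) -> float:
    """AP difference (percentage points) between the Transformer backbone and a baseline."""
    row = AP[detector]
    return round(row["Transformer"] - row[baseline], 1)


if __name__ == "__main__":
    pairs = [
        ("Faster-RCNN", "ResNet-50"),
        ("RetinaNet", "ResNet-50"),
        ("YOLOv3", "DarkNet-53"),
    ]
    for detector, baseline in pairs:
        print(f"{detector}: +{ap_gain(detector, baseline)} AP over {baseline}")
```

With these table values, the gain is largest for Faster-RCNN (5.9 points over ResNet-50) and smallest for YOLOv3 (1.9 points over DarkNet-53).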

References

- Hansen, L.B.S.; Roager, H.M.; Sondertoft, N.B.; Gobel, R.J.; Kristensen, M.; Valles-Colomer, M.; Vieira-Silva, S.; Ibrugger, S.; Lind, M.V.; Maerkedahl, R.B.; et al. A Low-Gluten Diet Induces Changes in the Intestinal Microbiome of Healthy Danish Adults. Nat. Commun. 2018, 9, 4630.

- Delabre, I.; Rodriguez, L.O.; Smallwood, J.M.; Scharlemann, J.P.W.; Alcamo, J.; Antonarakis, A.S.; Rowhani, P.; Hazell, R.J.; Aksnes, D.L.; Balvanera, P.; et al. Actions on Sustainable Food Production and Consumption for the Post-2020 Global Biodiversity Framework. Sci. Adv. 2021, 7, eabc8259.

- Deutsch, C.A.; Tewksbury, J.J.; Tigchelaar, M.; Battisti, D.S.; Merrill, S.C.; Huey, R.B.; Naylor, R.L. Increase in Crop Losses to Insect Pests in a Warming Climate. Science 2018, 361, 916–919.

- Kinnunen, P.; Guillaume, J.H.A.; Taka, M.; D’Odorico, P.; Siebert, S.; Puma, M.J.; Jalava, M.; Kummu, M. Local Food Crop Production Can Fulfil Demand for Less than One-Third of the Population. Nat. Food 2020, 1, 229–237.

- Laborde, D.; Martin, W.; Swinnen, J.; Vos, R. COVID-19 Risks to Global Food Security. Science 2020, 369, 500–502.

- Watson, A.; Ghosh, S.; Williams, M.J.; Cuddy, W.S.; Simmonds, J.; Rey, M.-D.; Hatta, M.A.M.; Hinchliffe, A.; Steed, A.; Reynolds, D.; et al. Speed Breeding Is a Powerful Tool to Accelerate Crop Research and Breeding. Nat. Plants 2018, 4, 23–29.

- Wang, H.; Sun, S.; Ge, W.; Zhao, L.; Hou, B.; Wang, K.; Lyu, Z.; Chen, L.; Xu, S.; Guo, J.; et al. Horizontal Gene Transfer of Fhb7 from Fungus Underlies Fusarium Head Blight Resistance in Wheat. Science 2020, 368, eaba5435.

- Xiong, W.; Reynolds, M.P.; Crossa, J.; Schulthess, U.; Sonder, K.; Montes, C.; Addimando, N.; Singh, R.P.; Ammar, K.; Gerard, B.; et al. Increased Ranking Change in Wheat Breeding under Climate Change. Nat. Plants 2021, 7, 1207–1212.

- Zhang, X.; Jia, H.; Li, T.; Wu, J.; Nagarajan, R.; Lei, L.; Powers, C.; Kan, C.-C.; Hua, W.; Liu, Z.; et al. TaCol-B5 Modifies Spike Architecture and Enhances Grain Yield in Wheat. Science 2022, 376, 180–183.

- Yao, H.; Xie, Q.; Xue, S.; Luo, J.; Lu, J.; Kong, Z.; Wang, Y.; Zhai, W.; Lu, N.; Wei, R.; et al. HL2 on Chromosome 7D of Wheat (Triticum aestivum L.) Regulates Both Head Length and Spikelet Number. Theor. Appl. Genet. 2019, 132, 1789–1797.

- Yao, H.; Qin, R.; Chen, X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sens. 2019, 11, 1443.

- Jin, X.; Zarco-Tejada, P.J.; Schmidhalter, U.; Reynolds, M.P.; Hawkesford, M.J.; Varshney, R.K.; Yang, T.; Nie, C.; Li, Z.; Ming, B.; et al. High-Throughput Estimation of Crop Traits: A Review of Ground and Aerial Phenotyping Platforms. IEEE Geosci. Remote Sens. Mag. 2021, 9, 200–231.

- Zhang, B.; Wu, Y.; Zhao, B.; Chanussot, J.; Hong, D.; Yao, J.; Gao, L. Progress and Challenges in Intelligent Remote Sensing Satellite Systems. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1814–1822.

- Wang, X.; Luo, Z.; Li, W.; Hu, X.; Zhang, L.; Zhong, Y. A Self-Supervised Denoising Network for Satellite-Airborne-Ground Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5503716.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean Yield Prediction from UAV Using Multimodal Data Fusion and Deep Learning. Remote Sens. Environ. 2020, 237, 111599.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A Compilation of UAV Applications for Precision Agriculture. Comput. Netw. 2020, 172, 107148.

- de Almeida, D.R.A.; Broadbent, E.N.; Ferreira, M.P.; Meli, P.; Zambrano, A.M.A.; Gorgens, E.B.; Resende, A.F.; de Almeida, C.T.; do Amaral, C.H.; Corte, A.P.D.; et al. Monitoring Restored Tropical Forest Diversity and Structure through UAV-Borne Hyperspectral and Lidar Fusion. Remote Sens. Environ. 2021, 264, 112582.

- Reddy Maddikunta, P.K.; Hakak, S.; Alazab, M.; Bhattacharya, S.; Gadekallu, T.R.; Khan, W.Z.; Pham, Q.-V. Unmanned Aerial Vehicles in Smart Agriculture: Applications, Requirements, and Challenges. IEEE Sens. J. 2021, 21, 17608–17619.

- Cimtay, Y.; Ilk, H.G. A Novel Bilinear Unmixing Approach for Reconsideration of Subpixel Classification of Land Cover. Comput. Electron. Agric. 2018, 152, 126–140.

- Lee, M.-K.; Golzarian, M.R.; Kim, I. A New Color Index for Vegetation Segmentation and Classification. Precis. Agric. 2021, 22, 179–204.

- Li, H.; Li, Z.; Dong, W.; Cao, X.; Wen, Z.; Xiao, R.; Wei, Y.; Zeng, H.; Ma, X. An Automatic Approach for Detecting Seedlings per Hill of Machine-Transplanted Hybrid Rice Utilizing Machine Vision. Comput. Electron. Agric. 2021, 185, 106178.

- Uto, K.; Seki, H.; Saito, G.; Kosugi, Y.; Komatsu, T. Development of a Low-Cost, Lightweight Hyperspectral Imaging System Based on a Polygon Mirror and Compact Spectrometers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 861–875.

- Honkavaara, E.; Rosnell, T.; Oliveira, R.; Tommaselli, A. Band Registration of Tuneable Frame Format Hyperspectral UAV Imagers in Complex Scenes. ISPRS J. Photogramm. Remote Sens. 2017, 134, 96–109.

- Banerjee, B.P.; Raval, S.; Cullen, P.J. UAV-Hyperspectral Imaging of Spectrally Complex Environments. Int. J. Remote Sens. 2020, 41, 4136–4159.

- Heylen, R.; Parente, M.; Gader, P. A Review of Nonlinear Hyperspectral Unmixing Methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1844–1868.

- Su, J.; Yi, D.; Su, B.; Mi, Z.; Liu, C.; Hu, X.; Xu, X.; Guo, L.; Chen, W.-H. Aerial Visual Perception in Smart Farming: Field Study of Wheat Yellow Rust Monitoring. IEEE Trans. Ind. Inform. 2021, 17, 2242–2249.

- Onishi, M.; Ise, T. Explainable Identification and Mapping of Trees Using UAV RGB Image and Deep Learning. Sci. Rep. 2021, 11, 903.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in Vegetation Remote Sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Pound, M.P.; Atkinson, J.A.; Wells, D.M.; Pridmore, T.P.; French, A.P. Deep Learning for Multi-Task Plant Phenotyping. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops, ICCVW 2017, Venice, Italy, 22–29 October 2017; pp. 2055–2063.

- Hasan, M.M.; Chopin, J.P.; Laga, H.; Miklavcic, S.J. Detection and Analysis of Wheat Spikes Using Convolutional Neural Networks. Plant Methods 2018, 14, 1–13.

- David, E.; Madec, S.; Sadeghi-Tehran, P.; Aasen, H.; Zheng, B.; Liu, S.; Kirchgessner, N.; Ishikawa, G.; Nagasawa, K.; Badhon, M.A.; et al. Global Wheat Head Detection (GWHD) Dataset: A Large and Diverse Dataset of High-Resolution RGB-Labelled Images to Develop and Benchmark Wheat Head Detection Methods. Plant Phenom. 2020, 2020, 3521852.

- David, E.; Serouart, M.; Smith, D.; Madec, S.; Velumani, K.; Liu, S.; Wang, X.; Pinto, F.; Shafiee, S.; Tahir, I.S.A.; et al. Global Wheat Head Detection 2021: An Improved Dataset for Benchmarking Wheat Head Detection Methods. Plant Phenom. 2021, 2021, 9846158.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2021, Nashville, TN, USA, 20–25 June 2021; pp. 13024–13033.

- Srinivas, A.; Lin, T.-Y.; Parmar, N.; Shlens, J.; Abbeel, P.; Vaswani, A. Bottleneck Transformers for Visual Recognition. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2021, Nashville, TN, USA, 20–25 June 2021; pp. 16514–16524.

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 12009–12019.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 11976–11986.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked Autoencoders Are Scalable Vision Learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 16000–16009.

- Madec, S.; Jin, X.; Lu, H.; De Solan, B.; Liu, S.; Duyme, F.; Heritier, E.; Baret, F. Ear Density Estimation from High Resolution RGB Imagery Using Deep Learning Technique. Agric. For. Meteorol. 2019, 264, 225–234.

- Su, W.-H.; Zhang, J.; Yang, C.; Page, R.; Szinyei, T.; Hirsch, C.D.; Steffenson, B.J. Automatic Evaluation of Wheat Resistance to Fusarium Head Blight Using Dual Mask-RCNN Deep Learning Frameworks in Computer Vision. Remote Sens. 2021, 13, 26.

- Dandrifosse, S.; Ennadifi, E.; Carlier, A.; Gosselin, B.; Dumont, B.; Mercatoris, B. Deep Learning for Wheat Ear Segmentation and Ear Density Measurement: From Heading to Maturity. Comput. Electron. Agric. 2022, 199, 107161.

- Gong, B.; Ergu, D.; Cai, Y.; Ma, B. Real-Time Detection for Wheat Head Applying Deep Neural Network. Sensors 2021, 21, 191.

- Zhao, J.; Zhang, X.; Yan, J.; Qiu, X.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W. A Wheat Spike Detection Method in UAV Images Based on Improved YOLOv5. Remote Sens. 2021, 13, 3095.

- Hong, Q.; Jiang, L.; Zhang, Z.; Ji, S.; Gu, C.; Mao, W.; Li, W.; Liu, T.; Li, B.; Tan, C. A Lightweight Model for Wheat Ear Fusarium Head Blight Detection Based on RGB Images. Remote Sens. 2022, 14, 3481.

- Khaki, S.; Safaei, N.; Pham, H.; Wang, L. WheatNet: A Lightweight Convolutional Neural Network for High-Throughput Image-Based Wheat Head Detection and Counting. Neurocomputing 2022, 489, 78–89.

- Zhang, Y.; Li, M.; Ma, X.; Wu, X.; Wang, Y. High-Precision Wheat Head Detection Model Based on One-Stage Network and GAN Model. Front. Plant Sci. 2022, 13, 787852.

- Sun, J.; Yang, K.; Chen, C.; Shen, J.; Yang, Y.; Wu, X.; Norton, T. Wheat Head Counting in the Wild by an Augmented Feature Pyramid Networks-Based Convolutional Neural Network. Comput. Electron. Agric. 2022, 193, 106705.

- Wen, C.; Wu, J.; Chen, H.; Su, H.; Chen, X.; Li, Z.; Yang, C. Wheat Spike Detection and Counting in the Field Based on SpikeRetinaNet. Front. Plant Sci. 2022, 13.

- Liu, C.; Wang, K.; Lu, H.; Cao, Z. Dynamic Color Transform for Wheat Head Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops, ICCVW 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 1278–1283.

- Najafian, K.; Ghanbari, A.; Stavness, I.; Jin, L.; Shirdel, G.H.; Maleki, F. A Semi-Self-Supervised Learning Approach for Wheat Head Detection Using Extremely Small Number of Labeled Samples. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops, ICCVW 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 1342–1351.

- Bhagat, S.; Kokare, M.; Haswani, V.; Hambarde, P.; Kamble, R. WheatNet-Lite: A Novel Light Weight Network for Wheat Head Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops, ICCVW 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 1332–1341.

- Yang, G.; Chen, G.; He, Y.; Yan, Z.; Guo, Y.; Ding, J. Self-Supervised Collaborative Multi-Network for Fine-Grained Visual Categorization of Tomato Diseases. IEEE Access 2020, 8, 211912–211923.

- Yang, G.; Chen, G.; Li, C.; Fu, J.; Guo, Y.; Liang, H. Convolutional Rebalancing Network for the Classification of Large Imbalanced Rice Pest and Disease Datasets in the Field. Front. Plant Sci. 2021, 12, 1150.

- Yang, G.; He, Y.; Yang, Y.; Xu, B. Fine-Grained Image Classification for Crop Disease Based on Attention Mechanism. Front. Plant Sci. 2020, 11, 600854.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.S.; et al. Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2021, Nashville, TN, USA, 20–25 June 2021; pp. 6877–6886.

- Lin, K.; Wang, L.; Liu, Z. End-to-End Human Pose and Mesh Reconstruction with Transformers. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2021, Nashville, TN, USA, 20–25 June 2021; pp. 1954–1963.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2015; Volume 28, pp. 91–99.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In European Conference on Computer Vision—ECCV 2016; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Korea, 27 October–2 November 2019; pp. 9626–9635.

- Chen, Q.; Wang, Y.; Yang, T.; Zhang, X.; Cheng, J.; Sun, J. You Only Look One-Level Feature. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2021, Nashville, TN, USA, 20–25 June 2021; pp. 13034–13043.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

| Dataset | Release Year | Environment | Resolution (pixels) | Images | Instances |
|---|---|---|---|---|---|
| GWHD [31] | 2020 | Field | 1024 × 1024 | 3422 | 188,445 |
| SPIKE [30] | 2018 | Lab | 2001 × 1501 | 335 | 25,000 |
| ACID [29] | 2017 | Field | 1956 × 1530 | 534 | 4100 |
| UWHD | 2022 | Field | 1120 × 1120 | 550 | 30,500 |
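One way to compare how crowded these datasets are is to divide the annotated instances by the number of images. The sketch below does that arithmetic with the values from the table above. It assumes that the count column next to the resolution reports images per dataset; the names `DATASETS` and `heads_per_image` are illustrative.

```python
# (images, annotated wheat-head instances) copied from the dataset table above.
# Assumption: the count column next to "Resolution" is the number of images.
DATASETS = {
    "GWHD": (3422, 188_445),
    "SPIKE": (335, 25_000),
    "ACID": (534, 4_100),
    "UWHD": (550, 30_500),
}


def heads_per_image(name: str) -> float:
    """Average number of annotated wheat heads per image for one dataset."""
    images, instances = DATASETS[name]
    return instances / images


if __name__ == "__main__":
    for name in DATASETS:
        print(f"{name}: {heads_per_image(name):.1f} heads per image")
```

Under that assumption, UWHD and GWHD have a similar density of roughly 55 heads per image, while ACID is far sparser at under 8.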

| Epochs | Batch Size | Learning Rate | Betas | Weight Decay |
|---|---|---|---|---|
| 100 | 8 | 0.001 | 0.9, 0.999 | 0.05 |
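The hyperparameters above can be collected into a small configuration object, which also makes it easy to work out how many optimizer steps a run involves. This is only a sketch: the class name `TrainConfig`, the method `total_steps`, and the training-set size of 440 images are hypothetical and not taken from the source. The table does not name the optimizer; the two beta values suggest an Adam-family optimizer, but that is an inference.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters copied from the table above."""
    epochs: int = 100
    batch_size: int = 8
    learning_rate: float = 1e-3
    betas: tuple = (0.9, 0.999)  # typical of Adam-family optimizers (assumption)
    weight_decay: float = 0.05

    def total_steps(self, num_train_images: int) -> int:
        """Optimizer steps over the full run, counting a final partial batch."""
        steps_per_epoch = math.ceil(num_train_images / self.batch_size)
        return self.epochs * steps_per_epoch


if __name__ == "__main__":
    cfg = TrainConfig()
    # 440 is a hypothetical training-set size used only to illustrate the arithmetic.
    print(cfg.total_steps(440))
```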

| Method | Backbone | AP | AP50 | AP75 | APS | APM | APL | Params | FLOPs |
|---|---|---|---|---|---|---|---|---|---|
| Faster-RCNN | Transformer | 43.7 | 88.3 | 38.5 | 6.4 | 44.0 | 54.1 | 44.8 M | 38.8 G |
| RetinaNet | Transformer | 40.3 | 81.9 | 32.3 | 4.9 | 40.6 | 48.2 | 36.8 M | 40.4 G |
| YOLOv3 | Transformer | 39.0 | 80.9 | 33.6 | 3.8 | 39.6 | 45.3 | 48.3 M | 26.8 G |
| SSD [64] | VGG-16 | 35.1 | 77.3 | 26.1 | 3.1 | 35.0 | 46.3 | 23.8 M | 67.2 G |
| Cascade R-CNN [65] | ResNet-50 | 38.5 | 78.1 | 30.5 | 3.2 | 36.5 | 48.0 | 68.9 M | 40.8 G |
| FCOS [66] | ResNet-50 | 37.8 | 80.2 | 31.3 | 3.8 | 35.6 | 47.5 | 31.8 M | 38.6 G |
| DETR [57] | ResNet-50 | 41.1 | 87.5 | 35.6 | 8.2 | 41.5 | 50.5 | 41.3 M | 18.5 G |
| YOLOF [67] | R-50-C5 | 42.8 | 82.1 | 39.9 | 5.8 | 42.9 | 55.2 | 42.0 M | 19.2 G |
| YOLOX [68] | YOLOX-M | 43.0 | 85.4 | 38.2 | 6.1 | 43.1 | 54.6 | 25.3 M | 18.0 G |

| Dataset | AP50 | Reference |
|---|---|---|
| GWHD | 91.3 | [52] |
| GWHD | 92.6 | [49] |
| GWHD | 93.2 | Ours |
| SPIKE | 67.6 | [30] |
| SPIKE | 86.1 | [52] |
| SPIKE | 88.4 | Ours |
| ACID | 76.9 | [52] |
| ACID | 79.5 | Ours |
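Under the COCO-style convention that the metric names in these tables suggest, AP50 is the average precision when a predicted box counts as correct only if its intersection over union (IoU) with a ground-truth box is at least 0.5, and AP75 uses a threshold of 0.75. The sketch below shows that matching criterion for axis-aligned boxes given as `(x1, y1, x2, y2)`. The function names are illustrative, and this is not the evaluation code used in the paper.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred, gt, threshold=0.5):
    """True if the prediction overlaps the ground truth enough at this IoU threshold."""
    return iou(pred, gt) >= threshold


if __name__ == "__main__":
    pred, gt = (0, 0, 10, 10), (2, 0, 12, 10)
    print(f"IoU = {iou(pred, gt):.3f}")
    print("match at 0.50:", is_true_positive(pred, gt, 0.5))
    print("match at 0.75:", is_true_positive(pred, gt, 0.75))
```

In this example the two boxes overlap with an IoU of 2/3, so the prediction would count toward AP50 but not toward AP75. The stricter threshold is consistent with the AP75 columns elsewhere in the document being much lower than AP50.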

| Input Size | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| 448 × 448 | 43.7 | 88.3 | 38.5 | 6.4 | 44.0 | 54.1 |
| 672 × 672 | 44.8 | 89.1 | 40.9 | 9.9 | 45.3 | 55.4 |
| 896 × 896 | 46.3 | 90.3 | 47.2 | 11.4 | 48.6 | 57.9 |
| 1120 × 1120 | 46.7 | 89.8 | 47.1 | 12.5 | 48.5 | 58.1 |
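The trade-off in the input-size table can be made explicit by comparing, for each step up in resolution, how much the pixel count grows against how much AP improves. The following sketch uses only the sizes and AP values from the table above; `RESULTS` and `marginal_gains` are illustrative names.

```python
# (input side length in pixels, AP in percent) copied from the table above.
RESULTS = [(448, 43.7), (672, 44.8), (896, 46.3), (1120, 46.7)]


def marginal_gains(results):
    """For each step up in size, return (new size, pixel-count ratio, AP gain)."""
    gains = []
    for (s0, ap0), (s1, ap1) in zip(results, results[1:]):
        pixel_ratio = (s1 / s0) ** 2  # square inputs: pixel count scales with side squared
        gains.append((s1, round(pixel_ratio, 2), round(ap1 - ap0, 1)))
    return gains


if __name__ == "__main__":
    for size, ratio, gain in marginal_gains(RESULTS):
        print(f"{size} x {size}: {ratio}x pixels of previous size, +{gain} AP")
```

With these values the last step, from 896 to 1120, adds only 0.4 AP, and the table shows AP50 dropping slightly from 90.3 to 89.8 over the same step.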

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, J.; Yang, G.; Feng, X.; Li, X.; Fang, H.; Zhang, J.; Bai, X.; Tao, M.; He, Y. Detecting Wheat Heads from UAV Low-Altitude Remote Sensing Images Using Deep Learning Based on Transformer. Remote Sens. 2022, 14, 5141. https://doi.org/10.3390/rs14205141
