ES2FL: Ensemble Self-Supervised Feature Learning for Small Sample Classification of Hyperspectral Images

Abstract

1. Introduction

- A novel ensemble self-supervised feature-learning method is proposed for small sample classification of HSIs. By constraining the cross-correlation matrix between the features of different distortions of the same sample to be the identity matrix, the proposed method learns, in a self-supervised manner, deep features in which homogeneous samples gather together and heterogeneous samples are separated from each other, effectively improving HSI classification performance when only small samples are available.

- To exploit the spatial–spectral information in HSIs more fully and effectively, a deep feature-learning network is designed based on EfficientNet-B0. Experimental results show that the designed model has a stronger feature-learning ability than general CNN-based models and deep residual models.

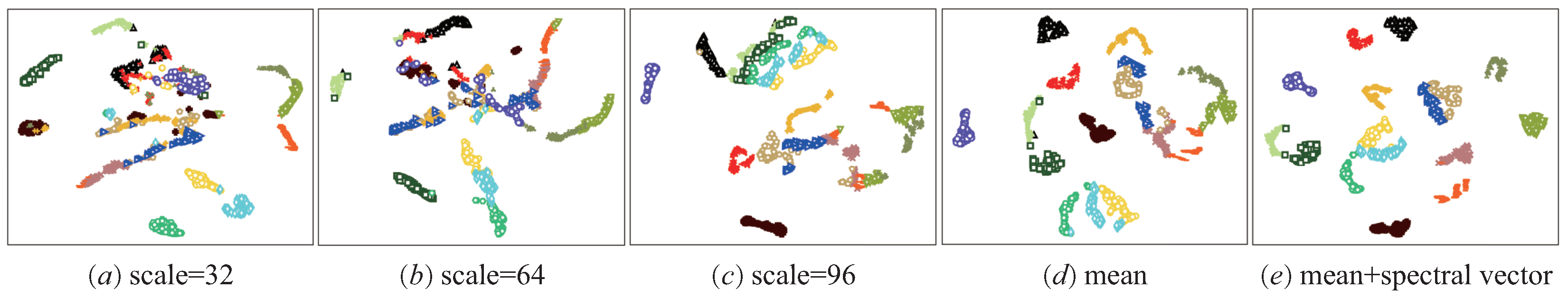

- Two simple but effective ensemble learning strategies, feature-level ensemble and view-level ensemble, are proposed to further improve feature learning and small sample classification accuracy by jointly exploiting spatial contextual information at different scales and feature information from different bands.

- Extensive experiments are conducted on three HSI data sets, and the statistical results demonstrate that the proposed method not only outperforms existing state-of-the-art methods in the small sample case but also achieves higher classification accuracy when sufficient training samples are available.
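The first contribution, driving the cross-correlation matrix of two distorted views toward the identity matrix, matches the redundancy-reduction objective of Barlow Twins [49]. The NumPy sketch below illustrates that objective only; it is not the authors' code. The function name `cross_correlation_loss`, the off-diagonal weight `lambd = 5e-3`, and the small constant used during standardization are assumptions for illustration.

```python
import numpy as np


def cross_correlation_loss(z_a: np.ndarray, z_b: np.ndarray, lambd: float = 5e-3) -> float:
    """Barlow Twins-style loss pushing the cross-correlation matrix toward identity.

    z_a, z_b: (batch, dim) embeddings of two distortions of the same batch of samples.
    lambd: weight of the off-diagonal (redundancy-reduction) term; an assumed value.
    """
    n = z_a.shape[0]
    # Standardize every feature dimension over the batch (1e-8 avoids division by zero).
    z_a = (z_a - z_a.mean(axis=0)) / (z_a.std(axis=0) + 1e-8)
    z_b = (z_b - z_b.mean(axis=0)) / (z_b.std(axis=0) + 1e-8)
    c = z_a.T @ z_b / n                              # (dim, dim) cross-correlation matrix
    diag = np.diag(c)
    on_diag = np.sum((diag - 1.0) ** 2)              # invariance term: diagonal -> 1
    off_diag = np.sum(c ** 2) - np.sum(diag ** 2)    # redundancy term: off-diagonal -> 0
    return float(on_diag + lambd * off_diag)


rng = np.random.default_rng(0)
z = rng.normal(size=(256, 8))
print(cross_correlation_loss(z, z))                          # close to 0: identical views
print(cross_correlation_loss(z, rng.normal(size=(256, 8))))  # large: unrelated views
```

Identical views yield a nearly diagonal, unit-valued correlation matrix and therefore a loss close to zero, while unrelated views are penalized heavily. Minimizing this loss is what makes the learned features invariant to the distortions without any labels.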

2. Methodology

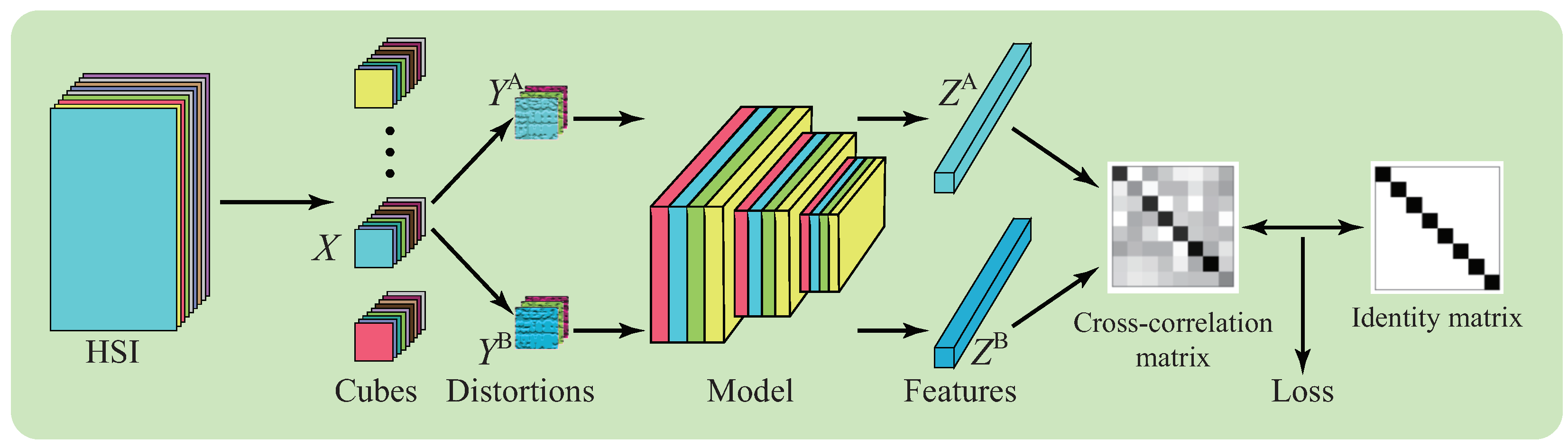

2.1. Workflow of the Proposed Method

2.2. Self-Supervised Loss Function for Model Training

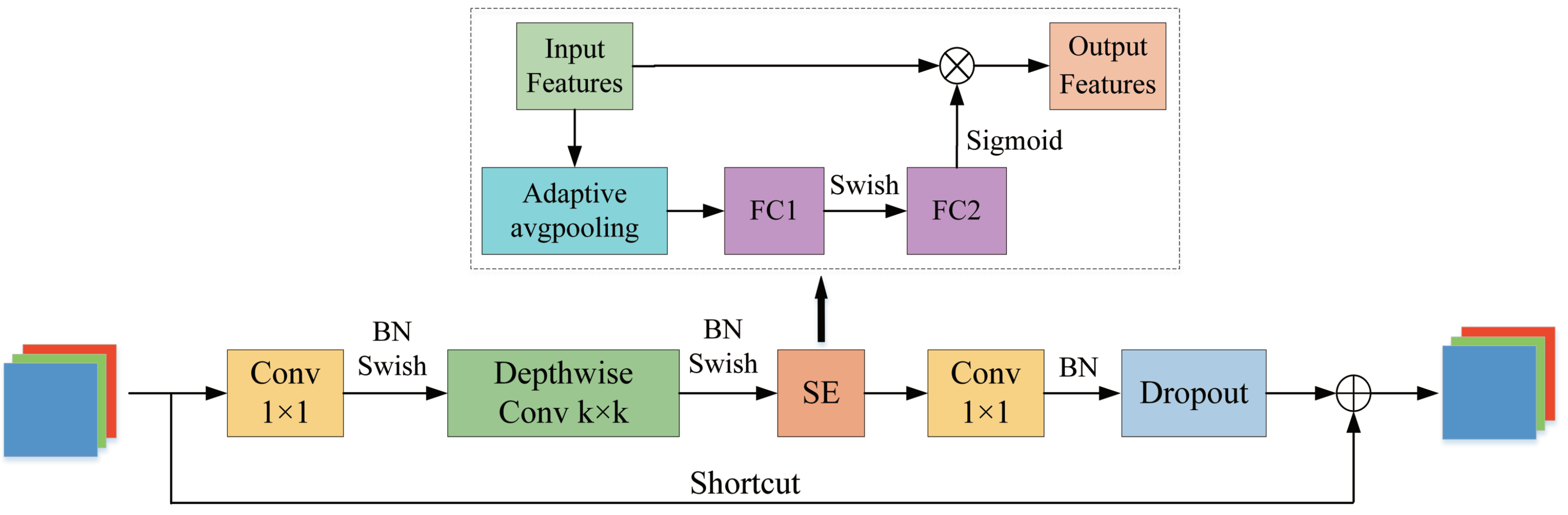

2.3. Deep Network for Feature Learning

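- (1) The batch normalization (BN) layer can accelerate the convergence of deep models and make the training process more effective. For the input of the qth layer, the BN result can be represented as:

  $$\hat{x}^{(q)} = \frac{x^{(q)} - E\left[x^{(q)}\right]}{\sqrt{\mathrm{Var}\left[x^{(q)}\right]}} \qquad (3)$$

  In Equation (3), $x^{(q)}$ and $\hat{x}^{(q)}$ represent the input and output of the BN layer, and $E[x^{(q)}]$ and $\sqrt{\mathrm{Var}[x^{(q)}]}$ denote the expectation and standard deviation, respectively.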

- (2) The sigmoid activation function maps its output into the interval (0, 1), which is conducive to fast calculation and back propagation. For the input of the qth layer, the result of sigmoid activation can be represented as:

  $$\sigma\left(x^{(q)}\right) = \frac{1}{1 + e^{-x^{(q)}}} \qquad (4)$$

  In Equation (4), e is the base of the natural logarithm.

- (3) The swish activation function is smooth and non-monotonic. Existing studies have shown that swish can further improve the performance of deep models in many computer vision tasks [52]. For the input of the qth layer, the result of swish activation can be represented as:

  $$f\left(x^{(q)}\right) = x^{(q)} \cdot \sigma\left(\beta x^{(q)}\right) \qquad (5)$$

  In Equation (5), $\beta$ is either a constant or a trainable parameter.
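The three building blocks can be written in a few lines of NumPy. This is a minimal sketch of the standard forms of Equations (3)–(5), not the authors' implementation. It assumes batch statistics are taken along axis 0 and adds a small stabilizing constant `eps`, which does not appear in Equation (3). The learnable scale and shift of a full BN layer are also omitted.

```python
import numpy as np


def batch_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Equation (3): normalize each feature (column) of a (batch, features) array.

    eps is an assumed stabilizing constant; learnable scale/shift are omitted.
    """
    mean = x.mean(axis=0)
    std = np.sqrt(x.var(axis=0) + eps)
    return (x - mean) / std


def sigmoid(x: np.ndarray) -> np.ndarray:
    """Equation (4): squashes inputs into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def swish(x: np.ndarray, beta: float = 1.0) -> np.ndarray:
    """Equation (5): x * sigmoid(beta * x); beta may be fixed or trainable."""
    return x * sigmoid(beta * x)


x = np.array([[1.0, 2.0], [3.0, 6.0], [5.0, 10.0]])
print(batch_norm(x))                          # columns now have zero mean, unit variance
print(sigmoid(np.array([-2.0, 0.0, 2.0])))
print(swish(np.array([-5.0, -1.28, 0.0])))    # dips below swish(-5): non-monotonic
```

The last line illustrates the non-monotonic shape mentioned above. Swish has a minimum near x ≈ -1.28 and rises again toward 0 for large negative inputs.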

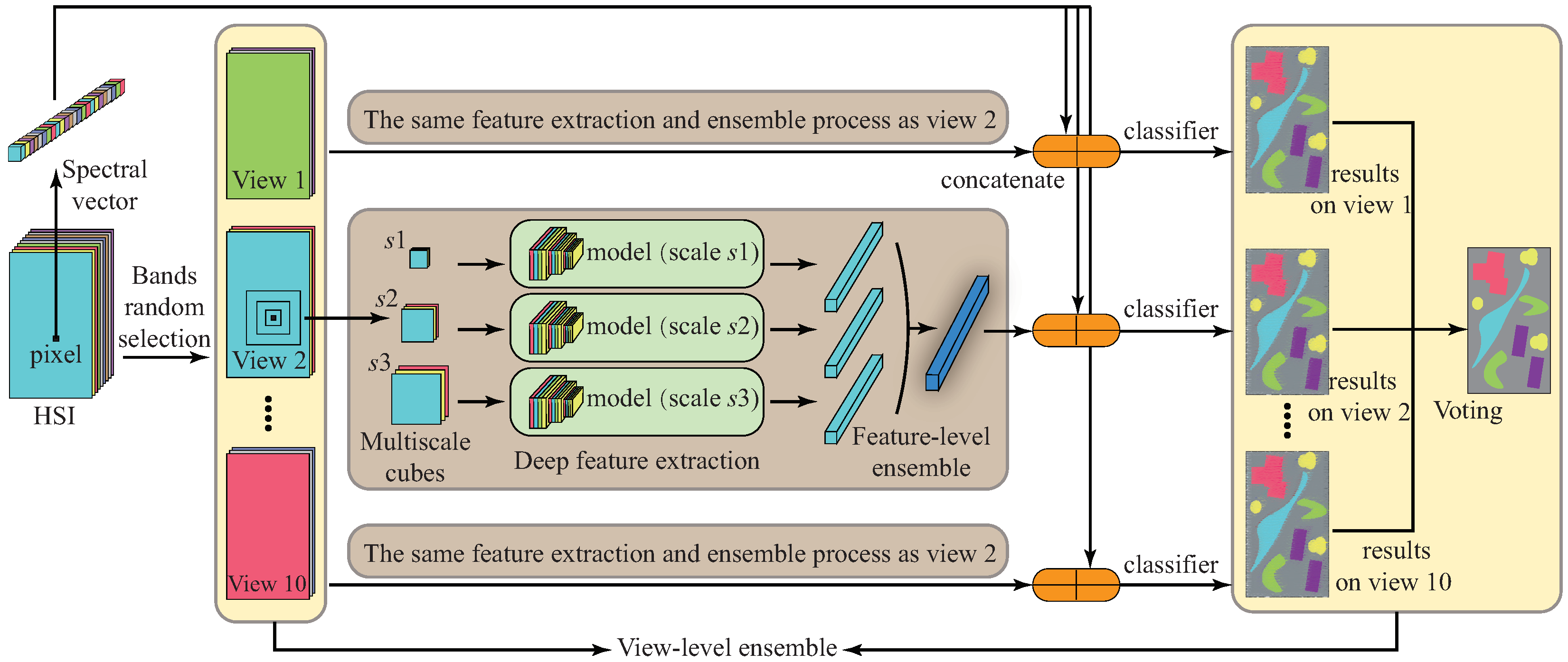

2.4. Feature Extraction and Classification Based on Two Ensemble Learning Strategies

- (1)

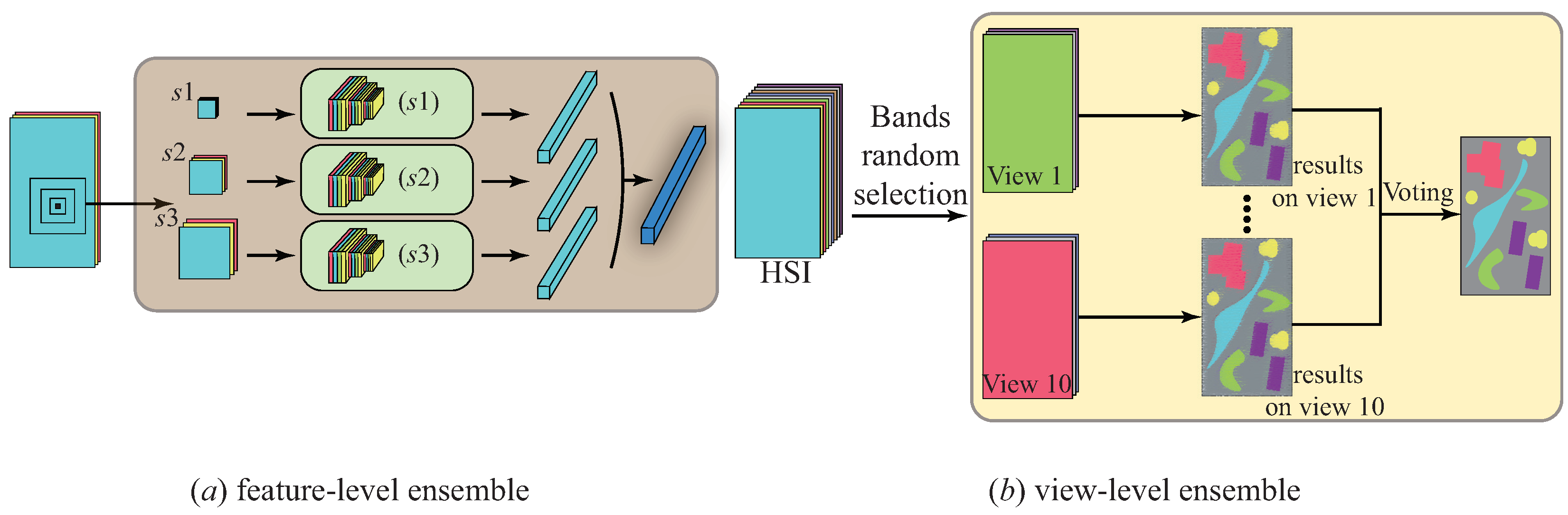

- Feature-level ensemble: As shown in Figure 4a, the first ensemble strategy attempts to improve the feature-learning effect by fusing multiscale spatial information. Firstly, the cubes with different scales around the center pixel are used to train the designed model in the self-supervised form, so that different models are more focused on spatial contextual information at different scales. In other words, deep models training on cubes with different scales are multiple base learners. Then, in the classification process, feature vectors generated by different base learners are fused to jointly utilize multiscale spatial information. Obviously, there are many different fusion methods, such as mean and concatenation, that can be employed. Moreover, there are many options for the size of the spatial scale. In the next section, the setting of the two hyperparameters will be analyzed in detail.

- (2) View-level ensemble: Inspired by the work of Liu et al. [51], different bands of an HSI can be regarded as different views. The proposed view-level ensemble strategy aims to improve the accuracy and robustness of classification results by comprehensively utilizing feature information from different views. As shown in Figure 4b, the target HSI is first reduced to multiple three-band images by random band selection, which keeps the data dimension consistent between self-supervised training and classification. Then, a voting strategy is used to combine the classification results of the different three-band images into the final classification result.
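The data handling behind the two strategies can be sketched in NumPy. This is a hedged illustration, not the authors' pipeline. `extract_cube`, `fuse_features`, `random_three_band_views`, and `majority_vote` are hypothetical helpers, and several choices are assumptions: reflect padding at image borders, the mode names `"mean"`, `"multiply"`, and `"concat"`, and majority voting with first-seen tie-breaking. The trained EfficientNet-B0 encoders are left out, and their features are replaced by placeholders.

```python
from collections import Counter

import numpy as np


def extract_cube(image: np.ndarray, row: int, col: int, scale: int) -> np.ndarray:
    """Cut a (scale, scale, bands) cube around pixel (row, col).

    Reflect padding (an assumption) gives border pixels full-size cubes.
    """
    half = scale // 2
    padded = np.pad(image, ((half, half), (half, half), (0, 0)), mode="reflect")
    return padded[row:row + scale, col:col + scale, :]


def fuse_features(features: list, mode: str = "mean") -> np.ndarray:
    """Feature-level ensemble: fuse vectors produced by base learners at different scales."""
    stacked = np.stack(features)                 # (n_learners, dim)
    if mode == "mean":
        return stacked.mean(axis=0)
    if mode == "multiply":
        return np.prod(stacked, axis=0)          # element-wise multiplication
    if mode == "concat":
        return stacked.reshape(-1)               # concatenation in learner order
    raise ValueError(f"unknown fusion mode: {mode}")


def random_three_band_views(image: np.ndarray, n_views: int, seed: int = 0) -> list:
    """View-level ensemble: reduce the HSI to several three-band images."""
    rng = np.random.default_rng(seed)
    n_bands = image.shape[-1]
    return [image[..., rng.choice(n_bands, size=3, replace=False)] for _ in range(n_views)]


def majority_vote(label_maps: list) -> np.ndarray:
    """Combine per-view predictions (each of shape (n_pixels,)) by majority vote.

    Ties go to the label seen first across the views.
    """
    stacked = np.stack(label_maps)               # (n_views, n_pixels)
    return np.array([Counter(col.tolist()).most_common(1)[0][0] for col in stacked.T])


hsi = np.random.default_rng(0).random((20, 20, 103))      # toy stand-in for an HSI
cubes = [extract_cube(hsi, 5, 5, s) for s in (3, 5, 9)]    # toy scales, not 32/64/96
feats = [c.mean(axis=(0, 1)) for c in cubes]               # placeholder for learned features
print(fuse_features(feats, "mean").shape)
print([v.shape for v in random_three_band_views(hsi, n_views=5)])
print(majority_vote([np.array([0, 1, 2]), np.array([0, 2, 2]), np.array([1, 2, 0])]))
```

Three-band views happen to match the three-channel input expected by ImageNet-style backbones such as EfficientNet-B0, which is one plausible reason for this view size.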

3. Experimental Results and Analysis

3.1. Data Sets

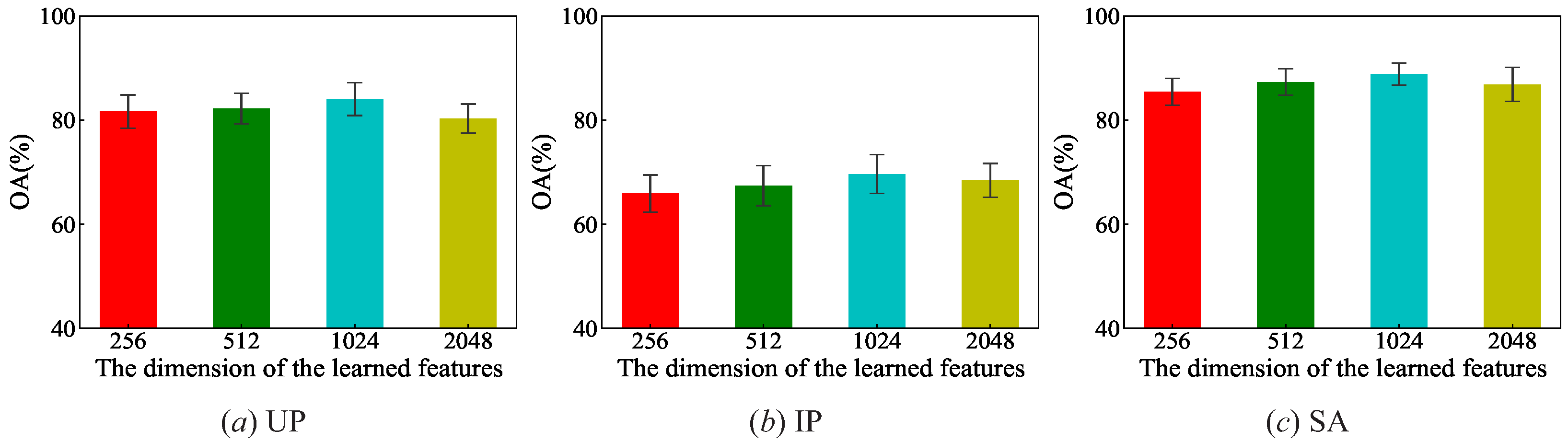

3.2. Hyperparameter Settings

3.3. Classification Results in the Case of Small Samples

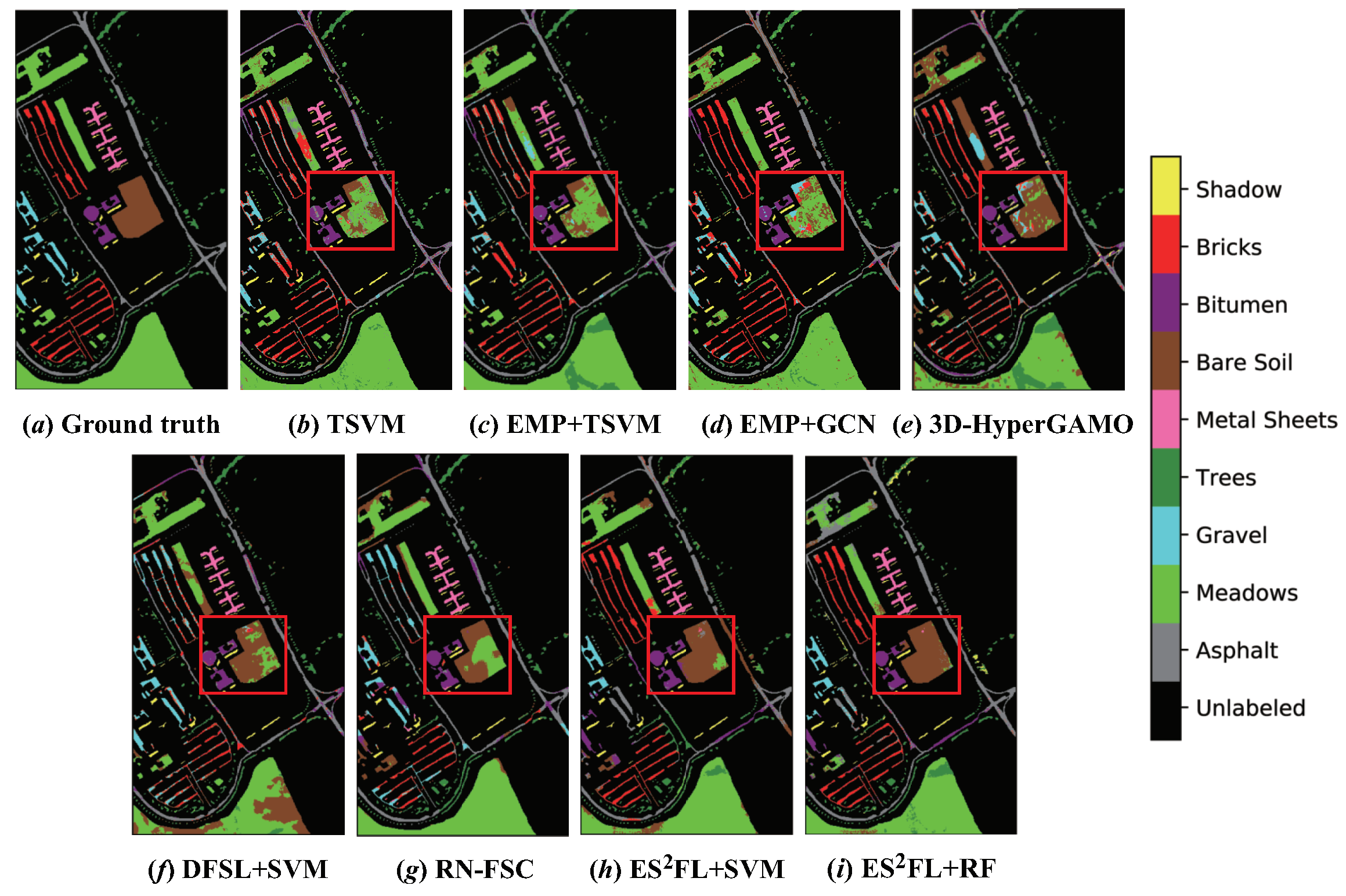

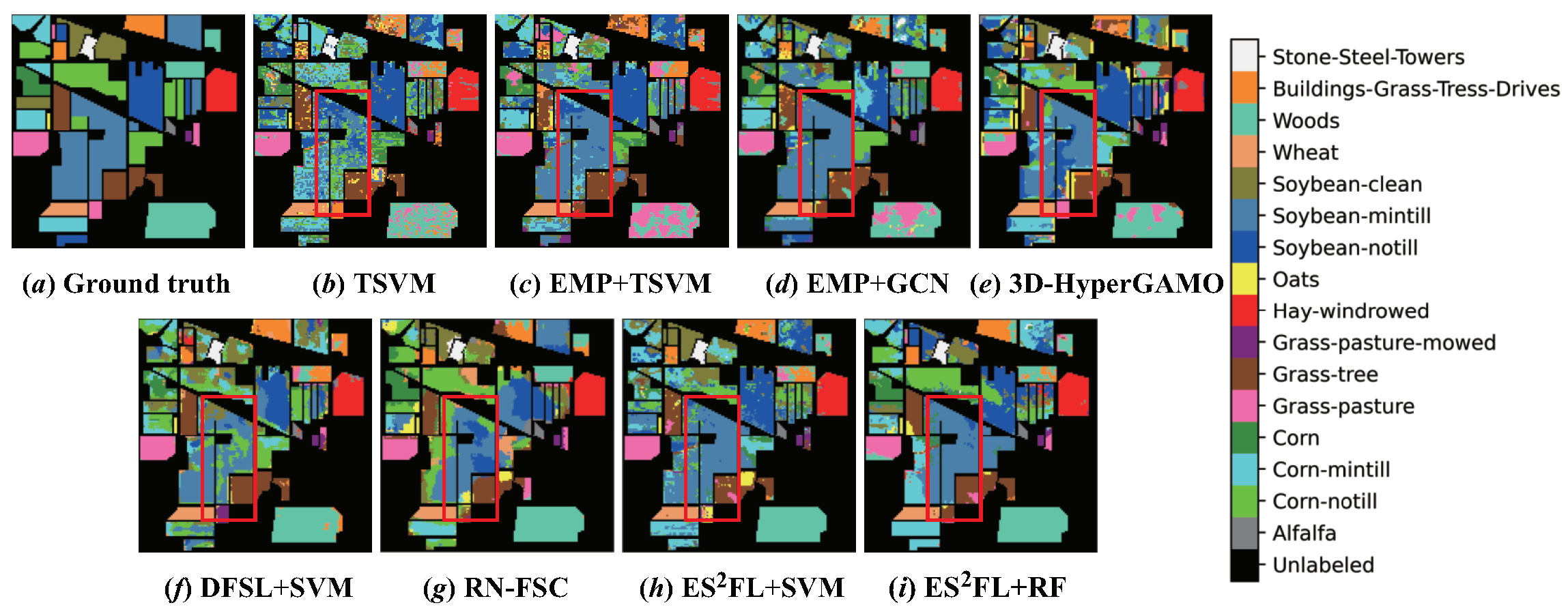

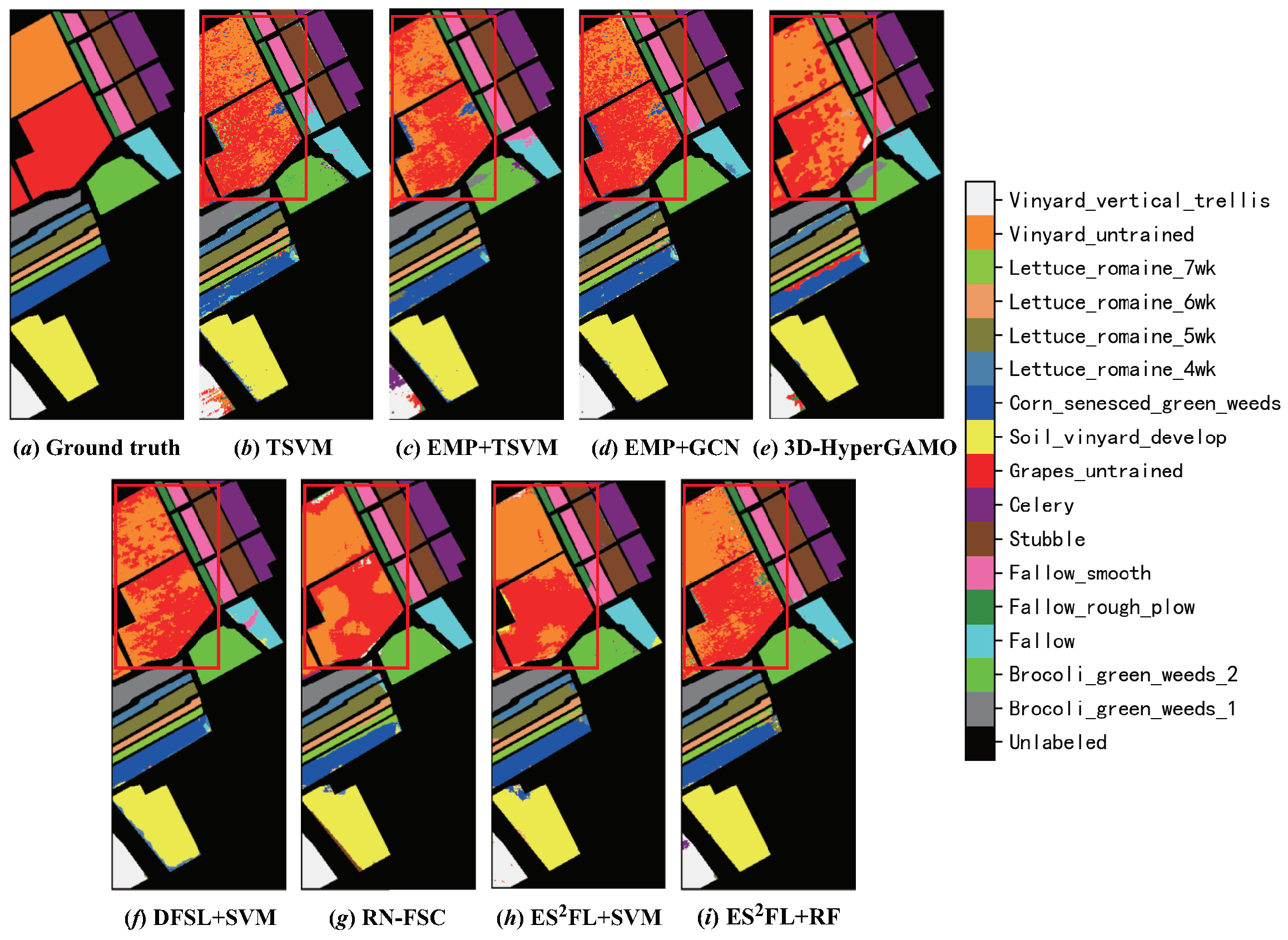

- (1) TSVM [57]: TSVM, short for transductive support vector machine, is one of the most representative semi-supervised support vector machines. TSVM exploits the information contained in unlabeled samples to improve classification performance in the small sample case by assigning each unlabeled sample a tentative positive or negative label and maximizing the margin over the labeled and unlabeled samples jointly.

- (2) EMP + TSVM: EMP (extended morphological profiles) can exploit the spatial texture information in HSIs while retaining the main spectral features, and combining it with TSVM can effectively improve classification performance in the small sample case.

- (3) EMP + GCN [58]: This method first extracts EMP features from HSIs, then organizes them into a graph structure with the k-nearest-neighbors method, and finally performs classification based on graph convolution. EMP + GCN is thus a semi-supervised classification method built on the designed graph convolutional network, which can make full use of the information contained in labeled and unlabeled samples simultaneously.

- (4) 3D-HyperGAMO [59]: To address imbalanced data in HSI classification, this method generates additional samples for minority classes using a novel generative adversarial minority oversampling strategy. Both the generated and the original samples are used to train the deep model, effectively improving classification performance in the small sample case.

- (5) DFSL + SVM [60]: Based on the prototypical network, this method designs a novel feature-learning and few-shot classification framework that makes full use of a large number of labeled samples from source data sets to improve classification accuracy in the small sample case. It was the first method to explore meta-learning for HSI classification.

- (6) RN-FSC [32]: RN-FSC is an end-to-end few-shot classification framework based on the relation network. By comparing the similarity between different samples, this method automatically extracts deep features in which homogeneous samples gather together and heterogeneous samples are separated from each other, effectively improving classification accuracy in the small sample case.
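The graph step of EMP + GCN, building a k-nearest-neighbors graph over sample features and propagating features over it, can be sketched as follows. This is an illustration under stated assumptions, not the method of [58]. The symmetric normalization follows the widely used Kipf and Welling form. The Euclidean distance, the symmetrization rule, and the helper names `knn_adjacency` and `gcn_layer` are assumptions, and the EMP features are replaced by toy vectors.

```python
import numpy as np


def knn_adjacency(features: np.ndarray, k: int) -> np.ndarray:
    """Symmetric k-nearest-neighbour graph over samples (rows of `features`)."""
    n = features.shape[0]
    sq_norms = np.sum(features ** 2, axis=1)
    # Squared Euclidean distances between all pairs of samples.
    dist = sq_norms[:, None] + sq_norms[None, :] - 2.0 * features @ features.T
    np.fill_diagonal(dist, np.inf)                 # a node is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]
    adj = np.zeros((n, n))
    adj[np.repeat(np.arange(n), k), neighbours.ravel()] = 1.0
    return np.maximum(adj, adj.T)                  # undirected graph


def gcn_layer(adj: np.ndarray, h: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One graph convolution, ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_hat = adj + np.eye(adj.shape[0])             # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ h @ weight, 0.0)


# Two well-separated toy clusters standing in for EMP feature vectors.
pts = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)
adj = knn_adjacency(pts, k=2)
print(adj)                                  # block-diagonal: no edges between clusters
print(gcn_layer(adj, pts, np.eye(2)))       # features smoothed within each cluster
```

Because labeled and unlabeled nodes share edges, a few labels can influence the predictions of their unlabeled neighbours. This is the sense in which the method is semi-supervised.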

- (1) The results of the TSVM-based methods are significantly worse than those of the other methods in terms of OA, AA, and kappa. In addition, extracting EMP features significantly improves the classification performance of TSVM.

- (2) Compared with the TSVM-based methods, EMP + GCN and 3D-HyperGAMO both significantly improve the accuracy and robustness of classification results in the small sample case. EMP + GCN achieves higher accuracy on the UP and SA data sets, while 3D-HyperGAMO performs better on the IP data set.

- (3) The two advanced meta-learning-based methods, DFSL + SVM and RN-FSC, further improve classification performance. Meta-training with a large number of labeled source samples, together with a feature-extraction network based on metric learning, significantly improves the learning ability and classification accuracy of deep models in the small sample case.

- (4) The proposed method achieves the best classification performance on all three HSIs, and both ESFL + SVM and ESFL + RF significantly outperform the other methods. On the UP and IP data sets, ESFL + RF obtains the higher accuracy, improving OA by 6.83 and 8.78 percentage points, respectively, over the best competing method (RN-FSC), which is a substantial margin. On the SA data set, ESFL + SVM performs slightly better than ESFL + RF, and its OA is 2.99 percentage points higher than that of the best competing method (RN-FSC).
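The OA margins quoted above can be re-derived from the mean OA rows of the three small-sample result tables. The short script below copies those values and computes the gap between the better ESFL variant and the strongest competing method. The helper name `margin_over_best_baseline` is introduced only for this check.

```python
BASELINES = ["TSVM", "EMP + TSVM", "EMP + GCN", "3D-HyperGAMO", "DFSL + SVM", "RN-FSC"]
PROPOSED = ["ESFL + SVM", "ESFL + RF"]

# Mean OA (%) per method, in the column order of the small-sample result tables.
OA = {
    "UP": [64.72, 69.58, 75.23, 70.42, 71.64, 77.16, 81.95, 83.99],
    "IP": [49.45, 55.09, 55.98, 57.02, 60.18, 60.84, 63.16, 69.62],
    "SA": [82.25, 83.58, 85.93, 83.70, 84.52, 86.37, 89.36, 88.81],
}


def margin_over_best_baseline(scores: list) -> tuple:
    """Return (best ESFL variant, best competing method, OA gap in percentage points)."""
    table = dict(zip(BASELINES + PROPOSED, scores))
    ours = max(PROPOSED, key=table.get)
    rival = max(BASELINES, key=table.get)
    return ours, rival, round(table[ours] - table[rival], 2)


for hsi, scores in OA.items():
    print(hsi, margin_over_best_baseline(scores))
```

Because OA is already a percentage, the differences are percentage points rather than relative percentages.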

3.4. Classification Results with Sufficient Samples

3.5. Ablation Studies

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhong, Y.; Wang, X.; Xu, Y.; Wang, S.; Jia, T.; Hu, X.; Zhao, J.; Wei, L.; Zhang, L. Mini-UAV-Borne Hyperspectral Remote Sensing: From Observation and Processing to Applications. IEEE Geosci. Remote Sens. Mag. 2018, 6, 46–62.

- Xiao, J.; Li, J.; Yuan, Q.; Zhang, L. A Dual-UNet with Multistage Details Injection for Hyperspectral Image Fusion. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5515313.

- Liu, B.; Yu, X.; Zhang, P.; Yu, A.; Fu, Q.; Wei, X. Supervised Deep Feature Extraction for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1909–1921.

- Liu, B.; Yu, X. Patch-Free Bilateral Network for Hyperspectral Image Classification Using Limited Samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10794–10807.

- Zheng, C.; Wang, N.; Cui, J. Hyperspectral Image Classification with Small Training Sample Size Using Superpixel-Guided Training Sample Enlargement. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7307–7316.

- Li, W.; Chen, C.; Su, H.; Du, Q. Local Binary Patterns and Extreme Learning Machine for Hyperspectral Imagery Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3681–3693.

- Zhong, Y.; Zhang, L. An Adaptive Artificial Immune Network for Supervised Classification of Multi-/Hyperspectral Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2012, 50, 894–909.

- Khan, M.J.; Khan, H.S.; Yousaf, A.; Khurshid, K.; Abbas, A. Modern Trends in Hyperspectral Image Analysis: A Review. IEEE Access 2018, 6, 14118–14129.

- Kang, X.; Xiang, X.; Li, S.; Benediktsson, J.A. PCA-Based Edge-Preserving Features for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7140–7151.

- Ma, L.; Zhang, X.; Yu, X.; Luo, D. Spatial Regularized Local Manifold Learning for Classification of Hyperspectral Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 609–624.

- Duan, Y.; Huang, H.; Li, Z.; Tang, Y. Local Manifold-Based Sparse Discriminant Learning for Feature Extraction of Hyperspectral Image. IEEE Trans. Cybern. 2021, 51, 4021–4034.

- Gu, Y.; Chanussot, J.; Jia, X.; Benediktsson, J.A. Multiple Kernel Learning for Hyperspectral Image Classification: A Review. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6547–6565.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Zhang, S.; Xu, M.; Zhou, J.; Jia, S. Unsupervised Spatial-Spectral CNN-Based Feature Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5524617.

- Gao, K.; Guo, W.; Yu, X.; Liu, B.; Yu, A.; Wei, X. Deep Induction Network for Small Samples Classification of Hyperspectral Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3462–3477.

- Fauvel, M.; Tarabalka, Y.; Benediktsson, J.A.; Chanussot, J.; Tilton, J.C. Advances in Spectral-Spatial Classification of Hyperspectral Images. Proc. IEEE 2013, 101, 652–675.

- Li, W.; Du, Q. Gabor-Filtering-Based Nearest Regularized Subspace for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1012–1022.

- Jia, S.; Deng, B.; Zhu, J.; Jia, X.; Li, Q. Local Binary Pattern-Based Hyperspectral Image Classification With Superpixel Guidance. IEEE Trans. Geosci. Remote Sens. 2018, 56, 749–759.

- Jia, S.; Shen, L.; Zhu, J.; Li, Q. A 3-D Gabor Phase-Based Coding and Matching Framework for Hyperspectral Imagery Classification. IEEE Trans. Cybern. 2018, 48, 1176–1188.

- Xue, Z.; Yu, X.; Tan, X.; Liu, B.; Yu, A.; Wei, X. Multiscale Deep Learning Network with Self-Calibrated Convolution for Hyperspectral and LiDAR Data Collaborative Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5514116.

- Wang, H.; Lin, Y.; Xu, X.; Chen, Z.; Wu, Z.; Tang, Y. A Study on Long-Close Distance Coordination Control Strategy for Litchi Picking. Agronomy 2022, 12, 1520.

- Wu, F.; Duan, J.; Ai, P.; Chen, Z.; Yang, Z.; Zou, X. Rachis detection and three-dimensional localization of cut off point for vision-based banana robot. Comput. Electron. Agric. 2022, 198, 107079.

- Cui, Q.; Yang, B.; Liu, B.; Li, Y.; Ning, J. Tea Category Identification Using Wavelet Signal Reconstruction of Hyperspectral Imagery and Machine Learning. Agriculture 2022, 12, 1085.

- Booysen, R.; Lorenz, S.; Thiele, S.T.; Fuchsloch, W.C.; Marais, T.; Nex, P.A.; Gloaguen, R. Accurate hyperspectral imaging of mineralised outcrops: An example from lithium-bearing pegmatites at Uis, Namibia. Remote Sens. Environ. 2022, 269, 112790.

- Obermeier, W.; Lehnert, L.; Pohl, M.; Makowski Gianonni, S.; Silva, B.; Seibert, R.; Laser, H.; Moser, G.; Müller, C.; Luterbacher, J.; et al. Grassland ecosystem services in a changing environment: The potential of hyperspectral monitoring. Remote Sens. Environ. 2019, 232, 111273.

- Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep Learning for Hyperspectral Image Classification: An Overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.

- Lee, H.; Kwon, H. Going Deeper with Contextual CNN for Hyperspectral Image Classification. IEEE Trans. Image Process. 2017, 26, 4843–4855.

- Praveen, B.; Menon, V. Study of Spatial-Spectral Feature Extraction Frameworks with 3-D Convolutional Neural Network for Robust Hyperspectral Imagery Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1717–1727.

- Yu, C.; Han, R.; Song, M.; Liu, C.; Chang, C.I. A Simplified 2D-3D CNN Architecture for Hyperspectral Image Classification Based on Spatial–Spectral Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2485–2501.

- Mei, S.; Li, X.; Liu, X.; Cai, H.; Du, Q. Hyperspectral Image Classification Using Attention-Based Bidirectional Long Short-Term Memory Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5509612.

- Hang, R.; Liu, Q.; Hong, D.; Ghamisi, P. Cascaded Recurrent Neural Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5384–5394.

- Gao, K.; Liu, B.; Yu, X.; Qin, J.; Zhang, P.; Tan, X. Deep Relation Network for Hyperspectral Image Few-Shot Classification. Remote Sens. 2020, 12, 923.

- Yu, C.; Han, R.; Song, M.; Liu, C.; Chang, C.I. Feedback Attention-Based Dense CNN for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5501916.

- Zhu, M.; Jiao, L.; Liu, F.; Yang, S.; Wang, J. Residual Spectral-Spatial Attention Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 449–462.

- Yang, K.; Sun, H.; Zou, C.; Lu, X. Cross-Attention Spectral Spatial Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5518714.

- Gao, K.; Liu, B.; Yu, X.; Yu, A. Unsupervised Meta Learning with Multiview Constraints for Hyperspectral Image Small Sample set Classification. IEEE Trans. Image Process. 2022, 31, 3449–3462.

- Tan, X.; Gao, K.; Liu, B.; Fu, Y.; Kang, L. Deep global-local transformer network combined with extended morphological profiles for hyperspectral image classification. J. Appl. Remote Sens. 2021, 15, 38509.

- Romero, A.; Gatta, C.; Camps-Valls, G. Unsupervised Deep Feature Extraction for Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1349–1362.

- Wei, W.; Xu, S.; Zhang, L.; Zhang, J.; Zhang, Y. Boosting Hyperspectral Image Classification with Unsupervised Feature Learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5502315.

- Mei, S.; Ji, J.; Geng, Y.; Zhang, Z.; Li, X.; Du, Q. Unsupervised Spatial-Spectral Feature Learning by 3D Convolutional Autoencoder for Hyperspectral Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6808–6820.

- Shi, C.; Pun, C.M. Multiscale Superpixel-Based Hyperspectral Image Classification Using Recurrent Neural Networks With Stacked Autoencoders. IEEE Trans. Multimedia 2020, 22, 487–501.

- Feng, J.; Liu, L.; Cao, X.; Jiao, L.; Sun, T.; Zhang, X. Marginal Stacked Autoencoder with Adaptively-Spatial Regularization for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3297–3311.

- Mou, L.; Ghamisi, P.; Zhu, X.X. Unsupervised Spectral-Spatial Feature Learning via Deep Residual Conv-Deconv Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 391–406.

- Zhu, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Generative Adversarial Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5046–5063.

- Zhang, M.; Gong, M.; Mao, Y.; Li, J.; Wu, Y. Unsupervised Feature Extraction in Hyperspectral Images Based on Wasserstein Generative Adversarial Network. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2669–2688.

- Yu, W.; Zhang, M.; He, Z.; Shen, Y. Convolutional Two-Stream Generative Adversarial Network-Based Hyperspectral Feature Extraction. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5506010.

- Jing, L.; Tian, Y. Self-Supervised Visual Feature Learning With Deep Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4037–4058.

- Liu, X.; Zhang, F.; Hou, Z.; Wang, Z.; Mian, L.; Zhang, J.; Tang, J. Self-supervised Learning: Generative or Contrastive. IEEE Trans. Knowl. Data Eng. 2020.

- Zbontar, J.; Jing, L.; Misra, I.; LeCun, Y.; Deny, S. Barlow Twins: Self-Supervised Learning via Redundancy Reduction. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; PMLR Volume 97, pp. 6105–6114.

- Liu, B.; Yu, A.; Yu, X.; Wang, R.; Gao, K.; Guo, W. Deep Multiview Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7758–7772.

- Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for Activation Functions. arXiv 2017, arXiv:1710.05941.

- Li, W.; Wu, G.; Zhang, F.; Du, Q. Hyperspectral Image Classification Using Deep Pixel-Pair Features. IEEE Trans. Geosci. Remote Sens. 2017, 55, 844–853.

- Zhi, L.; Yu, X.; Liu, B.; Wei, X. A dense convolutional neural network for hyperspectral image classification. Remote Sens. Lett. 2019, 10, 59–66.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Joachims, T. Transductive Inference for Text Classification using Support Vector Machines. In Proceedings of the Sixteenth International Conference on Machine Learning, Bled, Slovenia, 27–30 June 1999; pp. 200–209.

- Liu, B.; Gao, K.; Yu, A.; Guo, W.; Wang, R.; Zuo, X. Semisupervised graph convolutional network for hyperspectral image classification. J. Appl. Remote Sens. 2020, 14, 26516.

- Roy, S.K.; Haut, J.M.; Paoletti, M.E.; Dubey, S.R.; Plaza, A. Generative Adversarial Minority Oversampling for Spectral–Spatial Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5500615.

- Liu, B.; Yu, X.; Yu, A.; Zhang, P.; Wan, G.; Wang, R. Deep Few-Shot Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2290–2304.

- Xu, Q.; Xiao, Y.; Wang, D.; Luo, B. CSA-MSO3DCNN: Multiscale Octave 3D CNN with Channel and Spatial Attention for Hyperspectral Image Classification. Remote Sens. 2020, 12, 188.

- Guo, H.; Liu, J.; Yang, J.; Xiao, Z.; Wu, Z. Deep Collaborative Attention Network for Hyperspectral Image Classification by Combining 2-D CNN and 3-D CNN. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4789–4802.

- Ma, W.; Yang, Q.; Wu, Y.; Zhao, W.; Zhang, X. Double-Branch Multi-Attention Mechanism Network for Hyperspectral Image Classification. Remote Sens. 2019, 11, 1307.

- Sun, Y.; Liu, B.; Yu, X.; Yu, A.; Xue, Z.; Gao, K. Resolution reconstruction classification: Fully octave convolution network with pyramid attention mechanism for hyperspectral image classification. Int. J. Remote Sens. 2022, 43, 2076–2105.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Stages | Operations | Channel Number | Layer Number |
|---|---|---|---|
| 1 | Conv | 32 | 1 |
| 2 | MBConv1 | 16 | 1 |
| 3 | MBConv6 | 24 | 2 |
| 4 | MBConv6 | 40 | 2 |
| 5 | MBConv6 | 80 | 3 |
| 6 | MBConv6 | 112 | 3 |
| 7 | MBConv6 | 192 | 4 |
| 8 | MBConv6 | 320 | 1 |
| 9 | Conv & Pooling & FC | 1280 | 1 |
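The stage table above can be transcribed into a small configuration structure. In EfficientNet (Tan and Le [50]), MBConvN denotes a mobile inverted bottleneck block whose first pointwise convolution expands its input channels N times. The sketch below uses that rule to derive the expanded width of the first block in each stage and to count the MBConv blocks. These widths are arithmetic derived from the table, not values read from the authors' code. The helper names are hypothetical.

```python
# Stage settings transcribed from the table above: (operation, output channels, layers).
STAGES = [
    ("Conv", 32, 1),
    ("MBConv1", 16, 1),
    ("MBConv6", 24, 2),
    ("MBConv6", 40, 2),
    ("MBConv6", 80, 3),
    ("MBConv6", 112, 3),
    ("MBConv6", 192, 4),
    ("MBConv6", 320, 1),
    ("Conv & Pooling & FC", 1280, 1),
]


def expansion_ratio(op: str):
    """MBConvN expands its input channels N times; other operations have no ratio."""
    return int(op[len("MBConv"):]) if op.startswith("MBConv") else None


def first_block_widths(stages: list) -> list:
    """(op, input, expanded, output) channels of the first block of each MBConv stage."""
    widths = []
    in_ch = stages[0][1]                     # output of the stem convolution
    for op, out_ch, _ in stages[1:]:
        ratio = expansion_ratio(op)
        if ratio is not None:
            widths.append((op, in_ch, in_ch * ratio, out_ch))
        in_ch = out_ch                       # the next stage consumes this output
    return widths


n_mbconv = sum(layers for op, _, layers in STAGES if op.startswith("MBConv"))
print(n_mbconv)
for row in first_block_widths(STAGES):
    print(row)
```

Later blocks within a stage take that stage's output width as input. For example, the second MBConv6 block of stage 3 expands 24 channels to 144.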

| | UP | IP | SA |
|---|---|---|---|
| Spatial size (pixels) | 610 × 340 | 145 × 145 | 512 × 217 |
| Spectral range (nm) | 430–860 | 400–2500 | 400–2500 |
| No. of bands | 103 | 200 | 204 |
| GSD (m) | 1.3 | 20 | 3.7 |
| Sensor type | ROSIS | AVIRIS | AVIRIS |
| Area | Pavia | Indiana | California |
| No. of classes | 9 | 16 | 16 |
| Labeled samples | 42,776 | 10,249 | 54,129 |

| No. | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Backbone | DCNN | VGG16 | ResNet18 | EfficientNet-B0 |
| UP | 79.35 ± 3.74 | 80.63 ± 3.85 | 82.76 ± 3.69 | 83.99 ± 3.15 |
| IP | 63.07 ± 3.83 | 62.94 ± 4.17 | 63.73 ± 3.97 | 69.62 ± 3.73 |
| SA | 85.09 ± 3.07 | 86.74 ± 2.69 | 88.31 ± 2.73 | 88.81 ± 2.12 |

| Criteria | TSVM Mean ± SD | EMP + TSVM Mean ± SD | EMP + GCN Mean ± SD | 3D-HyperGAMO Mean ± SD | DFSL + SVM Mean ± SD | RN-FSC Mean ± SD | ESFL + SVM Mean ± SD | ESFL + RF Mean ± SD |
|---|---|---|---|---|---|---|---|---|
| Class 1 | 86.03 ± 10.37 | 93.74 ± 5.11 | 92.50 ± 5.00 | 80.46 ± 7.28 | 86.86 ± 6.39 | 84.11 ± 9.68 | 84.95 ± 12.81 | 92.62 ± 9.67 |
| Class 2 | 82.60 ± 6.10 | 83.62 ± 5.75 | 85.94 ± 2.56 | 86.95 ± 3.64 | 91.81 ± 3.25 | 94.88 ± 3.65 | 95.64 ± 4.11 | 96.81 ± 3.05 |
| Class 3 | 43.42 ± 8.95 | 49.25 ± 12.14 | 49.20 ± 8.71 | 49.78 ± 8.41 | 59.08 ± 12.03 | 54.74 ± 9.34 | 94.57 ± 4.42 | 80.28 ± 15.16 |
| Class 4 | 58.98 ± 12.28 | 65.18 ± 18.17 | 74.83 ± 17.82 | 59.53 ± 13.48 | 92.79 ± 4.18 | 75.76 ± 9.51 | 78.26 ± 10.19 | 71.07 ± 11.56 |
| Class 5 | 93.21 ± 3.46 | 98.09 ± 1.35 | 99.73 ± 0.09 | 98.90 ± 1.03 | 99.92 ± 0.24 | 86.73 ± 8.22 | 98.77 ± 2.41 | 93.57 ± 9.01 |
| Class 6 | 38.23 ± 14.85 | 41.42 ± 13.85 | 48.96 ± 10.98 | 47.40 ± 8.10 | 40.26 ± 7.48 | 62.22 ± 18.90 | 69.16 ± 14.25 | 68.95 ± 13.90 |
| Class 7 | 42.16 ± 4.53 | 49.64 ± 13.81 | 54.95 ± 17.35 | 52.43 ± 17.15 | 44.49 ± 9.24 | 54.02 ± 11.17 | 57.24 ± 8.37 | 61.00 ± 12.96 |
| Class 8 | 66.56 ± 8.15 | 73.02 ± 7.92 | 73.44 ± 6.28 | 71.37 ± 9.16 | 65.45 ± 8.62 | 66.58 ± 11.33 | 72.92 ± 12.93 | 86.10 ± 6.85 |
| Class 9 | 99.78 ± 0.18 | 99.88 ± 0.09 | 96.53 ± 6.87 | 82.90 ± 12.39 | 86.42 ± 23.10 | 74.34 ± 12.93 | 99.10 ± 0.58 | 93.41 ± 7.92 |
| OA | 64.72 ± 6.89 | 69.58 ± 7.55 | 75.23 ± 3.96 | 70.42 ± 4.91 | 71.64 ± 6.07 | 77.16 ± 5.82 | 81.95 ± 3.30 | 83.99 ± 3.15 |
| AA | 67.89 ± 2.60 | 72.65 ± 3.70 | 75.12 ± 3.27 | 69.97 ± 3.60 | 74.12 ± 4.65 | 72.60 ± 4.38 | 83.36 ± 2.07 | 82.64 ± 2.85 |
| kappa | 56.17 ± 6.68 | 61.83 ± 7.67 | 68.02 ± 4.19 | 62.59 ± 5.45 | 64.41 ± 6.53 | 70.86 ± 6.66 | 77.05 ± 3.73 | 79.64 ± 3.75 |
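The result tables report overall accuracy (OA), average accuracy (AA), and the kappa coefficient as percentages. The paper does not give code for these metrics. The sketch below uses their standard definitions, computed from a confusion matrix, and assumes that the per-class rows in the tables correspond to per-class recall. The function name `oa_aa_kappa` is hypothetical.

```python
import numpy as np


def oa_aa_kappa(confusion) -> tuple:
    """Return OA, AA and Cohen's kappa (all in %) from a confusion matrix.

    confusion[i, j] counts test samples of true class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    oa = np.trace(confusion) / total                        # overall accuracy
    per_class = np.diag(confusion) / confusion.sum(axis=1)  # per-class accuracy (recall)
    aa = per_class.mean()                                   # average accuracy
    # Expected chance agreement from true-class and predicted-class marginals.
    pe = np.sum(confusion.sum(axis=0) * confusion.sum(axis=1)) / total ** 2
    kappa = (oa - pe) / (1.0 - pe)
    return 100 * oa, 100 * aa, 100 * kappa


print(oa_aa_kappa([[40, 10], [5, 45]]))   # balanced errors
print(oa_aa_kappa([[90, 0], [10, 0]]))    # always predicts the first class
```

The second example shows why the three criteria are reported together. A classifier that ignores a minority class can still reach a high OA, while AA and kappa expose the failure. This sketch does not guard against classes with no test samples.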

| Criteria | TSVM Mean ± SD | EMP + TSVM Mean ± SD | EMP + GCN Mean ± SD | 3D-HyperGAMO Mean ± SD | DFSL + SVM Mean ± SD | RN-FSC Mean ± SD | ESFL + SVM Mean ± SD | ESFL + RF Mean ± SD |
|---|---|---|---|---|---|---|---|---|
| Class 1 | 28.66 ± 4.25 | 35.89 ± 8.60 | 37.72 ± 12.98 | 38.59 ± 14.17 | 37.00 ± 9.42 | 32.93 ± 19.87 | 60.49 ± 13.53 | 61.71 ± 19.88 |
| Class 2 | 36.00 ± 5.03 | 39.47 ± 8.21 | 47.32 ± 10.01 | 51.92 ± 9.99 | 46.78 ± 10.07 | 58.53 ± 16.81 | 56.92 ± 10.03 | 67.90 ± 11.78 |
| Class 3 | 27.44 ± 9.44 | 43.43 ± 9.80 | 40.54 ± 8.71 | 40.86 ± 10.13 | 39.90 ± 11.55 | 52.43 ± 21.49 | 34.08 ± 5.39 | 46.66 ± 9.17 |
| Class 4 | 25.78 ± 6.10 | 37.20 ± 8.41 | 26.48 ± 6.77 | 39.36 ± 12.82 | 28.08 ± 7.08 | 48.14 ± 13.95 | 31.82 ± 8.83 | 47.90 ± 15.40 |
| Class 5 | 52.84 ± 7.80 | 46.29 ± 12.14 | 52.02 ± 11.42 | 64.54 ± 18.72 | 77.88 ± 10.18 | 72.62 ± 18.69 | 82.82 ± 4.58 | 80.99 ± 8.48 |
| Class 6 | 83.38 ± 3.95 | 79.37 ± 4.84 | 81.03 ± 9.75 | 83.82 ± 10.02 | 94.13 ± 4.62 | 83.11 ± 13.59 | 89.35 ± 4.66 | 86.26 ± 7.06 |
| Class 7 | 28.17 ± 5.74 | 30.87 ± 3.73 | 31.48 ± 13.05 | 24.67 ± 10.50 | 30.82 ± 9.48 | 29.48 ± 12.65 | 30.81 ± 10.37 | 23.05 ± 5.57 |
| Class 8 | 94.87 ± 3.29 | 96.93 ± 1.98 | 95.89 ± 4.25 | 95.70 ± 3.43 | 98.04 ± 2.45 | 94.81 ± 6.98 | 97.38 ± 2.02 | 87.84 ± 5.77 |
| Class 9 | 15.57 ± 6.67 | 21.48 ± 9.61 | 16.00 ± 14.56 | 10.37 ± 4.45 | 19.36 ± 6.95 | 13.55 ± 5.34 | 20.65 ± 8.51 | 27.00 ± 12.61 |
| Class 10 | 39.79 ± 7.93 | 49.57 ± 11.23 | 52.40 ± 7.73 | 48.84 ± 12.69 | 58.26 ± 12.92 | 58.13 ± 17.90 | 49.23 ± 10.02 | 58.12 ± 12.20 |
| Class 11 | 62.98 ± 3.67 | 66.46 ± 5.47 | 70.44 ± 6.74 | 71.18 ± 10.28 | 74.33 ± 6.83 | 72.51 ± 11.74 | 76.45 ± 4.53 | 80.87 ± 6.08 |
| Class 12 | 23.10 ± 3.24 | 27.73 ± 5.52 | 24.23 ± 7.40 | 34.20 ± 9.16 | 34.27 ± 5.53 | 39.75 ± 9.15 | 41.81 ± 10.88 | 64.15 ± 11.38 |
| Class 13 | 71.75 ± 8.06 | 74.89 ± 10.09 | 91.92 ± 11.37 | 72.02 ± 11.21 | 75.01 ± 11.52 | 49.34 ± 15.30 | 87.65 ± 7.10 | 93.35 ± 5.03 |
| Class 14 | 88.75 ± 4.16 | 86.18 ± 6.97 | 81.50 ± 5.15 | 89.00 ± 7.22 | 94.21 ± 3.02 | 93.31 ± 4.31 | 97.28 ± 2.36 | 97.07 ± 1.80 |
| Class 15 | 33.87 ± 7.88 | 50.30 ± 12.56 | 43.06 ± 9.66 | 51.54 ± 10.66 | 55.58 ± 7.68 | 62.08 ± 8.38 | 66.75 ± 12.99 | 73.98 ± 13.18 |
| Class 16 | 83.89 ± 24.83 | 96.94 ± 5.15 | 84.29 ± 20.80 | 71.13 ± 11.60 | 86.99 ± 9.88 | 45.91 ± 24.51 | 93.71 ± 15.33 | 56.46 ± 20.95 |
| OA | 49.45 ± 2.22 | 55.09 ± 3.51 | 55.98 ± 2.93 | 57.02 ± 3.00 | 60.18 ± 3.53 | 60.84 ± 3.15 | 63.16 ± 3.23 | 69.62 ± 3.73 |
| AA | 49.80 ± 1.70 | 55.19 ± 1.96 | 54.77 ± 1.89 | 55.48 ± 2.47 | 59.41 ± 1.73 | 56.66 ± 4.65 | 63.58 ± 2.63 | 65.83 ± 3.41 |
| kappa | 43.64 ± 2.33 | 49.62 ± 3.80 | 50.65 ± 3.00 | 52.17 ± 3.30 | 55.66 ± 3.72 | 56.52 ± 3.41 | 58.84 ± 3.38 | 65.99 ± 4.01 |

| Criteria | TSVM Mean ± SD | EMP + TSVM Mean ± SD | EMP + GCN Mean ± SD | 3D-HyperGAMO Mean ± SD | DFSL + SVM Mean ± SD | RN-FSC Mean ± SD | ESFL + SVM Mean ± SD | ESFL + RF Mean ± SD |
|---|---|---|---|---|---|---|---|---|
| Class 1 | 96.11 ± 5.46 | 94.81 ± 5.92 | 99.93 ± 0.21 | 89.82 ± 11.30 | 99.92 ± 0.24 | 91.105 ± 7.38 | 87.74 ± 15.27 | 93.92 ± 10.86 |
| Class 2 | 98.12 ± 1.18 | 98.92 ± 0.47 | 98.22 ± 0.36 | 96.78 ± 3.78 | 99.63 ± 1.02 | 97.21 ± 4.33 | 95.07 ± 7.26 | 98.88 ± 2.30 |
| Class 3 | 86.09 ± 6.85 | 85.83 ± 5.36 | 92.04 ± 1.43 | 92.82 ± 4.40 | 93.04 ± 3.40 | 88.68 ± 7.02 | 96.95 ± 3.27 | 94.14 ± 5.04 |
| Class 4 | 96.35 ± 1.76 | 97.27 ± 0.75 | 96.67 ± 2.72 | 92.82 ± 4.72 | 98.48 ± 1.63 | 86.56 ± 9.46 | 90.04 ± 4.41 | 81.09 ± 8.35 |
| Class 5 | 94.29 ± 4.81 | 94.08 ± 4.45 | 96.59 ± 3.37 | 93.76 ± 3.89 | 94.32 ± 4.16 | 97.06 ± 2.98 | 98.54 ± 2.45 | 96.89 ± 1.58 |
| Class 6 | 99.74 ± 0.26 | 99.97 ± 0.04 | 99.93 ± 0.03 | 99.27 ± 1.14 | 99.99 ± 0.02 | 97.89 ± 2.66 | 99.98 ± 0.03 | 99.22 ± 0.77 |
| Class 7 | 92.88 ± 5.17 | 91.24 ± 3.11 | 98.04 ± 1.77 | 96.52 ± 4.82 | 99.13 ± 2.39 | 93.60 ± 4.02 | 99.47 ± 0.57 | 98.36 ± 1.51 |
| Class 8 | 72.93 ± 4.03 | 74.59 ± 5.73 | 71.15 ± 1.69 | 75.35 ± 11.71 | 80.08 ± 5.79 | 84.95 ± 7.24 | 91.83 ± 9.81 | 89.89 ± 5.27 |
| Class 9 | 97.25 ± 3.18 | 98.23 ± 1.35 | 98.67 ± 1.05 | 97.80 ± 1.97 | 96.34 ± 5.80 | 95.78 ± 3.00 | 97.81 ± 2.16 | 97.90 ± 1.13 |
| Class 10 | 78.75 ± 8.44 | 86.68 ± 4.63 | 84.89 ± 5.74 | 88.90 ± 6.01 | 91.74 ± 8.65 | 88.66 ± 9.62 | 98.82 ± 1.15 | 95.20 ± 3.80 |
| Class 11 | 63.99 ± 9.98 | 69.43 ± 6.94 | 78.57 ± 8.20 | 86.46 ± 11.81 | 63.45 ± 3.75 | 87.01 ± 8.15 | 90.37 ± 5.15 | 90.41 ± 9.54 |
| Class 12 | 91.68 ± 5.12 | 90.51 ± 3.86 | 92.56 ± 4.18 | 92.59 ± 7.77 | 98.67 ± 1.38 | 96.83 ± 3.62 | 96.13 ± 5.16 | 92.74 ± 7.90 |
| Class 13 | 82.34 ± 10.91 | 81.16 ± 11.20 | 90.58 ± 7.04 | 89.34 ± 13.49 | 99.84 ± 0.33 | 95.12 ± 4.56 | 89.62 ± 5.21 | 88.39 ± 7.01 |
| Class 14 | 80.62 ± 13.62 | 92.90 ± 3.25 | 80.06 ± 16.48 | 79.95 ± 12.65 | 99.63 ± 0.53 | 81.26 ± 11.66 | 90.18 ± 5.33 | 69.64 ± 12.28 |
| Class 15 | 55.43 ± 5.69 | 58.29 ± 6.83 | 64.91 ± 5.22 | 55.17 ± 11.43 | 54.62 ± 9.92 | 65.69 ± 11.15 | 65.39 ± 12.12 | 67.27 ± 6.46 |
| Class 16 | 93.61 ± 4.11 | 86.66 ± 7.21 | 95.11 ± 5.56 | 92.83 ± 6.01 | 97.82 ± 5.32 | 88.60 ± 9.91 | 99.44 ± 0.60 | 96.34 ± 2.19 |
| OA | 82.25 ± 1.36 | 83.58 ± 1.65 | 85.93 ± 0.99 | 83.70 ± 4.40 | 84.52 ± 3.32 | 86.37 ± 3.32 | 89.36 ± 4.42 | 88.81 ± 2.12 |
| AA | 86.26 ± 1.30 | 87.54 ± 0.79 | 89.87 ± 0.98 | 88.76 ± 2.59 | 91.67 ± 0.84 | 89.75 ± 1.61 | 92.96 ± 2.40 | 90.63 ± 1.56 |
| kappa | 80.31 ± 1.48 | 81.78 ± 1.81 | 84.33 ± 1.10 | 81.97 ± 4.84 | 82.90 ± 3.60 | 84.91 ± 3.65 | 88.23 ± 4.87 | 87.61 ± 2.33 |

| HSI | Criteria | 3DCAE Mean ± SD | EMP + TNT Mean ± SD | CACNN Mean ± SD | DBMA Mean ± SD | DIN-SSC Mean ± SD | FOctConvPA Mean ± SD | ESFL + SVM Mean ± SD | ESFL + RF Mean ± SD |
|---|---|---|---|---|---|---|---|---|---|
| UP | OA | 88.65 ± 1.78 | 93.65 ± 0.81 | 97.49 ± 1.39 | 98.03 ± 1.30 | 97.54 ± 0.74 | 83.57 ± 3.48 | 99.05 ± 0.31 | 97.89 ± 0.28 |
| | AA | 86.96 ± 1.29 | 92.34 ± 0.79 | 97.95 ± 0.81 | 97.11 ± 1.49 | 96.93 ± 0.94 | 73.22 ± 7.29 | 98.55 ± 0.59 | 96.80 ± 0.57 |
| | kappa | 85.24 ± 2.20 | 91.72 ± 1.05 | 96.67 ± 1.83 | 97.41 ± 1.68 | 96.76 ± 0.97 | 78.04 ± 4.81 | 98.75 ± 0.41 | 97.22 ± 0.37 |
| IP | OA | 82.00 ± 1.02 | 94.60 ± 2.14 | 93.79 ± 0.89 | 95.70 ± 1.44 | 94.02 ± 1.61 | 95.87 ± 0.90 | 96.17 ± 0.49 | 96.44 ± 0.69 |
| | AA | 82.76 ± 0.89 | 94.73 ± 1.79 | 95.65 ± 0.66 | 96.13 ± 1.10 | 93.81 ± 1.54 | 95.34 ± 1.40 | 96.01 ± 0.54 | 96.04 ± 0.77 |
| | kappa | 79.11 ± 1.19 | 93.69 ± 2.48 | 92.67 ± 1.04 | 94.97 ± 1.66 | 93.02 ± 1.87 | 95.14 ± 1.07 | 95.51 ± 0.57 | 95.83 ± 0.81 |
| SA | OA | 90.73 ± 0.61 | 97.19 ± 4.39 | 95.95 ± 0.60 | 96.18 ± 1.49 | 97.76 ± 1.06 | 98.21 ± 0.64 | 99.19 ± 0.27 | 98.20 ± 0.41 |
| | AA | 93.86 ± 0.43 | 98.64 ± 1.23 | 98.19 ± 0.27 | 98.25 ± 0.59 | 98.32 ± 0.76 | 96.50 ± 1.52 | 99.48 ± 0.18 | 98.02 ± 0.48 |
| | kappa | 89.70 ± 0.67 | 96.89 ± 4.83 | 95.48 ± 0.68 | 95.75 ± 1.65 | 97.51 ± 1.17 | 98.01 ± 0.71 | 99.09 ± 0.30 | 98.00 ± 0.45 |

| Classifier | Criteria | No Ensemble (Scale = 32) | No Ensemble (Scale = 64) | No Ensemble (Scale = 96) | Feature-Level Ensemble (Mean) | Feature-Level Ensemble (Element Multiplication) | Feature-Level Ensemble (Concatenation) | Feature-Level Ensemble (Mean + Spectral Vector) | Feature- and View-Level Ensembles |
|---|---|---|---|---|---|---|---|---|---|
| SVM | OA | 70.37 ± 2.06 | 63.87 ± 3.13 | 79.27 ± 2.42 | 83.74 ± 4.31 | 82.59 ± 5.35 | 75.94 ± 5.68 | 87.12 ± 4.75 | 89.36 ± 4.42 |
| | AA | 67.09 ± 0.87 | 73.23 ± 2.22 | 79.55 ± 2.39 | 88.22 ± 2.41 | 87.75 ± 2.69 | 86.80 ± 2.38 | 91.80 ± 1.83 | 92.96 ± 2.40 |
| | kappa | 67.38 ± 2.19 | 60.57 ± 3.51 | 77.02 ± 2.75 | 81.99 ± 4.75 | 80.72 ± 5.91 | 73.26 ± 6.76 | 85.77 ± 5.20 | 88.23 ± 4.87 |
| RF | OA | 71.73 ± 2.99 | 70.96 ± 3.78 | 77.78 ± 1.97 | 83.70 ± 4.17 | 83.21 ± 3.87 | 81.62 ± 5.32 | 88.16 ± 2.34 | 88.81 ± 2.12 |
| | AA | 68.68 ± 2.19 | 75.28 ± 2.99 | 75.74 ± 1.14 | 86.62 ± 2.49 | 85.56 ± 2.62 | 84.90 ± 3.04 | 90.81 ± 1.15 | 90.63 ± 1.56 |
| | kappa | 68.86 ± 3.19 | 68.14 ± 4.07 | 75.53 ± 2.22 | 81.98 ± 4.59 | 81.43 ± 4.26 | 79.70 ± 5.84 | 86.92 ± 2.57 | 87.61 ± 2.33 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, B.; Gao, K.; Yu, A.; Ding, L.; Qiu, C.; Li, J. ES2FL: Ensemble Self-Supervised Feature Learning for Small Sample Classification of Hyperspectral Images. Remote Sens. 2022, 14, 4236. https://doi.org/10.3390/rs14174236

Liu B, Gao K, Yu A, Ding L, Qiu C, Li J. ES2FL: Ensemble Self-Supervised Feature Learning for Small Sample Classification of Hyperspectral Images. Remote Sensing. 2022; 14(17):4236. https://doi.org/10.3390/rs14174236

Chicago/Turabian Style: Liu, Bing, Kuiliang Gao, Anzhu Yu, Lei Ding, Chunping Qiu, and Jia Li. 2022. "ES2FL: Ensemble Self-Supervised Feature Learning for Small Sample Classification of Hyperspectral Images" Remote Sensing 14, no. 17: 4236. https://doi.org/10.3390/rs14174236

APA Style: Liu, B., Gao, K., Yu, A., Ding, L., Qiu, C., & Li, J. (2022). ES2FL: Ensemble Self-Supervised Feature Learning for Small Sample Classification of Hyperspectral Images. Remote Sensing, 14(17), 4236. https://doi.org/10.3390/rs14174236