Abstract

With the rapid development of high-resolution synthetic aperture radar (SAR) systems, techniques that use multiple two-dimensional (2-D) SAR images acquired at different view angles to extract the three-dimensional (3-D) coordinates of targets have attracted wide attention in recent years. Unlike the traditional multi-channel SAR used for 3-D coordinate extraction, the single-channel curvilinear SAR (CLSAR) offers a large variation of view angle, requires less acquired data, and has a lower device cost. However, because of its complex aerodynamic configuration and flight characteristics, several issues must be addressed, including the establishment of the mathematical model, the analysis of the imaging geometry, and the design of a high-precision extraction model. To address these challenges, this paper presents a 3-D vector model of CLSAR and analyzes the imaging geometries under different view angles. A novel 3-D coordinate extraction approach based on radargrammetry is then proposed, in which a unique property of the SAR system, called cylindrical symmetry, is used to establish a new extraction model. Compared with the conventional approach, the proposed one places fewer constraints on the trajectory of the radar platform, requires fewer model parameters, and achieves higher extraction accuracy without the assistance of extra ground control points (GCPs). Numerical results using simulated data demonstrate the effectiveness of the proposed approach.

1. Introduction

As a well-known technique that uses range and Doppler information to produce high-resolution images of the observed scene, synthetic aperture radar (SAR) can operate at different frequencies and view angles [1]. This feature makes SAR a flexible tool for information extraction. Based on the assumption of a linear flight path at a constant height, a two-dimensional (2-D) SAR image is obtained by the synthetic aperture in the azimuth direction and the large time-bandwidth product signal in the range direction [2,3,4]. However, a single 2-D SAR image cannot provide the target’s height information because all targets with different height coordinates are projected onto the same 2-D image [5,6,7]. The real 3-D coordinates of targets therefore cannot be obtained, which greatly limits practical use. Thus, 3-D coordinate extraction based on multi-aspect SAR images, which can obtain accurate 3-D position information of targets, is preferred [8,9]. Currently, the 3-D coordinate extraction technique is mainly used for reconnaissance, disaster prediction, and target location, but it is still not widely applied because of limitations including the low accuracy of the extraction model and the strict requirements on the radar system and platform [10].

Several realizations of 3-D SAR coordinate extraction have been studied in recent years. According to the processing method, they can be divided into two main categories, which are elaborated as follows:

- 3-D SAR coordinate extraction based on 2-D synthetic aperture: the 2-D synthetic aperture in the azimuth and height directions can be formed by controlling the motion trajectory of the aircraft in space. Hence, after combining with the large bandwidth signal, the 3-D coordinate information of targets can be extracted. This phase-based method is one of the mainstream methods for extracting 3-D coordinates of targets. Several technologies based on that have been proposed and utilized in recent years, including the interferometric SAR (In-SAR) [11,12,13], tomography SAR (Tomo-SAR) [14,15,16], and Linear Array SAR (LA-SAR) [17,18,19], which are shown below.

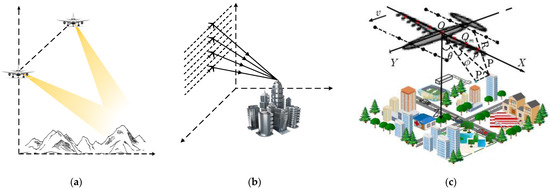

As illustrated in Figure 1, In-SAR and Tomo-SAR can form a 2-D synthetic aperture by employing several multi-pass acquisitions over the same area. However, the acquisition numbers in height direction cannot always meet the requirement of the Nyquist sampling theorem [20]. Furthermore, the possible non-uniform spatial samples in the elevation direction pose great challenges to the processing approaches. These two problems greatly limit the applicability of these two techniques [21]. The LA-SAR technique, by installing a large linear array along the cross track, can avoid these problems and form a 2-D synthetic aperture by a single flight path. However, LA-SAR cannot obtain high resolution in the cross-track direction due to the limited installation space [22]. Moreover, to ensure the imaging quality and coordinate extracting accuracy, LA-SAR must maintain a straight path flight with a fixed height. Therefore, LA-SAR is also limited in application [23].

Figure 1. 3-D SAR coordinate extraction techniques based on 2-D synthetic aperture. (a) In-SAR. (b) Tomo-SAR. (c) LA-SAR.


- 3-D SAR coordinate extraction based on radargrammetry: as an alternative to the phase-based techniques, a method called radargrammetry has been implemented [24]. Radargrammetry theory was first introduced in 1950, and it was the first method used to derive Digital Surface Models (DSMs) from airborne radar data in 1986 [25]. However, the accuracy achieved was on the order of 50–100 m, which is not satisfactory for applications, so it has been used less than In-SAR and Tomo-SAR. In the last decade, with the emergence of more and more high-resolution SAR systems, radargrammetry has again become a hot topic. The technique exploits only the amplitude of SAR images taken from the same side but at different view angles, which produces a relative change in target position between the images [26], as shown in Figure 2. Because the phase is not used, phase unwrapping errors and temporal decorrelation problems are avoided [27,28]. Moreover, compared with the techniques based on 2-D synthetic aperture, radargrammetry places fewer restrictions on the radar flight path and installation space [29]. Therefore, it can be implemented with a single-channel airborne CLSAR.

Figure 2. TerraSAR-X images of Swissotel Berlin, taken from view angles of 27.1°, 37.8°, and 45.8° from left to right, respectively.


At present, several geometry modeling solutions and their improvements have been established to extract the 3-D coordinates from SAR stereo models based on radargrammetry. The mainstream model is the Range and Doppler model (RDM), which primarily considers the precise relationship between image coordinates (line, sample) and target–space coordinates (latitude, longitude, height) with respect to the geometry of the SAR image [30]. The solution of this model utilizes two Doppler equations and two range equations to obtain the position of the unknown targets in space intersections [31]. Another common model is the rational polynomial coefficient (RPC) model, which was first demonstrated by the researchers in ref. [32] to process high-resolution optical satellite images and was applied by Guo Zhang and Zhen Li to the 3-D coordinate extraction from SAR stereoscopic pairs [33]. However, these models utilize a series of SAR system parameters including the instantaneous velocity vector, the roll angle, pitch angle, and yaw angle of the Antenna Phase Center (APC), which significantly affect the accuracy of coordinate extraction. Moreover, in order to ensure the accuracy of the model, both methods need to place ground control points (GCPs) in the imaging scene in advance, which further affects the practicality of the methods [34,35].

To solve these problems of the radargrammetry technique, a novel 3-D coordinate extraction approach for the single-channel CLSAR system is proposed. First, the 3-D vector geometry model of CLSAR with different view angles and baselines is established, and 2-D SAR images projected onto different imaging planes are obtained. Then, the geometrical relationship between the image coordinates and the radar platform is analyzed. Based on that, a novel model called the cylindrical symmetry (CS) model is proposed and used for 3-D coordinate extraction. Compared with the common models, the proposed approach has high extraction accuracy, places few constraints on the flight trajectory, and does not need extra GCPs, which makes it well suited to practical applications.

The rest of this paper is organized as follows. In Section 2, the geometry model of the CLSAR system with different view angles and baselines is established by using the 2-D Taylor series expansion, and the vector expression of the echo signal is presented. The 3-D coordinate extraction approach based on a novel radargrammetry model is proposed in Section 3. Section 4 presents numerical simulation results to evaluate our approach. In Section 5, a conclusion is drawn.

2. Geometry Model

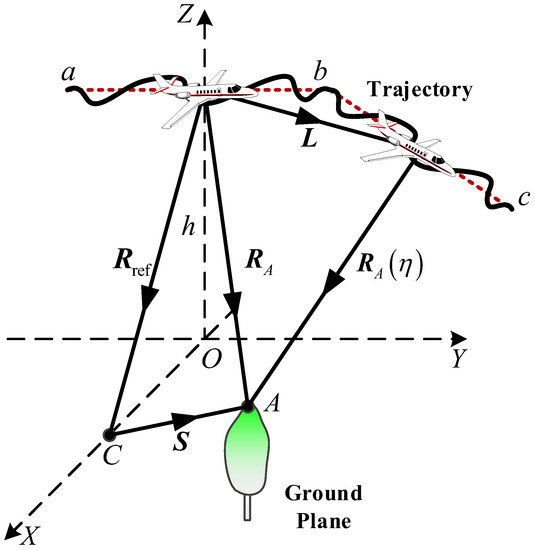

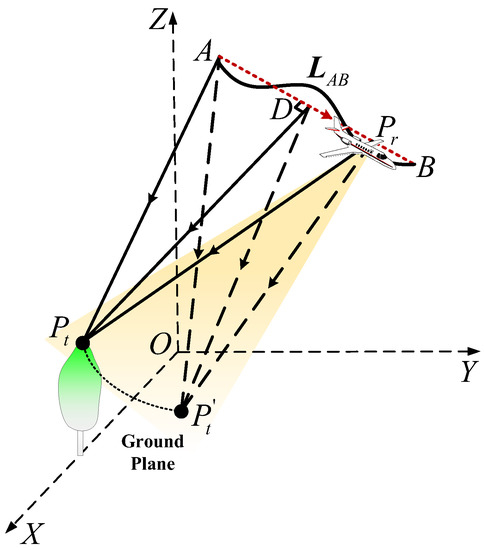

The radargrammetry technique performs 3-D coordinate extraction based on the determination of the SAR system stereo model. In this section, a 3-D vector geometry model of the CLSAR system is illustrated firstly, where the radar works in spotlight mode. As shown in Figure 3, the projection of the initial radar location on the ground (point ) is assumed to be the origin of the Cartesian coordinate system , where , , and denote the range, azimuth, and height directions. The trajectory of the radar platform is a curved path with a large variation of view angle. In order to obtain different SAR images, the whole curved aperture was divided into two sub-apertures according to different view angles and baselines. was defined as the initial elevation of the radar platform. Point was chosen as the central reference target and denotes the corresponding reference slant range vector. The slant range history corresponding to the arbitrary target is expressed as:

where denotes the azimuth slow time. and are respectively the velocity and acceleration vectors. denotes the initial slant range vector from to the platform, represents the vector from to , and is the magnitude operator. The 2-D Taylor series expansion of is given by:

where is expanded into two parts, i.e., the scalar one which is the same in the traditional SAR and the vectorial one that is caused by the curved path. and are respectively the scalar and vectorial coefficients [36]. With range history in (2), the echo signal of can be expressed as:

where is the fast time and and denote the window functions of fast and slow times, respectively. represents the synthetic aperture time, is the beam center time of reference point , is the wavelength, denotes the range chirp, and is the speed of light.

Figure 3. 3-D geometry model of CLSAR.

As shown in Figure 3, the original echo signal consists of the slave part and the master part according to different view angles and baselines of the radar flight path, which is given by:

where is the echo signal corresponding to the sub-aperture with a small variation in baseline height (from point to ) and utilized for slave image focusing with being the corresponding slow time. is the echo signal corresponding to the sub-aperture with a large variation in baseline height (from point to ), which is utilized for master image focusing with being the corresponding slow time. These two parts can form an image pair composed of two images from different view angles and can be utilized for 3-D coordinate extraction by using radargrammetry model.
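The slant range history described by (1) can be illustrated numerically. The sketch below assumes the form implied by the definitions in this section: the platform moves from an initial position with constant velocity and acceleration vectors, and the range is the magnitude of the vector from the target to the platform. The function names and all numeric values are illustrative assumptions, not values from the paper.

```python
import math

def platform_position(p0, v, a, t):
    """Platform position at slow time t for constant velocity v and acceleration a."""
    return tuple(p + vi * t + 0.5 * ai * t * t for p, vi, ai in zip(p0, v, a))

def slant_range(p0, v, a, target, t):
    """Instantaneous distance between the platform and a target at slow time t."""
    return math.dist(platform_position(p0, v, a, t), target)

def range_history(p0, v, a, target, times):
    """Slant range sampled at each slow time in `times`."""
    return [slant_range(p0, v, a, target, t) for t in times]

# Illustrative values (assumptions): x = range, y = azimuth, z = height, in metres.
p0 = (0.0, 0.0, 3000.0)      # initial platform position
v = (0.0, 100.0, -10.0)      # velocity vector (m/s)
a = (1.0, 0.0, 2.0)          # acceleration vector (m/s^2), makes the path curved
target = (4000.0, 0.0, 0.0)  # reference target on the ground plane

times = [-1.0 + 0.5 * k for k in range(5)]
for t, r in zip(times, range_history(p0, v, a, target, times)):
    print(f"t = {t:+.1f} s, R = {r:.2f} m")
```

With a nonzero acceleration vector the range history is no longer symmetric about the beam center time, which is the effect the vectorial coefficients of the Taylor expansion in (2) account for.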

3. Extraction Approach

3.1. Imaging Focusing of 2-D SAR Image Pair

According to the geometry model, two sub-apertures corresponding to different baselines were utilized to obtain a SAR image pair with different view angles. In this section, in order to increase the geometric differences between the two images without increasing the integration time, two different algorithms which make SAR images project onto different imaging planes were utilized.

3.1.1. 2-D Slave Image Focusing

In the SAR image pair, the slave image provides auxiliary information about the targets and is focused on the slant range plane. Both imaging speed and accuracy should be considered, so an Omega-K algorithm was chosen. However, due to the accelerations present in CLSAR, the echo signal corresponding to the slave sub-aperture cannot be processed directly by the conventional Omega-K algorithm [37]. Thus, an improved Omega-K algorithm is presented.

By using the vectorial slant range history expressed in (2), the received echo signal corresponding to the slave sub-aperture in range-azimuth 2-D time domains can be expressed as:

where denotes the carrier frequency. After range compression, the signal can be rewritten as:

where represents the range wavenumber. Before using the conventional Omega-K algorithm, the space invariant terms caused by acceleration should be compensated for first, where the compensation function is derived as:

where with , , and , respectively. By multiplying (6) with (7) and FT along the azimuth slow time direction, the signal in the 2-D wavenumber domain can be expressed as:

where is the equivalent azimuth wavenumber with . , and is the azimuth frequency. It can be known that, after being compensated for by , the 2-D spectrum expressed in (8) has a form similar to the traditional cases. Thus, the conventional Omega-K processing can be utilized, where the bulk focusing is processed by a 2-D wavenumber domain phase compensation function:

After bulk focusing, the residual range migration can be compensated for by the Stolt interpolation, which is expressed as follows:

This substitution is considered to be a mapping of the original range wavenumber variable into a new range wavenumber variable , during which the residual phase after bulk focusing can be compensated for. Thus, performing the Stolt interpolation and 2-D inverse Fourier transform (IFT), the targets in the 3-D imaging scene will be projected onto a 2-D slant range plane, which is:

According to (11), all targets will be focused at the pixel on the slave image after utilizing the improved Omega-K algorithm, where denotes the azimuth location and denotes the distance from targets to the baseline of slave sub-aperture.
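The Stolt step can be sketched as a change of variable followed by resampling. The example below uses the conventional Stolt mapping, in which the new range wavenumber is the square root of the squared range wavenumber minus the squared azimuth wavenumber; this is an assumption standing in for (10), which in the improved algorithm uses the equivalent azimuth wavenumber. Function names are hypothetical, and linear interpolation is chosen only for brevity.

```python
import bisect
import math

def stolt_map(k_r, k_x):
    """Conventional Stolt change of variable: K_y = sqrt(K_r^2 - K_x^2)."""
    if abs(k_x) > abs(k_r):
        raise ValueError("azimuth wavenumber exceeds range wavenumber")
    return math.sqrt(k_r * k_r - k_x * k_x)

def resample_linear(x_src, y_src, x_new):
    """Linearly interpolate samples (x_src ascending) at x_new; zero outside the support."""
    out = []
    for x in x_new:
        if x < x_src[0] or x > x_src[-1]:
            out.append(0j)
            continue
        i = min(bisect.bisect_right(x_src, x) - 1, len(x_src) - 2)
        t = (x - x_src[i]) / (x_src[i + 1] - x_src[i])
        out.append((1.0 - t) * y_src[i] + t * y_src[i + 1])
    return out

def stolt_interpolate_row(k_r_axis, spectrum_row, k_x, k_y_axis):
    """Resample one azimuth-wavenumber row from the warped K_y positions onto a uniform K_y axis."""
    k_y_src = [stolt_map(k, k_x) for k in k_r_axis]  # ascending if k_r_axis is ascending and positive
    return resample_linear(k_y_src, spectrum_row, k_y_axis)

# Toy usage: three range-wavenumber samples at azimuth wavenumber 3.
k_r_axis = [5.0, 6.0, 7.0]
row = [1 + 0j, 2 + 0j, 3 + 0j]
print(stolt_interpolate_row(k_r_axis, row, 3.0, [4.0, 5.0, 6.0]))
```

In practice a windowed sinc interpolator is usually preferred over linear interpolation, since interpolation error in this step translates directly into residual phase error.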

3.1.2. 2-D Master Image Focusing

In order to extract the accurate 3-D coordinate information of the target, the SAR image pair should be projected onto different imaging planes. The slave image is focused on the slant range plane by an improved Omega-K algorithm. Thus, the master image should be focused on the ground plane by a back-projection algorithm (BPA).

One of the most important steps of the BPA is to divide the ground plane into grids. The size of these grids depends on the range and azimuth resolutions of the imaging system. Therefore, it is necessary to calculate the corresponding resolution of the master image focusing system. Affected by the 3-D velocity vector and acceleration vector in the master sub-aperture, the analysis and calculation methods of resolutions in traditional SAR are not suitable and need modification. The most comprehensive information regarding the SAR resolution can be obtained by the ambiguity function (AF) approaches. However, due to the severe coupling of range frequency and spatial rotation angle in the master sub-aperture, the traditional methods that divide the AF into two independent functions cannot work [38,39]. In SAR, the linear frequency modulation (LFM) signal is transmitted, which can measure differences in time delay and Doppler frequency. Imaging performance depends on the capability to translate these differences in different detected areas [40,41]. Concerning this issue, spatial resolutions of the master image focusing system are analyzed by using the time delay and Doppler frequency differences based on the vector geometry and 2-D Taylor series expansion in (2).

According to the vector geometry model shown in Figure 3, the time delay difference and Doppler frequency difference between arbitrary target and reference target are:

where is the phase history difference between targets and . Then, the spatial range resolution and spatial azimuth resolution (−3 dB width) can be analyzed by performing gradient operation on the range delay time and Doppler frequency with respect to the variation , which can be represented as:

where and denote the differential operator and gradient operator, respectively. is the unit vector from the arbitrary target to the radar platform in range gradient direction. is the transmitted pulse bandwidth. and are the start time and end time of the effective synthetic aperture.

Due to the irregular curved path flight corresponding to the master sub-aperture, the resolutions expressed in (14) and (15) are in the spatial domain, which are different from the traditional range and azimuth resolutions in the ground plane.
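As a rough numerical illustration of resolutions defined by gradients of time delay and Doppler frequency, the sketch below uses a common approximation rather than (14) and (15) themselves: the range resolution along the line of sight is taken as c/(2B), and the azimuth resolution as the wavelength divided by twice the change in the line-of-sight unit vector between the start and end of the aperture. It assumes the line of sight rotates monotonically over the aperture and omits the −3 dB broadening factor. All names and values are assumptions for illustration.

```python
import math

C = 299_792_458.0  # speed of light (m/s)

def unit(vec):
    """Return vec scaled to unit length."""
    n = math.sqrt(sum(c * c for c in vec))
    return tuple(c / n for c in vec)

def range_resolution(bandwidth_hz):
    """Slant-range resolution along the line of sight for pulse bandwidth B."""
    return C / (2.0 * bandwidth_hz)

def azimuth_resolution(wavelength, target, pos_start, pos_end):
    """Approximate spatial azimuth resolution from the rotation of the line of sight."""
    u_start = unit(tuple(p - q for p, q in zip(pos_start, target)))
    u_end = unit(tuple(p - q for p, q in zip(pos_end, target)))
    delta_u = math.dist(u_end, u_start)  # change of the target-to-platform unit vector
    return wavelength / (2.0 * delta_u)

# Illustrative geometry: 200 m aperture, 3 km height, target 4 km away in ground range.
target = (4000.0, 0.0, 0.0)
rho_r = range_resolution(150e6)
rho_a = azimuth_resolution(0.03, target, (0.0, -100.0, 3000.0), (0.0, 100.0, 3000.0))
print(f"range resolution ~ {rho_r:.3f} m, azimuth resolution ~ {rho_a:.3f} m")
```

For a straight, level aperture this reduces to the familiar value of wavelength times range divided by twice the aperture length; for a curved aperture the direction of the resolution vector changes with the path, which is why a projection onto the ground plane is needed afterwards.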

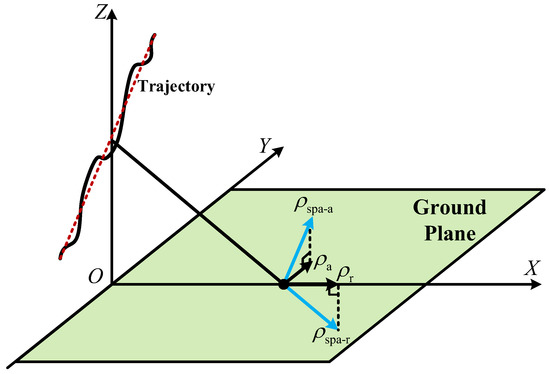

As illustrated in Figure 4, and are the spatial resolutions calculated by time delay and Doppler frequency differences, respectively. and are the traditional range and azimuth resolutions on the ground plane. The directions of spatial resolutions depend on the radar flight path, which cannot meet the requirement of BPA. Thus, it is necessary to analyze the relations between spatial resolutions and ground plane resolutions.

Figure 4. Directions of spatial resolution and traditional resolution.

Assuming that the spatial resolution space is spanned by two orthogonal unit vectors and , where denotes the unit vector of the spatial Doppler gradient, the ground plane space is also spanned by two unit vectors and , which are the projections of vectors and on the ground plane, respectively, and can be expressed as:

where is the unit vector along the height direction. According to the relations in (16) and (17), a transfer matrix can be derived as:

It is clear that the spatial resolution space can be generated by multiplying the transfer matrix and the ground plane space. Thus, the resolution in the ground plane can be obtained as:

After the range and azimuth resolutions are calculated, the ground plane can be divided into a 2-D matrix grid , i.e., and . Then, after completing the coherent integration of all signals projected to the corresponding grids, the master image can be focused on the ground plane by BPA:

where denotes the pixel value of the target located at . is the slant range history of target . Note that, after being processed by BPA, targets will be focused at the pixel on the master image.
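The back-projection step can be sketched for a single point target as follows. This is a minimal time-domain illustration, assuming ideal range-compressed data with a sinc envelope, linear interpolation between range bins, and a small ground-plane grid; the parameter values, function names, and the toy trajectory are assumptions, not the simulation settings of the paper.

```python
import cmath
import math

C = 3.0e8  # propagation speed used in this toy example (m/s)

def sinc(x):
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def trajectory(p0, v, a, times):
    """Curved platform path with constant velocity and acceleration vectors."""
    return [tuple(p + vi * t + 0.5 * ai * t * t for p, vi, ai in zip(p0, v, a))
            for t in times]

def simulate_range_compressed(positions, target, wavelength, bandwidth, r0, dr, n_bins):
    """Ideal range-compressed echo of one point target, one row per pulse."""
    data = []
    for pos in positions:
        r = math.dist(pos, target)
        phase = cmath.exp(-4j * math.pi * r / wavelength)
        data.append([sinc((r0 + k * dr - r) * 2.0 * bandwidth / C) * phase
                     for k in range(n_bins)])
    return data

def back_project(data, positions, grid, wavelength, r0, dr):
    """Coherently sum, for each grid point, the phase-compensated sample at its range."""
    image = []
    for point in grid:
        acc = 0j
        for pos, row in zip(positions, data):
            r = math.dist(pos, point)
            idx = (r - r0) / dr
            k = math.floor(idx)
            if 0 <= k < len(row) - 1:
                frac = idx - k
                sample = (1.0 - frac) * row[k] + frac * row[k + 1]
                acc += sample * cmath.exp(4j * math.pi * r / wavelength)
        image.append(acc)
    return image

# Toy scene: 64 pulses over 2 s, 1 m ground grid around (4000, 0, 0).
times = [-1.0 + 2.0 * n / 63 for n in range(64)]
positions = trajectory((0.0, 0.0, 3000.0), (0.0, 100.0, -10.0), (1.0, 0.0, 2.0), times)
target = (4001.0, -2.0, 0.0)
grid = [(4000.0 + ix, float(iy), 0.0) for ix in range(-4, 5) for iy in range(-4, 5)]
data = simulate_range_compressed(positions, target, 0.03, 150e6, 4980.0, 0.25, 240)
image = back_project(data, positions, grid, 0.03, 4980.0, 0.25)
peak = max(range(len(grid)), key=lambda i: abs(image[i]))
print("brightest grid cell:", grid[peak])
```

Because the range to every grid point is computed from the actual platform positions, the same loop works for an arbitrary curved path, which is the reason a back-projection algorithm suits the master sub-aperture. The brightest cell should coincide with the simulated target when the grid spacing is coarser than the resolution, as it is here.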

3.2. Image Registration

After obtaining the master and slave images, the two images need to be registered to determine the coordinate position of the same target in the two different images. As the most sensitive step in the proposed approach, the image registration must be accurate in order to create a disparity map from the master image to the slave image [42,43]. Since the two images are focused from echo data corresponding to different view angles, rotation invariance must be considered. Therefore, a SAR image registration method based on feature point matching, called Speeded Up Robust Features (SURF), was utilized. The detector in this method is based on the box filter, which is a simple alternative to the traditional Gaussian filter and can be evaluated very fast using integral images, independently of size. Hence, the requirements on both computation time and accuracy are met. Furthermore, the random sample consensus (RANSAC) algorithm was used to eliminate the mismatches of feature points, which further ensures the accuracy of registration between the master and slave images. The transformation matrix from the master image to the slave image can be derived as:

where , denote the 2-D coordinates of feature points in the master image and slave image. denotes the parameters which need to be solved. From (22), it can be seen that the transformation matrix contains six unknown parameters. Since each feature point pair provides two equations, at least three feature point pairs with the highest matching degree need to be selected to calculate the transformation matrix.
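A six-parameter transformation of this kind can be recovered from exactly three non-collinear point pairs. The sketch below assumes the usual affine form, in which each slave coordinate is a linear combination of the master coordinates plus an offset; the parameter ordering and function names are assumptions for illustration, and Cramer's rule is used only to keep the example dependency-free.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(m, rhs):
    """Solve a 3x3 linear system by Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("feature points are collinear; transformation is not determined")
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        solution.append(det3(mc) / d)
    return solution

def affine_from_pairs(master_pts, slave_pts):
    """Return (a1..a6) with xs = a1*xm + a2*ym + a3 and ys = a4*xm + a5*ym + a6."""
    m = [[x, y, 1.0] for x, y in master_pts]
    a1, a2, a3 = solve3(m, [p[0] for p in slave_pts])
    a4, a5, a6 = solve3(m, [p[1] for p in slave_pts])
    return (a1, a2, a3, a4, a5, a6)

def apply_affine(params, point):
    """Map a master-image point into the slave image."""
    a1, a2, a3, a4, a5, a6 = params
    x, y = point
    return (a1 * x + a2 * y + a3, a4 * x + a5 * y + a6)

# Toy usage with three matched feature points (made-up pixel coordinates).
master = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
slave = [(5.0, -3.0), (15.0, -4.0), (6.0, 7.0)]
params = affine_from_pairs(master, slave)
print(params)
```

With more than three matched pairs, a least-squares fit over the inliers kept by RANSAC would normally replace the exact solve.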

3.3. 3-D Coordinate Extraction Model

In this section, a novel 3-D coordinate extraction model is proposed according to the geometric relationship between the target coordinates and the trajectory baselines from different view angles. The key idea is to use a unique property of the SAR system, called cylindrical symmetry, to establish the extraction model. Compared with the conventional models, the proposed CS model offers high precision and low trajectory constraints, and it does not need extra GCPs to be deployed in advance, which makes it well suited to practical applications.

3.3.1. Geometric Relationship in the Slave Image

The imaging geometry model of the slave image focusing is illustrated in Figure 5, where segment is the baseline of the slave sub-aperture. Targets , , and are located at the same azimuth cell and is the reference target on the ground plane. Targets and are located at the same slant range cell. After being processed by the improved Omega-K algorithm, the slave image was projected onto the slant range plane, which is spanned by two orthogonal vectors and , respectively, where is the vector corresponding to the baseline of radar flight path and denotes the slant range vector from reference target to baseline. The instantaneous slant range corresponding to , , and can be expressed as:

where denotes the slant range from targets and to baseline , is the velocity vector of the platform, is the slow time and , , and are the azimuth coordinates of targets , , and , respectively, where . According to (23), the instantaneous slant ranges and remain identical throughout the variation of slow time . Thus, the echo phases of targets and are identical, and these two targets will be focused at the same location on the slave image. This property, called cylindrical symmetry, is the reason why a single 2-D SAR image cannot be used for 3-D coordinate extraction. As a unique property of the SAR imaging system, cylindrical symmetry can be utilized to show the geometric relationship between the real 3-D coordinate of the target and the 2-D coordinate of its projection on the slave image, which can be derived as:

Figure 5. Geometry model of slave image focusing.

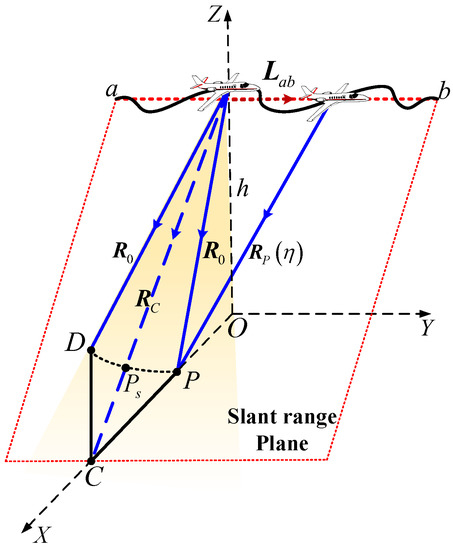
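The cylindrical symmetry property can be checked numerically. The sketch below assumes a straight slave baseline along the azimuth axis at a fixed height, and compares the range histories of two targets that share the same azimuth coordinate and the same distance to the baseline but lie at different heights. The geometry values and function names are illustrative assumptions.

```python
import math

def range_to_platform(target, height, speed, t):
    """Range from a platform flying along the azimuth (y) axis at fixed height."""
    platform = (0.0, speed * t, height)
    return math.dist(platform, target)

def point_on_cylinder(azimuth, radius, height, look_angle):
    """Point at distance `radius` from the baseline, at a look angle measured from nadir."""
    return (radius * math.sin(look_angle),
            azimuth,
            height - radius * math.cos(look_angle))

height, radius, speed = 3000.0, 5000.0, 100.0

# Same azimuth cell and same distance to the baseline, different heights.
ground = point_on_cylinder(20.0, radius, height, math.acos(height / radius))
elevated = point_on_cylinder(20.0, radius, height, math.radians(55.0))

for t in (-1.0, -0.5, 0.0, 0.5, 1.0):
    r_ground = range_to_platform(ground, height, speed, t)
    r_elevated = range_to_platform(elevated, height, speed, t)
    print(f"t = {t:+.1f} s, difference = {abs(r_ground - r_elevated):.2e} m")
```

The two range histories agree to rounding error at every slow time, so both targets are focused at the same pixel of the slave image. A second image with a different baseline is what removes this ambiguity.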

3.3.2. Geometric Relationship in the Master Image

The imaging geometry model of the master image focusing is illustrated in Figure 6, where segment denotes the baseline of master sub-aperture. denotes the radar platform. is an arbitrary target located at the 3-D observing space. According to the imaging property of BPA, the master image will be focused on the ground plane. Thus, will be projected onto the location of . The instantaneous slant range vector from radar platform to targets and can be expressed as:

where denotes the slant range vector from target to the baseline of the master sub-aperture , and denotes the slant range vector from target to the baseline. Since is the projection of on the master image, the instantaneous slant range vectors from two targets to the radar platform must remain consistent through the variation of slow time , i.e., . Thus, the slant range vectors from two targets to the baseline of master sub-aperture must be equal, which is

Figure 6. Geometry model of master image focusing.

According to Figure 6, and are all orthogonal to the baseline . Thus, the area of the triangle and are equal, i.e.,

The area of a triangle can also be represented as a cross-product of its two sides. Hence, (27) can be rewritten as:

where and represent the vectors from the initial position of master sub-aperture baseline to targets and , respectively. is the cross-product operator. Hence, according to (28), the geometric relationships of and its projection can be represented as:

With the help of the high-precision integrated navigation system (INS) mounted on the radar platform, (29) can be expressed in the Cartesian coordinate system:

where is the real 3-D coordinate of target that needs to be solved. is the 2-D coordinate of extracted from the master image. After being expanded and simplified, (29) can be rewritten as:

where the coefficients are expressed as follows:

Combining (24) and (32), the CS model of the CLSAR system can be established as

Since (38) contains three equations, the three unknown coordinates of the target can be solved directly without extra GCPs, so the model can be used to extract accurate 3-D coordinates of targets in any imaging scene.
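The idea of combining one slave-image constraint pair with one master-image constraint can be sketched numerically. The example below is a simplified stand-in for the CS model, not the closed-form coefficients of (32) to (38): it assumes straight baselines, a slave baseline along the azimuth axis, a target on the positive-range side, and that the master image supplies the distance from the target to the master baseline. The target is searched on the slave-image circle by bisection. All names and values are hypothetical.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def norm(a):
    return math.sqrt(sum(c * c for c in a))

def distance_to_line(point, line_point, direction):
    """Distance from a point to a line, via the cross product with the unit direction."""
    n = norm(direction)
    u = tuple(c / n for c in direction)
    d = tuple(p - q for p, q in zip(point, line_point))
    return norm(cross(d, u))

def solve_target(azimuth, r_slave, d_master, slave_height,
                 master_point, master_dir, iterations=80):
    """Find the point on the slave circle whose distance to the master baseline is d_master."""
    def candidate(theta):
        return (r_slave * math.sin(theta), azimuth,
                slave_height - r_slave * math.cos(theta))

    def residual(theta):
        return distance_to_line(candidate(theta), master_point, master_dir) - d_master

    lo, hi = 0.0, 1.5  # look angle from nadir (rad); assumes one root in this bracket
    f_lo = residual(lo)
    if f_lo * residual(hi) > 0.0:
        raise ValueError("no sign change in the search bracket")
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        f_mid = residual(mid)
        if f_lo * f_mid <= 0.0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return candidate(0.5 * (lo + hi))

# Toy geometry (assumptions): slave baseline along y at 3000 m, master baseline climbing.
true_target = (4000.0, 20.0, 50.0)
slave_height = 3000.0
master_point, master_dir = (0.0, 0.0, 3000.0), (0.0, 1.0, 0.5)

azimuth = true_target[1]
r_slave = math.hypot(true_target[0], true_target[2] - slave_height)
d_master = distance_to_line(true_target, master_point, master_dir)

print(solve_target(azimuth, r_slave, d_master, slave_height, master_point, master_dir))
```

The height becomes observable only because the two baselines have different directions; if the master baseline were parallel to the slave baseline through the same point, the residual would be constant along the circle and the search would fail.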

According to (38), the 3-D coordinate extraction depends on two parameters, i.e., the position of the sensor platform and the 2-D coordinates of the target in the SAR image pair. Therefore, the extraction accuracy depends on two error sources:

- (a) Orientation errors of sensor platform: the orientation errors of the sensor platform are mainly caused by the errors of the orientation equipment (GPS/INS) mounted on the platform. They affect the accuracy of the baseline vector and the slant range vector, which will reduce the accuracy of the model established by the master image and ultimately affect the 3-D coordinate extraction result. After analysis, it could be found that the 3-D coordinate extraction error caused by the platform orientation errors (generally within 2 m) was less than 5 m, which meets the actual application requirements.

- (b) Phase errors of the 2-D SAR image: the phase errors of the 2-D SAR image are mainly caused by the motion errors of the sensor platform, since the platform cannot maintain uniform motion. The phase errors include linear phase error and nonlinear phase error. Among them, the nonlinear phase error will only cause defocus of the target without affecting the position of the target, while the linear phase error will cause the azimuth offset of the target in the 2-D SAR image pair. Therefore, it is necessary to use the motion error compensation algorithm to minimize the linear phase error to ensure the accuracy of 3-D coordinate extraction.

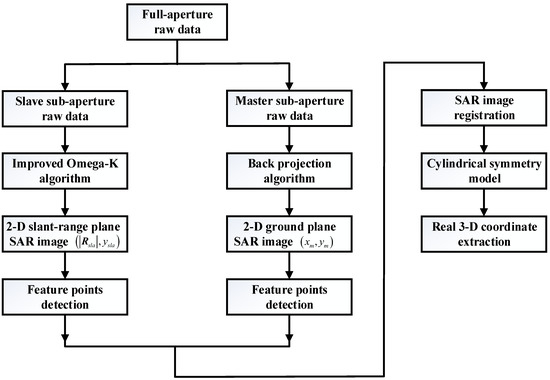

3.4. Flowchart of Extraction

The flowchart of the proposed 3-D coordinate extraction approach based on the CS model is shown in Figure 7. Note that the proposed approach consists of three steps, which are summarized as follows:

Figure 7. Flowchart of the proposed approach.

- 2-D SAR image pair focusing. Divide the full curved aperture into two sub-apertures according to different view angles and baselines. The sub-aperture with a small height variation, called the slave sub-aperture, is used to obtain the slave image on the slant range plane. The sub-aperture with a large height variation, called the master sub-aperture, is used to obtain the master image on the ground plane.

- Image pair registration. Match the master and slave images based on target features. After that, the accurate 2-D coordinate position of the same target on different SAR images can be extracted.

- 3-D coordinates extraction. Apply the CS model to extract the real 3-D coordinates of the targets from the SAR image pair.

According to the flowchart, after separating the full curved aperture into two sub-apertures, a pair of 2-D SAR images of the same 3-D scene with different view angles and imaging geometries can be obtained, which are utilized to extract the accurate 3-D coordinates of targets by the CS model. The proposed extraction approach of the single-channel CLSAR has high extraction accuracy since only the radar position parameters are required. Furthermore, the approach is practical because it has fewer restrictions on radar trajectory and no extra GCPs are required.

4. Simulation Results

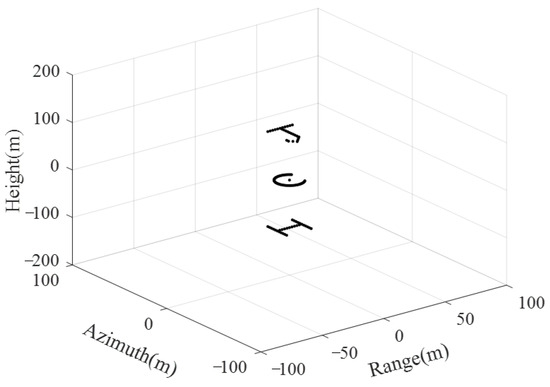

In this section, simulation results are presented to verify the effectiveness of the proposed 3-D coordinate extraction approach. The CLSAR system works in the spotlight mode and the parameters utilized in the simulation are listed in Table 1. The target setup for the simulation is shown in Figure 8, where three letters ‘J’, ‘C’, and ‘H’ composed of point targets (PTs) are located on different height planes and the letter ‘C’ is placed on the ground plane as a reference.

Table 1. Simulation parameters.

Figure 8. Geometry of the simulated targets.

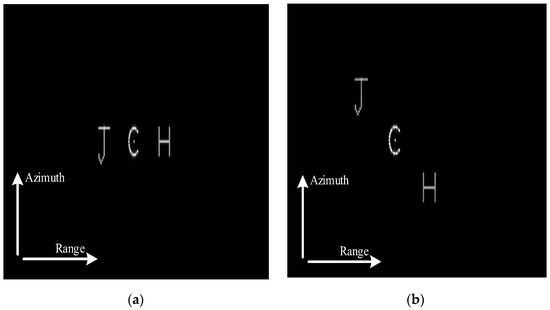

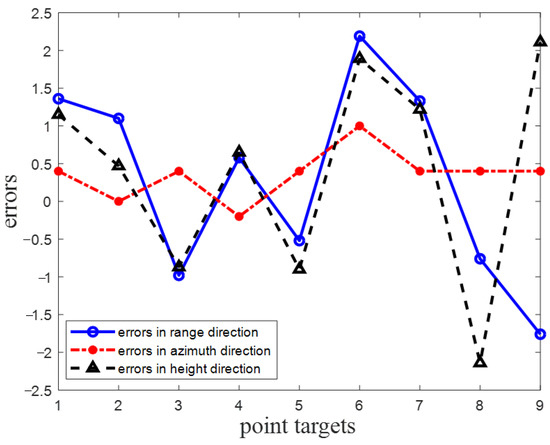

The simulation results, which were processed by two different algorithms, are shown in Figure 9, where Figure 9a is the slave image obtained from the echo data of the slave sub-aperture. Note that the three letter targets located on different height planes were all visually well focused on a 2-D slant range image by the improved Omega-K algorithm. Figure 9b is the master image obtained from the echo data of the master sub-aperture, where the targets were also well focused on the ground plane by BPA. By comparing the two images, it can be found that the same letter target was focused at different positions in the two images, which is due to the differences in view angle and imaging geometry between the two sub-apertures. Figure 10 shows the result of image registration between the master and slave images. Note that more than three groups of feature points were selected and the letter targets in the two images were all well matched by SURF. After accurate registration, the proposed 3-D coordinate extraction model could be applied. The 3-D coordinates of nine PTs (PT1~PT9) on the letter targets were extracted by the proposed CS model and are compared with the real 3-D coordinates in Table 2. Note that the 3-D coordinates extracted by the proposed model were consistent with the real ones. Furthermore, Figure 11 shows the coordinate extraction errors of the nine PTs in the range, azimuth, and height directions. The extraction errors of the proposed CS model were all less than 5 m in the three directions, which further verifies the effectiveness and practicability of the approach.

Figure 9. Imaging results of two different sub-apertures. (a) Result of the slave image; (b) result of the master image.

Figure 10. Result after image pair registration.

Table 2. Coordinate extraction results of nine PTs.

Figure 11. Extraction errors of nine point targets.

5. Conclusions

As an imaging system, SAR has an advantage in target coordinate extraction because of its high resolution. However, a single 2-D SAR image obtained by a traditional single-channel straight-path SAR system cannot be used to extract the real 3-D coordinates of targets. To solve this problem, a novel 3-D coordinate extraction approach based on radargrammetry was proposed in this paper. Firstly, the signal model of the CLSAR imaging system was presented in vector notation, where the full curved synthetic aperture was divided into two sub-apertures according to different baselines, and a highly accurate expression of the range history in the CLSAR system was given. Secondly, according to the vector geometry model, the approach of 3-D coordinate extraction based on a novel radargrammetry model was presented, where two different imaging algorithms, i.e., the improved Omega-K algorithm and BPA, were used to project the 3-D observed scene onto different 2-D imaging planes. Then, based on the different view angles and imaging geometries of the two SAR images, a cylindrical symmetry model was established to extract the 3-D coordinates of targets. Compared with the common extraction approach, the proposed approach had higher extraction accuracy, fewer constraints on the flight trajectory, and did not need extra GCPs or hardware, which makes it well suited to practical applications. The theoretical analysis was corroborated and the applicability was evaluated via numerical experiments.

Author Contributions

Conceptualization, C.J., S.T., Y.R. and Y.L.; methodology, C.J., S.T. and G.L.; software, C.J. and S.T.; validation, C.J., S.T., G.L., J.Z. and Y.R.; writing—original draft preparation, C.J. and S.T.; writing—review and editing, J.Z. and L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 61971329, 61701393, and 61671361; the Natural Science Basis Research Plan in Shaanxi Province of China, grant number 2020ZDLGY02-08; and the National Defense Foundation of China.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ausherman, D.A.; Kozma, A.; Walker, J.L.; Jones, H.M.; Poggio, E.C. Developments in radar imaging. IEEE Trans. Aerosp. Electron. Syst. 1984, AES-20, 363–400.

- Carrara, W.G.; Goodman, R.S.; Majewski, R.M. Spotlight Synthetic Aperture Radar: Signal Processing Algorithms; Artech House: Norwood, MA, USA, 1995.

- Curlander, J.C.; McDonough, R.N. Synthetic Aperture Radar: Systems and Signal Processing; Wiley: Hoboken, NJ, USA, 1991.

- Cumming, I.G.; Wong, F.H. Digital Processing of Synthetic Aperture Radar Data: Algorithms and Implementation; Artech House: Boston, MA, USA, 2005.

- Chen, K.S. Principles of Synthetic Aperture Radar Imaging: A System Simulation Approach; CRC Press: Boca Raton, FL, USA, 2015.

- Liu, Y.; Xing, M.; Sun, G.; Lv, X.; Bao, Z.; Hong, W.; Wu, Y. Echo model analyses and imaging algorithm for high-resolution SAR on high-speed platform. IEEE Trans. Geosci. Remote Sens. 2012, 50, 933–950.

- Frey, O.; Magnard, C.; Rüegg, M.; Meier, E. Focusing of airborne synthetic aperture radar data from highly nonlinear flight tracks. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1844–1858.

- Ran, W.L.; Liu, Z.; Zhang, T.; Li, T. Autofocus for correcting three dimensional trajectory deviations in synthetic aperture radar. In Proceedings of the 2016 CIE International Conference on Radar (RADAR), Guangzhou, China, 10–13 October 2016; pp. 1–4.

- Bryant, M.L.; Gostin, L.L.; Soumekh, M. 3-D E-CSAR imaging of a T-72 tank and synthesis of its SAR reconstructions. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 211–227.

- Austin, C.D.; Ertin, E.; Moses, R.L. Sparse signal methods for 3-D radar imaging. IEEE J. Sel. Top. Signal Process. 2011, 5, 408–423.

- Bamler, R.; Hartl, P. Synthetic aperture radar interferometry—Topical review. Inverse Probl. 1998, 14, R1–R54.

- Ferretti, A.; Prati, C.; Rocca, F. Permanent scatterers in SAR interferometry. IEEE Trans. Geosci. Remote Sens. 2001, 39, 8–20.

- Rosen, P.A.; Hensley, S.; Joughin, I.R.; Li, F.K.; Madsen, S.N.; Rodriguez, E.; Goldstein, R.M. Synthetic aperture radar interferometry. Proc. IEEE 2000, 88, 333–382.

- Reigber, A.; Moreira, A. First demonstration of airborne SAR tomography using multibaseline L-band data. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2142–2152.

- Ferraiuolo, G.; Meglio, F.; Pascazio, V.; Schirinzi, G. DEM reconstruction accuracy in multi-channel SAR interferometry. IEEE Trans. Geosci. Remote Sens. 2009, 47, 191–201.

- Zhu, X.; Bamler, R. Very high resolution spaceborne SAR tomography in urban environment. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4296–4308.

- Donghao, Z.; Xiaoling, Z. Downward-Looking 3-D linear array SAR imaging based on Chirp Scaling algorithm. In Proceedings of the 2009 2nd Asian-Pacific Conference on Synthetic Aperture Radar, Xi’an, China, 26–30 October 2009; pp. 1043–1046.

- Chen, S.; Yuan, Y.; Xu, H.; Zhang, S.; Zhao, H. An efficient and accurate three-dimensional imaging algorithm for forward-looking linear-array SAR with constant acceleration based on FrFT. Signal Process. 2021, 178, 107764.

- Ren, X.; Sun, J.; Yang, R. A new three-dimensional imaging algorithm for airborne forward-looking SAR. IEEE Geosci. Remote Sens. Lett. 2010, 8, 153–157.

- Budillon, A.; Evangelista, A.; Schirinzi, G. Three-dimensional SAR focusing from multipass signals using compressive sampling. IEEE Trans. Geosci. Remote Sens. 2011, 49, 488–499.

- Lombardini, F.; Pardini, M.; Gini, F. Sector interpolation for 3D SAR imaging with baseline diversity data. In Proceedings of the 2007 International Waveform Diversity and Design Conference, Pisa, Italy, 4–8 June 2007; pp. 297–301.

- Wei, S.; Zhang, X.; Shi, J. Linear array SAR imaging via compressed sensing. Prog. Electromagn. Res. 2011, 117, 299–319.

- Zhang, S.; Kuang, G.; Zhu, Y.; Dong, G. Compressive sensing algorithm for downward-looking sparse array 3-D SAR imaging. In Proceedings of the IET International Radar Conference, Hangzhou, China, 14–16 October 2015; pp. 1–5.

- Crosetto, M.; Aragues, F.P. Radargrammetry and SAR interferometry for DEM generation: Validation and data fusion. In Proceedings of the CEOS SAR Workshop, Toulouse, France, 26–29 October 1999; p. 367.

- Leberl, F.; Domik, G.; Raggam, J.; Cimino, J.; Kobrick, M. Multiple Incidence Angle SIR-B Experiment Over Argentina: Stereo-Radargrammetric Analysis. IEEE Trans. Geosci. Remote Sens. 1986, GE-24, 482–491.

- Goel, K.; Adam, N. Three-Dimensional Positioning of Point Scatterers Based on Radargrammetry. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2355–2363.

- Yang, J.; Liao, M.; Du, D. Extraction of DEM from single SAR based on radargrammetry. In Proceedings of the 2001 International Conferences on Info-Tech and Info-Net. Proceedings (Cat. No.01EX479), Beijing, China, 29 October–1 November 2001; Volume 1, pp. 212–217.

- Toutin, T.; Gray, L. State-of-the-art of elevation extraction from satellite SAR data. ISPRS J. Photogramm. Remote Sens. 2000, 55, 13–33.

- Meric, S.; Fayard, F.; Pottier, É. A Multiwindow Approach for Radargrammetric Improvements. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3803–3810.

- Sansosti, E.; Berardino, P.; Manunta, M.; Serafino, F.; Fornaro, G. Geometrical SAR image registration. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2861–2870.

- Capaldo, P.; Crespi, M.; Fratarcangeli, F.; Nascetti, A.; Pieralice, F. High-Resolution SAR Radargrammetry: A First Application With COSMO-SkyMed SpotLight Imagery. IEEE Geosci. Remote Sens. Lett. 2011, 8, 1100–1104.

- Hanley, H.B.; Fraser, C.S. Sensor orientation for high-resolution satellite imagery: Further insights into bias-compensated RPC. In Proceedings of the XX ISPRS Congress, Istanbul, Turkey, 12–23 July 2004; pp. 24–29.

- Zhang, G.; Li, Z.; Pan, H.; Qiang, Q.; Zhai, L. Orientation of Spaceborne SAR Stereo Pairs Employing the RPC Adjustment Model. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2782–2792.

- Zhang, L.; He, X.; Balz, T.; Wei, X.; Liao, M. Rational function modeling for spaceborne SAR datasets. ISPRS J. Photogramm. Remote Sens. 2011, 66, 133–145.

- Raggam, H.; Gutjahr, K.; Perko, R.; Schardt, M. Assessment of the Stereo-Radargrammetric Mapping Potential of TerraSAR-X Multibeam Spotlight Data. IEEE Trans. Geosci. Remote Sens. 2010, 48, 971–977.

- Tang, S.; Lin, C.; Zhou, Y.; So, H.C.; Zhang, L.; Liu, Z. Processing of long integration time spaceborne SAR data with curved orbit. IEEE Trans. Geosci. Remote Sens. 2018, 56, 888–904.

- Tang, S.; Guo, P.; Zhang, L.; So, H.C. Focusing hypersonic vehicle-borne SAR data using radius/angle algorithm. IEEE Trans. Geosci. Remote Sens. 2020, 58, 281–293.

- Chen, J.; Sun, G.-C.; Wang, Y.; Guo, L.; Xing, M.; Gao, Y. An analytical resolution evaluation approach for bistatic GEOSAR based on local feature of ambiguity function. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2159–2169.

- Chen, J.; Xing, M.; Xia, X.-G.; Zhang, J.; Liang, B.; Yang, D.-G. SVD-based ambiguity function analysis for nonlinear trajectory SAR. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3072–3087.

- Guo, P.; Zhang, L.; Tang, S. Resolution calculation and analysis in high-resolution spaceborne SAR. Electron. Lett. 2015, 51, 1199–1201.

- Tang, S.; Zhang, L.; Guo, P.; Liu, G.; Sun, G. Acceleration model analyses and imaging algorithm for highly squinted airborne spotlight-mode SAR with maneuvers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1120–1131.

- Ding, H.; Zhang, J.; Huang, G.; Zhu, J. An Improved Multi-Image Matching Method in Stereo-Radargrammetry. IEEE Geosci. Remote Sens. Lett. 2017, 14, 806–810.

- Jing, G.; Wang, H.; Xing, M.; Lin, X. A Novel Two-Step Registration Method for Multi-Aspect SAR Images. In Proceedings of the 2018 China International SAR Symposium (CISS), Shanghai, China, 10–12 October 2018; pp. 1–4.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).