Detection and Classification of Floating Plastic Litter Using a Vessel-Mounted Video Camera and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

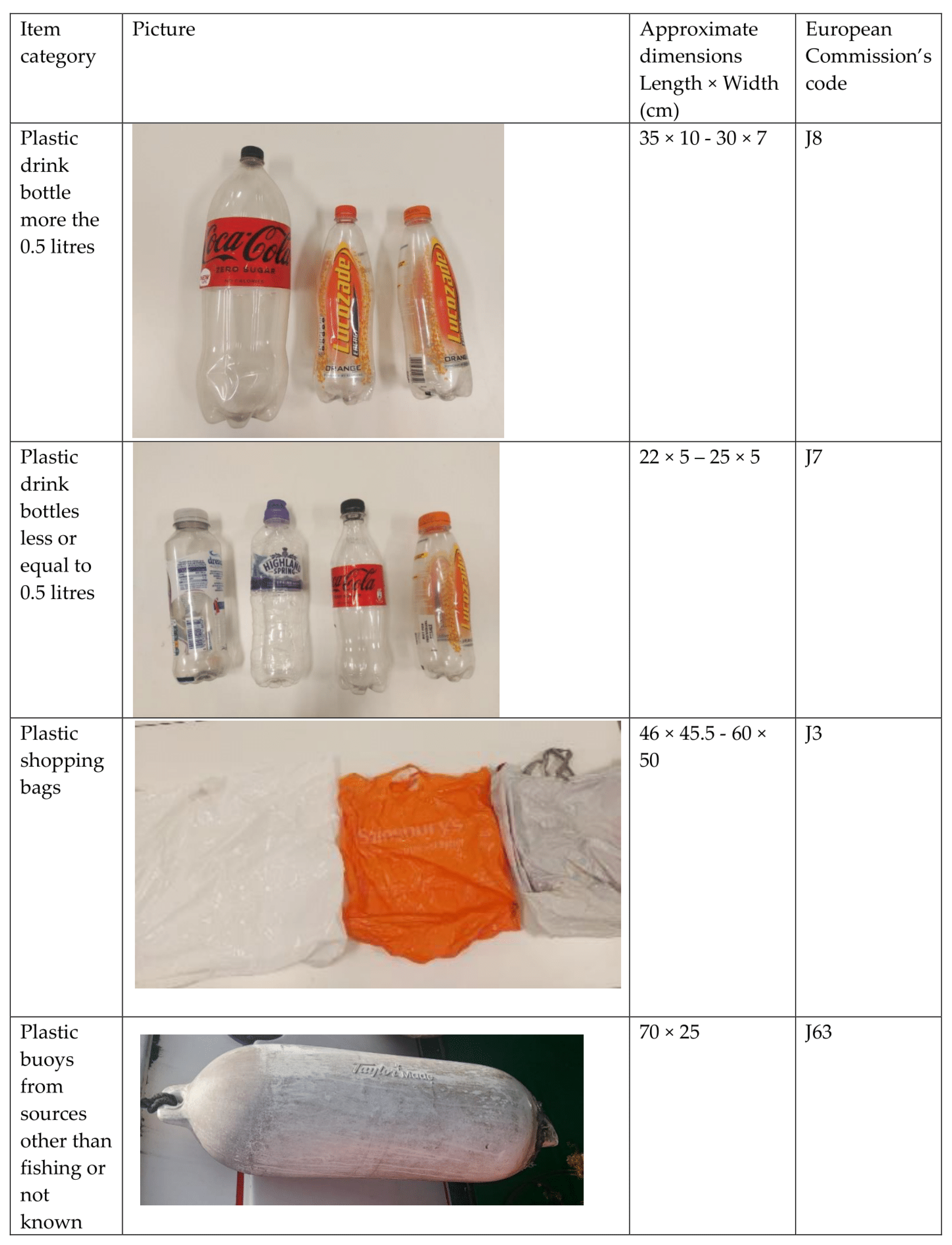

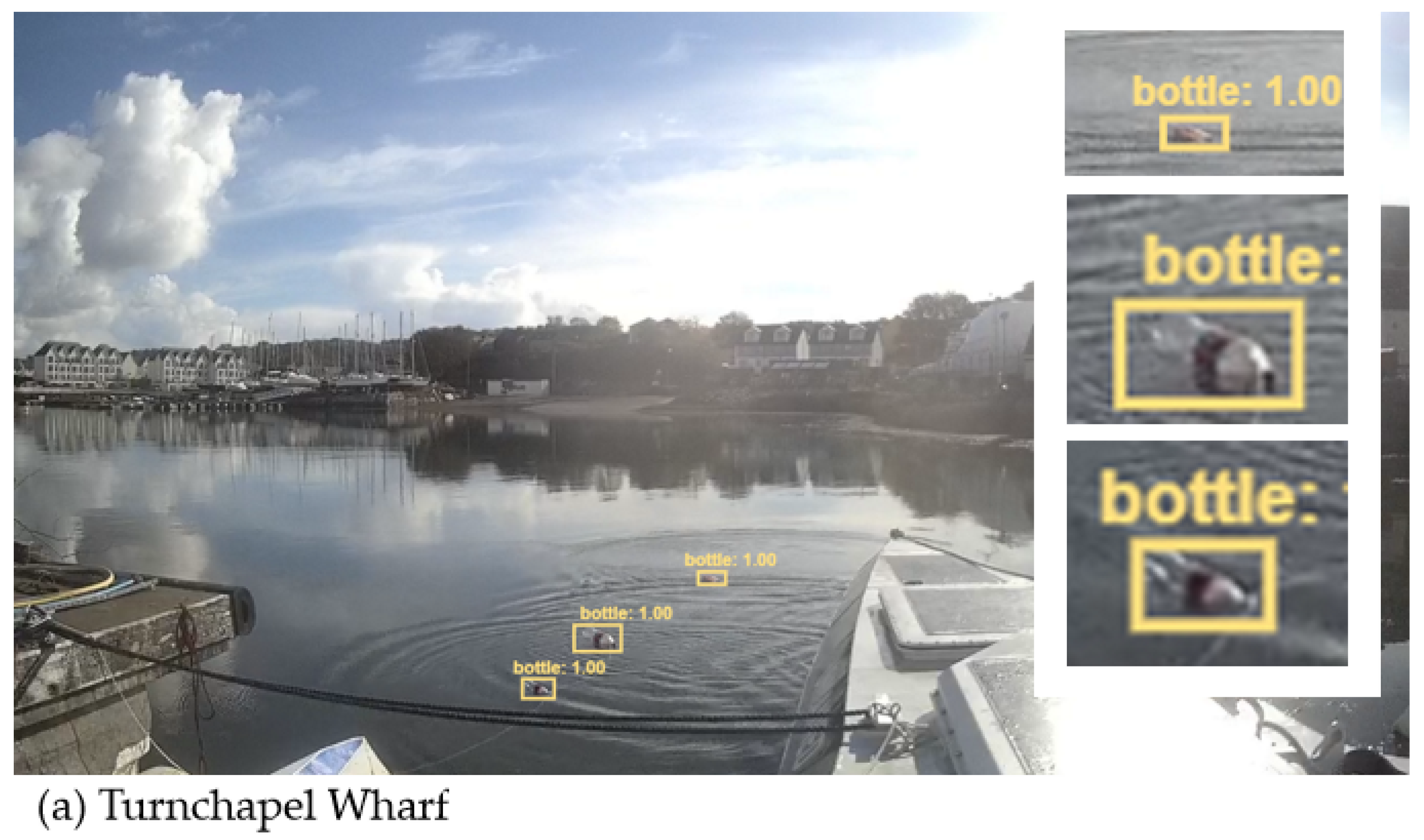

2.1. Data Collection

2.2. Data Processing

2.3. Neural Network Training

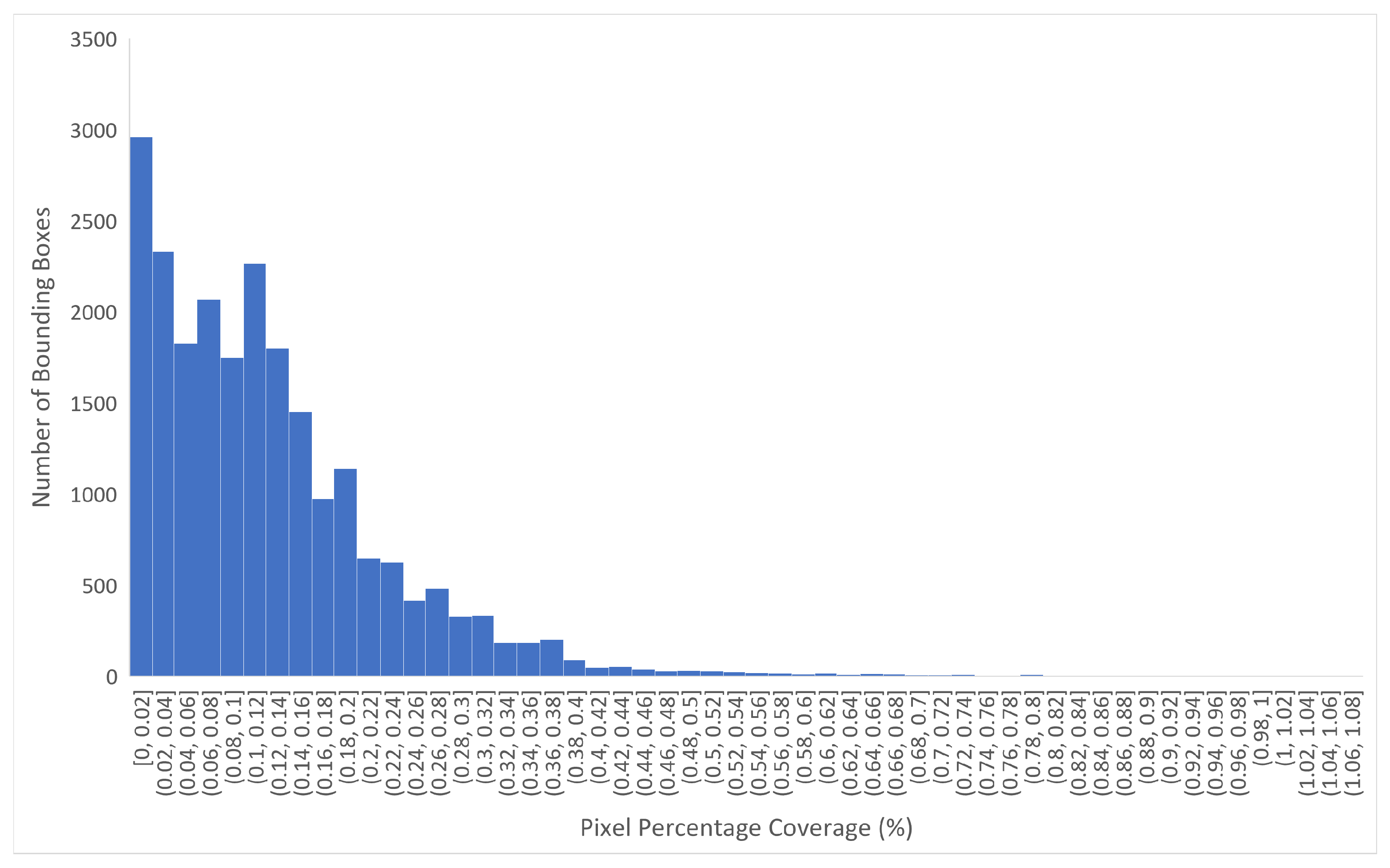

2.4. Percentage Pixel Coverage

3. Results

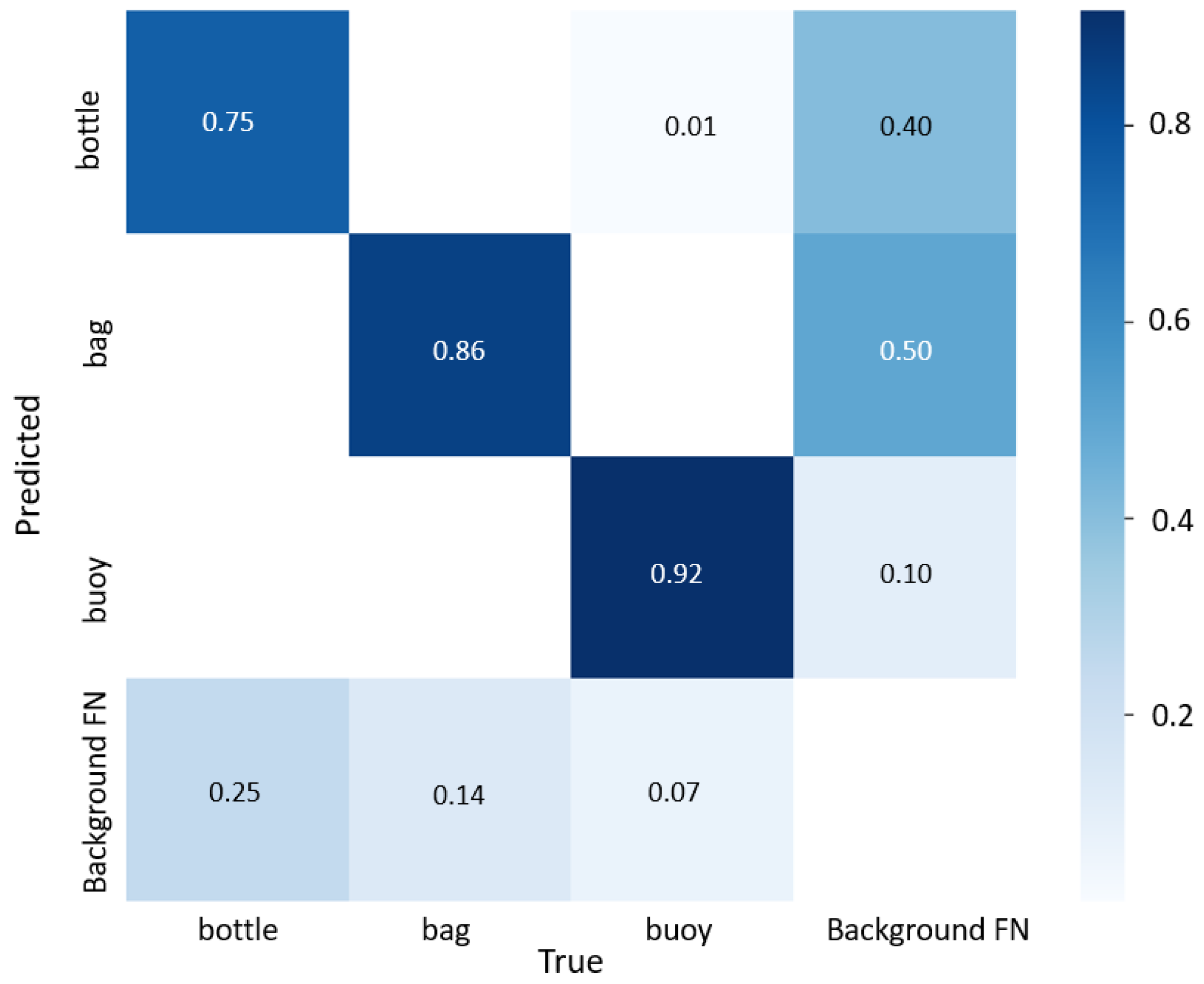

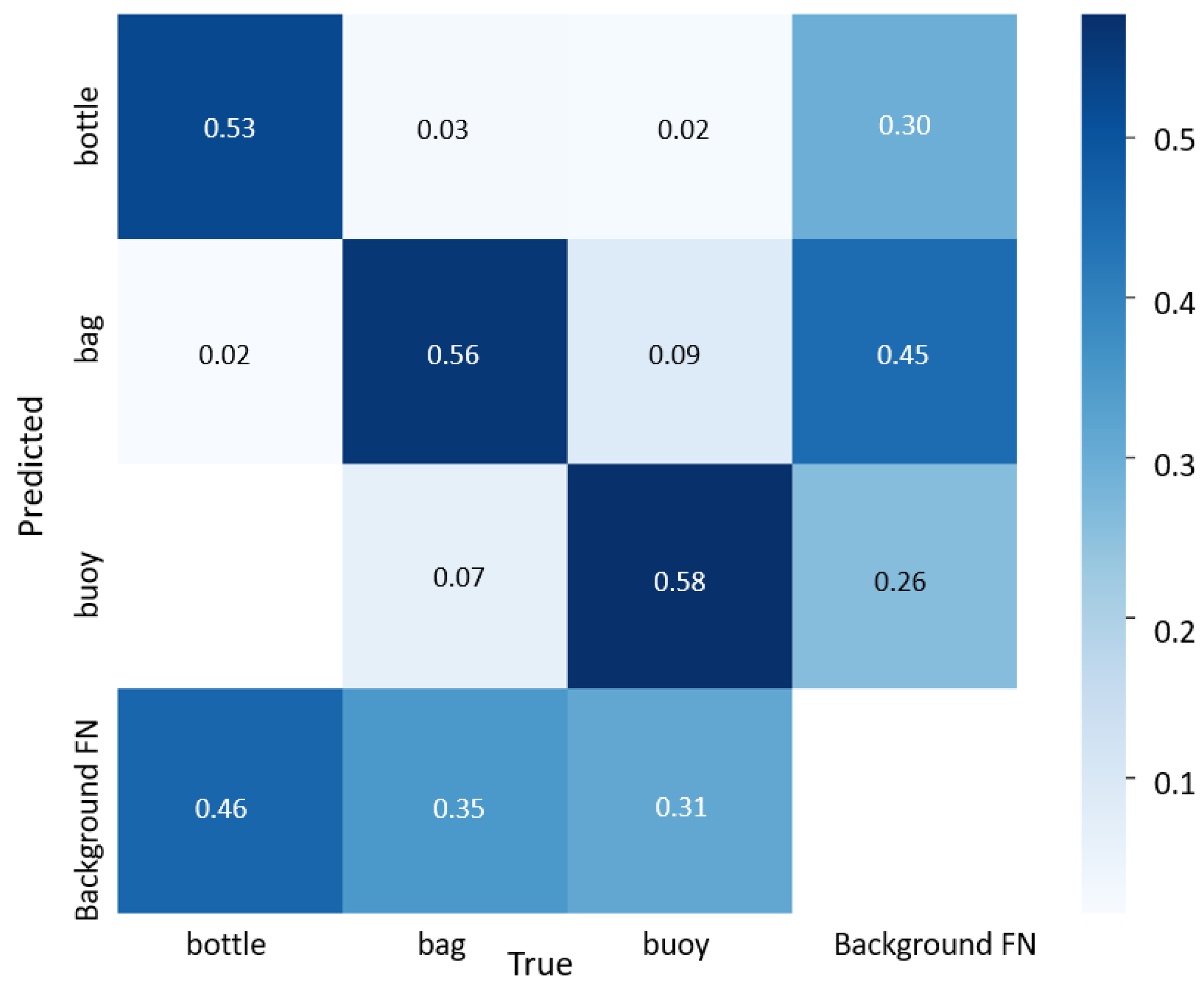

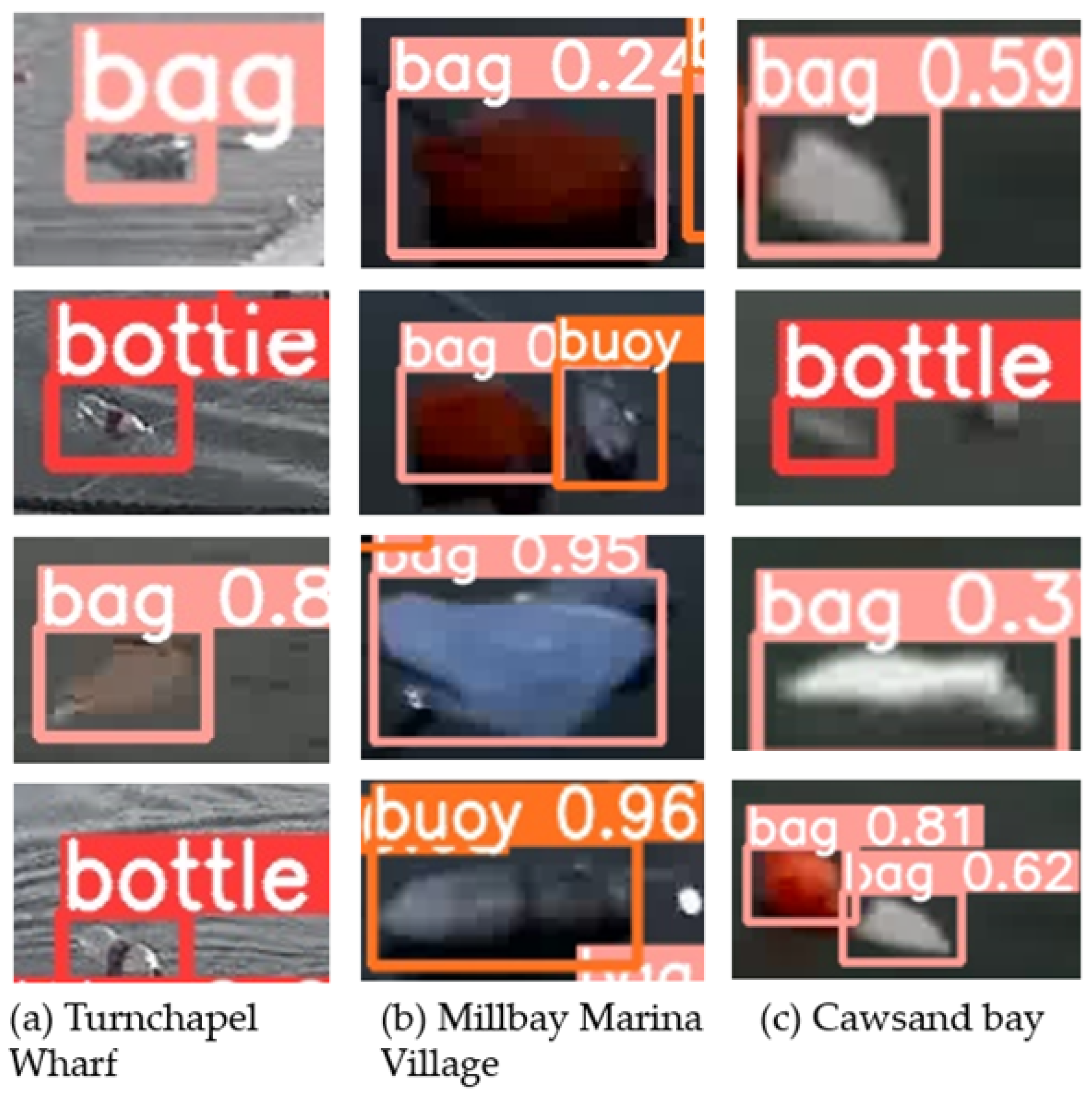

3.1. Neural Network Performance on the Validation Data Set

3.2. Neural Network Performance on the Test Data Set

Percentage Pixels of Object

4. Discussion

5. Conclusions and Recommendations

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Date (Day/Month/Year) | Min-Max Temperature (Degrees Celsius) | Average Wind Speed (mph) | Min-Max Rainfall (mm) |
|---|---|---|---|
| 20 October 2021 | 14.7–14.8 | 28.9 | 0.00 |
| 2 November 2021 | 11.8–11.9 | 2.9 | 0.00 |
| 12 November 2021 | 12.1–12.3 | 21.9 | 0.00 |
| 2 December 2021 | 5.8–6.0 | 14.2 | 0.00–0.2 |
| 25 January 2022 | 6.3–6.4 | 13.0 | 0.00 |

| Model | mAP | F1-Score | Precision | Recall |
|---|---|---|---|---|
| Model 1 (v5s, 640) | 0.833 | 0.84 | 0.981 | 0.86 |
| Model 2 (v5s, 1280) | 0.851 | 0.85 | 0.977 | 0.88 |
| Model 3 (v5m, 640) | 0.847 | 0.85 | 0.976 | 0.87 |
| Model 4 (v5s, 2592) | 0.863 | 0.86 | 0.952 | 0.90 |

| Model Detection | Bottle | Bag | Buoy | Total |
|---|---|---|---|---|
| Correct | 3063 | 6630 | 2673 | 12,366 |
| Incorrect class | 399 | 505 | 775 | 1679 |
| False negative | 674 | 1016 | 590 | 4953 |
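
The per-class counts in the detection table can be turned into proportions to compare how the three object types fared. The following is a minimal sketch, not the authors' analysis: it assumes that the three rows (correct, incorrect class, false negative) together account for all listed objects of each class, and the names `counts` and `outcome_shares` are hypothetical. The Total column is not used.

```python
# Per-class counts copied from the detection table.
# Assumption: the three outcomes partition the listed objects of each class.
counts = {
    "Bottle": {"correct": 3063, "incorrect_class": 399, "false_negative": 674},
    "Bag": {"correct": 6630, "incorrect_class": 505, "false_negative": 1016},
    "Buoy": {"correct": 2673, "incorrect_class": 775, "false_negative": 590},
}


def outcome_shares(class_counts):
    """Return each outcome as a fraction of the class's listed objects."""
    total = sum(class_counts.values())
    return {outcome: n / total for outcome, n in class_counts.items()}


shares = {name: outcome_shares(c) for name, c in counts.items()}

for name, s in shares.items():
    summary = ", ".join(f"{outcome}={value:.1%}" for outcome, value in s.items())
    print(f"{name}: {summary}")
```

Under this assumption, bags have the largest share of correct detections and buoys the largest share of class confusions.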

| Data Set | mAP | F1-Score | Precision | Recall |
|---|---|---|---|---|
| Overall Test | 0.653 | 0.64 | 0.979 | 0.75 |
| 75% Test | 0.66 | 0.65 | 0.979 | 0.73 |
| 25% Test | 0.515 | 0.52 | 0.978 | 0.66 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Armitage, S.; Awty-Carroll, K.; Clewley, D.; Martinez-Vicente, V. Detection and Classification of Floating Plastic Litter Using a Vessel-Mounted Video Camera and Deep Learning. Remote Sens. 2022, 14, 3425. https://doi.org/10.3390/rs14143425
