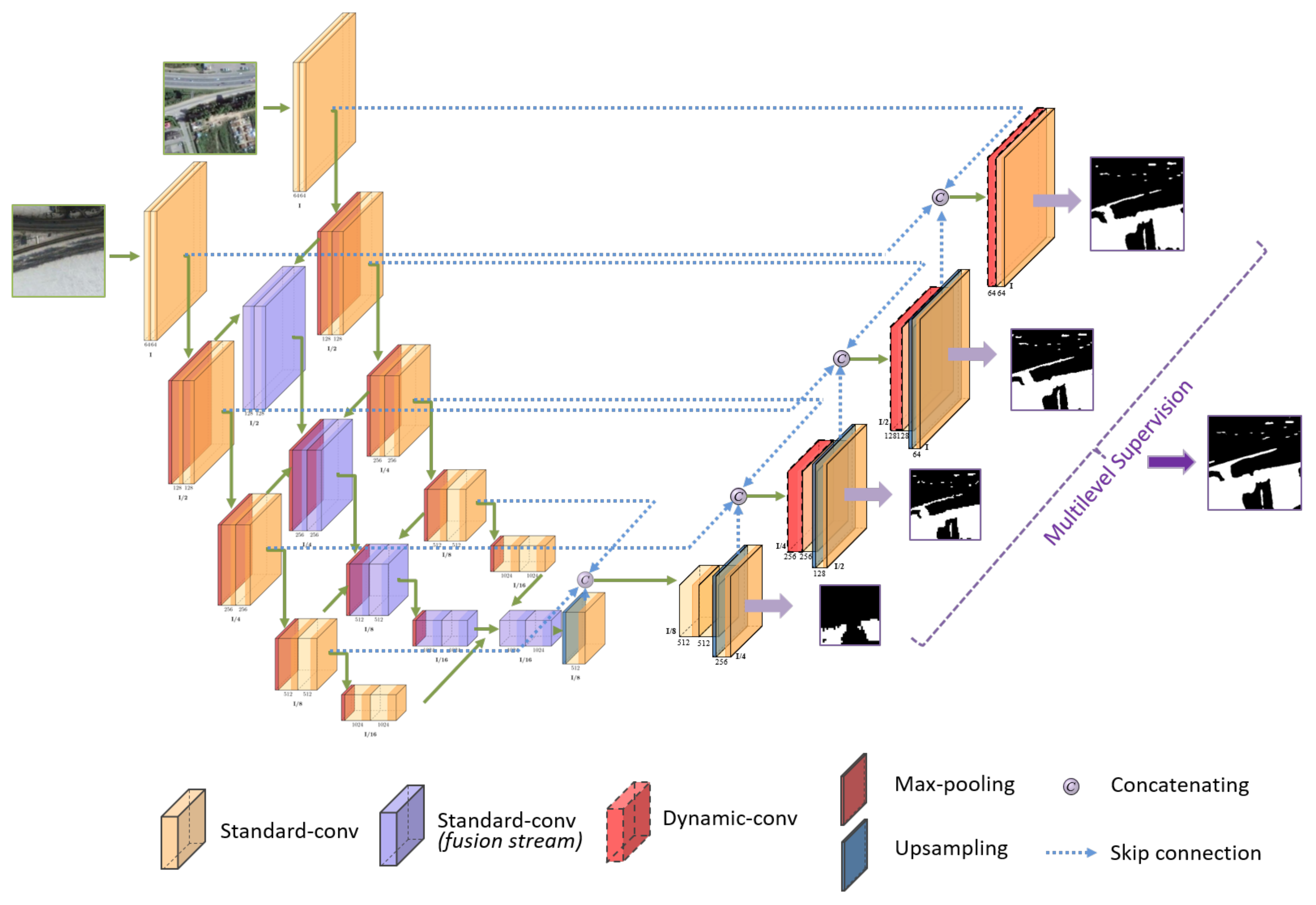

4.1. Datasets and Settings

The dataset provided by LEBEDEV [41] contains two types of images: synthetic (composite) images with or without small object offsets, and real optical satellite images with seasonal changes obtained from Google Earth. The real images comprise 11 pairs of optical images: seven pairs of seasonal-variation images of 4725 × 2700 pixels without additional objects and four pairs of 1900 × 1000 pixels with additional objects. These real images are the data we use in our experiments. The original image sets are provided as a subset of 16,000 clipped images of 256 × 256 pixels cut from the original real seasonal-variation images, split into 10,000 for training, 3000 for testing and 3000 for validation. As shown in Figure 4, the change areas in LEBEDEV are defined by changes in cars, buildings, land use, etc., whereas visual differences caused by changing seasons are not regarded as change areas. The change areas vary in scale, shape and number, which makes detection challenging.

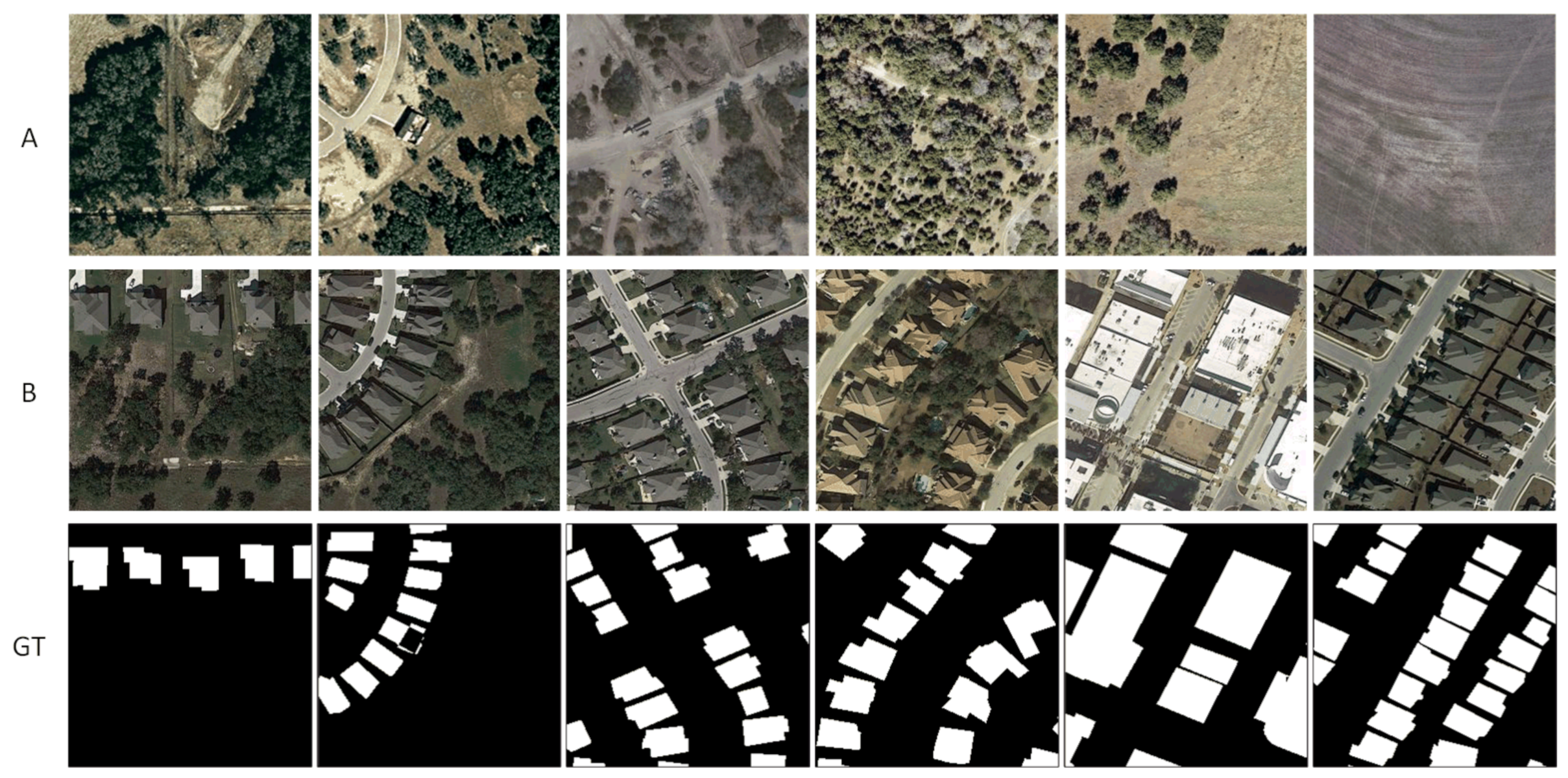

The LEVIR-CD [33] dataset, provided by researchers from the LEarning, VIsion and Remote sensing laboratory (LEVIR) of the Image Processing Center, Beijing University of Aeronautics and Astronautics, is a collection of 637 high-resolution (0.5 m/pixel) Google Earth image pairs of 1024 × 1024 pixels. These bi-temporal images come from 20 different regions in cities in Texas and were collected between 2002 and 2018. The change areas are annotated mainly according to two kinds of building change: building growth (from soil, grass, hardened ground or buildings under construction to new built-up regions) and building decay. All data were annotated by experts from artificial-intelligence data-service companies who have extensive experience in interpreting remote sensing images and in change-detection tasks. In total, the fully annotated LEVIR-CD contains 31,333 individual changed buildings. In our experiments, for convenience of training and to follow other state-of-the-art methods, the original images are cropped into non-overlapping clips of 256 × 256 pixels, as shown in Figure 5. This yields 7120 clipped image pairs for training and 2048 pairs for validation and testing. The main challenge of this dataset is the uneven distribution of positive and negative samples: a considerable number of the clipped 256 × 256 images contain no change area at all (that is, every pixel in such a sample is negative). In addition, the change areas mainly concern architectural changes.
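Cropping each 1024 × 1024 image into non-overlapping 256 × 256 clips yields 4 × 4 = 16 clips per image, which is consistent with 7120 = 16 × 445 training clips. The pure-Python sketch below illustrates this cropping and how all-negative clips (the source of the positive/negative imbalance described above) can be flagged. The function names are hypothetical, the label map is a toy example, and this is not the authors' preprocessing code.

```python
from typing import List

Grid = List[List[int]]


def crop_non_overlapping(image: Grid, tile: int = 256) -> List[Grid]:
    """Split an H x W grid into non-overlapping tile x tile patches (row-major order).

    Assumes H and W are multiples of `tile`, as with 1024 x 1024 LEVIR-CD images.
    """
    h, w = len(image), len(image[0])
    if h % tile or w % tile:
        raise ValueError("image size must be a multiple of the tile size")
    patches = []
    for top in range(0, h, tile):
        for left in range(0, w, tile):
            patches.append([row[left:left + tile] for row in image[top:top + tile]])
    return patches


def is_all_negative(label_patch: Grid) -> bool:
    """True if no pixel in the binary label patch is marked as change (1)."""
    return not any(any(row) for row in label_patch)


# Toy label map: 1024 x 1024 with a single changed 10 x 10 block near the top-left corner.
label = [[0] * 1024 for _ in range(1024)]
for r in range(100, 110):
    for c in range(100, 110):
        label[r][c] = 1

patches = crop_non_overlapping(label)
negatives = sum(is_all_negative(p) for p in patches)
print(len(patches), negatives)  # 16 15
```

In practice the same crop offsets have to be applied to both temporal images and to the label map so that the three stay aligned.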

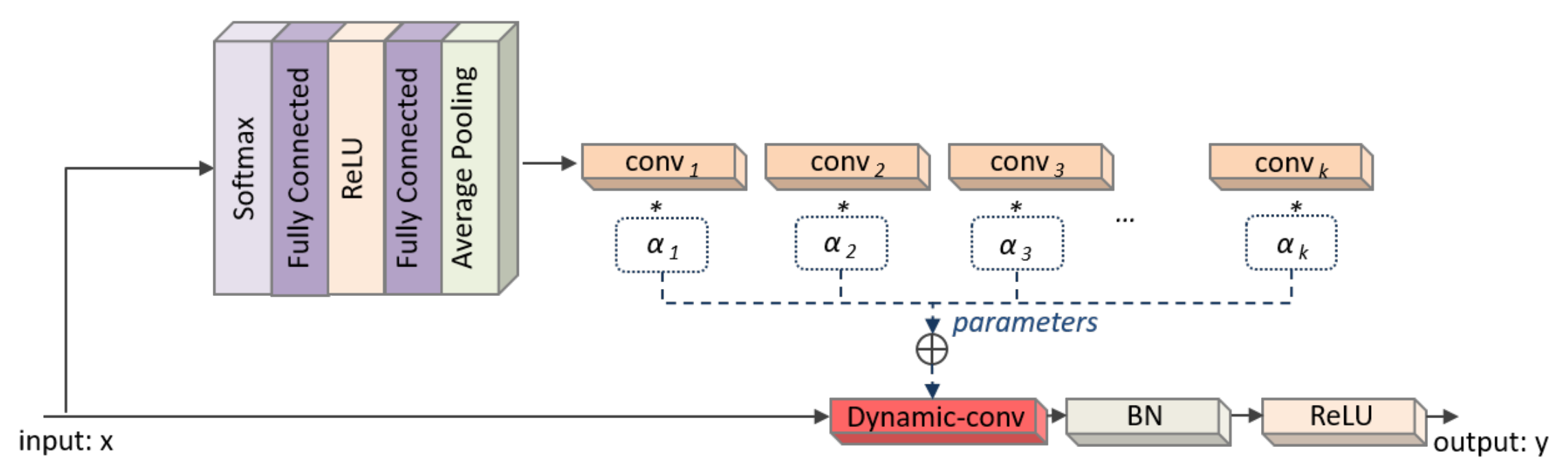

The experiments are implemented with PyTorch (version 1.0.1) on a workstation with a 10-core Intel Xeon E5-2640 v4 CPU @ 2.40 GHz and an NVIDIA GTX 1080 Ti GPU. The batch size is 4 pairs and the learning rate is . The number of parallel convolution kernels in the dynamic convolution modules is set to . The weight w for each is 1. The parameters in the focal loss are set as and . During training, we use a random data augmentation strategy: the data loader automatically transforms each image batch according to a randomly generated augmentation probability, with transformations including random rotation, cropping and flipping, as well as changes in brightness, contrast and saturation.
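The focal loss and the per-output weight w = 1 can be illustrated with a short sketch. It uses the standard binary focal loss, FL(p_t) = −α_t (1 − p_t)^γ log(p_t), averaged over pixels, and assumes that w weights each supervised output level in a simple sum. The α and γ values used in the paper are not recoverable from this text, so the defaults below (α = 0.25, γ = 2, the values commonly used for focal loss) are placeholders, and the function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence


def binary_focal_loss(probs: Sequence[float], labels: Sequence[int],
                      alpha: float = 0.25, gamma: float = 2.0,
                      eps: float = 1e-7) -> float:
    """Mean binary focal loss over pixels.

    probs: predicted change probabilities; labels: 0/1 ground truth.
    The alpha/gamma defaults are placeholders, NOT the paper's settings.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)             # clamp to avoid log(0)
        p_t = p if y == 1 else 1.0 - p              # probability assigned to the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing factor
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


def multilevel_loss(level_probs: List[Sequence[float]], labels: Sequence[int],
                    weights: Optional[List[float]] = None, **focal_kwargs) -> float:
    """Weighted sum of focal losses over several supervised outputs (w = 1 each by default)."""
    weights = weights or [1.0] * len(level_probs)
    return sum(w * binary_focal_loss(p, labels, **focal_kwargs)
               for w, p in zip(weights, level_probs))
```

With γ = 0 and α = 0.5 the loss reduces to half the binary cross-entropy; increasing γ shrinks the contribution of well-classified pixels, which is why focal loss is a natural choice when unchanged pixels dominate.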

4.3. Result Comparison

To demonstrate the effectiveness of the proposed HDFNet, we compare it with other deep-learning-based change-detection methods: (1) the deep Siamese convolutional network (DSCN) [24] is a Siamese network without pooling layers that maintains the receptive fields and feature dimensions; (2) FC-EF, FC-Siam-conc and FC-Siam-diff [3] are FCN-based networks with early-fusion and late-fusion strategies and skip connections, and are widely used as classic image-to-image baselines; (3) the fully convolutional network with pyramid pooling (FCN-PP) [31] and the deep Siamese multiscale fully convolutional network (DSMS-FCN) [5] add multiscale designs to the previous baselines through pyramid pooling and multiscale convolutional kernel units, respectively; (4) change detection based on UNet++ with multiple side-outputs fusion (UNet++MSOF) applies multiple side-output loss supervision to features densely upsampled from multiple scales in UNet++; (5) IFN [1] and the boundary-aware attentive network (Net) [36] introduce attention mechanisms into the decoding process, together with deep supervision and refined detection, to handle features at different scales, based on late fusion and early fusion, respectively; (6) the spatial–temporal attention-based network (STANet) [33] is based on late fusion and introduces attention modules built on pyramid pooling to adapt to multiscale features. For quantitative comparison, the evaluation metrics on LEBEDEV and LEVIR-CD are summarized in Table 1 and Table 2, respectively. The best scores are highlighted in red, and the second- and third-best scores in green and blue, respectively.
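The fusion strategies that distinguish the FC-EF, FC-Siam-conc and FC-Siam-diff baselines can be illustrated on a single pixel's channel vector. In the sketch below, `toy_encoder` is a hypothetical placeholder for a learned convolutional encoder; the real networks fuse full feature maps at several scales and pass them through skip connections, so this only shows where the two images are combined and what shape the fused representation has.

```python
from typing import Callable, List

Vec = List[float]


def toy_encoder(x: Vec) -> Vec:
    """Hypothetical stand-in for a (shared) convolutional encoder acting per pixel."""
    return [2.0 * v + 1.0 for v in x]


def early_fusion(x1: Vec, x2: Vec, enc: Callable[[Vec], Vec] = toy_encoder) -> Vec:
    # FC-EF style: stack both images channel-wise, then encode the stack once.
    return enc(x1 + x2)  # list concatenation plays the role of channel concatenation


def siamese_concat(x1: Vec, x2: Vec, enc: Callable[[Vec], Vec] = toy_encoder) -> Vec:
    # FC-Siam-conc style: encode each image with shared weights, concatenate the features.
    return enc(x1) + enc(x2)


def siamese_diff(x1: Vec, x2: Vec, enc: Callable[[Vec], Vec] = toy_encoder) -> Vec:
    # FC-Siam-diff style: encode each image with shared weights, take |f(x1) - f(x2)|.
    return [abs(a - b) for a, b in zip(enc(x1), enc(x2))]


# One pixel with 3 channels at two dates; only the second channel changes.
x1 = [0.2, 0.4, 0.6]
x2 = [0.2, 0.5, 0.6]
print(len(early_fusion(x1, x2)), len(siamese_concat(x1, x2)), siamese_diff(x1, x2))
```

The late-fusion variants keep a separate encoding of each date, which is the property the comparison below credits for their higher precision and recall; the difference variant additionally makes unchanged channels map to zero.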

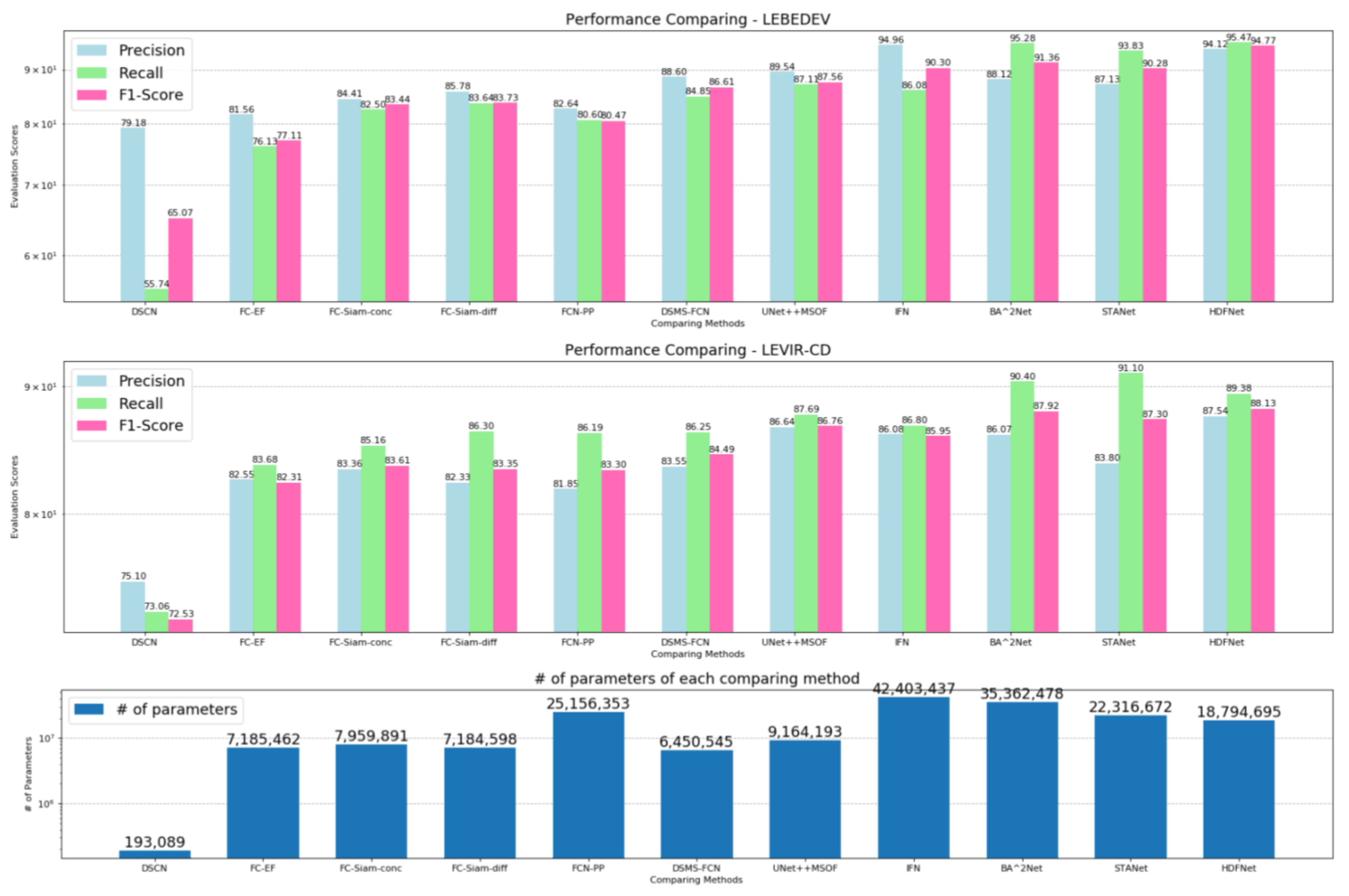

From Table 1, it can be observed that on the LEBEDEV dataset, the proposed HDFNet ranks first in recall and F1-score and second in precision (less than 0.9% behind the best). Although IFN achieves the highest precision, its recall is limited (86.08%, more than 9% lower than that of the proposed network). Figure 6 also shows that the change maps obtained by IFN miss some change areas. DSCN, which has no design for multiscale features, scores relatively low on all evaluation metrics. This is due to its simple structure, which ignores the multiscale information within the bi-temporal images; the high-dimensional features maintained throughout processing lead to a noticeably lower recall. By using encoding-decoding with skip connections, the FC-EF, FC-Siam-conc and FC-Siam-diff baselines achieve much better performance with their concise and effective structures. Among these three baselines, the late-fusion baselines show clear advantages over the early-fusion baseline, since keeping the encoding features of each original image for decoding helps to achieve higher precision and recall. By using pyramid pooling to further exploit multiscale information, FCN-PP improves the F1-score by more than 3% over its counterpart baseline, FC-EF. Similarly, the multiscale convolution kernels and pooling in DSMS-FCN improve the F1-score by about 3% over its counterpart baseline, FC-Siam-diff. This indicates that multiscale operations during encoding can effectively improve performance.
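The precision, recall and F1-score reported in Tables 1 and 2 can be computed from binary change maps with their usual pixel-wise definitions, as in the sketch below. It assumes the counts are accumulated over all evaluated pixels, which this section does not specify, and the toy maps are made up for illustration.

```python
from typing import Dict, Sequence


def change_detection_scores(pred: Sequence[int], gt: Sequence[int]) -> Dict[str, float]:
    """Pixel-wise precision, recall and F1 for binary change maps (1 = change)."""
    tp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 1)  # changed, detected
    fp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 0)  # false alarms
    fn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 1)  # missed changes
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Toy example: 4 changed pixels in the GT; the prediction finds 3 of them plus 1 false alarm.
gt = [1, 1, 1, 1, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0]
print(change_detection_scores(pred, gt))  # {'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```

A conservative prediction such as `[1, 0, 0, 0, 0, 0, 0, 0]` reaches perfect precision but only 0.25 recall, and its F1 drops to 0.4, which mirrors how a method with high precision and limited recall can still trail in F1.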

By using dense nodes and skip connections at each encoding scale of UNet++, UNet++MSOF achieves the third-best precision. However, the network pays almost equal attention to each scale, which limits the improvement in its detection performance. Based on a late-fusion strategy, IFN introduces spatial and channel attention mechanisms at each decoding stage and supervises each of them, achieving the highest precision and the third-best F1-score, but limited recall. Based on an early-fusion strategy, Net uses attention gates and a coarse-to-fine strategy to exploit context and local information. However, its attention gates are guided by higher-level features, so its precision remains noticeably lower than its recall. STANet, a late-fusion network with a pyramid spatial–temporal attention module, achieves the third-best recall. This suggests that for data as complex as the LEBEDEV dataset, the encoding-decoding pattern and multiscale self-attentive learning may each require further design. The proposed HDFNet achieves the highest recall and F1-score and the second-highest precision, because the network retains the advantages of both early fusion and late fusion during encoding and applies self-adaptive learning through dynamic convolution modules during decoding. Multilevel supervision also helps to improve performance. It is worth noting that HDFNet achieves a good trade-off between precision and recall.
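The dynamic convolution modules credited above with self-adaptive learning are not detailed in this section. A common formulation of dynamic convolution keeps K parallel kernels and, for each input, mixes them with softmax attention weights computed from globally pooled context before applying a single convolution. The sketch below is a single-channel 1-D illustration of that general idea, under the assumption that HDFNet's modules follow it; `attn_weights` and `attn_bias` stand in for a hypothetical one-layer attention branch, and all names are illustrative.

```python
import math
from typing import List


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def conv1d_same(x: List[float], kernel: List[float]) -> List[float]:
    """1-D cross-correlation with zero padding (odd kernel length, output length = input length)."""
    r = len(kernel) // 2
    padded = [0.0] * r + x + [0.0] * r
    return [sum(k * padded[i + j] for j, k in enumerate(kernel)) for i in range(len(x))]


def dynamic_conv1d(x: List[float], kernels: List[List[float]],
                   attn_weights: List[float], attn_bias: List[float]) -> List[float]:
    """Aggregate K parallel kernels with input-dependent attention, then convolve once."""
    context = sum(x) / len(x)                                        # global average pooling
    logits = [w * context + b for w, b in zip(attn_weights, attn_bias)]
    pi = softmax(logits)                                             # one weight per kernel, sums to 1
    size = len(kernels[0])
    agg = [sum(p * k[i] for p, k in zip(pi, kernels)) for i in range(size)]
    return conv1d_same(x, agg)


# Two parallel kernels (identity and zero) mixed with equal attention: output = 0.5 * x.
print(dynamic_conv1d([1.0, 2.0, 3.0], [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0]], [0.0, 0.0], [0.0, 0.0]))
```

Because the mixing weights depend on the input, the effective kernel changes from sample to sample while only one convolution is executed, which is what lets such a module adapt to change areas of different scales.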

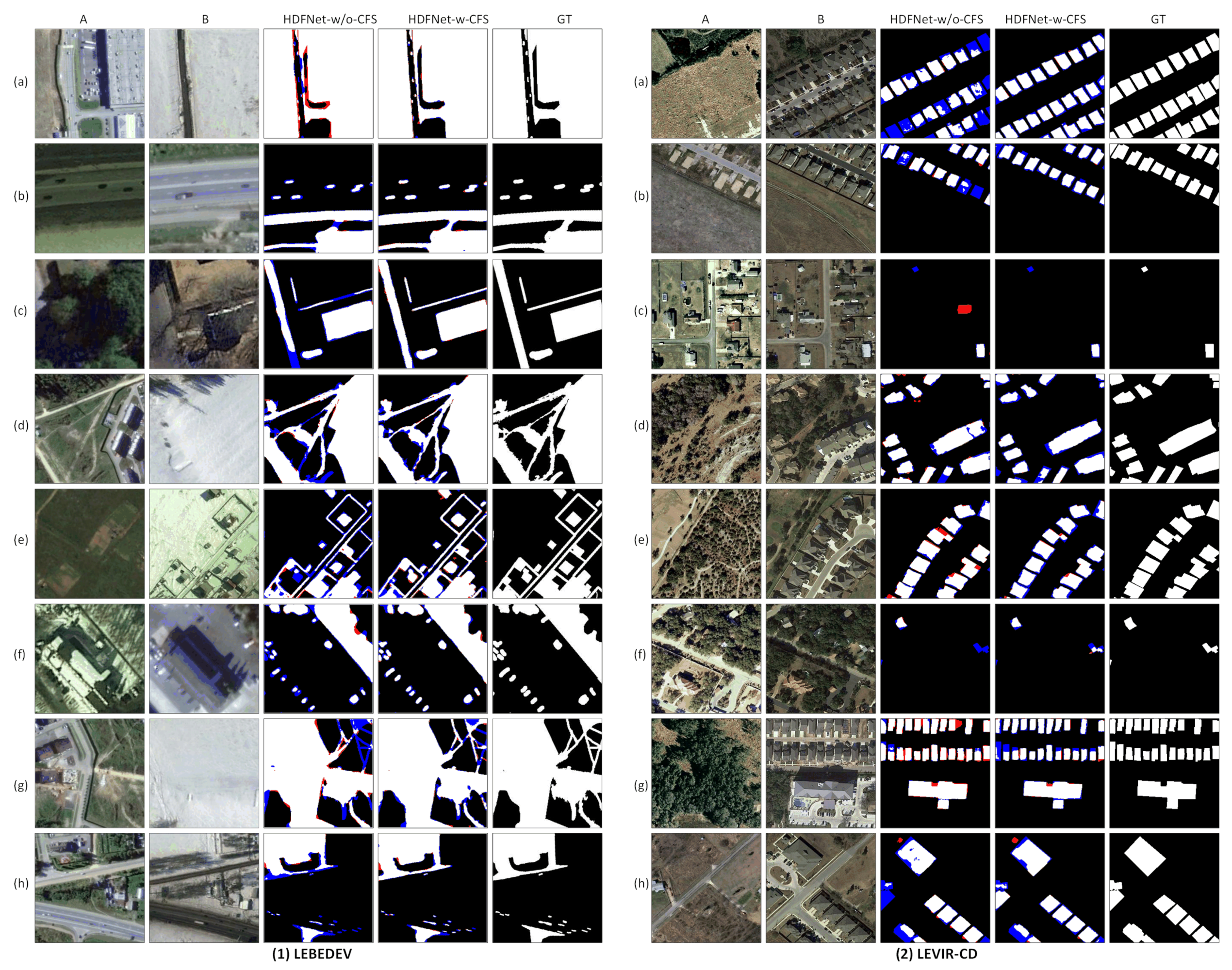

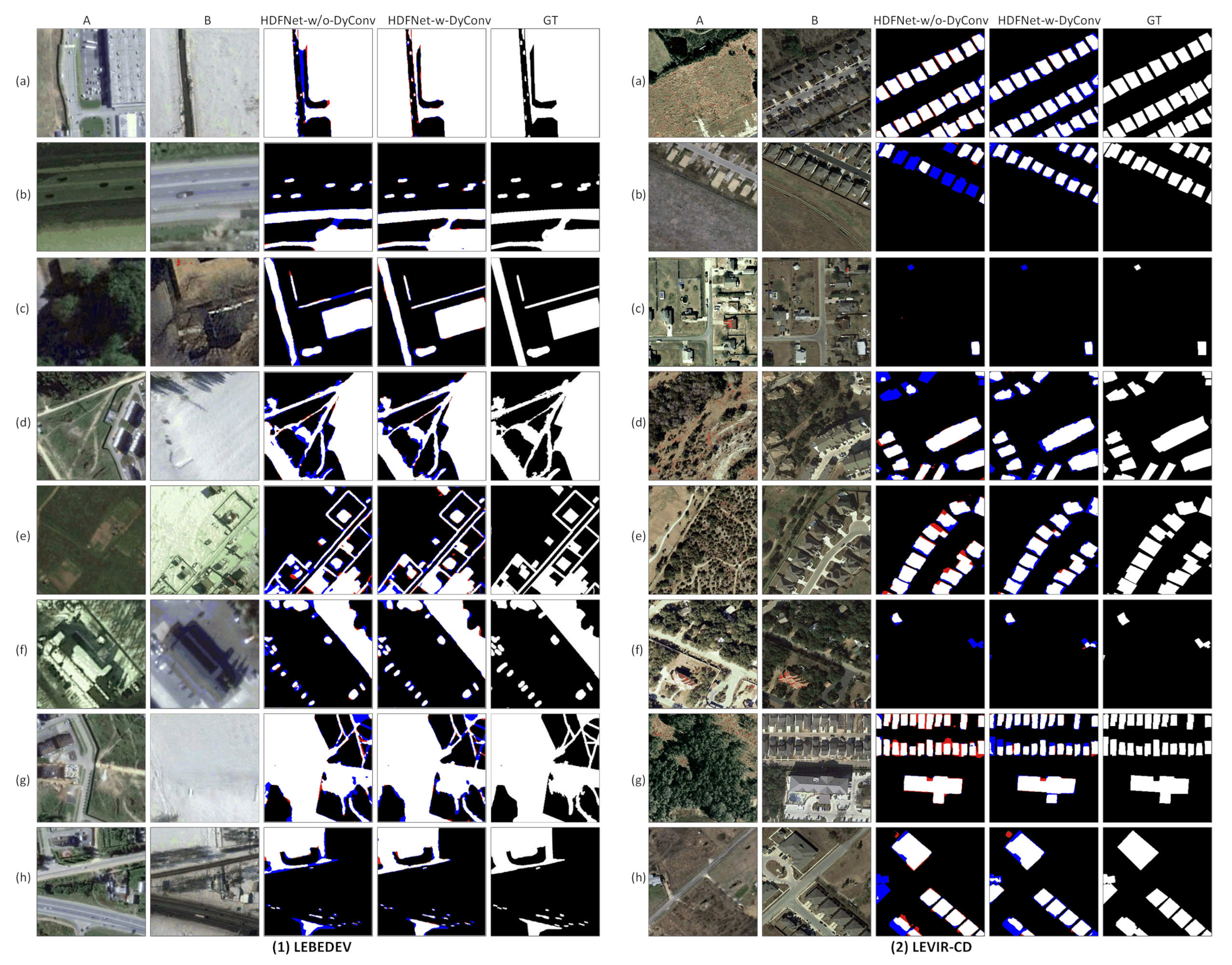

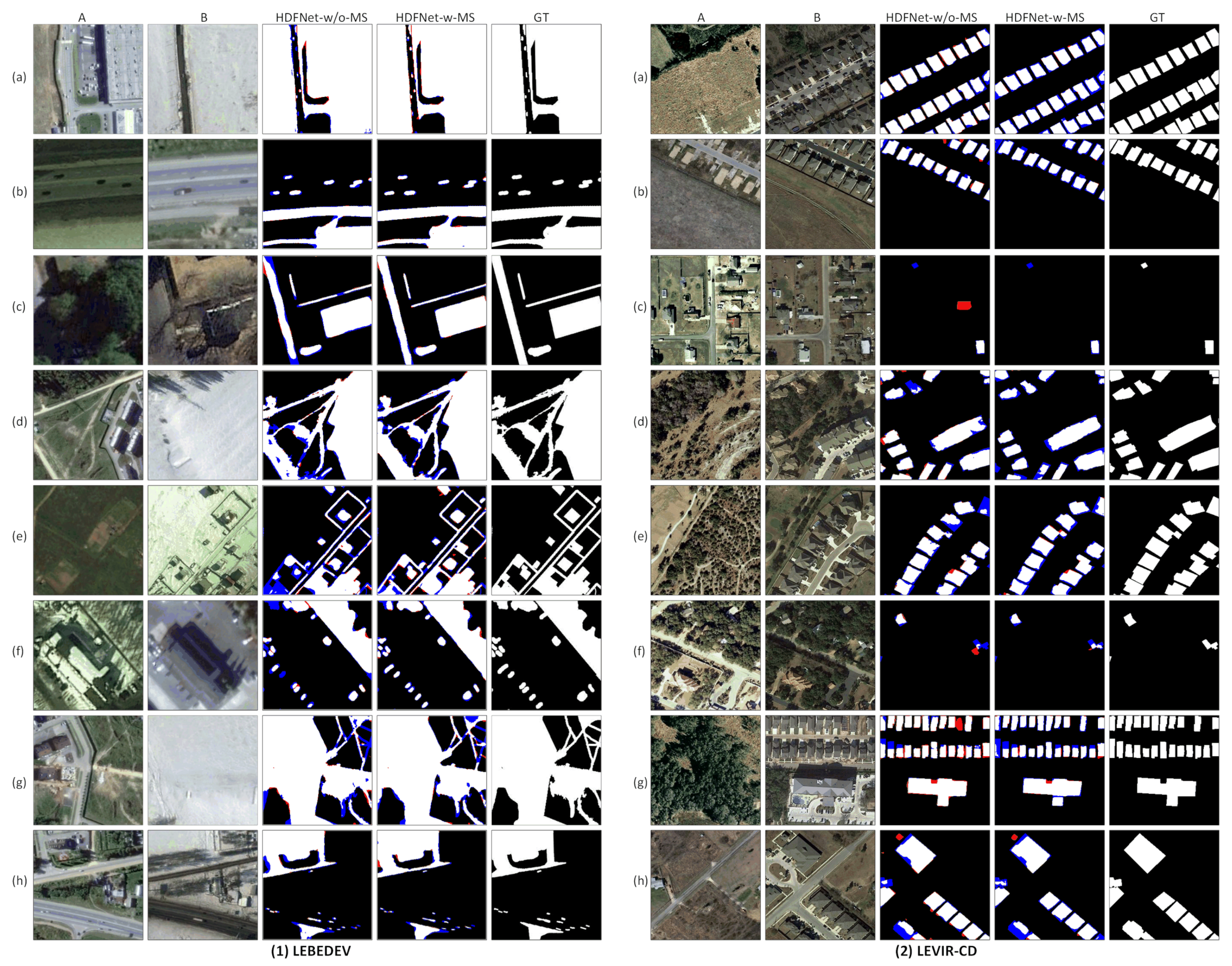

The qualitative analysis is shown in Figure 6 with five challenging sets of bi-temporal images, each containing multiple disjoint change areas over a wide range of scales. DSCN produces obvious false detections in almost all of these challenging samples. Among the three FCN-based baselines, FC-Siam-conc and FC-Siam-diff are more powerful than FC-EF. FC-EF can locate most change areas except for some very small ones (Figure 6b,d,e). However, it does not retain the features of each original image, especially the shallow-layer features, which makes the detected change areas noticeably inaccurate. The other two late-fusion baselines generally perform better than FC-EF, as reflected in the more complete and accurate shapes of the detected change areas, although their detection rate, integrity and precision can still be improved. By introducing multiscale processing modules before the decoding phase, FCN-PP and DSMS-FCN clearly improve the quantitative scores over their baselines, but the improvement is less evident in the qualitative results, and obvious detection errors remain in samples c, d and e.

Benefiting from the dense design over multiscale stages, the change maps obtained by UNet++MSOF are more accurate than those of the previous methods. However, owing to its early-fusion strategy, it is inaccurate at the boundaries and details of change areas. IFN and STANet, both based on late fusion, are relatively more complete in local details thanks to their spatial and channel attention mechanisms. However, they produce false positives and false negatives in some small areas, and their boundaries appear smoother than those of the GT maps where the boundaries are rich in detail. By introducing attention gates and refined detection, Net obtains change maps visually closer to the GT maps, especially at boundaries with complex information. Paying more attention to higher-resolution features improves its detection rate, but neglecting the lower-resolution features of each original image leads to over-extended change areas, which limits its precision. The proposed HDFNet correctly locates most of the change areas and accurately detects their shapes and other details. Although there are a few errors in very small change areas and at very complex boundaries, it produces accurate change maps overall.

It can be observed from Table 2 that on the LEVIR-CD dataset, the F1-scores of most comparison methods differ less than on LEBEDEV, except that DSCN is clearly lower than the other methods. The performance of the three FCN baselines differs little, with F1-scores of about 83%, among which FC-Siam-conc is slightly higher. FCN-PP and DSMS-FCN improve the F1-score by around 1% over their baselines through multiscale pooling and convolution operations. In other words, the gain from these multiscale designs based on fixed kernel sizes is less significant on LEVIR-CD than on LEBEDEV. This may be because the range of scales in LEVIR-CD is narrower than in LEBEDEV; instead, the challenge of LEVIR-CD lies mainly in the uneven number and distribution of the change areas.
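Pyramid pooling of the kind used by FCN-PP averages features over a fixed set of bin sizes, so the scales it summarizes are chosen in advance; this is the fixed-scale limitation discussed above. The 1-D sketch below is illustrative only: the bin sizes (1, 2, 4) are an arbitrary choice rather than FCN-PP's configuration, and the function names are hypothetical.

```python
from typing import List, Sequence


def adaptive_avg_pool1d(x: List[float], bins: int) -> List[float]:
    """Average x over `bins` equal contiguous segments (assumes len(x) is divisible by bins)."""
    step = len(x) // bins
    return [sum(x[i * step:(i + 1) * step]) / step for i in range(bins)]


def nearest_upsample(x: List[float], length: int) -> List[float]:
    """Repeat each value so the output has `length` entries (assumes exact divisibility)."""
    step = length // len(x)
    return [v for v in x for _ in range(step)]


def pyramid_pooling(x: List[float], bin_sizes: Sequence[int] = (1, 2, 4)) -> List[List[float]]:
    """Return the input plus one upsampled pooled map per pyramid level (to be channel-stacked)."""
    levels = [x]
    for b in bin_sizes:
        levels.append(nearest_upsample(adaptive_avg_pool1d(x, b), len(x)))
    return levels


for level in pyramid_pooling([1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0]):
    print(level)
```

Each level sees context at exactly one predetermined granularity; objects whose extent falls between the chosen bins are only captured indirectly, which is consistent with the smaller gains of such fixed designs when the scale range differs from what they were tuned for.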

UNet++MSOF maintains its robust precision on LEVIR-CD, reaching the second-best precision while clearly improving the F1-score to 86.76%. This is attributable to its adequate computation at each encoding scale and its supervision on multiple outputs. IFN reaches the third-best precision, while its F1-score is slightly lower than that of UNet++MSOF, mainly because of its lower recall. Net reaches the second-best recall and F1-score, benefiting from the attentive guidance of its deeper features during network updating. By introducing attention mechanisms over multiple scales, STANet achieves the best recall and the third-best F1-score, but with limited precision. The proposed HDFNet improves the F1-score to 88.13%, the best among all methods. At the same time, its precision reaches 87.54%, the highest among the compared methods. HDFNet also maintains a good trade-off between precision and recall among the top three networks in F1-score.
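The emphasis on balancing precision P and recall R can be made precise with a standard rewriting of the F1-score (a general identity, not a result from this paper):

```latex
F_1 = \frac{2PR}{P + R}
    = \frac{P + R}{2}\left[1 - \left(\frac{P - R}{P + R}\right)^{2}\right]
```

For a fixed P + R, F1 is therefore largest when P = R and shrinks as the gap between them grows. For example, with hypothetical values P = 0.9 and R = 0.7, F1 = 0.8 × (1 − 0.125²) = 0.7875, whereas P = R = 0.8 gives F1 = 0.8.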

Figure 7 also illustrates the change maps for five selected sets of bi-temporal images. The change areas in these image pairs vary in number, scale, shape and distribution pattern. For the multiple regularly shaped building changes in sample (a), most of the compared methods fail to correctly detect the building changes inside the highlighted boxes; STANet locates the change areas but detects them incompletely, while the proposed HDFNet is more accurate than the other methods. For sample (b), whose change areas have detailed shapes, the late-fusion methods FC-Siam-conc, FC-Siam-diff, IFN and STANet and the proposed hierarchical-fusion HDFNet are visually closer to the GT maps. For the more densely distributed change areas in samples (c) and (d), Net, STANet and HDFNet remain visually correct, with HDFNet making fewer errors. For sample (e), which combines both kinds of change areas, HDFNet shows better adaptability: it accurately detects and distinguishes multiple dense change areas and also accurately detects change regions with complex shapes.

As shown in Figure 8, we summarize the performance of all compared methods on both datasets, together with their numbers of parameters, in three sets of histograms. Each chart shows the evaluation scores and the number of parameters per method, with one column for each method. Among the methods with higher evaluation scores, the proposed method has relatively few parameters, and compared with simpler methods that have fewer parameters, it improves performance substantially. At the same time, HDFNet keeps the highest F1-score and a good trade-off between precision and recall.