First Successful Rescue of a Lost Person Using the Human Detection System: A Case Study from Beskid Niski (SE Poland)

Abstract

1. Introduction

2. Methods

2.1. Terrestrial Search Methods

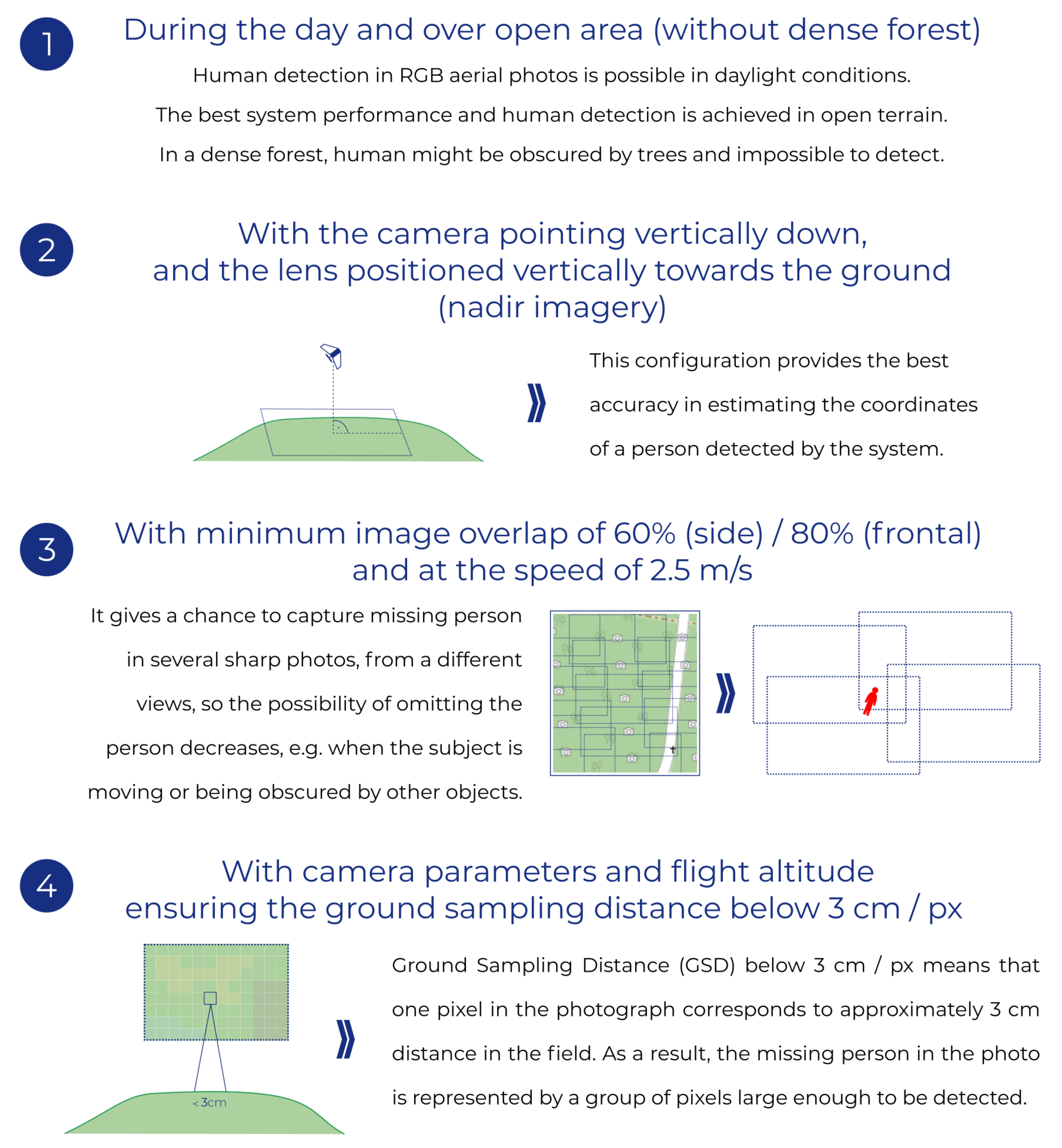

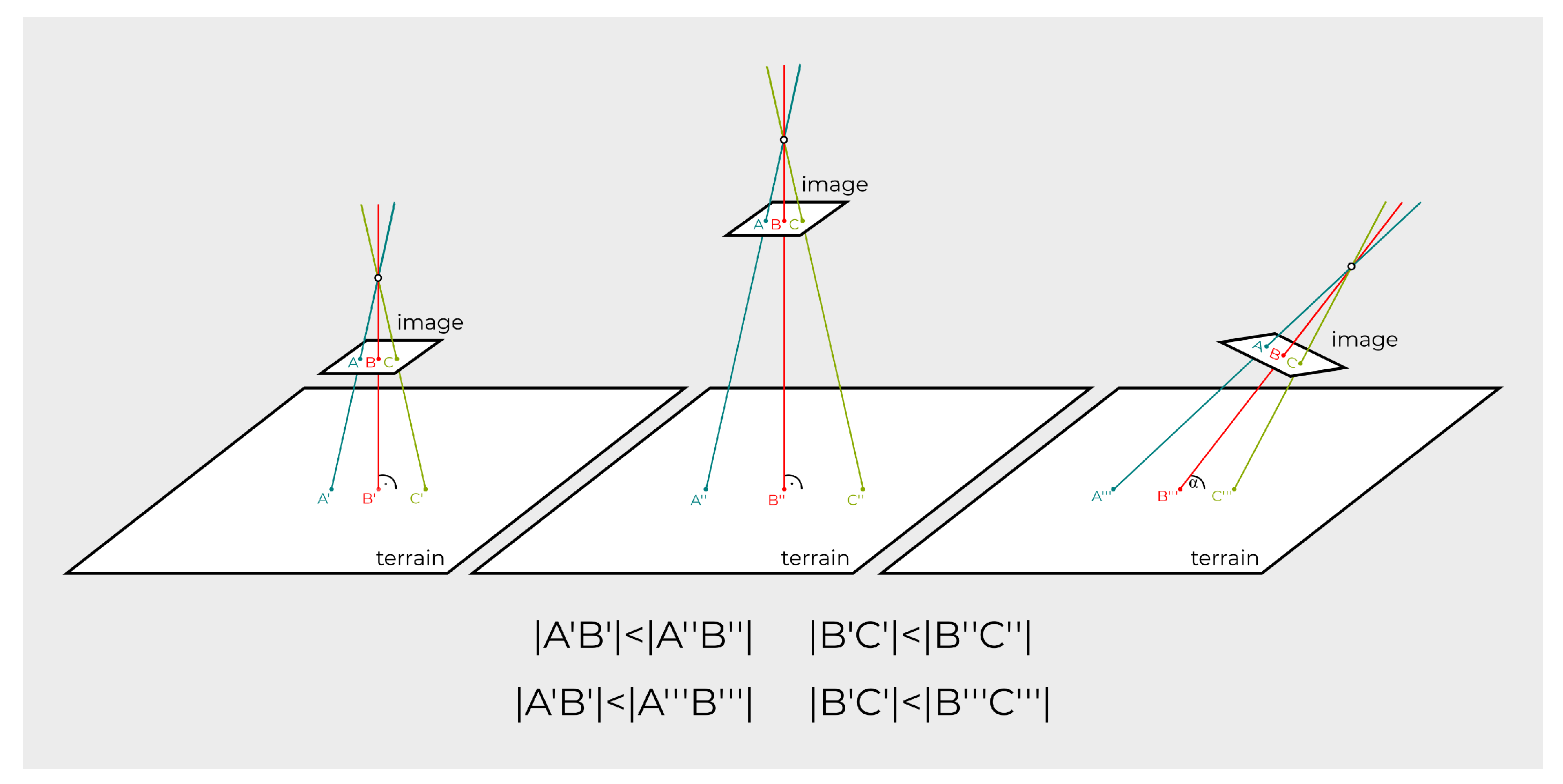

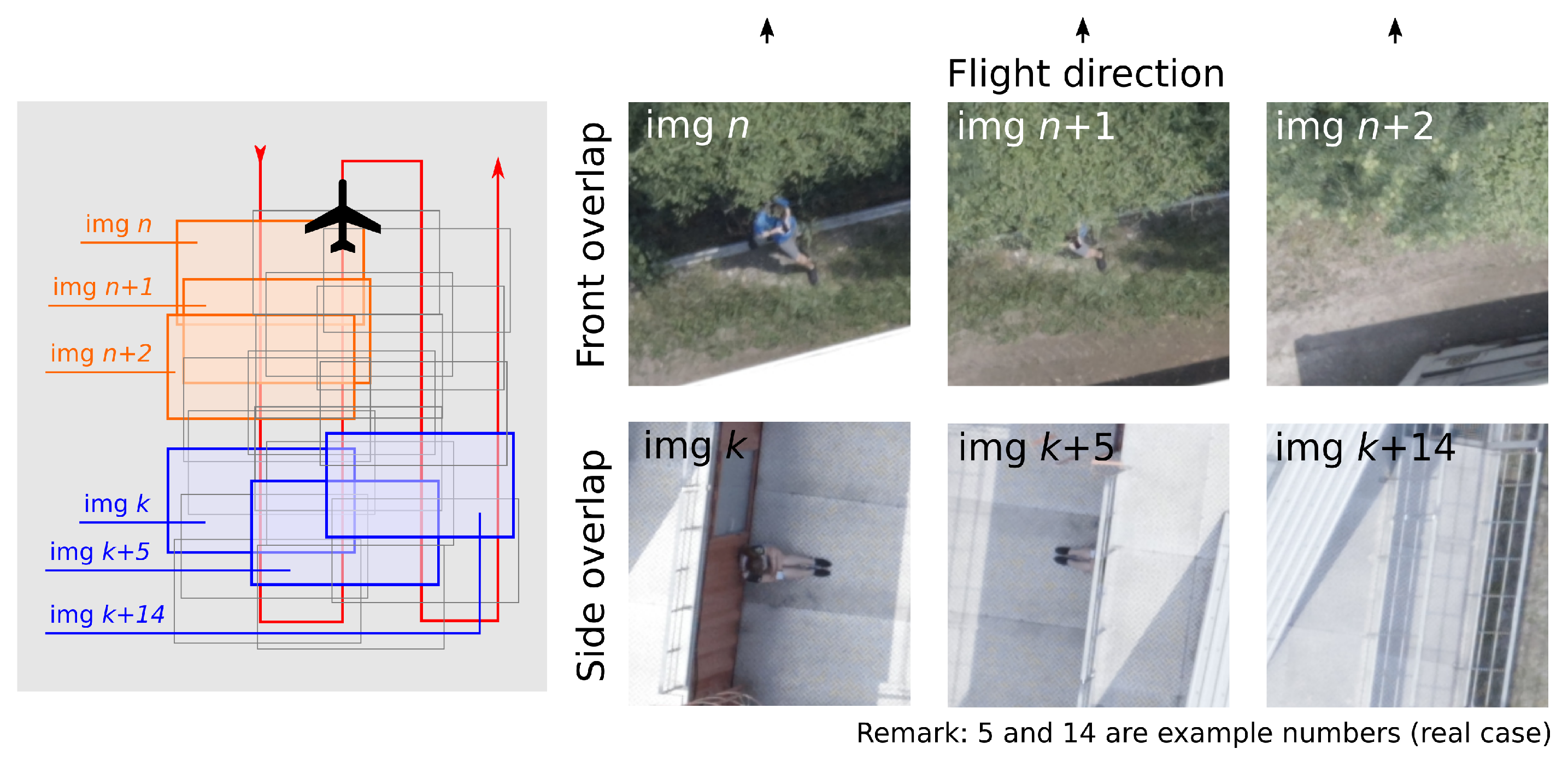

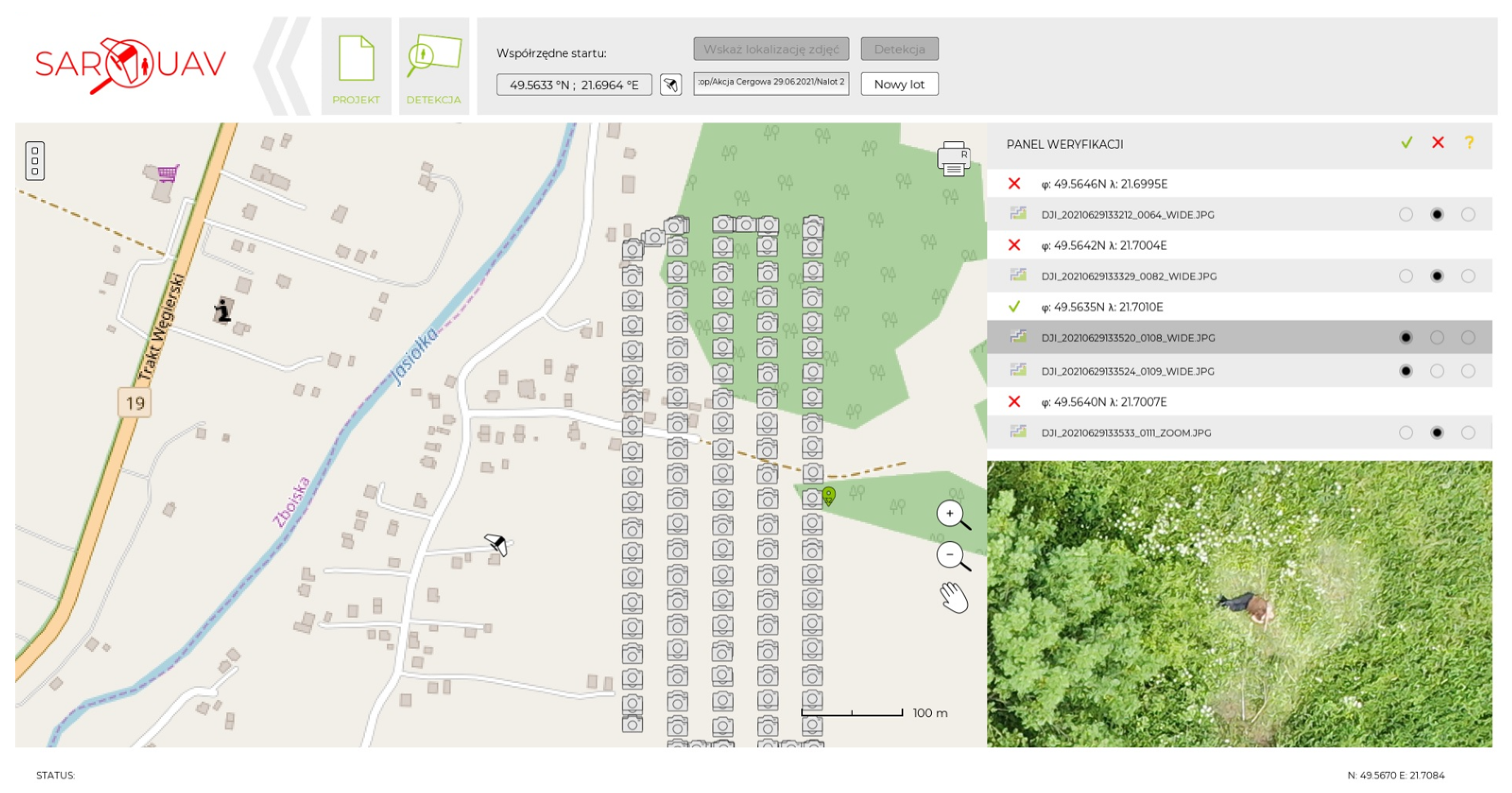

2.2. Aerial Search Methods

2.3. Methods of Image Analysis

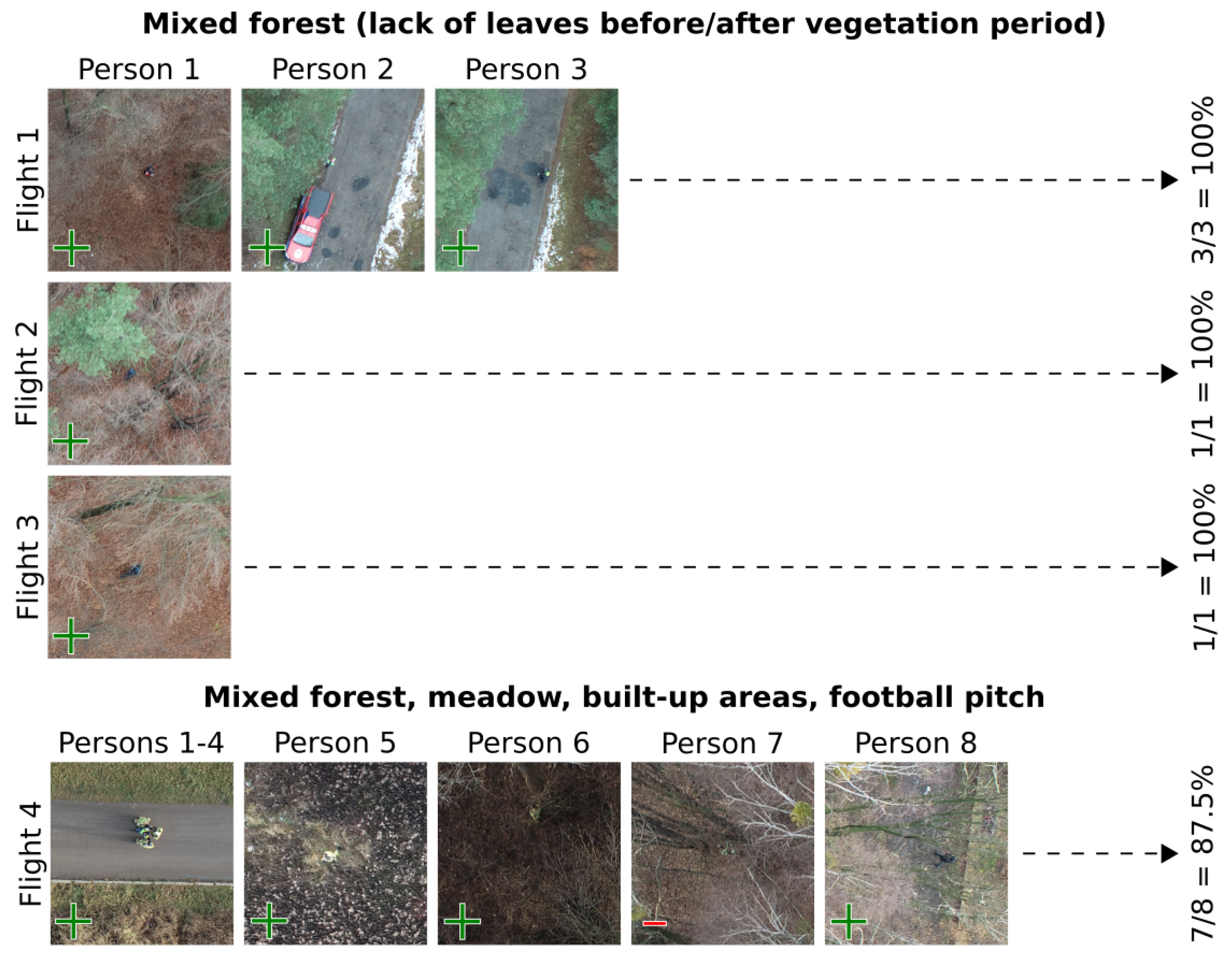

3. Results

4. Discussion

5. Conclusions

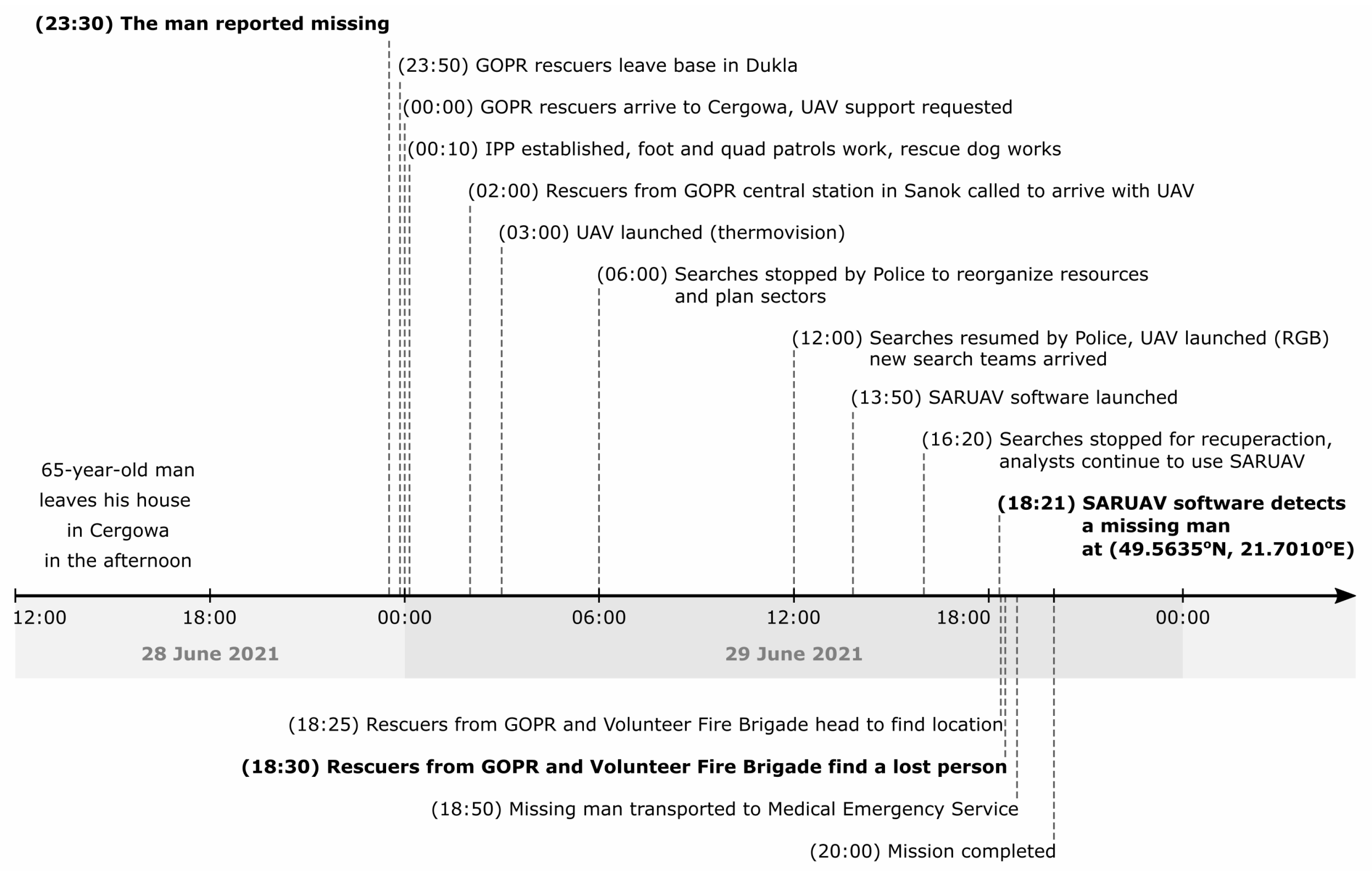

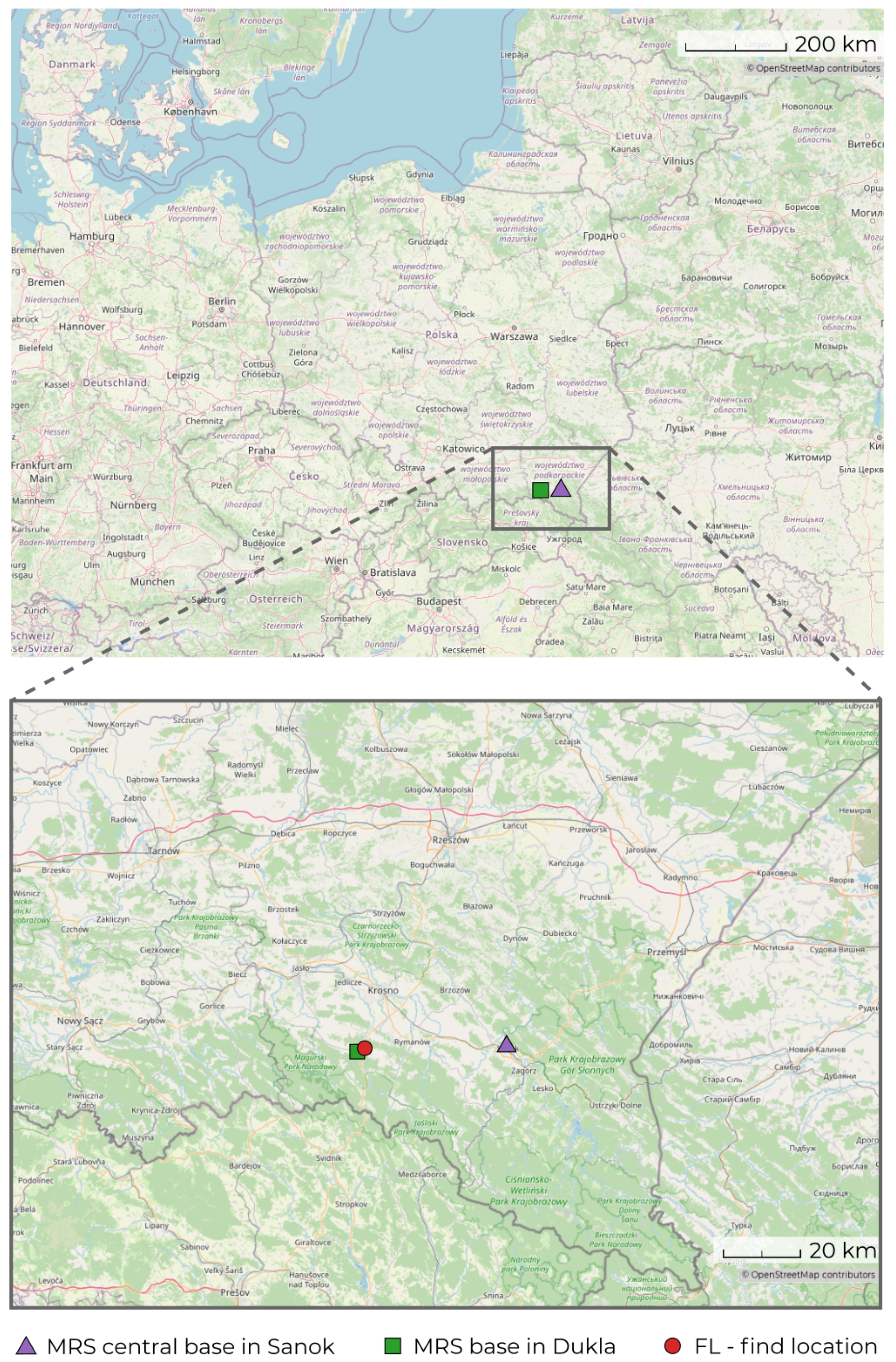

- The missing 65-year-old man, who suffered from Alzheimer’s disease, had endured a stroke, and had problems with mobility, spent more than 24 h in the wilderness.

- Aerial monitoring of the terrain using drones, assisted by the automated human detection offered by the SARUAV system, was conducted along with a variety of terrestrial search methods.

- At 13:50 on 29 June 2021, the SARUAV system was launched for the first time during the searches. It was used to process 782 near-nadir JPG images acquired during four photogrammetric flights. At 18:21, the SARUAV detector spotted the missing man. The time from the first launch of the system to the successful detection was 4 h 31 min.

- The data from the fifth flight (RGB3) was automatically processed in 1 min 50 s and verified by the analyst in 2 min 15 s. Thus, the detection was performed rapidly.

- Given that the survivability of lost persons suffering from Alzheimer’s disease after 24 h of exposure in the wilderness is 54%, it is likely that speeding up the mission through the use of UAV and SARUAV technologies contributed significantly to rescuing him in a stable, healthy condition. His other illnesses possibly posed further risks.
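The elapsed time and the image count quoted in the bullets above can be cross-checked with simple arithmetic. The following is a minimal sketch, not part of the SARUAV system: the variable names are illustrative, and the per-flight image counts are taken from the flight summary table of this article (flights RGB2 to RGB5).

```python
from datetime import datetime

# Times quoted in the conclusions (local time, 29 June 2021).
first_launch = datetime(2021, 6, 29, 13, 50)
detection = datetime(2021, 6, 29, 18, 21)

# Split the elapsed time into whole hours and minutes.
elapsed = detection - first_launch
hours, remainder = divmod(int(elapsed.total_seconds()), 3600)
minutes = remainder // 60

# Images per SARUAV-processed flight, as tabulated in the article.
images_per_flight = {"RGB2": 133, "RGB3": 121, "RGB4": 308, "RGB5": 220}
total_images = sum(images_per_flight.values())

print(f"Elapsed: {hours} h {minutes} min")   # Elapsed: 4 h 31 min
print(f"Images processed: {total_images}")   # Images processed: 782
```

Both results agree with the figures reported in the text (4 h 31 min and 782 images).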

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned aerial vehicle |
| GOPR | Górskie Ochotnicze Pogotowie Ratunkowe |
| ISRID | International Search & Rescue Incident Database |
| PLS | point last seen |
| LKP | last known point |
| IPP | initial planning point |
| SPD | Systematyczne przeszukanie dróg OR Szczegółowe przeszukanie dróg |
| GSD | ground sampling distance |
| GPU | graphics processing unit |
| MRS | Mountain Rescue Service |

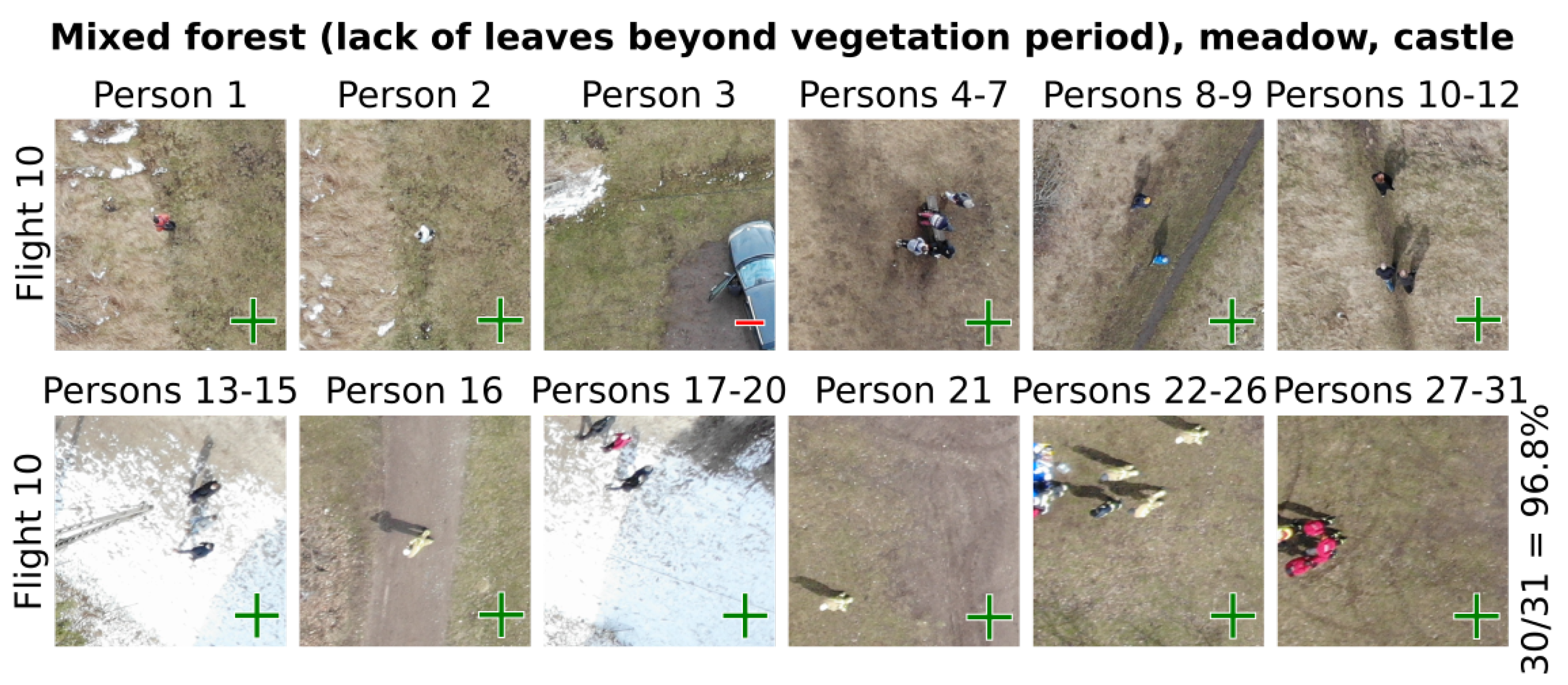

| Flight No. | Topography | Land Cover | Number of Images | Number of Persons * (True) | Number of Persons * (Detected) | Performance [%] |
|---|---|---|---|---|---|---|
| 1 | upland | a | 37 | 3 | 3 | 100 |
| 2 | upland | a | 124 | 1 | 1 | 100 |
| 3 | upland | a | 98 | 1 | 1 | 100 |
| 4 | upland | b | 115 | 8 | 7 | 87.5 |
| 5 | lowland | c | 20 | 7 | 7 | 100 |
| 6 | lowland | c | 20 | 7 | 6 | 85.7 |
| 7 | upland | d | 31 | 3 | 3 | 100 |
| 8 | upland | d | 18 | 3 | 3 | 100 |
| 9 | lowland | d | 77 | 6 | 6 | 100 |
| 10 | upland | e | 145 | 31 | 30 | 96.8 |
| ∑ | | | 685 | 70 | 67 | |
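The Performance column in the table above is consistent with the ratio of detected to true persons, expressed as a percentage. The sketch below assumes that definition (it is an inference from the tabulated values, since the table footnotes are not reproduced here) and recomputes each row, together with an overall figure that the table itself does not state. The function name `performance` is illustrative.

```python
# (true persons, detected persons) per flight, copied from the table above.
flights = {
    1: (3, 3), 2: (1, 1), 3: (1, 1), 4: (8, 7), 5: (7, 7),
    6: (7, 6), 7: (3, 3), 8: (3, 3), 9: (6, 6), 10: (31, 30),
}

def performance(true_count, detected_count):
    """Percentage of persons detected, rounded to one decimal place."""
    return round(100.0 * detected_count / true_count, 1)

# Per-flight performance, keyed by flight number.
per_flight = {no: performance(t, d) for no, (t, d) in flights.items()}

# Column totals and the pooled performance over all ten flights.
total_true = sum(t for t, _ in flights.values())
total_detected = sum(d for _, d in flights.values())
overall = performance(total_true, total_detected)

for no, value in per_flight.items():
    print(f"Flight {no}: {value} %")
print(f"Totals: {total_detected}/{total_true} detected, overall {overall} %")
```

Under this assumption, the pooled figure of 67 detected out of 70 persons corresponds to roughly 95.7%.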

| Thermovision | Visible Light | ||||||

|---|---|---|---|---|---|---|---|

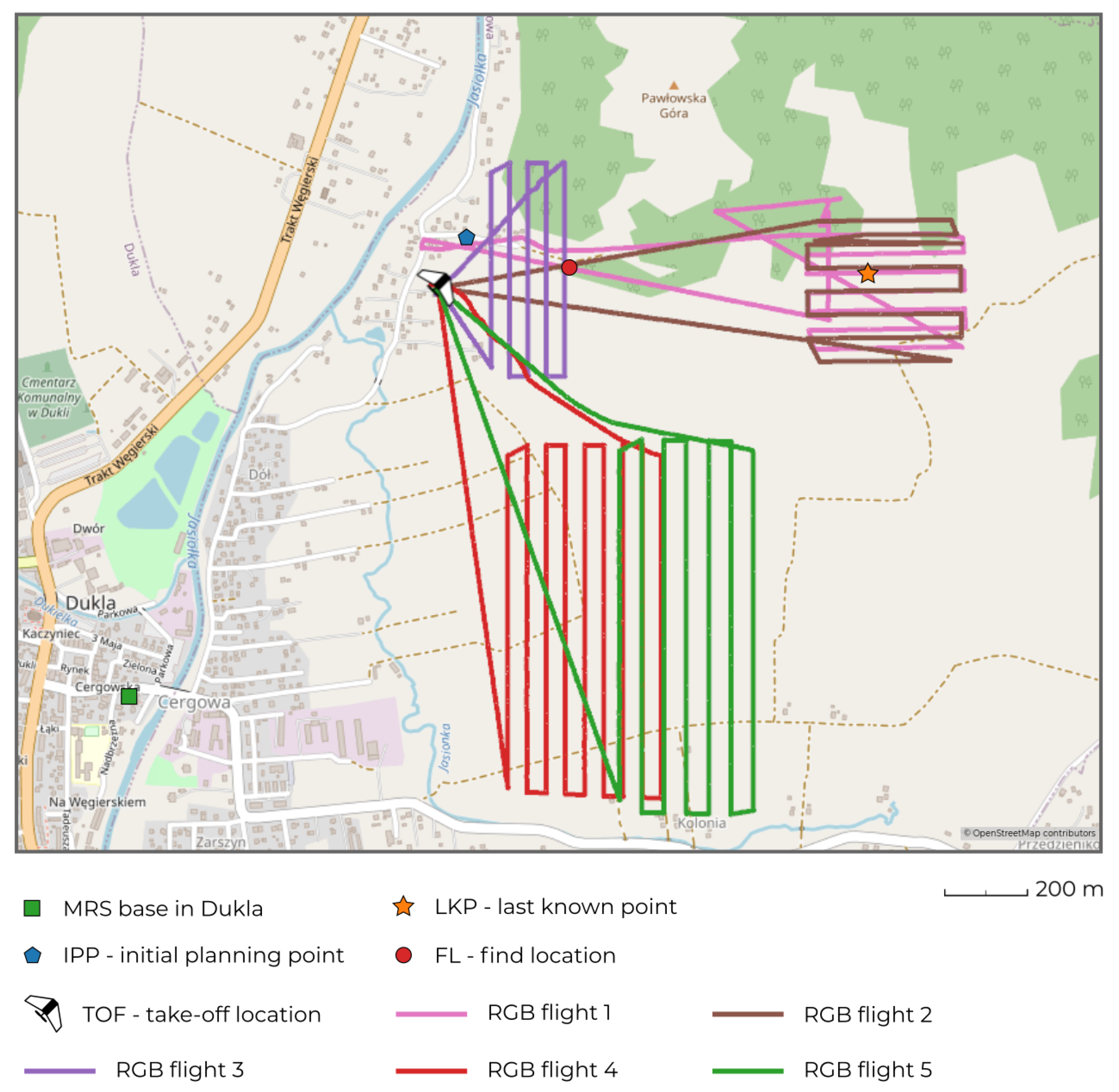

| T1 | T2 | RGB1 | RGB2 | RGB3 | RGB4 | RGB5 | |

| Spectrum | thermal | thermal | RGB | RGB | RGB | RGB | RGB |

| Camera | XT-S | XT-S | ZH20 | ZH20 | ZH20 | ZH20 | ZH20 |

| Data | video | video | images a | images a | images a | images a | images a |

| Target | road | road | sector | sector | sector | sector | sector |

| # images | n/a | n/a | 128 | 133 | 121 | 308 | 220 |

| Area [ha] | n/a | n/a | 12 | 14 | 10 | 34 | 32 |

| Local time | 03:11 | 03:38 | 04:13 | 12:59 | 13:26 | 13:42 | 14:52 |

| Duration [min] | 27 | 33 | 26 | 21 | 13 | 40 | 38 |

| ATO b [m] | n/a | n/a | 110 | 105 | 80 | 80 | 93 |

| Max AGL c [m] | 126 | 126 | 131 | 121 | 100 | 80 | 103 |

| Mean AGL c [m] | 65 | 96 | 103 | 104 | 77 | 74 | 89 |

| Distance [km] | 4.2 | 5.5 | 6.4 | 5.8 | 3.5 | 10.0 | 8.9 |

| Temp. [ C] | 16.1 | 15.8 | 15.7 | 26.6 | 27.1 | 27.4 | 28.4 |

| Wind spd. [m/s] | 1 | 1 | 1 | 2 | 2 | 2 | 2 |

| Clouds [%] | 30 | 35 | 36 | 41 | 38 | 36 | 51 |

| Humidity [%] | 95 | 96 | 96 | 59 | 57 | 56 | 54 |

| SARUAV used? | no | no | no | yes | yes | yes | yes |

| Detection | no | no | no | no | yes d | no | no |
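A few derived quantities can help when comparing the photogrammetric flights in the table above. The sketch below is illustrative only: the metric names are hypothetical, and the mean speed assumes, as a simplification, that the whole tabulated duration was spent covering the tabulated distance.

```python
# Per-flight values for the RGB flights, copied from the table above.
rgb_flights = {
    "RGB1": {"images": 128, "area_ha": 12, "duration_min": 26, "distance_km": 6.4},
    "RGB2": {"images": 133, "area_ha": 14, "duration_min": 21, "distance_km": 5.8},
    "RGB3": {"images": 121, "area_ha": 10, "duration_min": 13, "distance_km": 3.5},
    "RGB4": {"images": 308, "area_ha": 34, "duration_min": 40, "distance_km": 10.0},
    "RGB5": {"images": 220, "area_ha": 32, "duration_min": 38, "distance_km": 8.9},
}

def derived_metrics(flight):
    """Image density, coverage rate, and mean speed for one flight."""
    return {
        "images_per_ha": flight["images"] / flight["area_ha"],
        "ha_per_min": flight["area_ha"] / flight["duration_min"],
        # Simplification: distance divided by the full flight duration.
        "mean_speed_m_s": flight["distance_km"] * 1000 / (flight["duration_min"] * 60),
    }

metrics = {name: derived_metrics(f) for name, f in rgb_flights.items()}
for name, m in metrics.items():
    print(f"{name}: {m['images_per_ha']:.1f} images/ha, "
          f"{m['ha_per_min']:.2f} ha/min, {m['mean_speed_m_s']:.1f} m/s")
```

These figures are not reported in the article; they are only a convenient way to read the tabulated values side by side.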

| | RGB2 | RGB3 | RGB4 | RGB5 |
|---|---|---|---|---|
| Number of images | 133 | 121 | 308 | 220 |
| Area [ha] | 14 | 10 | 34 | 32 |
| Number of SARUAV hits | 4 | 32 a | 22 | 23 |
| Duration of copying images to laptop [min:s] | 01:14 | 00:48 | 02:59 | 01:50 |
| Duration of SARUAV computations [min:s] | 01:59 | 01:50 | 04:11 | 02:56 |
| Duration of analyst’s work [min:s] | 00:16 | 02:15 | 01:44 | 02:02 |
| Duration of additional activities b [min:s] | 01:15 | 00:55 | 00:46 | 00:47 |
| Overall duration of SARUAV-related activities [min:s] | 04:44 | 05:48 | 09:40 | 07:35 |
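The overall durations in the table above are the sums of the four component steps (copying, computation, analyst's work, and additional activities). A minimal sketch, with illustrative helper names, reproduces the totals and also derives the computation time per image, a figure the table does not state.

```python
def to_seconds(mmss):
    """Convert a 'min:s' string such as '01:50' to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)

def to_mmss(total_seconds):
    """Convert seconds back to a zero-padded 'min:s' string."""
    return f"{total_seconds // 60:02d}:{total_seconds % 60:02d}"

# Step durations per flight, copied from the table above:
# copying, SARUAV computations, analyst's work, additional activities.
steps = {
    "RGB2": ["01:14", "01:59", "00:16", "01:15"],
    "RGB3": ["00:48", "01:50", "02:15", "00:55"],
    "RGB4": ["02:59", "04:11", "01:44", "00:46"],
    "RGB5": ["01:50", "02:56", "02:02", "00:47"],
}
reported = {"RGB2": "04:44", "RGB3": "05:48", "RGB4": "09:40", "RGB5": "07:35"}
images = {"RGB2": 133, "RGB3": 121, "RGB4": 308, "RGB5": 220}

# Sum the steps and compare with the reported overall durations.
totals = {f: to_mmss(sum(to_seconds(t) for t in parts)) for f, parts in steps.items()}

# Computation time per image (second entry of each list is the SARUAV step).
seconds_per_image = {f: to_seconds(parts[1]) / images[f] for f, parts in steps.items()}

for f in steps:
    print(f"{f}: total {totals[f]} (reported {reported[f]}), "
          f"{seconds_per_image[f]:.2f} s of computation per image")
```

The recomputed totals match the reported ones for all four flights, and the computation step works out to a little under one second per image in each case.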

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Niedzielski, T.; Jurecka, M.; Miziński, B.; Pawul, W.; Motyl, T. First Successful Rescue of a Lost Person Using the Human Detection System: A Case Study from Beskid Niski (SE Poland). Remote Sens. 2021, 13, 4903. https://doi.org/10.3390/rs13234903
