1. Introduction

Cropland and crop type maps at global and regional scales provide critical information for agricultural monitoring systems. Satellite remote sensing data have proven to be an effective tool for crop type identification and spatial mapping. Nevertheless, scaling up the operational production and delivery of reliable and timely crop map products remains challenging. Agricultural areas are complex landscapes characterized by wide-ranging cropping systems, crop species and varieties. Another source of difficulty is the high diversity of agricultural practices (e.g., multiple within-year cropping), pedoclimatic conditions and geographical environments.

Crop mapping methods based on remote sensing exploit the spatiotemporal information of satellite image time series. The accurate recognition of crops requires high-quality spatial and temporal data [1,2,3]. In practice, the resolutions of satellite imagery limit the accuracy of crop map products and the level of detail of their class legends [4]. Cropland extent knowledge is usually obtained from global maps, such as the Moderate Resolution Imaging Spectroradiometer (MODIS) land cover [5], the 30 m GlobeLand30 product [6] or GlobCover [7]. Unfortunately, these products do not always provide reliable information given the large disagreements and uncertainties existing among them [8,9,10]. In addition, their quality is far from satisfactory for applications requiring accurate, up-to-date knowledge about food production or environmental issues. Therefore, accurate and timely crop information is still required for government decision making and agricultural management. In particular, the user community has expressed the need for near-real-time, high spatial resolution crop type maps available from the early season [11].

In recent years, new high-resolution image time series have been acquired by the Sentinel mission under the EU’s Copernicus environmental monitoring program. SAR and optical data with high revisit frequency and large spatial coverage have become freely available thanks to the launch of the European Space Agency (ESA)’s Sentinel-1 and Sentinel-2 satellites. The high resolutions of these complementary satellite data present unprecedented opportunities for regional and global agriculture monitoring.

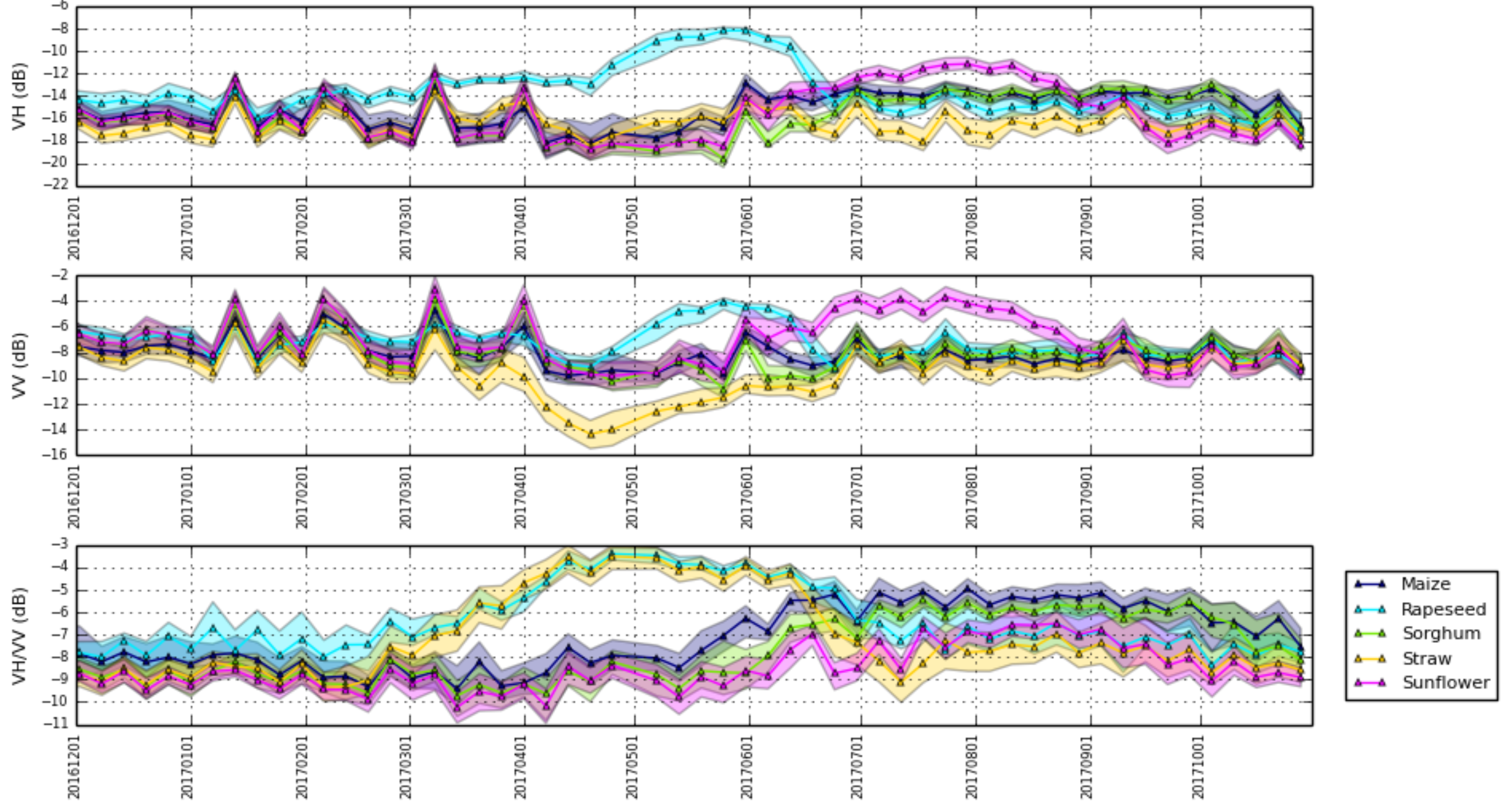

Although Sentinel-2 offers many opportunities for characterizing the spectral reflectance properties of crops [12], its effective temporal resolution can be limited. Cloud coverage can obscure key acquisitions during the agricultural season or leave some areas persistently cloud-covered. In this situation, Sentinel-1 images, which are not affected by cloudy weather conditions, can provide valuable complementary information. In addition, SAR images can help to discriminate between crop types having similar spectro-temporal profiles [13]. However, the benefits of Sentinel-1 images can be reduced by the presence of speckle noise. The high radar sensitivity to soil moisture may also complicate the interpretation of the vegetation signal. Fluctuations induced by speckle or moisture changes (e.g., rain or snow) can then be confused with vegetation growth changes.

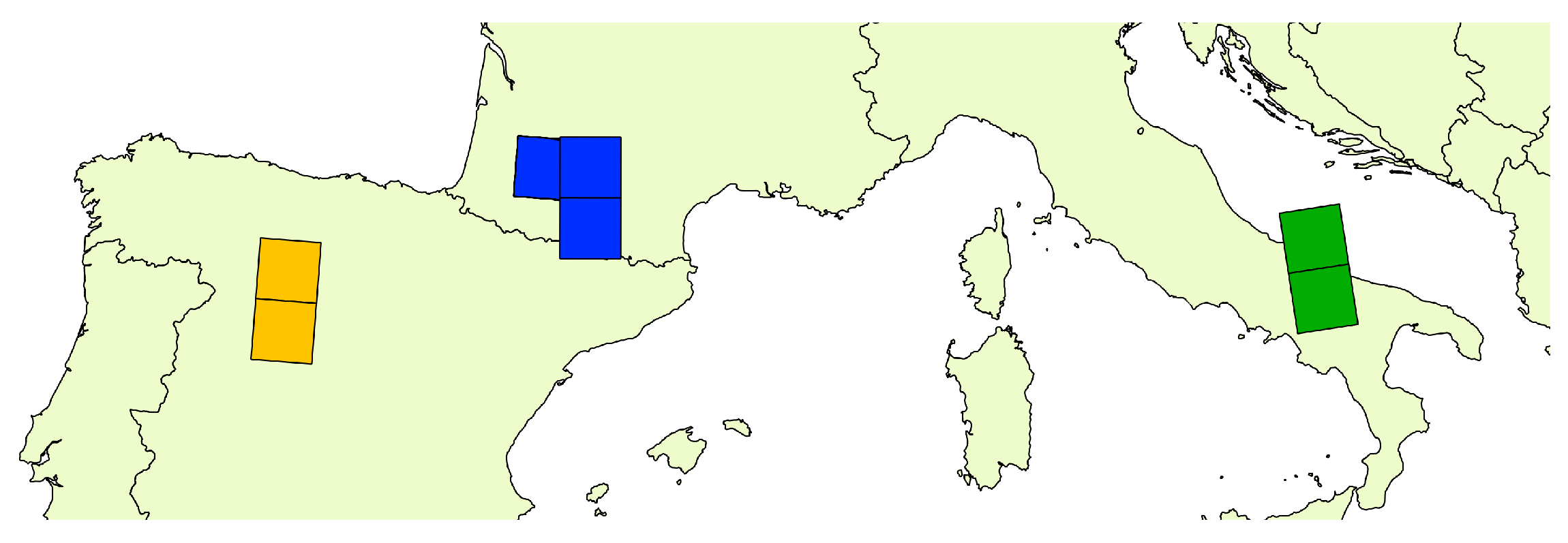

Operational large-scale crop monitoring methods need to satisfy some well-known requirements, such as cost-effectiveness, a high degree of automation, transferability and genericity. In this framework, the European H2020 Sentinels Synergy for Agriculture (SenSAgri) project [14] was proposed to demonstrate the benefit of combining the Sentinel-1 and -2 missions to develop an innovative portfolio of prototype agricultural monitoring services. In this project, the synergy of optical and radar monitoring was exploited to develop three prototype services capable of near-real-time operations: (1) surface soil moisture (SSM), (2) green and brown leaf area index (LAI) and (3) in-season crop mapping. Several European test sites covering different areas and agricultural practices have been used for method development, prototyping and validation.

The work presented here describes the research conducted in the SenSAgri project to develop a new processing chain for in-season crop mapping. From the early season, the crop mapping chain exploits Sentinel-1 and -2 data to produce seasonal binary cropland masks and crop type maps. The two crop products are generated with a spatial resolution of 10 m, and they follow the product specifications proposed by the Sen2-Agri system [15]. The proposed methodology aims to address the challenges of high-dimensional Sentinel data classification. Different experiments are presented in this work to assess (1) the crop mapping performances of the SenSAgri processing chain and (2) the synergy and complementarity of Sentinel’s data, showing the value of fusing both data types. The main contributions of this study are as follows:

It presents a robust and largely validated crop classification system to produce accurate crop map products in the early season with limited training data;

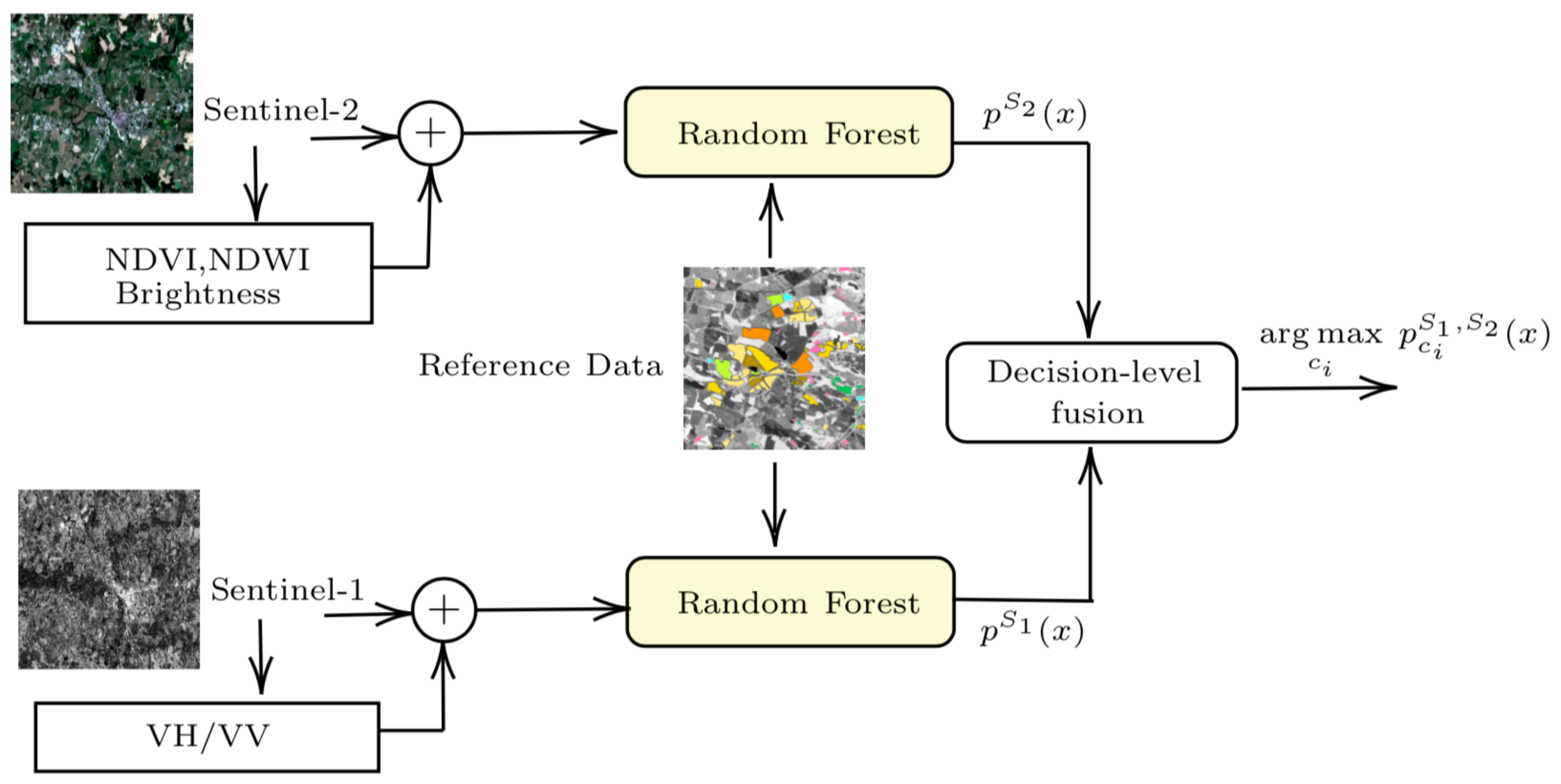

It highlights the benefit of postdecision fusion strategies to exploit the synergy and complementarity among classifiers trained with Sentinel-1 (S1) and Sentinel-2 (S2) time series. This demonstrates the limitations of the early fusion method for classifying multimodal high-dimensional Sentinel time series;

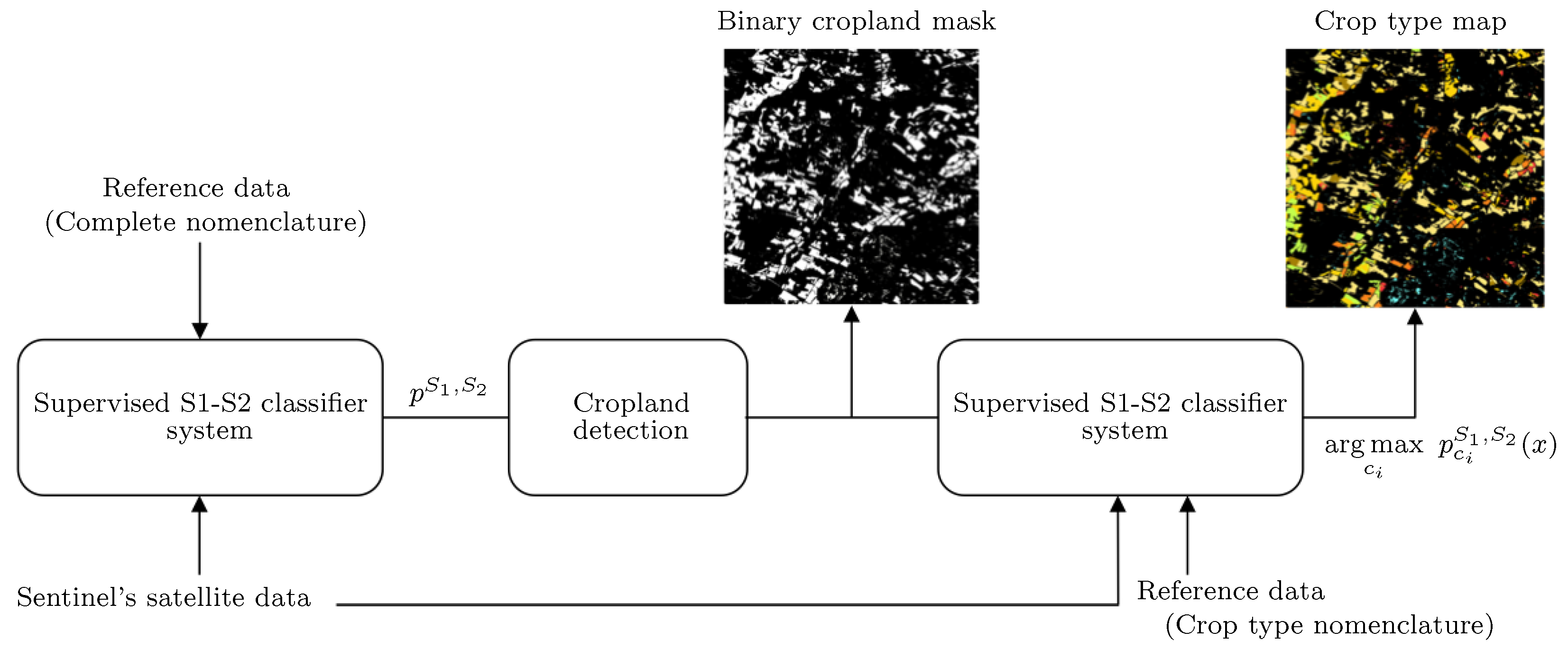

It confirms the interest of detecting cropland areas before the identification of crop types. A strategy for binary cropland area detection is proposed to reduce the impact of land cover legend definition on crop classification results.

This paper is organized as follows:

Section 2 reviews the state-of-the-art classification strategies for crop mapping;

Section 3 details the SenSAgri methodology and, more precisely, the S1-S2 classification scheme and input features;

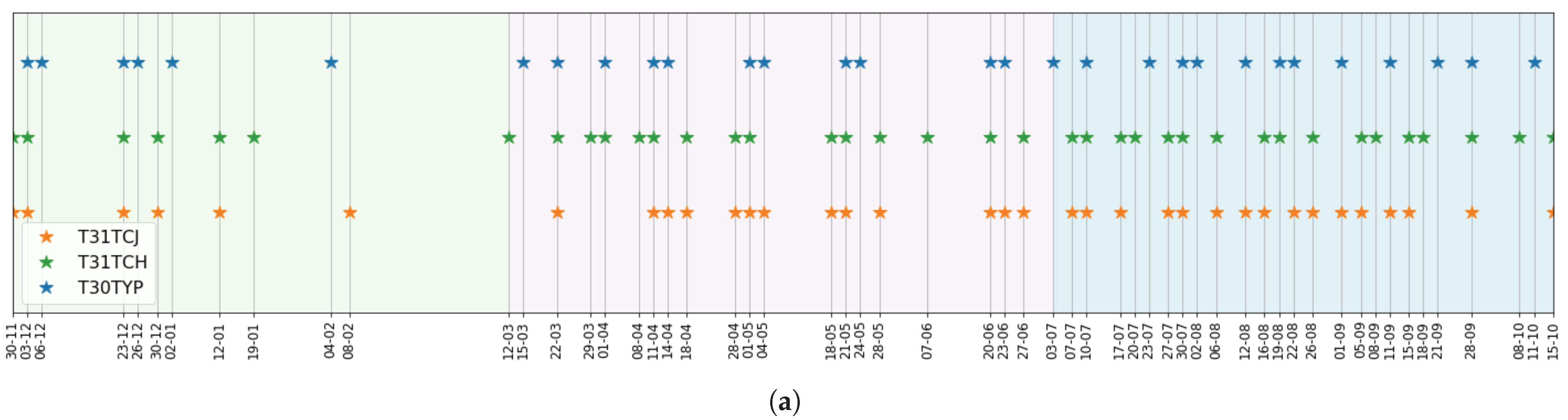

Section 4 describes the satellite and reference data;

Sections 5 and 6 are devoted to the results and discussion; and finally,

Section 7 draws the conclusions.

2. Related Work

The classification of multitemporal data by algorithms trained on user knowledge has been commonly proposed in the crop mapping literature. The classification results of these methods are noticeably impacted by the choice of the supervised classifier, the input data, the legend (details and number of classes) and the reference data [16]. Ideally, the quantity and quality of the reference data should capture the diversity of the classification problem so that the learned models generalize well.

Despite the large family of existing crop classification methods, most of them are not scalable. In practice, they have been tested on small test sites over restricted geographical areas. In addition, most of the existing classification methodologies have not considered natural vegetation classes such as grassland or shrubland, which are often confused with crop classes. Similarly, classical land cover classification methodologies (see [17] for a review) do not generally satisfy operational agricultural monitoring requirements [18].

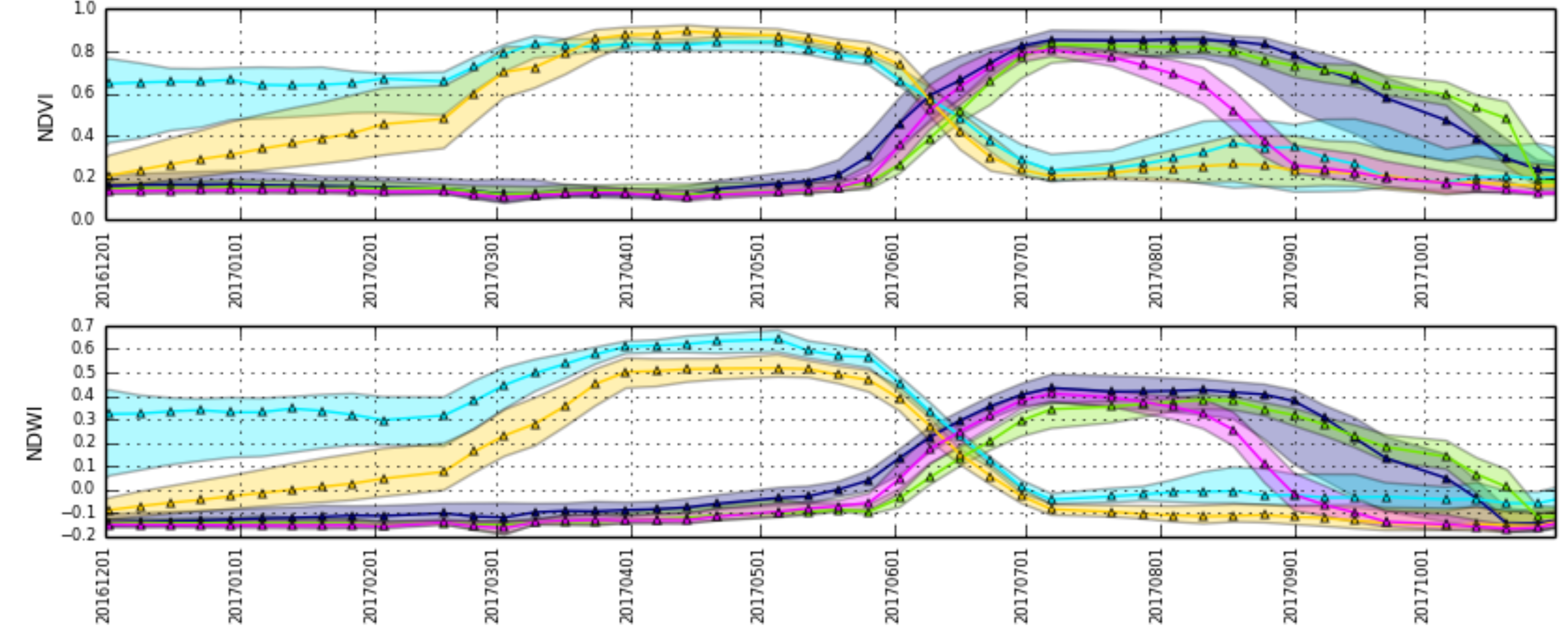

Traditionally, optical data with low and moderate resolutions have been exploited for large-scale crop mapping. The high temporal resolution of MODIS has shown an obvious benefit for the characterization of vegetation surfaces. Numerous crop mapping works have thus highlighted the usefulness of the normalized difference vegetation index (NDVI) derived from MODIS. Several works, such as [19,20,21], have proposed applying the decision tree (DT) classifier to MODIS data. These low-resolution multitemporal data have also been classified by other strategies based on the Jeffries–Matusita distance [22] or k-means [23]. Unfortunately, medium-resolution satellite data, such as MODIS, are too coarse to capture detailed crop information because pixels mix several land covers or land uses.
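For readers unfamiliar with the index mentioned above, NDVI is the standard normalized difference between near-infrared and red reflectance, (NIR - Red) / (NIR + Red). The following is a minimal sketch of that definition only; the function name `ndvi` and the handling of zero denominators (returned as NaN) are illustrative choices, not taken from any of the cited works.

```python
import numpy as np

def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red).

    Inputs are reflectance arrays of identical shape. Pixels where
    NIR + Red equals zero are returned as NaN (an illustrative choice).
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    # Divide only where the denominator is non-zero; other entries stay NaN.
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out

# Toy reflectances: a vegetated pixel, a bare-soil-like pixel, a no-data pixel.
values = ndvi([0.5, 0.3, 0.0], [0.1, 0.3, 0.0])
```

Dense green vegetation reflects strongly in the near infrared and absorbs red light, so its NDVI approaches 1, whereas equal reflectance in both bands yields 0.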

Several works have proposed combining the temporal information of MODIS with high-spatial resolution data. For instance, a DT methodology was proposed in [24] to reach 30 m crop maps by combining cropland MODIS 250 m products with Landsat data. The combination of MODIS and Landsat data has also been proposed for the US Cropland Data Layer [25,26], which is produced by the United States Department of Agriculture (USDA) National Agricultural Statistics Service (NASS). The high accuracy of this DT methodology can be explained by the extensive use of parcel-level information provided by farmers’ declarations. SPOT data have also been combined with MODIS in [27], where an SVM joint classifier is applied to temporal NDVI features. The value of incorporating ancillary data with multiple input satellite datasets in the classification process has also been highlighted in [28]. In that work, crop maps with low spatial resolution were obtained by applying a spectral correlation classification strategy. The combination of Landsat and Sentinel-2 has been recently proposed in [15,29]. In the latter work, the use of NDVI phenological metrics derived from high-spatial resolution datasets was investigated.

Some works have proposed combining the predictions of several DT classifiers, which are trained on different sets of satellite-derived features [20,30]. The high performances of classifier ensemble strategies have been confirmed in recent decades by the use of random forest (RF) classifiers. The robustness of RF for classifying multitemporal satellite data over large geographical areas has been reported in several studies [31,32,33]. The short training time, easy parameterization and high robustness to high-dimensional input features explain the growing interest in RF for crop classification. Some works have applied RF to Landsat multiyear time series composites [34] and satellite-derived EVI time series [35]. More recently, pixel-wise [36] and object-based crop mapping methods [37] have also been proposed to classify Sentinel-2 data.

In recent years, deep learning (DL) classification strategies have been proposed for crop classification. Different deep architectures have been evaluated on very restricted geographical areas by some promising works. Some interesting examples of deep architectures are LSTM [38,39], transformers [40] and 3D-CNN [41]. Model transferability, the handling of spatial context with complex semantic models and the high semantic (categorical) resolution of mapping applications are some advantages highlighted by the use of CNNs. However, these architectures do not exploit the spatiotemporal features of the data. The design of architectures capturing spatiotemporal information involves multiple options that are nontrivial and expensive to evaluate. Despite the unreasonable effectiveness of DL methodologies, they still pose important challenges for operational global crop mapping systems [42], including the requirement of a large amount of field data. Unfortunately, the scarcity of reference data is a well-known challenge for operational remote sensing applications. Obtaining crop and non-crop reference data over large-scale areas can be tedious. In addition, such data are not available in a timely fashion during the agricultural season and can contain class label noise. DL methods are also computationally expensive and have large memory requirements. Last, these methods are usually patch-based approaches, and the patch size definition has negative impacts on object classification results.

From an operational point of view, the use of RF for classifying Sentinel-2 data has been assessed by the Sen2-Agri project [15,43,44]. This operational processing chain has proposed an accurate two-fold methodology for producing binary cropland masks and crop type maps. Despite the satisfactory accuracies obtained by the Sen2-Agri system, the crop mapping methodology has some limitations. First, the phenological metrics proposed for binary cropland masking can lead to a performance decrease for large-scale mapping [32]. Second, the proposed binary classification methodology requires the construction of a non-crop reference dataset, which can be very challenging. Last, Sen2-Agri products are strongly dependent on the availability of Sentinel-2 acquisitions. Therefore, less accurate results are obtained in cloudy areas.

As previously mentioned, the integration of weather-independent synthetic aperture radar (SAR) imagery into crop classification methodologies may be a solution to address the lack of optical data. The use of multitemporal SAR data has gained increasing attention in crop monitoring [45,46]. Recently, the multisite crop classification experiment described in [47] proved the good performance of the RF classifier on multitemporal SAR data.

In the context of land use mapping and monitoring, the fusion of optical and radar data has been reviewed by different studies [48,49,50]. Despite the large number of such studies, most of the existing approaches do not consider the temporal dimension, and only a few images are fused. The most widely used strategy for exploiting multimodal time series is early fusion, which combines multisource information at the pixel or feature level. This strategy consists of placing all the raw data (or features) from multiple sensors in a single dataset, which is then classified.
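The stacking step that defines early fusion can be sketched as follows. This is a minimal illustration, not the implementation of any cited study: the function name `early_fusion_stack` and the array layout (one row per pixel, one column per date and band or polarization) are assumptions made for the example.

```python
import numpy as np

def early_fusion_stack(s1_features, s2_features):
    """Concatenate per-pixel SAR and optical features into one dataset.

    Both inputs are 2-D arrays of shape (n_pixels, n_features), where the
    feature axis flattens dates x bands (hypothetical layout).
    """
    s1 = np.asarray(s1_features, dtype=float)
    s2 = np.asarray(s2_features, dtype=float)
    if s1.ndim != 2 or s2.ndim != 2 or s1.shape[0] != s2.shape[0]:
        raise ValueError("inputs must be 2-D with the same number of pixels")
    # A single classifier is then trained on the stacked feature vectors.
    return np.concatenate([s1, s2], axis=1)

# Toy sizes: 4 pixels, 20 SAR dates x 2 polarizations, 15 optical dates x 10 bands.
rng = np.random.default_rng(0)
s1 = rng.random((4, 20 * 2))
s2 = rng.random((4, 15 * 10))
stacked = early_fusion_stack(s1, s2)  # shape (4, 190)
```

The feature dimension of the stacked dataset is the sum of the two sensor dimensions, so it grows quickly with the length of the time series while the number of training samples stays the same.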

The early fusion of multitemporal optical and SAR data has been proposed by some land cover classification studies. For instance, the combination of multitemporal Sentinel-1 and Landsat data is presented in [51]. This study demonstrated how the incorporation of SAR data can improve the classification results when the number of optical and radar images is low. With the arrival of the Sentinel satellites, more studies have confirmed the benefits of exploiting optical and SAR image time series by an early fusion strategy at the pixel [52,53] or feature levels [54,55].

In the literature, only a few studies have jointly exploited Sentinel’s time series to produce high spatial resolution maps describing the precise location and extent of major crop types. This can be explained by the large data volume that needs to be processed and the lack of reference data over large geographical areas. The most relevant crop mapping work has proposed training a hierarchical RF classifier after the early fusion stage [56]. Unfortunately, such a fusion approach can have some noticeable limitations due to the very high dimensional feature space of the multi-sensor data stack [57]. Specifically, it could lead to the Hughes phenomenon, which is caused by the large number of features and the limited availability of training samples [58].

In addition to early fusion, other strategies have been proposed in the remote sensing literature to fuse single optical and radar images, although they have not been applied to high-resolution multitemporal datasets. For instance, decision-level methods have been proposed to classify optical and SAR data individually and to combine the classifier outputs into a final classification decision [59,60,61,62]. Because radar and optical sensors work on different physical principles, two complementary classifiers can be trained using two different representations of the input data. Therefore, combining the two classifiers can improve on the classification results obtained with either one alone. In addition, because each classifier works in a lower-dimensional feature space, postdecision classification systems require less training data to overcome the curse of dimensionality. Therefore, this work aims to propose a crop mapping processing chain where the synergy of Sentinel’s data is exploited by a decision-level fusion strategy.

6. Discussion

6.1. Joint Exploitation of Sentinel’s Data

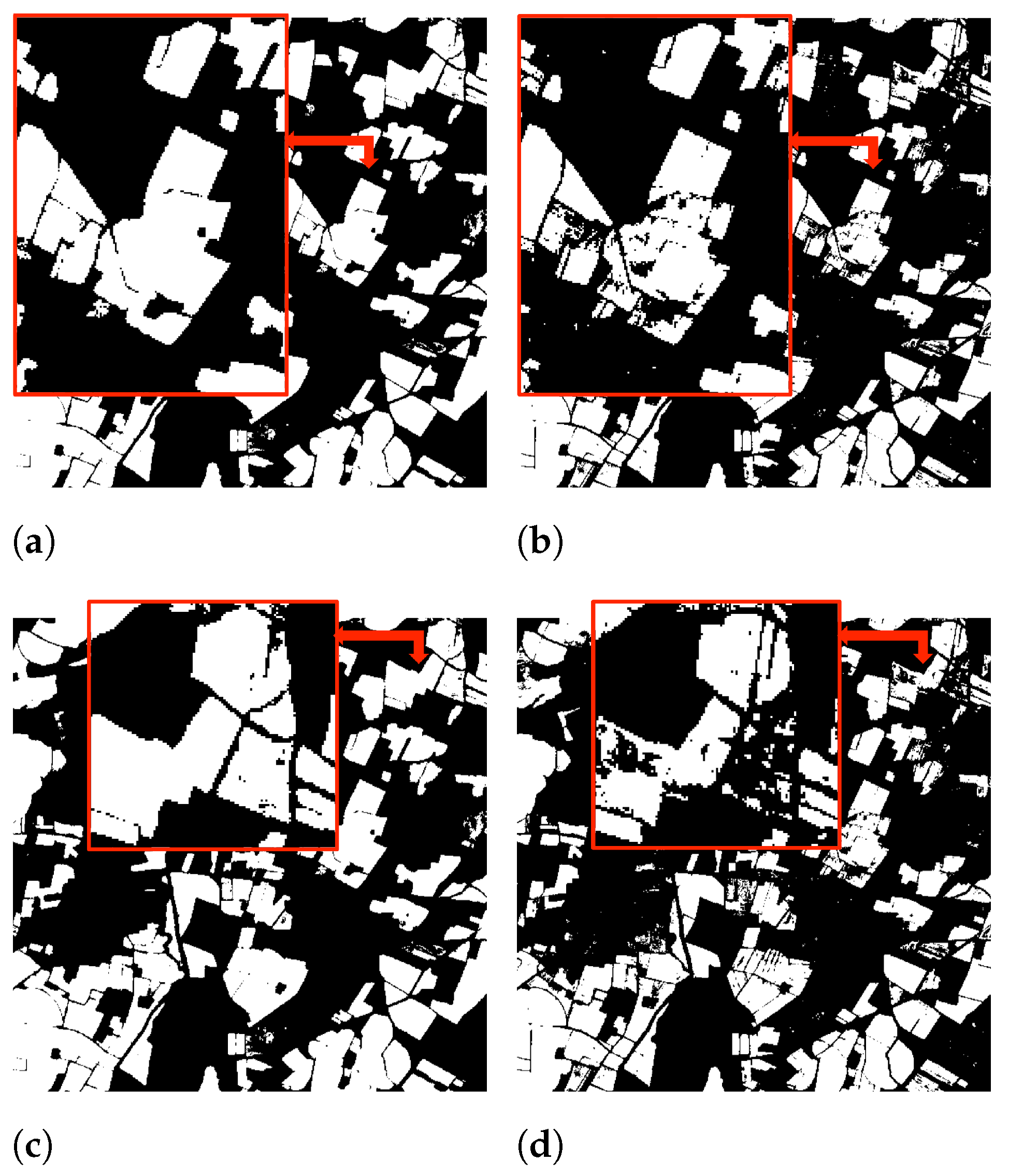

The research presented here has confirmed the value of jointly using multitemporal optical and SAR data for early crop mapping. The first experiments assessed the performances and complementarity of independent RF classifiers trained on Sentinel’s data. Comparing the individual results, more accurate land cover maps were derived from Sentinel-2 images. Models trained on the Sentinel-1 time series showed a loss of accuracy of up to 5%. In addition, the optical results better preserved fine details.

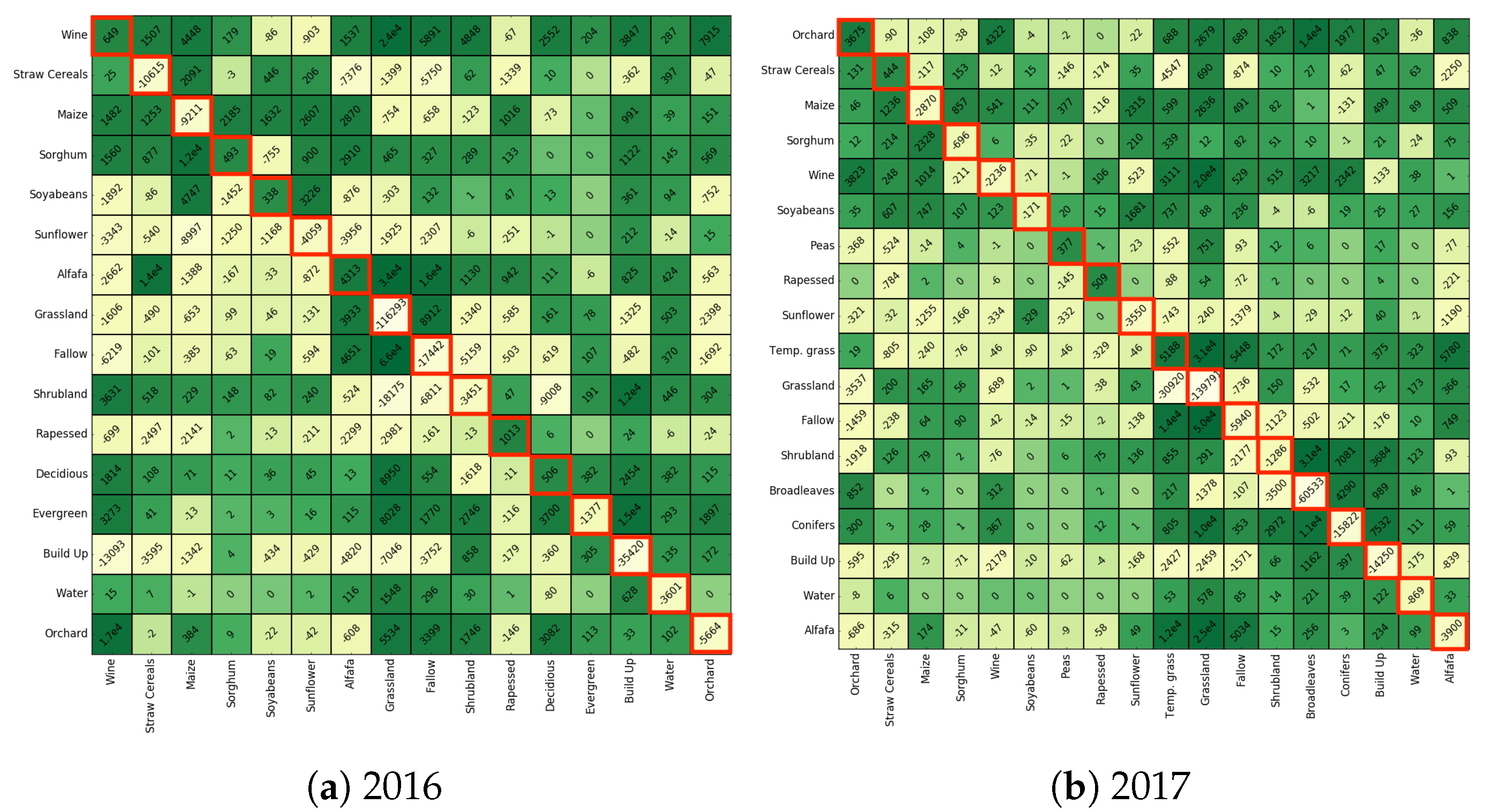

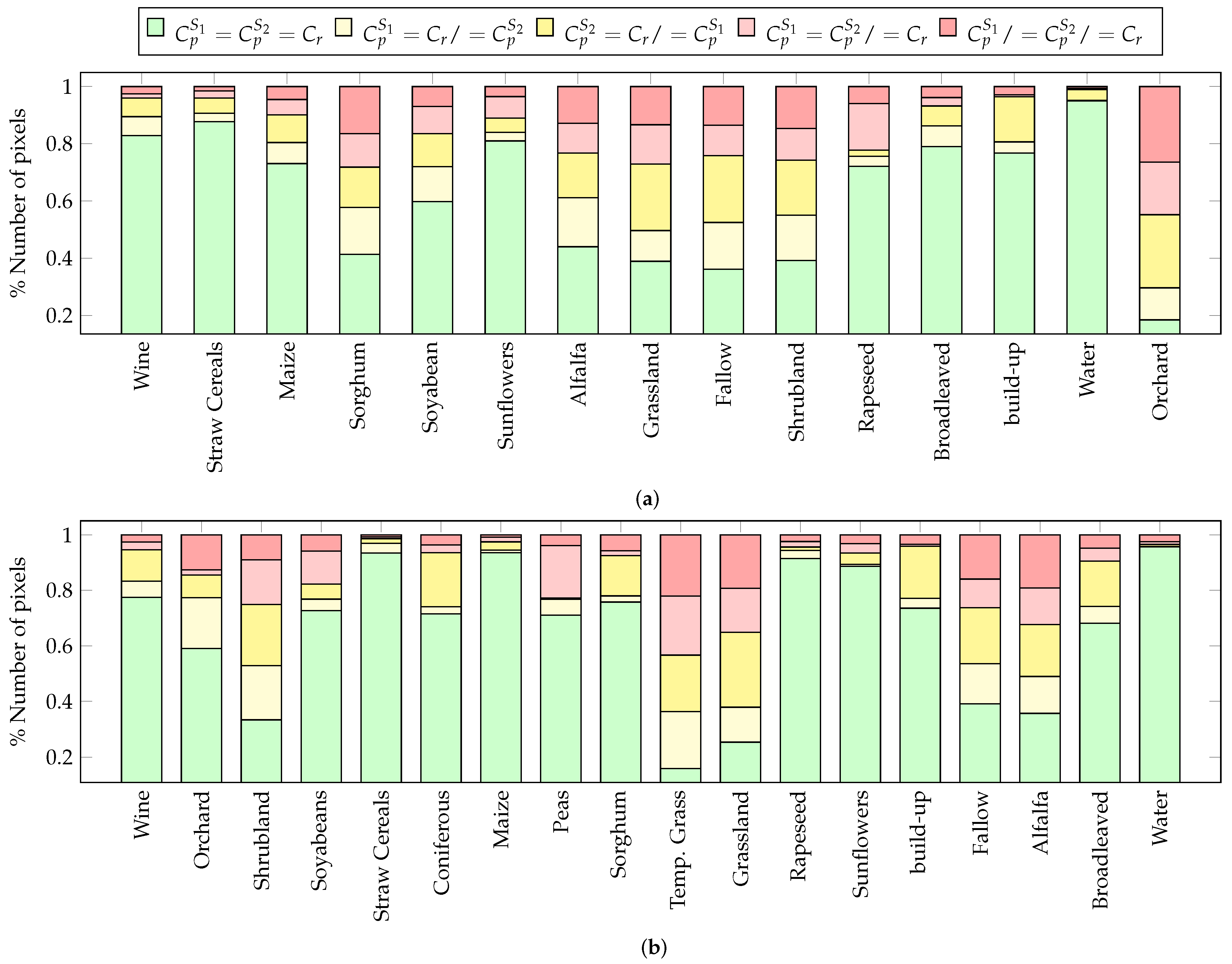

The results show that RF classifiers trained on optical and SAR data produce different confusions and predictions. Independent errors were observed through the analysis of the posterior probabilities obtained by each classifier. The complementarity has been especially noticeable for complex land cover classes such as grassland, fallows or orchards. To exploit this synergy, an S1-S2 classifier system based on a postdecision fusion strategy has been proposed and evaluated.

Postdecision fusion results have shown that combining the strengths of the classifiers leads to more accurate predictions. The obtained results have highlighted the limitations of the early fusion strategy, which is the most commonly used method for classifying multimodal time series. The early fusion scheme has learned less accurate models because of the very high-dimensional dataset resulting from stacking Sentinel’s optical and SAR images. For this fusion strategy, the empty space phenomenon and the curse of dimensionality have complicated the extraction of useful information. The low performances of early fusion have been confirmed by several experiments performed with different numbers of training samples and satellite images. The results have also shown that early fusion accuracies decrease considerably when the number of training samples is reduced. As early fusion models are trained with more image features, this strategy needs much more training data to avoid the Hughes phenomenon. Because reference data can be scarce, this confirms that early fusion is not the most appropriate strategy for large-scale crop mapping.

Among the probabilistic fusion strategies, product of experts (PoE) has achieved the best performance among all combination rules. The product rule resolved classifier disagreements by systematically removing confusions observed in the French agricultural seasons. The fusion benefit has been mainly observed in the early season, where averaged overall accuracies rose by more than 6%. This gain has confirmed that the incorporation of SAR data into the joint classifier system can compensate for the lack of optical images due to winter cloud coverage.
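As an illustration of the product rule, the sketch below multiplies the per-class posterior probabilities of two classifiers and renormalizes them. It assumes the generic form of a product of experts; the function name `product_of_experts`, the small `eps` guard against zero probabilities and the toy values are hypothetical, and the exact formulation used in the processing chain may differ.

```python
import numpy as np

def product_of_experts(p_s1, p_s2, eps=1e-12):
    """Fuse two posterior probability arrays with the product rule.

    p_s1, p_s2: arrays of shape (n_pixels, n_classes), one row per pixel,
    e.g. class probabilities from the SAR and optical classifiers.
    eps avoids an all-zero product when both experts output zeros.
    """
    p1 = np.asarray(p_s1, dtype=float)
    p2 = np.asarray(p_s2, dtype=float)
    fused = (p1 + eps) * (p2 + eps)
    # Renormalize so that each row sums to one again.
    return fused / fused.sum(axis=1, keepdims=True)

# Toy pixel where the two classifiers disagree on the most likely class.
p_s1 = np.array([[0.5, 0.4, 0.1]])  # SAR expert prefers class 0
p_s2 = np.array([[0.2, 0.7, 0.1]])  # optical expert prefers class 1
fused = product_of_experts(p_s1, p_s2)
labels = fused.argmax(axis=1)
```

In this toy case the products are 0.10, 0.28 and 0.01, so the fused decision follows the more confident expert and selects class 1. A class receives a high fused score only when neither classifier considers it unlikely.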

Despite the satisfactory performances of , more efforts could be made to increase the performances of the classifier fusion system. For instance, information about the class accuracies reached by the individual optical and radar classifiers could be incorporated in the fusion process. Specific rules could also be proposed to handle cases where both classifiers provide incorrect and very uncertain predictions. The combination of the RF probabilistic outputs by using a high-level classifier may be another possible fusion strategy to improve the results. Middle fusion strategies could also be investigated to address the high dimensionality of Sentinel’s data. In particular, dimensionality reduction techniques that extract useful features from Sentinel data are an interesting research direction for designing such intermediate fusion strategies.

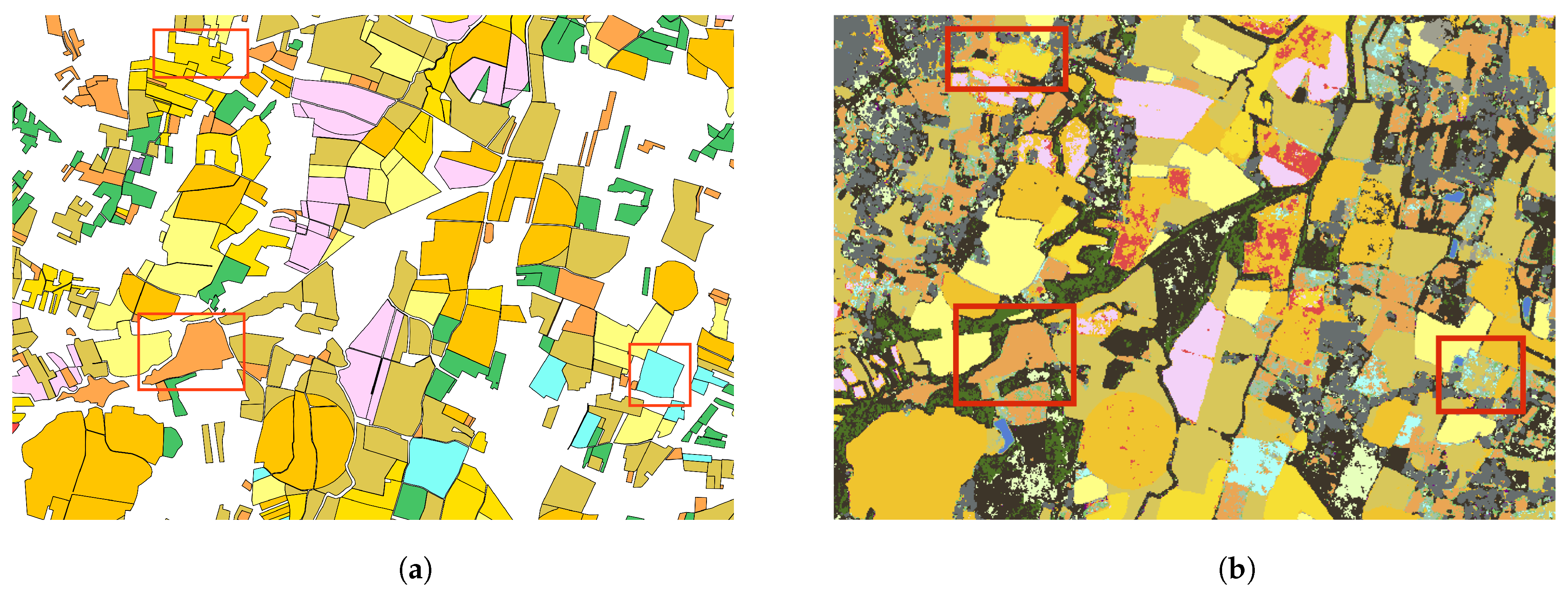

6.2. Seasonal Crop Maps

Accurate binary cropland masks and crop type maps were obtained by the proposed SenSAgri methodology. These results have been assessed over large validation data sets covering different geographical areas. The classification performances were evaluated at the early, middle and end stages of different annual agricultural seasons. The results show that classification accuracies rise throughout the year as the number of image features increases. The best results were reached at the end of the season, when crop phenological cycles were well captured by satellite data. The results confirmed that accurate crop map products can be delivered early (mid-July) during the summer crop growing season.

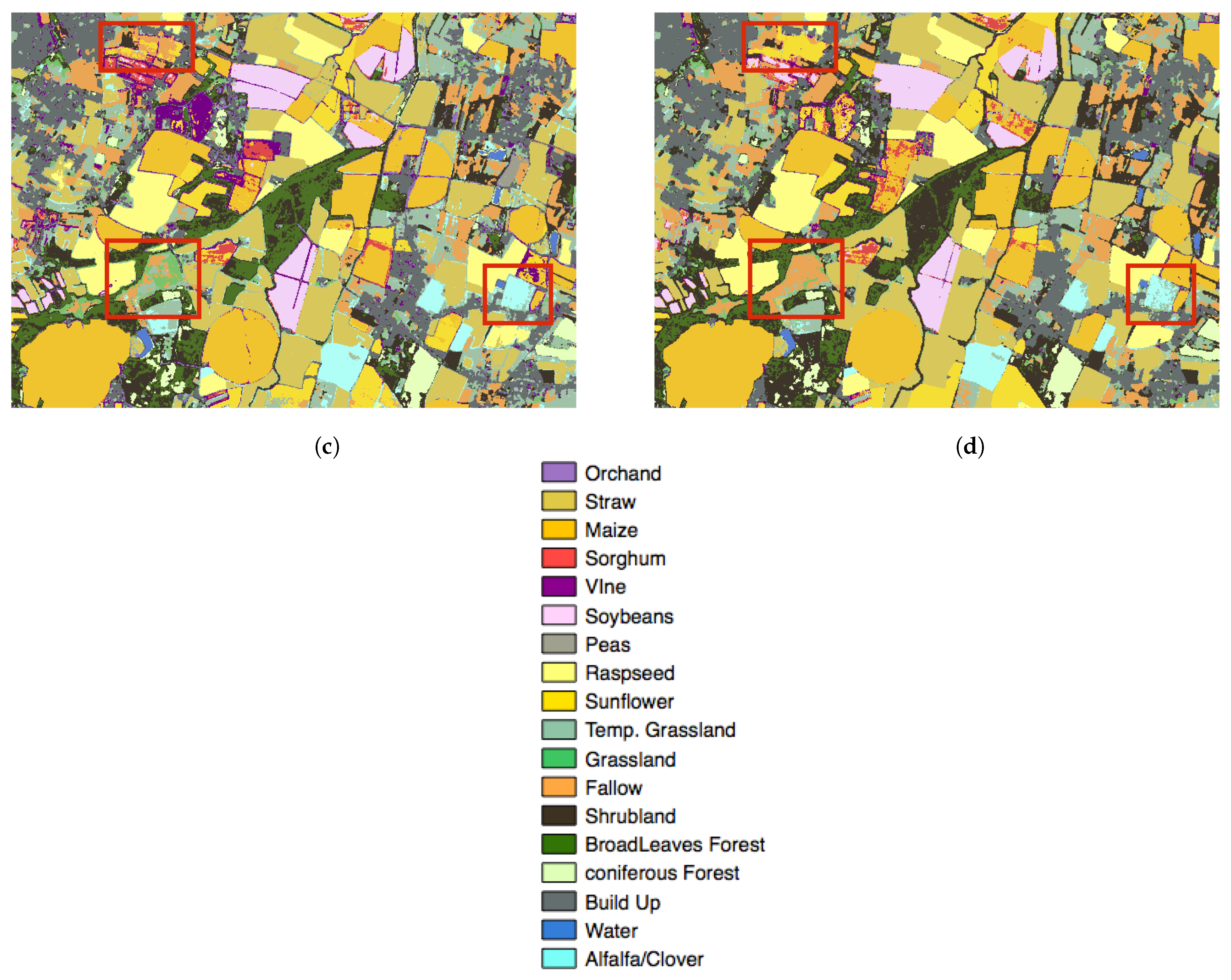

Cropland areas have been correctly identified by binary masks, whose precision and recall values were higher than 90%. The different European test sites obtained similar and satisfactory performances. Binary classification results have shown that crops were rarely confused with non-crop classes. Most of the errors were found in vegetation classes such as alfalfa, fallows or temporary grassland. These classes have not been considered here as cropland areas according to the user crop map product specification. The results also show that the confusion between crops and surrounding natural/seminatural vegetation classes remains an important classification challenge. More efforts could be made in the future to improve the classification of natural/seminatural vegetation areas. It should be noted that most of the existing crop classification studies have not considered seminatural vegetation classes in their reduced validation datasets.

The results have shown how posterior probabilities obtained by independent RF classifiers can be exploited for the binary detection of agricultural areas. For each pixel, the proposed approach allowed us to estimate the joint probability of belonging to the crop class. The obtained maps recognized cropland areas incorrectly categorized by a classical classification strategy using a complete class legend. The resulting binary cropland masks were compared with those obtained by the Sen2-Agri methodology [44], which considers cropland mapping as a binary imbalanced classification problem. Quantitative and visual results have shown that more accurate crop masks are obtained by the SenSAgri methodology. The highest accuracy improvements were achieved for the crop class, whose precision and recall measures increased by approximately 5%. In addition, the SenSAgri methodology avoided the definition of the non-crop class data set, which can be challenging given the high variability of the non-crop land cover classes found in agricultural landscapes. The quality of binary cropland maps could also be improved by optimizing the choice of the binary decision threshold. A strategy to select a threshold depending on an accuracy metric could be proposed.
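One simple way to turn full-legend posterior probabilities into a binary cropland decision is sketched below. It rests on an assumption that is not stated in this section: the crop probability of a pixel is taken as the sum of the posteriors of all crop classes, and the mask is obtained with a fixed threshold. The function name `cropland_mask`, the class ordering and the default threshold of 0.5 are hypothetical.

```python
import numpy as np

def cropland_mask(posteriors, crop_class_idx, threshold=0.5):
    """Binary cropland detection from full-legend class posteriors.

    posteriors: (n_pixels, n_classes) probabilities over the complete legend.
    crop_class_idx: column indices of the classes treated as cropland.
    Assumption: P(crop) is the sum of the crop-class posteriors.
    """
    p = np.asarray(posteriors, dtype=float)
    p_crop = p[:, crop_class_idx].sum(axis=1)
    return p_crop, p_crop >= threshold

# Hypothetical legend: columns are [wheat, maize, forest].
posteriors = np.array([
    [0.3, 0.3, 0.4],  # argmax is forest, yet the crop classes total 0.6
    [0.1, 0.1, 0.8],  # clearly non-crop
])
p_crop, mask = cropland_mask(posteriors, crop_class_idx=[0, 1])
```

The first pixel would be labeled as forest by a full-legend argmax decision but is detected as cropland once the crop-class probabilities are aggregated, which illustrates the kind of recovery described above. The `threshold` argument is the natural place for the threshold optimization mentioned as future work.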

The different experiments have confirmed the value of detecting cropland areas before classifying the types of crops. The proposed two-fold methodology has reduced the impact of land cover legend definitions on crop classification results. The use of specific classifiers trained only on crop legends has led to more accurate results. High-quality crop type maps were obtained with averaged overall accuracies higher than . Most of the classes reached satisfactory F-score values, which increased during the season. The lowest F-score values were obtained by minority classes (validation data sets were imbalanced). The different European test sites obtained similarly satisfactory results, achieving accurate crop maps at the beginning of the summer season. It must be noted that the early detection of summer crops before the irrigation period could provide essential information to anticipate irrigation water needs.

The quality of crop type maps obtained at the end of the season was also confirmed by a visual evaluation. The results showed how pixel-level classification results can be filtered by a majority vote strategy considering LPIS boundaries. Other filtering strategies could be proposed in the future by considering the classification decisions of the spatial neighborhoods.
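The parcel-level filtering mentioned above can be sketched as a majority vote over the pixels that fall inside each parcel. This is a minimal illustration under stated assumptions: parcels are given as an integer identifier per pixel (for example, rasterized LPIS polygons), the function name `parcel_majority_vote` is hypothetical, and ties are resolved in favor of the smallest label value.

```python
import numpy as np

def parcel_majority_vote(pixel_labels, parcel_ids):
    """Replace each pixel label with the most frequent label in its parcel.

    pixel_labels, parcel_ids: integer arrays of identical shape.
    Ties go to the smallest label because np.unique returns sorted
    values and argmax keeps the first maximum.
    """
    labels = np.asarray(pixel_labels)
    parcels = np.asarray(parcel_ids)
    filtered = labels.copy()
    for pid in np.unique(parcels):
        inside = parcels == pid
        values, counts = np.unique(labels[inside], return_counts=True)
        filtered[inside] = values[counts.argmax()]
    return filtered

# Two parcels of three pixels each; one isolated error in the first parcel.
pixel_labels = np.array([1, 1, 2, 3, 3, 3])
parcel_ids = np.array([10, 10, 10, 20, 20, 20])
filtered = parcel_majority_vote(pixel_labels, parcel_ids)
```

The isolated label 2 in the first parcel is replaced by the parcel majority, label 1. A neighborhood-based filter, as suggested for future work, would replace the parcel identifiers with a sliding window.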

Although accurate crop type maps have been produced by the joint use of optical and SAR data, the French test site showed that more accurate maps can be produced by increasing the number of Sentinel-2 images. Accordingly, the incorporation of complementary optical sensors such as Landsat sensors could be investigated to improve the performance of the proposed methodology.

The quality of the resulting crop map products was assessed on large validation datasets, which has rarely been performed in the crop classification literature. Despite the volume of testing data, satisfactory results were obtained with limited training data. Some experiments also confirmed that accuracies could be slightly improved by increasing the number of training samples.

Although the experiments confirmed that the SenSAgri processing chain could be deployed for in-season crop classification, an important consideration needs to be taken into account. The presented results were obtained with in situ data collected from the agricultural season that is to be classified. Consequently, the availability and quality of the in-season reference data have impacted the classification results. Reference data describing non-cropland classes can be obtained from previous years, assuming that these classes do not change in consecutive years. In contrast, obtaining crop reference samples describing the agricultural season to be classified can be challenging. According to the recent literature [68,69], one solution could be the exploitation of historical time series and reference data obtained during previous years.