Abstract

3D GIS has attracted increasing attention from academics, industries, and governments with the increase in the requirements for the interoperability and integration of different sources of spatial data. Three-dimensional road extraction based on multisource remote sensing data is still a challenging task due to road occlusion and topological complexity. This paper presents a novel framework for 3D road extraction by integrating LiDAR point clouds and high-resolution remote sensing imagery. First, a multiscale collaborative representation-based road probability estimation method was proposed to segment road surfaces from high-resolution remote sensing imagery. Then, an automatic stratification process was conducted to specify the layer values of each road segment. Additionally, a multifactor filtering strategy was proposed in consideration of the complexity of ground features and the existence of noise in LiDAR points. Lastly, a least-square-based elevation interpolation method is used for restoring the elevation information of road sections blocked by overpasses. The experimental results based on two datasets in Hong Kong Island show that the proposed method obtains competitively satisfactory results.

1. Introduction

In the last twenty years, three-dimensional (3D) city modeling technology has been gradually applied in smart city infrastructure construction and urban intelligent transportation network construction [1,2]. In this case, the road network, as the lifeblood of urban transport, is the main component of a city, and the construction of 3D virtual road network for the creation of a digital city is of great significance [3].

The methods for generating 3D road networks can be classified into three categories. One is based on manual modeling tools such as Creator and Maya. From geometric modeling of road surfaces to texture mapping, good results are achieved through manual road modeling at the expense of significant labor and time. As a result, this inefficient manual modeling severely limits the number of road models generated and their widespread use in various applications.

The other is based on two-dimensional (2D) Geographic Information Systems (GIS) data and some elevation data. Satellite images are used as textures for the roads. In this way, the generated 3D road networks are based on real data, so they are sufficiently realistic. However, it is difficult to automatically generate various intersections in 3D space.

The third is the method based on oblique photogrammetry or Light Detection and Ranging (LiDAR) point clouds. To generate a realistic scene, the data of the 3D road models can be collected by several data acquisition devices, such as airborne oblique photogrammetry or LiDAR equipment [4]. However, the method still has many difficulties in data noise reduction and texture reconstruction due to occlusion and other reasons.

In addition, all three methods cannot generate the road structure that can be used for traffic simulation well directly.

To address the above challenges, this paper uses an automated approach to generate large-scale urban 3D road networks based on satellite remote sensing images and airborne LiDAR data. Firstly, we automatically generate 2D vector data of road networks based on satellite remote sensing images. Then, the road point cloud is extracted according to the 2D road boundary, and the point cloud is filtered and segmented. Finally, we interpolate the elevation of road nodes based on the least-square method, and smooth the elevation curve of extracted roads. With satellite images and elevation data as input, a large, 3D virtual road network with a real environment and a smooth road surface can be automatically generated.

To sum up, the contributions of our work are summarized as follows:

- A road segmentation method based on multiscale collaborative representation is proposed to extract road boundary information from satellite images.

- A method of automatically creating a 3D road network with satellite remote sensing images and LiDAR point-cloud data as inputs. It is worth mentioning that our method supports automatic generation of diversified and complex 3D road intersections.

- A method to interpolate and smooth elevation data gives the 3D road network a fine and smooth road surface, which also conforms to civil engineering specifications.

2. Related Work

2.1. Studies on Road Extraction

Many studies consider road extraction an image segmentation problem, and road features are used to assist in road extraction. Das et al. extracted the road areas by combining the probabilistic support-vector machine method, the dominant singular value method, the local gradient function, and the vertical central axis transformation method [5]. Wegner et al. developed a higher-order conditional random field (CRF) formulation for road extraction based on superpixel segmentation, in which the prior is represented by long-range cliques with robust PN-Potts potentials [6]. To overcome the drawback of too many irrelevant cliques, minimum-cost paths are embedded in a higher-order CRF framework to construct an explicit prior about the shape of roads. Cheng et al. proposed to use fused, multiscale, collaborative representation and graph cuts to firstly segment the image [7]. The initial contour of the road was then obtained by filtering the road shape. Finally, the road centerline was obtained through tensor voting. Alshehhi and Marpu introduced a hierarchical graph-based image segmentation for road extraction, which consisted of a graph representation of initial segmentation and hierarchical merging and splitting of these segments based on color and shape features [8]. Despite their advantages, these methods only work well in multispectral images and detect main roads in urban areas. Thus, extracting roads from areas with dense buildings or other areas similar to road grayscale is challenging.

Deep-learning-based methods have a good effect in road segmentation, but it is difficult to train a general model to deal with different remote sensing images due to the limited number of training samples [9,10,11,12,13]. To simplify the training of deep neural networks, Zhang et al. proposed the residual U-Net, which combines the advantages of U-Net with residual blocks [14]. To extract roads of various widths, Gao et al. proposed a multifeature pyramid network (MFPN) [15]. Chen et al. proposed a reconstruction bias U-Net for road extraction from high-resolution optical remote sensing images [16]. D-LinkNet is an efficient method in comprehensive performance of road extraction, which won first place in the 2018 DeepGlobe Road Extraction Challenge [17]. Despite its advantages, the down-sampling process of the encoder module usually leads to a reduction in the boundary accuracy of the road extraction results. In addition, these methods are challenging regarding accurate extraction of roads with complex backgrounds and multiple scales.

2.2. Studies on 3D Road Reconstruction

The traditional method to generate a 3D road network is to use 3D models. Zhu et al. proposed a hierarchical lane-oriented 3D road-network model, with a unified modelling language diagram, which aims to resolve problems such as: (1) true distance measurement across hilly terrain; (2) representation of 3D structures, such as overpasses and onramps, and (3) assigning multiple routes over a single arc [18]. Garciadorado et al. created roads automatically in 3D scenes by taking the attributes of lane number, intersections, and the shape of roads as the input of the program [19]. LiDAR data can be used to obtain the elevation and intensity information of ground targets, so it is often used in 3D modeling of ground objects [20,21,22,23,24]. Dias et al. used LiDAR data to conduct 3D modeling of real-world scenes, which can truly and accurately restore the real world, but it is still difficult regarding data denoising and texture reconstruction [25]. The method based on airborne LiDAR data can accurately generate the realistic 3D road model. However, due to the huge amount of data and the error of point cloud itself, the preprocessing of point-cloud data needs to consume a certain time. At the same time, due to the existence of road occlusion, there are problems in the modeling of some complex road targets.

A more efficient way to generate 3D road networks is based on GIS data, such as satellite images and elevation models [26,27,28]. Wang et al. extracted the road central axis from remote sensing images, and then combined it with the terrain to create a 3D road network [29]. The roads in the above scene have width and texture, but they do not support the generation and display of intersections. Wang et al. proposed a new method of automatic, high fidelity, 3D road-network generation, which transformed the existing road GIS data containing only 2D road centerline information into 3D road-network model [30]. Wen et al. proposed a deep-learning framework for recovering 3D road boundaries using multisource data, including mobile laser scanning (MLS) point cloud, spatial trajectory data and remote sensing images [31]. Wang et al. presented an automatic method to generate a large-scale 3D virtual road network based on GIS data [32]. The method based on GIS data can make full use of the existing surveying and mapping results and create a 3D road model by introducing the digital elevation model (DEM), but this method is limited by the accuracy of DEM and the timeliness of GIS data information, and cannot be updated at high frequency. In addition, the 3D road model established by this method lacks a 3D topological relationship, so it is difficult to carry out more in-depth spatial analysis.

In short, the existing methods can generate 3D realistic road networks. Nevertheless, the 3D road-extraction method based on a single data source makes it difficult to accurately extract the 3D information of the road, and cannot solve the problem of mutual occlusion of elevated roads. A feasible solution is to combine the airborne LiDAR data with the satellite remote sensing images, extract the road surface by using the remote sensing image, and then complete the 3D road extraction by combining the elevation information with vectorized road surfaces.

3. Three-Dimensional Road-Extraction Method

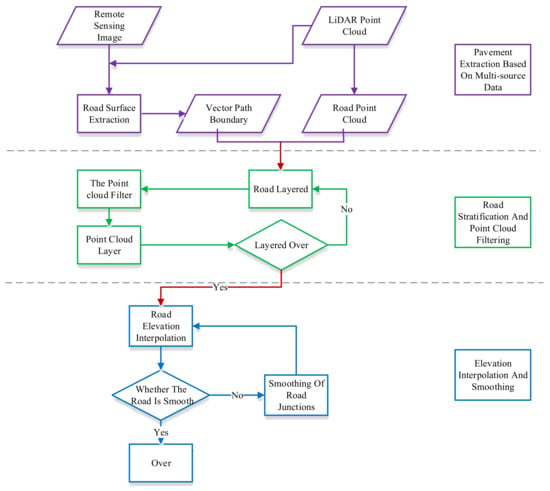

This section introduces a 3D road-extraction method integrating multisource remote sensing data, including three stages: road extraction based on multisource data, road layering and point-cloud filtering, and road elevation interpolation (Figure 1). First, the road surface was extracted from a high-resolution remote sensing image and LiDAR points in the road area were segmented from a LiDAR point cloud. The road surface data in raster form was transformed into road polygon data in vector form using ArcGIS software. As a result, each road polygon is assigned a Road ID. Then, the data processing is divided into two steps: The first step is to use the automatic road-layering algorithm based on average elevation value to sort the heights of 2D road polygons and specify the layer values of road polygons from low to high according to elevation values. The second step is to filter the road point-cloud data in accordance with different terrain factors. Finally, the height interpolation technology based on least squares is used to recover the height value of the road in the overhead shelter part, and the 3D road extraction is realized.

Figure 1.

Flow chart of the 3D road-extraction method.

3.1. Road Surface Extraction Based on Multisource Data

The performance characteristics of roads are different because of the diverse acquisition methods for high-resolution remote sensing images and airborne LiDAR data [33,34]. In general, the spectral and textural features of roads in high-resolution remote sensing images are rich, and the edge features are evident. However, the features also contain considerable noise and occlusion. Airborne LiDAR data not only contain accurate elevation information, but also include laser echo and intensity information, which can be used to provide auxiliary information in road extraction. Therefore, combining the two data sources can improve the effect and accuracy of 3D road extraction.

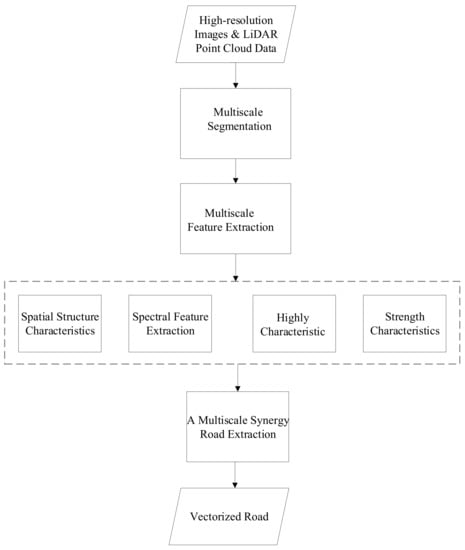

Figure 2 shows the flow chart of road surface extraction based on multisource data. First, on the basis of high-resolution remote sensing images and airborne LiDAR data, multiscale segmentation and feature extraction are conducted. Then, a multiscale synergy road-extraction method is used for road extraction, and the road surface from the extracted raster data is transformed into planar vector data as a 2D vector road map for the next road extraction and 3D point-cloud road extraction. The detailed description of the multiscale synergy road-extraction method is as follows.

Figure 2.

Flow chart of road surface extraction based on multisource data.

The road probability estimation based on multiscale collaborative representation considers the dataset with n training samples, where , is the feature dimension. , where is the number of categories, is set as the classification label. For the road extraction task, . Let and be the numbers of training samples for road and nonroad classes, respectively.

For a test sample , its corresponding representation coefficient is , which can be calculated through all the training samples as

where is the deviation Tikhonov matrix between test samples and all training samples, and is a global regularization parameter that balances the loss and regularization terms. denotes the best representation vector for with elements. Specifically, the design form of is as follows:

Given that is a diagonal matrix, the diagonal value of measures the difference between a certain training sample and the test sample. Thus, intuitively, if the test sample belongs to the road class, the difference between the test sample and the road training sample is small. On the contrary, the difference between the test and road training samples is great. Given a large regularization parameter , to achieve the minimum goal in Formula (1), the road class test samples should be more likely to be represented by the road class samples rather than the nonroad class samples; consequently, tends to be sparse. The representation coefficient can be estimated in a closed-form solution as

Then, the training sample is divided into road sample and nonroad sample , and the coefficient vector is correspondingly divided into and . The residual between approximation and test sample can be defined as

In this method, it is necessary to calculate the probability that each pixel belongs to the road class rather than the category label of each pixel. Therefore, the probability of the road class is defined as

Hence, the probability of being in a nonroad class is .

After the road probability of each road object is obtained on a certain scale, all pixels in the same road object are given a probability value equal to that road object. Then, all the road probability graphs of different scales are fused into one whole. Finally, the probability that each pixel belongs to the road can be defined as

Other fusion strategies, such as average, median, and minimum, have also been attempted in this subsection, with results below the maximum rule. According to the results of many experiments, we find that the maximum road-probability value of each scale usually indicates that the road features are obvious. Therefore, the maximum fusion rule is used.

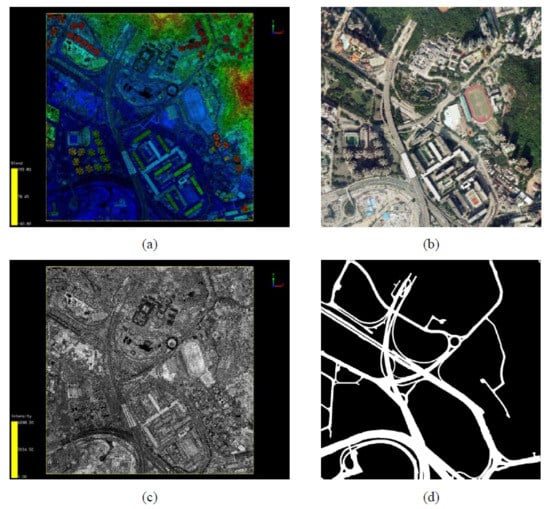

Figure 3 shows an example of road surface extraction based on multisource remote sensing data.

Figure 3.

Example of road surface extraction based on multisource data: (a) Point-cloud elevation information; (b) High-resolution image; (c) Point-cloud intensity information; (d) Road surface extraction result.

3.2. Road Stratification and Point-Cloud Filtering

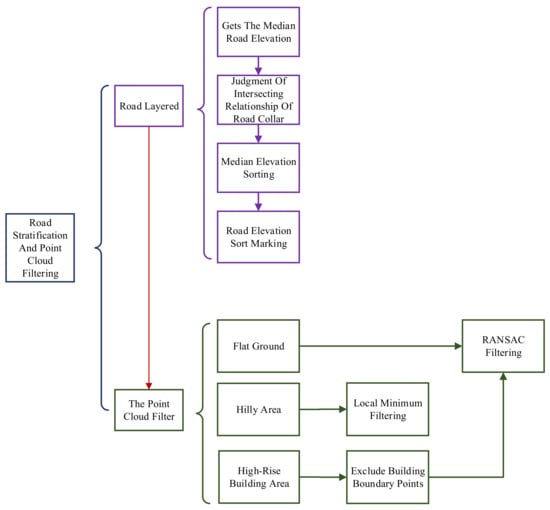

This subsection includes automatic stratification of roads in the viaduct area and point-cloud filtering in accordance with different topographic factors. First, an automatic road-layering algorithm based on average elevation value is proposed to sort the heights of 2D road polygons and specify the layer values of road polygons. Second, a multifactor point-cloud filtering method is introduced to filter the road point-cloud data. The technical flowchart is shown in Figure 4.

Figure 4.

Flowchart of the road stratification and point-cloud filtering method.

3.2.1. Road Automatic Stratification

Owing to urban overpass areas, the existence of the viaduct of different road crossings, and the overlapping phenomenon (Figure 5), different sections of the viaduct cross one another, and the viaduct cross is complex. Thus, to improve the accuracy of 3D road extraction and reduce the difficulty of a point cloud after filtering, roads should be graded in accordance with elevation. The purpose of this process is to mark the level of each road polygon in a property sheet with the “level” attribute value (a higher-level value indicates a higher elevation level of the road). The stratification of roads is based on two rules. The first rule is road height, which is reflected by the median elevation of LiDAR data points within the road polygon. The second rule is the type of road. The general assumption is that the level of a road on the ground should be 0, and the level of overpasses and viaducts should not be less than 2. In the actual algorithm design, the two rules should be considered to determine the level of each road polygon, and its level value should be recorded in the “level” attribute field of the polygon.

Figure 5.

Schematic of road crossing and overlap: (a) Google Earth 3D scene view; (b) Remote sensing image view.

The road automatic stratification algorithm proposed in this sub-subsection sorts the elevation of roads by counting the median elevation of point clouds falling into the road polygon, and then by obtaining the elevation levels of different roads in the target region. Table 1 presents the specific steps of this algorithm.

Table 1.

Road automatic stratification algorithm steps.

3.2.2. Point-Cloud Filtering

Road point-cloud filtering is an essential data-preprocessing process to improve the accuracy of road elevation interpolation. For a large range of road area, in accordance with the topography, dense buildings, and other special circumstances, different filtering strategies should be selected in accordance with various circumstances. This sub-subsection is divided into three cases for filtering (Figure 4), where “plain area” refers to areas with low altitude; “Mountain” means an area with high altitude and large ups and downs; “building cluster” is an area of high-rise buildings, where buildings and roads are usually close and often adjacent to each other (with common edges) on a 2D map.

In this sub-subsection, corresponding filtering methods are designed in accordance with the three cases.

- (a)

- Flat Ground

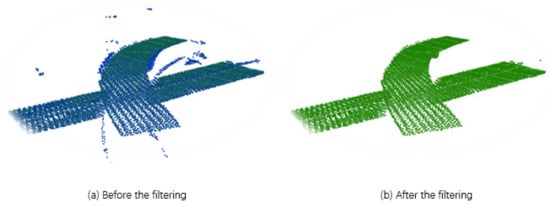

For roads in areas with less undulating terrain, the random sample consensus (RANSAC) [35] algorithm is used to filter the LiDAR data points in the road polygon. RANSAC is a robust model-fitting algorithm which is suitable for many abnormal data points. The effect of the filtering algorithm is shown in Figure 6. The specific steps of the RANSAC-based road-filtering algorithm are shown in Table 2.

Figure 6.

Example of a RANSAC-based filtering algorithm.

Table 2.

Steps of the RANSAC-based filtering algorithm.

- (b)

- Hilly Area

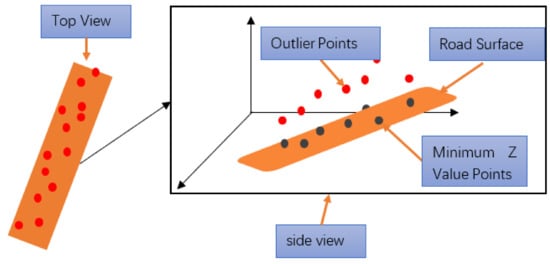

A local minimum filtering (LMF) algorithm based on the Delaunay triangulation is proposed for roads in mountainous or hilly areas with dense vegetation and large terrain undulations. The schematic of the algorithm is shown in Figure 7. Using the LMF algorithm to filter road point clouds can eliminate road points with abnormal elevation in mountainous or hilly areas. The LMF algorithm is used to select the correct mountain waypoints, and the number of selected waypoints is obtained in accordance with the estimation of the minimum number of points required by the elevation interpolation algorithm. If the number of vertices of the road polygon is n, at least approximately n/2 waypoints should be selected. The execution steps of the LMF algorithm are shown in Table 3.

Figure 7.

LMF algorithm.

Table 3.

Procedures of the LMF algorithm.

- (c)

- High-Rise Building Area

For the building aggregation area, given that a covered or adjacent relationship may exist between a road and a building, the point cloud is preprocessed first to avoid the influence on the following elevation interpolation. The “high-rise building areas” were collected from open-source GIS data as supplementary data. Then, the buffer zone on the building surface is established through the buffer method in spatial analysis, and the points falling into the building or close to the boundary of the building are removed. Then, road point-cloud filtering is performed using the RANSAC filtering method.

3.3. Elevation Interpolation and Smoothing

This subsection covers road node elevation interpolation and road connection smoothing.

3.3.1. Road Node Elevation Interpolation

The filtered point-cloud dataset P is used to construct a buffer for each node on the road surface. The median value of the points that fall into the buffer and have the same road layer as the current road node is used as the initial elevation value of the current road node. The median value is saved. If the number of qualified elevation points found by the current node is 0, then the elevation value of the current node is marked as 0.

When a section of the road is processed, the elevation value of the node whose elevation is not 0 is interpolated and saved through an interpolation method based on least-squares fitting. The specific solving process is as follows:

A fitting plane is constructed. Let the equation of the plane be

where and . are two slope parameters, and is a distance parameter.

The equation can be rewritten as a system of linear equations as follows:

In Equation (8), contains the observed value (z value of the laser point), is the vector of three unknown plane parameters, and matrix A contains information about the configuration of the laser point, in which each row of matrix A contains the horizontal position vector of a single laser point (, , 1). Hence,

To solve these equations in the least-squares adjustment, the weights of the observed values are given, and the plane parameters are estimated using the following formula:

where is the weight matrix of the observed value; if the weight is equal, then .

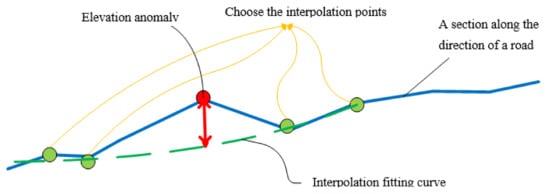

3.3.2. Road Smoothing

The road-smoothing procedure includes handling elevation anomalies in a road, as well as smooth-transition and elevation-anomaly processing at the junction among roads. The path-smoothing algorithm calculates in accordance with the relevant road design standard between adjacent nodes of a road slope, finds road-height anomaly points, and uses the height anomaly points as a known point. From the method of least-squares curve-fitting of height anomaly points, the final path equation is obtained through polynomial fitting calculation of the abnormal points’ new elevation values. Thus, smooth processing of the road is realized. The schematic of the road-smoothing algorithm is shown in Figure 8.

Figure 8.

Road-smoothing diagram.

- Step 1: Elevation anomaly detection of road nodes is conducted in accordance with relevant road standards (such as slope information).

- Step 2: The detected elevation anomalies are marked.

- Step 3: A least-squares curve-fitting or interpolation method is used to fit the marked elevation anomalies, and new elevation values are inserted in accordance with the fitting results.

4. Experiment and Analysis

4.1. Experimental Data

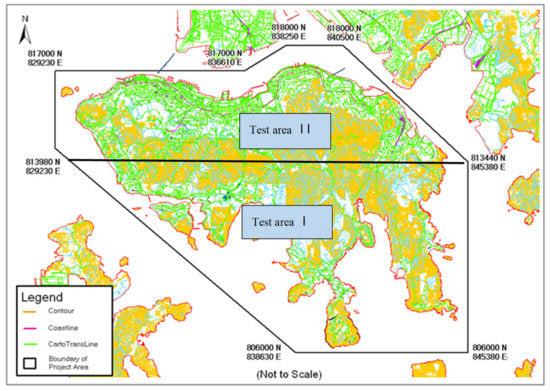

The entire Hong Kong Island is regarded as the research area (Figure 9). Two-dimensional road polygon data are extracted using the high-resolution remote sensing image data and airborne LiDAR data of Hong Kong Island, and the extraction results are transformed into 2D vector data. The 2D road polygon dataset includes two types of features: road polygons and accessory road polygons. The number of road polygon elements in the study area is 11,500, and the overall road length is 770 km. Its horizontal position reference system is the Hong Kong 1980 grid reference system.

Figure 9.

Boundary of the research area. Test area I is in the south of Hong Kong Island, and Test area II is in the north of Hong Kong Island.

Airborne LiDAR data were acquired in 2010 by using an Optech Gemini ALTM LiDAR sensor which was equipped with a fixed-wing UAV (unmanned aerial vehicle). The horizontal position reference system is the Hong Kong 1980 grid reference system, and the vertical position reference system is the Hong Kong elevation reference system. The maximum point spacing of the LiDAR data is 0.5 m, and the vertical accuracy and horizontal accuracy of the LiDAR data are 0.1 and 0.3 m, respectively, with a confidence interval of 95%.

4.2. Road Surface Extraction and Result Analysis

To verify the performance of the proposed road surface-extraction method, it is compared with two related methods, including Miao et al.’s method [36] and Maboudi et al.’s method [37]. The selection is based on the fact that they all use road shape features, but the definition of road shape features emphasized by different methods is different. The parameters were set as follows: 50 positive samples, and 50 negative samples were randomly selected in the multiscale collaborative representation algorithm. Three different object numbers were set to 2000, 3000, and 4000.

The first remote sensing image used in the experiment is shown in Figure 10a, with a spatial size of 800 × 1024 pixels and a spatial resolution of 1m/pixel. Captured by the IKONOS satellite, the image includes different types of noise, such as vehicle occlusion, curving roads and building shadows.

Figure 10.

Images used in the road surface extraction experiment: (a) IKONOS satellite image; (b) WorldView satellite image.

The second image used in the experiment was collected by the WorldView satellite, as shown in Figure 10b. The image consists of three bands (R, G and B). Its spatial resolution is 0.7 m/pixel, and its spatial size is 731 × 1507 pixels. The images include roads, buildings, vegetation, and other ground objects.

The accuracy evaluation indicators used here were (1) completeness; (2) correctness; and (3) quality [38]. To present quantitative comparisons, road reference data were generated by a manual drawing method.

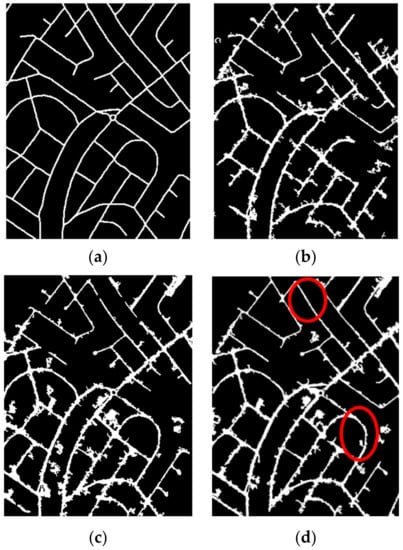

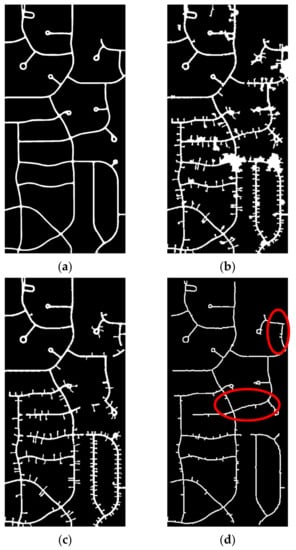

Figure 11 shows the extraction results of different methods, and Table 4 shows the quantitative evaluation results of different methods. It can be seen from the figure that there were many false positives when using Miao’s method, and many nonroad elements were identified as roads because the spectral characteristics of the road were easily confused with the surrounding objects, while the proposed method is more complete because the shape feature reflects the spatial structure information of the road, therefore, there were fewer false positives for buildings or vegetation adjacent to the road.

Figure 11.

Comparisons of different methods using IKONOS satellite images: (a) Reference data; (b) extraction results by Miao’s method; (c) extraction results by Maboudi’s method; (d) extraction results by the proposed method. The area in the red circle shows that our proposed method can extract a more complete road.

Table 4.

Quantitative evaluation results from experiment 1.

Additionally, both Miao’s method and Maboudi’s method had omission errors when extracting curved roads because the road shape features used in the two methods cannot accurately depict curved roads. There are advantages in this aspect due to the spatial extension of the proposed shape feature, which still provided accurate road features in the case of road spectral feature failure. Additionally, Maboudi’s method uses spectral and shape features, so the false positives in the road extraction results were less than Miao’s method, but Maboudi’s method had more missing errors than the proposed method, which indicates that the proposed method considers the spectral features and spatial shape features of the road and is more advantageous than the general road-shape features in the feature recognition of curved roads.

Comparing the results of Miao’s method and Maboudi’s method in Figure 11, the proposed method based on multiscale segmentation obtained better results than the other two methods, which is due to the large difference in the width of the road in the image. Multiscale collaborative representation using different segmentation scales maximized the true probability of road expression and reduced the uncertainty.

Figure 12 shows that the road results extracted by Miao’s method and Maboudi’s method had many false positives, and many buildings connected with the road and paths connecting the main roads and buildings were extracted. The proposed method achieved good results, but there were also some road breaks due to the partial blockage by vegetation. The quantitative statistics in Table 5 also reflect conclusions consistent with the visual analysis. The proposed method achieved a good balance between integrity and correctness, and the extracted road had higher quality.

Figure 12.

Comparisons of different methods using WorldView satellite image: (a) Reference data; (b) extraction results by Miao’s method; (c) extraction results by Maboudi’s method; (d) extraction results by the proposed method. The area in the red circle shows that our proposed method can extract a more complete road.

Table 5.

Quantitative evaluation results from experiment 2.

Compared with Maboudi’s method using only one segmentation scale, the proposed method uses multiple segmentation scales and extracted more roads. Additionally, the proposed method uses the multiscale collaborative representation to obtain the probability that each pixel belongs to the road on three different scales. Additionally, when only one segmentation scale is used, the characteristics of different road widths in high-resolution remote sensing images cannot be considered, so the accuracy of the road-segmentation results was not as good as that at of multiple scales. The comparison of the results shows that the method using multiscale segmentation obtained a more accurate result than the single-scale segmentation method.

Compared with Miao’s method, although it also uses multiple segmentation scales and combines the spatial features of the segmented object with the spectral features, the shape feature of the segmented object used in Miao’s method is limited to a single segmented object. The ability to express the spatial form of the road is not enough. Specifically, for the extraction of curved roads, the shape features used in Miao’s method cannot be accurately described due to their spatial morphological characteristics, resulting in many curved roads being omitted. The proposed method enhanced effective road features and suppressed ineffective features, thus achieving the purpose of deep feature extraction.

4.3. Three-Dimensional Road Extraction and Result Analysis

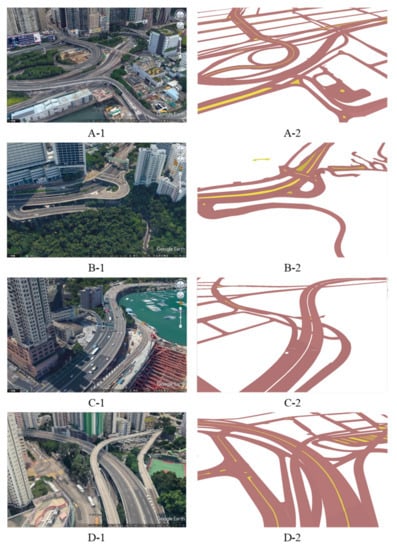

The 3D road-extraction method proposed in this paper is utilized to synthesize high-resolution remote sensing image data, airborne LiDAR data, and other auxiliary data of Hong Kong Island. The resulting 3D road polygon data include the comprehensive height information of all specified datasets. Figure 13 shows the bird’s eye view of all the roads in Hong Kong Island. Figure 14 shows the local 3D road map and sample 3D road extraction results of the four regions marked in Figure 13.

Figure 13.

Aerial view of Hong Kong Island and distribution of experimental samples. A, B, C, and D in the figure indicate the four test areas, respectively.

Figure 14.

3D local road display maps. A, B, C, and D represent the four test areas shown in Figure 13, where (A-1,B-1,C-1,D-1) refer to real 3D images from Google Earth, (A-2,B-2,C-2,D-2) show 3D views in a geographic information system.

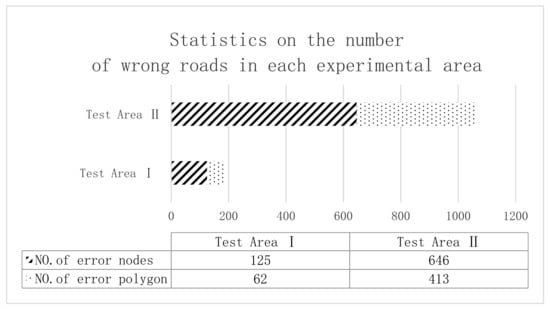

Quality evaluation factors, such as integrity, connectivity, vertical accuracy, and undulation of 3D roads, are used to check the quality of 3D road-extraction results. Most of the quality evaluation processes with quantitative results are conducted automatically by using computer programs, and the rest of the results that could not be counted quantitatively are generated manually. Table 6 shows the quality evaluation statistical results of the extracted 3D road data, in which the total number of wrong road nodes that do not meet the quality evaluation indices is counted. Figure 15 shows the normalized error-rate statistics of each quality indicator.

Table 6.

Statistical results of quality evaluation.

Figure 15.

Error statistics for the two experimental areas.

According to the statistics in Table 6, the error-prone quality elements in the generated 3D road data are errors in vertical accuracy and road undulation. There are many reasons for these two errors, among which are the accuracy of the airborne LiDAR point-cloud data themselves, the errors caused by the road elevation interpolation algorithm and the point-cloud filtering algorithm are the main factors.

Completeness errors in 3D road extraction results are caused by the elevation interpolation stage. Given that the area of some road polygons is small, or that some roads do not contain adequate LiDAR points, elevation interpolation of roads cannot be conducted in the elevation interpolation stage. As reflected in the final 3D road-extraction results, no elevation value exists for the nodes of the road polygon.

The connectivity error rates of both test areas are low. Connectivity errors are caused by the road interpolation process, in which each road polygon should be traversed and interpolated separately. Therefore, different LiDAR points can be used to interpolate the elevation values of common nodes at the junction of two connecting roads, resulting in inconsistent elevation values at the common points. The connectivity error rate in each test area is low because automatic checks by using computer algorithms lead to false detection of the error. Given that the road-connectivity detection algorithm will erroneously report some roads that are not actually connected as connectivity errors, fewer roads with connectivity errors exist after manual elimination.

A road with an elevation anomaly problem refers to a road polygon whose elevation root-mean-square error (RMSE) is greater than 0.4 m. The vertical accuracy of the road is calculated using the manually collected height checkpoints with higher accuracy within the road polygon. Road elevation anomalies in some areas are caused by the LiDAR point-cloud filtering algorithm. Although different filtering algorithms are applied to meet various road conditions, the automatic filtering method still causes excessive LiDAR points of some roads to be filtered out, resulting in insufficient LiDAR points in some roads. In addition, LiDAR points are lacking in some extremely small road polygons because a road polygon itself contains few LiDAR points.

Figure 15 depicts that more errors are detected in test area II than in test area I. Test area II has 8386 road polygons, whereas test area I only has 2916 road polygons. The number of road polygons in test area II is 3 times that in test area I. From the perspective of road complexity, test area II has a more complex road structure than test area I because more viaducts exist. These factors make the 3D road-extraction process in test area II more difficult and error prone.

5. Discussion

In this section, we present our analysis and discussion of the parameter selection and road smoothness of the two experiments. The details are provided in the following subsections.

5.1. Parameter Analysis

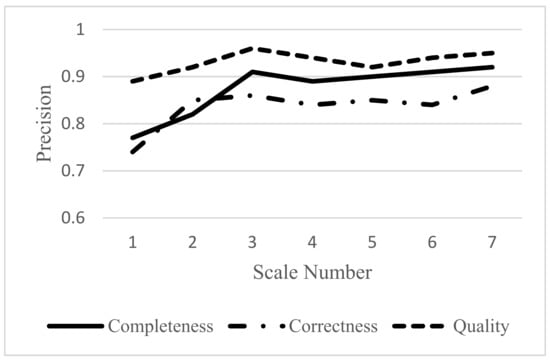

Precise road segmentation from high-resolution remote sensing images is still a challenging problem due to the complex background and multiscale roads in these images. The multiscale collaborative representation method has an important influence on the calculation of the road probability and the effect of the final road surface extraction. To test the influence of the combination of the segmentation scales on the road extraction accuracy in the proposed multiscale collaborative representation method, the first multiscale segmentation of remote sensing images used seven segmentation scales (from 1000 to 7000 superpixels, each scale was increased by 1000), and then the road extraction accuracy under different segmentation scale combinations was compared. According to the permutation formula, a total of 127 scale combinations from one scale to seven scales were calculated. First, the accuracy of the roads extracted by each scale combination method was calculated, and then the road extraction precisions of one to seven scale combinations were averaged. The value was used as the road-extraction accuracy under the scale combination mode. The test results are shown in Figure 16. Quantitative evaluation of the impact of road extraction accuracy (completeness, correctness, and quality) from different combinations of scales was performed.

Figure 16.

Relationship between scale number and extraction precision.

According to the accuracy evaluation results shown in Figure 16, it can be found that the method of road-probability estimation using multiscale collaborative representation increased with the number of scales, and the values of the three indicators of integrity, accuracy and quality of road-extraction results were available. As the number of scales continued to increase, the quality indicators of road extraction entered a stationary period, and the extraction quality reached the highest value when using three division scales. Subsequently, although the number of division scales increased, there was no significant impact on the improvement of extraction accuracy. Therefore, the experiments in this section used three segmentation scales for road-extraction experiments in road-probability estimation based on multiscale collaborative representation.

5.2. Road Smoothness Analysis

In this section, we present a discussion of the undulation errors of the proposed approach. The fluctuation error is caused by the z value of adjacent points on the road fluctuating along the road direction. The slope of roads in the study area does not exceed 0.15. Nevertheless, in the actual data, at the junction of two sections of a road, a case in which common nodes belong to different road entities may occur, which makes detecting the ups and downs between adjacent roads difficult. Owing to the edge of the interpolation algorithm used in elevation interpolation and the error in the filtering algorithm, the road undulation problem is caused by the interpolation algorithm and the LiDAR filtering algorithm. The noise points contained in the LiDAR point cloud and the point errors of the LiDAR data themselves can also affect the interpolation results, as well as the true elevation value of roads.

Most of the undulating roads are found in hilly areas, and the undulating road nodes are usually located in the corner region of the road polygon. For the common vertices of two adjacent path polygons, the errors that are excessively unfluctuated by manual correction can be fixed. The errors in the undulation of other vertices can be automatically corrected using the road-smoothing algorithm proposed in Section 3.3.2.

6. Conclusions

In this paper, we addressed the issue of 3D road extraction using LiDAR point clouds and high-resolution remote sensing imagery. As the focus of our work, we first proposed a multiscale road-segmentation method based on collaborative representation, to extract road boundary information from satellite images. An automated stratification process was used to determine the height level of each road segment, and a multifactor filtering strategy was proposed for LiDAR point denoising in accordance with complex ground features. Based on the current situation with roads, we introduced a multistrategy coordinated road elevation interpolation algorithm. An adaptive road-smoothing algorithm was proposed to reduce the potential fluctuations of generated 3D road segments. Satisfactory results were presented in four test areas on Hong Kong Island.

The results obtained in this paper show that the combination of high-resolution remote sensing images and airborne LiDAR data for geospatial data modeling may take 3D road modeling a step forward, that is, towards the actual exploitation and valorization of high-definition maps serving autonomous driving. It is also important to note that the available airborne LiDAR data do not provide the complete information needed to generate a 3D road model. For example, without elevation (height) information, it is difficult to determine the relative vertical locations of different ramps on a highway interchange. In addition, some existing roads do not meet the design standards (especially some old roads in urban areas), creating potential difficulties for road reconstruction.

Nonetheless, the proposed method takes advantage of multisource remote sensing data, provides an innovative, automated approach for 3D road-network modeling, significantly reduces labor costs, and benefits many road-based applications that require a high level of precision and efficiency. In future studies, the capabilities of the proposed method will be further improved, such as more efficient and accurate simulation of complex overpasses with three or more levels, and pavement markings at complex urban intersections. The proposed method can also be used in several areas (e.g., 3D city modelling, smart cities, smart transportation, etc.). Lane-level road identification and modeling based on multisource remote sensing data is a worthy research direction.

Author Contributions

Conceptualization, L.G. and W.S.; methodology, L.G and P.S.; software, S.S. and Y.L.; validation, Y.L. and F.G.; formal analysis, F.W.; investigation, P.S.; data curation, J.Z.; writing—original draft preparation, L.G.; writing—review and editing, J.Z.; visualization, S.S.; supervision, W.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (42101469 and 41901341), in part by the Open Fund of Key Laboratory of National Geographical Census and Monitoring, Ministry of Natural Resources (2020NGCM08), in part by the Guangdong Basic and Applied Basic Research Foundation (2020A1515111158), in part by the Natural Science Basic Research Program of Shaanxi Province (2021JQ-099).

Acknowledgments

We are grateful to the Survey and Mapping Office of Lands Department of Hong Kong that provided the data for this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Toschi, I.; Ramos, M.M.; Nocerino, E.; Menna, F.; Remondino, F.; Moe, K.; Poli, D.; Legat, K.; Fassi, F. Oblique photogrammetry supporting 3D urban reconstruction of complex scenarios. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-1/W1, 519–526. [Google Scholar] [CrossRef] [Green Version]

- Pepe, M.; Costantino, D.; Alfio, V.S.; Vozza, G.; Cartellino, E. A Novel Method Based on Deep Learning, GIS and Geomatics Software for Building a 3D City Model from VHR Satellite Stereo Imagery. ISPRS Int. J. Geo-Inf. 2021, 10, 697. [Google Scholar] [CrossRef]

- Toschi, I.; Nocerino, E.; Remondino, F.; Revolti, A.; Soria, G.; Piffer, S. Geospatial data processing for 3D city model generation, management and visualization. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-1/W1, 527–534. [Google Scholar] [CrossRef] [Green Version]

- Remondino, F.; Toschi, I.; Gerke, M.; Nex, F.; Holland, D.; McGill, A.; Lopez, J.T.; Magarinos, A. Oblique aerial imagery for nma-some best practices. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B4, 639–645. [Google Scholar] [CrossRef] [Green Version]

- Das, S.; Mirnalinee, T.T.; Varghese, K. Use of Salient Features for the Design of a Multistage Framework to Extract Roads From High-Resolution Multispectral Satellite Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3906–3931. [Google Scholar] [CrossRef]

- Wegner, J.D.; Montoya-Zegarra, J.A.; Schindler, K. A Higher-Order CRF Model for Road Network Extraction. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2013; pp. 1698–1705. [Google Scholar]

- Cheng, G.; Zhu, F.; Xiang, S.; Wang, Y.; Pan, C. Accurate urban road centerline extraction from VHR imagery via multiscale segmentation and tensor voting. Neurocomputing 2016, 205, 407–420. [Google Scholar] [CrossRef] [Green Version]

- Alshehhi, R.; Marpu, P.R. Hierarchical graph-based segmentation for extracting road networks from high-resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2017, 126, 245–260. [Google Scholar] [CrossRef]

- Zhou, M.; Sui, H.; Chen, S.; Wang, J.; Chen, X. BT-RoadNet: A Boundary and Topologically-Aware Neural Network for Road Extraction from High-Resolution Remote Sensing Imagery. ISPRS J. Photogramm. Remote Sens. 2020, 168, 288–306. [Google Scholar] [CrossRef]

- Shao, Z.; Zhou, Z.; Huang, X.; Zhang, Y. MRENet: Simultaneous Extraction of Road Surface and Road Centerline in Complex Urban Scenes from Very High-Resolution Images. Remote Sens. 2021, 13, 239. [Google Scholar] [CrossRef]

- Liu, Y.; Yao, J.; Lu, X.; Xia, M.; Wang, X.; Liu, Y. RoadNet: Learning to Comprehensively Analyze Road Networks in Complex Urban Scenes From High-Resolution Remotely Sensed Images. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2043–2056. [Google Scholar] [CrossRef]

- Cheng, G.; Wang, Y.; Xu, S.; Wang, H.; Xiang, S.; Pan, C. Automatic Road Detection and Centerline Extraction via Cascaded End-to-End Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3322–3337. [Google Scholar] [CrossRef]

- Lu, X.; Zhong, Y.; Zheng, Z.; Liu, Y.; Zhao, J.; Ma, A.; Yang, J. Multi-Scale and Multi-Task Deep Learning Framework for Automatic Road Extraction. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9362–9377. [Google Scholar] [CrossRef]

- Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753. [Google Scholar] [CrossRef] [Green Version]

- Gao, X.; Sun, X.; Zhang, Y.; Yan, M.; Xu, G.; Sun, H.; Jiao, J.; Fu, K. An End-to-End Neural Network for Road Extraction From Remote Sensing Imagery by Multiple Feature Pyramid Network. IEEE Access 2018, 6, 39401–39414. [Google Scholar] [CrossRef]

- Chen, Z.; Wang, C.; Li, J.; Xie, N.; Han, Y.; Du, J. Reconstruction Bias U-Net for Road Extraction From Optical Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2284–2294. [Google Scholar] [CrossRef]

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the CVPR Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 182–186. [Google Scholar]

- Zhu, Q.; Li, Y. Hierarchical lane-oriented 3D road-network model. Int. J. Geogr. Inf. Sci. 2008, 22, 479–505. [Google Scholar] [CrossRef]

- Garcia-Dorado, I.; Aliaga, D.G.; Bhalachandran, S.; Schmid, P.; Niyogi, D. Fast Weather Simulation for Inverse Procedural Design of 3D Urban Models. ACM Trans. Graph. 2017, 36, 1–19. [Google Scholar] [CrossRef]

- Morsy, S.; Shaker, A.; El-Rabbany, A. Multispectral LiDAR Data for Land Cover Classification of Urban Areas. Sensors 2017, 17, 958. [Google Scholar] [CrossRef] [Green Version]

- Tejenaki, S.A.K.; Ebadi, H.; Mohammadzadeh, A. A new hierarchical method for automatic road centerline extraction in urban areas using LIDAR data. Adv. Space Res. 2019, 64, 1792–1806. [Google Scholar] [CrossRef]

- Chen, Y.; Cheng, L.; Li, M.; Wang, J.; Tong, L.; Yang, K. Multiscale Grid Method for Detection and Reconstruction of Building Roofs from Airborne LiDAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4081–4094. [Google Scholar] [CrossRef]

- Rottensteiner, F.; Sohn, G.; Gerke, M.; Wegner, J.D.; Breitkopf, U.; Jung, J. Results of the ISPRS benchmark on urban object detection and 3D building reconstruction. ISPRS J. Photogramm. Remote Sens. 2014, 93, 256–271. [Google Scholar] [CrossRef]

- Li, H.T.; Todd, Z.; Bielski, N.; Carroll, F. 3D lidar point-cloud projection operator and transfer machine learning for effective road surface features detection and segmentation. Vis. Comput. 2021. [Google Scholar] [CrossRef]

- Dias, P.; Matos, M.; Santos, V. 3D Reconstruction of Real World Scenes Using a Low-Cost 3D Range Scanner. Comput. Aided Civ. Infrastruct. Eng. 2006, 21, 486–497. [Google Scholar] [CrossRef]

- Zhi, Y.; Gao, Y.; Wu, L.; Liu, L.; Cai, H. An improved algorithm for vector data rendering in virtual terrain visualization. In Proceedings of the 2013 21st International Conference on Geoinformatics, Kaifeng, China, 20–22 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–4. [Google Scholar]

- Thöny, M.; Billeter, M.; Pajarola, R. Deferred Vector Map Visualization. In Proceedings of the SIGGRAPH ASIA 2016 Symposium on Visualization, New York, NY, USA, 5–8 December 2016; Frontiers Research Foundation: Lausanne, Switzerland, 2016; pp. 1–8. [Google Scholar]

- She, J.; Zhou, Y.; Tan, X.; Li, X.; Guo, X. A parallelized screen-based method for rendering polylines and polygons on terrain surfaces. Comput. Geosci. 2017, 99, 19–27. [Google Scholar] [CrossRef]

- Wang, Z.; Zhang, H.; Lu, Q.; Lian, Y. 3D Scene Modeling and Rendering Algorithm Based on Road Extraction of Simple Attribute Remote Sensing Image. In Proceedings of the 2018 International Conference on Computer Modeling, Simulation and Algorithm (CMSA 2018); Atlantis Press: Dordrecht, The Netherlands, 2018. [Google Scholar]

- Wang, J.; Lawson, G.; Shen, Y. Automatic high-fidelity 3D road network modeling based on 2D GIS data. Adv. Eng. Softw. 2014, 76, 86–98. [Google Scholar] [CrossRef]

- Wen, C.; You, C.; Wu, H.; Wang, C.; Fan, X.; Li, J. Recovery of urban 3D road boundary via multi-source data. ISPRS J. Photogramm. Remote Sens. 2019, 156, 184–201. [Google Scholar] [CrossRef]

- Wang, H.; Wu, Y.; Han, X.; Xu, M.; Chen, W. Automatic generation of large-scale 3D road networks based on GIS data. Comput. Graph. 2021, 96, 71–81. [Google Scholar] [CrossRef]

- Lian, R.; Wang, W.; Mustafa, N.; Huang, L. Road Extraction Methods in High-Resolution Remote Sensing Images: A Comprehensive Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5489–5507. [Google Scholar] [CrossRef]

- Sánchez, J.; Rivera, F.; Domínguez, J.; Vilariño, D.; Pena, T. Automatic Extraction of Road Points from Airborne LiDAR Based on Bidirectional Skewness Balancing. Remote Sens. 2020, 12, 2025. [Google Scholar] [CrossRef]

- Xu, S.; Ye, P.; Han, S.; Sun, H.; Jia, Q. Road lane modeling based on RANSAC algorithm and hyperbolic model. In Proceedings of the 2016 3rd International Conference on Systems and Informatics (ICSAI), Shanghai, China, 19–21 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 97–101. [Google Scholar]

- Miao, Z.; Shi, W.; Zhang, H.; Wang, X. Road Centerline Extraction from High-Resolution Imagery Based on Shape Features and Multivariate Adaptive Regression Splines. IEEE Geosci. Remote Sens. Lett. 2012, 10, 583–587. [Google Scholar] [CrossRef]

- Maboudi, M.; Amini, J.; Hahn, M.; Saati, M. Road Network Extraction from VHR Satellite Images Using Context Aware Object Feature Integration and Tensor Voting. Remote Sens. 2016, 8, 637. [Google Scholar] [CrossRef] [Green Version]

- Wiedemann, C.; Heipke, C.; Mayer, H.; Jamet, O. Empirical Evaluation of Automatically Extracted Road Axes. In Empirical Evaluation Techniques in Computer Vision; Wiley: Hoboken, NJ, USA, 1998; pp. 172–187. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).