Abstract

The detection of rice leaf folder (RLF) infestation usually depends on manual monitoring, and early infestations cannot be detected visually. To improve detection accuracy and reduce human error, we use push-broom hyperspectral sensors to scan rice plants and apply machine learning and deep learning methods to detect RLF-infested rice leaves. Unlike traditional image processing, hyperspectral imaging data analysis is based on pixel-based classification and target recognition. Because the spectrum of each pixel is itself a feature vector, the deep neural network does not need convolutional layers to extract features. To correctly detect RLF-infested regions in the spectral images of rice leaves, we use the constrained energy minimization (CEM) method to suppress the background of the spectral image. A band selection method was used to reduce the computational cost of full-band processing, and six bands were selected as candidate bands. The band expansion process (BEP) was then used to lengthen the spectral vector and thereby compensate for the spectral information lost through band selection. We use CEM and deep neural networks to detect defects in the spectral images of infested rice leaves and compare their performance with full bands, band selection, and the BEP. A total of 339 hyperspectral images were collected in this study; the results showed that six bands were sufficient for detecting early infestations of RLF, with a detection accuracy of 98% and a Dice similarity coefficient of 0.8, which favors commercialization of this approach.

1. Introduction

Rice leaf folder (RLF), Cnaphalocrocis medinalis Guenée, is widely distributed in the rice-growing regions of humid tropical and temperate countries [1], and the developmental time of RLF decreases with an increase in temperature [2]. Due to global warming, RLF has become one of the most important insect pests of rice cultivation [3]. The larvae of RLF fold the leaves longitudinally and feed on the mesophyll tissue within the folded leaves. The feeding of RLF generates lineal white stripes (LWSs) in the early stage, which then enlarge into ocher patches (OPs) and membranous OPs [4]. As the infestation of RLF increases, the number and area of OPs increase. The feeding of RLF not only reduces the chlorophyll content and photosynthesis efficiency [4] but also provides an entry route for fungal and bacterial infection [5]. Severe damage caused by RLF may therefore cause 63–80% yield loss [6], and the largest area of rice cultivation damaged in a single year exceeded 30,000 hectares [7].

The economic injury level of RLF, which is important for the determination of insecticide applications, has been established as 4.2% damaged leaves and 1.3 larvae per plant by the International Rice Research Institute [8]. However, it is laborious and time-consuming to visually inspect for damage. In addition, RLF is a long-distance migratory insect pest. The uncertain timing of the appearance of RLF means that farmers are unable to predict pest arrival, so to avoid damage by undetected infestations, farmers often preventively spray chemical insecticides, which generates unnecessary costs and environmental pollution [9,10].

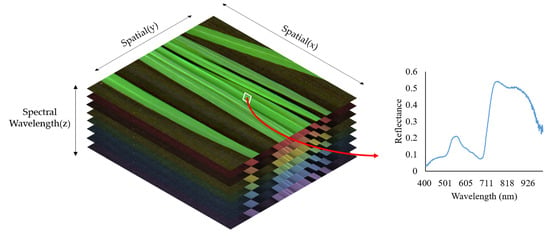

Hyperspectral imaging (HSI) is a novel technique that combines the advantages of imaging and spectroscopy and that has been investigated and applied in crop protection [11,12,13,14,15]. An HSI data cube, which contains both spatial and spectral information, is illustrated in Figure 1. The external damage caused by pest infestations (yellowing, attenuation, and defects) and the internal damage (loss of pigments, photosynthetic activity, and water content) can be identified by this system through the image or the spectral reflectance. Automatic detection can then be achieved with pest damage detection algorithms. For instance, constrained energy minimization (CEM) [16] and principal component analysis (PCA) [17] have been employed for band selection, and support vector machines (SVMs) [18], convolutional neural networks (CNNs) [19], and deep neural networks (DNNs) [20] are utilized for classification. Fan et al. [21] applied a visible/near-infrared hyperspectral imaging system to detect early invasion of rice streak insects. Using the successive projection algorithm (SPA) [22] and PCA to identify key wavelengths and a back-propagation neural network (BPNN) [23] as the classifier, they obtained a classification accuracy of 95.65% for the calibration and prediction sets. Chen et al. [24] also employed a visible/near-infrared hyperspectral imaging system to acquire images and further developed a hyperspectral insect damage detection algorithm (HIDDA) to detect pests in green coffee beans. The method combines CEM and SVM and achieves 95% accuracy and a 90% kappa coefficient. In addition, spectroscopy technology has been applied to detect plant diseases [25], the quality of agricultural products [26], and pesticide residues [27].

Figure 1.

Two-dimensional projection of a hyperspectral cube.

To effectively manage RLF with a rational application of insecticides, an artificial intelligence-based inspection of economic injury levels is necessary. The purpose of this study is to establish a model for detecting early infestation of RLF based on visible-light hyperspectral data exploration techniques and deep learning technology. The specific objectives include (1) predefining the region of interest (ROI); (2) data preprocessing through band selection and the band expansion process (BEP); (3) combining a deep learning network to train the model and to classify multiple levels of damage; (4) using an automatic target generation program (ATGP) algorithm [28] to test unknown samples, so as to fully automate the process and shorten the prediction time; and (5) establishing the spectral signatures of damaged leaves caused by RLF, which can serve as an expert system to provide valuable resources for the best timing of insecticide application.

2. Materials and Methods

2.1. Insect Breeding

The RLF in this study was collected from the Taichung District Agricultural Research and Extension Station. The larvae were raised in insect rearing cages (47.5 × 47.5 × 47.5 cm³, MegaView Science Co., Ltd., Taichung, Taiwan) with corn seedlings (agricultural friend seedling Yumeizhen) and maintained at 27 ± 2 °C and 70% relative humidity under a photoperiod of 16:8 h (L:D). The adults were reared in a cage with 10% honey at 27 ± 2 °C and 90% relative humidity, which allows adults to lay more eggs.

2.2. Preparation of Rice Samples

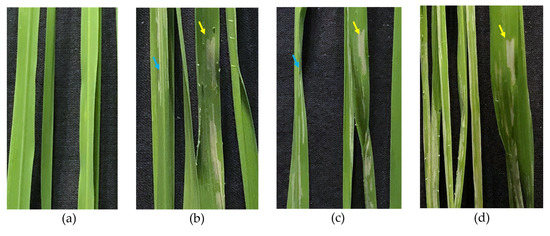

The variety Tainan No. 11, which is the most prevalent cultivar planted in Taiwan, was selected for this study. Rice plants were grown in a greenhouse to prevent infestation by other insect pests and diseases. To obtain different levels of damage caused by RLF, e.g., LWS and OP, 1st-, 2nd-, 3rd-, 4th-, or 5th-instar larvae of RLF were manually introduced to infest 40-day-old healthy rice for seven days, and three replicates were conducted for each treatment. Three types of samples, shown in Figure 2, were prepared for image acquisition and spectral information extraction: healthy leaves (HL), LWS, and OP caused by RLF.

Figure 2.

Appearance of healthy and damaged leaf types. (a) Healthy leaves, (b) lineal white stripe (LWS) caused by RLF (blue arrow) and LWS enlarging into an ocher patch (OP) (yellow arrow) on Day 1 (D1), (c) LWS and OP on D2, and (d) OP on D6.

2.3. Hyperspectral Imaging System and Imaging Acquisition

2.3.1. Hyperspectral Sensor

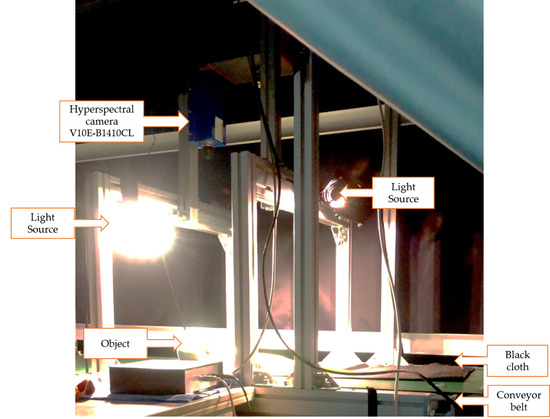

The hyperspectral scanning system employed in the experiment is shown in Figure 3. The hyperspectral image capturing system was composed of the following equipment: hyperspectral sensor, halogen light source, conveyor system, computer, and photographic darkroom isolated from external light sources. The hyperspectral sensor utilized in the study was a V10E-B1410CL sensor (IZUSU OPTICS), which contained visible and near-infrared (VNIR) bands with a spectral range from 380–1030 nm, a resolution of 5.5 nm, and 616 bands for imaging. The type of camera sensor is an Imspector Spectral Camera, SW ver 2.740. The halogen light sources used to illuminate the image were “3900e-ER”, and the power was 150 W. Halogen lights were simultaneously illuminated on the left and right sides and focused on the conveyor track at an incident angle of 45 degrees to reduce shadow interference during the sampling process. The temperature and relative humidity in the laboratory were kept at 25 °C and 60%, respectively. A conveyor belt was designed to deliver rice plants for acquiring hyperspectral images by line scanning (Figure 3). Both the speed of the conveyor belt and the halogen lights were controlled by computer software. The distance between the VNIR sensor and the rice sample was 0.6 m.

Figure 3.

Hyperspectral imaging system.

2.3.2. Image Acquisition

The damage to leaves infested with different RLF larvae (from 1st to 5th instar larvae) for various durations of feeding (1–6 days) was assessed using VNIR hyperspectral imaging. Leaves were placed flat on the conveyor belt and scanned after each 90° rotation to enlarge the dataset. The exposure time for scanning was 5.495 ms, and the number of pixels in each scan row was 816. Healthy leaves without RLF infestation were selected as the control. Before the VNIR hyperspectral images were taken, light correction was conducted, and all images were acquired in a dark box to avoid interference from other light sources. In total, 339 images were taken, including 52 images of healthy leaves and 69, 32, 48, 52, 52, and 34 images of leaves infested for 1 day to 6 days, respectively.

2.3.3. Calibration

To eliminate the effects of uneven illumination and dark-current noise, the object scan is normalized using a reference dark value and a reference white value. The original hyperspectral image is calibrated according to the following formula [21]:

$$R_C = \frac{R_0 - B}{W - B}$$

where $R_0$ is the raw hyperspectral image, $R_C$ is the hyperspectral image after calibration, $W$ is the standard white reference value obtained from a Teflon rectangular bar, and $B$ is the standard black reference value obtained by covering the lens with a lens cap.
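The calibration step can be sketched in a few lines. This is a minimal illustration, assuming the usual flat-field form in which the dark reference is subtracted from both the raw signal and the white reference before taking their ratio; the function name `calibrate`, the `eps` guard, and the sample counts are hypothetical and not taken from the study. Plain Python is used so that the sketch runs without dependencies.

```python
def calibrate(raw, white, dark, eps=1e-12):
    """Flat-field correction of one pixel spectrum (or one scan line).

    raw, white, dark: equal-length sequences of sensor counts per band.
    Returns (raw - dark) / (white - dark) for every band.
    """
    if not (len(raw) == len(white) == len(dark)):
        raise ValueError("raw, white and dark must have the same length")
    reflectance = []
    for r0, w, b in zip(raw, white, dark):
        span = w - b
        # Guard against dead bands where white and dark references coincide.
        reflectance.append((r0 - b) / span if abs(span) > eps else 0.0)
    return reflectance


# Hypothetical sensor counts for three bands.
raw = [120.0, 300.0, 510.0]
white = [1000.0, 1000.0, 1010.0]
dark = [20.0, 100.0, 10.0]
reflectance = calibrate(raw, white, dark)
```

A pixel equal to the dark reference maps to 0 and a pixel equal to the white reference maps to 1, which is a quick sanity check for any implementation of this step.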

2.4. Spectral Information Extraction

Removing the background of the image helps extract useful spectral information and reduces noise. The background removal process performs binary segmentation with the Otsu method, dividing the image into background and meaningful parts with similar features and attributes [29], including healthy, RLF-infested, and other defective leaves. To reduce unnecessary analysis work, the first step is to separate plant pixels from non-plant pixels. This step either converts the true-color image into a grayscale image or generates a single-channel (grayscale) image based on a simple index (e.g., Excess Green [30]). Second, the threshold value is obtained using the Otsu method; the grayscale value of each pixel is compared with the threshold, and the pixel is classified as target or background based on the result of the comparison [31]. Since plants and backgrounds have very different characteristics, they can be separated quickly and accurately.
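The background removal described above can be illustrated with a small sketch. It assumes the common chromaticity-normalized form of the Excess Green index, ExG = 2g − r − b, and a histogram-based Otsu threshold that maximizes the between-class variance; the bin count, the function names, and the five sample pixels are hypothetical choices for illustration only.

```python
def excess_green(rgb_pixels):
    """ExG = 2g - r - b on chromaticity-normalised channels, one value per pixel."""
    values = []
    for r, g, b in rgb_pixels:
        total = r + g + b
        values.append((2 * g - r - b) / total if total > 0 else 0.0)
    return values


def otsu_threshold(values, bins=256):
    """Return the threshold that maximises the between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_var, best_bin = -1.0, 0
    w0, sum0 = 0, 0.0
    for i, h in enumerate(hist):
        w0 += h              # pixels in bins 0..i (candidate background)
        sum0 += i * h
        w1 = total - w0      # pixels in bins above i (candidate plant)
        if w0 == 0 or w1 == 0:
            continue
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_var, best_bin = between, i
    # Upper edge of the last background bin.
    return lo + (best_bin + 1) * width


# Two leaf-like pixels followed by three grey background pixels (made-up values).
rgb = [(40, 160, 30), (60, 180, 50), (100, 100, 100), (90, 95, 92), (30, 30, 30)]
exg = excess_green(rgb)
threshold = otsu_threshold(exg)
plant_mask = [v >= threshold for v in exg]
```

Pixels with `plant_mask` set to `True` are kept for spectral extraction; the remaining pixels are treated as background.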

Third, the background-removed images were used to determine the ROI with the CEM algorithm [16]. CEM has been widely employed for target detection in hyperspectral remote sensing imagery. CEM detects the desired target signal source by using a unity constraint while suppressing noise and unknown signal sources; it also minimizes the average energy of the output. This algorithm generates a finite impulse response filter from a given vector, the d value, to suppress regions that are not related to the features of the ROI. In this study, the vector is the spectral reflectance of a pixel, and the ROI was predefined as an RLF-infested region in the images of rice leaves, e.g., Figure 2b,c. The CEM output enhances the pixels whose spectra are similar to the target feature d value. The Otsu method is then applied to the CEM output: if the pixel value exceeds the threshold, it is set to 1; otherwise, it is set to 0. Last, a binary image is obtained. This algorithm is an efficient method of pixel-based detection [32].
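The CEM filter can be written compactly. The sketch below follows the standard formulation, in which the filter weights are w = R⁻¹d / (dᵀR⁻¹d) with R the sample correlation matrix of the pixel spectra, so that the response to d is exactly 1 while the average output energy is minimized. The small `ridge` term added to the diagonal of R, the helper `solve`, and the toy three-band spectra are assumptions made for this illustration, not details from the study.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-15:
            raise ValueError("correlation matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def cem(pixels, d, ridge=1e-6):
    """Return one CEM score per pixel for the target signature d."""
    n, n_bands = len(pixels), len(d)
    # Sample correlation matrix R = (1/N) * sum of r r^T over all pixels.
    corr = [[sum(p[i] * p[j] for p in pixels) / n for j in range(n_bands)]
            for i in range(n_bands)]
    for i in range(n_bands):
        corr[i][i] += ridge  # assumed regularisation for numerical stability
    x = solve(corr, d)                       # x = R^-1 d
    scale = sum(di * xi for di, xi in zip(d, x))
    w = [xi / scale for xi in x]             # unity constraint: w . d = 1
    return [sum(wi * pi for wi, pi in zip(w, p)) for p in pixels]


# Four background-like spectra and one target-like spectrum (made-up values).
pixels = [[0.10, 0.50, 0.12], [0.11, 0.48, 0.10], [0.09, 0.52, 0.11],
          [0.12, 0.49, 0.13], [0.40, 0.30, 0.50]]
d = [0.40, 0.30, 0.50]
scores = cem(pixels, d)
```

The target-like pixel scores 1 and the background pixels score close to 0, so a threshold on `scores` (for example the Otsu threshold) yields the binary ROI mask.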

2.5. Band Selection

Since HSIs usually contain hundreds of spectral bands, full-band analysis of the spectrum is both time-consuming and highly redundant. To decrease the analysis time and redundancy, the first step of data analysis is to determine the key wavelengths. The way to achieve this goal is to select highly correlated wavelengths by comparing the reflectance and to maximize the representativeness of the information by decorrelation. Various band selection methods based on certain statistical criteria have been proposed for this purpose [33]. The concept of band selection is similar to feature extraction in image processing, which can improve the accuracy of identification and classification.

2.5.1. Band Prioritization

In the band prioritization (BP) step, the priority of each spectral band is calculated from statistical criteria [27]. Five criteria (variance, entropy, skewness, kurtosis, and signal-to-noise ratio (SNR)) were chosen to calculate the priority of the spectral bands in this work. Thus, each spectral band receives a priority score, and the bands can be ranked from high to low priority.
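As an illustration of band prioritization with one of the five criteria, the sketch below scores each band by the Shannon entropy of its reflectance histogram and ranks the bands from high to low. How the histogram is binned is not stated in the text, so the 16 bins spanning each band's own range, the function names, and the toy data are assumptions.

```python
import math


def band_entropy(values, bins=16):
    """Shannon entropy (bits) of the histogram of one band's pixel values."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant band carries no information
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def prioritize(bands, criterion=band_entropy):
    """Return band indices ranked from highest to lowest score, plus the scores."""
    scores = [criterion(b) for b in bands]
    order = sorted(range(len(bands)), key=lambda i: scores[i], reverse=True)
    return order, scores


# Each inner list holds the reflectance of all pixels in one band (made-up values).
bands = [
    [0.2] * 8,                                   # constant band
    [0.1, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9],    # two-level band
    [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8],    # evenly spread band
]
order, scores = prioritize(bands)
```

Any of the other criteria (variance, skewness, kurtosis, or SNR) could be passed as `criterion` in the same way.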

2.5.2. Band Decorrelation

When applying BP in the band selection process, the correlation between each band will highly affect the priority score. Neighboring bands will frequently be selected because of the high correlation between each band. Nevertheless, these redundant spectral bands are not helpful for improving detection performance. Therefore, to solve this problem, band decorrelation (BD) is utilized to remove these redundant spectral bands.

In this study, spectral information divergence (SID) [34] was applied for BD to measure the similarity between two vectors. The SID value is calculated for each pair of bands, and a threshold is set to remove the bands with high similarity. The formula is:

$$\mathrm{SID}(b_i, b_j) = D(b_i \,\|\, b_j) + D(b_j \,\|\, b_i)$$

where $b_i$ and $b_j$ are vectors of spectral information, and $D(b_i \,\|\, b_j)$ denotes the Kullback–Leibler divergence, that is, the average amount of difference between the self-information of $b_i$ and the self-information of $b_j$, and vice versa for $D(b_j \,\|\, b_i)$.
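A minimal sketch of band decorrelation with SID is given below. It assumes the usual definition in which each vector is first normalized to a probability distribution and the two Kullback–Leibler divergences are summed, and it uses a simple greedy rule: walk through the bands in priority order and keep a band only if its SID to every band already kept exceeds the threshold. The greedy rule, the function names, the `eps` floor, and the toy threshold of 0.05 are assumptions for illustration.

```python
import math


def sid(x, y, eps=1e-12):
    """Spectral information divergence between two non-negative vectors."""
    sx, sy = sum(x), sum(y)
    p = [max(v / sx, eps) for v in x]
    q = [max(v / sy, eps) for v in y]
    # D(p||q) + D(q||p), the symmetric Kullback-Leibler divergence.
    return sum(pi * math.log(pi / qi) + qi * math.log(qi / pi)
               for pi, qi in zip(p, q))


def decorrelate(bands, ranked, threshold):
    """Keep bands, in priority order, that differ enough from all kept bands."""
    kept = []
    for idx in ranked:
        if all(sid(bands[idx], bands[k]) > threshold for k in kept):
            kept.append(idx)
    return kept


# Bands 0 and 1 are nearly identical; band 2 is clearly different (made-up values).
bands = [
    [0.10, 0.20, 0.30, 0.40],
    [0.11, 0.20, 0.29, 0.40],
    [0.40, 0.30, 0.20, 0.10],
]
kept = decorrelate(bands, ranked=[0, 1, 2], threshold=0.05)
```

In this toy case band 1 is dropped because it is almost identical to the higher-priority band 0, while band 2 is retained.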

2.6. Band Expansion Process

Although the spectral images acquired through band selection reduce storage space and processing time, some of the original features of the spectra are lost. To address this information loss, the difference in reflectance can be increased by expanding the bands to increase the divergence. The concept of the BEP [35] is derived from the fact that a second-order random process is generally specified by its first-order and second-order statistics. These correlated multispectral images provide missing but useful second-order statistical information about the original hyperspectral images. The second-order statistical information utilized for the BEP includes autocorrelation, cross-correlation, and nonlinear correlation to create nonlinearly correlated images. The concept of generating second-order, correlated band images coincides with the concept of covariance functions employed in signal processing to generate random processes. Even though there may be no real physical interpretation of the band expansion process, it does provide an important advantage for addressing the problem of an insufficient number of spectral bands.
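One way to picture the expansion is the sketch below, which appends the auto-correlated bands (each band squared) and the cross-correlated bands (products of every pair of bands) to the original vector. For six input bands this gives 6 + 6 + 15 = 27 values, which matches the expanded length reported in this study, but the exact composition used by the authors is not stated, so this combination is an assumption; nonlinear terms such as square roots or logarithms are deliberately left out.

```python
from itertools import combinations


def expand_bands(pixel):
    """Append second-order terms to a pixel's reflectance vector.

    Returns original bands, then squared bands (auto-correlation),
    then pairwise products (cross-correlation).
    """
    auto = [v * v for v in pixel]
    cross = [a * b for a, b in combinations(pixel, 2)]
    return list(pixel) + auto + cross


# Hypothetical reflectance of one pixel in six selected bands.
pixel = [0.05, 0.06, 0.09, 0.07, 0.08, 0.30]
expanded = expand_bands(pixel)
```

The first six entries of `expanded` are the unchanged reflectances, so a classifier trained on the expanded vector still has access to the selected bands themselves.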

2.7. Data Training Models

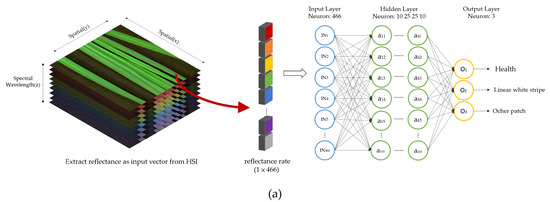

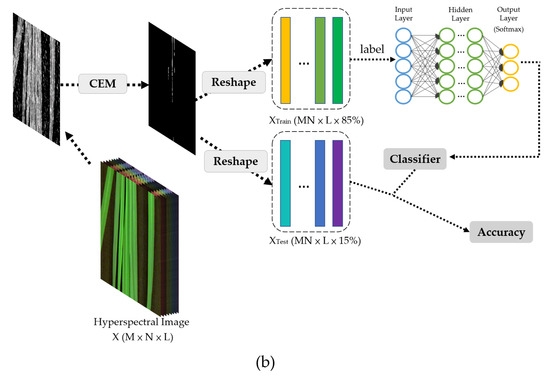

Hyperspectral imaging data analysis is based on pixel-based classification and target recognition, using low-level features (such as spectral reflectance and texture) as the bottom layer, and the output feature representation at the top of the network can be directly input to subsequent classifiers for pixel-based classification [36]. Pixel-based classification of this kind is particularly suitable for deep learning algorithms, which learn representative and discriminative features from the data in a hierarchical manner. In this study, the input to each input neuron is the reflectance of a pixel in one band. The input layer has 466 neurons in the full band, 6 neurons after band selection, and 27 neurons after band expansion. As shown in Figure 4a,b, the reflectance of the HL, D1 OP, and D6 OP samples was divided into three categories. The model is trained with four hidden layers, and the learning rate is 0.001. A softmax classifier is placed at the output of the DNN to obtain the classification results of the spectrum. The classified result was compared with the ground truth to calculate the accuracy. The cross-validation was repeated ten times, and the results were averaged to give the overall accuracy (OA).
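The shape of such a network can be sketched as a forward pass through a multilayer perceptron with four hidden layers and a three-class softmax output. Only the input sizes (466, 6, or 27), the number of hidden layers, and the three classes come from the text; the hidden-layer widths, the ReLU activation, and the random initialization below are assumptions, and no training is shown.

```python
import math
import random


def dense(x, weights, biases):
    """Fully connected layer: one weighted sum per output neuron."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(z):
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def init_mlp(sizes, seed=0):
    """Random weights for consecutive layer sizes, e.g. [6, 32, ..., 3]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = math.sqrt(2.0 / n_in)
        w = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((w, [0.0] * n_out))
    return layers


def forward(layers, x):
    """ReLU on hidden layers, softmax on the output layer."""
    for w, b in layers[:-1]:
        x = relu(dense(x, w, b))
    w, b = layers[-1]
    return softmax(dense(x, w, b))


# 6 selected bands in, four hidden layers (assumed widths), 3 classes out.
layers = init_mlp([6, 32, 32, 16, 16, 3])
probs = forward(layers, [0.05, 0.06, 0.09, 0.07, 0.08, 0.30])
predicted_class = probs.index(max(probs))  # 0 = HL, 1 = D1 OP, 2 = D6 OP
```

Because every pixel spectrum is one input vector, a single image already supplies many training samples, which is why no convolutional feature extractor is needed in this design.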

Figure 4.

(a) DNN model architecture. (b) Flowchart of classifying reflectance using DNN.

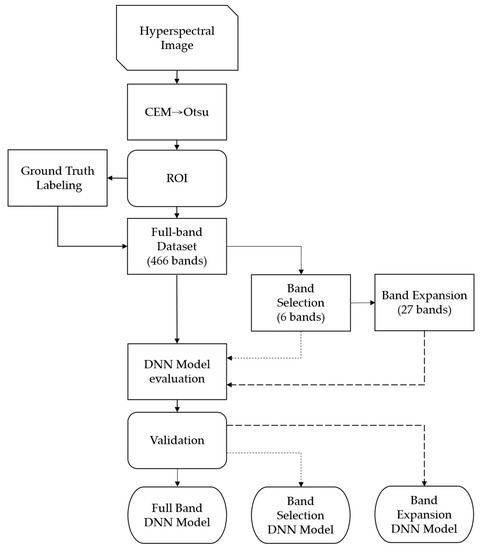

Figure 5 depicts the data training flowchart of this study, starting with hyperspectral image capture. First, the reflectance is extracted from the ROI, which was selected by the entomologist and serves as the ground truth. Second, the full-band reflectance dataset is used to build a DNN model, and the dataset obtained after band selection is processed in the same way to build its DNN model. Last, the band selection dataset is processed by the BEP to build a third DNN model.

Figure 5.

Data training flowchart of full bands, band selection, and band expansion process.

The DNN model is constructed using three processes: full bands, band selection, and BEP. Each classification model has the best weight evaluated by its own model. Three DNN classification process models are constructed based on randomly distributed datasets, including 70% training, 15% validation, and 15% testing (as shown in Table 1). In the testing phase, the accuracy of each classification situation will be compared, and the OA of multiple classifications will be integrated. As a result, the most suitable model for identifying the classification was obtained.
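The random 70/15/15 partition can be sketched as follows. The fixed seed, the function name `split_pixels`, and the stand-in sample list are hypothetical; the study does not describe how the random assignment was implemented.

```python
import random


def split_pixels(samples, train=0.70, val=0.15, seed=0):
    """Shuffle samples and split them into training, validation and test sets."""
    rng = random.Random(seed)
    idx = list(range(len(samples)))
    rng.shuffle(idx)
    n_train = round(train * len(idx))
    n_val = round(val * len(idx))
    train_set = [samples[i] for i in idx[:n_train]]
    val_set = [samples[i] for i in idx[n_train:n_train + n_val]]
    test_set = [samples[i] for i in idx[n_train + n_val:]]  # the remainder
    return train_set, val_set, test_set


# 100 stand-in pixel identifiers; real samples would be labelled spectra.
samples = list(range(100))
train_set, val_set, test_set = split_pixels(samples)
```

Giving the remainder to the test set ensures that every sample is used exactly once even when the proportions do not divide the sample count evenly.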

Table 1.

Number of pixels used for band selection, training, and testing in the rice dataset.

2.8. Model Test for Unknown Samples

To apply the trained models to the spectral reflectance of unknown samples of healthy leaves and early and late OPs, the first step is to quickly determine the ROI to reduce the time required for image recognition. To achieve this goal, a method that combines an ATGP [28] and CEM is proposed. The ATGP is an unsupervised target recognition method that uses the concept of orthogonal subspace projection (OSP) to find a distinct feature without a priori knowledge. The ATGP method was employed to identify the target pixel in the hyperspectral image, and all the similar pixel data obtained were averaged as the d value of CEM.
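The ATGP search can be sketched as repeated orthogonal projection: the first target is the pixel with the largest norm, and each subsequent target is the pixel with the largest residual after projecting out the targets already found. The sketch below implements the projection by Gram–Schmidt deflation, which is equivalent to applying the OSP projector. How "similar" pixels are chosen for averaging is not specified in the text, so the cosine-similarity cut-off in `mean_similar` is an assumption, as are the function names and toy spectra.

```python
import math


def atgp(pixels, n_targets):
    """Return indices of n_targets pixels found by orthogonal projection."""
    residual = [list(p) for p in pixels]
    targets = []
    for _ in range(n_targets):
        norms = [sum(v * v for v in r) for r in residual]
        best = max(range(len(residual)), key=norms.__getitem__)
        if norms[best] < 1e-12:
            break  # nothing left outside the span of the found targets
        targets.append(best)
        u = [v / math.sqrt(norms[best]) for v in residual[best]]
        # Remove the component along u from every pixel (OSP step).
        for r in residual:
            proj = sum(a * b for a, b in zip(r, u))
            for i in range(len(r)):
                r[i] -= proj * u[i]
    return targets


def mean_similar(pixels, target, min_cosine=0.99):
    """Average all pixels whose cosine similarity to target is high enough."""
    t_norm = math.sqrt(sum(v * v for v in target))
    members = []
    for p in pixels:
        p_norm = math.sqrt(sum(v * v for v in p))
        if p_norm == 0.0:
            continue
        cos = sum(a * b for a, b in zip(p, target)) / (p_norm * t_norm)
        if cos >= min_cosine:
            members.append(p)
    return [sum(col) / len(members) for col in zip(*members)]


# Three leaf-like spectra and two brighter, damage-like spectra (made-up values).
pixels = [[0.10, 0.50, 0.10], [0.10, 0.50, 0.12], [0.11, 0.49, 0.10],
          [0.60, 0.40, 0.70], [0.58, 0.41, 0.69]]
targets = atgp(pixels, 2)
d = mean_similar(pixels, pixels[targets[0]])
```

The averaged vector `d` can then be passed to a CEM detector as its desired signature.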

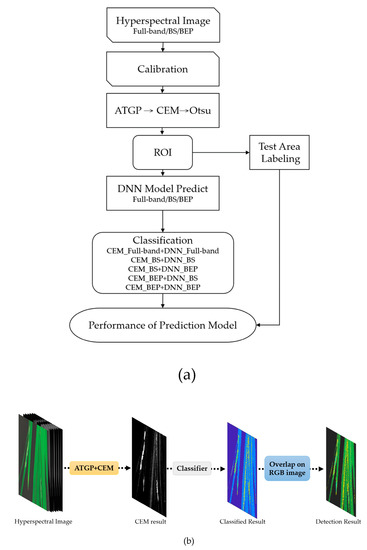

Figure 6a,b shows the flow chart of the unknown sample prediction model. To automate the detection process, first, the full-band, band selection, and BEP data of the rice sample must be calibrated. Second, through the combined method of the ATGP and CEM, the Otsu method is utilized to mark the ROI. The ROI obtained from the full band, band selection, and BEP is classified by the corresponding DNN model and is labeled HL, early OP, or late OP by entomologists according to the occurrence of damage caused by RLF. The labeled ROI is used to verify the prediction results of the DNN model. Five analysis methods, namely CEM_Full-band→DNN_Full-band, CEM_band selection→DNN_band selection, CEM_band selection→DNN_BEP, CEM_BEP→DNN_band selection, and CEM_BEP→DNN_BEP, are established to evaluate the prediction performance.

Figure 6.

(a) Flow chart of the DNN model used to predict unknown samples. (b) Flow chart of DNN model prediction.

Last, the model classification results were visualized and overlaid on the original true-color images, and agricultural experts verified the actual situation afterward to compare the performance of the models.

2.9. Prediction of Unknown Samples

After a cross-validated predictive model has been established, a completely unknown sample with different data from the training set is needed to test its robustness. Eligible samples were obtained from the field. To fix other conditions, the retrieved samples were also photographed with a push-broom hyperspectral camera.

Many different evaluation metrics have been mentioned in the literature. The confusion matrix [37] was selected as a measure of model accuracy. A true positive (TP) is a correct detection of the ground truth. A false positive (FP) is an object that is mistaken as true. A false negative (FN) is an object that is not detected, although it is positive.

However, it is not enough to rely on the confusion matrix alone. An additional pipeline of common evaluation metrics was needed to facilitate a better comparison of classification models. The following metrics were employed for the evaluation in this study:

- (i) recall
- (ii) precision
- (iii) Dice similarity coefficient

Recall is the ability of the model to detect all relevant objects, i.e., the proportion of ground-truth positives that the model detects. Precision is the ability of the model to identify only relevant objects, i.e., the proportion of detections that are correct. The Dice similarity coefficient (DSC) is a set similarity measure that is usually applied to calculate the similarity between two samples; its value ranges from 0, when there is no overlap, to 1, when there is complete overlap.
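These metrics follow directly from the confusion-matrix counts. The sketch below uses the standard definitions, recall = TP / (TP + FN), precision = TP / (TP + FP), and DSC = 2TP / (2TP + FP + FN), applied to binary pixel masks; the function names and the eight-pixel example are hypothetical.

```python
def confusion(pred, truth):
    """Count TP, FP, FN and TN for two equal-length binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    return tp, fp, fn, tn


def evaluate(pred, truth):
    """Return recall, precision, Dice similarity coefficient and accuracy."""
    tp, fp, fn, tn = confusion(pred, truth)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    dsc = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 1.0
    accuracy = (tp + tn) / len(truth)
    return {"recall": recall, "precision": precision,
            "dsc": dsc, "accuracy": accuracy}


# 1 marks an OP pixel, 0 a healthy pixel (made-up masks).
truth = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]
result = evaluate(pred, truth)
```

Note that accuracy can remain high when the positive class is small, which is why recall, precision, and the DSC are reported alongside it.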

3. Results and Discussion

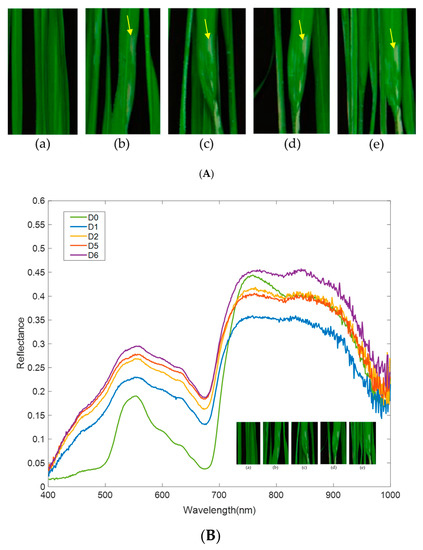

3.1. Images and Spectral Signatures of Healthy and RLF-Infested Rice Leaves

When larvae of RLF feed on rice leaves, they generate LWS or OP on the leaves. As time passes, the LWSs enlarge into a patch; the color of the patch gradually turns from white to ocher; and the images and spectral signatures of these patches also change during this process, as shown in Figure 7a,b, respectively. The spectral signatures of HL and OP in Figure 7b were obtained manually, under the guidance of entomological experts. The OPs have higher reflectance than HL in the blue to red wavelength range. Within these spectral bands, the longer the infestation period is, the higher the reflectance, e.g., day 6 (D6) > D5 > D2 > D1. However, only the reflectance of D6 OP is higher than that of HL at the NIR wavelength (Figure 7b). The reflectance of D1 OP is much lower than the HL reflectance, and the reflectance of D2 and D5 OPs is approximately the same as that of healthy leaves. The decrease in reflectance in D1 OP at NIR was mainly due to the destruction of leaf structure, which caused photon scattering [38]. These results suggest that the early defects caused by RLF have spectral signatures very different from those of the subsequent damage. The differences in spectral properties between the early and late phases of damage could serve as a basis for the early identification of RLF infestations.

Figure 7.

(A) Hyperspectral images of healthy leaves on day 0 (a) and ocher patches (yellow arrow) infested by rice leaf folders on day 1 (b), day 2 (c), day 5 (d), and day 6 (e). (B) Spectral signature and corresponding hyperspectral images of the healthy leaves (D0) and ocher patches (from D1 to D6) caused by RLF.

3.2. Band Selection and Band Expansion Process

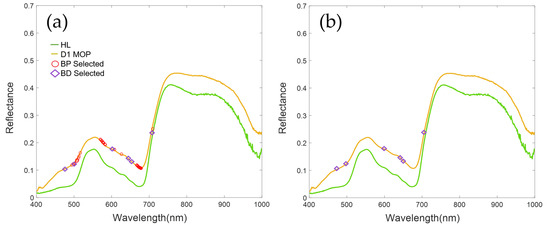

The HSI and spectral signature from the full-band system shown in Figure 7a,b contain considerable redundant information that slows the analysis and consumes too much storage space. Therefore, band selection and the BEP were employed to select the most informative bands to increase the analysis efficiency and reduce storage space. To detect early RLF infestation more effectively, 5% of the training samples of HL and D1 OP, as shown in Table 1, were chosen to perform band selection. Five criteria were utilized in BP to calculate the priority of each band from the full-band signature of HL and D1 OP, and then a value of 2.5 for SID was chosen as the threshold for BD to remove the adjacent bands with high similarity for D1 OP. Six bands at 489, 501, 603, 664, 684, and 705 nm, which had the largest difference in reflectance between HL and D1 OP, were selected as candidates through BP and BD using the criterion of entropy (Figure 8a,b). Only six bands were chosen so that the method could be adapted to cheaper, easy-to-use six-band handheld spectral sensors. The results of band selection using the other four criteria are shown in Supplementary Table S1 and Figure S1. Furthermore, the six bands were expanded to 27 bands using the BEP to compensate for the information lost through band selection.

Figure 8.

Bands selected through band prioritization (a) and band decorrelation (b) using criteria of entropy. Red circles denote bands selected from band prioritization, and purple diamonds denote bands selected after band decorrelation.

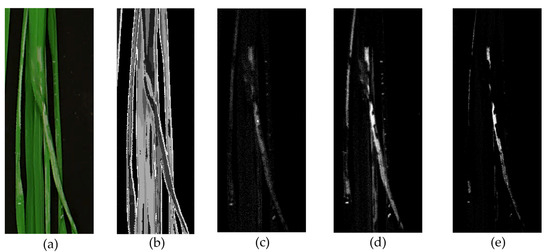

3.3. ROI Detection with CEM in Full Bands, Band Selection, and Band Expansion Process

CEM, a standard linear detector, was selected as a filter in this study to quickly identify the ROI. CEM increases the accuracy of automated detection and reduces the analysis time. The spectral signature of the OP that appeared on D1 in Figure 7b was employed as the d value of CEM to detect damaged leaves caused by RLF. Figure 9 shows ROI detection in the case of the full band, band selection, and BEP, with the results of k-means clustering for comparison. In the case of full bands, very minimal damage caused by RLF was detected (Figure 9c). The abundance of spectral data increases the complexity of detection and weakens the spectral response resulting from RLF. On the other hand, ROI detection in the case of band selection reveals almost all the damage shown in Figure 9a. This finding indicates that band selection achieves the best performance in ROI detection through CEM (Figure 9d). In the case of the BEP, the result of CEM is better than that of the full bands but not as good as that of band selection (Figure 9e).

Figure 9.

Region of interest detection with k-means (k = 10) or constrained energy minimization algorithm on different datasets using the reflectance of the D1 ocher patch as a d value on rice leaves. (a) True-color image, (b) k-means in full bands, (c) CEM in full bands, (d) band selection, and (e) band expansion process.

3.4. DNN Model for Classification of Testing Dataset

The DNN multilayer perceptron model is suited for HSI data for classification because the spectral reflection of each pixel can form a vector. Even if we have fewer images, we can still use enough pixels as samples for analysis. Therefore, this study does not require thousands of images to train a set of deep learning models, which greatly reduces the tedious work of collecting samples and the difficulty of controlling sample conditions.

Table 2 describes the results of the OA verification using the DNN models of the full bands, band selection (6 bands), and BEP (27 bands). The confusion matrix [37] was utilized to evaluate the classification performance; the complete confusion matrix calculated for DNN classification is shown in Supplementary Figure S2. In the case of full bands, the OA (95%) and performance are the best in the classification of various situations, but a longer time (14.88 s) is needed than band selection and BEP in classification. The application of band selection saves approximately half the time of full bands, but it will also reduce the classification accuracy. Except for HL, the accuracy of early and late OPs decreased after band selection, which may be attributed to a decrease in some spectral information. The accuracy of the BEP is not higher than that of band selection, as expected, and it is possible that BEP amplifies the noise and interferes with the classification ability. Among the five criteria, the OA of classification is the best among the bands selected by entropy. In terms of entropy, the accuracy of early OP from band selection is approximately 4% higher than that from BEP.

Table 2.

Results for the testing dataset for DNN classification in different bands. The best performance is highlighted in red.

3.5. Prediction of Unknown Samples

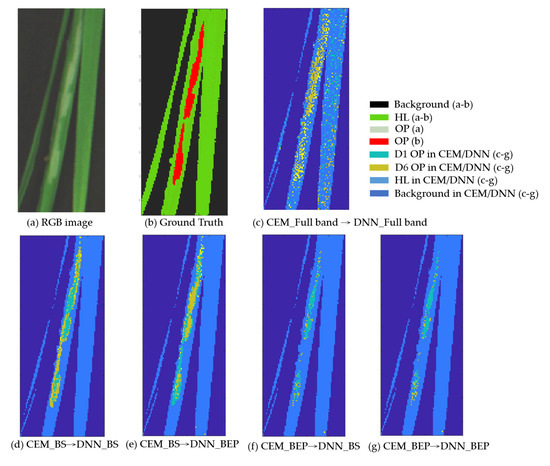

The predictions were carried out using ROIs obtained from full bands, band selection, and the BEP, as shown in Figure 6. CEM was applied to suppress the background and to detect the ROI. The DNN models of full bands, band selection, and the BEP were used as classifiers to predict unknown samples through five analysis methods. For band selection and the BEP, the bands selected by entropy were used for the prediction, according to the results of Table 2. Figure 10 shows the true-color image (a), ground truth (b), and predictions from an unknown sample (c–g). The ground truth was determined by entomologists and given different colors to distinguish HL (green) from OP (red). Figure 10c–g shows the classification results from the full bands and band selection/BEP, respectively, which were also colored for visualization. Figure 10d,e shows the best results, as expected, in which the predicted areas of the ROI were approximately the same as the ground truth (Figure 10b). However, the predicted ROI in Figure 10c was scattered over the rice leaves in addition to the ROI of the ground truth.

Figure 10.

Prediction of spectral information from unknown rice sample: (a) true-color image, (b) Ground Truth, (c) CEM_Full-band→DNN_Full-band, (d) CEM_band selection→DNN_band selection, (e) CEM_band selection→DNN_BEP, (f) CEM_BEP→DNN_band selection, and (g) CEM_BEP→DNN_BEP.

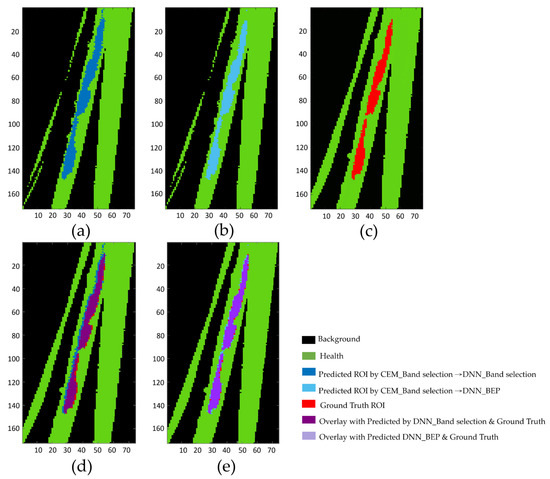

The performance of the pixel classification of DNN models was verified by comparing the prediction results with ground truth using a confusion matrix; the results are shown in Table 3 and Table 4. Consistent with the results in Figure 10, CEM_band selection→DNN_band selection showed the best prediction performance (Table 3) because this method showed the highest TP (correct identification of OP) and overall accuracy (OA) and the lowest FN (misidentification of OP). However, very high false positives (FPs) were obtained from the methods of CEM_Full-band→DNN_Full-band, CEM_band selection→DNN_band selection, and CEM_band selection→DNN_BEP (Figure 10c–e). The high FP value of CEM_Full-band→DNN_Full-band may be derived from the scattered distribution of the predicted ROI, while the high FP values of CEM_band selection→DNN_band selection and CEM_band selection→DNN_BEP may be derived from predicted ROI areas that are undetectable by the naked eye. To test this interpretation, the images of Figure 10d and Figure 10e were overlaid with the ground truth (Figure 10b). The extra predicted area around the ground-truth ROI in Figure 11d,e is likely early infestation of RLF that cannot be detected by the human eye.

Table 3.

Accuracy of DNN classification evaluated by the confusion matrix.

Table 4.

Evaluation metrics of DNN prediction. The best performance is highlighted in red color.

Figure 11.

Overlaid images of the predicted ROI with the ground-truth ROI for evaluating the performance of DNN classification. (a) Predicted ROI with CEM_band selection→DNN_band selection, (b) predicted ROI with CEM_band selection→DNN_BEP, (c) Ground Truth, (d) overlay of (a) and (c), and (e) overlay of (b) and (c).

To verify the necessity of using CEM to extract ROI, the DNN classification results of the background-removed images are shown in Supplementary Table S2 and Figure S3. The results show that the accuracy of DNN classification after CEM processing is approximately 22% higher than that of the DNN applied directly to remove the background.

The performance of DNN classification was further evaluated by the metrics of recall, precision, accuracy, and DSC, as shown in Table 4. The analysis method of CEM_band selection→DNN_band selection was again rated as the best model for predicting unknown samples, as it had the highest accuracy, recall, and DSC and took the shortest time. Although the analysis method of CEM_band selection→DNN_BEP also showed reasonably good performance, the overall results indicated that six bands obtained from band selection are good enough to detect the early OP caused by RLF. The analysis method of CEM_BEP→DNN_band selection has the highest precision, but its recall and DSC are lower than those of CEM_band selection→DNN_band selection and CEM_band selection→DNN_BEP.
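The four metrics above follow directly from the confusion-matrix counts. The snippet below is a minimal sketch of that calculation, assuming the prediction and the ground truth are available as binary masks of the same shape; the function name `pixel_metrics` and the toy masks are illustrative and are not taken from the study.

```python
import numpy as np

def pixel_metrics(pred, truth):
    """Confusion-matrix counts and derived metrics for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # OP pixels correctly predicted
    fp = int(np.sum(pred & ~truth))   # predicted OP, labeled as background
    fn = int(np.sum(~pred & truth))   # labeled OP, missed by the model
    tn = int(np.sum(~pred & ~truth))  # background correctly rejected

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "TP": tp, "FP": fp, "FN": fn, "TN": tn,
        "recall": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "DSC": ratio(2 * tp, 2 * tp + fp + fn),
    }

# Toy 4x4 masks (made-up values): the prediction covers the labeled
# patch plus one extra column of pixels next to it.
truth = np.array([[0, 0, 0, 0],
                  [0, 1, 1, 0],
                  [0, 1, 1, 0],
                  [0, 0, 0, 0]])
pred = np.array([[0, 0, 0, 0],
                 [0, 1, 1, 1],
                 [0, 1, 1, 1],
                 [0, 0, 0, 0]])
m = pixel_metrics(pred, truth)
print(m)
```

In this toy case the recall is 1.0 while the precision and DSC drop because of the extra predicted pixels, which is the same pattern that a predicted area larger than the labeled area would produce.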

Taking the OP as an example, the pixels of the ROI were used for prediction evaluation, and a confusion matrix was employed to assess performance. As shown in Table 4, all analysis methods classified successfully, with accuracies of at least 95%. The area of the block classified as OP can be smaller than the actual damaged area, as is the case in Figure 11f. As shown in Figure 11d (CEM_band selection→DNN_band selection), false positives were distributed around the OP, which suggests that earlier defects caused by insect pests appear as false-positive areas in hyperspectral images but cannot be recognized in true-color images or by human eyes.
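One way to examine whether false positives cluster around the labeled OP is to build a per-pixel overlay map and measure how many FP pixels touch the ground-truth ROI. The sketch below is illustrative only: the label codes, the 8-neighbourhood rule, and the function names are assumptions, not the procedure used to produce Figure 11.

```python
import numpy as np

def overlay_map(pred, truth):
    """Label each pixel: 0 = TN, 1 = TP, 2 = FP, 3 = FN."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    out = np.zeros(pred.shape, dtype=np.uint8)
    out[pred & truth] = 1
    out[pred & ~truth] = 2
    out[~pred & truth] = 3
    return out

def fp_adjacent_fraction(pred, truth):
    """Fraction of FP pixels lying in the 8-neighbourhood of the truth ROI."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    h, w = truth.shape
    padded = np.pad(truth, 1, mode="constant", constant_values=False)
    # Dilate the ground truth by one pixel using shifted copies.
    near = np.zeros_like(truth)
    for dy in (0, 1, 2):
        for dx in (0, 1, 2):
            near |= padded[dy:dy + h, dx:dx + w]
    fp = pred & ~truth
    n_fp = int(fp.sum())
    return float((fp & near).sum()) / n_fp if n_fp else float("nan")

# Toy 5x5 example (made-up values): two FP pixels border the labeled
# patch and one FP pixel is isolated in the corner.
truth = np.zeros((5, 5), dtype=bool)
truth[1:3, 1:3] = True
pred = truth.copy()
pred[1:3, 3] = True
pred[4, 4] = True

labels = overlay_map(pred, truth)
frac = fp_adjacent_fraction(pred, truth)
print(labels)
print(frac)
```

A high adjacent fraction is compatible with the interpretation that FPs mark early damage spreading from the visible OP, although it does not prove it; scattered FPs far from the ROI would point to noise instead.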

3.6. Discussion

Automatic detection of plant pests is extremely useful because it reduces the tedious work of monitoring large paddy fields, detects the damage caused by RLF at an early stage of pest development, and can eventually stop further plant degradation. This study proposes an automatic detection method that combines CEM and the ATGP. CEM is an efficient hyperspectral detection algorithm that can handle subpixel detection [39]. The quality of the CEM results is determined by the d-value used as a reference; therefore, it is important to provide a plausible spectral feature. The ATGP was applied to identify the most representative feature vector from an unknown sample as the d-value. Another problem with CEM is that it only provides a rough detection result, so the DNN was selected to classify the reflectance of the ATGP→CEM detection results. In addition, band selection and the BEP were chosen to identify the key wavelengths among the five criteria to save time and improve accuracy. The accuracy of CEM_band selection→DNN_band selection in predicting unknown samples reached 98.1%. Traditional classifiers such as linear SVM (support vector machine) and LR (logistic regression) can be regarded as single-layer classifiers, while decision trees or SVMs with kernels are considered to have two layers [40,41]. However, deep neural architectures with more layers can potentially extract abstract and invariant features for better image or signal classification [42]. Our previous study on detecting Fusarium wilt on Phalaenopsis showed this result [43]. In addition, we have used the Entoscan Plant imaging system to detect the infestation of RLF, but this system only covers 16 bands (390, 410, 450, 475, 520, 560, 625, 650, 730, 770, 840, 860, 880, 900, 930, and 960 nm) to obtain the Normalized Difference Vegetation Index (the results are shown in Supplementary Figure S4), which may not be specific enough to distinguish the damage caused by different pests. Therefore, we attempted to find a more representative vector from the spectral fingerprint of the hyperspectral imaging system to detect the infestation of RLF. At the same time, band selection was used to remove redundant information and reduce the time required for the automatic detection process. It not only reduced the time by a factor of 2.45 (from 8′11″ to 3′20″) but also reached a higher accuracy (0.981) than the full band (0.951). The time required for each stage of the prediction process is shown in Supplementary Figure S5. The six bands (489, 501, 603, 664, 684, and 705 nm) obtained through band selection are more representative than the bands supplied by the Entoscan Plant imaging system and can be applied to the multispectral sensors of UAVs and portable instruments for field use. The methods, algorithms, and models established in this paper will be applied to other important rice insect pests and verified in the field by using either UAVs or portable instruments that carry a multispectral sensor. In addition, a platform to integrate all this information will be established to interact with farmers.
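The ATGP→CEM step described above can be sketched in a few lines of NumPy. This is a minimal illustration of the textbook formulations (ATGP by repeated orthogonal-subspace projection, CEM with the filter w = R⁻¹d / (dᵀR⁻¹d)), not the code used in this study. The small ridge term, the synthetic data, the made-up signature `d`, and the function names are all assumptions introduced for the example.

```python
import numpy as np

def atgp(pixels, n_targets):
    """Return indices of n_targets candidate target pixels.

    The first target is the pixel with the largest norm; each later
    target is the pixel with the largest residual after projecting out
    the span of the targets found so far.
    """
    X = np.asarray(pixels, dtype=float)              # shape (N, B)
    idx = [int(np.argmax(np.sum(X ** 2, axis=1)))]
    for _ in range(n_targets - 1):
        U = X[idx].T                                 # shape (B, k)
        P = np.eye(X.shape[1]) - U @ np.linalg.pinv(U)
        resid = X @ P                                # P is symmetric
        idx.append(int(np.argmax(np.sum(resid ** 2, axis=1))))
    return idx

def cem(pixels, d, ridge=1e-6):
    """Constrained energy minimization: scores = X w with w . d = 1."""
    X = np.asarray(pixels, dtype=float)
    d = np.asarray(d, dtype=float)
    R = X.T @ X / X.shape[0]                         # sample correlation matrix
    # Small ridge for numerical stability (an assumption of this sketch).
    R += ridge * np.trace(R) / R.shape[0] * np.eye(R.shape[0])
    Rinv_d = np.linalg.solve(R, d)
    w = Rinv_d / (d @ Rinv_d)
    return X @ w, w

# Synthetic six-band data: 500 background pixels plus 5 pixels that
# carry a made-up target signature d.
rng = np.random.default_rng(0)
background = 0.3 + 0.05 * rng.standard_normal((500, 6))
d = np.array([0.10, 0.12, 0.35, 0.40, 0.38, 0.55])
X = np.vstack([background, np.tile(d, (5, 1))])

scores, w = cem(X, d)
targets = atgp(X, 3)
print(scores[-5:])   # pixels equal to d score 1 by construction
print(targets)
```

In a pipeline like the one described, one of the ATGP candidates would be chosen as `d`, and the resulting CEM score map would be thresholded to produce the rough ROI that the DNN then refines.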

Other studies [44,45] used conventional true-color images, which can only be classified by the spatial information of color and shape and can only reveal damage that is clearly visible to the naked eye. In contrast, the DNN in this study was based on hyperspectral sensors that provide spectral information, so it can detect pixel-level targets while retaining the spatial information of the original image. The authors of [44,45] employed CNNs to detect pests and achieved classification accuracies of 90.9% and 97.12%, respectively. The accuracy of the method proposed in this paper is slightly higher than the final accuracy of these CNNs. Although the CNN approaches can simultaneously classify multiple insect pests and diseases, confusion between classes often occurs. In addition, those studies were conducted with images of the late damage stage and could not classify the level of infestation. Moreover, most image classifiers are trained as CNNs, which often need a large number of training samples, and it is difficult to obtain enough training images in a short period of time. In contrast, hyperspectral image classification based on spectral pixels can be trained by a DNN, which means that even a single hyperspectral image can provide a large amount of training data.
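The point that a single hyperspectral image yields many training samples can be made concrete by flattening a hypercube into per-pixel spectral vectors. The sketch below assumes a cube stored as a (height, width, bands) array and a hypothetical 3 nm wavelength grid from 400 to 1000 nm; only the six selected wavelengths come from the text, and the function name `cube_to_samples` is illustrative.

```python
import numpy as np

def cube_to_samples(cube, wavelengths, selected_nm, mask=None):
    """Flatten a hypercube into per-pixel vectors of the selected bands."""
    cube = np.asarray(cube, dtype=float)             # shape (H, W, B)
    wavelengths = np.asarray(wavelengths, dtype=float)
    # Pick the sensor band closest to each requested wavelength.
    band_idx = [int(np.argmin(np.abs(wavelengths - nm))) for nm in selected_nm]
    h, w, _ = cube.shape
    samples = cube[:, :, band_idx].reshape(h * w, len(band_idx))
    if mask is not None:                             # optional ROI mask
        samples = samples[np.asarray(mask, dtype=bool).ravel()]
    return samples, band_idx

SELECTED_NM = (489, 501, 603, 664, 684, 705)         # six bands reported in the text
wavelengths = np.arange(400, 1001, 3)                # hypothetical sensor grid
cube = np.random.default_rng(0).random((64, 48, wavelengths.size))

samples, band_idx = cube_to_samples(cube, wavelengths, SELECTED_NM)
print(samples.shape)   # one six-element vector per pixel
```

Even this small 64 × 48 toy cube produces 3072 labeled-pixel candidates, whereas an image-level CNN would count the same acquisition as a single training example.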

4. Conclusions

HSI techniques can provide a real-time monitoring system to guide the precise application and reduction of pesticides and to provide objective and effective options for the automatic detection of crop damage caused by insect pests or diseases. In this research, we propose a deep learning classification and detection method based on band selection and the BEP that can be applied to monitor leaf defects caused by RLF at the lowest cost. To compensate for the information lost through band selection, the BEP method was used to improve the detection efficiency. The results on the test dataset show that full-band classification performs best and that band selection classification performs better than the BEP. Except for criteria on skewness and signal-to-noise ratio, the accuracy of full-band classification is nearly 95%.
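As a rough illustration of how a band expansion step lengthens a six-band vector, the sketch below appends second-order terms (squared bands and pairwise products) to each pixel. Which expansion terms were actually used in this study is not restated in this section, so the choice of terms here is an assumption, and the function name `band_expansion` is hypothetical.

```python
import numpy as np
from itertools import combinations

def band_expansion(samples):
    """Append squared bands and pairwise band products to each pixel vector.

    Expects an (N, B) array with at least two bands.
    """
    X = np.asarray(samples, dtype=float)
    auto = X ** 2                                    # B auto-correlated bands
    pairs = list(combinations(range(X.shape[1]), 2))
    cross = np.stack([X[:, i] * X[:, j] for i, j in pairs], axis=1)
    return np.hstack([X, auto, cross])               # B + B + B(B-1)/2 columns

rng = np.random.default_rng(0)
six_band = rng.random((10, 6))                       # made-up reflectance values
expanded = band_expansion(six_band)
print(expanded.shape)
```

With six input bands this produces 6 + 6 + 15 = 27 features per pixel, which gives the classifier nonlinear combinations of the selected wavelengths without requiring any additional sensor bands.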

After using the trained model to predict the unknown samples, the results show that the CEM_band selection→DNN_band selection analysis method is the best model and reached the expected prediction performance. The maximum DSC is 0.80, which indicates that its classification agrees at a level of about 80% with the classification recognized by entomologists. In addition, we discovered that the area predicted by the model was larger than the area observed by the human eye. This may indicate that RLF damage produces changes in parts of the spectrum that cannot be easily detected by the human eye. In addition, comparing prediction with the full-band DNN model and the band selection-based DNN model, the band selection method only needs 1% of the full-band time, which provides vast potential for wider applications and has good rice identification capabilities. Only six bands are needed, which reduces the technical cost required for on-site monitoring.

The method also has significant room for improvement by providing more training data, for example by implementing a data augmentation process or by extending it with other data, such as the mean or variance-generating structures. While the current research has only been conducted in the laboratory or used non-specified multispectral images in the field, the handheld six-band sensor provided very good results, and its portability means that it could be adapted for use in the field to obtain realistic multispectral images on-site using band selection methods. In addition, most existing UAV studies use CNNs or vegetation indices for analysis, and spectral reflectance has not been studied much. As mentioned in Section 3.5, the HSI prediction model can detect infested areas before they are noticed by the human eye. This technique can be extended to UAVs in the future to monitor the invisible spectral changes on the leaf surface. Combining HSI techniques and deep learning classification models could provide real-time surveys that give on-site early warning of damage.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/rs13224587/s1, Figure S1: Bands selected through band prioritization and band decorrelation, Figure S2: Confusion Matrix result of DNN model, Figure S3: Prediction of spectral information from unknown rice sample, Figure S4: Entoscan Plant imaging system, Figure S5: Approximate time required for each step of the prediction of unknown samples, Table S1: Results of the first six bands of band selection using different criteria, Table S2: The accuracy of DNN classification evaluated by confusion matrix.

Author Contributions

Conceptualization, Y.-C.O. and S.-M.D.; methodology, Y.-C.O. and S.-M.D.; software, Y.-C.O.; validation, Y.-C.O. and S.-M.D.; formal analysis, G.-C.L.; investigation, G.-C.L.; resources, Y.-C.O. and S.-M.D.; data curation, G.-C.L.; writing—original draft preparation, G.-C.L.; writing—review and editing, Y.-C.O. and S.-M.D.; visualization, G.-C.L.; supervision, Y.-C.O. and S.-M.D.; funding acquisition, Y.-C.O. and S.-M.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology (MOST), Taiwan (Grant No. MOST 107-2321-B-005-013, 108-23321-B-005-008, and 109-2321-B-005-024), and Council of Agriculture, Taiwan (Grant No. 110AS-8.3.2-ST-a6). The APC was funded by MOST 109-2321-B-005-024.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We are grateful to Chung-Ta Liao from Taichung District Agricultural Research and Extension Station for RLF collection and maintenance. We would also like to thank the publication subsidy from the Academic Research and Development of NCHU.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khan, Z.R.; Barrion, A.T.; Litsinger, J.A.; Castilla, N.P.; Joshi, R.C. A bibliography of rice leaf folders (Lepidoptera: Pyralidae)-Mini review. Insect Sci. Appl. 1988, 9, 129–174.

- Park, H.H.; Ahn, J.J.; Park, C.G. Temperature-dependent development of Cnaphalocrocis medinalis Guenée (Lepidoptera: Pyralidae) and their validation in semi-field condition. J. Asia Pac. Entomol. 2014, 17, 83–91.

- Bodlah, M.A.; Gu, L.L.; Tan, Y.; Liu, X.D. Behavioural adaptation of the rice leaf folder Cnaphalocrocis medinalis to short-term heat stress. J. Insect Physiol. 2017, 100, 28–34.

- Padmavathi, C.; Katti, G.; Padmakumari, A.P.; Voleti, S.R.; Subba Rao, L.V. The effect of leaffolder Cnaphalocrocis medinalis (Guenee) (Lepidoptera: Pyralidae) injury on the plant physiology and yield loss in rice. J. Appl. Entomol. 2013, 137, 249–256.

- Pathak, M.D. Utilization of Insect-Plant Interactions in Pest Control. In Insects, Science and Society; Pimentel, D., Ed.; Academic Press: London, UK, 1975; pp. 121–148.

- Murugesan, S.; Chelliah, S. Yield losses and economic injury by rice leaf folder. Indian J. Agri. Sci. 1987, 56, 282–285.

- Kushwaha, K.S.; Singh, R. Leaf folder (LF) outbreak in Haryana. Int. Rice Res. Newsl. 1984, 9, 20.

- Bautista, R.C.; Heinrichs, E.A.; Rejesus, R.S. Economic injury levels for the rice leaffolder Cnaphalocrocis medinalis (Lepidoptera: Pyralidae): Insect infestation and artificial leaf removal. Environ. Entomol. 1984, 13, 439–443.

- Heong, K.L.; Hardy, B. Planthoppers: New Threats to the Sustainability of Intensive Rice Production Systems in Asia; International Rice Research Institute: Los Banos, Philippines, 2009.

- Norton, G.W.; Heong, K.L.; Johnson, D.; Savary, S. Rice pest management: Issues and opportunities. In Rice in the Global Economy: Strategic Research and Policy Issues for Food Security; Pandey, S., Byerlee, D., Dawe, D., Dobermann, A., Mohanty, S., Rozelle, S., Hardy, B., Eds.; International Rice Research Institute: Los Baños, Philippines, 2010; pp. 297–332.

- Lowe, A.; Harrison, N.; French, A.P. Hyperspectral image analysis techniques for the detection and classification of the early onset of plant disease and stress. Plant Methods 2017, 13, 80.

- Sytar, O.; Brestic, M.; Zivcak, M.; Olsovska, K.; Kovar, M.; Shao, H.; He, X. Applying hyperspectral imaging to explore natural plant diversity towards improving salt stress tolerance. Sci. Total Environ. 2017, 578, 90–99.

- Thomas, S.; Kuska, M.T.; Bohnenkamp, D.; Brugger, A.; Alisaac, E.; Wahabzada, M.; Behmann, J.; Mahlein, A.-K. Benefits of hyperspectral imaging for plant disease detection and plant protection: A technical perspective. J. Plant. Dis. Prot. 2017, 125, 1–16.

- Zhao, Y.; Yu, K.; Feng, C.; Cen, H.; He, Y. Early detection of aphid (myzus persicae) infestation on chinese cabbage by hyperspectral imaging and feature extraction. Trans. Asabe 2017, 60, 1045–1051.

- Wu, X.; Zhang, W.; Qiu, Z.; Cen, H.; He, Y. A novel method for detection of pieris rapae larvae on cabbage leaves using nir hyperspectral imaging. Appl. Eng. Agric. 2016, 32, 311–316.

- Harsanyi, J.C. Detection and Classification of Subpixel Spectral Signatures in Hyperspectral Image Sequences. Ph.D. Thesis, Department of Electrical Engineering, University of Maryland Baltimore County, College Park, MD, USA, August 1993.

- Pearson, K. LIII. On lines and planes of closest fit to systems of points in space. Philos. Mag. 1901, 2, 559–572.

- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

- Carranza-García, M.; García-Gutiérrez, J.; Riquelme, J.C. A framework for evaluating land use and land cover classification using convolutional neural networks. Remote Sens. 2019, 11, 274.

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

- Fan, Y.; Wang, T.; Qiu, Z.; Peng, J.; Zhang, C.; He, Y. Fast Detection of Striped Stem-Borer (Chilo suppressalis Walker) Infested Rice Seedling Based on Visible/Near-Infrared Hyperspectral Imaging System. Sensors 2017, 17, 2470.

- Araujo, M.C.U.; Saldanha, T.C.B.; Galvao, R.K.H.; Yoneyama, T.; Chame, H.C.; Visani, V. The successive projections algorithm for variable selection in spectroscopic multicomponent analysis. Chemom. Intell. Lab. Syst. 2001, 57, 65–73.

- Al-Allaf, O.N.A. Improving the performance of backpropagation neural network algorithm for image compression/decompression system. J. Comput. Sci. 2010, 6, 834–838.

- Chen, S.Y.; Chang, C.Y.; Ou, C.S.; Lien, C.T. Detection of Insect Damage in Green Coffee Beans Using VIS-NIR Hyperspectral Imaging. Remote Sens. 2020, 12, 2348.

- Huang, W.; Lamb, D.W.; Niu, Z.; Zhang, Y.; Liu, L.; Wang, J. Identification of yellow rust in wheat using in-situ spectral reflectance measurements and airborne hyperspectral imaging. Precis. Agric. 2007, 8, 187–197.

- Dang, H.Q.; Kim, I.K.; Cho, B.K.; Kim, M.S. Detection of Bruise Damage of Pear Using Hyperspectral Imagery. In Proceedings of the 12th International Conference on Control Automation and Systems, Jeju Island, Korea, 17–21 October 2012; pp. 1258–1260.

- Ma, K.; Kuo, Y.; Ouyang, Y.C.; Chang, C. Improving pesticide residues detection using band prioritization and constrained energy minimization. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 4802–4805.

- Ren, H.; Chang, C.-I. Automatic spectral target recognition in hyperspectral imagery. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1232–1249.

- Kaur, D.; Kaur, Y. Various Image Segmentation Techniques: A Review. Int. J. Comput. Sci. Mob. Comput. 2014, 3, 809–814.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification under Various Soil, Residue and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

- Senthilkumaran, N.; Vaithegi, S. Image Segmentation by Using Thresholding Techniques for Medical Images. Comput. Sci. Eng. Int. J. 2016, 6, 1–13.

- Chang, C.-I. Target signature-constrained mixed pixel classification for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2002, 40, 1065–1081.

- Chang, C.I.; Du, Q.; Sun, T.-L.; Althouse, M. A joint band prioritization and band-decorrelation approach to band selection for hyperspectral image classification. IEEE Trans. Geosci. Remote. Sens. 1999, 37, 2631–2641.

- Chang, C.I.; Liu, K.H. Progressive band selection of spectral unmixing for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2002–2017.

- Ouyang, Y.C.; Chen, H.M.; Chai, J.W.; Chen, C.C.; Poon, S.K.; Yang, C.W.; Lee, S.K.; Chang, C.I. Band expansion process-based over-complete independent component analysis for multispectral processing of magnetic resonance images. IEEE Trans. Biomed. Eng. 2008, 55, 1666–1677.

- Zhang, L.; Zhang, L.; Du, B. Deep Learning for Remote Sensing Data: A Technical Tutorial on the State of the Art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Knipling, E.B. Physical and physiological basis for the reflection of visible and near-infrared radiation from vegetation. Remote Sens. Environ. 1970, 1, 155–159.

- Youden, W.J. Index for rating diagnostic tests. Cancer 1950, 3, 32–35.

- Chen, S.-Y.; Lin, C.; Tai, C.-H.; Chuang, S.-J. Adaptive Window-Based Constrained Energy Minimization for Detection of Newly Grown Tree Leaves. Remote Sens. 2018, 10, 96.

- Camps-Valls, G.; Bruzzone, L. Kernel-based methods for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1351–1362.

- Bengio, Y.; LeCun, Y. Scaling Learning Algorithms towards AI. In Large-Scale Kernel Machines; MIT Press: Cambridge, MA, USA, 2007; pp. 1–41. ISBN 1002620262.

- Bengio, Y.; Courville, A.C.; Vincent, P. Representation Learning: A Review and New Perspectives. IEEE TPAMI 2013, 35, 1798–1828.

- Hsu, Y.; Ouyang, Y.C.; Lu, Y.L.; Ouyang, M.; Guo, H.Y.; Liu, T.S.; Chen, H.M.; Wu, C.C.; Wen, C.H.; Shin, M.S.; et al. Using Hyperspectral Imaging and Deep Neural Network to Detect Fusarium Wilt on Phalaenopsis. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4416–4419.

- Mique, E.L.; Palaoag, T.D. Rice Pest and Disease Detection Using Convolutional Neural Network. In Proceedings of the 2018 International Conference on Information Science and System, Jeju Island, Korea, 27–29 April 2018; pp. 147–151.

- Rahman, C.R.; Arko, P.S.; Ali, M.E.; Iqbal Khan, M.A.; Apon, S.H.; Nowrin, F.; Wasif, A. Identification and recognition of rice diseases and pests using convolutional neural networks. Biosyst. Eng. 2020, 194, 112–120.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).