Hyperspectral and Multispectral Remote Sensing Image Fusion Based on Endmember Spatial Information

Abstract

1. Introduction

2. Methodology

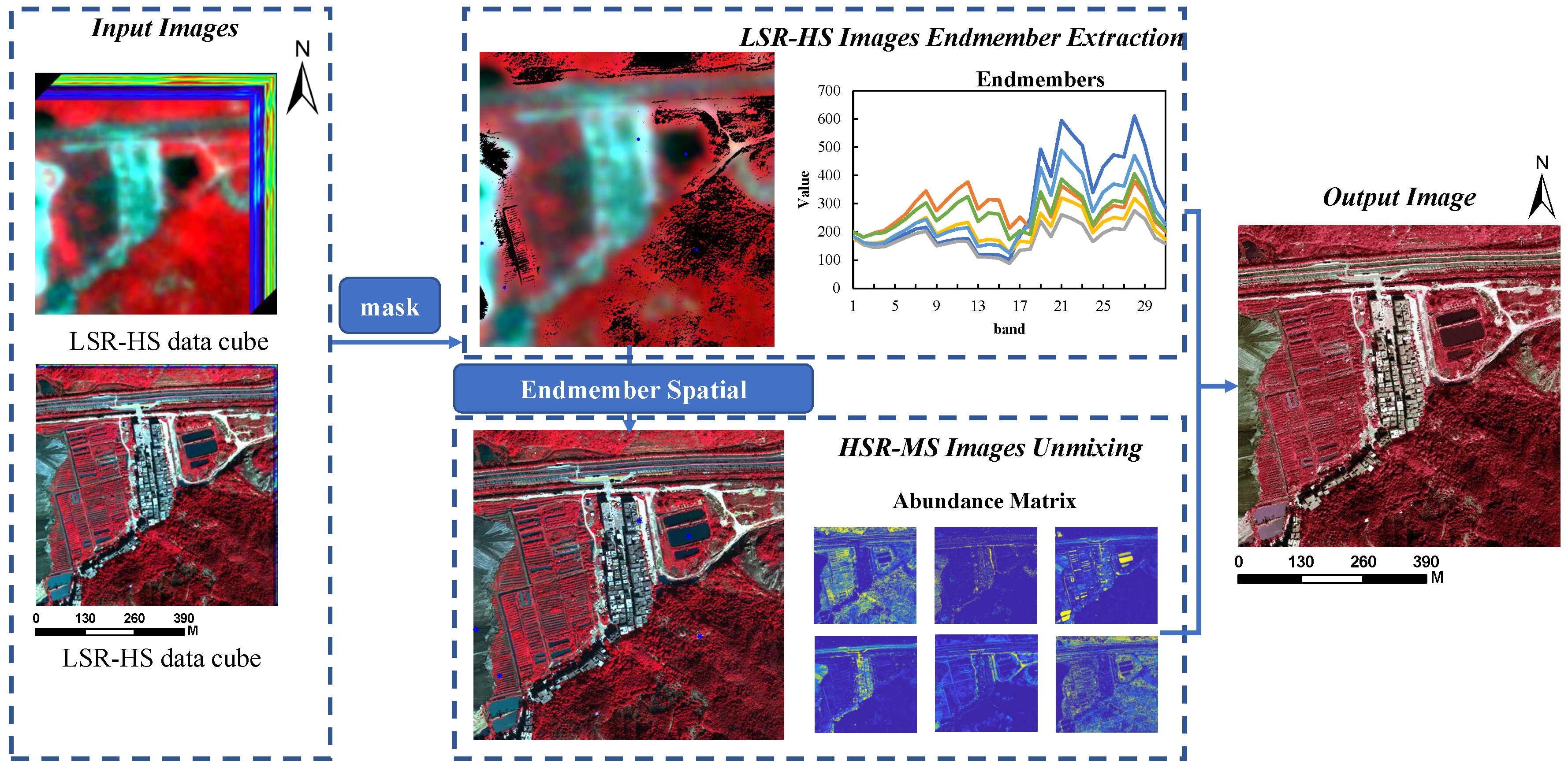

2.1. Overview of the Proposed Method

2.2. Regional Mask of the LSR-HS Image and HSR-MS Image Variation Area

2.3. LSR-HS Image Endmember Extraction

2.4. HSR-MS Image Unmixing

3. Experiments and Results

3.1. Performance Evaluation Metrics

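- PSNR: the peak signal-to-noise ratio measures the spatial quality of each band. It is the ratio, expressed in decibels, of the squared maximum pixel value to the mean square error of the reconstructed band; higher values are better. The PSNR of the $i$-th band is defined as:

  $$\mathrm{PSNR}_i = 10\log_{10}\frac{\max(\mathbf{Z}_i)^2}{\frac{1}{N}\left\|\mathbf{Z}_i-\hat{\mathbf{Z}}_i\right\|_2^2},$$

  where $\max(\mathbf{Z}_i)$ is the maximum pixel value in the $i$-th band of the reference image $\mathbf{Z}$, $\hat{\mathbf{Z}}_i$ is the $i$-th band of the fused image, and $N$ is the number of pixels in $\mathbf{Z}_i$.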

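- SAM: the spectral angle mapper [32] quantifies the spectral similarity between the estimated and reference spectra at each pixel. A smaller SAM value indicates higher spectral quality, i.e., less spectral distortion. For pixel $j$,

  $$\mathrm{SAM}(\mathbf{z}_j,\hat{\mathbf{z}}_j)=\arccos\frac{\langle\mathbf{z}_j,\hat{\mathbf{z}}_j\rangle}{\|\mathbf{z}_j\|_2\,\|\hat{\mathbf{z}}_j\|_2},$$

  where $\mathbf{z}_j$ and $\hat{\mathbf{z}}_j$ are the reference and fused spectra at pixel $j$, $\langle\cdot,\cdot\rangle$ is the inner product, and $\|\cdot\|_2$ denotes the $\ell_2$ norm.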

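- ERGAS: the ERGAS index [33] describes the global statistical quality of the fused data; smaller values are better.

  $$\mathrm{ERGAS}=100\,S\sqrt{\frac{1}{B}\sum_{i=1}^{B}\left(\frac{\mathrm{RMSE}_i}{\mu_i}\right)^2},$$

  where $S$ is the ratio of the ground sampling distance (GSD) of the HSR-MS image to that of the LSR-HS image, $B$ is the number of bands, $\mathrm{RMSE}_i$ is the root-mean-square error of the $i$-th fused band, and $\mu_i$ is the mean of the $i$-th reference band.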

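- Q2n: Q2n generalizes the universal image quality index (UIQI) [34], which was designed for monochromatic images, to multiband images; it jointly measures spatial and spectral quality, and values closer to 1 are better. The UIQI between a reference image $\mathbf{z}$ and a fused image $\hat{\mathbf{z}}$ is defined as:

  $$Q(\mathbf{z},\hat{\mathbf{z}})=\frac{4\,\sigma_{z\hat{z}}\,\mu_z\,\mu_{\hat{z}}}{\left(\sigma_z^2+\sigma_{\hat{z}}^2\right)\left(\mu_z^2+\mu_{\hat{z}}^2\right)},$$

  where $\mu_z$ and $\mu_{\hat{z}}$ are the means, $\sigma_z^2$ and $\sigma_{\hat{z}}^2$ are the variances, and $\sigma_{z\hat{z}}$ is the covariance of $\mathbf{z}$ and $\hat{\mathbf{z}}$.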
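The metrics above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' evaluation code, and the function names are hypothetical. It assumes images stored as arrays of shape (bands, rows, cols), averages the per-pixel SAM over all pixels and reports it in degrees, and takes `s` as the GSD ratio (e.g. 1/4 for a downsampling factor of 4). Only the single-window, single-band UIQI that Q2n builds on is implemented; the multiband Q2n itself is not reproduced.

```python
import numpy as np


def _flatten(img):
    """Reshape a (bands, rows, cols) cube to (bands, pixels) as float."""
    return img.reshape(img.shape[0], -1).astype(float)


def psnr_per_band(ref, fused):
    """PSNR in dB of every band: 10*log10(max(Z_i)^2 / MSE_i)."""
    r, f = _flatten(ref), _flatten(fused)
    mse = np.mean((r - f) ** 2, axis=1)   # (1/N) * ||Z_i - Z_hat_i||_2^2
    peak = r.max(axis=1)                  # maximum pixel value of band i in the reference
    return 10.0 * np.log10(peak ** 2 / mse)


def sam_degrees(ref, fused, eps=1e-12):
    """Spectral angle at each pixel, averaged over all pixels (ASSUMED), in degrees."""
    r, f = _flatten(ref), _flatten(fused)
    norms = np.linalg.norm(r, axis=0) * np.linalg.norm(f, axis=0)
    cos = np.sum(r * f, axis=0) / (norms + eps)
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))


def ergas(ref, fused, s):
    """ERGAS = 100 * s * sqrt(mean_i (RMSE_i / mu_i)^2), s = GSD(HSR-MS) / GSD(LSR-HS)."""
    r, f = _flatten(ref), _flatten(fused)
    rmse = np.sqrt(np.mean((r - f) ** 2, axis=1))
    mu = r.mean(axis=1)
    return float(100.0 * s * np.sqrt(np.mean((rmse / mu) ** 2)))


def uiqi(x, y):
    """Global UIQI of two single-band images (one window covering the whole image)."""
    x, y = np.ravel(x).astype(float), np.ravel(y).astype(float)
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cxy = np.mean((x - mx) * (y - my))
    return float(4.0 * cxy * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.uniform(0.1, 1.0, size=(4, 16, 16))          # synthetic 4-band reference
    fused = ref + 0.01 * rng.standard_normal(ref.shape)    # slightly perturbed "fusion result"
    print(psnr_per_band(ref, fused).mean(), sam_degrees(ref, fused),
          ergas(ref, fused, 1 / 4), uiqi(ref[0], fused[0]))
```

Averaging `psnr_per_band` over bands is one common way to obtain a single image-level PSNR; whether the tables below use exactly this aggregation is an assumption.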

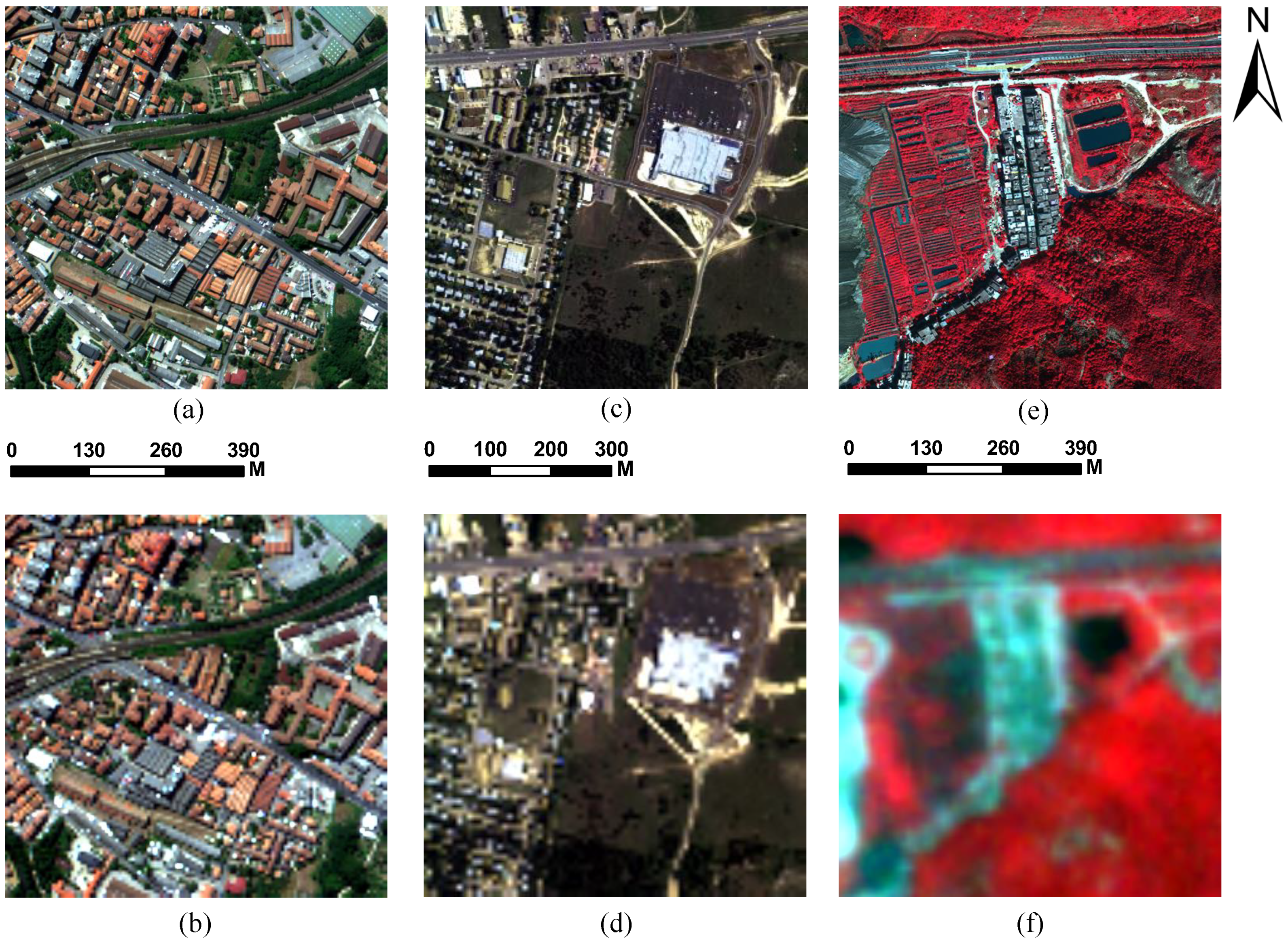

3.2. Experiment Data Sets

- ROSIS Pavia center dataset: The city center scene of Pavia, in northern Italy, was acquired by the Reflective Optics System Imaging Spectrometer (ROSIS-3) sensor with a high spatial resolution (1.3 m) over the spectral range 430–834 nm (http://www.ehu.eus/ccwintco/index.php). The image contains 102 effective bands with a size of pixels, and in this experiment we use the pixels in the bottom-right part of the original image as the experimental data. The number of endmembers in this dataset is six.

- HYDICE Urban dataset: The Urban dataset was acquired by the Hyperspectral Digital Imagery Collection Experiment (HYDICE) sensor over an urban area in Copperas Cove (http://www.erdc.usace.army.mil/Media/FactSheets/FactSheetArticleView/tabid/9254/Article/610433/hypercube.aspx). The image includes 210 bands with a high spatial resolution (2 m), and the spectral range is 400–2400 nm. After removing the low-SNR and water absorption bands (1–4, 76, 87, 101–111, 136–153, and 198–210), 162 bands remain. The image size is pixels; so that the image size is an integer multiple of the downsampling ratio, we crop the image to pixels. The number of endmembers in this dataset is six.

  We use the original datasets as the reference images. To obtain the LSR-HS images, the reference images are first blurred with a Gaussian kernel in the spatial domain and then downsampled by a factor of 4. To obtain the HSR-MS images, we directly select from the reference images the bands whose center wavelengths match those of the blue, green, red, and NIR channels of Landsat 8. For the Pavia center dataset, we choose 478 nm, 558 nm, 658 nm, and 830 nm; for the Urban dataset, we choose 481 nm, 555 nm, 650 nm, and 859 nm. Because an HS image with lower spatial resolution contains more noise than an HSR-MS image, we add Gaussian white noise with standard deviations of 0.1 and 0.04 to the LSR-HS and HSR-MS images, respectively [35].

- WorldView-3 and OHS Hengqin dataset: The third dataset covers the Hengqin Island area of Guangdong Province, China. The HSR-MS image was captured by WorldView-3 on 15 January 2018; it includes four bands (blue, green, red, and NIR) with a spatial resolution of 1.332 m. The LSR-HS image was captured by the OHS sensor, which is carried by commercial satellites launched by Zhuhai Orbita Aerospace Science and Technology Company on 28 April 2018. The LSR-HS image has 32 bands with 2.5 nm spectral resolution, a spectral range of 400–1000 nm, and a spatial resolution of 10 m. We discard the 32nd band because of noise, leaving 31 bands. In this experiment, we choose an area with little change in surface features, which includes water bodies, vegetation, residential areas, and highways. The sizes of the HSR-MS image and the LSR-HS image over the same experimental area are and , respectively. For convenience in the comparative experiments, the LSR-HS image was resampled to (shown in Figure 3). In this experiment, is set as 1.3, and the number of endmembers in this dataset is six.
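The simulation protocol used for the Pavia center and Urban datasets can be sketched as below. This is a hedged illustration, not the authors' code, and all helper names are hypothetical. The Gaussian kernel's `sigma` and `radius`, decimation starting at the first pixel, nearest-wavelength band selection, and evenly spaced band centers are assumptions, since the text gives neither the kernel parameters nor the band-center tables. The Urban band-removal ranges are copied from the text and reproduce the stated count of 162 retained bands.

```python
import numpy as np


def gaussian_kernel_1d(sigma, radius):
    """Normalized 1-D Gaussian kernel of length 2*radius + 1."""
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur_band(band, kernel):
    """Separable Gaussian blur of one band with reflect padding; output keeps the input size."""
    r = len(kernel) // 2
    padded = np.pad(band.astype(float), r, mode="reflect")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)   # horizontal pass over each row
    return np.apply_along_axis(conv, 0, rows)     # vertical pass over each column


def simulate_lsr_hs(ref, ratio=4, sigma=1.0, radius=2, noise_std=0.0, rng=None):
    """Blur every band, keep every `ratio`-th pixel, then add white Gaussian noise."""
    kernel = gaussian_kernel_1d(sigma, radius)
    low = np.stack([blur_band(b, kernel) for b in ref])[:, ::ratio, ::ratio]
    if rng is None:
        rng = np.random.default_rng()
    return low + noise_std * rng.standard_normal(low.shape)


def simulate_hsr_ms(ref, wavelengths, targets_nm, noise_std=0.0, rng=None):
    """Pick the reference bands whose centers are closest to the target wavelengths."""
    idx = [int(np.argmin(np.abs(wavelengths - t))) for t in targets_nm]
    if rng is None:
        rng = np.random.default_rng()
    ms = ref[idx] + noise_std * rng.standard_normal((len(idx),) + ref.shape[1:])
    return ms, idx


def urban_kept_bands(total=210):
    """1-based indices of the HYDICE Urban bands kept after removing noisy/water bands."""
    removed = (set(range(1, 5)) | {76, 87} | set(range(101, 112))
               | set(range(136, 154)) | set(range(198, 211)))
    return [b for b in range(1, total + 1) if b not in removed]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.uniform(size=(102, 32, 32))      # stand-in cube with Pavia's 102 bands
    wl = np.linspace(430.0, 834.0, 102)        # ASSUMED evenly spaced band centers
    hs = simulate_lsr_hs(ref, noise_std=0.1, rng=rng)
    ms, idx = simulate_hsr_ms(ref, wl, [478, 558, 658, 830], noise_std=0.04, rng=rng)
    print(hs.shape, ms.shape, idx, len(urban_kept_bands()))
```

The noise levels in the usage example mirror the stated standard deviations, but the data scaling they assume (e.g. reflectance normalized to [0, 1]) is not specified in the text.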

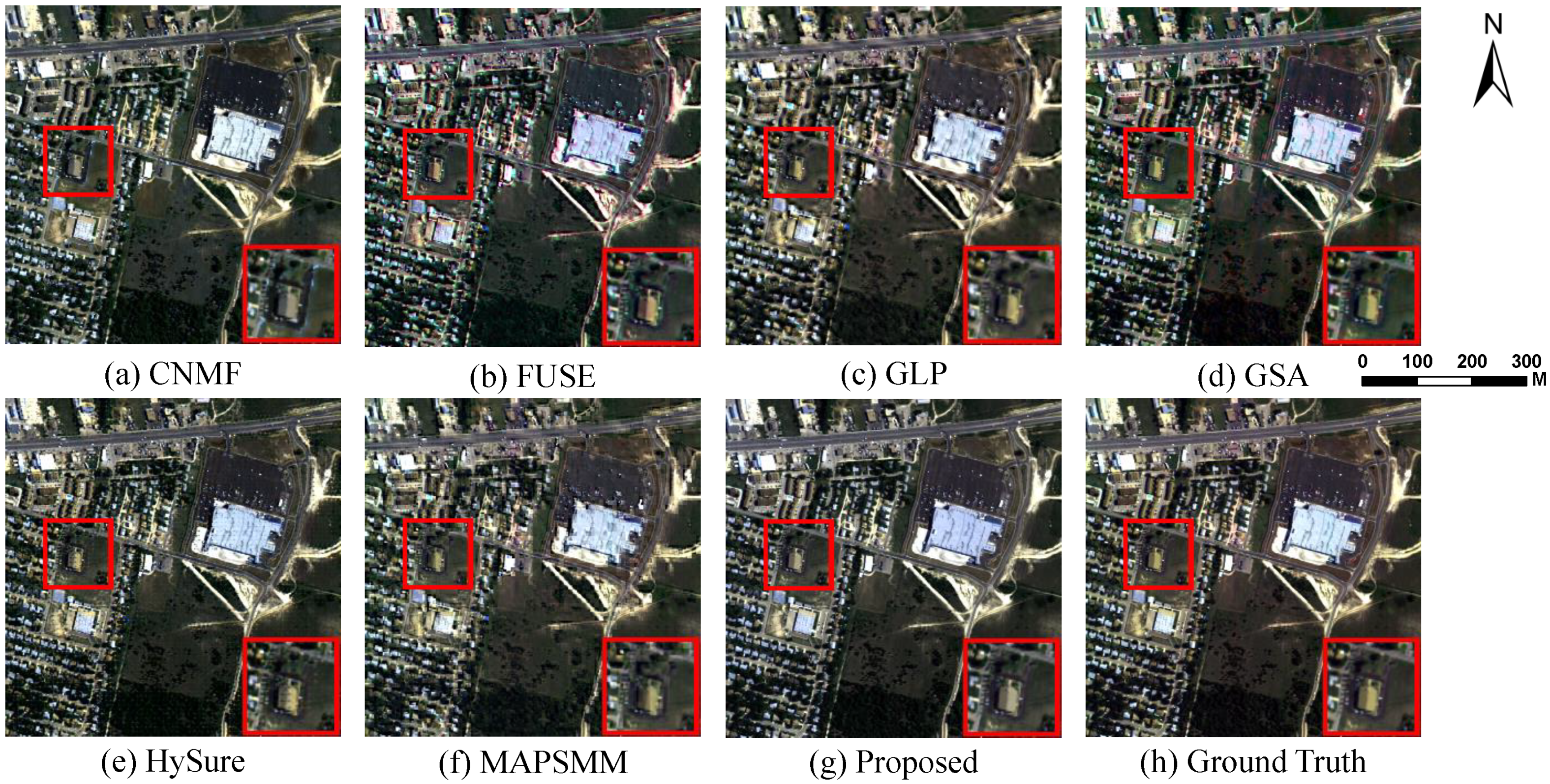

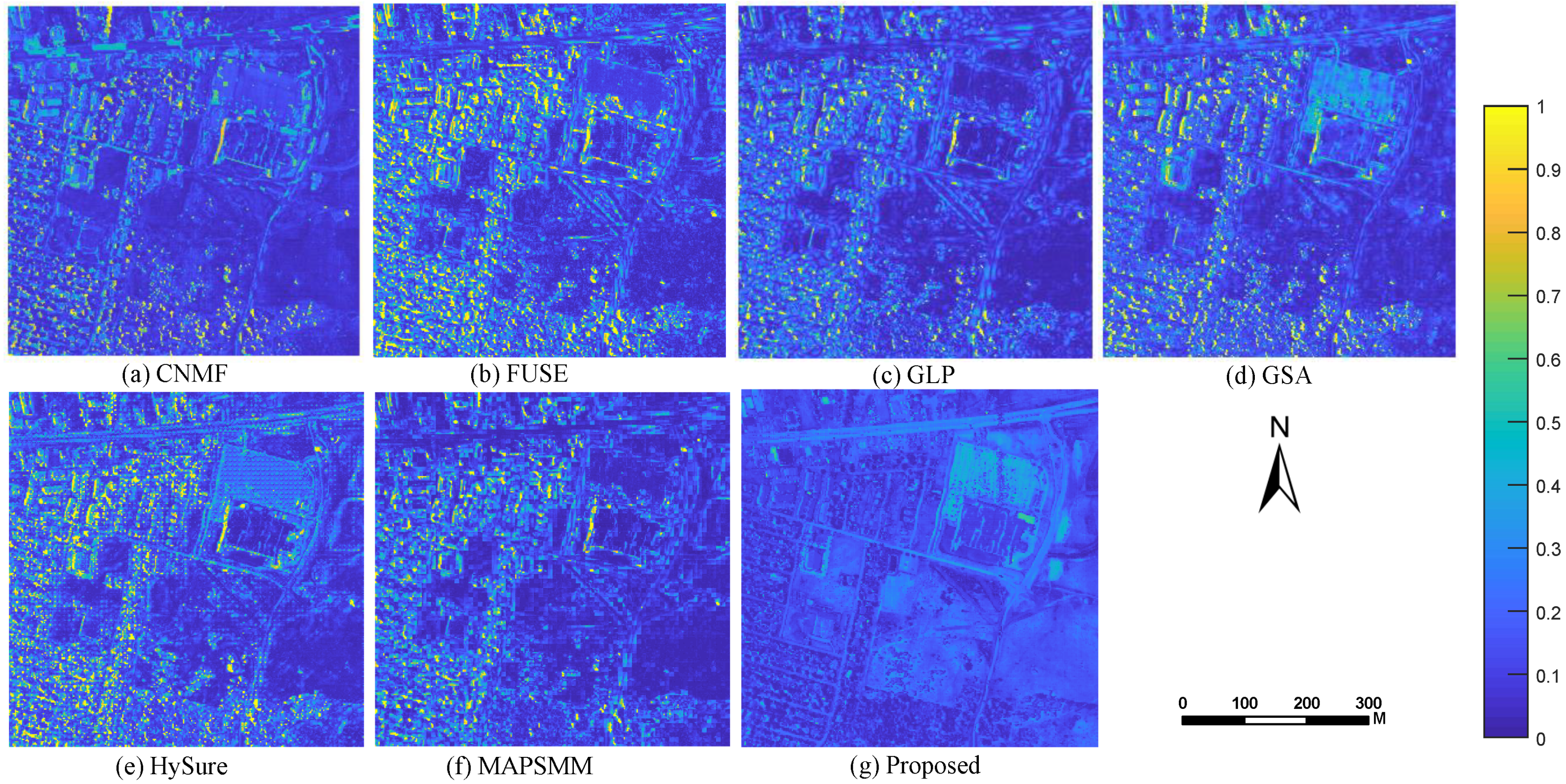

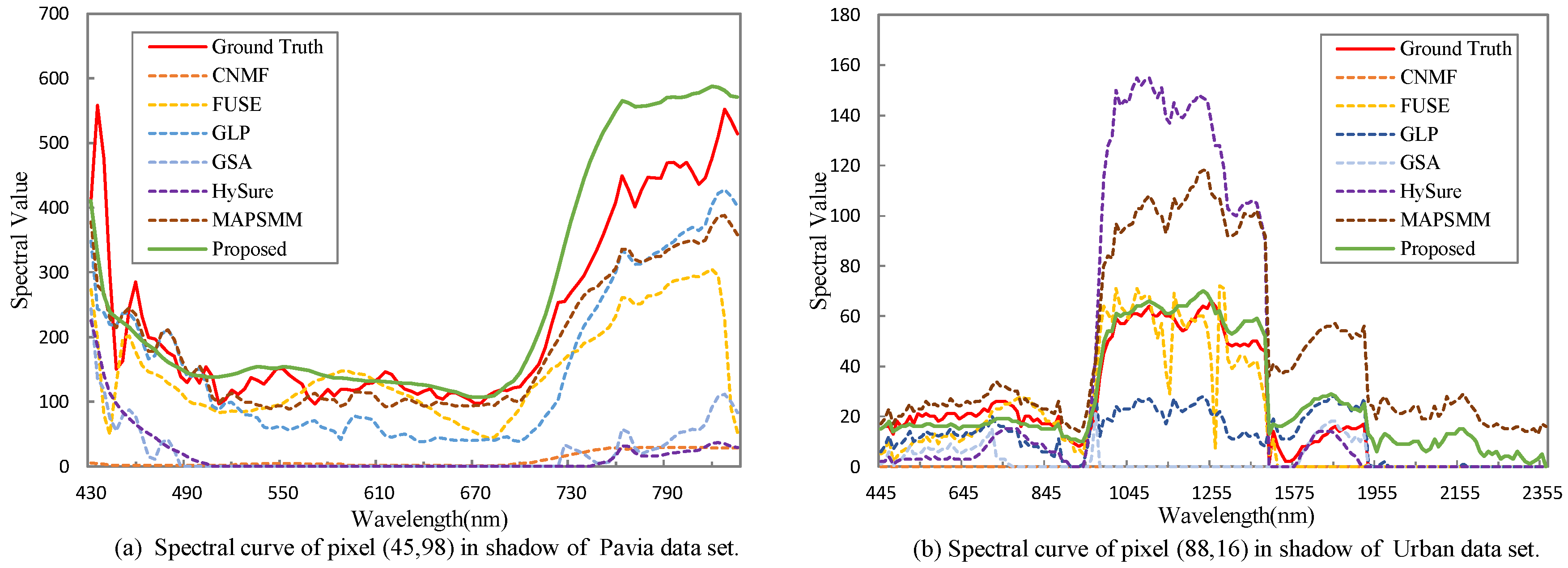

3.3. Experimental Results

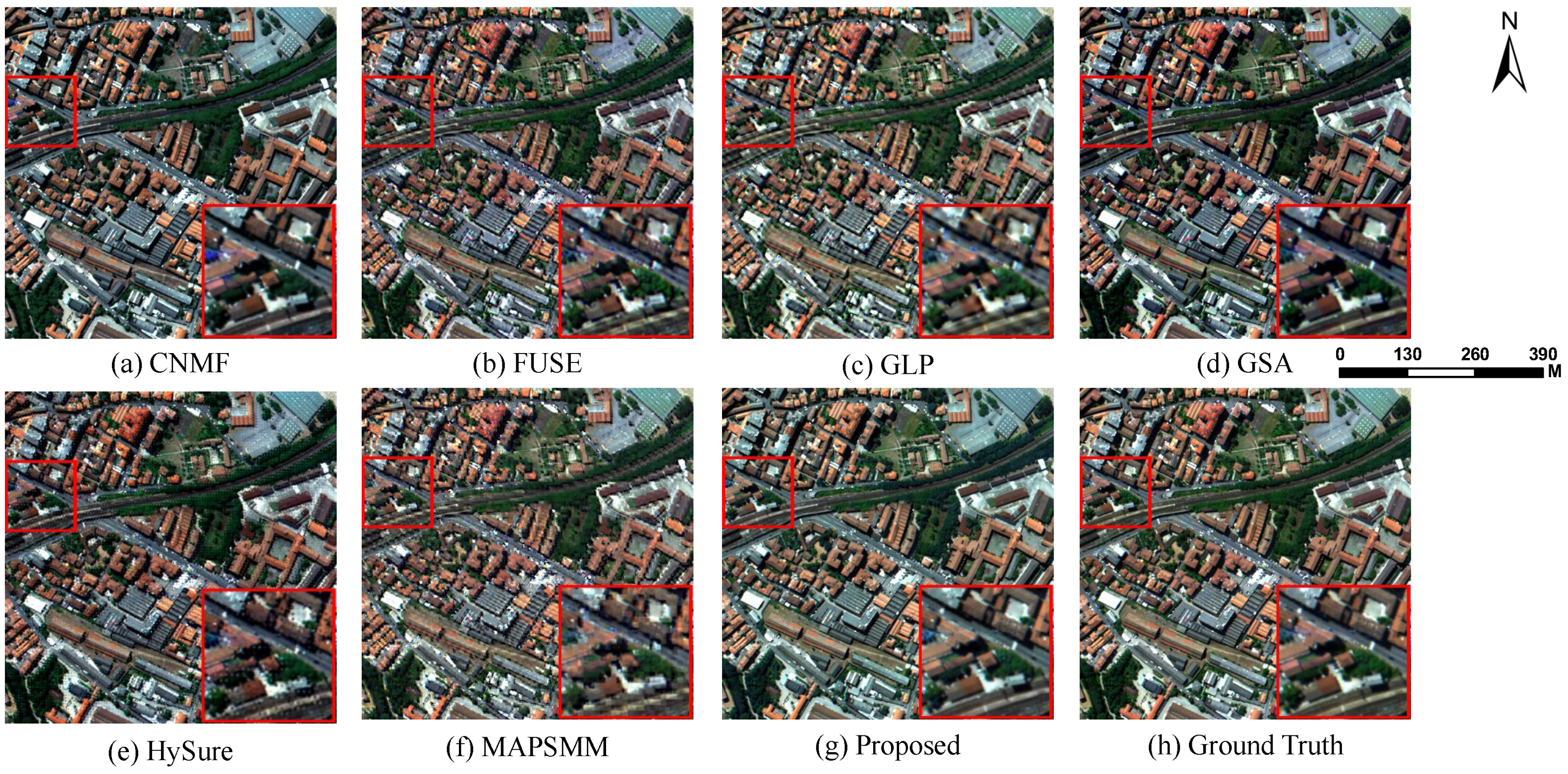

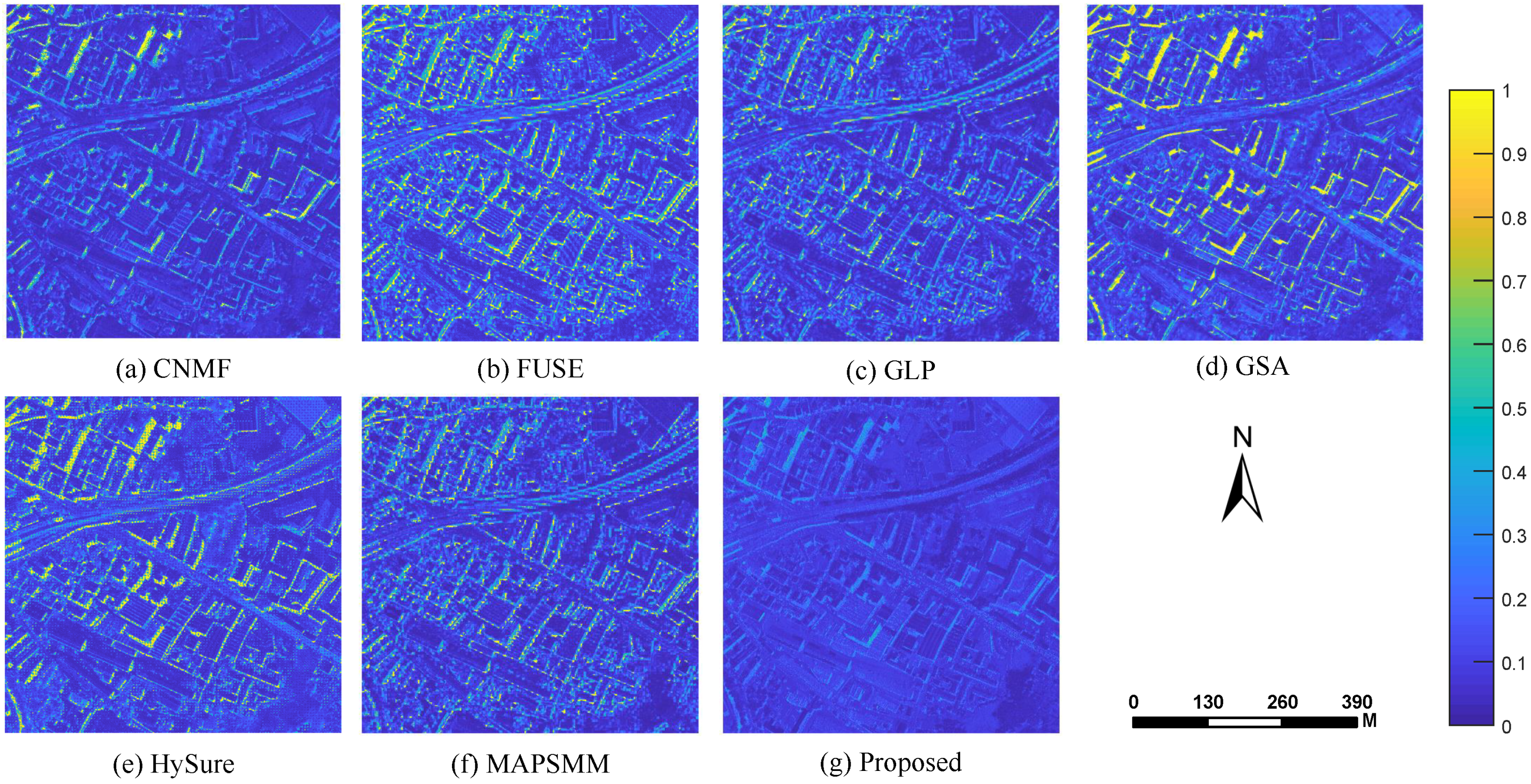

- CNMF [23] is a well-known HS–MS fusion method based on coupled nonnegative matrix factorization (NMF) unmixing of the two images.

- FUSE [18] is a Bayesian HS–MS fusion algorithm with low computational cost, achieved by solving a Sylvester equation.

- GLP [17] computes the difference between the high-resolution image and its low-pass-filtered version and multiplies it by a gain factor to obtain the spatial details injected into each low-resolution band.

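- GSA [16] is an adaptive Gram–Schmidt algorithm that estimates the intensity component through multivariate regression, which preserves spectral information better than the standard Gram–Schmidt method.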

- HySure [20] formulates fusion as a convex problem with subspace-based regularization and uses total variation regularization, which preserves edges in the fused image while smoothing out noise in homogeneous regions.

- MAPSMM [21] is a classic Bayesian HS–MS fusion method based on maximum a posteriori (MAP) estimation with a stochastic mixing model (SMM).
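To make the GLP-style detail injection described above concrete, here is a minimal sketch. It is a simplification under stated assumptions, not the GLP implementation evaluated in the paper, and the names are hypothetical. The low-pass version comes from a crude one-level pyramid (block averaging followed by pixel replication) rather than an MTF-matched filter. The high-resolution detail source is a single band, for example the mean of the HSR-MS bands. The per-band gain is taken as the regression coefficient cov(HS_k, low) / var(low).

```python
import numpy as np


def reduce_expand(img, ratio):
    """One pyramid level: block-average by `ratio`, then expand back by pixel replication."""
    h, w = img.shape
    small = img.reshape(h // ratio, ratio, w // ratio, ratio).mean(axis=(1, 3))
    return np.repeat(np.repeat(small, ratio, axis=0), ratio, axis=1)


def glp_like_fusion(hs_up, detail_src, ratio):
    """Add gain-weighted high-pass detail of `detail_src` to every upsampled HS band.

    hs_up:      (bands, H, W) LSR-HS image already interpolated to the HSR-MS grid
    detail_src: (H, W) high-resolution band; H and W must be divisible by `ratio`
    """
    low = reduce_expand(detail_src, ratio)
    detail = detail_src - low                    # high-resolution image minus its low-pass version
    fused = np.empty(hs_up.shape, dtype=float)
    for k, band in enumerate(hs_up):
        c = np.cov(band.ravel(), low.ravel())    # 2x2 covariance matrix
        gain = c[0, 1] / c[1, 1] if c[1, 1] > 0 else 0.0
        fused[k] = band + gain * detail
    return fused


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ms = rng.uniform(size=(4, 16, 16))           # stand-in 4-band HSR-MS image
    pan_like = ms.mean(axis=0)                   # ASSUMED single detail source
    hs_up = np.stack([reduce_expand(b, 4) for b in rng.uniform(size=(8, 16, 16))])
    print(glp_like_fusion(hs_up, pan_like, 4).shape)   # (8, 16, 16)
```

The regression gain is only one of several injection rules used with GLP; with it, an HS band that already equals the low-pass detail source is restored exactly to the high-resolution band.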

3.3.1. Simulated Dataset Fusion Results

3.3.2. Real Dataset Fusion Results

4. Discussion

4.1. Results Discussion

4.2. Time Complexity

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

2. Shao, Z.; Fu, H.; Li, D.; Altan, O.; Cheng, T. Remote sensing monitoring of multi-scale watersheds impermeability for urban hydrological evaluation. Remote Sens. Environ. 2019, 232, 111338.

3. Shao, Z.; Zhang, L.; Zhou, X.; Ding, L. A novel hierarchical semisupervised SVM for classification of hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1609–1613.

4. Shao, Z.; Zhou, W.; Cheng, Q.; Diao, C.; Lei, Z. An effective hyperspectral image retrieval method using integrated spectral and textural features. Sens. Rev. 2015, 35, 274–281.

5. Li, W.; Wu, G.; Zhang, F.; Du, Q. Hyperspectral image classification using deep pixel-pair features. IEEE Trans. Geosci. Remote Sens. 2016, 55, 844–853.

6. Ma, Y.; Zhang, Y.; Mei, X.; Dai, X.; Ma, J. Multifeature-Based Discriminative Label Consistent K-SVD for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4995–5008.

7. Shao, Z.; Zhang, L. Sparse dimensionality reduction of hyperspectral image based on semi-supervised local Fisher discriminant analysis. Int. J. Appl. Earth Obs. Geoinf. 2014, 31, 122–129.

8. Zhang, L.; Weng, Q.; Shao, Z. An evaluation of monthly impervious surface dynamics by fusing Landsat and MODIS time series in the Pearl River Delta, China, from 2000 to 2015. Remote Sens. Environ. 2017, 201, 99–114.

9. Jiang, J.; Ma, J.; Chen, C.; Wang, Z.; Cai, Z.; Wang, L. SuperPCA: A superpixelwise PCA approach for unsupervised feature extraction of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4581–4593.

10. Shao, Z.; Cai, J.; Fu, P.; Hu, L.; Liu, T. Deep learning-based fusion of Landsat-8 and Sentinel-2 images for a harmonized surface reflectance product. Remote Sens. Environ. 2019, 235, 111425.

11. Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and multispectral data fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56.

12. He, L.; Wang, M.; Zhu, Y.; Chang, X.; Feng, X. Image Fusion for High-Resolution Optical Satellites Based on Panchromatic Spectral Decomposition. Sensors 2019, 19, 2619.

13. Loncan, L.; De Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

14. Carper, W.; Lillesand, T.; Kiefer, R. The use of intensity-hue-saturation transformations for merging SPOT panchromatic and multispectral image data. Photogramm. Eng. Remote Sens. 1990, 56, 459–467.

15. Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

16. Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS + Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

17. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and Pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

18. Wei, Q.; Dobigeon, N.; Tourneret, J.Y. Fast fusion of multi-band images based on solving a Sylvester equation. IEEE Trans. Image Process. 2015, 24, 4109–4121.

19. Zhang, Y.; De Backer, S.; Scheunders, P. Noise-resistant wavelet-based Bayesian fusion of multispectral and hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3834–3843.

20. Simões, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. A convex formulation for hyperspectral image superresolution via subspace-based regularization. IEEE Trans. Geosci. Remote Sens. 2014, 53, 3373–3388.

21. Eismann, M.T.; Hardie, R.C. Application of the stochastic mixing model to hyperspectral resolution enhancement. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1924–1933.

22. Keshava, N.; Mustard, J.F. Spectral unmixing. IEEE Signal Process. Mag. 2002, 19, 44–57.

23. Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2011, 50, 528–537.

24. Akhtar, N.; Shafait, F.; Mian, A. Sparse spatio-spectral representation for hyperspectral image super-resolution. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 63–78.

25. Lanaras, C.; Baltsavias, E.; Schindler, K. Hyperspectral super-resolution by coupled spectral unmixing. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 2015; pp. 3586–3594.

26. Wang, X.; Zhong, Y.; Zhang, L.; Xu, Y. Spatial group sparsity regularized nonnegative matrix factorization for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6287–6304.

27. Huang, B.; Song, H.; Cui, H.; Peng, J.; Xu, Z. Spatial and spectral image fusion using sparse matrix factorization. IEEE Trans. Geosci. Remote Sens. 2013, 52, 1693–1704.

28. Nascimento, J.M.; Dias, J.M. Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 898–910.

29. Lawson, C.L.; Hanson, R.J. Solving Least Squares Problems; SIAM: Philadelphia, PA, USA, 1995; Volume 15.

30. Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 354–379.

31. Wycoff, E.; Chan, T.H.; Jia, K.; Ma, W.K.; Ma, Y. A non-negative sparse promoting algorithm for high resolution hyperspectral imaging. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 1409–1413.

32. Kruse, F.A.; Lefkoff, A.; Boardman, J.; Heidebrecht, K.; Shapiro, A.; Barloon, P.; Goetz, A. The spectral image processing system (SIPS)—interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163.

33. Wald, L. Quality of high resolution synthesised images: Is there a simple criterion? In Proceedings of the Third Conference “Fusion of Earth Data: Merging point Measurements, Raster Maps and Remotely Sensed Images”, Sophia Antipolis, France, 26–28 January 2000; pp. 99–103.

34. Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

35. Takeyama, S.; Ono, S.; Kumazawa, I. Hyperspectral and Multispectral Data Fusion by a Regularization Considering. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2152–2156.

36. Lu, T.; Wang, J.; Zhang, Y.; Wang, Z.; Jiang, J. Satellite Image Super-Resolution via Multi-Scale Residual Deep Neural Network. Remote Sens. 2019, 11, 1588.

| Data | Index | CNMF | FUSE | GLP | GSA | HySure | MAPSMM | Proposed |
|---|---|---|---|---|---|---|---|---|
| Pavia | PSNR (dB) | 31.747 | 26.766 | 27.838 | 30.636 | 28.061 | 26.255 | 32.860 |
| | SAM (°) | 6.357 | 13.646 | 8.643 | 11.774 | 11.876 | 9.629 | 5.700 |
| | ERGAS | 4.973 | 8.667 | 7.448 | 5.460 | 7.334 | 8.922 | 4.799 |
| | Q2n | 0.929 | 0.836 | 0.852 | 0.935 | 0.888 | 0.792 | 0.942 |
| Urban | PSNR (dB) | 25.890 | 23.223 | 25.370 | 27.235 | 24.183 | 24.002 | 29.083 |
| | SAM (°) | 8.665 | 15.190 | 8.689 | 11.307 | 13.946 | 9.546 | 8.827 |
| | ERGAS | 7.361 | 10.240 | 7.715 | 6.180 | 9.008 | 9.028 | 5.745 |
| | Q2n | 0.863 | 0.772 | 0.817 | 0.886 | 0.802 | 0.772 | 0.933 |

| Data | Index | CNMF | FUSE | GLP | GSA | HySure | MAPSMM | Proposed |
|---|---|---|---|---|---|---|---|---|
| Hengqin | MG | 57.743 | 6.852 | 7.791 | 14.812 | 57.570 | 9.996 | 69.575 |
| | SAM (°) | 4.407 | 80.276 | 1.546 | 4.589 | 8.239 | 2.435 | 4.849 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Feng, X.; He, L.; Cheng, Q.; Long, X.; Yuan, Y. Hyperspectral and Multispectral Remote Sensing Image Fusion Based on Endmember Spatial Information. Remote Sens. 2020, 12, 1009. https://doi.org/10.3390/rs12061009
