1. Introduction

The GF-1 satellite is the first satellite of China’s high-resolution Earth observation system (GF abbreviates the Chinese term for “high resolution”). It was successfully launched by a Long March-2D carrier rocket at 12:13:04 on 26 April 2013, opening a new era of Earth observation for China. GF-1 achieved breakthroughs in the key optical remote sensing technologies of high spatial resolution, multispectral imaging and wide coverage, and it has a design life of 5 to 8 years. The satellite plays an important role in the survey and dynamic monitoring of land resources, geological hazard monitoring, climate change monitoring and surveys of the distribution of agricultural facilities [1,2,3,4,5,6,7].

However, because of the imaging principle of optical satellites, many remote sensing images are inevitably covered by large amounts of cloud. Cloudless weather is rare, especially in southern China. Clouds hinder the application of visible and multispectral remote sensing images. In satellite images, clouds appear white because they scatter light; they blur the image, can prevent scientists from observing the surface and can render an image completely unusable [8,9,10,11]. Clouds also cast shadows in the image, which obscure some ground objects and likewise prevent researchers from observing the surface [12,13,14]. Therefore, it is essential to evaluate the quality of remote sensing image data and to identify and calculate the cloud-covered area, so as to avoid storing invalid data and wasting subsequent computing resources.

Existing cloud detection methods can be divided into three categories. The first is the physical method, which detects cloud in each pixel of a remote sensing image from its multispectral physical features. Early physical methods often used a fixed threshold to distinguish clouds from other objects. In 1987, Saunders applied a series of physical thresholds to NOAA AVHRR sensor data, in what may be the first published study of cloud detection [15]. Subsequently, many researchers proposed thresholds tailored to different remote sensing datasets (e.g., MODIS, Landsat and GF-1 imagery) [16,17,18,19,20], while others focused on improving the fixed threshold method to obtain an optimal cloud detection result [16,19]. However, a fixed threshold has many shortcomings and limitations. With the increasing demand for cloud detection accuracy, more and more studies have adopted a dynamic threshold instead. For example, Gennaro et al. selected thresholds dynamically through manual intervention [21]. Although this improves the accuracy of cloud detection, the need for manual intervention makes it hard to apply to massive numbers of images. To address this issue, some researchers proposed methods in which the thresholds change with the solar altitude angle and adapt to the actual atmospheric and surface conditions [22,23]. These studies show that physical methods are computationally fast because their models are simple, which meets our need to process massive numbers of images. Moreover, because the dynamic threshold method has rarely been applied to GF-1 images, it is crucial to find effective physical features and a reasonable dynamic adaptive threshold method for GF-1 images.
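The contrast between a fixed threshold and one that follows the solar altitude angle can be sketched in a few lines. The example below is a minimal illustration rather than any of the cited methods: the linear dependence on the sine of the solar altitude, the coefficients `a` and `b`, the function names and the reflectance values are all hypothetical.

```python
import math

def fixed_threshold_mask(reflectance, threshold):
    """Flag pixels brighter than one fixed threshold as cloud."""
    return [value > threshold for value in reflectance]

def dynamic_threshold(solar_altitude_deg, a=0.10, b=0.25):
    """Hypothetical threshold that rises with the sine of the solar altitude.

    a and b are illustrative coefficients, not values from any cited study.
    """
    return a + b * math.sin(math.radians(solar_altitude_deg))

def dynamic_threshold_mask(reflectance, solar_altitude_deg):
    """Flag cloud pixels using a threshold computed once per image."""
    threshold = dynamic_threshold(solar_altitude_deg)
    return [value > threshold for value in reflectance]

# The same made-up pixel reflectances, imaged under a low and a high sun.
pixels = [0.12, 0.22, 0.30, 0.45]
fixed = fixed_threshold_mask(pixels, threshold=0.25)
low_sun = dynamic_threshold_mask(pixels, solar_altitude_deg=20.0)
high_sun = dynamic_threshold_mask(pixels, solar_altitude_deg=70.0)
```

With these illustrative numbers the fixed threshold labels the pixels identically in both scenes, whereas the dynamic threshold labels more pixels as cloud under the low sun than under the high sun. No operator input is needed, because the threshold is derived from image metadata.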

The second category is based on the texture and spatial features of clouds and relies on the spatial information in the image. An image’s texture reflects the spatial variation of the spectral brightness values. Although cloud textures vary, clouds have statistical texture characteristics that distinguish them from the ground objects they cover, and cloud-covered areas have higher brightness and correspondingly higher grey levels. Liu et al. detected clouds in MODIS images using a classification matrix and a shortest-distance dynamic clustering algorithm [24]. Sun et al. generated a cloud mask for Landsat by Gaussian filtering [25]. Liu et al. used a grey level co-occurrence matrix to extract cloud texture features as a criterion for their cloud detection algorithm [26]. Algorithms of this kind are complex and computationally intensive, so they struggle to meet the demand for prompt processing.
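To make the idea of a grey level co-occurrence matrix concrete, the sketch below counts horizontally adjacent grey-level pairs in a tiny image and derives the contrast statistic from the counts. It is a minimal pure-Python illustration, not the algorithm of [26]; the function names, the single horizontal offset and the 4-level toy patches are assumptions.

```python
def glcm_horizontal(image, levels):
    """Count grey-level pairs (i, j) for each pixel and its right-hand neighbour."""
    matrix = [[0] * levels for _ in range(levels)]
    for row in image:
        for left, right in zip(row, row[1:]):
            matrix[left][right] += 1
    return matrix

def glcm_contrast(matrix):
    """Contrast = sum over (i, j) of p(i, j) * (i - j)^2, with p the normalised counts."""
    total = sum(sum(row) for row in matrix)
    if total == 0:
        return 0.0
    weighted = sum(
        count * (i - j) ** 2
        for i, row in enumerate(matrix)
        for j, count in enumerate(row)
    )
    return weighted / total

# Toy 4-level patches: one nearly uniform, one alternating dark and bright.
smooth_patch = [[3, 3, 3], [3, 3, 3], [3, 3, 2]]
busy_patch = [[0, 3, 0], [3, 0, 3], [0, 3, 0]]

smooth_contrast = glcm_contrast(glcm_horizontal(smooth_patch, levels=4))
busy_contrast = glcm_contrast(glcm_horizontal(busy_patch, levels=4))
```

The nearly uniform patch yields a contrast close to zero, while the alternating patch yields a large value. Because every pixel pair in every window must be visited, features of this kind cost noticeably more than a per-pixel threshold test.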

The third category is machine learning. With the development of computer technology, machine learning has been widely used in remote sensing and provides another effective means of cloud detection. Research on machine-learning-based cloud detection has two key points: how to extract and select cloud features, and how to design the cloud detector, for which artificial neural networks, support vector machines, random forests, clustering and other methods have been used [26,27]. Jin et al. built a back-propagation neural network for MODIS remote sensing images, which has a better learning ability [28]. Yu et al. used a clustering method based on texture features to detect cloud areas automatically [29]. Wang Wei et al. used a K-means clustering algorithm for an initial classification of the data, and then applied spectral threshold tests to eliminate interference from smoke and snow when detecting clouds in MODIS data [27,30]. Fu et al. detected clouds in FY meteorological satellite imagery with the random forest method [31]. However, machine-learning-based methods usually require large numbers of training and test samples for model construction and accuracy evaluation, and the generalization ability of the resulting model cannot be effectively guaranteed across a wide range of applications.

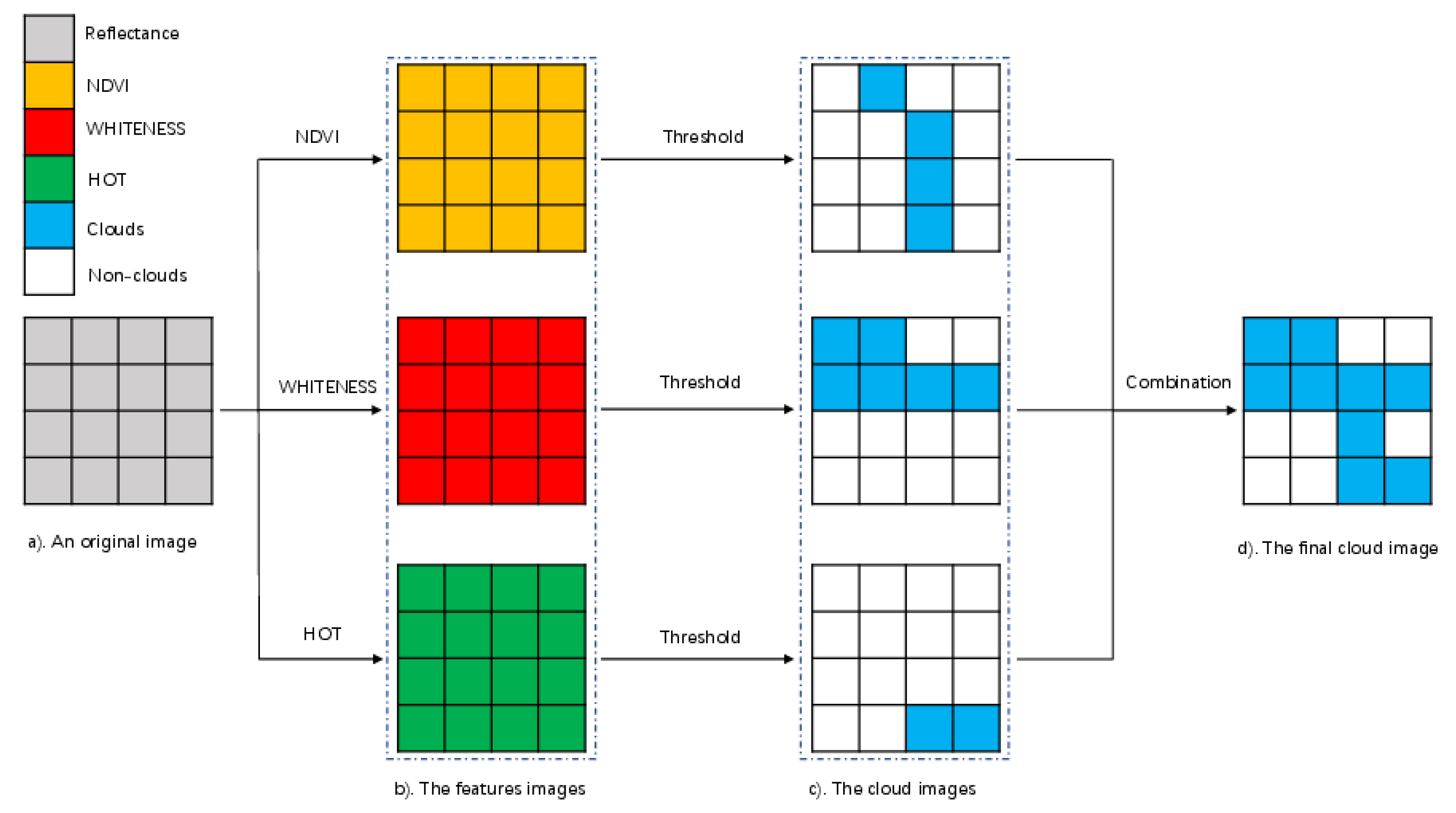

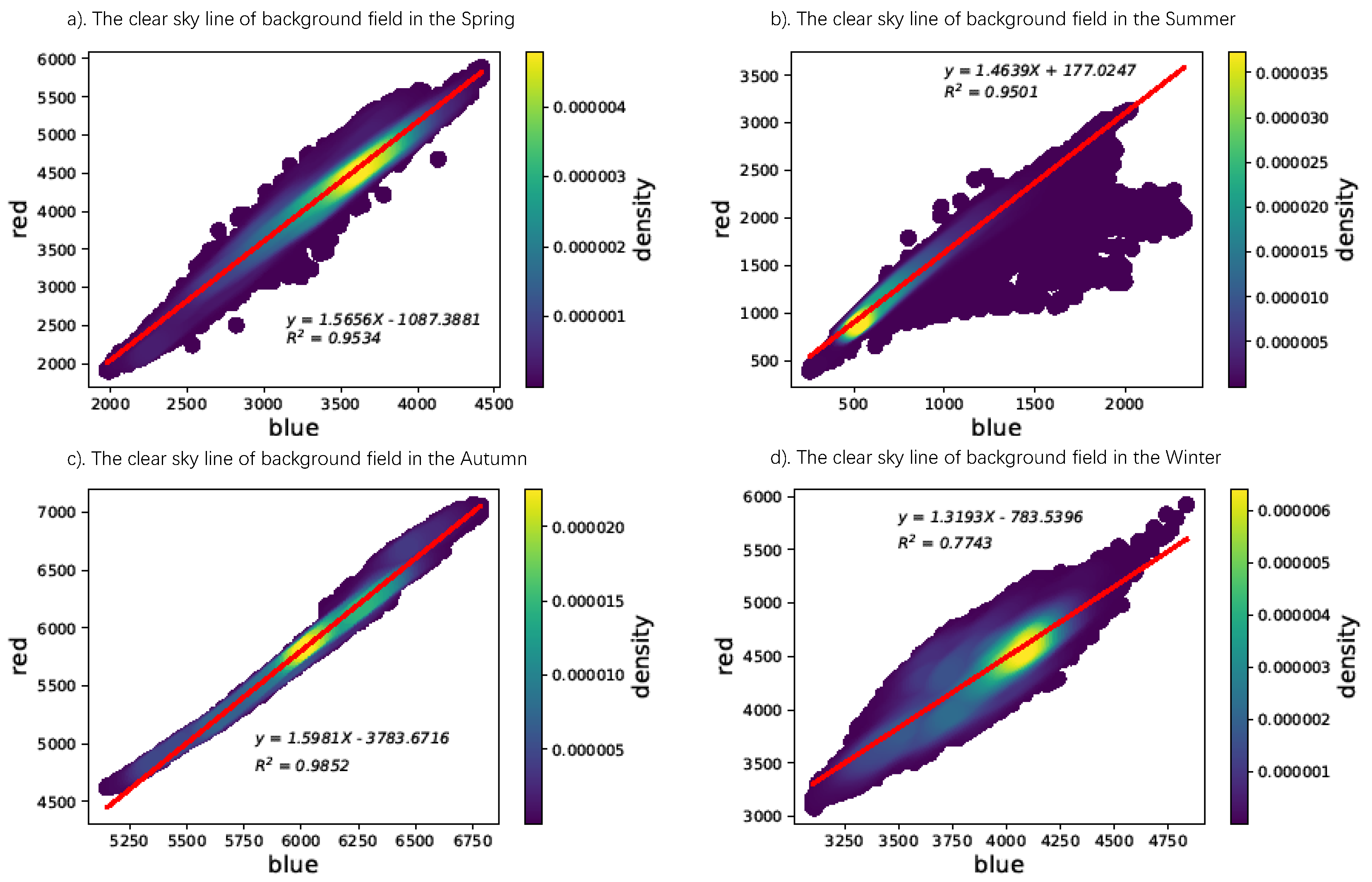

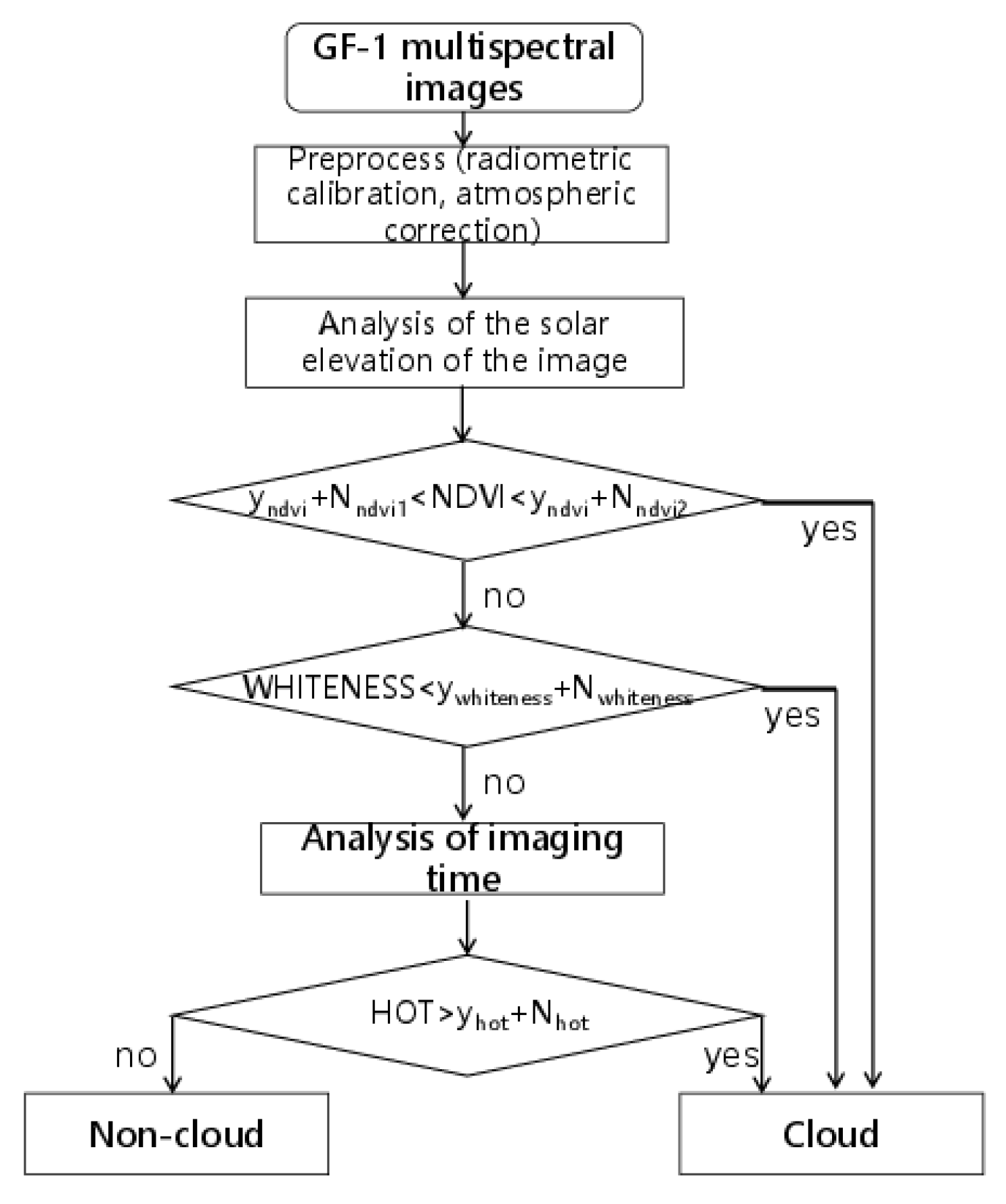

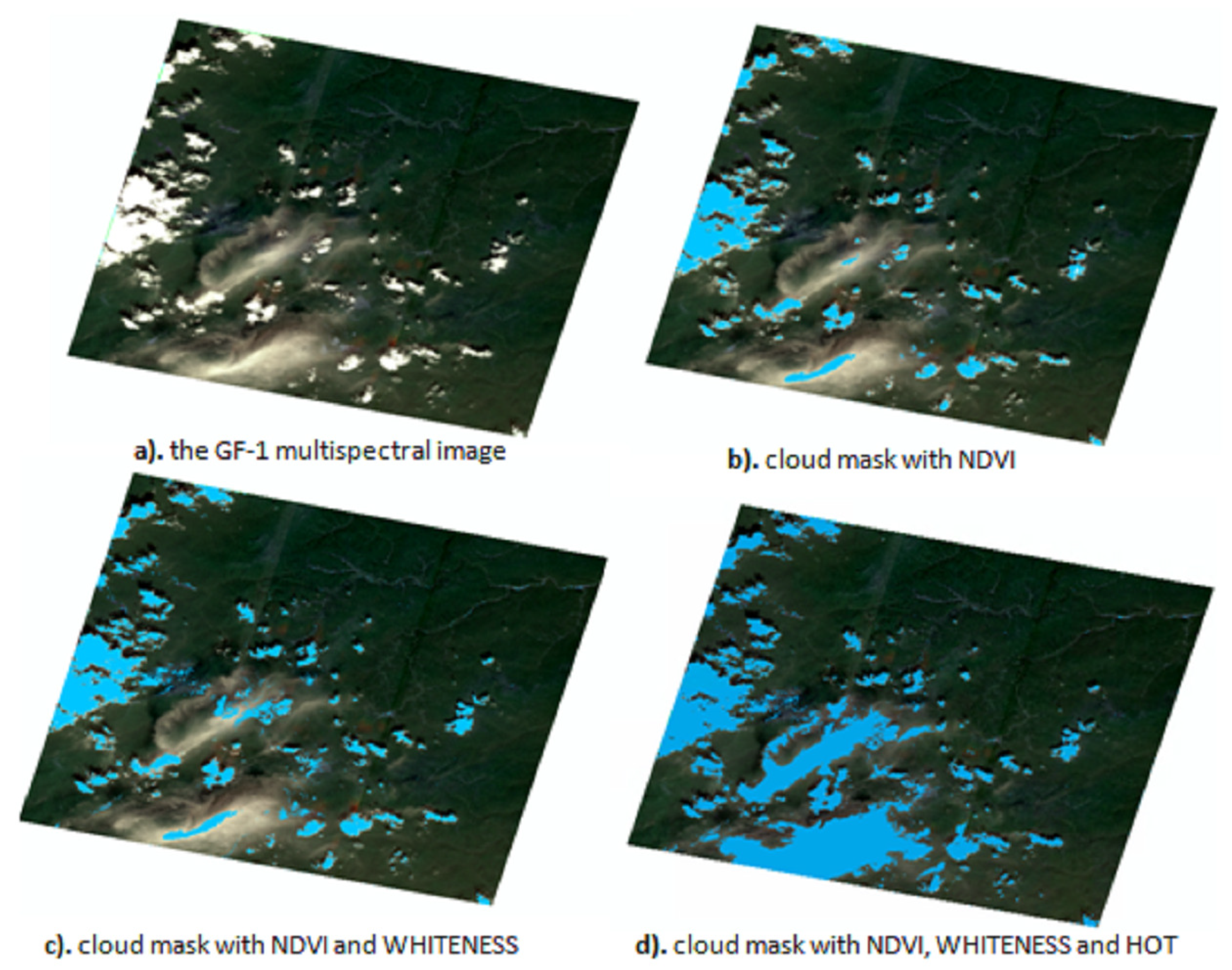

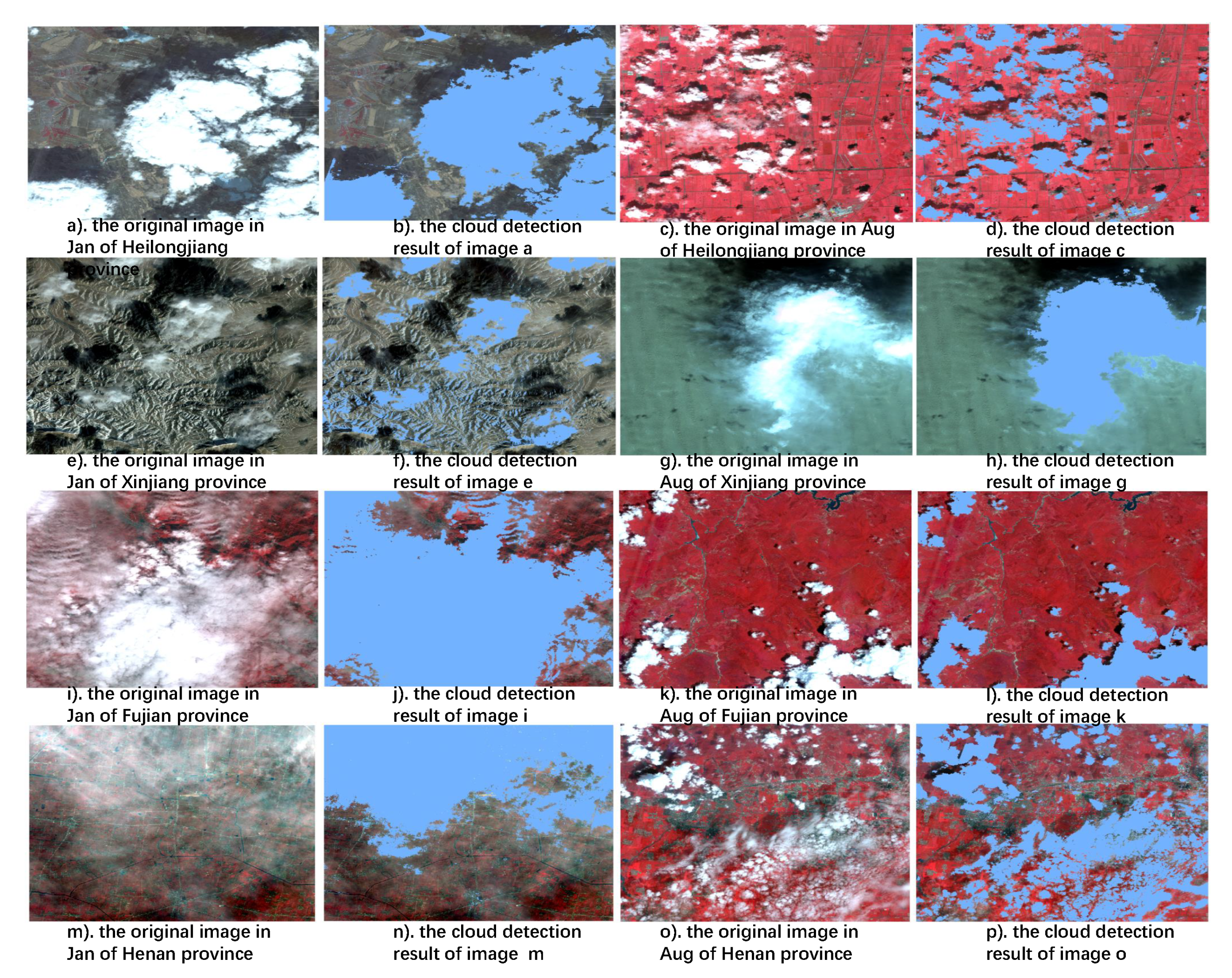

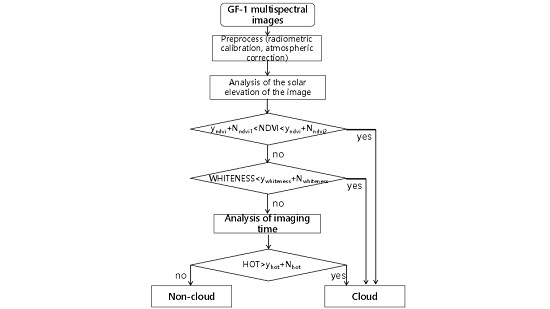

To detect clouds promptly in GF-1 remote sensing images covering the whole of China, and considering the data quality requirements of subsequent agricultural applications, this paper considers it more important to reduce the leakage recognition rate of clouds (i.e., if an image contains 100 cloud pixels, we should find as many of them as possible, regardless of how many pixels in total we mark as cloud) than to reduce the error recognition rate. Therefore, after analyzing the spectral features of typical targets in GF-1 remote sensing images, three cloud detection feature parameters are selected so that suspected cloud pixels are also identified. This work also uses Kawano’s dynamic threshold method to relate the features to the solar altitude angle of each image, which allows the threshold of each feature to be adjusted dynamically. Experiments demonstrate that the algorithm is simple and efficient and that it can be used for automatic cloud detection in GF-1 remote sensing images throughout China.
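The trade-off described above can be expressed with two rates computed from a reference mask and a detected mask. In the sketch below, the leakage recognition rate is read as the fraction of true cloud pixels that were missed, and the error recognition rate as the fraction of detected cloud pixels that are not cloud. This reading, the function name and the toy masks are assumptions made for illustration.

```python
def cloud_detection_rates(reference, detected):
    """Return (leakage_rate, error_rate) for two equal-length boolean masks."""
    if len(reference) != len(detected):
        raise ValueError("masks must have the same length")
    missed = sum(1 for r, d in zip(reference, detected) if r and not d)
    false_alarms = sum(1 for r, d in zip(reference, detected) if d and not r)
    true_cloud = sum(1 for r in reference if r)
    marked_cloud = sum(1 for d in detected if d)
    leakage_rate = missed / true_cloud if true_cloud else 0.0
    error_rate = false_alarms / marked_cloud if marked_cloud else 0.0
    return leakage_rate, error_rate

# Toy image of 1000 pixels, the first 100 of which are cloud.
reference = [True] * 100 + [False] * 900

# A cautious detector marks only 80 pixels, all of them real cloud.
cautious = [True] * 80 + [False] * 920
# A generous detector marks 150 pixels, covering all 100 real cloud pixels.
generous = [True] * 150 + [False] * 850

cautious_rates = cloud_detection_rates(reference, cautious)
generous_rates = cloud_detection_rates(reference, generous)
```

The cautious detector makes no false alarms but misses a fifth of the cloud. The generous detector misses none, at the price of marking 50 clear pixels as cloud. Under the priority stated above, the second behaviour is the preferred one.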

This paper is organized as follows. Section 2 introduces a cloud detection algorithm for GF-1 remote sensing images based on hybrid multispectral features with a dynamic threshold, including the analysis and selection of the feature parameters, the seasonal adjustment of the coefficients in the HOT calculation and the spatial adjustment of the feature parameter thresholds. Section 3 presents the experimental cloud detection results. Finally, Section 4 and Section 5 discuss the experimental results and list future work.

4. Discussion

The fixed thresholds of each feature parameter found in Section 3.2 are partially inconsistent with the cloud range of each parameter given in Section 2.1. This is mainly because the two analyses used different data. It shows that, although the threshold method can distinguish cloud from non-cloud objects to a certain extent, the thresholds vary across regions and seasons, which implies that no fixed threshold on multispectral features is suitable for nationwide cloud detection. Thus, the dynamic threshold cloud detection method proposed in this paper is essential, as it can calculate the corresponding threshold for each GF-1 remote sensing image.

However, cloud and snow overlap in their spectral characteristics, and GF-1 images lack the Short Wave Infrared (SWIR) band needed to calculate the Normalized Difference Snow Index (NDSI), so this method easily confuses cloud with snow. The main aim of this work is to acquire the true reflectance of crops for subsequent agricultural applications. If crops are covered by snow, the reflectance in a remote sensing image cannot reflect the real condition of the crop, so these pixels cannot be used either. Therefore, this kind of misjudgement is beneficial rather than harmful for our purpose and can be ignored in this study. For other applications, however, clouds and snow might need to be distinguished effectively; in that case, interested researchers can consider using texture features to separate them. Furthermore, cloud shadow is also a challenge in cloud detection, and the method in this paper cannot address it. In future work, feature parameters that can detect cloud shadow will be considered.
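For reference, the NDSI mentioned above is commonly defined from the reflectances in a green band and a SWIR band. The symbols $\rho_{\mathrm{green}}$ and $\rho_{\mathrm{SWIR}}$ are notation introduced here, not taken from the rest of this paper:

```latex
\mathrm{NDSI} = \frac{\rho_{\mathrm{green}} - \rho_{\mathrm{SWIR}}}{\rho_{\mathrm{green}} + \rho_{\mathrm{SWIR}}}
```

Snow is bright in the green band but dark in the SWIR band, whereas most clouds remain bright in both, so the index is high for snow and low for cloud. Without a SWIR band the index cannot be computed, and the two classes remain difficult to separate spectrally.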

To evaluate the accuracy of cloud detection results, this work, like most studies, still selects corresponding points at random from the original image and the detection result, and then judges the accuracy by visual interpretation. How to evaluate cloud detection accuracy more objectively is therefore a difficulty at the current stage of research and is the next task of this study. In addition, this paper established its set of parameters from images of Heilongjiang province, although the nationwide application has demonstrated that the method works. In the future, we will also explore how these parameters differ between regions.