An Ensemble Learning Approach for Urban Land Use Mapping Based on Remote Sensing Imagery and Social Sensing Data

Abstract

1. Introduction

2. Materials and Methods

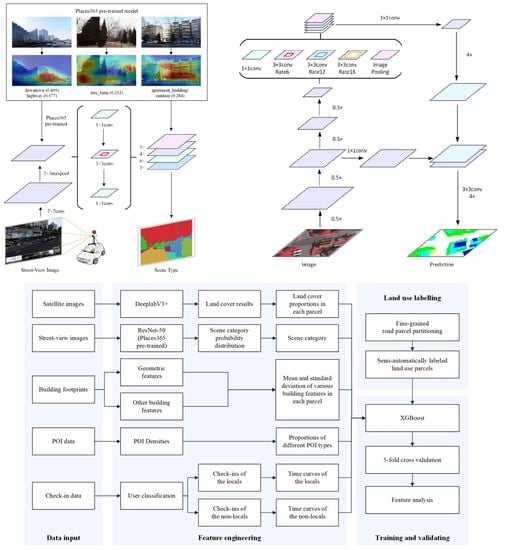

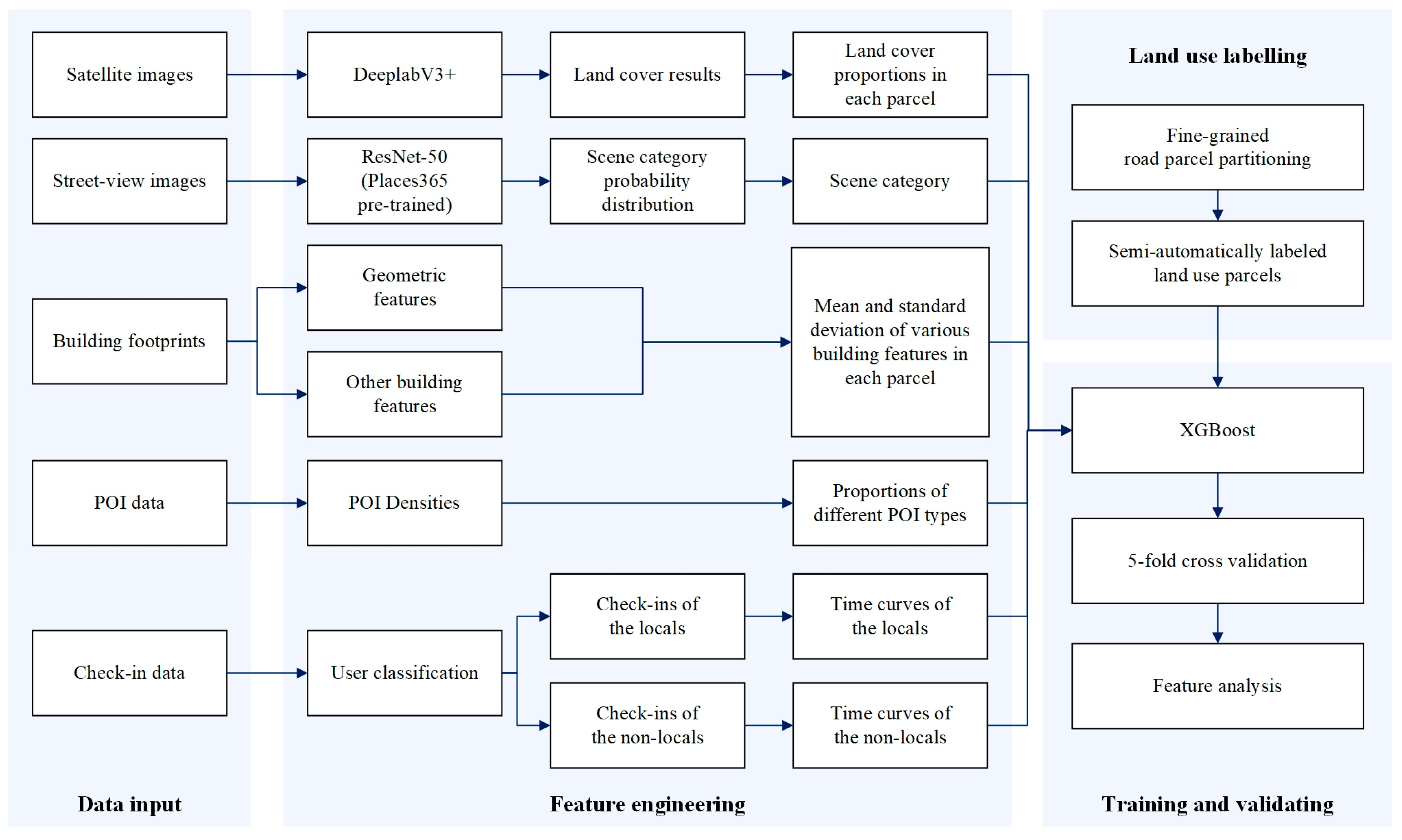

2.1. The Ensemble Learning Model

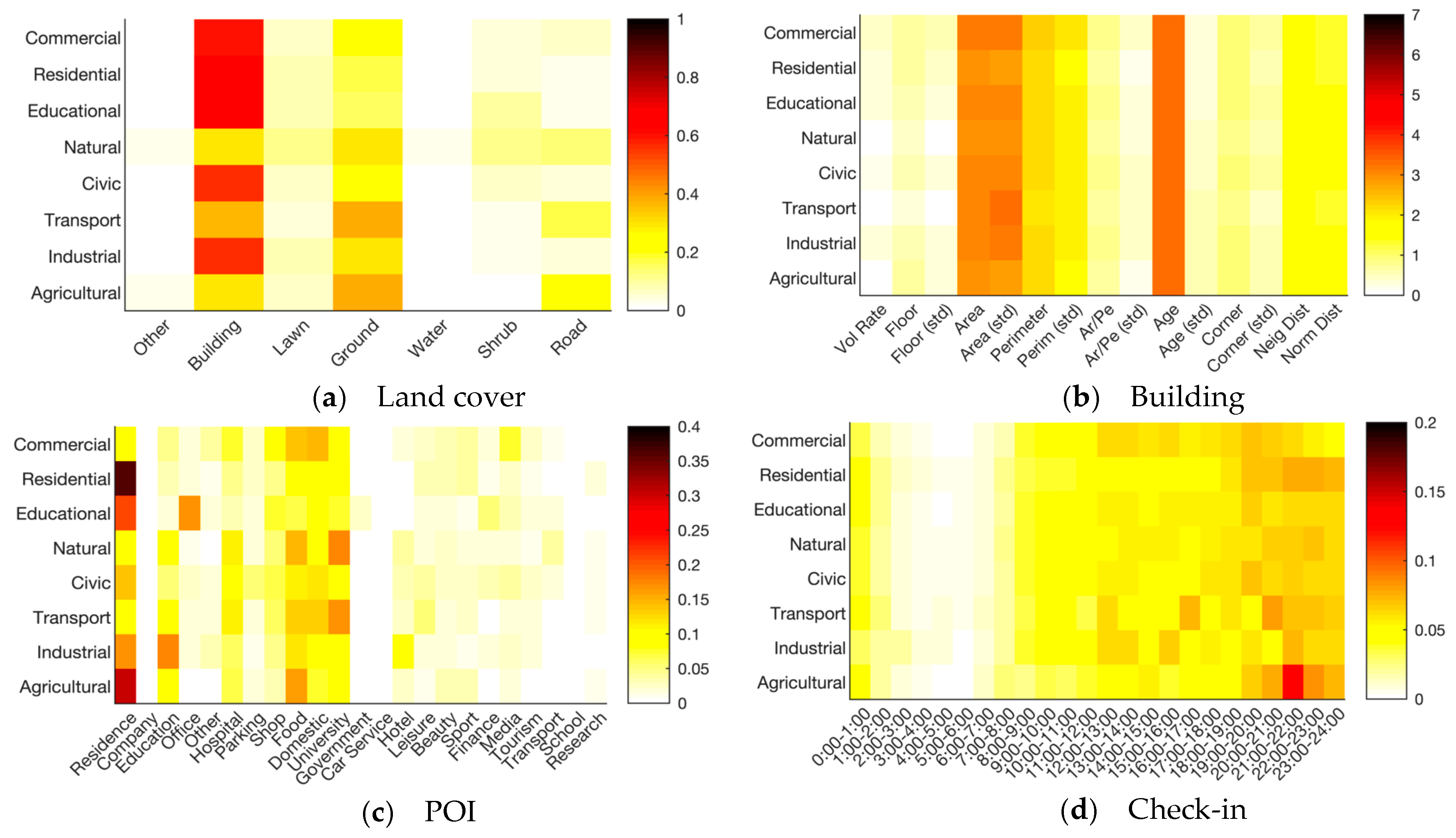

2.2. Urban Feature Engineering

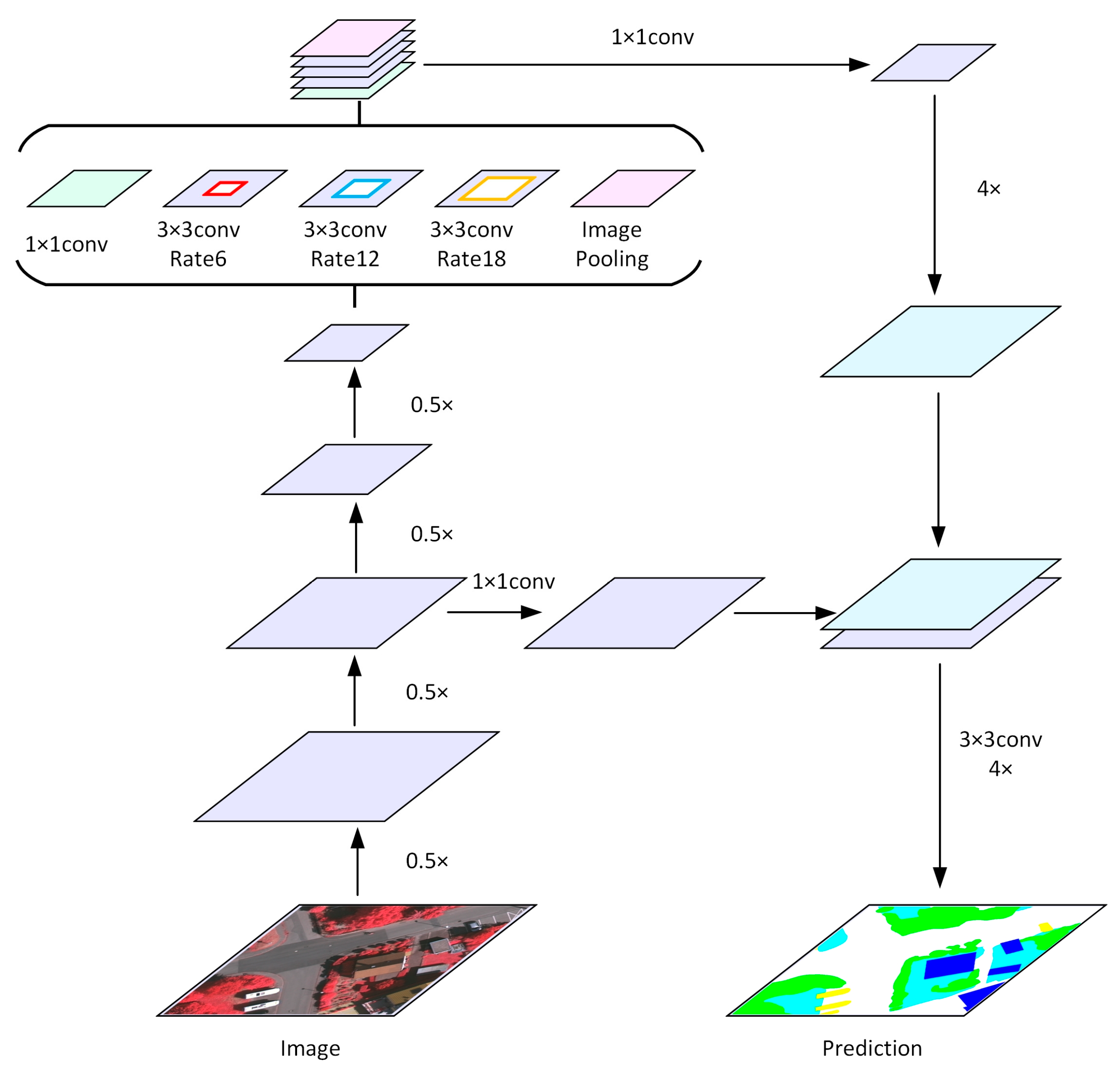

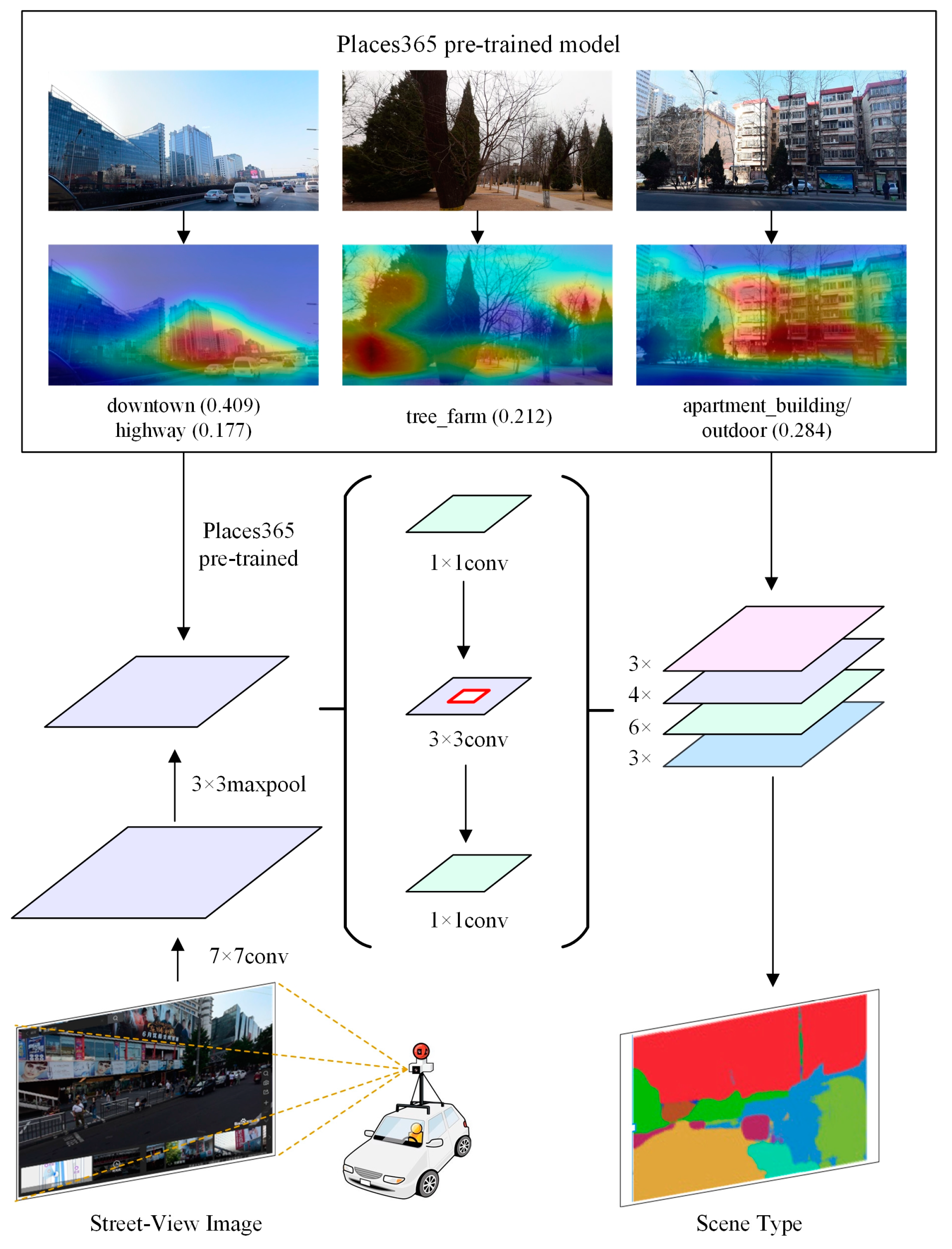

2.2.1. Extraction of Physical Features

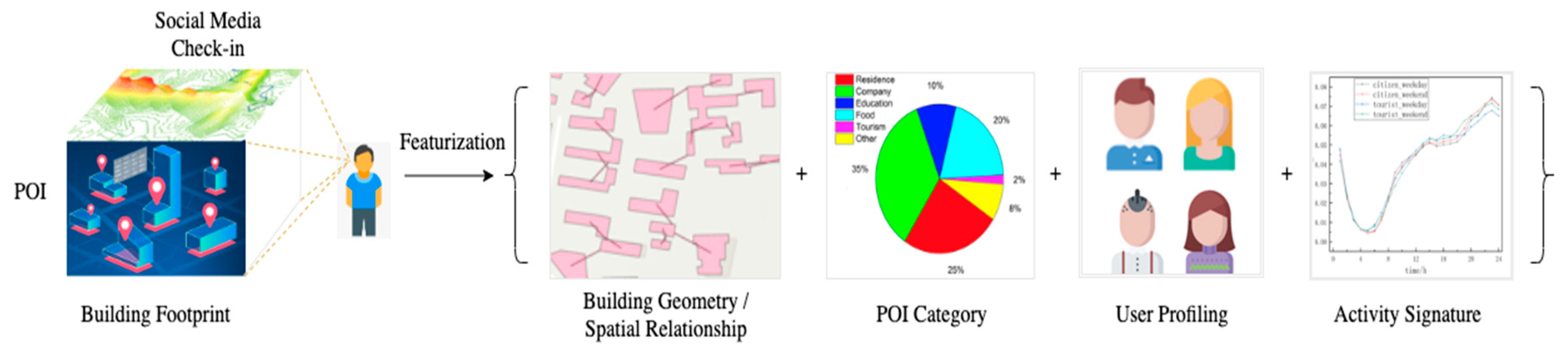

2.2.2. Extraction of Socioeconomic Features

2.3. Land Use Taxonomy for Model Training and Validation

Algorithm 1. Semi-automatic labeling method

Input: research units U; land use type data as a raster dataset LU

Output: land use type label L for each unit

- For each unit u of U:
  - I ← number of raster cells of each land use type in LU that intersect u
  - P ← proportion of each type in I
  - P ← sort(P) in descending order
  - If P[0] > 0.6 (pure parcel): L[u] ← type of P[0]
  - Else if P[0] > 0.4 and P[1] < 0.2 (mixed parcel with a major category): L[u] ← type of P[0]
  - Else (mixed parcel): L[u] ← label assigned manually, considering multisource data such as POIs and street-view images
- Return L
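The decision rule of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function names, the dictionary-based input (one list of raster cell types per unit), and the `manual_label` callback that stands in for the manual step are all assumptions introduced here. Only the thresholds 0.6, 0.4, and 0.2 come from the algorithm itself.

```python
from collections import Counter
from typing import Callable, Dict, Hashable, Iterable, Mapping, Optional


def label_unit(cell_types: Iterable[str]) -> Optional[str]:
    """Return the land use label for one unit, or None if manual labeling is needed.

    cell_types holds the land use type of every raster cell intersecting the unit
    (hypothetical input format).
    """
    counts = Counter(cell_types)
    total = sum(counts.values())
    if total == 0:
        return None  # no raster cells intersect the unit; leave it to manual labeling

    # most_common() yields (type, count) pairs sorted by count in descending order.
    ranked = counts.most_common()
    top_type, top_count = ranked[0]
    p0 = top_count / total
    p1 = ranked[1][1] / total if len(ranked) > 1 else 0.0

    if p0 > 0.6:  # pure parcel
        return top_type
    if p0 > 0.4 and p1 < 0.2:  # mixed parcel with a major category
        return top_type
    return None  # mixed parcel: needs manual labeling


def label_units(
    units: Mapping[Hashable, Iterable[str]],
    manual_label: Callable[[Hashable], str],
) -> Dict[Hashable, str]:
    """Label every unit, delegating ambiguous units to the manual_label callback."""
    labels: Dict[Hashable, str] = {}
    for unit_id, cell_types in units.items():
        automatic = label_unit(cell_types)
        labels[unit_id] = automatic if automatic is not None else manual_label(unit_id)
    return labels
```

In this sketch, `manual_label` would be supplied by an annotator-facing tool that consults POIs and street-view images for the unit in question.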

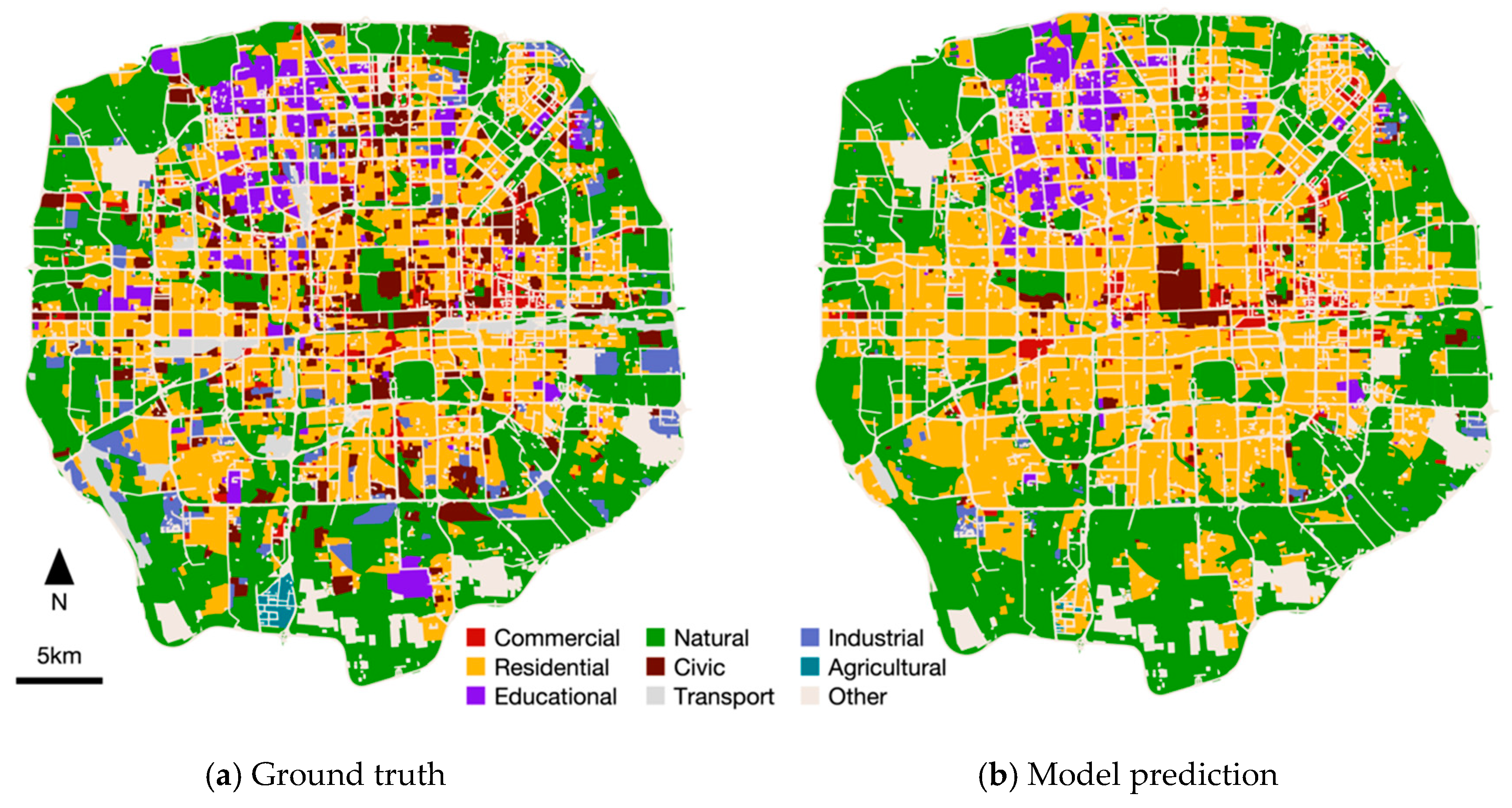

3. Results

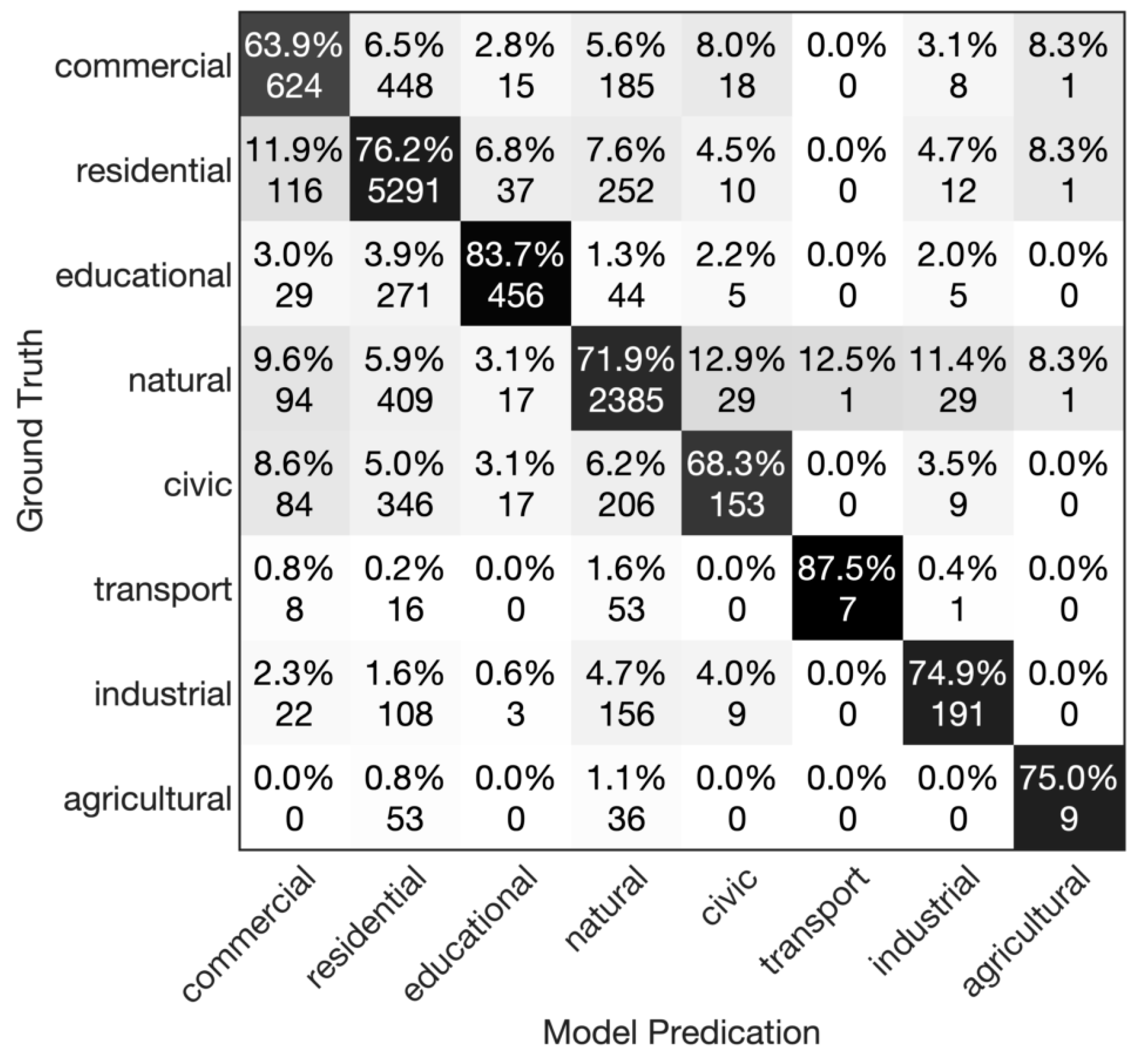

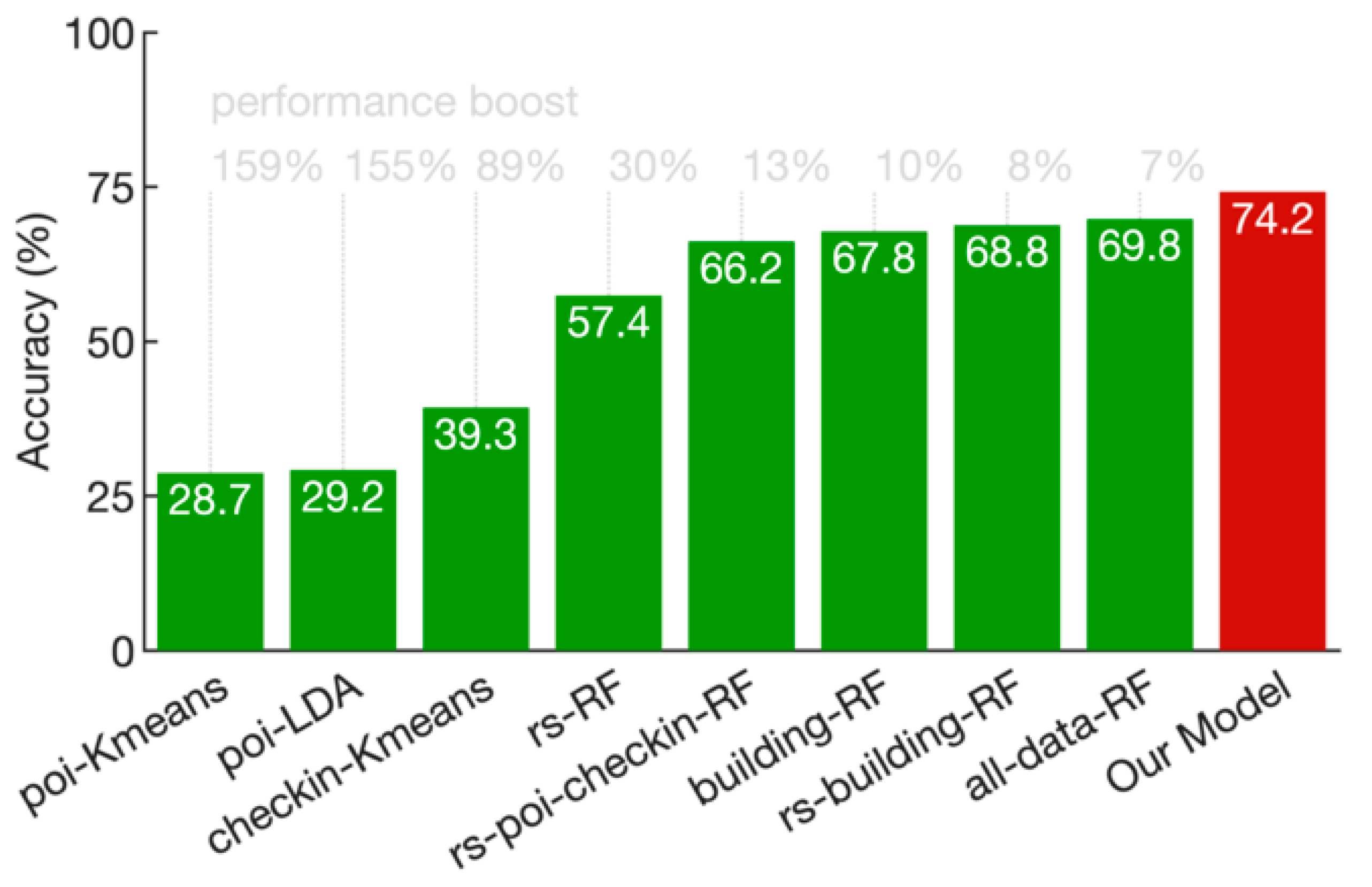

3.1. Classification Accuracy

3.2. Analysis of Contributing Features

3.3. Comparison with Alternative Models

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

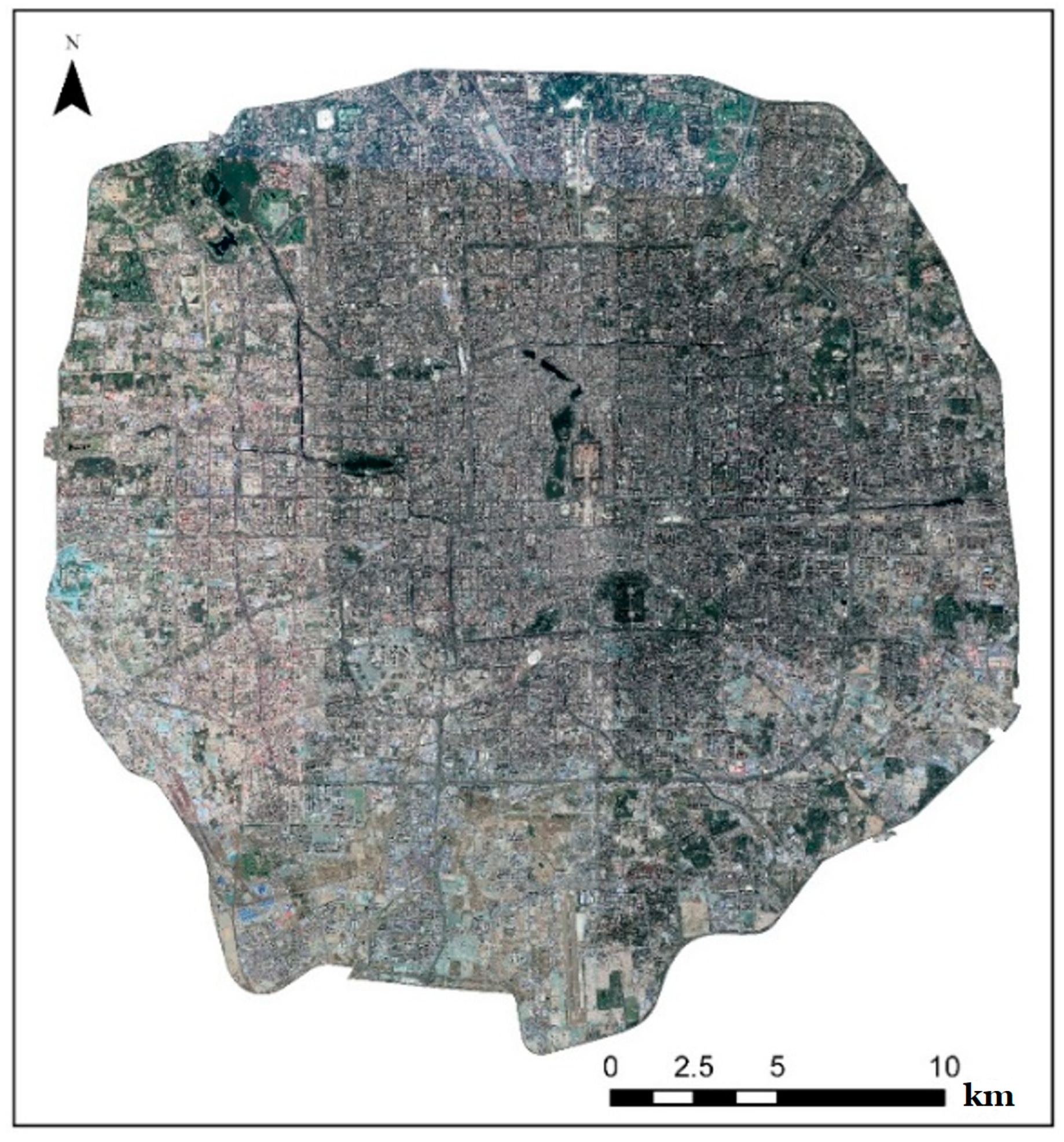

Appendix A.1. Google Remote Sensing Images

Appendix A.2. Tencent Street-View Images

Appendix A.3. Building Footprints

Appendix A.4. Baidu POIs

Appendix A.5. Sina Weibo Check-Ins

Appendix A.6. Derived Urban Features

| Data Source | Variable | Description |
|---|---|---|
| Remote sensing image | Lawn | Lawn, including small patches of permeable land inside a large lawn area |
| Remote sensing image | Shrub | Shrubs and trees |
| Remote sensing image | Ground | Bare land, farmland, construction sites, etc. |
| Remote sensing image | Impervious surface | Impervious surfaces other than roads, such as parking lots, squares, and cement floors |
| Remote sensing image | Road | Paved and unpaved artificial surfaces, including trunk roads, feeder roads, and airport runways |
| Remote sensing image | Building | Artificial roofed buildings of various shapes and types, excluding open-air stadiums |
| Remote sensing image | Water | Lakes, oceans, rivers, sewage treatment plants, swimming pools, etc. |
| Street-view image | 365 scene categories | See the link for the full list: https://github.com/metalbubble/places_devkit/blob/master/categories_places365.txt |
| Building footprint | Volume (area) | Plot ratio of a parcel |
| Building footprint | Height (mean) | The average height of all buildings in a parcel |
| Building footprint | Height (std) | The standard deviation of the height of all buildings in a parcel |
| Building footprint | Height (mean/area) | The average building height weighted by building area |
| Building footprint | Height (std/area) | The standard deviation of building height weighted by building area |
| Building footprint | Building area (mean) | The average building area of all buildings in a parcel |
| Building footprint | Building area (std) | The standard deviation of building area in a parcel |
| Building footprint | Perimeter (mean) | The average perimeter of the buildings |
| Building footprint | Perimeter (std) | The standard deviation of building perimeter |
| Building footprint | Corner (mean) | The average of the buildings' area/perimeter in a parcel |
| Building footprint | Corner (std) | The standard deviation of the buildings' area/perimeter in a parcel |
| Building footprint | Age (mean) | The average completion time of the buildings |
| Building footprint | Age (std) | The standard deviation of completion time |
| Building footprint | Nearest distance | The average nearest-neighbor distance between buildings in a parcel |
| Building footprint | Zonal nearest distance | Neighborhood distance calculated by a regional method |
| POI | Residence | Residential buildings and apartments |
| POI | Company | Companies |
| POI | Education | Education and training institutions |
| POI | Office | Office buildings and other workplaces |
| POI | Hospital | Hospitals, clinics, and pharmacies |
| POI | Parking | Open-air or indoor parking lots |
| POI | Shop | Retail stores, markets, and other shopping places |
| POI | Food | Restaurants and snack bars |
| POI | Domestic | Domestic services and amenities |
| POI | University | Universities and colleges |
| POI | Government | Government agencies and other organizations |
| POI | Car service | Automobile sales and maintenance |
| POI | Hotel | Hotels, inns, and other places for temporary accommodation |
| POI | Leisure | Recreation facilities and bars |
| POI | Beauty | Beauty salons and hair salons |
| POI | Sport | Stadiums and other sports facilities |
| POI | Finance | Banks and other financial institutions |
| POI | Media | Press and TV stations |
| POI | Tourism | Tourist attractions and museums |
| POI | Transport | Transportation facilities |
| POI | School | Kindergartens, primary schools, and middle schools |
| POI | Research | Research institutes |
| Check-in | Local | Volumes of locals' check-ins in 24 h |
| Check-in | Visitor | Volumes of visitors' check-ins in 24 h |
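To make the four building height variables in the table above concrete, the following Python sketch computes them for a single parcel. It is an illustration under stated assumptions rather than the authors' code: the `Building` record, its field names and units, and the output keys are hypothetical, and the population form of the standard deviation (dividing by n, or by total area for the weighted case) is assumed because the table does not say which form is used.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Building:
    """Hypothetical record for one building footprint inside a parcel."""
    area: float    # footprint area (assumed to be in square metres)
    height: float  # building height (assumed to be in metres)


def height_features(buildings: Sequence[Building]) -> Dict[str, float]:
    """Compute plain and area-weighted mean/std of building height for one parcel."""
    if not buildings:
        raise ValueError("parcel contains no buildings")

    heights = [b.height for b in buildings]
    areas = [b.area for b in buildings]
    n = len(buildings)
    total_area = sum(areas)
    if total_area <= 0:
        raise ValueError("total footprint area must be positive")

    # Height (mean) and Height (std): every building counts equally.
    mean = sum(heights) / n
    std = math.sqrt(sum((h - mean) ** 2 for h in heights) / n)

    # Height (mean/area) and Height (std/area): each building is weighted
    # by its footprint area, so large buildings dominate the statistic.
    w_mean = sum(a * h for a, h in zip(areas, heights)) / total_area
    w_var = sum(a * (h - w_mean) ** 2 for a, h in zip(areas, heights)) / total_area

    return {
        "height_mean": mean,
        "height_std": std,
        "height_mean_area": w_mean,
        "height_std_area": math.sqrt(w_var),
    }
```

For a parcel with a 100 m² building of height 10 m and a 300 m² building of height 30 m, the unweighted mean is 20 m while the area-weighted mean is 25 m, which shows how the weighted variant shifts toward the larger building.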


| Land Use | Description |
|---|---|
| Commercial | Retail, wholesale markets, restaurants, office buildings, shopping centers, hotels, entertainment (such as theatres, concert halls, recreational facilities) |
| Educational | Universities, colleges, primary and secondary schools, kindergartens, and their ancillary facilities |
| Residential | Urban residential buildings (including bungalows, multistorey or high-rise buildings), homesteads |
| Natural | Natural or artificial vegetation, water and water infrastructure |
| Civic | Government agencies and organizations, hospitals, etc. |
| Transport | Airports, railway stations, bus stops, and other transportation facilities |
| Industrial | Industrial land and storehouses |
| Agricultural | Farmland, natural or artificial grasslands and shrublands for grazing livestock |
| Other | Vacant land, bare land, railways, highways, rural roads, etc. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, Z.; Qi, H.; Kang, C.; Su, Y.; Liu, Y. An Ensemble Learning Approach for Urban Land Use Mapping Based on Remote Sensing Imagery and Social Sensing Data. Remote Sens. 2020, 12, 3254. https://doi.org/10.3390/rs12193254
