Abstract

Synthetic Aperture Radar (SAR) time series describe the rice phenological cycle through its backscattering time signature. The advent of the Copernicus Sentinel-1 program has expanded the use of C-band radar data for rice monitoring at regional scales, owing to its high temporal resolution and free data distribution. Recurrent Neural Network (RNN) models have reached the state of the art in pattern recognition for time-sequenced data, obtaining a significant advantage in crop classification from remote sensing images. One of the most widely used RNN approaches is the Long Short-Term Memory (LSTM) model and its improvements, such as Bidirectional LSTM (Bi-LSTM). Bi-LSTM models are more effective because their output depends on both the previous and the next segments, in contrast to unidirectional LSTM models. The present research aims to map rice crops from Sentinel-1 time series (C-band) using LSTM and Bi-LSTM models in western Rio Grande do Sul (Brazil). We compared the results with traditional Machine Learning techniques: Support Vector Machines (SVM), Random Forest (RF), k-Nearest Neighbors (k-NN), and Normal Bayes (NB). The methodology comprises the following steps: (a) acquisition of the Sentinel time series over two years; (b) data pre-processing, with noise minimization by 3D spatial-temporal filters and smoothing with the Savitzky-Golay filter; (c) time series classification procedures; (d) accuracy analysis and comparison among the methods. The results show high overall accuracy and Kappa (>97% for all methods and metrics). Bi-LSTM was the best model, with statistically significant differences in the McNemar test at a significance level of 0.05. However, LSTM and traditional Machine Learning models also achieved high accuracy values. The study establishes an adequate methodology for mapping rice crops in western Rio Grande do Sul.

1. Introduction

Spatiotemporal monitoring of rice plantations is crucial information for the development of policies for economic growth, food security, and environmental conservation [1]. In many regions of the world, official data used to estimate cultivated areas is based on surveys of statistical data through field visits and farmers’ interviews, which is a very slow, expensive, and laborious process [2]. In this context, remote sensing images allow systematic coverage of a wide geographic area over time, quickly and at a low cost. Thus, in recent decades, different remote sensing data and digital image processing have been developed to monitor crops [3,4,5], especially rice plantations [6,7,8].

In mapping rice paddies, many remote sensing studies were carried out before 2000, in the 1980s and 1990s, using optical images [9,10,11], microwave images [12,13,14], and a combination of these two data [15]. An essential approach to rice plantation detection came with phenological behavior analysis, which requires a dense time series to distinguish it from other crops or between different rice-growing systems. In the optical image time series, various sensors have been evaluated in mapping rice planting: Landsat Series (Terrestrial Remote Sensing Satellite) [16,17,18], NOAA AVHRR [19,20,21], SPOT [22,23,24], and MODIS [25,26,27,28,29,30]. However, optical data analysis requires sensors with a high temporal resolution to acquire enough cloudless images to create reliable time series. In certain places, there are severe climatic limitations to obtain cloudless images throughout the rice-growing season.

Synthetic Aperture Radar (SAR) data overcome meteorological and lighting constraints, allowing the construction of continuous time series. Different SAR sensors have been used to map rice-growing regions. For X-band (8–12 GHz) radar, several studies used the TerraSAR-X [31,32,33,34] and COnstellation of small Satellites for the Mediterranean basin Observation (COSMO)-SkyMed [33,35,36,37,38] sensors. The C-band (4–8 GHz) radar sensors used to map rice crops include the European Remote Sensing Satellite (ERS) [12,39,40,41], Radarsat [42,43,44,45,46,47], and Advanced Synthetic Aperture Radar (ASAR) [48,49,50,51,52]. Finally, studies on rice mapping with L-band radar sensors (1–2 GHz; 15–30 cm wavelength) used the ALOS Phased Array type L-band Synthetic Aperture Radar (PALSAR) [53,54,55].

The advent of the European Space Agency’s Sentinel-1A images (C-band) has significantly increased the volume of high revisit time SAR data with free availability, resulting in greater dissemination and advancement in crop mapping research. These denser time-series SAR data allow us to capture short phenological stages, increasing the classification capacity. Monitoring and mapping the pattern of rice cultivation from Sentinel-1 time-series images has been tested in different locations around the world: Bangladesh [56], China [57], France [58], India [56,59], Japan [60], Spain [57], USA [57], Vietnam [57,61,62,63,64]. Some studies also integrate the Sentinel-1 image with optical images from the Landsat 8 Operational Land Imager (OLI) and the Sentinel-2 A/B [65,66,67,68].

Recently, Deep Learning has reached the state-of-the-art in the field of computer vision, obtaining a significant advantage in the classification of natural images [69,70,71] and remote sensing data [72,73,74]. In studies of dense time series, the Recurrent Neural Network (RNN) methods are the most promising due to their ability to analyze sequential data [75]. Among RNNs, the Long Short-Term Memory (LSTM) model [76] is widely used to capture time correlation efficiently. Therefore, LSTM allows the evaluation of plantations’ phenological variation, detecting pixel coherence between time sequence data. In remote sensing data, the LSTM model was applied in: change detection in bi-temporal images [75,77], time-series classification to distinguish crops [78,79,80,81,82], land use/land cover [83,84], and vegetation dynamics [85].

This research aims to evaluate methods to detect rice cultivation in southern Brazil from the Sentinel-1 time series, using the LSTM and Bidirectional LSTM (Bi-LSTM) methods. The study compares the results of these two models with traditional machine learning techniques: Support Vector Machines (SVM), Random Forest (RF), k-Nearest Neighbors (k-NN), and Normal Bayes (NB).

2. Study Area

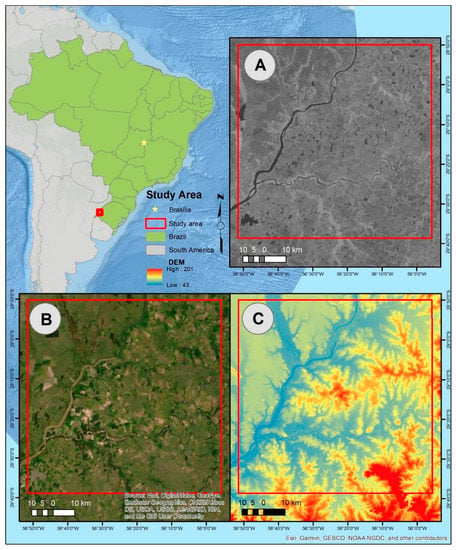

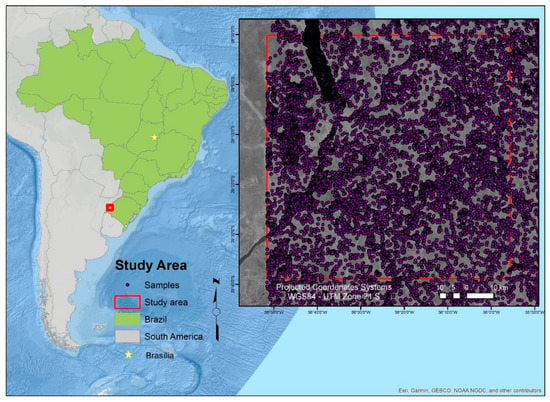

Brazil was the tenth largest producer of milled rice in 2018/2019 [86] and the largest producer outside Asia. The southern region concentrates 80% of the national production, and the State of Rio Grande do Sul is the largest Brazilian producer [87]. Rio Grande do Sul (composed of 497 municipalities) is the ninth-largest Brazilian State (281,730.2 km²), representing more than 3% of the Brazilian territory. In 2019, the State’s population was 11,377,278 inhabitants (6% of Brazil), with a demographic density of 40.3 inhabitants/km² [88]. The production of rough rice in Rio Grande do Sul in 2018 was 8.401 million tons in a planted area of 1.068 million hectares, representing 71% of the national production, which was 11.749 million tons [89]. The study area is the rice-producing region of Uruguaiana, which has repeatedly been the national leader in rice production among Brazilian municipalities [89]. It covers the municipality and its surroundings in the Uruguay River basin, in the west of the State of Rio Grande do Sul, on the border with Argentina (Figure 1).

Figure 1.

Location map of study area. (A) vertical transmit and horizontal receive (VH) polarized synthetic aperture radar (SAR) image (Sentinel-1), (B) Operational Land Imager (OLI)-Landsat 8 image, and (C) Shuttle Radar Topography Mission (SRTM) data.

This region lies on the Campaign Plateau, with flat landforms associated with the Uruguay River and its tributaries (Quaraí, Ibicui, and Butuí-Icamaquã) [90,91]. The rocky substrate consists of intermediate volcanic rocks (andesites and basalts) [92]. The area belongs to the Pampa Biome, where the Vachellia caven grasslands predominate [93]. However, grassland is being converted to cropland at a high rate, mainly to rice and secondarily to soybeans, corn, and vegetables.

The expansion of irrigated rice fields on floodplains intensifies the fragmentation of wetlands in southern Brazil, where approximately 72% of the fragments are smaller than 1 km² [94]. Many studies analyze the effects of rice plantations on biodiversity in southern Brazil [95,96,97,98]. In this context, Maltchik et al. [99] propose good practices in managing rice production to conserve biodiversity within production systems. In addition, increasing irrigation to obtain higher productivity than rainfed cultivation intensifies the need for water resource management to avoid contingencies and balance the demands of agriculture, livestock, and industry.

3. Materials and Methods

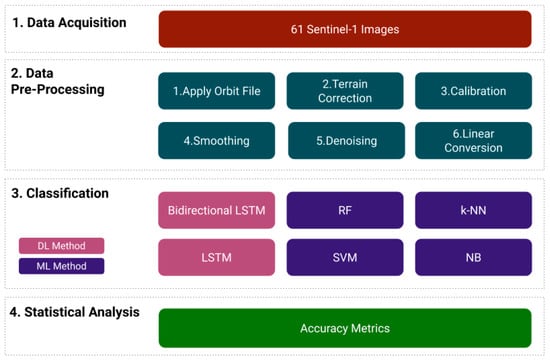

Image processing included the following steps (Figure 2): (a) acquisition of the Sentinel time series (10-m resolution) considering the phenological behavior of the plantation; (b) data pre-processing, with noise minimization by 3D spatial-temporal filters and smoothing with the Savitzky-Golay filter; (c) comparison of classification methods (LSTM, Bidirectional LSTM, SVM, RF, k-NN, and NB); and (d) accuracy analysis.

Figure 2.

Methodological flowchart.

3.1. Dataset and Pre-Processing

The research used a Sentinel-1 time series covering two years (2017 and 2018). The images are available free of charge from the European Space Agency (ESA) (https://scihub.copernicus.eu/dhus/#/home). Sentinel-1 operates in the C band (5.4 GHz) and offers four acquisition modes with different spatial resolutions and swath widths [100]. The present research used the Ground Range Detected (GRD) product in Interferometric Wide Swath mode, with a 10-m resolution and a large swath width (250 km). The Sentinel-1 revisit cycle is 12 days, yielding 30 images per year for the study area and 60 images for the 2017–2018 period. We adopted two years of images because the rice cycle starts in the second half of one year and ends in the next. In addition, previous investigations indicate that two-year time signatures facilitate the detection of targets with phenological cycles [101,102]. This temporal resolution allows following the phenological behavior of the rice plantation. We used the Vertical transmit and Horizontal receive (VH) polarization since other studies have shown that VH backscatter is more sensitive to rice-crop growth than other polarizations [62,65]. The images were pre-processed in the Sentinel Application Platform (SNAP) software with the following workflow [103]: application of the orbit file; calibration (the procedure that converts digital pixel values to radiometrically calibrated SAR backscatter); range-Doppler terrain correction using the SRTM digital terrain model; and conversion from linear units to decibels (dB). The corrected images (2017–2018) were stacked into a single file, generating a temporal cube.
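The last two steps of this workflow (decibel conversion and stacking) can be illustrated with a minimal NumPy sketch. It does not call SNAP; the function names, the `floor` guard against zero values, and the synthetic 4 × 4 scenes are assumptions made only for illustration.

```python
import numpy as np

def to_decibels(sigma0_linear, floor=1e-10):
    """Convert linear backscatter to dB: 10 * log10(sigma0).

    `floor` is an illustrative guard (an assumption) that avoids log10(0).
    """
    return 10.0 * np.log10(np.maximum(sigma0_linear, floor))

def stack_time_series(images):
    """Stack co-registered 2D images of shape (H, W) into a cube of shape (T, H, W)."""
    return np.stack(images, axis=0)

# Synthetic stand-in for 60 calibrated, terrain-corrected VH scenes.
rng = np.random.default_rng(0)
scenes = [rng.uniform(0.001, 0.05, size=(4, 4)) for _ in range(60)]
cube_db = stack_time_series([to_decibels(scene) for scene in scenes])
print(cube_db.shape)
```

Keeping time as the first axis means that `cube_db[:, row, col]` is the temporal signature of a single pixel.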

3.2. SAR Image Denoising

Speckle is inherent in SAR images, producing a granular appearance of light and dark pixels that hinders the identification of surface targets. Speckle increases confusion between different types of surfaces, and the effect is worse in low-contrast areas. Therefore, noise filtering is a prerequisite for radar imagery applications. Following other strategies to reduce noise and create a high-quality satellite image time series, we used a two-stage filtering scheme [104,105]: (a) elimination of speckle noise using a 3D-Gamma filter, and (b) smoothing of time series using the method of Savitzky and Golay (S-G) [106].

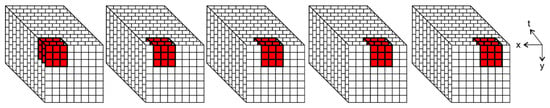

Most filters operate in the spatial domain, using moving windows that compute a statistic of central tendency or apply adaptive methods. However, noise reduction in SAR time series allows approaches that integrate the spatial and temporal dimensions to improve the quality of noise reduction [107,108,109,110]. In this context, three-dimensional (3D) filters use spatial-temporal neighborhood information to derive the noise-free value [111,112] (Figure 3). In the spatial domain alone, the smoothing effect of sliding-window filters can only be increased by enlarging the window, which reduces the effective spatial resolution. Spatiotemporal filters, on the other hand, add information from the time domain, reducing the edge-blurring effect. For example, the central pixel value of a 3 × 3 window uses nine pixels in the calculation, while a 3 × 3 × 3 cubic window uses 27 pixels over the same area. We adapted the Gamma adaptive filter [113] to a 3D window considering spatiotemporal data. The Gamma filter smooths homogeneous surfaces while preserving edges. The window size used was 5 × 5 in the spatial domain and 9 in the temporal dimension.

Figure 3.

3D convolutional filter considering a time series data with spatial dimensions “x” and “y” and temporal dimension “t”.
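To make the 3D neighborhood concrete, the sketch below computes the local mean and standard deviation inside a 5 × 5 × 9 window, the kind of local statistics an adaptive filter relies on. It is not the Gamma filter adapted in this study; the function name, the reflect padding at the borders, and the synthetic cube are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_stats_3d(cube, t_size=9, xy_size=5):
    """Local mean and std over a (t, y, x) window for a cube of shape (T, H, W)."""
    pad = (t_size // 2, xy_size // 2, xy_size // 2)
    # Reflect padding (an assumption) keeps the output the same shape as the input.
    padded = np.pad(cube, [(p, p) for p in pad], mode="reflect")
    windows = sliding_window_view(padded, (t_size, xy_size, xy_size))
    # windows has shape (T, H, W, t_size, xy_size, xy_size).
    return windows.mean(axis=(-3, -2, -1)), windows.std(axis=(-3, -2, -1))

rng = np.random.default_rng(0)
cube = -20.0 + rng.normal(scale=2.0, size=(12, 8, 8))  # synthetic dB values
local_mean, local_std = local_stats_3d(cube)
samples_per_window = 9 * 5 * 5  # 225 samples, against 25 for a purely spatial 5 x 5 window
```

With the same 5 × 5 spatial footprint, the temporal extent raises the number of samples per estimate from 25 to 225, which is why the noise can be reduced without widening the spatial window.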

In addition, we used the Savitzky and Golay (S-G) method to smooth the SAR data in the temporal domain [104]. The S-G filter combines noise elimination with preservation of the phenological attributes (height, shape, and asymmetry) [101,114,115]. This procedure produced a long-term trend curve that eliminates the small interferences still present and represents the annual rice-crop cycle as a gradual process.
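The S-G idea (fit a low-order polynomial to each window by least squares and keep its value at the window center) can be sketched in NumPy as follows. The window of 19 is the value reported in the results; the polynomial order of 2 and the edge padding are assumptions, since the text does not state them.

```python
import numpy as np

def savgol_weights(window, order):
    """Least-squares weights that evaluate the fitted polynomial at the window center."""
    half = window // 2
    positions = np.arange(-half, half + 1)
    vander = np.vander(positions, order + 1, increasing=True)  # columns: 1, x, x^2, ...
    # Row 0 of the pseudo-inverse yields the constant term, i.e. the fit at x = 0.
    return np.linalg.pinv(vander)[0]

def savgol_smooth(series, window=19, order=2):
    """Smooth a 1D series; borders are handled by repeating the edge values (an assumption)."""
    half = window // 2
    padded = np.pad(series, half, mode="edge")
    return np.correlate(padded, savgol_weights(window, order), mode="valid")

t = np.arange(60.0)
series = 0.01 * (t - 30.0) ** 2 - 25.0  # synthetic quadratic "signature" in dB
smoothed = savgol_smooth(series)
```

Because the filter fits a polynomial rather than averaging, a signal that is itself a polynomial of the chosen order passes through unchanged away from the borders, which is the property that preserves peak height and shape.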

3.3. RNN and Traditional Machine Learning Models

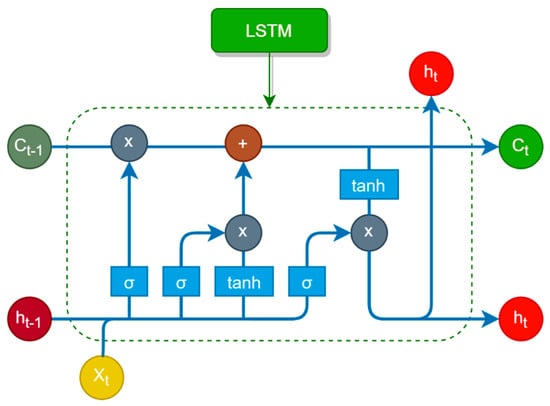

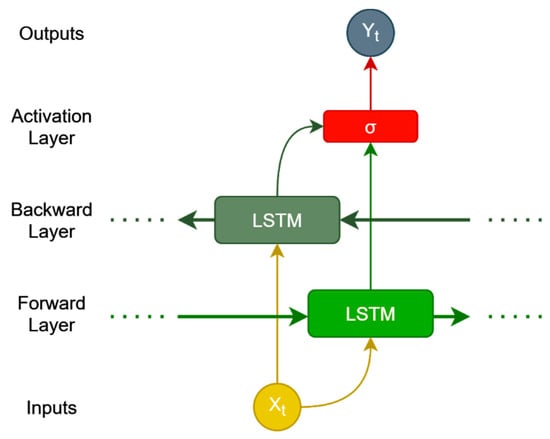

RNN architectures are very efficient when dealing with sequenced data, and their application has increased in the last few years in many scientific areas [116]. Hochreiter and Schmidhuber [76] proposed the Long Short-Term Memory (LSTM) architecture, one of the most common RNN architectures, which shows state-of-the-art results for sequential data tasks because of its ability to capture long-term dependencies [117]. The architecture has a memory cell that holds a state across sequential instances and non-linear gates that control the information entering and exiting the cell [117,118]. Figure 4 shows the LSTM architecture, where Xt is the input vector, Ct is the memory from the current block, and ht is the output of the current block. Sigmoid (σ) and hyperbolic tangent (tanh) are the nonlinearities, and the vector operations are element-wise multiplication (x) and element-wise addition (+). Furthermore, Ct-1 and ht-1 are the memory and output from the previous block.

Figure 4.

Long short-term memory (LSTM) architecture.

Many studies have been carried out to improve the LSTM architecture. One of the most powerful approaches is Bidirectional LSTM (Bi-LSTM). Bi-LSTM models are generally more effective at handling contextual information since their output at a given time depends on both the previous and the next segments, in contrast to unidirectional LSTM models [119,120]. The Bi-LSTM architecture has a forward and a backward layer, each consisting of LSTM units, to capture past and future information (Figure 5).

Figure 5.

Bidirectional LSTM (Bi-LSTM) architecture.
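The two architectures can be sketched in NumPy as a single LSTM block applied along the sequence and, for Bi-LSTM, a second pass over the reversed sequence whose outputs are concatenated with the forward ones. This follows the standard LSTM formulation; the weight layout, the gate ordering, and the random parameters are illustrative assumptions and not the trained models of this study.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM block. W maps [h_prev, x_t] to the four gate pre-activations."""
    z = W @ np.concatenate([h_prev, x_t]) + b
    f, i, o, g = np.split(z, 4)  # forget, input, output gates and candidate memory
    c_t = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)  # element-wise multiply and add
    h_t = sigmoid(o) * np.tanh(c_t)
    return h_t, c_t

def run_lstm(sequence, W, b, hidden):
    """Apply the block over a sequence of shape (T, n_in); returns outputs of shape (T, hidden)."""
    h, c = np.zeros(hidden), np.zeros(hidden)
    outputs = []
    for x_t in sequence:
        h, c = lstm_step(x_t, h, c, W, b)
        outputs.append(h)
    return np.stack(outputs)

def run_bilstm(sequence, params_fwd, params_bwd, hidden):
    """Forward pass plus a pass over the reversed sequence, concatenated per time step."""
    fwd = run_lstm(sequence, *params_fwd, hidden)
    bwd = run_lstm(sequence[::-1], *params_bwd, hidden)[::-1]
    return np.concatenate([fwd, bwd], axis=1)

rng = np.random.default_rng(0)
hidden, n_in = 4, 1  # toy sizes; one VH value per date
def random_params():
    return rng.normal(size=(4 * hidden, hidden + n_in)), np.zeros(4 * hidden)
params_f, params_b = random_params(), random_params()
signature = rng.normal(size=(60, n_in))  # synthetic 60-date time series
bi_out = run_bilstm(signature, params_f, params_b, hidden)
```

At every date the Bi-LSTM output combines a state that has seen the earlier dates with one that has seen the later dates, which is the sense in which it depends on both previous and next segments.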

In the present research, we used LSTM and Bi-LSTM models with two hidden layers (122 neurons in the first layer and 61 neurons in the second layer) and a softmax activation function (7 outputs). We trained the models with the following hyperparameters: (a) 5000 epochs; (b) dropout rate of 0.2; (c) Adam optimizer with a starting learning rate of 0.01 (divided by 10 after 1000 epochs); and (d) mini-batch size of 128. Moreover, we used the categorical cross-entropy loss function [121] presented in Equation (1), where i is the element’s number, y is the ground truth label, y’ is the predicted label, and K is the number of classes. Furthermore, to generalize the behavior of the loss function, Equation (2) gives the cost as a function of the total set of weights, where m is the total number of elements.
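In its standard form, the categorical cross-entropy of one sample is the negative sum over the K classes of y_i · log(y’_i), and the cost is the mean of that loss over the m samples. The sketch below assumes that Equations (1) and (2) follow this standard form without additional terms; the function names and the `eps` guard are illustrative.

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Loss for one sample: -sum_i y_i * log(y'_i). `eps` (an assumption) avoids log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

def mean_cost(batch_true, batch_pred):
    """Cost: mean of the per-sample losses over the m samples of a batch."""
    losses = [categorical_cross_entropy(t, p) for t, p in zip(batch_true, batch_pred)]
    return sum(losses) / len(losses)

# One-hot ground truth over three classes and a softmax-like prediction.
loss = categorical_cross_entropy([0, 1, 0], [0.25, 0.5, 0.25])
cost = mean_cost([[0, 1, 0], [1, 0, 0]], [[0.25, 0.5, 0.25], [1.0, 0.0, 0.0]])
```

With one-hot labels, only the predicted probability of the true class contributes, so a confident correct prediction gives a loss near zero.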

In this paper, we compared the RNN methods (LSTM and Bi-LSTM) with traditional Machine Learning methods used in remote sensing applications: (1) Support Vector Machine (SVM) [122]; (2) Random Forest (RF) [123,124,125]; (3) k-NN [126]; and (4) Normal Bayes. The SVM algorithm maps the feature vectors into a higher-dimensional space, separating the different classes using linear or non-linear kernel functions [127]. The Random Forest algorithm introduced by Breiman [128] combines a large number of binary classification trees built from bootstrap samples, obtaining the final value from the majority vote of all individual trees. The k-NN method is widely used in remote sensing imagery and does not require training a model since the classification of a given point takes the majority vote of its k nearest neighbors [129]. Finally, the Bayesian classifier predicts a given class assuming that the feature vectors from each class are normally distributed, usually presenting better results in data that have a high degree of feature dependencies [130].
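As an illustration of the simplest of these methods, the k-NN majority vote can be written in a few lines of NumPy. The Euclidean distance and the synthetic two-class signatures are assumptions; k = 10 matches the value reported as best in the results.

```python
import numpy as np

def knn_predict(train_x, train_y, query, k=10):
    """Classify `query` by majority vote among its k nearest training samples."""
    distances = np.linalg.norm(train_x - query, axis=1)  # Euclidean distance (assumed)
    nearest = np.argsort(distances)[:k]
    votes = np.bincount(train_y[nearest])
    return int(np.argmax(votes))

rng = np.random.default_rng(0)
# Two synthetic classes of 60-date signatures centered at -24 dB and -14 dB.
low = rng.normal(-24.0, 0.5, size=(50, 60))
high = rng.normal(-14.0, 0.5, size=(50, 60))
train_x = np.vstack([low, high])
train_y = np.array([0] * 50 + [1] * 50)
predicted = knn_predict(train_x, train_y, np.full(60, -15.0))
```

No model is fitted: every prediction scans the stored samples, which is consistent with the long classification time reported for k-NN on the full image.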

The dataset was a manual collection of 28,000 pixel samples (denoised temporal signatures), containing seven classes with equal distribution (4000 samples per class), following a well-distributed, systematic, and stratified sampling [131] (Figure 6). In this manual sampling of the ground truth points, we used previous surveys of rice crop mapping and high-resolution images from Google Earth to determine the exact location of known points. The sampling process produced balanced data sets, which positively impact the classification results [132]. The train/validation/test split had a total of 19,600 pixel samples for training (70%), 5600 pixel samples for validation (20%), and 2800 pixel samples for testing (10%). The mapping considered the following land-use/land-cover classes: rice crops, the fallow period between rice crops, bodies of water (rivers, lakes, and reservoirs), riparian forest/reforestation, grassland, flooded grassland, and other types of crops. We disregarded urban areas using a mask. In the study region, the vast majority of the rice is grown in lowland areas using flood irrigation. This type of management is quickly detected by visual interpretation of high-resolution images. The planting has a characteristic pattern, with dikes that follow the contour lines and transversal dikes that divide the land into rectangular plots. The capture and retention of water allows the field to flood during the growing season.

Figure 6.

Map of ground truth points.
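The sample counts of the 70/20/10 split can be reproduced with a short NumPy sketch. It assumes the split was stratified by class, which the text does not state explicitly; the function name and the fixed seed are illustrative.

```python
import numpy as np

def stratified_split(labels, fractions=(0.7, 0.2, 0.1), seed=0):
    """Split sample indices into train/validation/test sets, class by class."""
    rng = np.random.default_rng(seed)
    train, val, test = [], [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train = int(round(fractions[0] * len(idx)))
        n_val = int(round(fractions[1] * len(idx)))
        train.extend(idx[:n_train])
        val.extend(idx[n_train:n_train + n_val])
        test.extend(idx[n_train + n_val:])  # remainder goes to the test set
    return np.array(train), np.array(val), np.array(test)

labels = np.repeat(np.arange(7), 4000)  # seven balanced classes, 4000 samples each
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Under this assumption each class contributes 2800 training, 800 validation, and 400 test samples, so the three subsets remain balanced.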

3.4. Accuracy Assessment

Accuracy analysis of remote sensing image classifications is essential to compare algorithms and establish product quality. In the present study, we used the accuracy metrics derived from the confusion matrix that are widely used in land-use/land-cover classification studies, including the overall accuracy, Kappa coefficient, and commission and omission errors [133,134]. Overall accuracy is the most straightforward metric, calculated by dividing the number of correctly classified pixels by the total number of pixels analyzed [134]. Congalton [133] introduced the Kappa coefficient for accuracy analysis of remote sensing data. The Kappa coefficient is a popular measure of agreement among raters (inter-rater reliability) with a value range of −1 to +1 [135]. Commission and omission errors are a very effective way of representing the accuracy of each classified category. A commission error (Type I, false positive) occurs when a pixel is assigned to a land-cover/land-use category to which it does not belong. An omission error (Type II, false negative) occurs when a pixel is not assigned to the land-cover/land-use category to which it belongs.
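These metrics can all be derived from the confusion matrix, as in the following NumPy sketch. The orientation (rows as reference classes, columns as predicted classes) and the 2 × 2 example values are assumptions made for illustration.

```python
import numpy as np

def accuracy_metrics(cm):
    """cm[i, j] = number of reference-class-i samples classified as class j."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    diag = np.diag(cm)
    overall = diag.sum() / total
    # Agreement expected by chance, from the row and column marginals.
    expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / total ** 2
    kappa = (overall - expected) / (1.0 - expected)
    commission = 1.0 - diag / cm.sum(axis=0)  # per predicted class (columns)
    omission = 1.0 - diag / cm.sum(axis=1)    # per reference class (rows)
    return overall, kappa, commission, omission

overall, kappa, commission, omission = accuracy_metrics([[45, 5], [10, 40]])
```

For this toy matrix the overall accuracy is 0.85 and Kappa is 0.70, while the per-class errors show that the second class is omitted more often (0.20) than the first (0.10).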

We used the McNemar test [136] to assess the statistical significance of differences in remote sensing classification accuracy [137]. McNemar’s test principle is based on the evaluation of a contingency table with a 2 × 2 dimension considering only correct and incorrect points for two different methods (Table 1).

Table 1.

Data layout for McNemar test between two classification results.

In the relative comparison between different classification algorithms, the chi-square (χ2) statistic has one degree of freedom and uses exclusively the discordant samples (f12 and f21) (Equation (3)). The null hypothesis of marginal homogeneity means equivalent results between the two classifications. If the resulting χ2 is higher than the critical value from the χ2 distribution table, we reject the null hypothesis and conclude that the two classification methods differ significantly. As in other studies that compare classification algorithms, we used the same set of validation data for McNemar’s analysis, consisting of a sample independent of the training set. Therefore, the test used 1200 samples per mapping unit (totaling 8400 samples).
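The test can be sketched with the commonly used uncorrected statistic, χ2 = (f12 − f21)² / (f12 + f21). Whether Equation (3) includes a continuity correction cannot be confirmed from the text, so the uncorrected form is an assumption; 3.841 is the critical value of the χ2 distribution with one degree of freedom at a significance level of 0.05.

```python
CHI2_CRITICAL_DF1_ALPHA_005 = 3.841

def mcnemar_chi2(f12, f21):
    """Uncorrected McNemar statistic from the two discordant counts.

    f12: samples correct in classification 1 but wrong in classification 2.
    f21: samples wrong in classification 1 but correct in classification 2.
    """
    if f12 + f21 == 0:
        return 0.0  # no discordant samples: the classifications agree everywhere
    return (f12 - f21) ** 2 / (f12 + f21)

def differs_significantly(f12, f21, critical=CHI2_CRITICAL_DF1_ALPHA_005):
    """True when the null hypothesis of marginal homogeneity is rejected."""
    return mcnemar_chi2(f12, f21) > critical

# Hypothetical discordant counts, for illustration only.
print(mcnemar_chi2(30, 10), differs_significantly(30, 10))
```

Samples that both methods classify correctly, or both classify incorrectly, do not enter the statistic; only the disagreements carry information about which method is better.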

4. Results

4.1. Image Denoising

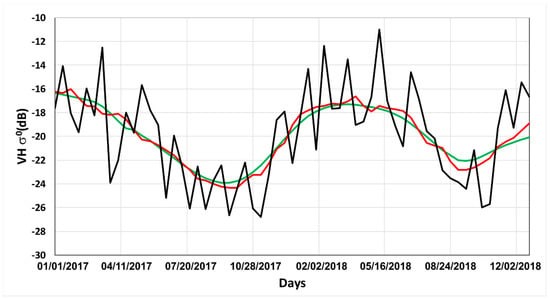

The combination of the 3D Gamma and S-G filters exhibited good results in the noise elimination of the Sentinel time series. The 3D Gamma filter provides an intense minimization of speckles, but still maintains some minor unwanted alterations in the temporal trajectories. The S-G filter with a window size of 19 refined the result obtained by the 3D Gamma filter, smoothing the temporal profile without interfering with the maximum and minimum values and ensuring data integrity. Figure 7 demonstrates the effects of the application of filtering techniques in the Sentinel-1 time series.

Figure 7.

Effect of noise elimination in rice crop temporal trajectory using a 3D convolutional window in the spatial direction “x” and “y” and the temporal direction “t” (x = 5, y = 5, t = 9) and a second smoothing filter using the Savitzky and Golay (S-G) method. The original data is in black, 3D Gamma filter in red, and smoothing with S-G method in green.

4.2. Temporal Backscattering Signatures

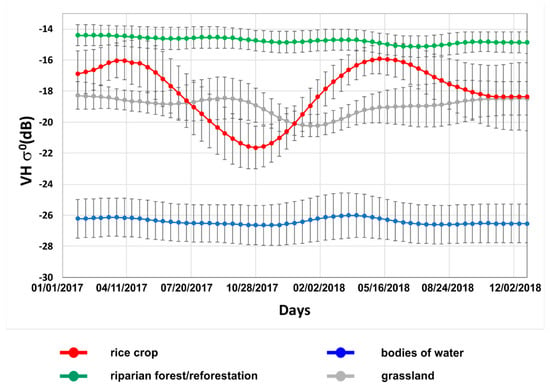

The study area is flat, which reduces the sensitivity to terrain variations and improves the detection of targets. Figures 8 to 13 show the average backscattering time series in the cross-polarized data (vertical transmit, horizontal receive, VH) with their corresponding standard deviation for all targets, along with detailed images of some classes. The temporal profiles show distinctive seasonal backscatter patterns for the analyzed targets, which provide a high contrast between the rice cultivation areas and their surroundings. The riparian forest shows the highest values over the whole time series, with a stable backscatter between −15.50 and −13.00 dB. Open water surfaces have the lowest backscatter values (<−24.5 dB throughout the year) since the surface behaves as a specular reflector that directs the emitted energy away from the sensor.

Figure 8.

Behavior of the mean time series with the respective standard deviation bars for the main targets (rice planting, bodies of water, riparian forest, and grassland) present in the region of Uruguaiana, southern Brazil.

Figure 9.

Google Earth image demonstrating the flooding of rice fields in the Uruguaiana region, southern Brazil.

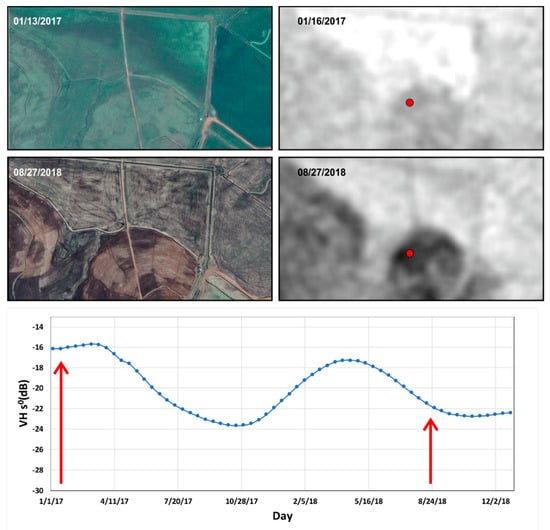

Figure 10.

Google Earth and Sentinel-1 images for rice crop at two different times: planting under development (13 January 2017) and at the beginning of planting (27 August 2018). The time trajectory of the pixel marked in red in the Sentinel-1 images shows the positioning of the scenes over time (red arrows).

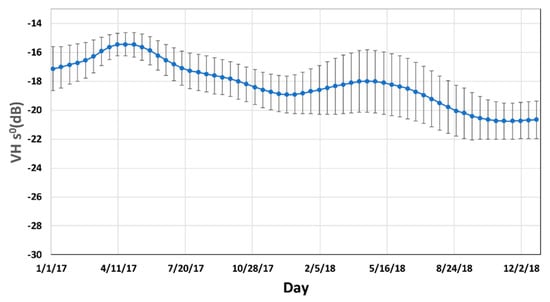

Figure 11.

The average time series behavior with the respective standard deviation bars for areas during the fallow period between rice cultivation, without showing the typical characteristic of the beginning of planting.

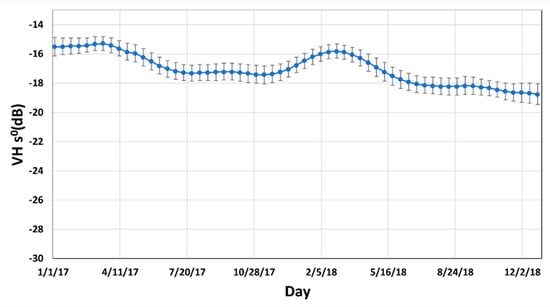

Figure 12.

Behavior of the average time series with the respective standard deviation bars for the other plantations (background areas).

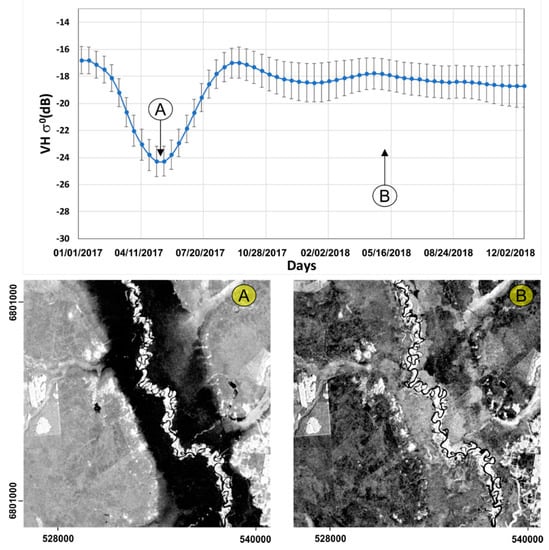

Figure 13.

Behavior of the mean time series with the respective standard deviation bars for grassland regions in floodplain, southern Brazil. Sentinel-1 images in the VH polarization representing: (A) flood period, and (B) dry period.

The rice time series had a nearly Gaussian behavior for the crop cycles (Figure 8). This Gaussian behavior for rice planting is in agreement with the study developed by Bazzi et al. [58]. In the study region (Western Frontier and Upper Valley of Uruguay), the favorable sowing period for medium-cycle cultivars is from 21 September to 20 November [138], while for early-cycle cultivars it is from 11 October to 10 December [138]. Although varieties with different cycle lengths can coincide, early-cycle cultivars are delayed by about 10 to 15 days to avoid the critical cold period in December that is harmful to the crop [138]. Very late sowing is avoided due to low productivity levels. For the 2017/18 crop, about 35% of the area had been harvested by around 29 March and 95% by around 10 May.

In addition to the dates of cultivation, agronomic practices are an essential factor in the description of temporal signatures. In the irrigated rice tillage systems for the 2017/2018 harvest in the Western Frontier (RS), minimum tillage predominated, being used on 74.5% of the planted areas [139]. The remaining areas comprise 24.1% under the traditional system and 0.3% under no-tillage and the pre-germinated system combined [139]. Minimum tillage promotes soil conservation through low-interference management that maintains the vegetation cover and reduces the surface irregularities caused by harvesters. Sowing takes place directly in the soil, under a vegetation cover previously desiccated with herbicide. According to Li et al. [140], the minimum tillage system can generate a grain yield 2.1% higher than conventional planting and increase economic benefits by 11.0%. Therefore, the widespread use of minimum tillage is a simple conservation practice in humid areas that does not prevent rice transplantation or increase labor costs.

Thus, the rice-crop time series shows the lowest σ° values at the beginning of planting between September and November (with the average value on 28 October 2017). During this period, the use of herbicide eliminates the vegetation cover, followed by flooding of the rice fields, resulting in low backscatter values. Figure 9 shows the Google Earth image from the beginning of the flooding of the field in 2016. As the crop grows, the σ° values gradually increase, reaching their peak in May, when the backscatter values start to fall due to the beginning of the harvest. The decrease is gradual due to the practice of minimum tillage, which keeps the vegetation cover. The lowest values return in 2018 between October and November with the beginning of the new planting cycle. Figure 10 shows Google Earth and Sentinel-1 images related to different moments of the rice plantation and their respective positions in the temporal trajectory. In the time signature, the date of 13 January 2017 shows the development stage of the planting, while 27 August 2018 shows the initial phase of the new planting cycle.

The rice planting areas with irrigation dikes also exhibit temporal signatures without a significant drop in σ° values (Figure 11). Generally, these areas correspond to a fallow period that can extend for up to two years and are sometimes used at low intensity for cattle. The other plantations were merged into a single class characterized by slightly higher values and lower amplitude than rice planting (Figure 12).

Finally, we separated the floodplain areas, which have a distinctive feature due to the flood event of May–June 2017 (Figure 13). These flooded grasslands show a significant decrease in backscatter values during the flood event (blue bar), acquiring well-marked temporal signatures. The duration of the drop in the backscatter values depends on the extent of the flood, and its intensity may vary according to the position on the ground.

4.3. Comparison between RNN and Traditional Machine Learning Methods

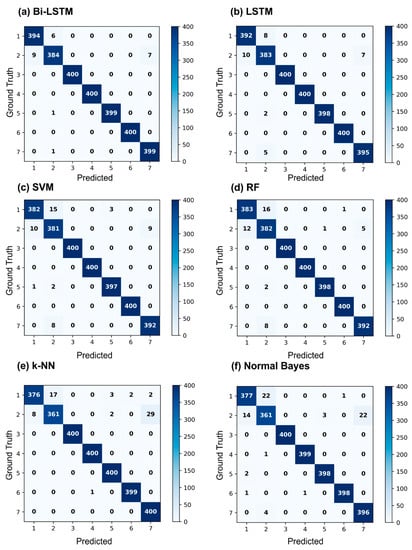

The training stage for each model followed different procedures to achieve the optimal configuration. To obtain the best LSTM and Bi-LSTM models, we monitored the categorical validation loss. Both models presented low categorical loss values (0.027 for Bi-LSTM and 0.029 for LSTM). Traditional Machine Learning methods require parameter tuning. SVM with a third-degree polynomial kernel, RF with 150 trees, k-NN with ten neighbors, and Normal Bayes with a variance smoothing of 1 × 10⁻⁹ presented the best results. After hyper-parameter tuning, we analyzed the models’ performances by comparing their test-sample confusion matrices (Figure 14), accuracy, kappa score (Table 2), commission and omission errors (Table 3 and Table 4), and McNemar test (Table 5).

Figure 14.

Confusion matrices of land use and land cover classification using different algorithms (a) Bi-LSTM, (b) LSTM, (c) support vector machines (SVM), (d) random forest (RF), (e) k-NN, and (f) Bayes. The analyzed classes were: (1) rice crops, (2) fallow period between crops, (3) bodies of water (rivers, lakes, and reservoir), (4) riparian forest/reforestation, (5) grassland, (6) flooded grassland, and (7) other types of crops. We disregard the areas of the city using a mask.

Table 2.

Mean accuracy, Kappa, and F-score values for the models evaluated.

Table 3.

Representation of commission errors for every class.

Table 4.

Representation of omission errors for every class.

Table 5.

McNemar test, where the green cells represent statistically equal models, and the red cells represent statistically different models, using a significance of 0.05.

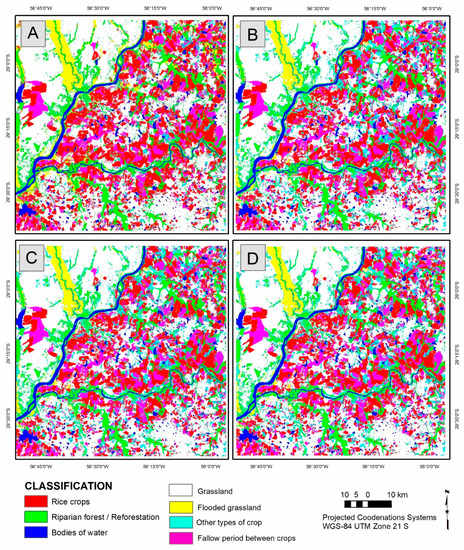

Figure 15 shows the results of the four best models. The macro analysis presented in Table 2 shows high accuracy and kappa values for all models (>97% in all metrics). The filtering applied to the raw data can explain this behavior because it increases intra-class similarity and maximizes inter-class differences. In addition, the seven classes are very different from each other, increasing the classifier’s effectiveness.

Figure 15.

Comparison of classification results among the four best methods in the Uruguaiana region, southern Brazil: (a) LSTM, (b) Bi-LSTM, (c) SVM, and (d) RF.

Despite the high metrics in the macro analysis, there is a significant difference within the most critical classes for this study (1 and 2). The confusion matrices (Figure 14) show that Classes 3, 4, 5, 6, and 7 present very similar results for all models. Classes 1 and 2, the most critical for the present research, show the highest confusion between classes. Furthermore, the commission and omission errors within those classes clearly indicate that the RNN models had more consistent results than the other methods. To verify the statistical differences between the models, we performed the McNemar test presented in Table 5. At a significance level of 0.05, two pairs of models are statistically equivalent (RF/SVM and NB/k-NN).

The results show that, although both the Deep Learning and the traditional Machine Learning models achieved high accuracy values, the Deep Learning models had statistically significant advantages and a marked improvement in the most critical classes. Although Bi-LSTM and LSTM differed statistically, both reached high values within classes 1 and 2 because of the temporal signature behavior, which shows a relatively symmetrical shape with sinusoidal (periodic) characteristics. In this scenario, the forward and backward sequences within the RNN model’s trajectory are very similar, with derivatives of opposite sign at each instance of the time-sequenced data, yet with approximately the same magnitude.

Another significant advantage of the RNN models is the computational cost of classification. Classifying the 13,000 × 13,000 pixel image takes about 50 min for Bi-LSTM and LSTM, 104 min for SVM, 110 min for Random Forest, 3 h for Normal Bayes, and 13 h for k-NN. The RNN methods are about twice as fast as SVM (the fastest traditional Machine Learning method) in the classification step, which makes them much more useful in practical scenarios.
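The relative cost of each classifier follows directly from the reported times. The short calculation below uses only the values given in the text (which are approximate) and expresses every method as a multiple of the RNN classification time; the variable names are illustrative.

```python
# Classification times reported in the text, converted to minutes.
minutes = {
    "Bi-LSTM / LSTM": 50,
    "SVM": 104,
    "Random Forest": 110,
    "Normal Bayes": 3 * 60,
    "k-NN": 13 * 60,
}

rnn_time = minutes["Bi-LSTM / LSTM"]
slowdown = {name: t / rnn_time for name, t in minutes.items()}

for name, ratio in slowdown.items():
    print(f"{name:15s} {minutes[name]:4d} min  ({ratio:.2f}x the RNN time)")
```

With these figures, SVM takes roughly 2.1 times as long as the RNN models and k-NN more than 15 times as long.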

5. Discussion

Few remote sensing studies have addressed the detection of rice cultivation in Brazilian regions (although Brazil is one of the largest rice producers globally), particularly using Deep Learning methods such as LSTM and Bi-LSTM. In this research, the best results for the LSTM and SVM models are in line with other studies on the classification of remotely sensed data. Commonly, remote sensing classifications using LSTM show higher accuracy than other machine learning methods. In the detection of urban features using Landsat 7 images, Lyu et al. [77] show better performance of LSTM (95% OA) compared to SVM (80% OA) and Decision Tree (70% OA). Rußwurm and Körner [79] obtain better results with LSTM (90.6% OA) than with CNN (89.2% OA) and SVM (40.9% OA) for land cover classification using a temporal sequence of Sentinel-2 images. Mou et al. [75] describe the superiority of the combination of CNN and LSTM (~98% OA) over SVM (~95% OA) and Decision Tree (~85% OA) for change detection in multispectral images.

However, other studies report worse LSTM performance than other machine learning methods. In the detection of winter wheat in MODIS images, He et al. [78] obtained a better result with RF (0.72 F-Score) than with attention-based LSTM (0.71 F-Score). Zhong et al. [141] classified summer crops in California using the Landsat Enhanced Vegetation Index (EVI) time series. LSTM had the worst performance (82.41% OA and 0.67 F-Score) among all classifiers, which included a Conv1D-based model (85.54% OA and 0.73 F-Score), XGBoost (84.17% OA and 0.69 F-Score), MLP (83.81% OA and 0.69 F-Score), RF (83.38% OA and 0.67 F-Score), and SVM (82.45% OA and 0.68 F-Score).

Among the studies that applied Machine Learning methods to rice crops, Onojeghuo et al. [65] compared SVM and RF for rice crops located in China, performing different experiments with VV and VH images. In the best combination, RF (95.2% OA) outperformed SVM (82.5% OA). Son et al. [64] also compared SVM and RF for rice crops in South Vietnam. Although RF again performed better than SVM, the difference was smaller (86% OA for RF and 83.4% OA for SVM).

The present research achieved higher accuracy metrics and smaller discrepancies between models than the studies mentioned above. This behavior can be explained by the high temporal resolution (61 time steps) combined with the intense smoothing caused by the 3D filter, which increased the intra-class similarities and highlighted the inter-class differences, making the task easier for the classification algorithms. The results show that RNN and Machine Learning methods bring significant benefits in ease, accuracy, and simplicity, and are much more useful than the census information used nowadays. Furthermore, the RNN models have a significant advantage in classification processing time, since they are much faster than any traditional Machine Learning method.

The hybrid combination of convolutional and recurrent networks is a promising direction for future work in this study area. In this context, Teimouri et al. [81] combined a Fully Convolutional Network (FCN) and a Convolutional Long Short-Term Memory (ConvLSTM) network to classify 14 main crop classes using Sentinel-1 temporal images in Denmark, reaching an accuracy of 86% and an Intersection over Union of 0.64. Another important topic for future research is to evaluate the classification performance using the VH and VV bands together.

6. Conclusions

Crop mapping using SAR time series is an alternative to census surveys based on field interviews because of its low cost and speed, supporting territorial planning in Rio Grande do Sul. In this article, we applied different models to identify large-scale rice crops from Sentinel-1 time-series imagery covering the municipality of Uruguaiana, Brazil's largest producer. The models performed a multiclass mapping to delimit the rice plantations in relation to their surroundings. The temporal behavior of the Sentinel-1 backscatter coefficient in VH polarization over different types of targets has features and patterns that differentiate them from rice plots. We created a sufficiently extensive and balanced training and test dataset to improve the results and the statistical analysis. RNN models presented state-of-the-art performance in identifying rice crops, with accuracy and Kappa values greater than 0.98. The comparison of these models indicates that Bi-LSTM presented the best results, with statistical differences at a significance level of 0.05. An advantage of the Deep Learning models is their speed: with GPU acceleration, they are significantly faster than the traditional Machine Learning methods in the classification step. The high accuracy values are due to the distinction between time series behaviors. Rice plantations present a gradual and wide variation throughout the year, which gives them a temporal signature distinct from those of the other targets. In addition, the continuous and dense time series obtained from SAR data, without interference or data loss, improve the accuracy and generalizability of the models. The results demonstrate that Bi-LSTM and LSTM models achieve better performance than traditional Machine Learning methods. Future research may include new architectures that integrate the temporal and spatial domains, such as combinations of CNN and RNN. Another approach is to analyze non-periodic time series data, which tend to highlight the differences between Bi-LSTM and LSTM and may also reveal a larger gap between Deep Learning and traditional Machine Learning models.

Author Contributions

Conceptualization, H.C.d.C.F., O.A.d.C.J., O.L.F.d.C., and P.P.d.B.; methodology, H.C.d.C.F., O.A.d.C.J., O.L.d.F.C., and P.P.d.B.; software, C.R.S., O.L.d.F.C., P.H.G.F., P.P.d.B., and R.d.S.d.M.; validation, H.C.d.C.F., C.R.S., O.L.F.d.C., and P.H.G.F.; formal analysis, O.A.d.C.J., H.C.d.C.F., and R.A.T.G.; investigation, R.F.G., A.O.d.A., and P.H.G.F.; resources, O.A.d.C.J., R.A.T.G., and R.F.G.; data curation, H.C.d.C.F., O.L.d.F.C., P.H.G.F., and A.O.d.A.; writing—original draft preparation, H.C.d.C.F., O.A.d.C.J., O.L.F.C., and R.F.G.; writing—review and editing, O.A.d.C.J., R.d.S.d.M., R.F.G., and R.A.T.G.; supervision, O.A.d.C.J., R.A.T.G., and R.A.T.G.; project administration, O.A.d.C.J., R.F.G., and R.A.T.G.; funding acquisition, O.A.d.C.J., R.F.G., and R.A.T.G. All authors have read and agreed to the published version of the manuscript.

Funding

The following institutions funded this research: National Council for Scientific and Technological Development (434838/2018-7), Coordination for the Improvement of Higher Education Personnel, and the Union Heritage Secretariat of the Ministry of Economy.

Acknowledgments

The authors are grateful for financial support from CNPq fellowship (Osmar Abílio de Carvalho Júnior, Renato Fontes Guimarães, and Roberto Arnaldo Trancoso Gomes). Special thanks are given to the research group of the Laboratory of Spatial Information System of the University of Brasilia for technical support. The authors thank the researchers from the Union Heritage Secretariat of the Ministry of Economy, who encouraged research with deep learning. Finally, the authors acknowledge the contribution of anonymous reviewers.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Laborte, A.G.; Gutierrez, M.A.; Balanza, J.G.; Saito, K.; Zwart, S.J.; Boschetti, M.; Murty, M.V.R.; Villano, L.; Aunario, J.K.; Reinke, R.; et al. RiceAtlas, a spatial database of global rice calendars and production. Sci. Data 2017, 4, 1–10.

- Frolking, S.; Qiu, J.; Boles, S.; Xiao, X.; Liu, J.; Zhuang, Y.; Li, C.; Qin, X. Combining remote sensing and ground census data to develop new maps of the distribution of rice agriculture in China. Glob. Biogeochem. Cycles 2002, 16, 38-1–38-10.

- Jin, X.; Kumar, L.; Li, Z.; Feng, H.; Xu, X.; Yang, G.; Wang, J. A review of data assimilation of remote sensing and crop models. Eur. J. Agron. 2018, 92, 141–152.

- Steele-Dunne, S.C.; McNairn, H.; Monsivais-Huertero, A.; Judge, J.; Liu, P.-W.; Papathanassiou, K. Radar Remote Sensing of Agricultural Canopies: A Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2249–2273.

- Vibhute, A.D.; Gawali, B.W. Analysis and Modeling of Agricultural Land use using Remote Sensing and Geographic Information System: A Review. Int. J. Eng. Res. Appl. 2013, 3, 81–91.

- Dong, J.; Xiao, X. Evolution of regional to global paddy rice mapping methods: A review. ISPRS J. Photogramm. Remote Sens. 2016, 119, 214–227.

- Kuenzer, C.; Knauer, K. Remote sensing of rice crop areas. Int. J. Remote Sens. 2013, 34, 2101–2139.

- Mosleh, M.K.; Hassan, Q.K.; Chowdhury, E.H. Application of remote sensors in mapping rice area and forecasting its production: A review. Sensors 2015, 15, 769–791.

- Fang, H. Rice crop area estimation of an administrative division in China using remote sensing data. Int. J. Remote Sens. 1998, 19, 3411–3419.

- McCloy, K.R.; Smith, F.R.; Robinson, M.R. Monitoring rice areas using LANDSAT MSS data. Int. J. Remote Sens. 1987, 8, 741–749.

- Turner, M.D.; Congalton, R.G. Classification of multi-temporal SPOT-XS satellite data for mapping rice fields on a West African floodplain. Int. J. Remote Sens. 1998, 19, 21–41.

- Liew, S.C. Application of multitemporal ERS-2 synthetic aperture radar in delineating rice cropping systems in the Mekong River Delta, Vietnam. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1412–1420.

- Kurosu, T.; Fujita, M.; Chiba, K. The identification of rice fields using multi-temporal ERS-1 C band SAR data. Int. J. Remote Sens. 1997, 18, 2953–2965.

- Panigrahy, S.; Chakraborty, M.; Sharma, S.A.; Kundu, N.; Ghose, S.C.; Pal, M. Early estimation of rice area using temporal ERS-1 synthetic aperture radar data a case study for the Howrah and Hughly districts of West Bengal, India. Int. J. Remote Sens. 1997, 18, 1827–1833.

- Okamoto, K.; Kawashima, H. Estimation of rice-planted area in the tropical zone using a combination of optical and microwave satellite sensor data. Int. J. Remote Sens. 1999, 20, 1045–1048.

- Diuk-Wasser, M.A.; Bagayoko, M.; Sogoba, N.; Dolo, G.; Touré, M.B.; Traore, S.F.; Taylor, C.E. Mapping rice field anopheline breeding habitats in Mali, West Africa, using Landsat ETM + sensor data. Int. J. Remote Sens. 2004, 25, 359–376.

- Kontgis, C.; Schneider, A.; Ozdogan, M. Mapping rice paddy extent and intensification in the Vietnamese Mekong River Delta with dense time stacks of Landsat data. Remote Sens. Environ. 2015, 169, 255–269.

- Nuarsa, I.W.; Nishio, F.; Hongo, C. Spectral Characteristics and Mapping of Rice Plants Using Multi-Temporal Landsat Data. J. Agric. Sci. 2011, 3, 54–67.

- Huang, J.; Wang, X.; Li, X.; Tian, H.; Pan, Z. Remotely Sensed Rice Yield Prediction Using Multi-Temporal NDVI Data Derived from NOAA’s-AVHRR. PLoS ONE 2013, 8, e0070816.

- Salam, M.A.; Rahman, H. Application of Remote Sensing and Geographic Information System (GIS) Techniques for Monitoring of Boro Rice Area Expansion in Bangladesh. Asian J. Geoinform. 2014, 14, 11–17.

- Singh, R.P.; Oza, S.R.; Pandya, M.R. Observing long-term changes in rice phenology using NOAA-AVHRR and DMSP-SSM/I satellite sensor measurements in Punjab, India. Curr. Sci. 2006, 91, 1217–1221.

- Kamthonkiat, D.; Honda, K.; Turral, H.; Tripathi, N.K.; Wuwongse, V. Discrimination of irrigated and rainfed rice in a tropical agricultural system using SPOT VEGETATION NDVI and rainfall data. Int. J. Remote Sens. 2005, 26, 2527–2547.

- Wang, L.; Zhang, F.C.; Jing, Y.S.; Jiang, X.D.; Yang, S.B.; Han, X.M. Multi-temporal detection of rice phenological stages using canopy spectrum. Rice Sci. 2014, 21, 108–115.

- Nguyen, T.T.H.; de Bie, C.A.J.M.; Ali, A.; Smaling, E.M.A.; Chu, T.H. Mapping the irrigated rice cropping patterns of the Mekong delta, Vietnam, through hyper-temporal SPOT NDVI image analysis. Int. J. Remote Sens. 2012, 33, 415–434.

- Gumma, M.K.; Nelson, A.; Thenkabail, P.S.; Singh, A.N. Mapping rice areas of South Asia using MODIS multitemporal data. J. Appl. Remote Sens. 2011, 5, 053547.

- Gumma, M.K.; Gauchan, D.; Nelson, A.; Pandey, S.; Rala, A. Temporal changes in rice-growing area and their impact on livelihood over a decade: A case study of Nepal. Agric. Ecosyst. Environ. 2011, 142, 382–392.

- Nuarsa, I.W.; Nishio, F.; Hongo, C.; Mahardika, I.G. Using variance analysis of multitemporal MODIS images for rice field mapping in Bali Province, Indonesia. Int. J. Remote Sens. 2012, 33, 5402–5417.

- Peng, D.; Huete, A.R.; Huang, J.; Wang, F.; Sun, H. Detection and estimation of mixed paddy rice cropping patterns with MODIS data. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 13–23.

- Xiao, X.; Boles, S.; Liu, J.; Zhuang, D.; Frolking, S.; Li, C.; Salas, W.; Moore, B. Mapping paddy rice agriculture in southern China using multi-temporal MODIS images. Remote Sens. Environ. 2005, 95, 480–492.

- Xiao, X.; Boles, S.; Frolking, S.; Li, C.; Babu, J.Y.; Salas, W.; Moore, B. Mapping paddy rice agriculture in South and Southeast Asia using multi-temporal MODIS images. Remote Sens. Environ. 2006, 100, 95–113.

- Lopez-Sanchez, J.M.; Ballester-Berman, J.D.; Hajnsek, I. First Results of Rice Monitoring Practices in Spain by Means of Time Series of TerraSAR-X Dual-Pol Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 412–422.

- Koppe, W.; Gnyp, M.L.; Hütt, C.; Yao, Y.; Miao, Y.; Chen, X.; Bareth, G. Rice monitoring with multi-temporal and dual-polarimetric TerraSAR-X data. Int. J. Appl. Earth Obs. Geoinf. 2012, 21, 568–576.

- Nelson, A.; Setiyono, T.; Rala, A.B.; Quicho, E.D.; Raviz, J.V.; Abonete, P.J.; Maunahan, A.A.; Garcia, C.A.; Bhatti, H.Z.M.; Villano, L.S.; et al. Towards an operational SAR-based rice monitoring system in Asia: Examples from 13 demonstration sites across Asia in the RIICE project. Remote Sens. 2014, 6, 10773–10812.

- Pei, Z.; Zhang, S.; Guo, L.; McNairn, H.; Shang, J.; Jiao, X. Rice identification and change detection using TerraSAR-X data. Can. J. Remote Sens. 2011, 37, 151–156.

- Asilo, S.; de Bie, K.; Skidmore, A.; Nelson, A.; Barbieri, M.; Maunahan, A. Complementarity of two rice mapping approaches: Characterizing strata mapped by hypertemporal MODIS and rice paddy identification using multitemporal SAR. Remote Sens. 2014, 6, 12789–12814.

- Busetto, L.; Casteleyn, S.; Granell, C.; Pepe, M.; Barbieri, M.; Campos-Taberner, M.; Casa, R.; Collivignarelli, F.; Confalonieri, R.; Crema, A.; et al. Downstream Services for Rice Crop Monitoring in Europe: From Regional to Local Scale. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 5423–5441.

- Corcione, V.; Nunziata, F.; Mascolo, L.; Migliaccio, M. A study of the use of COSMO-SkyMed SAR PingPong polarimetric mode for rice growth monitoring. Int. J. Remote Sens. 2016, 37, 633–647.

- Phan, H.; Le Toan, T.; Bouvet, A.; Nguyen, L.D.; Duy, T.P.; Zribi, M. Mapping of rice varieties and sowing date using X-band SAR data. Sensors 2018, 18, 316.

- Chakraborty, M.; Panigrahy, S.; Sharma, S.A. Discrimination of rice crop grown under different cultural practices using temporal ERS-1 synthetic aperture radar data. ISPRS J. Photogramm. Remote Sens. 1997, 52, 183–191.

- Jia, M.; Tong, L.; Chen, Y.; Wang, Y.; Zhang, Y. Rice biomass retrieval from multitemporal ground-based scatterometer data and RADARSAT-2 images using neural networks. J. Appl. Remote Sens. 2013, 7, 073509.

- McNairn, H.; Brisco, B. The application of C-band polarimetric SAR for agriculture: A review. Can. J. Remote Sens. 2004, 30, 525–542.

- Chakraborty, M.; Manjunath, K.R.; Panigrahy, S.; Kundu, N.; Parihar, J.S. Rice crop parameter retrieval using multi-temporal, multi-incidence angle Radarsat SAR data. ISPRS J. Photogramm. Remote Sens. 2005, 59, 310–322.

- Choudhury, I.; Chakraborty, M. SAR signature investigation of rice crop using RADARSAT data. Int. J. Remote Sens. 2006, 27, 519–534.

- He, Z.; Li, S.; Wang, Y.; Dai, L.; Lin, S. Monitoring rice phenology based on backscattering characteristics of multi-temporal RADARSAT-2 datasets. Remote Sens. 2018, 10, 340.

- Shao, Y.; Fan, X.; Liu, H.; Xiao, J.; Ross, S.; Brisco, B.; Brown, R.; Staples, G. Rice monitoring and production estimation using multitemporal RADARSAT. Remote Sens. Environ. 2001, 76, 310–325.

- Wu, F.; Wang, C.; Zhang, H.; Zhang, B.; Tang, Y. Rice crop monitoring in South China with RADARSAT-2 quad-polarization SAR data. IEEE Geosci. Remote Sens. Lett. 2011, 8, 196–200.

- Yonezawa, C.; Negishi, M.; Azuma, K.; Watanabe, M.; Ishitsuka, N.; Ogawa, S.; Saito, G. Growth monitoring and classification of rice fields using multitemporal RADARSAT-2 full-polarimetric data. Int. J. Remote Sens. 2012, 33, 5696–5711.

- Bouvet, A.; Le Toan, T.; Lam-Dao, N. Monitoring of the rice cropping system in the Mekong Delta using ENVISAT/ASAR dual polarization data. IEEE Trans. Geosci. Remote Sens. 2009, 47, 517–526.

- Bouvet, A.; Le Toan, T. Use of ENVISAT/ASAR wide-swath data for timely rice fields mapping in the Mekong River Delta. Remote Sens. Environ. 2011, 115, 1090–1101.

- Chen, J.; Lin, H.; Pei, Z. Application of ENVISAT ASAR data in mapping rice crop growth in southern China. IEEE Geosci. Remote Sens. Lett. 2007, 4, 431–435.

- Nguyen, D.B.; Clauss, K.; Cao, S.; Naeimi, V.; Kuenzer, C.; Wagner, W. Mapping Rice Seasonality in the Mekong Delta with multi-year ENVISAT ASAR WSM Data. Remote Sens. 2015, 7, 15868–15893.

- Yang, S.; Shen, S.; Li, B.; Le Toan, T.; He, W. Rice mapping and monitoring using ENVISAT ASAR data. IEEE Geosci. Remote Sens. Lett. 2008, 5, 108–112.

- Wang, C.; Wu, J.; Zhang, Y.; Pan, G.; Qi, J.; Salas, W.A. Characterizing L-band scattering of paddy rice in southeast China with radiative transfer model and multitemporal ALOS/PALSAR imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 988–998.

- Zhang, Y.; Wang, C.; Wu, J.; Qi, J.; Salas, W.A. Mapping paddy rice with multitemporal ALOS/PALSAR imagery in southeast China. Int. J. Remote Sens. 2009, 30, 6301–6315.

- Zhang, Y.; Wang, C.; Zhang, Q. Identifying paddy fields with dual-polarization ALOS/PALSAR data. Can. J. Remote Sens. 2011, 37, 103–111.

- Singha, M.; Dong, J.; Zhang, G.; Xiao, X. High resolution paddy rice maps in cloud-prone Bangladesh and Northeast India using Sentinel-1 data. Sci. Data 2019, 6, 1–10.

- Clauss, K.; Ottinger, M.; Kuenzer, C. Mapping rice areas with Sentinel-1 time series and superpixel segmentation. Int. J. Remote Sens. 2018, 39, 1399–1420.

- Bazzi, H.; Baghdadi, N.; Ienco, D.; El Hajj, M.; Zribi, M.; Belhouchette, H.; Escorihuela, M.J.; Demarez, V. Mapping irrigated areas using Sentinel-1 time series in Catalonia, Spain. Remote Sens. 2019, 11, 1836.

- Mandal, D.; Kumar, V.; Bhattacharya, A.; Rao, Y.S.; Siqueira, P.; Bera, S. Sen4Rice: A processing chain for differentiating early and late transplanted rice using time-series Sentinel-1 SAR data with Google Earth Engine. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1947–1951.

- Inoue, S.; Ito, A.; Yonezawa, C. Mapping Paddy fields in Japan by using a Sentinel-1 SAR time series supplemented by Sentinel-2 images on Google Earth Engine. Remote Sens. 2020, 12, 1622.

- Clauss, K.; Ottinger, M.; Leinenkugel, P.; Kuenzer, C. Estimating rice production in the Mekong Delta, Vietnam, utilizing time series of Sentinel-1 SAR data. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 574–585.

- Minh, H.V.T.; Avtar, R.; Mohan, G.; Misra, P.; Kurasaki, M. Monitoring and mapping of rice cropping pattern in flooding area in the Vietnamese Mekong delta using Sentinel-1A data: A case of An Giang province. ISPRS Int. J. Geo-Inf. 2019, 8, 211.

- Nguyen, D.B.; Gruber, A.; Wagner, W. Mapping rice extent and cropping scheme in the Mekong Delta using Sentinel-1A data. Remote Sens. Lett. 2016, 7, 1209–1218.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Minh, V.Q. Assessment of Sentinel-1A data for rice crop classification using random forests and support vector machines. Geocarto Int. 2018, 33, 587–601.

- Onojeghuo, A.O.; Blackburn, G.A.; Wang, Q.; Atkinson, P.M.; Kindred, D.; Miao, Y. Mapping paddy rice fields by applying machine learning algorithms to multi-temporal Sentinel-1A and Landsat data. Int. J. Remote Sens. 2018, 39, 1042–1067.

- Tian, H.; Wu, M.; Wang, L.; Niu, Z. Mapping early, middle and late rice extent using Sentinel-1A and Landsat-8 data in the Poyang Lake Plain, China. Sensors 2018, 18, 185.

- Torbick, N.; Chowdhury, D.; Salas, W.; Qi, J. Monitoring Rice Agriculture across Myanmar Using Time Series Sentinel-1 Assisted by Landsat-8 and PALSAR-2. Remote Sens. 2017, 9, 119.

- Zhang, X.; Wu, B.; Ponce-Campos, G.E.; Zhang, M.; Chang, S.; Tian, F. Mapping up-to-date paddy rice extent at 10 m resolution in China through the integration of optical and synthetic aperture radar images. Remote Sens. 2018, 10, 1200.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep Learning for Generic Object Detection: A Survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Yu, X.; Wu, X.; Luo, C.; Ren, P. Deep learning in remote sensing scene classification: A data augmentation enhanced convolutional neural network framework. GISci. Remote Sens. 2017, 54, 741–758.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Mou, L.; Bruzzone, L.; Zhu, X.X. Learning spectral-spatial-temporal features via a recurrent convolutional neural network for change detection in multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 924–935.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Lyu, H.; Lu, H.; Mou, L. Learning a transferable change rule from a recurrent neural network for land cover change detection. Remote Sens. 2016, 8, 506.

- He, T.; Xie, C.; Liu, Q.; Guan, S.; Liu, G. Evaluation and comparison of random forest and A-LSTM networks for large-scale winter wheat identification. Remote Sens. 2019, 11, 1665.

- Rußwurm, M.; Körner, M. Multi-temporal land cover classification with sequential recurrent encoders. ISPRS Int. J. Geo-Inf. 2018, 7, 129.

- Sun, Z.; Di, L.; Fang, H. Using long short-term memory recurrent neural network in land cover classification on Landsat and Cropland data layer time series. Int. J. Remote Sens. 2019, 40, 593–614.

- Teimouri, N.; Dyrmann, M.; Jørgensen, R.N. A novel spatio-temporal FCN-LSTM network for recognizing various crop types using multi-temporal radar images. Remote Sens. 2019, 11, 893.

- Zhou, Y.; Luo, J.; Feng, L.; Yang, Y.; Chen, Y.; Wu, W. Long-short-term-memory-based crop classification using high-resolution optical images and multi-temporal SAR data. GISci. Remote Sens. 2019, 56, 1170–1191.

- Ienco, D.; Gaetano, R.; Dupaquier, C.; Maurel, P. Land Cover Classification via Multitemporal Spatial Data by Deep Recurrent Neural Networks. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1685–1689.

- Wang, H.; Zhao, X.; Zhang, X.; Wu, D.; Du, X. Long time series land cover classification in China from 1982 to 2015 based on Bi-LSTM deep learning. Remote Sens. 2019, 11, 1639.

- Reddy, D.S.; Prasad, P.R.C. Prediction of vegetation dynamics using NDVI time series data and LSTM. Model. Earth Syst. Environ. 2018, 4, 409–419.

- USDA FAS—United States Department of Agriculture—Foreign Agricultural Service. World Agricultural Production. Available online: https://apps.fas.usda.gov/psdonline/circulars/production.pdf (accessed on 19 May 2020).

- Companhia Nacional de Abastecimento. Acompanhamento da Safra Brasileira: Grãos, Quarto Levantamento—Janeiro/2020; Companhia Nacional de Abastecimento: Brasilia, Brazil, 2020; Volume 7, p. 104.

- IBGE—Instituto Brasileiro de Geografia e Estatística. Cidades@. Available online: https://cidades.ibge.gov.br/ (accessed on 25 May 2020).

- IBGE—Instituto Brasileiro de Geografia e Estatística. Produção Agrícola Municipal—PAM. Available online: https://www.ibge.gov.br/estatisticas/economicas/agricultura-e-pecuaria/9117-producao-agricola-municipal-culturas-temporarias-e-permanentes.html?=&t=o-que-e (accessed on 25 May 2020).

- Robaina, L.E.D.S.; Trentin, R.; Laurent, F. Compartimentação do Estado do Rio Grande do Sul, Brasil, através do Uso de Geomorphons Obtidos em Classificação Topográfica Automatizada. Rev. Bras. Geomorfol. 2016, 17.

- Trentin, R.; Robaina, L.E.D.S. Study of the landforms of the Ibicuí River basin with use of topographic position index. Rev. Bras. Geomorfol. 2018, 19.

- Martins, L.C.; Wildner, W.; Hartmann, L.A. Estratigrafia dos derrames da Província Vulcânica Paraná na região oeste do Rio Grande do Sul, Brasil, com base em sondagem, perfilagem gamaespectrométrica e geologia de campo. Pesqui. Geociências 2011, 38, 15.

- Moreira, A.; Bremm, C.; Fontana, D.C.; Kuplich, T.M. Seasonal dynamics of vegetation indices as a criterion for grouping grassland typologies. Sci. Agric. 2019, 76, 24–32.

- Maltchik, L. Three new wetlands inventories in Brazil. Interciencia 2003, 28, 421–423.

- Guadagnin, D.L.; Peter, A.S.; Rolon, A.S.; Stenert, C.; Maltchik, L. Does Non-Intentional Flooding of Rice Fields After Cultivation Contribute to Waterbird Conservation in Southern Brazil? Waterbirds 2012, 35, 371–380.

- Machado, I.F.; Maltchik, L. Can management practices in rice fields contribute to amphibian conservation in southern Brazilian wetlands? Aquat. Conserv. Mar. Freshw. Ecosyst. 2010, 20, 39–46.

- Rolon, A.S.; Maltchik, L. Does flooding of rice fields after cultivation contribute to wetland plant conservation in southern Brazil? Appl. Veg. Sci. 2010, 13, 26–35.

- Stenert, C.; Bacca, R.C.; Maltchik, L.; Rocha, O. Can hydrologic management practices of rice fields contribute to macroinvertebrate conservation in southern Brazil wetlands? Hydrobiologia 2009, 635, 339–350.

- Maltchik, L.; Stenert, C.; Batzer, D.P. Can rice field management practices contribute to the conservation of species from natural wetlands? Lessons from Brazil. Basic Appl. Ecol. 2017, 18, 50–56.

- Torres, R.; Snoeij, P.; Geudtner, D.; Bibby, D.; Davidson, M.; Attema, E.; Potin, P.; Rommen, B.Ö.; Floury, N.; Brown, M.; et al. GMES Sentinel-1 mission. Remote Sens. Environ. 2012, 120, 9–24.

- Abade, N.; Júnior, O.; Guimarães, R.; de Oliveira, S. Comparative Analysis of MODIS Time-Series Classification Using Support Vector Machines and Methods Based upon Distance and Similarity Measures in the Brazilian Cerrado-Caatinga Boundary. Remote Sens. 2015, 7, 12160–12191.

- Hüttich, C.; Gessner, U.; Herold, M.; Strohbach, B.J.; Schmidt, M.; Keil, M.; Dech, S. On the suitability of MODIS time series metrics to map vegetation types in dry savanna ecosystems: A case study in the Kalahari of NE Namibia. Remote Sens. 2009, 1, 620–643.

- Filipponi, F. Sentinel-1 GRD Preprocessing Workflow. Proceedings 2019, 18, 11.

- Chen, J.; Jönsson, P.; Tamura, M.; Gu, Z.; Matsushita, B.; Eklundh, L. A simple method for reconstructing a high-quality NDVI time-series data set based on the Savitzky-Golay filter. Remote Sens. Environ. 2004, 91, 332–344.

- Sakamoto, T.; Wardlow, B.D.; Gitelson, A.A.; Verma, S.B.; Suyker, A.E.; Arkebauer, T.J. A Two-Step Filtering approach for detecting maize and soybean phenology with time-series MODIS data. Remote Sens. Environ. 2010, 114, 2146–2159.

- Savitzky, A.; Golay, M.J.E. Smoothing and Differentiation of Data by Simplified Least Squares Procedures. Anal. Chem. 1964, 36, 1627–1639.

- Maghsoudi, Y.; Collins, M.J.; Leckie, D. Speckle reduction for the forest mapping analysis of multi-temporal Radarsat-1 images. Int. J. Remote Sens. 2012, 33, 1349–1359.

- Martinez, J.M.; Le Toan, T. Mapping of flood dynamics and spatial distribution of vegetation in the Amazon floodplain using multitemporal SAR data. Remote Sens. Environ. 2007, 108, 209–223.

- Quegan, S.; Yu, J.J. Filtering of multichannel SAR images. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2373–2379.

- Satalino, G.; Mattia, F.; Le Toan, T.; Rinaldi, M. Wheat Crop Mapping by Using ASAR AP Data. IEEE Trans. Geosci. Remote Sens. 2009, 47, 527–530.

- Ciuc, M.; Bolon, P.; Trouvé, E.; Buzuloiu, V.; Rudant, J. Adaptive-neighborhood speckle removal in multitemporal synthetic aperture radar images. Appl. Opt. 2001, 40, 5954.

- Trouvé, E.; Chambenoit, Y.; Classeau, N.; Bolon, P. Statistical and Operational Performance Assessment of Multitemporal SAR Image Filtering. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2519–2530.

- Lopes, A.; Touzi, R.; Nezry, E. Adaptive Speckle Filters and Scene Heterogeneity. IEEE Trans. Geosci. Remote Sens. 1990, 28, 992–1000.

- Geng, L.; Ma, M.; Wang, X.; Yu, W.; Jia, S.; Wang, H. Comparison of eight techniques for reconstructing multi-satellite sensor time-series NDVI data sets in the Heihe River Basin, China. Remote Sens. 2014, 6, 2024–2049.

- Ren, J.; Chen, Z.; Zhou, Q.; Tang, H. Regional yield estimation for winter wheat with MODIS-NDVI data in Shandong, China. Int. J. Appl. Earth Obs. Geoinf. 2008, 10, 403–413.

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent Neural Network Regularization. arXiv 2014, arXiv:1409.2329.

- Greff, K.; Srivastava, R.K.; Koutnik, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232.

- Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF Models for Sequence Tagging. arXiv 2015, arXiv:1508.01991.

- Ma, X.; Hovy, E. End-to-end sequence labeling via bi-directional LSTM-CNNs-CRF. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; Volume 2, p. 1064.

- Hameed, Z.; Garcia-Zapirain, B.; Ruiz, I.O. A computationally efficient BiLSTM based approach for the binary sentiment classification. In Proceedings of the 2019 IEEE 19th International Symposium on Signal Processing and Information Technology, ISSPIT 2019, Ajman, UAE, 10–12 December 2019.

- Ismail Fawaz, H.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.-A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

- Chirici, G.; Mura, M.; McInerney, D.; Py, N.; Tomppo, E.O.; Waser, L.T.; Travaglini, D.; McRoberts, R.E. A meta-analysis and review of the literature on the k-Nearest Neighbors technique for forestry applications that use remotely sensed data. Remote Sens. Environ. 2016, 176, 282–294.

- Vapnik, V.N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Meng, Q.; Cieszewski, C.J.; Madden, M.; Borders, B.E. K Nearest Neighbor Method for Forest Inventory Using Remote Sensing Data. GISci. Remote Sens. 2007, 44, 149–165.

- Fukunaga, K. Introduction to Statistical Pattern Recognition; Elsevier: Amsterdam, The Netherlands, 1990; ISBN 9780080478654.

- Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201.

- Estabrooks, A.; Jo, T.; Japkowicz, N. A multiple resampling method for learning from imbalanced data sets. Comput. Intell. 2004, 20, 18–36.

- Congalton, R.G.; Oderwald, R.G.; Mead, R.A. Assessing Landsat Classification Accuracy Using Discrete Multivariate Analysis Statistical Techniques. Photogramm. Eng. Remote Sens. 1983, 49, 1671–1678.

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

- McHugh, M.L. Lessons in biostatistics interrater reliability: The kappa statistic. Biochem. Med. 2012, 22, 276–282.

- McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

- Foody, G.M. Thematic Map Comparison. Photogramm. Eng. Remote Sens. 2004, 70, 627–633.

- Steinmetz, S.; Braga, H.J. Zoneamento de arroz irrigado por época de semeadura nos estados do Rio Grande do Sul e de Santa Catarina. Rev. Bras. Agrometeorol. 2001, 9, 429–438.

- IRGA—Instituto Rio Grandense do Arroz. Boletim de Resultados da Lavoura de Arroz Safra 2017/18; IRGA—Instituto Rio Grandense do Arroz: Porto Alegre, Brazil, 2018; pp. 1–19.

- Li, H.; Lu, W.; Liu, Y.; Zhang, X. Effect of different tillage methods on rice growth and soil ecology. Chin. J. Appl. Ecol. 2001, 12, 553–556.

- Zhong, L.; Hu, L.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).