Use of UAV-Photogrammetry for Quasi-Vertical Wall Surveying

Abstract

1. Introduction

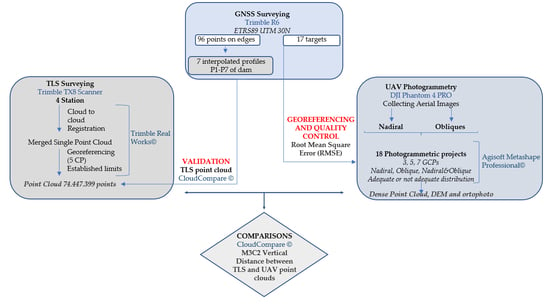

2. Materials and Methods

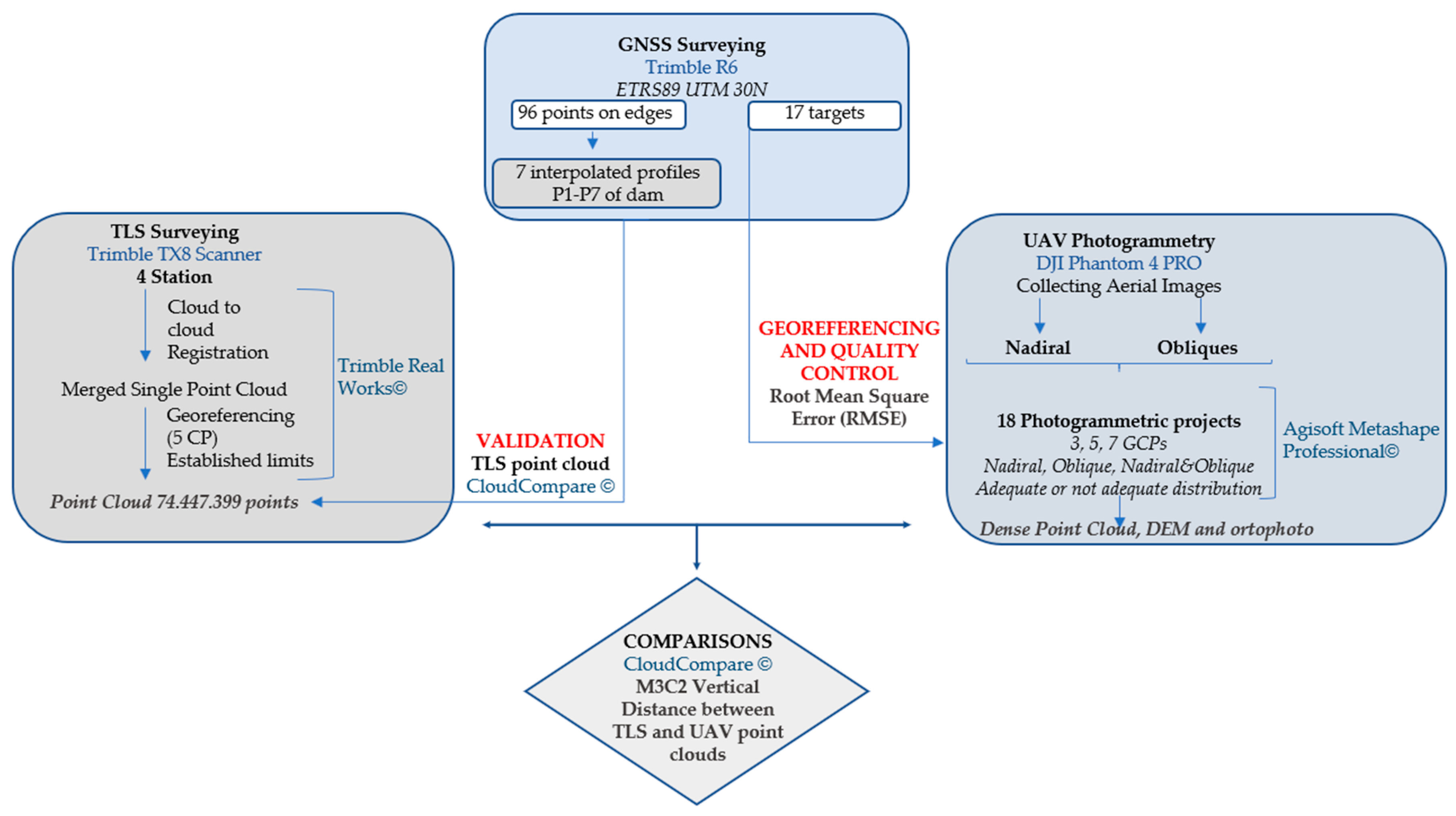

2.1. Study Site

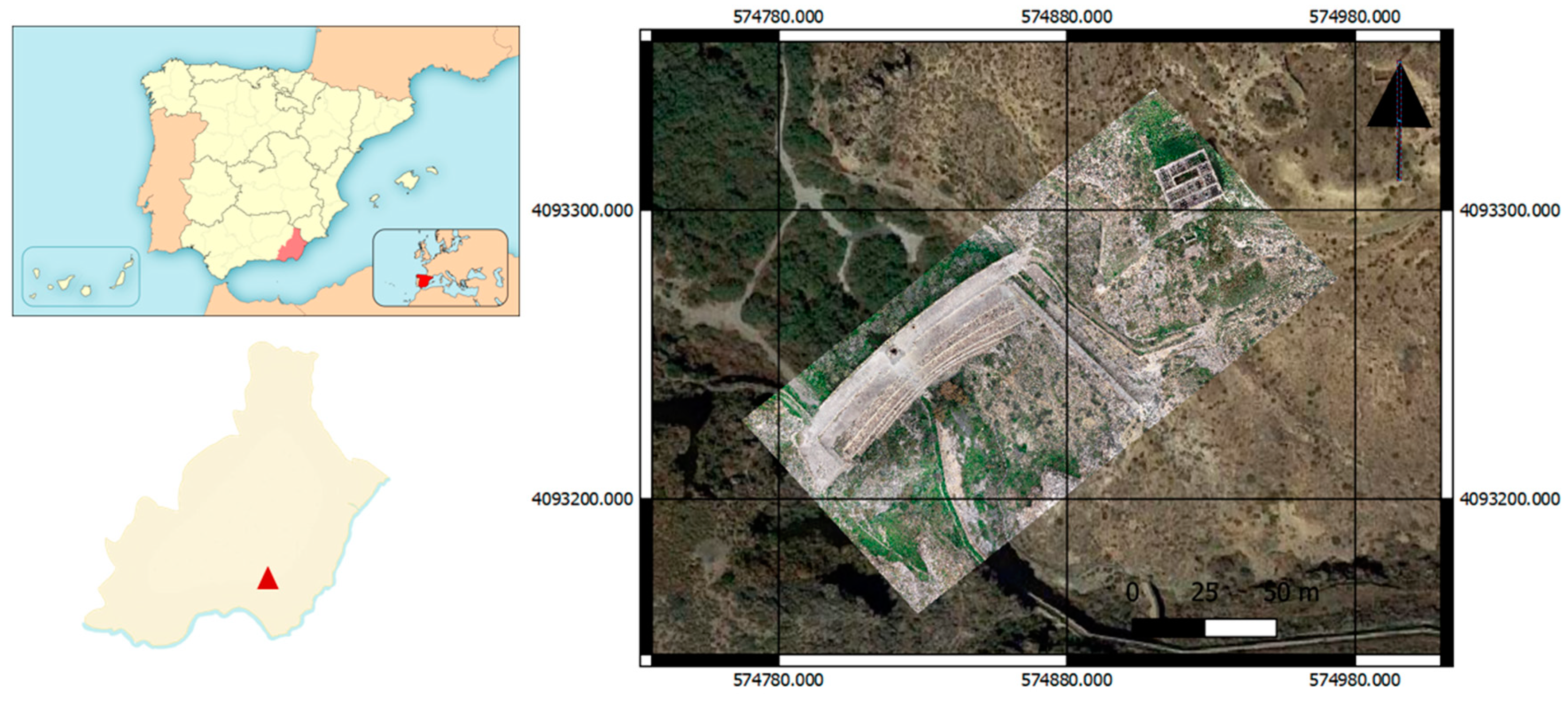

2.2. GNSS Surveying of Dam Edges and Ground Control Points

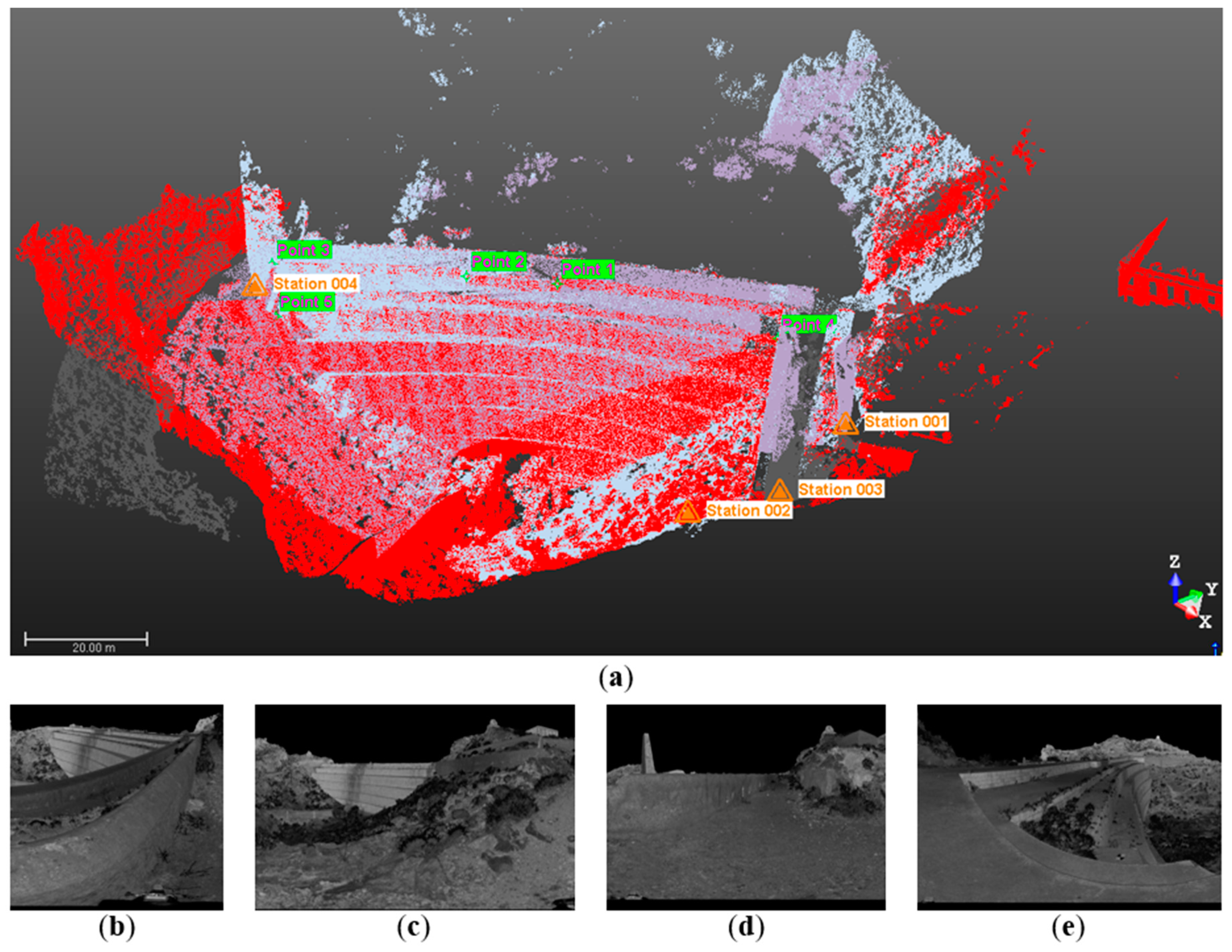

2.3. Topographic Surveying Using TLS

2.3.1. Data Acquisition

2.3.2. Data Processing

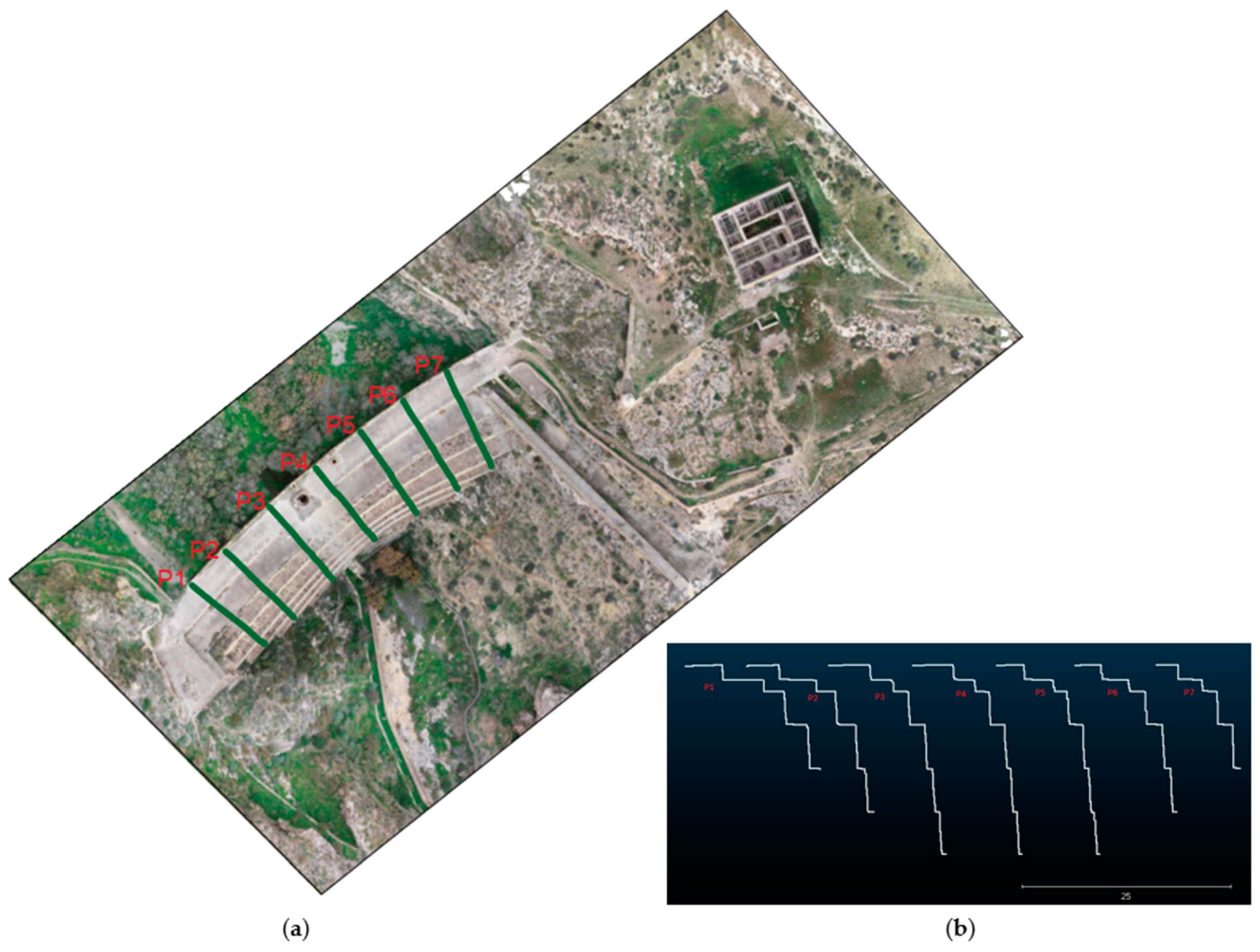

2.4. Topographic Surveying Using UAV Photogrammetry

2.4.1. Image Capture

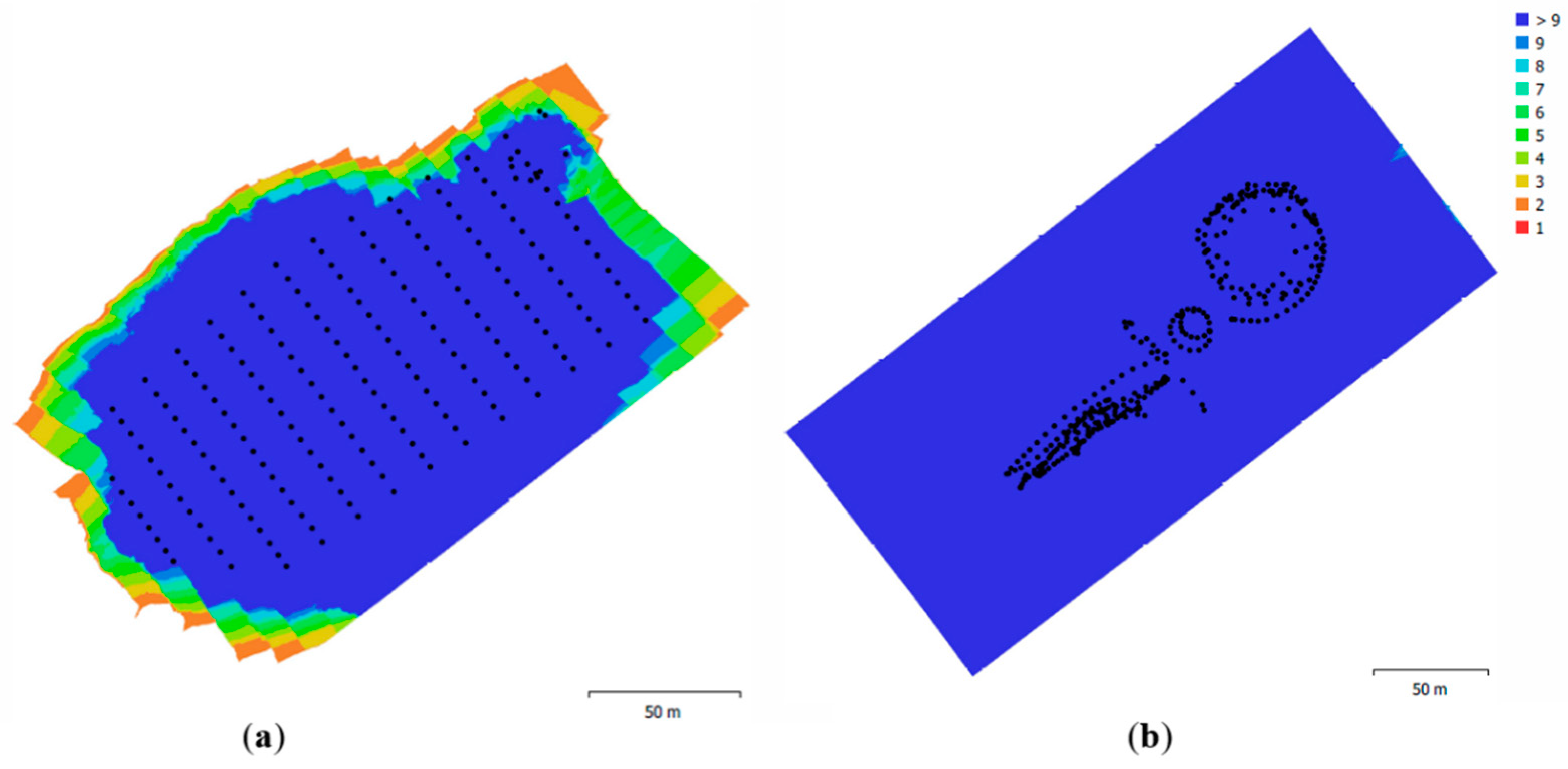

2.4.2. Image Processing

2.4.3. Accuracy Assessment

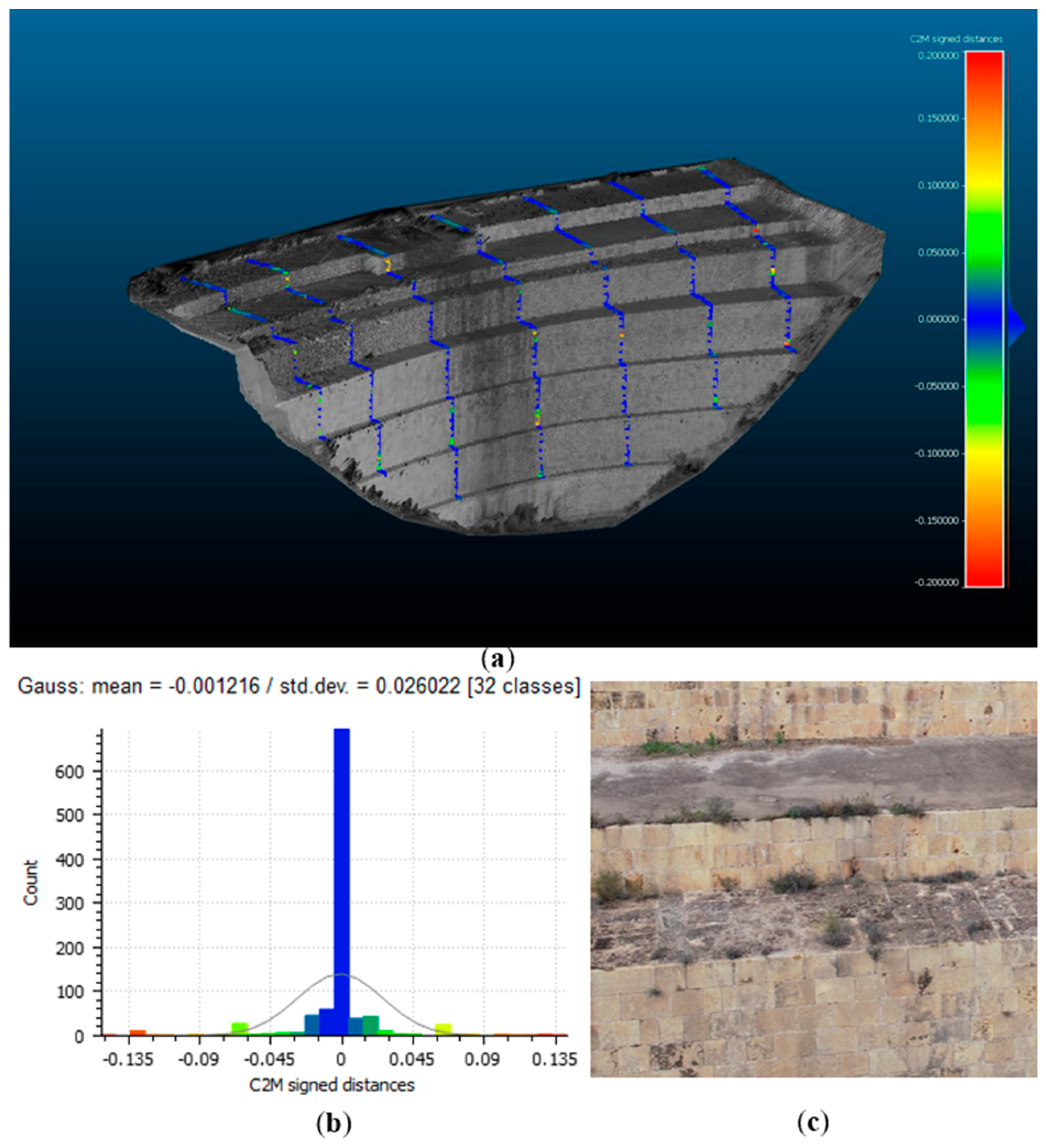

2.5. Point Cloud Management

3. Results

3.1. Validation of Data Derived From TLS in Comparison With Theoretical Profiles Obtained by GNSS

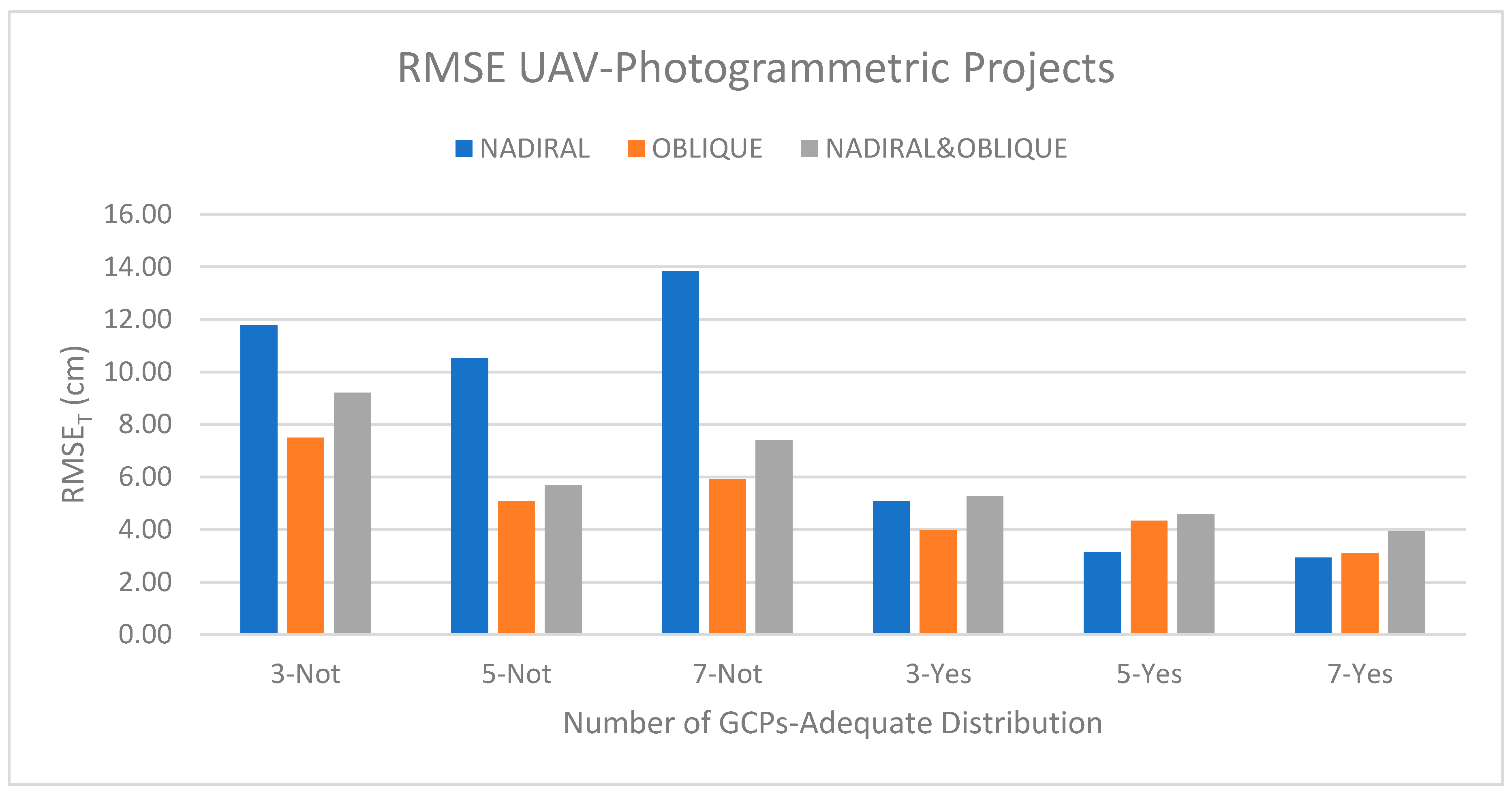

3.2. RMSE UAV-Photogrammetric Projects

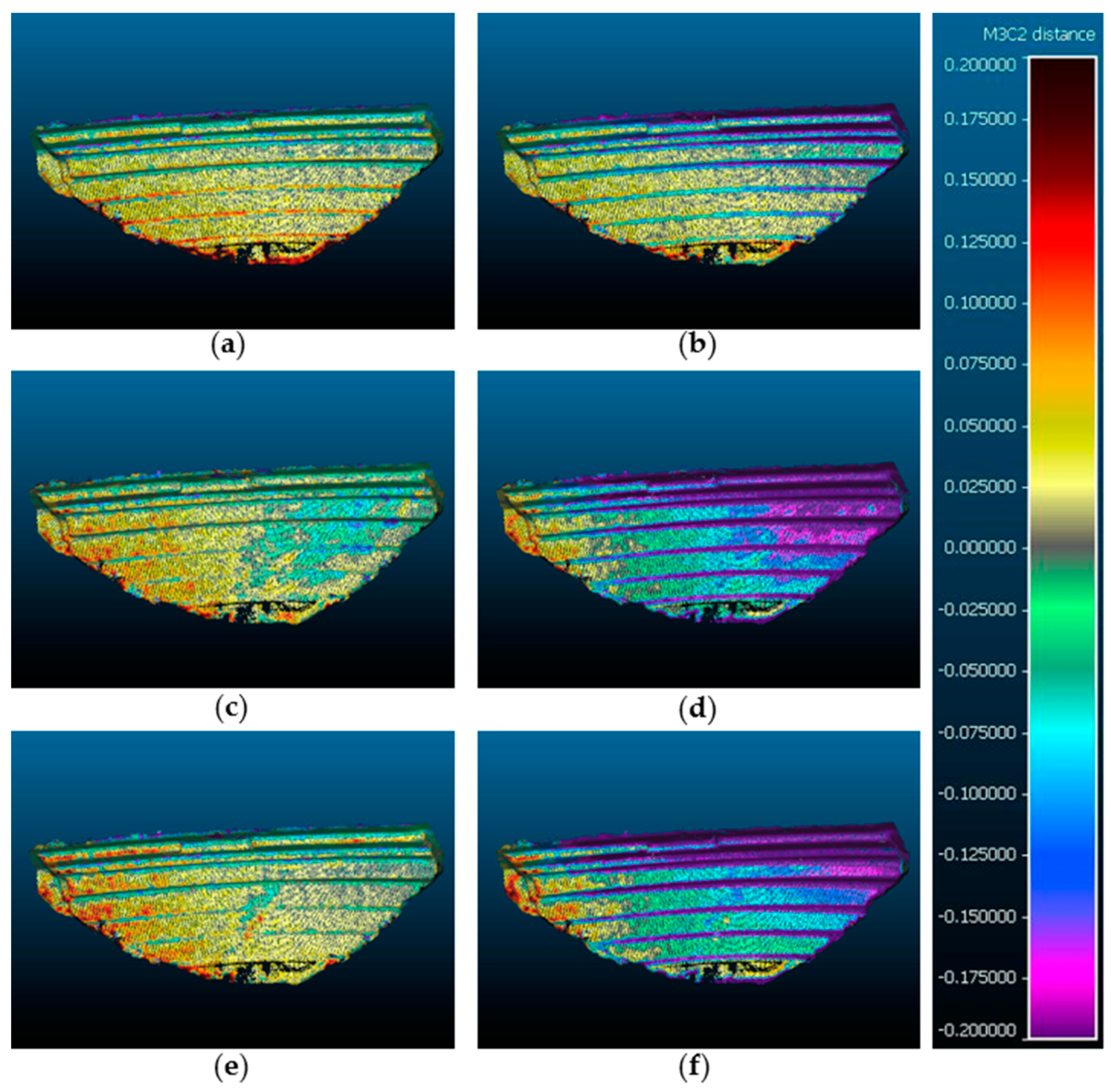

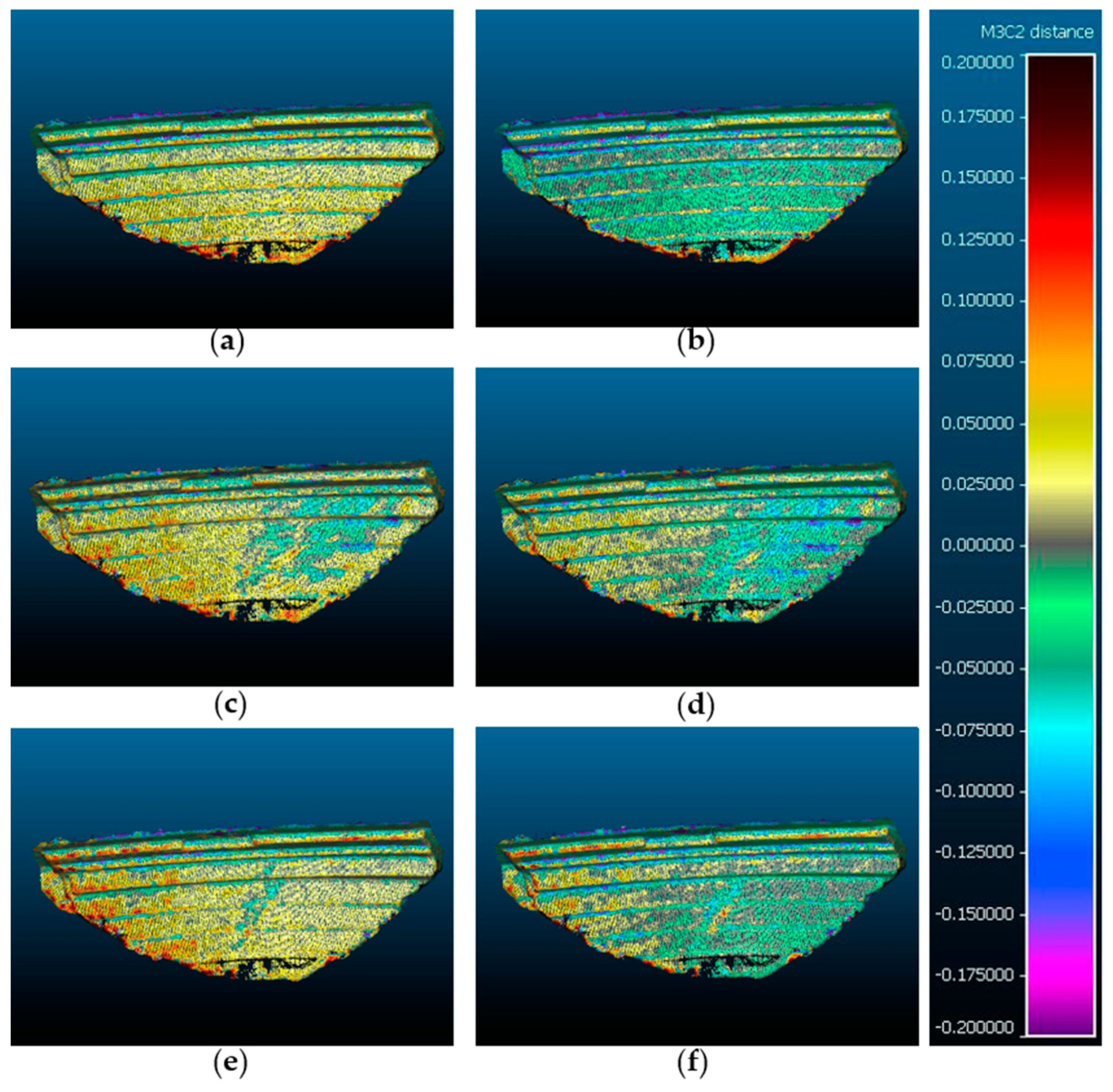

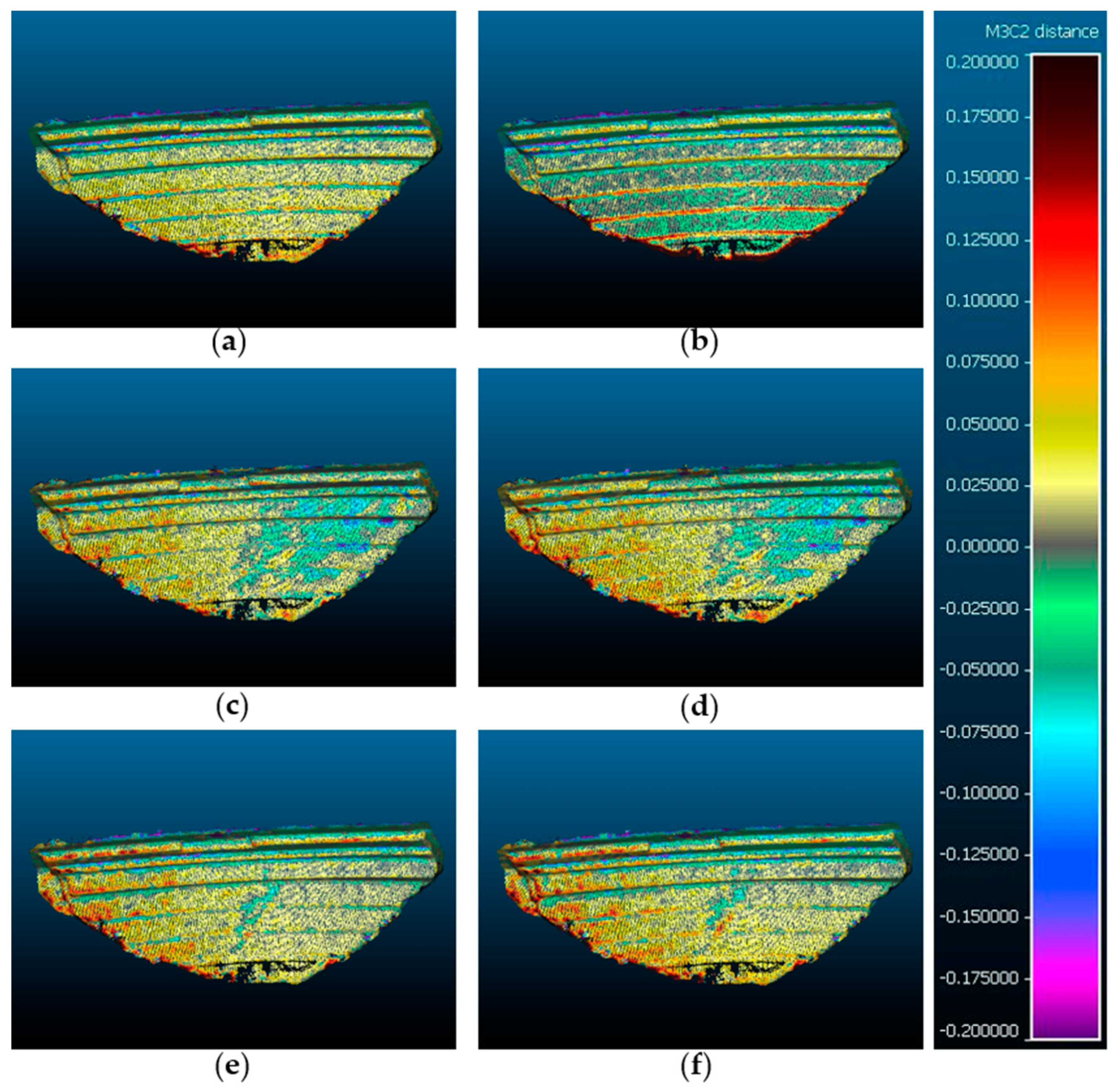

3.3. Vertical Distances between the Point Clouds Obtained by TLS and UAV Photogrammetry

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Station | Linked Station | Cloud to Cloud Error (cm) | Common Points (%) | Confidence (%) |
|---|---|---|---|---|
| SS-1 | SS-2 | 0.237 | 35 | 97 |
| SS-1 | SS-3 | 0.278 | 18 | 80 |
| SS-1 | SS-4 | 0.469 | 48 | 99 |
| SS-2 | SS-1 | 0.237 | 35 | 97 |
| SS-2 | SS-4 | 0.381 | 57 | 100 |
| SS-3 | SS-1 | 0.278 | 18 | 80 |
| SS-4 | SS-1 | 0.469 | 48 | 99 |
| SS-4 | SS-2 | 0.381 | 57 | 100 |

| Point | Station | X-Error (cm) | Y-Error (cm) | Z-Error (cm) | Total Error (cm) |
|---|---|---|---|---|---|
| Point-1 | SS-1 | −1.062 | 0.491 | 0.404 | 1.238 |
| Point-2 | SS-2 | −1.841 | −2.341 | −1.459 | 3.316 |
| Point-3 | SS-2 | 0.741 | 2.998 | 1.619 | 3.487 |
| Point-4 | SS-4 | 1.741 | 0.973 | 0.354 | 2.026 |
| Point-5 | SS-2 | 0.420 | −2.120 | −0.917 | 2.348 |
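The Total Error column is consistent with the Euclidean norm of the three axis errors. The following is a minimal sketch under that assumption; the name `total_error` and the `ROWS` layout are illustrative and do not come from the article. It recomputes each total from the tabulated X, Y, and Z errors and prints it next to the reported value.

```python
import math

# Per-point errors in cm, copied from the table above:
# (point, station, x_error, y_error, z_error, reported_total)
ROWS = [
    ("Point-1", "SS-1", -1.062, 0.491, 0.404, 1.238),
    ("Point-2", "SS-2", -1.841, -2.341, -1.459, 3.316),
    ("Point-3", "SS-2", 0.741, 2.998, 1.619, 3.487),
    ("Point-4", "SS-4", 1.741, 0.973, 0.354, 2.026),
    ("Point-5", "SS-2", 0.420, -2.120, -0.917, 2.348),
]


def total_error(dx: float, dy: float, dz: float) -> float:
    """Euclidean norm of the axis errors (assumed definition of Total Error)."""
    return math.sqrt(dx**2 + dy**2 + dz**2)


for point, station, dx, dy, dz, reported in ROWS:
    computed = total_error(dx, dy, dz)
    print(f"{point} ({station}): computed {computed:.3f} cm, reported {reported:.3f} cm")
```

Under this assumption the recomputed totals match the reported ones to within 0.001 cm, which is the rounding precision of the table.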

| Photogrammetric Project ID | Photographic Orientation | Number of GCPs Used | Adequate Distribution |
|---|---|---|---|
| 1 | Nadiral | 3 | Yes |
| 2 | Oblique | 3 | Yes |
| 3 | Nadiral & Oblique | 3 | Yes |
| 4 | Nadiral | 3 | No |
| 5 | Oblique | 3 | No |
| 6 | Nadiral & Oblique | 3 | No |
| 7 | Nadiral | 5 | Yes |
| 8 | Oblique | 5 | Yes |
| 9 | Nadiral & Oblique | 5 | Yes |
| 10 | Nadiral | 5 | No |
| 11 | Oblique | 5 | No |
| 12 | Nadiral & Oblique | 5 | No |
| 13 | Nadiral | 7 | Yes |
| 14 | Oblique | 7 | Yes |
| 15 | Nadiral & Oblique | 7 | Yes |
| 16 | Nadiral | 7 | No |
| 17 | Oblique | 7 | No |
| 18 | Nadiral & Oblique | 7 | No |
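The 18 projects form a full factorial design: three GCP counts, two distribution conditions, and three photographic orientations. As a hedged illustration, the table can be regenerated with a Cartesian product. The variable names and the dictionary layout below are assumptions made for this sketch, not part of the article.

```python
from itertools import product

# Factor levels read from the table above.
GCP_COUNTS = (3, 5, 7)
ADEQUATE_DISTRIBUTION = (True, False)  # "Yes" / "No" in the table
ORIENTATIONS = ("Nadiral", "Oblique", "Nadiral & Oblique")

# The ordering (GCP count, then distribution, then orientation) mirrors the
# row order of the table, so the generated IDs line up with project IDs 1-18.
projects = [
    {
        "id": project_id,
        "orientation": orientation,
        "gcps": gcps,
        "adequate_distribution": adequate,
    }
    for project_id, (gcps, adequate, orientation) in enumerate(
        product(GCP_COUNTS, ADEQUATE_DISTRIBUTION, ORIENTATIONS), start=1
    )
]

for p in projects:
    label = "Yes" if p["adequate_distribution"] else "No"
    print(f'{p["id"]:>2} | {p["orientation"]:<17} | {p["gcps"]} | {label}')
```

Keeping the factors as separate tuples makes it easy to filter the projects by one factor, for example all oblique-only projects, when comparing results.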

Errors TLS vs. GNSS

| Profile | Mean (cm) | Standard Deviation (cm) |
|---|---|---|
| P-1 | −0.180 | 2.888 |
| P-2 | −0.122 | 2.602 |
| P-3 | −0.244 | 2.820 |
| P-4 | 0.003 | 3.487 |
| P-5 | 0.166 | 2.617 |
| P-6 | −0.139 | 1.877 |
| P-7 | 0.302 | 4.463 |
| Mean of profiles | −0.030 | 2.965 |
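The "Mean of profiles" row appears to be the arithmetic mean of the seven per-profile values. The sketch below assumes that reading (the variable names are illustrative) and recomputes both columns from the rounded figures in the table.

```python
from statistics import mean

# Per-profile TLS vs. GNSS errors in cm, copied from the table above:
# profile -> (mean error, standard deviation)
PROFILES = {
    "P-1": (-0.180, 2.888),
    "P-2": (-0.122, 2.602),
    "P-3": (-0.244, 2.820),
    "P-4": (0.003, 3.487),
    "P-5": (0.166, 2.617),
    "P-6": (-0.139, 1.877),
    "P-7": (0.302, 4.463),
}

# Arithmetic mean of each column (assumed definition of the summary row).
mean_of_means = mean(m for m, _ in PROFILES.values())
mean_of_sds = mean(sd for _, sd in PROFILES.values())

print(f"Mean of profile means: {mean_of_means:.4f} cm")
print(f"Mean of profile standard deviations: {mean_of_sds:.4f} cm")
```

Because the inputs are already rounded to three decimals, the recomputed mean error can differ from the tabulated value in the last digit; both results fall within 0.001 cm of the summary row.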

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Martínez-Carricondo, P.; Agüera-Vega, F.; Carvajal-Ramírez, F. Use of UAV-Photogrammetry for Quasi-Vertical Wall Surveying. Remote Sens. 2020, 12, 2221. https://doi.org/10.3390/rs12142221
