Rapid Flood Mapping and Evaluation with a Supervised Classifier and Change Detection in Shouguang Using Sentinel-1 SAR and Sentinel-2 Optical Data

Abstract

1. Introduction

2. Study Area and Data

2.1. Study Area

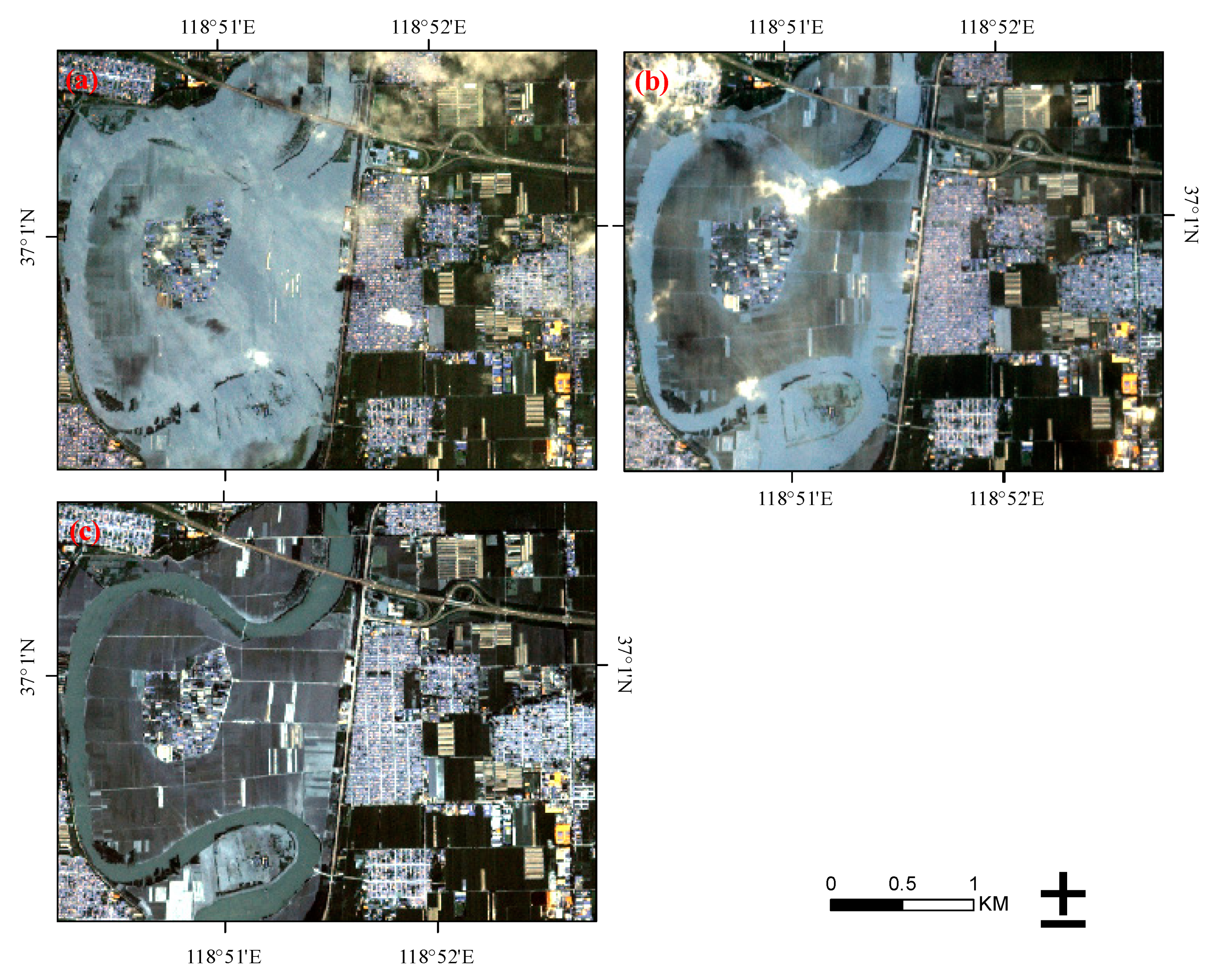

2.2. Data

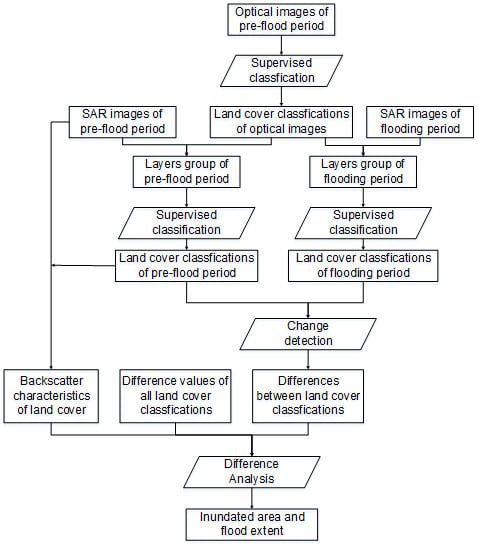

3. Methodology

3.1. Method of Flood Mapping

- (1) Determine the land cover categories in the study area;

- (2) Download high-quality optical images and GRD images of the pre-flood and flooding periods, acquired as close as possible to the flood event. The dates of these two images may differ, but the imagery must capture the flood;

- (3) Classify the land cover from the optical images with a supervised classification method; the result is called the normal optical classification;

- (4) Combine the pre-flood GRD images with the normal optical classification into a layer group, and derive the pre-flood backscatter classification from this layer group with a supervised method;

- (5) Combine the flooding-period GRD images with the normal optical classification into a layer group, and derive the flooding-period backscatter classification from this layer group with a supervised method;

- (6) Compute the average backscatter coefficient of each category from the pre-flood backscatter classification and arrange the categories in ascending order of this average. This ordering is the first piece of prior knowledge for flood mapping and evaluation;

- (7) Number the categories with successive powers of two (2^n) in that order, and relabel both backscatter classification results with these numbers;

- (8) Take the backscatter variation rules of the different classes as the second piece of prior knowledge;

- (9) Detect changes between the two backscatter classification results with a pixel-based method;

- (10) Determine the flood extent from the prior knowledge in steps (6) and (8).
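
Steps (6), (7), and (9) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the simplified category names, and the toy 2 x 3 scene are all hypothetical, and the D-value is taken as the flooding-period number minus the pre-flood number, the sign convention that matches the D-value table in this article.

```python
from collections import defaultdict

def class_means(class_map, backscatter):
    """Step (6): average backscatter per category, from the pre-flood maps."""
    sums, counts = defaultdict(float), defaultdict(int)
    for class_row, sigma_row in zip(class_map, backscatter):
        for name, sigma in zip(class_row, sigma_row):
            sums[name] += sigma
            counts[name] += 1
    return {name: sums[name] / counts[name] for name in sums}

def power_of_two_codes(means):
    """Step (7): number the categories 1, 2, 4, ... in ascending order of mean."""
    ordered = sorted(means, key=means.get)
    return {name: 2 ** n for n, name in enumerate(ordered)}

def difference_map(pre_map, flood_map, codes):
    """Step (9): per-pixel D-value = flooding-period code minus pre-flood code."""
    return [
        [codes[after] - codes[before] for before, after in zip(pre_row, flood_row)]
        for pre_row, flood_row in zip(pre_map, flood_map)
    ]

# Toy scene (hypothetical values, not taken from the study data).
pre_map = [["water", "farmland", "road"],
           ["farmland", "building", "building"]]
pre_sigma = [[2.0, 3.4, 3.9],
             [3.6, 5.0, 5.2]]
flood_map = [["water", "water", "road"],
             ["farmland", "water", "building"]]

codes = power_of_two_codes(class_means(pre_map, pre_sigma))
d_values = difference_map(pre_map, flood_map, codes)
print(codes)     # {'water': 1, 'farmland': 2, 'road': 4, 'building': 8}
print(d_values)  # [[0, -1, 0], [0, -7, 0]]
```

Unchanged pixels get a D-value of 0, while each changed pixel gets a value that identifies which category it came from and which category it became.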

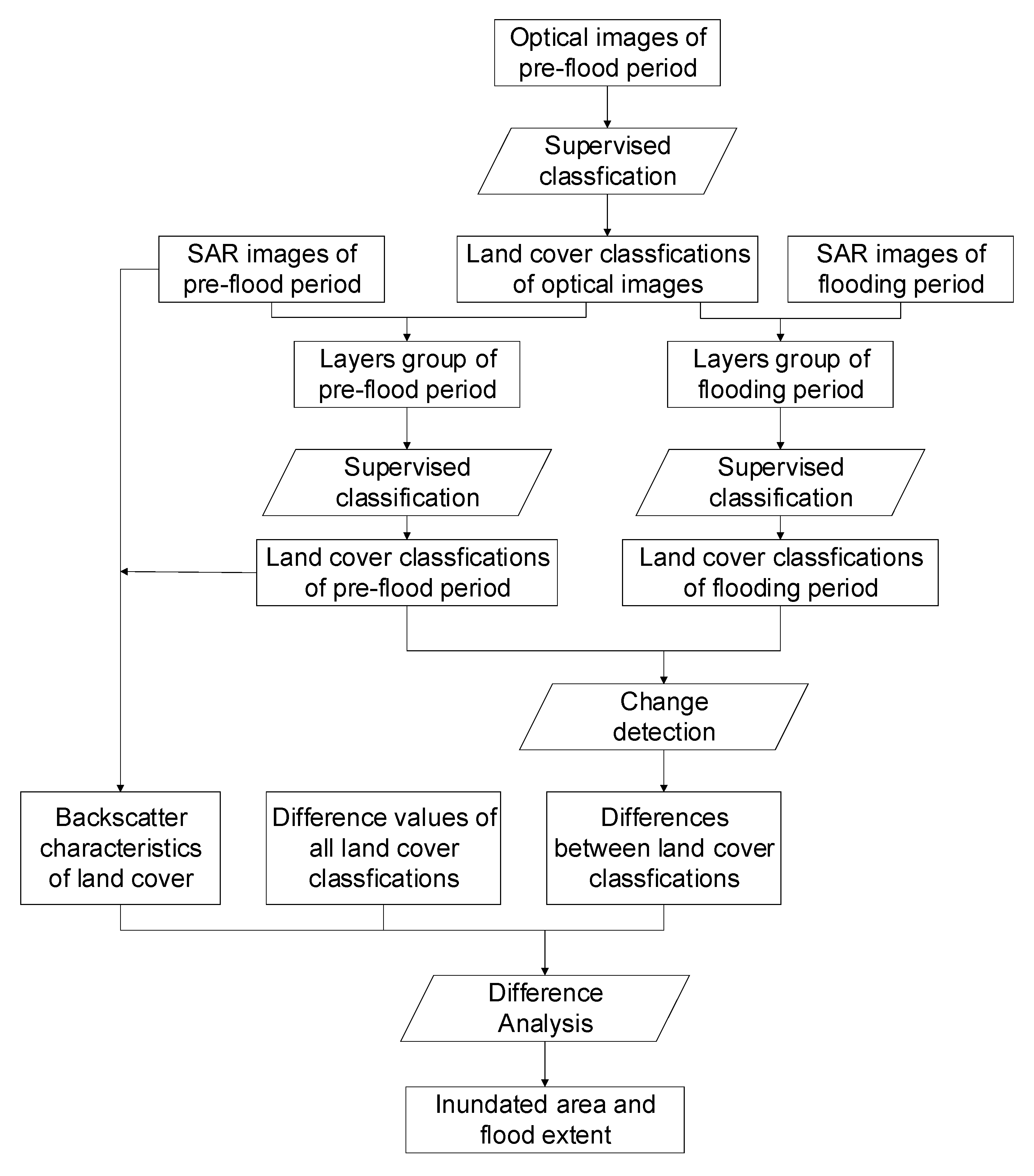

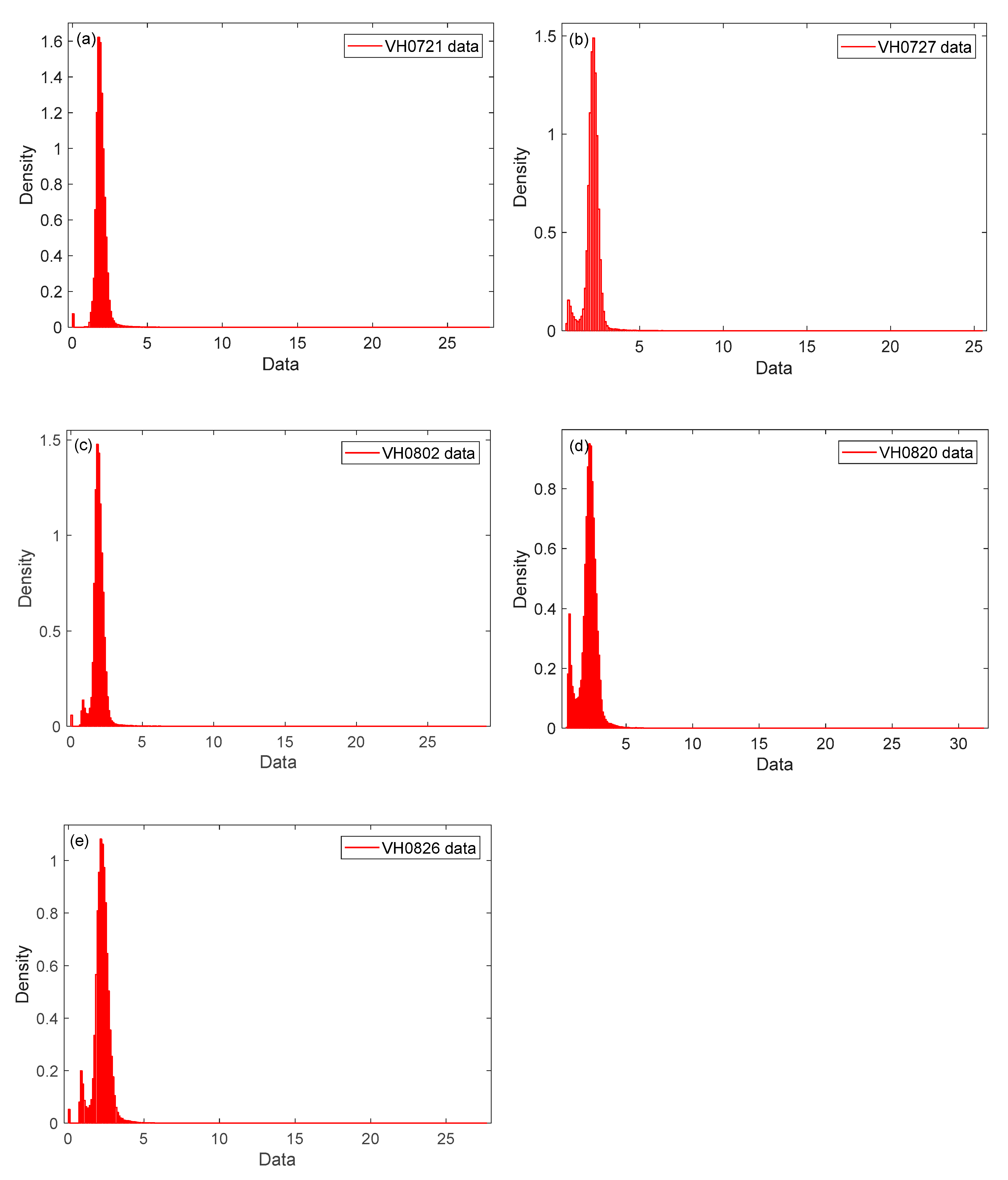

3.1.1. SAR Image Processing

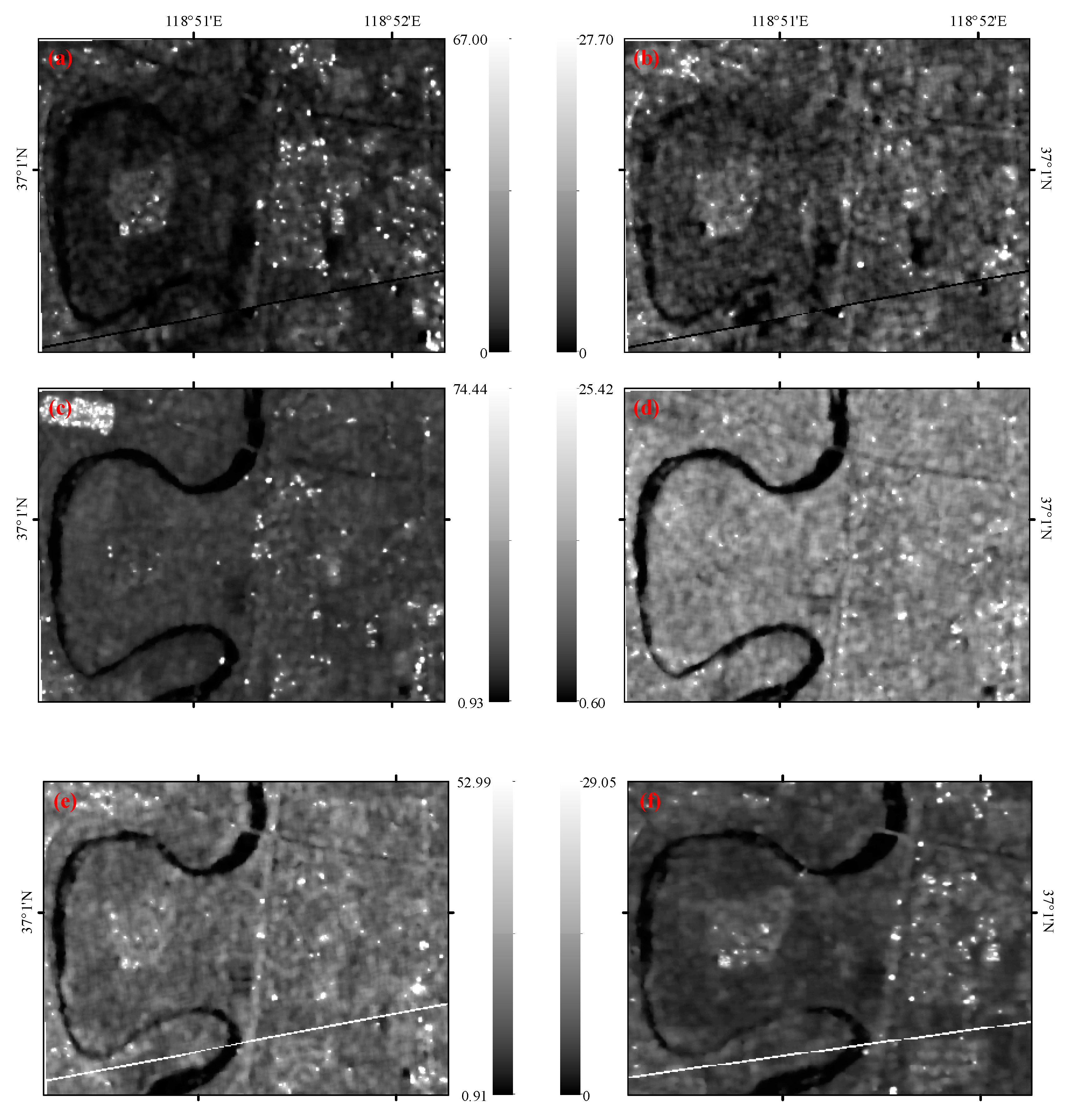

3.1.2. Supervised Classification

3.1.3. Backscatter Characteristics and Variation Rules of Ground Objects

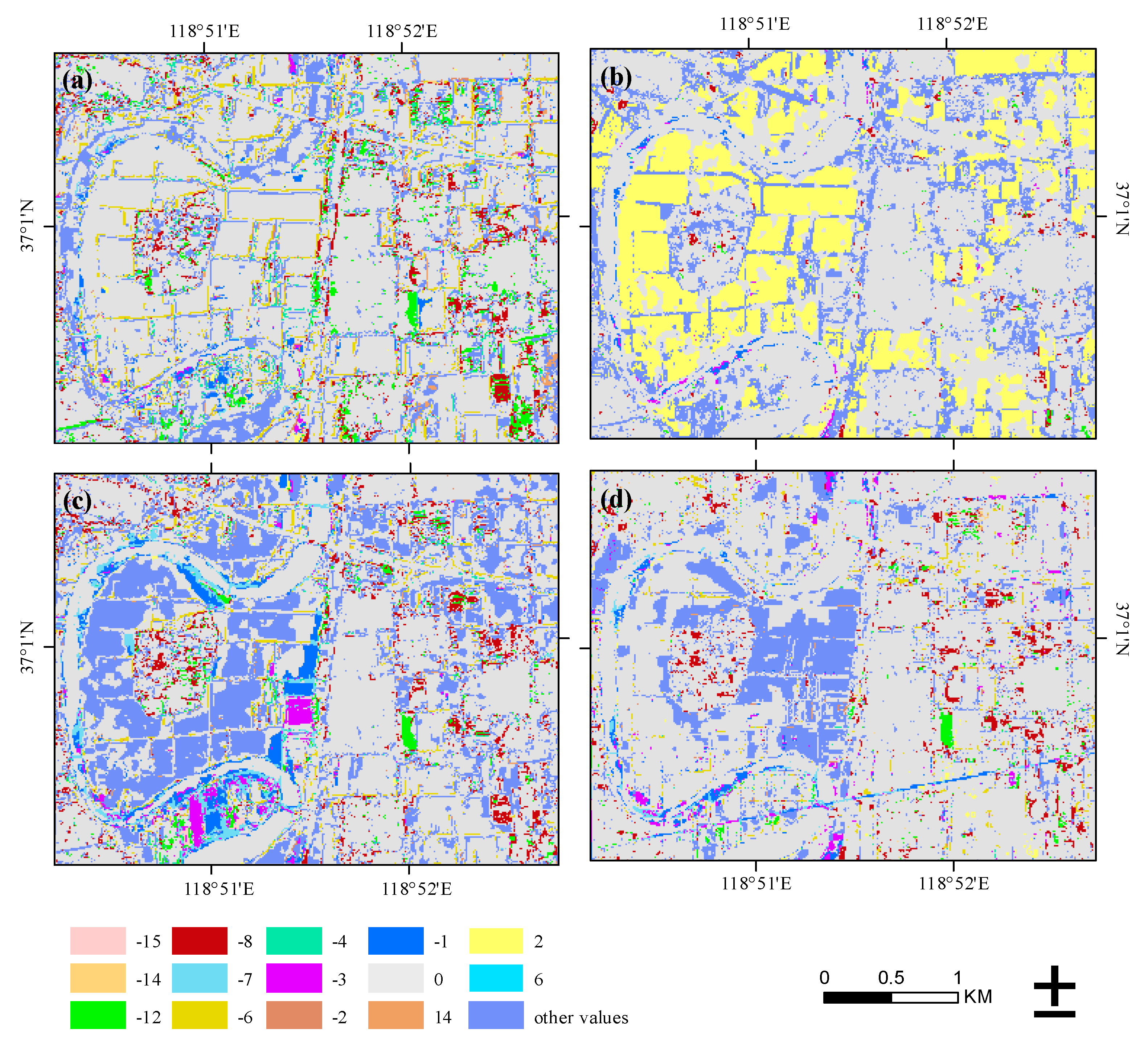

3.1.4. Change Detection and Flood Estimation Rules

3.2. Flood Extraction with Otsu Thresholding and NDWI

4. Results and Discussion

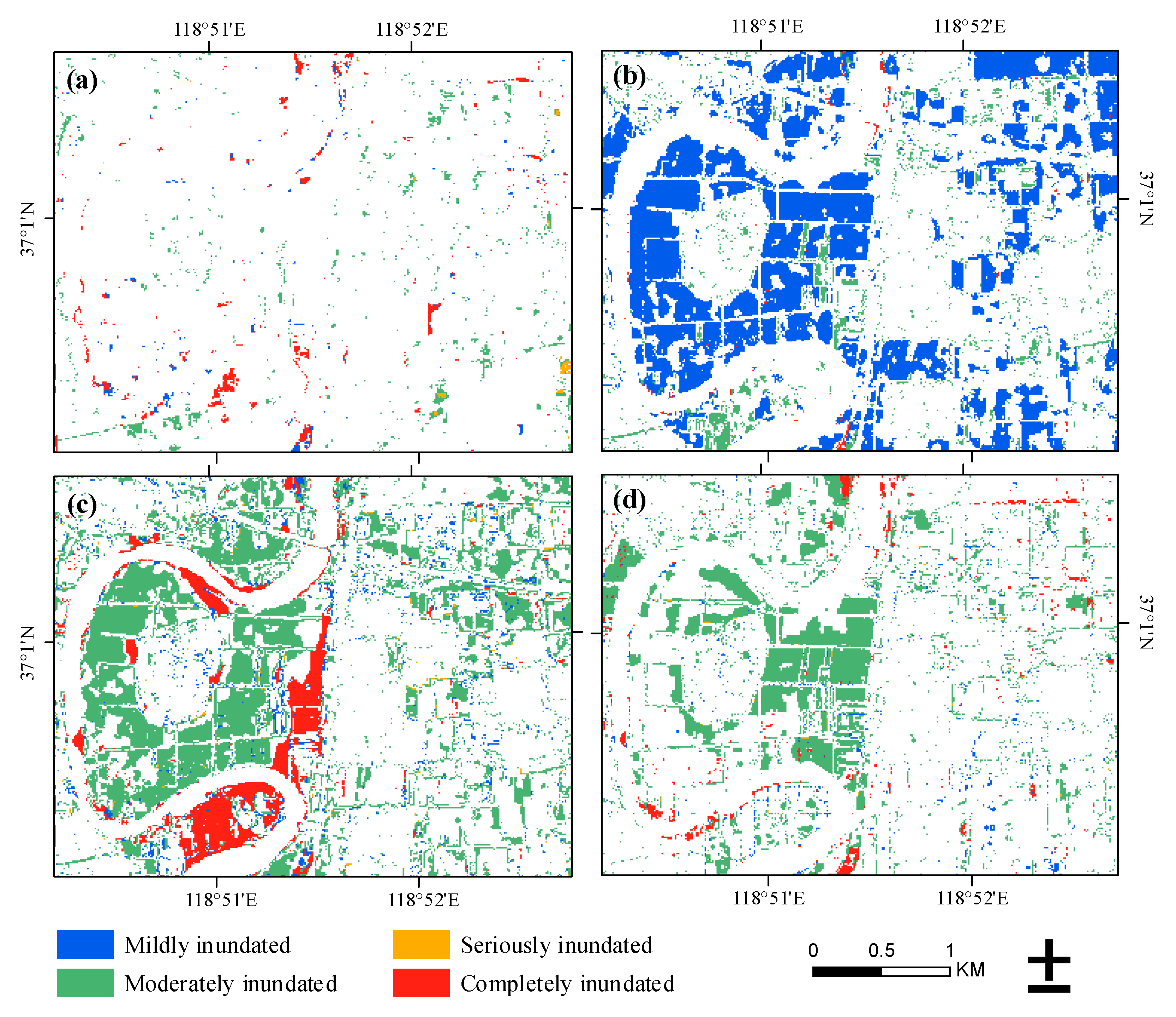

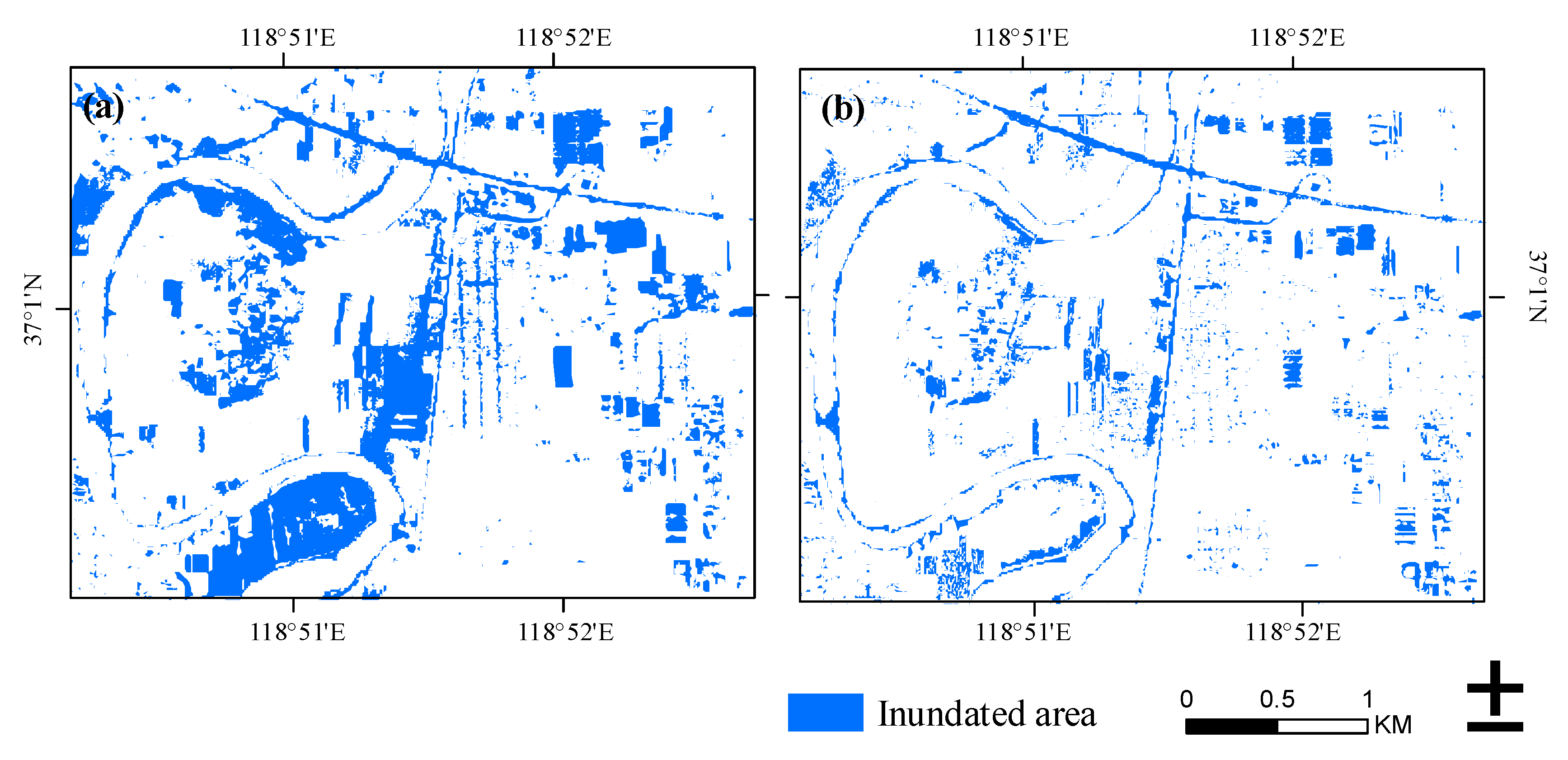

4.1. Flood Extraction and Evaluation

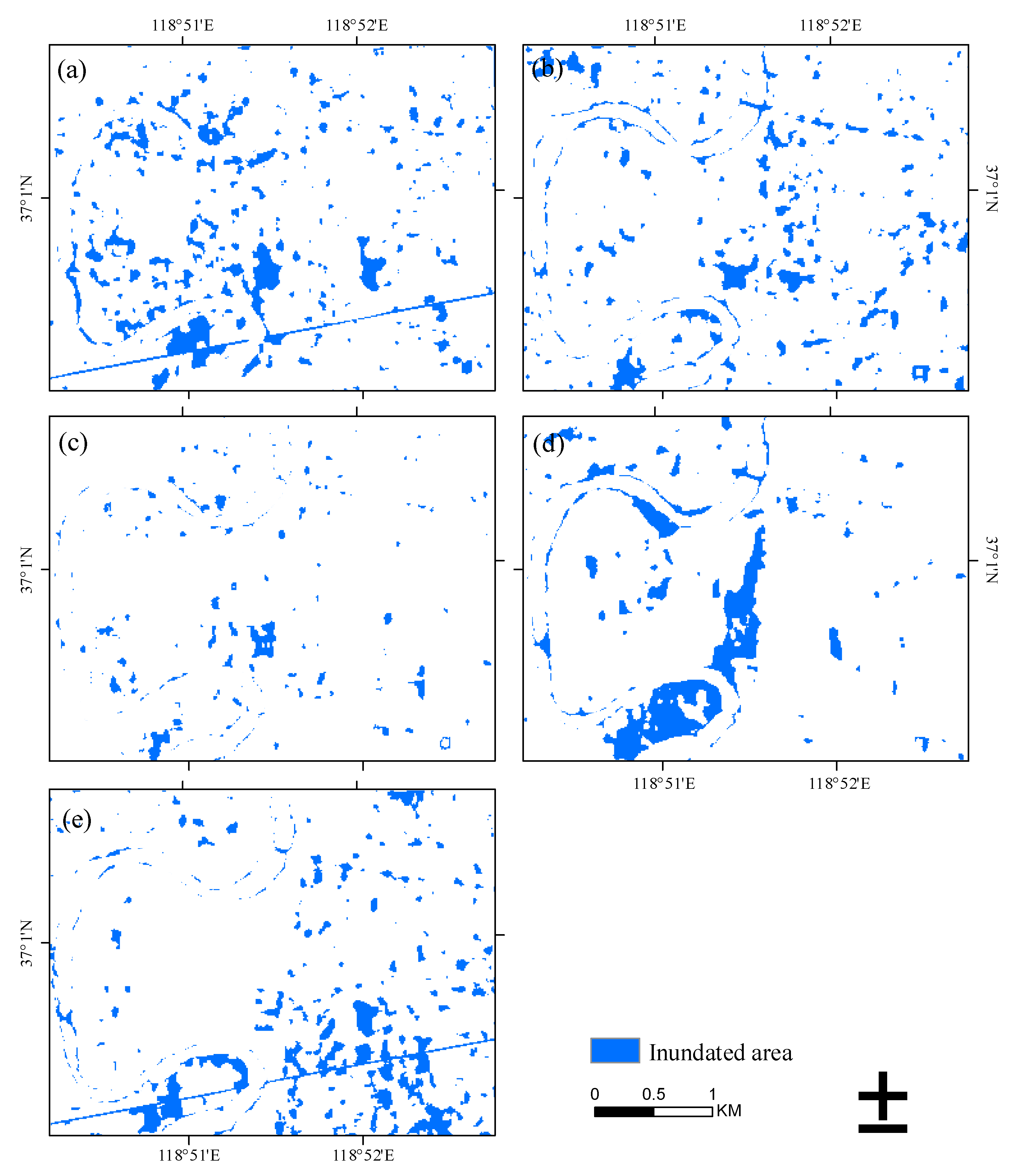

4.2. Flood Extraction with Otsu Thresholding

4.3. Flood Extraction with NDWI

4.4. Discussion

5. Conclusions

- (1) The overall accuracy of the RFC results based on Sentinel-1 and Sentinel-2 images reaches 80.95%, and the approach avoids the inaccuracy caused by a single threshold. Furthermore, optical images from Planet are used to validate the results.

- (2) The final accuracies of rapid flood extent estimation using Sentinel-1 and Sentinel-2 images on 20 and 26 August 2018 are 85.22% and 95.45%, respectively. Because the required data and the data processing are simple, the approach can be readily applied to rapid flood mapping in early disaster relief.

- (3) Our approach can rapidly evaluate both the flood area and the flood degree, whereas the other two methods (Otsu thresholding and NDWI) extract only the flooded regions. The completely inundated areas obtained with the three methods were almost the same.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Product | Time | Polarization |
|---|---|---|
| S1A_IW_GRDH_1SDV_20180721T100424_20180721T100449_022891_027B8F_9CD7 | 2018/07/21, 10:04:24 | VV + VH |
| S1A_IW_GRDH_1SDV_20180721T100449_20180721T100514_022891_027B8F_F983 | 2018/07/21, 10:04:49 | VV + VH |
| S1B_IW_GRDH_1SDV_20180727T220424_20180727T220449_012002_01618E_3530 | 2018/07/27, 22:04:25 | VV + VH |
| S1A_IW_GRDH_1SDV_20180802T100449_20180802T100514_023066_028115_88D7 | 2018/08/02, 10:04:49 | VV + VH |
| S1A_IW_GRDH_1SDV_20180802T100424_20180802T100449_023066_028115_1A62 | 2018/08/02, 10:04:24 | VV + VH |
| S1B_IW_SLC__1SDV_20180820T220420_20180820T220448_012352_016C5A_AA0A | 2018/08/20, 22:04:20 | VV + VH |
| S1A_IW_SLC__1SDV_20180826T100425_20180826T100452_023416_028C52_1376 | 2018/08/26, 10:04:25 | VV + VH |

| Product | Time | Spatial Resolution |
|---|---|---|
| S2B_MSIL1C_20180810T024539_N0206_R132_T50SPF_20180810T053506 | 2018/08/10, 02:45:39 | 10 m |
| S2B_MSIL1C_20180810T024539_N0206_R132_T50SPG_20180810T053506 | 2018/08/10, 02:45:39 | 10 m |

| Product | Time | Spatial Resolution | Bands |
|---|---|---|---|
| 20180820_022056_0f12 | 2018/08/20, 02:20:56 | 3 m | Red, green, blue, NIR |
| 20180821_021924_1003 | 2018/08/21, 02:19:24 | 3 m | Red, green, blue, NIR |
| 20180827_022047_1006 | 2018/08/27, 02:20:47 | 3 m | Red, green, blue, NIR |

| Category | Number | VV: Average S_Gamma | VV: Standard Deviation | VH: Average S_Gamma | VH: Standard Deviation |
|---|---|---|---|---|---|
| Water bodies | 1 | 1.982416 | 0.863452 | 1.143954 | 0.35071 |
| Farmland without plastic sheds | 2 | 3.46729 | 0.683308 | 1.876859 | 0.217285 |
| Farmland with plastic sheds | 4 | 3.768043 | 0.740086 | 1.909334 | 0.319949 |
| Roads | 8 | 3.863828 | 0.663861 | 2.014632 | 0.295261 |
| Constructions | 16 | 5.151355 | 2.348478 | 2.20923 | 0.623642 |
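
One way to read the power-of-two numbering in the table above is that it makes every class transition produce a distinct difference. The sketch below checks this for the five listed category numbers and contrasts it with a sequential 1 to 5 numbering. It is an illustrative check only; the function name `transitions_by_difference` is hypothetical, and the difference is assumed to be the flooding-period number minus the pre-flood number.

```python
from itertools import permutations

# Category numbers as listed in the table above.
numbers = {
    "Water bodies": 1,
    "Farmland without plastic sheds": 2,
    "Farmland with plastic sheds": 4,
    "Roads": 8,
    "Constructions": 16,
}

def transitions_by_difference(numbers):
    """Map each difference to the (pre-flood, flooding) category pairs producing it."""
    table = {}
    for before, after in permutations(numbers, 2):
        d_value = numbers[after] - numbers[before]
        table.setdefault(d_value, []).append((before, after))
    return table

# Powers of two: each of the 20 ordered transitions has its own difference.
table = transitions_by_difference(numbers)
unique = all(len(pairs) == 1 for pairs in table.values())

# Sequential numbering 1..5 (for contrast): many transitions share a difference.
sequential = dict(zip(numbers, range(1, 6)))
ambiguous = transitions_by_difference(sequential)

print(unique, len(table))  # True 20
print(len(ambiguous))      # 8
```

With sequential numbers, a difference of 1 could mean any of four different transitions, so the difference alone could not identify which categories were involved.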

| Flood Degree | D-value | Category Number of Pre-Flood Period | Category Number of Flooding Period |
|---|---|---|---|
| Completely inundated | −15 | Constructions | Water bodies |
| Completely inundated | −7 | Roads | Water bodies |
| Completely inundated | −3 | Farmland with plastic sheds | Water bodies |
| Completely inundated | −1 | Farmland without plastic sheds | Water bodies |
| Seriously inundated | 14 | Farmland without plastic sheds | Constructions |
| Moderately inundated | 12 | Farmland with plastic sheds | Constructions |
| Moderately inundated | −6 | Roads | Farmland without plastic sheds |
| Moderately inundated | 6 | Farmland without plastic sheds | Roads |
| Mildly inundated | −4 | Roads | Farmland with plastic sheds |
| Mildly inundated | −2 | Farmland with plastic sheds | Farmland without plastic sheds |
| Mildly inundated | 2 | Farmland without plastic sheds | Farmland with plastic sheds |
| No flood | Other value | - | - |
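
The rules in the table above amount to a lookup from D-value to flood degree. A minimal sketch follows, assuming the D-values are already available as a 2-D grid of integers; the names `FLOOD_DEGREE`, `flood_degree`, and `summarize`, and the toy grid, are hypothetical and used only for illustration.

```python
from collections import Counter

# D-value -> flood degree, transcribed from the table above.
FLOOD_DEGREE = {
    -15: "Completely inundated",
    -7: "Completely inundated",
    -3: "Completely inundated",
    -1: "Completely inundated",
    14: "Seriously inundated",
    12: "Moderately inundated",
    -6: "Moderately inundated",
    6: "Moderately inundated",
    -4: "Mildly inundated",
    -2: "Mildly inundated",
    2: "Mildly inundated",
}

def flood_degree(d_value):
    """Return the flood degree for one D-value; any other value means no flood."""
    return FLOOD_DEGREE.get(d_value, "No flood")

def summarize(d_values):
    """Count pixels per flood degree in a 2-D grid of D-values."""
    return dict(Counter(flood_degree(d) for row in d_values for d in row))

# Toy grid of D-values (hypothetical, not from the study data).
grid = [[0, -1, 14],
        [-7, 2, 0]]
summary = summarize(grid)
```

Multiplying each count by the pixel area would give the area per flood degree, which is the kind of evaluation the approach is meant to support.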

| Category | User Accuracy | Producer Accuracy |
|---|---|---|
| Water bodies | 91.67% | 84.62% |
| Farmland without plastic sheds | 97.91% | 88.46% |
| Farmland with plastic sheds | 90.9% | 75% |
| Roads | 65.22% | 64.71% |
| Constructions | 78.13% | 78.13% |
| Overall accuracy | 80.95% | |
| Kappa index | 74.1% | |
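
For readers unfamiliar with these measures, the sketch below shows how user accuracy, producer accuracy, overall accuracy, and the kappa index are conventionally derived from a confusion matrix. The confusion matrix behind the table above is not given here, so the 2 x 2 matrix in the example is hypothetical, as is the function name `accuracy_metrics`; rows are assumed to hold the classified labels and columns the reference labels.

```python
def accuracy_metrics(matrix):
    """matrix[i][j] = samples classified as class i whose reference class is j."""
    size = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(size)) for j in range(size)]
    diagonal = [matrix[i][i] for i in range(size)]

    overall = sum(diagonal) / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return {
        "user": [d / r for d, r in zip(diagonal, row_totals)],
        "producer": [d / c for d, c in zip(diagonal, col_totals)],
        "overall": overall,
        "kappa": (overall - expected) / (1 - expected),
    }

# Hypothetical two-class confusion matrix (not the study's data).
metrics = accuracy_metrics([[45, 5],
                            [10, 40]])
```

For this toy matrix the overall accuracy is 0.85 and kappa is about 0.7: the chance agreement computed from the marginals is 0.5, and kappa rescales the observed agreement against it.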

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, M.; Jin, S. Rapid Flood Mapping and Evaluation with a Supervised Classifier and Change Detection in Shouguang Using Sentinel-1 SAR and Sentinel-2 Optical Data. Remote Sens. 2020, 12, 2073. https://doi.org/10.3390/rs12132073
