Geostationary Ocean Color Imager (GOCI) Marine Fog Detection in Combination with Himawari-8 Based on the Decision Tree

Abstract

1. Introduction

2. Data

2.1. COMS/GOCI

2.2. Himawari-8/Advanced Himawari Imager (AHI)

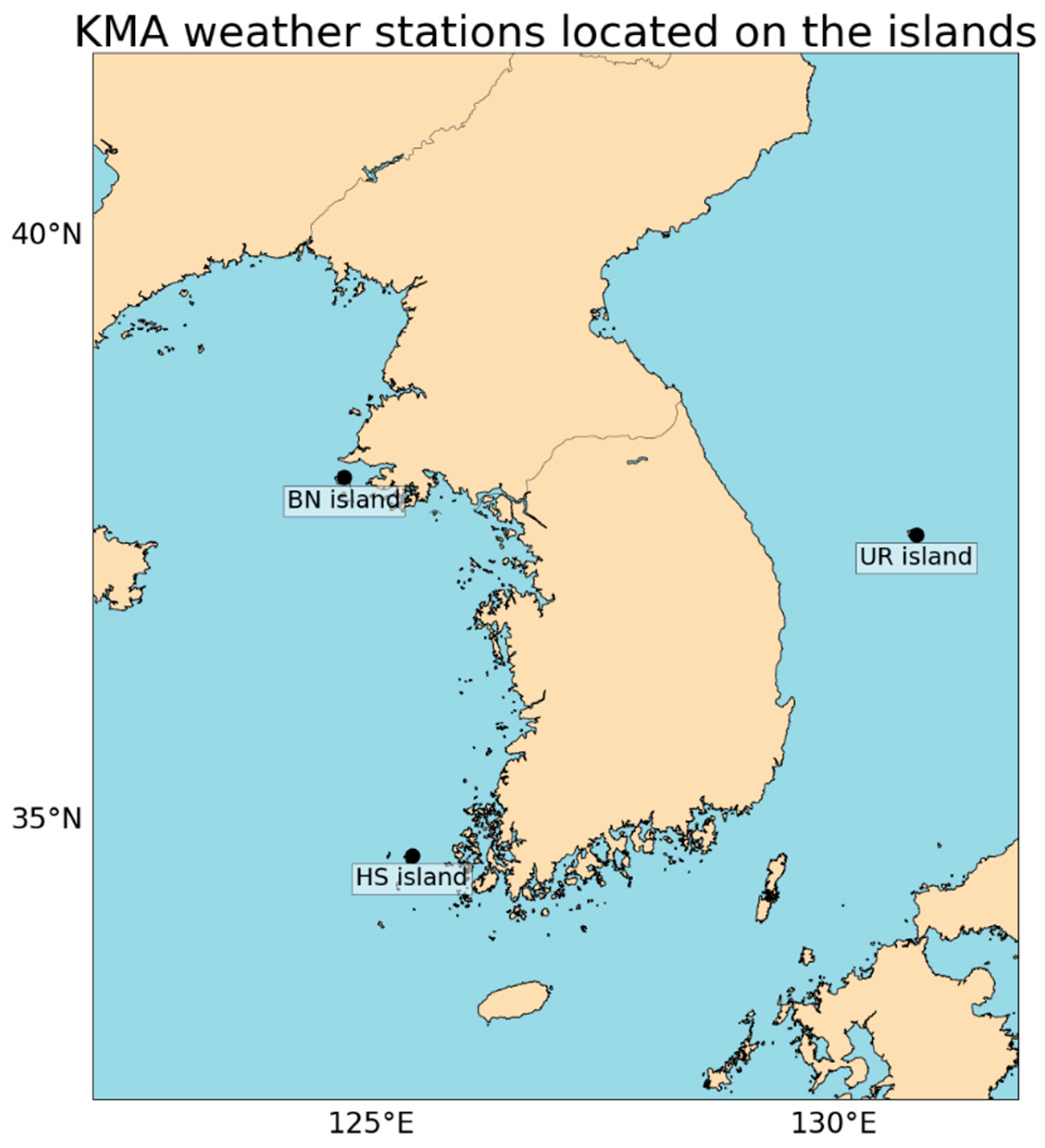

2.3. Automated Synoptic Observing System (ASOS) of the Korea Meteorological Administration (KMA)

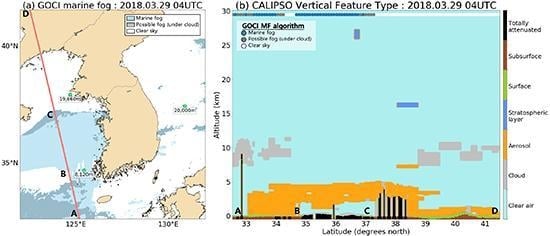

2.4. The Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observation (CALIPSO)

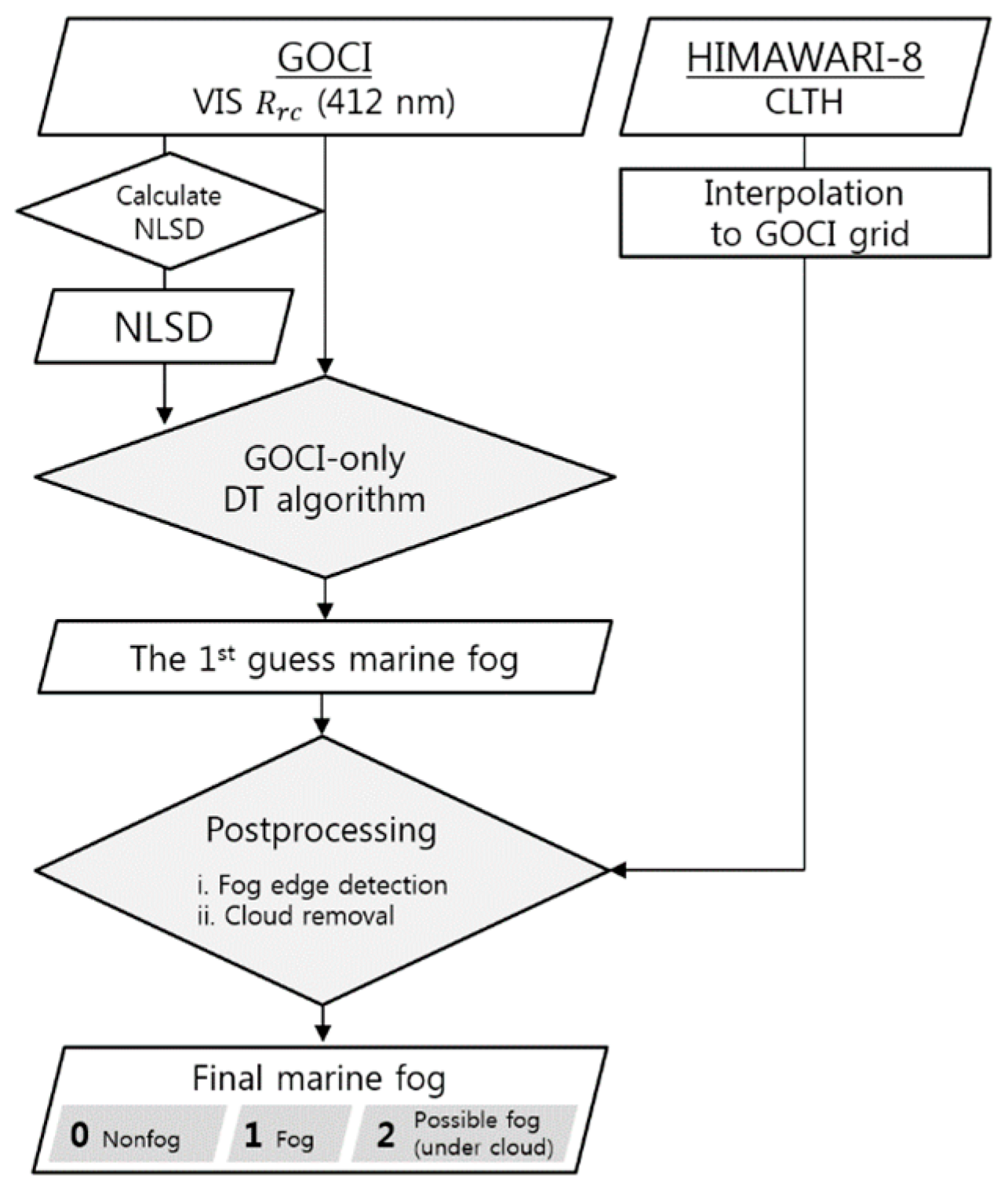

3. Methodology and Model Development Procedure

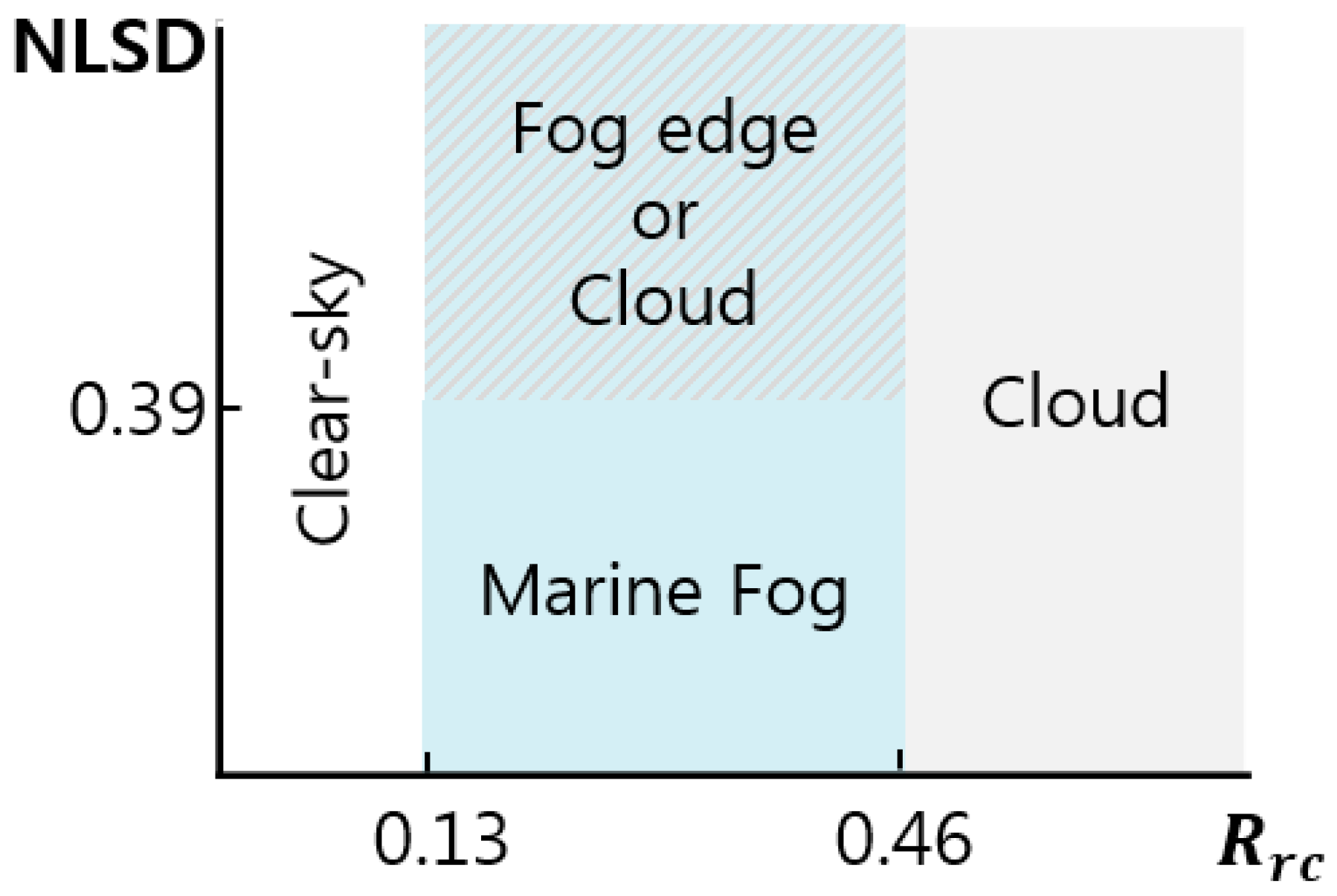

3.1. Decision Tree (DT)

3.2. Satellite-Weather Station Match-Up Data for Training and Validation

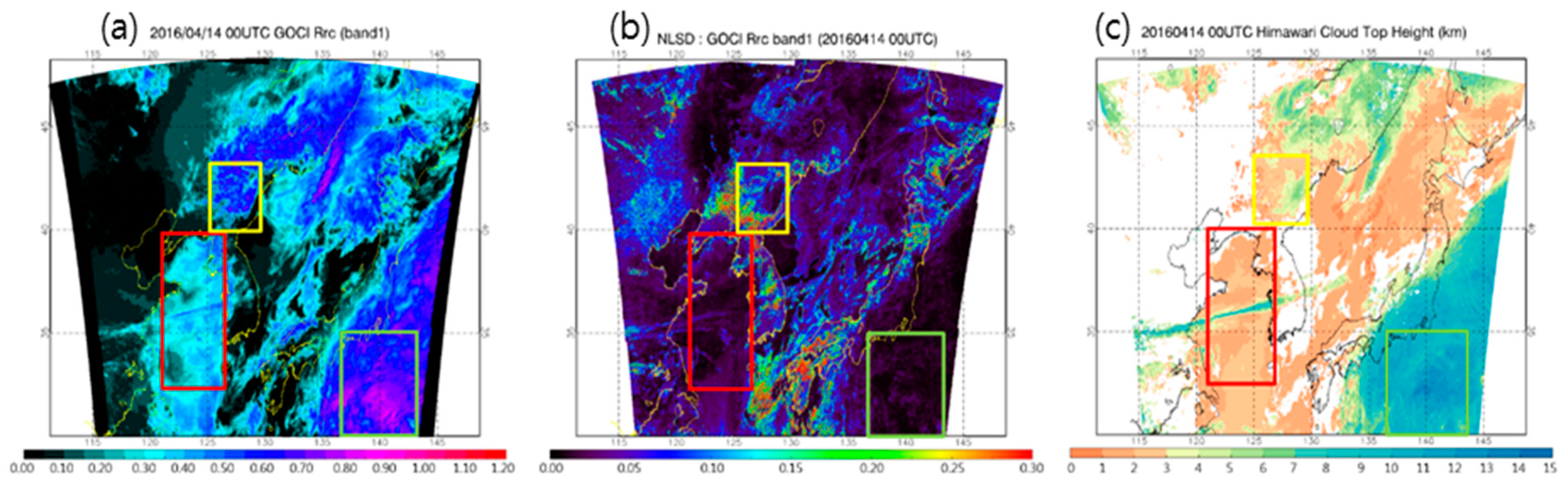

3.3. Satellite Input Variables for the MF Algorithm

4. Results

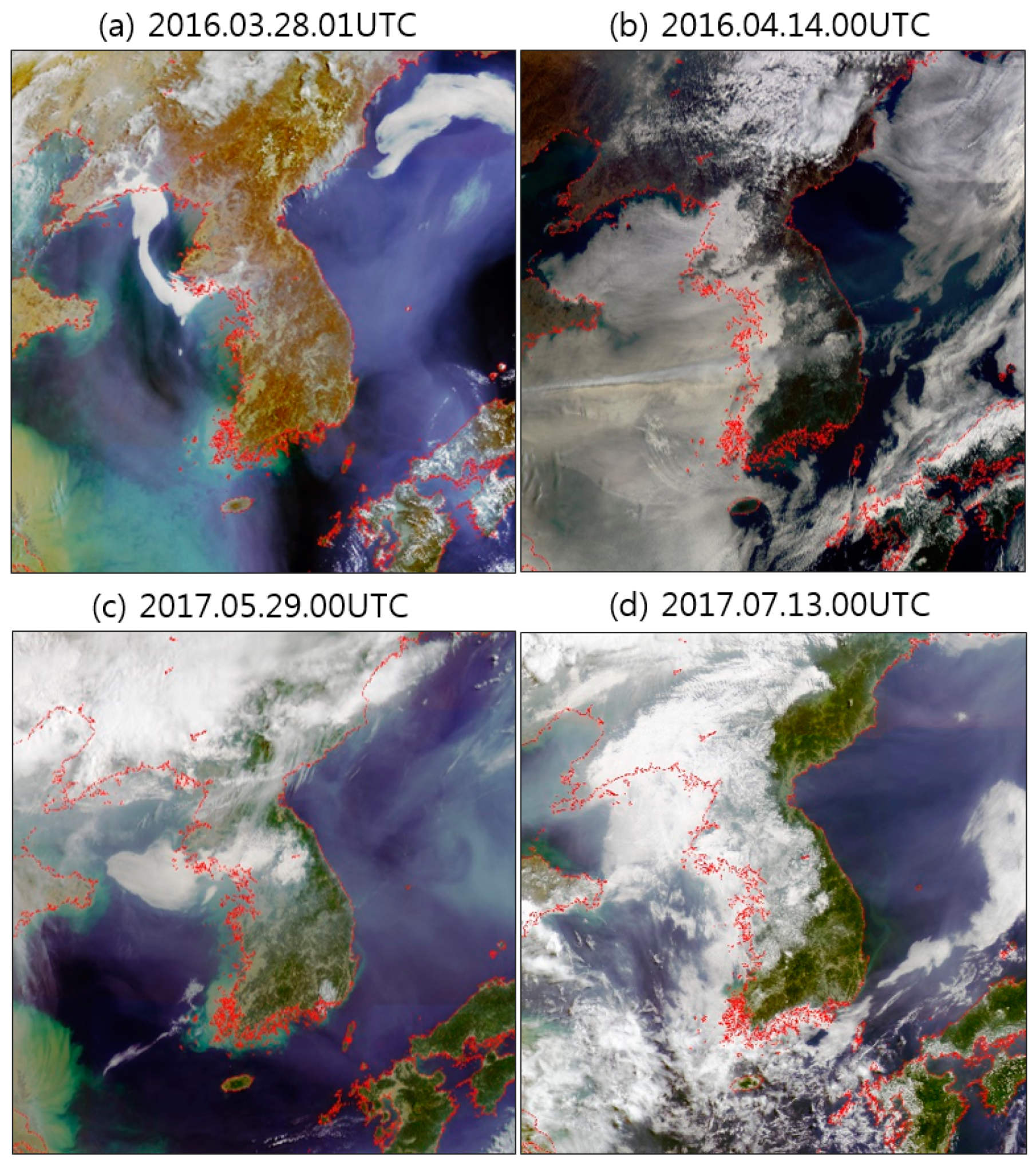

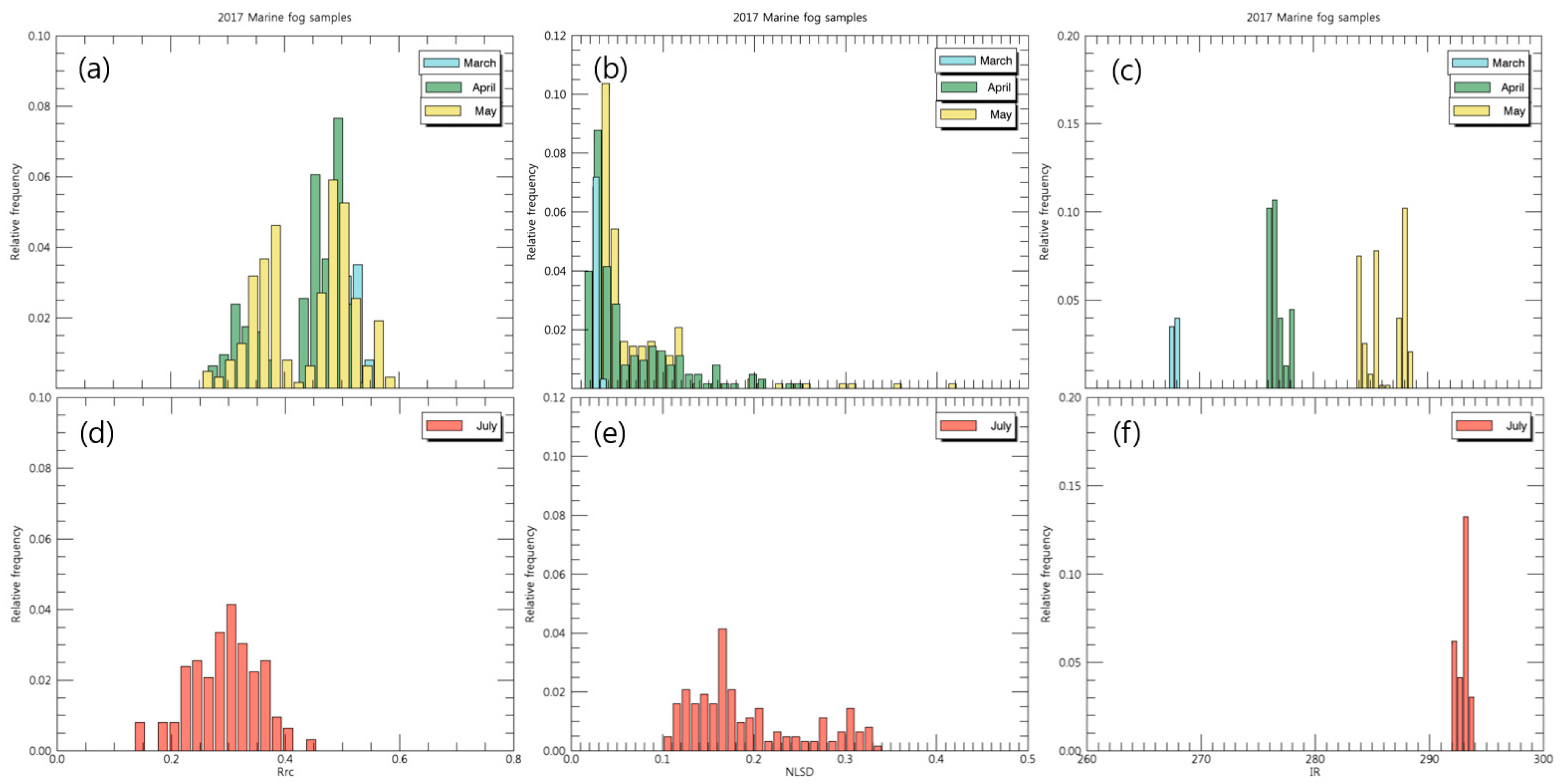

4.1. GOCI MF Detection Algorithm: DT-Trained Results

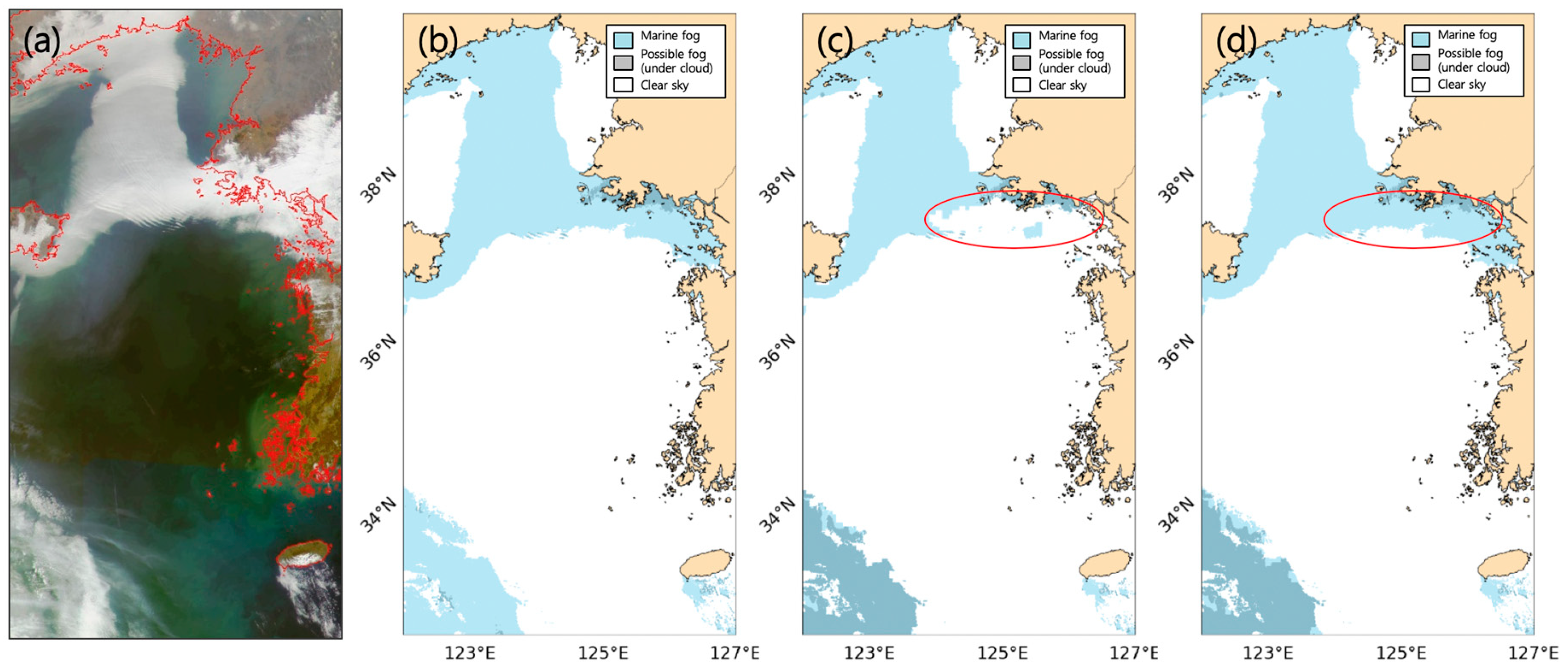

4.2. Postprocessing

5. Validation

5.1. Validation Using the 2017 Samples

5.2. Additional Algorithm Validation

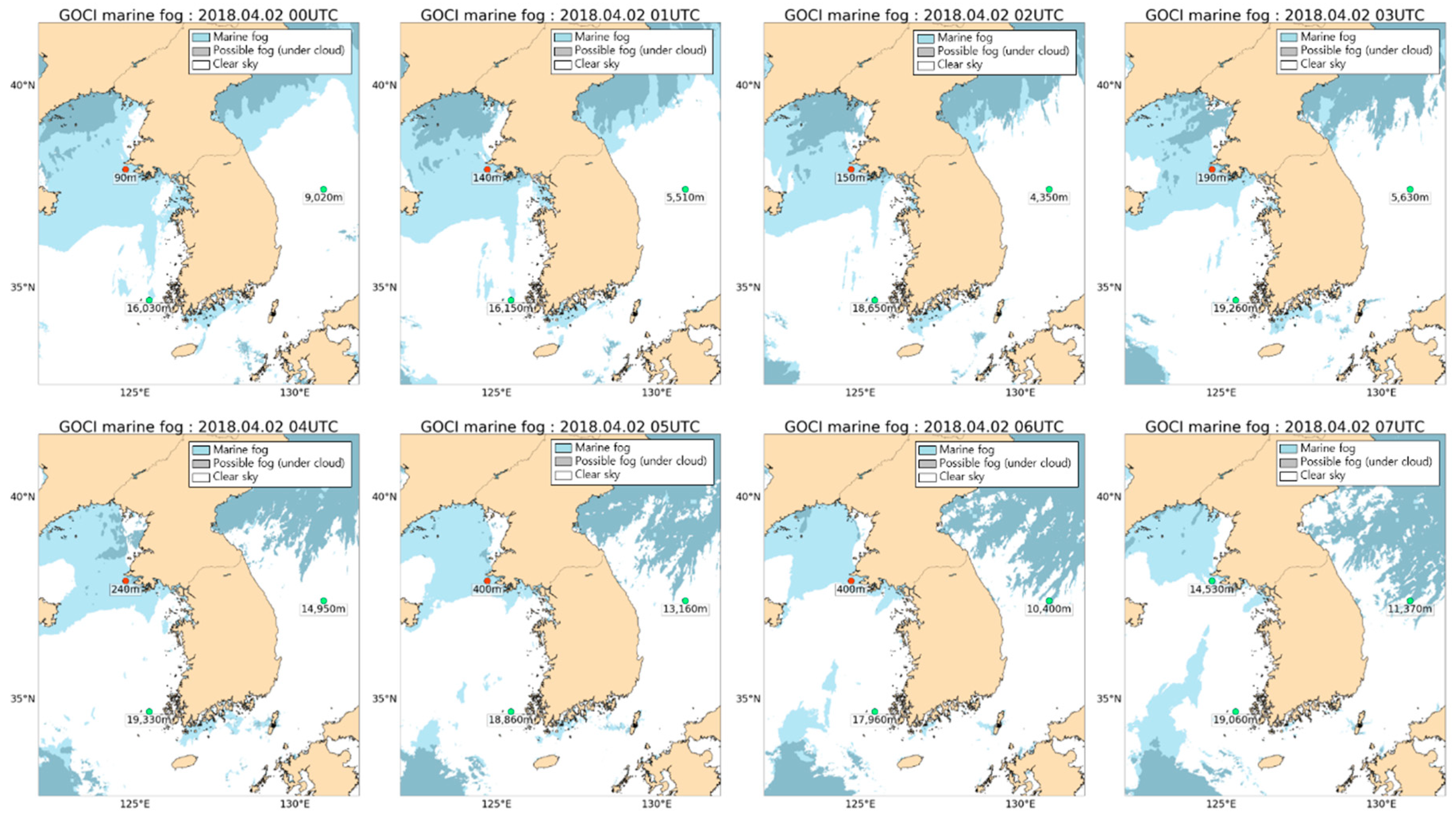

5.2.1. In Situ Visibility Observation

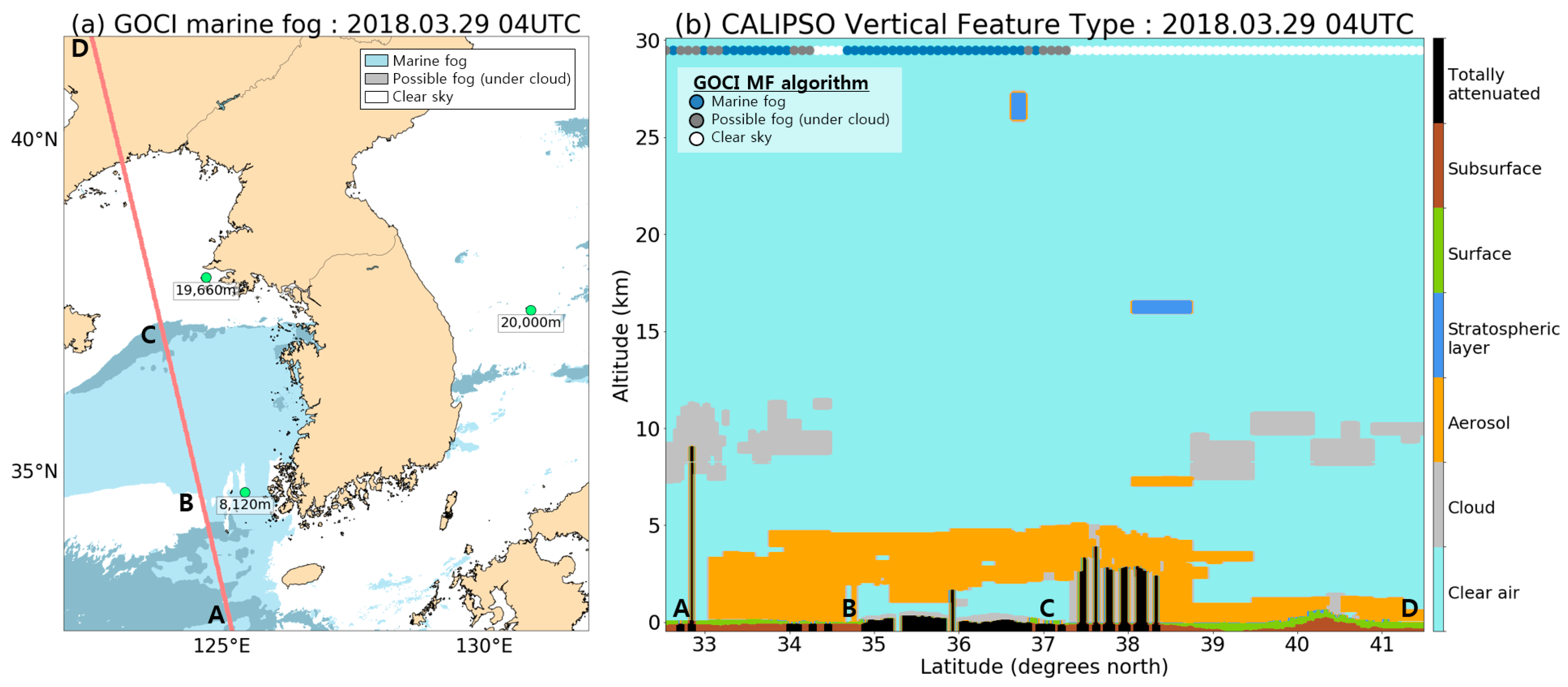

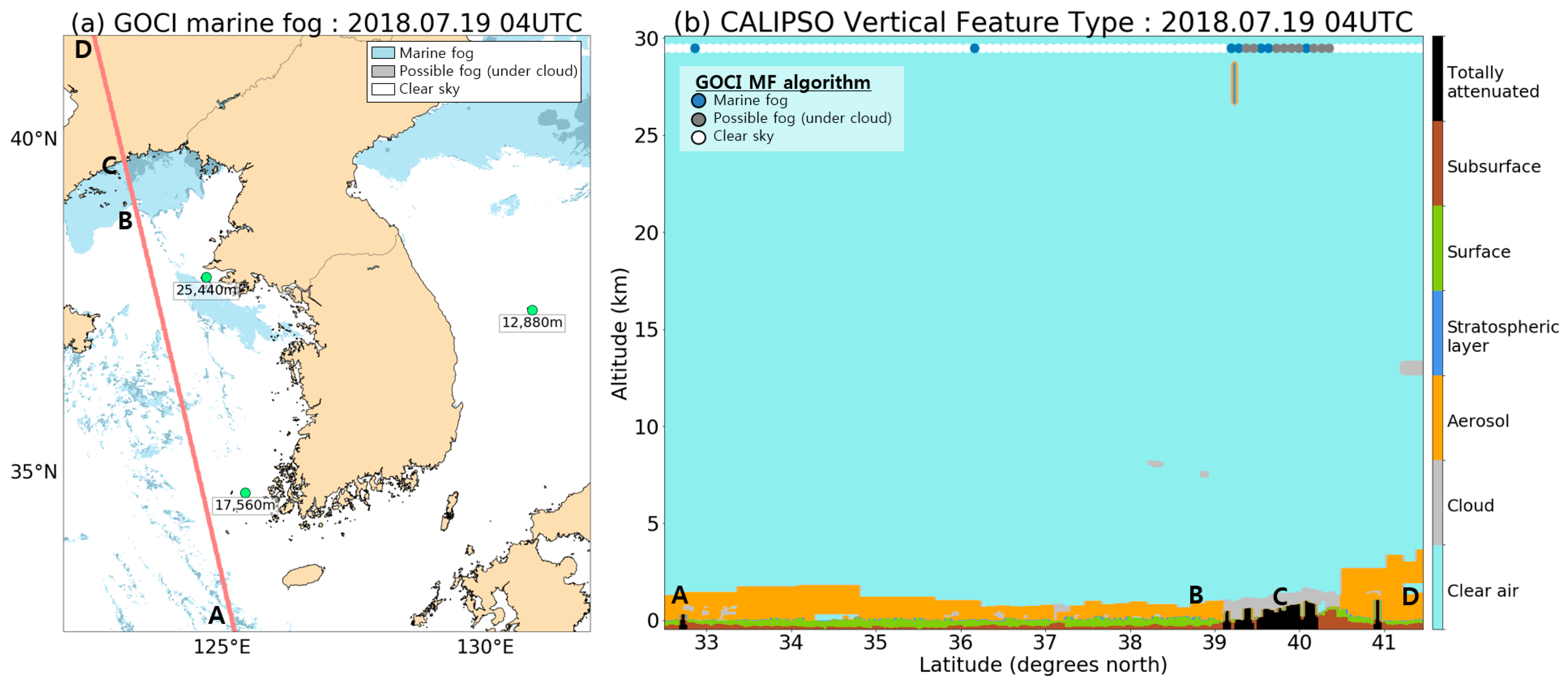

5.2.2. CALIPSO

6. Summary and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Training

| Year | Month | Day | Hour (UTC) | Station number or location |
|---|---|---|---|---|
| 2016 | 3 | 28 | 1 | 102 |
| 2016 | 4 | 8 | 1 | 102/169/124.8°E, 39.28°N |
| 2016 | 4 | 8 | 5 | 124.8°E, 39.28°N |
| 2016 | 4 | 14 | 0 | 102/169 |
| 2016 | 4 | 14 | 1 | 102/169 |
| 2016 | 4 | 14 | 2 | 102/169 |
| 2016 | 4 | 14 | 3 | 169 |
| 2016 | 4 | 14 | 4 | 169 |
| 2016 | 4 | 14 | 7 | 102 |
| 2016 | 4 | 22 | 0 | 102/169 |
| 2016 | 4 | 22 | 1 | 102 |
| 2016 | 4 | 22 | 2 | 102 |
| 2016 | 4 | 22 | 3 | 102 |
| 2016 | 7 | 25 | 1 | 102 |
| 2016 | 7 | 25 | 2 | 102 |
| 2016 | 7 | 25 | 3 | 102 |

Validation

| Year | Month | Day | Hour (UTC) | Station number or location |
|---|---|---|---|---|
| 2017 | 3 | 5 | 5 | 102 |
| 2017 | 4 | 6 | 0 | 102 |
| 2017 | 4 | 6 | 1 | 102 |
| 2017 | 4 | 6 | 7 | 115 |
| 2017 | 4 | 15 | 0 | 102 |
| 2017 | 4 | 25 | 0 | 102 |
| 2017 | 5 | 25 | 0 | 115 |
| 2017 | 5 | 29 | 0 | 102 |
| 2017 | 5 | 29 | 1 | 102 |
| 2017 | 5 | 31 | 6 | 169 |
| 2017 | 5 | 31 | 8 | 169 |
| 2017 | 7 | 11 | 0 | 102 |
| 2017 | 7 | 13 | 0 | 102 |
| 2017 | 7 | 13 | 0 | 169 |
| 2017 | 7 | 14 | 0 | 102 |

| Marine fog classification | Data | Training | Validation |
|---|---|---|---|
| 1 | Marine fog (2016) | 4868 | |
| 1 | Marine fog (2017) | | 1281 |
| 0 | Nonmarine fog (2016) | 7875 | |
| 0 | Nonmarine fog (2017) | | 1592 |
| Total number | | 12,743 | 2873 |

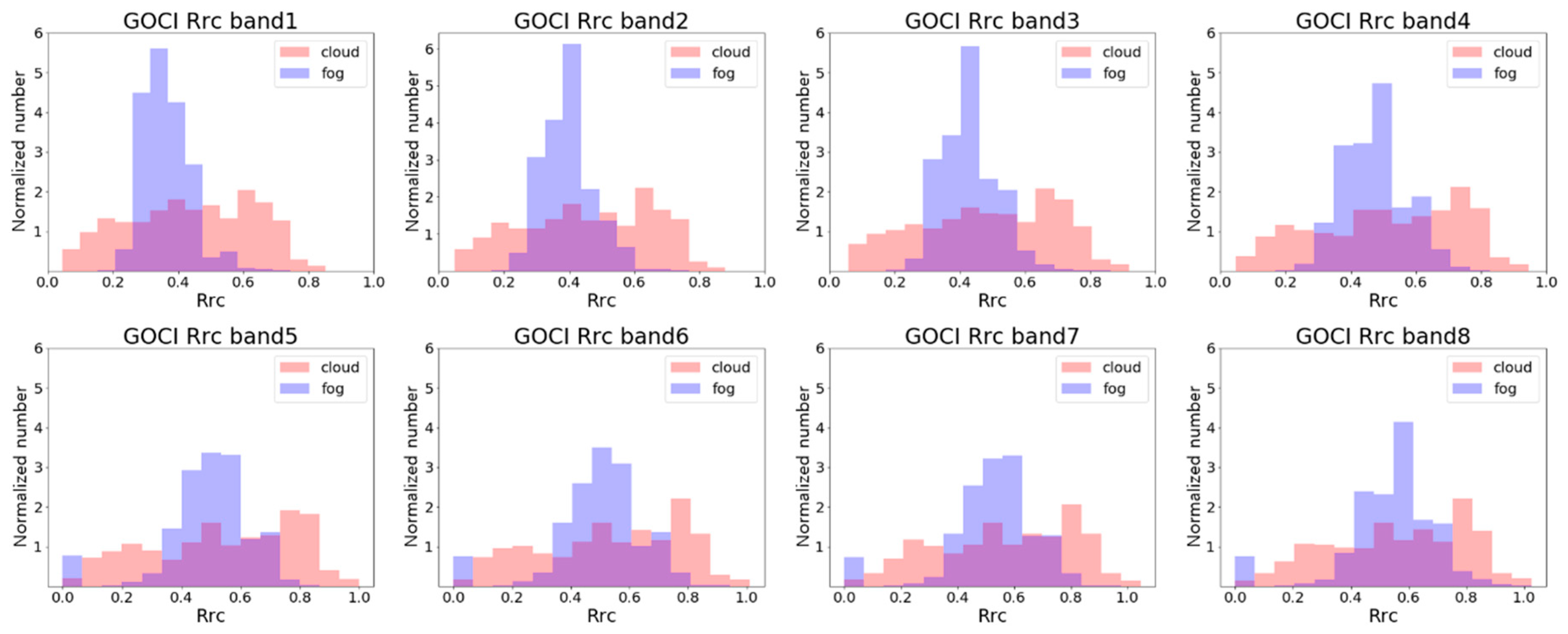

| GOCI band | Median of Rrc (fog) | Median of Rrc (cloud) | Difference (cloud − fog) |
|---|---|---|---|
| 1 | 0.36 | 0.44 | 0.08 |
| 2 | 0.39 | 0.46 | 0.07 |
| 3 | 0.43 | 0.49 | 0.06 |
| 4 | 0.47 | 0.52 | 0.05 |
| 5 | 0.51 | 0.56 | 0.05 |
| 6 | 0.52 | 0.56 | 0.04 |
| 7 | 0.55 | 0.58 | 0.03 |
| 8 | 0.56 | 0.58 | 0.02 |
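The difference column in the table above can be checked directly from the two median columns. The following is a minimal sketch; the variable names are hypothetical and the values are copied from the table, with the difference taken as cloud minus fog (an assumption that matches every tabulated row).

```python
# Median Rrc per GOCI band (bands 1 to 8), copied from the table above.
fog = [0.36, 0.39, 0.43, 0.47, 0.51, 0.52, 0.55, 0.56]
cloud = [0.44, 0.46, 0.49, 0.52, 0.56, 0.56, 0.58, 0.58]

# Assumed definition: difference = cloud median minus fog median.
# Rounding to two decimals removes floating-point noise.
diff = [round(c - f, 2) for f, c in zip(fog, cloud)]

# True when the fog/cloud separation never increases with band number.
separation_shrinks = all(a >= b for a, b in zip(diff, diff[1:]))

for band, d in enumerate(diff, start=1):
    print(f"band {band}: {d:.2f}")
print("separation never increases with band number:", separation_shrinks)
```

Under this reading, the separation between the fog and cloud medians is largest at band 1 and smallest at band 8.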

(a) GOCI-only DT algorithm

| Forecast | Observed: “0” nonfog | Observed: “1” marine fog | Sum of the forecasts |
|---|---|---|---|
| “0” nonfog | 1020 | 292 | 1312 |
| “1” marine fog | 509 | 577 | 1086 |
| “2” possible fog (under cloud) | - | - | |
| Sum of the observations | 1529 | 869 | 2398 |
| Overall accuracy | 0.67 | | |
| Hit rate | 0.66 | | |
| False alarm rate | 0.33 | | |

(b) After postprocessing

| Forecast | Observed: “0” nonfog | Observed: “1” marine fog | Sum of the forecasts |
|---|---|---|---|
| “0” nonfog | 713 | 0 | 713 |
| “1” marine fog | 327 | 534 | 861 |
| “2” possible fog (under cloud) | 489 | 335 | 824 |
| Sum of the observations for all pixels | 1529 | 869 | 2398 |
| (excluding “2”) | (1040) | (534) | (1574) |
| Overall accuracy | 0.72 (0.79) | | |
| Hit rate | 0.61 (1.0) | | |
| False alarm rate | 0.21 (0.31) | | |
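The summary scores in the contingency tables can be reproduced from the pixel counts. The sketch below is a minimal illustration, not the authors' code. It assumes that overall accuracy is the fraction of correctly classified pixels, that hit rate is hits divided by observed marine fog pixels, and that false alarm rate is false alarms divided by observed nonfog pixels. These definitions are inferred from the tabulated values, and the function and variable names are hypothetical.

```python
def scores(hits, misses, false_alarms, correct_negatives):
    """Return (overall accuracy, hit rate, false alarm rate) for a 2x2 table.

    Assumed definitions (inferred from the tabulated values):
      accuracy         = (hits + correct negatives) / all pixels
      hit rate         = hits / observed fog pixels
      false alarm rate = false alarms / observed nonfog pixels
    """
    total = hits + misses + false_alarms + correct_negatives
    accuracy = (hits + correct_negatives) / total
    hit_rate = hits / (hits + misses)
    false_alarm_rate = false_alarms / (false_alarms + correct_negatives)
    return accuracy, hit_rate, false_alarm_rate


# (a) GOCI-only DT algorithm.
goci_only = [round(v, 2) for v in scores(577, 292, 509, 1020)]

# (b) After postprocessing, excluding pixels flagged "2".
post_excluding_2 = [round(v, 2) for v in scores(534, 0, 327, 713)]

# (b) All pixels: hit rate and false alarm rate use the full observed sums.
post_hit_rate_all = round(534 / 869, 2)
post_false_alarm_rate_all = round(327 / 1529, 2)

print(goci_only)
print(post_excluding_2)
print(post_hit_rate_all, post_false_alarm_rate_all)
```

The all-pixel overall accuracy in (b) depends on how pixels flagged “2” are scored, which the table does not specify, so it is not reproduced here.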

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, D.; Park, M.-S.; Park, Y.-J.; Kim, W. Geostationary Ocean Color Imager (GOCI) Marine Fog Detection in Combination with Himawari-8 Based on the Decision Tree. Remote Sens. 2020, 12, 149. https://doi.org/10.3390/rs12010149
