Unsupervised Saliency Model with Color Markov Chain for Oil Tank Detection

Abstract

1. Introduction

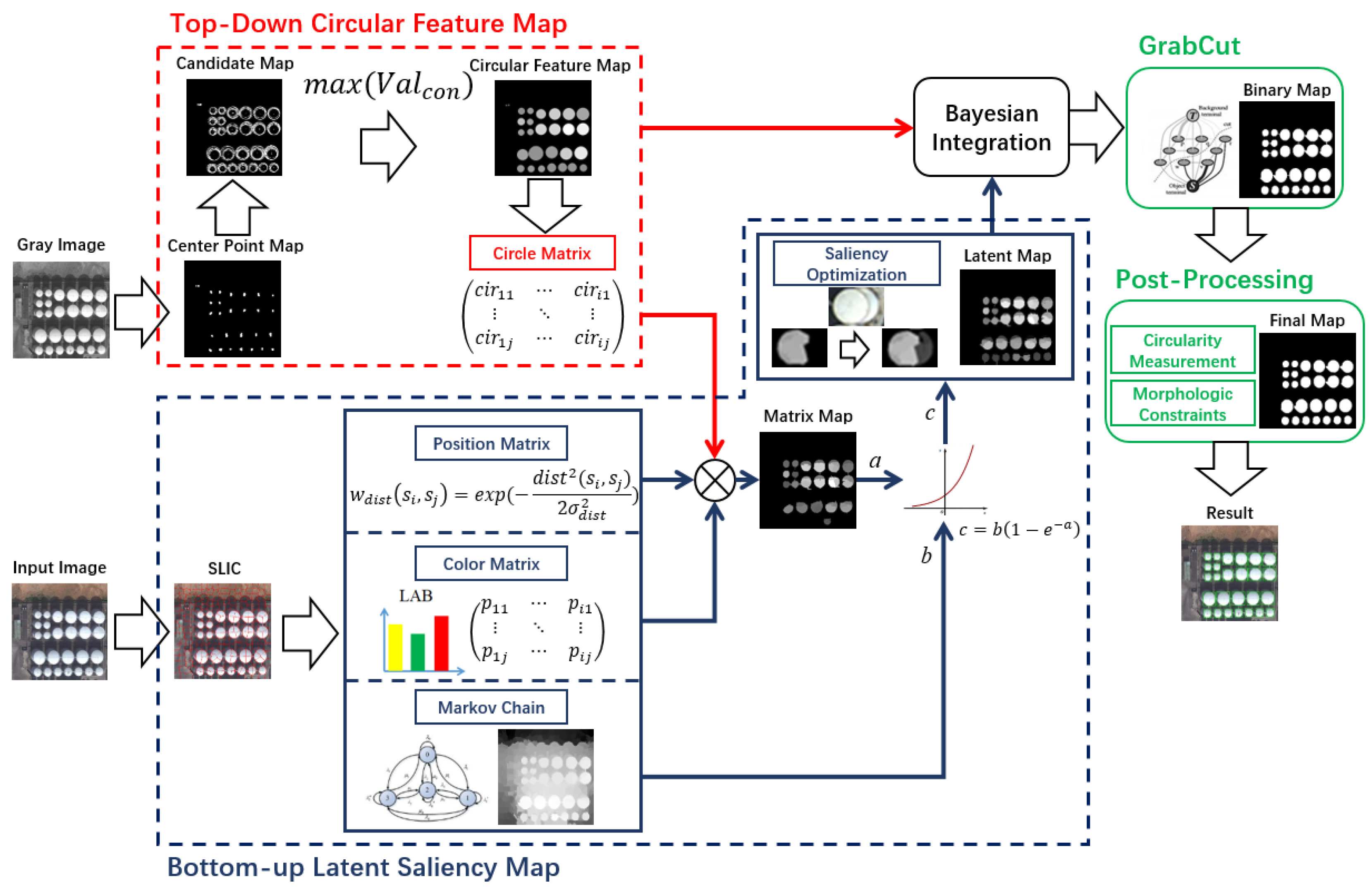

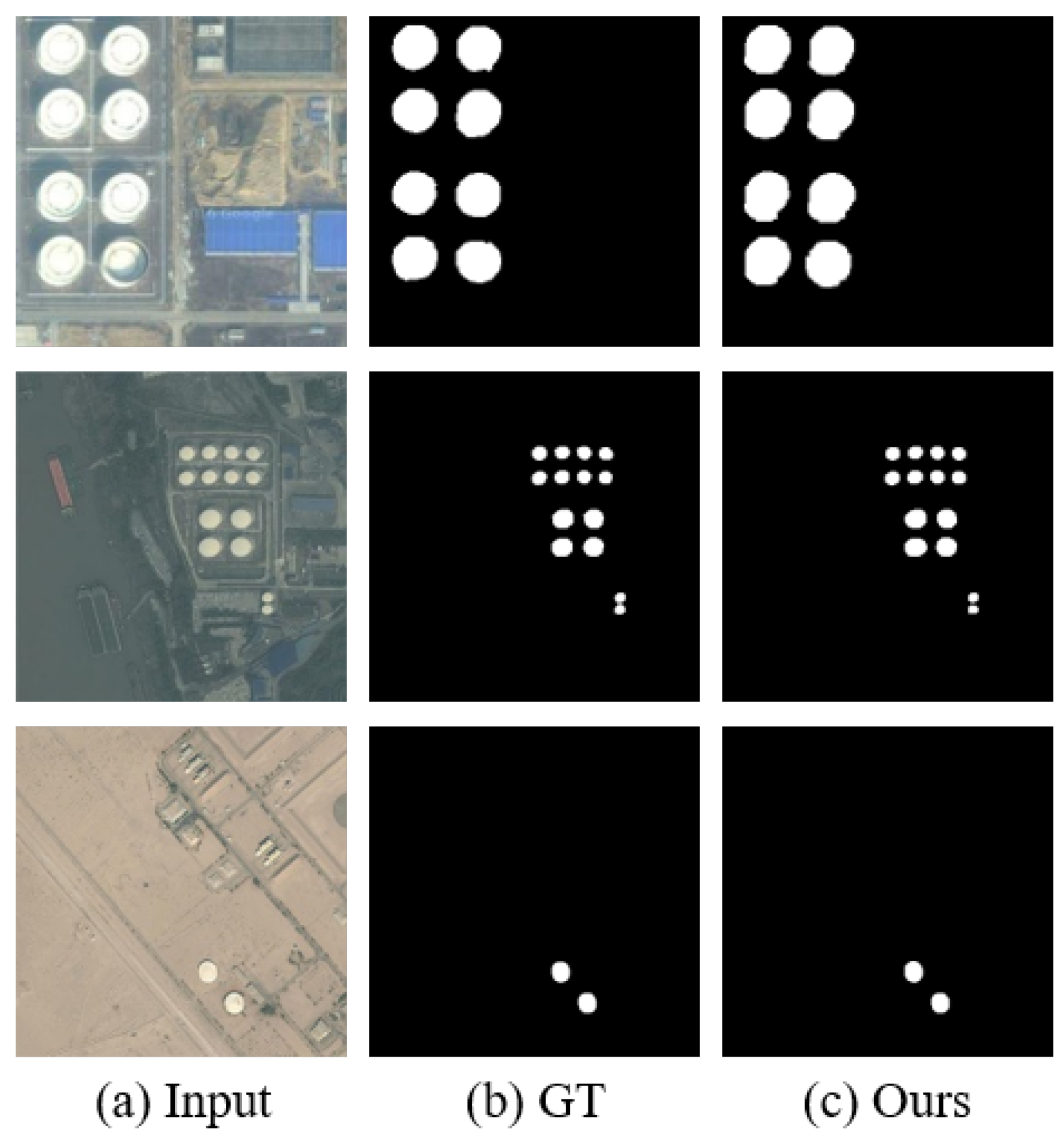

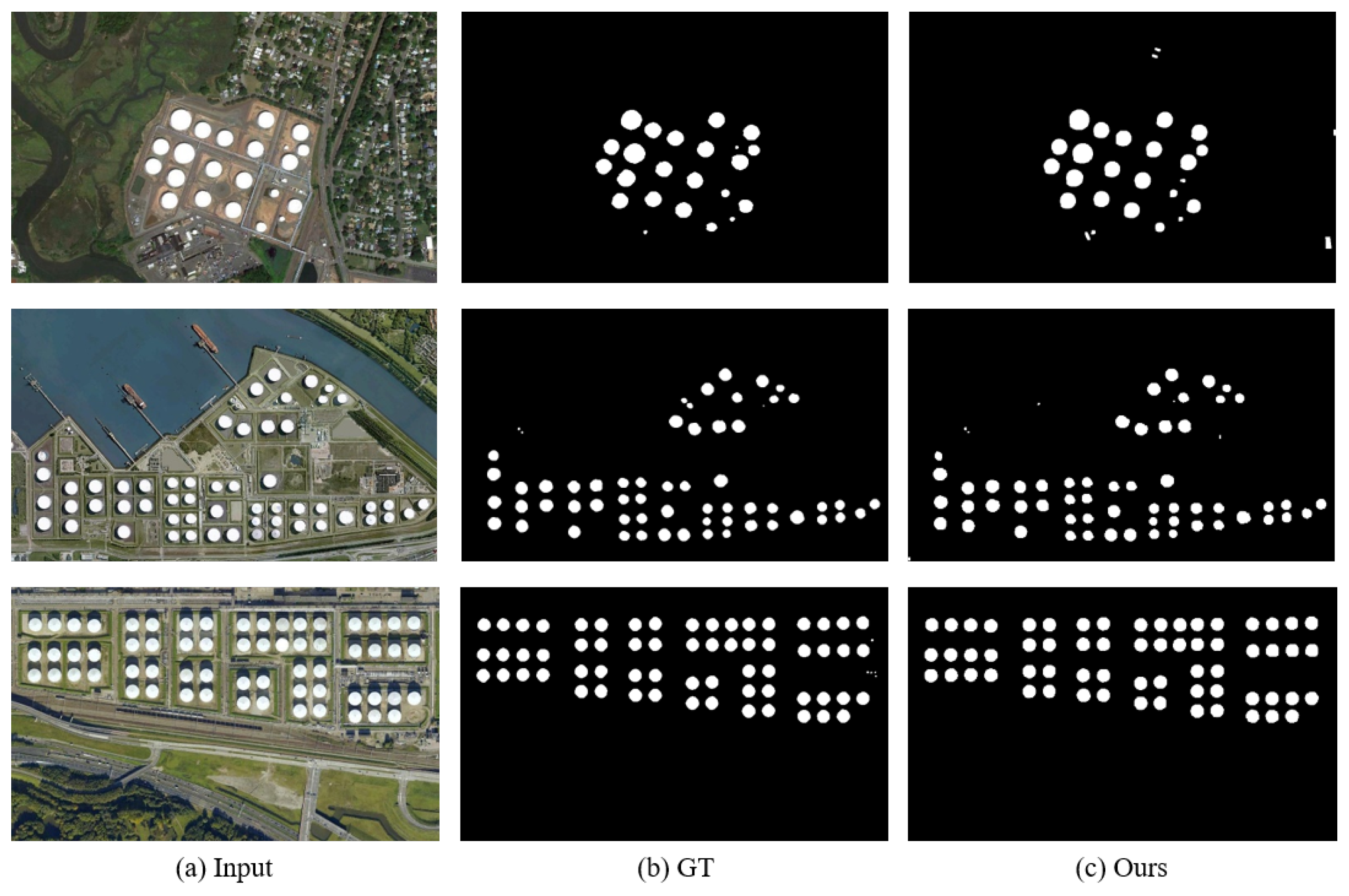

- To address the difficulties of oil tank detection, we propose an unsupervised saliency model with Color Markov Chain (US-CMC). US-CMC constructs a Color Markov Chain in the CIE Lab color space to reduce the influence of shadows, and it builds a constraint matrix to suppress interference from circular areas that are not oil tanks. With a linear interpolation step, oil tanks imaged from varying view angles can also be detected completely.

- Unlike previous methods that use circular features, US-CMC transforms circular radial symmetry features into a circular gradient map and then generates a series of confidence values for the circular region.

- We employ an unsupervised framework, which avoids the extra time cost of training on large sample sets. Furthermore, by combining bottom-up latent saliency maps with top-down circular feature maps, US-CMC suppresses salient regions other than oil tanks. Consequently, our model can locate oil tank targets quickly while maintaining detection accuracy.
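The two ideas in the contributions above, a Markov chain built on CIE Lab colors and the fusion of a bottom-up saliency map with a top-down circular feature map, can be illustrated with a small sketch. It is not the US-CMC formulation. It assumes an absorbing Markov chain over graph nodes (for example superpixels) whose edge weights decay with Lab color distance, scores each node by its expected time to absorption at background seed nodes, and fuses the two maps by an element-wise product. The function names `absorbed_time_saliency` and `fuse`, the parameter `sigma`, and the toy data are all hypothetical.

```python
import numpy as np


def absorbed_time_saliency(lab, edges, absorbing, sigma=10.0):
    """Score nodes by expected time to absorption (illustrative only).

    lab       : (n, 3) mean CIE Lab color per node.
    edges     : iterable of (i, j) undirected neighbor pairs.
    absorbing : indices of nodes assumed to be background seeds.
    Every transient node needs at least one edge and a path to a seed.
    """
    lab = np.asarray(lab, dtype=float)
    n = lab.shape[0]

    # Affinity decays with squared Lab distance (assumed Gaussian kernel).
    W = np.zeros((n, n))
    for i, j in edges:
        w = np.exp(-np.sum((lab[i] - lab[j]) ** 2) / sigma ** 2)
        W[i, j] = W[j, i] = w

    is_absorbing = np.zeros(n, dtype=bool)
    is_absorbing[list(absorbing)] = True
    transient = np.flatnonzero(~is_absorbing)

    # Row-normalize to transition probabilities, keep the transient block Q.
    P = W[transient] / W[transient].sum(axis=1, keepdims=True)
    Q = P[:, transient]

    # Expected steps before absorption: t = (I - Q)^{-1} 1.
    ones = np.ones(len(transient))
    t = np.linalg.solve(np.eye(len(transient)) - Q, ones)

    saliency = np.zeros(n)
    saliency[transient] = t
    return saliency / saliency.max()


def fuse(bottom_up, top_down):
    """Element-wise product of two maps, rescaled so the peak is 1."""
    fused = np.asarray(bottom_up, float) * np.asarray(top_down, float)
    peak = fused.max()
    return fused / peak if peak > 0 else fused


# Toy chain of five nodes: gray background with one distinct node (index 2).
lab = [[50, 0, 0], [50, 0, 0], [80, 20, 20], [50, 0, 0], [50, 0, 0]]
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
saliency = absorbed_time_saliency(lab, edges, absorbing=[0, 4])

# Hypothetical circular-feature confidences for the same five nodes.
circular = np.array([0.1, 0.2, 0.9, 0.2, 0.1])
fused = fuse(saliency, circular)
```

In this toy chain the node whose color differs from the background seeds takes the longest to be absorbed, so it receives the highest bottom-up score. The product fusion then keeps only nodes that score well in both maps, which is one simple way to suppress salient regions that are not circular.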

2. Proposed Method

2.1. Bottom-Up Latent Saliency Map Based on Color Markov Chain

2.2. Saliency Map Optimization and Background Suppression

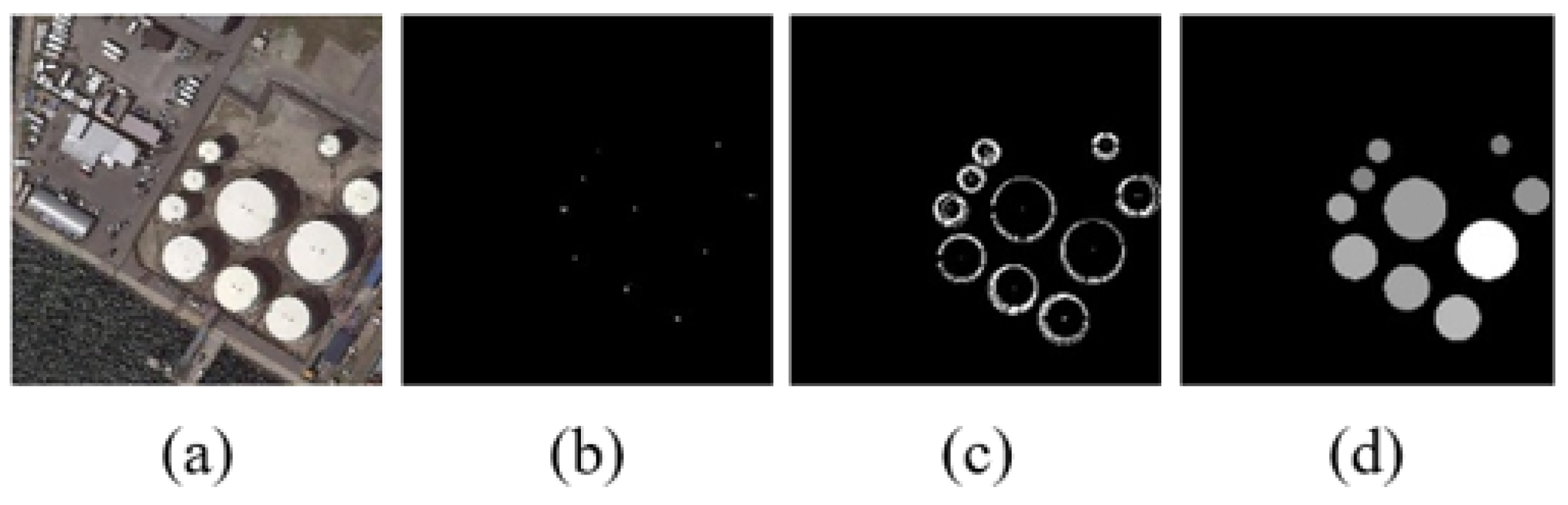

2.3. Top-Down Circular Feature Map Based on Circular Confidence Value

2.4. Fusion of Color Saliency Map and Circular Feature Map

2.5. Post-Processing

3. Experimental Results and Discussion

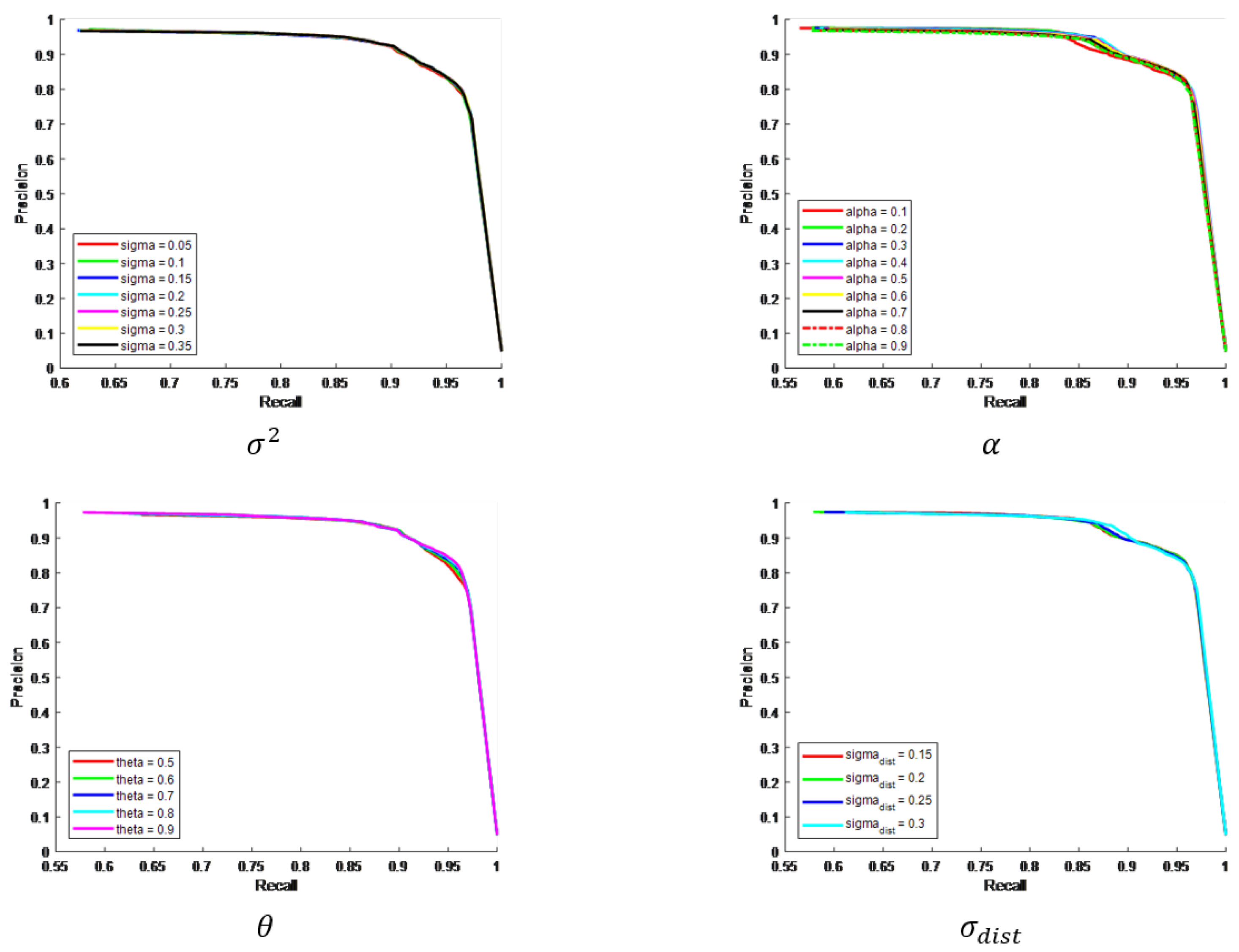

3.1. Parameter Selection

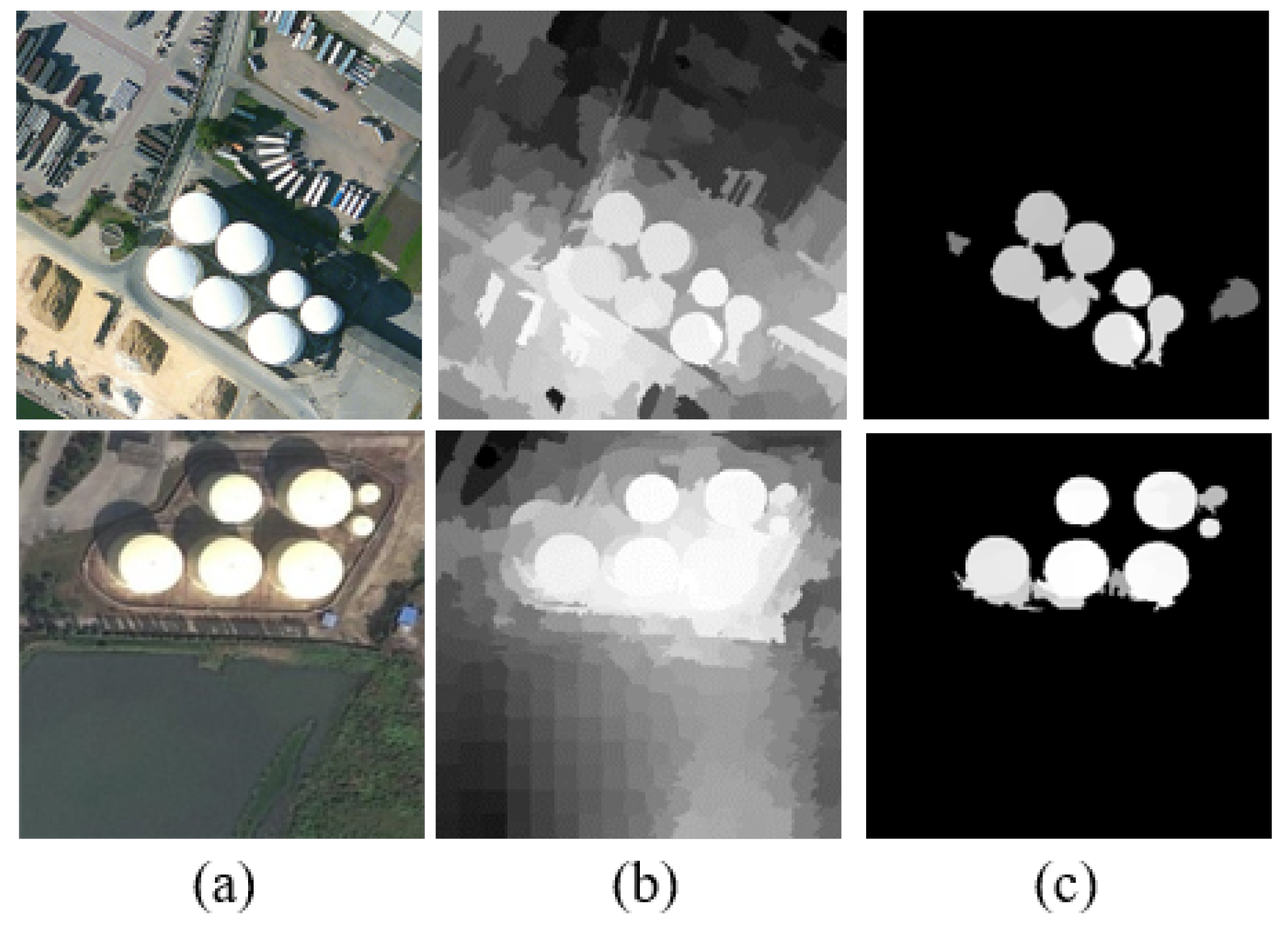

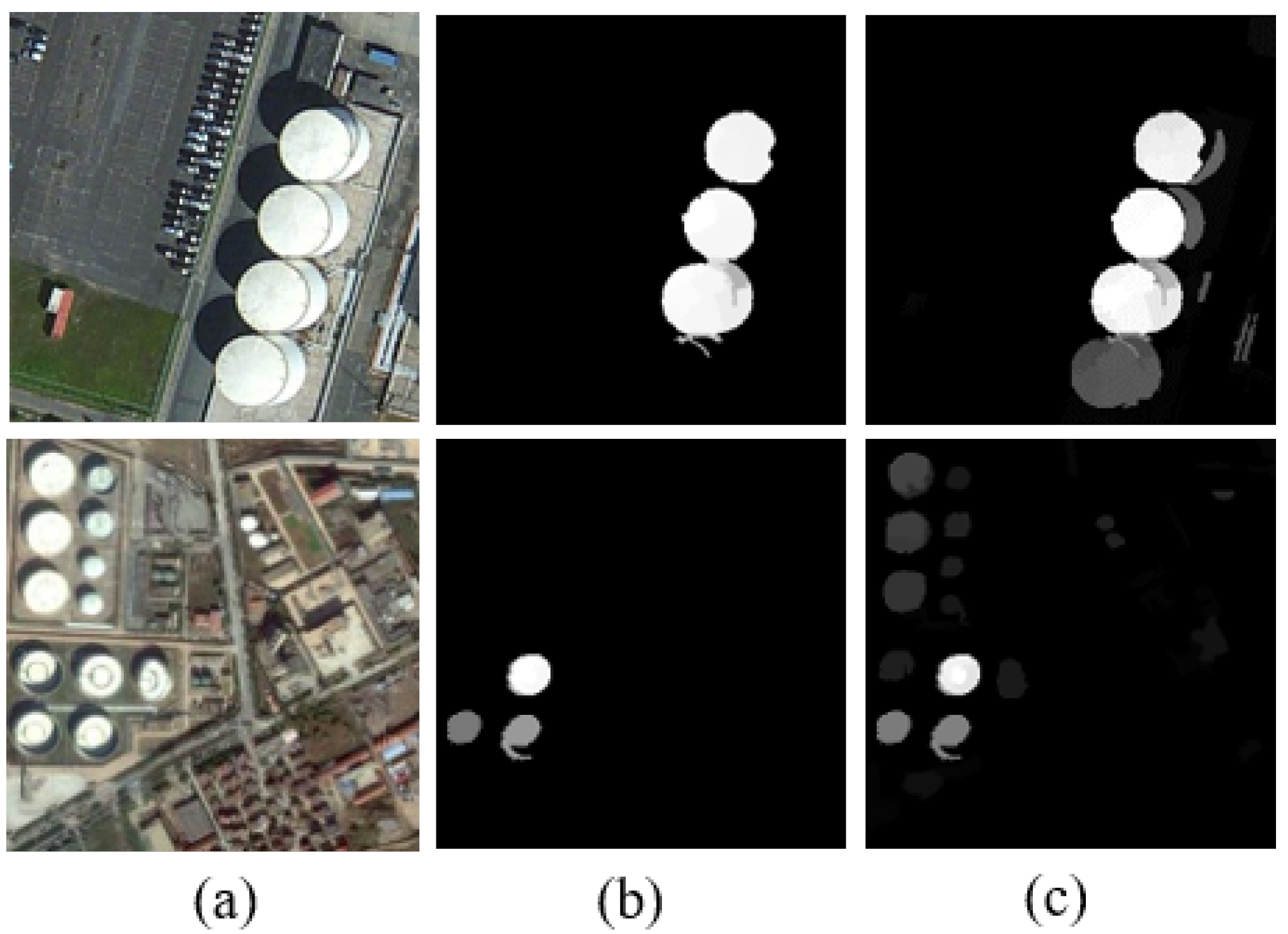

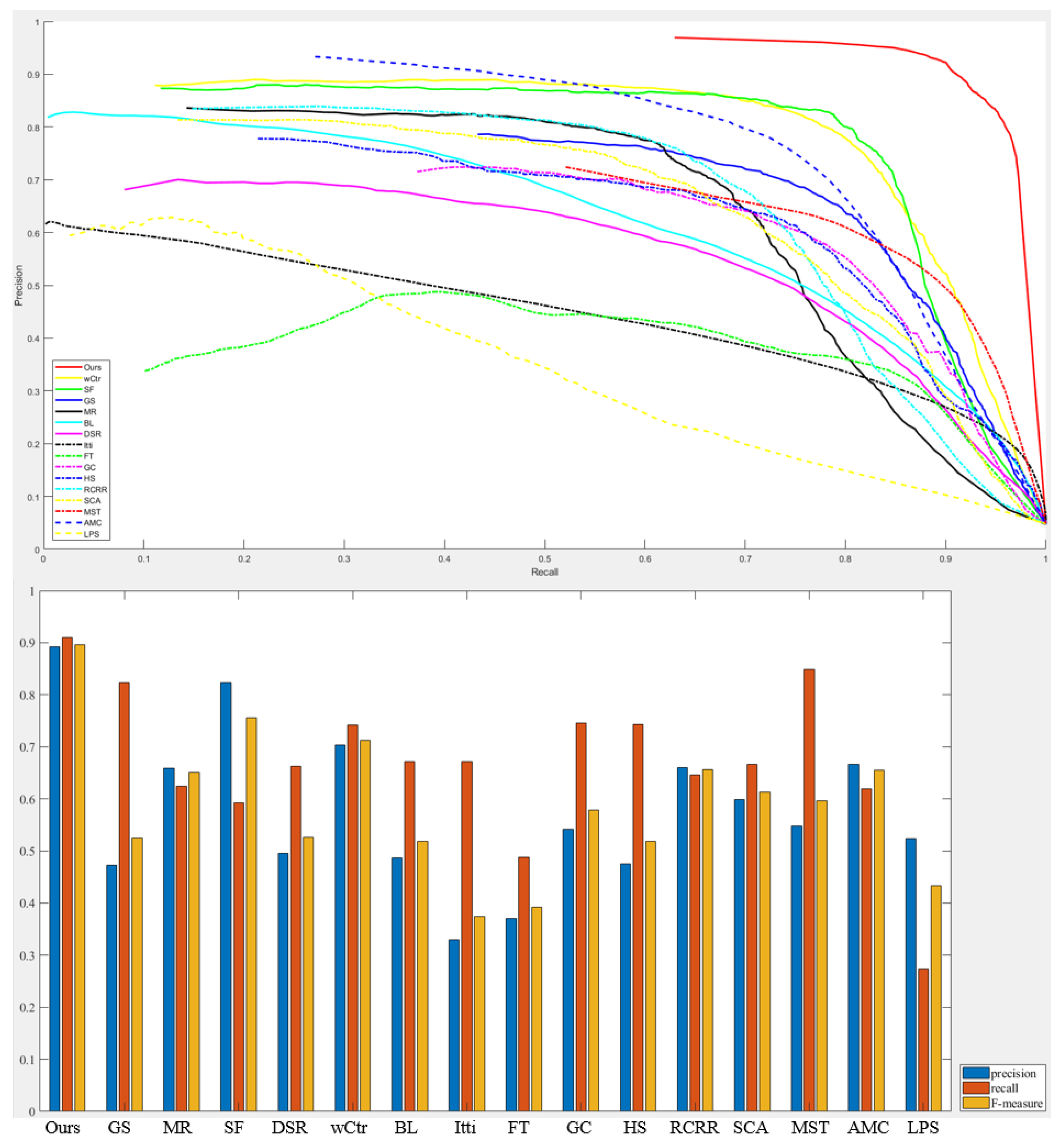

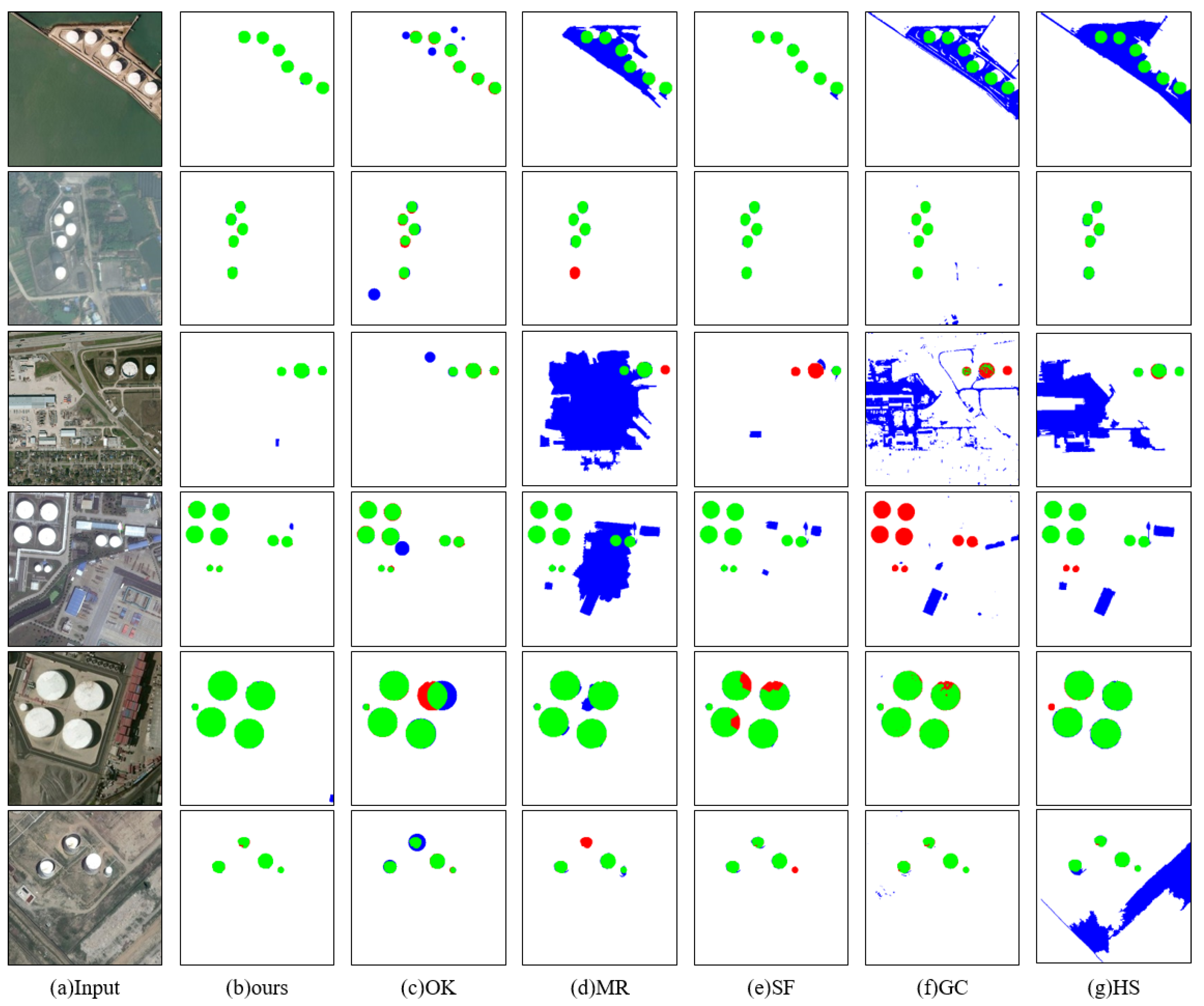

3.2. Comparison with Saliency Models

3.3. Comparison with Oil Tank Detection Methods

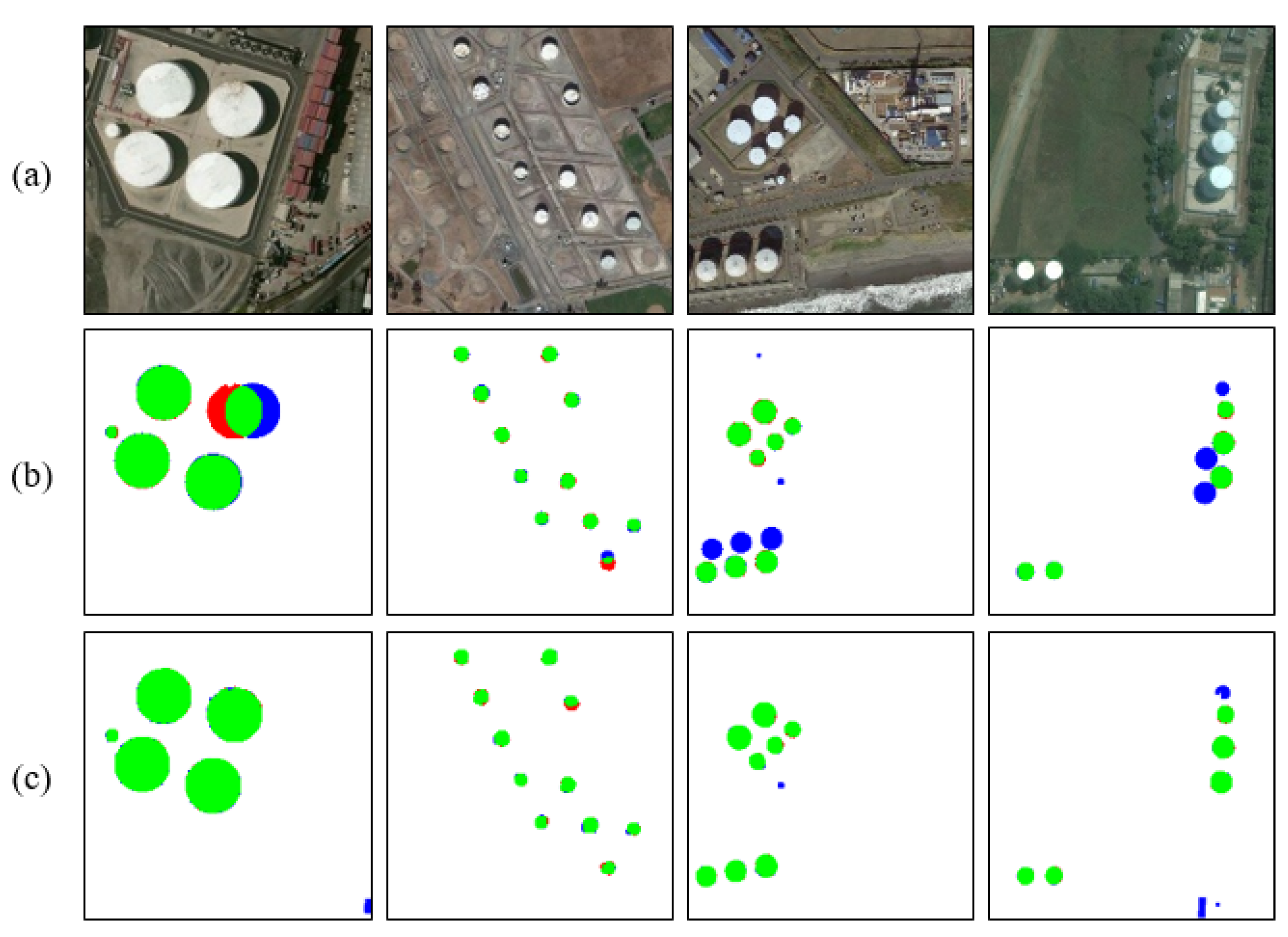

3.4. Robustness for Our Methods

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, Z.; Zhao, D.; Shi, Z.; Jiang, Z. Unsupervised Saliency Model with Color Markov Chain for Oil Tank Detection. Remote Sens. 2019, 11, 1089. https://doi.org/10.3390/rs11091089