In this section, we describe our deep learning regression approach to predicting the GSD value of a given remote sensing image. First, we explain why the predicted GSD value is represented as a floating-point number, after which we propose a regression tree CNN.

3.1. Floating-Point Regression of GSD

Our problem is to estimate the GSD value of an input remote sensing image using a CNN that is trained on mini-batches. To train the CNN for regression, the loss function can be defined as the absolute loss:

$$L_{abs} = \sum_{n=1}^{N} \left| g_n - \hat{g}_n \right| \tag{1}$$

where $g_n$ is the ground truth GSD of the $n$-th image in a mini-batch of $N$ samples, and $\hat{g}_n$ is the predicted GSD value. In this paper, a variable without a hat denotes the ground truth value and a variable with a hat denotes the predicted value.

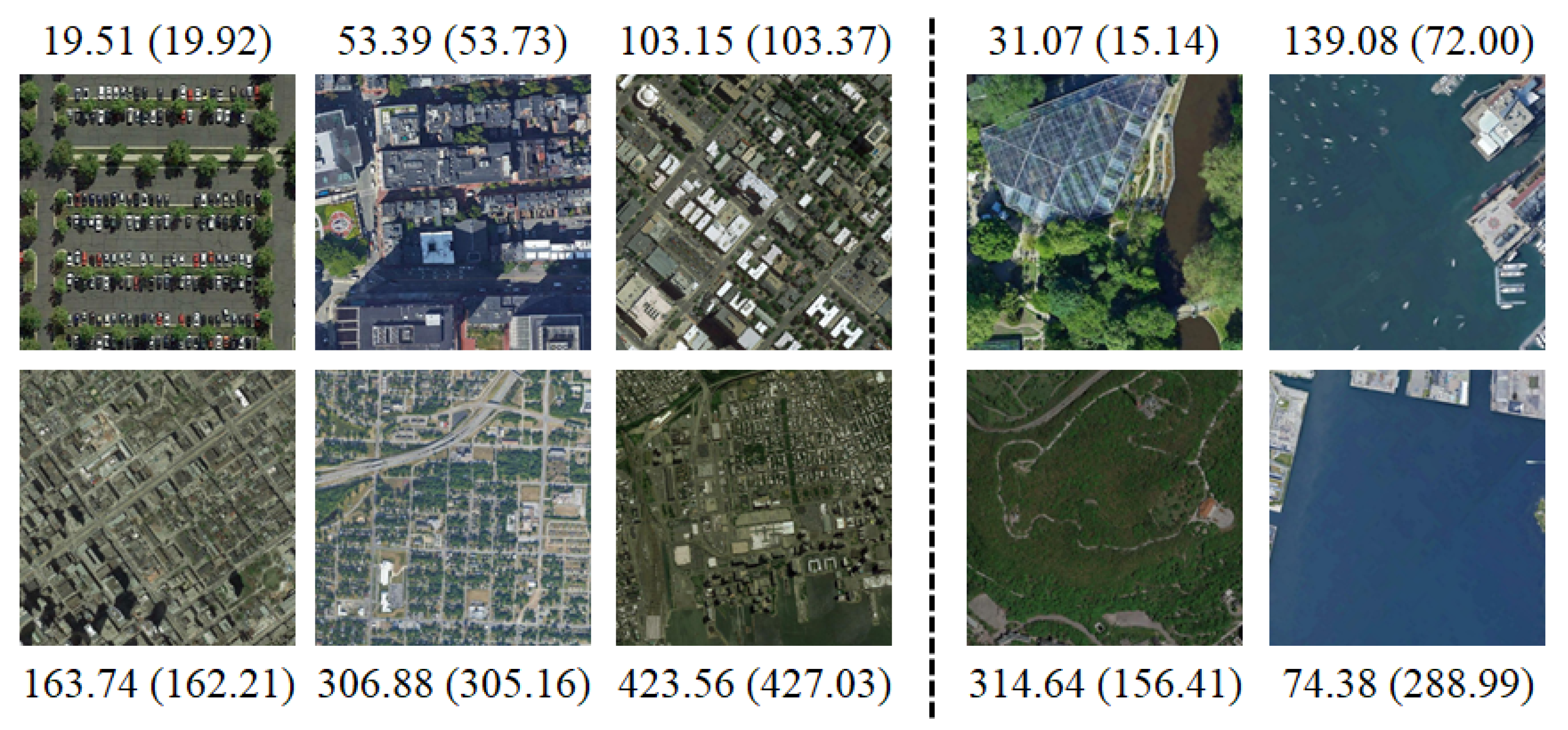

The absolute loss is the sum of the absolute errors. However, an absolute error of 3 cm at a true GSD of 15 cm (a relative error of 20%) is arguably worse than an absolute error of 3 cm at a true GSD of 300 cm (a relative error of 1%). Thus, it is more reasonable to compare the relative errors of 20% and 1% than the absolute error of 3 cm. Relative error makes sense for GSD because it is measured on a ratio scale, which has a true meaningful zero [33]. For example, on the Celsius temperature scale, 2 °C is not truly double 1 °C, and relative error is meaningless because 0 °C is not a true meaningful zero. On the Kelvin temperature scale, however, 2 K is truly double 1 K, and relative error is meaningful because 0 K is the true meaningful zero, or absolute zero. For GSD, 0 cm is the true meaningful zero, and thus the relative error is a meaningful value. Hence, we use a loss function that reflects the relative error. Intuitively, the loss function could be as follows:

$$L_{rel} = \sum_{n=1}^{N} \frac{\left| g_n - \hat{g}_n \right|}{g_n} \tag{2}$$

which is the sum of the relative errors of the predictions $\hat{g}_n$ with respect to the ground truth $g_n$.
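The difference between the two losses can be illustrated with the 15 cm and 300 cm example from the text. The following is a minimal sketch in plain Python; the function names are ours and are not part of the original method.

```python
def absolute_loss(true_gsd, pred_gsd):
    """Sum of absolute errors over a mini-batch, as in Equation (1)."""
    return sum(abs(t - p) for t, p in zip(true_gsd, pred_gsd))


def relative_loss(true_gsd, pred_gsd):
    """Sum of relative errors over a mini-batch, as in Equation (2)."""
    return sum(abs(t - p) / t for t, p in zip(true_gsd, pred_gsd))


# Two images, each predicted 3 cm too large.
true_gsd = [15.0, 300.0]
pred_gsd = [18.0, 303.0]

abs_total = absolute_loss(true_gsd, pred_gsd)  # both samples contribute 3 cm
rel_total = relative_loss(true_gsd, pred_gsd)  # contributions of 20% and 1%
```

The absolute loss treats both samples identically, whereas the relative loss is dominated by the 15 cm image.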

We constructed a baseline network consisting of a feature extraction CNN followed by two convolution layers with 64 channels and 1 channel, respectively, which outputs the real value of the GSD. As a training loss for the baseline network, we experimentally compared the relative loss in Equation (2) with the absolute loss in Equation (1) and obtained similar performance. Simply using the relative loss in Equation (2) as a loss function therefore does not seem to yield a satisfactory result, and thus we formulate an alternative loss function, which leads to a floating-point representation of the GSD value and its implementation using a binomial tree layer.

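The relative error is related to the logarithmic error, which is the difference on a log scale [34]:

$$\left| \ln g - \ln \hat{g} \right| = \left| \ln \frac{\hat{g}}{g} \right| = \left| \ln\!\left( 1 + \frac{\hat{g} - g}{g} \right) \right| \tag{3}$$

Using the Taylor series

$$\ln(1 + x) = x - \frac{x^2}{2} + \frac{x^3}{3} - \cdots$$

with sufficiently small $x$, Equation (3) becomes:

$$\left| \ln g - \ln \hat{g} \right| \approx \frac{\left| g - \hat{g} \right|}{g} \tag{4}$$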

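Thus, using the logarithmic error as a loss reflects the relative error when training the network for GSD prediction. Suppose the GSD $g$ is expressed in exponential form using a real-valued exponent $r$:

$$g = 2^{r} \tag{5}$$

We decompose the real-valued exponent $r$ into an integer part $e$ and a decimal part $d$, i.e., $g$ is expressed in a floating-point representation with the real-valued mantissa $m = 2^{d}$ and the integer exponent $e$:

$$g = 2^{e + d} = 2^{d} \times 2^{e} = m \times 2^{e} \tag{6}$$

The logarithmic loss using Equation (6), taken in base 2 (which differs from the natural logarithm only by a constant factor), then becomes:

$$\left| \log_2 g - \log_2 \hat{g} \right| = \left| (e - \hat{e}) + \log_2 \frac{m}{\hat{m}} \right| \tag{7}$$

where the first term $e - \hat{e}$ represents the difference between the integer exponents, and the second term $\log_2 (m / \hat{m})$ represents the log ratio of the real-valued mantissas.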
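Both steps of this derivation can be checked numerically. The sketch below is illustrative only: it assumes a base-2 exponent with the integer part taken by `floor`, and the helper names are ours.

```python
import math


def log_error(true_gsd, pred_gsd):
    """Absolute difference of the natural logarithms."""
    return abs(math.log(true_gsd) - math.log(pred_gsd))


def relative_error(true_gsd, pred_gsd):
    return abs(true_gsd - pred_gsd) / true_gsd


def decompose(gsd):
    """Split log2(gsd) into an integer exponent e and a mantissa m = 2**d."""
    r = math.log2(gsd)
    e = math.floor(r)
    m = 2.0 ** (r - e)
    return e, m


# Step 1: for a small relative error, the log error is close to it.
small_log = log_error(300.0, 303.0)
small_rel = relative_error(300.0, 303.0)

# Step 2: the log2 error splits into an exponent term and a mantissa term.
e_true, m_true = decompose(50.0)
e_pred, m_pred = decompose(72.0)
total = math.log2(50.0) - math.log2(72.0)
exponent_term = e_true - e_pred
mantissa_term = math.log2(m_true / m_pred)
```

For larger errors the approximation in the first step loosens, which is why it is stated only for sufficiently small deviations.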

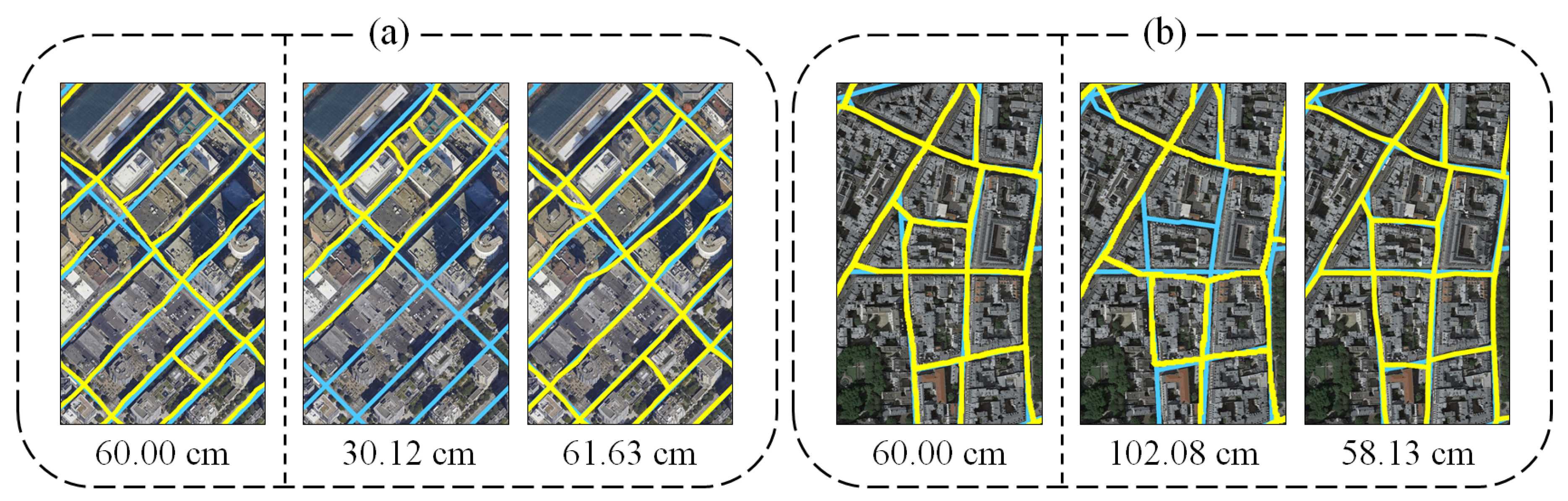

To properly associate the GSD values with the floating-point representation, we divide the target range of the GSDs into several segments (classes) and determine the relative position within the segment. The segment is determined by coarse classification, and the relative position is computed by finer-scale regression. Suppose the target range is 15∼480 cm. If this range is divided equally on the log scale, the five segments are 15∼30 cm, 30∼60 cm, 60∼120 cm, 120∼240 cm and 240∼480 cm, which we call the log GSD scale classes. The corresponding reference values are defined as 20 cm, 40 cm, 80 cm, 160 cm and 320 cm, respectively. For example, the value 50 cm is represented by the second class (log, 30∼60 cm, 40 cm) and a relative position of ×1.25 from its reference value of 40 cm. Thus, the GSD value $g$ can be obtained from the floating-point representation:

$$g = 20 \times m \times 2^{e} \tag{8}$$

where $e$ is the integer exponent ($e \in \{0, 1, 2, 3, 4\}$) corresponding to the class, and $m$ is the real-valued mantissa within the range of 0.75∼1.5. The constant 20 is chosen so that mantissa values of 0.75∼1.5 cover the target range of each class.
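The mapping between a GSD value and its log-scale class and mantissa can be sketched as follows. This is a minimal illustration, assuming a zero-based exponent so that the class 15∼30 cm has exponent 0; the function and constant names are ours.

```python
import math

BASE_CM = 20.0      # reference value of the first class
MIN_GSD_CM = 15.0   # lower end of the target range
NUM_CLASSES = 5


def encode_log(gsd_cm):
    """Return (exponent, mantissa) for a GSD in the 15-480 cm range."""
    e = int(math.floor(math.log2(gsd_cm / MIN_GSD_CM)))
    e = min(max(e, 0), NUM_CLASSES - 1)  # keep 480 cm in the last class
    m = gsd_cm / (BASE_CM * 2 ** e)
    return e, m


def decode_log(e, m):
    """Recover the GSD in cm from the exponent and mantissa."""
    return BASE_CM * m * 2 ** e


exponent, mantissa = encode_log(50.0)     # second class, 1.25 times 40 cm
restored = decode_log(exponent, mantissa)
```

With this convention, every GSD in the target range has a mantissa between 0.75 and 1.5.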

For comparison with the log GSD scale classes defined above, consider linear GSD scale classes, which are defined by dividing the GSD range of 15∼480 cm into equal-size segments of 15∼108 cm, 108∼201 cm, 201∼294 cm, 294∼387 cm and 387∼480 cm. The relative position is determined by the difference from a reference value. For example, if the reference value of 15∼108 cm is defined as 61.5 cm, 50 cm is represented by the first class (linear, 15∼108 cm) and a relative position of −11.5 cm. The relative position divided by the interval width of 93 cm is expressed as a real value $\delta$ between −0.5 and 0.5. Additionally, defining $c$ as the integer class index ($c \in \{0, 1, 2, 3, 4\}$), the GSD value $g$ for the linear GSD scale classes is represented as follows:

$$g = 93 \times (c + \delta) + 61.5 \tag{9}$$

We expect the log GSD scale classes to improve the GSD prediction compared with the linear GSD scale classes, since the log GSD scale classes better reflect the relative error through the logarithmic loss, as shown in Equation (7).
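For contrast, the linear GSD scale classes described above can be sketched in the same way. This assumes a zero-based class index and a relative position normalised by the 93 cm interval width; the names are ours.

```python
LOW_CM = 15.0
HIGH_CM = 480.0
NUM_CLASSES = 5
WIDTH_CM = (HIGH_CM - LOW_CM) / NUM_CLASSES  # 93 cm per class


def encode_linear(gsd_cm):
    """Return (class index, normalised relative position in [-0.5, 0.5])."""
    c = min(int((gsd_cm - LOW_CM) // WIDTH_CM), NUM_CLASSES - 1)
    reference = LOW_CM + WIDTH_CM * (c + 0.5)  # 61.5 cm for the first class
    return c, (gsd_cm - reference) / WIDTH_CM


def decode_linear(c, position):
    """Recover the GSD in cm from the class index and relative position."""
    return LOW_CM + WIDTH_CM * (c + 0.5 + position)


class_index, position = encode_linear(50.0)  # first class, -11.5 cm offset
restored_linear = decode_linear(class_index, position)
```

A fixed error in the normalised position corresponds to the same number of centimetres in every linear class, so its relative effect is much larger at 15 cm than at 480 cm.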

In this paper, we implement the first term in Equation (7) (the difference between the integer exponents) as a classification using the cross entropy, and the second term in Equation (7) (the log ratio of the real-valued mantissas) as a regression using the absolute loss, as follows:

$$L_{cls} = -\sum_{n=1}^{N} \sum_{c} y_{n,c} \log \hat{y}_{n,c}, \qquad L_{reg} = \sum_{n=1}^{N} \left| \log_2 m_n - \log_2 \hat{m}_n \right| \tag{10}$$

where $\hat{y}_{n,c}$ represents the probability of the $c$-th class (corresponding to its exponent) of the $n$-th image in a mini-batch. The collection of $\hat{y}_{n,c}$ is represented as a vector $\hat{\mathbf{y}}_n$, where $\hat{y}_{n,c}$ is the $c$-th channel of $\hat{\mathbf{y}}_n$. The ground truth $\mathbf{y}_n$ is a one-hot vector representing the class of image $n$, i.e., a vector whose elements are all zero except for a single one that uniquely identifies the class. For example, if the exponent $e_n$ is $k$, the class index is considered to be $k$, and thus $y_{n,k}$ becomes 1 and the others $y_{n,c}$ ($c \neq k$) are 0. Letting $m_n$ be the mantissa of the GSD for image $n$, $\hat{\mathbf{y}}_n$ and $\hat{m}_n$ are computed by the CNN, and $\hat{e}_n$ is obtained from the arg-max of $\hat{y}_{n,c}$ over $c$, resulting in $\hat{g}_n$ using Equation (6).
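The two loss terms and the final prediction step can be sketched in plain Python. This is a sketch under stated assumptions, not the authors' implementation: the regression term is taken as the absolute difference of the base-2 log mantissas, the class probabilities are given as already normalised lists, and the constant 20 cm from the floating-point representation is used when combining exponent and mantissa.

```python
import math


def classification_loss(probs, true_classes):
    """Cross entropy against one-hot targets: -log of the true-class probability."""
    return -sum(math.log(p[k]) for p, k in zip(probs, true_classes))


def mantissa_loss(true_m, pred_m):
    """Assumed regression term: absolute log2 ratio of the mantissas."""
    return sum(abs(math.log2(t) - math.log2(p)) for t, p in zip(true_m, pred_m))


def predict_gsd(prob, pred_m, base_cm=20.0):
    """Pick the arg-max class as the exponent and combine it with the mantissa."""
    e_hat = max(range(len(prob)), key=lambda c: prob[c])
    return base_cm * pred_m * 2 ** e_hat


probs = [[0.1, 0.7, 0.1, 0.05, 0.05]]
cls = classification_loss(probs, [1])
reg = mantissa_loss([0.9], [0.9])
gsd = predict_gsd(probs[0], 0.9)  # second class with mantissa 0.9
```

In a real training setup these terms would be computed on tensors by a deep learning framework; the lists here only make the arithmetic explicit.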

We initially considered a simple multi-output network for exponent and mantissa prediction. If the mantissa range is limited to 0.75∼1.5, exactly one pair of exponent and mantissa values is associated with a specific GSD value. For example, if the GSD of an input remote sensing image is 36 cm, it corresponds to the second class (log, 30∼60 cm, 40 cm) and a mantissa of 0.9. However, when the classification is off by one class with the same predicted mantissa of 0.9, the predicted class becomes the first (log, 15∼30 cm, 20 cm) or the third (log, 60∼120 cm, 80 cm), resulting in an incorrect GSD value of 18 cm or 72 cm. To compensate for this, we tried training with an adjusted ground truth mantissa value for the case in which a classification error occurs. For example, if the predicted class becomes the first, the mantissa should be 1.8 (36 cm divided by the reference value of 20 cm). However, multiple pairs representing the same GSD value cause a one-to-many correspondence problem, which requires the network to output several different exponent and mantissa pairs for the same input image. Our regression tree CNN allows this one-to-many correspondence, reducing the error caused by a classification that is off by one class, as described below.
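The 36 cm example can be worked through in a few lines. The sketch below uses a zero-based exponent (the first class has exponent 0) and a hypothetical helper name.

```python
BASE_CM = 20.0


def adjusted_mantissa(gsd_cm, predicted_exponent):
    """Mantissa that reproduces gsd_cm for a given (possibly wrong) exponent."""
    return gsd_cm / (BASE_CM * 2 ** predicted_exponent)


true_gsd_cm = 36.0
true_exponent = 1  # second class, 30-60 cm

# Keeping the mantissa 0.9 while the class is off by one gives 18 cm or 72 cm.
wrong_low = BASE_CM * 0.9 * 2 ** (true_exponent - 1)
wrong_high = BASE_CM * 0.9 * 2 ** (true_exponent + 1)

# Adjusted mantissas that still decode to 36 cm in the neighbouring classes.
pairs = {e: adjusted_mantissa(true_gsd_cm, e) for e in (0, 1, 2)}
```

The three pairs in `pairs` all decode to 36 cm, which is the one-to-many correspondence discussed above; the adjusted mantissas fall outside the 0.75∼1.5 range for the neighbouring classes.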

3.2. Regression Tree CNN

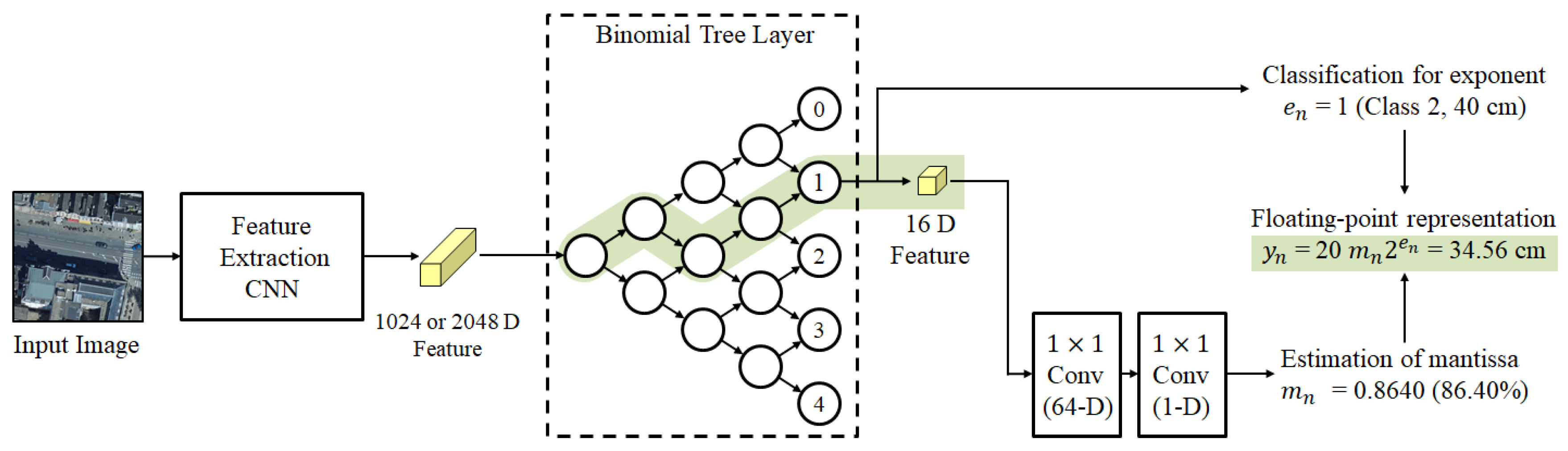

Figure 2 shows our proposed regression tree CNN consisting of a feature extraction CNN and a binomial tree layer, the output of which is used to form a floating-point representation of the GSD value for a given input image. Features are first extracted by the CNN from an input image and are then input to the binomial tree layer. By combining the exponent and mantissa from the binomial tree, we obtain the GSD prediction.

To design a network that performs both classification and regression simultaneously, we employ the vector representation of the CapsNet [

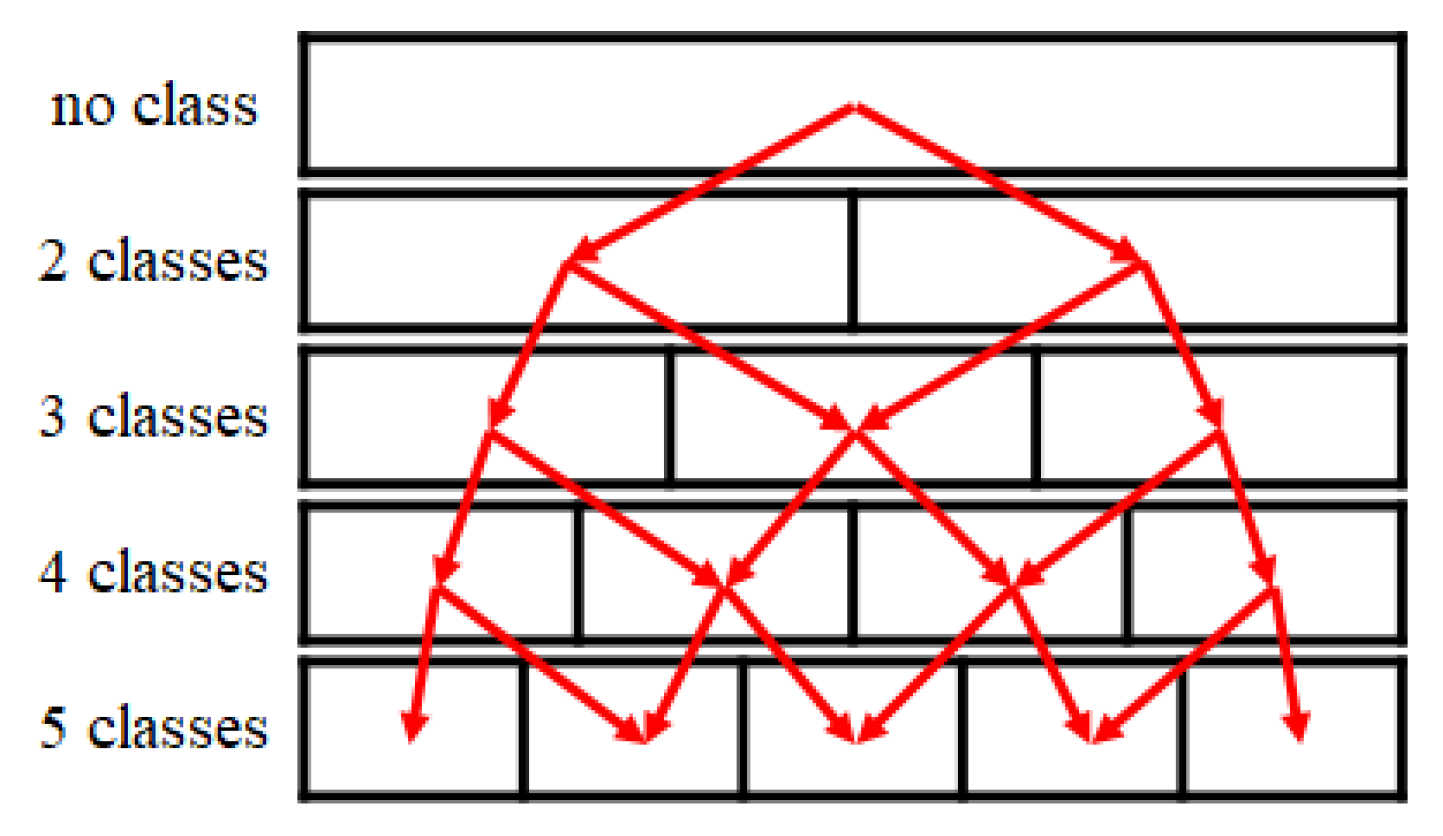

35] with some modification. We estimate an output vector which is used to select the class (or exponent) and to estimate the mantissa. To improve the classification at the class boundary, we successively refine the classification with the binomial tree structure, as shown in

Figure 3. Because the child-level class boundaries reside within the parent-level classes from the root to its leaves, the information related to the class boundary can be better delivered, improving both the classification and regression. We call the whole structure a binomial tree layer.

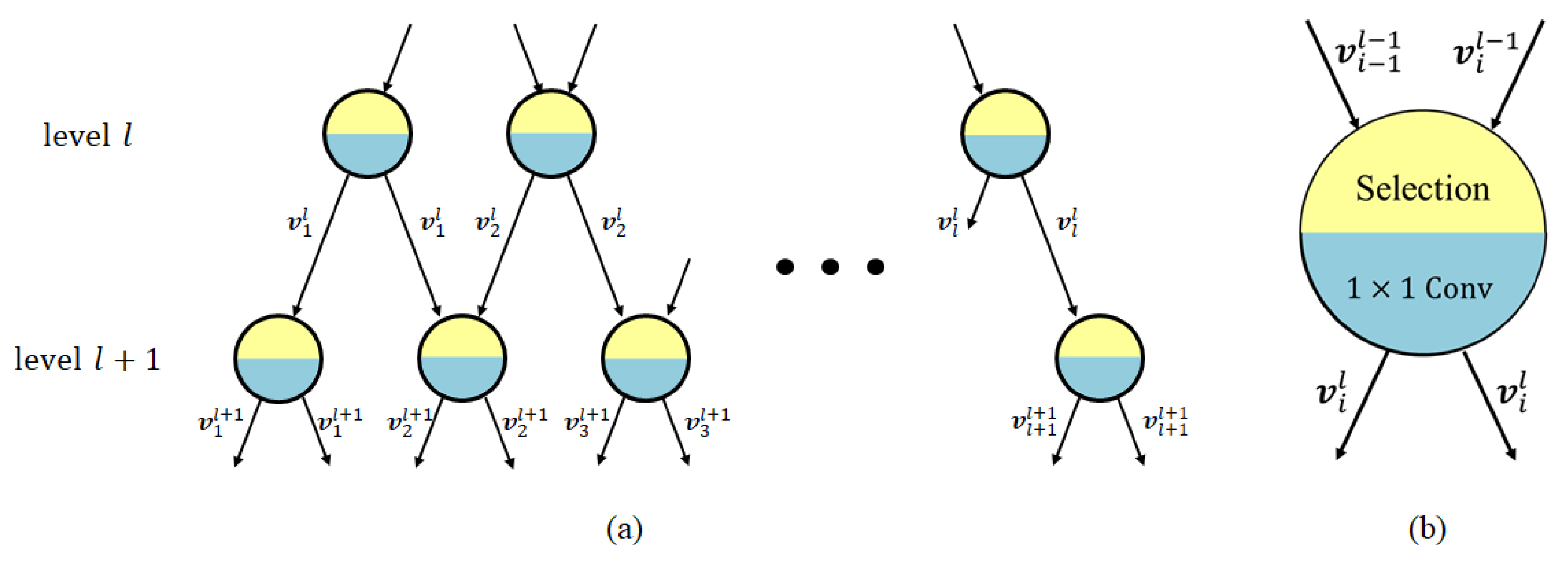

The root node contains the feature map of the last convolution layer of the feature extraction CNN. The number of nodes increases by one at each level as the tree becomes deeper, as shown in Figure 4. Of the two vectors associated with the two parent nodes, only the one with the larger length is transmitted to the next level. Node selection in the binomial tree layer is performed using the following equation:

$$\mathbf{v}_i^{l} = \mathrm{conv}_i^{l}\!\left( \underset{\mathbf{v} \in \{ \mathbf{v}_{i-1}^{l-1},\, \mathbf{v}_{i}^{l-1} \}}{\arg\max} \left\| \mathbf{v} \right\| \right) \tag{11}$$

where $\mathbf{v}_i^{l}$ and $\mathrm{conv}_i^{l}$ denote the feature vector and the pointwise convolution of the $i$-th node at level $l$, respectively. The feature vector with the larger length is selected, and a pointwise convolution is performed.
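The selection rule can be sketched for a single feature vector per node. This is a simplified illustration under several assumptions that are ours: each node holds one vector rather than a spatial feature map, a pointwise convolution is written as a matrix-vector product, node `i` at a level has parents `i - 1` and `i` at the previous level, and edge nodes have a single parent.

```python
import math


def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


def pointwise_conv(weights, v):
    """A 1x1 convolution at one location is a matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def next_level(parents, weights_per_node):
    """Build the vectors of the next tree level from the parent vectors."""
    children = []
    for i in range(len(parents) + 1):
        candidates = parents[max(i - 1, 0): i + 1]  # one or two parents
        selected = max(candidates, key=norm)        # keep the longer vector
        children.append(pointwise_conv(weights_per_node[i], selected))
    return children


identity = [[1.0, 0.0], [0.0, 1.0]]
level2 = [[3.0, 4.0], [1.0, 0.0]]
level3 = next_level(level2, [identity, identity, identity])
```

With identity weights, the middle node of `level3` simply copies the longer of its two parents, which makes the selection visible.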

To train the pointwise convolution for each node, a local classification among $l$ classes is performed using the lengths of the $l$ vectors at level $l$. The local class borders are defined by dividing the target range equally on the log scale, i.e., $15 \times 32^{j/l}$ cm for $j = 1, \ldots, l - 1$. For example, at level 3, we perform a classification of three classes with the ranges of 15.00∼47.62, 47.62∼151.19 and 151.19∼480.00.
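The level-3 ranges quoted above are reproduced by dividing 15∼480 cm equally on the log scale. The sketch below assumes that the same rule holds at every level; the function name is ours.

```python
def local_borders(level, low_cm=15.0, high_cm=480.0):
    """Interior class borders when the range is split into `level` log-equal parts."""
    ratio = high_cm / low_cm  # 32 for the 15-480 cm range
    return [low_cm * ratio ** (j / level) for j in range(1, level)]


level3_borders = [round(b, 2) for b in local_borders(3)]
level5_borders = local_borders(5)  # leaf-level borders: 30, 60, 120, 240 cm
```

At level 5 the borders coincide with those of the five log GSD scale classes.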

In the leaf nodes, we compute the exponent and mantissa to estimate the GSD value. The $V$-dimensional vector with the maximum $L_2$ norm among the $C$ vectors is selected, and the class, i.e., the integer exponent, is determined by the leaf node containing that vector. We use $C = 5$ and $V = 16$. We then normalize the selected vector and apply two pointwise convolutions with 64 channels and 1 channel, respectively, to estimate the mantissa. The whole architecture of the proposed network is shown in Figure 2.
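The leaf-level selection can be sketched as follows, assuming the Euclidean norm as the vector length and one vector per leaf. The mantissa head (the two pointwise convolutions) is omitted, and the names are ours.

```python
import math


def select_leaf(leaf_vectors):
    """Return the index of the longest leaf vector and its unit-length version."""
    norms = [math.sqrt(sum(x * x for x in v)) for v in leaf_vectors]
    k = max(range(len(leaf_vectors)), key=lambda c: norms[c])
    unit = [x / norms[k] for x in leaf_vectors[k]]
    return k, unit


# Toy example with C = 3 leaves and V = 2 dimensions instead of C = 5, V = 16.
leaves = [[0.1, 0.2], [3.0, 4.0], [1.0, 1.0]]
exponent, unit_vector = select_leaf(leaves)
```

The returned index plays the role of the integer exponent, and the normalized vector would be passed on to the mantissa estimation.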

If an error occurs during the classification, especially when the selected vector differs by one class from the correct vector, our binomial tree layer can reduce the resulting GSD prediction error by adaptively adjusting the mantissa value for the incorrectly selected vector. The incorrectly selected vector is trained to output the adjusted mantissa value, which differs from the mantissa value for the correctly selected vector. Owing to this one-to-many correspondence training, the final prediction can remain correct despite the classification error. Because most of the classification errors had a size of 1 in the experiments, we only consider class errors of size 1 by training two additional pairs, the class vectors k − 1 and k + 1 with their respective mantissa values, for the correct class vector k.

During backpropagation, the weight update is affected by the selected leaf node and the corresponding path. In Figure 2, the weights of the nodes on the path are updated by both the classification loss $L_{cls}$ and the regression loss $L_{reg}$ in Equation (10), and the weights of the other nodes are updated only by $L_{cls}$ in Equation (10). However, considering the mini-batch size of $N$, the corresponding $N$ paths are trained simultaneously in the mini-batch. For each path leading to a correct class vector, we augment 10% of the data with simulated classification errors of size 1 and the corresponding adjusted mantissa values during training.
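One possible reading of this augmentation step is sketched below. It is an assumption-laden illustration rather than the authors' procedure: each sample is independently chosen with probability 0.1, a neighbouring class is drawn uniformly, and the adjusted mantissa is computed so that the pair still decodes to the true GSD. The function name and the use of a seeded `random.Random` are ours.

```python
import random


def simulate_class_errors(samples, rate=0.1, num_classes=5, base_cm=20.0, seed=0):
    """Create extra (wrong class, adjusted mantissa) targets for some samples.

    `samples` is a list of (gsd_cm, true_class) with zero-based classes.
    """
    rng = random.Random(seed)
    extra = []
    for gsd_cm, true_class in samples:
        if rng.random() < rate:
            neighbours = [k for k in (true_class - 1, true_class + 1)
                          if 0 <= k < num_classes]
            wrong = rng.choice(neighbours)
            # Mantissa that makes the wrong class still decode to gsd_cm.
            extra.append((wrong, gsd_cm / (base_cm * 2 ** wrong)))
    return extra


# With rate=1.0 every sample receives a simulated error of size 1.
augmented = simulate_class_errors([(36.0, 1)], rate=1.0)
wrong_class, new_mantissa = augmented[0]
```

Classes at either end of the range have only one valid neighbour, which the list comprehension handles.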

As the feature extraction CNN, 1.0 MobileNet-224 [36] and ResNet-101 [37] are used. The number of channels of the last feature maps from the feature extraction CNN is 1024 for MobileNet and 2048 for ResNet. We excluded the global average pooling and the fully connected layers of the original networks.

While tree structures have also been used in option pricing [38], Tree-CNN [39] and the classification and regression tree (CART) [40], our regression tree CNN estimates the GSD by computing the exponent (classification), calculating the mantissa (regression) and then combining them.