Examining the Deep Belief Network for Subpixel Unmixing with Medium Spatial Resolution Multispectral Imagery in Urban Environments

Abstract

1. Introduction

2. Materials and Methods

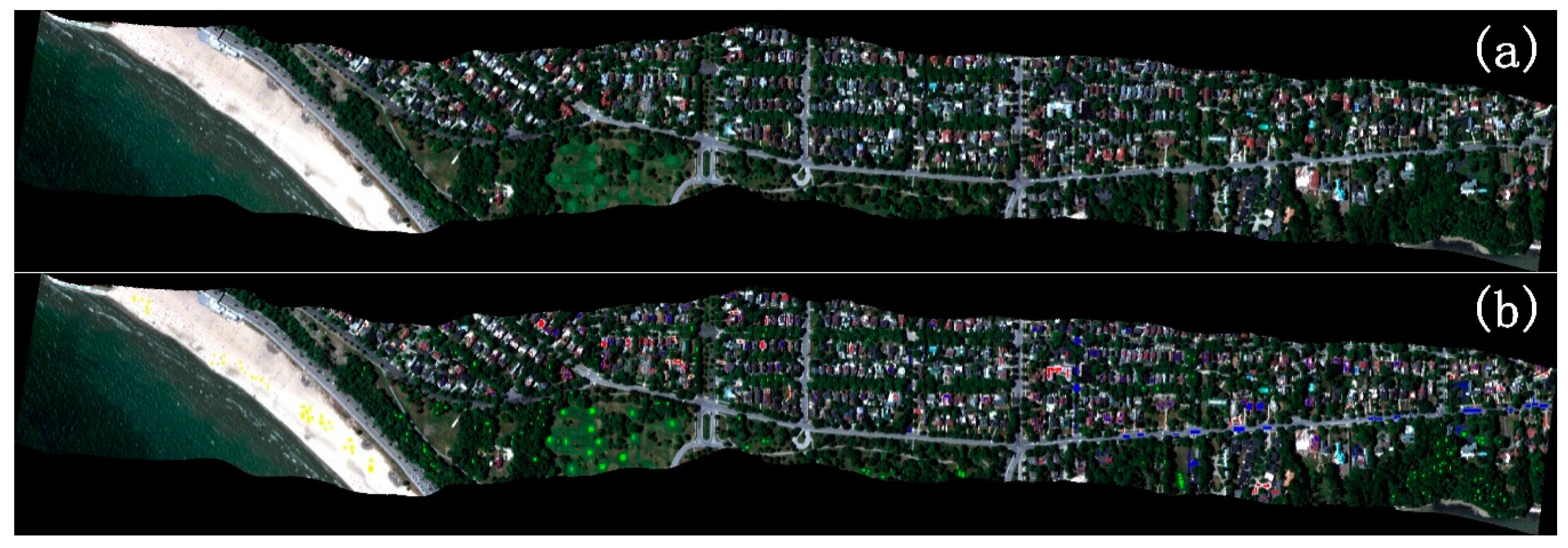

2.1. Study Area and Data Source

2.2. Subpixel Unmixing with DBN

2.3. Accuracy Assessment and Comparative Analysis

3. Results

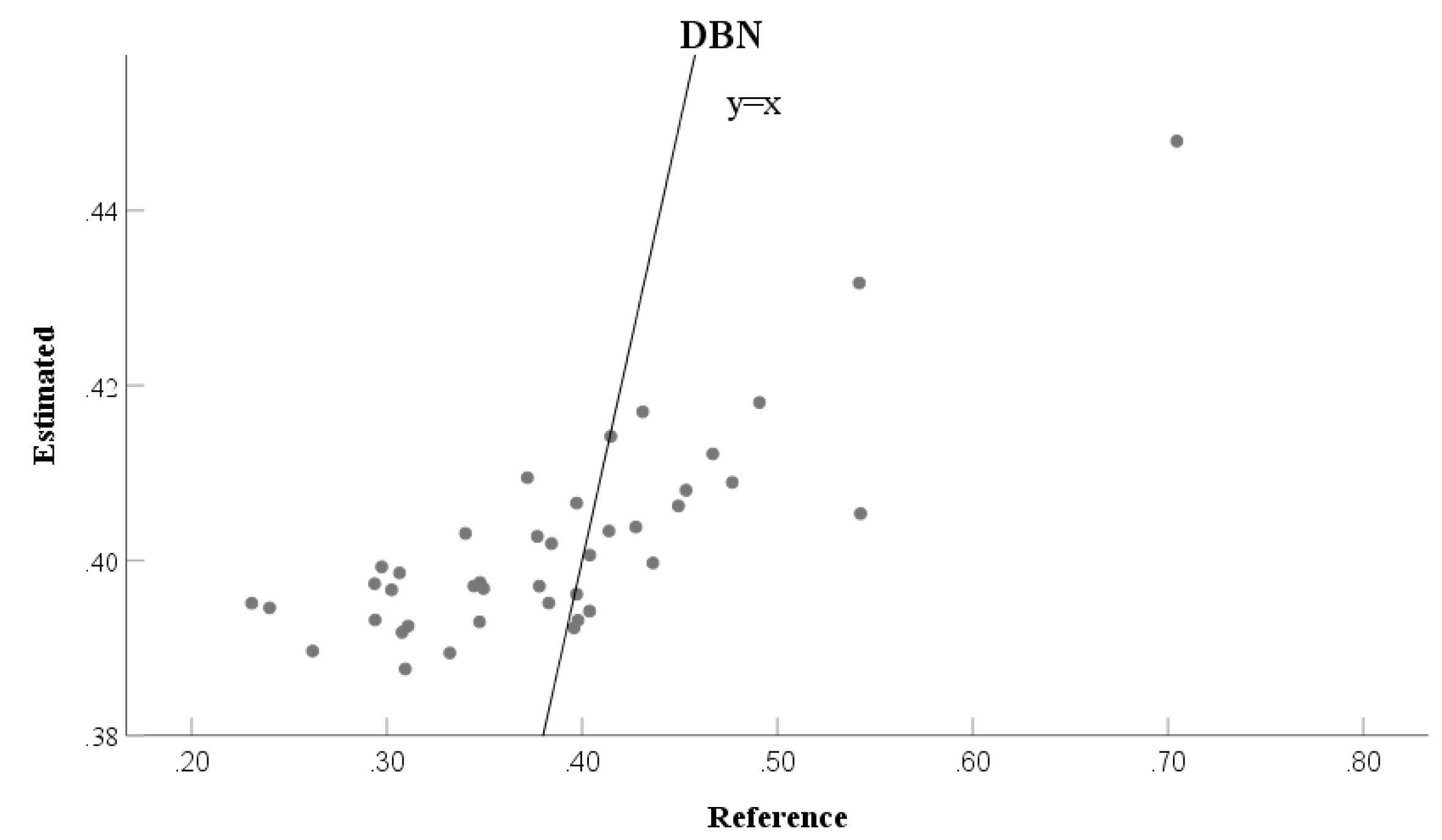

3.1. Subpixel Unmixing Using DBN

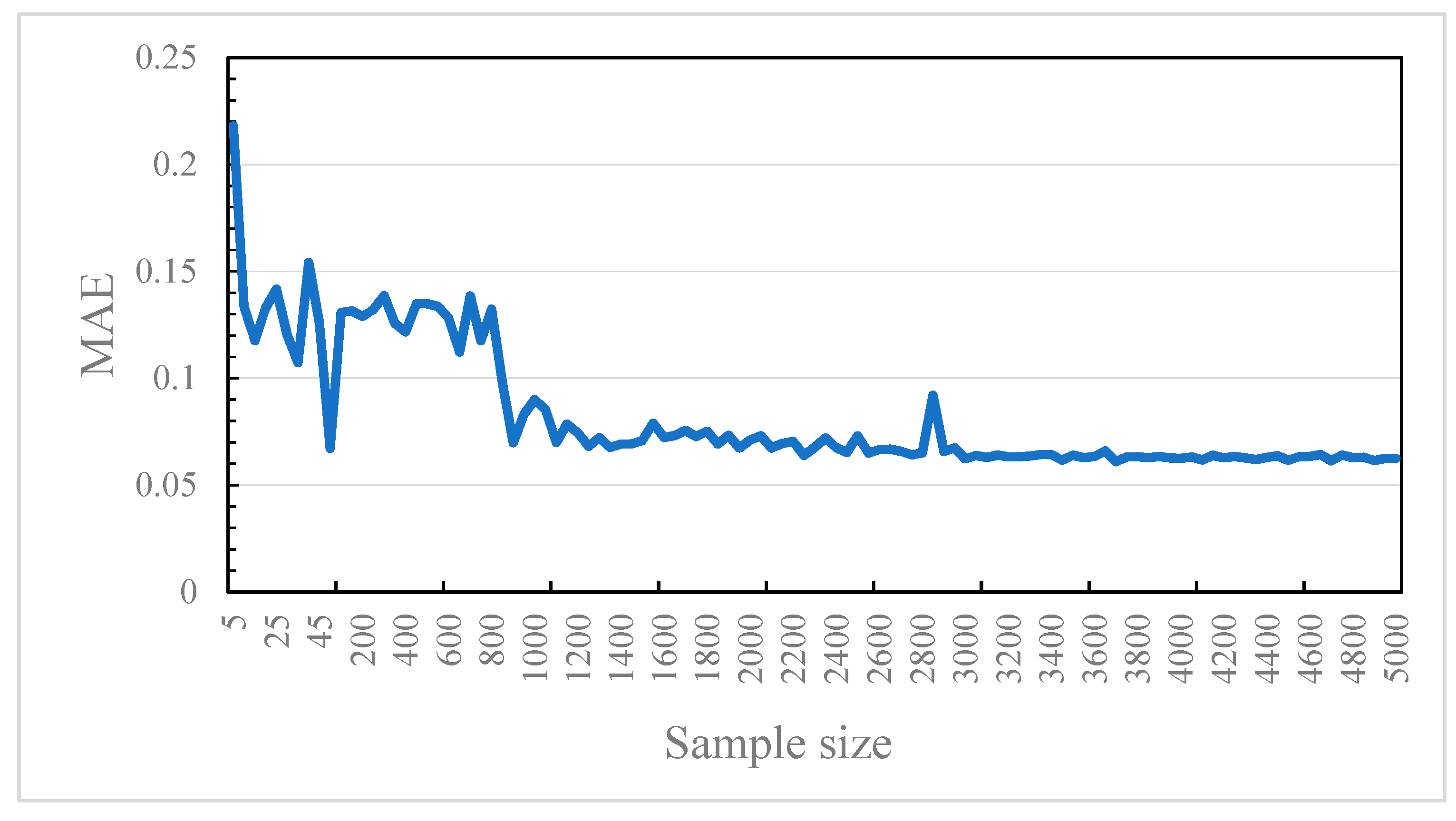

3.1.1. Sample Size

3.1.2. Number of RBM Layers

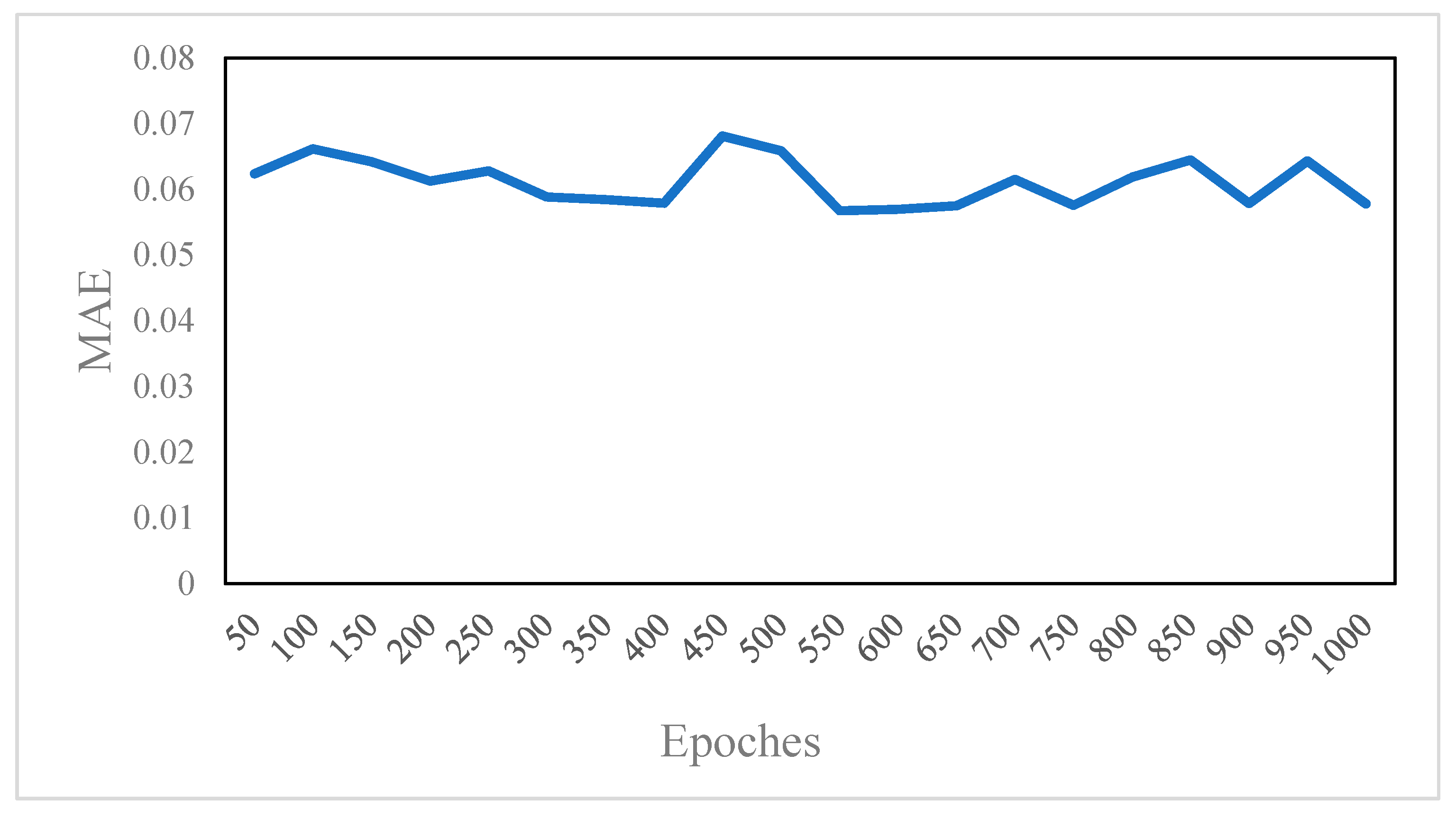

3.1.3. Number of Epochs

3.1.4. Batch Size

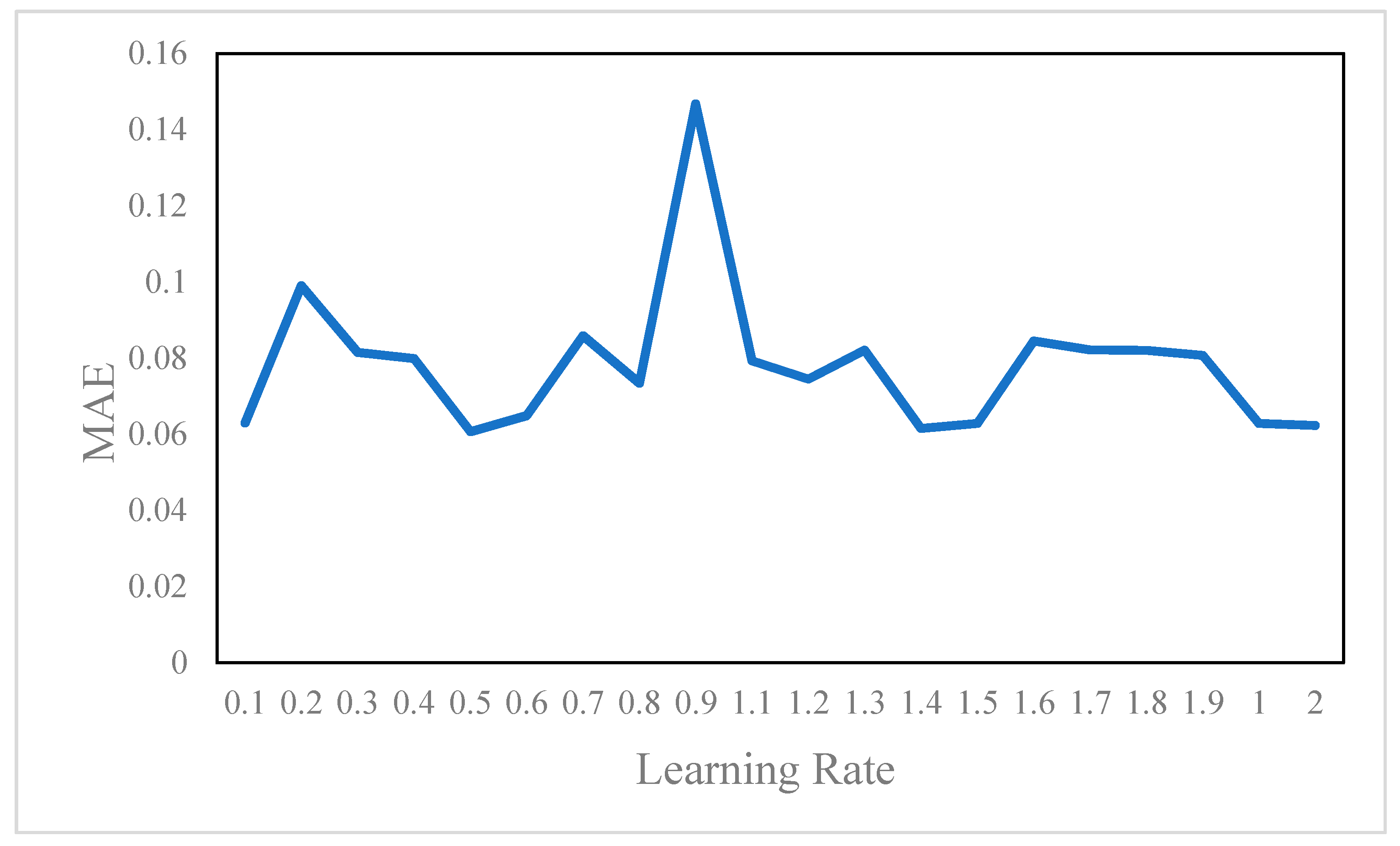

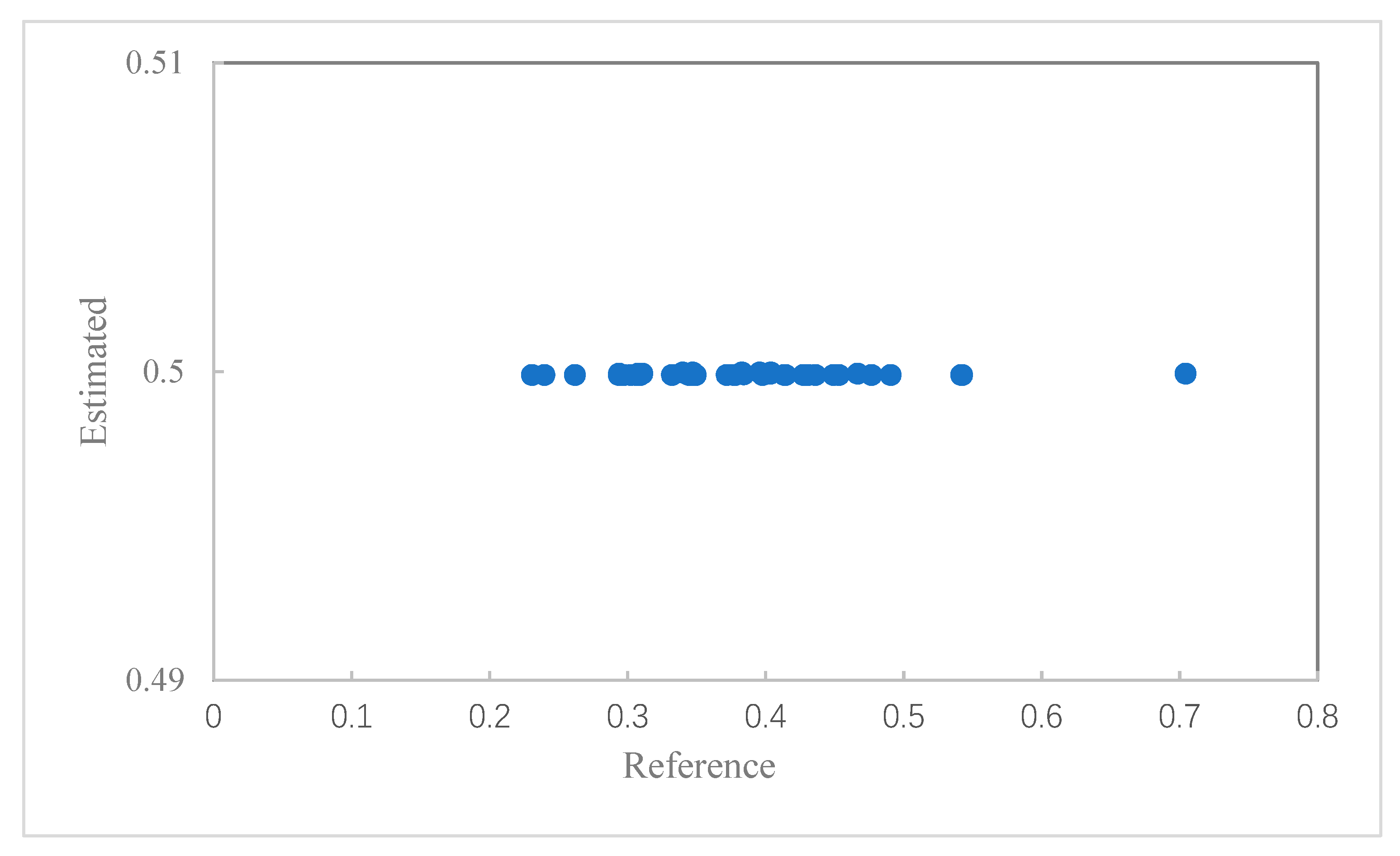

3.1.5. Learning Rate

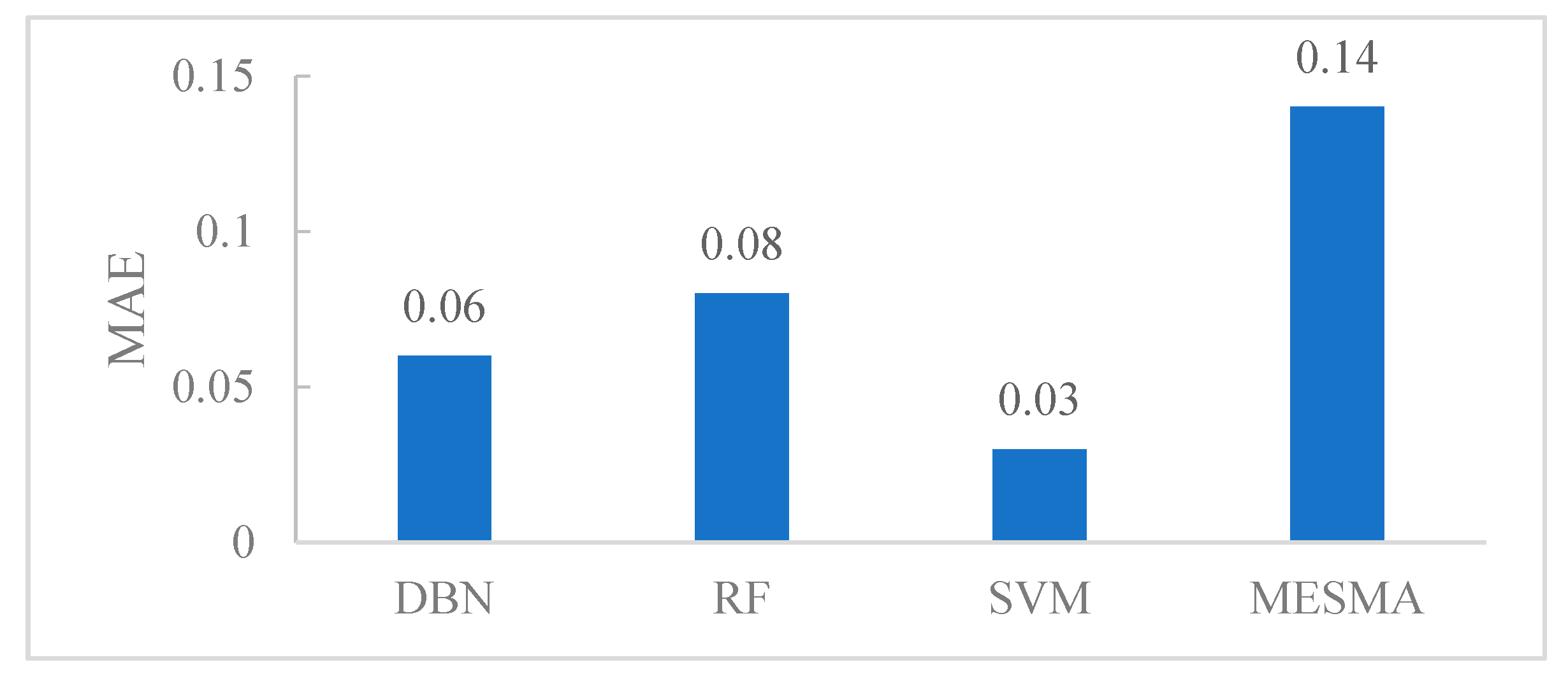

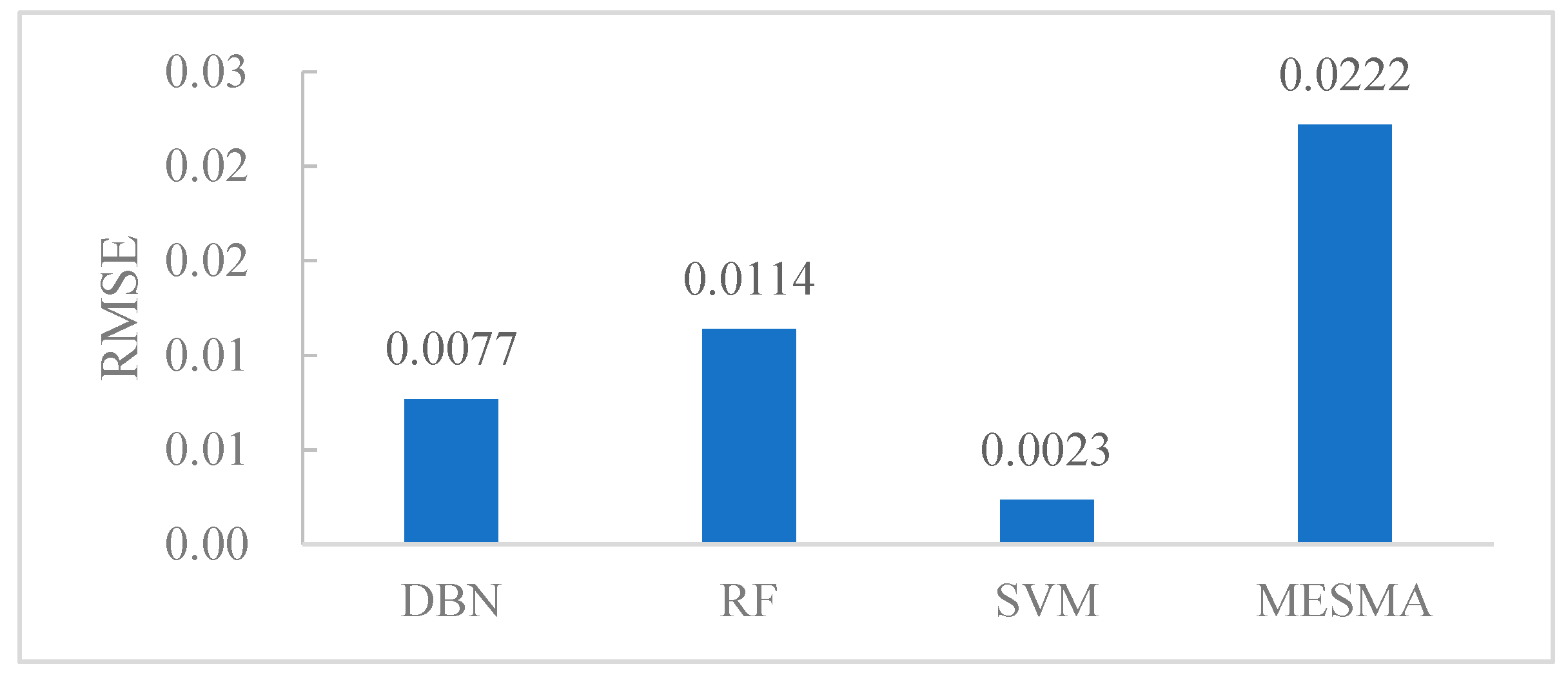

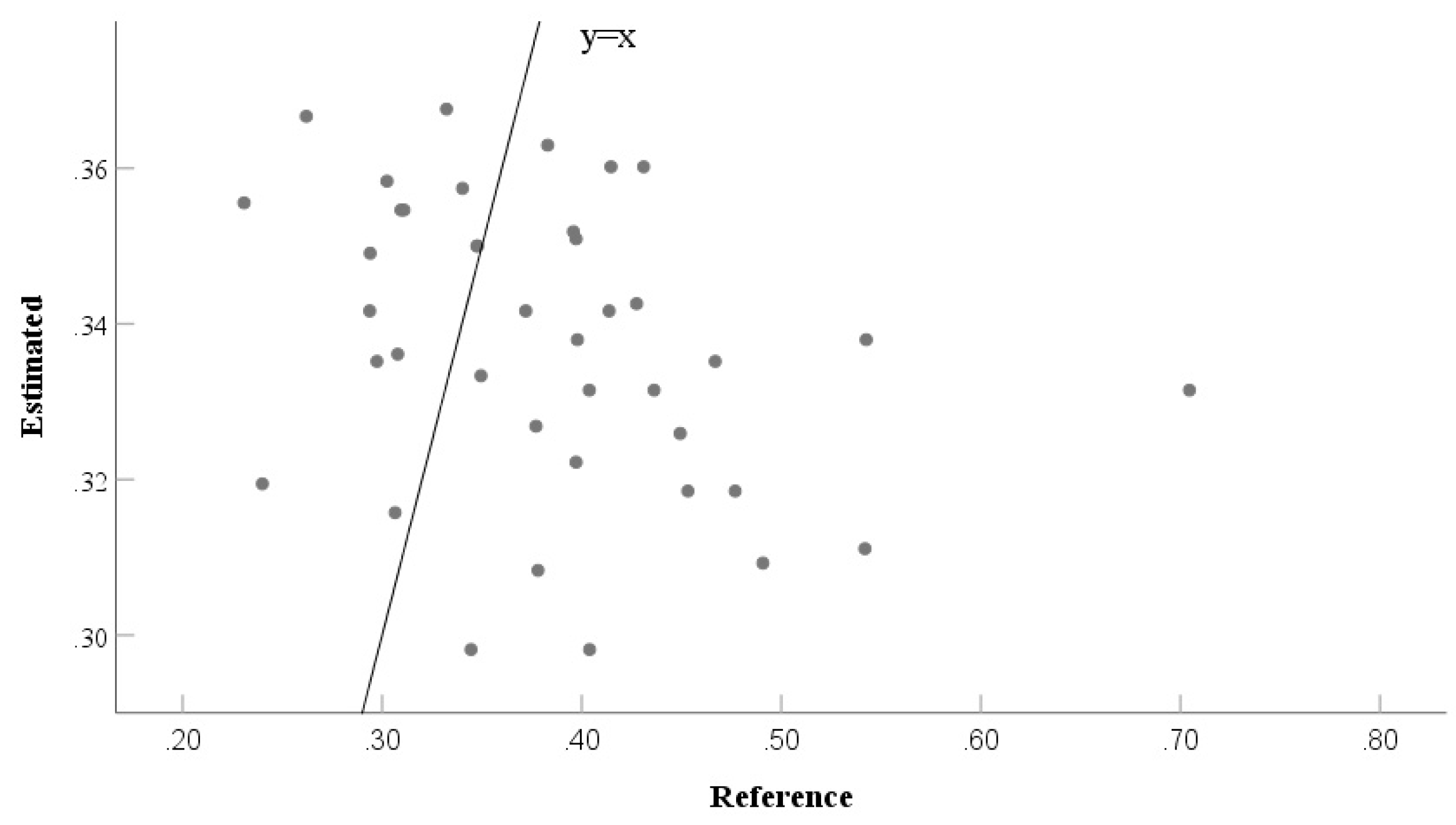

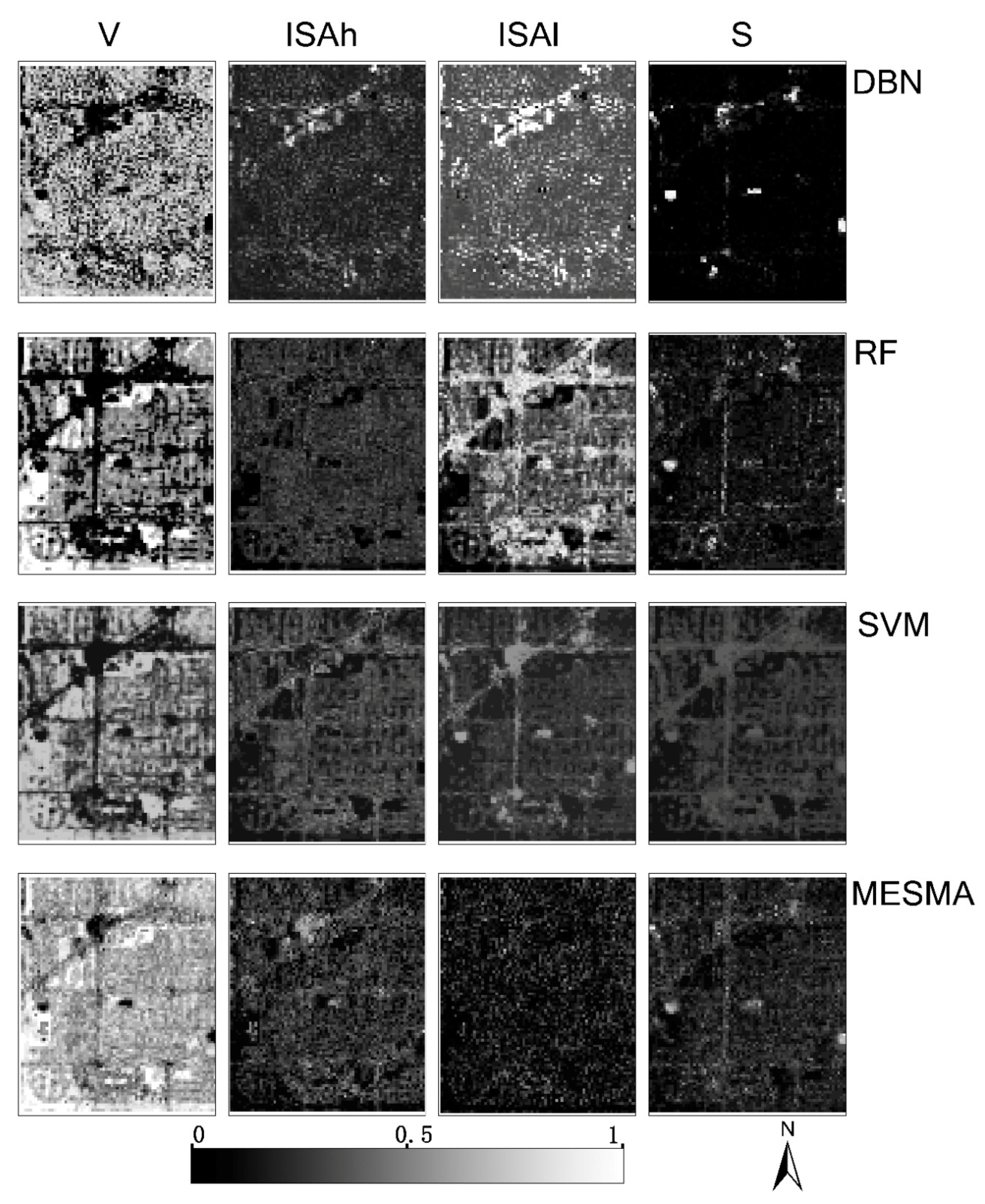

3.2. Accuracy Assessment and Comparative Analysis

4. Discussion

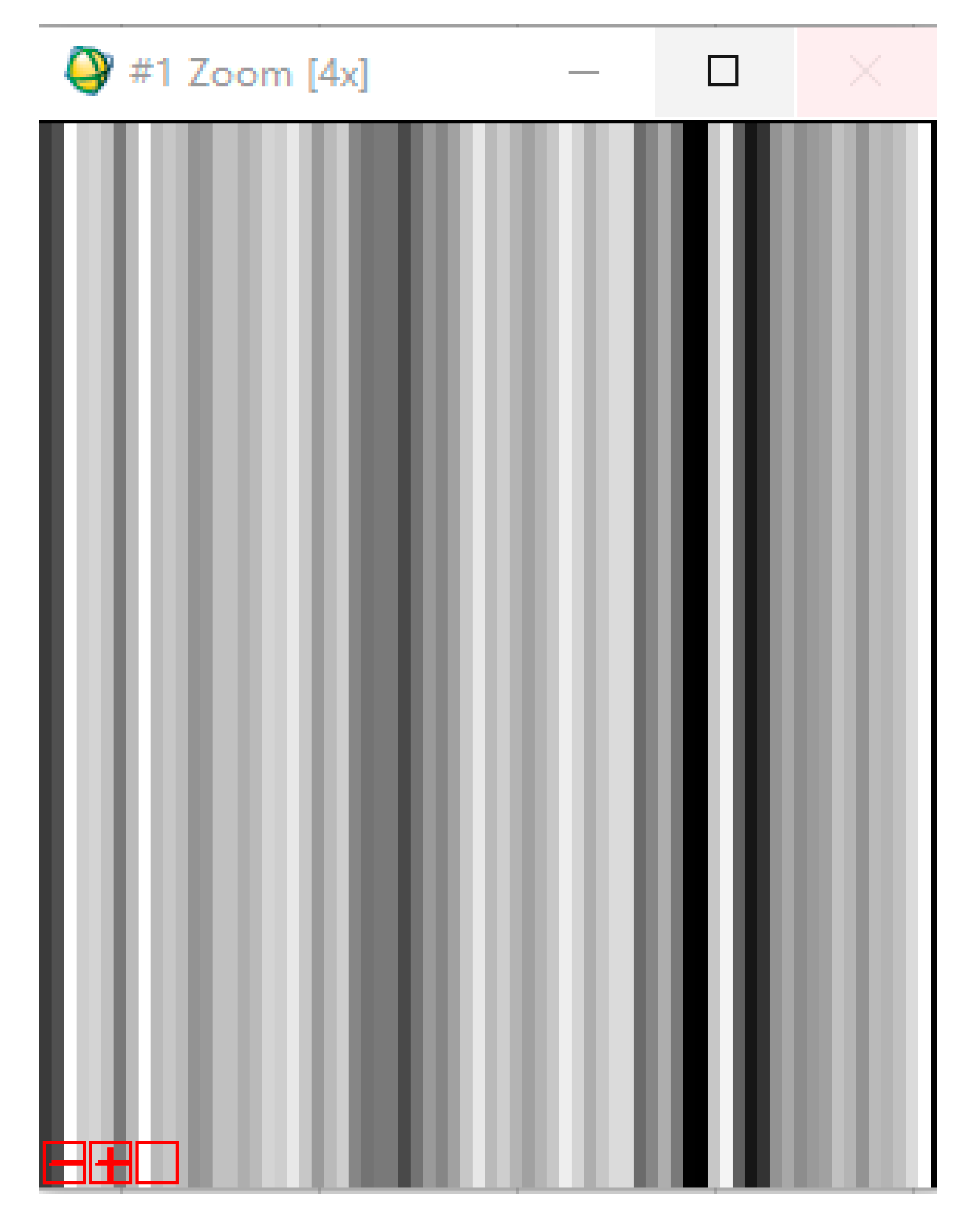

4.1. Application of DBN in Landsat Imagery

4.2. Application of DBN in Subpixel Unmixing

4.3. Comparisons with Other Models

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | DBN | RF | SVM | MESMA |
|---|---|---|---|---|
| Samples for each class | 3000 | 100 | 5 | 10 |
| Training Time (seconds) | 305.60 | 0.52 | 0.03 | / |
| Prediction Time (seconds) | 5.29 | 637.6 | 0.33 | 2.16 × 10⁶ |
| Total Time (seconds) | 310.89 | 638.12 | 0.36 | 2.16 × 10⁶ |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, Y.; Chen, R.; Wu, C. Examining the Deep Belief Network for Subpixel Unmixing with Medium Spatial Resolution Multispectral Imagery in Urban Environments. Remote Sens. 2019, 11, 1566. https://doi.org/10.3390/rs11131566
