Multi-Temporal SAR Data Large-Scale Crop Mapping Based on U-Net Model

Abstract

1. Introduction

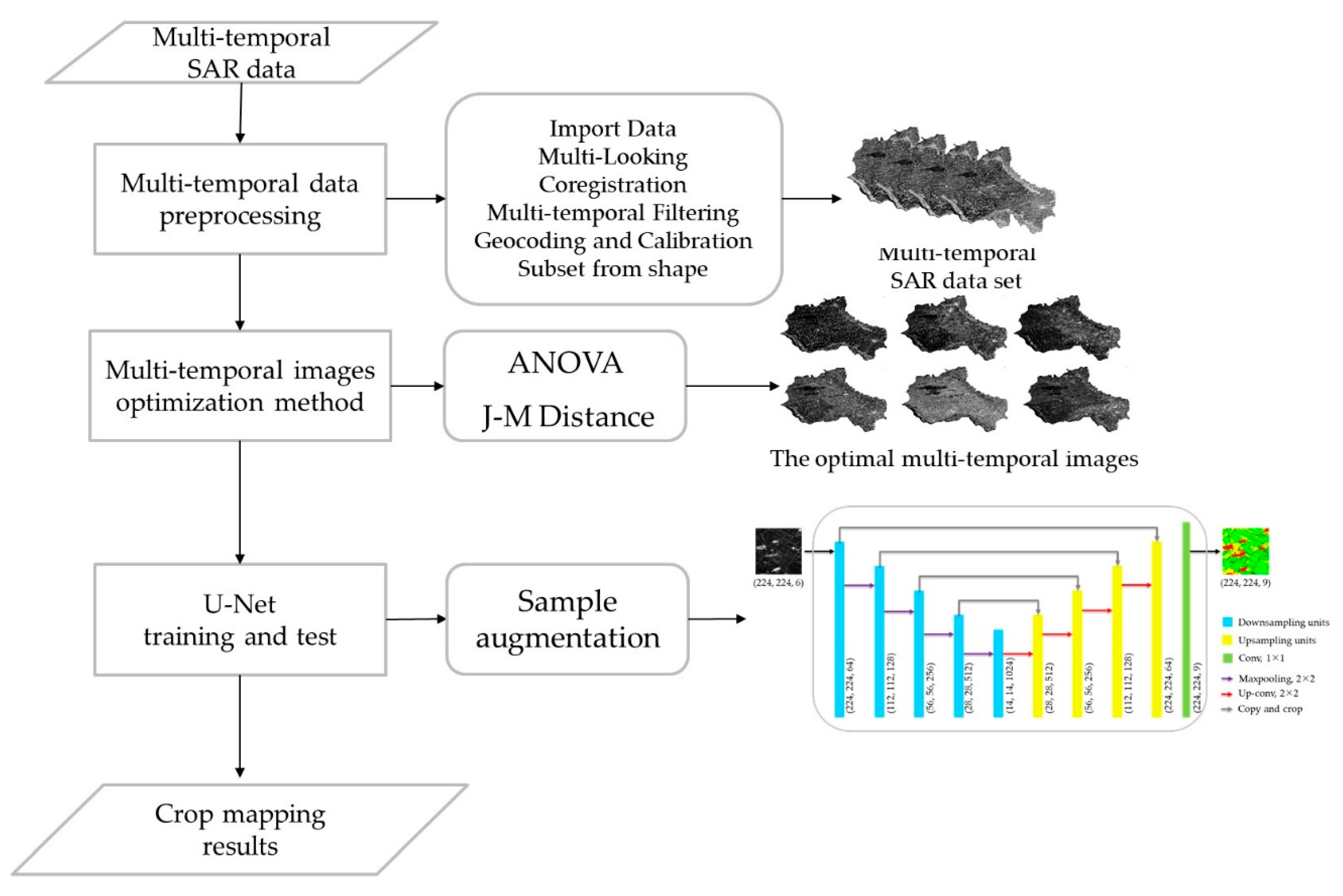

2. Methods

2.1. Multi-Temporal Images Optimization Method

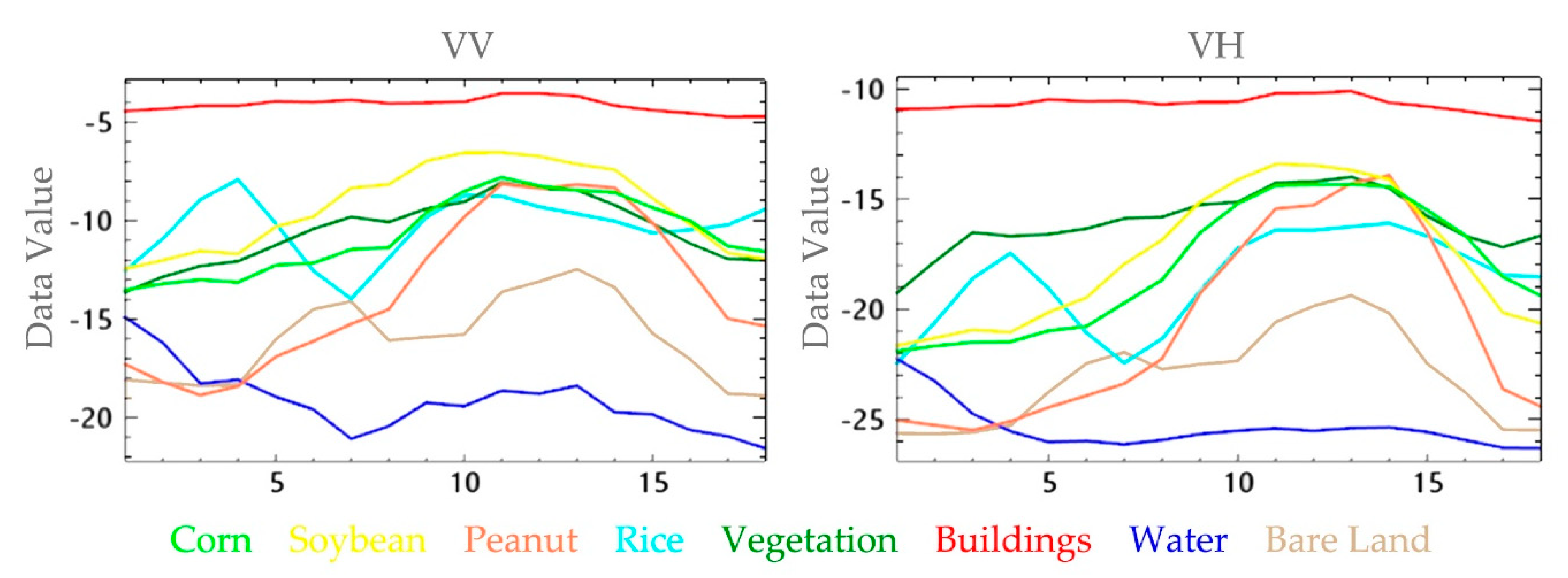

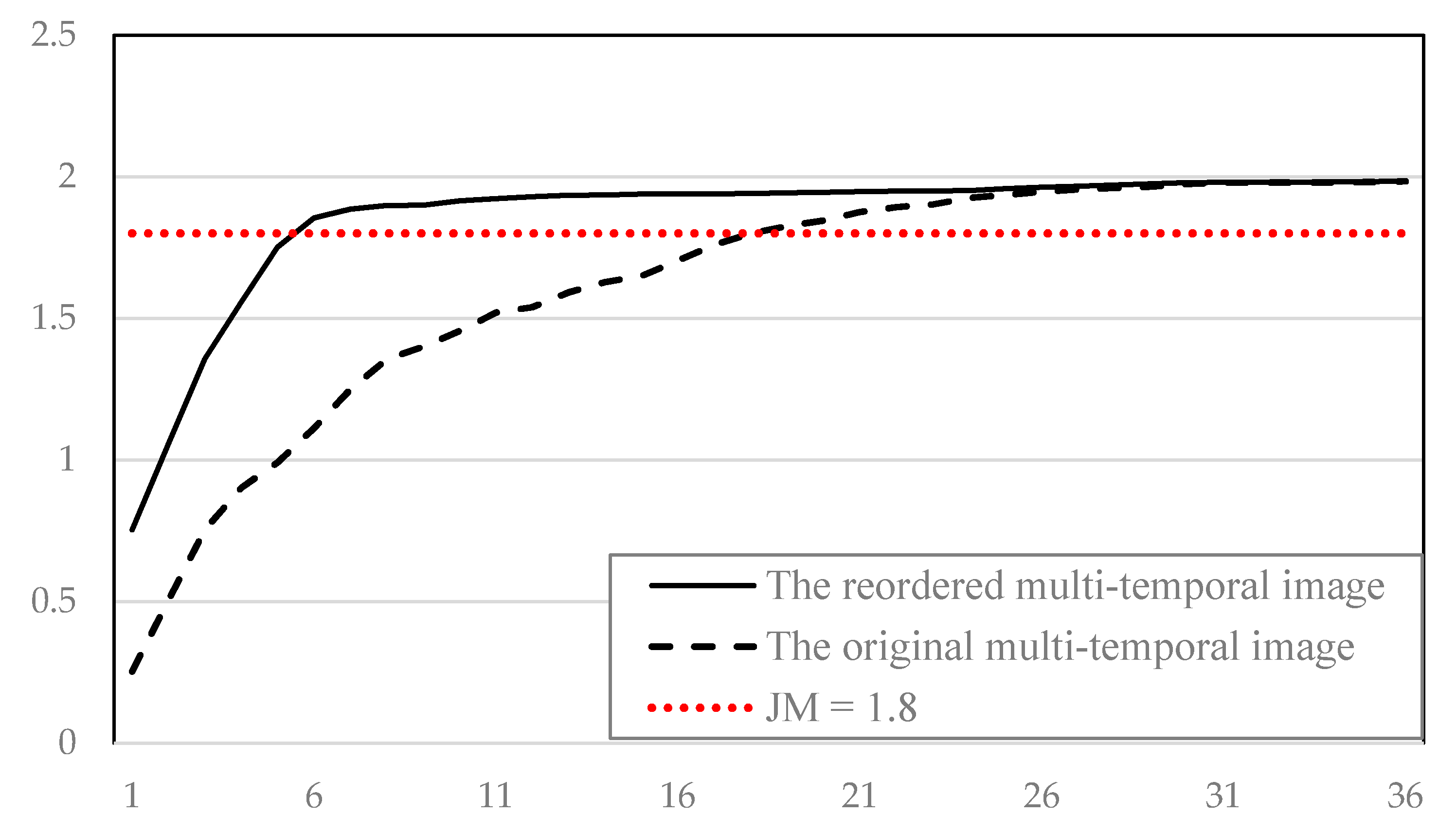

2.1.1. Analysis of Variance

2.1.2. Jeffries–Matusita Distance

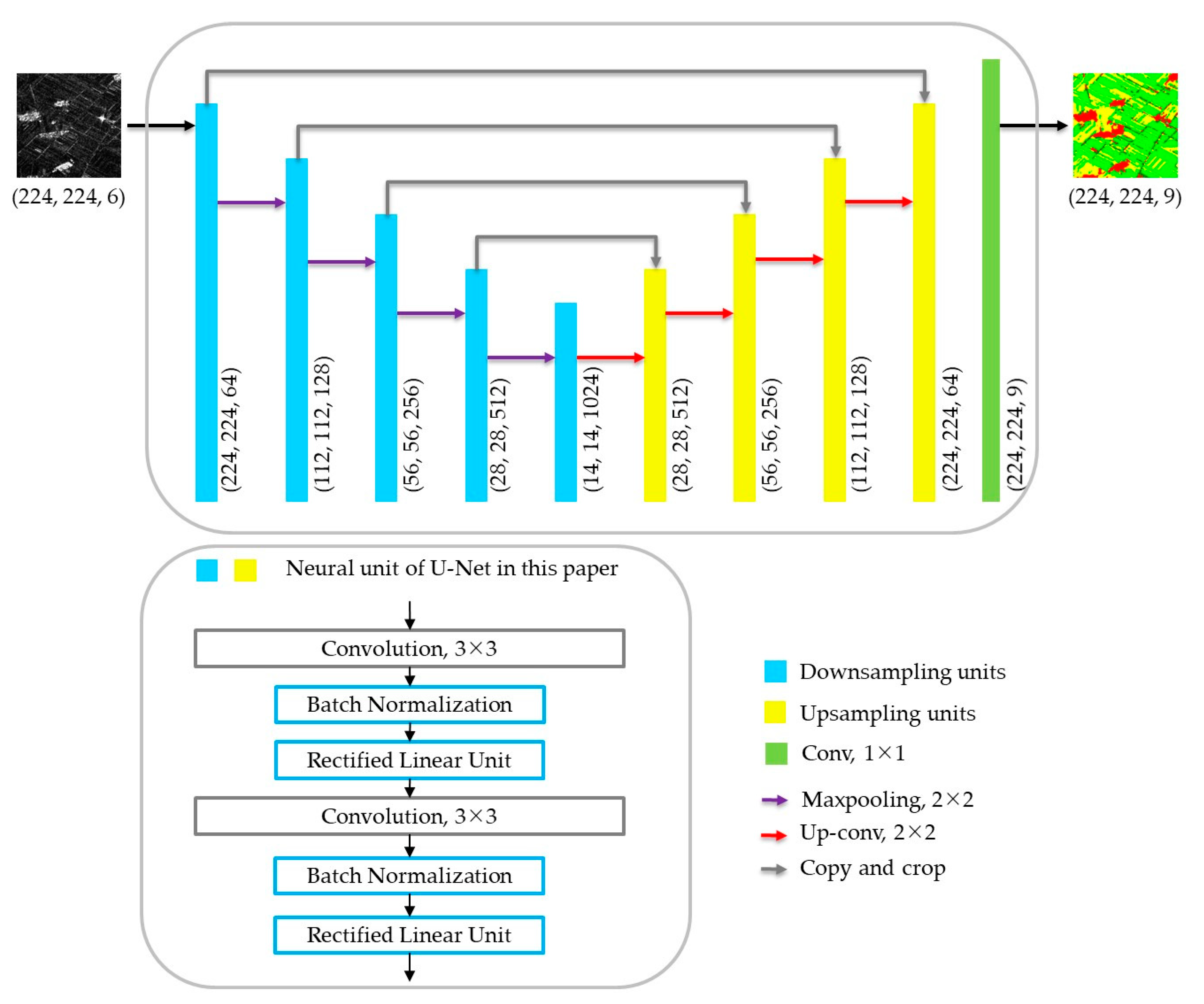

2.2. U-Net

- (1) Because crop acreages differ in actual production, the crop types in the training sample set are unevenly represented. To improve network training efficiency, this paper introduces the batch normalization (BN) algorithm [42] into the U-Net model by adding a BN layer between the convolution layer and the ReLU in each neural unit of U-Net. BN, proposed by Ioffe and Szegedy in 2015 [42], is a simple and efficient method for improving the performance of neural networks. During training, it acts on the input of each neural unit's activation function (such as the sigmoid or ReLU function) so that, within each batch of training samples, this input has a mean of 0 and a variance of 1. For a value in a batch of data, the initial BN formula is Formula (7). In Formula (7), BN constrains the input of the activation function to this standardized distribution, which limits the expressive capacity of the network layer. Therefore, a scale parameter γ and a shift parameter β are added to the BN formula; both γ and β are learnable parameters. The resulting batch normalization formula for deep learning networks is Formula (8).

- (2) In addition, this paper uses a U-Net with padding so that the output image has the same size as the input image; the padding value is 1.
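The two modifications above can be illustrated with a minimal sketch. It follows the standard form of batch normalization from Ioffe and Szegedy [42] (normalize by the batch mean and variance, then scale and shift), and it is not the authors' implementation. The function names, the stability constant `eps`, the 3 × 3 kernel size, and the sample values are assumptions made only for illustration.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift.

    gamma (scale) and beta (shift) stand for the two learnable parameters.
    eps is a small stability constant (an assumption, not from the paper).
    """
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # biased batch variance
    normalized = [(x - mean) / math.sqrt(var + eps) for x in batch]
    return [gamma * x_hat + beta for x_hat in normalized]


def relu(values):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]


def padded_output_size(size, kernel=3, padding=1, stride=1):
    """Spatial output size of a convolution with zero padding.

    The 3 x 3 kernel is an assumption (the usual U-Net choice).
    """
    return (size + 2 * padding - kernel) // stride + 1


# Hypothetical pre-activation values produced by a convolution layer.
conv_outputs = [0.2, 1.5, -0.7, 3.0, 0.9]

# Ordering described in the text: convolution -> BN -> ReLU.
activated = relu(batch_norm(conv_outputs))

# With padding 1 and a 3 x 3 kernel, a 224-pixel side stays 224 pixels.
same_size = padded_output_size(224)
```

With `gamma=1` and `beta=0` the output of `batch_norm` has approximately zero mean and unit variance; other values of the two parameters let the network recover a different scale and offset when that helps training.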

3. Study Area and Data

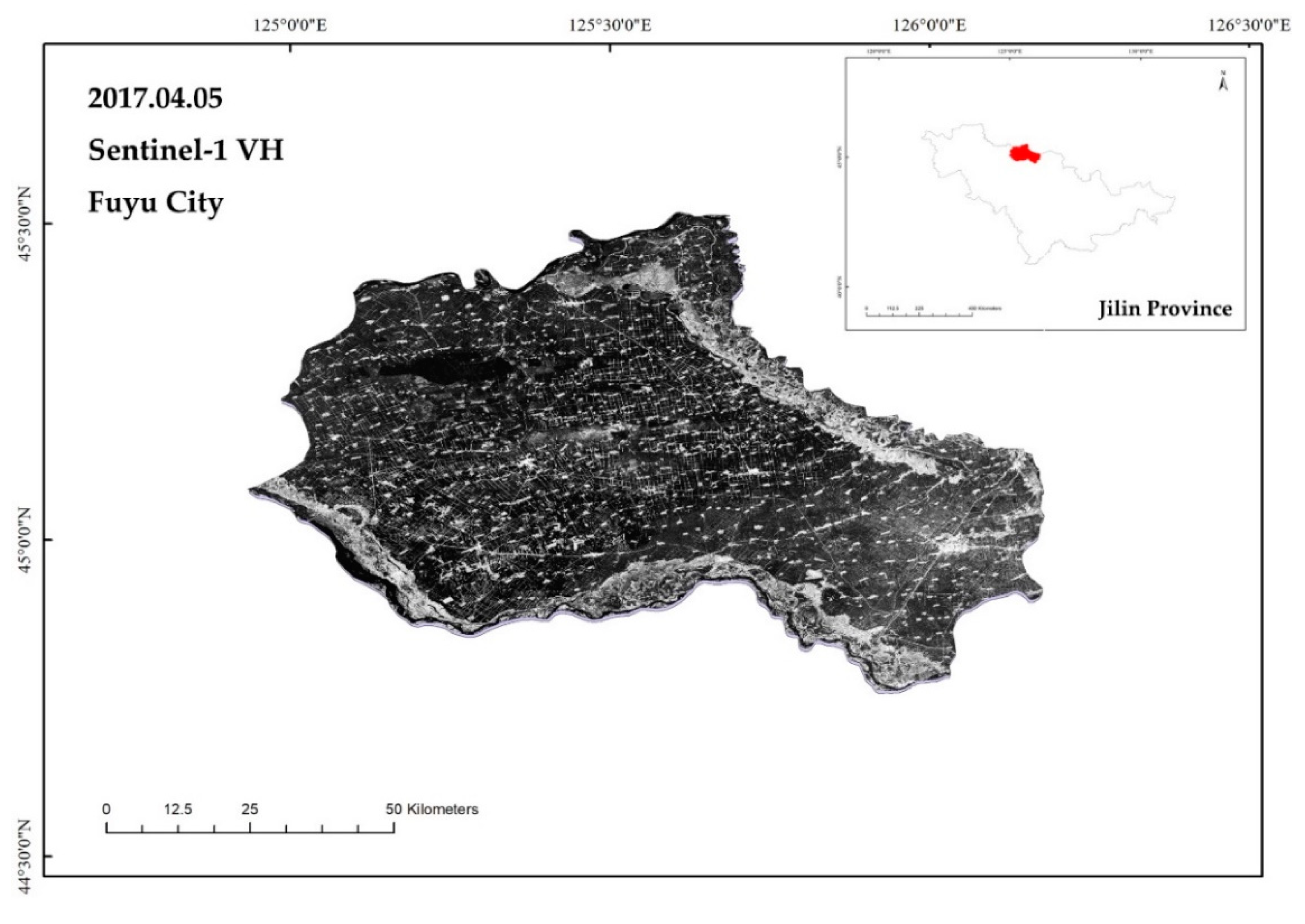

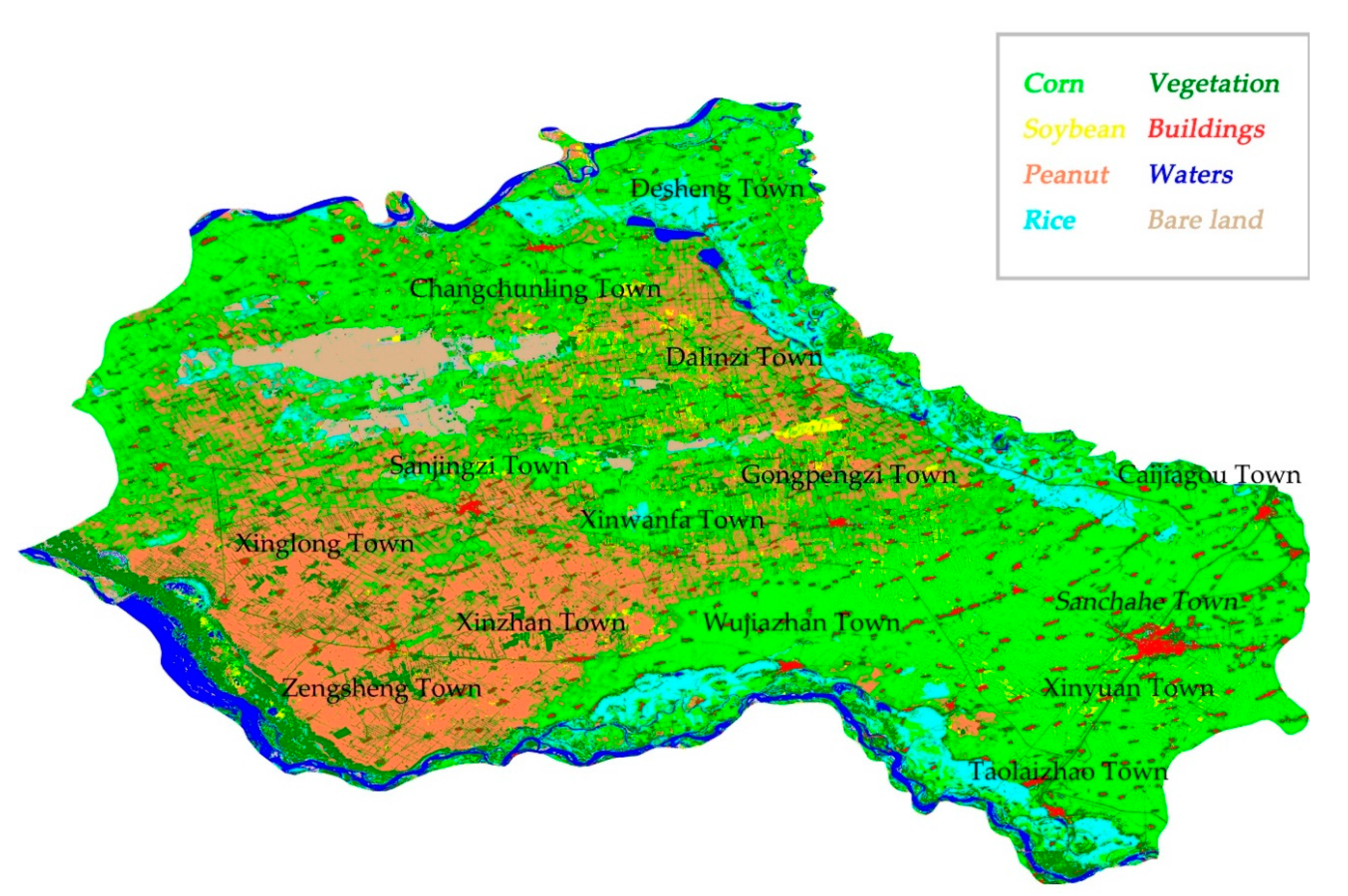

3.1. Fuyu City

3.2. Experimental Data

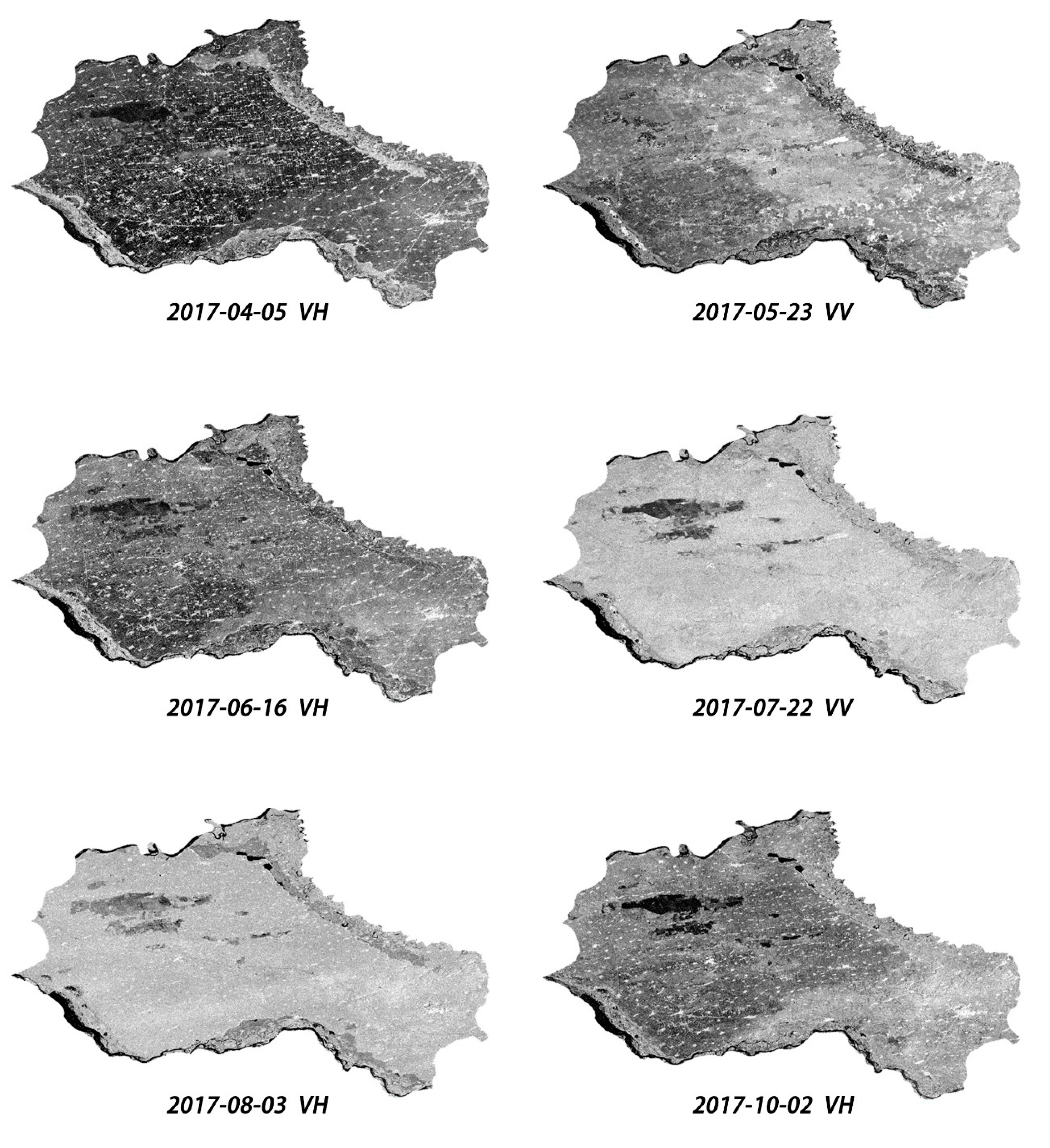

3.2.1. SAR Data

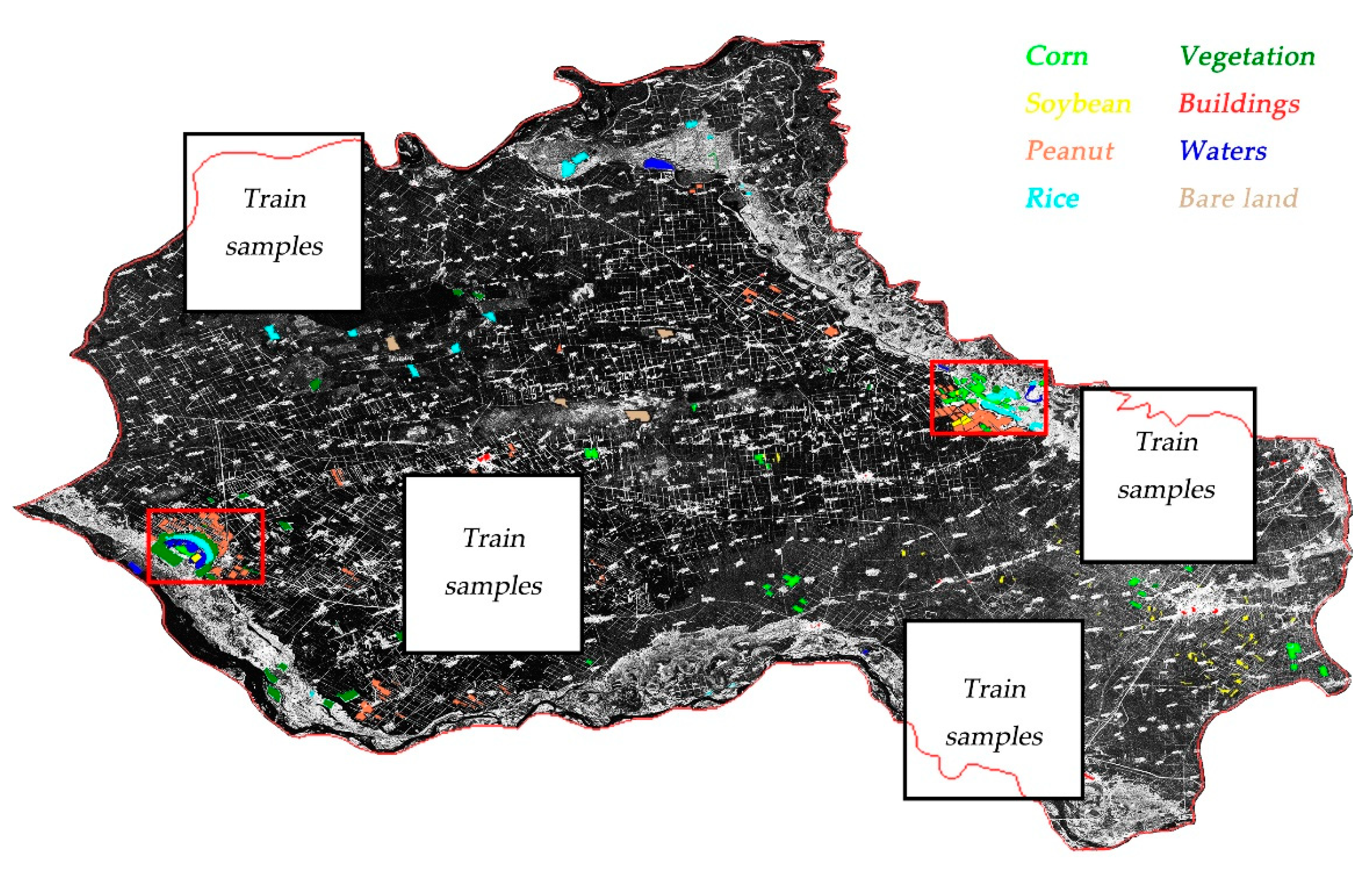

3.2.2. Ground Truth Data

4. Experimental Results

4.1. Results of Multi-Temporal Images Optimization

4.2. U-Net Model Training Details

4.2.1. Training Samples

4.2.2. Training Details

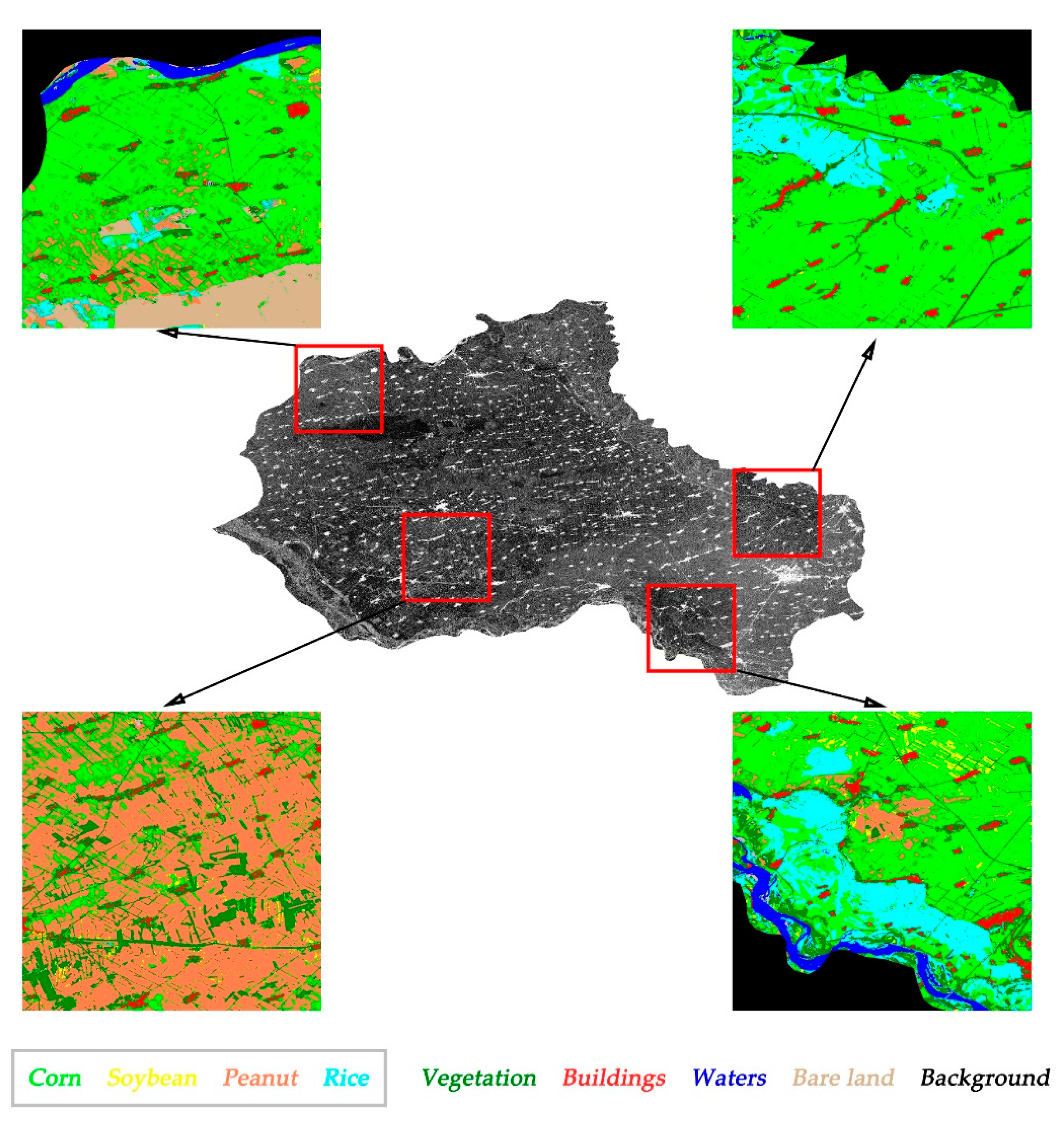

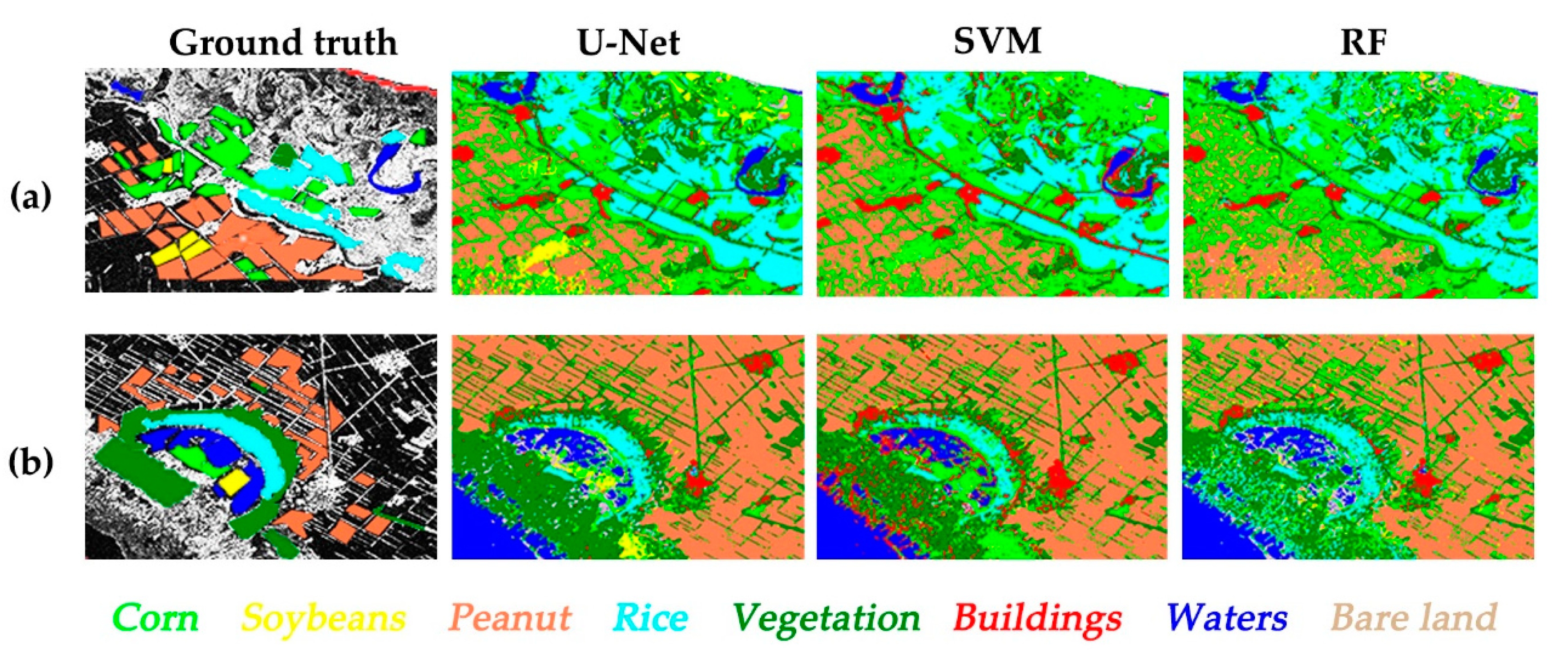

4.3. The Results of Crop Mapping

5. Discussion

6. Conclusions

- (a) A multi-temporal image optimization method combining ANOVA and J–M distance is proposed to reduce the dimensionality of the time-series images and to effectively reduce the redundancy of multi-temporal SAR data.

- (b) Geometric transformations such as cutting, flipping, and rotation increase the diversity of samples and effectively address the network overfitting problem caused by a small number of training samples.

- (c) The experimental results show that the multi-temporal SAR crop mapping method based on the U-Net model achieves higher classification accuracy than RF and SVM (overall accuracy of 85.06% versus 77.86% and 78.62%) under a complex crop planting structure.
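The geometric transformations named in conclusion (b) can be sketched as below. This is only an illustrative sketch on small single-channel tiles stored as nested lists; the function names and the toy tile are hypothetical, and the paper does not state which crop sizes or rotation angles were used.

```python
def flip_horizontal(tile):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in tile]


def flip_vertical(tile):
    """Reverse the row order (up-down flip)."""
    return [row[:] for row in tile[::-1]]


def rotate90(tile):
    """Rotate the tile clockwise by 90 degrees."""
    return [list(column) for column in zip(*tile[::-1])]


def crop(tile, top, left, size):
    """Cut a size x size window whose upper-left corner is (top, left)."""
    return [row[left:left + size] for row in tile[top:top + size]]


def augment(image, label):
    """Apply the same transform to image and label so pixels stay aligned."""
    transforms = (lambda t: [row[:] for row in t],
                  flip_horizontal, flip_vertical, rotate90)
    return [(transform(image), transform(label)) for transform in transforms]


# Hypothetical 3 x 3 backscatter tile and its class-label tile.
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
label = [[0, 0, 1], [0, 1, 1], [2, 2, 1]]
pairs = augment(image, label)
```

Applying each transform to the image and its label together is the important design point: for pixel-wise classification, a flipped image paired with an unflipped label would corrupt the training data.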

Author Contributions

Funding

Conflicts of Interest

References

1. Fang, X.M.; Liu, C. Striving for IT-based Agriculture Modernization: Challenges and Strategies. J. Xinjiang Normal Univ. 2018, 39, 68–74.

2. Qiong, H.U.; Wenbin, W.U.; Xiang, M.T.; Chen, D.; Long, Y.Q.; Song, Q.; Liu, Y.Z.; Miao, L.U.; Qiangyi, Y.U. Spatio-Temporal Changes in Global Cultivated Land over 2000–2010. Sci. Agric. Sin. 2018, 51, 1091–1105.

3. González-Sanpedro, M.C.; Le Toan, T.; Moreno, J.; Kergoat, L.; Rubio, E. Seasonal variations of leaf area index of agricultural fields retrieved from Landsat data. Remote Sens. Environ. 2008, 112, 810–824.

4. Mustafa, T.; Asli, O. Field-based crop classification using SPOT4, SPOT5, IKONOS and QuickBird imagery for agricultural areas: A comparison study. Int. J. Remote Sens. 2011, 32, 9735–9768.

5. Qi, Z.; Yeh, G.O.; Li, X.; Lin, Z. A novel algorithm for land use and land cover classification using RADARSAT-2 polarimetric SAR data. Remote Sens. Environ. 2012, 118, 21–39.

6. Ian, G. Polarimetric Radar Imaging: From basics to applications, by Jong-Sen Lee and Eric Pottier. Int. J. Remote Sens. 2012, 33, 333–334.

7. Gang, H.; Zhang, A.N.; Zhou, F.Q.; Brisco, B. Integration of optical and synthetic aperture radar (SAR) images to differentiate grassland and alfalfa in Prairie area. Int. J. Appl. Earth Observ. Geoinf. 2014, 28, 12–19.

8. Xu, L.; Zhang, H.; Wang, C.; Zhang, B.; Liu, M. Corn mapping using multi-temporal fully and compact SAR data. In Proceedings of the 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; pp. 1–4.

9. Kun, J.; Qiangzi, L.; Yichen, T.; Bingfang, W.; Feifei, Z.; Jihua, M. Crop classification using multi-configuration SAR data in the North China Plain. Int. J. Remote Sens. 2012, 33, 170–183.

10. Skakun, S.; Kussul, N.; Shelestov, A.Y.; Lavreniuk, M.; Kussul, O. Efficiency Assessment of Multitemporal C-Band Radarsat-2 Intensity and Landsat-8 Surface Reflectance Satellite Imagery for Crop Classification in Ukraine. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 3712–3719.

11. Le Toan, T.; Laur, H.; Mougin, E.; Lopes, A. Multitemporal and dual-polarization observations of agricultural vegetation covers by X-band SAR images. Eur. J. Nutr. 2016, 56, 1339–1346.

12. Dan, W.; Lin, H.; Chen, J.S.; Zhang, Y.Z.; Zeng, Q.W.; Gong, P.; Howarth, P.J.; Xu, B.; Ju, W. Application of multi-temporal ENVISAT ASAR data to agricultural area mapping in the Pearl River Delta. Int. J. Remote Sens. 2010, 31, 1555–1572.

13. Villa, P.; Stroppiana, D.; Fontanelli, G.; Azar, R.; Brivio, A.P. In-Season Mapping of Crop Type with Optical and X-Band SAR Data: A Classification Tree Approach Using Synoptic Seasonal Features. Remote Sens. 2015, 7, 12859–12886.

14. Zhu, J.; Qiu, X.; Pan, Z.; Zhang, Y.; Lei, B. Projection Shape Template-Based Ship Target Recognition in TerraSAR-X Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 222–226.

15. Cheng, Q.; Wang, C.C.; Zhang, J.C. Land cover classification using RADARSAT-2 full polarmetric SAR data. Eng. Surv. Mapp. 2015, 24, 61–65.

16. Lucas, R.; Rebelo, L.M.; Fatoyinbo, L.; Rosenqvist, A.; Itoh, T.; Shimada, M.; Simard, M.; Souzafilho, P.W.; Thomas, N.; Trettin, C. Contribution of L-band SAR to systematic global mangrove monitoring. Mar. Freshw. Res. 2014, 65, 589–603.

17. Lian, H.; Qin, Q.; Ren, H.; Du, J.; Meng, J.; Chen, D. Soil moisture retrieval using multi-temporal Sentinel-1 SAR data in agricultural areas. Trans. Chin. Soc. Agric. Eng. 2016, 32, 142–148.

18. Abdikan, S.; Sekertekin, A.; Ustunern, M.; Balik Sanli, F.; Nasirzadehdizaji, R. Backscatter analysis using multi-temporal Sentinel-1 SAR data for crop growth of maize in Konya Basin, Turkey. Int. Arch. Photogramm. Remote Sens. Spat. Inform. Sci. 2018, 42, 9–13.

19. Chue Poh, T.; Hong Tat, E.; Hean Teik, C. Agricultural crop-type classification of multi-polarization SAR images using a hybrid entropy decomposition and support vector machine technique. Int. J. Remote Sens. 2011, 32, 7057–7071.

20. Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

21. Lijing, B.U.; Huang, P.; Shen, L. Integrating color features in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2197–2216.

22. Bengio, Y. Learning Deep Architectures for AI. Found. Trends Mach. Learn. 2009, 2, 1–127.

23. Hirose, A. Complex-Valued Neural Networks: Theories and Applications; World Scientific: London, UK, 2003; pp. 181–204.

24. Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

25. Castro, J.D.B.; Feitoza, R.Q.; Rosa, L.C.L.; Diaz, P.M.A.; Sanches, I.D.A. A Comparative Analysis of Deep Learning Techniques for Sub-Tropical Crop Types Recognition from Multitemporal Optical/SAR Image Sequences. In Proceedings of the 2017 30th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Niteroi, Brazil, 17–20 October 2017; pp. 382–389.

26. Ndikumana, E.; Ho Tong Minh, D.; Baghdadi, N.; Courault, D.; Hossard, L. Deep Recurrent Neural Network for Agricultural Classification using multitemporal SAR Sentinel-1 for Camargue, France. Remote Sens. 2018, 10, 1217.

27. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 39, 640–651.

28. Li, R.; Liu, W.; Yang, L.; Sun, S.; Hu, W.; Zhang, F.; Li, W. DeepUNet: A Deep Fully Convolutional Network for Pixel-Level Sea-Land Segmentation. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 11, 3954–3982.

29. Wang, Y.; Wang, C.; Zhang, H. Integrating H-A-α with fully convolutional networks for fully PolSAR classification. In Proceedings of the 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 18–21 May 2018; pp. 1–4.

30. An, Q.; Pan, Z.; You, H. Ship Detection in Gaofen-3 SAR Images Based on Sea Clutter Distribution Analysis and Deep Convolutional Neural Network. Sensors 2018, 18, 334.

31. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

32. Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

33. Xu, Y.; Wu, L.; Xie, Z.; Chen, Z. Building Extraction in Very High Resolution Remote Sensing Imagery Using Deep Learning and Guided Filters. Remote Sens. 2018, 10, 1461.

34. Dixon, W.J.; Massey, F.J., Jr. Introduction to Statistical Analysis, 2nd ed.; McGraw-Hill: New York, NY, USA, 1957; pp. 11–50.

35. Wu, S.; Li, W.; Shi, Y. Detection for steganography based on Hilbert Huang Transform. In Proceedings of the SPIE-International Conference on Photonics, 3D-imaging, and Visualization, Guangzhou, China, 28 October 2011; Volume 82, pp. 44–49.

36. Amster, S.J. Beyond ANOVA, Basics of Applied Statistics. Technometrics 1986, 29, 387.

37. Niel, T.G.V.; McVicar, T.R.; Datt, B. On the relationship between training sample size and data dimensionality: Monte Carlo analysis of broadband multi-temporal classification. Remote Sens. Environ. 2005, 98, 468–480.

38. Adam, E.; Mutanga, O. Spectral discrimination of papyrus vegetation (Cyperus papyrus L.) in swamp wetlands using field spectrometry. ISPRS J. Photogramm. Remote Sens. 2009, 64, 612–620.

39. Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A Review on Deep Learning Techniques Applied to Semantic Segmentation. arXiv Preprint, 2017; arXiv:1704.06857.

40. Wu, G.; Shao, X.; Guo, Z.; Chen, Q.; Yuan, W.; Shi, X.; Xu, Y.; Shibasaki, R. Automatic Building Segmentation of Aerial Imagery Using Multi-Constraint Fully Convolutional Networks. Remote Sens. 2018, 10, 407.

41. Bai, Y.; Mas, E.; Koshimura, S. Towards Operational Satellite-Based Damage-Mapping Using U-Net Convolutional Network: A Case Study of 2011 Tohoku Earthquake-Tsunami. Remote Sens. 2018, 10, 1626.

42. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; Volume 37, pp. 448–456.

43. Ouyang, L.; Mao, D.; Wang, Z.; Li, H.; Man, W.; Jia, M.; Liu, M.; Zhang, M.; Liu, H. Analysis crops planting structure and yield based on GF-1 and Landsat8 OLI images. Trans. Chin. Soc. Agric. Eng. 2017, 33, 10.

44. Xing, L.; Niu, Z.; Wang, H.; Tang, X.; Wang, G. Study on Wetland Extraction Using Optimal Features and Monthly Synthetic Landsat Data. Geogr. Geo-Inf. Sci. 2018, 34, 80–86.

45. Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1998, 37, 270–279.

| Num. | Date | Satellite | Polarization | Orbit Direction |
|---|---|---|---|---|
| 1 | 4 February 2017 | S1B | VV/VH | Descend Left-looking |
| 2 | 24 March 2017 | S1B | VV/VH | Descend Left-looking |
| 3 | 5 April 2017 | S1B | VV/VH | Descend Left-looking |
| 4 | 17 April 2017 | S1B | VV/VH | Descend Left-looking |
| 5 | 29 April 2017 | S1B | VV/VH | Descend Left-looking |
| 6 | 23 May 2017 | S1B | VV/VH | Descend Left-looking |
| 7 | 4 June 2017 | S1B | VV/VH | Descend Left-looking |
| 8 | 16 June 2017 | S1B | VV/VH | Descend Left-looking |
| 9 | 28 June 2017 | S1B | VV/VH | Descend Left-looking |
| 10 | 10 July 2017 | S1B | VV/VH | Descend Left-looking |
| 11 | 22 July 2017 | S1B | VV/VH | Descend Left-looking |
| 12 | 3 August 2017 | S1B | VV/VH | Descend Left-looking |
| 13 | 15 August 2017 | S1B | VV/VH | Descend Left-looking |
| 14 | 8 September 2017 | S1B | VV/VH | Descend Left-looking |
| 15 | 20 September 2017 | S1B | VV/VH | Descend Left-looking |
| 16 | 2 October 2017 | S1B | VV/VH | Descend Left-looking |
| 17 | 14 October 2017 | S1B | VV/VH | Descend Left-looking |
| 18 | 26 October 2017 | S1B | VV/VH | Descend Left-looking |

| Num. | Date | Satellite | Sensor | Orbit Number |
|---|---|---|---|---|
| 1 | 23 July 2017 | Landsat-8 | OLI | 118/29 |
| 2 | 24 August 2017 | Landsat-8 | OLI | 118/29 |
| 3 | 9 September 2017 | Landsat-8 | OLI | 118/29 |

| Class | Plot Count | Pixel Count | Acreage (km²) | Area Percent (%) |
|---|---|---|---|---|
| Corn | 185 | 37,607 | 9.13 | 16.99 |
| Peanut | 153 | 39,181 | 9.51 | 17.70 |
| Soybeans | 118 | 13,444 | 3.26 | 6.07 |
| Rice | 124 | 38,890 | 9.44 | 17.56 |
| Buildings | 30 | 7818 | 1.90 | 3.53 |
| Vegetation | 88 | 45,477 | 11.04 | 20.54 |
| Waters | 20 | 10,872 | 2.64 | 4.91 |
| Bare land | 30 | 28,120 | 6.83 | 12.70 |
| Total | 748 | 221,409 | 53.76 | 100 |

| Type | SS | Df | MS | F | α | Fα |
|---|---|---|---|---|---|---|
| Between group | 0.120312 | 7 | 0.017187 | 53.40586 | 0.05 | 2.140 |
| Within group | 0.023171 | 72 | 0.000322 | | | |
| Total | 0.143483 | 79 | | | | |

SS: sum of squares; Df: degrees of freedom; MS: mean square.
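As a worked check of the ANOVA table, the sketch below recomputes the mean squares and the F statistic from the tabulated sums of squares and degrees of freedom, then compares F with the tabulated critical value. The variable names are illustrative; the inputs are the rounded table values, so the result agrees with the reported F only to about two decimal places.

```python
# Values taken from the ANOVA table (rounded as printed there).
ss_between, df_between = 0.120312, 7
ss_within, df_within = 0.023171, 72
f_critical = 2.140  # tabulated critical value at alpha = 0.05

# Mean square = sum of squares / degrees of freedom.
ms_between = ss_between / df_between
ms_within = ss_within / df_within

# F statistic = between-group mean square / within-group mean square.
f_statistic = ms_between / ms_within

# The null hypothesis of equal group means is rejected when F exceeds F_alpha.
significant = f_statistic > f_critical

print(f"MS between = {ms_between:.6f}")
print(f"MS within  = {ms_within:.6f}")
print(f"F = {f_statistic:.2f}, significant at alpha = 0.05: {significant}")
```

Because F is far larger than the critical value, the between-group differences are significant at the 0.05 level.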

| Value | Class | Pixel Count | Percent |
|---|---|---|---|
| 0 | Background | 194,626 | 4.87 |
| 1 | Buildings | 123,579 | 3.09 |
| 2 | Vegetation | 401,397 | 10.03 |
| 3 | Waters | 64,622 | 1.62 |
| 4 | Soybeans | 96,656 | 2.42 |
| 5 | Rice | 356,618 | 8.92 |
| 6 | Corn | 1,809,786 | 45.24 |
| 7 | Peanut | 806,050 | 20.15 |
| 8 | Bare land | 146,666 | 3.67 |
| | Total | 4,000,000 | 100.00 |

| Training Environment | |
|---|---|
| CPU | Core i7 |
| GPU | NVIDIA GTX 1080Ti |
| Platform | TensorFlow |
| Training Parameters | |
| Input size | 224 × 224 × 6 |
| Batch size | 5 |
| Learning rate | 0.001 |
| Total number of samples | 7860 |
| Epochs | 10 |
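The training parameters in the table can be collected in a small configuration object, which also makes the implied number of optimizer steps explicit. This is a hypothetical sketch: the class and field names are illustrative, and it assumes that all 7860 samples are used for training in every epoch, which the table does not state.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Training parameters as listed in the table (names are illustrative)."""
    input_shape: tuple = (224, 224, 6)  # height, width, channels
    batch_size: int = 5
    learning_rate: float = 0.001
    num_samples: int = 7860
    epochs: int = 10

    @property
    def steps_per_epoch(self) -> int:
        # Assumes every sample is seen once per epoch.
        return math.ceil(self.num_samples / self.batch_size)

    @property
    def total_steps(self) -> int:
        return self.steps_per_epoch * self.epochs


config = TrainingConfig()
print(config.steps_per_epoch, config.total_steps)
```

Under that assumption, one epoch corresponds to 1572 batches of 5 samples and the full run to 15,720 parameter updates.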

| Class | RF Commission (%) | RF Omission (%) | SVM Commission (%) | SVM Omission (%) | U-Net Commission (%) | U-Net Omission (%) |
|---|---|---|---|---|---|---|
| Corn | 40.67 | 11.28 | 45.96 | 7.66 | 32.32 | 5.82 |
| Soybeans | 33.22 | 30.76 | 26.20 | 19.70 | 28.38 | 12.78 |
| Peanut | 20.52 | 21.34 | 12.98 | 13.97 | 12.43 | 14.64 |
| Rice | 12.59 | 30.43 | 0.71 | 37.23 | 1.48 | 24.52 |
| OA | 77.8582% | | 78.6219% | | 85.0616% | |
| Kappa | 0.7381 | | 0.7487 | | 0.8234 | |

| Predicted (rows) / Ground Truth (columns) | Build | Vegetation | Soybeans | Rice | Water | Peanut | Corn | Bare Land | Total |
|---|---|---|---|---|---|---|---|---|---|
| Build | 7805 | 424 | 3 | 84 | 0 | 0 | 10 | 0 | 8326 |
| Vegetation | 8 | 37,683 | 147 | 2257 | 0 | 108 | 166 | 211 | 40,414 |
| Soybeans | 0 | 791 | 11,744 | 246 | 0 | 1075 | 607 | 1927 | 16,372 |
| Rice | 1 | 296 | 78 | 29,451 | 1 | 1 | 55 | 9 | 29,795 |
| Water | 0 | 6 | 58 | 22 | 10,856 | 0 | 0 | 121 | 11,062 |
| Peanut | 0 | 334 | 34 | 2163 | 0 | 33,514 | 1297 | 919 | 38,190 |
| Corn | 4 | 4170 | 1208 | 4489 | 0 | 4423 | 35,462 | 2622 | 52,336 |
| Bare land | 0 | 1773 | 172 | 160 | 15 | 60 | 10 | 22,311 | 24,422 |
| Total | 7818 | 45,477 | 13,426 | 38,890 | 10,872 | 39,181 | 37,607 | 28,120 | 221,409 |
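The accuracy measures reported in the tables above (commission error, omission error, overall accuracy, and Kappa) are all derived from a confusion matrix. The sketch below shows the standard definitions on a small hypothetical three-class matrix, using the same orientation as the table (rows are predictions, columns are ground truth). The function name, class names, and toy counts are assumptions for illustration and are not the paper's data.

```python
def accuracy_metrics(matrix, classes):
    """Compute OA, Kappa, and per-class commission/omission errors.

    matrix[i][j] = pixels predicted as class i whose ground truth is class j.
    """
    n = len(classes)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(matrix[i]) for i in range(n)]  # predicted totals
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # truth totals
    diagonal = [matrix[i][i] for i in range(n)]  # correctly classified pixels

    overall = sum(diagonal) / total
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1 - expected)

    per_class = {}
    for i, name in enumerate(classes):
        per_class[name] = {
            # Share of pixels assigned to the class that belong elsewhere.
            "commission": 1 - diagonal[i] / row_totals[i],
            # Share of true pixels of the class that were missed.
            "omission": 1 - diagonal[i] / col_totals[i],
        }
    return overall, kappa, per_class


# Hypothetical three-class example (not the paper's data).
toy_classes = ["A", "B", "C"]
toy_matrix = [
    [50, 5, 0],
    [10, 40, 5],
    [0, 5, 35],
]
overall, kappa, per_class = accuracy_metrics(toy_matrix, toy_classes)
print(f"OA = {overall:.4f}, Kappa = {kappa:.4f}")
```

Commission error is read along a row (what was wrongly included in a predicted class) and omission error along a column (what was missed from a true class), which is why the row/column orientation of the matrix matters.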

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wei, S.; Zhang, H.; Wang, C.; Wang, Y.; Xu, L. Multi-Temporal SAR Data Large-Scale Crop Mapping Based on U-Net Model. Remote Sens. 2019, 11, 68. https://doi.org/10.3390/rs11010068
