1. Introduction

Landsat images have the longest continuous record of the Earth surface with fine resolution of 30 m (denoted by fine-resolution image henceforth). It has been widely applied by the scientific community [

1]. However, due to the trade-off between spatial and temporal resolution, Landsat sensor revisits the same region every 16 days, which limits its applicability in studies. For example, fine-resolution vegetation phenology mapping [

2] and crop type mapping [

3] require both high spatial and temporal resolution images. For regions with frequent cloud contamination, the time interval may be longer to achieve an image that can be used to extract information on the instantaneous field of view [

4]. To overcome this constraint, spatiotemporal image fusion methods have been developed to synthesize time series of Landsat-like images by blending low spatial resolution but high temporal resolution images (henceforth denoted by coarse-resolution image) such as the MODerate resolution Imaging Spectroradiometer (MODIS) and the Medium Resolution Imaging Spectrometer (MERIS) [

5,

6,

7,

8,

9,

10,

11,

12]. In the remote sensing image fusion domain, spatiotemporal fusion is different from the superresolution methods which enhance the spatial resolution by combining single-frame or multi-frame images [

13], and different from the pansharpening methods (referred to as spatial-spectral fusion) which enhance the spatial resolution and retain spectral resolution of multi-spectral bands with a simultaneously acquired panchromatic band [

14]. Spatiotemporal fusion methods aim to generate fine-resolution images with frequent temporal coverage [

15]. Inputs of these methods are one or more Landsat and coarse-resolution image pairs (L-C pairs) observed on the same dates (henceforth denoted by base date,

), and one coarse-resolution image observed on the prediction date (

). Output is a Landsat-like image on

, which captures the temporal change during

and

and retains the spatial details. The synthetic time series Landsat-like images have been effectively applied in vegetation monitoring [

16,

17], crop types mapping [

18], fine-resolution land surface temperature monitoring [

19,

20], daily evapotranspiration mapping [

21,

22], and water environment monitoring [

23,

24].

Typically, in technical literature, the spatiotemporal image fusion methods can be categorized into five types [

15]: spatial-unmixing based, weight-function based, learning based, Bayesian based, and hybrid methods. The method proposed by Zurita-Milla et al. [

6], the STDFA [

25] and the STRUM [

26] are typical spatial-unmixing based methods. The theoretical basis of these methods is linear spectral mixture of coarse pixels [

6]. Different from the spectral-unmixing, which computes the abundance with known spectra of endmembers, spatial-unmixing computes the spectra of endmembers with known abundance. Spatial-unmixing based methods have the advantage of retrieving accurate spectra of endmembers [

27]. However, their shortcoming is the synthetic image lacks intra-class spectral variability and spatial details [

27]. Because the methods assume each fine pixel contains only one class type and the computed spectra of endmembers are directly assigned to a class image to synthesize the fine-resolution image [

6]. The spatial and temporal adaptive reflectance fusion model (STARFM) [

5], the Enhanced STARFM (ESTARFM) [

7], and the spatial and temporal nonlocal filter-based fusion model (STNLFFM) [

28] are typical weight-function based methods. A fine pixel value is estimated by weighting the information of its surrounding similar pixels in these methods. Spatial details are better retained than spatial-unmixing based methods. However, the spectral mixture of coarse pixels are not taken into account in the weighted model [

27]. Learning-based methods generally contain two processes, i.e., the learning and applying of the model [

15]. Dictionary-pair learning [

8,

9], regression tree [

29], and deep convolutional network [

30] have been adopted in learning-based methods with good performance. However, selection of learning samples has significant influence on the accuracy of learning-based methods, and the computation expense is also high to train a model [

31]. Some relatively simple but efficient learning-based methods were also proposed. Hazaymeh and Hassan [

32,

33] proposed a spatiotemporal image-fusion model (STI-FM) which builds the relationship between coarse-resolution images observed on

and

, and applies the model to Landsat image on

to synthesize Landsat-like image. STI-FM is capable of enhancing the temporal resolution of both land surface temperature and reflectance images of Landsat-8. Kwan et al. [

34,

35] proposed the HCM which first learns a pixel-to-pixel transformation matrix based on coarse-resolution images and then applies the matrix to Landsat image. Both STI-FM and HCM are simple and computationally efficient with high performance [

34]. Bayesian based methods synthesize images in a probabilistic manner based on the Bayesian estimation theory. By making use of the advantage of multivariate arguments, it is flexible to predict temporal changes [

36]. However, fusion accuracy is sensitive to the model assumptions and parameter estimations. Spectral mixture of coarse pixels is also not fully incorporated in the methods [

36]. By combining advantages of two or more methods of the above four categories, hybrid methods were proposed [

15]. The FSDAF [

10] is a typical hybrid method. It performs temporal and spatial predictions by spatial-unmixing and Thin Plate Spline (TPS) interpolator separately, and combines two predictions with the idea of weight-function based methods. Hybrid methods could be more flexible in dealing with complicated scenarios, e.g., heterogeneous landscapes [

37], abrupt land cover type changes [

10] and shape changes [

12]. However, accuracy improvement is at the cost of complicated processing steps and computational expense.

Spatiotemporal image fusion methods should capture temporal change of land surface. Because land surface changes in different ways across different regions and periods, each fusion method may have its own suitable situations [

26]. There is probably no universally best method. Simple methods can outperform complicated methods if the conditions are suitable [

38]. It is valuable to diversify the fusion methods to give more choices in applications [

34]. It is also meaningful to improve existing fusion methods. Aiming to fix the shortcoming of the spatial-unmixing based method proposed by Zurita-Milla et al. [

6], Gevaert and García-Haro [

26] proposed STRUM. Different from the method proposed by Zurita-Milla et al. [

6] which directly performs the spatial-unmixing to coarse-resolution image on

, STRUM first calculates a coarse-resolution temporal change image by subtracting coarse-resolution image on

from the image on

. Then, the difference image is disaggregated by spatial-unmixing to synthesize the fine-resolution temporal change image, which is further added to Landsat image on

to synthesize Landsat-like image on

. Therefore, the synthetic image inherits spectral variability and spatial details from Landsat image on

to some degree [

26]. The STRUM outperforms STARFM and original spatial-unmixing method, even when there are few reference Landsat images [

26]. However, because classification image is applied in spatial-unmixing process, the downscaled fine-resolution temporal change image in STRUM still lacks intra-class spectral variability and spatial details.

Because of the heterogeneity of land surface, more than one land cover types could exist in a Landsat pixel at the scale of 30 m. Mixed pixels commonly occur in Landsat image [

39]. The hard-classification image is unable to represent the varied combinations of endmembers in the pixels [

39]. Generally, land surface varies gradually even on the boundaries of different land cover types. The abundance image, which records area fractions of endmembers in the pixel, could report continuous gradations and retain the spatial structure of land surface, thus providing a more accurate representation of land surface than class image [

39]. Therefore, it is more appropriate to mix spectra of endmembers with fine-resolution abundance image than to assign it to classification image. For the reasons above, we proposed Improved STRUM (ISTRUM) by taking advantages of abundance images in order to tackle the aforementioned shortcoming of STRUM. By surveying the studies on the spatial-unmixing based methods [

6,

25,

26,

40,

41], we found that ISTRUM is the first study that applies fine-resolution abundance image in spatial-unmixing process. In STRUM, the derived fine-resolution temporal change image based on coarse-resolution image is directly added to Landsat image. The sensor difference between Landsat and coarse-resolution imaging systems is not considered [

26]. It was adjusted with a linear model in ISTRUM. Moreover, STRUM only considered the situation when only one L-C image pair is available [

26]. A method to combine results synthesized by multiple L-C pairs was integrated in ISTRUM in order to enhance its applicability for situations when multiple L-C pairs are available.

The objective of this paper is: (1) to describe the framework of ISTRUM; (2) to evaluate the performance improvement of ISTRUM by comparing it with STRUM; (3) to analyze the sensitivity of ISTRUM to factors that influence the accuracy; and (4) to compare the performance of ISTRUM with STDFA, ESTARFM, HCM and FSDAF.

2. Prediction Method

ISTRUM aims to synthesize time series of Landsat-like images based on at least one L-C pair observed on

, and a coarse-resolution image observed on

. Similar to other spatiotemporal fusion methods [

5,

6,

26], all input images should be atmospherically corrected and geometrically co-registered. The coarse-resolution images should contain similar spectral bands of Landsat images.

2.1. Notations and Definitions

The coarse-resolution images observed on and are stored in array and , respectively. Dimensions of and are , where and denote the lines and samples of the coarse-resolution images and denotes spectral bands. The Landsat image observed on and is stored in array and , respectively. The synthetic Landsat-like image based on L-C pair on is stored in . Dimensions of , , and are , where and denote the lines and samples of the Landsat images. The spatial resolution ratio is .

A pixel is selected according to its sample, line, and band indices which are denoted by i, j, and b, respectively. For example, denotes a coarse pixel at location of band b, where , and . Subscript c and f denot coarse- and fine-resolution images, respectively. For all valid sample, line, or band indices of an image, we use the asterisk notation. For example, refers to all band values for pixel at location , and refers to all pixels of band b. To select pixels in a sliding-window from the image, we use :: to denote the subset covers lines and samples of band b. There are fine pixels within a coarse pixel at location . For k-th fine pixel, its location on the fine-resolution image is denoted as with .

2.2. Introduction of STRUM

STRUM generally contains the following steps [

26]: (1) Define the number of classes on image

and perform classification to obtain a fine-resolution class image. The class types are considered as endmembers of coarse-resolution image. (2) Calculate a coarse-resolution abundance image with the class image. For a coarse pixel, abundance of each endmember is the ratio of the number of fine pixels with corresponding class type to

. (3) Calculate coarse-resolution temporal change image

by

. (4)

is assumed to be linear mixture of endmembers and downscaled with spatial-unmixing to obtain a fine-resolution temporal change image

. The step first calculates spectra of endmembers by solving a system of linear equations which is established by coarse pixels in a sliding-window, then assigns the spectra to fine pixels with corresponding class type. (5)

is calculated by

.

STRUM assumes a fine pixel contains only one class type. Therefore, Step (2) calculates coarse-resolution abundance by counting the number of fine pixels, and Step (4) directly assign spectra of endmembers to fine pixels. It results in the lack of intra-class spectral variability on image because pixels of same class type have same values. In addition, spectra derived by unmixing of have spectral characteristics of coarse-resolution imaging system. Sensor difference should be adjusted before adding to .

2.3. Theoretical Basis and Prediction Model of ISTRUM

Because of the heterogeneity of land surface, more than one land cover types can exist in both Landsat pixels (e.g., 30 m × 30 m) and coarse pixels (e.g., 500 m × 500 m for MODIS). A linear mixture model could represent the combinations of endmembers’ reflectance and abundance [

42]. For a Landsat pixel

, its reflectance on

and

are

where

is the number of endmembers and

m denotes the

m-th endmember with

.

and

are fine-resolution abundance images on

and

with dimensions of

.

and

are

arrays that store the reflectance of endmembers on

and

.

is the residual. Assuming abundance images do not change between

and

, i.e.,

, and

is constant, we have

with

where

denotes the temporal change of fine-resolution images and

denotes the reflectance change of endmembers on fine-resolution images. Among the variables, only

is known and

could be derived from

by spectral-unmixing. If

were obtained,

would be calculated by linear mixture (Equation (

3)) and a Landsat-like image would be predicted by adding

to

(Equation (

4)).

Similarly with Equations (

3)–(

5), temporal change of coarse-resolution images

can be expressed as

with

where

denotes the coarse-resolution abundance image with dimensions of

.

and

are

arrays that identify the reflectance of endmembers on coarse-resolution image, and

represents the reflectance change of the endmembers on coarse-resolution images.

Among the variables in Equations (

6)–(

8),

and

are known and

could be calculated. Moreover, because the types and spatial distributions of endmembers should be same for fine- and coarse-resolution images in same regions,

can be aggregated from

with

Equation (

9) indicates the abundance of

m-th endmember in a coarse pixel

is calculated by averaging the abundances of

m-th endmember in the corresponding fine pixels. However, in STRUM,

is the ratio of the count of fine pixels for class

m to

[

26].

Although

and

of Equation (

6) are known variables,

is unsolvable because Equation (

6) is underdetermined since there are

unknowns of

[

6].

According to Waldo Tobler’s first law of geography [

43], reflectance of same land cover type in a small region can change similarly over time, e.g., the maize in adjacent crop fields may grow in similar ways and have analogous spectral features. Therefore, it is reasonable to assume

is same in a sliding-window of coarse-resolution image. With half of the sliding-window size

, which is measured by the number of coarse pixels and should be defined by user, a sliding-window centred at pixel

is selected by

:

:

. Size of sliding-window is

. Then,

linear equations similar with Equation (

6) are established with the coarse pixels. To derive

by solving the linear equations,

should be greater than

[

6]. Given the reflectance change is also related to environmental factors (e.g., altitude, morphology, soil type, and fertilization) [

40], the assumption that

is the same may be valid only in a small region. Therefore, the definition of

should take spatial resolution of the coarse-resolution image and heterogeneity of land surface into consideration.

should not be too large, otherwise, it may induce errors in the calculation of

.

Theoretically, reflectance of endmembers on Landsat and coarse-resolution images should also be the same. However, sensor difference, caused by differences between fine- and coarse-resolution sensor systems such as bandwidth, solar-viewing geometry conditions, atmospheric correction, and surface reflectance anisotropy [

44,

45] may exist. The computation of

is based on

. Thus, it has spectral characteristics of coarse-resolution imaging system. To obtain

, sensor difference should be adjusted. Linear and nonlinear models have been proposed to normalize the difference between images of different sensors [

46]. Similar to previous studies [

47,

48], a simple linear model is applied to adjust sensor difference.

In Equation (

10),

and

are slope and interception of the linear model for band

b and can be calculated by linear regression between the observed fine- and coarse-resolution images. Sensor difference between

and

can be adjusted by Equation (

11). The

in Equation (

10) is reduced after subtraction.

In STRUM,

is directly assigned to fine pixels with same class type according to a fine class image [

26]. However,

is linearly mixed with

in ISTRUM. Since

is the local reflectance change of the endmembers, the mixture is only performed on fine pixels that fall in the central coarse pixel. The

is calculated as follow:

Based on one L-C pair, the Landsat-like image on

is finally calculated by

The aforesaid steps describe the prediction model with one L-C pair. When multiple L-C pairs are applied, could be first predicted based on each L-C pair and then combined to make a final prediction.

2.4. Implementation of ISTRUM

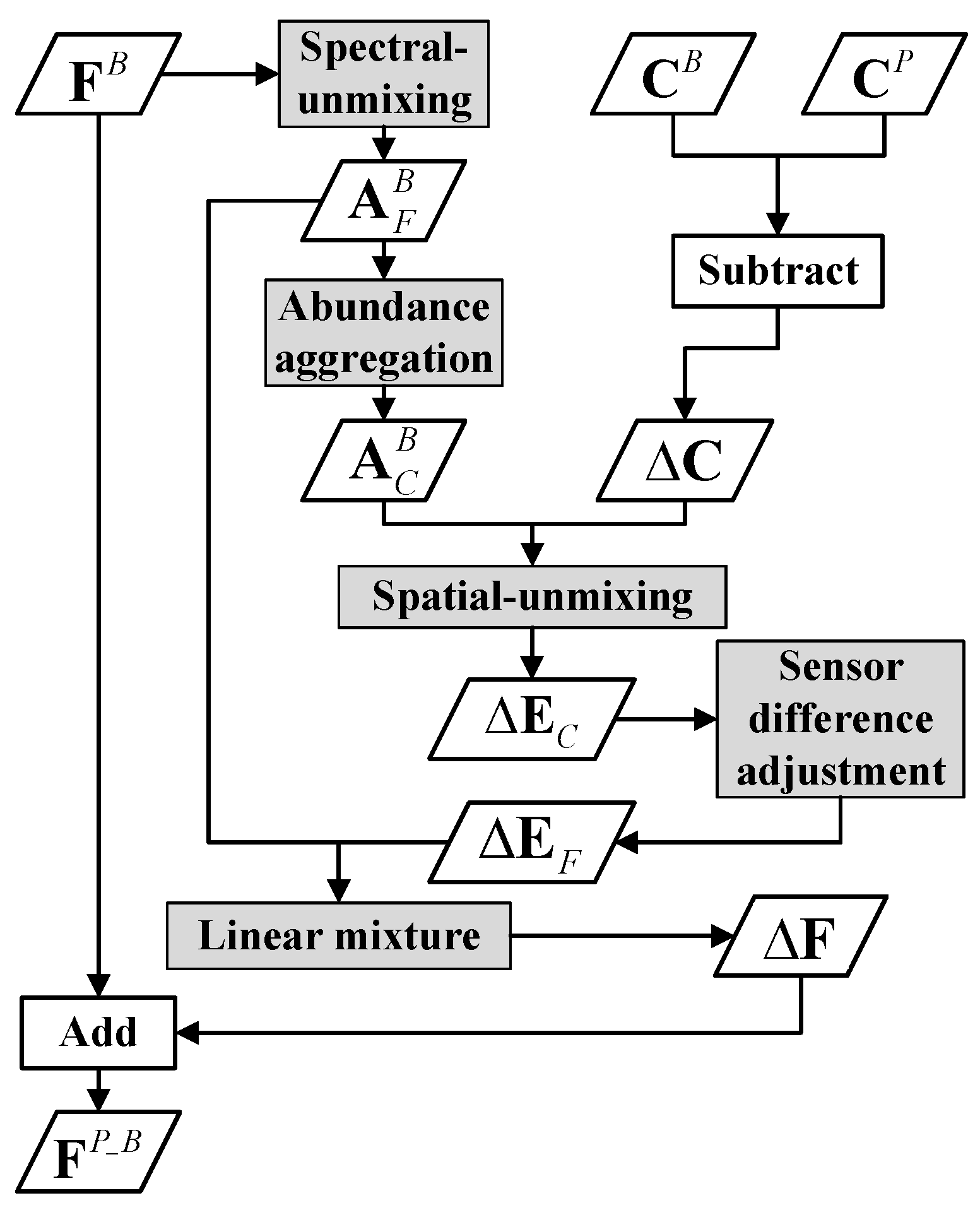

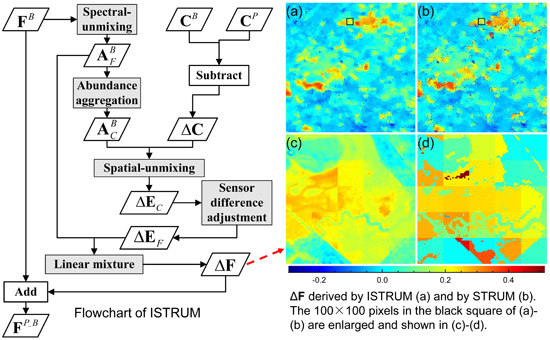

The workflow of ISTRUM is outlined by the flowchart in

Figure 1. Key steps (steps with gray background) include spectral-unmixing, abundance aggregation, spatial-unmixing, sensor difference adjustment and linear mixture. Spectral-unmixing is the first step aiming to derive fine-resolution abundance image

which is used in abundance aggregation and linear mixture. Based on

, coarse-resolution abundance

is calculated in abundance aggregation. With known

and coarse-resolution temporal change image

, reflectance change of endmembers are calculated in spatial-unmixing process. Derived

has spectral characteristics of coarse-resolution imaging system. Sensor difference is adjusted to obtain

, which is further linear mixed with

to obtain fine-resolution temporal change image. Detailed descriptions are in the following subsections.

2.4.1. Spectral-Unmixing

Spectral-unmixing is the first step applied to

to obtain

which is further used to calculate

. To strengthen the applicability of ISTRUM on the global scale, a globally representative spectral linear mixture model (SVD model) for Landsat TM/ETM+/OLI images [

39,

49,

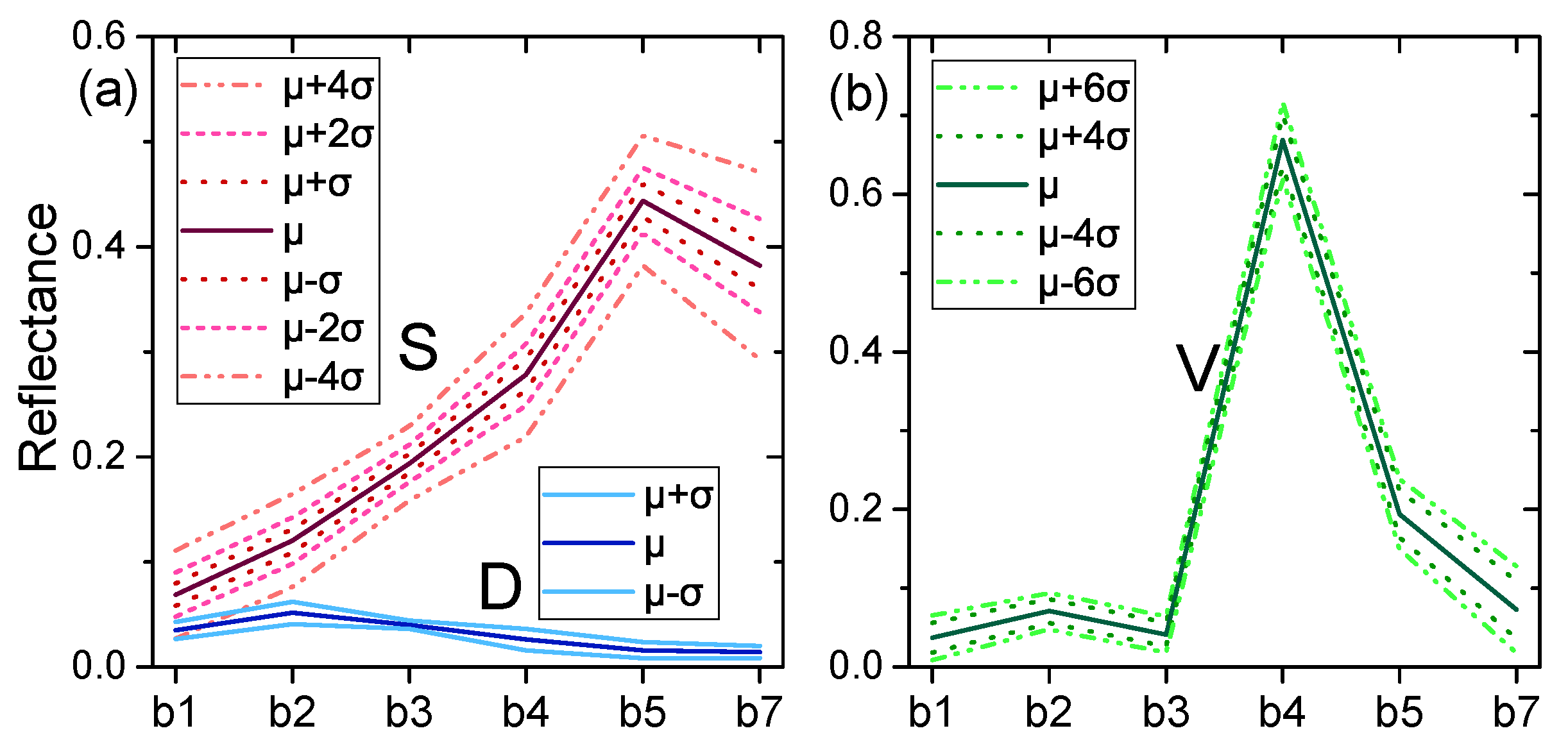

50] was selected in the implementation of spectral-unmixing. Endmembers were classified to three types: substrate (S), vegetation (V), and dark surface (D).

Spectra of endmembers (i.e.,

) are extracted from

with the approach described by Small [

39]. The approach first applies Principal Component Analysis (PCA) to

, and then selects pure pixels by analyzing the mixing space of the first three primary components. The approach generally selects a suite of pure pixels for each endmember and mean spectra are used in spectral-unmixing. Based on the mixture model, the Fully Constrained Least Square (FCLS) method [

51] is used to unmix

in the spectral-unmixing process. The computed abundance

varied from 0 to 1 and the sum of

is 1.

2.4.2. Abundance Aggregation

is calculated with

in this process. For a coarse pixel at location

, abundance of each endmember is calculated by Equation (

9). If an endmember abundance is low in a coarse pixel, its errors in spatial-unmixing may be large [

6]. Therefore, if the abundance of endmember

m is

, it is merged to its spectrally most similar class whose abundance should also be greater than 0. In the implementation of ISTRUM, similarity between each endmember is measured by Spectral Angle (SA), which is calculated with

.

is set to 0.05 according to previous studies [

6,

52].

2.4.3. Spatial-Unmixing

The spatial-unmixing process aims to derive

by solving a system of linear equations. For a coarse pixel at location

, a sliding-window around it is selected by

:

:

and

equations similar with Equation (

6) are considered. The least square method is applied to solve the equations because it does not require additional parameters and has been widely implemented in spatial-unmixing based methods [

6,

25,

48].

is computed by minimizing Equation (

14) based on the Generalized Reduced Gradient Method [

53] as follow:

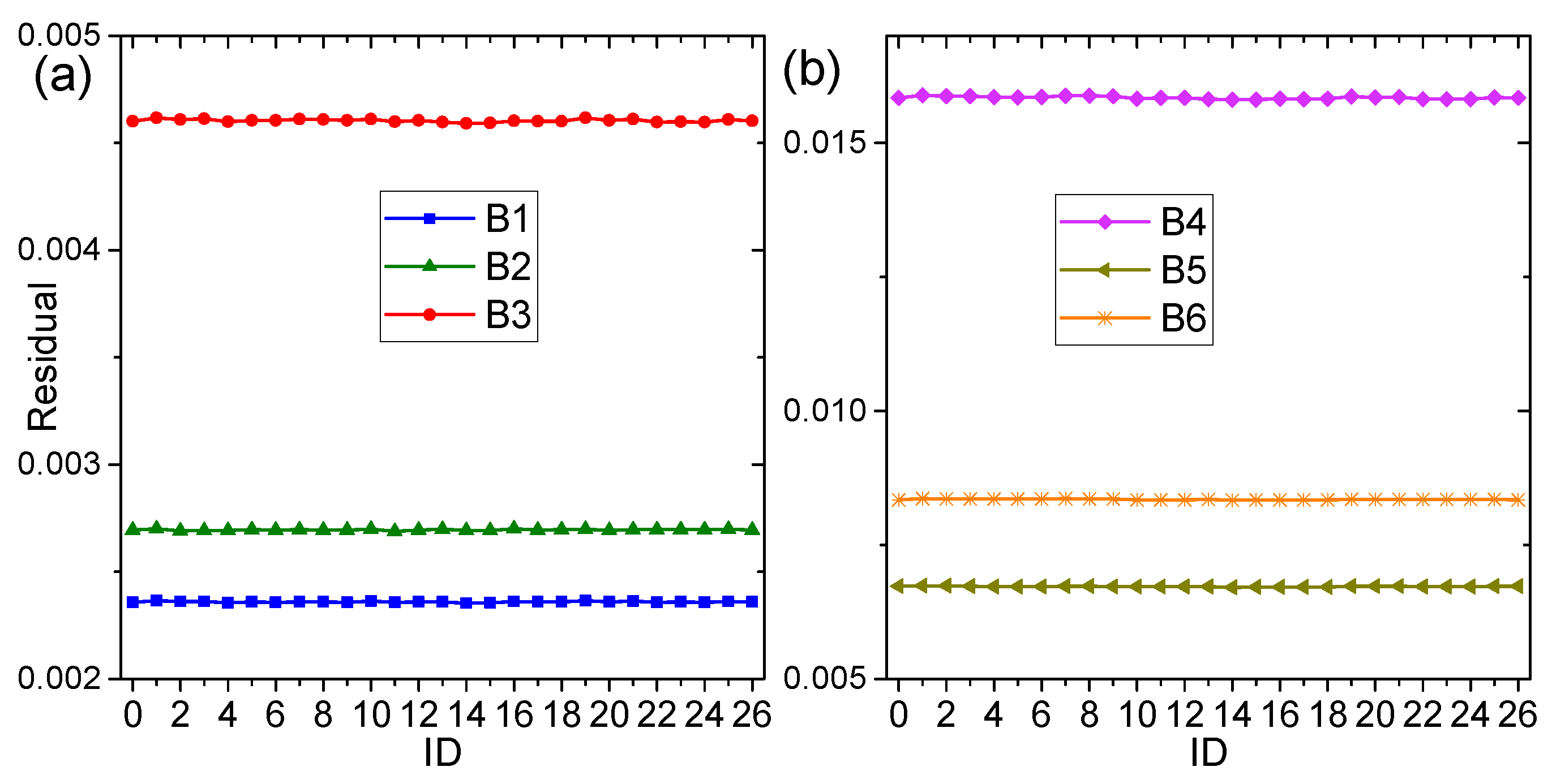

2.4.4. Sensor Difference Adjustment

Sensor difference is adjusted to obtain

from

by means of Equation (

11). The coefficient

is calculated with

and

.

is firstly aggregated to coarse-resolution image

by averaging the band values of fine pixels that fall in an individual coarse pixel. Then,

is computed by linear regression with

as dependent variable and

as independent variable.

2.4.5. Linear Mixture

The

is linearly mixed with

(Equation (

12)) to reconstruct

. The mixture is only applied to fine pixels (i.e.,

) falling in the center coarse pixel

of the currently selected sliding-window. Because

represents the reflectance change of endmembers in the sliding-window.

The spatial-unmixing and linear mixture processes are performed pixel-by-pixel for each band of

. When all the pixels of

are processed, image

is obtained and the Landsat-like image

predicted by one L-C pair is finally calculated by Equation (

13).

2.4.6. Method to Combine Images Predicted by Multiple L-C Pairs

Suppose there are

L-C pairs observed on different base dates

with

. Each L-C pair could synthesize an individual Landsat-like image

. The final prediction is weighted summation of all the

. Absolute temporal difference (

) between

and

is used to calculate the weights.

is calculated locally by Equation (

15) with

in a sliding-window. The window is same with the one applied in spatial-unmixing because reflectance change of each endmember is assumed to be same in the sliding-window. When

value approximates 0, it indicates little reflectance change between

and

, so the prediction based on L-C pair on date

should be more reliable. Therefore,

should have a large weight. Hence, weight

is calculated with the reciprocal of

in Equation (

16). The

is for the central coarse pixel

of the sliding-window, and fine pixels within it share the same weight. The final prediction (

) with multiple L-C pairs is calculated by Equation (

17).

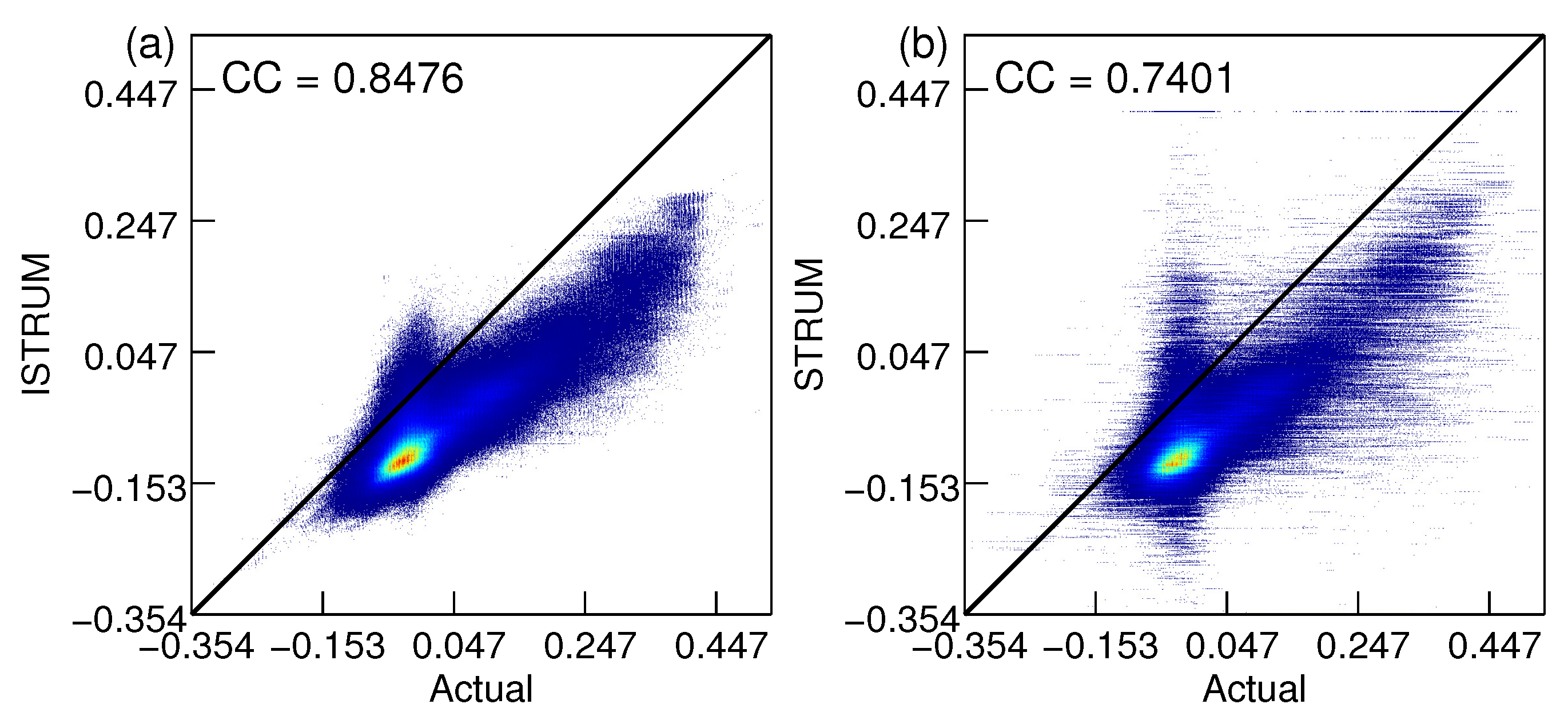

2.5. Differences between ISTRUM and STRUM

Both ISTRUM and STRUM applied spatial-unmixing process directly to the coarse-resolution temporal change image. However, there are some differences. ISTRUM considers the heterogeneity of land surface and regards the pixels on Landsat image are linear mixture of endmembers at the scale of 30 m. Therefore, the fine-resolution abundance image is applied in ISTRUM, while class image is used in STRUM. Based on the difference, the is further linearly mixed with to obtain . The step ensures to better preserve spectral variability and spatial details than the one derived by STRUM. In addition, sensor difference between and is considered and adjusted in ISTRUM. While STRUM directly assigns to fine pixels according to the class image without adjusting sensor difference.

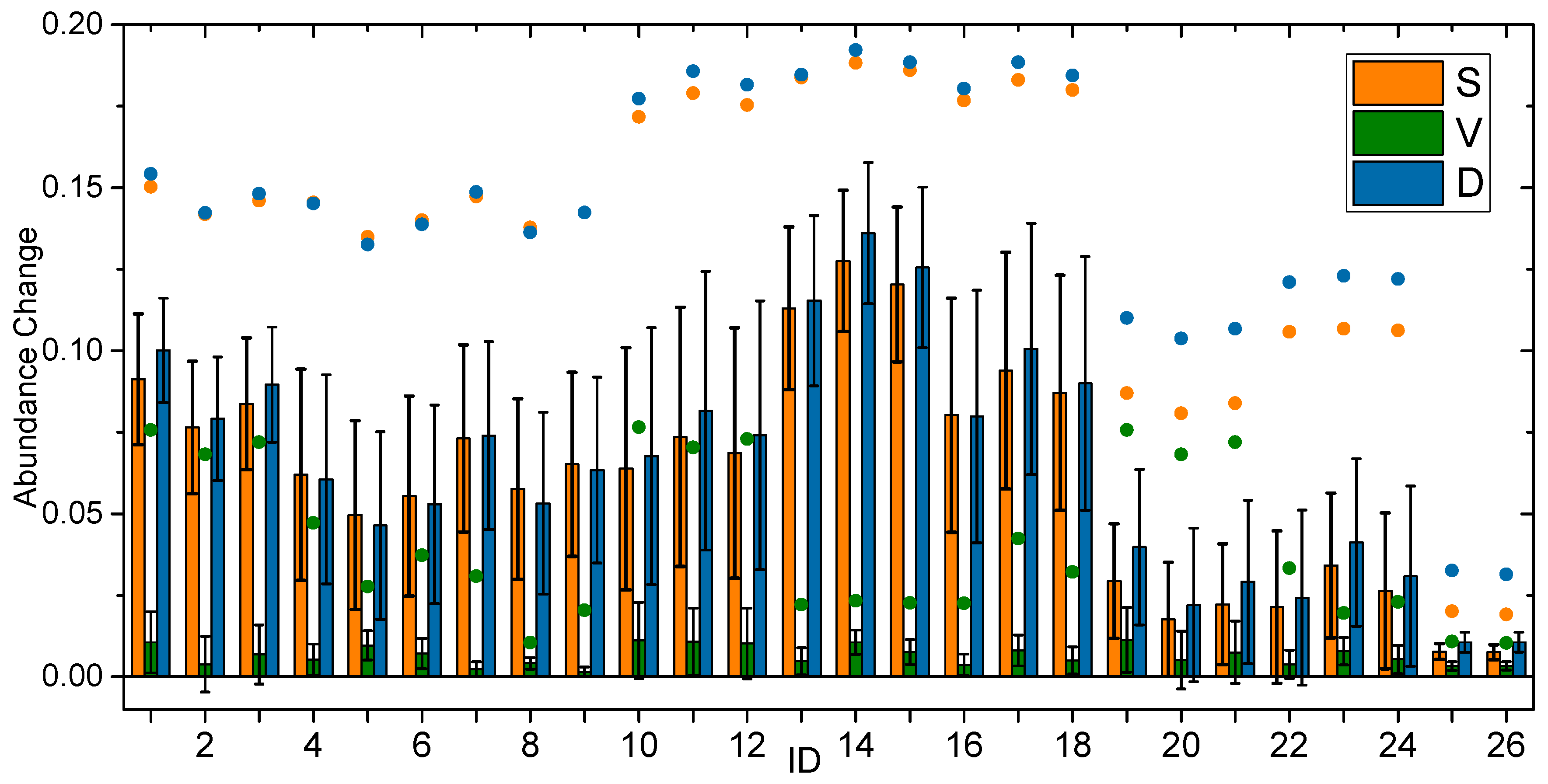

As reference images, the L-C pairs input into the fusion method have significant influence on the prediction accuracy [

28,

54]. According to [

55], two L-C pairs is preferred to be applied in the prediction when temporal change is consistent. ISTRUM can combine prediction results of multiple L-C pairs with a weighted summation method, which strengthens the applicability, while STRUM is applicable with only one L-C pair.

5. Discussion

Spatiotemporal image fusion is a feasible and cost-efficient approach to synthesize images with high spatial and temporal resolution [

15]. Spatial-unmixing based method is one kind of the most widely studied spatiotemporal fusion methods [

6,

25,

26,

40,

41]. One challenge is that the image derived in spatial-unmixing based method generally lacks spectral variability and spatial details [

10,

26,

27]. To tackle the problem, this study improved the STRUM, which is a well-performing spatial-unmixing based method [

26], by applying fine-resolution abundance image. Experimental results revealed ISTRUM generated higher accuracy than STRUM and was competitive with benchmark spatiotemporal fusion methods. Based on the results in this study, relevant issues are discussed in the following paragraphs.

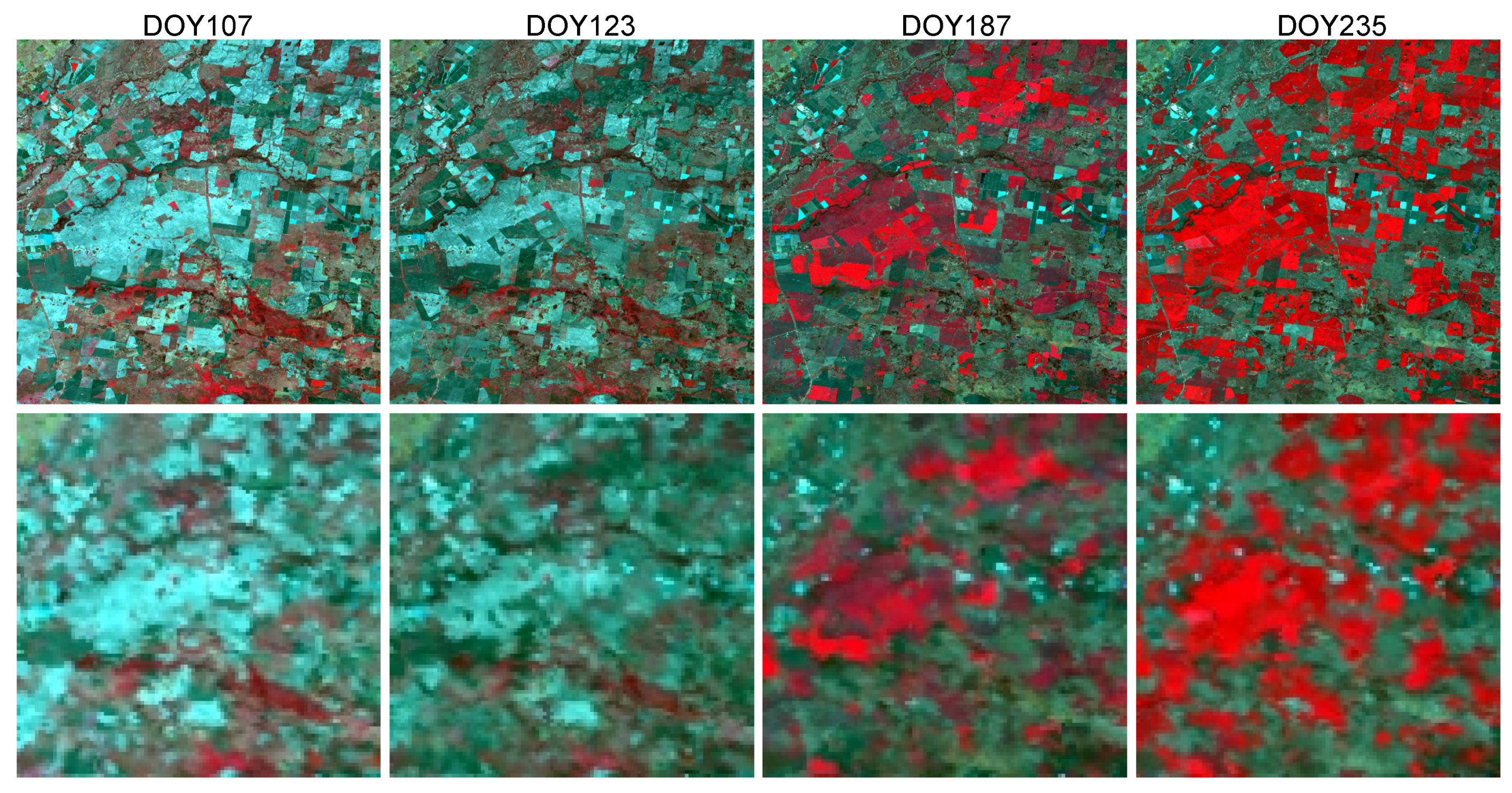

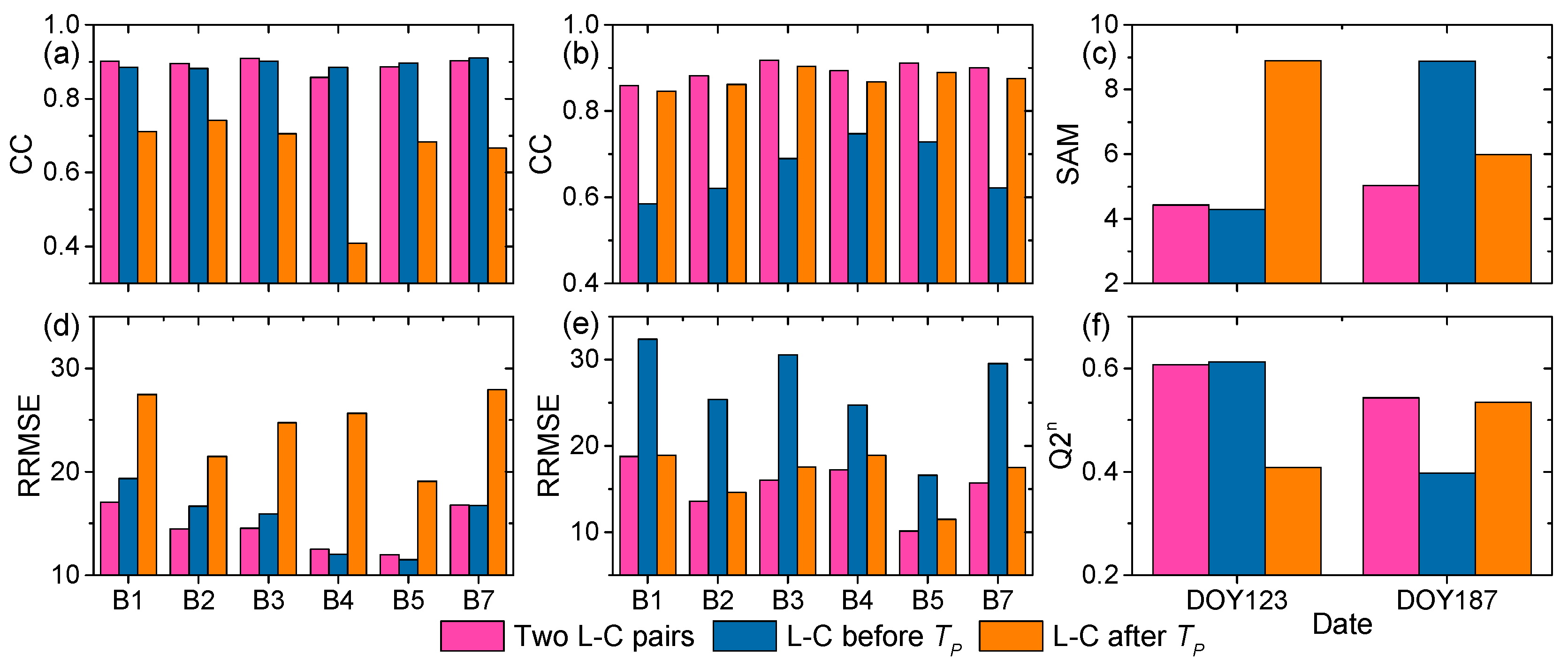

Used as the base images in spatiotemporal fusion methods, L-C image pair is significant to improve the accuracy of synthetic image. Accuracy of the same method varied greatly when input L-C pair is different. In our study, Landsat-like image on DOY187 synthesized based on L-C pair on DOY235 (

Section 4.4) has higher accuracy than the one based on DOY123 and DOY107. The accuracy of Landsat-like image is improved when L-C image pair strongly correlates to images on

. Cheng et al. [

28] found accuracy increased as the time interval between

and

decreased because the likelihood of large temporal change is low and correlation between images on

and

is high when the time interval is short. According to the correlation between coarse-resolution images on

and

, optimal L-C pair can be determined [

54]. In addition, multiple L-C pairs can also improve the accuracy for situations when more than one L-C pair are available.

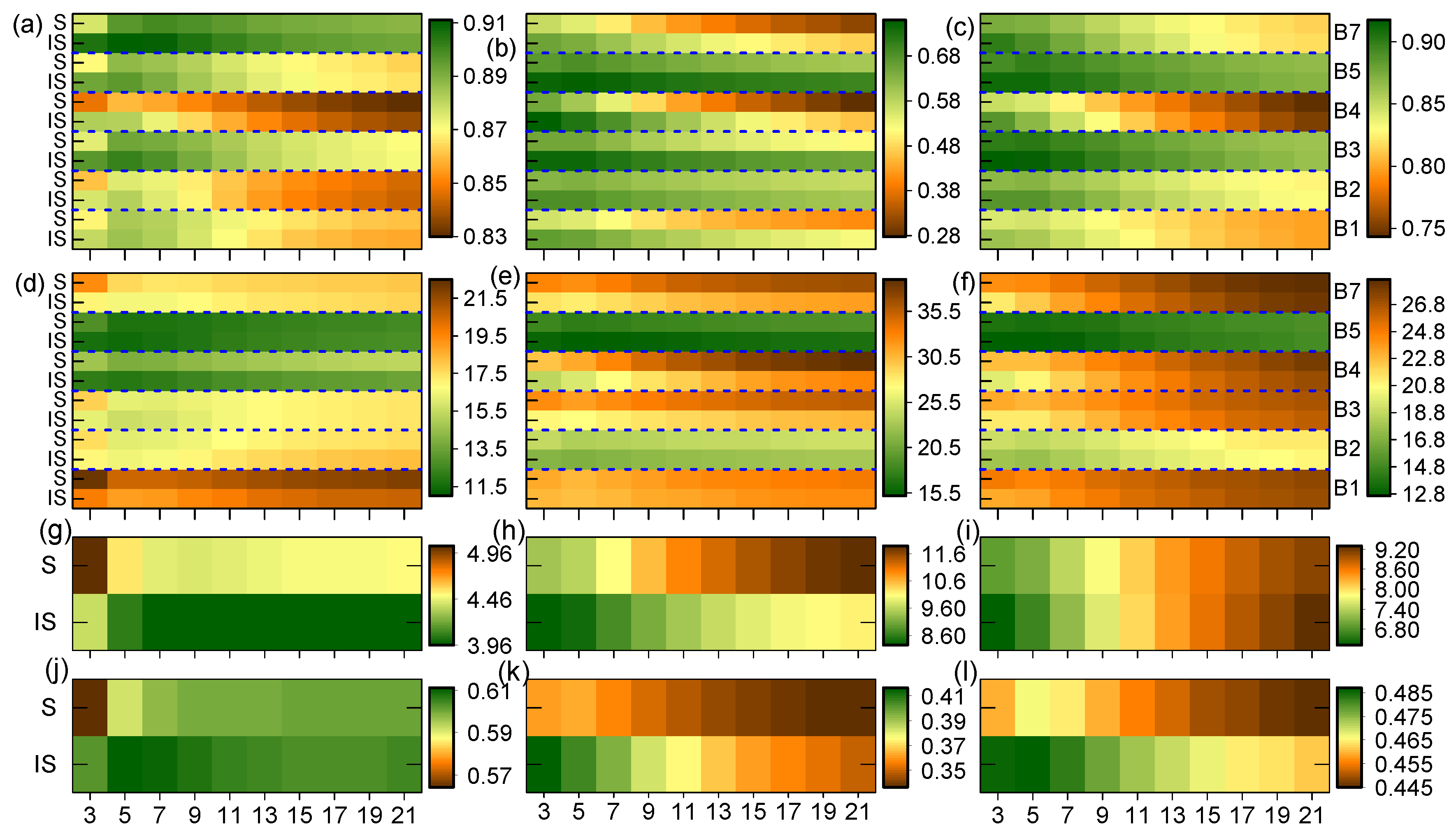

Since accuracy of spatiotemporal fusion methods is influenced by spatial heterogeneity [

5,

7], land cover type [

48], and the complexity of temporal change [

38], performance of the methods varied under different conditions. There is no universal method that performs well under all conditions [

34]. For example, although FSDAF considers land cover type change, it has problems in overestimating spatial variations [

11]; and ESTARFM improves the accuracy in heterogeneous regions but has problems in estimating nonlinear reflectance changes [

38]. Our study also found ISTRUM outperformed HCM when temporal change was large while HCM was better when temporal change was small. Therefore, selecting and combining different methods according to the conditions is meaningful in application [

34]. For example, to synthesize time series NDVI, methods with better performance at Red and NIR bands are preferred considering the fusion accuracy is band dependent. In addition, for large area and long term applications of spatiotemporal fusion methods, processing many images is required. The trade-off between accuracy and computational efficiency should be considered. With one user defined parameter and high computational efficiency, ISTRUM provides an easy-to-use and efficient alternative.

Fine- and coarse-resolution images are generally regarded as consistent when developing the spatiotemporal fusion methods [

10,

11]. However, sensor difference commonly existed and impacts fusion accuracy. Xie et al. [

54] found MODIS images with smaller view zenith angle produce better predictions. Preprocessing to reduce of sensor difference helps to improve the accuracy. Models to adjust sensor difference are worth of integration into spatiotemporal fusion methods. Similar with previous studies [

47,

48], ISTRUM adjusted the sensor difference with a simple linear model and improved the performance to some degree. However, nonlinear models [

46] to normalize the inconsistency between fine- and coarse-resolution images are worth studying in the future.